1. Introduction

The explosive growth of e-commerce has propelled the warehousing and logistics industry into the era of real-time inventory [

1,

2]. Owing to their lightweight structure, low cost, and excellent stackability, logistics boxes have become the most common storage units in these scenarios [

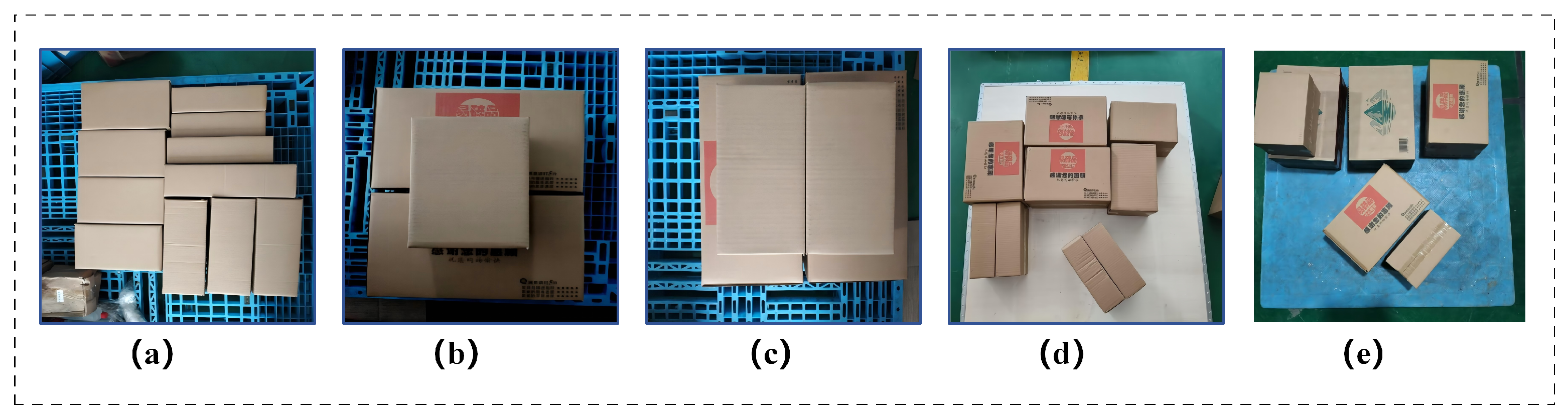

3]. Their identification efficiency directly impacts the overall performance of the supply chain. The traditional manual inventory method in a modern high-level three-dimensional warehouse has difficulty meeting the actual demand for efficiency and accuracy. On the one hand, to count the inventory in a modern high-level three-dimensional warehouse, for example, where the height of the warehouse usually reaches 20–40 m, it is difficult to manually complete the task by checking through the items one by one; on the other hand, relying on stacker cranes to transport goods piece by piece to the visual position for manual identification not only takes a long time but also seriously affects operational efficiency. In contrast, vision-based automatic identification offers non-contact, high-efficiency, and other advantages, and it has gradually become the mainstream alternative means, widely used in high-standing warehouses, conveyor lines, automatic sorting, and other scenarios. However, in a real warehousing logistics environment, visual occlusion, spatial compression, fragmented light shadows, edge overlap, background interference, and other non-structural topological noise significantly exacerbate the uncertainty of box identification and inventory. Therefore, how to quickly and accurately detect and recognize these dense and stacked boxes has become one of the core technologies to ensure the real-time consistency of inventory data and to prevent wrong or missed pickup in application scenarios involving conveyor line transfer inventory and high-level warehouse inventory, which are also the main research goal of this paper.

To date, researchers have conducted extensive studies on object detection in the logistics industry [4, 5]. Early on, traditional machine vision methods made some progress, but they suffered from poor robustness, limited accuracy, and low real-time performance. These issues were particularly pronounced in complex environments, limiting their application in modern logistics systems. Recently, with rapid advances in convolutional neural networks (CNNs) and computer hardware, deep learning [6] has become a key technology for object detection and recognition. These algorithms generally fall into two categories. The first is two-stage detectors, such as Fast R-CNN [7] and Faster R-CNN [8], which are characterized by high detection accuracy but low speed. The second is single-stage detectors, which significantly improve detection speed and include models from the You Only Look Once (YOLO) series [9, 10, 11, 12, 13, 14] and SSD [15]. These detectors have become the preferred choice for tasks requiring high real-time performance. In addition, lightweight networks like YOLOx [16] are notable representatives in this domain.

Consequently, in recent years, an increasing number of logistics systems have adopted deep learning and artificial intelligence technologies to overcome the limitations of traditional vision methods. Yoon et al. [16] proposed a logistics box recognition method based on deep learning and RGB-D image processing, combining Mask R-CNN and CycleGAN. By using CycleGAN to optimize the surface of the box, this method eliminates the influence of labels on feature extraction. It is particularly suited to robotic de-stacking, and it addresses the degraded recognition quality caused by the disorderly arrangement of boxes and varying surface textures in complex working conditions. Gou et al. [17] employed a local surface segmentation algorithm to extract the skeleton of stacked cardboard boxes and replaced the foreground textures of the source dataset with the textures of the target dataset, enabling the rapid generation of labeled datasets and improving the model’s generalization ability in new logistics scenarios. Wu et al. [18] proposed an adaptive tessellation method to redesign the traditional Faster R-CNN, addressing the large differences in the number of samples per category in the box dataset, the resulting class imbalance, and the consumption of hardware resources. Arpenti et al. [19] utilized RGB-D technology and the deep learning model YOLACT for box boundary detection, incorporating image segmentation techniques to extract box edges, which helped address the challenges caused by diverse and complex box stacking methods. Ke et al. [20] proposed an improved Faster R-CNN model for parcel detection by introducing an edge detection branch and an object edge loss function, which effectively addressed the misdetection problem caused by occlusion between parcels. They also proposed a self-attention ROI alignment module to further enhance detection accuracy. Domenico et al. [21] compared various training strategies aimed at minimizing the number of images needed for model fitting while ensuring the reliability and robustness of the model’s performance. After evaluating cardboard detection under different conditions, they identified a fine-tuning strategy based on a CNN model pre-trained for box detection as the optimal approach, achieving a median F1 score above 85.0. Kim et al. [22] developed a system that uses the regression KNN algorithm to calculate the minimum Euclidean distance to detect the geometric point cloud of parcels, thereby improving detection accuracy and providing guidance for robots in unloading tasks.

With the widespread use of object detection algorithms in intelligent logistics, the conventional axis-aligned bounding box has proven inadequate for precisely localizing objects with arbitrary orientations. Object detection based on rotated bounding boxes has gradually become a focal point of research because such boxes fit objects more compactly and accurately in arbitrary directions. For example, Zou et al. [23] found that ground targets such as airplanes and ships presented arbitrary rotational directions in high-resolution remote sensing images. Traditional IoU evaluation metrics exhibited discontinuous values when the angle changed drastically, leading to instability in rotated bounding box regression. Therefore, they proposed ProbIoU, a probability distribution-based regression method for rotation angles, which models rotated boxes as two-dimensional Gaussian distributions and constructs a differentiable regression loss function using the Hellinger distance; this significantly improved the fitting accuracy for objects with large angular changes. Zhao et al. [24] pointed out that, in arbitrary-orientation object detection, narrow objects were prone to angular regression bias under different rotation angles, and that objects with large differences in aspect ratio did not perform consistently under a uniform sampling strategy. Therefore, they proposed the OASL method, which introduced an aspect ratio-aware adaptive sampling mechanism and an angular loss adjustment strategy. In this way, the regression difficulty of targets with different shapes was dynamically balanced, and the robustness of rotational regression for targets with extreme shapes was effectively improved. Zhu et al. [25] found that using a horizontal bounding box introduced ambiguities when fitting detected objects at certain angles in remote sensing images. Therefore, they designed an enhanced FPN with an angle-aware branch and a scale-adaptive fusion module to better capture rotational information through multi-level orientation guidance. Consequently, the accuracy of rotated box detection in dense regions was improved.

The above methods have made varying degrees of progress on box detection problems such as box surface labels, class imbalance, dataset generation, mutual occlusion of parcels, and diverse stacking methods. However, challenges such as edge blurring and severe occlusion caused by dense stacking remain in logistics box detection. In addition, most current methods in the logistics field are still based on the Horizontal Bounding Box (HBB), which introduces localization bias when a box is rotated and thus reduces actual detection accuracy. At the same time, the trade-off between model lightweighting and high accuracy has not been fully resolved. In this paper, a lightweight rotated box detection model, REW-YOLO, is proposed. First, based on YOLOv8n, the C2f-RVB module is introduced by replacing the C2f module of YOLOv8 with RepViTBlock. Through structural reparameterization with depthwise separable convolution, the multi-branch structure is merged in the inference stage to reduce the computational overhead. Second, the ELA-HSFPN structure is designed by fusing an advanced feature fusion pyramid (HSFPN) and an efficient local attention mechanism (ELA), which enhances the model’s ability to extract local features and addresses the problem of missed detections of densely stacked boxes.

2. Detection Model Based on Improved YOLOv8

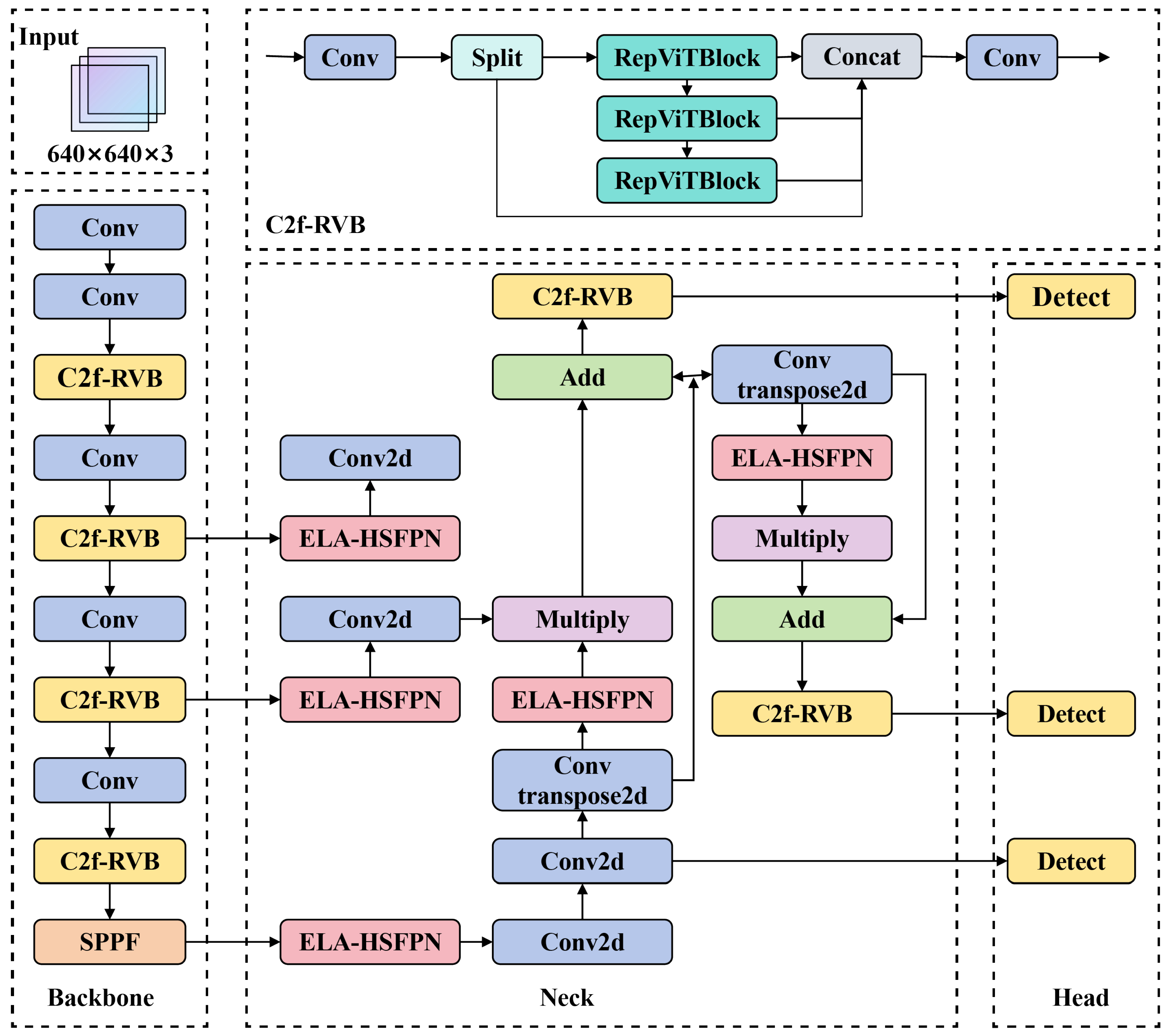

To improve the accuracy and robustness of box detection algorithms, this paper proposes an improved YOLOv8 model, REW-YOLO, as illustrated in Figure 1. First, the RepViTBlock module is integrated into the YOLOv8 backbone, replacing certain traditional convolution operations to allow more efficient processing of global structural and semantic information. Second, the HSFPN is fused with the Efficient Local Attention (ELA) mechanism to construct the ELA-HSFPN structure, which improves the model’s ability to extract both local target features and features of targets with different shapes and sizes. Third, rotational angle regression is introduced to enhance the model’s capability to handle rotated or tilted targets while reducing background interference. Finally, random occlusion simulation is conducted, and the bounding box regression loss function is optimized using WIoU v3 (Wise-IoU v3), mitigating the effects of low-quality samples and accelerating model convergence. These improvements significantly enhance the accuracy and robustness of the proposed REW-YOLO model, making it more suitable for box detection in complex logistics environments, particularly in occlusion scenarios.

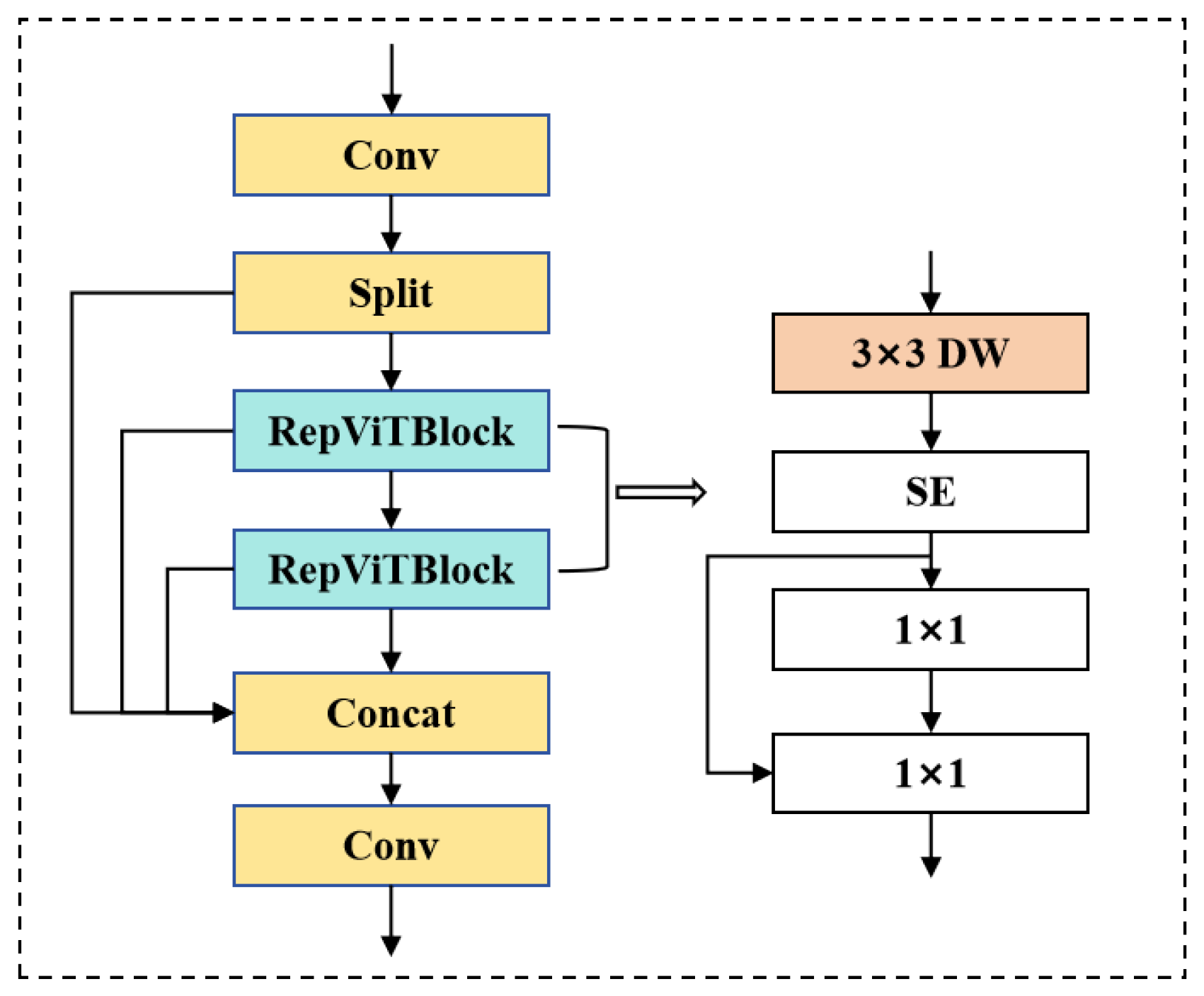

2.1. C2f-RVB Block

YOLOv8 redefines object detection as a bounding box regression problem, utilizing CNNs for efficient feature extraction and object classification. However, when applied directly to box detection in complex logistics environments, it faces a critical trade-off between computational cost and the need for a lightweight model. While its core module, C2f, improves feature reuse efficiency through cross-layer connections, its reliance on standard convolution operations leads to parameter inflation when stacked, making it difficult to meet the stringent requirements of mobile devices in terms of model size and computational latency. To enable deployment on resource-constrained mobile devices, and inspired by Wang et al. [26], the original C2f module of YOLOv8 is redesigned as the C2f-RVB module by replacing its internal blocks with the RepViTBlock module. This module reduces computational complexity through structural re-parameterization, depthwise separable convolutions, and feedforward mechanisms while maintaining performance. The use of depthwise separable convolutions decreases both the number of parameters and the computational cost, making the model more suitable for mobile devices with limited computing resources.

The RepViT module combines the advantages of CNNs and Transformers by incorporating 3 × 3 depthwise separable convolution, 1 × 1 convolution, structural reparameterization, and an SE layer. The 3 × 3 depthwise separable convolution captures local textures, while the 1 × 1 convolution adjusts channels and fuses features. During training, structural reparameterization enhances expressive capability through a multi-branch architecture, and during inference, it merges into a single branch to reduce computational cost. The SE layer highlights key features through channel attention, enhancing the model’s focus on important information. The specific design of the RepViTBlock module is shown in Figure 2.
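The branch merging described above can be illustrated with a minimal single-channel sketch. It assumes a training-time block made of a 3 × 3 depthwise branch, a 1 × 1 depthwise branch (one scalar per channel), and an identity branch; batch-normalization folding is omitted for brevity, and all function names are hypothetical rather than taken from the authors' implementation.

```python
def conv2d_same(x, k):
    """3x3 convolution of a single channel with zero padding (output size = input size)."""
    H, W = len(x), len(x[0])
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < H and 0 <= jj < W:
                        s += k[di + 1][dj + 1] * x[ii][jj]
            out[i][j] = s
    return out


def multi_branch_forward(x, k3, k1):
    """Training-time form: 3x3 branch + 1x1 branch (scalar k1) + identity branch."""
    y3 = conv2d_same(x, k3)
    return [[y3[i][j] + k1 * x[i][j] + x[i][j] for j in range(len(x[0]))]
            for i in range(len(x))]


def merge_branches(k3, k1):
    """Inference-time form: fold the 1x1 and identity branches into the 3x3 kernel center."""
    merged = [row[:] for row in k3]
    merged[1][1] += k1 + 1.0
    return merged


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.1, -0.3]]
k1 = 0.5

y_train = multi_branch_forward(x, k3, k1)
y_infer = conv2d_same(x, merge_branches(k3, k1))
```

Both forms produce the same output up to floating-point rounding, but the merged form needs only one convolution at inference time.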

The DWConv (Depthwise Separable Convolution) [27] process is shown in Figure 3. DWConv first performs spatial filtering on each input channel individually with a depthwise convolution, and then uses a pointwise 1 × 1 convolution to combine the resulting features across channels and adjust the number of channels. This approach is well suited to the requirements of a box detection model, as it achieves a significant reduction in computational complexity and parameter count without compromising model performance.
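The parameter saving can be made concrete with a short calculation. The sketch below counts weights only (biases and normalization layers are ignored), and the layer sizes in the example are illustrative assumptions, not values from the paper.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 convolution mixing channels
    return depthwise + pointwise


# Illustrative layer: 64 input channels, 128 output channels, 3 x 3 kernel.
std = standard_conv_params(64, 128, 3)
dws = depthwise_separable_params(64, 128, 3)
ratio = dws / std  # equals 1/c_out + 1/k^2
```

For this example layer, the depthwise separable form uses roughly 12% of the weights of the standard convolution.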

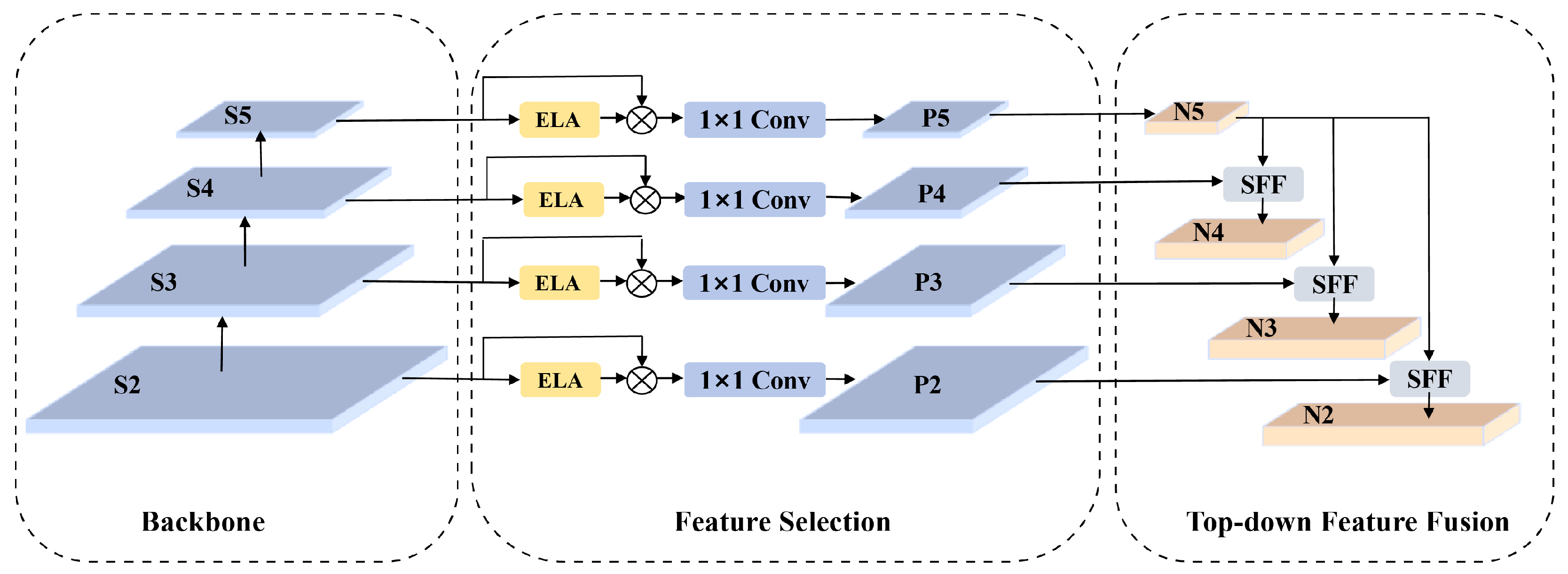

2.2. ELA Module

Attention mechanisms focus on important information and suppress distracting information according to task demands. The Efficient Local Attention (ELA) [28] mechanism aims to capture spatial attention efficiently without reducing the channel dimension. The mechanism is inspired by the Coordinate Attention (CA) [29] approach and addresses its limitations by using 1D convolution and group normalization (GN) [30] instead of 2D convolution and batch normalization (BN) [31]. The ELA mechanism captures feature vectors in both the horizontal and vertical directions through strip pooling, maintains a narrow convolutional kernel shape to capture long-range dependencies, pays more attention to local box features, and prevents regions unrelated to the box target from affecting label prediction, thus improving the accuracy of box detection. The ELA module is depicted in Figure 4.

Given an input feature map $X \in \mathbb{R}^{C \times H \times W}$, ELA first applies strip pooling along the horizontal and vertical directions to extract direction-sensitive global features. For the $c$-th channel, the pooling outputs in the horizontal and vertical directions are given by (1) and (2).

$$z_c^h(h) = \frac{1}{W} \sum_{0 \le i < W} x_c(h, i) \tag{1}$$

$$z_c^w(w) = \frac{1}{H} \sum_{0 \le j < H} x_c(j, w) \tag{2}$$

Here, $x_c$ denotes the $c$-th channel of the input feature map. The dimensions of the feature map are denoted by $H$ and $W$, representing its height and width, respectively. The target height position is designated by $h$, while $w$ signifies the target width position. The pooling step preserves the spatial location information and provides the directional feature vectors $z^h$ and $z^w$ for subsequent local interactions. Subsequently, 1D convolution is applied to $z^h$ and $z^w$, respectively, to capture the correlation of neighboring locations. The convolution results are processed by GroupNorm to enhance the model’s generalizability, and then passed through a Sigmoid function to generate directional attention weights, as follows:

$$y^h = \sigma\left(G_n\left(F_h\left(z^h\right)\right)\right) \tag{3}$$

$$y^w = \sigma\left(G_n\left(F_w\left(z^w\right)\right)\right) \tag{4}$$

where $F_h$ and $F_w$ are the 1D convolutions, $G_n$ represents group normalization, and $\sigma$ is the Sigmoid function. The final output feature map is obtained by element-wise multiplication to integrate bidirectional attention:

$$Y = x_c \odot y^h \odot y^w \tag{5}$$

where ⊙ denotes element-wise multiplication.
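The pooling, gating, and re-weighting steps can be sketched for a single channel in plain Python. This is an illustrative simplification, not the authors' implementation: the 1D kernel is a fixed assumed list instead of learned weights, and group normalization is reduced to normalizing each strip vector to zero mean and unit variance (one group, no affine parameters).

```python
import math


def strip_pool(x):
    """Average each row (horizontal strip) and each column (vertical strip) of an H x W map."""
    H, W = len(x), len(x[0])
    z_h = [sum(row) / W for row in x]
    z_w = [sum(x[j][w] for j in range(H)) / H for w in range(W)]
    return z_h, z_w


def conv1d_same(v, kernel):
    """1D convolution with zero padding; assumes an odd-length kernel."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(v) + [0.0] * pad
    return [sum(kernel[t] * padded[i + t] for t in range(len(kernel)))
            for i in range(len(v))]


def normalize(v, eps=1e-5):
    """Simplified stand-in for GroupNorm: zero mean, unit variance over the strip."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def ela_single_channel(x, kernel):
    """Re-weight x by row-wise and column-wise attention weights in (0, 1)."""
    z_h, z_w = strip_pool(x)
    y_h = [sigmoid(a) for a in normalize(conv1d_same(z_h, kernel))]
    y_w = [sigmoid(a) for a in normalize(conv1d_same(z_w, kernel))]
    return [[x[h][w] * y_h[h] * y_w[w] for w in range(len(z_w))]
            for h in range(len(z_h))]


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
out = ela_single_channel(x, [0.25, 0.5, 0.25])
```

Because both attention weights lie strictly between 0 and 1, each positive input value is scaled down, with rows and columns that respond more strongly being attenuated less.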

To enhance the model’s local feature extraction capability, the ELA-HSFPN architecture was designed by integrating the ELA mechanism into the HSFPN. HSFPN divides the feature pyramid into multiple sub-pyramids, each with its own feature fusion and upsampling operations. First, the backbone network extracts multi-level feature maps, where lower-level features contain richer spatial information, while higher-level features provide stronger semantic information. Then, the feature fusion network utilizes the ELA module to refine the features, employing 1 × 1 convolution to reduce channel dimensions and fuse low- and high-level features. Finally, the top-down feature fusion module progressively upsamples and transfers high-level features to lower levels through skip connections, enhancing the multi-scale feature representation. This architecture enables multi-level feature fusion, which improves the model’s performance in detecting boxes of different sizes. The ELA-HSFPN structure is illustrated in Figure 5.

2.3. WIoU Loss Functions

The YOLOv8 loss function consists of multiple components, including classification loss (VFL loss) [32] and regression loss, which uses CIoU loss [33] for bounding box regression. However, CIoU loss does not account for the balance between high-quality and low-quality samples in the dataset. Since most box datasets contain objects with features similar to the background, there is an imbalance between samples of different qualities. To address this limitation, the improved network adopts Wise-IoU (WIoU) [34] as the loss function. The core of WIoU lies in the introduction of an outlier degree metric and a dynamic fuzzy gain allocation strategy. Specifically, WIoU achieves dynamic gradient allocation through a three-level optimization process. The base loss (WIoUv1) enhances local details of the center points by incorporating a distance attention factor, $R_{WIoU}$. $R_{WIoU}$ focuses on the center distance between anchor boxes and ground-truth boxes; anchors with lower overlap receive higher $R_{WIoU}$ values.

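$$L_{WIoUv1} = R_{WIoU} \, L_{IoU} \tag{6}$$

$$R_{WIoU} = \exp\left(\frac{(x - x_{gt})^2 + (y - y_{gt})^2}{\left(W_g^2 + H_g^2\right)^*}\right) \tag{7}$$

The variables $W_g$ and $H_g$ represent the width and height of the minimum enclosing box, while $x_{gt}$ and $y_{gt}$ denote the center coordinates of the target box. $x$ and $y$ are the center coordinates of the anchor box, and the superscript $*$ indicates that the term is detached from the gradient computation.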

To further reduce the loss contribution from low-quality samples, a dynamic non-monotonic focusing mechanism is introduced on top of WIoUv1. By integrating the non-monotonic focus factor $r$ with the base loss, WIoUv3 is constructed, as shown in (8) to (10).

$$L_{WIoUv3} = r \, L_{WIoUv1} \tag{8}$$

$$r = \frac{\beta}{\delta \alpha^{\beta - \delta}} \tag{9}$$

$$\beta = \frac{L_{IoU}^*}{\overline{L_{IoU}}} \in [0, +\infty) \tag{10}$$

where $L_{IoU}^*$ represents the monotonic focus factor, $\beta$ denotes the outlier degree of the anchor box, $r$ is the non-monotonic focus factor, $\overline{L_{IoU}}$ is the running mean of $L_{IoU}$, and $\alpha$ and $\delta$ are hyperparameters. A lower $\beta$ indicates a high-quality anchor box, while a higher $\beta$ indicates possible annotation noise or localization errors. By constructing the non-monotonic focus factor $r$ using the outlier degree and the parameters $\alpha$ and $\delta$, WIoU effectively prevents low-quality samples from generating harmful gradients, enhances the focus on correctly labeled samples, and improves the accuracy of the network model.
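A minimal numerical sketch of this loss for axis-aligned boxes in `(x1, y1, x2, y2)` format follows. It assumes the WIoU formulation of [34]; the default values `alpha=1.9` and `delta=3.0` are assumed typical settings rather than values stated in this paper, the running mean of the IoU loss is passed in as a plain number, and gradient detachment is not modeled because no automatic differentiation is involved. Function names are hypothetical.

```python
import math


def iou_xyxy(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def wiou_v1(pred, gt):
    """Return (WIoU v1 loss, plain IoU loss) for one predicted box and its target."""
    l_iou = 1.0 - iou_xyxy(pred, gt)
    px, py = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gx, gy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    # Width and height of the smallest box enclosing both boxes.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    r_wiou = math.exp(((px - gx) ** 2 + (py - gy) ** 2) / (wg ** 2 + hg ** 2))
    return r_wiou * l_iou, l_iou


def focus_factor(beta, alpha=1.9, delta=3.0):
    """Non-monotonic focus factor r; it equals 1 when beta == delta."""
    return beta / (delta * alpha ** (beta - delta))


def wiou_v3(pred, gt, mean_l_iou, alpha=1.9, delta=3.0):
    """WIoU v3 loss; mean_l_iou is the (assumed given) running mean of the IoU loss."""
    loss_v1, l_iou = wiou_v1(pred, gt)
    beta = l_iou / mean_l_iou  # outlier degree of this anchor box
    return focus_factor(beta, alpha, delta) * loss_v1


loss = wiou_v3((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0), mean_l_iou=0.5)
```

Anchors whose IoU loss is far above the running mean receive a large outlier degree and therefore a small focus factor, which is how low-quality samples are kept from dominating the gradient.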

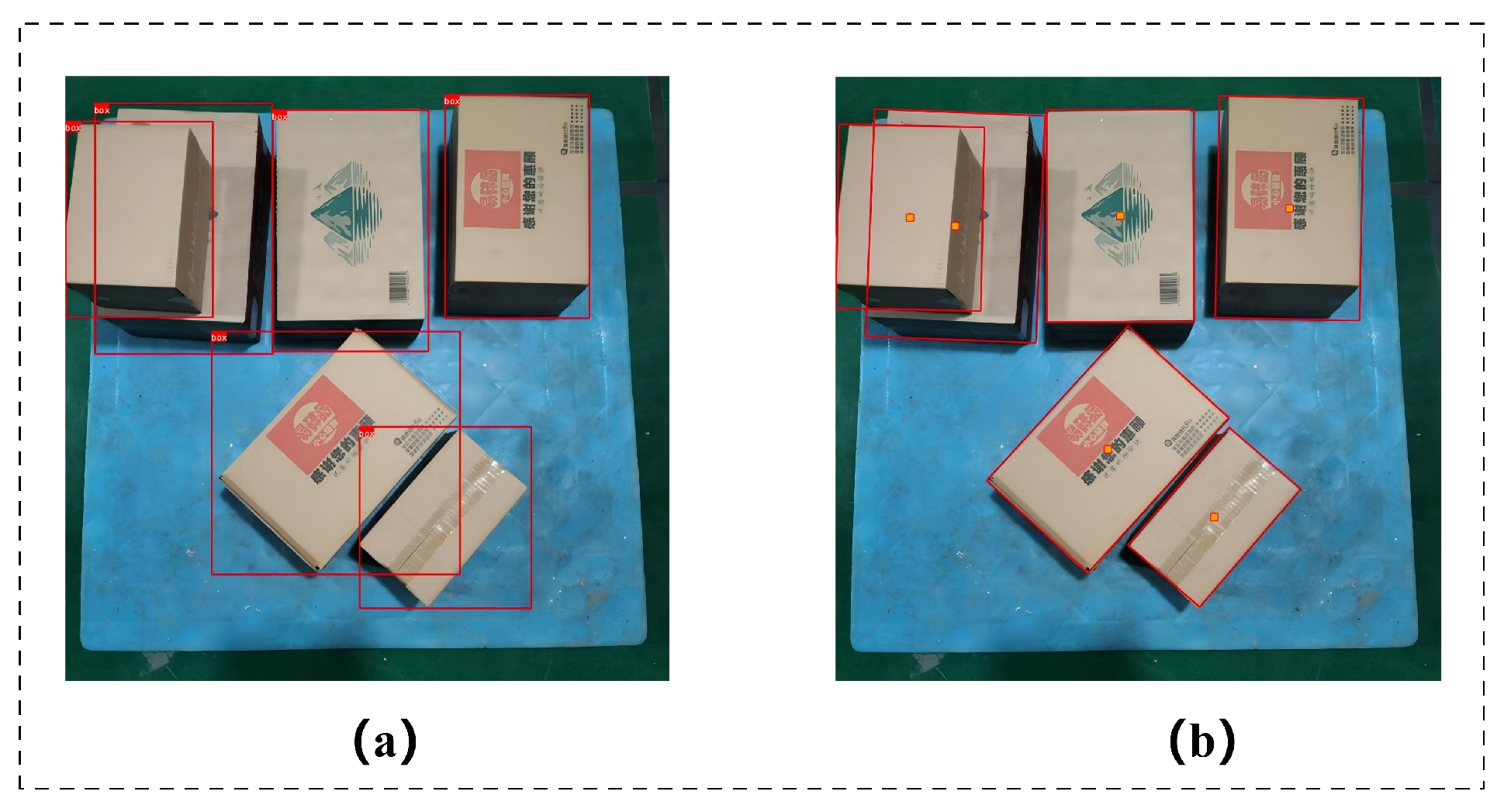

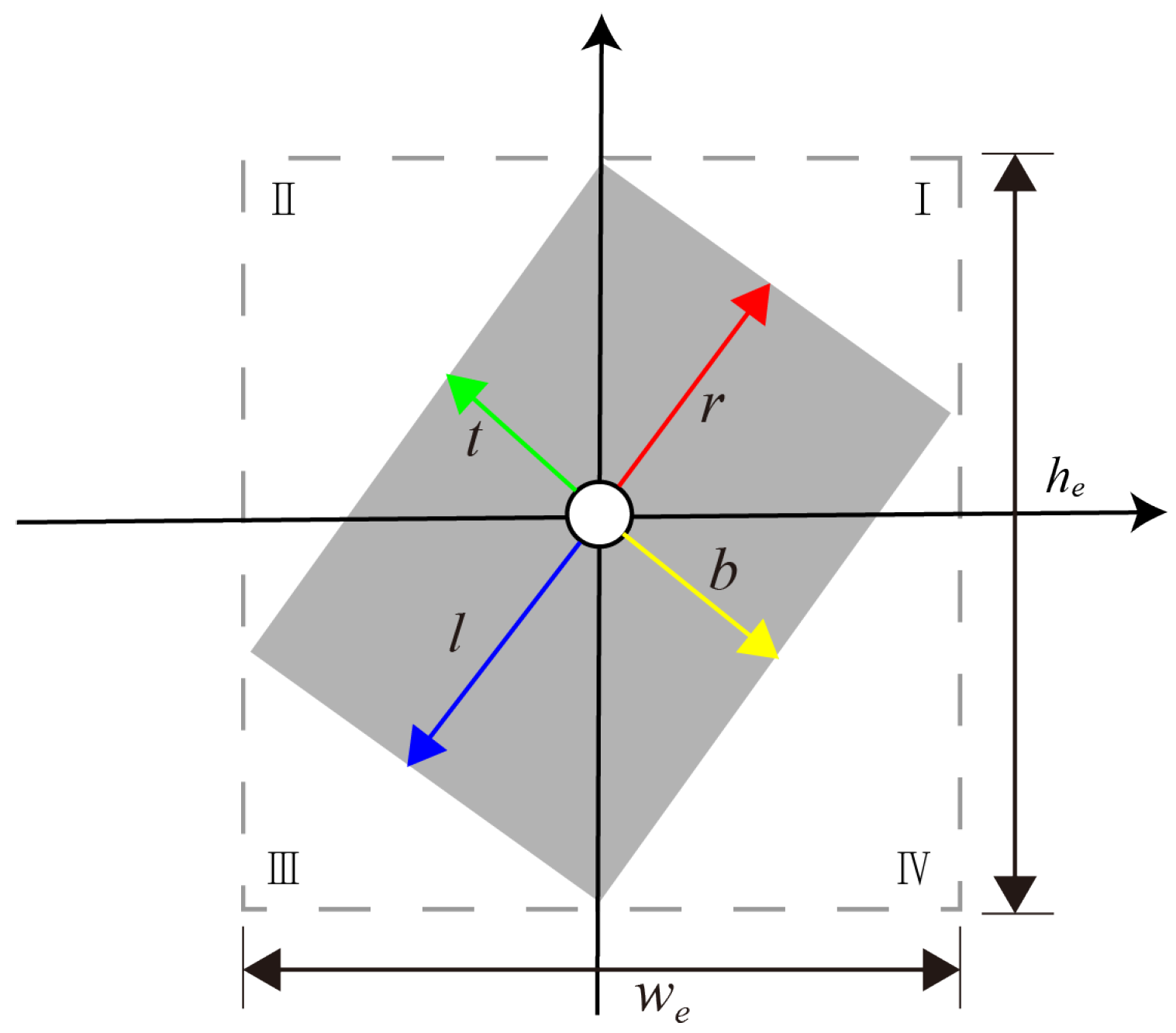

2.4. Incorporating Rotational Angle Regression

In the field of industrial logistics automation, object detection methods based on axis-aligned bounding boxes (AABB) [35] have long been the dominant approach due to their structural simplicity and computational efficiency. However, in real-world logistics scenarios, box-shaped objects often exhibit irregular rotations, as illustrated in Figure 6a. When an object is rotated, an axis-aligned bounding box encloses excessive background, which degrades localization accuracy. Figure 6b compares rotated bounding boxes with horizontal bounding boxes and shows that the latter often encompass background areas such as pallets, leading to inaccurate object localization. Additionally, horizontal bounding boxes may overlap with other boxes, further reducing detection accuracy. In contrast, rotated bounding boxes represent object boundaries more accurately, making them a more suitable annotation method for logistics box detection. Therefore, oriented bounding boxes (OBB) with an angular representation are used in the data annotation and modeling process. Each rotated bounding box in the data annotation is represented by five parameters $(x, y, w, h, \theta)$, where $(x, y)$ are the coordinates of the center point of the box, $w$ and $h$ represent the width and height of the box when it is not rotated, respectively, and $\theta$ represents the angle by which the long edge of the box is rotated counterclockwise from the positive horizontal direction of the image.
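The amount of background that a horizontal box encloses around a rotated rectangle can be estimated geometrically. The sketch below is an illustration under the assumption of an ideal rectangle of width `w` and height `h` rotated by `theta_deg` degrees; the function name is hypothetical and the numbers are not measurements from the paper.

```python
import math


def hbb_background_fraction(w, h, theta_deg):
    """Fraction of the tightest axis-aligned box that is NOT covered by the rotated rectangle."""
    t = math.radians(theta_deg)
    c, s = abs(math.cos(t)), abs(math.sin(t))
    hbb_w = w * c + h * s   # horizontal extent of the rotated rectangle
    hbb_h = w * s + h * c   # vertical extent of the rotated rectangle
    return 1.0 - (w * h) / (hbb_w * hbb_h)


unrotated = hbb_background_fraction(2.0, 1.0, 0.0)
square_45 = hbb_background_fraction(1.0, 1.0, 45.0)
```

An unrotated box leaves no background, whereas a square rotated by 45° fills only half of its horizontal bounding box.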

Despite the superior spatial representation capability of the OBB, directly adopting angular regression poses several challenges. On the one hand, the rotation angle is periodic: two angles at opposite ends of the angle range represent nearly the same direction but differ greatly in value, which can easily lead to gradient discontinuity. On the other hand, box sizes differ significantly: the small offset values of small boxes are difficult to learn, while the large offset values of large boxes can easily trigger gradient explosion, resulting in training instability. To alleviate these problems, this paper introduces the SAVN (Scale-Adaptive Vector Normalization) strategy into the OBB detection framework and constructs the regression structure based on BBAVectors (Box Boundary-Aware Vectors). Specifically, the network first uses the Box Param branch to regress the outer width and outer height of the rotated box, which are defined as the dimensions of the smallest axis-aligned rectangle that completely encloses the rotated box horizontally and vertically, respectively. Then, at the center point $\mathbf{c}$, the network simultaneously regresses the four boundary-aware vectors ($\mathbf{t}$, $\mathbf{r}$, $\mathbf{b}$, $\mathbf{l}$). As shown in Figure 7, $\mathbf{t}$ (top) denotes the vertical offset from the center point to the upper boundary of the box, $\mathbf{r}$ (right) denotes the horizontal offset from the center point to the right boundary of the box, $\mathbf{b}$ (bottom) denotes the vertical offset from the center point to the lower boundary of the box, and $\mathbf{l}$ (left) denotes the horizontal offset from the center point to the left boundary of the box.

To eliminate the influence of target scale on training, the vectors are normalized so that the offset values reflect only the relative geometric relationship rather than the absolute pixel distance. Because all normalized offsets are mapped to a fixed interval, the network only needs to learn a relative offset ratio that is independent of the absolute size of the target. This alleviates the gradient instability caused by regression values that are too small to fit for small boxes and too large for large boxes. In the inference stage, the normalized offsets predicted at each location are restored to the actual pixel offset vectors $\mathbf{t}$, $\mathbf{r}$, $\mathbf{b}$, and $\mathbf{l}$. Finally, the coordinates of the four vertices of the rotated box are computed by summing the center point with the boundary vectors:

$$\mathbf{tl} = \mathbf{c} + \mathbf{t} + \mathbf{l}, \quad \mathbf{tr} = \mathbf{c} + \mathbf{t} + \mathbf{r}, \quad \mathbf{br} = \mathbf{c} + \mathbf{b} + \mathbf{r}, \quad \mathbf{bl} = \mathbf{c} + \mathbf{b} + \mathbf{l}$$

where $\mathbf{c}$ is the center point, and $\mathbf{tl}$, $\mathbf{tr}$, $\mathbf{br}$, and $\mathbf{bl}$ represent the coordinates of the four vertices of the rotated bounding box: $\mathbf{tl}$ (top-left) represents the upper-left corner, $\mathbf{tr}$ (top-right) represents the upper-right corner, $\mathbf{br}$ (bottom-right) represents the lower-right corner, and $\mathbf{bl}$ (bottom-left) represents the lower-left corner.
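The vertex computation can be sketched in a few lines. The example assumes, as in the BBAVectors formulation, that each boundary vector points from the center to the midpoint of the corresponding edge and that image coordinates are used (y grows downward); the function name and the sample values are hypothetical.

```python
def obb_vertices(center, t, r, b, l):
    """Return (tl, tr, br, bl) corners from a center point and four boundary vectors."""
    cx, cy = center

    def corner(u, v):
        # A corner is the center plus the two adjacent edge-midpoint vectors.
        return (cx + u[0] + v[0], cy + u[1] + v[1])

    return corner(t, l), corner(t, r), corner(b, r), corner(b, l)


# Axis-aligned 4 x 2 box centered at the origin (y axis pointing down).
axis_aligned = obb_vertices((0.0, 0.0), (0.0, -1.0), (2.0, 0.0), (0.0, 1.0), (-2.0, 0.0))

# Square rotated by 45 degrees, centered at the origin.
rotated = obb_vertices((0.0, 0.0), (1.0, -1.0), (1.0, 1.0), (-1.0, 1.0), (-1.0, -1.0))
```

Because the corners are obtained by vector sums only, no explicit angle has to be regressed or decoded at this step.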

6. Conclusions

This paper proposed REW-YOLO, a network model based on YOLOv8 designed to address the complex detection challenges of box stacking, rotation, and occlusion in logistics scenarios. REW-YOLO integrates structural reparameterization techniques and direction-aware attention mechanisms. The lightweight C2f-RVB module was constructed on the original C2f structure by combining the global modeling capability of RepViTBlock with the local feature extraction advantages of depthwise separable convolutions, thereby reducing the number of parameters to 2.18 M and computational redundancy by 28%. The ELA-HSFPN hybrid network was designed to generate direction-sensitive attention weights using strip pooling and 1D convolution, enhancing the contour features of occluded boxes and improving multi-scale occluded-target recall by 4.2%. A rotation angle regression branch was introduced to position rotated boxes accurately, together with a dynamic Wise-IoU v3 loss function that balances the sample quality distribution of the dataset through an outlier-aware gradient allocation strategy.

Experimental results demonstrate that the model achieves 88.6% mAP50 and real-time performance of 130.8 FPS on the self-constructed BOX-data dataset. The REW-YOLO model meets the accuracy requirements of the warehouse logistics box recognition system. Compared with other lightweight detection frameworks, REW-YOLO shows superior performance in scenarios involving dense object placement, complex orientations, and severe occlusion, achieving a better balance between model compactness and robustness.

Tests conducted in real-world environments showed relatively low confidence scores for heavily occluded boxes, resulting in a number of missed detections or misclassifications. Although random occlusion-based data augmentation was applied during preprocessing, the model did not fully account for occlusions occurring at different angles. Additionally, we observed a significant increase in misdetection rates when the occlusion ratio reached approximately 70% or higher. In the future, we will incorporate a wider range of occlusion angles and extreme scenarios into the dataset and analyze multi-view images to improve the robustness and adaptability of the model.
