Abstract

Deep Neural Networks (DNNs) are increasingly used in clinical decision support systems for the detection and accurate modeling of coronary arterial plaques. However, efficient plaque characterization in time-constrained settings is still an open problem. This study has three aims: to develop a novel automated classification architecture viable for the real-time clinical detection and classification of coronary artery plaques, to use a novel dataset of OCT images for data augmentation, and to validate the efficacy of transfer learning for arterial plaque classification. To this end, a novel time-efficient classification architecture based on DNNs is proposed. A new dataset consisting of in-vivo patient Optical Coherence Tomography (OCT) images labeled by three trained experts was created and dynamically programmed. Generative Adversarial Networks (GANs) were used to populate the coronary arterial plaques dataset. We removed the fully connected layers, including softmax and the cross-entropy, from the GoogleNet framework and replaced them with Support Vector Machines (SVMs). Our proposed architecture limits weight update cycles to the modified layers only and computes the global hyper-plane in a time-competitive fashion. Transfer learning was used for high-level discriminative feature learning. Cross-entropy loss was minimized using the Adam optimizer for model training. A train-validation scheme was used to determine the classification accuracy. Automated plaque differentiation, in addition to detection, was found to agree with the clinical findings. Our customized fused classification scheme outperforms other leading reported works with an overall accuracy of 96.84% and a multi-fold reduction in elapsed time, making it a viable choice for real-time clinical settings.

1. Introduction

Deep Neural Networks (DNNs) are fueling medical imaging technology, medical diagnostics, and healthcare in general [1]. DNNs also continue to be of significance in the field of cardiovascular imaging for the detection of coronary arterial plaques [2,3]. Coronary plaques are cholesterol deposits in the wall of the heart arteries and are the leading cause of death globally (projected one in four deaths). According to the World Health Organization (WHO), 85% of these deaths were due to plaque buildup that resulted in the narrowing of the coronary arteries through a process termed atherosclerosis.

Each layer of a DNN optimizes its weights based on the Boltzmann machine [4] to avoid overfitting and vanishing gradients when used in cardiac imaging problems. DNNs with sufficient depth can foster compact representations that require fewer training examples to tune the parameters and produce better classification results [5,6]. Because of their ability to correlate deep features across layers, they are preferred over conventional architectures for improved patient outcomes [7,8,9,10,11,12]. However, the sheer number of parameters sometimes leads to overfitting and poor generalization [13], which paved the way for the evolution of other leading architectures including AlexNet, ResNet, and GoogleNet [14,15,16,17,18]. These deep learning models embed Cartesian and polar image representations for multi-path classification architectures [19,20,21], but at the cost of computational effort.

The aim of this paper is to develop a time-efficient classification architecture for real-time clinical support systems and to validate Generative Adversarial Networks (GANs) for data augmentation. We propose a time-efficient hybrid fused Convolutional Neural Network (CNN) classifier. Our proposed architecture, with Support Vector Machines (SVMs) embedded in the high-density layers, reduces the computational burden. Data augmentation was done to remove the class imbalance [22,23]. The objective functions of the generator and discriminator were optimized via the gradient descent method so that the generated samples closely mimic real images. Transfer learning was used to train on our OCT dataset by freezing the early layers of the architecture and fine-tuning them later on. For ground-truth annotation, three trained experts with daily experience in OCT-assisted interventions determined the plaque type in an A-scan. Each expert received a partly shared and partly distinct set of images for labeling. For the shared images, the final label was determined by consensus between the experts. The proposed architecture recorded the best classification performance in minimal time compared to other leading architectures, with potential viability for enhancing decision-making in clinical settings.

2. Methods

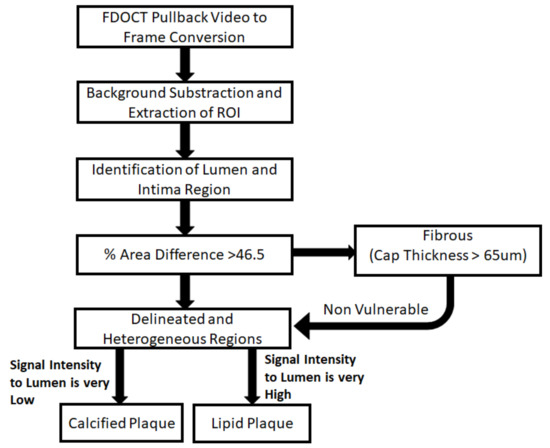

A novel dataset of 53 coronary stenoses in 39 patients was assessed and labeled using an available OCT system (C7-XR, St. Jude Medical, St. Paul, MA, USA) with the C7 Dragonfly intravascular OCT catheter (St. Jude). This system provides spatial resolution up to 10 µm and tissue penetration up to 3 mm. Target vessels were those with stenosis (>30% diameter stenosis by visual estimation). Serial stenosis, left main stenosis, by-pass graft stenosis, and anatomical characteristics were excluded from the study, as these would distort the results. The study was approved by the Galway Clinical Research Ethics Committee (GCREC), and informed consent was obtained from the patients. The dataset was built from acquired OCT images containing A-scans. Each expert received a partly shared and partly distinct set of images for labeling. For the shared images, the final label was determined by consensus between the experts. Classification was performed as the particular label versus the rest. Preprocessing steps were applied to the raw acquired OCT images, and the A-line values within the guidewire were set to zero. Vulnerable plaques were determined and excluded using the flow diagram illustrated in Figure 1.
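The labeling protocol above (a consensus label for images seen by several experts, followed by "particular label versus the rest" classification) can be sketched in a few lines. This is a minimal illustration, not the authors' procedure: majority voting is assumed as a stand-in for the consensus step, and the function names `consensus_label` and `one_vs_rest` are hypothetical.

```python
from collections import Counter


def consensus_label(expert_labels):
    """Return the majority label among the experts, or None without a strict majority.

    Majority voting is an assumption; the text only states that consensus was used.
    """
    label, votes = Counter(expert_labels).most_common(1)[0]
    return label if votes > len(expert_labels) / 2 else None


def one_vs_rest(labels, positive_class):
    """Binary targets: 1 for the chosen class, 0 for every other label."""
    return [1 if label == positive_class else 0 for label in labels]


# Made-up votes from three experts for three images (illustration only).
votes_per_image = [
    ["calcified", "calcified", "lipid"],
    ["mixed", "lipid", "calcified"],
    ["no plaque", "no plaque", "no plaque"],
]
final_labels = [consensus_label(votes) for votes in votes_per_image]
print(final_labels)
print(one_vs_rest(final_labels, "calcified"))
```

Images without a strict majority come back as `None` here, so they can be routed to a joint review instead of receiving an arbitrary label.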

Figure 1.

A schematic flow of the steps performed by the clinicians for the detection and characterization of different arterial plaques.

Finally, 20% of the images were labeled as “calcified,” 20% as “lipid plaque,” 15% as “fibro-lipidic plaque,” 15% as “fibro-calcified,” 5% as “mixed plaque,” and 25% as “no plaque”.
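These shares show how uneven the classes are before augmentation. As a rough sketch, the number of synthetic images each class would need in order to match the largest class can be computed directly from the percentages. The dataset size of 1000 and the balance-to-the-largest-class target are assumptions made for illustration; the helper name `synthetic_needed` is hypothetical.

```python
# Class shares reported in the text (percent of labeled images).
class_share = {
    "calcified": 20,
    "lipid plaque": 20,
    "fibro-lipidic plaque": 15,
    "fibro-calcified": 15,
    "mixed plaque": 5,
    "no plaque": 25,
}


def synthetic_needed(shares, total_images):
    """Synthetic images per class needed to match the largest class.

    `total_images` is a hypothetical dataset size, not a figure from the paper.
    """
    counts = {name: round(total_images * pct / 100) for name, pct in shares.items()}
    target = max(counts.values())
    return {name: target - count for name, count in counts.items()}


needed = synthetic_needed(class_share, total_images=1000)
for name, extra in needed.items():
    print(f"{name:22s} +{extra}")
```

Under these assumptions the "mixed plaque" class needs the most synthetic data, four times its original count, which is where GAN-based augmentation matters most.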

2.1. Data Augmentation Using GANs

For data augmentation, we used GANs, as these networks facilitate hybridization and ease the merging of pre-existing models [24,25,26,27]. A GAN generator containing a fully connected layer was used; it projects the input to the subsequent layers using strided convolutions, as presented in Figure 2. We approximated the unknown data distribution through a generator that maps samples from a fixed prior distribution. The Generator (G) was trained in parallel with a Discriminator (D) by searching for a saddle point. Batch normalization was performed in each layer.
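The saddle-point search can be written out explicitly. The paper does not print its objective, so the two forms below are assumptions: the first is the standard GAN value function, and the second is the conditional Wasserstein variant suggested by the CWGAN named in Figure 2, where D acts as a 1-Lipschitz critic and y is the class label. The symbols x, z, y, p_data and p_z are notation introduced here for illustration.

```latex
\min_{G}\,\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]

\min_{G}\,\max_{\lVert D \rVert_{L} \le 1}\;
  \mathbb{E}_{(x, y) \sim p_{\text{data}}}\big[D(x \mid y)\big]
  - \mathbb{E}_{z \sim p_{z},\, y}\big[D(G(z \mid y) \mid y)\big]
```

In both cases G minimizes what D maximizes, so training stops at a saddle point rather than at a minimum of a single loss.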

Figure 2.

An illustration of the CWGAN routine used for data synthesis.

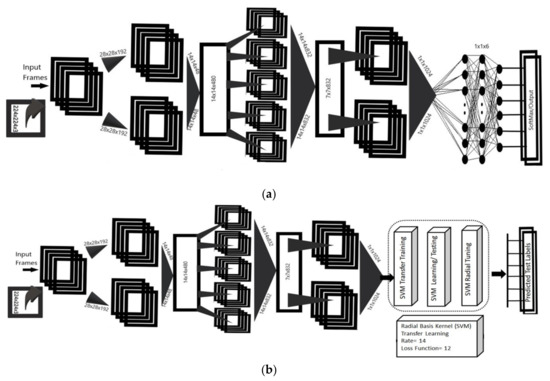

The generator creates realistic samples, and the discriminator is then used to distinguish between real images and real-like copies, as illustrated in Figure 2.

Figure 3.

The models applied to our dataset. The upper architecture (a) is the CNN (GoogleNet); the lower (b) is the proposed fused CNN architecture. Each block shows the number of input and output feature maps. Our modified model replaces the fully connected layers with an RBF multiclass SVM within the CNN model for classification.

2.2. Proposed Fused Deep Learning Classifier

Figure 3a represents the leading CNN architecture (GoogleNet), where each block represents the input and output feature maps. Figure 3b is our proposed fused classification architecture, where the densely connected layers of the CNN were replaced by SVMs, as indicated by the dotted blocks. A Radial Basis Function (RBF) kernel was used to ensure faster convergence of the global hyper-plane. As reported elsewhere [28,29], we implemented transfer learning to ensure improved learning in the target domain. The labeled data was fed to the classifier and, via transfer learning, different features were extracted at different levels in the network. For transfer learning, we used GoogleNet as our pre-trained model and removed its final layer. Then, we unfroze convolution layers 4 and 5 while keeping the first three blocks frozen for the second pass of training. Finally, the replaced end layers were trained with all convolutional layers of the module (GoogleNet) frozen. Fine-tuning was done by removing the fully connected nodes and embedding new layers, as illustrated in Figure 3b.

We normalized our network predictions based on the cross-entropy between the true label distribution and the predicted label. Hinge loss was set up for the SVM classifier based on maximum-margin classification. For model training, we minimized the cross-entropy loss using the Adam optimizer with a learning rate of Lr = 10⁻⁴. To find the optimal schedule, we reduced the learning rate by a factor of two when the validation error saturated. In total, we trained each model for 100 epochs.
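The learning-rate rule described above (start at 10⁻⁴, halve when the validation error saturates) can be sketched as follows. The paper does not define "saturated", so the `patience` and `min_delta` thresholds are hypothetical, as is the function name `halve_on_plateau`; only the starting rate and the factor of two come from the text.

```python
def halve_on_plateau(val_errors, initial_lr=1e-4, patience=3, min_delta=1e-3):
    """Return the learning rate used at each epoch.

    The rate is halved whenever the validation error has failed to improve by
    at least `min_delta` for `patience` consecutive epochs. Both thresholds are
    assumptions; the text only gives the start value and the factor of two.
    """
    lr = initial_lr
    best = float("inf")
    stale = 0
    schedule = []
    for err in val_errors:
        schedule.append(lr)
        if err < best - min_delta:
            best = err
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                lr /= 2.0
                stale = 0
    return schedule


# Made-up validation errors that flatten out after the third epoch.
errors = [0.50, 0.40, 0.35, 0.35, 0.35, 0.35, 0.34, 0.34]
schedule = halve_on_plateau(errors)
print(schedule)
```

In this toy run the rate stays at 10⁻⁴ for six epochs and drops to half that value once three consecutive epochs show no meaningful improvement.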

In our model, the intermediate output value Z was obtained by convolving the input data A from the previous layer with the weight tensor W, as indicated in Equation (1). The model was trained without partitioning the replicas for memory optimization.

where l is the layer index and b is the bias term.
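Read with l as the layer index, this description corresponds to the standard convolutional pre-activation. The form below is an assumed reconstruction of Equation (1) based on the symbols Z, A, W, b and l named in the text, with * denoting convolution:

```latex
Z^{[l]} = W^{[l]} * A^{[l-1]} + b^{[l]}
```

Here A^{[l-1]} is the output of the previous layer, so the expression is applied once per layer as the signal moves through the network.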

We minimized the cross-entropy loss L in our classification tasks using Equation (2).

where m represents the number of classes, y is the ground truth label, and Y represents the softmax normalized model prediction.
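With these definitions, the loss takes the usual categorical cross-entropy form. The expression below is an assumed reconstruction of Equation (2); the logits z_i feeding the softmax are notation introduced here and do not appear in the text.

```latex
L = -\sum_{i=1}^{m} y_i \,\log Y_i,
\qquad
Y_i = \frac{e^{z_i}}{\sum_{j=1}^{m} e^{z_j}}
```

For a one-hot ground truth y, only the term of the correct class survives, so L reduces to the negative log of the probability assigned to that class.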

Our model uses specific learning rates and suitable hyper-parameters, including epoch count, batch size, and filter counts for each layer. The Lagrangian function (LF) loss for back-propagation was computed using the expression in Equation (3).

where a_i represents the Lagrangian multiplier and Rb is the Radial Basis Kernel function.
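A common expression matching these symbols is the dual Lagrangian of a kernel SVM. It is shown here as an assumed form of Equation (3), and the paper's exact expression may differ. The sample count n, feature vectors x_i, binary targets y_i in {-1, +1}, kernel width gamma, and box constraint C are all notation introduced for illustration.

```latex
LF(a) = \sum_{i=1}^{n} a_i
  - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} a_i\, a_j\, y_i\, y_j\, R_b(x_i, x_j),
\qquad
R_b(x_i, x_j) = \exp\!\big(-\gamma \,\lVert x_i - x_j \rVert^{2}\big)

\text{subject to}\quad 0 \le a_i \le C, \qquad \sum_{i=1}^{n} a_i\, y_i = 0
```

In a one-versus-rest setting, one such problem would be solved per plaque class, with x_i taken as the deep features produced by the convolutional layers.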

3. Results and Discussion

The advantage of our proposed solution is that it not only detects but also characterizes coronary arterial plaques in real-time clinical settings. It provides better classification accuracy with much less computational effort than the other leading classification architectures. Data augmentation through GANs proved useful for our limited arterial plaques dataset. However, generalization to other clinical datasets with different data variability is yet to be validated. Similarly, the effect of distributional shift caused by external factors is another key issue.

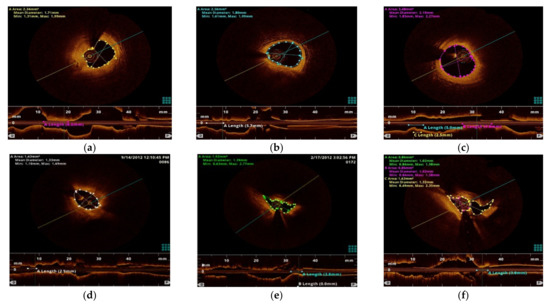

For all the experiments presented in this section, a train-validation-test scheme was used. The presented results were calculated on the test set, while the hyper-parameters were selected on the validation set. In the rest of this section, we compare the performance of different leading CNN-based classification architectures with our proposed fused CNN architecture on this dataset. The calculated results were cross-verified against the experts’ preliminary markings, and the automated characterization results are illustrated in Figure 4.

Figure 4.

Characterization of coronary plaques using the developed scheme: (a) outlines fibrous plaque; (b) marks calcified plaque; (c) differentiates lipid plaque; (d) highlights fibro-lipidic plaque; (e) identifies fibro-calcific plaque; (f) represents mixed plaque, based on the proposed architecture of Figure 3b.

The confusion matrix was computed together with the True Positive Rate (TPR), True Negative Rate (TNR), False Positive Rate (FPR), and False Negative Rate (FNR), along with the Positive Predictive Value (PPV) and Negative Predictive Value (NPV) [30]. This is presented in Table 1.

Table 1.

Confusion Matrix for AlexNet Architecture.

The numbering on the left side and at the bottom of Table 1 represents the different classes. Diagonal elements in the confusion matrix represent the number of correctly predicted frames for each class label during testing. The cells in the last column indicate the percentage of positive predictions that were correct (precision, i.e., PPV) for each class. The precision for AlexNet remained above 77% for each of the six classes. The cells in the last row indicate the sensitivity of the model. Finally, the last diagonal cell gives the overall accuracy, which was 81.6%. After the training phase, the model was cross-validated by picking a few random frames to check its accuracy against real-time frames. The same procedure was repeated for the DenseNet model, and the results are shown in Table 2. GoogleNet detected calcified plaque with a PPV of 58.3% and an overall accuracy of 80.2%, whereas Table 3 shows that our proposed architecture achieved the highest classification accuracy of 96.84%.
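The per-class quantities discussed above follow mechanically from a confusion matrix. The sketch below shows one way to derive them. The matrix orientation (rows as true classes, columns as predictions) is an assumption, the counts are made up, and the function name `per_class_metrics` is hypothetical.

```python
def per_class_metrics(cm):
    """Compute one-vs-rest metrics from a square confusion matrix.

    cm[i][j] is the number of frames with true class i and predicted class j.
    This orientation is an assumption; transpose the matrix if a table is laid
    out the other way. Classes that never occur or are never predicted would
    cause a division by zero and need separate handling.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp
        fp = sum(cm[i][k] for i in range(n)) - tp
        tn = total - tp - fn - fp
        metrics.append({
            "TPR": tp / (tp + fn),  # sensitivity (recall)
            "TNR": tn / (tn + fp),  # specificity
            "PPV": tp / (tp + fp),  # precision
            "NPV": tn / (tn + fn),
        })
    accuracy = sum(cm[k][k] for k in range(n)) / total
    return metrics, accuracy


# Toy 3-class matrix with made-up counts, for illustration only.
toy = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 2, 8],
]
metrics, accuracy = per_class_metrics(toy)
print(round(accuracy, 3))
print({name: round(value, 3) for name, value in metrics[0].items()})
```

The false positive and false negative rates follow as FPR = 1 - TNR and FNR = 1 - TPR, so they do not need to be stored separately.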

Table 2.

Confusion Matrix for DenseNet architecture.

Table 3.

Confusion Matrix for the proposed CNN architecture.

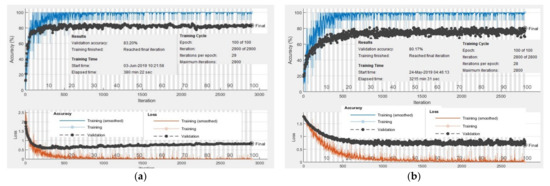

Figure 5a shows the training process of AlexNet with a layer size of 25. AlexNet was fed an input tensor of dimension 227 × 227 × 3, and the learning rate was initially kept at 0.5 for low-level feature learning. It achieved 83.20% validation accuracy for 40 epochs after 29 iterations for the pre-defined six classes. The training process took 2900 iterations, and the elapsed time was 380 min and 22 s. Figure 5b shows that training DenseNet with the same layer size and an input tensor of dimension 227 × 227 × 3 achieved 79.37% validation accuracy. However, the training process took 2900 iterations, and the measured elapsed time was 3215 min and 31 s. Although both architectures provide reasonable accuracy, they suffer from long computation times and are therefore not ideal for real-time clinical decision support.

Figure 5.

Accuracy and loss plots for AlexNet and DenseNet frameworks. (a) represents the validation accuracy, training time, and loss computations for AlexNet. (b) exhibits the validation accuracy, training cycles, and loss profile for DenseNet.

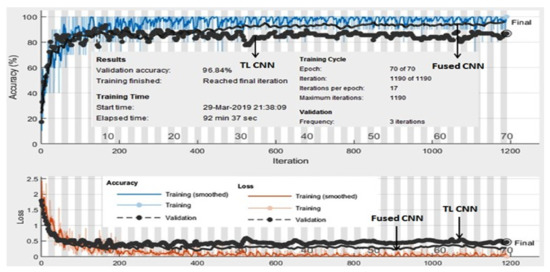

As illustrated in Figure 6, the validation accuracy for GoogleNet was 83.4% with an elapsed time of 92 min and 37 s, whereas our modified architecture, with SVMs embedded in the output layers of GoogleNet, reached an accuracy of 96.84%. This is 16% more than the state-of-the-art GoogleNet, and the elapsed time of 20 s makes the architecture well suited to real-time implementations.
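The elapsed times and accuracies quoted in this section can be put on a common scale with a short calculation. The numbers below are copied from the text; whether the 20 s figure measures the same quantity as the multi-minute training times is not stated, so the ratios are only as meaningful as that comparison. The helper `to_seconds` is a hypothetical name.

```python
def to_seconds(minutes, seconds):
    """Convert a 'min and s' duration to seconds."""
    return 60 * minutes + seconds


# Elapsed times and validation accuracies as quoted in this section.
elapsed = {
    "AlexNet": to_seconds(380, 22),
    "DenseNet": to_seconds(3215, 31),
    "GoogleNet": to_seconds(92, 37),
    "Proposed": 20,
}
val_acc = {"GoogleNet": 83.4, "Proposed": 96.84}

for name, secs in elapsed.items():
    ratio = secs / elapsed["Proposed"]
    print(f"{name:10s} {secs:7d} s  ratio to proposed: {ratio:.1f}x")

gain_points = val_acc["Proposed"] - val_acc["GoogleNet"]
gain_relative = 100 * gain_points / val_acc["GoogleNet"]
print(f"accuracy gain: {gain_points:.2f} points, {gain_relative:.1f}% relative")
```

On these figures the gain over GoogleNet is 13.44 percentage points, or about 16% in relative terms.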

Figure 6.

Accuracy and loss plots for the proposed method and its cross-verification on real-time frame passing.

In this paper, cutting-edge models [31,32,33,34,35,36] were compared to our proposed architecture. DenseNet, AlexNet, and GoogleNet architectures were implemented and tested with our newly created dataset. AlexNet consists of repeated convolutional layers followed by max-pooling and then a few dense-layer operations, whereas DenseNet relies heavily on extensive computation. GoogleNet, with an architecture quite different from both, uses combinations of inception modules and 1 × 1 convolutions for feature selection. Each inception module captures salient features at different levels and concatenates them before feeding them to the next layer. Our proposed architecture is unique in that it takes advantage of multi-level feature extraction, both general (5 × 5) and local (1 × 1), concurrently. The output format of our fully connected layer is 1 × 10, which significantly reduces the number of training parameters compared to a conventional CNN model. By exploiting batch normalization, the sample distribution characteristics within the same layer were preserved while the distribution gap between layers was eliminated.

Table 4 compares the accuracy of the leading architectures with that of our proposed architecture, together with elapsed time, number of iterations, and number of layers involved. Elapsed time was found to be a function of several parameters. The proposed fused CNN took a minimum of 17 iterations per epoch to reach the highest classification accuracy of 96.84%. This inter-comparison substantiates the motivation behind the development of the proposed architecture.

Table 4.

Inter-comparison of the proposed architecture with the leading classification schemes in terms of accuracy and time.

4. Conclusions

We presented an in-depth exploration of plaque detection in OCT pullbacks using hybrid CNNs. We validated our model by creating a new dataset of acquired OCT images labeled by three trained experts. GANs were implemented for the synthetic creation of OCT images. Inserting fully connected SVMs into GoogleNet at the softmax layer leads to better extraction of cross-entropy features and multi-class label prediction. Processing times of a few seconds indicate the potential of our model to be integrated into catheter laboratories for real-time assessment.

Author Contributions

Conceptualization, H.Z. and J.Z.; methodology, H.Z.; J.Z.; and F.S.; software, J.Z.; validation, H.Z. and J.Z.; formal analysis, H.Z.; J.Z. and F.S.; investigation, H.Z. and J.Z.; resources, H.Z. and F.S.; data curation, F.S.; writing—original draft preparation, H.Z., J.Z. and F.S.; writing—review and editing, H.Z., J.Z. and F.S.; visualization, J.Z. and F.S.; supervision, H.Z. and F.S.; project administration, H.Z.; funding acquisition, H.Z. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Galway Clinical Research Ethics Committee.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Acknowledgments

Haroon Zafar is supported by Science Foundation Ireland (SFI) Technology Innovation Development Award (Grant number: 18/TIDA/6017) and Irish Research Council New Foundations Grant 2020. Faisal Sharif is supported by SFI Research Infrastructure Grant (17/RI/5353).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Abdolmanafi, A.; Duong, L.; Dahdah, N.; Adib, I.; Cheriet, F. Characterization of coronary artery pathological formations from OCT imaging using deep learning. Biomed. Opt. Express 2018, 9, 4936–4960.

2. Retson, T.A.; Besser, A.H.; Sall, S.; Golden, D.; Hsiao, A. Machine Learning and Deep Neural Networks in Thoracic and Cardiovascular Imaging. J. Thorac. Imaging 2017, 34, 192–201.

3. Henglin, M.; Stein, G.; Hushcha, P.V.; Snoek, J.; Wiltschko, A.B.; Cheng, S. Machine Learning Approaches in Cardiovascular Imaging. Circ. Cardiovasc. Imaging 2017, 10, 005614.

4. Su, S.; Hu, Z.; Lin, Q.; Hau, W.K.; Gao, Z.; Zhang, H. An artificial neural network method for lumen and media-adventitia border detection in IVUS. Comput. Med. Imaging Graph. 2017, 57, 29–39.

5. Gao, Z.; Chung, J.; Abdelrazek, M.; Leung, S.; Hau, W.K.; Xian, Z.; Li, S. Privileged Modality Distillation for Vessel Border Detection in Intracoronary Imaging. IEEE Trans. Med. Imaging 2020, 39, 1524–1534.

6. Tearney, G.J.; Regar, E.; Akasaka, T.; Adriaenssens, T.; Barlis, P.; Bezerra, H.; Bouma, B.; Bruining, N.; Cho, J.; Chowdhary, S.; et al. Consensus standards for acquisition, measurement, and reporting of intravascular optical coherence tomography studies: A report from the International Working Group for Intravascular Optical Coherence Tomography Standardization and Validation. J. Am. Coll. Cardiol. 2012, 59, 58–72.

7. Lee, J.; Prabhu, D.; Vladislav, N.; Zimin, H.G.; Wilson, D.L. Automated plaque characterization using deep learning on coronary intravascular optical coherence tomographic images. Biomed. Opt. Express 2019, 10, 6497–6515.

8. Zafar, H.; Sharif, F.; Leahy, M. Assessment of coronary artery stenosis with FD-OCT derived blood flow measurements: Relationship with FFR. J. Am. Coll. Cardiol. 2014, 64, 307–311.

9. He, S.; Zheng, J.; Maehara, A. Convolutional neural network based automatic plaque characterization for intracoronary optical coherence tomography images. Med. Imaging 2018, 32, 10574.

10. Abdolmanafi, A.; Duong, L.; Dahdah, N.; Adib, I.; Cheriet, F. Deep feature learning for automatic tissue classification of coronary artery using optical coherence tomography. Biomed. Opt. Express 2017, 8, 1203–1220.

11. Aref, S.; Anchouche, K.; Singh, G.; Slomka, P.; Kolli, K.; Kumar, A. Clinical applications of machine learning in cardiovascular disease and its relevance to cardiac imaging. Eur. Heart J. 2019, 40, 1975–1986.

12. Johnson, K.W.; Soto, J.T.; Glicksberg, B.S.; Shameer, K.; Miotto, R.; Ali, M. Artificial Intelligence in Cardiology. J. Am. Coll. Cardiol. 2018, 71, 2668–2679.

13. Khened, M.; Alex, V.; Krishnamurthi, G. Fully convolutional multi-scale residual DenseNets for cardiac segmentation and automated cardiac diagnosis using ensemble of classifiers. Med. Image Anal. 2019, 51, 21–45.

14. He, C.; Li, Z.; Wang, J.; Huang, Y.; Yin, Y.; Zhiyong, L. Atherosclerotic Plaque Tissue Characterization: An OCT-Based Machine Learning Algorithm With ex vivo Validation. Front. Bioeng. Biotechnol. 2020, 8, 749.

15. Fedewa, R.; Puri, R.; Fleischman, E.; Lee, J.; Prabhu, D.; Wilson, D.L.; Fleischman, A. Artificial Intelligence in Intracoronary Imaging. Curr. Cardiol. Rep. 2020, 22, 46.

16. Prabhu, D.; Bezerra, H.; Kolluru, C.; Gharaibeh, Y.; Mehanna, E.; Wu, H.; Wilson, D.L. Automated A-line coronary plaque classification of intravascular optical coherence tomography images using handcrafted features and large datasets. J. Biomed. Opt. 2019, 24, 106002.

17. Zeng, X.; Cui, S.; Qian, J.; Cheng, X.; Dong, J.; Zhou, J.; Xu, Z.; Feng, Y. 10 W low-noise green laser generation by the single-pass frequency doubling of a single-frequency fiber amplifier. Laser Phys. 2020, 30, 075001.

18. Macedo, M.M.; Oliveira, D.A.; Gutierrez, M.A. Atherosclerotic Plaques Recognition in Intracoronary Optical Images Using Neural Networks. In Proceedings of the 2019 Computing in Cardiology (CinC), Singapore, 8–11 September 2019; pp. 1–4.

19. Liu, X.; Du, J.; Yang, J.; Xiong, P.; Liu, J.; Lin, F.J. Recent progress of chatter prediction, detection and suppression in milling. Signal. Process. Syst. 2020, 92, 325–333.

20. Gessert, N.; Lutz, M.; Heyder, M.; Latus, S.; Leistner, D.M.; Abdelwahed, Y.S.; Schlaefer, A. Automatic Plaque Detection in IVOCT Pullbacks Using Convolutional Neural Networks. IEEE Trans. Med. Imaging 2019, 38, 426–434.

21. Athanasiou, L.S. Three-dimensional reconstruction of coronary arteries and plaque morphology using CT angiography-comparison and registration using IVUS. IEEE Eng. Med. Biol. Soc. 2015, 2015, 5638–5641.

22. Taqi, A.M.; Awad, A.; Al-Azzo, F.; Milanova, M. The Impact of Multi-Optimizers and Data Augmentation on TensorFlow Convolutional Neural Network Performance. In Proceedings of the IEEE Conference on Multimedia Information Processing and Retrieval, Miami, FL, USA, 10–12 April 2018; pp. 140–145.

23. Miyagawa, M.; Costa, M.G.; Gutierrez, M.A.; Costa, J.P.; Costa, F. Detecting vascular bifurcation in IVOCT images using convolutional neural networks with transfer learning. IEEE Access 2019, 7, 66167–66175.

24. Cheplygina, V.; Bruijne, M.; Pluim, J. Not-so-supervised: A survey of semi-supervised, multi-instance, and transfer learning in medical image analysis. Med. Image Anal. 2019, 54, 280–296.

25. Sood, R.; Topiwala, B.; Choutagunta, K. Position Specific Scoring Matrix and Synergistic Multiclass SVM for Identification of Genes. In Proceedings of the 17th IEEE International Conference on Machine Learning and Applications 2018, Orlando, FL, USA, 17–20 December 2018; pp. 17–20.

26. Kazuhiro, K. Generative Adversarial Networks for the Creation of Realistic Artificial Brain Magnetic Resonance Images. Tomography 2018, 4, 159–163.

27. Gibson, E. NiftyNet: A deep-learning platform for medical imaging. Comput. Methods Programs Biomed. 2018, 158, 113–122.

28. Massalha, S.; Clarkin, O.; Thornhill, R.; Wells, G.; Benjamin, J. Decision Support Tools, Systems, and Artificial Intelligence in Cardiac Imaging. Can. J. Cardiol. 2018, 34, 827–838.

29. Ravi, D. Deep Learning for Health Informatics. IEEE J. Biomed. Health Inform. 2017, 21, 4–21.

30. Zhou, Z.; Shin, J.; Hurst, J.; Kendall, C.; Liang, J. Integrating Active Learning and Transfer Learning for Carotid Intima-Media Thickness Video Interpretation. J. Digit. Imaging 2019, 32, 290–299.

31. Zreik, M.; Hamersvelt, R.; Wolterink, J.; Leiner, T.; Viergever, M.; Isgum, I. A Recurrent CNN for Automatic Detection and Classification of Coronary Artery Plaque and Stenosis in Coronary CT Angiography. IEEE Trans. Med. Imaging 2018, 38, 1588–1598.

32. Fischer, A.M. Accuracy of an Artificial Intelligence Deep Learning Algorithm Implementing a Recurrent Neural Network with Long Short-term Memory for the Automated Detection of Calcified Plaques From Coronary Computed Tomography Angiography. J. Thorac. Imaging 2020, 35, S49–S57.

33. Vos, B.; Wolterink, J.; Leiner, T.; Jong, P. Direct Automatic Coronary Calcium Scoring in Cardiac and Chest CT. IEEE Trans. Med. Imaging 2019, 8, 2127–2138.

34. Duan, J.; Bello, G.; Schlemper, J.; Bai, W. Automatic 3D Bi-Ventricular Segmentation of Cardiac Images by a Shape-Refined Multi-Task Deep Learning Approach. IEEE Trans. Med. Imaging 2019, 38, 2151–2164.

35. Alawad, M.; Wang, L. Learning Domain Shift in Simulated and Clinical Data: Localizing the Origin of Ventricular Activation from 12-Lead Electrocardiograms. IEEE Trans. Med. Imaging 2019, 38, 1172–1184.

36. Rogers, M.A.; Aikawa, E. Cardiovascular calcification: Artificial intelligence and big data accelerate mechanistic discovery. Nat. Rev. Cardiol. 2019, 16, 261–274.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).