1. Introduction

The field of computer vision has seen remarkable advancements in the last couple of decades. Early computer vision systems relied on feature engineering techniques such as edge detection, shape descriptors, and dimensionality reduction, among others, before feeding the engineered features to a machine learning classifier. While these systems worked to some degree, their recognition accuracy lagged far behind human perception. Although Convolutional Neural Networks (CNNs) were proposed more than two decades ago, refinements to their design have revolutionized computer vision, with modern CNNs often matching or surpassing human recognition accuracy.

CNNs learn to identify relevant patterns and implicit details in images, such as edges, textures, and shapes, as they are trained on supervised data. They learn hierarchical representations because they process visual data in layers: early layers learn simple features such as edges, while later layers learn more complex patterns and visual relationships. In each layer of a CNN, multiple learnable convolution kernels are slid across the input image. The output obtained by applying one convolution kernel is referred to as a feature map. The convolution is typically followed by a pooling layer that aggregates the information in neighboring blocks of each feature map. After the image has passed through a number of such convolution and pooling layers, a classifier in the form of a simple neural network is applied to the flattened feature maps of the last layer to make the final classification decision. This layered, local design gives CNNs an inductive bias that helps them generalize to new, unseen images, recognizing objects and patterns regardless of variations in lighting, angles, or backgrounds.
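A minimal NumPy sketch of one convolution-plus-pooling step may make this pipeline concrete. The hand-written vertical-edge kernel, the 6 × 6 toy image, and the helper names `conv2d_valid` and `max_pool2d` are illustrative assumptions; a real CNN learns many kernels per layer and uses optimized library routines.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one kernel over a 2D image (stride 1, no padding) and return its feature map.
    Like most deep-learning libraries, this computes cross-correlation (no kernel flip)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    fmap = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            fmap[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return fmap

def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling: keep the strongest response in each size x size block."""
    h = fmap.shape[0] // size * size
    w = fmap.shape[1] // size * size
    blocks = fmap[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

# Toy 6x6 image with a vertical edge between columns 2 and 3.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hand-written vertical-edge detector; a CNN would learn such weights from data.
edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

fmap = conv2d_valid(image, edge_kernel)  # 4x4 feature map, strong response at the edge
pooled = max_pool2d(fmap)                # 2x2 after pooling
flat = pooled.reshape(-1)                # flattened vector for the final classifier
```

The vector `flat` is what the final classifier would consume; stacking further convolution and pooling stages before flattening produces the feature hierarchy described above.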

While CNNs have demonstrated remarkable success in computer vision applications, their performance in Natural Language Processing (NLP) is comparatively weak. One reason CNNs are less effective in NLP is their limited receptive field: the convolution kernel is typically much smaller than the sequence it processes, so each output depends on only a small window of the input. Although sliding the kernel and the subsequent pooling operations aggregate information from progressively larger regions, this limited receptive field causes a loss of information in language modeling, where dependencies can span an entire document. This is akin to trying to understand a paragraph by reading only a few words at a time. Thus, a different architecture is desirable for effective language modeling.
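The locality argument can be quantified with the standard receptive-field recurrence for stacked convolutions. The sketch below assumes 1D convolutions with equal kernel size and stride in every layer and no pooling or dilation; `receptive_field` is a hypothetical helper name.

```python
def receptive_field(num_layers, kernel_size, stride=1):
    """Number of input positions seen by one output unit after stacking
    num_layers identical 1D convolutions (no pooling, no dilation)."""
    rf, jump = 1, 1  # jump: input distance between adjacent units of the current layer
    for _ in range(num_layers):
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf

# Three stacked width-3 convolutions over a token sequence see only 7 tokens.
print(receptive_field(3, 3))            # 7
# Striding every layer widens the window faster, but it still stays local.
print(receptive_field(3, 3, stride=2))  # 15
```

By contrast, a single self-attention layer lets every token attend to all other tokens in the sequence.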

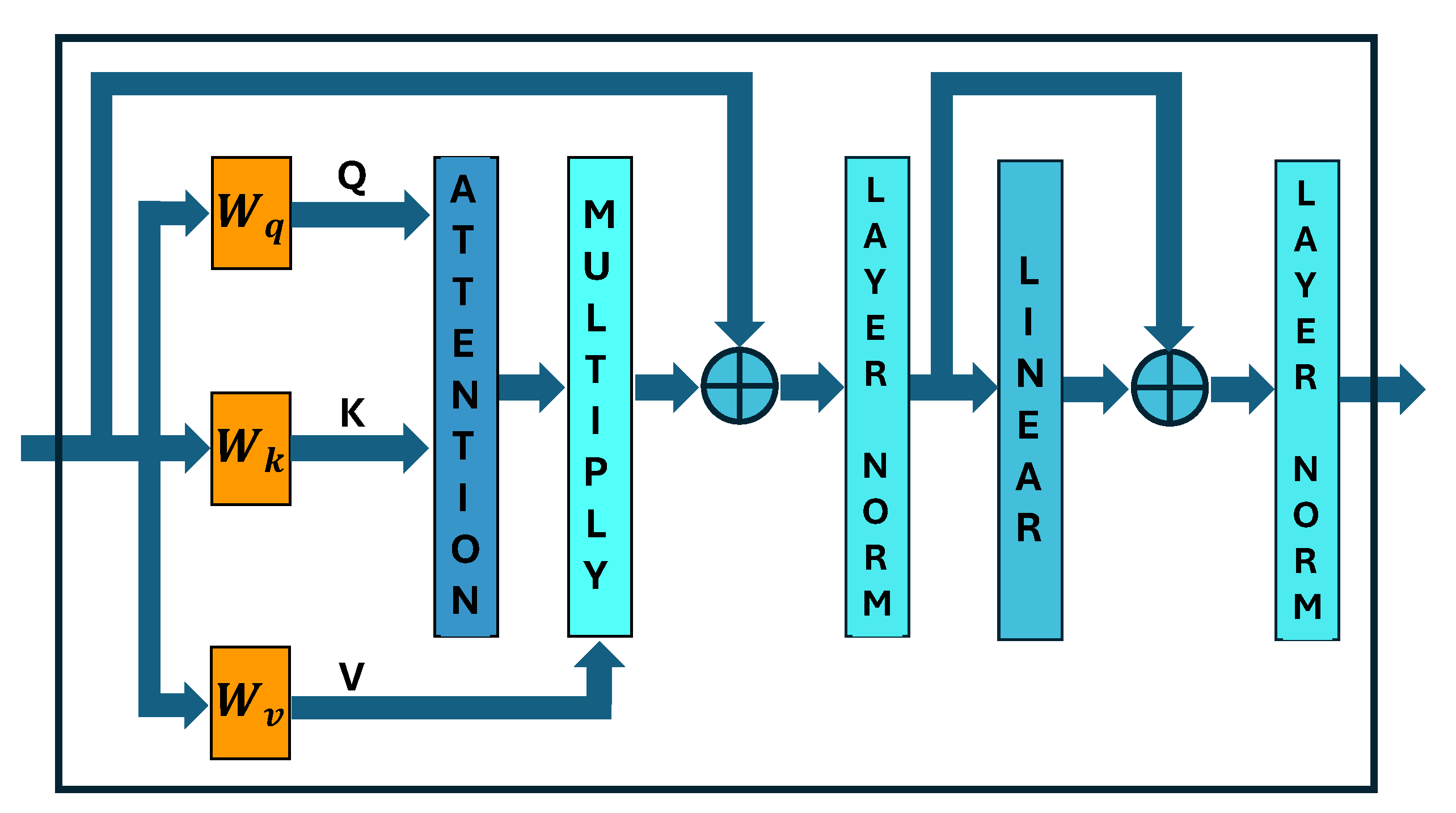

The Transformer architecture proposed in the pioneering work of Vaswani et al. [1] has been monumental in the NLP field. The recent success of Large Language Models (LLMs) such as ChatGPT [2], Gemini [3], Llama [4], DeepSeek [5], Claude [6], and Qwen [7], with their language comprehension and human-like conversational capabilities, corroborates the Transformer architecture's capacity for capturing complex language representations. One of the main reasons for the Transformer's success is its attention mechanism, which has a potentially very large receptive field and can examine an entire text document at once. Attention measures the pairwise similarity between all tokens (words or sub-words) of the input sequence in order to comprehend it.
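The pairwise-similarity computation can be sketched as single-head scaled dot-product attention in NumPy. The random projection matrices stand in for learned weights and are assumptions for illustration; multiple heads, masking, and batching are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    """Scaled dot-product attention: every query is scored against every key."""
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)       # (n_q, n_k) pairwise similarities
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
n, d = 5, 8                             # 5 tokens, 8-dimensional embeddings
x = rng.standard_normal((n, d))
# Hypothetical random projections standing in for learned W_Q, W_K, W_V.
w_q, w_k, w_v = (rng.standard_normal((d, d)) for _ in range(3))
out, weights = attention(x @ w_q, x @ w_k, x @ w_v)
print(weights.shape)  # (5, 5): one score per token pair
```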

Similar to a CNN, the Transformer uses many layers, with each layer computing several attention operations in parallel (referred to as attention heads). The outputs of these attention heads are aggregated in a learnable manner by a linear layer. After the tokens have been processed through many layers, the last layer feeds the learned representations to a classifier that decides which word the Transformer produces next. The produced word becomes part of the input that is then used to generate the following word. Generating the output one word at a time, with each previously generated word appended to the input, is referred to as “autoregressive” generation. When the Transformer is trained for language modeling, future tokens are masked from each position, so that the Transformer learns to predict each next token from the tokens that precede it.
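This masking is commonly implemented as a causal (look-ahead) mask over the attention scores. The NumPy sketch below shows only the masking and normalization step; using all-zero scores is an assumption chosen so that the effect of the mask is easy to read.

```python
import numpy as np

def causal_attention_weights(scores):
    """Hide future positions (j > i) from position i, then softmax each row."""
    n = scores.shape[0]
    future = np.triu(np.ones((n, n), dtype=bool), k=1)  # True above the diagonal
    masked = np.where(future, -np.inf, scores)
    masked = masked - masked.max(axis=-1, keepdims=True)
    e = np.exp(masked)  # exp(-inf) = 0, so future tokens receive zero weight
    return e / e.sum(axis=-1, keepdims=True)

w = causal_attention_weights(np.zeros((4, 4)))
# Row i spreads its weight uniformly over the i + 1 tokens it is allowed to see.
print(np.round(w, 2))
```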

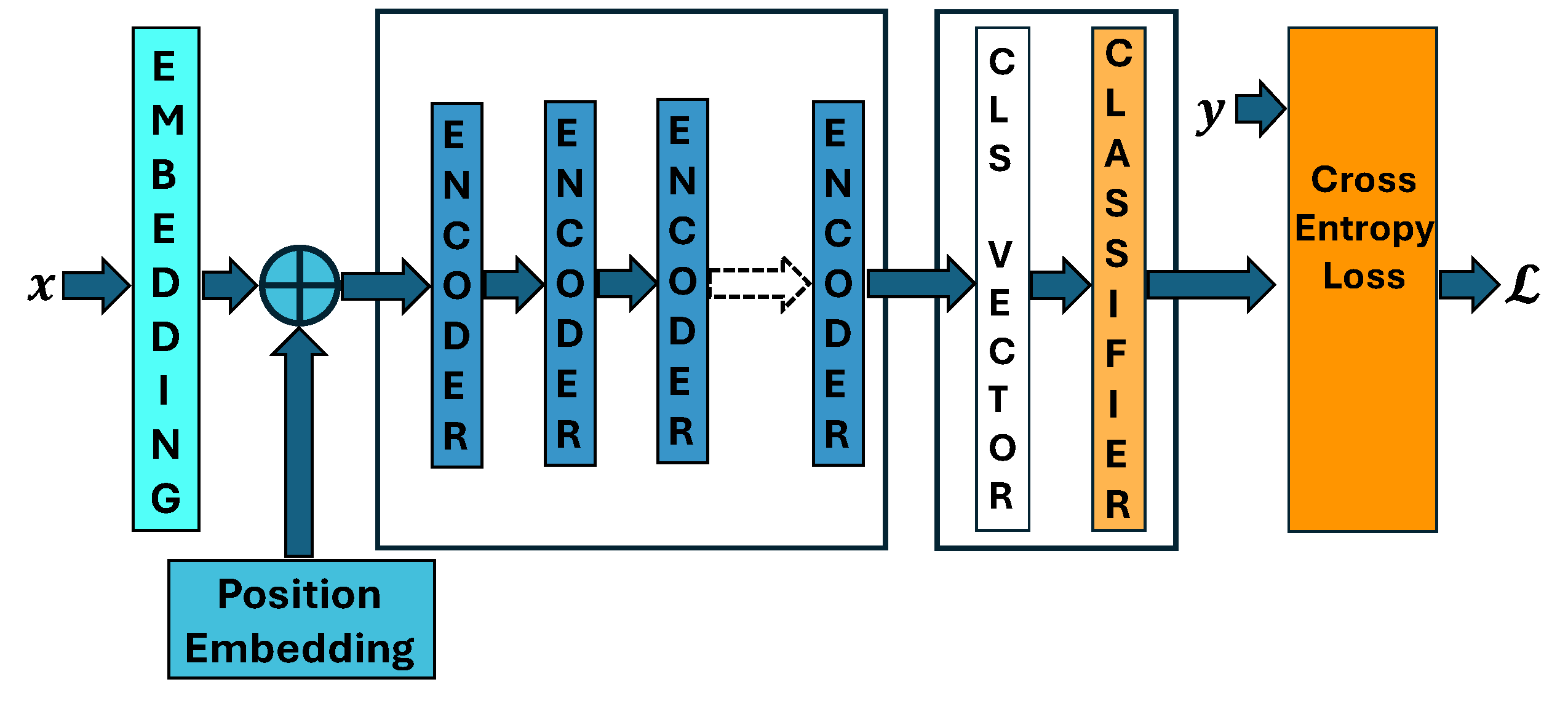

For text classification, e.g., sentiment analysis, neither the masking of future tokens during training nor the autoregressive generation of one token at a time is needed. Instead, an extra token, referred to as the “CLS” (class) token, is prepended to the input sequence. Because every token attends to all other tokens in each layer, the CLS token progressively accumulates class-relevant information from the entire input as the representations are refined layer by layer. The classifier after the last layer then processes only the CLS token's representation to determine the class of the input text.
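The CLS mechanism can be sketched in a few lines of NumPy: prepend one extra vector, let attention mix it with every other token, and read out only position 0. The single projection-free attention layer, the random values, and the linear head `w_head` are simplifying assumptions, not a trained model.

```python
import numpy as np

rng = np.random.default_rng(0)
n_tokens, d, n_classes = 6, 16, 3

tokens = rng.standard_normal((n_tokens, d))      # embedded input tokens
cls_token = rng.standard_normal((1, d))          # learnable in practice; random here
x = np.concatenate([cls_token, tokens], axis=0)  # CLS is prepended at position 0

# One simplified self-attention layer (no projections) lets CLS mix in every token.
scores = x @ x.T / np.sqrt(d)
weights = np.exp(scores - scores.max(axis=1, keepdims=True))
weights /= weights.sum(axis=1, keepdims=True)
x = x + weights @ x                              # residual update of all positions

# Only the CLS position feeds the (hypothetical) linear classifier head.
w_head = rng.standard_normal((d, n_classes))
logits = x[0] @ w_head
predicted_class = int(np.argmax(logits))
```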

The immense success of the Transformer in NLP and its potentially large receptive field raise the question: can the Transformer be equally or more effective than a CNN at image comprehension and classification? This question was partially answered in the seminal Vision Transformer paper [8], whose authors adapted the NLP Transformer for image classification by converting the input image into small patches and treating the sequence of these patches as a sequence of input tokens, as in an NLP Transformer. The authors drew two important conclusions about the effectiveness of CNNs and Transformers for image classification:

1. CNNs have an inductive bias for image classification and therefore perform better than Transformers, especially when the training dataset is relatively small.

2. Transformers can match the classification accuracy of CNNs if pretrained on large datasets. Using the JFT training dataset with 300 million images, the authors demonstrated that Transformers can match the image classification accuracy of the best CNN architectures.

Since the pioneering work on the Vision Transformer, many research ideas have been proposed to enhance the effectiveness of the Transformer in computer vision. Why strive to improve the Transformer in the image domain when CNNs are known to have an inductive bias for vision? There are two reasons. The first is the expressive power of the Transformer's attention mechanism, as evidenced by LLMs: if the Transformer's lack of inductive bias for vision can be compensated for, it may outperform a CNN at image comprehension. The second reason is the rise of Vision Language Models (VLMs).

Vision Language Models are multimodal models trained on both image and text data. Their applications include image understanding, visual question answering, image captioning, capturing spatial properties, and image segmentation, among others. Since VLMs need a unified architecture for both the language and image domains, the Transformer is a natural choice. The Transformer's core mechanism for understanding relationships in the input is attention. Because attention computes a pairwise similarity between all input tokens, it has a time complexity of $O(n^2)$, where $n$ is the input sequence length. For both LLMs and VLMs, reducing the computation of attention without a loss in performance is therefore an important architectural goal.
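A short worked example shows how quickly the quadratic term grows for images. It assumes a 224 × 224 image cut into non-overlapping square patches (a common ViT setting, used here only for illustration) and counts query-key scores per head and per layer; both helper names are hypothetical.

```python
def num_patches(image_size, patch_size):
    """Tokens produced by cutting a square image into non-overlapping square patches."""
    return (image_size // patch_size) ** 2

def attention_scores(n, heads=1, layers=1):
    """Query-key scores computed by full self-attention: n * n per head per layer."""
    return n * n * heads * layers

n = num_patches(224, 16)       # 14 * 14 = 196 tokens
print(n, attention_scores(n))  # 196 38416
# Halving the patch size gives 4x the tokens and 16x the attention scores.
n_small = num_patches(224, 8)  # 28 * 28 = 784 tokens
print(attention_scores(n_small) // attention_scores(n))  # 16
```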

In this paper, we focus on enhancing the image classification capabilities of the Vision Transformer (ViT). We first analyze the most popular state-of-the-art (SOTA) Transformer architectures and highlight the key architectural enhancements introduced in these models. We then focus on reducing the complexity of the attention mechanism while maintaining or even improving the classification accuracy compared to existing ViTs. The important contributions of our work can be summarized as follows:

- 1.

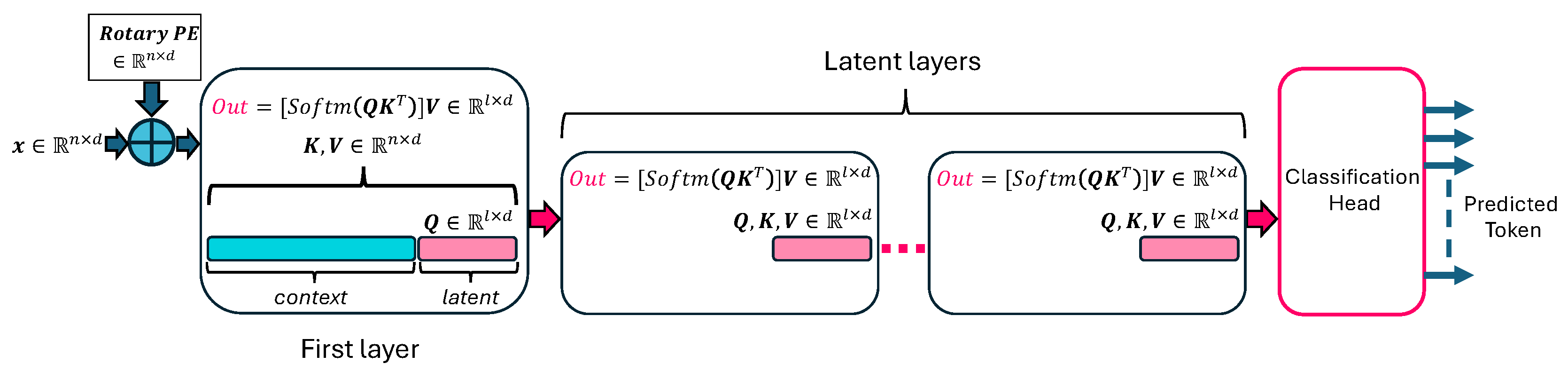

We enhance the popular PerceiverAR [

9] architecture for computer vision which is more efficient than a ViT. Our enhanced Perceiver has approximately one fourth the attention computations as compared to the standard ViT, but has much better classification accuracy than the PerceiverAR.

- 2.

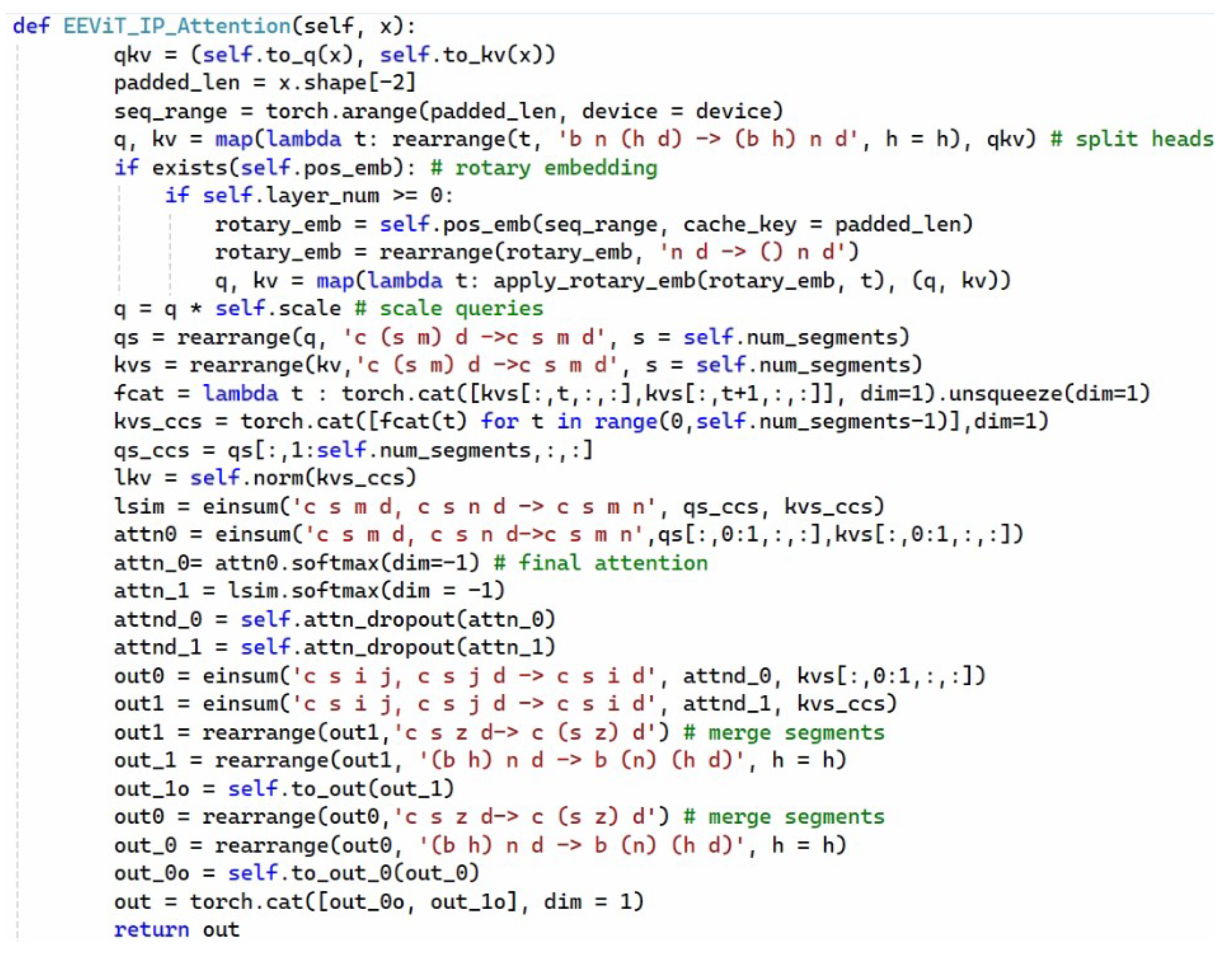

We adapt a recent segment level PerceiverAR based attention architecture [

9] originally proposed for language modeling, for computer vision. We further enhance this attention design to improve the inductive bias for vision Transformer.

- 3.

We do a detailed comparison of recent SOTA ViT architectures on different popular datasets under different dataset augmentations to provide a proper comparison framework between different designs. We believe such an evaluation environment is important for the research community to fairly compare new designs against published models.

The rest of the paper is organized as follows. In the next section, “Related Work”, we review important works in computer vision, focusing on Transformer-based architectures. In Section 3, we cover preliminaries in terms of the formal computations involved in a ViT and in the PerceiverAR design, upon which most of our enhancements are built. In Section 4, we present our two enhanced efficient ViT designs. The results section (Section 5) provides a detailed comparison of our enhanced architectures with popular SOTA ViTs. In Section 6, we discuss the insights gained from our enhanced architectures. Finally, we provide conclusions and future work in Section 7.

2. Related Work

The seminal work “An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale” [8] first introduced the Vision Transformer (ViT) by applying the Transformer architecture, originally developed for natural language processing, directly to image classification. This work challenged the then-traditional reliance on convolutional neural networks (CNNs) in computer vision by demonstrating that Transformers can achieve competitive, if not superior, performance when trained on sufficiently large datasets. To process an image, a ViT divides it into fixed-size patches (e.g., 16 × 16 pixels). These patches are flattened and linearly projected into embeddings, which are treated analogously to word tokens in NLP models. This sequence of patch embeddings, together with a special classification token and the corresponding positional embeddings, is then fed into a standard Transformer encoder. This setup allows the model to capture global relationships across the entire image. The output corresponding to the classification token is finally passed through a multilayer perceptron (MLP) head to produce the class predictions. When pretrained on large datasets, ViT models require substantially fewer computational resources to train than comparable CNNs. However, they tend to overfit on smaller datasets because they lack the inductive biases present in CNNs, such as locality and translation equivariance.
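The patch-to-token pipeline can be sketched in NumPy as follows. The 32 × 32 image, 4 × 4 patches, and 384-dimensional embedding mirror the CIFAR setting used later in this paper, but the random projection, zero-initialized CLS token, and random positional embeddings are placeholders for learned parameters.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W, C) image into non-overlapping patch x patch tiles and
    flatten each tile, in row-major tile order, into a vector of length patch*patch*C."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by the patch size"
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)       # (tile_row, tile_col, patch, patch, C)
    return tiles.reshape(-1, patch * patch * c)  # (num_patches, patch*patch*C)

rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32, 3))         # a CIFAR-sized image
d_model = 384

patches = patchify(image, 4)                                         # 64 patches of length 48
w_embed = rng.standard_normal((patches.shape[1], d_model)) * 0.02    # linear projection
cls = np.zeros((1, d_model))                                         # CLS token (learnable)
pos = rng.standard_normal((patches.shape[0] + 1, d_model)) * 0.02    # positional embeddings

tokens = np.concatenate([cls, patches @ w_embed], axis=0) + pos
print(tokens.shape)  # (65, 384)
```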

CaiT (Class-Attention in Image Transformers) [10] is an architecture that enables deeper Vision Transformers to train more effectively through two key contributions. First, CaiT updates the class token separately via dedicated class-attention layers, thus decoupling class token processing from patch token processing. Second, CaiT uses a technique called LayerScale, which multiplies the output of each residual branch by a learnable per-channel scaling factor initialized to a small value, analogous to residual scaling techniques used in ResNets. With these techniques, CaiT achieves superior performance on ImageNet without external data or distillation, surpassing both CNNs and previous ViTs.
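Both CaiT ideas can be sketched in NumPy. The class-attention function lets only the CLS token issue a query (so its cost is linear in the number of patches), and LayerScale multiplies a residual branch by a per-channel vector before the residual addition. Query/key/value projections, multiple heads, and the MLP are omitted, and the initial value `1e-5` is an assumption (CaiT selects it according to network depth).

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def class_attention(cls, patches):
    """Only the CLS token queries; keys and values come from [CLS; patches].
    Patch tokens are left unchanged, so the cost is linear in the number of patches."""
    z = np.concatenate([cls, patches], axis=0)  # (1 + n, d)
    d = cls.shape[-1]
    weights = softmax(cls @ z.T / np.sqrt(d))   # (1, 1 + n)
    return weights @ z                          # (1, d)

def layer_scale_residual(x, branch_out, gamma):
    """LayerScale: scale each channel of the residual branch by a learnable factor."""
    return x + gamma * branch_out

rng = np.random.default_rng(0)
d, n = 8, 16
patches = rng.standard_normal((n, d))
cls = np.zeros((1, d))
gamma = np.full(d, 1e-5)  # small initial value (assumed; CaiT picks it by depth)

cls_new = layer_scale_residual(cls, class_attention(cls, patches), gamma)
```

With such a small initial `gamma`, each block starts close to the identity mapping, which is what makes very deep stacks easier to optimize.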

Bridging the gap between Transformers and CNNs in vision, the Swin Transformer [11] introduces a hierarchical vision Transformer that computes self-attention within local windows and shifts the windows across layers. This makes it efficient and scalable for high-resolution vision tasks (such as detection and segmentation) while maintaining global modeling capabilities. Rather than computing costly global attention, Swin performs self-attention within non-overlapping local windows. It then shifts the windows by a small offset in the next layer, enabling cross-window interaction without a large computational cost. Similar to CNNs, Swin processes images in stages, gradually reducing the spatial resolution and increasing the feature dimension. This allows Swin to produce multi-scale feature maps, which are useful for downstream tasks such as object detection and semantic segmentation. Whereas the attention cost of ViT grows quadratically with the number of image tokens, Swin's window-based attention scales linearly with image size. While Swin has produced impressive image classification results, its drawback is high GPU memory usage due to its depth and the high feature-map resolution of its early stages [12].
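Window partitioning and the shift between layers can be sketched with NumPy reshapes and `np.roll`. This is a simplified illustration on a single-channel 8 × 8 token grid with 4 × 4 windows (Swin's default window is 7 × 7), and it omits the attention mask that Swin applies so that tokens wrapped around by the cyclic shift do not attend to each other.

```python
import numpy as np

def window_partition(x, ws):
    """Split an (H, W, C) token grid into non-overlapping ws x ws windows,
    returning (num_windows, ws*ws, C). Attention is computed inside each window."""
    h, w, c = x.shape
    x = x.reshape(h // ws, ws, w // ws, ws, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, ws * ws, c)

def shifted_windows(x, ws):
    """Cyclically shift the grid by ws // 2 before partitioning, so the next
    layer's windows straddle the previous layer's window borders."""
    shifted = np.roll(x, shift=(-(ws // 2), -(ws // 2)), axis=(0, 1))
    return window_partition(shifted, ws)

grid = np.arange(8 * 8, dtype=float).reshape(8, 8, 1)  # 8x8 token ids, 1 channel
regular = window_partition(grid, 4)  # 4 windows of 16 tokens
shifted = shifted_windows(grid, 4)   # 4 windows with different members

# Scores per layer: num_windows * (ws*ws)^2, linear in the token count
# (full attention over the same 64 tokens would need 64 * 64 = 4096).
cost = regular.shape[0] * regular.shape[1] ** 2
```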

Twins [13] is a vision Transformer architecture that improves spatial modeling by combining local and global attention mechanisms in a hierarchical design. The aim is to better capture both fine-grained local patterns and long-range dependencies, which are key for dense prediction tasks such as segmentation and detection. Twins uses two types of attention: Locally-grouped Self-Attention (LSA), which operates within small non-overlapping local windows to efficiently model local dependencies, and Global Sub-sampled Attention (GSA), which captures global context by applying attention to a sub-sampled version of the tokens, reducing the computational cost. Like the Swin Transformer, Twins builds multi-scale feature maps by gradually reducing the spatial resolution and increasing the channel dimension. The resulting model achieves improved accuracy and efficiency and demonstrates the value of combining local and global attention. However, since Twins uses both local and global attention, its computation and memory usage during training and inference are relatively high.
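The core idea of GSA, where all queries attend to a reduced set of keys and values, can be sketched in NumPy. Here the reduction is r × r average pooling over the token grid, which is a simplifying assumption (Twins uses a learned sub-sampling layer), and projections and multiple heads are omitted.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def global_subsampled_attention(x, h, w, r):
    """All h*w queries attend to (h/r)*(w/r) pooled summary tokens, so the score
    count drops from (h*w)^2 to (h*w)^2 / r^2. Average pooling is an assumption."""
    d = x.shape[-1]
    grid = x.reshape(h // r, r, w // r, r, d)        # tokens are in row-major grid order
    summary = grid.mean(axis=(1, 3)).reshape(-1, d)  # (h*w / r^2, d)
    weights = softmax(x @ summary.T / np.sqrt(d))    # (h*w, h*w / r^2)
    return weights @ summary

rng = np.random.default_rng(0)
h = w = 16
x = rng.standard_normal((h * w, 32))  # 256 tokens
out = global_subsampled_attention(x, h, w, r=4)
print(out.shape)  # (256, 32): every token sees a 16-token global summary
```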

Data-efficient image Transformers (DeiT) [14] show that, with the right training recipe and a novel distillation token, Vision Transformers can be trained from scratch on ImageNet-1K without any extra data, matching or even surpassing CNNs of similar size. DeiT achieves this by adding a learnable distillation token alongside the class token. During training, the output of this token is trained to reproduce the predictions of a CNN teacher, enabling the ViT student to distill the teacher's knowledge through its own attention mechanism. Further, DeiT leverages strong regularization and data augmentation, stochastic depth, and an optimized learning-rate schedule. Overall, integrating knowledge distillation into the Transformer architecture makes Transformer-based vision models accurate and accessible without massive pretraining.
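The distillation objective can be sketched in NumPy. The function below follows the soft-distillation form described for DeiT: the class-token logits are trained against the ground-truth labels, and the distillation-token logits are trained to match the teacher's temperature-softened distribution (DeiT also proposes a hard-label variant). The values of `lam` and `tau` and all logits are illustrative assumptions.

```python
import numpy as np

def log_softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def deit_soft_distillation_loss(cls_logits, dist_logits, teacher_logits, labels,
                                lam=0.5, tau=3.0):
    """(1 - lam) * CE(class-token logits, labels)
    + lam * tau^2 * KL(teacher || student) on temperature-softened distributions,
    where the student side of the KL term uses the distillation-token logits."""
    n = labels.shape[0]
    ce = -log_softmax(cls_logits)[np.arange(n), labels].mean()
    log_p_t = log_softmax(teacher_logits / tau)
    log_p_s = log_softmax(dist_logits / tau)
    kl = (np.exp(log_p_t) * (log_p_t - log_p_s)).sum(axis=-1).mean()
    return (1 - lam) * ce + lam * tau ** 2 * kl

rng = np.random.default_rng(0)
student = rng.standard_normal((4, 10))  # hypothetical logits: batch of 4, 10 classes
teacher = rng.standard_normal((4, 10))  # hypothetical CNN-teacher logits
labels = np.array([1, 3, 5, 7])

# Here the same student logits serve as both class-token and distillation-token outputs.
loss = deit_soft_distillation_loss(student, student, teacher, labels)
```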

The Tokens-to-Token (T2T) Vision Transformer [15] addresses a key limitation of vanilla ViTs: they lack local structure modeling in early layers, which makes them data-hungry and inefficient to train from scratch on mid-sized datasets such as ImageNet. To address this, instead of directly splitting an image into fixed-size patches, T2T progressively aggregates neighboring tokens, preserving local structure. This mimics the hierarchical nature of CNNs and allows better low-level feature extraction. Progressive tokenization is achieved by passing the input image through a series of soft splits (overlapping patch extractions), followed by token transformation and re-tokenization steps. This creates a smaller, more informative token set with improved spatial representation. Combining the T2T module with standard Transformer blocks yields T2T-ViT models, which are more parameter-efficient: they can be trained from scratch on ImageNet without external data or heavy augmentation and achieve accuracy competitive with or better than standard ViT and CNN models while using fewer parameters. While T2T has a better patch tokenization process, its attention backbone is the same as that of a ViT, with quadratic attention complexity.
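A soft split is essentially an overlapping unfold. The NumPy sketch below applies one soft split to a 224 × 224 image and a second split after a re-structurization step. The kernel sizes, strides, and paddings (7/4/2, then 3/2/1) follow those reported for T2T-ViT's tokens-to-token module but should be treated as assumptions here, and the random projection to 64 channels is a stand-in for T2T's token transformer.

```python
import numpy as np

def soft_split(x, k, stride, pad):
    """Unfold an (H, W, C) grid into overlapping k x k neighborhoods; each
    neighborhood is flattened into one token of length k * k * C."""
    x = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    h, w, _ = x.shape
    out_h = (h - k) // stride + 1
    out_w = (w - k) // stride + 1
    tokens = [x[i * stride:i * stride + k, j * stride:j * stride + k].reshape(-1)
              for i in range(out_h) for j in range(out_w)]
    return np.stack(tokens), (out_h, out_w)

rng = np.random.default_rng(0)
image = rng.standard_normal((224, 224, 3))

# First soft split: overlapping 7x7 neighborhoods with stride 4.
tokens, grid = soft_split(image, k=7, stride=4, pad=2)      # (3136, 147), (56, 56)

# Stand-in for the token transformer: project each token to 64 channels.
proj = rng.standard_normal((tokens.shape[1], 64)) * 0.01
# Re-structurization: reshape the token sequence back into a spatial grid.
grid_2d = (tokens @ proj).reshape(grid[0], grid[1], 64)

# Second soft split: 3x3 neighborhoods with stride 2 cut the token count by 4x.
tokens2, grid2 = soft_split(grid_2d, k=3, stride=2, pad=1)  # (784, 576), (28, 28)
```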

To overcome the lack of inductive bias in Transformers, several hybrid CNN-Transformer architectures have been proposed, e.g., CeiT [16], FasterViT [17], UniFormer [18], and CoAtNet [19]. CeiT uses an initial convolutional stage to extract low-level features from the image. The resulting smaller feature maps are then converted to patches that contain more meaningful spatial information. CeiT also uses convolutions in the feed-forward component of the Transformer block to improve its inductive bias. FasterViT likewise uses initial CNN layers to extract better visual features. It also introduces Hierarchical Attention (HAT), which decomposes global self-attention into a multi-level attention mechanism for better efficiency. UniFormer [18] integrates the strengths of 3D convolutions and spatio-temporal self-attention in a unified Transformer format, providing efficient and effective representation learning for both image and video understanding. CoAtNet [19] provides a principled vertical stacking of convolution and attention layers. It incorporates a simple relative position bias into the self-attention mechanism, allowing the model to learn both input-adaptive relationships and spatially fixed, translation-equivariant patterns like those of convolutions. Overall, because of their more complex hybrid designs, and because CNNs are not effective in NLP, such architectures are less favorable for VLMs, where language understanding is also important.
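CoAtNet's relative position bias can be illustrated with a 1D simplification: a learnable scalar indexed by the offset i − j is added to each attention logit before the softmax. CoAtNet uses a 2D version over image positions; the table values below are hypothetical, chosen so the effect is visible when the content term is zero.

```python
import numpy as np

def attention_with_relative_bias(q, k, bias_table):
    """Attention weights with a 1D relative position bias B[i - j] added to each logit.
    The bias depends only on the offset i - j (translation-equivariant, like a
    convolution), while q @ k.T supplies the input-adaptive term."""
    n, d = q.shape
    offsets = np.arange(n)[:, None] - np.arange(n)[None, :]  # values in [-(n-1), n-1]
    logits = q @ k.T / np.sqrt(d) + bias_table[offsets + n - 1]
    logits = logits - logits.max(axis=-1, keepdims=True)
    w = np.exp(logits)
    return w / w.sum(axis=-1, keepdims=True)

n, d = 5, 4
bias_table = np.zeros(2 * n - 1)  # one learnable scalar per offset (hypothetical values)
bias_table[n - 1] = 2.0           # offset 0: favor attending to the same position
# Zero queries and keys isolate the positional term.
w = attention_with_relative_bias(np.zeros((n, d)), np.zeros((n, d)), bias_table)
```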

5. Results

We perform detailed experiments comparing our proposed models with recent ViT architectures. The datasets used to compare ViT designs are shown in Table 1. They span a range of training/test set sizes and image sizes. Some of the datasets, e.g., CIFAR-100, Tiny ImageNet, and ImageNet-1K, have a relatively large number of classes and therefore make high classification accuracy more challenging, especially when no pretrained models are used and only basic data augmentation is applied. All models are trained with the same learning rate schedule (cosine annealing) and the Adam optimizer for 200 epochs. The starting learning rate is 1 × 10⁻⁴ with a batch size of 64 for all models and datasets, except for ImageNet-1K. On ImageNet-1K only, we use a batch size of 128 and train the models for 100 epochs, because of the longer training time this dataset (1.28 million images) requires on the RTX 4090 GPU system available to us. All Transformer models being compared use exactly the same data augmentations and are of similar size, around 15 million parameters (8 layers with 6 heads per layer and an embedding dimension of 384), except for Swin-T, which has 27 million parameters. The model sizes were chosen to provide good accuracy without overfitting or underfitting on the datasets tested.
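For reference, the cosine annealing schedule can be written in a few lines. The sketch assumes the learning rate is updated once per epoch and decays to a minimum of zero; the paper does not state these details. PyTorch's `torch.optim.lr_scheduler.CosineAnnealingLR` (with `T_max` and `eta_min`) implements the same curve.

```python
import math

def cosine_lr(epoch, total_epochs, lr_max=1e-4, lr_min=0.0):
    """Cosine annealing without restarts: lr_max at epoch 0, lr_min at total_epochs."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * epoch / total_epochs))

# Per-epoch learning rates for a 200-epoch run starting at 1e-4.
schedule = [cosine_lr(e, 200) for e in range(201)]
```

The learning rate is halved by the midpoint of training and decays smoothly toward zero by the final epoch.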

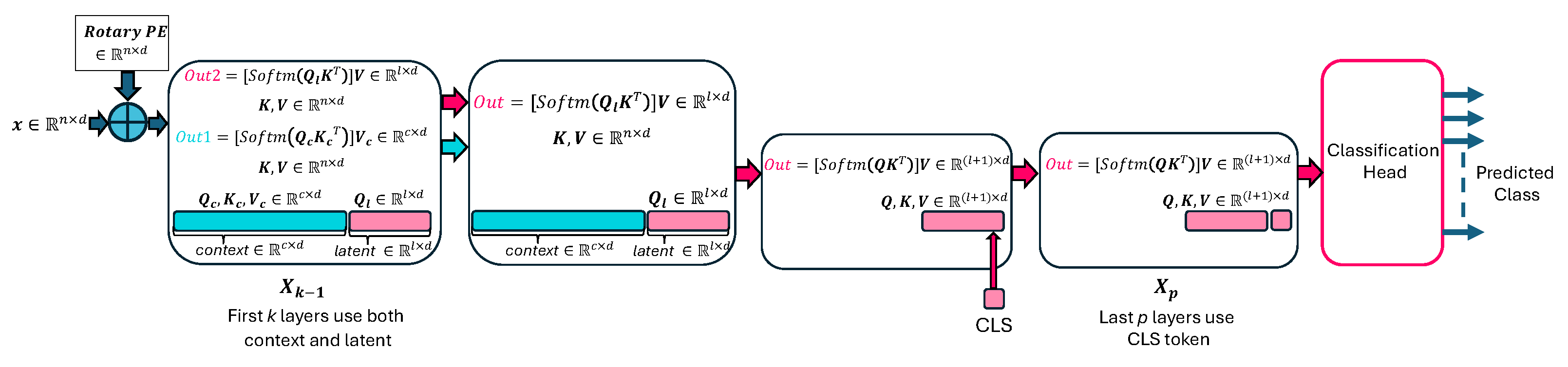

We first evaluate our EEViT-PAR, which improves upon the PerceiverAR [9] design by continuing to propagate the context through the initial k layers. Further, it introduces the CLS token a few layers before the last layer (similar to the CaiT [10] design).

Table 2 compares the top-1 accuracy of our EEViT-PAR model with the baselines of PerceiverAR [9] and the Vision Transformer [8]. All models in Table 2 use 8 layers with 6 heads per layer and an embedding dimension of 384. The EEViT-PAR propagates the context through two layers ($k = 2$) and uses the CLS token in the last two layers ($p = 2$). The values of $k$ and $p$ were determined empirically to provide a good balance between reduced model complexity and performance; we provide more detail on this in Section 6. During training, all models in Table 2 use the basic data augmentations of random horizontal flipping and random cropping after padding the image. All models use 4 segments, except on Tiny ImageNet, which uses 8 segments, and ImageNet-1K, which uses 16 segments. All results except those for ImageNet-1K are averages of the accuracies of three runs at the end of 200 epochs. ImageNet-1K training takes about 4 days to complete on the compute resources available to us, so we report results for a single run.

If more augmentations such as random shear, translation, and solarization (but not CutMix [24]) are applied to the datasets, the performance of all models improves, as shown in Table 3. These augmentations encourage the model to learn from more diverse and difficult examples. The relative performance of the three models is similar in Table 2 and Table 3. CIFAR-100 and Tiny ImageNet are the more difficult datasets for achieving high classification accuracy, as their ratio of training images to classes is relatively low and Transformer models require more training to achieve high accuracy.

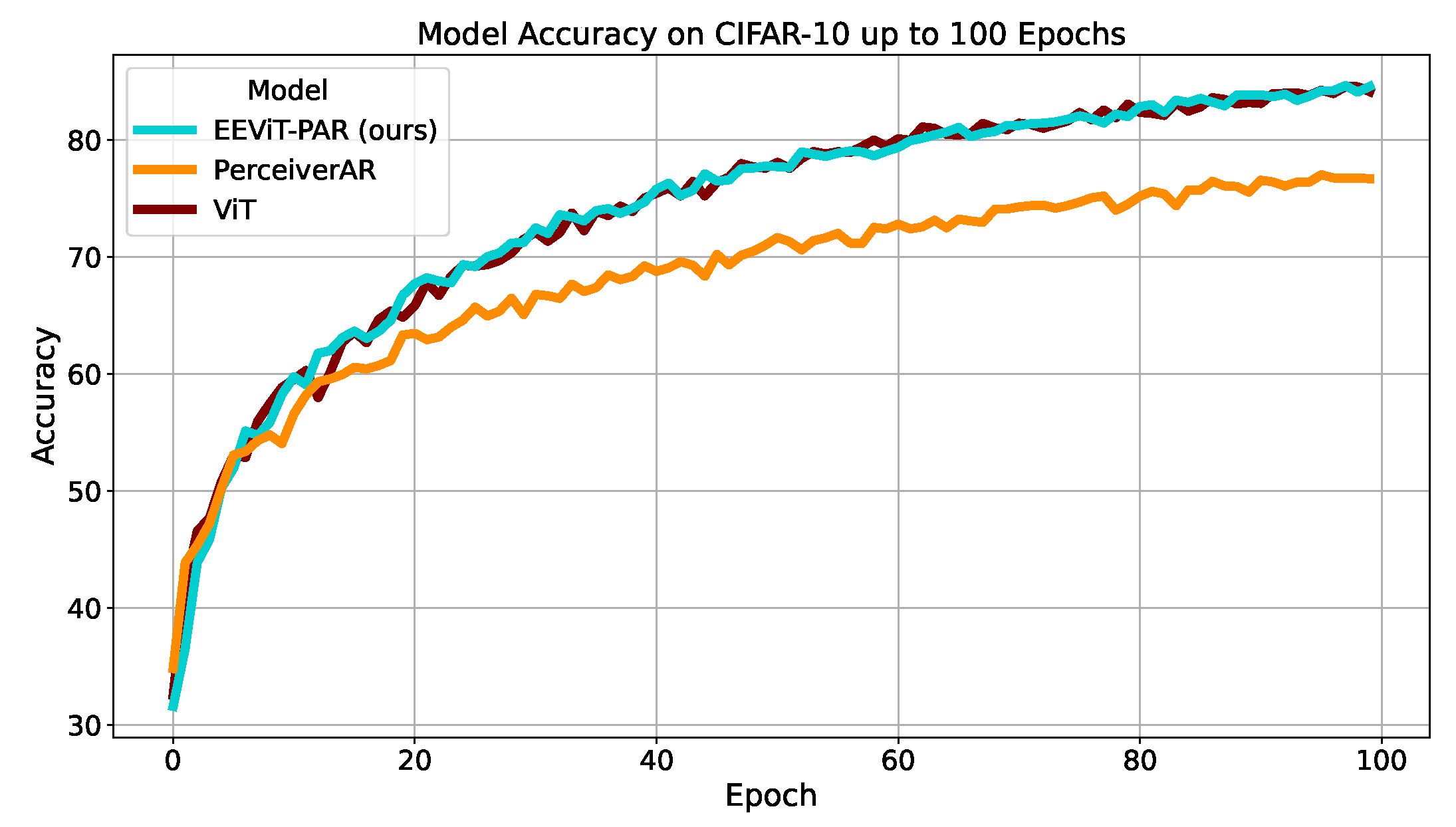

Figure 7, Figure 8, and Figure 9 show the accuracy of the different models during the first 100 epochs of training. From Table 2, Table 3, and these figures, it can be seen that our enhanced EEViT-PAR architecture significantly improves upon the baseline PerceiverAR [9] design. Notably, although the top-1 classification accuracy of EEViT-PAR is similar to that of the ViT [8], EEViT-PAR requires only approximately one fourth of the attention computation of the standard ViT. This is because, after the first k layers (usually two or three), only the latent tokens are processed in the remaining layers of EEViT-PAR.

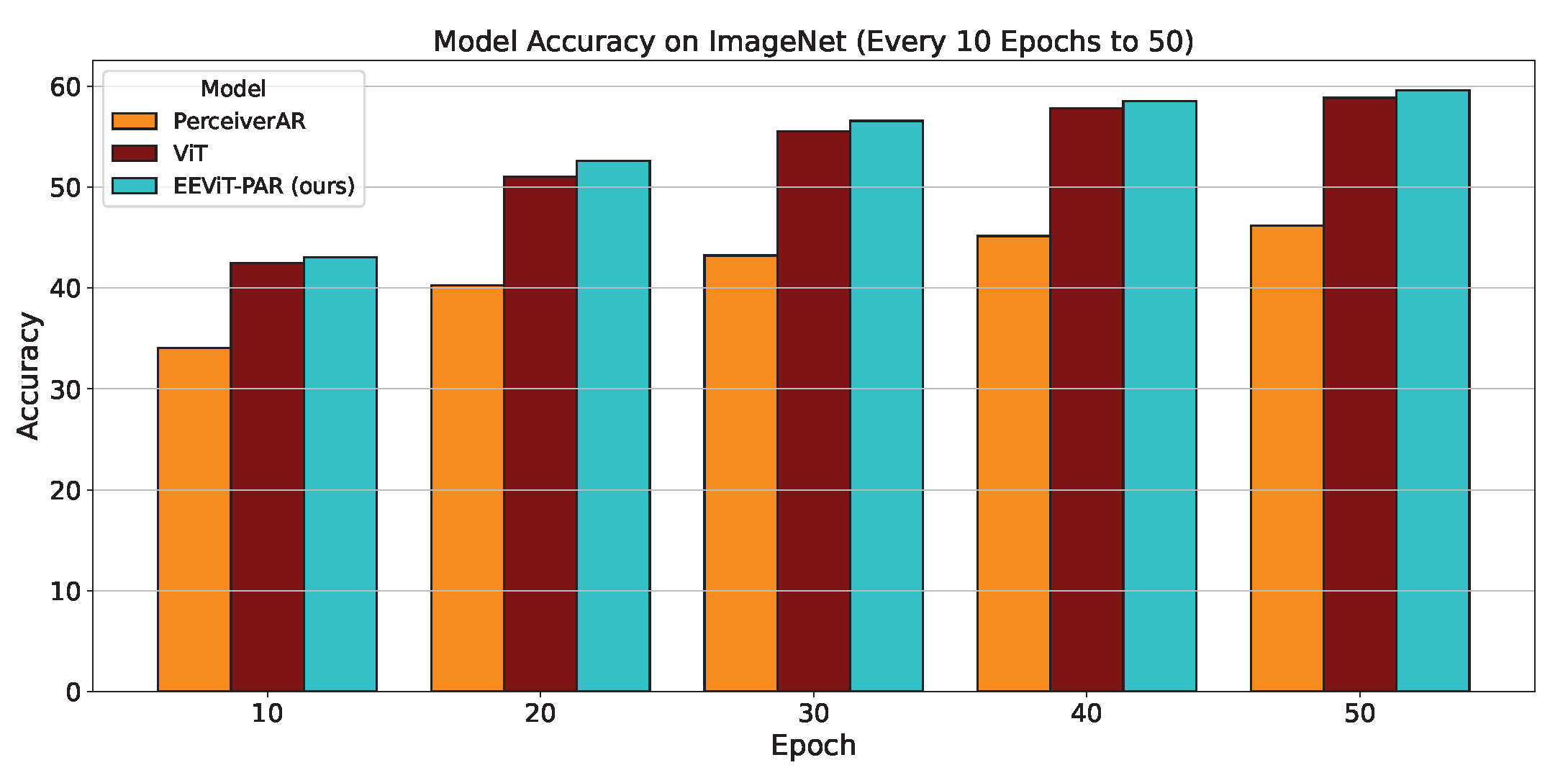

Figure 10 compares the performance of EEViT-PAR with the baseline PerceiverAR and ViT models on the ImageNet-1K dataset. Here, too, EEViT-PAR is significantly better than PerceiverAR and comparable to ViT, while requiring significantly less computation than ViT.

Our second proposed architecture, EEViT-IP, further improves execution efficiency and provides improved visual comprehension through its overlapping-segment PerceiverAR design.

Table 4 compares the top-1 accuracy of EEViT-IP with that of other SOTA ViT models on the different benchmarks, using only the basic augmentations of random cropping and horizontal flipping.

Table 5 presents the same comparison using both the basic augmentations (random cropping and horizontal flipping) and the additional random shear, translation, and solarization augmentations.

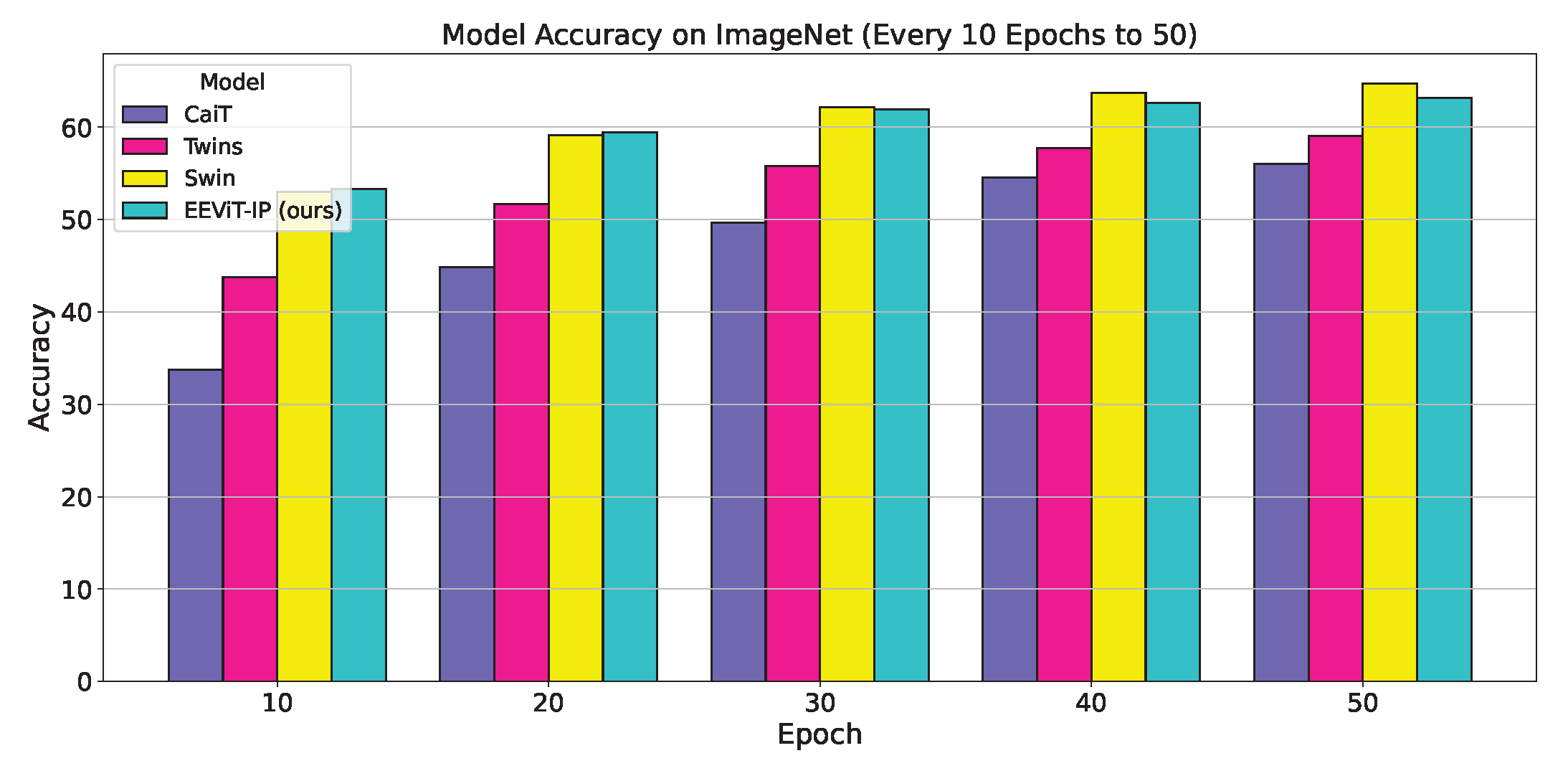

The best accuracy results in

Table 4 and

Table 5 are indicated in bold. As can be seen from

Table 4 and

Table 5, the Swin architecture and our proposed design, EEViT-IP, achieve the best results. It should be noted that the Swin architecture in

Table 4 and

Table 5 is the Swin-T (tiny) version with approximately 27 million parameters, as opposed to our EEViT-IP which is much smaller with eight layers and six heads only using approximately 14 million parameters. Our EEViT-IP design has better inductive bias due to the overlapping segments that compute the PerceiverAR-style attention. This is also empirically evident from the early adaptation of the model, obtaining relatively higher accuracies as shown in

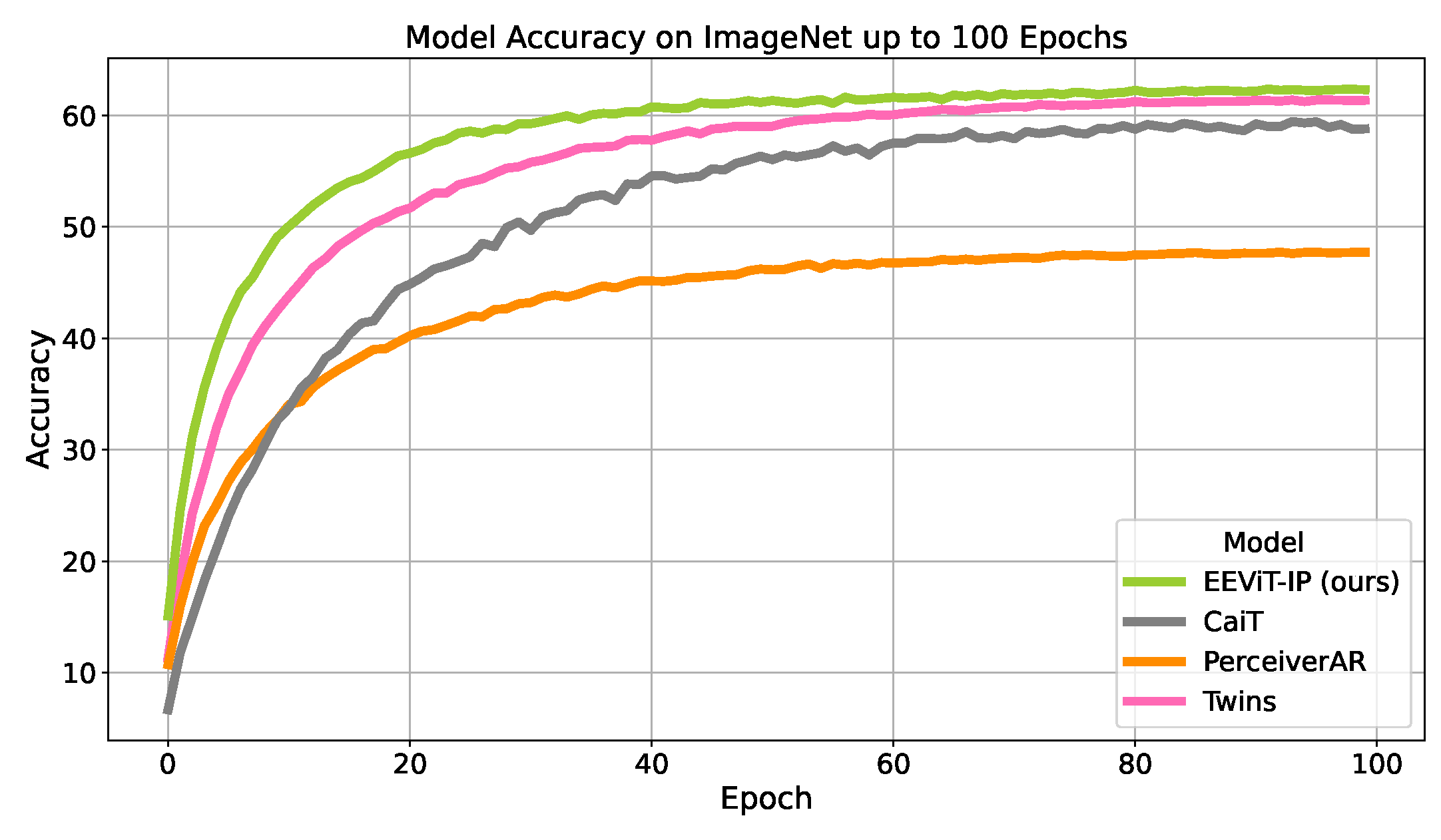

Figure 11,

Figure 12 and

Figure 13. We show the comparison of different models in their learning, depicting the test accuracy after each epoch on different datasets. The execution efficiency of EEViT-IP is approximately 12.5% of a standard ViT with similar number of layers and heads in each layer. With respect to the Swin Transformer, the EEViT-IP has 50% less computations. We analyze the efficiency of our models in the next section.

Compared with all the other ViT-based architectures, EEViT-IP extracts more visual representation information early in training. Figure 14 compares the performance of EEViT-IP with the other SOTA models, CaiT, Twins, and Swin, on the ImageNet-1K dataset with basic augmentations only. Because our focus is on the Transformer architecture itself, we restrict this comparison to basic augmentations, as Transformer performance is sensitive to additional variations in the data. Even though Swin-T performs slightly better than our EEViT-IP architecture, it is far less memory- and compute-efficient to train [12]. We benchmark the per-epoch execution time and the GPU memory consumed for different batch sizes during ImageNet-1K training in Table 6.

Unlike Swin, whose layers differ in resolution, channel size, and windowing, every layer of our EEViT-IP design is structurally identical. Each layer performs PerceiverAR-style attention on overlapping neighboring segments only. This results not only in a highly efficient attention computation but also in a uniform memory access pattern.

As can be seen from Figure 14, EEViT-IP extracts visual information very quickly, because performing the PerceiverAR operation on overlapping segments propagates information between segments from layer to layer. Thus, our EEViT-IP design is not only highly computationally efficient but also learns informative visual features early in training.

For our EEViT-IP design, we determined the best number of segments empirically: depending on the dataset, 8 or 16 segments usually give the highest performance (8 for smaller datasets and 16 for larger image sizes). More segments reduce execution time, at the cost of a small drop in accuracy. Table 7 shows how the number of segments affects the classification accuracy of EEViT-IP attention. For CIFAR-100, the EEViT model uses six layers with eight heads per layer and an embedding dimension of 384. The input image size is 32 × 32 with a patch size of 4 × 4, resulting in a total of 64 tokens. For relatively small images, four to eight segments usually yield the best classification accuracy. The Tiny ImageNet results in Table 7 use the FasterViT-EEViT-IP model, in which the attention in FasterViT is replaced by our EEViT-IP attention. Since FasterViT operates on 224 × 224 images, we rescale the Tiny ImageNet images to 224 × 224 before feeding them to the FasterViT-EEViT-IP model. A patch size of 14 × 14 is used, resulting in a total of 256 patches. As can be seen from Table 7, in this case 16 segments yield a higher classification accuracy.

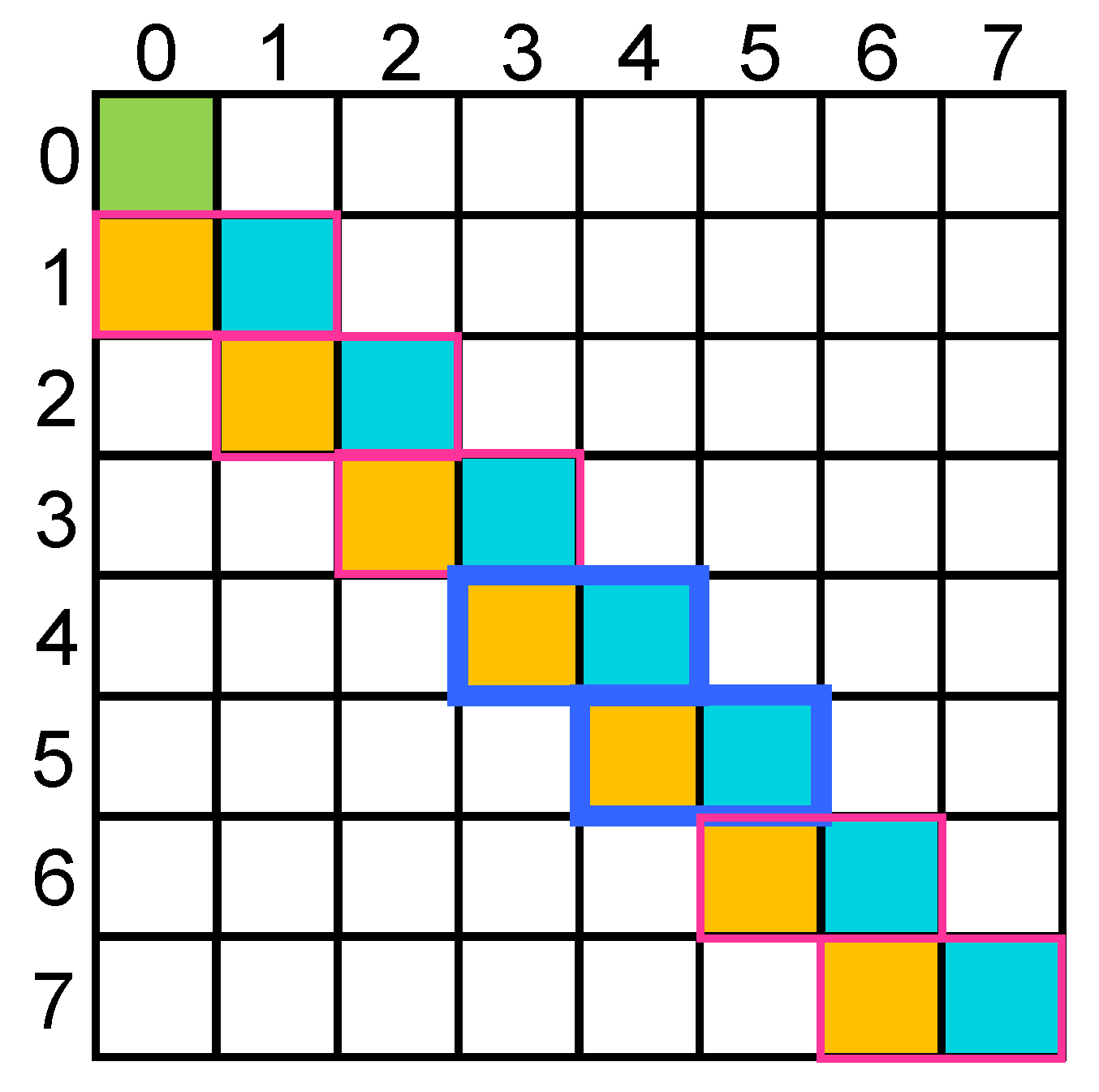

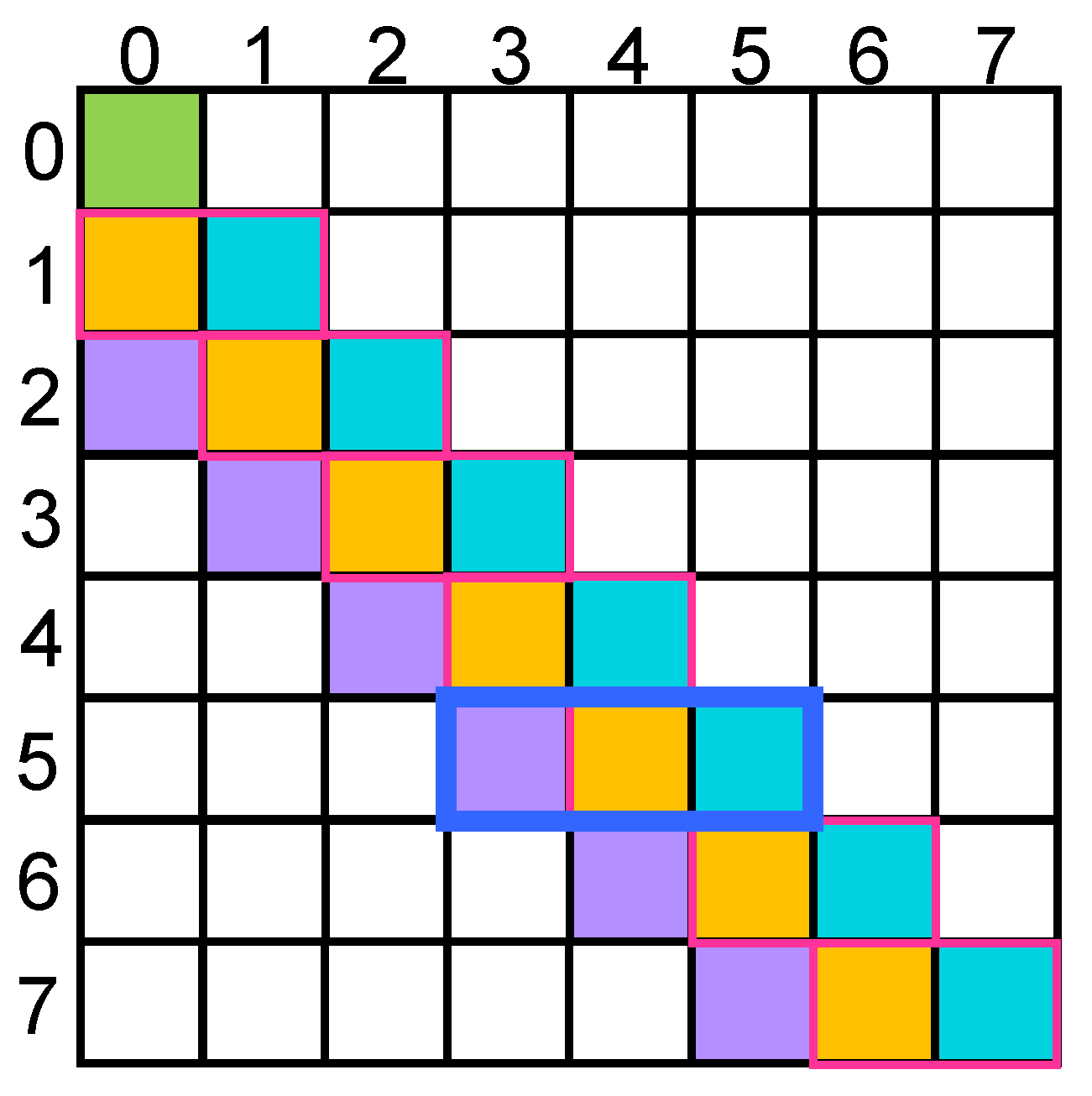

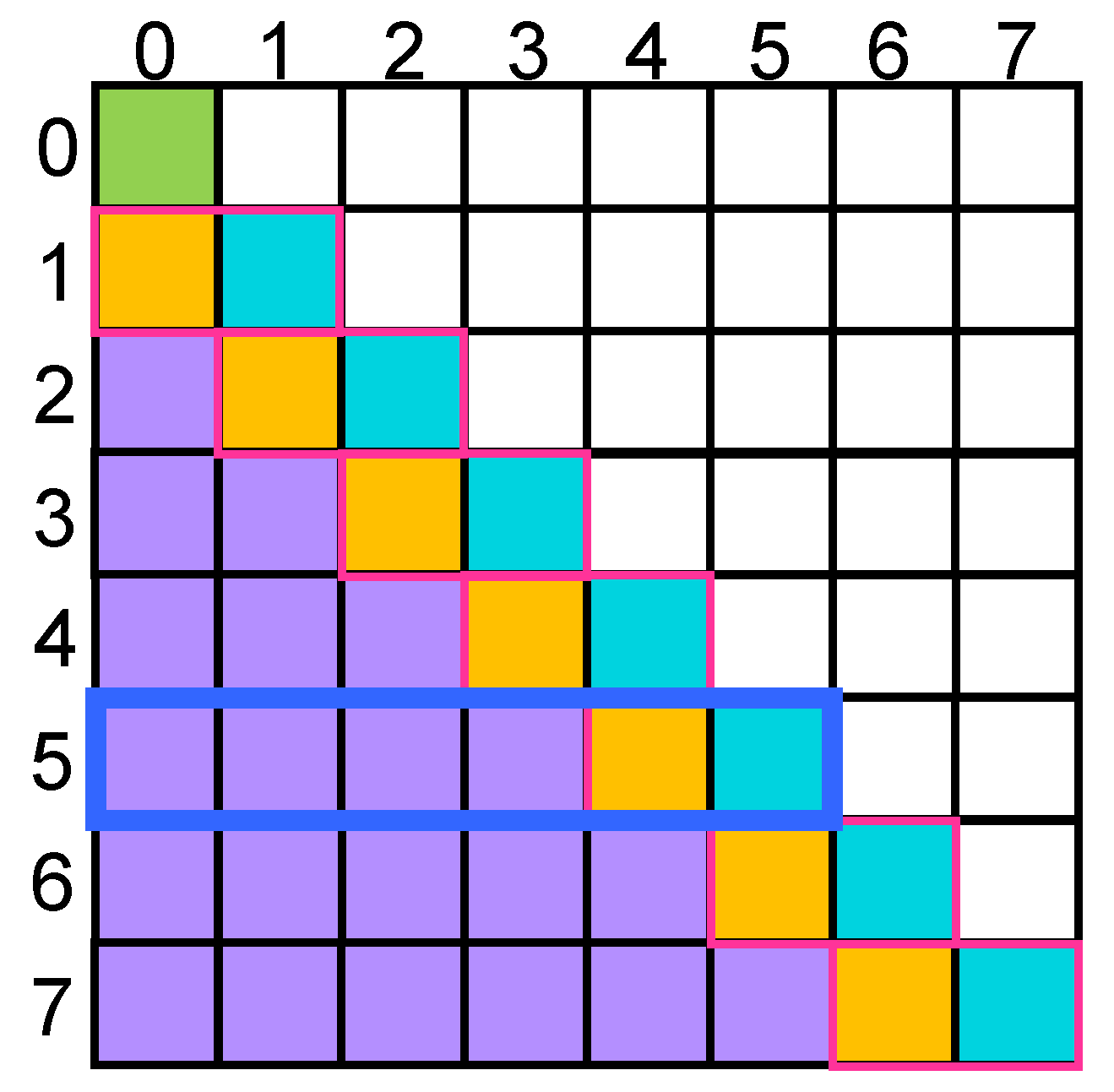

In general, EEViT-IP operates on each consecutive pair of segments in every layer, so, as shown in Figure 4, Figure 5, and Figure 6, the effective attention span implicitly grows by one segment per layer. To obtain implicit full attention across all patches, the number of segments should therefore be less than or approximately equal to the number of layers. In Table 7, the FasterViT model that we use has 13 layers, so in this case 16 segments produce a better result. Note that more segments yield a more efficient execution, as the attention is computed on smaller segments. Thus, there is a tradeoff between accuracy and efficiency in choosing the number of segments for our EEViT-IP design.
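The "one segment per layer" argument can be checked with a small reachability computation. The sketch assumes a purely forward pattern in which, in every layer, segment j attends to segments j − 1 and j (our reading of the consecutive-pair description above, not the authors' code), and counts the layers until the last segment has indirectly received information from all segments.

```python
import numpy as np

def layers_to_full_coverage(num_segments):
    """Layers needed until the last segment has (indirectly) seen every segment,
    assuming each layer lets segment j attend to segments j - 1 and j only."""
    step = np.eye(num_segments, dtype=bool)
    step[1:, :-1] |= np.eye(num_segments - 1, dtype=bool)  # segment j also sees j - 1
    reach = np.eye(num_segments, dtype=bool)               # reach[j, i]: j has seen i
    layers = 0
    while not reach[-1].all():
        # One more layer: j sees i if it attends to some k that has already seen i.
        reach = (step.astype(int) @ reach.astype(int)) > 0
        layers += 1
    return layers

for s in (4, 8, 16):
    print(s, layers_to_full_coverage(s))  # 4 3 / 8 7 / 16 15
```

Under this assumption, S segments need S − 1 layers for full coverage, which is consistent with the guideline that the number of segments should not greatly exceed the number of layers.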

Using a batch size of 256, EEViT-IP consumes 16.5 GB of GPU memory, with a per-epoch training time of 35 minutes for a model with 26.5 million parameters trained on the ImageNet-1K dataset. A comparison with some other recent designs, e.g., T2T, is not applicable, as T2T uses the standard ViT attention together with a much better patch tokenization than a standard ViT. If we replace T2T's attention with our IP attention, we obtain a more efficient T2T. Similarly, the designs of the Super Vision Transformer [25], EViT [26], ConvNeXt [27], and DeiT [14] are not meaningful in our comparison, as they are token reorganization approaches, convolutional or hybrid CNN-Transformer architectures, or distillation approaches. Our work concerns the design of a better attention mechanism, and thus we compare our approach to recent Transformer-based designs with novel attention mechanisms.

To reduce the attention complexity, the work in [28] replaces quadratic attention with linear attention mechanisms, such as Performer [29], Nyströmformer [30], and Linformer [20], to reduce GPU usage, and provides the inductive prior for image data through convolutional sub-layers. While efficiency gains are achieved, the accuracy reported on the CIFAR-10, CIFAR-100, and Tiny ImageNet datasets falls short of that of recent ViT models.

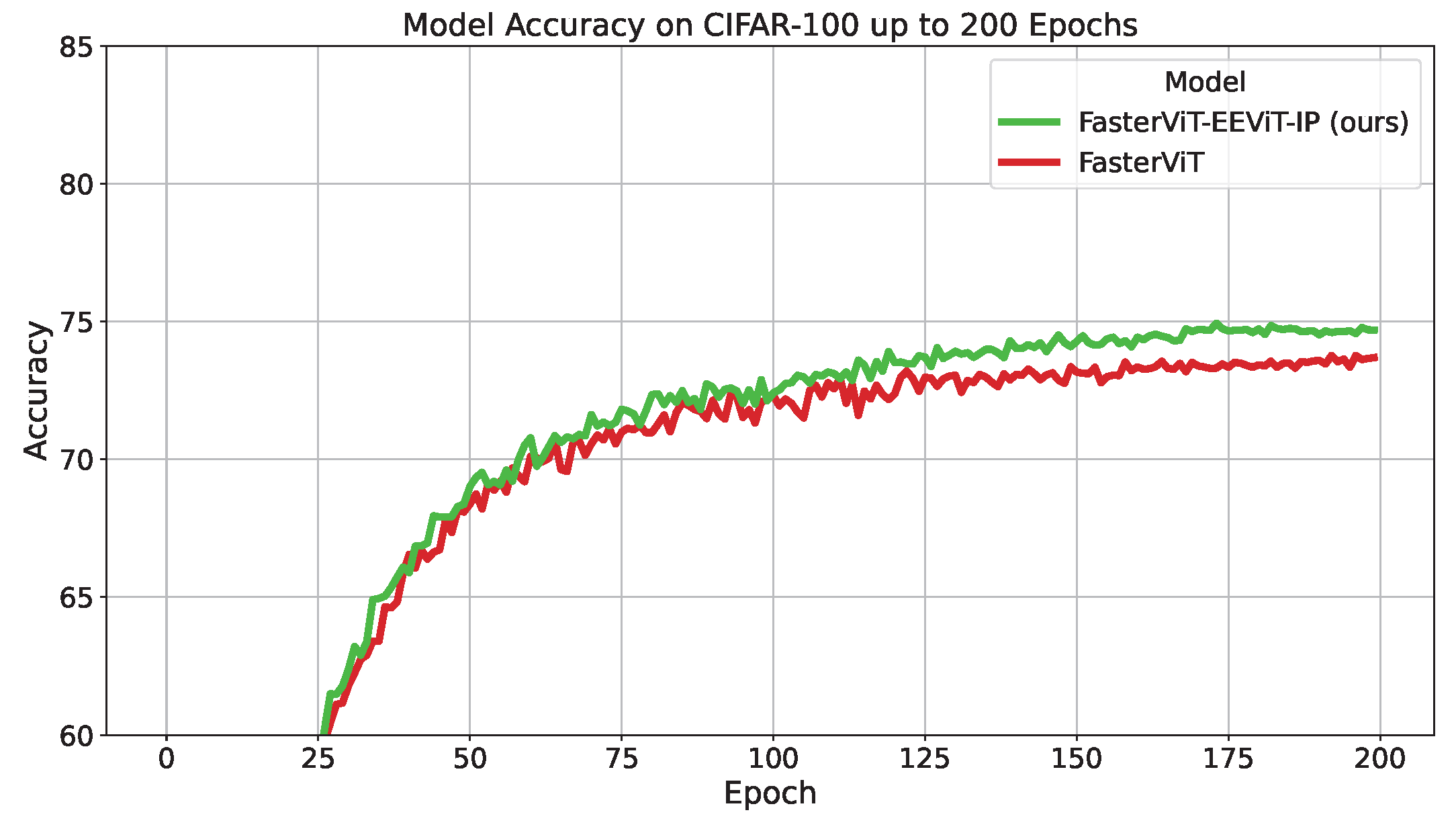

One of the recent important works in the ViT domain is FasterViT [17]. It uses a hierarchical attention mechanism along with carrier tokens. These dedicated tokens are assigned per window and provide both local and global receptive capability, enabling efficient cross-window communication. FasterViT replaces patch tokenization with a convolution-based patch embedding stem, i.e., a stack of convolutional layers that progressively reduce the spatial resolution while increasing the channel dimension. To demonstrate the effectiveness of our EEViT-IP attention mechanism, we replace FasterViT's attention module with EEViT-IP attention. Figure 15 and Figure 16 show the resulting performance improvement on the CIFAR-10 and CIFAR-100 datasets.

6. Discussion

Even though the Transformer architecture has been remarkably successful in the NLP and vision domains due to its attention mechanism, the $O(n^2)$ complexity of attention is a concern, especially as the sequence length $n$ grows. Thus, an active area of research is to reduce this complexity without a drop in predictive performance. Towards this goal, we have proposed two enhanced architectures in this paper: EEViT-PAR and EEViT-IP. EEViT-PAR improves the predictive performance of the previously proposed PerceiverAR [9] design. While PerceiverAR requires only about one fourth of the attention computations of the standard ViT, it suffers a drop in accuracy when used in the image domain. We slightly increase PerceiverAR's low computational cost but are able to achieve the same image classification accuracy as a standard ViT.

In EEViT-PAR, we divide the set of input tokens into a context and a latent component, as in the original PerceiverAR, but propagate both the processed context and the latent to the next $k$ layers. The original PerceiverAR stops propagating the context after the first layer, thereby incurring a loss in performance, as the context is absorbed into the latent after the first layer. Empirically, we have determined that a $k$ of only 2 or 3 is needed to achieve classification performance equivalent to that of a ViT. Consistent with the observations presented in CaiT [10], introducing the CLS token in the last 2–3 layers provides the majority of the gains in classification accuracy. We therefore incorporate the CLS token in only the last $p$ layers, as in CaiT [10], to improve the performance of our model. This causes no noticeable change in overall computational efficiency. The exact computation for the attention component of EEViT-PAR is given in Equation (37).

where $c$ is the size of the context, $l$ is the size of the latent, and $m$ is the total number of layers in the Transformer. For example, if we use $m = 12$ (12 layers), a sequence length of , and if , then EEViT-PAR has approximately 29% of the attention computations of a standard ViT with $n^2$ attention computations in each layer. Note that PerceiverAR has approximately 25% of the computations of a standard ViT. Thus, with approximately a 5% increase in computations relative to the baseline PerceiverAR, we achieve classification accuracy comparable to that of a standard ViT, as demonstrated by our empirical results on different datasets.
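The following is a hypothetical cost model, not Equation (37), that illustrates how such ratios can be computed. It assumes $c = l = n/2$ and $n = 256$, that PerceiverAR's first layer lets the $l$ latent queries attend to all $c + l$ tokens while its remaining layers are latent-only self-attention, and that EEViT-PAR repeats the first-layer pattern for $k$ layers. Under these assumptions, the ratios come out close to the approximately 25% and 29% figures quoted above; the helper names are illustrative only.

```python
def vit_cost(n, m):
    """Full self-attention: n * n query-key scores in each of m layers."""
    return m * n * n

def perceiver_ar_cost(c, l, m):
    """Layer 1: l latent queries attend to all c + l tokens;
    layers 2..m: self-attention among the l latents only."""
    return l * (c + l) + (m - 1) * l * l

def eevit_par_cost_assumed(c, l, m, k):
    """HYPOTHETICAL model (not Equation (37)): the first-layer pattern is repeated
    for k layers while the context is propagated, then latent-only layers follow."""
    return k * l * (c + l) + (m - k) * l * l

n, m = 256, 12
c = l = n // 2  # assumed equal split between context and latent
print(round(perceiver_ar_cost(c, l, m) / vit_cost(n, m), 3))            # 0.271
print(round(eevit_par_cost_assumed(c, l, m, k=2) / vit_cost(n, m), 3))  # 0.292
```

With $c = l = n/2$, the ratios do not depend on $n$; only $m$, $k$, and the context/latent split matter.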

Efficiency Analysis

With the goal of surpassing the classification accuracy of the ViT with even fewer computations than the PerceiverAR, our EEViT-IP applies the PerceiverAR concept to much smaller segments. Because the segments overlap, the learned visual attention propagates from one segment to the next through the layers of the Transformer. The exact attention computation of the EEViT-IP is given by Equation (38).

In Equation (38), s is the size of the segments into which the input sequence of length n is divided, and m is the number of layers in the Transformer. As an example, if the input image is divided into 256 patches and the segment size is 32, then a 12-layer EEViT-IP Transformer requires only 13% of the attention computations of a standard ViT. In addition, our EEViT-IP is 60% more efficient than the PerceiverAR.
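As a back-of-the-envelope check, assume (a simplification of ours, not Equation (38)) that each of the n tokens attends to at most s keys, one segment's worth, in every layer. The ratio to a standard ViT then no longer depends on m:

```latex
\frac{\text{EEViT-IP attention scores}}{\text{ViT attention scores}}
  \le \frac{m\,n\,s}{m\,n^{2}}
  = \frac{s}{n}
  = \frac{32}{256}
  = 0.125 \approx 13\%
```

For the example above (n = 256, s = 32), this bound is consistent with the quoted figure of about 13%.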

As can be seen from Table 4 and Table 5, our EEViT-IP surpasses most SOTA ViT designs in top-1 accuracy on the different benchmarks. The Swin Transformer matches the top-1 performance of our design, but our design is more computationally and memory efficient than the Swin Transformer. In a Swin Transformer, the image is first split into non-overlapping 4 × 4 patches, which are further grouped into non-overlapping windows of 7 × 7 patches. Attention is computed only locally within each 7 × 7 window of 49 tokens. In comparison, we also divide the input image into patches, but depending on the image size, the patch size can be larger. We further divide the embedded patches into segments and compute attention only within each segment. Because the segments overlap, we effectively achieve the same result as the shifted windows of the Swin Transformer, namely information exchange across neighboring windows. Since our segment size can be made smaller, we can achieve higher computational efficiency than a Swin Transformer. Further, our segmentation scheme is much more flexible than the Swin Transformer with respect to the required image size.

The PerceiverAR-style computation on overlapping segments causes attention information to flow from each segment to its neighboring segment in each succeeding layer, eventually reaching all segments after a few layers. We visually depict this feature of EEViT-IP in Figure 17. The receptive attention field continues to grow towards the right of each segment as the computation proceeds through the layers. Attention is computed only between neighboring half-segments, which achieves an effect similar to window attention in the Swin Transformer.
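The rightward growth of the receptive field can be simulated with a small boolean model. The sketch below illustrates assumptions of our own and is not the EEViT-IP implementation: tokens are grouped into half-segments of size h = s/2, and each half-segment attends only to itself and to its left neighbor, so that one segment spans two neighboring half-segments. The helper names `segment_attention_mask` and `receptive_field` are hypothetical.

```python
import numpy as np


def segment_attention_mask(n: int, s: int) -> np.ndarray:
    """Boolean (n, n) mask; entry [i, j] is True if token i may attend to token j.

    Assumed scheme: tokens are grouped into half-segments of size h = s // 2,
    and each half-segment attends to itself and to the half-segment on its left.
    """
    h = s // 2
    block = np.arange(n) // h               # half-segment index of every token
    diff = block[:, None] - block[None, :]  # query block index minus key block index
    return (diff == 0) | (diff == 1)


def receptive_field(mask: np.ndarray, layers: int) -> np.ndarray:
    """Boolean (n, n) matrix; entry [i, t] is True if input token t can
    influence token i after `layers` attention layers restricted by `mask`."""
    n = mask.shape[0]
    reach = np.eye(n, dtype=bool)           # before any layer, a token sees only itself
    mask_int = mask.astype(np.int64)
    for _ in range(layers):
        # Token i now sees everything already seen by any token j it attends to.
        reach = (mask_int @ reach.astype(np.int64)) > 0
    return reach


if __name__ == "__main__":
    mask = segment_attention_mask(n=64, s=16)
    print(f"attention scores per layer: {int(mask.sum())} of {mask.size}")
    # attention scores per layer: 960 of 4096
    # Number of input tokens visible to the last token after 0..7 layers.
    print([int(receptive_field(mask, depth)[-1].sum()) for depth in range(8)])
    # [1, 16, 24, 32, 40, 48, 56, 64]
```

Under this assumed scheme, the window visible to the rightmost token grows by one half-segment (8 tokens here) per layer, while the first half-segment never sees tokens to its right, which mirrors the one-directional growth described above. The per-layer cost (960 scores) also stays below the n·s = 1024 bound, compared with n² = 4096 for full attention.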

Although the Swin Transformer achieves very good image recognition accuracy, it has high GPU memory requirements during training. The windowed attention mechanism of Swin theoretically reduces the quadratic complexity of attention to linear, but the intermediate attention maps and other activations within each window must still be stored for the backward pass, resulting in high GPU memory usage. To address this issue, Swin V2 [12] employs activation checkpointing and the ZeRO optimizer to save GPU memory. In our study, we observed that training Swin-T on ImageNet-1K with a batch size of 128 requires more than 24 GB of GPU memory. In comparison, our EEViT-IP requires only 7 GB of GPU memory with the same batch size of 128.

Twins [13] is another popular architecture similar in design to the Swin Transformer. It separates local and global attention into two distinct modules: Locally-grouped Self-Attention (LSA) and Global Sub-sampled Attention (GSA). Although Twins is efficient and slightly more accurate than Swin, its drawback is the difficulty of adapting it to different tasks due to the complex integration of LSA and GSA. In comparison, our EEViT-IP architecture is much simpler in its attention processing, as it only computes attention over overlapping segments, which makes it a better candidate for VLMs. We also achieve better classification accuracy than Twins while being more computationally efficient.

While the EEViT-PAR architecture that we developed is an incremental improvement over the PerceiverAR baseline that incorporates ideas from CaiT, our EEViT-IP model is an elegant extension of the PerceiverAR ideas that yields a highly efficient ViT without loss of contextual information. The efficiency analysis in the previous section indicates that our EEViT-IP requires approximately 13% of the attention computations of a standard ViT. Further, this reduction in computation causes no loss in performance and in fact provides a better inductive bias, as the model learns the classification with less information in the earlier layers. This is further supported by our empirical results on different datasets.

Our EEViT-IP architecture is highly suitable for use in VLMs because it is an entirely attention-based Transformer that computes attention efficiently without losing context over long patch/token sequences. Below, we list some of the important reasons why a Transformer-only architecture matters for VLMs.

The unified attention mechanism of a Transformer can seamlessly process both visual and textual modalities, whereas convolutional backbones such as ConvNeXt [27] are optimized primarily for spatial hierarchies in images and lack native mechanisms for cross-modal alignment. While ConvNeXt-ViT [27] hybrids leverage convolutional inductive biases for local feature extraction, Transformer-only designs offer a more unified and modality-consistent architecture, which is critical for cross-modal alignment in VLMs.

The attention mechanism in Transformers explicitly models long-range dependencies, which is essential for capturing semantic correspondences between image regions and textual tokens. Convolutional networks, by contrast, are inherently local and require deeper stacks or additional modules to approximate this capability.

State-of-the-art VLMs (e.g., CLIP [31], BLIP [32], LLaVA [33], SigLIP [34], and SigLIP 2 [35]) use Transformer-based designs, indicating that Transformers offer superior scalability and generalization across tasks such as retrieval, captioning, and multimodal reasoning.

Transformer scaling laws show predictable improvements with larger model sizes and more training data, a property that has not been consistently observed with convolutional-hybrid backbones in multimodal learning [36].

The homogeneity of a Transformer stack simplifies optimization, transfer learning, and downstream adaptation (e.g., fine-tuning with LoRA or adapters), whereas hybrid ConvNeXt-ViT models often require more complex architectural tuning.