1. Introduction

Recent advances in AI have surpassed conventional methodologies and disrupted various human-powered fields. These fields are becoming increasingly digital, from medical pathology [1] to industrial smart systems [2] and even socio-emotive companionship [3]. However, this prosperity is fickle, as existing AI methodology is largely ineffective when devoid of human guidance [4]. This is a clear indicator that research on AI learning should move closer to metalearning. Additionally, the parameter explicitness required of model designers should be considered when building training procedures for artificial agents (which naturally lack intrinsic motivation). In this context, we postulate artificial emotion as a missing catalyst of exploratory behavior in AI and develop primary work on how to use it for that goal. This particular characteristic can enable agents to focus on data in accordance with their needs/interests, given how changes in appraisal and attention jointly alter the sensory processing of stimuli in an effect dubbed emotional salience [5]. In fact, exploration is already known to be a fundamental aspect of cognitive development and independent behavior in human beings [6], given its contribution to the acquisition of knowledge. For instance, confirmation biases influence how information is sought to ratify prior beliefs and inference [7]. Consequently, for AI to grow autonomous, researchers should strive to develop methodologies congruent with biological processing and optimize informational search.

Scrutiny of epistemic/achievement states (i.e., emotions pertaining to the generation of knowledge and a sense of success) for the purpose of benefiting exploration tactics in AI has been attempted, though only from a few general perspectives. Approaches have applied model behavior differences as criteria to determine whether data is adequate for classification [8,9], and divergence in transition probability has been employed as learning reinforcement [10]. There are also instances of states being inferred from intrinsic reward [11] to drive curiosity in exploration. Results were interpreted as congruent with emotion in real life, where internal cognitive conditions related to emotion (e.g., incongruity and expectancy) do influence exploration [12,13]. However, these interpretations only posit conditions as causes of emotional variation, which then impacts exploration. Hence, emotion is only implicit and does not benefit from the better contextual adaptability, informational density, or socio-communicative relevance that explicitness can bring. This leads to low generalizability. Practical applications are lost, such as the impact on learning aspects besides exploration, human understanding of AI decision-making, or the ability to further integrate environmental information. In contrast, emotional influence is well acknowledged for several biological factors [14]. Thus, reproducing conditions for direct emotion manifestation and consequential impact may represent a better methodology than current state-of-the-art approaches.

This improvement is corroborated by works that have achieved greater performance from autonomous emotion-mediated parameter optimization. For example, works on the prediction error, learning rate, or actual reward have presented emotional modulation based on the difference between short- and long-term averages of reward entropy over time [15] or emotion quantization as a linear combination of separate power levels [16]. A mere difference in visual stimuli has also been made to influence valence–arousal pairs [17,18], which then affect learning parameters. Moreover, grounding emotion metalearning techniques in neurophysiology, where researchers strive to replicate limbic circuitry and neuromodulation, has been shown to provide performance advantages in decision-making [3]. It is a type of strategy that benefits from interdisciplinarity, yet it is uncommon. As such, it could provide an edge when developing new parameter calculation techniques and contribute to emotion-mediated AI progress.

Linking the lack of learning autonomy in artificial agents with an observable influence of intrinsic emotional drives in living beings, there is motivation to emulate the latter in an attempt to mitigate the former. This emulation should build on knowledge already established by neuropsychological studies as a bootstrapping point. Furthermore, it should remain task-agnostic yet still provide some type of advantage for agent learning, either in terms of autonomy or efficiency. In order to tackle the challenges of endowing AI with emotion-mediated intrinsic driving and study its outcomes, this paper considers the following two research questions:

RQ 1: How can emotion be represented in artificial agents, and how will its influence over their behavior be evaluated so that results may be valid and comparable to human behavioral studies? To achieve this, we first build epistemic and achievement emotion functions based on links demonstrated in cognitive psychology. These are applied to a learning framework, whose behavior is evaluated under conditions similar to those of human studies [19,20,21,22].

RQ 2: Will the manifested correlations between emotion and exploratory behavior be useful for data processing by agents, similar to what happens with human beings? To understand this we integrate the framework in a learning loop, replicated over a large number of agents, and assess emergent correlations. Parallelism is then drawn between the former and reported human behavior.

Answering these questions is meant to contribute a valid technique for artificial agents to explore data more autonomously. It is also meant to spark more interest in human–AI interdisciplinary studies. The rest of the paper is organized as follows:

Section 2 briefly introduces the topic of emotion–behavior studies in psychology after framing our approach within the context of AI.

Section 3 describes the design of each framework component, subsequently overviewing the experimental arrangement inspired by human studies.

Section 4 details the experimental procedure and obtained results, followed by Section 5, which discusses them. Finally, Section 6 concludes the paper.

3. Materials and Methods

The proposed framework consists of a task-oriented module, whose rate of exploration is dictated by an actor–critic module. The latter derives this rate from performance-based emotional scoring. The following sections overview each component of the system, namely how the emotional functions were replicated from psychology observations, what composes the framework, and how the learning cycle is designed.

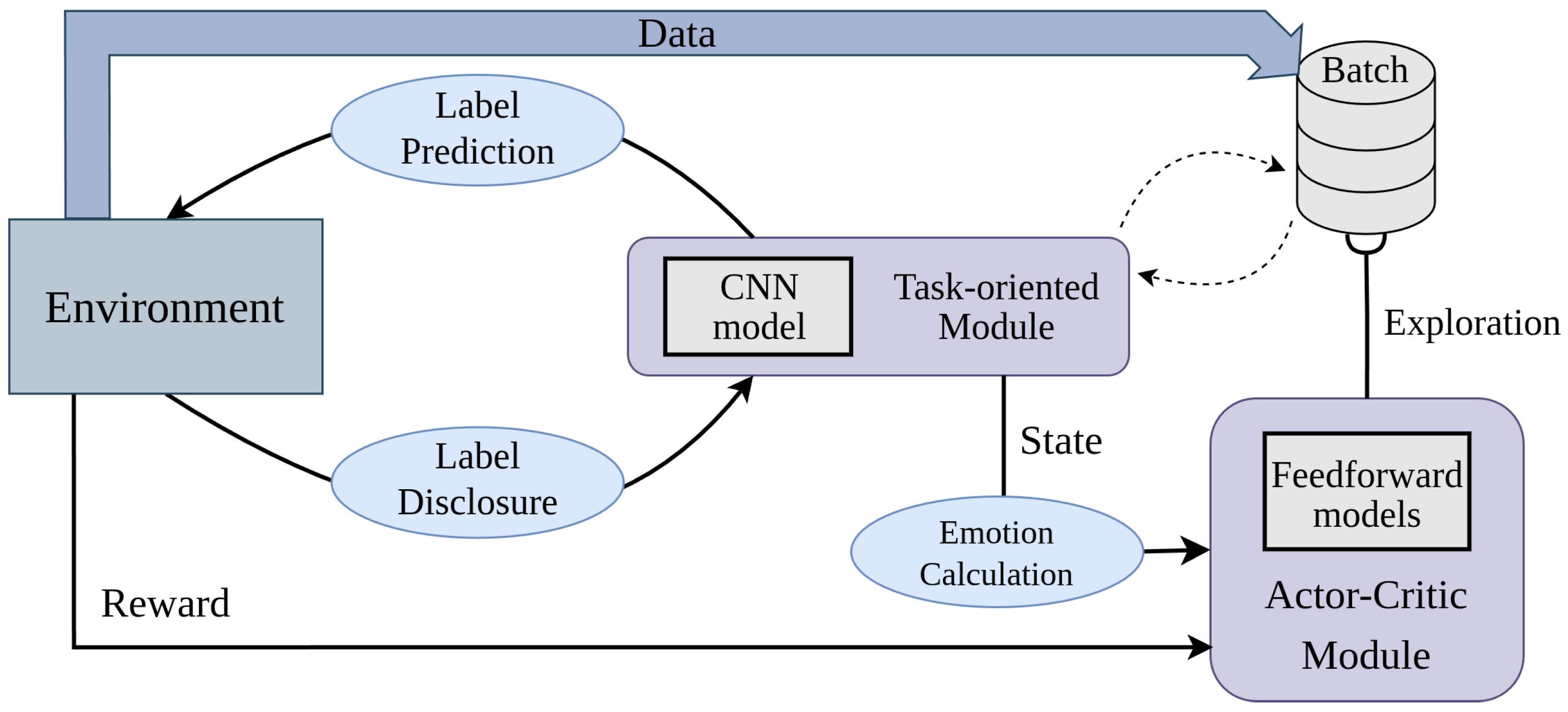

Figure 1 displays the proposed framework as a reference.

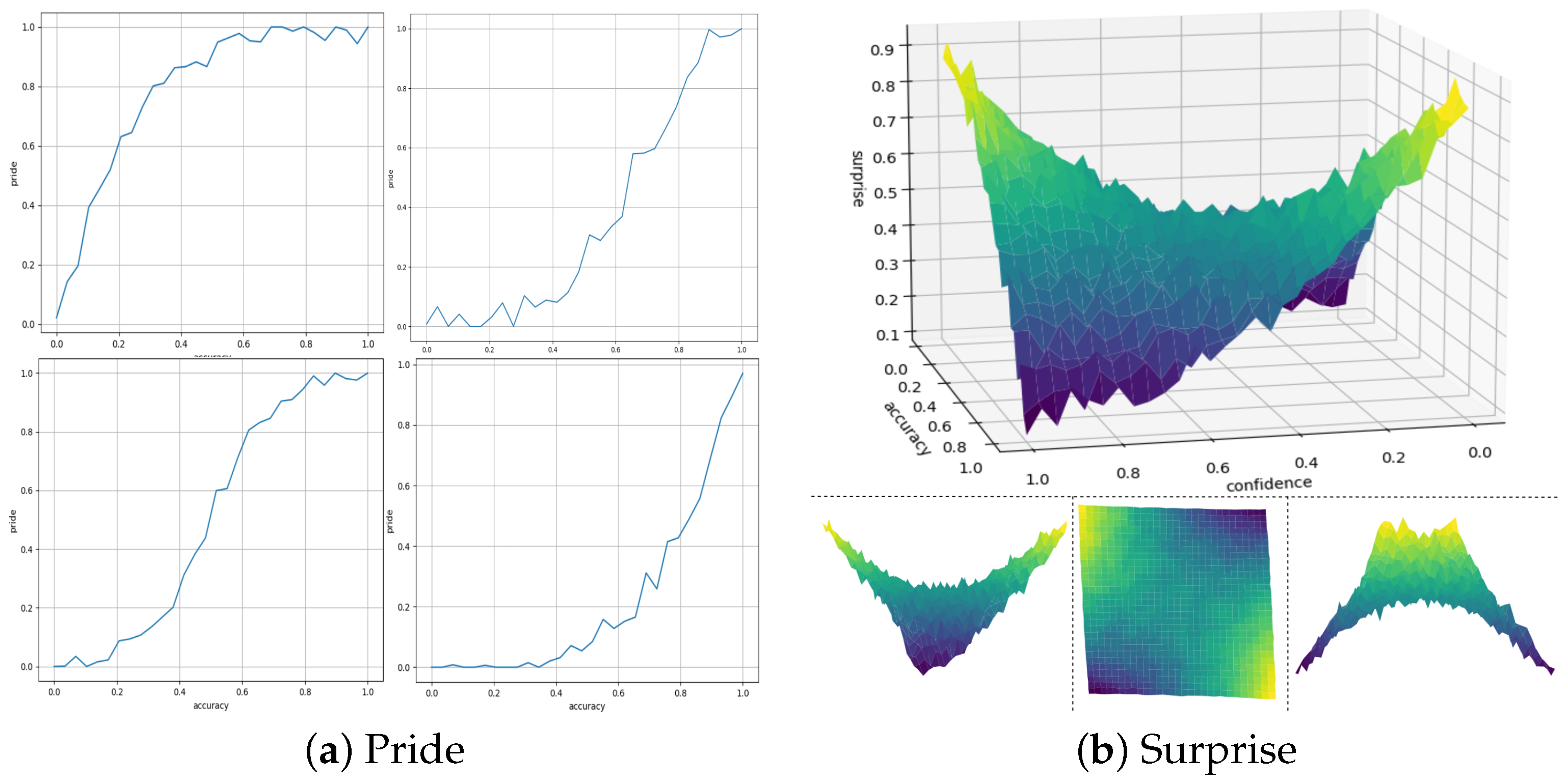

3.1. Replication of Surprise and Pride

We propose deriving epistemic and achievement emotion from the standard performance metrics of a deep learning methodology, interpreting them as underlying cognitive conditions. Specifically, testing accuracy reflects the adequacy of a model towards some task by gauging overall correctness over unseen data. Therefore, it may be employed as an indicator of error and achievement. In this case, escalation in accuracy scores can be interpreted as increasing success. Conversely, de-escalation entails a decrease in success. Variations in the feeling of pride should therefore match variations in accuracy, corresponding to personal achievement or lack thereof [37]. This accuracy–pride match can entail a curve with a positive slope and unknown convexity, with small variations. Factoring in confidence, besides accuracy, can broaden the set of representable emotions. For instance, high-confidence errors trigger the feeling of surprise, as derived from the cognitive incongruity explained previously. Additionally, insecure attainment of success may also induce surprise [38]. In these scenarios, a respective decrease or increase in confidence will instead lower surprise. A saddle-like behavior can therefore describe this emotion, as polarized variations of accuracy and confidence together imply intense values of surprise, whilst matching magnitudes of the two indicate a reduction or lack of this emotion. This view regarding surprise and pride is widely backed by the cognitive psychology literature [12,13,20,21].

Several different functions can represent the behavior described for surprise and pride. Here, a single set of functions that fulfill the requirements was selected arbitrarily for the main experiments. Examples are shown in Figure 2 for reference. As described, these examples factored in performance metrics of the task-oriented module, which is inspired by the impact of action outcomes over emotion [39]. This partially answers RQ1, contributing a novel way for emotion to occur in artificial agents, making it both objective and integrable in other AI pipelines.

3.1.1. Pride

Since accuracy has been shown to positively predict pride [20], is easy to compute, and is already bounded within [0, 1], it can adequately model this emotion. To capture how pride might vary with increasing accuracy a, we propose a Gaussian-like bump function. This displays an overall upward trend, peaking near a = 1 (perfect accuracy), to reflect strong pride near high performance. We also include minor fluctuations to account for individual variability (e.g., personality and context). An example of such a function is

In the equation, a steepness parameter controls how sharply pride rises as accuracy improves. The Gaussian noise introduces individual variability. The clip function keeps pride within its natural bounds (0 and 1). This example is not meant to be precise or universal, but rather to illustrate a plausible emotional trajectory. Pride grows with success, but its exact path varies across individuals.
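The behavior described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the function used in the experiments: the name `pride`, the steepness `k`, and the noise level `noise_std` are hypothetical, and the exact bump shape is a guess at a Gaussian-like curve that peaks at perfect accuracy.

```python
import math
import random


def pride(a, k=4.0, noise_std=0.02, rng=None):
    """Illustrative pride score for an accuracy a in [0, 1].

    k (steepness) and noise_std are hypothetical values chosen for
    illustration; they are not taken from the paper.
    """
    rng = rng or random.Random()
    # Gaussian-like bump centred on perfect accuracy (a = 1):
    # the score rises monotonically as a approaches 1.
    bump = math.exp(-k * (1.0 - a) ** 2)
    # Small Gaussian noise stands in for individual variability.
    noisy = bump + rng.gauss(0.0, noise_std)
    # Clip to the natural bounds of the emotion.
    return min(1.0, max(0.0, noisy))
```

Varying `k` and the noise seed per agent would be one simple way to obtain individual differences while keeping the same overall upward trend.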

3.1.2. Surprise

Surprise depends on confidence, besides accuracy. Prior work shows that high-confidence errors are strong predictors of surprise [20]. To reflect this, we introduce a confidence score c, capturing the elevated confidence distribution yielded by the task-oriented model. Our surprise function is proposed to provide high values when confidence and accuracy disagree (saddle-like behavior). This happens when an agent is confident but wrong (high c and low a) or unsure but correct (low c and high a). It takes the following form:

Here, the core term captures the disagreement between accuracy and confidence. A rotation and translation are applied to center and orient the surface so that surprise is maximized when accuracy and confidence diverge. As before, Gaussian noise introduces variability, and clipping keeps outputs within the range [0, 1]. A further term re-centers the output toward the middle of the range, reducing clipping distortion.
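A saddle-like surface with these properties can be sketched as follows. The form below is an assumption made for illustration rather than the equation used in the paper: the product of the centered inputs is a standard saddle rotated by 45 degrees (up to scale), and `gain`, `noise_std`, and the 0.5 offset are hypothetical choices.

```python
import random


def surprise(a, c, gain=2.0, noise_std=0.02, rng=None):
    """Illustrative surprise score for accuracy a and confidence c in [0, 1].

    gain, noise_std and the 0.5 offset are hypothetical; the paper's own
    rotation angle and constants are not recoverable from this text.
    """
    rng = rng or random.Random()
    # Translate both inputs so that 0 marks the middle of their range.
    da, dc = a - 0.5, c - 0.5
    # Saddle term: positive when a and c fall on opposite sides of the
    # centre (disagreement), negative when they agree.
    core = -gain * da * dc
    # The 0.5 offset re-centres the output to limit clipping distortion.
    noisy = 0.5 + core + rng.gauss(0.0, noise_std)
    return min(1.0, max(0.0, noisy))
```

With these defaults, a confident error (a = 0, c = 1) maps to the maximum score, while a confident success (a = 1, c = 1) maps to the minimum.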

3.2. Framework Overview

In the framework, three models were implemented: the task-oriented, actor, and critic models. This combination was loosely inspired by human neural functioning during a psychological experiment, where part of the brain is focused on task success (e.g., prefrontal cortex), which is mediated by feedback from other regions (e.g., basal ganglia). Together, the three models constituted each artificial participant, which was fed data for classification with potentially wrong labels. This biological parallelism was purposeful so that the obtained results could be contrasted with behavioral studies with adequate validity, in line with answering RQ1 and fostering human–AI interdisciplinarity in state-of-the-art research. Furthermore, this clear separation between the task-oriented module and emotion-to-exploration optimization expands on current AI exploration techniques, which usually combine them and disregard bio-inspiration.

3.2.1. Task-Oriented Module

This module is meant to carry out a cognitive task. Here, handwritten digit recognition was performed using the MNIST dataset [40] for the sake of simplicity. Other tasks would also be possible, as the module is task-agnostic. Convolutional and feedforward branches were combined in a VGG-like architecture [41] to first enhance visual cues representative of the content in images and then classify them as digits. The architecture is simple, with the layer arrangement outlined in Table 1.

In order to train this model, half of the MNIST training dataset was used unadulterated. Standard backpropagation was employed using the Adam optimizer [

42] for 50 epochs, with a batch size of 64. Testing with the MNIST test set yielded over

accuracy and near-zero loss. The confidence distribution is heavily concentrated in the high-confidence bins, with over 98% of samples falling within the [0.95, 1] range. This indicates that the model is highly confident in its predictions. Consequently, it is well-suited for applications in our framework, where such consistent certainty parallels the behavior of a person that is highly confident as a result of repeated success. This further validates an answer to RQ1.

As for the second half of the MNIST training dataset, it was used directly in the main surprise/pride experiments. It was adulterated so that 50% of its instances had random labels, making them different from the specific digits they represented. The adulteration is represented in Figure 3b. This was performed at random indices, so that correct and incorrect labels would be well interspersed. Hence, despite being technically correct when classifying any of the 30,000 images used in the experiment, the pre-trained task-oriented network would be met with disparate labels approximately 50% of the time. This discrepancy is meant as the emotional trigger in the framework.
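The label adulteration described above can be illustrated with a short sketch. It assumes plain Python lists of integer labels; the function name `adulterate_labels`, the seed, and the choice to draw each wrong label uniformly from the nine other digits are assumptions made for illustration.

```python
import random


def adulterate_labels(labels, fraction=0.5, num_classes=10, seed=0):
    """Return a copy of labels with a fraction replaced by wrong labels.

    The corrupted positions are drawn at random so that correct and
    incorrect labels are interspersed. The name, seed and uniform choice
    of the wrong digit are hypothetical.
    """
    rng = random.Random(seed)
    corrupted = list(labels)
    n_bad = int(round(fraction * len(corrupted)))
    # Pick distinct random indices to corrupt.
    bad_idx = rng.sample(range(len(corrupted)), n_bad)
    for i in bad_idx:
        # Choose any class except the true one, so the label is always wrong.
        wrong = [k for k in range(num_classes) if k != corrupted[i]]
        corrupted[i] = rng.choice(wrong)
    return corrupted, sorted(bad_idx)
```

Returning the corrupted indices alongside the labels makes it easy to check afterwards which predictions were penalized only because of the adulteration.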

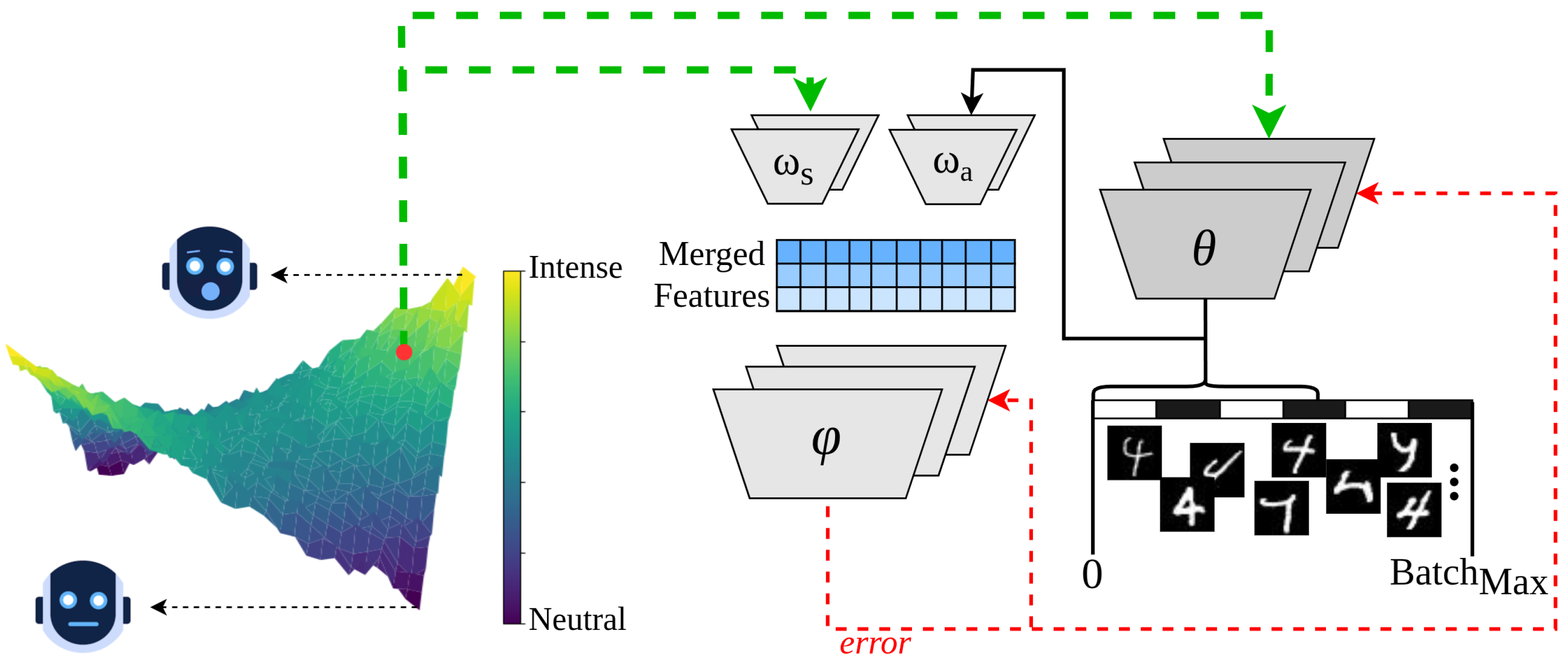

3.2.2. Actor–Critic Module

Our system requires a continuous evaluation of its own emotional state, processing it into an exploratory rate as the most appropriate action. To achieve this, we drew inspiration from the habitual actor–critic dichotomy of the basal ganglia [43] to design decision-making neural modules in our artificial agents. Since we employ a form of directed exploration in our agent task, a deterministic approach would be more fitting than a stochastic one. Hence, deep deterministic policy gradients (DDPGs) [44] were implemented as AI parallels of the basal ganglia. This type of reinforcement learning (RL) methodology assumes separate networks: the critic model and the actor model. These collaborate to map the state to the action deterministically, attempting to maximize reward. Both the actor and the critic were implemented as multi-layer perceptrons focused on generating embeddings, which are then reduced, respectively, to an action or a rectifying signal. These embeddings are parsed from the emotional state of the artificial agent, which is taken as the sole input for the actor, and tupled with the chosen action for the critic. An overview of this process is shown in Figure 4.

Within the RL paradigm, the agent state is activated as formula-driven surprise or pride scores. With it, the actor decides on an exploratory rate to be used by the task-oriented module. The critic then signals the actor regarding that rate’s task-oriented usefulness. This translates as attentional shifting, as the actor is effectively warranting the task-oriented module to perform more/less intake of data in order to mitigate/potentiate emotional exacerbation. The resulting decision policy will therefore codify the exploratory rate in terms of epistemic or achievement emotion. We quantify this rate as a percentage to extract a fraction of the batch size, which is bound by . While not an exact representation of the basal ganglia and its related structures, this arrangement boasts similarities both architecturally and in terms of functioning of a human participant’s decision-making process. A comparison with human study results becomes more valid, in line with RQ1.
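As a small illustration of how an exploratory rate might be turned into a number of samples, consider the sketch below. The upper bound `max_batch=64` is an assumption borrowed from the training batch size mentioned earlier, since the actual bound is not recoverable from this text; the function name is likewise hypothetical.

```python
def explored_batch_size(rate, max_batch=64):
    """Convert an exploratory rate (a fraction) into a sample count.

    max_batch is a hypothetical upper bound used only for illustration.
    """
    # Keep the actor output inside [0, 1] before scaling.
    rate = min(1.0, max(0.0, rate))
    return int(round(rate * max_batch))
```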

3.3. Learning Cycle

Learning a correlation between surprise or pride and exploratory behavior involved all three models: the task-oriented, actor, and critic models. Since the first is already trained and highly confident in its predictions, its weights are frozen and used for forward passes only. To start, the set of MNIST with partially adulterated labels is made available to the task-oriented model for classification at instance-wise steps, which are mediated by the actor–critic module. This means an item is first picked randomly and processed to generate a corresponding label. The adulteration of labels ensures that a portion of the predictions is unavoidably incorrect. Still, confidence remains high due to the initial training process and weight freezing. The system is now able to experience both successful classification as well as incur high-confidence errors. Such circumstances induce emotional variation in accordance with the formulae described previously. This information is inputted to the actor, which will decide how much data of the same type should be analyzed subsequently (i.e., explored), using the output rate to compute a batch fraction. Processing of this fraction results in further emotional variation. Its comparison with single-instance states can yield insight into emotional progression during the cognitive task, in addition to its relationship with exploratory fluctuation overall.

The emotional scores of the system and the actor-derived exploratory rate are taken in by the critic for adequacy assessments. This process depends on whether the chosen rate improves the system’s condition, contributing towards its objective. To specify this, we design a reward signal following the standard assumption that participants typically intend to perform well in the activities they undertake, maximizing success. Therefore, reward varies analogously to common human functioning wherein reward-coding neurons respond to success from profitable decision-making [45] and maintenance of a homeostatic balance [46]. In our framework, each RL agent obtains a basis reward value, whose polarity corresponds to that of the difference between explored batch accuracy and single-instance accuracy. This ensures that exploration is useful only if it yields improvements in task performance. Additionally, agents are provided with a sparse reward matching the variation in epistemic/achievement emotion that occurs during a step. This either minimizes surprise or maximizes pride, complying with the free energy principle, which illustrates a necessity of self-organizing agents to reduce uncertainty in future outcomes [47]. The reduction can stem from knowledge diversification, which is boosted by an exploratory increase, or from near-complete reliance on current knowledge, where exploration is largely avoided.
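The two reward components described above can be combined as in the following sketch. It is a minimal reading of the text, not the implementation used in the experiments: the function name, the unit magnitude `base`, and the simple summation of the two terms are assumptions.

```python
def step_reward(batch_acc, single_acc, emo_before, emo_after,
                emotion="surprise", base=1.0):
    """Illustrative per-step reward for the actor-critic module.

    base and the additive combination are hypothetical choices.
    """
    # Basis reward: its sign follows the accuracy difference between the
    # explored batch and the single instance (+base, 0 or -base).
    diff = batch_acc - single_acc
    basis = base * ((diff > 0) - (diff < 0))
    # Emotional term: reward a drop in surprise or a rise in pride.
    delta = emo_after - emo_before
    emotional = -delta if emotion == "surprise" else delta
    return basis + emotional
```

For example, an exploratory step that raises accuracy and lowers surprise from 0.9 to 0.4 would yield a reward of about 1.5 under these assumptions.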

4. Experiments and Results

The experimental application of our framework attempted to remain as close to Vogl’s study as possible [20]. Since variations in nature/nurture naturally influence emotion and decision-making [48], it is important to account for personality variability. Thus, a total of 250 artificial agents were created, with distinct emotional functions obtained through parameter variation and added noise. These were employed for surprise and pride experiments separately. For either emotion function,

was employed, along with random combinations of

and

to generate varied artificial agents with individual differences while still following the same grand pattern. The learning cycle was applied to each artificial agent, with a reset occurring every 20 steps to match Vogl’s 20-statement procedure. This reset marked the beginning of each learning episode, with a total of 100 episodes solidifying the robustness of our observations on the emotion–exploration relationship. Additionally, the actor and critic models used the Adam optimizer during the cycle run, with learning rates of 0.001 and 0.002, respectively. A replay buffer was also implemented here to reduce the variance from temporal correlations. Target networks were also implemented to help regularize learning updates. Overall results are depicted in Figure 5. The associations between exploratory behavior and epistemic/achievement emotions are analogous to findings reported in the original cognitive psychology study we strove to emulate [20].

4.1. Outcome Analysis

First, model convergence was required to ensure behaviors learned by the artificial agents were not random. This was achieved for either emotion, as is demonstrated by the increase in and plateauing of cumulative reward over time (

Figure 5 middle column). Specifically for surprise (top row), the initial reward is restricted to

, peaks at

, and ends within the range of

, with an early-stage dip minimum of

. For pride, the initial reward is encompassed by

, within which

is the minimum. The final reward here varies at

, though the overall maximum value

is achieved shortly before. The average cumulative reward across agents also increases for both emotions. This demonstrates stable yet slight growth for pride. Contrastingly, surprise entails a short depression in earlier episodes followed by a steady increase later on. Overall, these trends indicate that agents successfully learned to correspond states to actions in a useful way.

As episodic cumulative reward validates the success of the learning cycle, observed emotional fluctuations over time are also legitimized. These are presented in the first column of

Figure 5. Expectedly, the initial variation is well-balanced for both emotions, as the number of increases matches that of decreases in the first episode. However, different outcomes manifest as episodes progress:

Surprise (top trend): On average, bursts become less frequent by the final episode (). As such, it seems a reduction or stasis is favored over increases. Stasis is also progressively preferential in the first 10 episodes of surprise, falling back as decreases become more prominent later in the cycle.

Pride (bottom trend): Overall, the average emotion variation among agents is minor yet still favoring an upwards tendency. Decreases occur fewer times () between the first and last episodes. This percentage is largely taken over by stasis scenarios, with the number of emotional increases remaining mostly unchanged throughout the cycle.

4.2. Observed Correlations

The third column of Figure 5 evidences the impact of emotion over exploratory behavior. In spite of the emotional differences imposed via parameter fluctuation, a substantial number of artificial agents manifested similar behaviors after the cycle. A causation effect is most evident for the surprise experiment (top), which is akin to the emotional variation results. Averaging the decision-making behavior of all 250 agents displayed a

increase in exploration in response to greater surprise. This trend reflects the 217 agents that learned positive correlations, outnumbering the remaining 33 that displayed negative correlations. Regardless of their variability, all instances were monotonic and either displayed a considerable increase (positive) or a limited decrease (negative). In the pride experiment (bottom), instances were likewise monotonic. However, agents here demonstrated a dampening exploratory effect. Behaviors encompassed a large number of weak positive correlations, with few yet strong negative correlations. Specifically, 222 agents displayed a slight exploratory increase with pride. The impact proved minimal, as represented by the mean behavior of the subset. A smaller set of 22 agents decreased exploration between

and

towards null. Contrastingly, this caused a substantial negative change in mean behavior. Moreover, it is aided by the six remaining agents who manifested a more restrained reduction. The final result is a modest effect of a

overall average decrease in exploration for increasing pride, despite several weak positive correlations. Here medians can provide better insight by more accurately demonstrating negative cases as few yet hefty outliers against the whole.

Relationship strength between exploration and pride/surprise can be further demonstrated by measuring how each data pair correlates throughout a cycle. Here, we employ Spearman’s correlation coefficient

[

49] at each episode in an agent’s cycle. This assesses if observed monotonic relationships between emotion and exploration (

Figure 5 third column) become increasingly robust over time. Resulting coefficients for either experiment, with per-episode averaging of all 250 agent sample pairs, are shown in

Figure 6. These demonstrated considerable variability in the strength of the correlation between exploration and surprise/pride. To mitigate this effect, a sliding window encompassing 40 episodes was applied to smoothen the trends and clarify strength progression. Both cases display near-zero coefficients in earlier episodes. However, this mean

increases for surprise while decreasing for pride, resulting in

and

by the end of the cycles. This evidences a moderate positive correlation between surprise and exploration. For pride, there is instead a negative and much weaker association with this behavior. In short, this weakness is congruent with the disparity of having positive cases 10 times more common than negative ones while still obtaining a negative correlation.
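The per-episode correlation analysis can be sketched in pure Python as follows. This is an illustrative reimplementation rather than the analysis code used in the study: the helper names are hypothetical, ties receive average ranks, and the 40-episode window is applied as a trailing window, which is an assumption about how the smoothing was done.

```python
import math


def _ranks(values):
    """Average ranks (1-based), with ties sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman coefficient: Pearson correlation computed on ranks."""
    rx, ry = _ranks(x), _ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((p - mx) * (q - my) for p, q in zip(rx, ry))
    vx = sum((p - mx) ** 2 for p in rx)
    vy = sum((q - my) ** 2 for q in ry)
    if vx == 0 or vy == 0:
        return 0.0  # undefined for constant input; treated as no correlation
    return cov / math.sqrt(vx * vy)


def sliding_mean(series, window=40):
    """Trailing moving average used to smooth per-episode coefficients."""
    out = []
    for t in range(len(series)):
        chunk = series[max(0, t - window + 1): t + 1]
        out.append(sum(chunk) / len(chunk))
    return out
```

In use, `spearman` would be applied to the emotion and exploration values recorded within each episode, the results averaged across agents, and `sliding_mean` applied to the resulting per-episode series.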

5. Discussion

Results show a clear and substantial minimization of surprise. This conforms with the free energy principle, as it led to a strong direct correlation being learned between this emotion and exploration. In contrast, maximization of pride was somewhat negligible. This was paralleled by the weak dampening relationship obtained for pride over exploration, with its strength stagnating closer to zero. Notwithstanding the latter, both experiments successfully produced artificial agents capable of self-mediating their exploratory behavior. They did so by exploiting internal emotional drives towards improved task performance.

The outcome of our experiment strongly resonated with reports on emotion-mediated human exploratory behavior. Specifically, Vogl’s study [20] demonstrated a causation effect from surprise over exploration of knowledge. This was evidenced by the successively positive path coefficients obtained when assessing surprise-to-curiosity and curiosity-to-exploration effects. Additionally, the first and second versions of the study reported within-person correlation coefficients of

and

for this emotion and exploration. Even higher values were reported when considering curiosity intermediately. Relating these findings with our work, the

mean exploratory increase leveraged by the agents over growing surprise validates Vogl’s postulated path relationships. Our Spearman’s correlation results further support this, given the additional value proximity. For surprise, as the non-windowed coefficient mean across agents reaches

by the final episode, it becomes considerably close to the within-person correlation value of the first study (with the most participants). It also goes reasonably near that of the second study.

In terms of pride, Vogl’s observations were also validated by our AI experiment. While negative correlation coefficients of

and

were reported in the first and second studies, respectively, these near-zero values indicate a weaker correlation between pride and exploration, if any. Though contradictory, path coefficients first indicated a weak but positive causation effect followed by a stronger dampening impact later. This suggested that pride has a faint influence over exploration. Our results are congruent, as Spearman’s correlation coefficient took longer to deviate from null compared to the surprise experiment, stagnating at a lower absolute value. Additionally, our

mean exploratory decrease over growing pride supports the higher likelihood of this emotion’s dampening effect over exploration. While this was due to a smaller number of strong negative correlations, the insignificance of a considerably larger number of positive relationships aligns with pride’s influence over exploration being modest. It could also be postulated that exploratory decrement is typical mostly during surges of pride. This is because, as a solo positive emotion, it may be damaging for cognitive performance [50], of which exploratory behavior is a key aspect [51]. Additional experimentation would be required to test such hypotheses. Regardless, under equivalent test conditions, our agents recognized a benefit in adopting behaviors akin to those exhibited by humans. These observations respond to RQ2, suggesting that emotion–exploration correlations manifested by agents are not only observable but also functionally identical. It is therefore plausible that these artificial correlations mirror their role in human cognition, being useful for data processing at least within comparable scenarios.

On a related note, Vogl et al. appealed for conceptual replication of their results to bolster generalizability [20,21]. That is what inspired RQ1, to which our proposed framework responds, thereby meeting the appeal. Also, employing an image classification task is evidently different from the general knowledge trivia scenarios devised by those authors. Thus, our results further support the conclusion that observed emotion–exploration correlations were not merely triggered by biased input stimuli. Moreover, cognitive incongruity was induced differently, as half of the data instances were assigned randomly incorrect labels at reset, rather than always being correct/incorrect. This meant contradictory information was a possibility, as samples with visually distinct content could be assigned the same label. Vogl et al. also stressed the importance of considering various indicators of knowledge exploration when attesting the validity of an epistemic/achievement source. Unlike deep learning, where exploration may be parameterized, psychology relies on observations of behavior to assess how exploration occurs and to what extent [19,20,38]. As our models objectively derive exploration from emotional scoring, this multi-indicator requisite was irrelevant in our approach, with no impact over findings. Finally, implementing artificial agents as participants in a within-“person” experiment is fundamentally distinct from human trialing. This constitutes further variability in comparison with Vogl’s original study.

Limitations

This work bears limitations, given the variability of its many factors and the aim of interdisciplinary comparisons. For instance, while we strove to propose general solutions for emotional representation, the functions may still introduce a potential bias that hinders generalizability. The introduction of noise can help reduce this bias, yet a more robust approach would be to consider other functions that also match the psychological descriptions of surprise and pride and to extend the experiment. A similar limitation is related to the usage of MNIST and classification as the cognitive task. We used a simplistic example, where high success rates are well-established in deep learning. Different outcomes could occur if considering a different task or using a more complex dataset (e.g., CIFAR), and thus bias may also exist. To mitigate these limitations, we intend to vary the emotional functions further and employ different datasets for classification so that more generalizable conclusions can be made in future work. Still on the topic of validation, our study is limited to 250 agents. Naturally, experiment expansion can also entail a greater number of agents in order to better reflect the diverse nature of living beings and their behaviors.

It is also important to note that artificial agents are unlikely to bear conscious metacognition and will likely remain restricted to supporting humans in complex tasks in the foreseeable future [27]. Thus, a comparison of results obtained from biological participants and AI should be approached with care. Given this limitation, we opt for the corroborating usefulness of agent observation. This can provide further scrutiny for hypotheses on human cognition and behavioral traits [52]. Finally, our system addresses each emotion individually as a precursor of exploration, whereas emotional states most typically overlap and combine their effects over general behavior [3]. In the future, we intend to expand the emotional basis of exploration to account for that overlap, further benefiting from and validating cognitive psychology hypotheses. For instance, epistemic and achievement states could be employed in tandem, equitably, or via weighted contributions as inputs to an actor module. We speculate that emotional overlap is needed to stabilize correlations, causing more well-defined behaviors, similar to living beings. If observed, this could provide further insights into human–AI parallelism and serve as inspiration for cognitive psychology expansion.

6. Conclusions

This work focused on developing a deep learning framework for emotional decision-making over exploration. The architectural design was inspired by basal ganglia circuitry, with the foundation for emotional operation stemming from cognitive psychology. Emulation of human experimental conditions from psychological studies was conducted via an original learning cycle. This was applied to a novel deep learning framework, which was replicated several times for generalizability purposes. This proposal adequately addresses RQ1, though other solutions may still be possible. Furthermore, AI-learned correlations between epistemic/achievement states and exploration demonstrated close proximity with observations taken from human studies. Hence, for RQ2 we speculate the correlations to indeed be useful for AI agents, much like they are to their human counterparts. Our work additionally supports emotion-mediated learning, given its benefits to explainable AI and its autonomy. These include, for instance, greater contextual adaptability for agents to self-adapt beyond just exploration or a human-like understanding of AI decision-making.

In terms of future research, our framework follows the outlined benefits and others, since it can be adapted for studying other behavioral traits and their relationship with emotional drives. Exploitation or engagement may be mediated through variable emotion during cognitive operation. This can prove beneficial, similarly to how agents here learned to explore when it is seemingly more useful for their own reward objective. Finally, we speculate that further research on these topics can push AI closer towards full autonomy and general intelligence.