Phishing Attacks in the Age of Generative Artificial Intelligence: A Systematic Review of Human Factors

Abstract

1. Introduction

- Highlighting the rapid advancement and wide accessibility of GenAI and the associated risk of its misuse in advancing phishing attacks.

- Highlighting opportunities for using GenAI as a countermeasure against phishing attacks.

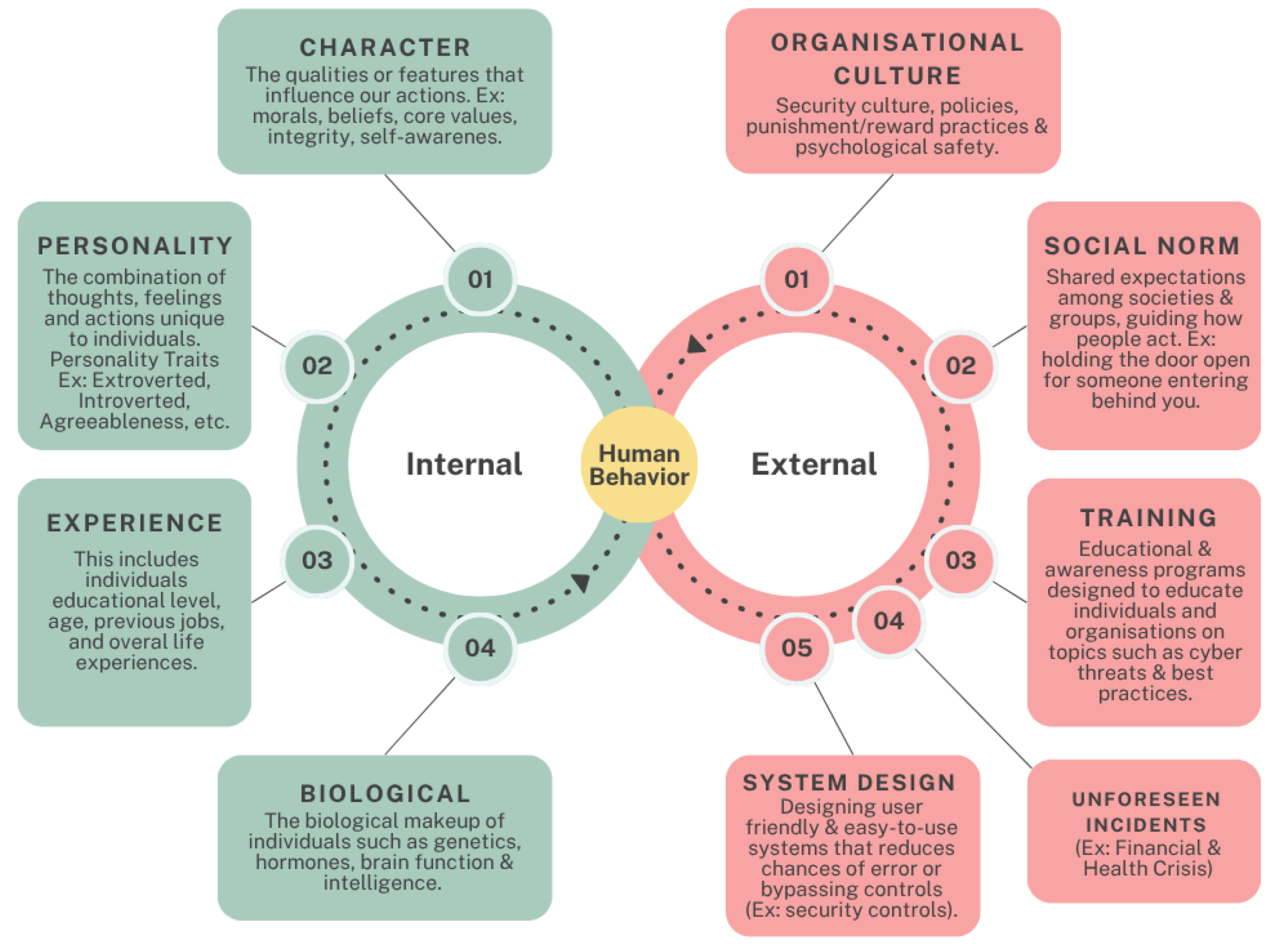

- Providing a holistic account of the human factors that have been exploited in phishing attacks and of how they contribute to negative cybersecurity behaviours.

- Highlighting research directions to support researchers and practitioners on the topic.

2. Background and Related Work

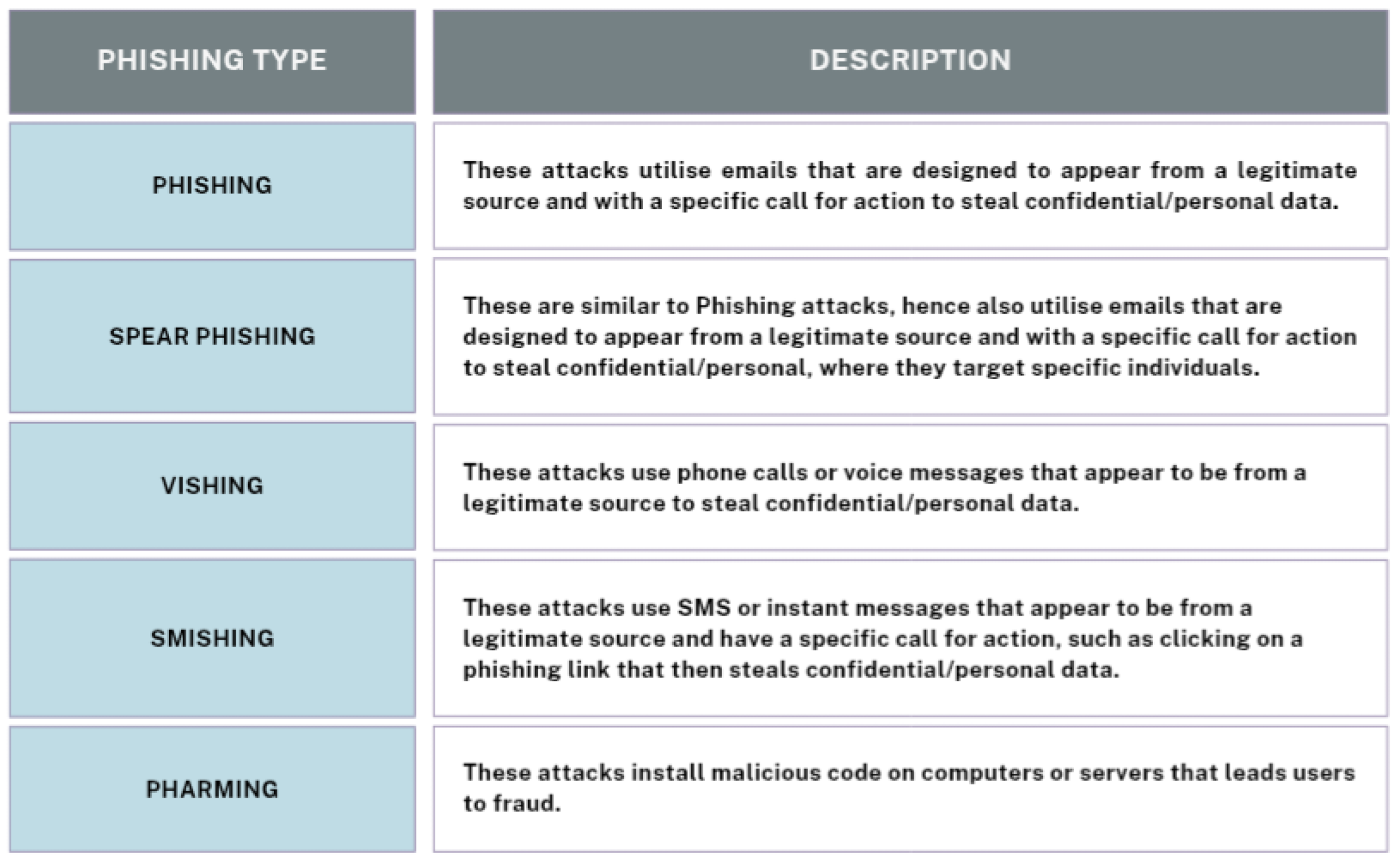

2.1. Phishing Attacks Description

- Email body: The body of the email is scanned for attributes such as HTML content, images or shapes, and specific sentences and phrases.

- Email subject: The subject line is scanned for specific common terms, such as ‘verify’ or ‘debit’.

- URLs: An email is classified as suspicious if a link uses an IP address instead of a domain name. Other attributes include, but are not limited to, the presence of external links and links containing the ‘@’ sign.

- Sender email: The sender’s address is checked and compared with the reply-to address.

- Scripts or code: This refers to emails that include JavaScript, on-click actions, or any other code in the email’s body or subject.
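
The five feature groups above can be turned into a simple rule-based extractor. The following is a minimal sketch using only the Python standard library; the keyword list, the regular expressions, and the `extract_features` function are hypothetical choices for illustration, not the feature set of any detector reviewed here. It only labels features; deciding whether an email is phishing is left to a downstream classifier.

```python
import ipaddress
import re
from email import message_from_string
from email.message import Message
from email.utils import parseaddr
from urllib.parse import urlparse

# Hypothetical subject-line terms; deployed filters use much larger, curated lists.
SUSPICIOUS_SUBJECT_TERMS = ("verify", "debit", "urgent", "suspended")
URL_RE = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)
SCRIPT_RE = re.compile(r"<\s*script|javascript:|\bon(?:click|load|mouseover)\s*=", re.IGNORECASE)


def _is_ip(host) -> bool:
    """True if the URL host is a literal IPv4/IPv6 address rather than a domain name."""
    if not host:
        return False
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def _body_text(msg: Message) -> str:
    """Concatenate the decoded text of every text/* part (walk() also yields msg itself)."""
    chunks = []
    for part in msg.walk():
        if part.get_content_maintype() == "text":
            payload = part.get_payload(decode=True) or b""
            charset = part.get_content_charset() or "utf-8"
            chunks.append(payload.decode(charset, errors="replace"))
    return "\n".join(chunks)


def extract_features(raw_email: str) -> dict:
    msg = message_from_string(raw_email)
    subject = (msg.get("Subject") or "").lower()
    body = _body_text(msg)
    urls = URL_RE.findall(body)
    hosts = [(urlparse(u).hostname or "") for u in urls]
    sender_domain = parseaddr(msg.get("From", ""))[1].rpartition("@")[2].lower()
    reply_domain = parseaddr(msg.get("Reply-To", ""))[1].rpartition("@")[2].lower()

    def is_external(host: str) -> bool:
        return not (host == sender_domain or host.endswith("." + sender_domain))

    return {
        # Email body: HTML content and embedded images
        "has_html": any(p.get_content_type() == "text/html" for p in msg.walk()),
        "has_image": "<img" in body.lower()
        or any(p.get_content_maintype() == "image" for p in msg.walk()),
        # Email subject: common lure terms
        "subject_terms": [t for t in SUSPICIOUS_SUBJECT_TERMS if t in subject],
        # URLs: IP-address hosts, links outside the sender's domain, '@' inside a URL
        "num_urls": len(urls),
        "num_external_urls": sum(is_external(h) for h in hosts),
        "ip_based_url": any(_is_ip(h) for h in hosts),
        "at_sign_in_url": any("@" in u for u in urls),
        # Sender email: Reply-To domain differs from the From domain
        "reply_to_mismatch": bool(reply_domain) and reply_domain != sender_domain,
        # Scripts or code embedded in the body
        "has_script": bool(SCRIPT_RE.search(body)),
    }
```

For example, an HTML email from `support@bank.example` whose Reply-To points to another domain and whose only link targets a raw IP address would set `ip_based_url`, `reply_to_mismatch`, and `num_external_urls` at once, illustrating why these signals are usually combined rather than used in isolation.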

2.2. Generative AI in Phishing Attacks

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator. The generator creates synthetic samples, and the discriminator tries to distinguish them from real data. Training the two networks against each other continuously refines the generator until it produces high-quality, realistic data [27].

- Variational Autoencoders (VAEs): Input data are first encoded into a latent space and then decoded to reconstruct the original data. Sampling from the latent space enables the generation of new data that resemble those in the original dataset [28].

- Transformer Models: These models, which have advanced natural language processing, use self-attention to capture long-range dependencies in data and generate coherent, contextually relevant content [29].
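
For reference, the core objectives of these three model families can be written compactly. These are the standard textbook formulations (the GAN minimax game of Goodfellow et al. [27], the VAE evidence lower bound, and the scaled dot-product attention of the original Transformer architecture); the phishing-related systems discussed in this review may use variants of them.

```latex
% GAN: generator G and discriminator D play a two-player minimax game
\min_{G}\max_{D} V(D,G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]

% VAE: encoder q_\phi(z|x) and decoder p_\theta(x|z) maximise the evidence lower bound (ELBO)
\log p_\theta(x) \ge \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)

% Transformer: scaled dot-product attention over queries Q, keys K and values V (key dimension d_k)
\mathrm{Attention}(Q,K,V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V
```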

2.3. Human Factors in Phishing Attacks

| Papers | Phishing | Human Factors | Generative AI | Phishing Countermeasures |
|---|---|---|---|---|
| Desolda et al. (2021) [31] | Y | Y | N | Y |
| Schmitt and Flechais (2023) [44] | Y | • | Y | Y |
| Arévalo et al. (2023) [38] | Y | Y | N | • |
| Naqvi et al. (2023) [40] | Y | N | N | Y |
| Thakur et al. (2023) [43] | Y | N | • | Y |
| Kyaw et al. (2024) [42] | Y | N | • | Y |
| Ayeni et al. (2024) [41] | Y | • | N | Y |
| Our work | Y | Y | Y | Y |

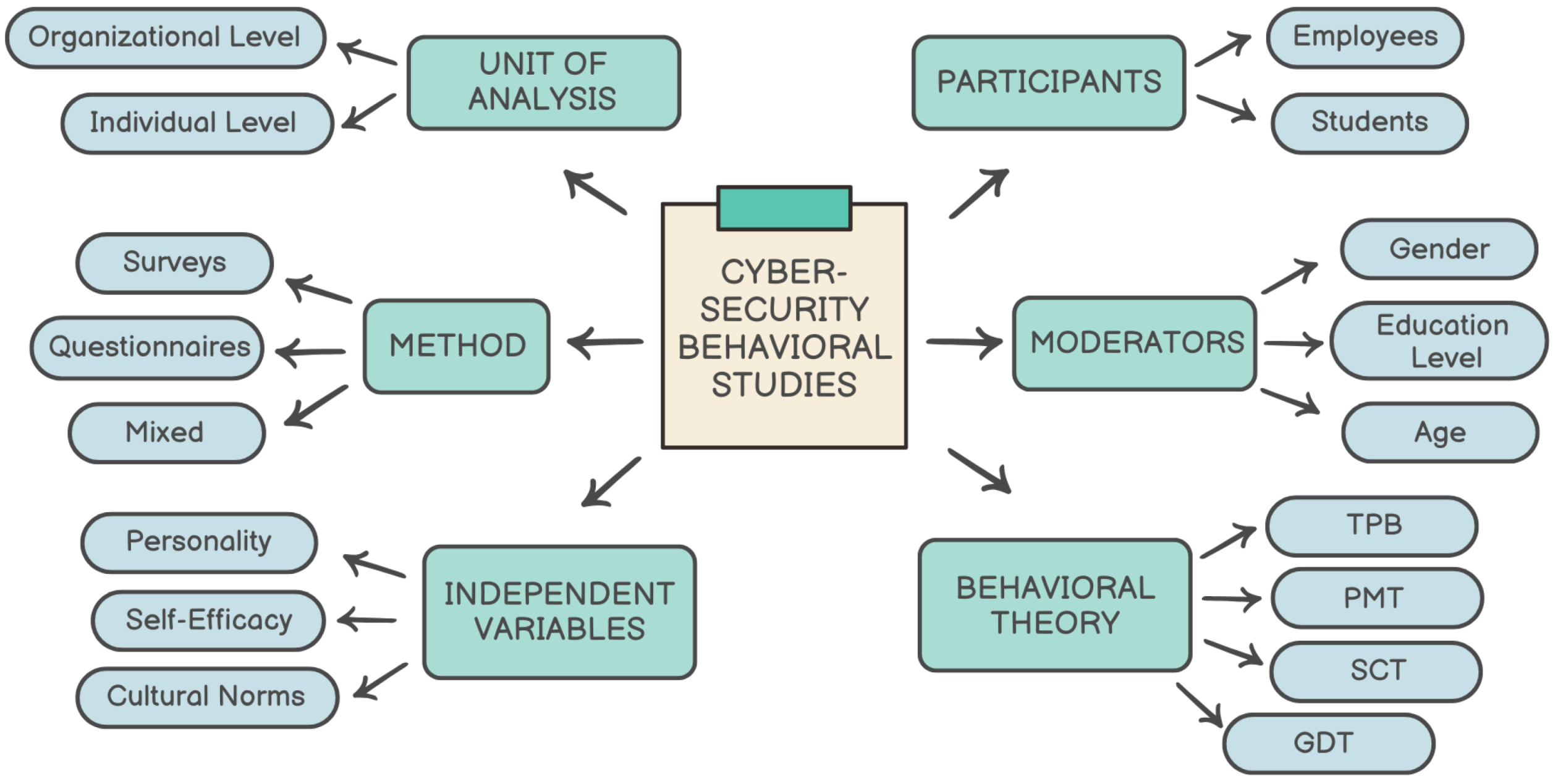

3. Methodology

3.1. Systematic Literature Review Planning Phase

3.1.1. Preparing the Right Research Questions

- Research Question 1 (RQ1): What factors make humans susceptible to phishing attacks?

- Research Question 2 (RQ2): How has GenAI increased the risks and sophistication of phishing attacks?

- Research Question 3 (RQ3): What are the most effective human-centred and technological solutions to mitigate phishing attacks?

3.1.2. Selecting Relevant Search Strings

3.1.3. Choosing the Search Databases

3.1.4. Defining the Inclusion and Exclusion Criteria

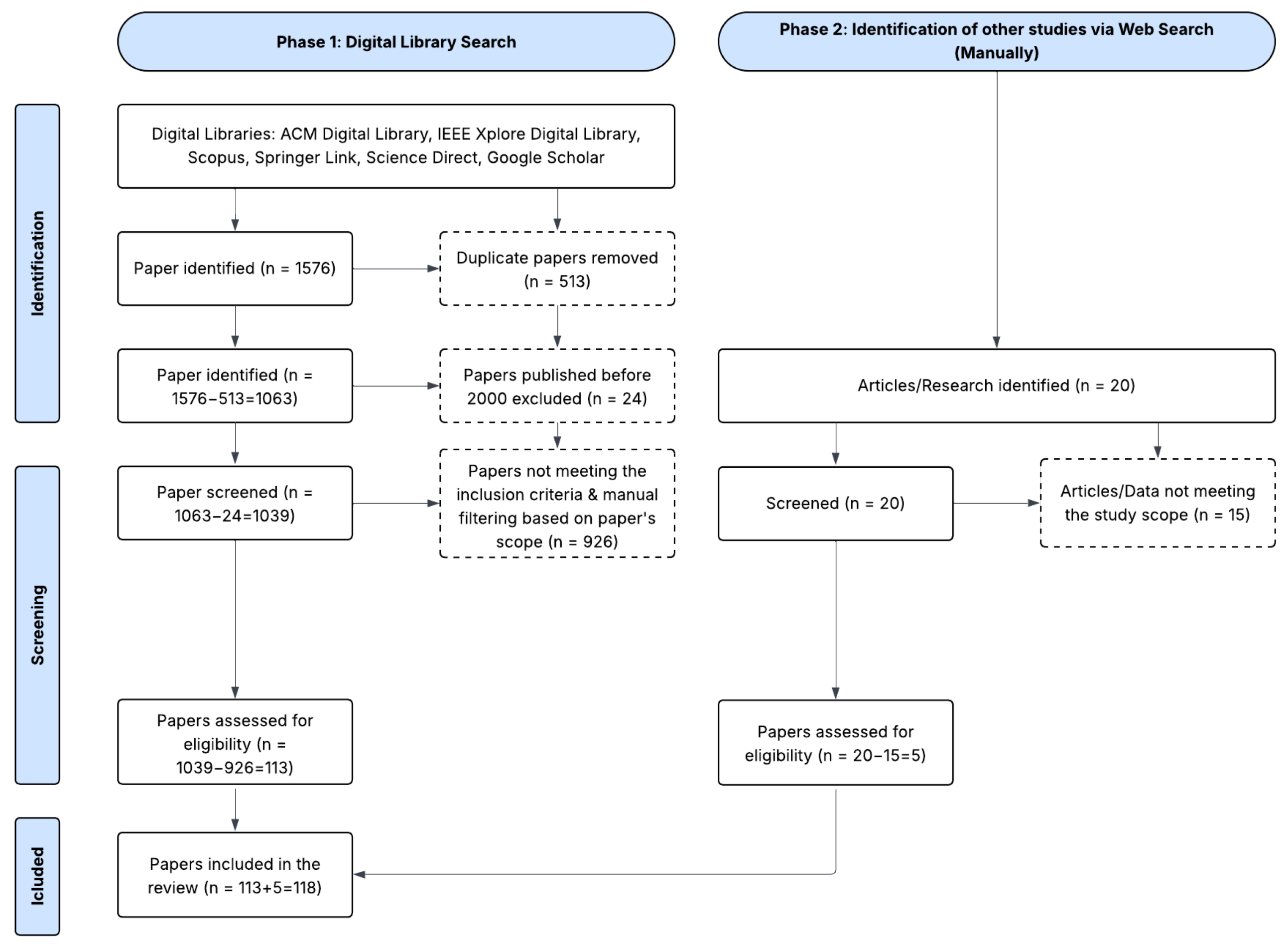

3.2. Conducting the Literature Review

- Literature Review Execution: At this stage, an inclusive search query was executed across the scientific digital libraries and databases listed in Section 3.1.3. The search targeted publications on phishing attacks, human factors, and AI published between 2000 and 2025, so that the most recent work on the research topic was captured.

- Data Synthesis: At this stage, all duplicates were removed, and the remaining articles were screened against the inclusion and exclusion criteria. Eligible papers were included in this study only after a full-text reading confirmed their relevance to the research topic.
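
The de-duplication and screening steps can be expressed as a small pipeline. The sketch below is illustrative only: the `Record` fields, the title/DOI normalisation rule, and the keyword pre-filter are hypothetical stand-ins for the manual inclusion and exclusion criteria of Section 3.1.4; only the 2000-2025 publication window is taken from the text.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """A hypothetical bibliographic record as exported from a search database."""
    title: str
    year: int
    doi: str = ""       # empty when the database omits it
    abstract: str = ""


def _norm_title(title: str) -> str:
    # Lower-case and drop punctuation/whitespace so trivially different titles collide.
    return re.sub(r"[^a-z0-9]", "", title.lower())


def deduplicate(records):
    """Keep the first record for each DOI or normalised title."""
    seen, unique = set(), []
    for r in records:
        keys = {("title", _norm_title(r.title))}
        if r.doi:
            keys.add(("doi", r.doi.lower()))
        if keys & seen:
            continue  # duplicate of a record already kept
        seen |= keys
        unique.append(r)
    return unique


# Hypothetical automated pre-filter; final eligibility remains a full-text judgement.
TOPIC_TERMS = ("phishing",)


def screen(records, start=2000, end=2025):
    """Keep records inside the publication window that mention a topic term."""
    return [
        r for r in records
        if start <= r.year <= end
        and any(t in f"{r.title} {r.abstract}".lower() for t in TOPIC_TERMS)
    ]
```

Matching on either the DOI or the normalised title catches the common case in which one database exports a DOI and another does not; anything the automated pass keeps would still go to full-text reading.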

4. Findings

4.1. What Factors Make Humans Susceptible to Phishing Attacks? (RQ1)

4.1.1. Insufficient Training

4.1.2. Bias and Neglect

4.1.3. External Influence

4.2. How Has GenAI Increased the Risks and Sophistication of Phishing Attacks? (RQ2)

4.2.1. Defence System Evasion

4.2.2. Phishing Attack Content

4.2.3. Language Models in Phishing Attacks

4.2.4. GenAI in Phishing Attack Personalisation

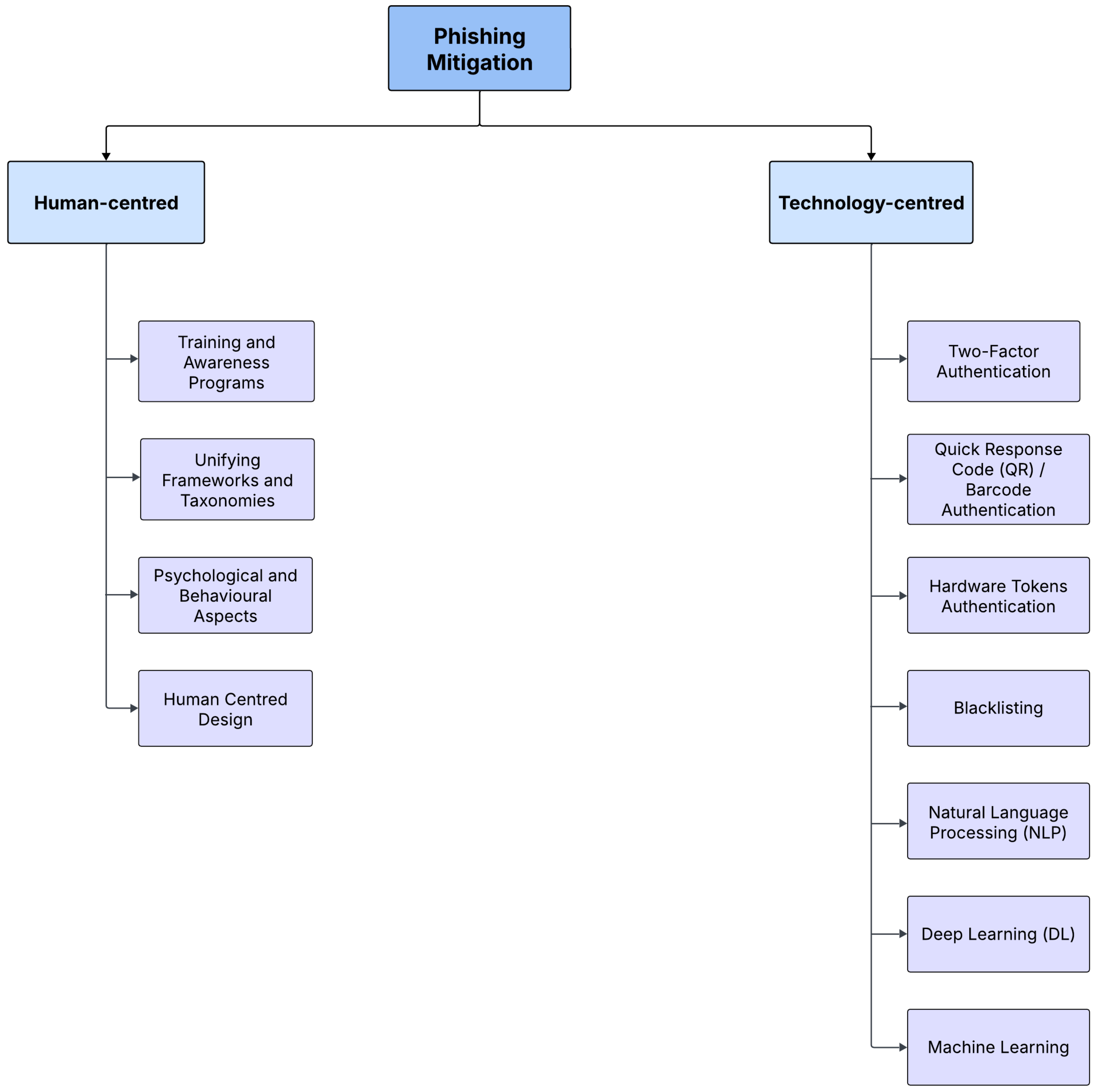

4.3. What Are the Most Effective Human-Centred and Technological Solutions to Mitigate Phishing Attacks? (RQ3)

4.3.1. Human-Focused Solutions

- Training and Awareness Programmes:

- Unifying Frameworks and Taxonomies:

- Psychological and Behavioural Aspects:

- Human-Centred Design:

4.3.2. Technology-Focused Solutions

- Two-Factor Authentication:

- Quick-Response Code (QR) or Barcode Authentication:

- Hardware Token Authentication:

- Blacklisting:

- Natural Language Processing (NLP):

- Deep Learning (DL):

- Machine Learning (ML):
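
As one concrete illustration of the two-factor authentication mechanisms listed above, the sketch below implements the time-based one-time password (TOTP) algorithm of RFC 6238, built on HOTP from RFC 4226, using only the Python standard library. The choice of TOTP with HMAC-SHA-1, 30-second steps, a one-step drift window, and the function names are our assumptions, not details taken from the reviewed studies. Because a user can still be tricked into typing a valid code into a phishing page that relays it in real time, TOTP raises the bar for attackers but is not phishing-resistant on its own.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA-1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, for_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP with counter = floor(unix_time / step)."""
    t = time.time() if for_time is None else for_time
    return hotp(secret, int(t // step), digits)


def verify(secret: bytes, submitted: str, for_time=None,
           step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step +/- `window` steps to tolerate clock drift."""
    t = time.time() if for_time is None else for_time
    current = int(t // step)
    return any(
        hmac.compare_digest(hotp(secret, c, digits), submitted)  # constant-time compare
        for c in range(max(0, current - window), current + window + 1)
    )
```

With the RFC test secret `b"12345678901234567890"`, `totp(secret, 59, digits=8)` reproduces the published test value `94287082`.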

4.3.3. GenAI in Attack Prevention

- Advanced Training Programmes: GenAI can support cybersecurity training and awareness programmes by generating sophisticated simulated threats, such as realistic phishing emails [110], and by identifying the best approach to handling them. Such simulations let employees practise recognising the nuances of phishing attacks, which can reduce the likelihood of successful breaches.

- Security Testing: GenAI can improve security testing by automating the generation of test cases. Hilario et al. [111] discussed the potential of using GenAI in software testing to help ensure that applications are secured against a wide range of attacks.

- Defence Mechanisms: GenAI can be used to simulate various attack scenarios, supporting security professionals in developing adaptive defensive strategies [58,112] against sophisticated threats. Sai et al. [113] reviewed security products that leverage GenAI tools, such as Google Cloud Security AI Workbench and Microsoft Security Copilot, to strengthen security measures, and identified further applications of GenAI in threat intelligence, vulnerability scanning, and secure code development. Although these studies did not explicitly examine phishing, we see considerable potential in applying these tools to the prevention and detection of phishing attacks. In addition, AI-powered tools such as PhiShield, a spam-filtering browser extension, provide real-time protection against phishing emails. The system combines signature-based checks with a long short-term memory (LSTM) model to alert users to phishing emails and reportedly achieves a detection accuracy of 98 per cent [114].
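
The hybrid design attributed to PhiShield above, a fast signature check followed by a learned classifier, can be sketched as a two-stage filter. This is not PhiShield's implementation: the hash-based signature set, the `score_with_model` stand-in (a trivial keyword scorer used in place of an LSTM so the example runs without a deep-learning framework), and the 0.5 threshold are all hypothetical.

```python
import hashlib
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    is_phishing: bool
    stage: str    # which stage decided: "signature" or "model"
    score: float


def _normalise(text: str) -> str:
    return " ".join(text.lower().split())


def signature(text: str) -> str:
    """Exact-match signature: SHA-256 of the case- and whitespace-normalised message."""
    return hashlib.sha256(_normalise(text).encode("utf-8")).hexdigest()


# Stage 1 data: hypothetical signatures of previously reported phishing messages.
KNOWN_BAD = {signature("Your account is locked. Verify now at http://203.0.113.7/login")}

# Stage 2 stand-in with hypothetical cue weights. A real system would call a trained
# sequence model (e.g. an LSTM) here; this keyword scorer only keeps the sketch runnable.
CUE_WEIGHTS = {"verify": 0.3, "urgent": 0.3, "password": 0.2, "click": 0.2}


def score_with_model(text: str) -> float:
    words = set(re.findall(r"[a-z]+", text.lower()))
    return min(1.0, sum(w for cue, w in CUE_WEIGHTS.items() if cue in words))


def classify(text: str, model=score_with_model, threshold: float = 0.5) -> Verdict:
    # Cheap signature lookup first: known campaigns are flagged without running the model.
    if signature(text) in KNOWN_BAD:
        return Verdict(True, "signature", 1.0)
    score = model(text)
    return Verdict(score >= threshold, "model", score)
```

Ordering the stages this way keeps the common case (a known campaign) cheap and deterministic, while the model handles novel messages, including GenAI-generated variants that no longer match any stored signature.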

5. Discussion

6. Future Research

- Awareness and Education Programmes: Given the complexity that AI introduces into phishing attacks, traditional cybersecurity awareness programmes are no longer sufficient. These programmes should focus on increasing users’ knowledge of AI-driven attacks and of the advanced techniques that cybercriminals deploy to deceive individuals and organisations. They should also include interactive, scenario-based training modules that highlight how human factors are exploited in phishing attacks.

- Implementing AI and ML in Defence Systems: This direction focuses on leveraging the latest techniques and algorithms to prevent GenAI-driven attacks. Future research should introduce robust AI/ML models and strategies to detect and defend against sophisticated phishing attacks.

- Explainable AI: More research should focus on the transparency of AI models, which would help security professionals and researchers understand how AI-based defence systems identify anomalies and make decisions.
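
One simple form of the transparency called for above is available whenever the detector is linear: each feature's contribution to the decision is its weight multiplied by its value, so the score can be decomposed and the largest contributors reported to an analyst. The sketch below illustrates this with a logistic model whose feature names, weights, and bias are hypothetical rather than fitted to real data; for non-linear models such as deep networks, post-hoc attribution methods play an analogous role.

```python
import math

# Hypothetical weights for a logistic-regression phishing detector (not fitted to data).
WEIGHTS = {
    "ip_based_url": 2.0,
    "reply_to_mismatch": 1.5,
    "urgent_subject": 1.0,
    "has_script": 1.2,
    "known_sender": -2.5,
}
BIAS = -1.0


def explain(features: dict):
    """Return the phishing probability and per-feature contributions (weight * value)."""
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    prob = 1.0 / (1.0 + math.exp(-logit))  # logistic (sigmoid) link
    # Rank by absolute contribution so the strongest evidence, for or against, comes first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return prob, ranked
```

For an email with an IP-based link and a mismatched Reply-To address, `explain` would report a high probability and list `ip_based_url` and `reply_to_mismatch` as the top contributors, which is exactly the kind of rationale an analyst can check against the message itself.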

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| GenAI | Generative Artificial Intelligence |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| DL | Deep Learning |

References

- Schatz, D.; Bashroush, R.; Wall, J. Towards a more representative definition of cyber security. J. Digit. Forensics Secur. Law 2017, 12, 8. [Google Scholar] [CrossRef]

- Sule, M.J.; Zennaro, M.; Thomas, G. Cybersecurity through the lens of digital identity and data protection: Issues and trends. Technol. Soc. 2021, 67, 101734. [Google Scholar] [CrossRef]

- King, Z.M.; Henshel, D.S.; Flora, L.; Cains, M.G.; Hoffman, B.; Sample, C. Characterizing and measuring maliciousness for cybersecurity risk assessment. Front. Psychol. 2018, 9, 39. [Google Scholar] [CrossRef]

- Australian Signals Directorate (ASD) Cyber Threat Report 2023–2024. Available online: https://www.cyber.gov.au/sites/default/files/2024-11/asd-cyber-threat-report-2024.pdf (accessed on 1 March 2025).

- Australian Scamwatch. Available online: https://www.scamwatch.gov.au (accessed on 1 March 2025).

- Mohammad, T.; Hussin, N.A.M.; Husin, M.H. Online safety awareness and human factors: An application of the theory of human ecology. Technol. Soc. 2022, 68, 101823. [Google Scholar] [CrossRef]

- Hong, Y.; Furnell, S. Understanding cybersecurity behavioral habits: Insights from situational support. J. Inf. Secur. Appl. 2021, 57, 102710. [Google Scholar] [CrossRef]

- Bowen, B.M.; Devarajan, R.; Stolfo, S. Measuring the human factor of cyber security. In Proceedings of the 2011 IEEE International Conference on Technologies for Homeland Security (HST), Waltham, MA, USA, 15–17 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 230–235. [Google Scholar]

- Henshel, D.; Cains, M.; Hoffman, B.; Kelley, T. Trust as a human factor in holistic cyber security risk assessment. Procedia Manuf. 2015, 3, 1117–1124. [Google Scholar] [CrossRef]

- Alanazi, M.; Freeman, M.; Tootell, H. Exploring the factors that influence the cybersecurity behaviors of young adults. Comput. Hum. Behav. 2022, 136, 107376. [Google Scholar] [CrossRef]

- Shah, P.R.; Agarwal, A. Cybersecurity behaviour of smartphone users through the lens of Fogg behaviour model. In Proceedings of the 2020 3rd International Conference on Communication System, Computing and IT Applications (CSCITA), Mumbai, India, 3–4 April 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 79–82. [Google Scholar]

- Lebek, B.; Uffen, J.; Breitner, M.H.; Neumann, M.; Hohler, B. Employees’ information security awareness and behavior: A literature review. In Proceedings of the 2013 46th Hawaii International Conference on System Sciences, Wailea, HI, USA, 7–10 January 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 2978–2987. [Google Scholar]

- Li, L.; He, W.; Xu, L.; Ash, I.; Anwar, M.; Yuan, X. Investigating the impact of cybersecurity policy awareness on employees’ cybersecurity behavior. Int. J. Inf. Manag. 2019, 45, 13–24. [Google Scholar] [CrossRef]

- Simonet, J.; Teufel, S. The influence of organizational, social and personal factors on cybersecurity awareness and behavior of home computer users. In Proceedings of the ICT Systems Security and Privacy Protection: 34th IFIP TC 11 International Conference, SEC 2019, Lisbon, Portugal, 25–27 June 2019; Proceedings 34. Springer: Berlin/Heidelberg, Germany, 2019; pp. 194–208. [Google Scholar]

- Al-Qaysi, N.; Granić, A.; Al-Emran, M.; Ramayah, T.; Garces, E.; Daim, T.U. Social media adoption in education: A systematic review of disciplines, applications, and influential factors. Technol. Soc. 2023, 73, 102249. [Google Scholar] [CrossRef]

- Chui, M.; Hazan, E.; Roberts, R.; Singla, A.; Smaje, K. The Economic Potential of Generative AI; McKinsey & Company: New York, NY, USA, 2023. [Google Scholar]

- Hatzius, J.; Briggs, J.; Kodnani, D.; Pierdomenico, G. The Potentially Large Effects of Artificial Intelligence on Economic Growth (Briggs/Kodnani). Goldman Sachs 2023, 1. Available online: https://static.poder360.com.br/2023/03/Global-Economics-Analyst_-The-Potentially-Large-Effects-of-Artificial-Intelligence-on-Economic-Growth-Briggs_Kodnani.pdf (accessed on 1 April 2024).

- Kaur, R.; Gabrijelčič, D.; Klobučar, T. Artificial intelligence for cybersecurity: Literature review and future research directions. Inf. Fusion 2023, 97, 101804. [Google Scholar] [CrossRef]

- Bueermann, G.; Rohrs, M. World Economic Forum (2024) Global Cybersecurity Outlook 2024. Available online: https://www3.weforum.org/docs/WEF_Global_Cybersecurity_Outlook_2024.pdf (accessed on 1 April 2024).

- Renaud, K.; Warkentin, M.; Westerman, G. From ChatGPT to HackGPT: Meeting the Cybersecurity Threat of Generative AI; MIT Sloan Management Review: Cambridge, MA, USA, 2023. [Google Scholar]

- Lastdrager, E.E. Achieving a consensual definition of phishing based on a systematic review of the literature. Crime Sci. 2014, 3, 9. [Google Scholar] [CrossRef]

- Salloum, S.; Gaber, T.; Vadera, S.; Shaalan, K. Phishing email detection using natural language processing techniques: A literature survey. Procedia Comput. Sci. 2021, 189, 19–28. [Google Scholar] [CrossRef]

- Varshney, G.; Kumawat, R.; Varadharajan, V.; Tupakula, U.; Gupta, C. Anti-phishing: A comprehensive perspective. Expert Syst. Appl. 2024, 238, 122199. [Google Scholar] [CrossRef]

- Hakim, Z.M.; Ebner, N.C.; Oliveira, D.S.; Getz, S.J.; Levin, B.E.; Lin, T.; Lloyd, K.; Lai, V.T.; Grilli, M.D.; Wilson, R.C. The Phishing Email Suspicion Test (PEST) a lab-based task for evaluating the cognitive mechanisms of phishing detection. Behav. Res. Methods 2021, 53, 1342–1352. [Google Scholar] [CrossRef]

- Wash, R.; Cooper, M.M. Who provides phishing training? Facts, stories, and people like me. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–12. [Google Scholar]

- Dhamija, R.; Tygar, J.D.; Hearst, M. Why phishing works. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 22–27 April 2006; pp. 581–590. [Google Scholar]

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the NIPS’14: Proceedings of the 28th International Conference on Neural Information Processing Systems—Volume 2, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680. Available online: https://dl.acm.org/doi/10.5555/2969033.2969125 (accessed on 1 April 2025).

- Bengesi, S.; El-Sayed, H.; Sarker, M.K.; Houkpati, Y.; Irungu, J.; Oladunni, T. Advancements in generative AI: A comprehensive review of GANs, GPT, autoencoders, diffusion model, and transformers. IEEE Access 2024, 12, 69812–69837. [Google Scholar] [CrossRef]

- Ronge, R.; Maier, M.; Rathgeber, B. Towards a definition of Generative artificial intelligence. Philos. Technol. 2025, 38, 31. [Google Scholar] [CrossRef]

- Archana, R.; Jeevaraj, P.E. Deep learning models for digital image processing: A review. Artif. Intell. Rev. 2024, 57, 11. [Google Scholar] [CrossRef]

- Desolda, G.; Ferro, L.S.; Marrella, A.; Catarci, T.; Costabile, M.F. Human factors in phishing attacks: A systematic literature review. ACM Comput. Surv. (CSUR) 2021, 54, 1–35. [Google Scholar] [CrossRef]

- Pham, H.C.; Pham, D.D.; Brennan, L.; Richardson, J. Information security and people: A conundrum for compliance. Australas. J. Inf. Syst. 2017, 21. [Google Scholar] [CrossRef]

- Chowdhury, N.H.; Adam, M.T.; Skinner, G. The impact of time pressure on human cybersecurity behavior: An integrative framework. In Proceedings of the 2018 26th International Conference on Systems Engineering (ICSEng), Sydney, NSW, Australia, 18–20 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–10. [Google Scholar]

- Hwang, M.I.; Helser, S. Cybersecurity educational games: A theoretical framework. Inf. Comput. Secur. 2022, 30, 225–242. [Google Scholar] [CrossRef]

- Kalhoro, S.; Rehman, M.; Ponnusamy, V.; Shaikh, F.B. Extracting key factors of cyber hygiene behaviour among software engineers: A systematic literature review. IEEE Access 2021, 9, 99339–99363. [Google Scholar] [CrossRef]

- Herath, T.B.; Khanna, P.; Ahmed, M. Cybersecurity practices for social media users: A systematic literature review. J. Cybersecur. Priv. 2022, 2, 1–18. [Google Scholar] [CrossRef]

- Zhang, Z.; Gupta, B.B. Social media security and trustworthiness: Overview and new direction. Future Gener. Comput. Syst. 2018, 86, 914–925. [Google Scholar] [CrossRef]

- Arévalo, D.; Valarezo, D.; Fuertes, W.; Cazares, M.F.; Andrade, R.O.; Macas, M. Human and Cognitive Factors involved in Phishing Detection. A Literature Review. In Proceedings of the 2023 Congress in Computer Science, Computer Engineering, & Applied Computing (CSCE), Las Vegas, NV, USA, 24–27 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 608–614. [Google Scholar]

- Andrade, R.O.; Yoo, S.G. Cognitive security: A comprehensive study of cognitive science in cybersecurity. J. Inf. Secur. Appl. 2019, 48, 102352. [Google Scholar] [CrossRef]

- Naqvi, B.; Perova, K.; Farooq, A.; Makhdoom, I.; Oyedeji, S.; Porras, J. Mitigation strategies against the phishing attacks: A systematic literature review. Comput. Secur. 2023, 132, 103387. [Google Scholar] [CrossRef]

- Ayeni, R.K.; Adebiyi, A.A.; Okesola, J.O.; Igbekele, E. Phishing attacks and detection techniques: A systematic review. In Proceedings of the 2024 International Conference on Science, Engineering and Business for Driving Sustainable Development Goals (SEB4SDG), Omu-Aran, Nigeria, 2–4 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–17. [Google Scholar]

- Kyaw, P.H.; Gutierrez, J.; Ghobakhlou, A. A Systematic Review of Deep Learning Techniques for Phishing Email Detection. Electronics 2024, 13, 3823. [Google Scholar] [CrossRef]

- Thakur, K.; Ali, M.L.; Obaidat, M.A.; Kamruzzaman, A. A systematic review on deep-learning-based phishing email detection. Electronics 2023, 12, 4545. [Google Scholar] [CrossRef]

- Schmitt, M.; Flechais, I. Digital deception: Generative artificial intelligence in social engineering and phishing. Artif. Intell. Rev. 2024, 57, 324. [Google Scholar] [CrossRef]

- Baker, J. The technology–organization–environment framework. In Information Systems Theory: Explaining and Predicting Our Digital Society, Vol. 1; Springer: Berlin/Heidelberg, Germany, 2012; pp. 231–245. [Google Scholar]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

- Kitchenham, B. Procedures for performing systematic reviews. Keele UK Keele Univ. 2004, 33, 1–26. [Google Scholar]

- Fink, A. Conducting Research Literature Reviews: From the Internet to Paper; Sage Publications: Thousand Oaks, CA, USA, 2019. [Google Scholar]

- Torraco, R.J. Writing integrative literature reviews: Guidelines and examples. Hum. Resour. Dev. Rev. 2005, 4, 356–367. [Google Scholar] [CrossRef]

- Stanton, J.M.; Stam, K.R.; Mastrangelo, P.; Jolton, J. Analysis of end user security behaviors. Comput. Secur. 2005, 24, 124–133. [Google Scholar] [CrossRef]

- Zhang, R.; Bello, A.; Foster, J.L. BYOD security: Using dual process theory to adapt effective security habits in BYOD. In Proceedings of the Future Technologies Conference, Vancouver, BC, Canada, 20–21 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 372–386. [Google Scholar]

- Oner, U.; Cetin, O.; Savas, E. Human factors in phishing: Understanding susceptibility and resilience. Comput. Stand. Interfaces 2025, 94, 104014. [Google Scholar] [CrossRef]

- Mayer, P.; Kunz, A.; Volkamer, M. Reliable behavioural factors in the information security context. In Proceedings of the 12th International Conference on Availability, Reliability and Security, Reggio Calabria, Italy, 29 August–1 September 2017; pp. 1–10. [Google Scholar]

- Safa, N.S.; Sookhak, M.; Von Solms, R.; Furnell, S.; Ghani, N.A.; Herawan, T. Information security conscious care behaviour formation in organizations. Comput. Secur. 2015, 53, 65–78. [Google Scholar] [CrossRef]

- Corradini, I.; Nardelli, E. Building organizational risk culture in cyber security: The role of human factors. In Proceedings of the Advances in Human Factors in Cybersecurity: Proceedings of the AHFE 2018 International Conference on Human Factors in Cybersecurity, Loews Sapphire Falls Resort at Universal Studios, Orlando, FL, USA, 21–25 July 2018; Springer: Berlin/Heidelberg, Germany, 2019; pp. 193–202. [Google Scholar]

- Alsharnouby, M.; Alaca, F.; Chiasson, S. Why phishing still works: User strategies for combating phishing attacks. Int. J. Hum.-Comput. Stud. 2015, 82, 69–82. [Google Scholar] [CrossRef]

- Sasse, M.A.; Brostoff, S.; Weirich, D. Transforming the ‘weakest link’—A human/computer interaction approach to usable and effective security. BT Technol. J. 2001, 19, 122–131. [Google Scholar] [CrossRef]

- Neupane, S.; Fernandez, I.A.; Mittal, S.; Rahimi, S. Impacts and risk of generative AI technology on cyber defense. arXiv 2023, arXiv:2306.13033. [Google Scholar] [CrossRef]

- AlEroud, A.; Karabatis, G. Bypassing detection of URL-based phishing attacks using generative adversarial deep neural networks. In Proceedings of the Sixth International Workshop on Security and Privacy Analytics, New Orleans, LA, USA, 18 March 2020; pp. 53–60. [Google Scholar]

- Apruzzese, G.; Conti, M.; Yuan, Y. SpacePhish: The evasion-space of adversarial attacks against phishing website detectors using machine learning. In Proceedings of the 38th Annual Computer Security Applications Conference, Austin, TX, USA, 5–9 December 2022; pp. 171–185. [Google Scholar]

- Yigit, Y.; Buchanan, W.J.; Tehrani, M.G.; Maglaras, L. Review of generative AI methods in cybersecurity. arXiv 2024, arXiv:2403.08701. [Google Scholar] [CrossRef]

- 2024 UK Cyber Security Breaches Survey. Available online: https://www.gov.uk/government/statistics/cyber-security-breaches-survey-2024/cyber-security-breaches-survey-2024 (accessed on 1 April 2025).

- Gambin, A.F.; Yazidi, A.; Vasilakos, A.; Haugerud, H.; Djenouri, Y. Deepfakes: Current and future trends. Artif. Intell. Rev. 2024, 57, 64. [Google Scholar] [CrossRef]

- Bray, S.D.; Johnson, S.D.; Kleinberg, B. Testing human ability to detect ‘deepfake’ images of human faces. J. Cybersecur. 2023, 9, tyad011. [Google Scholar] [CrossRef]

- Kaur, A.; Noori Hoshyar, A.; Saikrishna, V.; Firmin, S.; Xia, F. Deepfake video detection: Challenges and opportunities. Artif. Intell. Rev. 2024, 57, 1–47. [Google Scholar] [CrossRef]

- Doan, T.P.; Nguyen-Vu, L.; Jung, S.; Hong, K. BTS-E: Audio deepfake detection using breathing-talking-silence encoder. In Proceedings of the ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5. [Google Scholar]

- Seymour, J.; Tully, P. Generative models for spear phishing posts on social media. arXiv 2018, arXiv:1802.05196. [Google Scholar] [CrossRef]

- Greshake, K.; Abdelnabi, S.; Mishra, S.; Endres, C.; Holz, T.; Fritz, M. Not what you’ve signed up for: Compromising real-world LLM-integrated applications with indirect prompt injection. In Proceedings of the 16th ACM Workshop on Artificial Intelligence and Security, Copenhagen, Denmark, 30 November 2023; pp. 79–90. [Google Scholar]

- Zou, A.; Wang, Z.; Carlini, N.; Nasr, M.; Kolter, J.Z.; Fredrikson, M. Universal and transferable adversarial attacks on aligned language models. arXiv 2023, arXiv:2307.15043. [Google Scholar] [CrossRef]

- Qi, Q.; Luo, Y.; Xu, Y.; Guo, W.; Fang, Y. SpearBot: Leveraging large language models in a generative-critique framework for spear-phishing email generation. Inf. Fusion 2025, 122, 103176. [Google Scholar] [CrossRef]

- Gupta, M.; Akiri, C.; Aryal, K.; Parker, E.; Praharaj, L. From ChatGPT to ThreatGPT: Impact of generative AI in cybersecurity and privacy. IEEE Access 2023, 11, 80218–80245. [Google Scholar] [CrossRef]

- Webb, T.; Holyoak, K.J.; Lu, H. Emergent analogical reasoning in large language models. Nat. Hum. Behav. 2023, 7, 1526–1541. [Google Scholar] [CrossRef]

- Taddeo, M.; McCutcheon, T.; Floridi, L. Trusting artificial intelligence in cybersecurity is a double-edged sword. Nat. Mach. Intell. 2019, 1, 557–560. [Google Scholar] [CrossRef]

- Anil, R.; Dai, A.M.; Firat, O.; Johnson, M.; Lepikhin, D.; Passos, A.; Shakeri, S.; Taropa, E.; Bailey, P.; Chen, Z.; et al. PaLM 2 technical report. arXiv 2023, arXiv:2305.10403. [Google Scholar] [CrossRef]

- Reed, S.; Zolna, K.; Parisotto, E.; Colmenarejo, S.G.; Novikov, A.; Barth-Maron, G.; Gimenez, M.; Sulsky, Y.; Kay, J.; Springenberg, J.T.; et al. A generalist agent. arXiv 2022, arXiv:2205.06175. [Google Scholar] [CrossRef]

- Asfoor, A.; Rahim, F.A.; Yussof, S. Factors influencing information security awareness of phishing attacks from bank customers’ perspective: A preliminary investigation. In Proceedings of the Recent Trends in Data Science and Soft Computing: Proceedings of the 3rd International Conference of Reliable Information and Communication Technology (IRICT 2018), Kuala Lumpur, Malaysia, 23–24 July 2018; Springer: Berlin/Heidelberg, Germany, 2019; pp. 641–654. [Google Scholar]

- Wen, Z.A.; Lin, Z.; Chen, R.; Andersen, E. What.Hack: Engaging anti-phishing training through a role-playing phishing simulation game. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–12. [Google Scholar]

- Xiong, A.; Proctor, R.W.; Yang, W.; Li, N. Embedding training within warnings improves skills of identifying phishing webpages. Hum. Factors 2019, 61, 577–595. [Google Scholar] [CrossRef] [PubMed]

- Sturman, D.; Auton, J.C.; Morrison, B.W. Security awareness, decision style, knowledge, and phishing email detection: Moderated mediation analyses. Comput. Secur. 2025, 148, 104129. [Google Scholar] [CrossRef]

- Dixon, M.; Gamagedara Arachchilage, N.A.; Nicholson, J. Engaging users with educational games: The case of phishing. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–6. [Google Scholar]

- Ndibwile, J.D.; Kadobayashi, Y.; Fall, D. UnPhishMe: Phishing attack detection by deceptive login simulation through an Android mobile app. In Proceedings of the 2017 12th Asia Joint Conference on Information Security (AsiaJCIS), Seoul, Republic of Korea, 10–11 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 38–47. [Google Scholar]

- Sheng, S.; Magnien, B.; Kumaraguru, P.; Acquisti, A.; Cranor, L.F.; Hong, J.; Nunge, E. Anti-phishing phil: The design and evaluation of a game that teaches people not to fall for phish. In Proceedings of the 3rd Symposium on Usable Privacy and Security, Pittsburgh, PA, USA, 18–20 July 2007; pp. 88–99. [Google Scholar]

- Kumaraguru, P.; Sheng, S.; Acquisti, A.; Cranor, L.F.; Hong, J. Teaching Johnny not to fall for phish. ACM Trans. Internet Technol. (TOIT) 2010, 10, 1–31. [Google Scholar] [CrossRef]

- Lim, I.k.; Park, Y.G.; Lee, J.K. Design of security training system for individual users. Wirel. Pers. Commun. 2016, 90, 1105–1120. [Google Scholar] [CrossRef]

- Nurse, J.R. Cybercrime and you: How criminals attack and the human factors that they seek to exploit. arXiv 2018, arXiv:1811.06624. [Google Scholar] [CrossRef]

- Avery, J.; Almeshekah, M.; Spafford, E. Offensive deception in computing. In Proceedings of the International Conference on Cyber Warfare and Security, Dayton, OH, USA, 2–3 March 2017; Academic Conferences International Limited: Reading, UK, 2017; p. 23. [Google Scholar]

- Gangire, Y.; Da Veiga, A.; Herselman, M. A conceptual model of information security compliant behaviour based on the self-determination theory. In Proceedings of the 2019 Conference on Information Communications Technology and Society (ICTAS), Durban, South Africa, 6–8 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6. [Google Scholar]

- McElwee, S.; Murphy, G.; Shelton, P. Influencing outcomes and behaviors in simulated phishing exercises. In Proceedings of the SoutheastCon 2018, St. Petersburg, FL, USA, 19–22 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6. [Google Scholar]

- Noureddine, M.A.; Marturano, A.; Keefe, K.; Bashir, M.; Sanders, W.H. Accounting for the human user in predictive security models. In Proceedings of the 2017 IEEE 22nd Pacific Rim International Symposium on Dependable Computing (PRDC), Christchurch, New Zealand, 22–25 January 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 329–338. [Google Scholar]

- Metalidou, E.; Marinagi, C.; Trivellas, P.; Eberhagen, N.; Skourlas, C.; Giannakopoulos, G. The human factor of information security: Unintentional damage perspective. Procedia-Soc. Behav. Sci. 2014, 147, 424–428. [Google Scholar] [CrossRef]

- Steves, M.P.; Greene, K.K.; Theofanos, M.F. A phish scale: Rating human phishing message detection difficulty. In Proceedings of the Workshop on Usable Security (USEC) 2019, San Diego, CA, USA, 24 February 2019. [Google Scholar]

- Choong, Y.Y.; Theofanos, M. What 4500+ people can tell you–employees’ attitudes toward organizational password policy do matter. In Proceedings of the Human Aspects of Information Security, Privacy, and Trust: Third International Conference, HAS 2015, Held as Part of HCI International 2015, Los Angeles, CA, USA, 2–7 August 2015; Proceedings 3. Springer: Berlin/Heidelberg, Germany, 2015; pp. 299–310. [Google Scholar]

- Lévesque, F.L.; Chiasson, S.; Somayaji, A.; Fernandez, J.M. Technological and human factors of malware attacks: A computer security clinical trial approach. ACM Trans. Priv. Secur. (TOPS) 2018, 21, 1–30. [Google Scholar] [CrossRef]

- Nsiempba, J.J.; Lévesque, F.L.; de Marcellis-Warin, N.; Fernandez, J.M. An empirical analysis of risk aversion in malware infections. In Proceedings of the Risks and Security of Internet and Systems: 12th International Conference, CRiSIS 2017, Dinard, France, 19–21 September 2017; Revised Selected Papers 12. Springer: Berlin/Heidelberg, Germany, 2018; pp. 260–267.

- Kavvadias, A.; Kotsilieris, T. Understanding the role of demographic and psychological factors in users’ susceptibility to phishing emails: A review. Appl. Sci. 2025, 15, 2236.

- Williams, N.; Li, S. Simulating human detection of phishing websites: An investigation into the applicability of the ACT-R cognitive behaviour architecture model. In Proceedings of the 2017 3rd IEEE International Conference on Cybernetics (CYBCONF), Exeter, UK, 21–23 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–8.

- Zhao, R.; John, S.; Karas, S.; Bussell, C.; Roberts, J.; Six, D.; Gavett, B.; Yue, C. The highly insidious extreme phishing attacks. In Proceedings of the 2016 25th International Conference on Computer Communication and Networks (ICCCN), Waikoloa, HI, USA, 1–4 August 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–10.

- Dodson, B.; Sengupta, D.; Boneh, D.; Lam, M.S. Secure, consumer-friendly web authentication and payments with a phone. In Proceedings of the Mobile Computing, Applications, and Services: Second International ICST Conference, MobiCASE 2010, Santa Clara, CA, USA, 25–28 October 2010; Revised Selected Papers 2. Springer: Berlin/Heidelberg, Germany, 2012; pp. 17–38.

- Jindal, S.; Misra, M. Multi-factor authentication scheme using mobile app and camera. In Proceedings of the International Conference on Advanced Communication and Computational Technology (ICACCT), Kurukshetra, India, 6–7 December 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 787–813.

- Lu, Y.; Li, L.; Peng, H.; Yang, Y. A novel smart card based user authentication and key agreement scheme for heterogeneous wireless sensor networks. Wirel. Pers. Commun. 2017, 96, 813–832.

- Sheng, S.; Wardman, B.; Warner, G.; Cranor, L.; Hong, J.; Zhang, C. An empirical analysis of phishing blacklists. In Proceedings of the Sixth Conference on Email and Anti-Spam (CEAS), Mountain View, CA, USA, 16–17 July 2009; Carnegie Mellon University: Pittsburgh, PA, USA, 2009.

- PhishTank Website. Available online: https://www.phishtank.com/ (accessed on 1 May 2025).

- Alhuzali, A.; Alloqmani, A.; Aljabri, M.; Alharbi, F. In-Depth Analysis of Phishing Email Detection: Evaluating the Performance of Machine Learning and Deep Learning Models Across Multiple Datasets. Appl. Sci. 2025, 15, 3396.

- Mahmud, T.; Prince, M.A.H.; Ali, M.H.; Hossain, M.S.; Andersson, K. Enhancing cybersecurity: Hybrid deep learning approaches to smishing attack detection. Systems 2024, 12, 490.

- Alam, M.N.; Sarma, D.; Lima, F.F.; Saha, I.; Ulfath, R.E.; Hossain, S. Phishing attacks detection using machine learning approach. In Proceedings of the 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1173–1179.

- Rawal, S.; Rawal, B.; Shaheen, A.; Malik, S. Phishing detection in e-mails using machine learning. Int. J. Appl. Inf. Syst. 2017, 12, 21–24.

- Nagy, N.; Aljabri, M.; Shaahid, A.; Ahmed, A.A.; Alnasser, F.; Almakramy, L.; Alhadab, M.; Alfaddagh, S. Phishing URLs detection using sequential and parallel ML techniques: Comparative analysis. Sensors 2023, 23, 3467.

- Brissett, A.; Wall, J. Machine learning and watermarking for accurate detection of AI generated phishing emails. Electronics 2025, 14, 2611.

- Eze, C.S.; Shamir, L. Analysis and prevention of AI-based phishing email attacks. Electronics 2024, 13, 1839.

- Bethany, M.; Galiopoulos, A.; Bethany, E.; Karkevandi, M.B.; Vishwamitra, N.; Najafirad, P. Large language model lateral spear phishing: A comparative study in large-scale organizational settings. arXiv 2024, arXiv:2401.09727.

- Hilario, E.; Azam, S.; Sundaram, J.; Imran Mohammed, K.; Shanmugam, B. Generative AI for pentesting: The good, the bad, the ugly. Int. J. Inf. Secur. 2024, 23, 2075–2097.

- Kucharavy, A.; Schillaci, Z.; Maréchal, L.; Würsch, M.; Dolamic, L.; Sabonnadiere, R.; David, D.P.; Mermoud, A.; Lenders, V. Fundamentals of generative large language models and perspectives in cyber-defense. arXiv 2023, arXiv:2303.12132.

- Sai, S.; Yashvardhan, U.; Chamola, V.; Sikdar, B. Generative AI for cyber security: Analyzing the potential of ChatGPT, DALL-E and other models for enhancing the security space. IEEE Access 2024, 12, 53497–53516.

- Mun, H.; Park, J.; Kim, Y.; Kim, B.; Kim, J. PhiShield: An AI-Based Personalized Anti-Spam Solution with Third-Party Integration. Electronics 2025, 14, 1581.

- Marin, I.A.; Burda, P.; Zannone, N.; Allodi, L. The influence of human factors on the intention to report phishing emails. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–18.

- Jari, M. An overview of phishing victimization: Human factors, training and the role of emotions. arXiv 2022, arXiv:2209.11197.

- Greitzer, F.L.; Li, W.; Laskey, K.B.; Lee, J.; Purl, J. Experimental investigation of technical and human factors related to phishing susceptibility. ACM Trans. Soc. Comput. 2021, 4, 1–48.

- Jari, M. A comprehensive survey of phishing attacks and defences: Human factors, training and the role of emotions. Int. J. Netw. Secur. Its Appl. 2022, 14, 5.

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Publication is on phishing | Publication is outside the scope of the review |
| Publication is on AI in phishing | Publication is not fully accessible |
| Publication is on human factors in phishing | Publication contains partial results |
| Publication reports complete results | |
| Publication language is English | |

| Content | Description |
|---|---|
| Text | Crafting emails/text messages that are personalised to specific individuals. |
| Voice | Creating voice messages that can be used to impersonate trusted individuals. |
| Images | Creating images to add credibility to phishing attack content. |
| Videos | Creating realistic videos to add credibility to phishing attack content. |

| Paper | Human Factors Covered | Research Goal | Key Findings | Limitations |
|---|---|---|---|---|
| [115] | Psychological factors | Study factors influencing intentions to report phishing | Identified factors affecting reporting intentions | Relied on an online questionnaire (n = 284) |
| [116] | Emotional factors in phishing victimisation | Explore the emotional factors that are significant in phishing victimisation | 1. Attackers manipulate victims’ psychology and emotions; 2. Comparison of phishing types; 3. Review of training approaches. | Relied on a literature review without original empirical data |
| [38] | Cognitive factors in phishing detection | Identify human and cognitive factors in phishing attacks | 1. Cybercriminals exploit human vulnerabilities; 2. Phishing emails are difficult to identify; 3. Cognitive and psychological factors are important. | Limited to existing literature |
| [117] | Demographic, behavioural, and psychological factors | Examine factors influencing phishing susceptibility through a simulated phishing campaign | 1. Gender and age have limited effects; 2. Previous phishing victims are more susceptible; 3. Impulsivity is correlated with phishing susceptibility; 4. Better security habits are linked to lower susceptibility. | Focused on a single university population |
| [118] | Emotional and psychological factors | Explore human and emotional factors in phishing victimisation | 1. Emotional manipulation in attacks; 2. Phishing types; 3. Training approaches. | Limited to existing literature |

| Aspect | Traditional Phishing | AI-Driven Phishing |
|---|---|---|
| Message Quality | Generic messages with grammatical and typing errors | Mimics real-life communication styles, without spelling or grammatical errors |
| Personalisation | Broad targeting without personalisation | Carefully crafted, personalised messages |
| Scale | Manual messaging with limited scalability | Automated, high-volume generation |
| Targeting Approach | Indiscriminate targeting of large audiences | Strategic targeting based on AI-driven analysis |
| Attack Vectors | Multi-channel, with email as the primary channel | Multi-channel, leveraging AI technologies |
| Detection Challenges | Easier to detect due to grammatical and typing errors | Harder to detect, as AI-driven attacks can bypass traditional controls |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jabir, R.; Le, J.; Nguyen, C. Phishing Attacks in the Age of Generative Artificial Intelligence: A Systematic Review of Human Factors. AI 2025, 6, 174. https://doi.org/10.3390/ai6080174
