Combined Dataset System Based on a Hybrid PCA–Transformer Model for Effective Intrusion Detection Systems

Abstract

1. Introduction

- Proposing a novel approach to IDS by implementing a combined dataset framework using enhanced preprocessing and feature engineering, followed by the vertical concatenation of the CSE-CIC-IDS2018 and CICIDS2017 datasets, enabling the detection of 21 unique classes, including 1 benign class and 20 distinct attack types.

- Adopting an enhanced hybrid PCA–Transformer model, where PCA performs feature extraction and dimensionality reduction, and the Transformer is responsible for classification, with class imbalance mitigated through the use of class weights, ADASYN, and ENN.

- Conducting an evaluation on the combined CSE-CIC-IDS2018 and CICIDS2017 datasets, as well as on the CSE-CIC-IDS2018, CICIDS2017, and NF-BoT-IoT-v2 datasets individually, highlighting the superior performance of the proposed model in comparison to state-of-the-art approaches, while effectively covering a broader spectrum of network traffic classes, including benign and diverse attack types.

- Validating the real-time effectiveness of the combined dataset system using the proposed model, accurately detecting and classifying benign traffic alongside multiple attack categories within a real-world IDS deployment.
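The second contribution above assigns feature extraction and dimensionality reduction to PCA. As a rough illustration of what that step computes, the sketch below finds only the leading principal component of a tiny toy matrix by power iteration on the covariance matrix. The data, the function names, and the choice of power iteration are assumptions made for illustration; the number of components and the PCA implementation used in this work are not specified in this outline, and a library routine would normally be used instead.

```python
import math


def center(rows):
    """Subtract the per-feature mean from every row."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]


def covariance(centered):
    """Sample covariance matrix (d x d) of already-centered rows."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def leading_component(cov, iters=200):
    """Power iteration: unit eigenvector and eigenvalue of the largest eigenvalue."""
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d))
                     for i in range(d))
    return v, eigenvalue


def project(centered, component):
    """Coordinates of each centered row along the given component."""
    return [sum(x * c for x, c in zip(r, component)) for r in centered]


# Toy data (hypothetical): two strongly correlated features.
rows = [[1.0, 2.0], [2.0, 4.1], [3.0, 5.9], [4.0, 8.0]]
centered = center(rows)
cov = covariance(centered)
pc1, var1 = leading_component(cov)

# Share of total variance captured by the first component.
explained = var1 / sum(cov[i][i] for i in range(len(cov)))
scores = project(centered, pc1)
```

Here `scores` is the one-dimensional representation that a downstream classifier would receive in place of the two original features.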

2. Related Work

2.1. Traditional Machine Learning for IDSs

2.2. Deep Learning-Based Intrusion Detection

2.3. Transformer-Based Models in IDS

2.4. Dimensionality Reduction and Feature Selection Techniques

2.5. Hybrid Models for IDS

2.6. Challenges

3. Methodology

3.1. Dataset Description

3.1.1. CSE-CIC-IDS2018 Dataset

3.1.2. CICIDS2017 Dataset

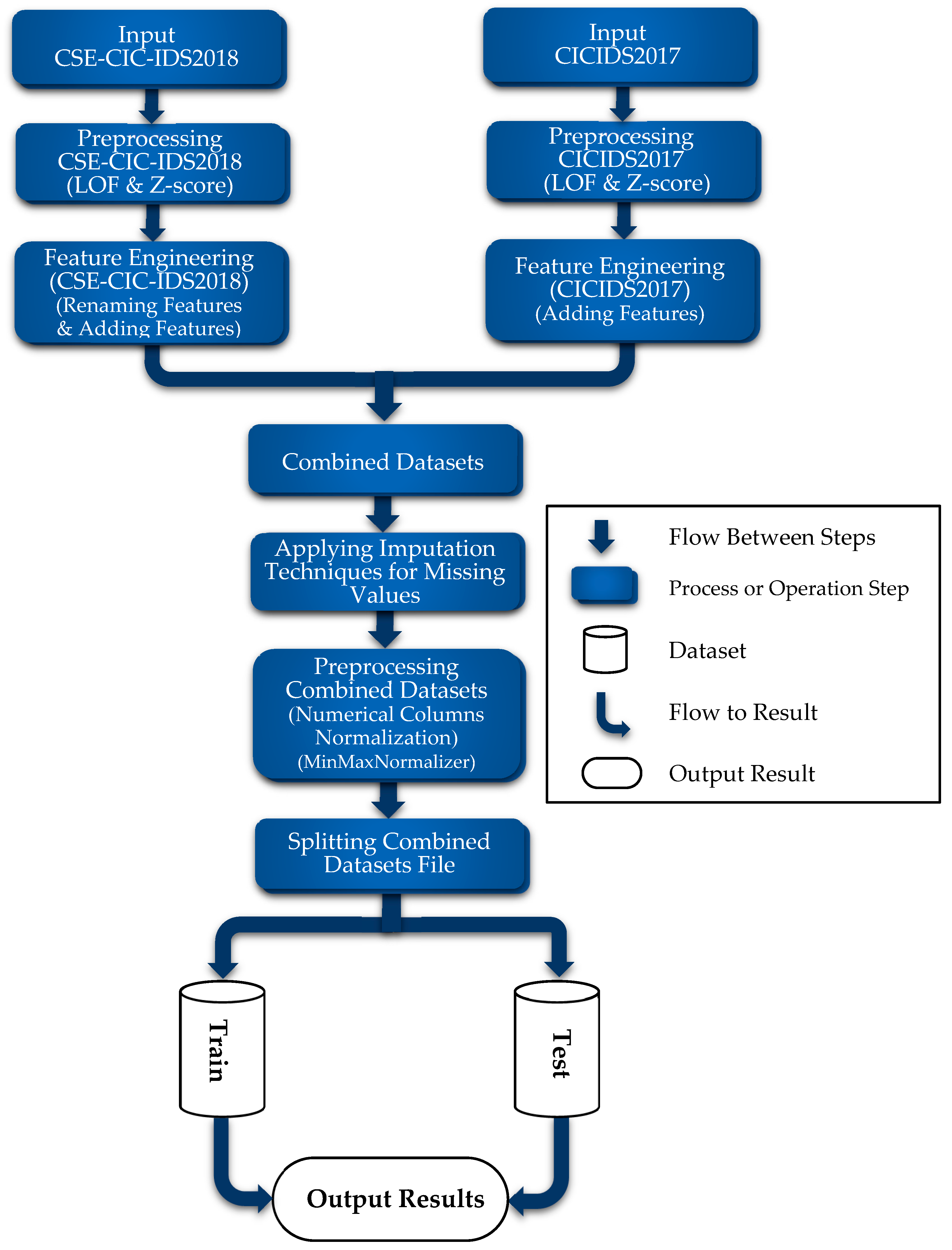

3.2. Dataset Preprocessing

3.2.1. CSE-CIC-IDS2018 Dataset

3.2.2. CICIDS2017 Dataset

3.2.3. Combined Dataset (CSE-CIC-IDS2018 and CICIDS2017)

1. Applying Combined LOF and Z-score

2. Applying Feature Engineering

3. Combining (Concatenating Vertically) Datasets

4. Applying Imputation Techniques

5. Normalization

6. Splitting the Combined Dataset into Train and Test Files

7. Class Imbalance Mitigation

   - Class Weights
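The normalization and train/test splitting steps listed above can be sketched in a few lines. This outline does not state which normalization or which splitting strategy was used, so the min-max scaling, the stratified split, the 20% test fraction, and all function names below are assumptions chosen only to make the steps concrete.

```python
import random
from collections import defaultdict


def min_max_scale(rows):
    """Scale every feature column to [0, 1]; constant columns map to 0.0."""
    n_features = len(rows[0])
    lows = [min(r[j] for r in rows) for j in range(n_features)]
    highs = [max(r[j] for r in rows) for j in range(n_features)]
    return [
        [0.0 if highs[j] == lows[j] else (r[j] - lows[j]) / (highs[j] - lows[j])
         for j in range(n_features)]
        for r in rows
    ]


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_indices, test_indices), sampling each class separately."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Toy inputs (hypothetical values).
features = [[0.0, 10.0], [5.0, 10.0], [10.0, 10.0]]
scaled = min_max_scale(features)

labels = ["Benign"] * 10 + ["Bot"] * 5
train_idx, test_idx = stratified_split(labels)
```

Splitting per class keeps rare attack types represented in both files, which matters when some classes have only a handful of samples.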

3.3. Proposed Model

Principal Component Analysis–Transformer (PCA–Transformer)

- (i) Binary Classification

- (ii) Multi-class Classification

- (iii) Configuration of Hyperparameters for the PCA–Transformer Model

4. Results and Experiments

4.1. Dataset Characteristics and Preprocessing Overview

- NF-BoT-IoT-v2 Dataset

- CSE-CIC-IDS2018 Dataset

- CICIDS2017 Dataset

- Combined Dataset

4.2. Configuration and Hyperparameter Overview of Compared Models

- Convolutional Neural Network (CNN)

- Autoencoder

- Multilayer Perceptron (MLP)

- Transformer

Configuration of Hyperparameters for Models

4.3. Experimental Setup

4.4. Evaluation Metrics

4.5. Results

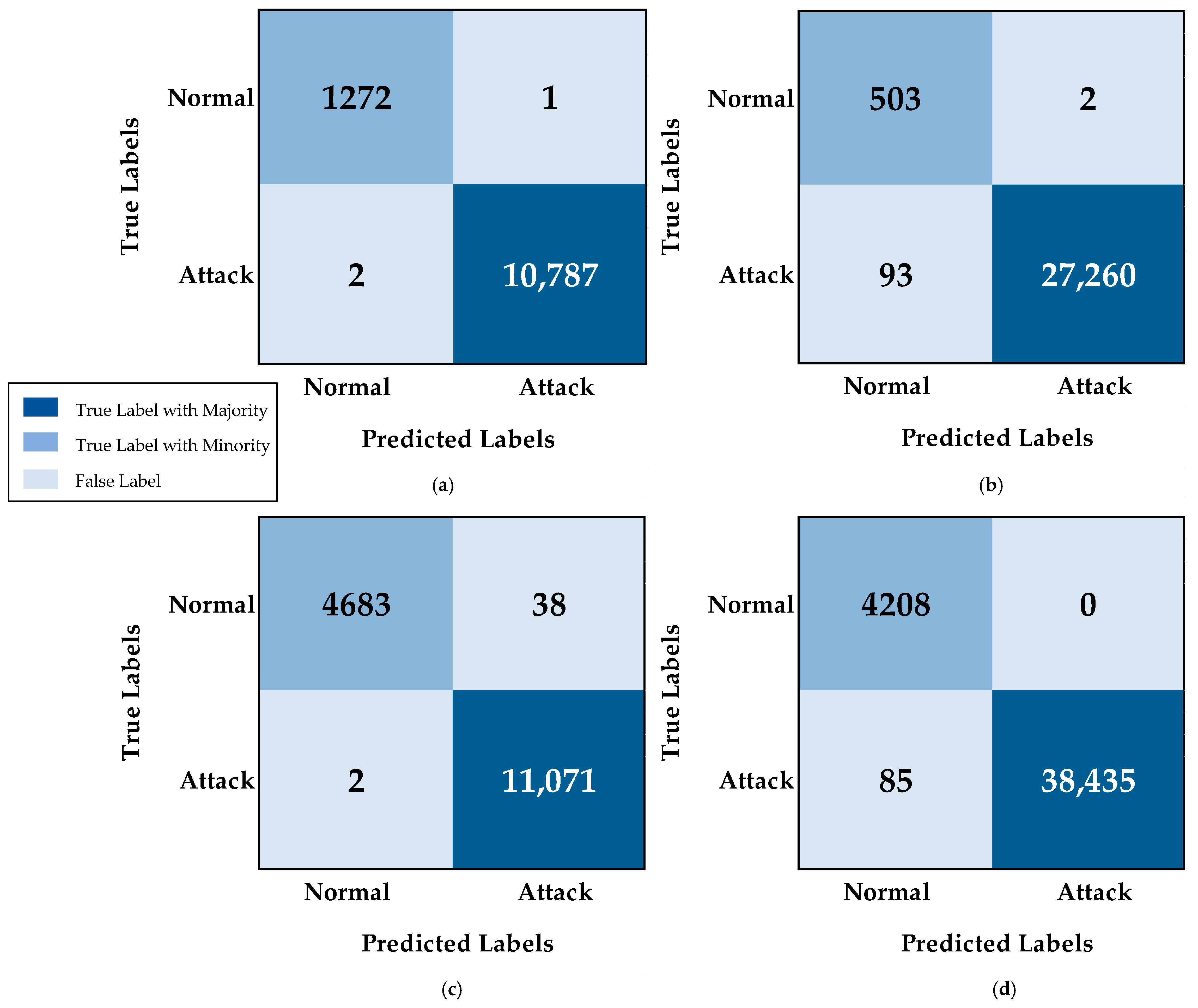

- (i)

- Binary Classification

- (ii)

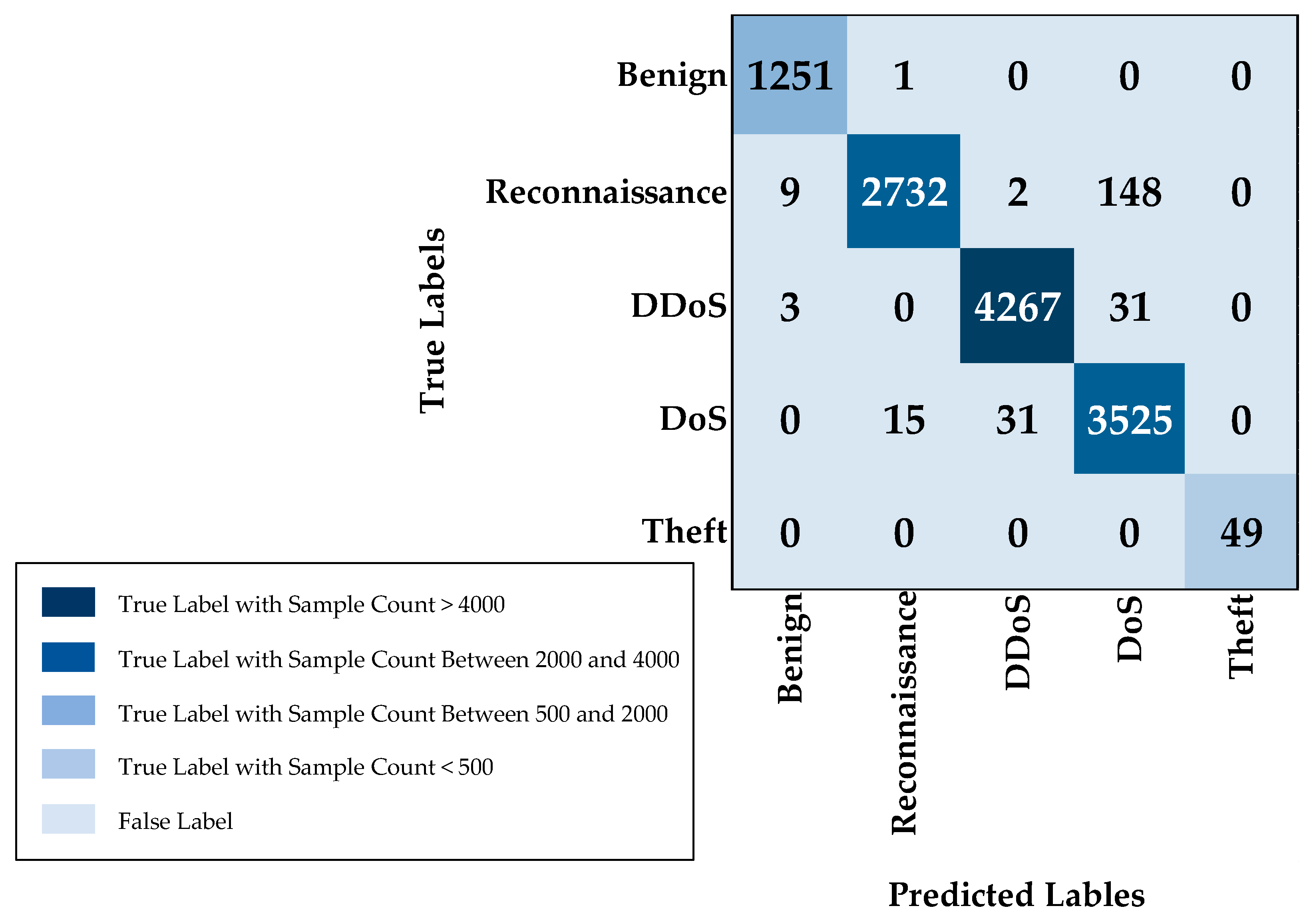

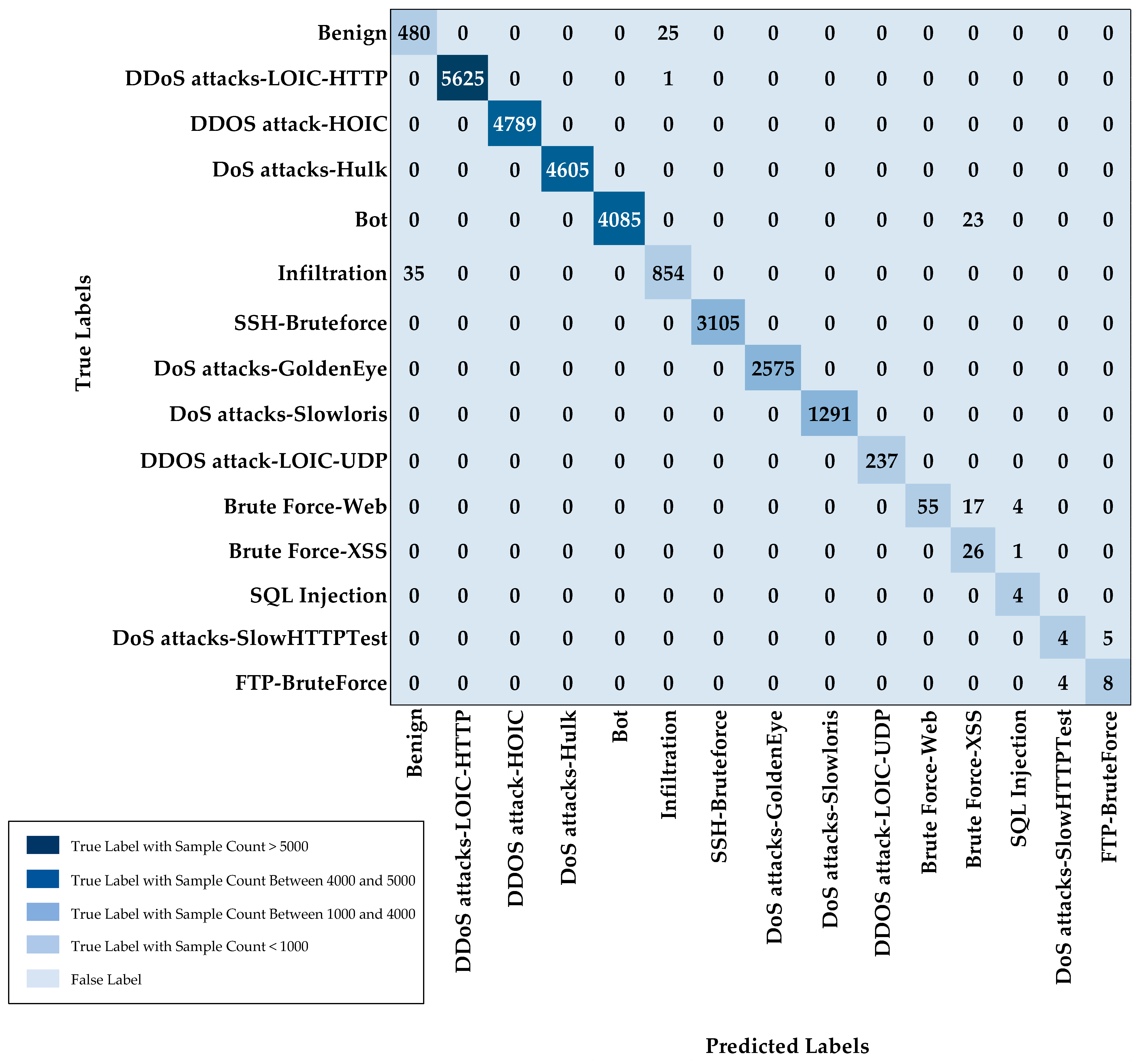

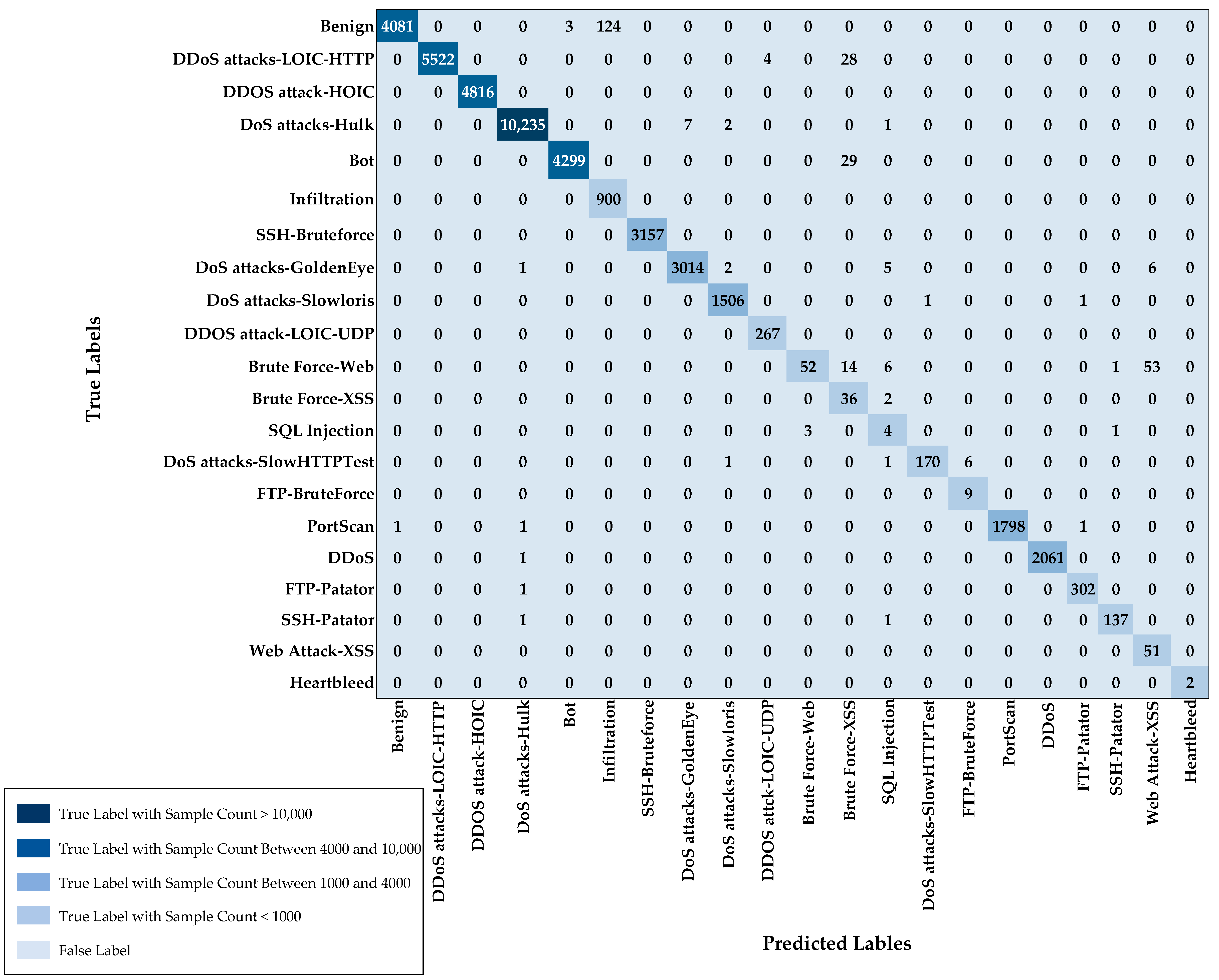

- Multi-Class Classification

4.6. Ablation Analysis on Component-Wise Enhancements in the PCA–Transformer Model

4.7. Inference Time, Training Time, and Memory Consumption

- (i) Inference time

- (ii) Training time

- (iii) Memory consumption

4.8. Comparative Analysis of Transformer Variants and Lightweight Attention Mechanisms for Performance and Scalability

4.9. Explaining Model Decisions Using LIME for the PCA–Transformer Model

5. Discussion

5.1. Binary Classification

5.2. Multi-Class Classification

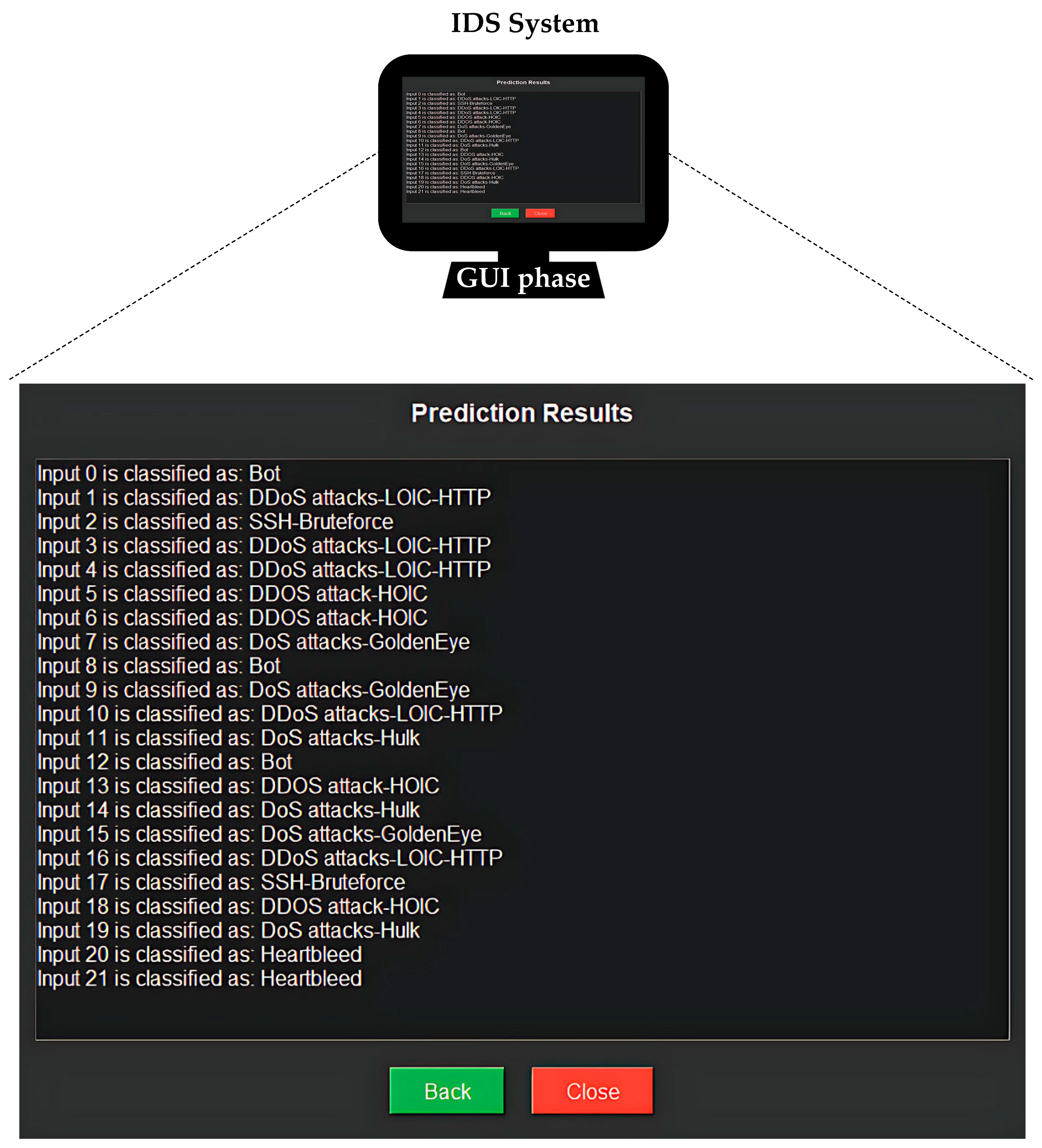

5.3. Case Study for Real-Time Evaluation Using the Hybrid PCA–Transformer Model

6. Limitations

- Real-Time Evaluation in Different Environments: Comprehensive real-time evaluation across diverse network environments remains a challenge. While many models demonstrate strong performance on benchmark datasets, their effectiveness in varied real-world settings such as edge devices, cloud platforms, and different network infrastructures is often unvalidated.

- Dataset Combination: To achieve comprehensive coverage of the wide variety of attack types and network behaviors, it is necessary to combine multiple datasets. However, the inherent differences in feature definitions, labeling schemes, and data distributions across these heterogeneous datasets pose significant challenges. These disparities complicate data integration and can negatively affect model performance and generalizability, highlighting the need for more effective methods to manage and unify heterogeneous data in intrusion detection research.

- Data Preprocessing: The model’s ability to perform optimally is directly tied to how well its data is preprocessed. It is crucial to accurately deal with missing values, appropriately encode categorical information, and effectively normalize numerical data.

- Model Adaptation: Adapting the model to diverse datasets requires comprehensive, iterative hyperparameter tuning. This process is needed to match the model to the characteristics of each new dataset.

7. Conclusions

8. Future Work

- Real-Time Evaluation in Different Environments: The combined system using PCA–Transformer will be evaluated in real time across multiple environments to assess its adaptability and resilience to diverse challenges. Deployment will span from resource-constrained edge devices to large-scale cloud systems, ensuring consistent detection accuracy and operational efficiency in a wide range of scenarios.

- Dataset Combination: To enhance the robustness of intrusion detection models, future work should focus on combining a broader range of datasets to cover more diverse attack types and network behaviors. However, effectively integrating these heterogeneous datasets remains a challenge due to differences in feature definitions, labeling standards, and data distributions. Addressing these issues will require developing improved techniques for data harmonization and interoperability, which are crucial for advancing intrusion detection systems.

- Data Preprocessing: To achieve peak model performance, it is critical to tailor data preprocessing methods to each dataset. The preprocessing techniques used in this work are described in Section 3.2.

- Model Adaptation and Hyperparameter Optimization: For successful deployment across varied data landscapes, enhanced hyperparameter optimization methods are crucial. These tuning strategies are detailed in Section 3.3.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Patel, A.; Qassim, Q.; Wills, C. A survey of intrusion detection and prevention systems. Inf. Manag. Comput. Secur. 2010, 18, 277–290.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2, 20.

- Yuan, L.; Chen, H.; Mai, J.; Chuah, C.N.; Su, Z.; Mohapatra, P. Fireman: A toolkit for firewall modeling and analysis. In Proceedings of the 2006 IEEE Symposium on Security and Privacy (S&P’06), Berkeley/Oakland, CA, USA, 21–24 May 2006; IEEE: Manhattan, NY, USA, 2006; pp. 15–213.

- Musa, U.S.; Chhabra, M.; Ali, A.; Kaur, M. Intrusion detection system using machine learning techniques: A review. In Proceedings of the 2020 International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 10–12 September 2020; IEEE: Manhattan, NY, USA, 2020; pp. 149–155.

- Zhang, X.; Xie, J.; Huang, L. Real-Time Intrusion Detection Using Deep Learning Techniques. J. Netw. Comput. Appl. 2020, 140, 45–53.

- Kumar, S.; Kumar, R. A Review of Real-Time Intrusion Detection Systems Using Machine Learning Approaches. Comput. Secur. 2020, 95, 101944.

- Smith, B.J.; Taylor, C. Enhancing Network Security with Real-Time Intrusion Detection Systems. Int. J. Inf. Secur. 2021, 21, 123–135.

- Alzughaibi, S.; El Khediri, S. A cloud intrusion detection systems based on dnn using backpropagation and pso on the cse-cic-ids2018 dataset. Appl. Sci. 2023, 13, 2276.

- Gopalsamy, M. Predictive Cyber Attack Detection in Cloud Environments with Machine Learning from the CICIDS 2018 Dataset. Int. J. Sci. Res. Technol. (IJSART) 2024, 10, 34–46.

- Talukder, M.A.; Islam, M.M.; Uddin, M.A.; Hasan, K.F.; Sharmin, S.; Alyami, S.A.; Moni, M.A. Machine learning-based network intrusion detection for big and imbalanced data using oversampling, stacking feature embedding and feature extraction. J. Big Data 2024, 11, 33.

- Fathima, A.; Khan, A.; Uddin, M.F.; Waris, M.M.; Ahmad, S.; Sanin, C.; Szczerbicki, E. Performance evaluation and comparative analysis of machine learning models on the UNSW-NB15 dataset: A contemporary approach to cyber threat detection. Cybern. Syst. 2023, 1–7.

- Alabdulwahab, S.; Kim, Y.-T.; Seo, A.; Son, Y. Generating synthetic dataset for ml-based ids using ctgan and feature selection to protect smart iot environments. Appl. Sci. 2023, 13, 10951.

- Alghamdi, R.; Bellaiche, M. An ensemble deep learning based IDS for IoT using Lambda architecture. Cybersecurity 2023, 6, 5.

- Aljuaid, W.H.; Alshamrani, S.S. A deep learning approach for intrusion detection systems in cloud computing environments. Appl. Sci. 2024, 14, 5381.

- Nawaz, M.W.; Munawar, R.; Mehmood, A.; Rahman, M.M.U.; Abbasi, Q.H. Multi-class Network Intrusion Detection with Class Imbalance via LSTM & SMOTE. arXiv 2023, arXiv:2310.01850.

- Abdelkhalek, A.; Mashaly, M. Addressing the class imbalance problem in network intrusion detection systems using data resampling and deep learning. J. Supercomput. 2023, 79, 10611–10644.

- Wu, Z.; Zhang, H.; Wang, P.; Sun, Z. RTIDS: A robust transformer-based approach for intrusion detection system. IEEE Access 2022, 10, 64375–64387.

- Nguyen, T.P.; Nam, H.; Kim, D. Transformer-based attention network for in-vehicle intrusion detection. IEEE Access 2023, 11, 55389–55403.

- Liu, Y.; Wu, L. Intrusion detection model based on improved transformer. Appl. Sci. 2023, 13, 6251.

- Long, Z.; Yan, H.; Shen, G.; Zhang, X.; He, H.; Cheng, L. A Transformer-based network intrusion detection approach for cloud security. J. Cloud Comput. 2024, 13, 5.

- Tseng, S.-M.; Wang, Y.-Q.; Wang, Y.-C. Multi-Class Intrusion Detection Based on Transformer for IoT Networks Using CIC-IoT-2023 Dataset. Future Internet 2024, 16, 284.

- Kamal, H.; Mashaly, M. Robust Intrusion Detection System Using an Improved Hybrid Deep Learning Model for Binary and Multi-Class Classification in IoT Networks. Technologies 2025, 13, 102.

- Kamal, H.; Mashaly, M. Advanced Hybrid Transformer-CNN Deep Learning Model for Effective Intrusion Detection Systems with Class Imbalance Mitigation Using Resampling Techniques. Future Internet 2024, 16, 481.

- Kamal, H.; Mashaly, M. Enhanced Hybrid Deep Learning Models-Based Anomaly Detection Method for Two-Stage Binary and Multi-Class Classification of Attacks in Intrusion Detection Systems. Algorithms 2025, 18, 69.

- Fu, Y.; Du, Y.; Cao, Z.; Li, Q.; Xiang, W. A deep learning model for network intrusion detection with imbalanced data. Electronics 2022, 11, 898.

- Sajid, M.; Malik, K.R.; Almogren, A.; Malik, T.S.; Khan, A.H.; Tanveer, J.; Rehman, A.U. Enhancing intrusion detection: A hybrid machine and deep learning approach. J. Cloud Comput. 2024, 13, 123.

- Kamal, H.; Mashaly, M. Improving Anomaly Detection in IDS with Hybrid Auto Encoder-SVM and Auto Encoder-LSTM Models Using Resampling Methods. In Proceedings of the 2024 6th Novel Intelligent and Leading Emerging Sciences Conference (NILES), Giza, Egypt, 19–21 October 2024; pp. 34–39.

- Umair, M.B.; Iqbal, Z.; Faraz, M.A.; Khan, M.A.; Zhang, Y.-D.; Razmjooy, N.; Kadry, S. A network intrusion detection system using hybrid multilayer deep learning model. Big Data 2024, 12, 367–376.

- Alghamdi, R.; Bellaiche, M. Evaluation and selection models for ensemble intrusion detection systems in IoT. IoT 2022, 3, 285–314.

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. “CSE-CIC-IDS2018 Dataset.” Canadian Institute for Cybersecurity, University of New Brunswick. 2018. Available online: https://www.unb.ca/cic/datasets/ids-2018.html (accessed on 20 July 2025).

- Songma, S.; Sathuphan, T.; Pamutha, T. Optimizing intrusion detection systems in three phases on the CSE-CIC-IDS-2018 dataset. Computers 2023, 12, 245.

- Ring, M.; Wunderlich, S.; Scheuring, D.; Landes, D.; Hotho, A. A survey of network-based intrusion detection data sets. Comput. Secur. 2019, 86, 147–167.

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. ICISSP 2018, 1, 108–116.

- UNB. Intrusion Detection Evaluation Dataset (CICIDS2017), University of New Brunswick. Available online: https://www.unb.ca/cic/datasets/ids-2017.html (accessed on 30 October 2024).

- Sharafaldin, I.; Habibi Lashkari, A.; Ghorbani, A.A. A detailed analysis of the cicids2017 data set. In Proceedings of the Information Systems Security and Privacy: 4th International Conference, ICISSP 2018, Funchal-Madeira, Portugal, 22–24 January 2018; Revised Selected Papers 4. Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 172–188.

- Bishop, C.M.; Nasser, M.N. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006; Volume 4.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Ba, J.L. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Sarhan, M.; Layeghy, S.; Portmann, M. Towards a standard feature set for network intrusion detection system datasets. Mob. Netw. Appl. 2022, 27, 357–370.

- Moustafa, N. Network Intrusion Detection System (NIDS) Datasets [Internet]; University of Queensland: Brisbane, Australia, 2025; Available online: https://staff.itee.uq.edu.au/marius/NIDS_datasets (accessed on 21 June 2025).

- El-Habil, B.Y.; Abu-Naser, S.S. Global climate prediction using deep learning. J. Theor. Appl. Inf. Technol. 2022, 100, 4824–4838.

- Song, Z.; Ma, J. Deep learning-driven MIMO: Data encoding and processing mechanism. Phys. Commun. 2022, 57, 101976.

- Zhou, X.; Zhao, C.; Sun, J.; Yao, K.; Xu, M. Detection of lead content in oilseed rape leaves and roots based on deep transfer learning and hyperspectral imaging technology. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2022, 290, 122288.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Kunang, Y.N.; Nurmaini, S.; Stiawan, D.; Zarkasi, A. Automatic features extraction using autoencoder in intrusion detection system. In Proceedings of the 2018 International Conference on Electrical Engineering and Computer Science (ICECOS), Pangkal Pinang, Indonesia, 2–4 October 2018; pp. 219–224.

- Gogna, A.; Majumdar, A. Discriminative autoencoder for feature extraction: Application to character recognition. Neural Processing Letters 2019, 49, 1723–1735.

- Chen, X.; Ma, L.; Yang, X. Stacked denoise autoencoder based feature extraction and classification for hyperspectral images. J. Sens. 2016, 2016, 3632943.

- Michelucci, U. An introduction to autoencoders. arXiv 2022, arXiv:2201.03898.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Veeramreddy, J.; Prasad, K. Anomaly-Based Intrusion Detection System. In Anomaly Detection and Complex Network Systems; Alexandrov, A.A., Ed.; IntechOpen: London, UK, 2019.

- Chen, C.; Song, Y.; Yue, S.; Xu, X.; Zhou, L.; Lv, Q.; Yang, L. FCNN-SE: An Intrusion Detection Model Based on a Fusion CNN and Stacked Ensemble. Appl. Sci. 2022, 12, 8601.

- Powers, D.M.W. Evaluation: From Precision, Recall, and F-Measure to ROC, Informedness, Markedness & Correlation. J. Mach. Learn. Technol. 2011, 2, 37–63.

- Assy, A.T.; Mostafa, Y.; El-Khaleq, A.A.; Mashaly, M. Anomaly-based intrusion detection system using one-dimensional convolutional neural network. Procedia Comput. Sci. 2023, 220, 78–85.

- Kamal, H.; Mashaly, M. Hybrid Deep Learning-Based Autoencoder-DNN Model for Intelligent Intrusion Detection System in IoT Networks. In Proceedings of the 2025 15th International Conference on Electrical Engineering (ICEENG), Cairo, Egypt, 12–15 May 2025; pp. 1–6.

| Author | Dataset | Year | Utilized Technique | Accuracy (Binary) | Accuracy (Multi-Class) | Contribution | Limitations | Reason for Limitations |

|---|---|---|---|---|---|---|---|---|

| Saud Alzughaibi and Salim El Khediri [8] | CSE-CIC-IDS2018 | 2023 | MLP-BP, MLP-PSO | 98.97% | 98.41% | The study develops two DNN models to enhance IDS in cloud environments, using MLP with Backpropagation and PSO. | • Scalability • Complex architecture • Lack of real-time evaluation | • Limited ability to process large or high-speed network traffic efficiently • High implementation and computational complexity • Unverified performance in live network environments |

| Mani Gopalsamy [9] | CSE-CIC-IDS2018 | 2024 | MLP-BP | 98.97% | - | The study utilizes the CSE-CIC-IDS2018 dataset to evaluate the MLP-BP model for anomaly-based intrusion detection through machine learning and data preprocessing. | • Lack of real-time evaluation • Scalability | • Real-time testing requires complex infrastructure and continuous processing of live network data, which is challenging to set up and manage. • Limited ability to process large or high-speed network traffic efficiently |

| Md. Alamin Talukder [10] | UNSW-NB15 | 2024 | RF and ET | 99.59% | 99.95% | The paper introduces a machine learning model that enhances intrusion detection by balancing data, reducing dimensions, and improving feature representation. | • Model capacity and advancement | • The model’s limited ability to learn complex patterns or adapt to evolving data due to insufficient capacity or outdated architecture |

| Afrah Fathima et al. [11] | UNSW-NB15 | 2023 | RF | 99% | 98% | The paper focuses on building and evaluating machine learning models to improve intrusion detection accuracy using the UNSW-NB15 dataset. | • Generalizability • Model capacity and advancement | • Limited dataset diversity • The model’s limited ability to learn complex patterns or adapt to evolving data due to insufficient capacity or outdated architecture |

| Saleh Alabdulwahab et al. [12] | Combined Dataset (TON_IoT, BoT-IoT and MQTT-IoT-IDS2020) | 2023 | CTGAN | - | 99% | The study uses CTGAN to generate synthetic IoT intrusion data from multiple datasets, improving data balance and detection accuracy. | • Generalizability | • Limited dataset diversity |

| Rubayyi Alghamdi and Martine Bellaiche [13] | IoT23 | 2023 | LSTM | 98.20% | 92.8% | The paper introduces a deep ensemble IDS using Lambda architecture, with LSTM-based models for both binary and multi-class traffic classification. | • Computational complexity and resource usage • Increased training and inference time | • Complex algorithms and large volumes of data require more processing power, memory, and time to execute efficiently • Complexity of models and large dataset sizes, which require more time for both training and making predictions |

| Wa’ad H. Aljuaid and Sultan S. Alshamrani [14] | CSE-CIC-IDS2018 | 2024 | CNN | - | 98.67% | The research proposes a deep learning model using an advanced CNN architecture to improve cyberattack detection efficiency in cloud computing environments. | • Scalability • Lack of real-time evaluation | • Limited ability to process large or high-speed network traffic efficiently • Real-time testing requires complex infrastructure and continuous processing of live network data, which is challenging to set up and manage |

| Muhammad Wasim Nawaz et al. [15] | CICIDS2017 | 2023 | LSTM | - | 99% | The paper proposes an LSTM-based model that tackles class imbalance in network intrusion detection using oversampling and customized loss functions for multi-class classification. | • Class imbalance • Applicable solely to problems with multiple classes | • Lack of resampling causes bias toward majority classes, reducing detection of rare threats • Multi-class problems have uneven class distributions that cause learning bias, unlike simpler binary problems |

| Ahmed Abdelkhalek and Maggie Mashaly [16] | NSL-KDD | 2023 | CNN | 93.3% | 81.8% | The paper addresses class imbalance in the NSL-KDD dataset by combining resampling methods with a CNN model to enhance detection of minority-class attacks. | • Generalizability | • Limited dataset diversity |

| Zihan Wu et al. [17] | CIC-DDoS2019 | 2022 | RTIDS | - | 98.48% | The paper introduces RTIDS, a Transformer-based IDS that addresses data imbalance by using positional embeddings and a stacked encoder–decoder network to reduce dimensionality while preserving essential features. | • Computational overhead | • Handling complex models, large datasets, and techniques like resampling requires extensive processing power and time |

| Trieu Phong Nguyen et al. [18] | CAN HACKING | 2023 | Transformer | 99.42% | - | The paper details a Transformer-powered attention network that acts as an intrusion detection system, specifically built to monitor CAN bus communications inside vehicles. | • Generalizability • Real-time applicability | • Limited dataset diversity • Real-time testing requires complex infrastructure and continuous processing of live network data, which is challenging to set up and manage |

| Yi Liu & Lanjian Wu [19] | NSL-KDD | 2023 | Transformer | 88.7% | 84.1% | An enhanced Transformer-based model is proposed to improve intrusion detection by reducing training time, improving class separation, and boosting classification accuracy. | • Generalizability • Class imbalance | • Limited dataset diversity • Lack of resampling causes bias toward majority classes, reducing detection of rare threats |

| Zhenyue Long et al. [20] | CSE-CIC-IDS2018 | 2024 | Transformer | - | 93% | This paper presents a Transformer-based intrusion detection system for cloud environments, using attention mechanisms to enhance feature analysis and improve detection accuracy. | • Generalizability • Scalability | • Limited dataset diversity • Limited ability to process large or high-speed network traffic efficiently |

| Shu-Ming Tseng et al. [21] | CIC-IoT-2023 | 2024 | Transformer | 99.48% | 99.24% | The Transformer is employed to scrutinize network traffic characteristics, enabling the detection of anomalous activities and possible intrusions via both two-category and multiple-category classification. | • Class imbalance • Generalizability | • Lack of resampling causes bias toward majority classes, reducing detection of rare threats • Limited dataset diversity |

| Yi Liu & Lanjian Wu [19] | NSL-KDD | 2023 | PCA | 85.1% | 82% | An enhanced PCA model is proposed to improve intrusion detection by reducing training time, improving class separation, and boosting classification accuracy. | • Generalizability • Class Imbalance | • Limited dataset diversity • Lack of resampling causes bias toward majority classes, reducing detection of rare threats |

| Hesham Kamal and Maggie Mashaly [22] | NF-BoT-IoT-v2 | 2025 | Autoencoder | 99.95% | 98.08% | The study proposes an autoencoder framework for anomaly-based intrusion detection in IoT environments, enabling accurate binary and multi-class traffic classification. | • Generalizability • Scalability | • Limited dataset diversity • Limited ability to process large or high-speed network traffic efficiently |

| Hesham Kamal and Maggie Mashaly [23] | NF-UNSW-NB15-v2 | 2024 | Autoencoder | 99.66% | 95.57% | The research develops an autoencoder model to enhance intrusion detection by handling data imbalance and identifying new threats in cloud environments. | • Generalizability • Scalability | • Limited dataset diversity • Limited ability to process large or high-speed network traffic efficiently |

| Yi Liu & Lanjian Wu [19] | NSL-KDD | 2023 | Autoencoder and stacked Autoencoders | 87.8% and 88.7% | 83.5% and 84.1% | Enhanced autoencoder and stacked autoencoder (SAE) models are proposed to improve intrusion detection by reducing training time, improving class separation, and boosting classification accuracy. | • Generalizability • Class Imbalance | • Limited dataset diversity • Lack of resampling causes bias toward majority classes, reducing detection of rare threats |

| Hesham Kamal and Maggie Mashaly [22] | NF-BoT-IoT-v2 | 2025 | CNN-MLP | 99.96% | 98.11% | The study proposes a hybrid CNN-MLP framework for anomaly-based intrusion detection in IoT environments, enabling accurate binary and multi-class traffic classification. | • Generalizability • Scalability | • Limited dataset diversity • Limited ability to process large or high-speed network traffic efficiently |

| Hesham Kamal and Maggie Mashaly [24] | NF-BoT-IoT-v2 | 2025 | Transformer-DNN and Autoencoder-CNN | 99.98% and 99.98% | 97.90% and 97.95% | The study introduces two hybrid models: Autoencoder-CNN for addressing class imbalance and Transformer–DNN for extracting contextual features to improve classification. | • Scalability • Generalizability | • Limited ability to process large or high-speed network traffic efficiently • Limited dataset diversity |

| Yanfang Fu et al. [25] | NSL-KDD | 2022 | CNN and BiLSTMs | 90.73% | - | The paper introduces DLNID, a deep learning model that uses CNN, attention, and Bi-LSTM to enhance network intrusion detection accuracy and robustness. | • Lack of real-time evaluation | • Real-time testing requires complex infrastructure and continuous processing of live network data, which is challenging to set up and manage |

| Muhammad Sajid et al. [26] | CICIDS2017 | 2024 | CNN-LSTM | 97.90% | - | The paper presents a hybrid model using XGBoost, CNN, and LSTM to improve detection of evolving network attacks. | • Scalability • Class imbalance | • Limited ability to process large or high-speed network traffic efficiently • Lack of resampling causes bias toward majority classes, reducing detection of rare threats |

| Hesham Kamal and Maggie Mashaly [27] | CICIDS2017 | 2024 | Autoencoder-LSTM and Autoencoder-SVM | 99.6% and 95.01% | - | Two hybrid models, Autoencoder-LSTM and Autoencoder-SVM, were developed to improve IDS detection by enhancing feature extraction. | • Generalizability • Scalability • Applicable solely to problems with two distinct classes | • Limited dataset diversity • Limited ability to process large or high-speed network traffic efficiently • Multi-class problems have uneven class distributions that cause learning bias, unlike simpler binary problems |

| Muhammad Basit Umair et al. [28] | NSL-KDD | 2022 | Multilayer CNN-LSTM | - | 99.5% | The research presents an IDS using CNN and LSTM with a softmax classifier, evaluated on benchmark datasets and compared with a multilayer DNN. | • Generalizability | • Limited dataset diversity |

| Hesham Kamal and Maggie Mashaly [23] | CICIDS2017 | 2024 | Transformer–CNN | - | 99.13% | This research develops a Transformer–CNN model to enhance intrusion detection by handling data imbalance and identifying new threats in cloud environments. | • Generalizability • Scalability | • Limited dataset diversity • Limited ability to process large or high-speed network traffic efficiently |

| Rubayyi Alghamdi and Martine Bellaiche [29] | Combined Dataset (UNSW-NB15, Ton_IoT and IoT23) | 2022 | RF, DT, CNN and LSTM | 99.45% | 97.81% | This study develops an ensemble IDS for IoT by combining ML and DL models with automated selection to enhance attack detection. | • Class imbalance • Generalizability • Scalability | • Lack of resampling causes bias toward majority classes, reducing detection of rare threats • Limited dataset diversity • Limited ability to process large or high-speed network traffic efficiently |

| Challenge | Approaches to Overcome Challenges |

|---|---|

| Detecting a Wide Range of Attack Types | One of the key challenges in intrusion detection is handling the wide range of attack types, each exhibiting distinct patterns and behaviors. This study addresses the challenge by proposing a novel IDS approach that implements a combined dataset framework with enhanced preprocessing and feature engineering. Specifically, it performs vertical concatenation of the CSE-CIC-IDS2018 and CICIDS2017 datasets, enabling the detection of 21 unique classes, comprising one benign class and 20 distinct attack types, thereby improving the system’s ability to recognize and differentiate a broad spectrum of attacks. |

| High Performance | Achieving high performance in IDSs remains a key challenge due to the need for accurate classification, efficient feature representation, and robust handling of anomalies. To address this, we present a high-performance IDS based on an integrated PCA–Transformer model. In this configuration, PCA is used to extract and condense relevant features, reducing dimensionality while preserving critical information. The Transformer network then performs accurate classification on these refined features. Additionally, our data preprocessing pipeline includes a hybrid outlier detection strategy, combining local outlier factor (LOF) and Z-score methods to effectively identify anomalies and enhance the overall reliability of the system. |

| Class Imbalance | Class imbalance often results in biased predictions that favor majority classes, thereby reducing the model’s effectiveness in detecting rare but critical attacks. To mitigate this issue, different techniques were applied depending on the task. Class weights were incorporated during training to improve the representation of minority classes. Additionally, the ADASYN oversampling technique was used to generate synthetic samples for underrepresented classes, while the ENN method was employed to eliminate noisy majority class instances, thereby enhancing class boundary clarity. |

| Generalization | Effectively managing variations in network traffic across diverse environments remains a significant challenge for IDSs. To address this, the PCA–Transformer model was initially applied to the combined CSE-CIC-IDS2018 and CICIDS2017 datasets, and subsequently evaluated on CSE-CIC-IDS2018, CICIDS2017, and NF-BoT-IoT-v2 to validate its generalization capability across different traffic conditions. |

| Scalability | Efficiently processing and classifying high-volume network traffic remains a critical requirement for modern IDSs. To address this, the proposed PCA–Transformer model was evaluated on the combined CSE-CIC-IDS2018 and CICIDS2017 dataset, as well as on the individual datasets: CSE-CIC-IDS2018, CICIDS2017, and NF-BoT-IoT-v2. This demonstrates the model’s capability to handle large-scale, diverse, and heterogeneous traffic scenarios. Furthermore, its scalability is validated through inference time, training time, and memory consumption metrics, as presented in Section 4.7, confirming its suitability for real-time and resource-constrained intrusion detection environments, as discussed in Section 5.3. |
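The Class Imbalance row above mentions class weights applied during training. One common way to derive such weights is the inverse-frequency ("balanced") heuristic, sketched below. The formula, the function name, and the toy label counts are assumptions for illustration; the exact weighting scheme used in this work is not stated in the table.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Toy label distribution (hypothetical counts, not taken from the datasets).
labels = ["Benign"] * 90 + ["Bot"] * 9 + ["Heartbleed"] * 1
weights = balanced_class_weights(labels)

# With these weights every class contributes the same total weight,
# so the weighted sample count still equals the dataset size.
total_weight = sum(weights[label] for label in labels)
```

Rare classes receive proportionally larger weights, so a misclassified minority sample costs more in the training loss than a misclassified majority sample.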

| Label | Samples Before LOF and Z-Score | Samples After LOF and Z-Score |

|---|---|---|

| Benign | 39,000 | 3379 |

| DDoS attacks-LOIC-HTTP | 38,000 | 37,137 |

| DDOS attack-HOIC | 33,000 | 32,339 |

| DoS attacks-Hulk | 31,000 | 30,274 |

| Bot | 29,000 | 27,875 |

| Infiltration | 22,000 | 6071 |

| SSH-Bruteforce | 21,000 | 20,764 |

| DoS attacks-GoldenEye | 18,000 | 17,066 |

| DoS attacks-Slowloris | 9908 | 8453 |

| DDOS attack-LOIC-UDP | 1730 | 1649 |

| Brute Force-Web | 568 | 491 |

| Brute Force-XSS | 229 | 203 |

| SQL Injection | 85 | 46 |

| DoS attacks-SlowHTTPTest | 55 | 52 |

| FTP-BruteForce | 53 | 49 |

| Label | Samples Before LOF and Z-Score | Samples After LOF and Z-Score |

|---|---|---|

| Benign | 99,000 | 25,637 |

| PortScan | 12,000 | 11,956 |

| DDoS | 14,000 | 13,611 |

| DoS Hulk | 39,000 | 37,133 |

| DoS GoldenEye | 4000 | 3625 |

| FTP-Patator | 2000 | 1992 |

| SSH-Patator | 900 | 854 |

| DoS slowloris | 1700 | 1566 |

| DoS Slowhttptest | 1200 | 1136 |

| Bot | 700 | 656 |

| Web Attack-Brute Force | 450 | 443 |

| Web Attack-XSS | 350 | 341 |

| Infiltration | 36 | 24 |

| Web Attack-Sql Injection | 21 | 19 |

| Heartbleed | 11 | 11 |
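
The before/after counts above come from the combined LOF and Z-score step. The text does not state how the two detectors are merged or which thresholds are used, so the following NumPy sketch rests on assumptions: a sample is discarded when either detector flags it, the Z-score threshold is 3, the LOF threshold is 1.5, and `k = 5` neighbours are used. All function names are hypothetical.

```python
import numpy as np

def zscore_flags(X, threshold=3.0):
    """Flag rows where any feature is more than `threshold` std devs from its mean."""
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    sigma = np.where(sigma == 0, 1.0, sigma)  # constant columns never trigger
    return (np.abs((X - mu) / sigma) > threshold).any(axis=1)

def lof_scores(X, k=5):
    """Local outlier factor of every row (values well above 1 suggest outliers)."""
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    np.fill_diagonal(dist, np.inf)                  # exclude self-matches
    nn = np.argsort(dist, axis=1)[:, :k]            # indices of k nearest neighbours
    nn_dist = np.take_along_axis(dist, nn, axis=1)  # distances to those neighbours
    k_dist = nn_dist[:, -1]                         # k-distance of each point
    reach = np.maximum(nn_dist, k_dist[nn])         # reachability distances
    lrd = 1.0 / (reach.mean(axis=1) + 1e-12)        # local reachability density
    return lrd[nn].mean(axis=1) / lrd

def drop_outliers(X, z_threshold=3.0, lof_threshold=1.5, k=5):
    """Assumed combination rule: remove a row if either detector flags it."""
    flagged = zscore_flags(X, z_threshold) | (lof_scores(X, k) > lof_threshold)
    return X[~flagged], flagged
```

In practice the step would presumably be applied per class or per dataset with a scalable neighbour search; the dense distance matrix here is only meant to make the two criteria explicit.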

| Original Feature Name | Renamed Feature |

|---|---|

| Fwd Packets Length Total | Total Length of Fwd Packets |

| Bwd Packets Length Total | Total Length of Bwd Packets |

| Packet Length Min | Min Packet Length |

| Packet Length Max | Max Packet Length |

| Avg Packet Size | Average Packet Size |

| Init Fwd Win Bytes | Init_Win_bytes_forward |

| Init Bwd Win Bytes | Init_Win_bytes_backward |

| Fwd Act Data Packets | act_data_pkt_fwd |

| Fwd Seg Size Min | min_seg_size_forward |

| Unique Classes | Unique Classes | Unique Classes |

|---|---|---|

| Benign | DoS attacks-GoldenEye | FTP-BruteForce |

| DDoS attacks-LOIC-HTTP | DoS attacks-Slowloris | PortScan |

| DDOS attack-HOIC | DDOS attack-LOIC-UDP | DDoS |

| DoS attacks-Hulk | Brute Force-Web | FTP-Patator |

| Bot | Brute Force-XSS | SSH-Patator |

| Infiltration | SQL Injection | Web Attack-XSS |

| SSH-Bruteforce | DoS attacks-SlowHTTPTest | Heartbleed |

| Framework | Dataset | Feature | Imputation Technique | Value |

|---|---|---|---|---|

| Combined System | CSE-CIC-IDS2018 | Destination Port | Constant value imputation | 0 |

| Combined System | CSE-CIC-IDS2018 | Fwd Header Length.1 | Mean | 127.866965 |

| Combined System | CICIDS2017 | Protocol | Constant value imputation | 0 |
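
The renaming, vertical concatenation, and imputation steps summarized in the tables above can be sketched with pandas. The toy rows and values below are invented for illustration and are not taken from either dataset. The sketch also assumes that the original feature names belong to the CSE-CIC-IDS2018 frame and that the mean is computed over the values available after concatenation; neither detail is stated here.

```python
import pandas as pd

# Subset of the renaming table (original name -> renamed feature).
RENAME = {
    "Fwd Packets Length Total": "Total Length of Fwd Packets",
    "Init Fwd Win Bytes": "Init_Win_bytes_forward",
}

# Toy frames: each dataset lacks the columns that the imputation table fills.
ids2018 = pd.DataFrame({
    "Protocol": [6, 17],
    "Fwd Packets Length Total": [120.0, 48.0],
    "Label": ["Bot", "Benign"],
})
ids2017 = pd.DataFrame({
    "Destination Port": [80, 443],
    "Total Length of Fwd Packets": [300.0, 60.0],
    "Fwd Header Length.1": [40.0, 64.0],
    "Label": ["PortScan", "Heartbleed"],
})

# 1) Align column names, 2) stack rows; columns missing on one side become NaN.
ids2018 = ids2018.rename(columns=RENAME)
combined = pd.concat([ids2018, ids2017], axis=0, ignore_index=True)

# 3) Constant-value imputation for the columns absent from one dataset.
combined["Destination Port"] = combined["Destination Port"].fillna(0)
combined["Protocol"] = combined["Protocol"].fillna(0)

# 4) Mean imputation for the remaining gap.
header_mean = combined["Fwd Header Length.1"].mean()
combined["Fwd Header Length.1"] = combined["Fwd Header Length.1"].fillna(header_mean)
```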

| Unique Classes | Train | Test |

|---|---|---|

| Benign | 24,808 | 4208 |

| DDoS attacks-LOIC-HTTP | 31,583 | 5554 |

| DDOS attack-HOIC | 27,523 | 4816 |

| DoS attacks-Hulk | 57,162 | 10,245 |

| Bot | 24,203 | 4328 |

| Infiltration | 5195 | 900 |

| SSH-Bruteforce | 17,607 | 3157 |

| DoS attacks-GoldenEye | 17,663 | 3028 |

| DoS attacks-Slowloris | 8511 | 1508 |

| DDOS attack-LOIC-UDP | 1382 | 267 |

| Brute Force-Web | 808 | 126 |

| Brute Force-XSS | 165 | 38 |

| SQL Injection | 57 | 8 |

| DoS attacks-SlowHTTPTest | 1010 | 178 |

| FTP-BruteForce | 40 | 9 |

| PortScan | 10,155 | 1801 |

| DDoS | 11,549 | 2062 |

| FTP-Patator | 1689 | 303 |

| SSH-Patator | 715 | 139 |

| Web Attack-XSS | 290 | 51 |

| Heartbleed | 9 | 2 |

| Class | Weight |

|---|---|

| Normal | 4.87996 |

| Attack | 0.55708 |

| Class | Weight |

|---|---|

| Benign | 0.46476 |

| DDoS attacks-LOIC-HTTP | 0.36506 |

| DDOS attack-HOIC | 0.41891 |

| DoS attacks-Hulk | 0.20170 |

| Bot | 0.47638 |

| Infiltration | 2.21939 |

| SSH-Bruteforce | 0.65484 |

| DoS attacks-GoldenEye | 0.65276 |

| DoS attacks-Slowloris | 1.35468 |

| DDOS attack-LOIC-UDP | 8.34277 |

| Brute Force-Web | 14.26945 |

| Brute Force-XSS | 69.87706 |

| SQL Injection | 202.27569 |

| DoS attacks-SlowHTTPTest | 11.41556 |

| FTP-BruteForce | 288.24286 |

| PortScan | 1.13537 |

| DDoS | 0.99833 |

| FTP-Patator | 6.82636 |

| SSH-Patator | 16.12547 |

| Web Attack-XSS | 39.75764 |

| Heartbleed | 1281.07937 |
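
The weights listed above are consistent with the common "balanced" heuristic, w_c = N / (K · n_c), applied to the training counts of the combined dataset, where N is the number of training samples, K the number of classes, and n_c the count of class c. The sketch below recomputes them from the train column of the split table; the function name `balanced_weights` is hypothetical, and treating every non-benign class as "Attack" for the binary case is an assumption that happens to reproduce the binary weights.

```python
# Training counts per class, copied from the train/test split table.
TRAIN_COUNTS = {
    "Benign": 24808, "DDoS attacks-LOIC-HTTP": 31583, "DDOS attack-HOIC": 27523,
    "DoS attacks-Hulk": 57162, "Bot": 24203, "Infiltration": 5195,
    "SSH-Bruteforce": 17607, "DoS attacks-GoldenEye": 17663,
    "DoS attacks-Slowloris": 8511, "DDOS attack-LOIC-UDP": 1382,
    "Brute Force-Web": 808, "Brute Force-XSS": 165, "SQL Injection": 57,
    "DoS attacks-SlowHTTPTest": 1010, "FTP-BruteForce": 40, "PortScan": 10155,
    "DDoS": 11549, "FTP-Patator": 1689, "SSH-Patator": 715,
    "Web Attack-XSS": 290, "Heartbleed": 9,
}

def balanced_weights(counts):
    """w_c = N / (K * n_c): rarer classes receive proportionally larger weights."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}

multi_class_weights = balanced_weights(TRAIN_COUNTS)

# Binary view (assumption): Benign is "Normal", everything else is "Attack".
attack_total = sum(n for label, n in TRAIN_COUNTS.items() if label != "Benign")
binary_weights = balanced_weights(
    {"Normal": TRAIN_COUNTS["Benign"], "Attack": attack_total}
)
```

With these counts, Heartbleed (9 training samples) receives a weight above 1281, while DoS attacks-Hulk (57,162 samples) receives about 0.2, which matches the range shown in the table.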

| Block | Step | Output Size | Description |

|---|---|---|---|

| Input Block | Input features | Number of features | Raw input features, depending on the dataset used |

| PCA Block | Fit PCA | 59 | PCA model fitted with n_components = 0.999999999999 to retain essentially all variance |

| PCA Block | Transform train/test data | 59 | Both training and test sets projected into reduced feature space |

| Output Block | PCA-transformed features | 59 | Final feature set used as input to the Transformer model |
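
In scikit-learn, a fractional `n_components` asks PCA to keep the smallest number of components whose cumulative explained variance reaches that fraction. The NumPy sketch below mimics that selection rule with an SVD, fits on the training data only, and reuses the fitted mean and components for the test data, as in the PCA block above. It is an illustrative approximation, not the authors' code; `fit_pca` and `apply_pca` are hypothetical names.

```python
import numpy as np

def fit_pca(X_train, variance_to_keep=0.999999999999):
    """Return the training mean and the leading principal axes.

    Keeps the smallest number of components whose cumulative explained
    variance ratio reaches `variance_to_keep`.
    """
    mean = X_train.mean(axis=0)
    _, s, vt = np.linalg.svd(X_train - mean, full_matrices=False)
    ratio = s ** 2 / np.sum(s ** 2)          # explained variance ratio per axis
    cumulative = np.cumsum(ratio)
    k = int(np.searchsorted(cumulative, variance_to_keep)) + 1
    k = min(k, len(s))                        # guard against rounding at the end
    return mean, vt[:k]

def apply_pca(X, mean, components):
    """Project data with the mean and axes learned on the training set."""
    return (X - mean) @ components.T
```

For example, six columns that are exact duplicates of three independent columns carry only three directions of variance, so the rule keeps three components even with a threshold this close to 1.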

| Block | Layer Type | Output Size | Activation Function | Parameters | Description |

|---|---|---|---|---|---|

| Input block | Input layer | PCA Output (59 Features) | - | - | Takes the PCA output as input. |

| Transformer block | Multi-head attention | - | - | num_heads = 8, key_dim = 64 | Learns intricate patterns from the input data. |

| Transformer block | Layer Normalization | - | - | epsilon = 1 × 10⁻⁶ | Normalizes the attention layer’s output. |

| Transformer block | Add (Residual Connection) | - | - | - | Merges the input data with the attention output to ensure stability. |

| Feed Forward block | Dense layer | 128 | ReLU | units = 128, activation = “relu” | Carries out a dense mapping followed by ReLU activation. |

| Feed Forward block | Dense layer | 128 | - | units = 128 | Implements another dense layer without activation. |

| Feed Forward block | Add (Residual Connection) | - | - | - | Integrates the feed-forward output with the prior block’s output. |

| Feed Forward block | Layer Normalization | - | - | epsilon = 1 × 10⁻⁶ | Ensures stability by normalizing the combined output. |

| Output block | Output layer | 1 (Binary) | Sigmoid | - | Single unit for Binary classification |

| Output block | Output layer | Number of Classes (Multi-Class) | Softmax | - | Units (number of classes) for Multi-class classification |

| Parameter | Binary Classifier | Multi-Class Classifier |

|---|---|---|

| Batch size | 128 | 128 |

| Learning rate | Scheduled: Initial = 0.001, Factor = 0.5, Min = 1 × 10⁻⁵ (ReduceLROnPlateau) | Scheduled: Initial = 0.001, Factor = 0.5, Min = 1 × 10⁻⁵ (ReduceLROnPlateau) |

| Optimizer | Adam | Adam |

| Loss function | Binary cross-entropy | Categorical cross-entropy |

| Metric | Accuracy | Accuracy |
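
The learning-rate row describes a reduce-on-plateau schedule: the rate starts at 0.001, is halved when the monitored loss stops improving, and never drops below 1 × 10⁻⁵. The pure-Python sketch below simulates that behaviour in simplified form. The patience value is not given in the table, so `patience = 3` is an assumption, and details of the Keras callback such as `min_delta` and `cooldown` are deliberately left out.

```python
def simulate_reduce_on_plateau(val_losses, initial_lr=1e-3, factor=0.5,
                               min_lr=1e-5, patience=3):
    """Return the learning rate in effect after each epoch.

    Simplified model: the rate is multiplied by `factor` after `patience`
    consecutive epochs without a new best validation loss, floored at `min_lr`.
    """
    lr = initial_lr
    best = float("inf")
    stalled = 0
    history = []
    for loss in val_losses:
        if loss < best:
            best = loss
            stalled = 0
        else:
            stalled += 1
            if stalled >= patience:
                lr = max(lr * factor, min_lr)
                stalled = 0
        history.append(lr)
    return history

# Two improving epochs followed by a long plateau.
lr_history = simulate_reduce_on_plateau([1.0, 0.9] + [0.9] * 30)
```

Starting from 0.001 with a factor of 0.5, the floor of 1 × 10⁻⁵ is reached at the seventh reduction, since six halvings give about 1.56 × 10⁻⁵.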

| Dataset | Model | Accuracy | Precision | Recall | F-Score |

|---|---|---|---|---|---|

| NF-BoT-IoT-v2 | CNN | 99.73% | 99.73% | 99.73% | 99.73% |

| NF-BoT-IoT-v2 | Autoencoder | 99.94% | 99.94% | 99.94% | 99.94% |

| NF-BoT-IoT-v2 | MLP | 99.81% | 99.81% | 99.81% | 99.81% |

| NF-BoT-IoT-v2 | Transformer | 99.92% | 99.92% | 99.92% | 99.92% |

| NF-BoT-IoT-v2 | PCA–Transformer | 99.98% | 99.98% | 99.98% | 99.98% |

| CSE-CIC-IDS2018 | CNN | 99.61% | 99.68% | 99.61% | 99.63% |

| CSE-CIC-IDS2018 | Autoencoder | 99.64% | 99.70% | 99.64% | 99.65% |

| CSE-CIC-IDS2018 | MLP | 99.62% | 99.69% | 99.62% | 99.64% |

| CSE-CIC-IDS2018 | Transformer | 99.64% | 99.70% | 99.64% | 99.65% |

| CSE-CIC-IDS2018 | PCA–Transformer | 99.66% | 99.71% | 99.66% | 99.67% |

| CICIDS2017 | CNN | 98.58% | 98.57% | 98.58% | 98.57% |

| CICIDS2017 | Autoencoder | 99.70% | 99.70% | 99.70% | 99.70% |

| CICIDS2017 | MLP | 99.59% | 99.59% | 99.59% | 99.59% |

| CICIDS2017 | Transformer | 99.72% | 99.72% | 99.72% | 99.71% |

| CICIDS2017 | PCA–Transformer | 99.75% | 99.75% | 99.75% | 99.75% |

| Combined Dataset | CNN | 99.76% | 99.76% | 99.76% | 99.76% |

| Combined Dataset | Autoencoder | 99.77% | 99.78% | 99.77% | 99.77% |

| Combined Dataset | MLP | 99.75% | 99.76% | 99.75% | 99.75% |

| Combined Dataset | Transformer | 99.77% | 99.78% | 99.77% | 99.77% |

| Combined Dataset | PCA–Transformer | 99.80% | 99.81% | 99.80% | 99.80% |

| Dataset | Model | Accuracy | Precision | Recall | F-Score |

|---|---|---|---|---|---|

| NF-BoT-IoT-v2 | CNN | 97.75% | 97.82% | 97.75% | 97.75% |

| NF-BoT-IoT-v2 | Autoencoder | 97.98% | 98.02% | 97.98% | 97.98% |

| NF-BoT-IoT-v2 | MLP | 97.82% | 97.89% | 97.82% | 97.82% |

| NF-BoT-IoT-v2 | Transformer | 97.97% | 98.02% | 97.97% | 97.97% |

| NF-BoT-IoT-v2 | PCA–Transformer | 98.01% | 98.06% | 98.01% | 98.01% |

| CSE-CIC-IDS2018 | CNN | 99.52% | 99.64% | 99.52% | 99.55% |

| CSE-CIC-IDS2018 | Autoencoder | 99.53% | 99.65% | 99.53% | 99.57% |

| CSE-CIC-IDS2018 | MLP | 99.56% | 99.67% | 99.56% | 99.59% |

| CSE-CIC-IDS2018 | Transformer | 99.57% | 99.66% | 99.57% | 99.60% |

| CSE-CIC-IDS2018 | PCA–Transformer | 99.59% | 99.68% | 99.59% | 99.62% |

| CICIDS2017 | CNN | 99.04% | 99.12% | 99.04% | 99.07% |

| CICIDS2017 | Autoencoder | 99.37% | 99.64% | 99.37% | 99.29% |

| CICIDS2017 | MLP | 98.65% | 98.79% | 98.65% | 98.65% |

| CICIDS2017 | Transformer | 99.45% | 99.69% | 99.45% | 99.40% |

| CICIDS2017 | PCA–Transformer | 99.51% | 99.57% | 99.51% | 99.52% |

| Combined Dataset | CNN | 99.03% | 99.30% | 99.03% | 99.09% |

| Combined Dataset | Autoencoder | 99.22% | 99.41% | 99.22% | 99.25% |

| Combined Dataset | MLP | 99.01% | 99.34% | 99.01% | 99.09% |

| Combined Dataset | Transformer | 99.23% | 99.48% | 99.23% | 99.29% |

| Combined Dataset | PCA–Transformer | 99.28% | 99.51% | 99.28% | 99.33% |
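
In both results tables the Recall column equals the Accuracy column in every row. That is what support-weighted averaging produces, because the weighted mean of per-class recall reduces to the fraction of correctly classified samples. Assuming that averaging scheme (the tables do not name it), the aggregate metrics can be computed from a confusion matrix as in the pure-Python sketch below; `weighted_metrics` is a hypothetical helper.

```python
def weighted_metrics(cm):
    """Accuracy and support-weighted precision, recall, and F-score.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    precision = recall = f_score = 0.0
    for i in range(n):
        support = sum(cm[i])                        # true samples of class i
        predicted = sum(cm[r][i] for r in range(n)) # samples predicted as class i
        p = cm[i][i] / predicted if predicted else 0.0
        r = cm[i][i] / support if support else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        weight = support / total
        precision += weight * p
        recall += weight * r
        f_score += weight * f
    return accuracy, precision, recall, f_score

# Toy three-class confusion matrix with one dominant class.
demo_cm = [[90, 5, 5],
           [2, 8, 0],
           [1, 0, 4]]
acc, prec, rec, f1 = weighted_metrics(demo_cm)
```

Because the weighted figures are dominated by the large classes, they can stay above 99% even when small classes are detected poorly, which is why per-class results are worth reading alongside them.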

| Classification Type | Dataset | Model | Accuracy | Precision | Recall | F-Score |

|---|---|---|---|---|---|---|

| Binary | NF-BoT-IoT-v2 | PCA | 97.80% | 97.92% | 97.80% | 97.84% |

| Binary | NF-BoT-IoT-v2 | Transformer | 99.92% | 99.92% | 99.92% | 99.92% |

| Binary | NF-BoT-IoT-v2 | PCA–Transformer | 99.98% | 99.98% | 99.98% | 99.98% |

| Binary | CSE-CIC-IDS2018 | PCA | 98.39% | 99.15% | 98.39% | 98.63% |

| Binary | CSE-CIC-IDS2018 | Transformer | 99.64% | 99.70% | 99.64% | 99.65% |

| Binary | CSE-CIC-IDS2018 | PCA–Transformer | 99.66% | 99.71% | 99.66% | 99.67% |

| Binary | CICIDS2017 | PCA | 98.30% | 98.30% | 98.30% | 98.30% |

| Binary | CICIDS2017 | Transformer | 99.72% | 99.72% | 99.72% | 99.71% |

| Binary | CICIDS2017 | PCA–Transformer | 99.75% | 99.75% | 99.75% | 99.75% |

| Binary | Combined Dataset | PCA | 98.61% | 98.78% | 98.61% | 98.65% |

| Binary | Combined Dataset | Transformer | 99.77% | 99.78% | 99.77% | 99.77% |

| Binary | Combined Dataset | PCA–Transformer | 99.80% | 99.81% | 99.80% | 99.80% |

| Multi-Class | NF-BoT-IoT-v2 | PCA | 94.22% | 94.96% | 94.22% | 94.51% |

| Multi-Class | NF-BoT-IoT-v2 | Transformer | 97.97% | 98.02% | 97.97% | 97.97% |

| Multi-Class | NF-BoT-IoT-v2 | PCA–Transformer | 98.01% | 98.06% | 98.01% | 98.01% |

| Multi-Class | CSE-CIC-IDS2018 | PCA | 97.06% | 98.30% | 97.06% | 97.57% |

| Multi-Class | CSE-CIC-IDS2018 | Transformer | 99.57% | 99.66% | 99.57% | 99.60% |

| Multi-Class | CSE-CIC-IDS2018 | PCA–Transformer | 99.59% | 99.68% | 99.59% | 99.62% |

| Multi-Class | CICIDS2017 | PCA | 97.21% | 97.93% | 97.21% | 97.35% |

| Multi-Class | CICIDS2017 | Transformer | 99.45% | 99.69% | 99.45% | 99.40% |

| Multi-Class | CICIDS2017 | PCA–Transformer | 99.51% | 99.57% | 99.51% | 99.52% |

| Multi-Class | Combined Dataset | PCA | 96.73% | 97.58% | 96.73% | 97.02% |

| Multi-Class | Combined Dataset | Transformer | 99.23% | 99.48% | 99.23% | 99.29% |

| Multi-Class | Combined Dataset | PCA–Transformer | 99.28% | 99.51% | 99.28% | 99.33% |

| Dataset | Classification Type | Inference Time Per Batch (128 Samples) (Seconds) | Inference Time Per Sample (milliseconds) |

|---|---|---|---|

| NF-BoT-IoT-v2 | Binary | 0.083739 | 0.654211 |

| NF-BoT-IoT-v2 | Multi-class | 0.084465 | 0.659883 |

| CSE-CIC-IDS2018 | Binary | 0.077026 | 0.601766 |

| CSE-CIC-IDS2018 | Multi-class | 0.081579 | 0.637336 |

| CICIDS2017 | Binary | 0.080222 | 0.626734 |

| CICIDS2017 | Multi-class | 0.080888 | 0.631938 |

| Combined Dataset | Binary | 0.080309 | 0.627414 |

| Combined Dataset | Multi-class | 0.082079 | 0.641242 |

| Dataset | Classification Type | Training Time Per Batch (128 Samples) (Seconds) | Training Time Per Sample (milliseconds) |

|---|---|---|---|

| NF-BoT-IoT-v2 | Binary | 0.083813 | 0.654789 |

| NF-BoT-IoT-v2 | Multi-class | 0.085264 | 0.666125 |

| CSE-CIC-IDS2018 | Binary | 0.078359 | 0.612180 |

| CSE-CIC-IDS2018 | Multi-class | 0.081682 | 0.638141 |

| CICIDS2017 | Binary | 0.083152 | 0.649625 |

| CICIDS2017 | Multi-class | 0.083170 | 0.649766 |

| Combined Dataset | Binary | 0.080862 | 0.631734 |

| Combined Dataset | Multi-class | 0.082748 | 0.646469 |
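
The per-sample columns in the inference and training time tables follow directly from the per-batch columns: the batch time in seconds is divided by the batch size of 128 and converted to milliseconds. The short sketch below reproduces a few table entries and also derives an approximate throughput figure, which is not reported in the tables and is shown only as a derived quantity. The helper names are hypothetical.

```python
BATCH_SIZE = 128  # batch size stated in the table headers

def per_sample_ms(batch_seconds, batch_size=BATCH_SIZE):
    """Convert a per-batch time in seconds to a per-sample time in milliseconds."""
    return batch_seconds / batch_size * 1000.0

def samples_per_second(batch_seconds, batch_size=BATCH_SIZE):
    """Approximate throughput implied by a per-batch time."""
    return batch_size / batch_seconds

# Inference, NF-BoT-IoT-v2, binary: 0.083739 s per batch.
nf_binary_ms = round(per_sample_ms(0.083739), 6)
# Inference, combined dataset, multi-class: 0.082079 s per batch.
combined_multi_ms = round(per_sample_ms(0.082079), 6)
# Training, NF-BoT-IoT-v2, binary: 0.083813 s per batch.
nf_binary_train_ms = round(per_sample_ms(0.083813), 6)
```

At roughly 0.08 s per batch of 128 samples, the implied throughput is on the order of 1500 to 1700 samples per second across the configurations listed.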

| Dataset | Classification Type | Memory Consumption (MB) Per Batch (128 Samples) |

|---|---|---|

| NF-BoT-IoT-v2 | Binary | 0.25 |

| NF-BoT-IoT-v2 | Multi-class | 0.29 |

| CSE-CIC-IDS2018 | Binary | 0.34 |

| CSE-CIC-IDS2018 | Multi-class | 0.74 |

| CICIDS2017 | Binary | 0.34 |

| CICIDS2017 | Multi-class | 0.73 |

| Combined Dataset | Binary | 0.35 |

| Combined Dataset | Multi-class | 0.93 |

| Classification Type | Model | Accuracy | Inference Time per Batch (128 Samples) (Seconds) | Training Time per Batch (128 Samples) (Seconds) | Memory Consumption (MB) per Batch (128 Samples) |

|---|---|---|---|---|---|

| Binary | Linformer | 99.75% | 0.073526 | 0.077988 | 0.27 |

| Binary | Performer | 99.76% | 0.075856 | 0.079189 | 0.25 |

| Binary | Transformer | 99.77% | 0.077694 | 0.079305 | 0.24 |

| Multi-Class | Linformer | 95.31% | 0.078310 | 0.082654 | 0.29 |

| Multi-Class | Performer | 96.06% | 0.078941 | 0.082798 | 0.27 |

| Multi-Class | Transformer | 99.23% | 0.081036 | 0.083193 | 0.92 |

| Class | Prediction Probability |

|---|---|

| Normal | 1.00 |

| Attack | 0.00 |

| Feature | Normalized Value | LIME Contribution Weight |

|---|---|---|

| ACK Flag Count | 0.000000 | 0.386353 |

| PSH Flag Count | 0.000000 | 0.242650 |

| Fwd PSH Flags | 0.000000 | 0.230619 |

| FIN Flag Count | 0.000000 | 0.221099 |

| SYN Flag Count | 0.000000 | 0.202133 |

| ECE Flag Count | 0.000000 | 0.130902 |

| Active Std | 0.000000 | −0.119029 |

| RST Flag Count | 0.000000 | 0.084017 |

| Bwd IAT Max | 0.000000 | 0.058481 |

| Fwd Packets/s | 0.006452 | −0.053315 |

| Class | Prediction Probability |

|---|---|

| Benign | 0.00 |

| DDoS attacks-LOIC-HTTP | 0.00 |

| DDOS attack-HOIC | 0.00 |

| DoS attacks-Hulk | 0.00 |

| Bot | 0.00 |

| Infiltration | 0.00 |

| SSH-Bruteforce | 0.00 |

| DoS attacks-GoldenEye | 0.00 |

| DoS attacks-Slowloris | 0.00 |

| DDOS attack-LOIC-UDP | 0.00 |

| Brute Force-Web | 0.00 |

| Brute Force-XSS | 0.00 |

| SQL Injection | 0.00 |

| DoS attacks-SlowHTTPTest | 0.00 |

| FTP-BruteForce | 0.00 |

| PortScan | 0.00 |

| DDoS | 0.00 |

| FTP-Patator | 0.00 |

| SSH-Patator | 0.00 |

| Web Attack-XSS | 0.00 |

| Heartbleed | 1.00 |

| Feature | Normalized Value | LIME Contribution Weight |

|---|---|---|

| Fwd Header Length.1 | 0.997014 | 0.002998 |

| Fwd Packet Length Max | 1.000000 | 0.001736 |

| Total Backward Packets | 0.458619 | 0.001727 |

| Subflow Bwd Bytes | 0.999816 | 0.001565 |

| Total Length of Bwd Packets | 0.999816 | 0.001556 |

| Subflow Fwd Packets | 0.011287 | 0.001545 |

| Avg Bwd Segment Size | 0.898546 | 0.001500 |

| act_data_pkt_fwd | 0.000484 | 0.001499 |

| Bwd Packet Length Mean | 0.898546 | 0.001491 |

| Dataset | Class | Accuracy | Precision | Recall | F-Score |

|---|---|---|---|---|---|

| NF-BoT-IoT-v2 | Normal | 99.92% | 99.84% | 99.92% | 99.88% |

| NF-BoT-IoT-v2 | Attack | 99.98% | 99.99% | 99.98% | 99.99% |

| CSE-CIC-IDS2018 | Normal | 99.60% | 84.40% | 99.60% | 91.37% |

| CSE-CIC-IDS2018 | Attack | 99.66% | 99.99% | 99.66% | 99.83% |

| CICIDS2017 | Normal | 99.20% | 99.96% | 99.20% | 99.57% |

| CICIDS2017 | Attack | 99.98% | 99.66% | 99.98% | 99.82% |

| Combined Dataset | Normal | 100% | 98.02% | 100% | 99% |

| Combined Dataset | Attack | 99.78% | 100% | 99.78% | 99.89% |

| Class | Accuracy | Precision | Recall | F-Score |

|---|---|---|---|---|

| Benign | 99.92% | 99.05% | 99.92% | 99.48% |

| Reconnaissance | 94.50% | 99.42% | 94.50% | 96.90% |

| DDoS | 99.21% | 99.23% | 99.21% | 99.22% |

| DoS | 98.71% | 95.17% | 98.71% | 96.91% |

| Theft | 100% | 100% | 100% | 100% |

| Class | Accuracy | Precision | Recall | F-Score |

|---|---|---|---|---|

| Benign | 95.05% | 93.20% | 95.05% | 94.12% |

| DDoS attacks-LOIC-HTTP | 99.98% | 100% | 99.98% | 99.99% |

| DDOS attack-HOIC | 100% | 100% | 100% | 100% |

| DoS attacks-Hulk | 100% | 100% | 100% | 100% |

| Bot | 99.44% | 100% | 99.44% | 99.72% |

| Infiltration | 96.06% | 97.05% | 96.06% | 96.55% |

| SSH-Bruteforce | 100% | 100% | 100% | 100% |

| DoS attacks-GoldenEye | 100% | 100% | 100% | 100% |

| DoS attacks-Slowloris | 100% | 100% | 100% | 100% |

| DDOS attack-LOIC-UDP | 100% | 100% | 100% | 100% |

| Brute Force-Web | 72.37% | 100% | 72.37% | 83.97% |

| Brute Force-XSS | 96.30% | 39.39% | 96.30% | 55.91% |

| SQL Injection | 100% | 44.44% | 100% | 61.54% |

| DoS attacks-SlowHTTPTest | 44.44% | 50% | 44.44% | 47.06% |

| FTP-BruteForce | 66.67% | 61.54% | 66.67% | 64% |

| Class | Accuracy | Precision | Recall | F-score |

|---|---|---|---|---|

| Benign | 100% | 100% | 100% | 100% |

| PortScan | 99.89% | 100% | 99.89% | 99.94% |

| DDoS | 99.85% | 99.90% | 99.85% | 99.88% |

| DoS Hulk | 99.91% | 99.96% | 99.91% | 99.94% |

| DoS GoldenEye | 99.82% | 99.10% | 99.82% | 99.46% |

| FTP-Patator | 99.34% | 99.67% | 99.34% | 99.50% |

| SSH-Patator | 91.34% | 100% | 91.34% | 95.47% |

| DoS slowloris | 99.22% | 97.68% | 99.22% | 98.44% |

| DoS Slowhttptest | 100% | 98.77% | 100% | 99.38% |

| Bot | 98.99% | 98% | 98.99% | 98.49% |

| Web Attack-Brute Force | 60% | 72.41% | 60% | 65.62% |

| Web Attack-XSS | 71.70% | 60.32% | 71.70% | 65.52% |

| Infiltration | 33.33% | 100% | 33.33% | 50% |

| Web Attack-Sql Injection | 100% | 29.41% | 100% | 45.45% |

| Heartbleed | 50% | 100% | 50% | 66.67% |

| Class | Accuracy | Precision | Recall | F-score |

|---|---|---|---|---|

| Benign | 96.98% | 99.98% | 96.98% | 98.46% |

| DDoS attacks-LOIC-HTTP | 99.42% | 100% | 99.42% | 99.71% |

| DDOS attack-HOIC | 100% | 100% | 100% | 100% |

| DoS attacks-Hulk | 99.90% | 99.95% | 99.90% | 99.93% |

| Bot | 99.33% | 99.93% | 99.33% | 99.63% |

| Infiltration | 100% | 87.89% | 100% | 93.56% |

| SSH-Bruteforce | 100% | 100% | 100% | 100% |

| DoS attacks-GoldenEye | 99.54% | 99.77% | 99.54% | 99.65% |

| DoS attacks-Slowloris | 99.87% | 99.67% | 99.87% | 99.77% |

| DDOS attack-LOIC-UDP | 100% | 98.52% | 100% | 99.26% |

| Brute Force-Web | 41.27% | 94.55% | 41.27% | 57.46% |

| Brute Force-XSS | 94.74% | 33.64% | 94.74% | 49.66% |

| SQL Injection | 50% | 20% | 50% | 28.57% |

| DoS attacks-SlowHTTPTest | 95.51% | 99.42% | 95.51% | 97.42% |

| FTP-BruteForce | 100% | 60% | 100% | 75% |

| PortScan | 99.83% | 100% | 99.83% | 99.92% |

| DDoS | 99.95% | 100% | 99.95% | 99.98% |

| FTP-Patator | 99.67% | 99.34% | 99.67% | 99.51% |

| SSH-Patator | 98.56% | 98.56% | 98.56% | 98.56% |

| Web Attack-XSS | 100% | 46.36% | 100% | 63.35% |

| Heartbleed | 100% | 100% | 100% | 100% |
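
The F-score column in the per-class tables is the harmonic mean of the Precision and Recall columns, F = 2PR / (P + R). The pure-Python check below recomputes a few rows of the combined-dataset table from their reported precision and recall; `f_score` is a hypothetical helper that works directly on percentages.

```python
def f_score(precision_pct, recall_pct):
    """Harmonic mean of precision and recall, both given in percent."""
    if precision_pct + recall_pct == 0:
        return 0.0
    return 2 * precision_pct * recall_pct / (precision_pct + recall_pct)

# (precision %, recall %) pairs copied from the combined-dataset table.
rows = {
    "Brute Force-Web": (94.55, 41.27),
    "SQL Injection": (20.0, 50.0),
    "FTP-BruteForce": (60.0, 100.0),
    "Web Attack-XSS": (46.36, 100.0),
}
recomputed = {label: round(f_score(p, r), 2) for label, (p, r) in rows.items()}
```

The harmonic mean is pulled toward the smaller of the two values, which is why classes with perfect recall but low precision, such as Web Attack-XSS, still end up with a modest F-score.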

| Actual Class | Predicted Class | Probabilities |

|---|---|---|

| Bot | Bot | Bot: 1.0000, Others: 0.0000 |

| DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP: 1.0000, Others: 0.0000 |

| SSH-Bruteforce | SSH-Bruteforce | SSH-Bruteforce: 1.0000, Others: 0.0000 |

| DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP: 1.0000, Others: 0.0000 |

| DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP: 1.0000, Others: 0.0000 |

| DDOS attack-HOIC | DDOS attack-HOIC | DDOS attack-HOIC: 1.0000, Others: 0.0000 |

| DDOS attack-HOIC | DDOS attack-HOIC | DDOS attack-HOIC: 1.0000, Others: 0.0000 |

| DoS attacks-GoldenEye | DoS attacks-GoldenEye | DoS attacks-GoldenEye: 1.0000, Others: 0.0000 |

| Bot | Bot | Bot: 1.0000, Others: 0.0000 |

| DoS attacks-GoldenEye | DoS attacks-GoldenEye | DoS attacks-GoldenEye: 1.0000, Others: 0.0000 |

| DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP: 1.0000, Others: 0.0000 |

| DoS attacks-Hulk | DoS attacks-Hulk | DoS attacks-Hulk: 0.9926, DoS attacks-GoldenEye: 0.0074, Others: 0.0000 |

| Bot | Bot | Bot: 1.0000, Others: 0.0000 |

| DDOS attack-HOIC | DDOS attack-HOIC | DDOS attack-HOIC: 1.0000, Others: 0.0000 |

| DoS attacks-Hulk | DoS attacks-Hulk | DoS attacks-Hulk: 0.9921, DoS attacks-GoldenEye: 0.0079, Others: 0.0000 |

| DoS attacks-GoldenEye | DoS attacks-GoldenEye | DoS attacks-GoldenEye: 0.9996, DoS attacks-Hulk: 0.0003, DDoS attacks-LOIC-HTTP: 0.0001, Others: 0.0000 |

| DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP | DDoS attacks-LOIC-HTTP: 1.0000, Others: 0.0000 |

| SSH-Bruteforce | SSH-Bruteforce | SSH-Bruteforce: 1.0000, Others: 0.0000 |

| DDOS attack-HOIC | DDOS attack-HOIC | DDOS attack-HOIC: 1.0000, Others: 0.0000 |

| DoS attacks-Hulk | DoS attacks-Hulk | DoS attacks-Hulk: 0.9934, DoS attacks-GoldenEye: 0.0066, Others: 0.0000 |

| Heartbleed | Heartbleed | Heartbleed: 1.0000, Others: 0.0000 |

| Heartbleed | Heartbleed | Heartbleed: 1.0000, Others: 0.0000 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kamal, H.; Mashaly, M. Combined Dataset System Based on a Hybrid PCA–Transformer Model for Effective Intrusion Detection Systems. AI 2025, 6, 168. https://doi.org/10.3390/ai6080168
