1. Introduction

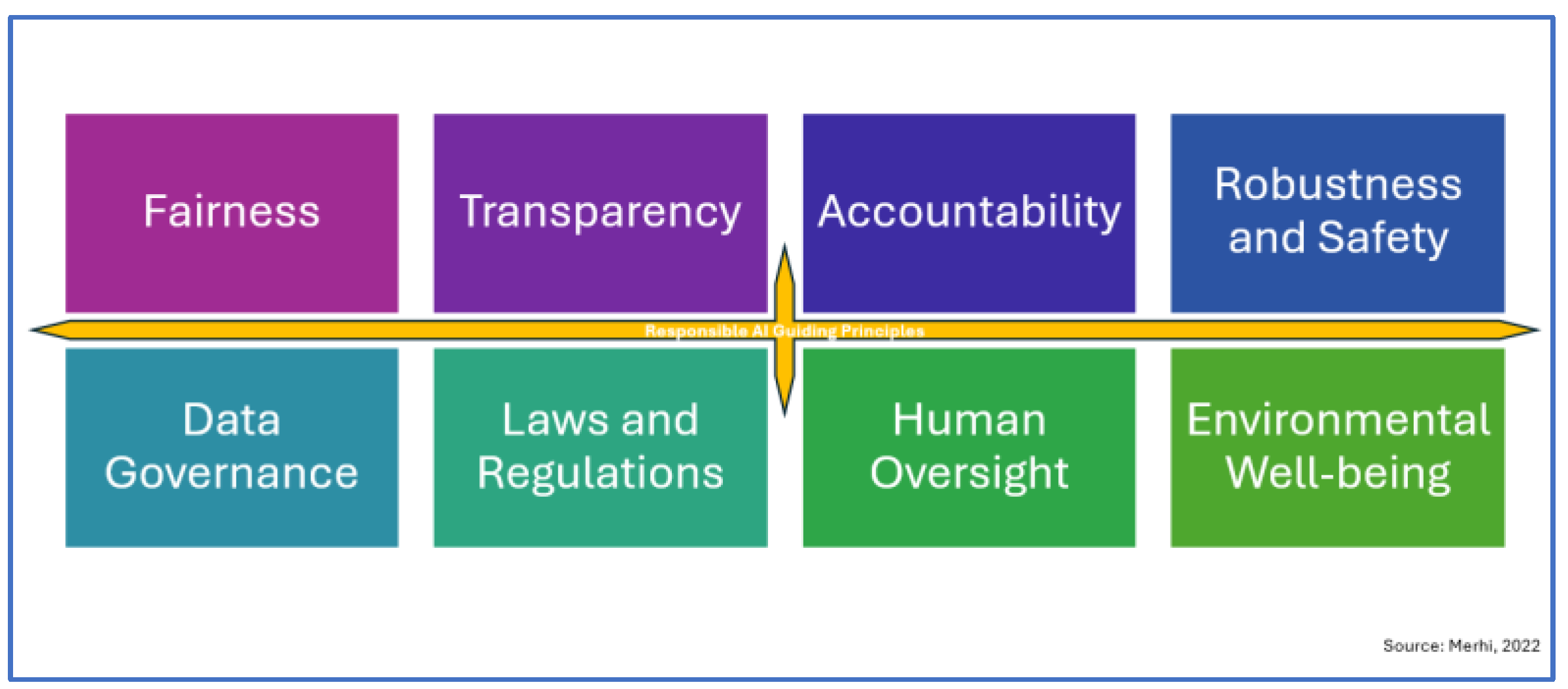

Artificial Intelligence (AI) and Generative AI (GenAI) promise transformative benefits by accelerating decision-making, automating routine tasks, and unlocking novel products and services. Yet, their rapid, pervasive adoption has outpaced our understanding of how to govern them responsibly. High-profile mishaps and regulatory scrutiny have underscored a widening “theory-to-practice” gap in Responsible AI (RAI): while scholars and industry bodies articulate principles of fairness, transparency, and accountability [1, 2], detailed guidance on operationalising such ideals remains thin on the ground, particularly in highly regulated sectors such as that of financial services.

This paper seeks to address this gap by examining two intertwined research questions: (a) What non-technical barriers inhibit the practical translation of RAI principles into routine governance and operations within financial services organisations? and (b) How can Corporate Digital Responsibility (CDR) frameworks be leveraged to bridge the distance between high-level RAI guidelines and the real-world challenges faced by practitioners in financial institutions? To address these questions, the paper’s specific objectives are (a) to conduct semi-structured interviews with AI/GenAI stakeholders across European financial institutions to elicit and map non-technical obstacles that reflect areas of alignment, tension, or omission between theoretical prescriptions and practitioners’ lived experiences, (b) to explore whether a CDR orientation (as a set of core values, expected behaviours and ethical guidelines) can inform actionable governance mechanisms, decision-making processes, and accountability structures for AI/GenAI deployment, and (c) to add to academic discourse and practitioner debates by laying the ground for further research on the identified barriers, cross-industry comparisons, and longitudinal assessments of AI governance efficacy, along with targeted strategies to mitigate barriers and accelerate responsible adoption of AI/GenAI.

Drawing from 15 in-depth interviews with AI executives, risk officers, and CDR practitioners, this is the first study that attempts to systematically identify categories of organisational, cultural, and human-centric barriers that impede RAI implementation. We then examine how a CDR orientation—rooted in ethical data stewardship, stakeholder engagement, and sustainability—can help translate high-level RAI principles into actionable governance practices. In this respect, our analysis reveals three core findings: (1) practitioners perceive GenAI risks as more urgent than traditional AI challenges; (2) nine distinct non-technical barriers—from role ambiguity and legacy processes to human-factor and budget constraints—undermine RAI adoption; and (3) CDR practitioners adopt a more human-centric, consensus-driven approach to RAI, emphasising shared values such as “no margin for error” and placing trust and purpose at the centre of governance.

By integrating such insights, our contributions are threefold. First, we extend the Responsible AI literature [3, 4] by empirically validating and richly describing organisational and cultural impediments that transcend technical concerns. Second, we advance CDR scholarship [5, 6] by demonstrating its relevance to AI governance and outlining how CDR dimensions could be mapped onto RAI mechanisms. Third, we offer a practical taxonomy of nine non-technical barriers, equipping practitioners with a diagnostic lens for designing holistic governance protocols and laying the groundwork for future quantitative validation and cross-industry comparison.

The remainder of the paper is structured as follows.

Section 2 reviews the literature on Responsible AI and Corporate Digital Responsibility, highlighting the distance between high-level principles and practitioner needs.

Section 3 outlines the qualitative methodology and sampling approach.

Section 4 presents our thematic analysis findings on non-technical barriers and the mediating role of CDR.

Section 5 discusses implications for theory and practice and concludes with limitations and directions for future research.

4. Findings

4.1. Value and Practical Use of AI and GenAI in Financial Services

Providing context for RAI practices, participants highlighted the tremendous opportunity and tangible benefits of using AI and GenAI in finance. Although adopting it to varying degrees, participants were unanimous in acknowledging the transformative nature of AI in their organisations, and GenAI in particular. They all shared examples of applications and tasks or processes where their organisations are currently employing AI techniques (either actively or sparingly). The most common use case objective they indicated was the tangible benefits of cost reduction, productivity, and efficiency improvements, which is in line with Bhatnagar and Mahant’s [9] finding that 36% of executives in finance have used AI to “reduce costs by 10%” and about 46% of financial services organisations reported “improved customer experience after implementing AI” (p. 446). P1 (Strategy Officer, Fintech, The Netherlands) indicated the use of AI/GenAI for money laundering and fraud detection, and P3 (Director/App Delivery Officer, Insurance, UK) for customer data analysis to identify business opportunities faster, while P2 (Chief Technology Officer, Insurance, UK) cited underwriting (e.g., being able to summarise all of the client notes and documents), writing standard operating procedures (SOPs), rewriting legacy banking applications from PASCAL or COBOL to Python or JavaScript, detecting threats or cyber risks to retain cyber resilience, and improving code quality to reduce relevant vulnerabilities. P2 also identified several monotonous, tedious, and mundane tasks in financial services that are necessary but less value-adding. According to P2, “AI can do quite well”, particularly in such repetitive tasks that are not unique or those low-risk tasks that do not use sensitive or personal information. This view is consistent with the Bank of England survey [41], echoing the value of AI in automating routine, repetitive, or undifferentiated activities that all FIs undertake, such as (financial) reporting, tax returns, client onboarding, and document recognition, retrieval, and processing.

4.2. AI and GenAI Risks: Inextricable Links to Barriers to Responsible AI

The thematic analysis revealed that barriers to practicing RAI are inextricably linked to two key aspects. The first is the risks of deploying AI in financial operations, especially the new risks introduced by GenAI, which is a vastly different AI technology; participants kept referring back to these risks when describing the implementation challenges their organisations face. Understanding AI risks is therefore essential to putting the implementation barriers in the right context and to highlighting the importance of, and approaches to, addressing them. The second is technical and legal barriers such as security, privacy, and the monitoring of algorithms and large language models.

Multiple common perspectives around the risks of AI, particularly GenAI and how that affected their RAI implementation, were surfaced. P4 (Security Officer, Stock Exchange, UK) highlighted how her organisation has “robust” architectural processes and a governance framework to manage the risks of traditional AI and machine learning (ML) because of traditional AI and ML’s maturity and its use within the organisation for between 10 and 15 years. Likewise, P12, P1, P3, P10, P8, and P7 all stated that their organisations have developed mature AI governance systems and guardrails for ensuring Responsible ML practices through safety systems and employee training to ensure testing of the input data for ethics and fairness.

Still, the risks of GenAI and practically managing it are top-of-mind across all interviewees:

“Gen AI is this new kid on the block that is more challenging because it’s multi multimodal to include natural language, voice, text to voice, text to image creating new threat vectors and risks”.

(P4, Security Officer, Stock Exchange, UK)

Similar thoughts were echoed by P6, P2, P3, P11, P7, and P8 that their “biggest” RAI concern is more around GenAI than AI itself:

“(…) Largely because of the fact that obviously generative AI is a learning module (…) and it learns from itself”.

(P3, Director/App Delivery Officer, Insurance, UK)

But as highlighted by four participants (P2, P7, P1, and P12), similar risks exist in other AI uses, too. P2 and P12 referred to the recent AI mishap at McDonald’s, where the voice recognition AI software used to process orders in its drive-through restaurants proved unreliable. The system misinterpreted orders, producing anything from “bacon-topped ice cream to hundreds of dollars’ worth of chicken nuggets” in a single order [42]. The project was initiated in 2019, and in 2024 McDonald’s announced the end of this automated order-taking system. P2 used this example to show that AI is nascent and can miss things, but gravely pointed out that a similar mishap in the finance industry ‘in, say, medical insurance use case will be…far less funny’.

“If AI misses something in the medical history and the claim becomes invalid. This has a double impact: The insurer has to foot the entire bill versus the re-insurer as commonly practised; and re-insurance rates go up because the company was not diligent enough in the underwriting”.

(P2, Chief Technology Officer, Insurance, UK)

P2, P5, P4, and P8 also called out risks and issues around bias and security of AI. Still, GenAI remained the most recurring risk across interviews (identified through repetition and linguistic connectors used in thematic analysis). This is echoed in multiple studies that regard GenAI as more challenging because traditional ML is fairly established with proven use cases in finance, predominantly in “pattern identification, classification, and prediction,” whereas “GenAI models are able to create ‘original’ output that is often indistinguishable from human-generated content” (OECD [43]: 6). With Bhatnagar and Mahant [9] predicting a 270% increase in the growth of AI adoption in financial services over the next four years, concerns expressed in the interviews about managing GenAI risks are also documented in the recent literature (e.g., Dhake et al. [19]). The narratives confirmed that, with its broad appeal and ease of use, GenAI appears to be a game-changer and also a disruptor of established RAI practices, with organisations having no option but to adapt and build new guardrails.

The latter requires an exploration of relevant non-technical challenges across people, process, skills, organisation, and budget in order to realise a principles-to-action transition for RAI based on the participants’ viewpoints.

4.3. Non-Technical Barriers in Making Responsible AI Actionable

Eight participants used words such as “overwhelming” and “million-dollar question” or long, reflective pauses followed by “hmmm, good question…” when explaining their difficulty in implementing RAI. This may encapsulate the challenges executives face in governing AI effectively, given the breakneck speed of AI evolution as well as constantly evolving RAI conceptual guidelines.

“With AI, people tend to feel overwhelmed (…) and there are so many new terms popping up and challenges”.

(P11, Strategy Officer, Ethical bank, Germany)

4.3.1. Challenge #1: Determining Who’s Responsible for What

Seven of the officers interviewed used words such as “tricky”, “hardest”, “big risk”, “biggest risk”, and “main fear” when attempting to elaborate on challenges to ensure accountability. In five of these seven interviews, accountability was mentioned upfront and unsolicited. Apart from accountability, security and safety were cited by an equal number of participants as the critical principles of RAI; interestingly, robustness was not mentioned by any of the interviewees.

P2 highlighted the challenge to determine who is accountable/responsible if algorithms go wrong; using a self-driving car analogy, [P2] illustrated:

“If…, if, for instance, there is graffiti on a stop sign and a malicious actor puts in a command that ‘if graffiti go at 30 miles an hour’ and accident happens, who is liable?”

(P2, Chief Technology Officer, Insurance, UK)

Practical challenges in ensuring accountability became more nuanced when four participants explicitly used the term “black box” to describe AI, e.g.:

“Too many unknown unknowns to bullet-proof responsible AI use. When data goes in, it is like a black-box. You can only provide data for training, you cannot control the outcomes”.

(P6, Chief Information Security Officer, Investment Bank, Switzerland)

“…As in we may not always understand how it reached a certain conclusion, right? Why a certain user is segmented in category A versus category B”.

(P1, Strategy Officer, Fintech, The Netherlands)

P1 placed emphasis on the value of having clear mechanisms to monitor and manage the data inputs into the AI system(s) and the outputs to facilitate “reverse engineering” for transparency. To make this practical, he went on to note that periodic input–output audits and random checks can help organisations identify the issues, document them, and improve the system before deploying it in critical use cases such as credit scores. Some participants slowed their pace of speech when mentioning the consequences of a lack of traceability, explainability, or accountability in AI systems because of their black box nature. This partially highlighted their seriousness in addressing this need.

“Imagine relying on a report that is an AI outcome and it is not accurate, it risks reputation… Already, mortgage crisis eroded trust in financial services organisations. (…) Without control, if AI is allowed to unleash, it will be chaos”.

(P15, Head of AI & Data, Trading Platform, UK/Germany)
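P1’s suggestion of periodic input–output audits and random checks can be illustrated with a minimal sketch. It assumes that each AI decision is logged with its inputs and output; the names `DecisionRecord`, `sample_for_audit`, and `audit`, as well as the reviewer function, are hypothetical and are not drawn from any participant’s organisation or from a specific tool.

```python
import random
from dataclasses import dataclass


@dataclass
class DecisionRecord:
    """One logged AI decision (hypothetical structure)."""
    record_id: str
    inputs: dict
    output: str


def sample_for_audit(records, sample_rate, seed=None):
    """Randomly pick a share of logged decisions for manual review."""
    if not 0 < sample_rate <= 1:
        raise ValueError("sample_rate must be in (0, 1]")
    if not records:
        return []
    rng = random.Random(seed)
    # Always review at least one record when any exist.
    k = max(1, round(len(records) * sample_rate))
    return rng.sample(records, k)


def audit(records, reviewer):
    """Run a reviewer over sampled records and document any issues found.

    `reviewer` is any callable that returns a short issue description,
    or None when the input-output pair looks acceptable.
    """
    findings = []
    for record in records:
        issue = reviewer(record)
        if issue:
            findings.append({"record_id": record.record_id, "issue": issue})
    return findings
```

In such a set-up, the documented findings would feed back into system improvements before deployment in critical use cases; the sampling rate and review criteria are governance decisions rather than technical ones.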

Moreover, one of the Dutch executives (P7) highlighted another practical challenge: service providers’ approach of introducing AI features as default-on to their customers, including those in financial services. A few other participants described technology providers’ tactics of introducing AI features as default-on, sneakily, or too many too quickly, making this a practical barrier to practicing AI use responsibly. These interviewees referred to external factors such as supply chain, absence of an implementable “shared responsibility model”, AI providers’ feature-innovation strategies, and the role of service partners as non-technical barriers.

P7 pointed out that one Dutch organisation blocked Microsoft’s GenAI tools because the AI features were, in most cases, auto-enabled; practitioners then conducted detailed risk assessments for six months to obtain full control and confidence before enabling them. Another participant also highlighted provider risk, saying that changes to the models can result in access to new data repositories without authorisation; every time a provider changes its AI service, the internal guardrails need to be revised.

“If a vendor changes their privacy model or rights structure, it is impossible for us to update all the models in 10 s”.

(P15, Head of AI & Data, Trading Platform, UK/Germany)

Discussions on non-technical barriers were fluid rather than moving from one principle to the next. This shows that although there are different principles, the aim is to approach AI as holistically as possible to identify and mitigate risks. The practical barriers identified for ensuring accountability can be extended to transparency as well as fairness and inclusion.

4.3.2. Challenge #2: Preparing for and Managing Unintended Consequences at the Speed of AI

“New and evolving technology [such as AI] brings on new risks and it can be difficult to keep on track, build mitigants quickly.”

(P9, AI Governance Officer, Investment Bank, UK)

One area of long discussion in most interviews was the fairness and inclusion principle of RAI. For example, P3 raised an important issue about rolling out AI equitably and the importance of identifying pilot groups or user segments in a factual way based on transparent decisions rather than arbitrarily rolling it out based on seniority alone. P3 warned that this could create a digital divide at a social level within the organisation (see Capraro et al. [44]). Principles of fairness and inclusion in RAI would see organisations deciding user groups based on who would benefit the most from using AI tools and services in their jobs, P3 added.

P8 made similar points around transparency of decisions to ensure fairness.

“It’s really, really important to make sure you define everything, make sure you define the taxonomy.

Your AI risk description based on your risk taxonomies.

Make sure you give the proper examples of each…

Make sure you map them into the framework you prefer.”

(P8, Security Officer, Retail Bank, UK)

Interestingly, country-specific nuances in how organisations approached RAI implementation were evident, despite the small sample size. For instance, all three of the Dutch executives referred to the Dutch government’s algorithmic blunder from 2020 when highlighting practical challenges around the accountability principle. The issue involved the Dutch government’s welfare surveillance program called SyRI, which used vast amounts of personal and sensitive data with algorithms to predict how likely a person was to commit tax fraud or (child) benefit fraud. The AI system was a “risk calculation model” that was developed “over the past decade”, but the flawed system disqualified many genuine benefit seekers, wrongly categorised many innocent people as fraudsters, and even affected their access to resources and/or credit scoring. The issue was so serious that Amnesty International called on governments everywhere to immediately stop using sensitive data in risk-scoring and billed the project as “opaque ‘black box’ systems, in which the inputs and calculations of the system are not visible”, resulting in “absence of accountability and oversight” [45]. P1 mentioned the mishap as a “delicate issue” and one that their financial services organisation is “cognisant about” when mapping out adoption plans. P10, however, highlighted the story as a learning opportunity. Their narratives indicated that this national experience has had an impact on how Dutch practitioners approach RAI. For instance, P7’s organisation now follows a conservative approach based on an “AI is scary” mindset, where such systems are prohibited in use cases such as decision engines, while it relies on intensive training programs to make everyone take responsibility for using AI ethically and fairly and understand the implications of not doing so. Serious implications include loss of IP, non-compliance, exposure of sensitive data, and low-quality output from AI.

“We want to practice Responsible AI responsibly, especially to avoid such horrible situations.

But it also risks slowing down”.

(P1, Strategy Officer, Fintech, The Netherlands)

“It ruined people’s lives (…) Shows that there’s too much at stake to even plan responsibly across multiple facets [of AI] …Responsibility is always with people and we are keeping it with people. If AI fails, it can break a lot”.

(P7, DevOps Engineer, Pension Funds, The Netherlands)

“We’ve seen things go wrong in the past and you want to learn from them.”

(P10, Risk & Compliance Officer, Pension Funds, The Netherlands)

Another perspective around unintended consequences is the (lack of) organisational readiness to deal with future uncertainties. Participants imagined bad actors using AI to attack banks. Examples included how fraudsters can impersonate tens of thousands of genuine mortgage applicants by “hearing a recording of a couple seconds” to train an AI system. In this regard, two executives (P1 and P7) were particularly unsure about the overall value of AI to finance in the long term:

“We don’t fully know whether AI in the financial services market is gonna have a net negative or net positive effect”

(P1, Strategy Officer, Fintech, The Netherlands)

4.3.3. Challenge #3: Difficulty in Ensuring Fairness and Inclusion

Additional practical barriers around fairness and inclusion include a lack of understanding of what fairness is, what AI risks are, and how to define thresholds and risk appetite. According to P13, explaining data governance is far easier than explaining fairness. It is tricky for a few reasons, he elaborated. One, people are impatient and want to do things “quickly” rather than “properly”. This results in tactical decisions that make the environment complex and difficult to manage or justify.

“It is hard to tell how AI systems are using data for fair outputs given how automated AI systems are…With GenAI, you are compounding the problem and authorise decisions that lead to undesirable consequences”.

(P13, Data Management Officer, Insurance, UK)

P8 also alluded to similar concerns, saying the emphasis on speed of AI output or results is far higher than the emphasis on the fundamentals or input due diligence.

“Fairness is context-dependent and can be subjective”.

(P9, AI Governance Officer, Investment Bank, UK)

Many respondents had much to say about fairness and inclusion and expressed a desire to do the right thing. It was a repeated barrier, but participants highlighted aspects that are near and dear to them or their experiences. Although non-homogenised, the varied perspectives further highlight the complexity of making RAI principles actionable. Highlighting disadvantages for certain communities, P14 identified GenAI’s usage limitations for non-native English speakers as another point around fairness. One of the Dutch participants agreed, noting that to improve quality, the team has to embed a translation service into their GenAI tool, which requires more time, resources, and skills than are needed for native English users.

“…(my) conclusion was that the tool needs to be trained in equal number of books and papers in Norwegian as it is in English to have the same impact in that language…How am I going to do that?”.

(P14, AI Strategy Officer, Multi-Agent AI Services, UK)

Another perspective around fairness and inclusion was pointed out by P11, who indicated the critical importance of having decision criteria in place to abort an AI service in case of conflict, for example when it yields a great revenue model but makes job roles redundant. In a similar vein, P3 raised another fairness question around rolling out AI tools “equitably”, so that members of the workforce do not feel less privileged for not having them.

“AI does have this ability to create social divide (…) Fair and inclusive AI is how you provide access so that if there are question around this, the decisions can be justified and explained”.

(P3, Director/App Delivery Officer, Insurance, UK)

4.3.4. Challenge #4: Traditional Business Approaches, Models, and Processes in Finance Unable to Cope with AI

Through their responses, executives suggested that, beyond business processes, legacy models and practices are barriers, too. Two interviewees pointed out how the banking sector has historically had a very mature culture of collaboration and cooperation and consensus-building.

“(…) This is one of the nicest thing about the industry where things usually change very slowly, with consensus. But with AI, it’s the opposite and we’re caught off-guard”.

(P11, Strategy Officer, Ethical bank, Germany)

Another past process that is considered a barrier was data management. P13 highlighted how their (small-sized) enterprise has not tagged the data or developed data access guidance for AI, adding that this past practice makes practicing AI responsibly an impossible task. Data access was also cited by P5, P6, and P3 and billed as a “bottleneck”. P6 further highlighted how traditional governance mechanisms are incapable of dealing with the new kind of unstructured data used in AI. Aspects such as policies and guardrails around data integration or data leakage prevention, as P6 noted, are too sparse to make AI use safe.

“There are still lot of questions…And so, use of AI can lead to human error because there are no controls to alert the team of human errors”.

(P6, Chief Information Security Officer, Investment Bank, Switzerland)

4.3.5. Challenge #5: Balancing Trade-Offs and Meeting Expectations of Different Stakeholders

There were two distinct viewpoints among the executives interviewed: one camp of five participants valued an ‘innovation-first’ approach, while the rest supported a ‘governance-first’ approach to deploying AI/GenAI in finance. Six of the respondents (four with direct security backgrounds, one with data management, and one with AI governance) called out security or safety as their highest priority when turning RAI principles into practice. These practitioners placed emphasis on resolving privacy-by-design and security loopholes. The five innovation-focused participants included a Dutch fintech (P1) that has provided access to CoPilot to everyone in the organisation with the expectation of high usage and high productivity. In fact, according to P1, managers in his organisation receive notifications to nudge the team in case of low adoption.

“We are a born-digital company, so tech-savvy culture is ingrained in the workforce. Why bother a colleague if [GenAI tool] CoPilot can do… what you need”.

(P1, Strategy Officer, Fintech, The Netherlands)

P2, P11, and P6 expressed difficulty in surfacing innovator perspectives when security voices are the loudest. P2 admitted it is difficult not to “govern AI to death”. P14 and P11 particularly expressed the desire for financial services organisations to adopt some of the “Silicon Valley” attitude of innovation. But P7, P8, P4, and P15 emphasised the importance of governance. The following remarks from P12 and from P15, who has 20 years of experience in neural networks and deep learning, capture this tension:

“(…) Of course, the most secure technology is one that has been turned off, battery removed and buried in the desert. Incredibly secure…But not usable”.

(P12, Cybersecurity Officer, General Bank, UK)

“(…) It’s almost impossible to get to a responsible Generative AI. There is no way to establish a stable governance on pure stochastic systems like the ones based upon this AI paradigm. A system that gives you different answers to the same question can’t be 100% reliable and can’t be controlled or governed”.

(P15, Head of AI & Data, Trading Platform, UK/Germany)

Such polarised views are themselves a big challenge: practitioners must first reconcile them, or explain the trade-offs, when implementing RAI that meets everyone’s objectives. In contrast to the Dutch fintech’s CoPilot adoption, one UK FI took 12 months to roll out CoPilot once a decision was made. Similarly, P10 highlighted how getting approval for use in 8 weeks is “lucky and fast”, as certain proofs of concept (POCs) take up to half a year because they have to go through layers of approval, which include the immediate business unit and multiple governing bodies, such as data teams, privacy teams, security architects, legal teams, and so on. Those supporting the ‘security-first’ approach admitted to the challenges of coping with the speed of AI expectations of an enthusiastic workforce. If adoption is faster than governance, then double the effort is required to bring it back on track, admitted P8. P4 also noted how certain groups in her organisation are excited to use the technology but insisted that a thorough evaluation of appropriate solutions takes time, indicating the enormity of this non-technical barrier.

“Locking it down is like trying to get salt out of the ocean because there are so many ways around it…we will end up with shadow usage of different tools. They will start using and then ask for waiver to use saying they have invested time and money”.

(P7, DevOps Engineer, Pension Funds, The Netherlands)

Further illustrating the difficulty of balancing security with innovation, P4 noted that slow governance can backfire, with frustrated users turning to newer and more convenient GenAI tools even for tasks suited to traditional, low-risk AI facets such as machine learning, accentuating the problem.

“If governance is always playing catch-up, they may use GenAI even in areas where traditional ML is appropriate bringing in new risks”.

(P4, Security Officer, Stock Exchange, UK)

“(…) We’re always in such a hurry to find answers”.

(P11, Strategy Officer, Ethical bank, Germany)

P3 expressed the ‘pain’ differently:

“We don’t want to find out that our IP is being utilised in GenAI tools like ChatGPT, and that meant that we had to quite quickly react to being able to put the guardrails.”

(P3, Director/App Delivery Officer, Insurance, UK)

If this challenge is not addressed, the risks extend to compliance and costs, too. For example, if GenAI tools are adopted without following appropriate licensing or usage agreements, there can be serious IP, security, and compliance risks. P5 described a few examples of French companies having experienced this, adding a warning that the “Internet and AI never forget”. The speed intensifies the governance or monitoring challenge, as P9 underlined that “(…) Responsible AI takes time…evidence gathering, filling in assessments, etc”.

Overall, balancing the needs of innovation-orientated tech enthusiasts in the organisation, the murky world of AI risks, and long-established organisational structures or processes is tough, as nine participants admitted. In fact, interviewees said they are torn between the enthusiasm to use AI quickly and the traditional organisational aspects that are not yet ready for such an unprecedented new way of business operations, with regulations “somewhere in between”: as P3 pointed out, regulations are a good starting point but do not provide complete, clear answers to all barriers, particularly the non-technical ones. Yet, most participants expressed a strong awareness and intention to be a catalyst for RAI practices but have no blueprint to follow.

“Trade-off between performance and/or profit and fairness (is one of the top barriers in implementing Responsible AI) and business stakeholders have to make those decisions”.

(P9, AI Governance Officer, Investment Bank, UK)

4.3.6. Challenge #6: Ensuring Sustainability

Eight participants mentioned sustainability or carbon footprint as a significant challenge. This was an area that participants discussed with a rather passionate, human-centric, and purpose-driven vision of RAI. Most emphasised short-term vision and views as compounding the problem. For instance, P11 noted:

“You have a lot of use-and-drop technology and that’s not very sustainable”.

(P11, Strategy Officer, Ethical bank, Germany)

P13 expressed a similar viewpoint, noting that it requires tedious efforts to identify the use cases and data to make sure that “the algorithms will last in time and not be replaced by, say, the next new version 3.5 or 4 or so on every 5 months”. P3, P5, and P2 also emphasised the difficulty in effectively meeting sustainability goals as a key non-technical barrier. There was agreement that GenAI is very resource-intensive in terms of servers and memory. The inability to manage this not only increases costs but also has an environmental impact that is far from negligible. One particularly “wasteful” trend pointed out by P5 is the impact of a decentralised approach to AI deployment: every business unit developing and managing their own AI projects results in duplication of resources for providing “pretty much the same services” with direct impacts on the cumulative carbon footprint.

4.3.7. Challenge #7: The Human Factor

Most (i.e., 13 out of 15) of the respondents highlighted people-related challenges ranging from mindset and culture to skills and attitudes. “People, people, people”, “team”, “staff”, “everyone”, “they”, “we” were repeatedly used when highlighting the human effect as a non-technical barrier. One participant (P6) asserted that 80% of the success depends on people, while others agreed that convincing people to get on board with a shared, common (AI) vision can indeed be a non-technical barrier. One powerful remark on this came from P5 in the context of sustainability, accountability, and ethics of AI:

“You can explain the importance of practising AI responsibly. Most people already know it but it is getting people to care to do the right thing”.

(P5, Data/Sustainability Officer, Insurance, France)

Similar sentiments were expressed by P12, P13, and P8. One way to get people to care is to empathise with them, P12 suggested. According to P8, the problem stems from how guidelines are handed down, and not everyone views them through the same lens. P7, meanwhile, said that demonstrating the value of responsibility in a way that resonates with everyone is “massively hard.”

“It’s easy to produce guidelines and standards for any organisations and then just tell everyone to follow it. In practice, how you wanna actually convince them that this is right for them and how you can show a return on investment. This is not an easy social skill”.

(P8, Security Officer, Retail Bank, UK)

Multiple participants pinpointed the skills and knowledge shortage in RAI as a severe human-orientated barrier to action.

“Talent is already rare (…) now the combination of AI and governance is (becoming) even more rare, niche, find”.

(P6, Chief Information Security Officer, Investment Bank, Switzerland)

Responses touching upon skills suggested that a major non-technical barrier unique to RAI, compared to previous technologies, is that every individual is expected to be accountable because otherwise they risk jeopardising the whole organisation. Bringing everyone up to speed with digestible, easy-to-understand training programs and communication strategies, so that they all become responsible users at ground level, appears to be a mighty task in moving from theory to practice. People’s skills, sharp judgement, emotional intelligence, and sense-checking are essential to identify bias in AI. P12 illustrated this with an analogy of SatNavs:

“If it asked you to drive into a river because it’s the fastest route between A and B, at that point you may as well turn it off because you don’t trust it. So if AI tells you something, you still need skilled professional to validate that output”.

(P12, Cybersecurity Officer, General Bank, UK)

4.3.8. Challenge #8: Operationalising AI and Planning for What Happens Beyond Day Zero

Building, deploying, or adopting AI responsibly is only half the story. The barriers become murkier when practitioners have to think about what happens after Day Zero (implementation day). P4, P14, and P3 specifically considered not just how to introduce AI responsibly but also how to make it business-as-usual. One difficulty is creating processes and policies to allocate AI licenses appropriately, manage the different types of employees (joiners, movers, and leavers), and make sure AI is funded within the right budget cycle and charged to the correct business unit. Additional hurdles connected back to the fairness and inclusion principles, with participants stressing the difficulty of rolling out AI “equitably” without making employees feel less privileged.

“How can I ensure no-one feels like a lower-valued employee because they can’t have it?”.

(P3, Director/App Delivery Officer, Insurance, UK)

The laser focus on AI implementation means organisations are not considering the barriers they will stumble upon when pilots succeed and have to become business-as-usual. These include tough questions about AI access policies, redundant skills, job losses, and changing roles. When questions arise about why one user obtained access and another did not, there needs to be a valid, consistent explanation so that the rollout is not seen as creating a digital divide in the workplace, given AI’s potential to create social divides. The responses indicate that almost all interviewed organisations have clear policies, guidelines, and architectural principles in place, but they admitted their AI strategies may still not be fully risk-free.

“There are so many unknown unknowns, we can’t plan for all scenarios.”

(P2, Chief Technology Officer, Insurance, UK)

4.3.9. Challenge #9: Justifying and Securing Budgets

Multiple thoughts were shared on budgetary resources (or lack thereof) for implementing RAI. Respondents revealed that budget allocation for RAI practice is arbitrary, ranging from 2% to as high as 50%. Three participants refused to share information on budgets. Those with high spending expect it to go down in a couple of years when it stabilises. P5, P7 and P11, and P10 (representing entities from France, Switzerland, and Germany, respectively) indicated a higher budget compared to P4, P8, and P12 on the lower side.

AI use requires heavy investment that smaller FIs find hard to undertake, risking their competitiveness. P13 highlighted that it takes about GBP 50–70k to bring one use case to full scale after the proof of concept (PoC). In addition, there was little confidence about whether the level of investment at each organisation is appropriate. Some participants were cautious about overinvesting in sophisticated controls for GenAI at a time when there is so much hype around it, indicating that it may be a waste of resources if adoption hits a ceiling sooner than expected. In fact, P3 asserted that stagnation, or usage of GenAI tools for “the same use cases over and over again”, is already visible. Likewise, P15 expects GenAI to work primarily on creative tasks and, in general, on anything where reliability and precision are not mandatory, because of the difficulty in governing it. Similar viewpoints about the future were expressed by other executives in the context of operationalisation: one main observation was that there is no shining example within the financial services industry of an organisation that has embedded AI and GenAI systems and completely transformed its business. However, this is in stark contrast to examples of FIs like JPMorgan, Visa, or HSBC that have completely transformed how they overcome ‘tough’ business challenges such as money laundering and fraud management with AI.

This contrast, with some financial services organisations using AI more than others, comes down to attitudes and culture. Those interviewees who see AI as hype (P2, P3, P5, P6, P7, P13, and P15) are cautious about experimenting, unlike those who see it as a transformational technology (P1, P4, P8, P9, P10, and P14).

P14 observed that some organisations are so worried about the things that can go wrong that they “don’t do anything”. His belief is that the technology progresses, and what AI does not process right today, it will process right in six months or a year. One piece of evidence for this is how HSBC and JPMorgan have been working on and testing use cases for years, identifying issues and co-developing solutions to address them before making an AI use case live.

“What’s really important at the moment is to experiment and understand what is possible and be conscious of what is not.”

(P14, AI Strategy Officer, Multi-Agent AI Services, UK)

But not everyone shares this optimism; some want assurances of tangible returns before allocating budgets.

“It is easy to fall for the hype. Analysing everything and understanding the benefits thoroughly before jumping into the trend is important.”

(P6, Chief Information Security Officer, Investment Bank, Switzerland)

P15 shared similar sentiments.

“… after the incredible hype that accompanied GenAI, I’m pretty sure its scope will shrink quite by much.”

(P15, Head of AI & Data, Trading Platform, UK/Germany)

4.4. The Big Debate: Which Framework? … and the Role of Corporate Digital Responsibility

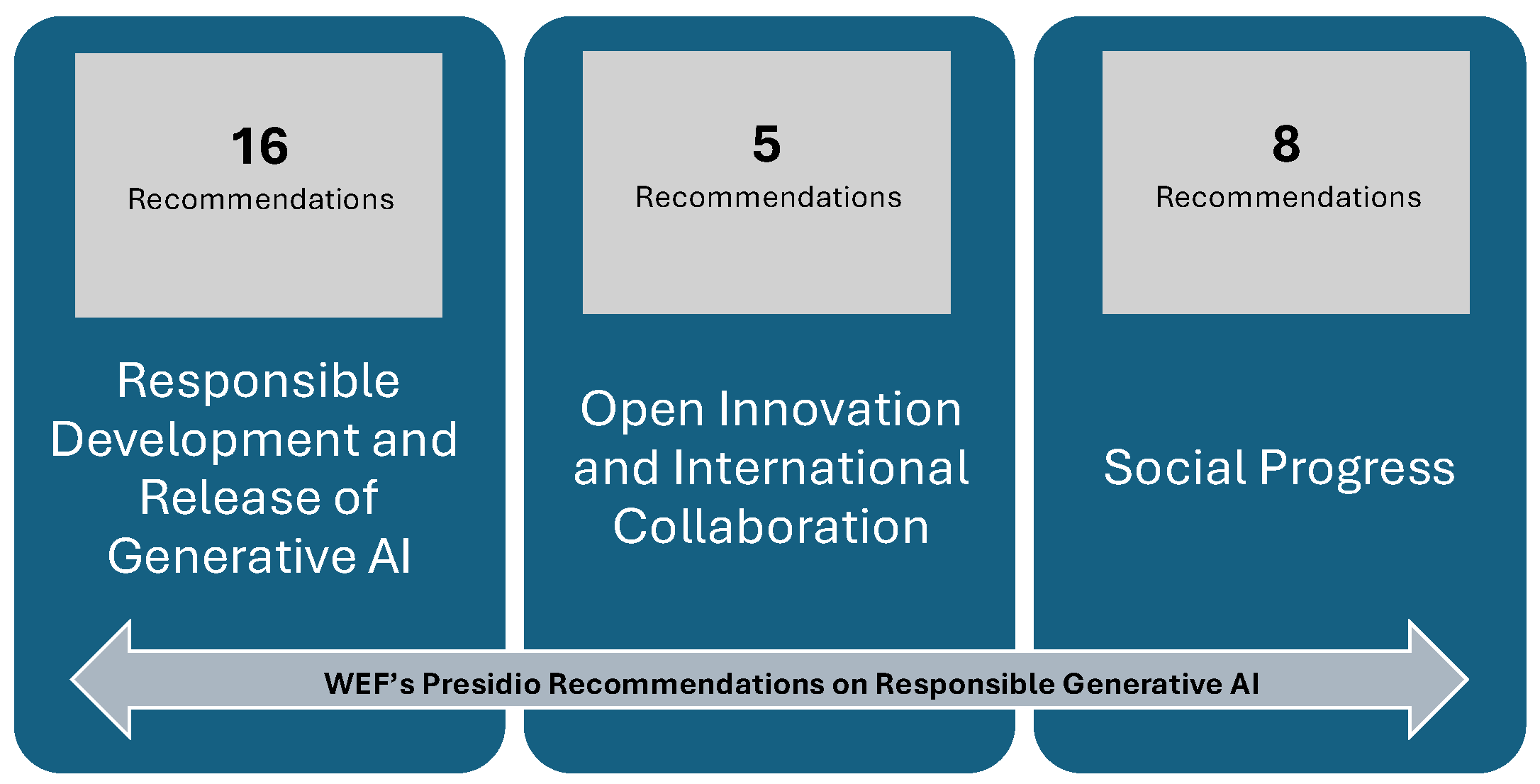

One theme that stood out in the analysis was how different participants used different RAI frameworks. Three executives revealed that their organisation adopted the US NIST AI framework, which tried to make it actionable. The other twelve participants were following frameworks their FIs developed in-house by taking inspiration from GDPR, the EU AI Act, EU recommendations, the Chartered Institute for IT, and the OECD AI Principles (OECD [46] 2023b: 17), while the frameworks’ dimensions covered aspects similar to the WEF’s 7 principles (WEF, 2024b [24]). There was also no definitive view on whether the AI approach is or should be centralised or decentralised. Some large organisations with a global presence focused on a decentralised AI strategy, but equally, other multinational financial organisations opted for a centralised strategy to minimise challenges around sustainability. P3 said their organisation is developing its own framework as AI is evolving rapidly.

“We have developed our own AI framework and strategy rooted in our own risk framework, security framework and financial framework.”

(P3, Director/App Delivery Officer, Insurance, UK)

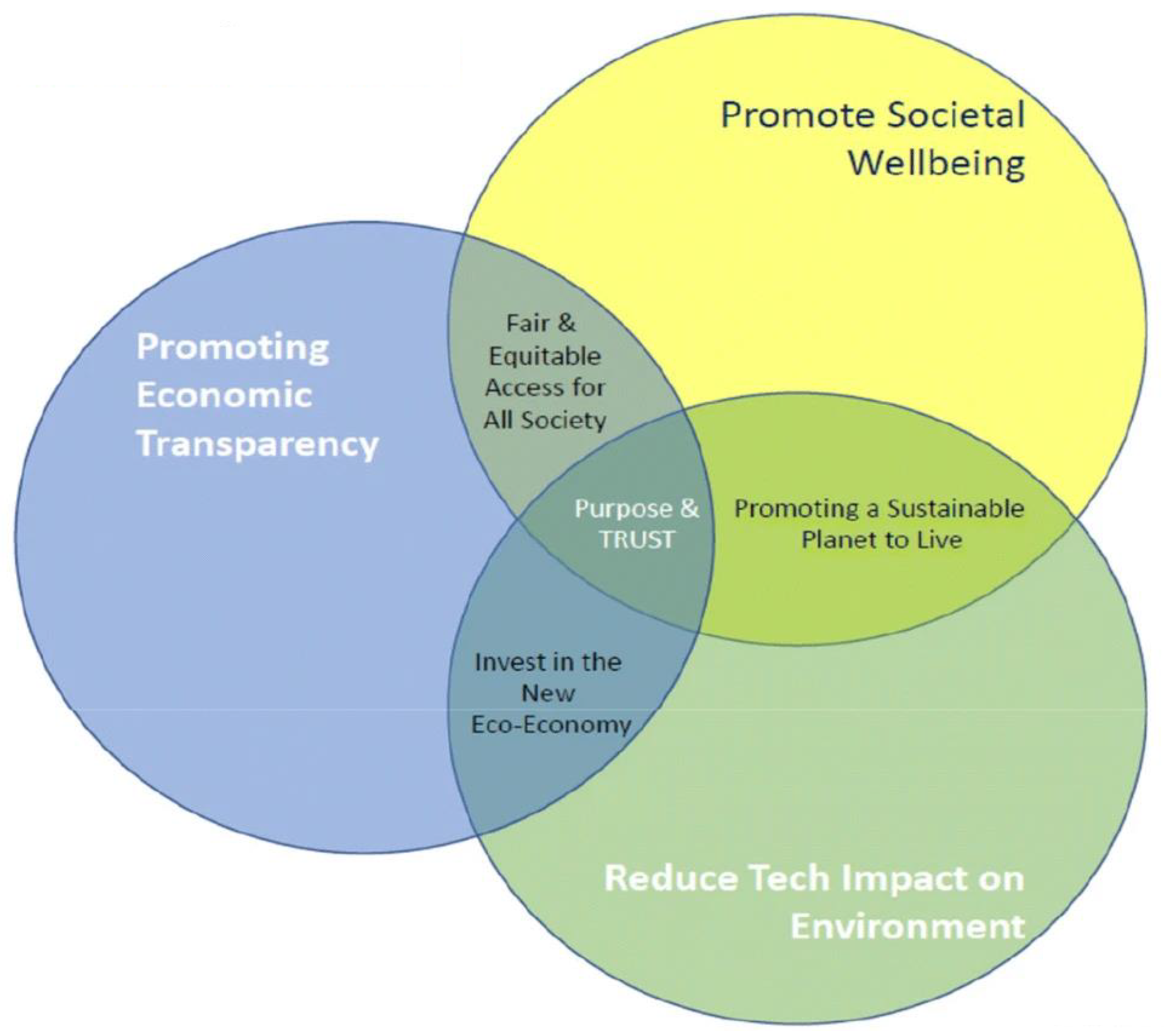

4.5. CDR Intersection and Impact

Interestingly, two organisations that practise Corporate Digital Responsibility rooted their RAI practices within their CDR principles. Their representatives maintained that this allowed them to demystify some of the challenges identified by other participating executives, especially those barriers around dealing with unintended consequences. For example, P11 pointed out that the CDR framework “came in very handy” in identifying unintentional effects from AI, such as staff reduction, and helped them be proactively engaged in addressing newer threats. However, the challenge, as executives’ responses indicated, is that aligning CDR and RAI can be a cumbersome, time-consuming, and exhaustive task (every single principle must be examined through the lens of CDR), which can also slow down innovation potential and make certain stakeholders disgruntled. This further emphasises how delicate a balancing act such an alignment can be. Interestingly, those interviewees who were CDR practitioners displayed a stronger human-centric approach to RAI and deliberated more, using terms such as “idealist”, “building consensus”, “no margin for error” when it came to adopting AI responsibly (most likely because the very origins of CDR lie in corporate social responsibility and stakeholder theory, which prioritise human welfare, rights, and agency over strictly technical optimisation). CDR practitioners went beyond the quantitative aspects of the RAI framework, such as return on AI investments, to focus deeply on human-related consequences to shape their organisations’ RAI actions. In their words, they considered the unintended consequences of AI upfront and employed CDR tenets to update HR and legal policies and related organisational processes to ensure value-first banking. One participant noted how in a recent CDR group discussion on RAI, members felt that the discussion was “evolving from Responsible AI to actually more of a human rights conversation”.
The CDR practitioners also highlighted a growing, thriving, and collaborative community within their organisations that has RAI as an agenda item for discussion at every executive meeting. This helps in learning, sharing, and addressing challenges together as opposed to every organisation trying to figure it out themselves, as is evident from other interviewees’ statements.

For example, both P3 and P4 admitted they are still in the process of fully building out their RAI framework, although they are clear it is going to be their own, unique framework. For practical purposes, P4’s organisation (which was not aware of CDR frameworks) is working through existing control policies:

“Currently, there are some big debates internally on whether we have a separate AI framework or do we work through existing control policies and strengthen those for AI risks to ensure that we have it all joined up. At this point, a decision hasn’t been made…”.

(P4, Security Officer, Stock Exchange, UK)

Similarly, P7 said they developed their own RAI framework because they wanted a ‘head start’ in ensuring AI adoption is well-controlled in the organisation. However, putting the framework into practice was challenging, as every stakeholder works in their silos with their agenda items.

“Developers say ‘we have a vision for AI and we want all these data sources’, but privacy officer declines. So, AI trials within our RAI framework was of little use as it was based on dummy data giving very different results.”

(P7, DevOps Engineer, Pensions Fund, The Netherlands)

Having documented non-technical barriers and CDR’s mediating effects, we next contextualise these findings within scholarly debates on AI governance and further reflect on this study’s implications, limitations, and avenues for future research.

5. Discussion and Concluding Remarks

In answer to the main research questions of this paper, the findings highlight nine non-technical barriers: (i) complexities in determining accountability; (ii) trickiness in preparing for and managing unintended consequences quickly; (iii) difficulty in ensuring fairness and inclusion, particularly because defining fairness remains vague; (iv) limitations of existing business approaches, processes, and models to cope with AI; (v) difficulties in balancing trade-offs; (vi) managing AI’s carbon impact; (vii) people’s attitudes, skills, and cultures; (viii) managing unforeseen challenges when AI is operational; and (ix) securing investments for RAI. Approaches to address certain non-technical challenges, such as how many resources to dedicate to AI governance or whether the approach should be centralised or decentralised, were largely heterogeneous. Interestingly, addressing people-orientated barriers was identified as a high-impact solution in implementing RAI. Those executives from entities with CDR practices in place prioritised the need to resolve people-orientated barriers and sustainability considerations, acknowledging those as important elements of RAI success. Such prioritisation aligns with observations from Elliot et al. [5] that “CDR creates cultural change to avoid invoking “tick-box” compliance” (p. 185) and materialises Lewis’s statement that “business will never be any more ethical than the people who are in the business,” (Lewis [47]: 377).

The interview findings suggest that Responsible AI is non-negotiable but remains a work in progress in the financial services sector, with GenAI concerns superseding AI concerns. Moreover, responses reveal that non-technical barriers around GenAI are identified as trickier to address, given the inherent nature of the technology. Executives expressed a common approach for financial institutions to overcome such challenges by being fast-followers and not early-adopters, with terms such as “not being at the bleeding edge” and “wait, watch, and get on” (to mitigate risks) used during the interviews. Retaining human oversight was stressed as an imperative, and participants insisted on making AI a human augmenter and not a decision-maker to retain control; similar sentiments are raised by Mikalef et al. [2] in their discussion of human oversight, highlighting the concept of “conjoined agency to balance the strengths of humans and machines in symbiotic relationships” (p. 263). In this regard, taking a staged approach can help in making Responsible AI digestible and actionable, according to certain interviewees.

It was also revealed that the technical and non-technical barriers are intertwined, requiring practitioners to understand and address both to effectively practise RAI. For example, fairness or transparency principles depend not only on technical features, such as explainability or algorithm monitoring, but also on non-technical aspects, such as AI skills and literacy and the organisation’s policies on data input sources. Practitioners find technical challenges more tangible to address and adopt a broad-stroke AI governance approach. This makes AI challenges overwhelming, with no clear starting point or step-by-step approach. Among the interviewees, those with technical jobs or security responsibilities tend to view RAI implementation through a strong technical lens, whereas those with CDR practices take a creative, idealistic, and thorough approach, indicating that for some organisations, making a start and learning along the way is more important, while for others, getting it right is a prerequisite to starting. A similar observation has recently been made by Wang et al. [20] in their study, highlighting how “organisational macro-motivators” such as “company culture and individuals” are “overlooked” aspects that motivate some companies to (de)prioritise RAI (Wang et al. [20]: 3). In a similar vein, Lobschat et al. [27] emphasise that digital responsibility must be embedded into a company’s digital strategy and culture; this paper corroborates that view by showing that where CDR mindsets were institutionally reinforced, there was a stronger alignment between RAI intentions and governance practices. In addition, this study’s findings align with and build on the growing body of literature exploring the integration of ethical, social, and environmental considerations into AI governance. Specifically, our study empirically grounds the conceptual works of Elliot et al. [5] and Tóth and Blut [48], who propose that CDR offers a moral compass for AI deployment by extending traditional legal and risk frameworks and where “(…) accountability and the division of responsibilities should be made as clear as possible” so that financial services materially “demonstrate their commitment to responsible AI use via CDR” (Tóth and Blut [48]: 6–7). Moreover, the findings lend support to the view of Olatoye et al. [6], who argue that CDR and RAI can form a “symbiotic framework” primarily in sectors with high public accountability pressures and stakeholder expectations. Lastly, the need for interdisciplinarity in defining governance models in the digital economy [49] is reflected in the qualitative findings, demonstrating how cross-functional AI boards, anchored in CDR principles, may serve as viable governance mechanisms that bridge technological innovation with ethical deliberation. Taken together, these insights suggest that CDR is not merely supportive of RAI but can be foundational to its meaningful implementation, especially in sectors like finance, where trust, risk, and fairness are critical to legitimacy [1,50]. Rather than treating RAI and CDR as parallel or competing frameworks, this study proposes integrated models in which CDR functions as a practical mediator that translates ethical aspirations into organisational processes, cultural practices, and measurable outcomes [5,50]. In this respect, insights from these interviews lend support to previous warnings stressing that without “(…) a viable value proposition integrated into a company’s overall goals and model, responsible practices are unlikely to be properly incentivised or prioritised against other competing demands” [51]. This indicates that even if practitioners apply all their subject-matter expertise to practising RAI, its effectiveness may be limited by inhibitory factors and gaps in broader business model design.

To demonstrate how CDR principles address the barriers identified in the interviews, in Table 2, we attempt to link each non-technical challenge to a corresponding CDR domain and illustrate examples of concrete mechanisms either explicitly described by participants or synthesised from closely related statements that emerged during thematic analysis and abstracted to reflect the underlying logic of the participants’ responses (while aligning with recognised CDR concepts). Such mapping reinforces the role of CDR as a practical mediator of responsible AI adoption, with the CDR principles shown in Table 2 drawn from established frameworks found in the literature (e.g., Lobschat et al. [27]; Mihale-Wilson et al. [50]) and adapted inductively to reflect how practitioners can frame their own relevant digital governance practices.

This approach has several implications. From an academic standpoint, it invites scholars to look more closely at how varying organisational interpretations of CDR influence the scope, depth, and effectiveness of RAI initiatives across contexts [6,25]. It also demonstrates how high-level ethical guidelines translate (or fail to embed) into day-to-day governance challenges. By foregrounding the role of CDR as a governance lens, the rich, interview-based data indicate that future research should develop more integrated, interdisciplinary models that weave together ethics, organisational behaviour, and information systems theory. Additionally, it empirically extends Fjeld et al.’s [1] “thorny gap” between RAI principles and practice by introducing a sector-specific taxonomy of critical barriers. Likewise, it addresses Merhi’s [3] call for research on organisational dimensions of RAI and provides the first empirical evidence supporting Elliot et al.’s [5] proposition that CDR “demystifies governance complexity”, revealing that human-operational barriers dominate practitioner challenges (against an RAI literature that overemphasises technical solutions and persistently privileges concerns such as bias mitigation and explainability). In this respect, by documenting how GenAI supersedes traditional AI concerns due to its opacity and multimodal risks, this study responds to the OECD’s [43] call for industry-specific risk assessments, positioning GenAI as a distinct research priority in finance.

In terms of practical implications in finance, it offers a nuanced roadmap for embedding RAI and suggests that AI governance strategies should be shaped within wider digital responsibility agendas, not simply tacked on as standalone ethical checklists, aligning with calls for value(s)-led digital transformation [27,52]. For instance, CDR’s efficacy in resolving human-centric barriers (e.g., ethics training and consensus-building) offers a replicable model for better embedding RAI in the enterprise. Moreover, the suggested barrier taxonomy equips practitioners with a diagnostic tool to prioritise interventions and underscores the necessity of holistic governance architectures that balance innovation speed with robust risk controls to meet ESG commitments (such as carbon-footprint metrics for AI workloads to address critical underlying gaps in the current EU AI Act). The demonstrated value of CDR frameworks suggests that firms could achieve better alignment between ethical aspirations and operational realities by formally codifying espoused values around data stewardship, environmental impact, and employee empowerment and operationalising RAI through integrated policies (e.g., HR-legal alignment), urging its adoption beyond those pace-setting business entities. In practice, this means establishing cross-functional AI governance bodies, embedding and regularly conducting input/output audits, designing and applying clear responsibility matrices, and allocating budgetary and training resources for the post-implementation (“day-after”) maintenance of AI systems. Organisations that adopt such a socio-technical approach are more likely to be better positioned to realise (Gen)AI’s productivity and customer-service benefits while safeguarding trust, accountability, and legitimacy with governance protocols, such as cross-functional “risk rapid-response” teams, as guardrails to address emergent threats like algorithmic creativity misuse.

Beyond individual business entities, findings from research endeavours such as ours have broader social resonance, as they encourage policymakers to incorporate CDR dimensions and non-technical factors into emerging AI regulatory frameworks [24,53,54]. As financial institutions deploy AI/GenAI at scale, failures in fairness, trust, transparency, or sustainability can erode public trust, exacerbate inequalities, and contribute to environmental externalities. The risk of a “digital divide” (whereby only certain employee cohorts may gain AI fluency and decision-making power) mirrors larger societal concerns about digital exclusion and/or algorithmic bias, underscoring a need for bottom-up participatory RAI design by engaging civil society in key interventions to prevent marginalisation. By advocating for a human-centric, consensus-driven CDR orientation, this study amplifies the social imperative that AI systems serve collective well-being, not just organisational optimisation. Policymakers can draw on such insights to enact regulations and disseminate best-practice guidelines that mandate organisational readiness metrics, so that AI’s benefits accrue across society and communities in a transparent (e.g., explainable credit denials), equitable (e.g., mitigating GenAI’s language biases), and sustainable (e.g., enforcing carbon standards for AI infrastructure) manner, thus ensuring social acceptability and support.

By its nature, qualitative research is open-ended and subject to bias, as “…researchers need to remember that patterns discovered in such data may come from informants as well as from investigators’ recording biases” (Ryan and Bernard [38]: 100). Although care was taken, Ryan and Bernard caution that the researcher acts as a subconscious theme filter, potentially limiting findings. The amount of data collected was at times overwhelming, distracting, and difficult to analyse systematically. Efforts to avoid “describing the data rather than analysing it” (Bell et al. [33]: 530) also helped navigate through data that are interesting but not relevant to the research objective. Interviews depend on participants’ own expertise, experiences, and priorities, while what counts as significant or important in the analysis is ultimately decided by the researcher. The small sample size is practical for this study, but the scope and scale of the findings can be limited. However, this study aims to bring diverse viewpoints from participants with deeper practical knowledge and those with a direct stake or engagement in the topic. While the value of small samples in making “empirical generalisations” is questioned (Bell et al. [33]: 376), the value of important inferences derived from the qualitative data cannot be discounted. Defending qualitative interviews and case studies in generalisation, Flyvbjerg [55] highlights the importance of being “problem driven and not methodology driven” (p. 242) and sees methodology as the means to “best help answer the research questions at-hand”.

Thus, while this study offers novel insights into emerging AI domains, it is not without limitations. First, the sample was restricted to 15 professionals from European financial institutions, selected through purposive-convenience sampling. Although participants occupied diverse roles (e.g., AI leads, risk managers, and ethics officers), their perspectives may reflect organisational or cultural biases specific to the financial sector in Western regulatory settings. This limits the transferability of findings to other regions or industries with different governance dynamics or levels of digital maturity. Second, reliance on a single qualitative data source, i.e., semi-structured interviews, means that we were unable to triangulate themes with other forms of evidence, such as organisational documentation, AI policy artefacts, or direct observation. While we employed reflexive thematic analysis and maintained an audit trail of coding decisions, we acknowledge the absence of formal cross-validation, which may affect the confirmability of interpretations. Third, gender and positional diversity within the sample was uneven; the majority of interviewees were male and held senior positions. This may have led to an underrepresentation of frontline or non-dominant voices, particularly with regard to fairness, inclusion, or human-centric governance concerns. We encourage future studies to intentionally oversample underrepresented groups to broaden the ethical and experiential scope of relevant insights.

Future research should build on these findings in several ways. First, scholars could confirm and expand on the non-technical barriers identified and extend the scope of inquiry beyond the financial sector to examine how RAI frameworks are applied (or struggle to gain traction) in other domains such as healthcare, manufacturing, or the public sector. Comparative, cross-sectoral studies would help assess the generalisability and contextual specificity of CDR-informed RAI governance models, as it is essential to “include a greater diversity of actors in AI governance activities” (Attard-Frost and Lyon [56]: 17). Second, empirical studies could further develop and test the operational components of CDR by using quantitative or mixed-method approaches. For instance, surveys, action research, or longitudinal case studies could evaluate how specific CDR mechanisms (e.g., AI carbon footprint management, stakeholder management) impact ethical outcomes, trust, or innovation performance across firms. In this respect, researchers could also examine how evolving legal frameworks (e.g., the EU AI Act) influence the practical adoption of CDR principles and whether they promote or hinder human-centric governance in AI ecosystems. Third, there is a need for deeper theoretical exploration of the interplay between RAI and CDR, particularly regarding how organisational culture, leadership, and regulation mediate their integration.

By acknowledging the aforementioned limitations and outlining targeted paths forward, studies such as ours lay the groundwork for a more rigorous and empirically grounded understanding of complexities in AI deployment, aligning technological ambition with responsibility principles and ensuring not only compliance but also legitimacy and trust in the digital age.