Understanding Video Narratives Through Dense Captioning with Linguistic Modules, Contextual Semantics, and Caption Selection

Abstract

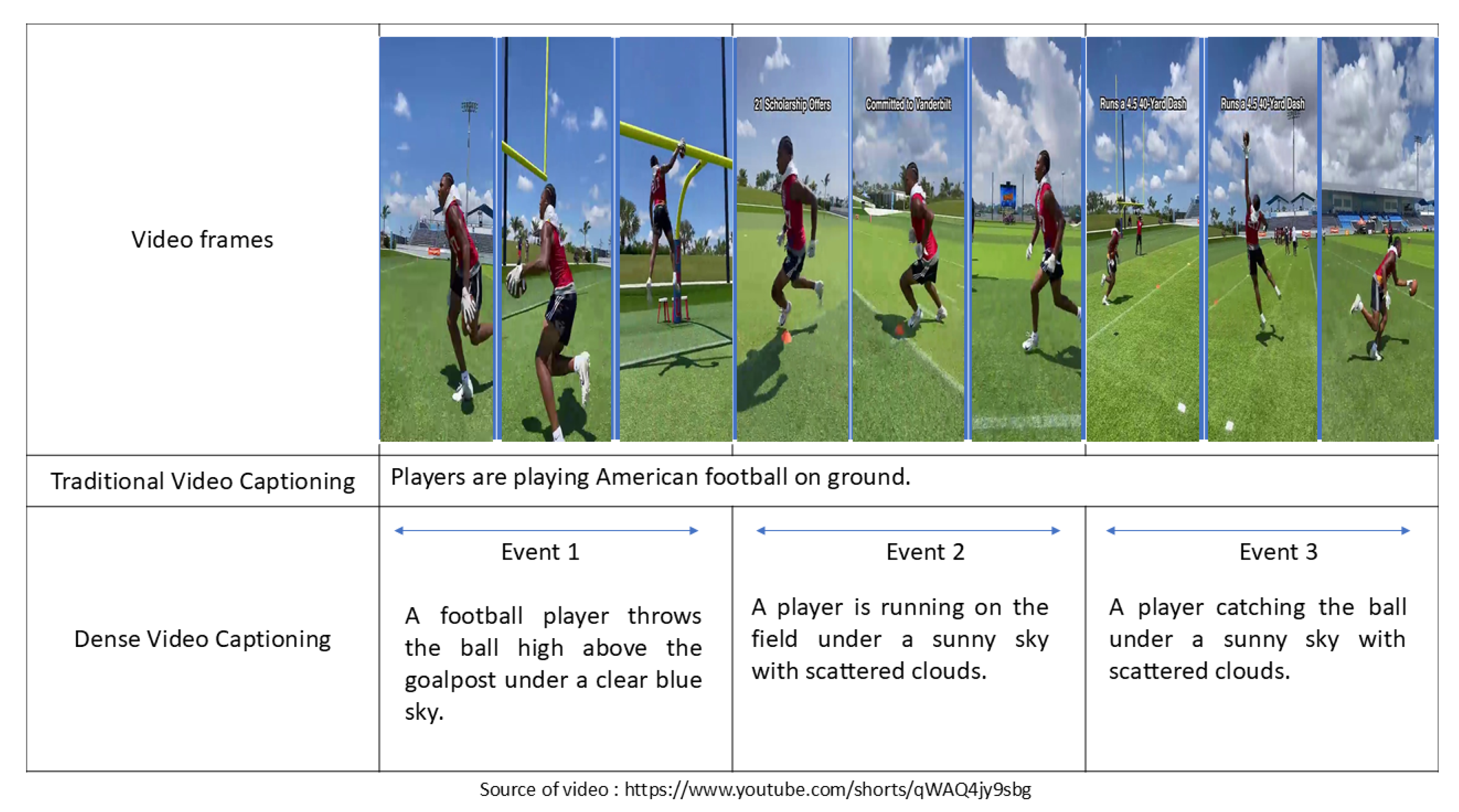

1. Introduction

2. Literature Review of Dense Video Captioning Methods

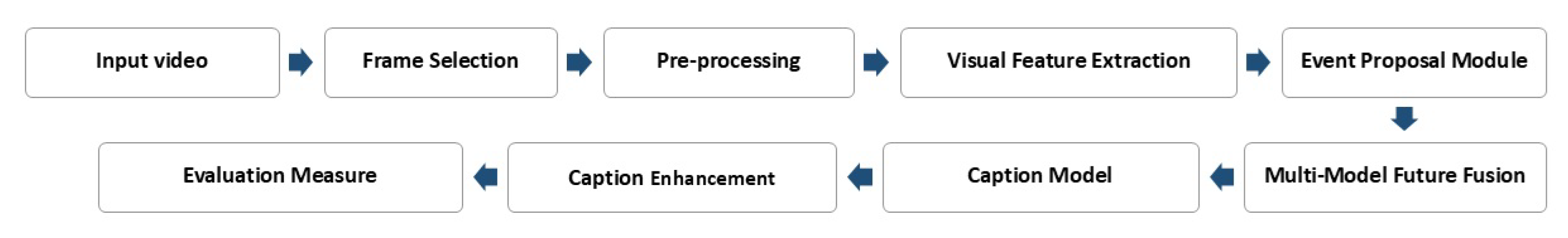

3. Context-Aware Dense Video Captioning with Linguistic Modules and a Caption Selection Method

| Algorithm 1: Context-Aware Dense Video Captioning with Linguistic Modules and a Caption Selection Method | |
|---|---|
| Input: X = […] | ▹ N = total frames |
| Input: V = […] | ▹ T = N/… |
| Input: E = word embedding vector | |
| Input: … = 16 | |
| Output: R = […] | |
| …: C3D features of video V for frames | ▹ i = 1, 2, …, T |
| …: n events (P) from the video | ▹ i = 1, 2, …, n |
| …: n captions (c) from the video | ▹ i = 1, 2, …, n |
| …: List of captions for event (p) in the video | |
| …: n proposals generated at time stamp t | |
| …: Confidence scores of the n proposals at time stamp t | |
| …: Hidden vector of the selected event at time stamp m, where m is the start time | |
| …: Hidden vector of the selected event at time stamp n, where n is the end time | |
| …: C3D features + hidden vectors of the event | |
| …: C3D features of the selected event | |
| …: Word vector in the forward direction | |
| …: Word vector in the backward direction | |
| …: Feature vector (visual + textual features of the previous caption) | |
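Algorithm 1 lists N as the total number of frames, T as N divided by a second quantity, and one input fixed at 16. A minimal sketch of that bookkeeping follows, assuming the 16 is the clip length (frames per C3D feature) and that trailing frames which do not fill a whole clip are dropped. Both points are assumptions, and `split_into_clips` is a hypothetical helper, not a function from the paper.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")

# Assumption: the input fixed at 16 in Algorithm 1 is the clip length.
CLIP_LENGTH = 16


def split_into_clips(
    frames: Sequence[Frame], clip_length: int = CLIP_LENGTH
) -> List[Sequence[Frame]]:
    """Group N frames into T = N // clip_length non-overlapping clips.

    Leftover frames that do not fill a whole clip are dropped (an assumption).
    """
    if clip_length <= 0:
        raise ValueError("clip_length must be positive")
    num_clips = len(frames) // clip_length
    return [
        frames[i * clip_length:(i + 1) * clip_length] for i in range(num_clips)
    ]


frames = list(range(100))  # stand-in for N = 100 decoded frames
clips = split_into_clips(frames)
print(len(clips))  # prints 6, i.e. T = 100 // 16
```

Each clip would then be passed to a feature extractor to obtain one feature vector per step i = 1, 2, …, T; the extractor itself is outside the scope of this sketch.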

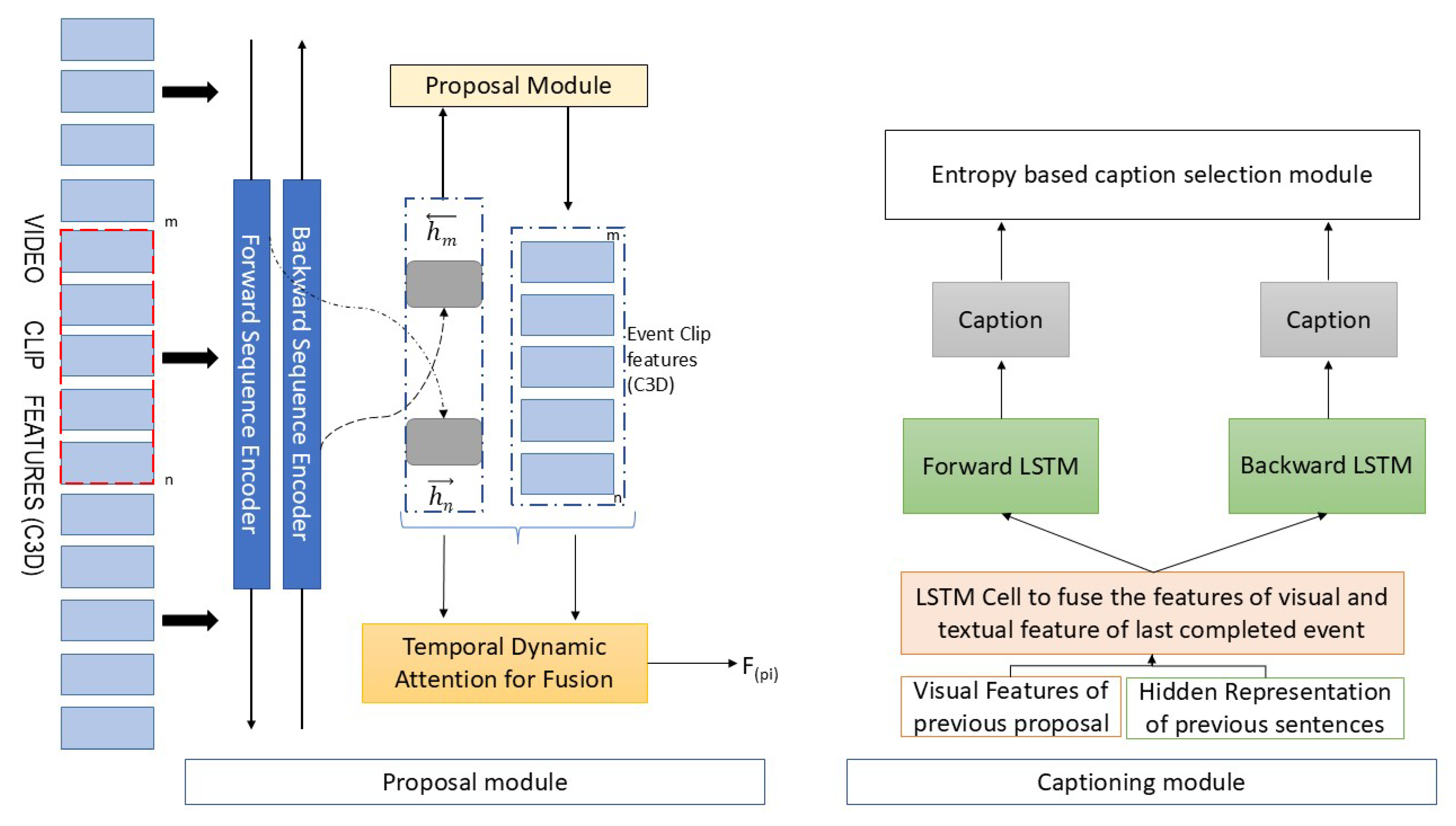

3.1. Event Proposal Module

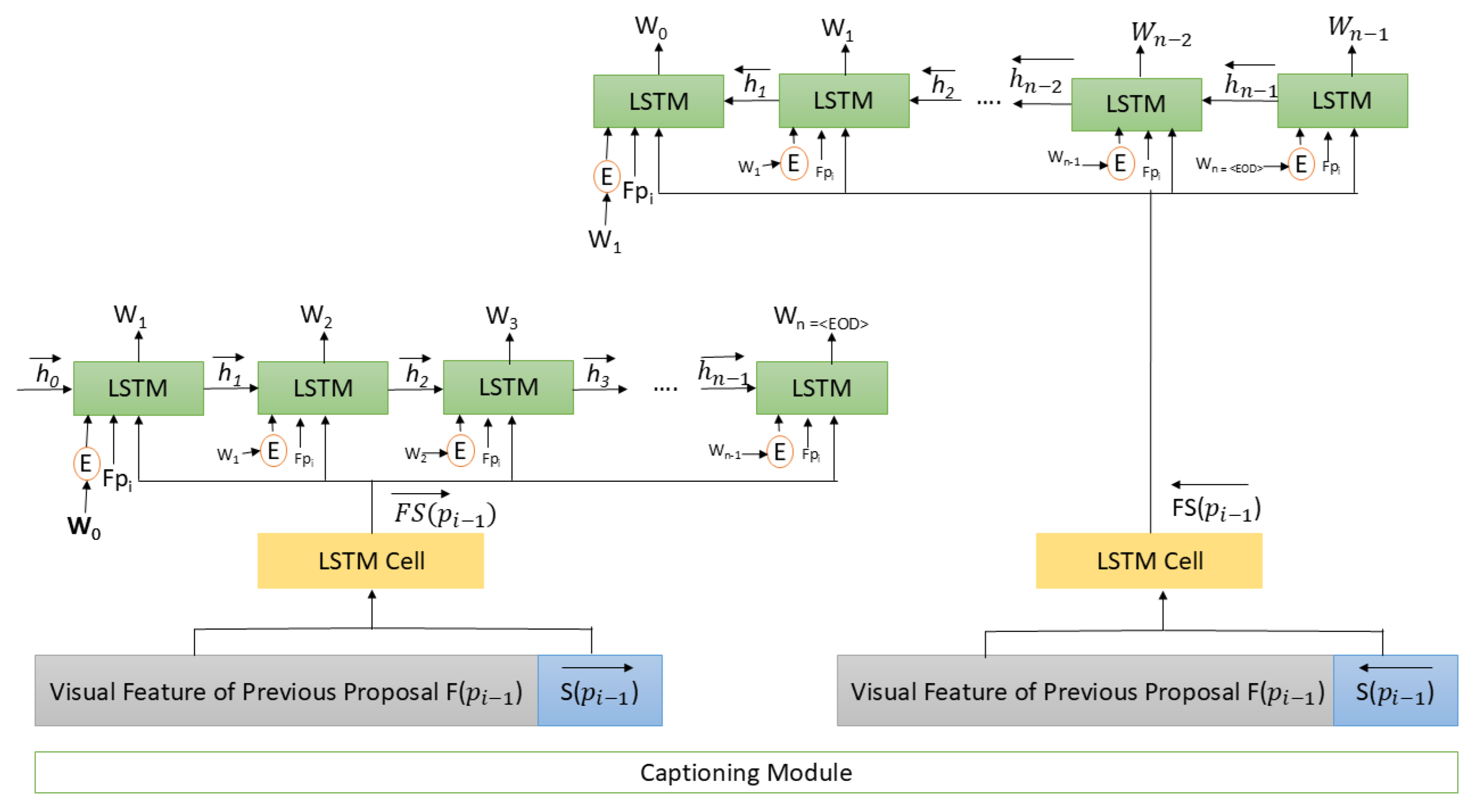

3.2. Caption Module with Semantic and Linguistic Model

3.3. Caption Selection Module

3.4. Loss Function

4. Results and Implementation

5. Conclusions and Future Direction

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kim, J.; Rohrbach, A.; Darrell, T.; Canny, J.; Akata, Z. Textual Explanations for Self-Driving Vehicles. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 577–593.
2. Potapov, D.; Douze, M.; Harchaoui, Z.; Schmid, C. Category-specific video summarization. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 540–555.
3. Dinh, Q.M.; Ho, M.K.; Dang, A.Q.; Tran, H.P. Trafficvlm: A controllable visual language model for traffic video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 7134–7143.
4. Yang, H.; Meinel, C. Content based lecture video retrieval using speech and video text information. IEEE Trans. Learn. Technol. 2014, 7, 142–154.
5. Anne Hendricks, L.; Wang, O.; Shechtman, E.; Sivic, J.; Darrell, T.; Russell, B. Localizing moments in video with natural language. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 22–25 July 2017; pp. 5803–5812.
6. Aggarwal, A.; Chauhan, A.; Kumar, D.; Mittal, M.; Roy, S.; Kim, T. Video Caption Based Searching Using End-to-End Dense Captioning and Sentence Embeddings. Symmetry 2020, 12, 992.
7. Wu, S.; Wieland, J.; Farivar, O.; Schiller, J. Automatic Alt-text: Computer-generated Image Descriptions for Blind Users on a Social Network Service. In Proceedings of the CSCW, Portland, OR, USA, 25 February–1 March 2017; pp. 1180–1192.
8. Shi, B.; Ji, L.; Liang, Y.; Duan, N.; Chen, P.; Niu, Z.; Zhou, M. Dense Procedure Captioning in Narrated Instructional Videos. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 6382–6391.
9. Chen, Y.; Wang, S.; Zhang, W.; Huang, Q. Less is more: Picking informative frames for video captioning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 358–373.
10. Suin, M.; Rajagopalan, A. An Efficient Framework for Dense Video Captioning. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020; pp. 12039–12046.
11. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.
12. Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic And Extrinsic Evaluation Measures For Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29–30 June 2005; pp. 65–72.
13. Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Proceedings of the Workshop on Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; Association for Computational Linguistics: Stroudsburg, PA, USA, 2004.
14. Anderson, P.; Fernando, B.; Johnson, M.; Gould, S. Spice: Semantic propositional image caption evaluation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 382–398.
15. Vedantam, R.; Lawrence Zitnick, C.; Parikh, D. Cider: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.
16. Kusner, M.; Sun, Y.; Kolkin, N.; Weinberger, K. From word embeddings to document distances. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 957–966.
17. Fujita, S.; Hirao, T.; Kamigaito, H.; Okumura, M.; Nagata, M. SODA: Story Oriented Dense Video Captioning Evaluation Framework. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part VI 16; Springer: Cham, Switzerland, 2020; pp. 517–531.
18. Escorcia, V.; Heilbron, F.C.; Niebles, J.C.; Ghanem, B. Daps: Deep action proposals for action understanding. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 768–784.
19. Buch, S.; Escorcia, V.; Shen, C.; Ghanem, B.; Carlos Niebles, J. Sst: Single-stream temporal action proposals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 2911–2920.
20. Krishna, R.; Hata, K.; Ren, F.; Fei-Fei, L.; Niebles, J.C. Dense-Captioning Events in Videos. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 706–715.
21. Wang, J.; Jiang, W.; Ma, L.; Liu, W.; Xu, Y. Bidirectional Attentive Fusion with Context Gating for Dense Video Captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7190–7198.
22. Li, Y.; Yao, T.; Pan, Y.; Chao, H.; Mei, T. Jointly Localizing and Describing Events for Dense Video Captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7492–7500.
23. Xu, H.; Li, B.; Ramanishka, V.; Sigal, L.; Saenko, K. Joint event detection and description in continuous video streams. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 396–405.
24. Mun, J.; Yang, L.; Ren, Z.; Xu, N.; Han, B. Streamlined Dense Video Captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6588–6597.
25. Zhang, Z.; Xu, D.; Ouyang, W.; Zhou, L. Dense Video Captioning using Graph-based Sentence Summarization. IEEE Trans. Multimed. 2020, 23, 1799–1810.
26. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 652–660.
27. Zhou, L.; Zhou, Y.; Corso, J.J.; Socher, R.; Xiong, C. End-to-End Dense Video Captioning with Masked Transformer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8739–8748.
28. Hershey, S.; Chaudhuri, S.; Ellis, D.P.; Gemmeke, J.F.; Jansen, A.; Moore, R.C.; Plakal, M.; Platt, D.; Saurous, R.A.; Seybold, B.; et al. CNN architectures for large-scale audio classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 131–135.
29. Huang, X.; Chan, K.H.; Wu, W.; Sheng, H.; Ke, W. Fusion of multi-modal features to enhance dense video caption. Sensors 2023, 23, 5565.
30. Iashin, V.; Rahtu, E. A Better Use of Audio-Visual Cues: Dense Video Captioning with Bi-modal Transformer. arXiv 2020, arXiv:2005.08271.
31. Yu, Z.; Han, N. Accelerated masked transformer for dense video captioning. Neurocomputing 2021, 445, 72–80.
32. Iashin, V.; Rahtu, E. Multi-modal Dense Video Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 958–959.
33. Wang, T.; Zhang, R.; Lu, Z.; Zheng, F.; Cheng, R.; Luo, P. End-to-end dense video captioning with parallel decoding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 6847–6857.
34. Choi, W.; Chen, J.; Yoon, J. Parallel Pathway Dense Video Captioning With Deformable Transformer. IEEE Access 2022, 10, 129899–129910.
35. Huang, X.; Chan, K.H.; Ke, W.; Sheng, H. Parallel dense video caption generation with multi-modal features. Mathematics 2023, 11, 3685.
36. Aafaq, N.; Mian, A.S.; Akhtar, N.; Liu, W.; Shah, M. Dense Video Captioning with Early Linguistic Information Fusion. IEEE Trans. Multimed. 2022, 25, 2309–2322.
37. Deng, C.; Chen, S.; Chen, D.; He, Y.; Wu, Q. Sketch, ground, and refine: Top-down dense video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 234–243.
38. Choi, W.; Chen, J.; Yoon, J. Step by step: A gradual approach for dense video captioning. IEEE Access 2023, 11, 51949–51959.
39. Yang, A.; Nagrani, A.; Seo, P.H.; Miech, A.; Pont-Tuset, J.; Laptev, I.; Sivic, J.; Schmid, C. Vid2seq: Large-scale pretraining of a visual language model for dense video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 10714–10726.
40. Wei, Y.; Yuan, S.; Chen, M.; Shen, X.; Wang, L.; Shen, L.; Yan, Z. MPP-net: Multi-perspective perception network for dense video captioning. Neurocomputing 2023, 552, 126523.
41. Zhou, X.; Arnab, A.; Buch, S.; Yan, S.; Myers, A.; Xiong, X.; Nagrani, A.; Schmid, C. Streaming dense video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 18243–18252.
42. Kim, M.; Kim, H.B.; Moon, J.; Choi, J.; Kim, S.T. Do You Remember? Dense Video Captioning with Cross-Modal Memory Retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 13894–13904.
43. Wu, H.; Liu, H.; Qiao, Y.; Sun, X. DIBS: Enhancing Dense Video Captioning with Unlabeled Videos via Pseudo Boundary Enrichment and Online Refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 18699–18708.
44. Bhatt, D.; Thakkar, P. Improving Narrative Coherence in Dense Video Captioning through Transformer and Large Language Models. J. Innov. Image Process. 2025, 7, 333–361.
45. Shen, Z.; Li, J.; Su, Z.; Li, M.; Chen, Y.; Jiang, Y.G.; Xue, X. Weakly supervised dense video captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; Volume 2.
46. Duan, X.; Huang, W.; Gan, C.; Wang, J.; Zhu, W.; Huang, J. Weakly Supervised Dense Event Captioning in Videos. arXiv 2018, arXiv:1812.03849.
47. Rahman, T.; Xu, B.; Sigal, L. Watch, listen and tell: Multi-modal weakly supervised dense event captioning. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8907–8916.
48. Chen, S.; Jiang, Y.G. Towards bridging event captioner and sentence localizer for weakly supervised dense event captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8425–8435.
49. Aytar, Y.; Vondrick, C.; Torralba, A. Soundnet: Learning sound representations from unlabeled video. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 892–900.
50. Estevam, V.; Laroca, R.; Pedrini, H.; Menotti, D. Dense video captioning using unsupervised semantic information. arXiv 2021, arXiv:2112.08455.
51. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 221–231.
52. Song, Y.; Chen, S.; Zhao, Y.; Jin, Q. Team RUC_AIM3 Technical Report at Activitynet 2020 Task 2: Exploring Sequential Events Detection for Dense Video Captioning. arXiv 2020, arXiv:2006.07896.
53. Wang, T.; Zheng, H.; Yu, M.; Tian, Q.; Hu, H. Event-Centric Hierarchical Representation for Dense Video Captioning. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 1890–1900.
54. Marafioti, A.; Zohar, O.; Farré, M.; Noyan, M.; Bakouch, E.; Cuenca, P.; Zakka, C.; Allal, L.B.; Lozhkov, A.; Tazi, N.; et al. Smolvlm: Redefining small and efficient multimodal models. arXiv 2025, arXiv:2504.05299.

Literature Survey on Dense Video Captioning

| Dense Video Captioning Model | Year | Multimodal | Visual Model | Localization Model | Captioning Model | Audio Features | Event Loss Function | Event Localization Loss Function | Captioning Loss |
|---|---|---|---|---|---|---|---|---|---|
| Supervised Dense Video Captioning Models | | | | | | | | | |
| DenseCap [20] | 2017 | - | Convolution 3D | DAPs | LSTM | - | Weighted Binary Cross Entropy | Recall with tIoU | Cross Entropy |
| Jointly Dense [22] | 2018 | - | Convolution 3D | TEP | LSTM | - | Softmax | Smooth L1 | Reinforcement Learning |
| EndtoEnd [27] | 2018 | - | CNN with Self Attention | ProcNet without LSTM Gate | Transformer | - | Binary Cross Entropy | Smooth L1 with tIoU | Weighted Cross Entropy |
| Bidirectional [21] | 2018 | - | Convolution 3D | Bidirectional SST | LSTM with Context Gate | - | Weighted Binary Cross Entropy | tIoU | Sum of Negative Likelihood |
| JEDDi-Net [23] | 2019 | - | Convolution 3D | R-C3D | LSTM | - | Binary Cross Entropy | tIoU | Log Likelihood |
| Streamline [24] | 2019 | - | Convolution 3D | SST PointerNet | LSTM with Context Gate | - | Weighted Binary Cross Entropy | tIoU | Reinforcement Learning |
| EfficientNet [10] | 2020 | - | CNN with Self Attention | ProcNet without LSTM Gate | Transformer | - | Binary Cross Entropy | Smooth L1 with tIoU | Weighted Cross Entropy |
| GPaS [25] | 2020 | - | Convolution Graph Network | GCN-LSTM | LSTM | - | - | - | Cross Entropy |
| Bidirectional Transformer [30] | 2020 | ✓ | Inflated 3D | Bimodal Multi-Headed Attention | Bimodal Transformer | VGGish | Binary Cross Entropy | MSE | KL-divergence |
| AMT [31] | 2021 | ✓ | TCN Self Attention | Anchor-free Local Attention | Single Shot Masking | - | Cross Entropy | Regression Loss | Weighted Cross Entropy |
| SGR [37] | 2021 | ✓ | CNN backbone with Transformer | Dual Path Cross Attention | - | - | - | Logistic Regression Loss | Cross Entropy |
| PDVC [33] | 2021 | ✓ | CNN with Transformer | Transformer with Localization Head | LSTM with Soft Attention | - | Binary Cross Entropy | Generalized IoU | Cross Entropy |
| PPVC [34] | 2022 | ✓ | Convolution 3D with Self Attention | Cross Attention | Multi-Stack Cross Attention | - | - | Regression Loss | Log Likelihood |
| VSJM-Net [36] | 2022 | ✓ | 2D-CNN ViSE | Multi-Headed Attention | FFN | - | Binary Cross Entropy | - | Cross Entropy |
| SBS [38] | 2023 | - | C3D + Transformer | Multi-Headed Attention | - | | Binary Cross Entropy | Negative Log Likelihood | Cross Entropy |
| Vid2seq [39] | 2023 | - | Transformer | Multi-Headed Attention | Transformer | - | - | - | Cross Entropy |
| MPP-net [40] | 2023 | - | CNN + Transformer | Self Attention | LSTM + Transformer | - | Cross Entropy | Generalized tIoU | Cross Entropy |
| PDVC-MM [35] | 2023 | ✓ | I3D | Transformer | LSTM with Deformable Soft Attention | VGGish | Cross Entropy | IoU | Cross Entropy |
| FMM-DVC [29] | 2023 | ✓ | I3D | Transformer | LSTM | VGGish | - | IoU | Cross Entropy |
| Streaming DVC [41] | 2024 | - | Transformer | Self Attention | Transformer | - | - | - | - |
| CM-DVC [42] | 2024 | - | Transformer | Self Attention | Transformer | - | Cross Entropy | - | Cross Entropy |
| DIBS [43] | 2024 | - | Transformer | LLM | LLM | - | - | - | Cross Entropy |
| TL-DVC [44] | 2025 | - | Convolution 3D | Bidirectional SST | Transformer | - | Weighted Binary Cross Entropy | tIoU | Sum of Negative Likelihood |
| Weakly Supervised Models | | | | | | | | | |
| L-FCN [45] | 2017 | - | VGG-16 | - | LSTM | - | - | MIMLL | Cross Entropy |
| ws-dec [46] | 2018 | - | - | - | GRU | - | Self-Reconstruction Loss | L2 | Cross Entropy |
| Watch Listen Tell [47] | 2019 | ✓ | Convolution 3D | - | GRU | MFCC, CQT, SoundNet | Binary Cross Entropy | L2 | Cross Entropy |
| EC-SL [48] | 2021 | - | Convolution 3D | - | RNN | - | Reconstruction Loss | - | Cross Entropy |
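Several rows of the survey list temporal IoU (tIoU) as the localization criterion. As a minimal sketch of that measure, assuming each event is a `(start, end)` pair in seconds with `start <= end`, the overlap of a proposal with a ground-truth segment could be computed as follows. The name `temporal_iou` is hypothetical and is not taken from any of the surveyed implementations.

```python
from typing import Tuple

Segment = Tuple[float, float]  # (start, end) in seconds, with start <= end


def temporal_iou(a: Segment, b: Segment) -> float:
    """Temporal intersection over union of two segments on the time axis."""
    # Length of the overlap; zero when the segments are disjoint.
    intersection = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    # Union = sum of both lengths minus the part counted twice.
    union = (a[1] - a[0]) + (b[1] - b[0]) - intersection
    return intersection / union if union > 0 else 0.0


proposal = (0.0, 10.0)
ground_truth = (5.0, 15.0)
print(round(temporal_iou(proposal, ground_truth), 3))  # prints 0.333
```

A proposal is typically counted as correct when its tIoU with a ground-truth event exceeds a chosen threshold; the thresholds used by the individual models are not stated in the table.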

| Model | Evaluation Measures for DVC | ||||||

|---|---|---|---|---|---|---|---|

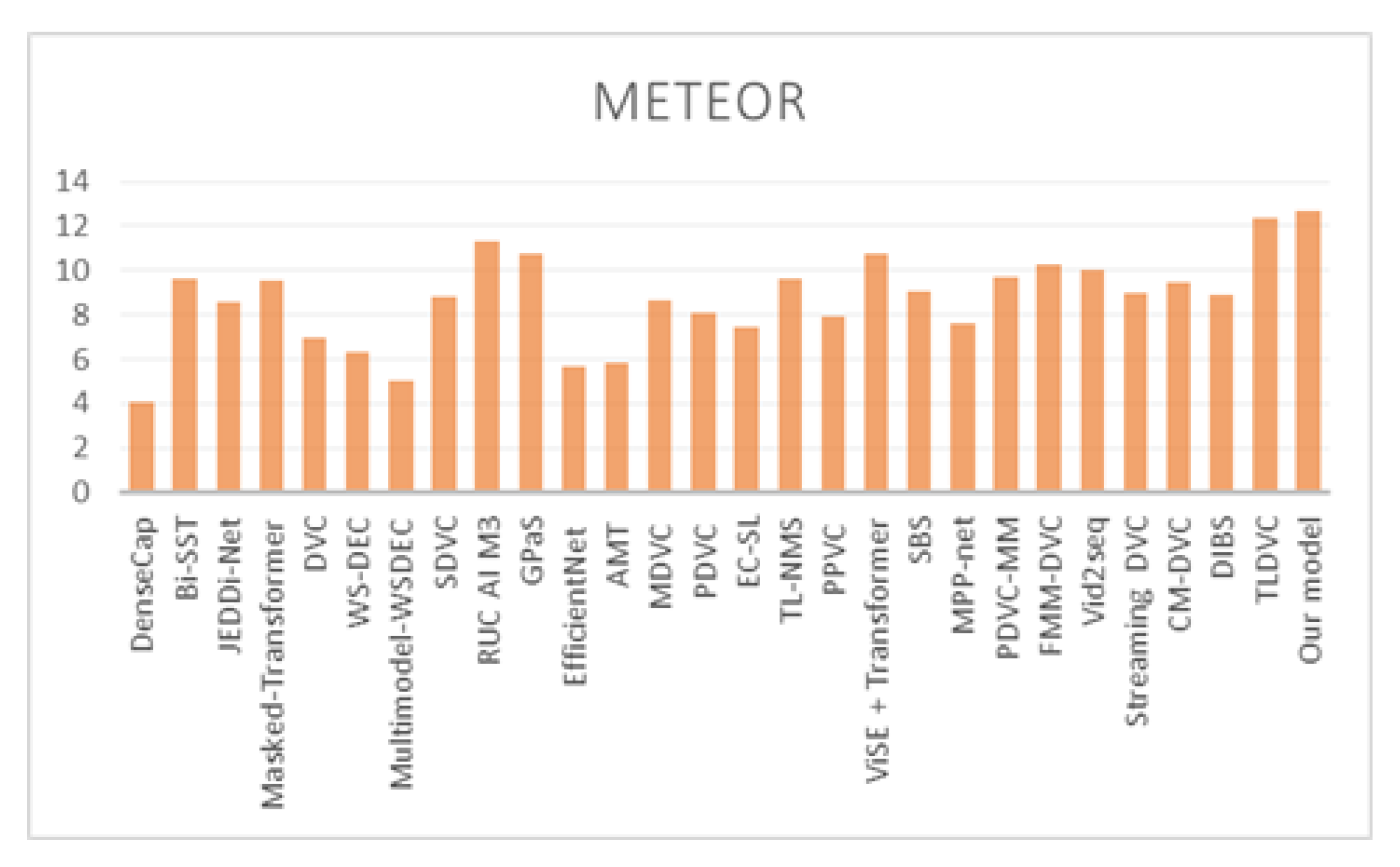

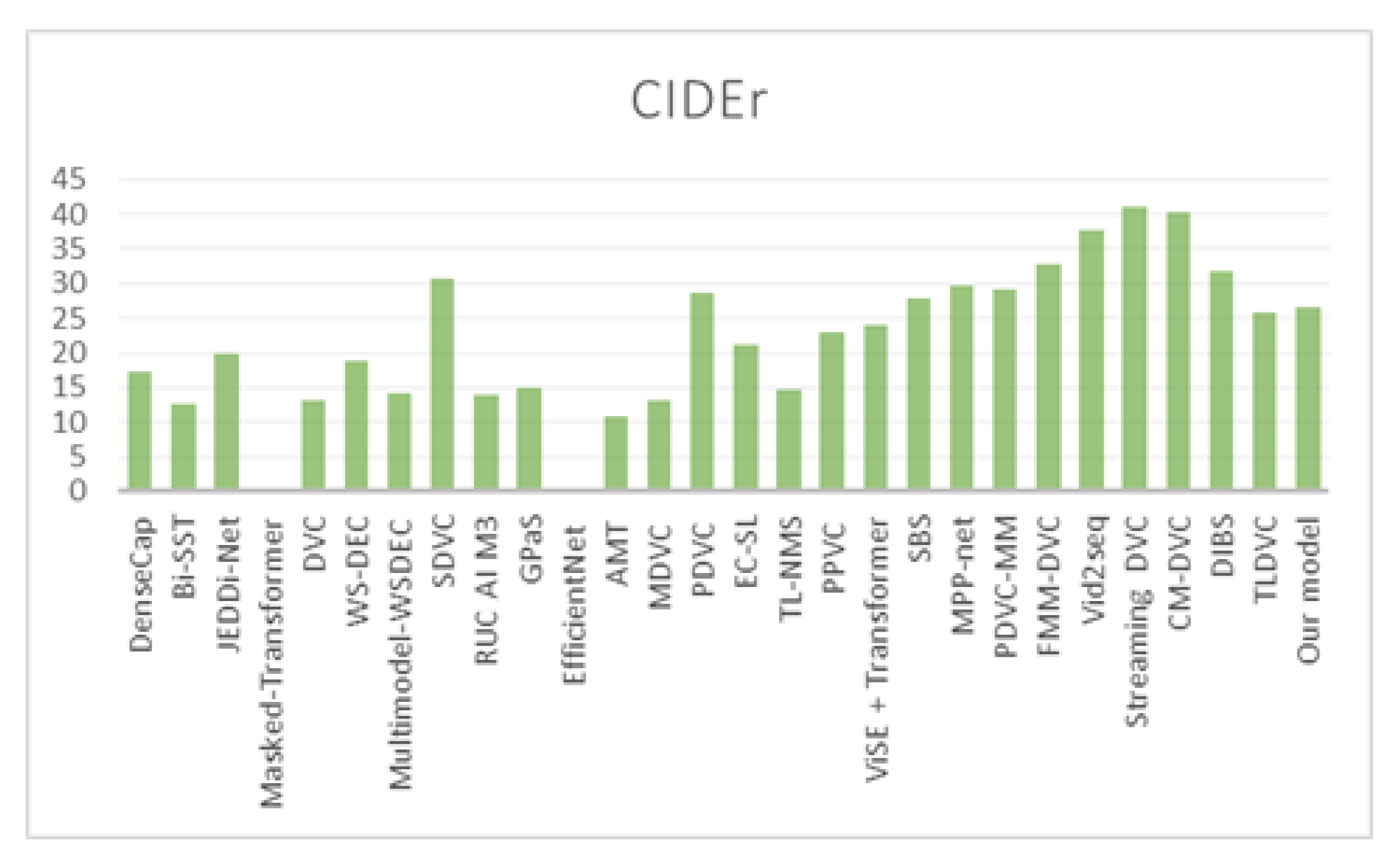

| BLEU @ 1 | BLEU @ 2 | BLEU @ 3 | BLEU @ 4 | METEOR | ROUGH | CIDEr | |

| DenseCap [20] | 17.95 | 7.69 | 3.89 | 2.2 | 4.05 | - | 17.29 |

| Bi-SST [21] | 19.37 | 8.84 | 4.41 | 2.3 | 9.6 | 19.29 | 12.68 |

| JEDDi-Net [23] | 19.97 | 9.1 | 4.06 | 1.63 | 8.58 | 19.63 | 19.88 |

| Masked-Transformer [27] | - | - | 4.76 | 2.23 | 9.56 | - | - |

| DVC [22] | 12.22 | 5.72 | 2.27 | 0.74 | 6.93 | - | 13.21 |

| WS-DEC [46] | 12.41 | 5.5 | 2.62 | 1.27 | 6.3 | 12.55 | 18.77 |

| Multimodel-WSDEC [47] | 10.0 | 4.2 | 1.92 | 0.94 | 5.03 | 10.39 | 14.27 |

| SDVC [24] | 17.92 | 7.99 | 2.94 | 0.93 | 8.82 | - | 30.68 |

| RUC AI M3 [52] | 16.59 | 9.65 | 5.32 | 2.91 | 11.28 | - | 14.03 |

| GPaS [25] | 19.78 | 9.96 | 5.06 | 2.34 | 10.75 | - | 14.84 |

| EfficientNet [10] | - | - | 2.54 | 1.10 | 5.70 | - | - |

| AMT [31] | 11.75 | 5.61 | 2.42 | 1.20 | 5.82 | - | 10.87 |

| MDVC [50] | - | - | 4.57 | 2.5 | 8.65 | 13.62 | 13.09 |

| PDVC [33] | - | - | - | 1.96 | 8.08 | - | 28.59 |

| TL-NMS [53] | - | - | - | 1.29 | 9.63 | - | 14.71 |

| PPVC [34] | 14.93 | 7.40 | 3.58 | 1.68 | 7.91 | - | 23.02 |

| EC-SL [48] | 13.36 | 6.05 | 2.78 | 1.33 | 7.49 | 13.02 | 21.21 |

| ViSE + Transformer [36] | 22.18 | 10.92 | 5.58 | 2.72 | 10.78 | 21.98 | 23.89 |

| SBS [38] | - | - | - | 1.08 | 9.05 | - | 27.92 |

| MPP-net [40] | - | - | - | 2.04 | 7.61 | - | 29.76 |

| PDVC-MM [35] | 15.23 | 8.02 | 3.91 | 1.75 | 9.68 | - | 29.17 |

| FMM-DVC [29] | 16.77 | 8.15 | 4.03 | 1.91 | 10.24 | - | 32.82 |

| Vid2seq * [39] | - | - | - | - | 10.0 | - | 37.8 |

| Streaming DVC [41] | - | - | - | - | 9.0 | - | 41.2 |

| CM-DVC [42] | - | - | - | 2.88 | 9.43 | - | 40.24 |

| DIBS [43] | - | - | - | - | 8.93 | - | 31.89 |

| TL-DVC [44] | 23.01 | 11.27 | 5.78 | 2.84 | 12.32 | 22.64 | 25.81 |

| Our model and its variants | |||||||

| DVC-CSL with R | 20.98 | 9.23 | 4.49 | 2.32 | 9.74 | 19.79 | 17.35 |

| DVC-DCSL with R | 21.89 | 9.46 | 4.97 | 2.36 | 10.01 | 20.07 | 18.17 |

| DVC-DCSL with R+MC+E | 22.02 | 9.86 | 5.02 | 2.45 | 10.32 | 20.68 | 18.79 |

| DVC-DCSL with R+MC+M | 21.98 | 9.82 | 5.04 | 2.43 | 10.31 | 20.58 | 18.81 |

| DVC-DCSL with R+MC+E+L | 24.87 | 12.02 | 6.02 | 2.86 | 12.24 | 23.69 | 26.23 |

| DVC-DCSL with R+MC+M+L | 23.98 | 11.92 | 6.13 | 2.94 | 12.71 | 23.63 | 26.67 |
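The BLEU@n columns rest on clipped (modified) n-gram precision between a generated caption and a reference caption. The sketch below illustrates only that building block for a single reference and whitespace tokenization; it is not the evaluation script behind the reported numbers, and it omits the brevity penalty and the averaging across n-gram orders that full BLEU applies. The helper names `ngram_counts` and `modified_precision` are hypothetical.

```python
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate: List[str], reference: List[str], n: int) -> float:
    """Clipped n-gram precision of a candidate against one reference."""
    cand = ngram_counts(candidate, n)
    ref = ngram_counts(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    # Each candidate n-gram is credited at most as often as it occurs
    # in the reference, which penalizes repeated words.
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


candidate = "a man is playing a guitar".split()
reference = "a man plays a guitar".split()
print(round(modified_precision(candidate, reference, 1), 3))  # prints 0.667
print(round(modified_precision(candidate, reference, 2), 3))  # prints 0.4
```

Here four of the six candidate unigrams and two of the five candidate bigrams are matched by the reference, which gives the two printed values.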

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bhatt, D.; Thakkar, P. Understanding Video Narratives Through Dense Captioning with Linguistic Modules, Contextual Semantics, and Caption Selection. AI 2025, 6, 166. https://doi.org/10.3390/ai6080166
