1. Introduction

The rise of artificial intelligence (AI) has led to profound transformations across various sectors, enabling new models of interaction between people, technology, and information [1]. As AI continues to permeate everyday life, it presents both opportunities and challenges, particularly in addressing the ethics of inclusion, diversity, equity, accessibility, and safety (IDEAS). AI’s ability to analyse vast datasets, automate processes, and deliver customised experiences can democratise access to critical services such as healthcare, education, and public welfare [2]. In education, AI-powered tools have the potential to enhance accessibility for students with disabilities by providing real-time transcription, text-to-speech functionality, and personalised learning experiences, contributing to more inclusive educational environments [3].

AI applications can also assist individuals with disabilities in healthcare. For example, AI-powered lip-reading apps help speech-impaired patients communicate, contributing to more equitable healthcare solutions [4]. In addition, AI may facilitate the job search process for people with disabilities and promote inclusive workplaces by automating tasks and tailoring work environments to specific needs, thereby supporting diversity and inclusion in the workforce [5]. In the media and entertainment sector, AI may enable people with visual and hearing impairments to enjoy films and shows through features such as audio subtitles and sign language interpretation, making entertainment more accessible [6].

Recent work has demonstrated AI’s utility in supporting neurodivergent populations, for instance by using explainable machine-learning features and smart web interfaces to detect autism spectrum disorders at early developmental stages [7].

However, this technological advancement also raises concerns about exacerbating existing inequalities, reinforcing biases, and marginalising vulnerable populations [8,9]. Consequently, the ethical and societal implications of AI’s integration into society are urgent areas of inquiry, particularly through the ethical lens of IDEAS.

In this paper, inclusion is defined as designing AI that empowers all stakeholder groups; diversity as ensuring varied demographic and cognitive representation; equity as fair treatment and resource allocation; accessibility as barrier-free technical interfaces; and safety as risk mitigation against harm. Each principle draws on human-centred design theory [9].

While the potential benefits of AI are widely acknowledged, its rapid deployment has ignited global debates over its ethical use. AI systems are increasingly employed in high-stakes decision-making processes such as hiring, healthcare diagnostics, and criminal justice. Yet these systems remain susceptible to biases that can perpetuate systemic inequalities [10,11]. Algorithmic decision-making often reflects and reinforces societal biases encoded in training data [12]. For instance, facial recognition technologies have been shown to exhibit racial and gender biases, disproportionately misidentifying members of certain demographic groups [13]. These biases underscore the critical need to examine AI’s intersection with IDEAS principles and to ensure that technological advancements do not deepen social disparities.

The ethical implications of AI have not gone unnoticed by policymakers and scholars. Efforts to regulate AI, such as the European Union’s AI Act [14], reflect a growing recognition that technological innovation must be accompanied by ethical frameworks to safeguard fundamental human rights. The AI Act aims to establish stringent guidelines to ensure AI technologies are developed and used responsibly, minimising risks related to privacy violations, algorithmic biases, and societal harm [15]. Beyond regulatory measures, there is an increasing demand for AI systems that not only avoid harm, such as discrimination or unfair treatment [16], but actively promote IDEAS principles by being inclusive, equitable, and accessible by design. Such systems must place particular emphasis on marginalised groups, who are often excluded from technological innovation [17]. AI’s growing presence in social, economic, and political spheres makes it essential to scrutinise its influence on the broader IDEAS agenda. The lack of transparency in AI decision-making exacerbates equity concerns, as individuals from marginalised communities may have little recourse to challenge biased or unfair outcomes [18].

To explore these pressing issues, this paper presents the findings of a pilot qualitative study conducted with industry and academic experts in AI, examining how AI could be developed and improved through IDEAS principles and building on previous research [19]. The pilot study investigates the opportunities and challenges posed by AI technologies in promoting inclusion, diversity, equity, accessibility, and safety across sectors such as education, healthcare, employment, media, and entertainment, as illustrated above.

This research is needed to connect the interdisciplinary facets of AI and to gain insight into its impact across different areas of society. The key research questions are as follows:

- (RQ1) How can AI technologies be designed to foster inclusion and equity rather than perpetuate existing inequalities?
- (RQ2) What risks exist in AI use, particularly for marginalised communities, and how can these risks be mitigated?
- (RQ3) How can we ensure that AI systems are accessible to all, enabling meaningful participation from diverse groups?

By addressing these questions, this paper aims to contribute to a growing body of knowledge on the ethical development of AI and provide actionable recommendations for integrating IDEAS principles into AI design and implementation.

1.1. State of the Art: Brief Review of Artificial Intelligence and the IDEAS Principles

This section aims to review prior work on AI ethics to identify gaps in empirical analyses of IDEAS principles (RQ1), the role of participatory frameworks in bridging theory and practice (RQ2), and methodological best practices for inclusive design (RQ3).

Due to its complexity and widespread implications, artificial intelligence is challenging to define, and there is no generally accepted definition [20,21]. Some scientists define AI in relation to algorithms; others emphasise its resemblance to human intelligence or describe it as a technology that enables machines to imitate complex human skills [20]. Current definitions lack consensus and are broad enough to leave room for further refinement [20]. One widely cited definition by the High-Level Expert Group on Artificial Intelligence (AI HLEG) describes AI as “systems that display intelligent behaviour by analysing their environment and taking actions—with some degree of autonomy—to achieve specific goals” [20,22]. This definition provides a comprehensive view of AI, encompassing its diverse applications rather than treating it merely as an imitation machine.

The application of AI in critical societal domains, such as government, healthcare, defence, education, media, and entertainment, highlights the need to address ethical, safety, and privacy concerns [20]. Moreover, AI’s multifaceted nature requires examination from different perspectives. The 2024 Hype Cycle for Artificial Intelligence [23] illustrates rising expectations for technologies such as autonomic systems, embodied AI, and composite AI, with AI engineering currently at its peak. This suggests that AI will continue to evolve, fostering further advancements and the creation of new subfields with expected impacts across all sectors.

Research and development in AI has grown significantly over the past decade, as evidenced by the increasing number of publications and patents [24]. A Scopus search for the keywords “artificial AND intelligence” yielded over 600,000 results spanning from 1911 to 2025, most of which were published between 2024 and 2025. However, fewer than 500 papers addressed both “artificial AND intelligence” and “inclusive AND design,” underscoring the limited attention paid to the intersection of AI and inclusion.

As highlighted in previous research [25], and as in other emerging fields, existing studies on AI and inclusive design primarily focus on topics such as inclusive learning [26,27,28], tourism [29,30], smart cities and urban design [31,32], healthcare [33], and civil engineering [34,35]. Few studies address how AI can support the IDEAS principles or mitigate associated barriers. While initiatives such as the Joint Research Centre’s 2024 investigation into diversity at AI conferences [36] highlight some progress, a broader effort is needed to ensure that AI aligns with and advances IDEAS principles.

In response to these needs, the European Commission has announced the establishment of seven “AI factories” across Europe, starting in 2025–2026, to enhance AI innovation and strengthen Europe’s global leadership in the field [37].

In human–computer interaction, artificial intelligence is being used across different fields to increase inclusion and accessibility, owing to its potential to create personalised experiences and reduce bias. To achieve this, studies stress the importance of training data that increases representation and extends access to a wider range of users. The use of AI to adjust interface features such as text size or colour contrast is being explored, as are automatic translation and speech recognition, which can give people from different parts of the world access to the same content. Voice-recognition systems may also support learning platforms for people with visual impairments [38].

Given the various ethical implications involved, particular interest has been directed toward supporting neurodiversity through AI systems. For example, one area of interest is the potential of artificial intelligence to enhance communication for people with autism and to aid them in socialising [39]. Several applications are being investigated in this direction, such as voice recognition systems that support people with autism in developing intonation and rhythm, and AI-powered augmentative and alternative communication devices. In these cases, artificial intelligence can be used to track and monitor users’ progress and to adapt to their needs and preferences [39].

Recently, generative AI (GenAI) technologies such as ChatGPT and Midjourney have gained widespread prominence [40]. These systems leverage machine learning models to “generate media content…that mirrors human-made content,” impacting various professions with their “creativity and reasoning abilities” [40].

Despite rapid advancements in AI and initiatives such as the AI Act, “the first-ever legal framework on Artificial Intelligence” [41], challenges persist, particularly regarding privacy and safety. The proliferation of devices collecting personal data has yielded benefits, such as improved healthcare monitoring and human–computer interaction, but has also raised significant privacy concerns [42].

Recent attention has turned to “mental privacy risks”: concerns that arise when AI technologies can access, interpret, or manipulate individuals’ cognitive and emotional data, including information derived from facial expressions, social media interactions, and other behavioural indicators. Such risks encompass the potential exploitation of psychological vulnerabilities, unauthorised inference of personal thoughts or emotions, and the erosion of the boundary between private mental states and external observation. For instance, the increasing prevalence of neurotechnology that monitors brain activity has heightened concerns about “neural” or “mental” privacy. These technologies can potentially access intimate aspects of an individual’s mental life, raising fears about the sanctity of personal thoughts and the potential misuse of such sensitive data [43]. Moreover, AI systems capable of analysing behavioural data to predict mental states hold considerable promise in fields such as healthcare monitoring, but they pose significant privacy challenges when used without careful consideration. The unauthorised collection and analysis of such data can lead to intrusive profiling and potential manipulation, raising ethical and legal questions about the protection of individual autonomy and dignity. Addressing these mental privacy risks requires robust ethical frameworks and regulatory measures to ensure that AI technologies respect and uphold individuals’ rights to cognitive liberty and mental integrity, guiding the design of new solutions toward positive outcomes [42,44].

This pilot study aims to examine AI’s multifaceted nature, highlighting its strengths and weaknesses, as well as proposing future directions. By addressing key issues raised by industry and academic experts, this study seeks to advance discussions on AI’s growth while contributing to solutions that promote inclusion, diversity, equity, accessibility, and safety.

Unlike Fairness-Aware Machine Learning frameworks, which focus primarily on bias metrics [45], and Value-Sensitive Design, which embeds stakeholder values broadly [46], the IDEAS framework holistically integrates five explicit principles tailored to AI design, bridging ethical theory and practitioner action.

Furthermore, this pilot study uniquely integrates practitioner-driven insights across five IDEAS dimensions, introduces a participatory IDEAS framework grounded in both design theory and expert input, and offers actionable recommendations for embedding ethical and inclusive principles into AI development processes.

2. Materials and Methods

To explore the various aspects of artificial intelligence and better understand its impact on inclusion, diversity, equity, accessibility, and safety (IDEAS), this paper investigates the challenges and opportunities presented by this technology through the results of a pilot qualitative study. A preliminary set of semi-structured interviews was conducted to collect insights, perspectives, and expert opinions from professionals in the AI industry and academia. This initial phase of data collection served as the foundation for our inquiry, facilitating further exploration of these topics in future, sector-specific studies. The primary goal of this pilot study was to examine the benefits and challenges of AI, fostering discussions on future developments while considering IDEAS principles as fundamental to its evolution.

The interviews were structured around five key topics: awareness and literacy; privacy, integrity and ethics; safety and well-being; inclusion, diversity, equity, accessibility, and safety; and AI futures. Each interview was analysed using the collaborative platform Miro to extract key areas and insights, future visions, conversation highlights, and identified challenges, as presented in Section 3 of this paper. Sub-clusters were created within these categories to highlight the primary issues emerging from the interviews.

2.1. Participant Recruitment

This exploratory pilot study included 12 participants (n = 12), recruited through a purposive snowball sampling approach. Experts in artificial intelligence from academia, policymaking, and the technology industry were selected based on having at least five years of experience in AI research or development. To ensure epistemic diversity, a maximum variation sampling strategy was implemented. Participants were intentionally selected across a range of professional sectors (academia, industry, design, and policy), gender identities (including women and non-binary participants), and different geographic regions. This sampling logic was not intended to be statistically representative but rather to surface a broad set of expert perspectives on the IDEAS dimensions, purposefully identified for this pilot study.

Participant inclusion was guided by two criteria: (1) demonstrated engagement with AI-related design, development, or governance, and (2) affiliation with recognised institutions or initiatives (e.g., Microsoft, Redmond, WA, USA; Stanford University, Stanford, CA, USA; and leading startups from various countries). Diversity of region, culture, age, ability and disability, and gender was monitored iteratively during recruitment to reduce the over-representation of any single group.

Recruitment was conducted via email and LinkedIn, in line with the University of Cambridge’s ethics guidelines. This study received ethical clearance from the University of Cambridge under ethics submission AHAET/57759/ETH/4 on 31 January 2024.

Before each interview, participants signed an informed consent form. Each outreach included a brief description of this study and an invitation to participate in a one-hour Zoom interview. This duration provided ample time to establish a dialogue with the interviewees and gather in-depth insights on the topics discussed.

The interviews were conducted online between February and September 2024 and began with a brief overview of this study’s objectives.

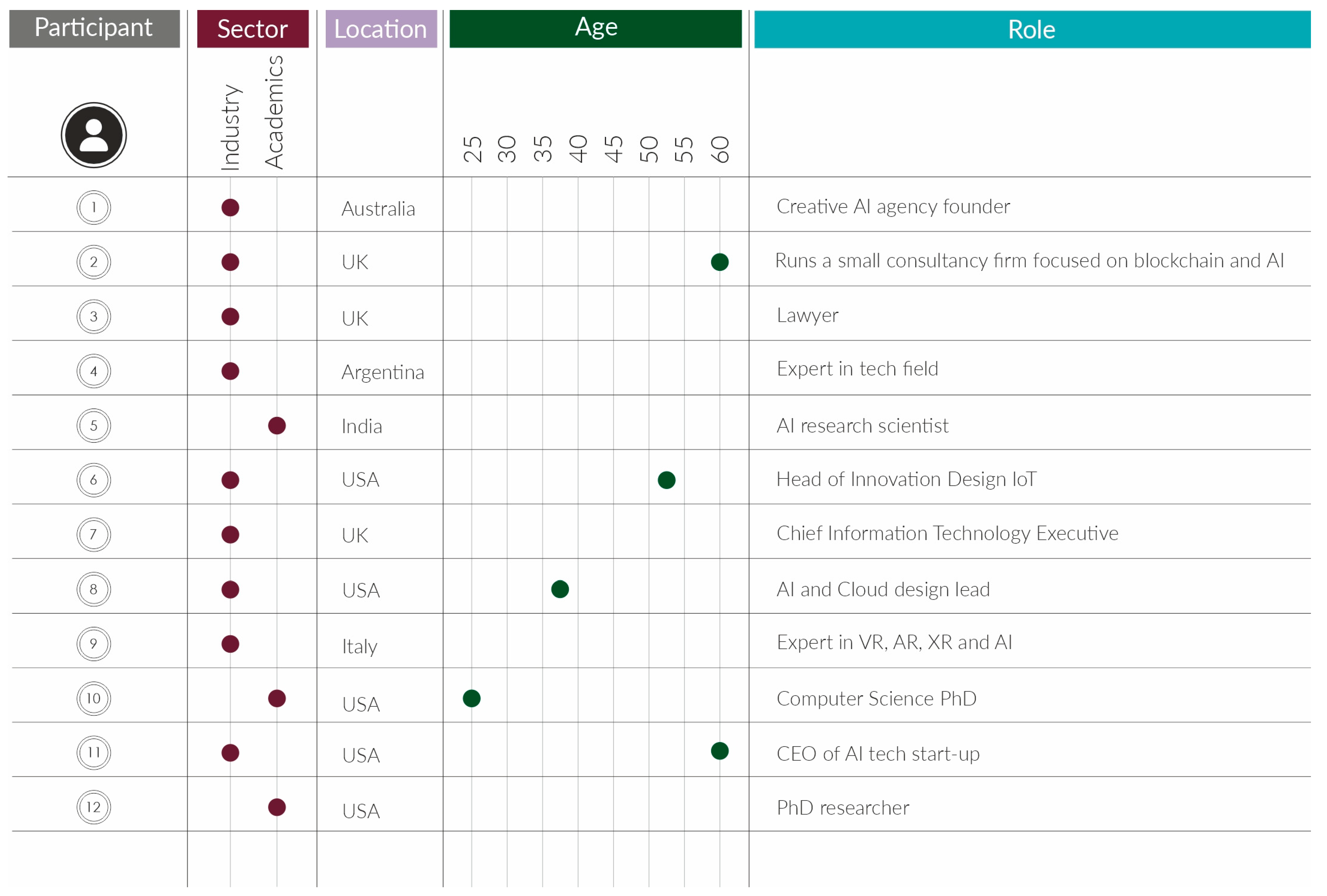

The demographic data (see Figure 1) show seven industry professionals and five academics involved in policymaking, with geographic coverage spanning Europe and North America. Participants ranged from 25 to 60 years old and had varying levels of experience with artificial intelligence (a minimum of five years), allowing for a broader spectrum of viewpoints. Their roles included AI agency founders, consultants, researchers, CEOs of AI companies, and legal experts. Notably, the interviewees represented a diverse set of countries across multiple continents, including Australia, the USA, the UK, Italy, India, and Argentina (Figure 1). This diversity of expertise and professional background provided a comprehensive perspective on the technology analysed, enabling the identification of a wide range of challenges and opportunities associated with AI, in line with the scope of this pilot project.

Several limitations should be acknowledged in interpreting the findings of this pilot study. First, not all participants disclosed their exact age, as reflected in the demographic overview (Figure 1), which limits the precision of age-related analysis. Second, the sample leaned toward industry professionals, and future research should aim to achieve a more balanced representation across sectors, particularly by increasing participation from academia, policymaking, and civil society. Future research will also prioritise broader gender diversity to ensure more inclusive and representative insights.

Additionally, although the sample size of 12 participants aligns with common practice in pilot studies designed for early-stage framework development, it limits the generalisability of the findings. These results are therefore interpreted as exploratory rather than conclusive. To enhance analytical depth and external validity, follow-up studies currently under way within the research group should expand the sample size and include perspectives from under-represented sectors and regions.

Finally, thematic saturation was applied as a qualitative benchmark, defined as the point at which no new codes or themes emerged during the analysis of the final three interviews, following established qualitative research standards [47].

Nonetheless, broader replication and triangulation are required to fully validate the IDEAS framework in more diverse contexts.
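The saturation criterion described above (no new codes in the final three interviews) can be expressed as a simple check over the coded transcripts. The sketch below is illustrative only: it assumes each interview has been reduced to a set of code labels, listed in the order the interviews were analysed, and the function names and example codes are hypothetical rather than taken from this study’s codebook.

```python
from typing import List, Set


def new_codes_per_interview(coded_interviews: List[Set[str]]) -> List[int]:
    """Count the codes each interview adds that no earlier interview produced."""
    seen: Set[str] = set()
    counts: List[int] = []
    for codes in coded_interviews:
        counts.append(len(codes - seen))  # codes not observed before this interview
        seen |= codes
    return counts


def reached_saturation(coded_interviews: List[Set[str]], window: int = 3) -> bool:
    """True if the last `window` interviews introduced no new codes.

    Requires at least one interview before the window, so that there is
    an existing code set against which 'new' can be judged.
    """
    if len(coded_interviews) <= window:
        return False
    return all(n == 0 for n in new_codes_per_interview(coded_interviews)[-window:])


# Hypothetical codes for six interviews, in analysis order.
interviews = [
    {"literacy", "regulation"},
    {"privacy", "literacy"},
    {"consent"},
    {"privacy"},
    {"regulation"},
    {"consent", "literacy"},
]
print(new_codes_per_interview(interviews))  # [2, 1, 1, 0, 0, 0]
print(reached_saturation(interviews))       # True
```

The window size is a parameter so that the same check can be rerun with a stricter criterion, for example in the larger follow-up studies.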

Figure 1. Participant demographics, roles, and areas of expertise.


2.2. Interview Protocol

Each expert interview lasted approximately one hour and was conducted via Zoom. After obtaining verbal consent (5 min), the facilitator began with a brief warm-up to collect professional background and AI-related experience (10 min). The core segment (40 min) followed a semi-structured guide covering:

- IDEAS Awareness (10 min): participants were asked about their understanding of inclusion, diversity, equity, accessibility, and safety in AI.
- Domain Challenges and Opportunities (15 min): recent projects and observed gaps were probed, with follow-up prompts used to elicit concrete examples.
- Framework Feedback (15 min): preliminary IDEAS dimensions were presented, and participants were asked to suggest refinements or identify missing elements.

A final 5 min was reserved for open reflections and suggestions for further research. All sessions were audio-recorded and transcribed verbatim; facilitator notes captured non-verbal cues and emergent themes in real time.

The transcripts were anonymised before analysis.

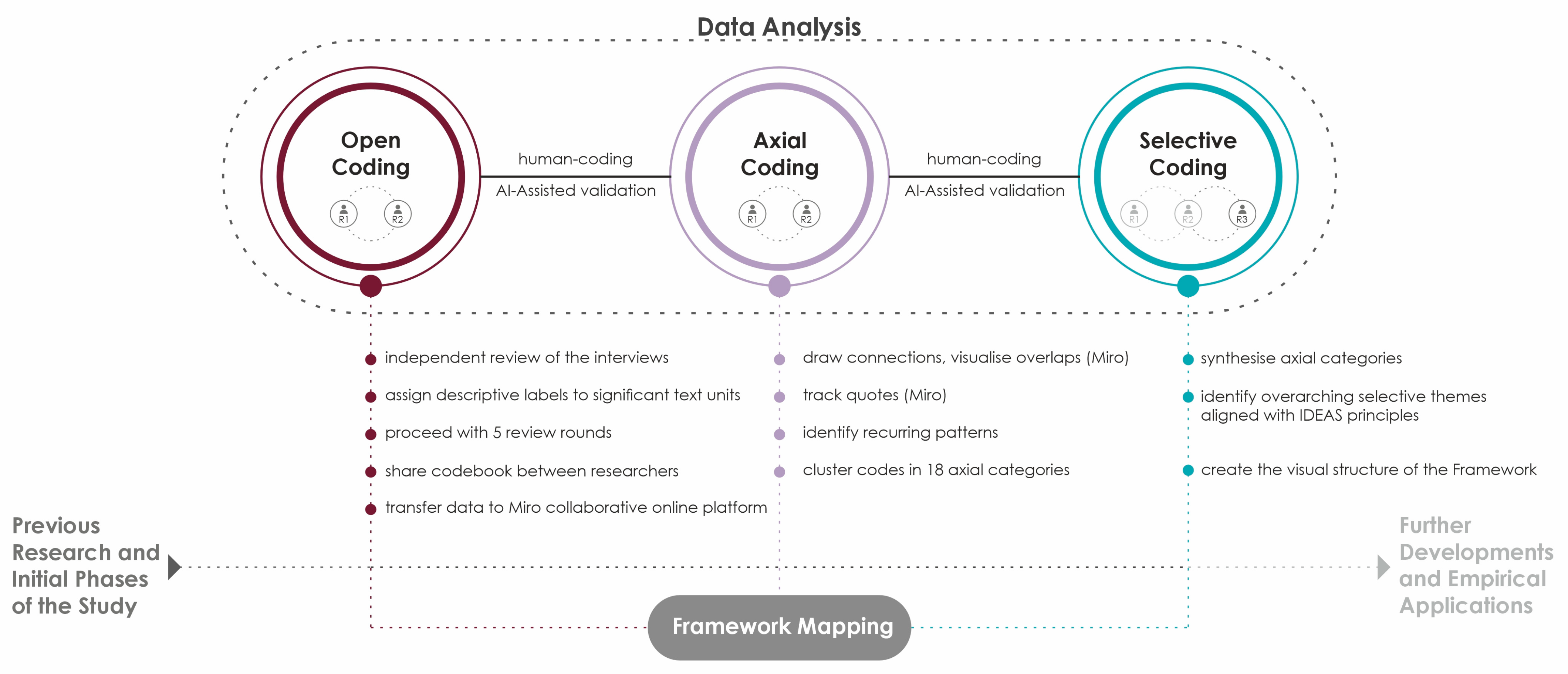

2.3. Data Analysis

All interview transcripts were anonymised and imported into Miro (version 5.4) to support a collaborative, audit-ready workflow. The analysis followed a systematic, iterative approach grounded in qualitative research methodology.

A three-stage thematic analysis was conducted.

Open Coding: Two researchers independently reviewed each transcript line by line, assigning descriptive codes to relevant text segments. After every three transcripts, they met to compare code lists, refine definitions, and assess inter-rater reliability.

Axial Coding: Using Miro’s clustering features, the researchers grouped related codes into higher-order categories. This process revealed key patterns across the five IDEAS dimensions and yielded 18 preliminary themes.

Selective Coding: A third reviewer synthesised these axial categories into core themes that form the foundation of the IDEAS framework. The links between emergent themes and framework elements were independently reviewed and validated.
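The text does not state which statistic was used to assess inter-rater reliability during open coding. As one common option for two coders, the sketch below computes Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. It assumes that both coders assign exactly one code to each of the same text segments; the code labels are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Cohen's kappa for two coders labelling the same segments, one code each."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same, non-empty set of segments")
    n = len(coder_a)
    # Observed agreement: share of segments given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        # Degenerate case: both coders used one identical code for every segment.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned to four segments by two independent coders.
coder_1 = ["privacy", "privacy", "literacy", "safety"]
coder_2 = ["privacy", "literacy", "literacy", "safety"]
print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.636
```

Here the coders agree on three of four segments (0.75), but chance agreement is 5/16, so kappa is 7/11, lower than raw agreement. When coders may apply several codes per segment, a per-code kappa or a different agreement measure would be needed.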

AI tools (ChatGPT-3.5/4.0) were employed solely for triangulation. No AI-generated code was used unless both human coders independently validated its thematic relevance. This ‘human-in-the-loop’ methodology maintained analytic rigour and minimised epistemic drift. AI suggestions and coder decisions were documented in the audit log for full transparency, recognising that the current literature cautions against over-reliance on AI in qualitative research [48].
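In this passage, “code” means a thematic code, not software. The acceptance rule stated above, under which an AI-suggested code is kept only when both human coders independently validate it and every decision is logged, can be sketched as a small data structure. This is a hypothetical illustration: the study kept its audit trail in Miro, and the class names, fields, and example segment IDs below are assumptions rather than part of that workflow.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AuditEntry:
    """One AI-suggested thematic code and the two coders' independent verdicts."""
    segment_id: str
    suggested_code: str
    coder_a_accepts: bool
    coder_b_accepts: bool

    @property
    def accepted(self) -> bool:
        # A suggestion is kept only if BOTH human coders validate it.
        return self.coder_a_accepts and self.coder_b_accepts


@dataclass
class AuditLog:
    """Append-only record of every AI suggestion, accepted or rejected."""
    entries: List[AuditEntry] = field(default_factory=list)

    def record(self, segment_id: str, suggested_code: str,
               coder_a_accepts: bool, coder_b_accepts: bool) -> bool:
        entry = AuditEntry(segment_id, suggested_code, coder_a_accepts, coder_b_accepts)
        self.entries.append(entry)  # rejected suggestions are logged too
        return entry.accepted

    def accepted_codes(self) -> List[str]:
        return [e.suggested_code for e in self.entries if e.accepted]


# Hypothetical usage with invented segment IDs and codes.
log = AuditLog()
log.record("P04-s12", "regulatory lag", True, True)    # both coders agree: kept
log.record("P04-s13", "tech optimism", True, False)    # one coder rejects: dropped
print(log.accepted_codes())  # ['regulatory lag']
```

Logging rejected suggestions alongside accepted ones is what makes the trail auditable: a reviewer can see not only which AI-proposed codes entered the analysis but also which were filtered out.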

All coding decisions, memos, and codebook iterations were documented in Miro to ensure full transparency and reproducibility.
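One way to keep the open, axial, and selective coding stages traceable is to store each stage as an explicit mapping and roll coded segments up to core themes, recording which transcripts support each theme. The sketch below assumes that structure; the code labels, categories, themes, and participant IDs are hypothetical and do not reproduce the study’s 18 preliminary themes.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, List, Mapping, Set, Tuple


def roll_up(
    open_codes: Iterable[Tuple[str, str, str]],  # stage 1: (transcript_id, segment, code)
    axial: Mapping[str, str],                    # stage 2: code -> category
    selective: Mapping[str, str],                # stage 3: category -> core theme
) -> Dict[str, List[str]]:
    """Map each core theme to the sorted transcript IDs that support it.

    Raises KeyError if a code or category has not been assigned to the next
    stage, which flags a gap in the codebook.
    """
    themes: DefaultDict[str, Set[str]] = defaultdict(set)
    for transcript_id, _segment, code in open_codes:
        theme = selective[axial[code]]
        themes[theme].add(transcript_id)
    return {theme: sorted(ids) for theme, ids in themes.items()}


# Hypothetical three-stage data.
open_codes = [
    ("P01", "...media shows robots...", "media misconceptions"),
    ("P02", "...option is hidden...", "data transparency"),
    ("P03", "...dystopian futures...", "media misconceptions"),
    ("P02", "...restrict by age...", "age restrictions"),
]
axial = {
    "media misconceptions": "AI literacy gaps",
    "data transparency": "user control over data",
    "age restrictions": "built-in safeguards",
}
selective = {
    "AI literacy gaps": "Awareness",
    "user control over data": "Safety",
    "built-in safeguards": "Safety",
}
print(roll_up(open_codes, axial, selective))
```

Keeping each stage as a separate mapping means a revision to the codebook (for example, moving one code to a different category) can be re-run and compared against the previous version.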

Thematic synthesis revealed a consistent narrative among participants: AI is perceived as an unstoppable transformative force with the potential to profoundly reshape society. Based on the analysis, three overarching thematic clusters were identified (challenges, opportunities, and recommendations), which structure Section 4 of the paper.

3. Results

The results present participants’ responses, organised into the five topics addressed throughout the interviews, as outlined in Section 2. They highlight the key issues raised by participants and recurrent themes across topics, along with representative quotes for the main areas addressed.

Table 1 shows a summary of the themes with representative quotes from participants.

These themes inform the framework of key challenges, opportunities, recommendations, and AI futures examined in Section 4.

3.1. Awareness and Literacy

The first part of the interviews focused on “Awareness and Literacy” around AI technology through two main questions: “What should be done to promote AI Literacy and Awareness for people of all ages and abilities?” and “How can we give people more choice by encouraging safe competition and maintaining a thriving digital economy within the context of AI?”.

The interviewees’ responses show a consensus that building awareness of AI is essential across various fields, such as education, governance, regulation, digital literacy, and industry. The most frequently discussed topic (raised by 50% of respondents) was dispelling negative misconceptions about AI, how AI works, and its impact on society across different sectors. One interviewee with experience in building large language models (LLMs) states that

“[…] many people have misconceptions about AI, [which are] often influenced by media portrayals of robots and dystopian futures.”

It is, however, important to highlight that if the data used to generate specific outcomes is flawed, or if the provided prompts lack sufficient detail, these models can produce errors, fabricate information, or generate incorrect references due to underlying data inconsistencies or missing information. One interviewee expresses this point very well:

“AI is extremely powerful- but not at all autonomous. Everything AI does, has to be revised by a human or person. AI does not have critical thinking. That’s the main problem. […] AI systems […] are much closer to how we, as people, learn things- […] AI is similar to us in the way that it can make up things- and even get things wrong. The notion that the machine is always right needs to die when looking at this type of technology.”

The second most commonly discussed issue (42% of interviewees) concerned the need to educate the public on the real-world benefits of AI and on how to use these tools. The same percentage of interviewees also emphasised the importance of promoting the accessibility of this technology. A particular focus was the intergenerational digital divide, underlining the need to close the gap between older and younger populations.

“The digital literacy and understanding gap will happen at an earlier age [due to tech advancing so quickly]. Think 40–50-year-olds who already feel disconnected from most tech.”

The same interviewee claims that, in fact,

“[…] Education is key- starting from the bottom up, by having children learn about it through play could be one solution. It is very important that we teach the youth but also make sure not to leave people who are closer to retirement out of it and teach them the basics, how to recognise AI generated content that might have an agenda and seek trusted media sources [in its stead].”

One of the experts consulted claims that AI literacy can be promoted through accessible formats such as social media platforms (e.g., Instagram and TikTok) or by relying on comedians as intermediaries to moderate discussions. This approach lowers the entry barrier by simplifying complex AI concepts and making them relatable to a broader audience.

In total, 33% of the respondents also suggested that governments and schools should take action to educate the public about this emerging technology.

An interviewee advocated that the most effective way to increase awareness is by demonstrating how AI-based tools can impact daily tasks and lives. They mentioned that people might not understand how certain technologies work, but when provided with hands-on experience, they can see the differences these tools bring.

Among the less frequently discussed topics, 16% of participants believed it was important to help people distinguish unreliable AI content, or “noise”, and to encourage them to seek trusted media sources to avoid validating their own biases. One of the twelve respondents emphasised the need to raise awareness of how AI can be prone to error, aiming to dispel overly positive misconceptions. Another interviewee raised the issue of how quickly this technology is evolving, noting that people are not yet ready to understand it.

These results show that most interviewees focused on dispelling misconceptions about AI or communicating its benefits through awareness (75% of those interviewed), while many (67%) emphasised the need to increase the accessibility of this technology or wanted governments and schools to educate the public about it. This highlights a strong focus on improving access to information and on inclusion for a wide range of users, in line with the IDEAS principles.

3.2. Privacy, Integrity, and Ethics

While focusing on the second topic of “Privacy, Integrity and Ethics” in relation to AI models, participants were asked the following: “To help personalise content and provide a safe experience, what should we do to guarantee the safe use of AI for everyone and give people transparency and control over their data?”.

There was greater variability in the interviewees’ opinions on this prompt. Among the more common stances, 42% of the respondents agreed that transparency about user data and how it is handled is a priority for privacy. To address the challenges associated with ethical AI use and responsible AI design, almost half of the interviewees emphasised the need to implement robust AI moderation mechanisms, age restrictions, and comprehensive safety features, and stressed the importance of addressing these issues through regulation.

“There is a dilemma. The technology needs data to grow, but people generally do not want to share their data. The option of what data to share should already be part of the AI being used, but oftentimes the option is hidden so that people don’t click on it. Categorising and anonymising data are key points in making sure the LLMs only use the data points they need and nothing more.”

Another 33% of the participants urged governments and regulators to enforce laws around AI models. An expert from a consultancy firm noted that effective AI regulation requires well-defined standards: a set of rules to ensure that associated risks are both knowable and measurable. In this context, the EU AI Act and the NIST AI Risk Management Framework are currently at the forefront of regulatory efforts. Similarly, a lawyer with experience in providing legal counsel to AI-focused companies stressed the critical role of government oversight in holding AI organisations accountable. According to this expert, it is essential to strike a balance that fosters technological advancement while ensuring robust safety mechanisms to prevent malicious applications of AI. Implementing ‘light-touch’ regulations as an interim measure, along with establishing a dedicated commission or agency for reporting AI-related concerns, was suggested as a pragmatic approach until more comprehensive legislation is codified.

The same percentage of respondents prioritised seeking informed consent from users and clarifying their privacy options. Securing user data, for example through encryption, was also considered important by 16% of the participants, which connects to the safety principle of IDEAS. Another 16% discussed how user data should be categorised and anonymised, while the same number of participants encouraged communication with users and public feedback to refine models and align them with values. A few respondents held views that diverged from the general consensus, stating, for example, that regulation alone is not enough and that transparency is key. Another issue raised was that transparency should be case-specific and, in some instances, limited to prevent manipulation by users. Other opinions concerned default privacy settings, teaching people what personalisation means (as suggested by one interviewee), and establishing procedures and workflows for the future.

One interviewee provided a pertinent example related to privacy and data integrity. They noted that discussions around privacy and integrity are ongoing, pointing out that several organisations have refrained from adopting Microsoft’s Copilot due to concerns over data collection practices. Specifically, there are apprehensions regarding the extent of information that Microsoft may have gathered in the background about their clients and employees. Considering these concerns, it is not uncommon for companies to develop proprietary, in-house AI solutions.

The responses to this question show that views on privacy and ethics varied more among experts than those on the previous topic. The most common suggestions (75% of all participants made one or more of these points) were increasing transparency and giving users more options regarding their personal data, and regulating AI models through legislation.
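Because the percentages in this section are reported over a sample of twelve experts, each one corresponds to a whole number of participants. The short Python sketch below converts reported percentages back into participant counts and exact shares. It assumes that every percentage uses the full sample of twelve as its denominator and is rounded to a whole percentage; the helper names are illustrative and are not part of the study's analysis.

```python
# Sketch: relate reported percentages to participant counts, ASSUMING every
# percentage is computed over the full sample of twelve interviewees.
SAMPLE_SIZE = 12


def participants_from_percentage(percentage: float, n: int = SAMPLE_SIZE) -> int:
    """Return the whole number of participants closest to a reported percentage."""
    return round(percentage * n / 100)


def exact_share(count: int, n: int = SAMPLE_SIZE) -> float:
    """Return the exact share of the sample, in percent, to one decimal place."""
    return round(100 * count / n, 1)


if __name__ == "__main__":
    # Percentages that appear in the interview results.
    for reported in (75, 50, 42, 33, 25, 16):
        count = participants_from_percentage(reported)
        print(f"{reported}% -> {count} of {SAMPLE_SIZE} ({exact_share(count)}%)")
```

Under this assumption, the 75% above corresponds to nine of the twelve experts, and each group reported as 16% corresponds to two experts, or 16.7% of the sample.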

3.3. Safety and Well-Being

The third topic of the interviews focused on how AI models relate to users’ “Safety and Well-being”, asking participants “How can we keep people safe and give them tools to protect themselves from harms, harassment and other risks related to AI use?” and “How can people navigate AI systems that represent freedom of expression and simultaneously guarantee respect for people’s diversity, without causing harm to others?”.

The experts interviewed provided a considerably wider range of responses than on the previous two topics, with only a few shared views. Forty-two percent of the interviewees suggested that governments should introduce regulations and enforce ethical standards for AI models, while 33% encouraged building safety measures into the models themselves, restricting them and aligning them with public values, which could also help increase confidence in the technology. An interviewee specialising in Computer Science stated:

“Some people do not want to use AI, [they are] concerned about their data being used to later replace them.”

In total, 25% of the respondents called for reporting systems to be in place for AI models or the organisations behind them, while another 25% believed there is a need to help people adapt to the changes AI is bringing. Safety is, in fact, a challenge for both small and large companies, as noted in one interview:

“Some of the biggest tech companies are trying their best and failing to make systems safe. It’s still a work in progress. See Google’s Gemini and how they had to restrict it to reflect the training team’s views.”

The remaining shared opinions were each raised by two of the twelve experts. They discussed how diversity, both in the teams designing AI and in the data itself, can help counter the models' potential biases. To this end, early risk management and assessment are needed to prevent future harms. Watermarking AI-generated content was also proposed as a way to help counter misinformation. As the founder of an AI startup pointed out:

“Misinformation is going to be the biggest downside of AI […] It’s no longer just someone’s tweet that’s fake from a bot. It’s actually someone putting out something that’s very compromising and different. And even if we understand that, even if we second guess all this is probably fake, what is that doing to us? If we see it over and over and over again… we don’t have those answers yet.”

Customising the models’ behaviour to the individual user is considered an effective approach to preventing harm and ensuring respect, as addressed by two of the respondents.

One interviewee proposed a hierarchical framework for safety that addresses potential issues at the individual, community and societal levels, inspired by the work of James Landay, Professor of Computer Science at Stanford University. Landay advocates designing AI systems with metrics that measure safety and impact at each of these three levels, ensuring alignment with societal values and sensitivity to cultural differences. For example, a wearable device such as a smart watch could be evaluated for individual comfort, community interaction and broader societal impacts such as health and well-being. This approach aims to ensure that AI not only protects individuals but also benefits communities and society at large.

Several other suggestions were each raised by only one of the twelve experts, including the need to use AI to combat AI and to increase transparency and accountability. An expert from an AI consultancy firm discussed this point in depth:

“[…] it doesn’t matter what the technology is. That’s not where the problem is coming from. The problem is psychological. It is human […] bias is inevitable. It happens. So, it’s not about designing a model that isn’t biassed. You can succeed at that at one point in time, but within two or three iterations, bias will always appear. So rather than saying ‘How do I make sure my model isn’t biassed?’, you say ‘you have to ask two questions: how to identify bias when it picks up- and what do I do about it when it picks up?’”

The focus on education was also reinforced in this topic, with suggestions that schools should ban certain models and make them inaccessible to minors. However, such restrictions must be balanced with user consent, and data must be vetted for accuracy. It was also noted that the effectiveness of regulations depends on the specific use case of the AI model.

These responses highlight the diversity of suggestions regarding safety and well-being, drawing attention to the many fields in which artificial intelligence might have an impact. Regulations and built-in safety measures were the only actions suggested by a substantial share of participants, indicating that experts see them as priorities to implement promptly across all sectors.

3.4. Inclusion, Diversity, Equity, and Accessibility of AI Technology

The “Inclusion, Diversity, Equity and Accessibility of AI technology” topic focused specifically on the IDEA principles and included two main questions: “How can we ensure AI and its correlated technologies are designed inclusively and in a way that is accessible to everyone, considering users from different ages, cultures, abilities, genders, languages and religions?” and “How can we inspire equity and diversity in AI and better represent under-represented groups and minorities?”.

The most common suggestion (33%) was to involve minorities and under-represented groups to improve the way they are represented. A well-known problem in this area concerns the limitations of certain generative models, which often struggle to produce diverse representations when generating personas for specific jobs or positions.

“If you say: ‘create the image of […] [a] CEO’, even when you say, ‘Hey, no, I want a female CEO’ and you specify that… [the] female CEO is going to have the same attributes: [she is] going to be skinny. [Even] the cheeks, the lips- It’s pretty much how Western adults prefer women. [AI] looks for this type of woman in magazines. […] So, even if you show a woman- even [if] you show diversity, you’re not fully showing diversity.”

Four suggestions were each shared by 25% of the interviewees: helping people access this emerging technology economically through funding, internships, and other means; training models on balanced datasets or applying certain restrictions; developing frameworks, guidelines, and regulations to enforce these values; and explicitly prioritising inclusion and diversity in AI models.

Sixteen percent of participants stressed the importance of providing education to minorities to improve access to AI-related jobs. One interviewee suggested that AI models could accommodate people with disabilities or those struggling with daily tasks. Other recommendations included using separate AI models to regulate target AI models and fostering competition by encouraging multiple, competing AI solutions.

3.5. AI Futures

The last part of the interviews focused on “AI Futures” and included a speculative question: “Where do you see Artificial Intelligence going in the next ten to fifteen years?”. This allowed interviewees to discuss a wide range of topics, raising awareness of the various opportunities and challenges for the future development of AI.

This section categorises participants’ responses according to the type of future they envisioned or discussed. In total, 33% of participants took a cautious view of the future, discussing how AI might affect creators, jobs, and information. A further 25% focused on the positive advances AI technology could bring, such as improvements in education, hardware and daily tasks. Another 25% speculated on significant societal changes, including the need to redesign the economy and the possibility of granting rights to AGI (Artificial General Intelligence) androids.

In total, 25% of the interviewees highlighted the importance of regulation and ethics in shaping AI’s future, particularly with the advent of AGI. They suggested that AGI would likely serve as the catalyst for integrating AI into robotics, as one of the interviewees explained:

“Considering all the big companies that are working on it, like NVIDIA and OpenAI- if there won’t be anything stopping the rate of progress AI is currently growing at, there is a very good chance we may end up with AGI sooner, rather than later. Things are evolving at an unprecedented, very fast rate. Including robotics. It’s not absurd to imagine that in the span of a decade we will have humanoid androids, who spot AGI in their systems- and it’s not an ethical problem to ridicule or ignore!”

The interview data reveal considerable variation in opinions among AI technology experts across the five topics investigated. Among these topics, “Awareness and Literacy” showed the least variation, with the largest group (50%) expressing similar ideas. In contrast, the “Safety and Well-Being” topic (Section 3.3) generated the widest range of responses, with thirteen distinct suggestions.

4. Discussion

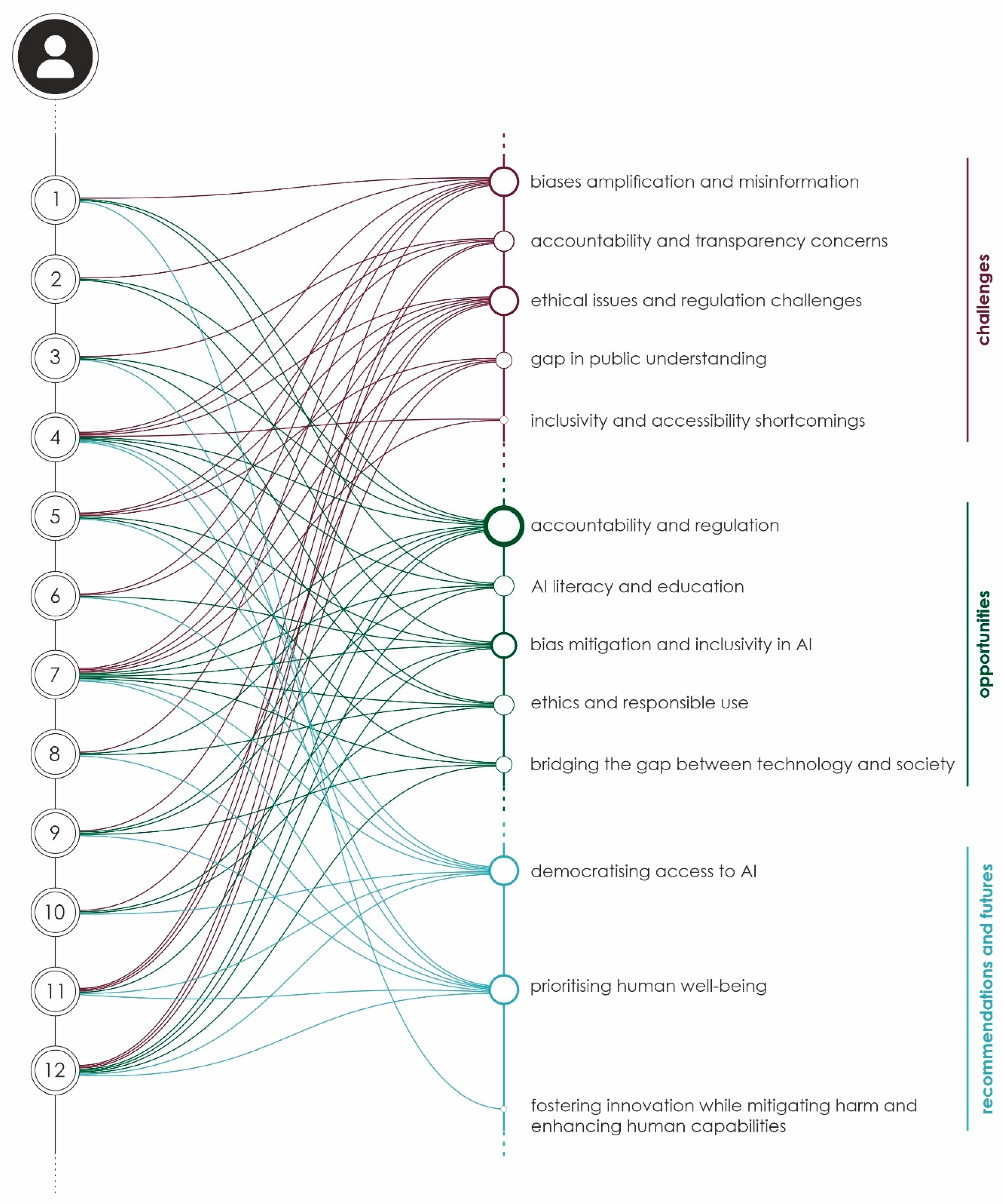

Following the analysis of the reported results, this pilot study allowed us to identify a framework of challenges, opportunities, recommendations, and futures for the use of artificial intelligence (Figure 2), which may serve as an initial step towards guiding the design of AI systems that incorporate the IDEAS principles. The framework aims to stimulate further investigation and to explore the opportunities offered by AI systems from the perspectives of experts around the world, without underestimating the associated risks.

4.1. Challenges

As uncovered by the interviews, a significant concern is AI’s potential to amplify biases present in society. Many participants stressed the importance of preventing AI from perpetuating misinformation or manipulating people based on pre-existing societal biases. There is also an ongoing discussion about the need for diverse datasets and inclusive AI systems to avoid reinforcing biases and discrimination.

One notable risk identified is the deliberate embedding of AI-driven bias through manipulation, often referred to as “suggestion”. For instance, AI-generated content, such as images or videos, can be shown repeatedly to users on social media platforms during an electoral campaign. Prolonged exposure to such content may subtly influence users, leading them to internalise misinformation or develop biases against opposing political candidates. A real-world precedent is the ‘Facebook and Cambridge Analytica’ scandal linked to the 2016 US elections. In this case, personal data harvested from Facebook users through a third-party app, ostensibly for “academic purposes”, was later passed to the now-defunct political consultancy firm and used for political targeting, undermining the integrity of a democratic electoral process [49]. In today’s media landscape, such risks have intensified, as individuals are increasingly susceptible to covert influences that may sway voting behaviour. The potential challenges, including threats to democratic integrity, are significant and demand serious attention.

While it may be tempting to attribute blame solely to AI technologies, a critical question arises: can AI itself be held accountable, or does responsibility lie with the companies, developers, stakeholders, and decision-makers tasked with ensuring that AI systems are not deployed exploitatively or trained on inappropriate datasets? Addressing bias and ensuring the safe deployment of AI remain among the most pressing challenges to realising the technology’s full potential in line with the values of the IDEAS framework. This issue is not confined to small, emerging startups; it is a pervasive concern affecting even the largest tech corporations.

Beyond misinformation, accountability and transparency in AI development also emerged as key themes among interviewees. Several participants voiced doubts about the trustworthiness of the individuals and corporations developing AI technologies, underscoring the urgent need for regulatory frameworks and ethical reviews to ensure the responsible use of AI.

Examining ethical issues through the lens of the IDEAS framework reveals the pervasive challenges surrounding AI. Seven of the twelve interviewees reported concerns such as privacy risks, the absence of reporting mechanisms for unethical AI applications, and the inherent difficulty of regulating rapidly evolving technologies. Particular attention was given to the exploitation of AI in industries such as gaming and social media, where it can be deployed to manipulate users or increase dependency.

Moreover, users show a marked tendency to rely on AI to improve workflow efficiency, often assuming that it is infallible. However, current Large Language Models (LLMs) and generative AI (GAI) systems are prone to inaccuracies and fabrications, because they generate outputs by predicting likely continuations from patterns learned from large datasets rather than by verifying facts.

The gap in public understanding of AI also emerged as a prominent issue, particularly among older generations and non-technical users. Media portrayals significantly influence public perception, often amplifying negative views that make it challenging for users to accurately assess AI’s potential benefits and risks. This lack of understanding may exacerbate fears of exploitation and harm. It is not uncommon to observe individuals, both on social media and in everyday contexts, expressing strong opposition to AI, largely driven by fears of job displacement and threats to job security across various industries.

Revisiting the IDEAS framework, interviewees also highlighted the shortcomings of current AI models in inclusion and accessibility: these models often have inherent gaps that exclude certain age groups or demographics from accessing their full benefits.

It is important to note that the IDEAS framework is empirically grounded in emergent patterns derived from expert interviews, rather than deductively imposed categories.

4.2. Opportunities

Beyond the numerous challenges discussed, this pilot study highlights a variety of solutions proposed by the participants to address both current and emerging dilemmas associated with AI models and systems. Even though this is a first pilot study, the questions raised were numerous and show the importance of further investigations on this topic. A prevailing recommendation, emphasised across multiple responses, is the need for AI companies to be held accountable for their actions, whether through government regulation, societal pressure, or internal policy reforms. Recurring concerns surrounding data privacy and the balance between innovation and ethical standards were expressed in three interviews, underscoring the importance of safeguarding individuals’ privacy rights while allowing AI technologies to evolve responsibly.

Recent advances in high-stakes environments, such as a GAN- and encoder-based soft-voting ensemble for tax fraud detection, illustrate how AI can enhance safety through robust decision-fusion mechanisms [50].
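Soft voting, the decision-fusion strategy named above, combines an ensemble by averaging the class probabilities predicted by each member and selecting the class with the highest average, rather than counting each member’s single vote. The following Python sketch is a generic illustration of that idea only: it does not reproduce the GAN- and encoder-based models of [50], the function names are hypothetical, and the probability values in the example are invented for illustration.

```python
from typing import List, Optional, Sequence, Tuple


def argmax(values: Sequence[float]) -> int:
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def hard_vote(member_probs: Sequence[Sequence[float]]) -> int:
    """Majority vote over each member's most likely class (ties go to the lowest index)."""
    votes = [argmax(probs) for probs in member_probs]
    n_classes = len(member_probs[0])
    counts = [votes.count(c) for c in range(n_classes)]
    return argmax(counts)


def soft_vote(member_probs: Sequence[Sequence[float]],
              weights: Optional[Sequence[float]] = None) -> Tuple[int, List[float]]:
    """Weighted average of per-class probabilities, then pick the most probable class."""
    if weights is None:
        weights = [1.0] * len(member_probs)  # equal weight for every member
    total = sum(weights)
    n_classes = len(member_probs[0])
    fused = [
        sum(w * probs[c] for w, probs in zip(weights, member_probs)) / total
        for c in range(n_classes)
    ]
    return argmax(fused), fused


if __name__ == "__main__":
    # Hypothetical outputs of three members for the classes (legitimate, fraudulent).
    members = [[0.40, 0.60], [0.70, 0.30], [0.45, 0.55]]
    print("hard vote:", hard_vote(members))
    label, fused = soft_vote(members)
    print("soft vote:", label, [round(p, 3) for p in fused])
```

With these hypothetical probabilities, a hard majority vote returns class 1 because two of the three members lean towards it, whereas soft voting returns class 0 (averages of roughly 0.517 versus 0.483) because the second member is considerably more confident. This sensitivity to confidence is what distinguishes soft voting from simple vote counting.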

As briefly mentioned in the previous section, AI education for both younger generations and non-technical users remains an unresolved issue that will need to be addressed. Participants called for integrating AI into school curricula, raising public awareness, and simplifying complex AI concepts to promote AI literacy. Education is seen as a key component in mitigating AI risks and supporting responsible engagement with technology.

To bridge the gap between technology and society, making AI accessible to the public is fundamental, so that people from all backgrounds can understand and engage with the technology. Closing the perception gap around AI, whether through simplified explanations or tailored communication, is crucial to fostering a more AI-literate society. Safety frameworks and the integration of AI into familiar technologies are other viable pathways towards this goal.

While discussing bias mitigation and inclusion in AI, five interviewees expressed their concerns regarding biases in AI models and stressed the critical importance of developing systems that are both inclusive and equitable. Central to this discussion is the need to use diverse and representative training data to minimise biases, alongside ensuring that AI systems are designed to augment human capabilities rather than displace jobs. A recurring theme is the imperative to incorporate inclusion into AI design processes from the earliest stages of development, as well as fostering collaboration with community stakeholders to ensure equitable outcomes. Additionally, the promotion of equal access to AI technologies remains a pivotal concern.

One AI research scientist highlighted that traditional datasets often perpetuate existing biases, reinforcing the need for diverse and representative data to improve the fairness and accuracy of AI models. A proposed approach to this issue is the use of Retrieval-Augmented Generation (RAG) frameworks grounded in standardised and rigorously vetted sources. By drawing on such sources when generating answers, this method can help ensure that information concerning under-represented communities is accurate and comprehensive, thereby reducing the risk of perpetuating inaccuracies or exclusion in AI-generated outputs.
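The idea of restricting retrieval to vetted sources can be sketched as follows. This is a minimal illustration under stated assumptions, not the approach used by the interviewee: the `Passage` record, its `vetted` flag and the source names are hypothetical, simple word-overlap scoring stands in for the embedding-based retrieval that RAG systems typically use, and the call to a language model is omitted, so only the grounded prompt is built.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Passage:
    source: str   # hypothetical identifier of where the text comes from
    text: str
    vetted: bool  # hypothetical flag: True if the source passed editorial or community review


def tokenize(text: str) -> Set[str]:
    """Lower-cased words with surrounding punctuation removed."""
    words = (w.strip(".,;:!?()\"'").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, corpus: List[Passage], k: int = 2) -> List[Passage]:
    """Return up to k vetted passages ranked by word overlap with the query.

    Word overlap is a stand-in for the embedding similarity most RAG systems use.
    """
    query_terms = tokenize(query)
    scored = [
        (len(query_terms & tokenize(p.text)), p)
        for p in corpus
        if p.vetted  # unvetted sources are never eligible for retrieval
    ]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query: str, passages: List[Passage]) -> str:
    """Assemble a grounded prompt; the language-model call itself is omitted."""
    context = "\n".join(f"[{p.source}] {p.text}" for p in passages)
    return (
        "Answer using only the sources below, and say so if they are insufficient.\n"
        f"{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    corpus = [
        Passage("community-archive", "Sign language varies by country and region.", True),
        Passage("forum-post", "Sign language is universal across all countries.", False),
        Passage("style-guide", "Use the terms the community itself prefers.", True),
    ]
    question = "Is sign language the same in every country?"
    print(build_prompt(question, retrieve(question, corpus)))
```

Filtering on the `vetted` flag before ranking means an inaccurate but lexically similar passage, such as the hypothetical forum post above, can never reach the prompt, which is the property the interviewee's proposal relies on.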

Ethical frameworks must prioritise minimising harm, curbing the spread of misinformation and safeguarding personal data against misuse. In this context, simplifying the process of obtaining informed consent and educating users about the implications of data sharing are critical to establishing trust and accountability in AI systems.

Individuals often compromise their privacy when sharing data with companies and organisations. This raises a significant ethical concern regarding the fine line between leveraging data responsibly to provide personalised value and encroaching on users’ privacy.

A pressing question arises: how can content be ethically personalised, respecting users’ privacy while using their data? AI systems could play a pivotal role in addressing this challenge by enabling users to customise their experiences and better understand the implications of data sharing. Educating users about the functionality and impact of AI on their data privacy is crucial, as many remain unaware of the full extent of their consent when agreeing to share personal information.

Emerging work explores enhancing virtual and augmented reality with artificial intelligence and IoT applications in fields such as media and entertainment or industry [51]. Interactive avatars acting as virtual assistants that support users through training are among the most widely used applications. They help enhance interaction and user experience and, in some cases, provide the opportunity to simulate multisensory experiences [52,53]. The term “Intelligent Reality” is gaining relevance as it integrates various available technologies with AI-accelerated workflows [53]. However, challenges such as bias arise in this context as well [52]. Furthermore, as explored in “Young Skeptics: Exploring the Perceptions of Virtual Worlds and the Metaverse in Generations Y and Z” [54], younger users, particularly those from Generations Y and Z, express scepticism towards emerging technologies such as Virtual Reality and Augmented Reality. Their perceptions often reflect concerns about the technology’s potential risks and its implications for personal privacy and security. A future study could further investigate how these generations perceive AI in relation to their own experiences and digital habits.

4.3. Recommendations

Many interviewees anticipate that AI will permeate nearly every aspect of life, transforming industries, daily routines, and global societies in ways reminiscent of earlier transformative technologies. AI has the potential to drive advances in sectors such as education and healthcare and to enhance accessibility, pointing towards a more equitable future. Much of AI’s impact, however, is expected to occur invisibly, as it integrates seamlessly into everyday spaces and services.

The societal shifts brought about by AI emphasise the importance of addressing disparities. Strategies are needed to support those at risk of being left behind by rapid technological development. Discussions about AI-enhanced services and fostering an AI-literate society suggest a future featuring more inclusive, engaged communities with improved access to AI-related education and safety. This vision includes recommendations for democratising access to AI to ensure its benefits reach everyone, particularly in areas like education, healthcare, and accessibility. Advocating for policies that promote equitable access to AI technologies and learning resources is a critical step in this direction.

Implementers must navigate tensions between these principles. For example, boosting accessibility through open interfaces can introduce safety vulnerabilities, while strict safety controls may impede usability for neurodivergent users. Iterative stakeholder workshops were recommended to negotiate these trade-offs in context.

Another key theme discussed with interviewees is the interplay between AI and robotics. Ethical challenges are expected to emerge as human and machine roles become increasingly blurred, with many jobs replaced by AI or reduced to human supervision. An expert in the tech field predicted that AI could surpass traditional devices like smartphones and personal computers, leading to a fundamental shift in how users interact with technology.

There is considerable pressure to establish international cooperation and enforce stringent ethical standards in AI development and deployment. From the interviews, safety concerns at individual, community, and societal levels emerged, accentuating the importance of public feedback, comprehensive safety frameworks, and inclusion in AI implementation. Generative AI is seen as a catalyst for entrepreneurship and content creation, particularly among younger generations like Generation Alpha. This perspective frames AI as a tool for supporting innovation and enhancing human capabilities. The resulting recommendations advocate for strategies that balance encouraging innovation with mitigating potential risks, supporting the development of clear, ethically grounded guidelines for AI applications with significant societal impact.

Prominent concerns were also raised about AI’s potential to disrupt industries, particularly with regard to copyright infringement and the creative sector. This perspective reflects a more cautious view, outlining the risks of unsupervised AI development. Enforcing copyright may become increasingly difficult as individuals use AI for unprecedented creative freedom, potentially leading to a highly personalised world. Such developments could make it harder for artists and creatives to distinguish themselves, especially as AI-generated content, such as music or media tailored to individual preferences, encroaches on and displaces creative domains. This scenario raises critical concerns about ownership, user privacy, and the erosion of human creativity, hinting at a dystopian future that devalues human contributions.

To address these challenges, it is recommended that AI development prioritise human well-being by aligning with human values and ethics while avoiding exploitative practices. AI should be leveraged as a tool to augment human capabilities, enhancing productivity, creativity, and decision-making, rather than as a replacement for human labour. In addition, adopting the W3C Web Content Accessibility Guidelines (WCAG 2.2) for AI interfaces, conducting algorithmic impact assessments in line with the EU AI Act, and running participatory design sprints with under-represented user groups are key instruments for ensuring more inclusive, ethical, and accessible development of AI tools for the community.

Figure 2 illustrates a framework of the challenges, opportunities, recommendations, and futures that emerged from this study. The framework is grounded in inclusive design and participatory design theory [55], reflecting both empirical themes and established design principles [56].

Figure 2. A framework of the challenges, opportunities, recommendations, and futures that emerged from this study.

4.4. Limitations of This Study and Planned Empirical Applications

This study’s pilot nature and small sample of twelve AI experts, recruited via snowball sampling, introduce risks of selection bias and limit generalisability. Although participants spanned academia, industry, and several geographical regions, under-represented regions (e.g., Africa, Latin America) and stakeholder groups (e.g., non-technical community advocates, end users) were not fully captured. The predominance of male and industry participants may have skewed perspectives on inclusion, diversity, equity, accessibility, and safety. Future research should employ stratified sampling and increase the sample size to validate and extend these findings.

While this study focused on conceptualising the IDEAS framework through expert interviews and thematic analysis, we recognise the importance of empirical validation. In forthcoming research, we plan to operationalise and apply the framework across multiple real-world design and technology contexts to assess its practical utility and scalability.

Specifically, we are preparing a follow-up study that integrates the IDEAS framework into three distinct application domains:

Inclusive AI Product Development Workshops: The IDEAS framework will be embedded into co-design workshops with AI development teams at a leading European technology company. The framework will be used to guide the early phases of ideation and feature planning for a natural language interface aimed at neurodiverse users. The researchers will evaluate how the framework influences decision-making, priority setting, and perceived user empathy among designers and engineers.

Accessibility Audits in Higher Education Environments: In collaboration with a university accessibility office, the IDEAS framework will be applied to assess and redesign campus digital services, such as student portals and course platforms. The framework will be used both as an evaluation rubric and as a participatory planning tool. Accessibility scores and student satisfaction measures before and after the intervention will be compared.

AI Governance Policy Review: In partnership with a public sector innovation lab, the IDEAS framework will inform an audit of existing AI governance guidelines for automated welfare screening systems. A retrospective policy analysis will examine whether current practices align with the IDEAS dimensions, and stakeholder interviews will assess how the framework facilitates inclusive decision-making.

Across all three cases, mixed-methods evaluations will be used, including pre/post surveys, behavioural observations, and expert validation, to assess how well the framework translates theoretical principles into practical design choices. These studies are designed to move the IDEAS framework from conceptual grounding to validated application and to iterate its structure based on empirical outcomes.

In addition, to clarify the distinct contributions of the IDEAS framework, a structured comparative analysis against several established ethical AI frameworks was conducted. These include the following:

IEEE Ethically Aligned Design (EAD) [57];

AI4People’s Ethical framework [58];

The European Commission’s Ethics Guidelines for Trustworthy AI [59];

The Inclusive Design Toolkit (Cambridge) [60].

The comparison was guided by four dimensions:

(1) The breadth of inclusion across demographic and experiential factors;

(2) Operationalisability in design practice;

(3) The integration of safety and accessibility as core elements;

(4) Support for participatory co-design or stakeholder feedback mechanisms.

The IDEAS framework differs in the following key ways:

It explicitly integrates accessibility and safety as first-class design principles, whereas most frameworks treat these as secondary or implicit.

Unlike policy-centric frameworks such as AI4People [58], IDEAS is designed to be actionable within design workflows, providing scaffolding for ideation, evaluation, and iteration.

It uniquely merges human-centred design with ethical AI principles, making it relevant not only to developers and policymakers, but also to architects, interaction designers, and service developers.

Through its development via expert interviews and thematic analysis, it reflects bottom-up lived expertise and promotes epistemic diversity in design considerations.

While EAD [57] and the EU guidelines [59] focus heavily on governance, and the Inclusive Design Toolkit [60] emphasises user diversity, IDEAS contributes through a holistic synthesis that bridges technical, humanistic, and ethical concerns. Its strength lies in translating normative principles into practical heuristics that can be embedded across AI design lifecycles.

5. Conclusions

This study explored the opportunities, challenges, and potential recommendations regarding the use of AI, based on qualitative research conducted with experts from both industry and academia. It addresses several topics to investigate how AI connects to the IDEAS principles (inclusion, diversity, equity, accessibility, and safety) and emphasises the need to prioritise these principles when designing AI. Artificial intelligence holds the potential to facilitate access to information and promote inclusion, equity, and diversity, but it must also account for safety concerns to ensure its responsible use.

The initial research questions posed by this study were addressed through the experts’ opinions, as follows:

- How can AI technologies be designed to foster inclusion and equity rather than perpetuate existing inequalities?

- What risks exist in AI use, particularly for marginalised communities, and how can these risks be mitigated?

There is a need for initiatives, legislation, and industry standards that address AI bias and discrimination, as well as for diverse development teams and reliance on diverse datasets. Ethical committees and regulators also play a crucial role, as they need to engage actively with the businesses behind AI solutions during the early stages of development to ensure the safety of AI models.

- How can we ensure that AI systems are accessible to all, enabling meaningful participation from diverse groups?

To promote accessibility, more school and government programmes need to engage the general public, both young and old, in AI literacy to narrow the understanding gap.

Although this pilot study addressed its core questions, the topic of AI development in relation to the IDEAS principles needs to be explored further. Rather than providing definitive solutions to every issue encountered, this study aims to raise awareness and foster open discussions among experts. By directing greater attention to these topics, this study encourages stakeholders to adopt AI with heightened awareness of the IDEAS principles, supporting the development of AI for a broader and more diverse user base. While new regulations, such as the EU AI Act, are being introduced, industry experts, researchers, and end users are positioned to shift perspectives and embrace AI responsibly.

In terms of future work, the research group intends to validate the IDEAS framework in a live AI development setting, partnering with design teams to assess usability, ethical alignment, and stakeholder satisfaction.