A Hybrid and Modular Integration Concept for Anomaly Detection in Industrial Control Systems

Abstract

1. Introduction

- We provide a detailed specification of the real-world challenges and resulting requirements that arise from integrating hybrid anomaly detection (AD) into an industrial control system (ICS).

- We introduce a novel hybrid and modular integration concept for AD in ICSs and outline the individual modules, their functionalities, and the integration process.

- We demonstrate the effectiveness of our approach through a comprehensive evaluation of a proof-of-concept implementation against the predefined requirements.

- We show that achieving high-performing, secure, and efficient anomaly detection is feasible even for resource-constrained legacy ICSs while maximizing the utilization of all available resources.

2. Related Work

- In comparison to other work, we consider in detail a whole range of real-world challenges that arise from a hybrid and modular integration of anomaly detection in an ICS. These include handling highly dynamic systems, limited resource availability, the complexity of the underlying process, data security and knowledge protection, the increasing volume of data, risk management, and effective anomaly detection. After analyzing these challenges, we define the respective requirements and address all of them within the developed concept.

- We present a hybrid and modular integration concept that, unlike other concepts, supports both fully decentralized and centralized integration. Within the concept, both approaches can be flexibly and efficiently combined to optimize resource utilization. The fully modular design facilitates easy expansion, enabling the system to adapt to changes, modifications, and diverse hardware within the ICS.

- In contrast to other work, we implement the described concept prototypically within a real ICS and utilize existing devices to maximize decentralization and resource efficiency. We demonstrate the effectiveness of the approach through a comprehensive evaluation against the previously defined requirements and present a detailed analysis of the required memory and CPU power, the energy consumption, and the achieved latency.

- We demonstrate that the presented integration enables effective, secure, and efficient anomaly detection in resource-limited ICSs without relying on a central high-performance unit, which many other approaches require. Additionally, the introduced concept can be easily expanded and adapted, allowing implementation in existing and legacy systems with minimal effort.

3. Challenges

- Highly dynamic systems: ICSs are highly dynamic, with varying system structures and hardware. Devices from different manufacturers are connected via various communication protocols to realize an underlying physical process [37]. During the lifespan of a system, processes can change, and hardware components may be added, removed, or replaced. This presents a significant challenge, as the continuous and repeated development of one AD for the entire system is associated with time, effort, and increasing costs [38]. Consequently, the concept should enable the integration of a wide variety of hardware while remaining flexible and adapting to system changes.

- Limited available resources: Because the hardware used in such systems varies, the resources available for a possible integration also vary [39]. This presents an important challenge, especially in decentralized integration, as not all devices can host additional functionality directly. Furthermore, adding hardware is associated with costs and labor [40]. Therefore, a practical and effective integration must adapt to the existing system, address limited capacities, optimize available resource usage, and allow flexible expansion through additional software and hardware when necessary.

- The complexity of the underlying process: ICSs realize complex industrial processes that often focus on productivity and speed to achieve maximum efficiency. These physical processes naturally exhibit noisy behaviour, which affects the recorded data [41]. This poses a specific challenge in identifying anomalies, particularly for the AD models, which can be influenced by poor data quality and varying recording rates [42]. Thus, any possible integration must handle various data types and qualities as well as differing sampling rates while also considering the natural behaviour of industrial data.

- Data security and knowledge protection: Another essential criterion is data security. Knowledge about the system and the realized process can be found in the sampled data from the ICS [43,44]. This is a crucial factor, especially for small- and medium-sized companies, as knowledge and expertise are key factors for competing against larger competitors. Consequently, companies often do not want to record, store, or transport their data outside their system to mitigate such risks. This can be challenging, as large amounts of data and increased computing performance are often required, especially when creating and training AD models [45]. Therefore, the resulting integration should process all data internally while simultaneously utilizing the system’s resources to realize all functionalities.

- Volume of data: The overall volume of data produced in industry is increasing, driven by modern complex processes and new industrial hardware and software [46]. For instance, some field devices already achieve sample rates in the microsecond range. By utilizing these rapidly recorded data for anomaly detection, it is possible to better identify specific anomalies, such as short collisions, while simultaneously improving reaction times [47,48]. However, this poses a distinct challenge for integration, as it must handle large volumes of data and high sample rates within limited available resources [49]. Consequently, integration needs to be both efficient and flexible to effectively process the resulting data stream, even under resource constraints, thereby enabling rapid reaction times.

- Risk management: In an ICS, the correct and safe execution of the physical process must always be ensured. This is especially challenging in decentralized integration, where different devices, such as control units, can be actively influenced by the integration of additional software [50,51]. Such integration can lead to an overload or breakdown of the device, which may impact production or, in the worst-case scenario, endanger workers [52]. Therefore, when integrating AD into a system or device, it is crucial to prevent any negative effects on the safety or functionality of the system.

- Effective anomaly detection: Prompt detection, quick access to results, and reasonable reactions are essential for effective and reliable anomaly detection [53,54]. This is especially critical in cases like collisions or in rapid processes. Immediate detection enables a quick response, which can prevent faults from occurring or propagating through the entire production line [55]. The system should efficiently identify anomalies, provide real-time information, assist users in finding solutions, and respond appropriately when necessary.

4. Hybrid and Modular Integration Concept

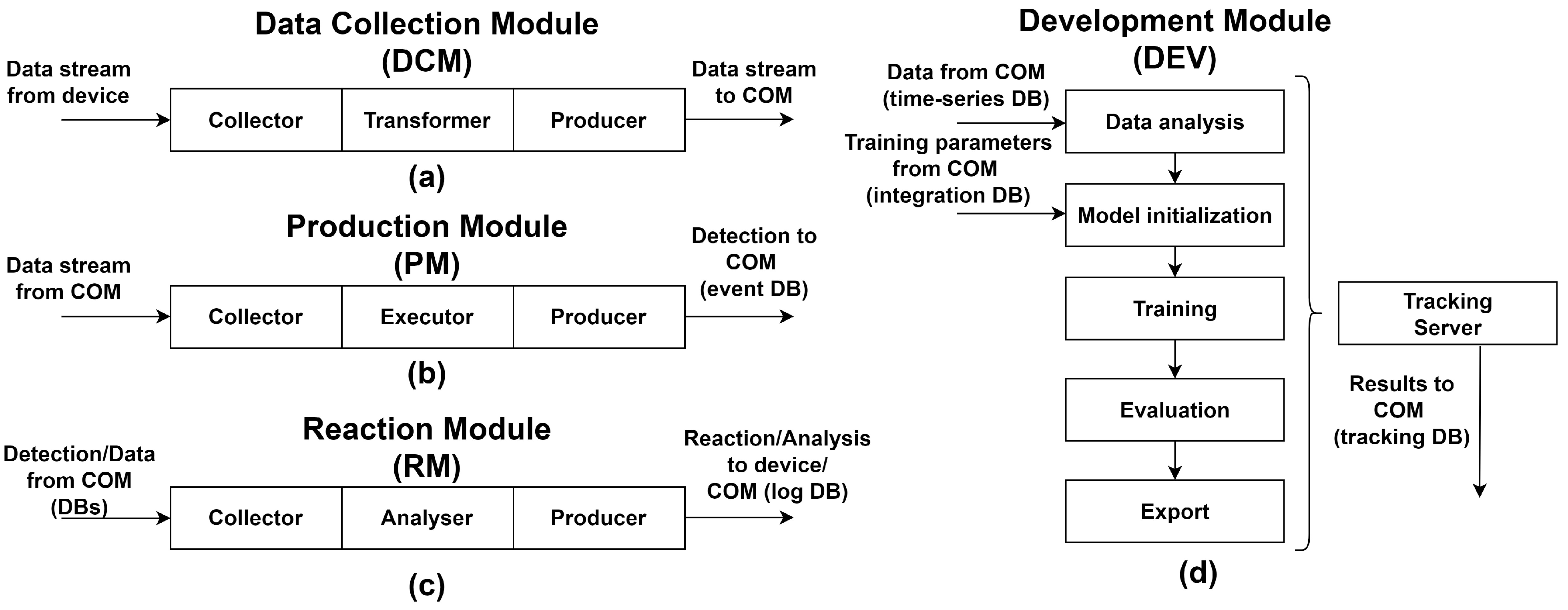

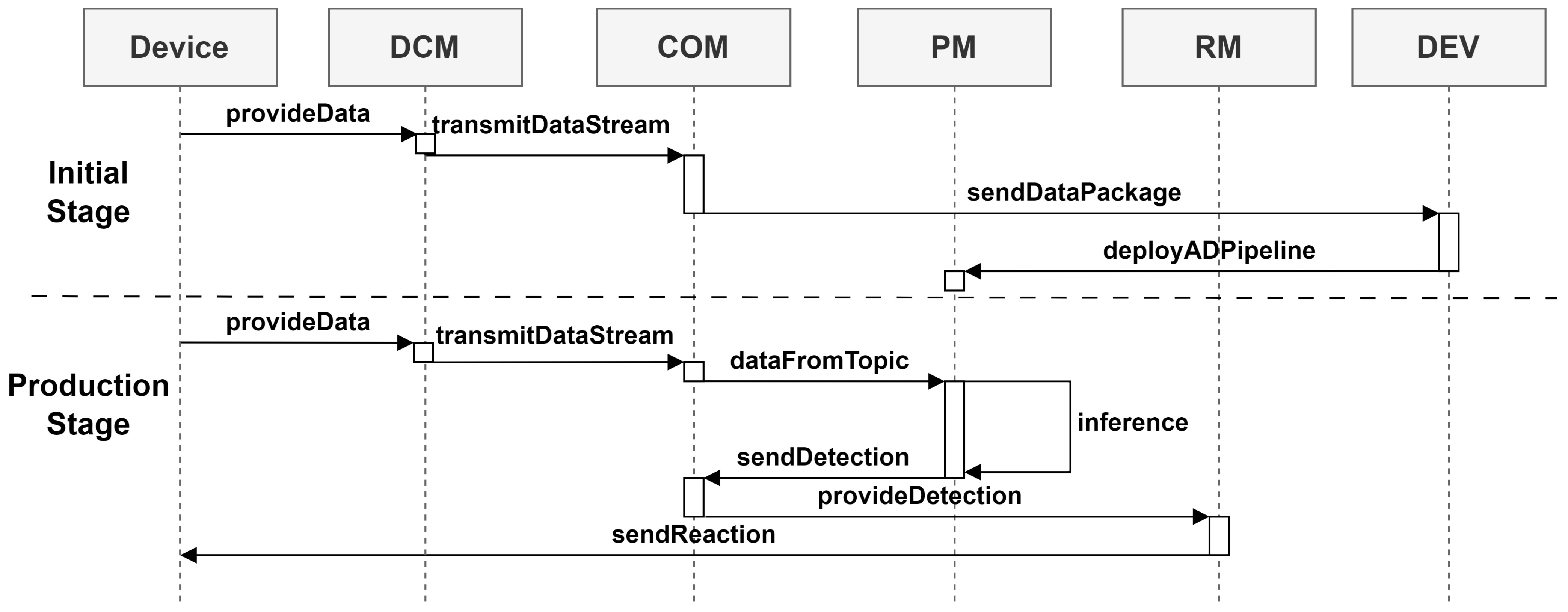

4.1. Data Collection Module

4.2. Production Module

4.3. Development Module

4.4. Reaction Module

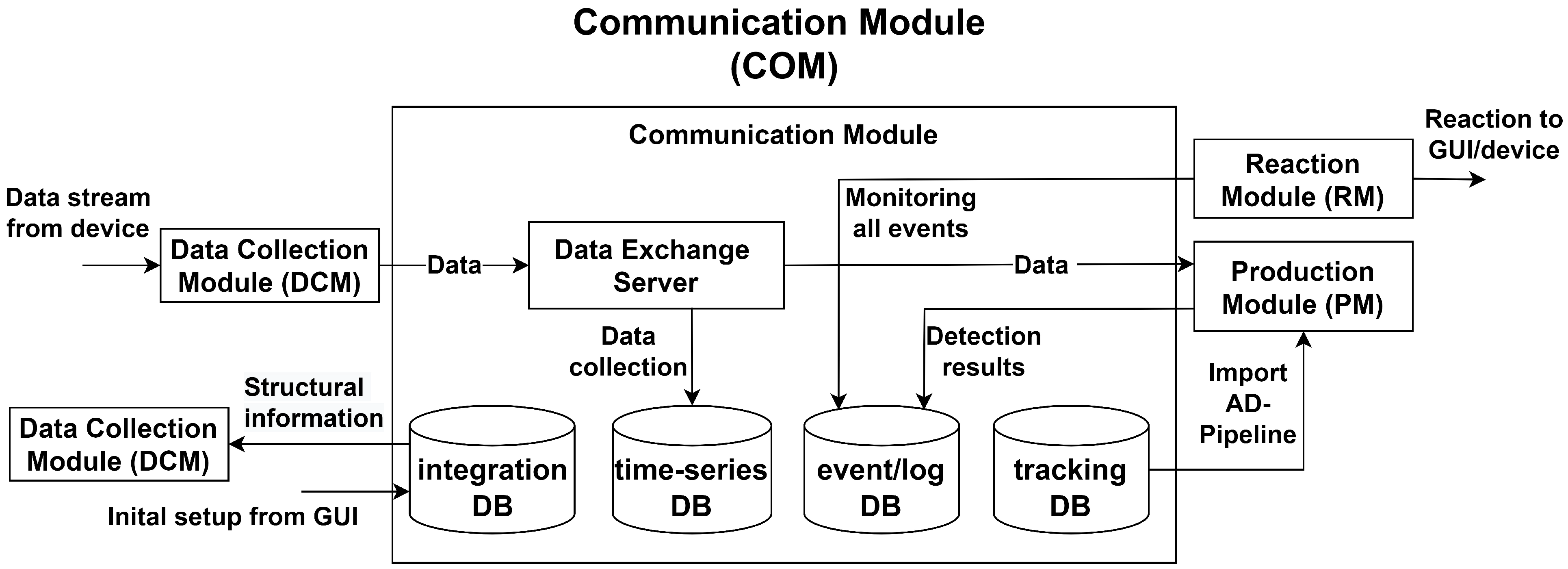

4.5. Communication Module

- A time-series DB that facilitates the storage of short-term data from various devices, enabling the development of AD models.

- An integration DB for storing essential information and structural knowledge about the system, modules, and the development process.

- An event/log DB for collecting detected anomalies, which can be later retrieved for in-depth analysis by the RM.

- A tracking DB that records the development results and stores the generated AD model for later distribution to the PMs.
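The division of responsibilities between these four stores can be illustrated with a minimal sketch. It uses plain in-memory Python containers as stand-ins; the class name `CommunicationStores`, its field names, and the `max_samples` retention limit are hypothetical and are not taken from the prototype, which may use dedicated database systems instead.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class CommunicationStores:
    """Hypothetical in-memory stand-ins for the four databases listed above."""

    max_samples: int = 1000                           # assumed short-term retention limit
    time_series: dict = field(default_factory=dict)   # device -> recent (timestamp, value) pairs
    integration: dict = field(default_factory=dict)   # module -> structural information
    events: list = field(default_factory=list)        # detected anomalies
    tracking: dict = field(default_factory=dict)      # device -> development result / model record

    def add_sample(self, device, timestamp, value):
        # Short-term storage: only the newest max_samples entries per device are kept.
        buffer = self.time_series.setdefault(device, deque(maxlen=self.max_samples))
        buffer.append((timestamp, value))

    def log_anomaly(self, device, timestamp, score):
        # Event/log storage: anomalies are appended and can be retrieved later.
        self.events.append({"device": device, "timestamp": timestamp, "score": score})

    def anomalies_for(self, device):
        return [event for event in self.events if event["device"] == device]


stores = CommunicationStores(max_samples=3)
for t in range(5):
    stores.add_sample("SliderRobot", t, float(t))
stores.log_anomaly("SliderRobot", 4, 0.9)

print(list(stores.time_series["SliderRobot"]))
print(stores.anomalies_for("SliderRobot"))
```

The bounded buffer reflects the short-term character of the time-series data, while the event list grows so that detected anomalies remain available for later analysis.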

4.6. Process Cycle

5. Prototype Implementation

5.1. GUI

5.2. Anomaly Detection

5.3. Cybersecurity

6. Evaluation

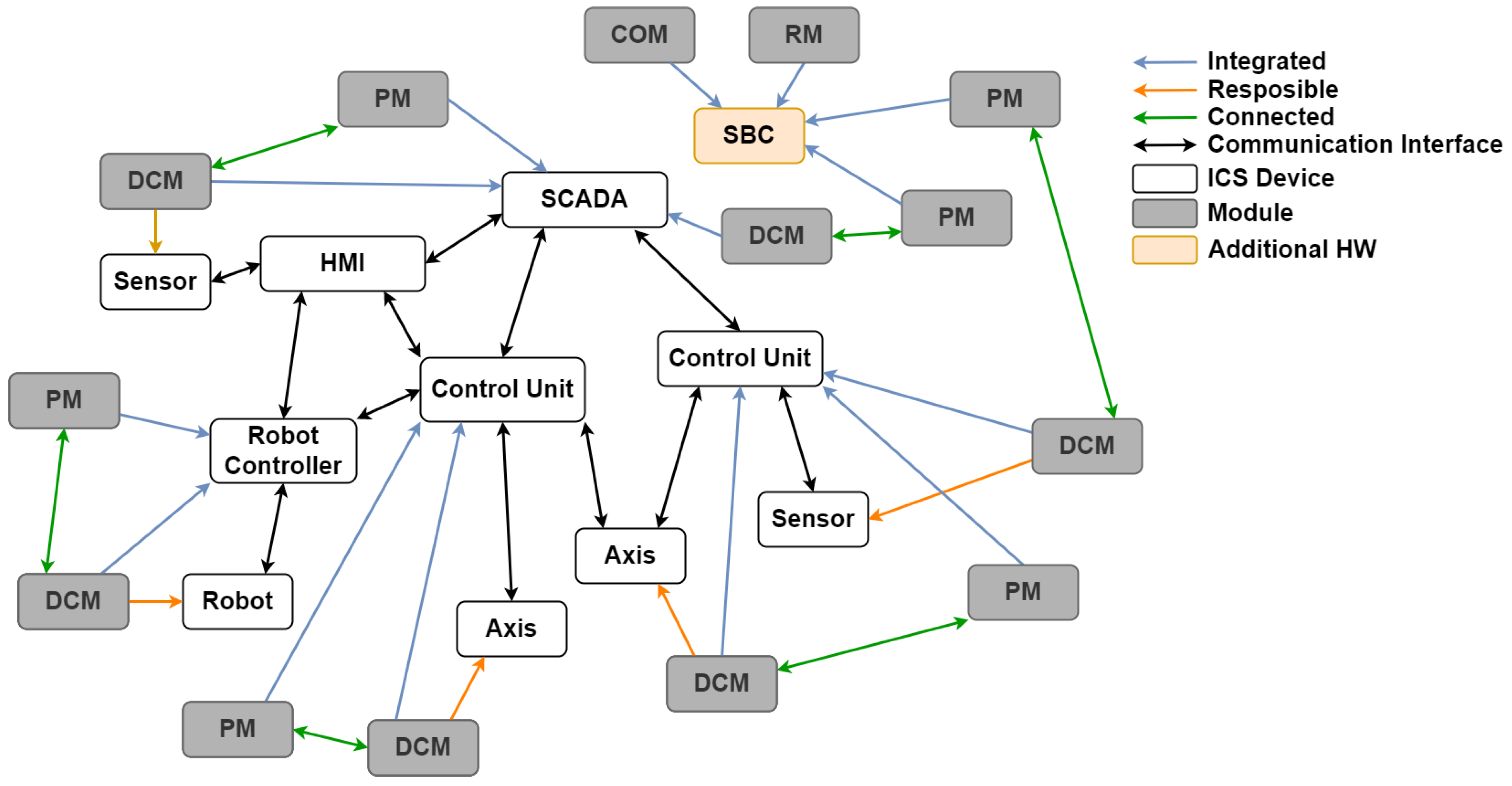

6.1. Experimental Setup

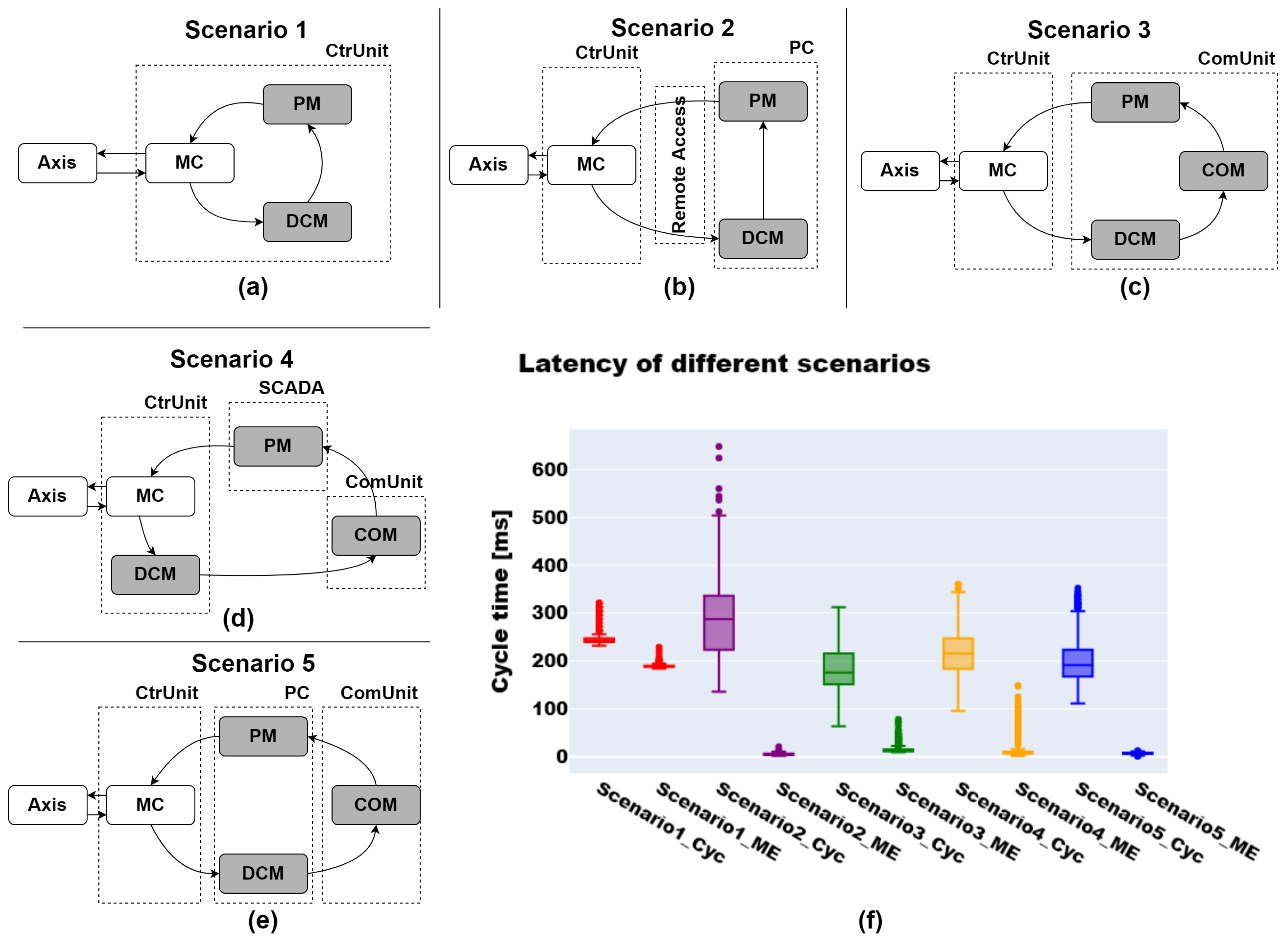

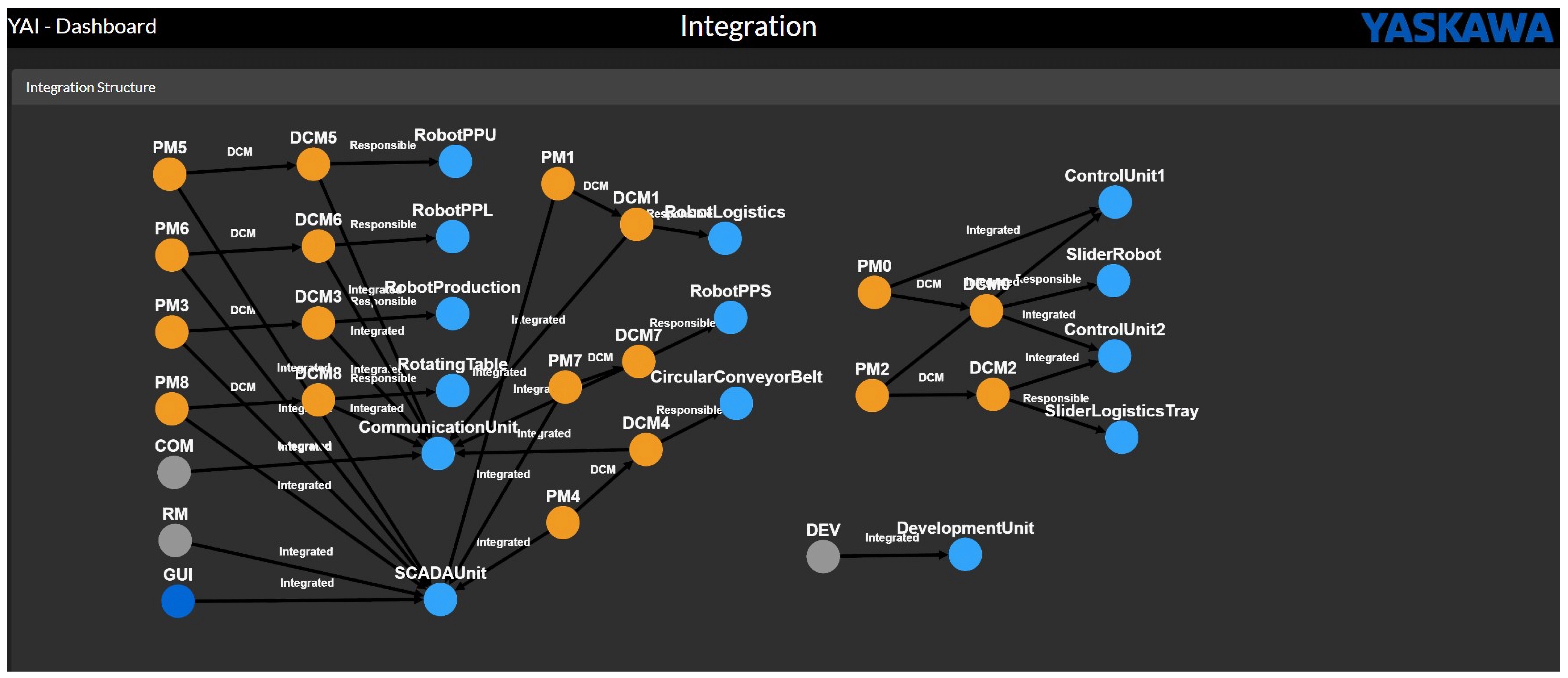

6.2. Experimental Integration

6.3. Experimental Results

- Highly dynamic systems: Owing to the flexible concept and the customization of the DCMs, multiple devices from different manufacturers could be integrated via various communication protocols (e.g., OPC DA, OPC UA, Modbus, and RSC). Designing the modules as adaptable microservices allowed seamless integration into the existing devices of the experimental ICS. In addition to the devices used in the experimental setup, modules have already been integrated into controllers from other manufacturers (e.g., PxC AXC F2152), other SBCs (e.g., Raspberry Pi 4, Nvidia Jetson TX2), and various edge PCs. To adapt to structural changes in the system, the concept supports the flexible addition, removal, or modification of modules. For example, existing AD pipelines can be exchanged during continuous production without affecting the ongoing monitoring of the remaining system. The concept therefore accounts for the highly dynamic nature of modern ICSs, enabling the integration of a wide variety of hardware while remaining flexible and adaptable to system changes.

- Limited available resources: Lightweight modules, the possibility of integration into a wide range of hardware, and the option to outsource functionalities to adjacent devices allow the existing system resources to be used as fully as possible. Various modules could be incorporated into existing devices within the experimental setup, such as directly into the control units or the SCADA system. Incorporating a low-cost SBC as an additional hardware component demonstrated the system’s expandability. Through the interconnection of multiple COM units, the concept can be further extended to monitor entire production lines. An extended analysis of used resources and energy consumption can be found in Appendix A and Appendix C. Thus, the concept enables practical and effective integration even in resource-constrained environments, optimizes available resource usage, and allows flexible expansion through additional hardware when necessary.

- Complexity of the underlying process: By individually adapting the models and AD pipelines, different types of devices and the unique physical characteristics of the industrial processes could be considered. For instance, it was possible to integrate various motion devices of the experimental unit (Section 5), including robots and linear and rotary axes, while addressing the noise and jitter of each device separately by customizing the transformers in the DCMs. Because each DCM and PM is connected individually, asynchronous and varying recording rates of the devices can be accommodated. Consequently, a broad range of devices and processes can be incorporated while taking into account their unique characteristics, data quality, sample rates, and the natural behavior of the industrial process.

- Data security and knowledge protection: The lightweight and hybrid integration of all necessary modules and functionalities directly within the system ensures that no data need to be recorded, stored, or transported to external locations. Depending on the required resources, additional devices (e.g., a laptop within the experimental setup) can be flexibly incorporated into the integration. Even during the resource-intensive training of the AD models, an external device can be temporarily added and subsequently removed. Consequently, no external connection to a data centre or cloud is necessary, resulting in enhanced data security and knowledge protection.

- Volume of data: With the flexible and hybrid integration of the modules, such as the direct integration within the control units or the possibility of outsourcing functionalities to adjacent devices, the large volume of data generated from high sampling rates can be processed effectively. At the same time, this reduces the cost and energy consumption related to transmitting data to an external central location while minimizing latency. In the experimental unit, the generated data could be processed directly on the respective device, which reduced the data stream and allowed high sampling rates (e.g., a sampling interval of 8 ms) even with limited computing resources.

- Risk management: Implementing the modules as microservices partially decouples their functionality from the device’s operating system, which mitigates the associated risk even when modules are integrated directly within the devices. Furthermore, the maximum CPU and memory consumption of individual services can be defined, which minimizes the effects on the device and the respective process, even in the event of an error within a module. Because the individual functions are separated, the rest of the system remains stable if one module fails, and all other devices continue to be monitored. The faulty module can then be reported, and once the fault is rectified, the service can be restarted so that the full functionality of the AD resumes. It is also possible to implement redundancy, as can be seen in Appendix A, whereby multiple modules monitor the same device. As a result, the concept reduces risks and minimizes interference with devices and processes within the ICS, even in direct integration.

- Effective anomaly detection: Fast response times can be achieved by integrating functionalities directly within adjacent devices and by individually adapting the DCMs and PMs. As a result, a latency of 300 ms was achieved with a model execution time of 200 ms in the experimental setup, even on resource-limited devices. A detailed analysis and discussion of the response times achieved with various integration concepts can be found in Appendix B. The short response times also facilitate the early detection of critical anomalies, allowing for rapid responses. In addition, monitoring each device separately allows anomalies to be localized immediately, which can subsequently assist the user in resolving them.
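The timing figures reported above can be put into relation with a short calculation. The sketch below uses only the three reported values (8 ms sampling interval, 300 ms latency, 200 ms model execution time). It assumes that the reported latency includes the model execution time, which the text does not state explicitly; the variable names are illustrative.

```python
SAMPLE_INTERVAL_MS = 8   # sampling interval reported for the experimental unit
LATENCY_MS = 300         # reported latency
MODEL_EXEC_MS = 200      # reported model execution time

# Number of samples a device produces per second at this interval.
samples_per_second = 1000 / SAMPLE_INTERVAL_MS

# Assumption: the latency includes the model execution time, so the remainder
# covers everything else (e.g., data collection and transport).
overhead_ms = LATENCY_MS - MODEL_EXEC_MS

# Number of new samples that arrive while one detection result is produced.
samples_during_latency = LATENCY_MS / SAMPLE_INTERVAL_MS

print(f"{samples_per_second:.0f} samples per second")
print(f"{overhead_ms} ms outside model execution")
print(f"{samples_during_latency:.1f} samples arrive within one latency period")
```

Under this assumption, model execution accounts for two thirds of the latency, so the model itself is the most promising place to look for further reductions in response time.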

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Resource Consumption

Appendix B. Latency

Appendix C. Energy Consumption

References

- Umer, M.A.; Junejo, K.N.; Jilani, M.T.; Mathur, A.P. Machine learning for intrusion detection in industrial control systems: Applications, challenges, and recommendations. Int. J. Crit. Infrastruct. Prot. 2022, 38, 100516.

- Bhamare, D.; Zolanvari, M.; Erbad, A.; Jain, R.; Khan, K.; Meskin, N. Cybersecurity for industrial control systems: A survey. Comput. Secur. 2020, 89, 101677.

- Conti, M.; Donadel, D.; Turrin, F. A Survey on Industrial Control System Testbeds and Datasets for Security Research. IEEE Commun. Surv. Tutor. 2021, 23, 2248–2294.

- Kabore, R.; Kouassi, A.; N’goran, R.; Asseu, O.; Kermarrec, Y.; Lenca, P. Review of anomaly detection systems in industrial control systems using deep feature learning approach. Engineering 2021, 13, 30–44.

- Kriaa, S.; Pietre-Cambacedes, L.; Bouissou, M.; Halgand, Y. A survey of approaches combining safety and security for industrial control systems. Reliab. Eng. Syst. Saf. 2015, 139, 156–178.

- Feng, C.; Li, T.; Chana, D. Multi-level Anomaly Detection in Industrial Control Systems via Package Signatures and LSTM Networks. In Proceedings of the 2017 47th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), Denver, CO, USA, 26–29 June 2017; pp. 261–272.

- Armellin, A.; Caviglia, R.; Gaggero, G.; Marchese, M. A Framework for the Deployment of Cybersecurity Monitoring Tools in the Industrial Environment. IT Prof. 2024, 26, 62–70.

- Goetz, C.; Humm, B. Decentralized Real-Time Anomaly Detection in Cyber-Physical Production Systems under Industry Constraints. Sensors 2023, 23, 4207.

- Koay, A.M.; Ko, R.K.L.; Hettema, H.; Radke, K. Machine learning in industrial control system (ICS) security: Current landscape, opportunities and challenges. J. Intell. Inf. Syst. 2023, 60, 377–405.

- Huč, A.; Šalej, J.; Trebar, M. Analysis of Machine Learning Algorithms for Anomaly Detection on Edge Devices. Sensors 2021, 21, 4946.

- Tsukada, M.; Kondo, M.; Matsutani, H. A Neural Network-Based On-Device Learning Anomaly Detector for Edge Devices. IEEE Trans. Comput. 2020, 69, 1027–1044.

- Huong, T.T.; Bac, T.P.; Ha, K.N.; Hoang, N.V.; Hoang, N.X.; Hung, N.T.; Tran, K.P. Federated Learning-Based Explainable Anomaly Detection for Industrial Control Systems. IEEE Access 2022, 10, 53854–53872.

- Brandalero, M.; Ali, M.; Le Jeune, L.; Hernandez, H.G.M.; Veleski, M.; da Silva, B.; Lemeire, J.; Van Beeck, K.; Touhafi, A.; Goedemé, T.; et al. AITIA: Embedded AI Techniques for Embedded Industrial Applications. In Proceedings of the 2020 International Conference on Omni-layer Intelligent Systems (COINS), Barcelona, Spain, 31 August–2 September 2020; pp. 1–7.

- Nedeljkovic, D.; Jakovljevic, Z. CNN based method for the development of cyber-attacks detection algorithms in industrial control systems. Comput. Secur. 2022, 114, 102585.

- Yu, X.; Yang, X.; Tan, Q.; Shan, C.; Lv, Z. An edge computing based anomaly detection method in IoT industrial sustainability. Appl. Soft Comput. 2022, 128, 109486.

- Lübben, C.; Pahl, M.O. Distributed Device-Specific Anomaly Detection using Deep Feed-Forward Neural Networks. In Proceedings of the NOMS 2023-2023 IEEE/IFIP Network Operations and Management Symposium, Miami, FL, USA, 8–12 May 2023; pp. 1–9.

- Hu, Y.; Yang, A.; Li, H.; Sun, Y.; Sun, L. A survey of intrusion detection on industrial control systems. Int. J. Distrib. Sens. Netw. 2018, 14, 1550147718794615.

- Kim, B.; Alawami, M.A.; Kim, E.; Oh, S.; Park, J.; Kim, H. A Comparative Study of Time Series Anomaly Detection Models for Industrial Control Systems. Sensors 2023, 23, 1310.

- Yan, P.; Abdulkadir, A.; Luley, P.P.; Rosenthal, M.; Schatte, G.A.; Grewe, B.F.; Stadelmann, T. A Comprehensive Survey of Deep Transfer Learning for Anomaly Detection in Industrial Time Series: Methods, Applications, and Directions. IEEE Access 2024, 12, 3768–3789.

- Nankya, M.; Chataut, R.; Akl, R. Securing Industrial Control Systems: Components, Cyber Threats, and Machine Learning-Driven Defense Strategies. Sensors 2023, 23, 8840.

- Zhao, X.; Zhang, L.; Cao, Y.; Jin, K.; Hou, Y. Anomaly Detection Approach in Industrial Control Systems Based on Measurement Data. Information 2022, 13, 450.

- Kravchik, M.; Shabtai, A. Detecting Cyber Attacks in Industrial Control Systems Using Convolutional Neural Networks. In Proceedings of the 2018 Workshop on Cyber-Physical Systems Security and PrivaCy, CPS-SPC’18, Toronto, ON, Canada, 15–19 October 2018; pp. 72–83.

- Wang, C.; Wang, B.; Liu, H.; Qu, H. Anomaly Detection for Industrial Control System Based on Autoencoder Neural Network. Wirel. Commun. Mob. Comput. 2020, 2020, 8897926.

- Xu, L.; Wang, B.; Zhao, D.; Wu, X. DAN: Neural network based on dual attention for anomaly detection in ICS. Expert Syst. Appl. 2025, 263, 125766.

- Sater, R.A.; Hamza, A.B. A federated learning approach to anomaly detection in smart buildings. ACM Trans. Internet Things 2021, 2, 1–23.

- Liu, C.; Su, X.; Li, C. Edge Computing for Data Anomaly Detection of Multi-Sensors in Underground Mining. Electronics 2021, 10, 302.

- Schneible, J.; Lu, A. Anomaly detection on the edge. In Proceedings of the MILCOM 2017 - 2017 IEEE Military Communications Conference (MILCOM), Baltimore, MD, USA, 23–25 October 2017; pp. 678–682.

- Liu, Y.; Garg, S.; Nie, J.; Zhang, Y.; Xiong, Z.; Kang, J.; Hossain, M.S. Deep Anomaly Detection for Time-Series Data in Industrial IoT: A Communication-Efficient On-Device Federated Learning Approach. IEEE Internet Things J. 2021, 8, 6348–6358.

- Lee, H.; Oh, J.; Kim, K.; Yeon, H. A data streaming performance evaluation using resource constrained edge device. In Proceedings of the 2017 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 18–20 October 2017; pp. 628–633.

- Raptis, T.P.; Cicconetti, C.; Passarella, A. Efficient topic partitioning of Apache Kafka for high-reliability real-time data streaming applications. Future Gener. Comput. Syst. 2024, 154, 173–188.

- Peddireddy, K. Streamlining Enterprise Data Processing, Reporting and Realtime Alerting using Apache Kafka. In Proceedings of the 2023 11th International Symposium on Digital Forensics and Security (ISDFS), Chattanooga, TN, USA, 11–12 May 2023; pp. 1–4.

- Raptis, T.P.; Passarella, A. A Survey on Networked Data Streaming with Apache Kafka. IEEE Access 2023, 11, 85333–85350.

- An, Y.; Yu, F.R.; Li, J.; Chen, J.; Leung, V.C.M. Edge Intelligence (EI)-Enabled HTTP Anomaly Detection Framework for the Internet of Things (IoT). IEEE Internet Things J. 2021, 8, 3554–3566.

- Queiroz, J.; Leitão, P.; Barbosa, J.; Oliveira, E. Agent-Based Approach for Decentralized Data Analysis in Industrial Cyber-Physical Systems. In Proceedings of the Industrial Applications of Holonic and Multi-Agent Systems, Linz, Austria, 26–29 August 2019; Mařík, V., Kadera, P., Rzevski, G., Zoitl, A., Anderst-Kotsis, G., Tjoa, A.M., Khalil, I., Eds.; Springer: Cham, Switzerland, 2019; pp. 130–144.

- Mocnej, J.; Pekar, A.; Seah, W.K.; Papcun, P.; Kajati, E.; Cupkova, D.; Koziorek, J.; Zolotova, I. Quality-enabled decentralized IoT architecture with efficient resources utilization. Robot. Comput.-Integr. Manuf. 2021, 67, 102001.

- Gerz, F.; Bastürk, T.R.; Kirchhoff, J.; Denker, J.; Al-Shrouf, L.; Jelali, M. A Comparative Study and a New Industrial Platform for Decentralized Anomaly Detection Using Machine Learning Algorithms. In Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022; pp. 1–8.

- Zamanzadeh Darban, Z.; Webb, G.I.; Pan, S.; Aggarwal, C.; Salehi, M. Deep Learning for Time Series Anomaly Detection: A Survey. ACM Comput. Surv. 2024, 57, 1–42.

- Pinto, R.; Gonçalves, G.; Delsing, J.; Tovar, E. Enabling data-driven anomaly detection by design in cyber-physical production systems. Cybersecurity 2022, 5, 9.

- Takyiwaa Acquaah, Y.; Kaushik, R. Normal-Only Anomaly Detection in Environmental Sensors in CPS: A Comprehensive Review. IEEE Access 2024, 12, 191086–191107.

- Nardi, M.; Valerio, L.; Passarella, A. Centralised vs decentralised anomaly detection: When local and imbalanced data are beneficial. In Proceedings of the Third International Workshop on Learning with Imbalanced Domains: Theory and Applications, Bilbao, Spain, 17 September 2021; Proceedings of Machine Learning Research. Moniz, N., Branco, P., Torgo, L., Japkowicz, N., Woźniak, M., Wang, S., Eds.; PMLR: Cambridge, MA, USA, 2021; Volume 154, pp. 7–20.

- Adhikari, D.; Jiang, W.; Zhan, J.; Rawat, D.B.; Bhattarai, A. Recent advances in anomaly detection in Internet of Things: Status, challenges, and perspectives. Comput. Sci. Rev. 2024, 54, 100665.

- Gunes, V.; Peter, S.; Givargis, T.; Vahid, F. A Survey on Concepts, Applications, and Challenges in Cyber-Physical Systems. KSII Trans. Internet Inf. Syst. 2014, 8, 4242–4268.

- Nasir, Z.U.I.; Iqbal, A.; Qureshi, H.K. Securing Cyber-Physical Systems: A Decentralized Framework for Collaborative Intrusion Detection with Privacy Preservation. IEEE Trans. Ind. Cyber-Phys. Syst. 2024, 2, 303–311.

- Salam, A.; Abrar, M.; Amin, F.; Ullah, F.; Khan, I.A.; Alkhamees, B.F.; AlSalman, H. Securing Smart Manufacturing by Integrating Anomaly Detection with Zero-Knowledge Proofs. IEEE Access 2024, 12, 36346–36360.

- Yang, M.; Zhang, J. Data anomaly detection in the internet of things: A review of current trends and research challenges. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 9.

- Luo, Y.; Xiao, Y.; Cheng, L.; Peng, G.; Yao, D.D. Deep Learning-based Anomaly Detection in Cyber-physical Systems: Progress and Opportunities. ACM Comput. Surv. 2021, 54, 1–36.

- Ahmed, C.M.; M R, G.R.; Mathur, A.P. Challenges in Machine Learning based approaches for Real-Time Anomaly Detection in Industrial Control Systems. In Proceedings of the 6th ACM on Cyber-Physical System Security Workshop, CPSS ’20, Taipei, Taiwan, 6 October 2020; pp. 23–29.

- Jankov, D.; Sikdar, S.; Mukherjee, R.; Teymourian, K.; Jermaine, C. Real-time High Performance Anomaly Detection over Data Streams: Grand Challenge. In Proceedings of the 11th ACM International Conference on Distributed and Event-Based Systems, DEBS ’17, Barcelona, Spain, 19–23 June 2017; pp. 292–297.

- Iglesias Vázquez, F.; Hartl, A.; Zseby, T.; Zimek, A. Anomaly detection in streaming data: A comparison and evaluation study. Expert Syst. Appl. 2023, 233, 120994.

- Zhou, X.; Peng, X.; Xie, T.; Sun, J.; Ji, C.; Li, W.; Ding, D. Fault Analysis and Debugging of Microservice Systems: Industrial Survey, Benchmark System, and Empirical Study. IEEE Trans. Softw. Eng. 2021, 47, 243–260.

- Laigner, R.; Zhou, Y.; Salles, M.A.V.; Liu, Y.; Kalinowski, M. Data management in microservices: State of the practice, challenges, and research directions. Proc. VLDB Endow. 2021, 14, 3348–3361.

- Zografopoulos, I.; Ospina, J.; Liu, X.; Konstantinou, C. Cyber-Physical Energy Systems Security: Threat Modeling, Risk Assessment, Resources, Metrics, and Case Studies. IEEE Access 2021, 9, 29775–29818.

- Feng, C.; Tian, P. Time Series Anomaly Detection for Cyber-physical Systems via Neural System Identification and Bayesian Filtering. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, KDD ’21, Virtual, 14–18 August 2021; pp. 2858–2867.

- Dehlaghi-Ghadim, A.; Moghadam, M.H.; Balador, A.; Hansson, H. Anomaly Detection Dataset for Industrial Control Systems. IEEE Access 2023, 11, 107982–107996.

- Gaddam, A.; Wilkin, T.; Angelova, M.; Gaddam, J. Detecting Sensor Faults, Anomalies and Outliers in the Internet of Things: A Survey on the Challenges and Solutions. Electronics 2020, 9, 511.

- Micskei, Z.; Waeselynck, H. The many meanings of UML 2 Sequence Diagrams: A survey. Softw. Syst. Model. 2011, 10, 489–514.

- Yoo, H.; Ahmed, I. Control Logic Injection Attacks on Industrial Control Systems. In Proceedings of the ICT Systems Security and Privacy Protection, Lisbon, Portugal, 25–27 June 2019; Dhillon, G., Karlsson, F., Hedström, K., Zúquete, A., Eds.; Springer: Cham, Switzerland, 2019; pp. 33–48.

- McLaughlin, S.; Konstantinou, C.; Wang, X.; Davi, L.; Sadeghi, A.R.; Maniatakos, M.; Karri, R. The Cybersecurity Landscape in Industrial Control Systems. Proc. IEEE 2016, 104, 1039–1057.

- Marulli, F.; Lancellotti, F.; Paganini, P.; Dondossola, G.; Terruggia, R. Towards a novel approach to enhance cyber security assessment of industrial energy control and distribution systems through generative adversarial networks. J. High Speed Netw. 2024.

| Motion Device | Modules | Hardware Identifier |
|---|---|---|
| SliderRobot | PM0 | Control Unit 1 |
| | DCM0 | Control Unit 2 |
| RobotLogistics | PM1 | SCADA Unit |
| | DCM1 | Communication Unit |
| SliderLogisticsTray | PM2 | Control Unit 1 |
| | DCM2 | Control Unit 2 |
| RobotProduction | PM3 | SCADA Unit |
| | DCM3 | Communication Unit |
| CircularConveyorBelt | PM4 | SCADA Unit |
| | DCM4 | Communication Unit |
| RobotPPU (U Axis of RobotPP) | PM5 | SCADA Unit |
| | DCM5 | Communication Unit |
| RobotPPL (L Axis of RobotPP) | PM6 | SCADA Unit |
| | DCM6 | Communication Unit |
| RobotPPS (S Axis of RobotPP) | PM7 | SCADA Unit |
| | DCM7 | Communication Unit |
| RotatingTable | PM8 | SCADA Unit |
| | DCM8 | Communication Unit |
| | COM | Communication Unit |
| | DEV | Development Unit |
| | RM | SCADA Unit |
| | GUI | SCADA Unit |
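The placement of modules on hardware shown in the table above can also be expressed as a simple mapping, which makes the load per hardware unit easy to inspect. The following sketch transcribes the table into a Python dictionary; the names `DEPLOYMENT` and `modules_by_unit` are illustrative and are not part of the prototype.

```python
from collections import defaultdict

# Module -> hardware identifier, transcribed from the table above.
DEPLOYMENT = {
    "PM0": "Control Unit 1", "DCM0": "Control Unit 2",
    "PM1": "SCADA Unit", "DCM1": "Communication Unit",
    "PM2": "Control Unit 1", "DCM2": "Control Unit 2",
    "PM3": "SCADA Unit", "DCM3": "Communication Unit",
    "PM4": "SCADA Unit", "DCM4": "Communication Unit",
    "PM5": "SCADA Unit", "DCM5": "Communication Unit",
    "PM6": "SCADA Unit", "DCM6": "Communication Unit",
    "PM7": "SCADA Unit", "DCM7": "Communication Unit",
    "PM8": "SCADA Unit", "DCM8": "Communication Unit",
    "COM": "Communication Unit",
    "DEV": "Development Unit",
    "RM": "SCADA Unit",
    "GUI": "SCADA Unit",
}


def modules_by_unit(deployment):
    """Group module names by the hardware unit that hosts them."""
    grouped = defaultdict(list)
    for module, unit in deployment.items():
        grouped[unit].append(module)
    return {unit: sorted(modules) for unit, modules in grouped.items()}


by_unit = modules_by_unit(DEPLOYMENT)
for unit, modules in sorted(by_unit.items()):
    print(f"{unit}: {len(modules)} modules ({', '.join(modules)})")
```

Grouping the table this way shows that the two control units each host only two modules, while most PMs run on the SCADA unit and most DCMs on the communication unit.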

| Hardware Identifier | Hardware | Specification |
|---|---|---|
| Development Unit | Dell Latitude 7490 | Intel i7-8650U 1.90 GHz/32 GB RAM |
| Communication Unit | Raspberry Pi 5 | ARM Cortex-A76/8 GB RAM |
| SCADA Unit | Intel NUC7i7DNB | Intel i7-8650U 1.90 GHz/16 GB RAM |
| Control Unit 1 | Yaskawa iC9212-EC | ARM Cortex-A17 1.26 GHz/2 GB RAM |
| Control Unit 2 | Yaskawa iC9226-EC | ARM Cortex-A17 1.26 GHz/2 GB RAM |

| Parameter Set | Set 1 | Set 2 |
|---|---|---|
| Model | 1D-CAE | MLP-AE |
| Window size | 64 | 64 |
| Step size | 1 | 1 |
| Number of layers | 5 | 5 |
| Layer dimensions | 64/32/16/32/64 (MaxPooling) | 64/32/16/32/64 |
| Loss function | MAE | MAE |
| Optimizer | Adam | Adam |
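The window size, step size, and loss function listed in the parameter sets above can be illustrated with a minimal sketch of sliding-window segmentation and a mean-absolute-error (MAE) score. The helper names are hypothetical, and the "reconstruction" used here is only a placeholder (the window mean); it stands in for the output of an autoencoder and is not the model used in the prototype.

```python
def sliding_windows(series, window_size=64, step_size=1):
    """Split a 1-D sequence into overlapping windows of fixed length."""
    last_start = len(series) - window_size
    return [
        series[start:start + window_size]
        for start in range(0, last_start + 1, step_size)
    ]


def mean_absolute_error(original, reconstructed):
    """MAE between a window and its reconstruction."""
    return sum(abs(a - b) for a, b in zip(original, reconstructed)) / len(original)


# Synthetic example signal with 100 samples (illustrative data only).
signal = [float(i % 10) for i in range(100)]
windows = sliding_windows(signal, window_size=64, step_size=1)

# Placeholder reconstruction: each window is "reconstructed" by its own mean.
# A trained autoencoder would provide the reconstruction in practice.
scores = []
for window in windows:
    mean_value = sum(window) / len(window)
    scores.append(mean_absolute_error(window, [mean_value] * len(window)))

print(len(windows), "windows of length", len(windows[0]))
```

With a step size of 1, consecutive windows overlap in all but one sample, so a sequence of N samples yields N - 63 windows; a window whose reconstruction error exceeds a chosen threshold would then be flagged as anomalous.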

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Goetz, C.; Humm, B.G. A Hybrid and Modular Integration Concept for Anomaly Detection in Industrial Control Systems. AI 2025, 6, 91. https://doi.org/10.3390/ai6050091
