1. Introduction

The rapid digitization of business operations has ushered in unprecedented opportunities for innovation, scalability, and operational efficiency. However, this transformation has also significantly expanded the attack surface of organizations through increasingly complex and interconnected technological infrastructures [1]. The widespread adoption of internetworking devices, cloud services, and Internet of Things (IoT) technologies has introduced multiple vectors for exploitation, complicating efforts to secure digital assets [2, 3].

As the cyber threat landscape continues to evolve, organizations face mounting challenges in maintaining robust security postures. Traditional security mechanisms such as firewalls, antivirus software, and intrusion detection systems are inherently reactive and often insufficient against emerging threats that exploit vulnerabilities before detection and response can occur [1]. These legacy systems rely heavily on known threat signatures, limiting their capacity to identify novel or sophisticated attacks in real time [4]. Consequently, there is a growing shift toward proactive cybersecurity strategies, notably threat hunting, which aims to detect and mitigate both known and unknown threats before they materialize [5].

Threat hunting is a dynamic and iterative process involving the generation, monitoring, and refinement of threat hypotheses. It operates under the assumption that adversaries may already reside within the network, prompting analysts to validate hypotheses through active investigation and anomaly detection [6]. Unlike traditional security tools, threat hunting enables pre-emptive identification of malicious activity, offering a more aggressive and anticipatory defense posture [7]. Hypotheses are typically informed by threat intelligence and historical data, serving as educated guesses to explain potential intrusions or suspicious behavior [3]. This proactive stance is critical, as cyber-attacks frequently bypass signature-based detection and do not always trigger conventional alerts [4].

To enhance the effectiveness of threat hunting, organizations increasingly leverage machine learning (ML) algorithms capable of processing vast volumes of network and system data. These algorithms identify anomalous patterns and deviations from baseline behavior, improving detection accuracy over time through continuous learning. ML-driven threat hunting facilitates rapid classification of threats, enabling timely response and mitigation [8]. By analyzing historical data and categorizing incidents, AI-based systems significantly reduce response times and limit the impact of cyber-attacks.

Advanced techniques, such as natural language processing and predictive analytics, further empower security tools to detect subtle indicators of compromise. AI-powered systems can autonomously monitor network traffic, identify anomalies, and forecast potential threats before they escalate [9]. Technologies including deep learning and pattern recognition have become instrumental in developing intelligent systems capable of real-time threat detection and adaptive response [10]. These autonomous platforms not only strengthen threat intelligence but also accelerate incident response, thereby minimizing organizational exposure to cyber risks [8].

Traditionally, hypotheses are crafted manually by security analysts, who rely on their expertise to interpret vast amounts of network data and identify potential signs of compromise. However, as Nour et al. (2023) [11] highlight, this process is both time-consuming and highly dependent on specialist knowledge, which is not always available. In large enterprise environments, where data volumes are immense and threats are increasingly sophisticated, manual hypothesis generation quickly becomes unsustainable. Equally critical is the validation and verification of these hypotheses, which determines whether a threat truly exists and reveals the tactics, techniques, and procedures (TTPs) used by attackers. Yet, as Jadidi and Lu (2021) [4] note, manual validation often overlooks the reliability and quality of the hypotheses themselves, leading to delayed responses and missed threats.

As a result, manual hypothesis generation and validation remain major bottlenecks in the threat hunting process: they slow detection, increase the risk of human error, and limit organizational agility in responding to emerging threats. Although prior research has advanced threat hunting through machine learning, graph-based approaches, and proactive frameworks, these methods remain constrained by reliance on static datasets, the continued need for manual hypothesis generation, and limited integration of heterogeneous data sources. Moreover, high-performing ML models often lack explainability, which hinders trust and adoption in real-world enterprise environments. This creates a clear methodological gap: the absence of an automated, standardized, and interpretable threat hunting methodology that unifies hypothesis generation, validation, and proactive detection across diverse and evolving data sources.

This study aims to automate the generation and validation of threat hypotheses using deep learning techniques, ultimately improving detection speed, reducing false positives, and strengthening the cybersecurity posture of the enterprise. Hence, the study makes the following contributions:

Introduce a hybrid CNN-LSTM model that automates both hypothesis generation and validation, reducing reliance on manual analyst input.

Apply the model to the TON_IoT dataset to automate hypothesis generation and validation in the threat hunting process.

Provide confidence-based hypothesis prioritization, transforming raw anomaly scores into actionable, ranked threat leads for SOC triage.

Enhance the framework with PCA and RFE-RF for interpretable feature selection, and apply imbalance-handling techniques (SMOTE, stratified sampling) to improve robustness on IoT/IIoT datasets.

The structure of this paper is as follows:

Section 2 reviews relevant literature on AI-based threat detection and hybrid deep learning models.

Section 3 outlines the proposed methodology, including the CNN-LSTM architecture and feature selection techniques.

Section 4 describes the experimental setup.

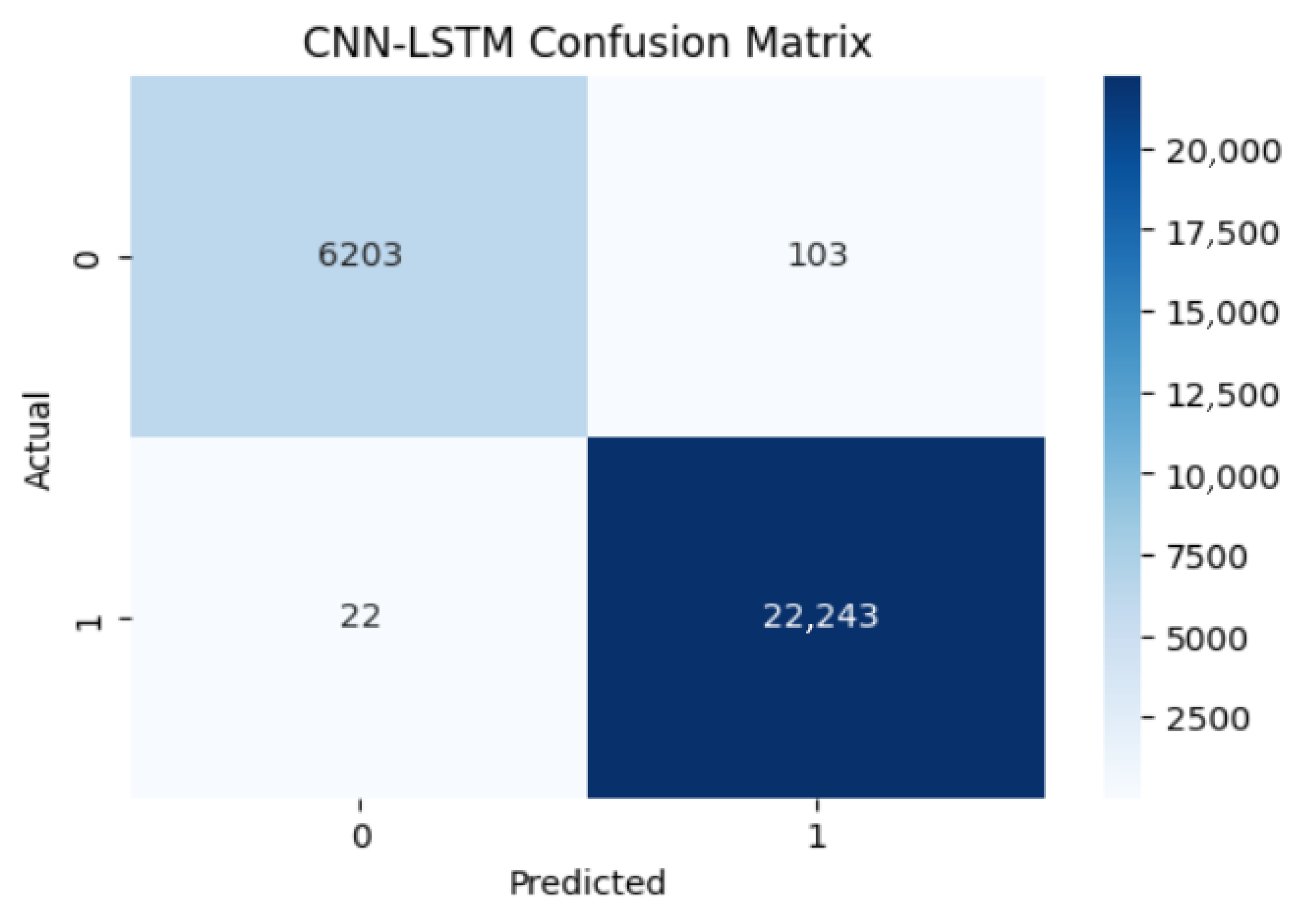

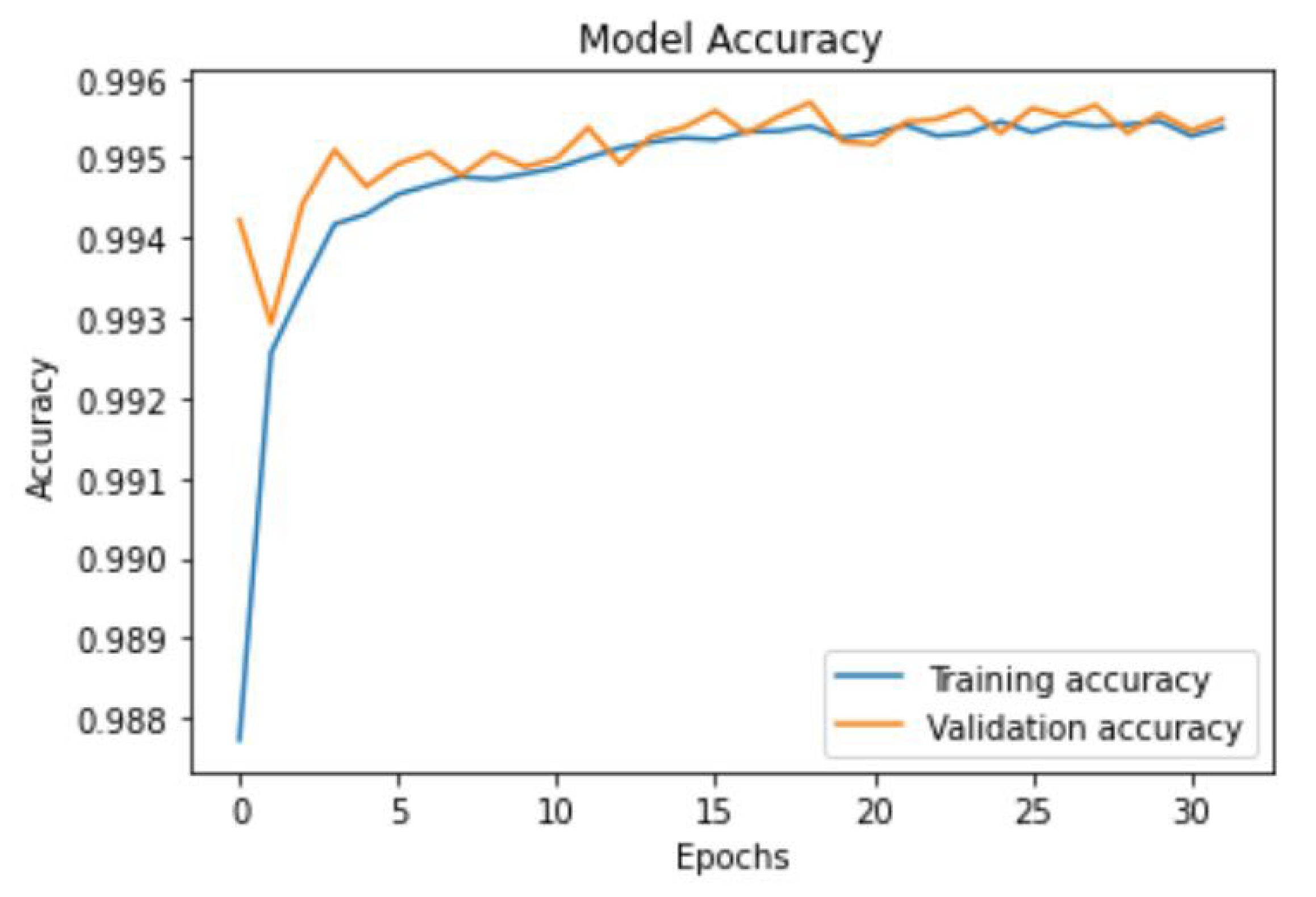

Section 5 presents performance evaluation using the TON_IoT dataset. This section also presents the empirical findings, including confusion matrices, accuracy and loss curves, ROC curves, and comparative performance analyses.

Section 6 concludes the study with key findings, and

Section 7 highlights directions for future research.

3. Methodology

The proposed hybrid model integrates Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM) networks to enhance the classification accuracy of normal versus anomalous network traffic. CNNs are supervised learning algorithms known for their robustness in computer vision and efficiency in detecting malicious activity due to their ability to learn directly from data and capture spatial features [20, 21, 25]. They mitigate parameter explosion through weight sharing, accelerating training processes. LSTMs, a variant of Recurrent Neural Networks (RNNs), are designed for time-series data and address the vanishing gradient problem by retaining relevant long-term dependencies through internal loops [17, 26].

As baseline models, Random Forest Classifier (RFC) and Autoencoder (AE) are employed. RFC is a supervised ensemble method that constructs multiple decision trees and aggregates their predictions to reduce overfitting and improve accuracy. It handles high-dimensional data efficiently, is resistant to noise and outliers, and uses bootstrap sampling and voting mechanisms to enhance generalisation [27, 28]. Its decision-making is guided by the Gini Index, Formula (1):

$$\text{Gini} = 1 - \sum_{i=1}^{m} P_i^{2} \tag{1}$$

where $P_i$ is the proportion of records belonging to class $i$, and $m$ is the number of classes. The feature with the lowest total Gini Index value becomes the root node of the tree. The total Gini Index at an internal node is given by Formula (2):

$$\text{Gini}_{\text{total}} = \frac{T_1}{T}\,\text{Gini}(T_1) + \frac{T_2}{T}\,\text{Gini}(T_2) \tag{2}$$

where $T_1$ is the total number of records belonging to the first class, $T_2$ is the total number of records belonging to the second class, and $T$ is the total number of records of all classes.
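As a minimal sketch of the Gini computation described above, the following pure-Python functions evaluate the Gini Index of a single node and the size-weighted total for a binary split. The function names and toy labels are illustrative, and treating $T_1$ and $T_2$ as the record counts of the two child partitions is an assumption about how Formula (2) is applied.

```python
from collections import Counter


def gini_index(labels):
    """Gini index of one node: 1 - sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def total_gini(left_labels, right_labels):
    """Size-weighted Gini index of a binary split (weights T1/T and T2/T)."""
    t1, t2 = len(left_labels), len(right_labels)
    t = t1 + t2
    return (t1 / t) * gini_index(left_labels) + (t2 / t) * gini_index(right_labels)


# Toy labels (hypothetical): 0 = normal, 1 = anomalous.
pure_split = total_gini([0, 0, 0], [1, 1, 1])    # perfectly separated classes
mixed_split = total_gini([0, 1, 0], [1, 0, 1])   # classes still mixed
```

A split that separates the classes perfectly yields a total of zero, which is why the candidate with the lowest total is preferred.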

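The Autoencoder (AE), an unsupervised neural network, is used to detect anomalies (Equation (4)) by learning and reconstructing normal traffic patterns. Deviations from these patterns are flagged based on reconstruction error, using a threshold derived from the Mean Squared Error (MSE) in Formula (3) [29, 30, 31]. The AE architecture consists of an encoder and a decoder, trained to minimise:

$$\text{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(x_i - \hat{x}_i\right)^{2} \tag{3}$$

where $x_i$ is the original input, $\hat{x}_i$ is the reconstructed output, and $n$ is the number of data points. Each sample is then labelled as:

$$\text{Anomaly} = \begin{cases} 1, & \text{if } \text{MSE} > \theta \\ 0, & \text{otherwise} \end{cases} \tag{4}$$

where $\theta$ is the reconstruction-error threshold, 1 denotes an anomaly, and 0 represents normal traffic.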
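The thresholding rule can be illustrated with a short sketch. It assumes the reconstructions have already been produced by a trained autoencoder; the sample values and the threshold of 0.05 are hypothetical and are not taken from the study.

```python
def mse(original, reconstructed):
    """Mean squared error between an input vector and its reconstruction."""
    n = len(original)
    return sum((x - x_hat) ** 2 for x, x_hat in zip(original, reconstructed)) / n


def flag_anomalies(inputs, reconstructions, threshold):
    """Return 1 (anomaly) where the reconstruction error exceeds the threshold, else 0."""
    return [1 if mse(x, x_hat) > threshold else 0
            for x, x_hat in zip(inputs, reconstructions)]


# Hypothetical values: two flows and their reconstructions from a trained AE.
inputs = [[0.10, 0.20, 0.30], [0.90, 0.10, 0.80]]
reconstructions = [[0.11, 0.19, 0.31], [0.30, 0.40, 0.20]]
flags = flag_anomalies(inputs, reconstructions, threshold=0.05)
```

The first flow is reconstructed closely and stays below the threshold, while the second is reconstructed poorly and is flagged.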

3.1. Data Collection

To train the proposed model, an appropriate dataset is required. The network traffic data is obtained from the publicly available TON_IoT dataset, which is generated from a variety of sources collected from telemetry datasets of IoT and IIoT systems, including data from connected devices running Windows and Linux operating systems, as well as network traffic from the IIoT system [22]. The full TON_IoT dataset contains 22,339,021 instances, with 21,542,641 attack samples and 796,380 normal samples, indicating a severe class imbalance (96.43% attack vs. 3.57% normal). The attack samples encompass nine categories: scanning, injection, ransomware, backdoor, man in the middle (MITM), distributed denial of service (DDoS), denial of service (DoS), cross-site scripting (XSS), and password attacks. The exact distribution of samples across these attack categories in the full dataset is not detailed here. For this study, the network train-test subset (train_test_network.csv), initially comprising 211,043 instances, was used. This subset maintains a similar imbalance pattern to the full dataset, with the majority of samples being attack instances across the nine attack categories. After preprocessing (as detailed in Section 3.1.1), the dataset was reduced to 190,474 instances while preserving the class imbalance characteristics. The dataset includes a label column with two classes for binary classification: normal (0) and anomalous (1). To address the severe class imbalance observed in both the full dataset and the train-test subset, the synthetic minority oversampling technique (SMOTE) was applied during preprocessing to balance class representation, as described in the following section.

3.1.1. Pre-Processing

The dataset, initially comprising 211,043 instances and 44 features, was loaded using Pandas and cleaned to ensure quality for machine learning classification. Features such as source/destination ports and IP addresses were removed due to their limited predictive value and risk of over-fitting. After eliminating duplicates and redistributing missing (‘-’) values across categorical classes, the dataset was reduced to 190,474 instances and 40 features. To address the severe class imbalance inherent in the TON_IoT dataset (96.43% attack vs. 3.57% normal in the full dataset, and similarly imbalanced in the 190,474-instance subset), SMOTE (Synthetic Minority Over-sampling Technique) was applied to generate synthetic samples of the minority class (normal traffic), effectively balancing the dataset for training. Stratified sampling was also employed during dataset splitting to maintain class proportions across train, validation, and test sets. After SMOTE augmentation and feature selection (PCA retaining 95% variance and RFE-RF selecting the top 20 most predictive features), the final dataset distribution is as follows:

Training set: 1,333,331 samples × 20 features (95.89% of total)

Validation set: 28,572 samples × 20 features (2.05% of total)

Test set: 28,571 samples × 20 features (2.05% of total)

Total: 1,390,474 samples × 20 features

Due to SMOTE augmentation, the final training dataset achieves approximately balanced class distribution (roughly 50% normal vs. 50% attack samples), enabling the hybrid CNN-LSTM model to learn effectively from both classes without bias toward the dominant attack category.
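The core interpolation step of SMOTE can be sketched as follows. This is a simplified pure-Python illustration of the idea rather than the implementation used in the study: the function name, the toy points, and the uniform choice of base sample are assumptions, and a maintained library implementation would normally be preferred in practice.

```python
import math
import random


def smote_oversample(minority, n_synthetic, k=5, seed=0):
    """Create n_synthetic points by interpolating between a minority sample
    and one of its k nearest minority-class neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        # Nearest neighbours of the base point, excluding the point itself.
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Toy minority-class points (hypothetical, already scaled to [0, 1]).
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
synthetic = smote_oversample(minority, n_synthetic=6, k=2)
```

Because every synthetic point lies on a line segment between two real minority samples, the new samples stay inside the region already occupied by that class.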

Table 2 illustrates the final dataset shapes after preprocessing and augmentation. The dataset was split using a 96:2:2 ratio (train:validation:test) to provide sufficient training data while maintaining adequate validation and test sets for model evaluation.

Categorical features were transformed using one-hot encoding, resulting in binary feature expansion and increased dimensionality [29]. For example, the “service” feature was split into new binary features like “service_dns” and “service_ftp”.
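A minimal sketch of this encoding step is shown below, using plain dictionaries so that it runs without external libraries. The rows and the `duration` column are hypothetical; with Pandas, which the study uses for loading, a helper such as `pandas.get_dummies` would typically play this role.

```python
def one_hot_encode(rows, column):
    """Replace a categorical column with one binary column per category."""
    categories = sorted({row[column] for row in rows})
    encoded = []
    for row in rows:
        new_row = {key: value for key, value in row.items() if key != column}
        for category in categories:
            new_row[f"{column}_{category}"] = 1 if row[column] == category else 0
        encoded.append(new_row)
    return encoded


# Hypothetical records with a categorical "service" feature.
rows = [{"service": "dns", "duration": 0.5},
        {"service": "ftp", "duration": 1.2}]
encoded = one_hot_encode(rows, "service")
```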

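To ensure balanced feature contribution during training, min-max normalisation was applied to scale all values between 0 and 1 [21, 32]. The normalisation Formula (5) used was:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{5}$$

where $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the feature.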
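Min-max normalisation of a single feature can be sketched as below. The handling of a constant feature (mapping every value to 0.0) is an assumption added to avoid division by zero and is not described in the study.

```python
def min_max_scale(values):
    """Scale a list of numbers to [0, 1] with x' = (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant feature: mapping to 0.0 is an assumption that avoids division by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


scaled = min_max_scale([10.0, 20.0, 40.0])
```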

The dataset is split into train (70%) and validation (15%) sets to train and validate the model, and an unseen test (15%) set to measure the model’s performance. Stratified sampling is applied because of the class imbalance of the dataset, thus maintaining the class distribution in each set.
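A stratified 70/15/15 split can be sketched as follows: indices are grouped by class and each group is divided with the same fractions, so every subset keeps the original class proportions. The function name, the seed, and the toy labels are illustrative assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=42):
    """Return index lists (train, validation, test) that keep class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]   # remainder goes to the test set
    return train, val, test


labels = [1] * 80 + [0] * 20   # hypothetical imbalanced labels
train_idx, val_idx, test_idx = stratified_split(labels)
```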

3.1.2. Exploratory Data Analysis (EDA)

To better understand the dataset and guide feature selection, visual exploration was conducted using Seaborn (version 0.12.2) and Matplotlib (version 3.7.1). A bar chart revealed a clear imbalance between normal (0) and anomalous (1) traffic classes, which could bias classification results. To address this, the study applied a hybrid resampling technique based on SMOTE, which generates synthetic samples of the minority class while slightly reducing the majority class. This balanced dataset was used to train the RFC baseline model for improved detection of normal and anomalous traffic [28].

For feature selection, the study adopted a correlation-based approach using the Pearson correlation coefficient (PCC) to identify and eliminate highly correlated feature pairs, thereby reducing multicollinearity.

A correlation matrix was generated, and a threshold of 0.8 was set to determine which features to retain. This helped streamline the dataset while preserving predictive power.
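The correlation filter can be sketched as below. The feature names and values are hypothetical, and the rule for deciding which member of a correlated pair to drop (keep the feature seen first) is an assumption, since the study does not state it. Constant features would need separate handling because their standard deviation is zero.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def drop_correlated(features, threshold=0.8):
    """Keep a feature only if |r| <= threshold against every feature already kept."""
    kept = []
    for name in features:
        if all(abs(pearson(features[name], features[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical feature columns.
features = {
    "src_bytes": [1.0, 2.0, 3.0, 4.0],
    "src_pkts": [2.0, 4.0, 6.1, 8.0],   # nearly proportional to src_bytes
    "duration": [5.0, 1.0, 4.0, 2.0],
}
kept = drop_correlated(features)
```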

To further enhance model performance, feature engineering was employed to uncover hidden relationships within the data [31]. Feature extraction techniques were used to reduce dimensionality and retain only the most informative attributes. Among the available methods (PCA, LDA, and AE), this study used Principal Component Analysis (PCA), targeting 95% variance retention. The training data was standardised, and PCA was applied to transform the dataset into a lower-dimensional space while preserving essential variance [32, 33]. The covariance matrix was computed using Formula (6):

$$C = \frac{1}{N-1} X^{T} X \tag{6}$$

Here, $C$ represents the covariance matrix of the standardised data $X$ consisting of $N$ samples, and $X^{T}$ is the transpose of $X$.
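The two PCA ingredients mentioned above, the covariance matrix and the 95% variance criterion, can be sketched as follows. The sketch assumes the sample covariance (division by N − 1) and assumes the eigenvalues are supplied by a numerical library; the eigen-decomposition itself is omitted, and the toy values are illustrative.

```python
def covariance_matrix(X):
    """C = X^T X / (N - 1) for column-standardised data X (a list of N rows)."""
    n, d = len(X), len(X[0])
    return [[sum(row[i] * row[j] for row in X) / (n - 1) for j in range(d)]
            for i in range(d)]


def components_for_variance(eigenvalues, target=0.95):
    """Smallest number of leading components whose variance share reaches target."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    cumulative = 0.0
    for count, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= target:
            return count
    return len(ordered)


X = [[-1.0, -1.0], [0.0, 0.0], [1.0, 1.0]]        # toy data, already mean-centred
C = covariance_matrix(X)
n_components = components_for_variance([2.0, 0.0])  # eigenvalues of C above
```

In the toy data the two columns are identical, so a single component carries all of the variance.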

Finally, Recursive Feature Elimination with a Random Forest estimator (RFE-RF) was used to select the most relevant features. This iterative process ranks features by importance, removes the least useful ones, and refits the model until an optimal subset is reached. RFE-RF was chosen over RFE-SVM for its superior computational efficiency and predictive accuracy [18, 30].
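The elimination loop itself is independent of the estimator and can be sketched as follows. A fixed score table stands in for the Random Forest importances, which is purely an assumption for illustration; in the study the scores would come from refitting a Random Forest on the remaining features at each iteration, for example through scikit-learn's `RFE` wrapper.

```python
def recursive_feature_elimination(feature_names, importance_fn, n_keep):
    """Repeatedly score the remaining features and drop the least important one."""
    remaining = list(feature_names)
    while len(remaining) > n_keep:
        scores = importance_fn(remaining)   # stands in for refitting the estimator
        weakest = min(remaining, key=lambda name: scores[name])
        remaining.remove(weakest)
    return remaining


# Stand-in scorer with hypothetical fixed importances for four components.
toy_scores = {"pc1": 0.50, "pc2": 0.30, "pc3": 0.15, "pc4": 0.05}
selected = recursive_feature_elimination(
    list(toy_scores),
    lambda names: {name: toy_scores[name] for name in names},
    n_keep=2,
)
```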

3.1.3. Dataset Splitting and Training Preparation

Following feature selection, the preprocessed TON_IoT dataset (190,474 instances after preprocessing, with 20 selected features) was split into training (70%), validation (15%), and testing (15%) sets using stratified sampling to preserve the class distribution (96.43% attack vs. 3.57% normal in the full dataset). This split ensures robust evaluation across heterogeneous IoT/IIoT telemetry, network flows, and OS logs, mitigating overfitting on imbalanced data. SMOTE was reapplied during training with k = 5 nearest neighbors to achieve a 1:1 balance, generating ~133,332 synthetic minority-class samples. Data was reshaped into sequences of timesteps = 10 for temporal modeling.
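The reshaping into sequences of 10 timesteps can be sketched as below. The study does not state whether windows overlap or how each window is labelled, so the sliding window with stride 1 and the use of the last row's label are both assumptions made for illustration.

```python
def make_sequences(rows, labels, timesteps=10):
    """Group consecutive rows into overlapping windows of length `timesteps`.
    Each window takes the label of its last row (an assumption)."""
    sequences, targets = [], []
    for end in range(timesteps, len(rows) + 1):
        sequences.append(rows[end - timesteps:end])
        targets.append(labels[end - 1])
    return sequences, targets


rows = [[float(i), float(i) / 2] for i in range(12)]   # 12 toy rows, 2 features
labels = [0] * 11 + [1]
X_seq, y_seq = make_sequences(rows, labels)
```

Each element of `X_seq` has shape (timesteps, features), which is the layout expected by one-dimensional convolutional and recurrent layers.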

3.2. Proposed Model

The proposed model consists of CNN layers and LSTM layers. The task primarily involves capturing local sequential dependencies and temporal patterns, for which CNN and LSTM models are well suited. CNNs efficiently extract local features, while LSTMs capture long-term dependencies without the extensive pretraining typically required by Transformer architectures. CNN and LSTM models also allow more direct interpretability of learned features and temporal behavior, which aligns with the goal of analyzing the underlying patterns in the data rather than solely maximizing predictive accuracy.

For the CNN layers, let $x_i \in \mathbb{R}^{d}$ be the d-dimensional feature vector associated with the ith attribute in the network traffic data. Let $X \in \mathbb{R}^{A \times d}$ represent the training input dataset, with A denoting the total length of the input sequence. For every sample j within this training dataset, consider the data segment $w_j$ that comprises k consecutive vectors. This can be expressed as:

$$w_j = [x_j, x_{j+1}, \ldots, x_{j+k-1}]$$

A convolution operation involves a filter p, which is used to generate a new feature c. Each feature element $c_j$ for data vector $w_j$ is represented as follows:

$$c_j = f(w_j \otimes p + b)$$

Here, ⊗ denotes the convolution operation, b ∈ R serves as the bias term in the neural network, and f represents the nonlinear activation function. In this implementation, we apply the Rectified Linear Unit (ReLU) as the nonlinear activation, defined as follows:

$$f(z) = \max(0, z)$$
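The convolution step can be traced with a small sketch that slides a k × d filter over the sequence and applies ReLU to each window. The input, filter, and bias values are hypothetical, and ⊗ is interpreted here as the element-wise product of window and filter summed over all entries, which is an assumption about the notation.

```python
def relu(value):
    """Rectified Linear Unit: max(0, value)."""
    return max(0.0, value)


def conv1d_feature_map(x, p, b=0.0):
    """c_j = ReLU(w_j (*) p + b), where w_j = x[j : j + k] and p is a k x d filter."""
    k = len(p)
    feature_map = []
    for j in range(len(x) - k + 1):
        window = x[j:j + k]
        total = sum(window[r][c] * p[r][c]
                    for r in range(k) for c in range(len(p[0])))
        feature_map.append(relu(total + b))
    return feature_map


x = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [1.0, 3.0]]   # A = 4 steps, d = 2
p = [[1.0, -1.0], [0.5, 0.5]]                            # filter with k = 2
c = conv1d_feature_map(x, p, b=-1.0)
```

With A = 4 and k = 2 the feature map has A − k + 1 = 3 elements, one per window position.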

In the LSTM layers, the gate and state vectors have the same dimensions as those defined by X(t). The forget gate determines which details to retain or discard by integrating X(t) with the prior hidden state h(t − 1). Its output is produced using the sigmoid function and then scaled by the earlier cell state C(t − 1). The update gate incorporates the input gate, which identifies the data to incorporate for creating C(t). This process relies on the sigmoid function and on the tanh function via the tanh gate. The product of these gates is combined with the result from the forget gate multiplied by C(t − 1) to produce C(t). The present cell state C(t) is passed through a tanh activation, then multiplied by the sigmoid output of the output gate, o(t), to yield the current hidden state h(t), which serves as the LSTM network’s output. The equation below illustrates the output formula:

$$h(t) = o(t) \odot \tanh(C(t))$$

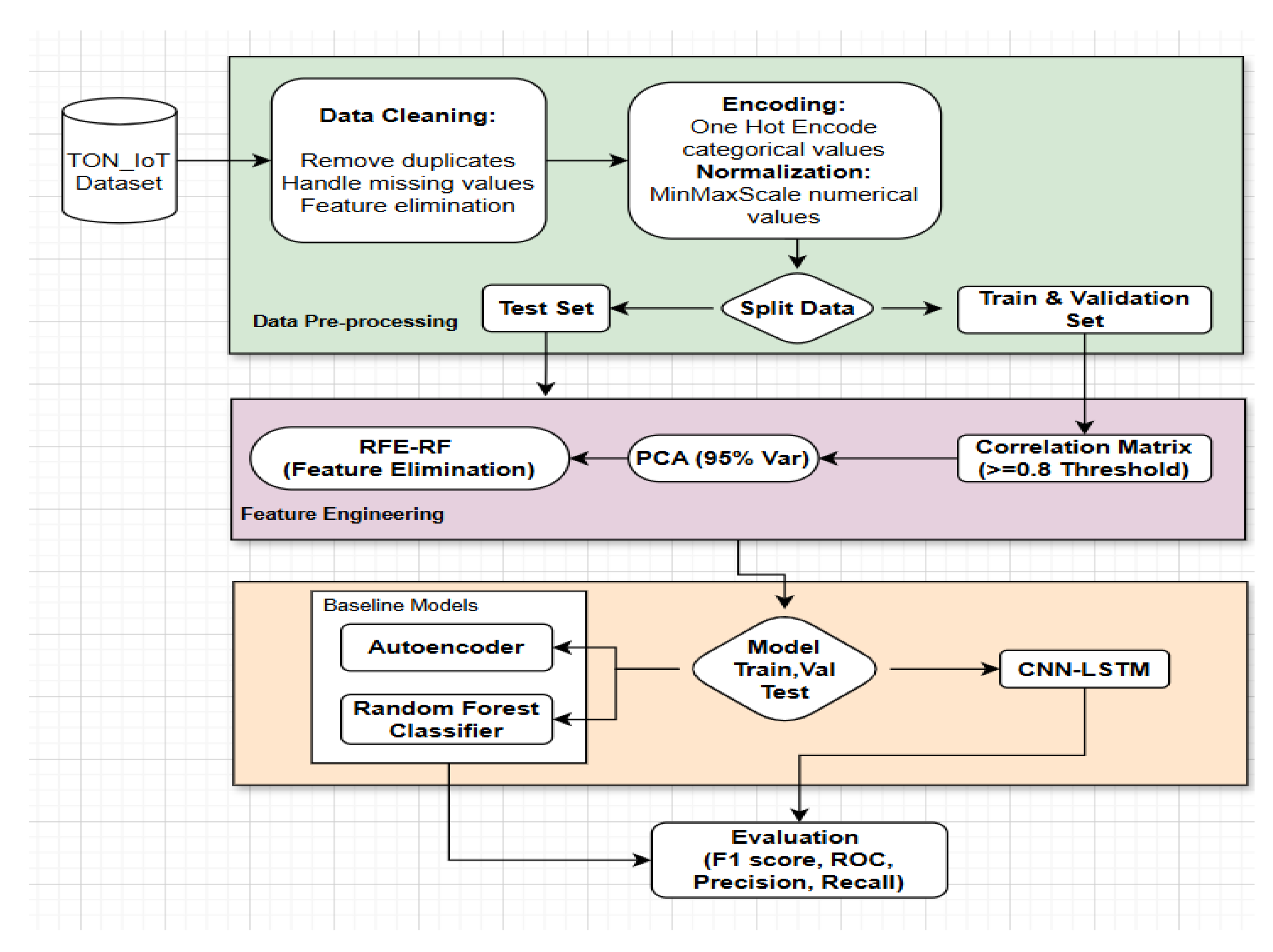

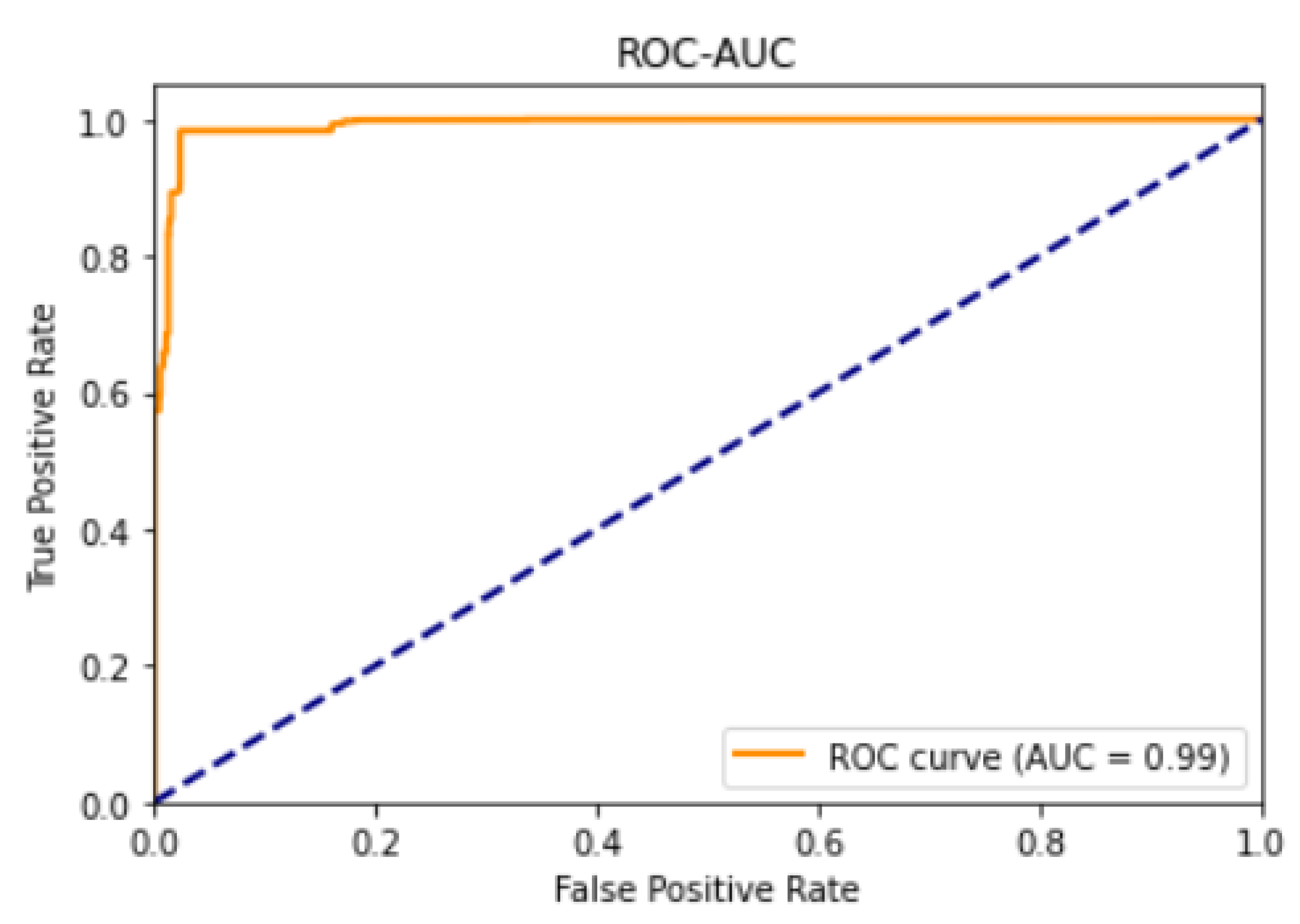
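The gate interactions described above can be followed in a single LSTM step written for scalar inputs. This is a simplified sketch: real layers use weight matrices and vectors, and the gate names, the weight layout, and the all-zero toy weights are assumptions chosen to keep the arithmetic easy to check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, w):
    """One scalar LSTM step; w maps gate name -> (input weight, hidden weight, bias)."""
    def gate(name, activation):
        w_x, w_h, bias = w[name]
        return activation(w_x * x_t + w_h * h_prev + bias)

    f_t = gate("forget", sigmoid)        # how much of C(t-1) to keep
    i_t = gate("input", sigmoid)         # how much new information to admit
    g_t = gate("candidate", math.tanh)   # candidate values (the tanh gate)
    o_t = gate("output", sigmoid)        # how much of the cell state to expose
    c_t = f_t * c_prev + i_t * g_t       # new cell state C(t)
    h_t = o_t * math.tanh(c_t)           # new hidden state h(t)
    return h_t, c_t


# Zero weights make every sigmoid gate 0.5 and the candidate 0.
weights = {name: (0.0, 0.0, 0.0)
           for name in ("forget", "input", "candidate", "output")}
h_t, c_t = lstm_step(x_t=1.0, h_prev=0.0, c_prev=2.0, w=weights)
```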

The proposed model is designed to automate hypothesis generation within enterprise networks. The combination of spatial and temporal feature extraction enables the model to detect threats such as zero-day attacks. The workflow of the proposed model, from data collection to model evaluation, is illustrated using a flowchart and a block diagram. Data is collected from the TON_IoT dataset, and data preprocessing is carried out: duplicates are removed, missing values are handled, and unique features are eliminated. One-hot encoding is performed on categorical values, and numerical values are normalised to the range 0 to 1 using a min-max scaler. The dataset is split into train (70%), validation (15%), and test (15%) sets, and a correlation matrix of the train set is generated. A threshold of 0.8 is applied, and highly correlated feature pairs above it are eliminated. PCA is applied to the train set to reduce dimensionality, selecting principal components that retain 95% of the variance. The PCA-transformed data is fitted to the RFE-RF, the features are ranked by importance, and the most relevant features based on the importance scores are chosen. PCA and RFE-RF are also applied to the test and validation sets, transforming them into the same shape as the train set. After training, the performance of the models is evaluated using F1 score, precision, recall, and ROC.

Figure 1 illustrates the sequential process, providing clarification of the workflow between steps.

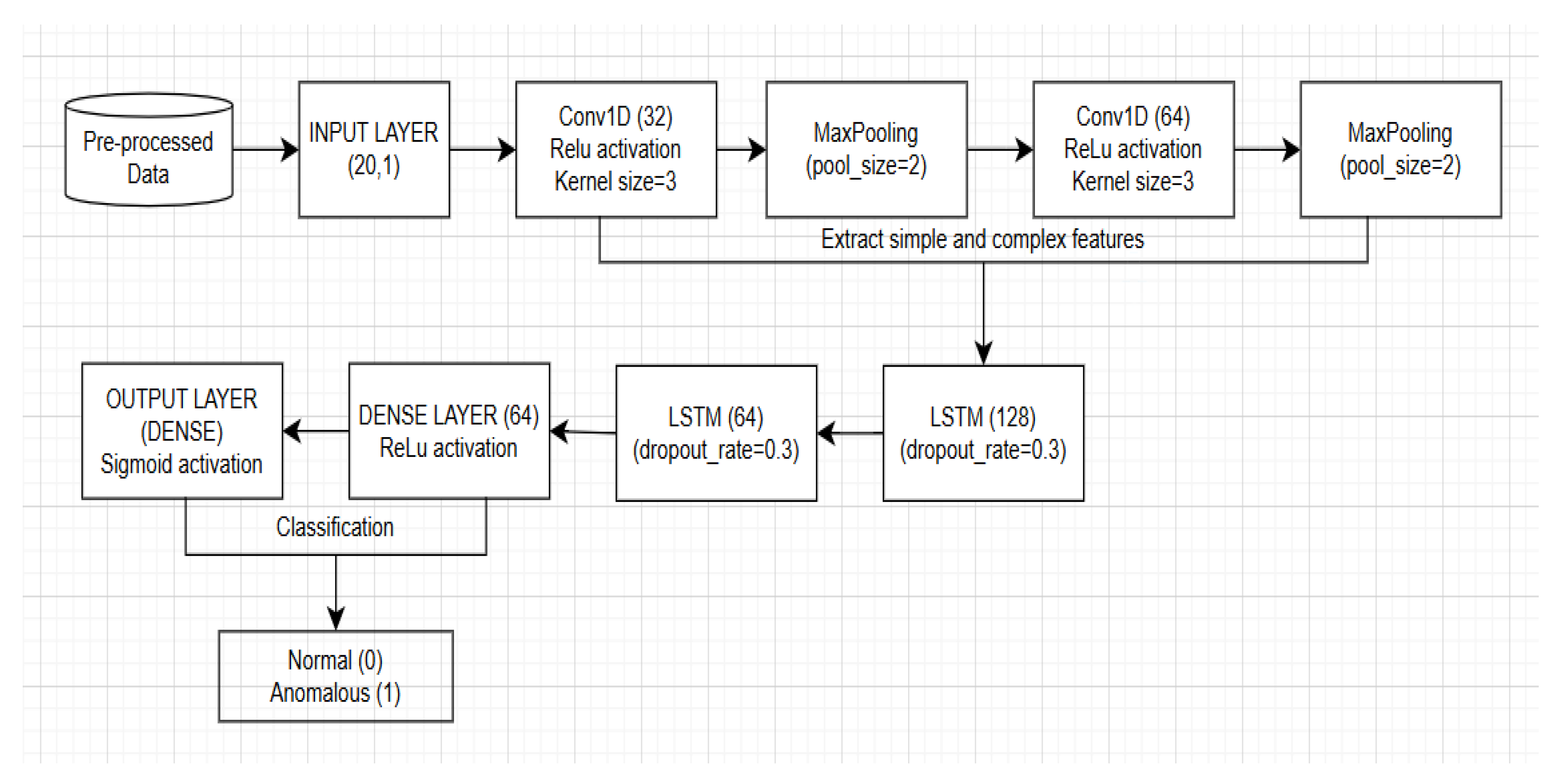

Figure 2 represents a high-level architecture of the hybrid CNN-LSTM model. The CNN layers (Conv1D) extract the simple and complex features of network traffic. These features are passed to the LSTM layers, which capture temporal feature patterns. The result is passed to the fully connected dense layer and the output layer, which classify the network traffic as either normal (0) or anomalous (1).

Model Architecture and Hyperparameters

The hybrid CNN-LSTM model was implemented in Python using Keras/TensorFlow, with the following layer configuration optimized via grid search on a validation subset:

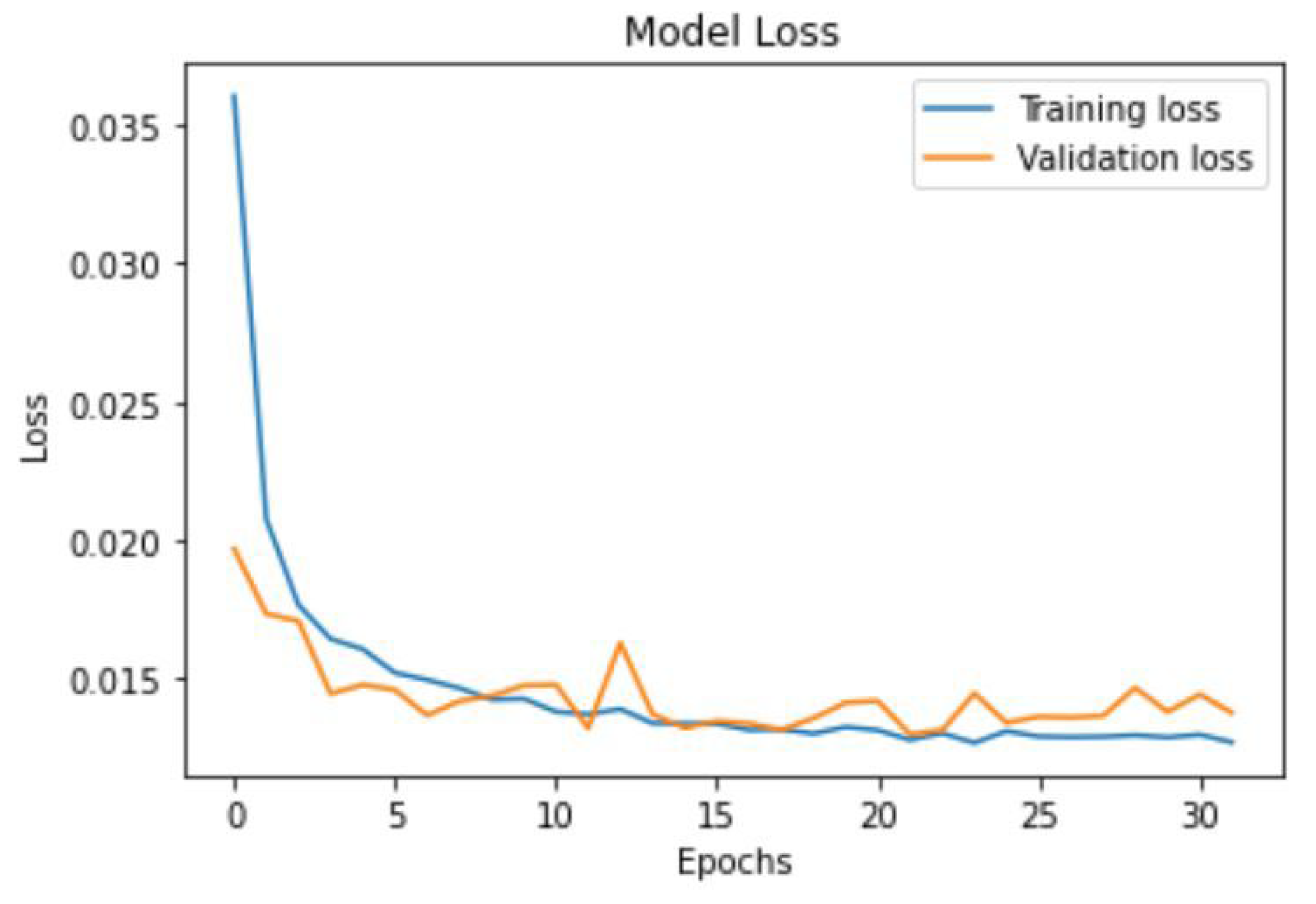

(1) CNN Branch: Two 1D convolutional layers (64 filters, kernel_size = 3, ReLU activation), followed by max-pooling (pool_size = 2) to extract spatial patterns from network flows (e.g., packet byte distributions).

(2) LSTM Branch: Two LSTM layers (128 units each, return_sequences = True for the first), with dropout = 0.2 to handle temporal dependencies in telemetry sequences while preventing overfitting on TON_IoT’s noisy IoT data.

(3) Fusion and Output: Concatenated features fed into a dense layer (64 units, ReLU) and a final sigmoid output for binary anomaly classification, yielding probabilistic confidence scores for hypothesis prioritization.

The model was compiled with the Adam optimizer (learning_rate = 0.001, β1 = 0.9, β2 = 0.999) and binary cross-entropy loss, suitable for imbalanced anomaly detection. Training used mini-batch gradient descent for 50 epochs with batch_size = 128, balancing computational efficiency (~1.5 h on an NVIDIA RTX 3060 GPU) and gradient stability on the ~1.39 million augmented samples. This batch size was selected because larger values (e.g., 256) risked noisy updates on variable-length IoT sequences, while smaller values (e.g., 64) increased training time without AUC gains.

Epochs were capped at 50 with early stopping (patience = 10, monitoring validation AUC), converging at ~35–40 epochs to avoid overfitting, as validated by a 1–2% performance drop in ablation tests with 30 epochs.

For baselines, Random Forest used 100 estimators (as in RFE-RF), and the Autoencoder had 3 hidden layers (32 units) trained for 50 epochs with MSE loss.
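The early-stopping rule (patience = 10 on validation AUC) can be sketched in plain Python as below. The AUC history is hypothetical and is not taken from the reported experiments; in a Keras training loop this behaviour would normally be delegated to the framework's early-stopping callback rather than written by hand.

```python
def best_epoch_with_early_stopping(val_auc_per_epoch, patience=10):
    """Return (best_epoch, stopped_epoch), both 1-based, monitoring validation AUC."""
    best_auc, best_epoch = float("-inf"), 0
    for epoch, auc in enumerate(val_auc_per_epoch, start=1):
        if auc > best_auc:
            best_auc, best_epoch = auc, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch   # no improvement for `patience` epochs
    return best_epoch, len(val_auc_per_epoch)


# Hypothetical AUC curve: improves for 5 epochs, then plateaus.
history = [0.80, 0.85, 0.90, 0.93, 0.95] + [0.94] * 20
best, stopped = best_epoch_with_early_stopping(history, patience=10)
```

Training stops once ten consecutive epochs have passed without a new best validation AUC, and the weights from the best epoch would typically be the ones kept.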

7. Future Work

Future research will address key limitations identified in this study, particularly dataset constraints, deployment challenges, and the need for advanced automated hypothesis generation. First, the model’s reliance on the static TON_IoT dataset, despite its comprehensive IoT/IIoT telemetry and attack diversity, limits validation of generalizability in real-time, evolving enterprise environments. To overcome this, the framework will be evaluated on additional benchmark datasets (e.g., UNSW-NB15, CIC-IDS2017) and real-time telemetry streams to confirm robustness across heterogeneous network conditions and emerging attack patterns. Second, critical deployment metrics essential for enterprise adoption were not assessed due to the study’s focus on model accuracy and preprocessing optimization. These include throughput (inferences per second), latency (real-time processing delay), model size (for edge deployment), inference cost, and resource utilization (CPU/GPU demands), particularly on gateway devices and when integrated with SIEM/SOAR systems. Future work will rigorously quantify these metrics to ensure scalability and operational feasibility. Third, while the current framework automates anomaly scoring to support hypothesis generation, it lacks integration with structured multi-criteria decision-making systems or knowledge graph-based hypothesis refinement. Extending the model to incorporate automated hypothesis variants and MITRE ATT&CK TTP mapping will enable full lifecycle automation from detection to analyst-ready threat narratives. Finally, given the black-box nature of deep learning, integrating explainable AI (XAI) techniques such as SHAP and LIME will provide global and local feature attributions, enhancing interpretability and analyst trust. Additionally, Transformer-based models will be explored to generate natural language justifications for detected anomalies, delivering human-readable threat rationales.
These advancements will directly address current gaps, transforming the framework into a fully interpretable, deployable, and proactive threat hunting solution for modern enterprise security operations.