1. Introduction

In recent months, numerous insights into the accessibility and applicability of Large Language Models (LLMs) have been presented in reports and papers, including one from OpenAI researchers that defined Generative Pre-trained Transformer models as general-purpose technologies [1,2,3]. These works showcase the transformative potential of technologies such as ChatGPT, Claude.ai, Google Gemini, and HuggingChat, which have been instrumental in generating texts across a spectrum of subjects and varying levels of intricacy based on user-provided prompts.

Simultaneously, the rapid advancement of technologies, particularly Artificial Intelligence (AI), poses significant challenges to critical societal sectors such as employment and education [4,5,6,7,8]. In this context, the field of Future Studies becomes increasingly important, as it aims to understand the trends shaping our future and identify paths toward desirable outcomes. Widely acknowledged traditional methodologies, such as the Delphi method, consult experts through questionnaires in iterative rounds to converge on consensus-based future scenarios.

However, limited access to these experts, often due to geographic or time constraints, represents a critical challenge. These difficulties become even more evident in large-scale decision-making processes, where the involvement of a diverse and substantial group of experts is essential to ensure the robustness and reliability of the results. When such collaboration is limited, the quality, diversity, and depth of the insights may be significantly compromised.

The proficiency of LLMs in emulating specialized knowledge and delivering prompt insights with a reasonable degree of confidence offers a potential solution to the challenges of expert accessibility and participation. This capability not only enhances the efficiency and scope of research but also opens the door to new ways of acquiring and utilizing expert knowledge. By simulating diverse personas, LLMs make it possible to assess whether AI-generated participants can contribute meaningfully to consensus-building and the generation of robust insights in Delphi studies. Consequently, this approach paves the way for more inclusive and comprehensive research in which multiple perspectives are incorporated into future-oriented analyses. Integrating advanced AI tools like LLMs into established methodologies such as the Delphi method could thus significantly transform expert consultation and collaborative research practices.

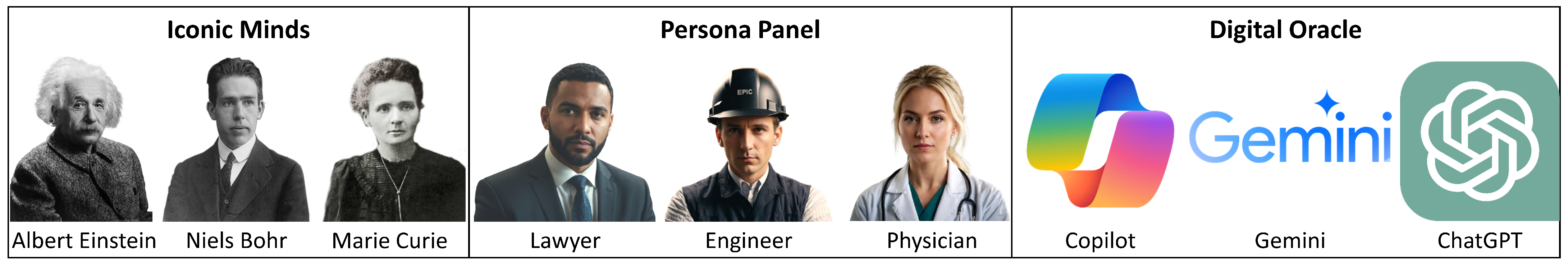

In this work, we propose AI Delphi, an innovative application of LLMs for emulating expert personas in Delphi studies. This approach leverages the advanced capabilities of LLMs to simulate the insights and expertise of renowned individuals across various fields. Within this framework, we explore three distinct models: Iconic Minds Delphi, which emulates personas of notable researchers; Persona Panel Delphi, involving fictional personas representing professionals from diverse disciplines; and Digital Oracle Delphi, where different LLMs serve as the panel of experts. These models represent a groundbreaking fusion of AI and traditional research methodologies, potentially reshaping our approach to understanding and predicting future trends and scenarios.

Moreover, we conducted two experiments based on the Iconic Minds Delphi, emulating the perspectives of influential individuals such as Andrew Ng, Thomas Piketty, and Martha Nussbaum. In the first experiment, we analyzed the Future of Work by replicating the Delphi questionnaire originally coordinated by the Millennium Project on Future Work/Technology 2050 [9], adapting the questionnaire to the 2030–2060 time frame. In the second experiment, we aimed to reach a consensus on the future of higher education in Brazil through a Delphi process with two rounds of discussions. This Higher Education Delphi study focuses on refining and improving scenarios developed previously using other methods. These two experiments offer a balance between global and local perspectives, demonstrating the versatility of the approach across varied themes, domains, and contextual settings.

This work is organized as follows: Section 2 reviews the existing literature, establishing the background for the investigation conducted in this work. Section 3 discusses the theoretical grounding, establishing the theoretical and contextual basis for the research. Section 4 outlines the methodology employed in this work, detailing the development and application of AI-generated personas within the Delphi method. Section 5 details the Delphi experiments conducted with the Iconic Minds Delphi, highlighting the key findings. Then, Section 6 reflects on the results, exploring their implications and the potential limitations of the study. Finally, Section 7 summarizes the findings, discusses our contributions, and suggests directions for future research.

2. Related Work

The emergence and popularization of LLMs have led to exploratory studies on their capabilities, implications, and applications in different fields. In Futures Research, how these models influence and can be integrated into established methodologies, such as the Delphi approach, has become a significant research focus. This section examines previously conducted work that addresses LLMs’ reasoning abilities and potential biases and discusses the job market, educational systems, and proposed methodological innovations.

Van Belkom [10] discussed the possibilities and challenges of using AI as an analytical tool for investigating the future of AI itself. This tool explores three distinct scenarios: exploration, prediction, and future creation. In this context, AI has demonstrated remarkable capabilities in categorizing data, identifying patterns, and projecting these patterns into future scenarios.

The implications of LLMs for the job market have been the focus of several studies in recent months. Eloundou et al. [4] and Eisfeldt et al. [5] investigated the impact of LLMs on the U.S. labor market from different angles. Using a methodology that employs LLMs to assess the exposure and overlap of tasks among workers, Eloundou et al. demonstrate that around 19% of jobs have at least half of their tasks susceptible to automation by current and soon-to-be-developed LLM-powered software. On the other hand, Eisfeldt et al., by utilizing generative AI to evaluate whether tasks performed by various occupations can be carried out by their current or future versions, discovered that the introduction of ChatGPT significantly influenced valuations of publicly traded companies, resulting in a daily increase in firm returns of about 0.4 percentage points.

Expanding this discussion to other regions, Chen et al. [6] explored the potential impacts of generative AIs on the Chinese job market, employing various LLMs as classifiers to determine occupational exposure, and concluded that the release of LLMs could significantly alter labor demand trends, representing a substantial shock to the market. Similarly, Gmyrek et al. [7] presented a global analysis of the potential exposure of occupations and tasks performed by generative AIs. By using LLMs to estimate potential exposure scores at the task level, they found that clerical jobs have the highest share of tasks vulnerable to Generative Pre-trained Transformer (GPT) technology, with a significant portion at medium-level exposure and about 25% at high risk of automation, indicating that many such jobs might not emerge in developing countries. For other types of “knowledge work”, the analysis shows only partial exposure, suggesting more potential for augmentation and productivity benefits than outright displacement.

Regarding the future of education, recent studies offer visionary perspectives on adapting higher education to address the rapid evolution of the job market and technological advancements. According to Ahmad [11] and Hammershøj [12], scenario-based planning has become a strategic tool for forecasting the future of education. This approach, grounded in the creation of diverse future scenarios, serves not only to anticipate technological disruptions but also to prepare educational institutions for these impending changes. Meanwhile, Kromydas [13] and Raich et al. [8] spotlight the need for educational systems to undergo significant transformations. These works highlight the importance of adopting innovative and flexible educational models that can respond to current technological trends and shape a future where higher education plays a pivotal role in societal advancement and individual empowerment.

The reasoning ability of LLMs has also been a focal point in various research studies. Wang et al. [14] and Liang et al. [15] delved into this aspect extensively. Wang et al. employed a debate approach to understanding ChatGPT’s reasoning, aiming to assess the model’s understanding of a particular topic. The central idea is to present the LLM with a new question and challenge it with an initially incorrect user-provided solution, prompting it to engage in a discussion until arriving at the correct decision. In contrast, Liang et al. adopted a strategy in which the LLM refines its solutions iteratively with self-generated feedback. They introduced a multi-agent debate framework, in which each agent presents arguments and a judge oversees the process until a final solution is reached.

Advancing in the exploration of LLM capabilities, Talebirad and Nadiri [16] conducted research focused on the use of multiple agents within an LLM, where each agent possesses distinct characteristics, aiming to enhance performance in various tasks. On the other hand, Ling et al. [17] proposed that LLMs engage in explicit and rigorous deductive reasoning, ensuring process reliability through self-verification. This approach enables LLMs to produce precise reasoning steps, where subsequent steps are firmly grounded in previous ones. Wang et al. [18], meanwhile, adopted a methodology that transforms a single LLM into a cognitive synergist for tasks requiring intensive domain knowledge and complex reasoning. This approach involves multi-turn self-collaboration with various personas to intensify the model’s reasoning capabilities.

Within the exploration of LLMs for future studies and the generation of personas, another critical area of interest is the evaluation of biases within these models, particularly in terms of political inclinations. In this context, Motoki et al. [19] played a significant role in investigating the political bias of ChatGPT. The proposed methodology involved requesting ChatGPT to assume the persona of a specific political stance. The study then compared the responses given under each persona with the model’s standard responses. This approach provided valuable insights into whether ChatGPT exhibits any significant political bias, shedding light on discussions about the neutrality and impartiality of language models in practical applications.

Through the review of the studies highlighted in this section, it becomes evident that there has been extensive exploration and evaluation of LLMs in various contexts. However, the innovative proposal of integrating LLMs with established methodologies, such as the Delphi method, represents a relatively uncharted frontier in research. This intersection has the potential to combine the agility and processing power of LLMs with the robustness and reliability of traditional methods, indicating a promising direction for future work at the intersection of generative AI and studies about the future.

3. Theoretical Background

3.1. Delphi

The Delphi methodology, developed in the early 1960s by researchers at RAND Corporation, responded to the need for reliable forecasting methods during the Cold War. These researchers addressed issues related to the military potential of future technologies and the resolution of political questions. Forecasting methods at the time included simulations of individuals and single expert forecasting, but they were limited in scope and effectiveness [20].

Faced with these limitations, RAND researchers explored the use of expert panels to address forecasting issues. Expert panels tend to be more accurate than individual experts, especially when their members agree on an issue within their field [20]. However, group dynamics in face-to-face meetings introduce factors that can influence the outcome, such as psychological factors, deceptive persuasion, reluctance to abandon publicly expressed opinions, and the effect of adhering to the majority opinion [21].

Therefore, in response to the need for a more objective method, the Delphi method was developed by Olaf Helmer, Nicholas Rescher, Norman Dalkey, and other researchers at the RAND Corporation. They sought a true consensus of experts, and the method was designed to eliminate the obstacles encountered in conference rooms. Emphasis was placed on anonymity and iterative feedback, elements considered indispensable in the methodology [20].

In general, Delphi is applied to establish forecasts on a specific subject. The process begins by identifying and inviting experts in the relevant area. Then, clear and objective questions are formulated, followed by individual questionnaires in several rounds. After each round, the results are compiled, and collective feedback is provided to the participants. This process encourages experts to reconsider and revise their initial opinions, taking into account the divergent perspectives presented. This feedback and revision cycle is repeated for a predefined number of rounds or until the experts have formed a consensus [20].
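The round-and-feedback cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure used in this work: the `Expert` callable, `run_delphi`, and the toy `make_expert` panelists (who move part of the way toward the group mean) are hypothetical names introduced here only to show the control flow.

```python
from statistics import mean, stdev
from typing import Callable, Optional

# Hypothetical stand-in for a panelist: a callable that receives the previous
# round's aggregate feedback (None in round 1) and returns a numeric estimate.
Expert = Callable[[Optional[dict]], float]


def run_delphi(experts: list[Expert], n_rounds: int = 2) -> list[dict]:
    """Run a fixed number of rounds, feeding back only aggregate statistics."""
    history = []
    feedback = None
    for round_no in range(1, n_rounds + 1):
        # Each expert answers individually; only aggregates are shared,
        # which mirrors the anonymity of the method.
        answers = [expert(feedback) for expert in experts]
        feedback = {
            "round": round_no,
            "mean": mean(answers),
            "stdev": stdev(answers),
        }
        history.append(feedback)
    return history


def make_expert(initial: float, pull: float) -> Expert:
    """Toy expert (assumption): revises a fraction `pull` toward the group mean."""
    def expert(feedback: Optional[dict]) -> float:
        if feedback is None:
            return initial
        return initial + pull * (feedback["mean"] - initial)
    return expert


panel = [make_expert(x, 0.5) for x in (10.0, 14.0, 18.0)]
history = run_delphi(panel, n_rounds=2)
```

In this toy panel the mean stays at 14 while the dispersion shrinks from one round to the next, which is the kind of convergence the feedback step is meant to encourage. Real panelists, human or simulated, need not behave this way.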

The way consensus is understood and reached in Delphi studies is often ambiguous [22,23]. We observe that the accepted consensus is distinct from conventional definitions that rely on numerical agreement thresholds, such as unanimity, absolute majority (50% + 1), or even relative majorities where less than half may determine an outcome. In Delphi studies, consensus is not strictly defined by the proportion of agreement, but rather by the stability of opinions across successive rounds [24]. This means that even in the presence of persistent disagreement or minority viewpoints, a consensus is considered reached when participant responses show little to no variation between rounds, signaling a stationary state of collective judgment.
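To make the stability-based reading of consensus concrete, the sketch below checks how much individual responses move between two rounds. It is only one possible operationalization: the function name `is_stable` and the 15% tolerance are illustrative assumptions, not values taken from this study or from the cited references.

```python
from statistics import mean


def is_stable(prev_round, curr_round, tolerance=0.15):
    """True when the mean relative change per participant is within `tolerance`.

    `tolerance` is an illustrative assumption, not a value from this study.
    """
    changes = [
        abs(curr - prev) / abs(prev)
        for prev, curr in zip(prev_round, curr_round)
        if prev != 0  # skip zero baselines to avoid division by zero
    ]
    # With no usable baseline there is nothing to compare, so report unstable.
    return bool(changes) and mean(changes) <= tolerance


# Persistent disagreement (10 vs. 30) but almost no movement between rounds.
stable_panel = is_stable([10, 20, 30], [10, 21, 30])
# Large individual revisions between rounds.
shifting_panel = is_stable([10, 20, 30], [20, 20, 15])
```

The first panel counts as stable even though its members still disagree widely, while the second does not, which matches the distinction between stability and agreement drawn above.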

3.2. Persona

The concept of Persona has its roots deeply embedded in the quest to gain a more nuanced understanding of target audiences within the marketing and design landscapes [25,26]. While interviewing real users is an evident approach, it often proves impractical due to time constraints, costs, and challenges in accessing a representative sample. Alternatively, creating fictitious users offers a viable solution. These fictitious users, called personas, are elaborated with a detailed description of users and their intentions. Such detailed personas are instrumental in devising targeted products and strategies, ensuring they meet the specific demands of diverse user segments, according to Lewrick [25].

On the other hand, advancements in AI, primarily through the development of LLMs, have significantly expanded the applicability of the Persona methodology [15,16,18]. The vast amounts of data these models can process and interpret enable the accurate emulation of specific individuals and broader groups with shared characteristics. This capability enhances our understanding of complex user behaviors and preferences, showcasing the potential of AI to transform research and practical applications across numerous fields. By leveraging the rich insights derived from extensive data, AI-generated persona creation promotes a more dynamic and nuanced approach to user segmentation and behavior prediction, overcoming some of the limitations of traditional methods.

4. Methodology

In this research, we explore an approach that leverages the capabilities of LLMs within a Delphi study to enhance the analysis and understanding of future trends. This methodology emulates diverse expert perspectives while transcending the barriers of time and geography typically associated with traditional expert consultations. By creating AI-generated personas, we can encapsulate and explore the insights and viewpoints of professionals from various knowledge areas, whether specific individuals or broader profiles, enabling a more dynamic and comprehensive exploration of future scenarios through the iterative Delphi process.

Therefore, we propose three models that utilize LLMs as central participants in a Delphi process: Iconic Minds Delphi, Persona Panel Delphi, and Digital Oracle Delphi, presented in Figure 1.

The Iconic Minds model (Figure 1, left) involves conducting a Delphi questionnaire in which personas of well-known researchers act as the experts. In this scenario, instead of directly consulting researchers, their views and knowledge are encapsulated in fictional personas created based on the knowledge that the LLM has about them. This approach enables a more scalable and flexible method for connecting with experts from diverse fields of knowledge.

The Persona Panel model (Figure 1, center) uses personas to represent experts in the Delphi questionnaire. In this scenario, a group of fictional personas is developed to represent various disciplines relevant to the study of the future of a specific topic. These personas reflect the typical characteristics and knowledge of experts in their respective fields. Each persona should have a detailed profile that includes demographic information, academic background, professional experience, areas of expertise, and methodological approach.

The Digital Oracle model (Figure 1, right) applies a methodology where the experts in the Delphi process are distinct LLMs. In this scenario, instead of relying on human experts, we propose using various LLMs like ChatGPT, Claude.ai, Google Gemini, and HuggingChat as participants to generate responses and insights based on their extensive knowledge and text-generation capabilities. Furthermore, there is the possibility of merging this model with the previous ones to obtain a more comprehensive and dynamic result.

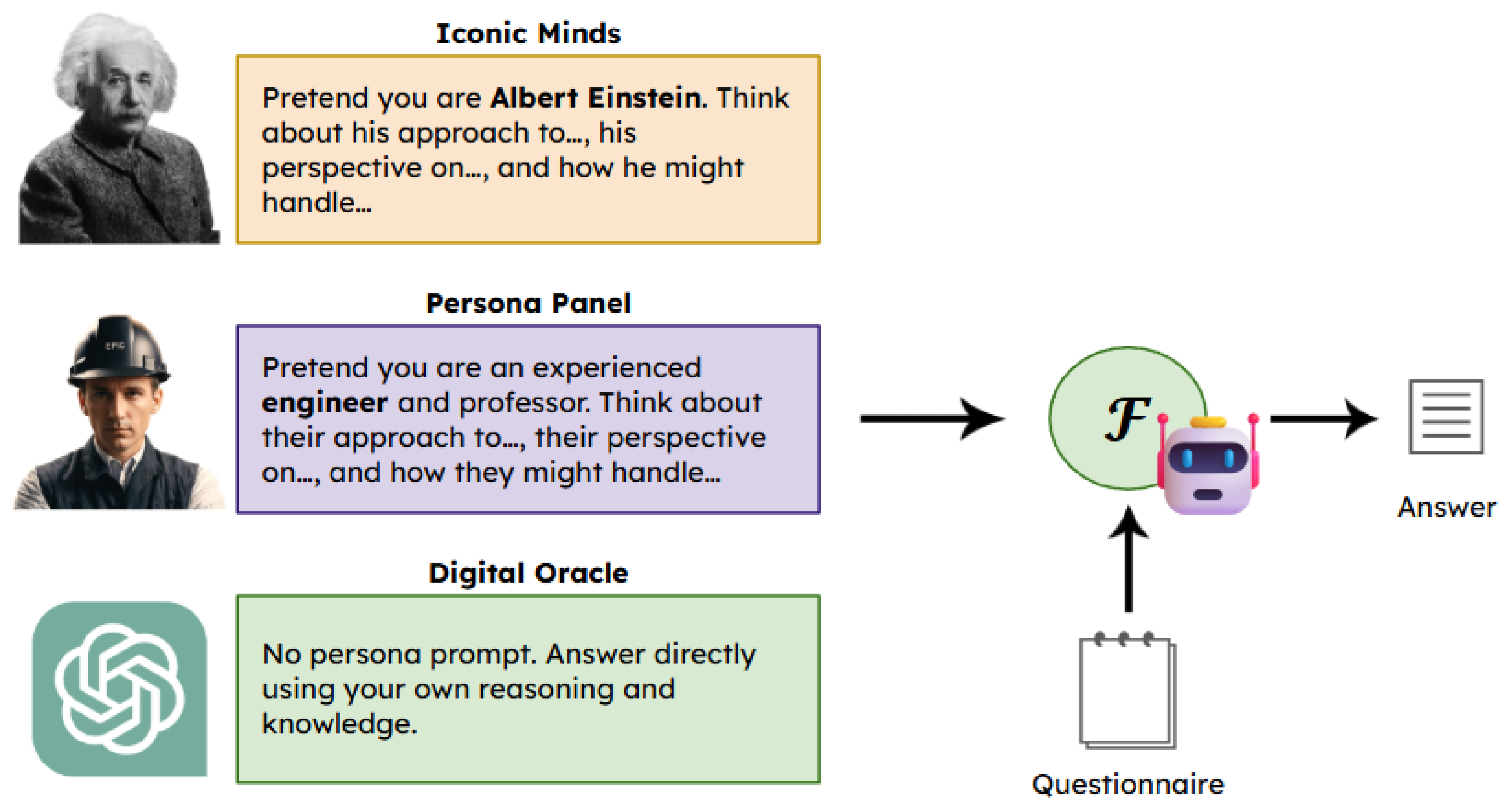

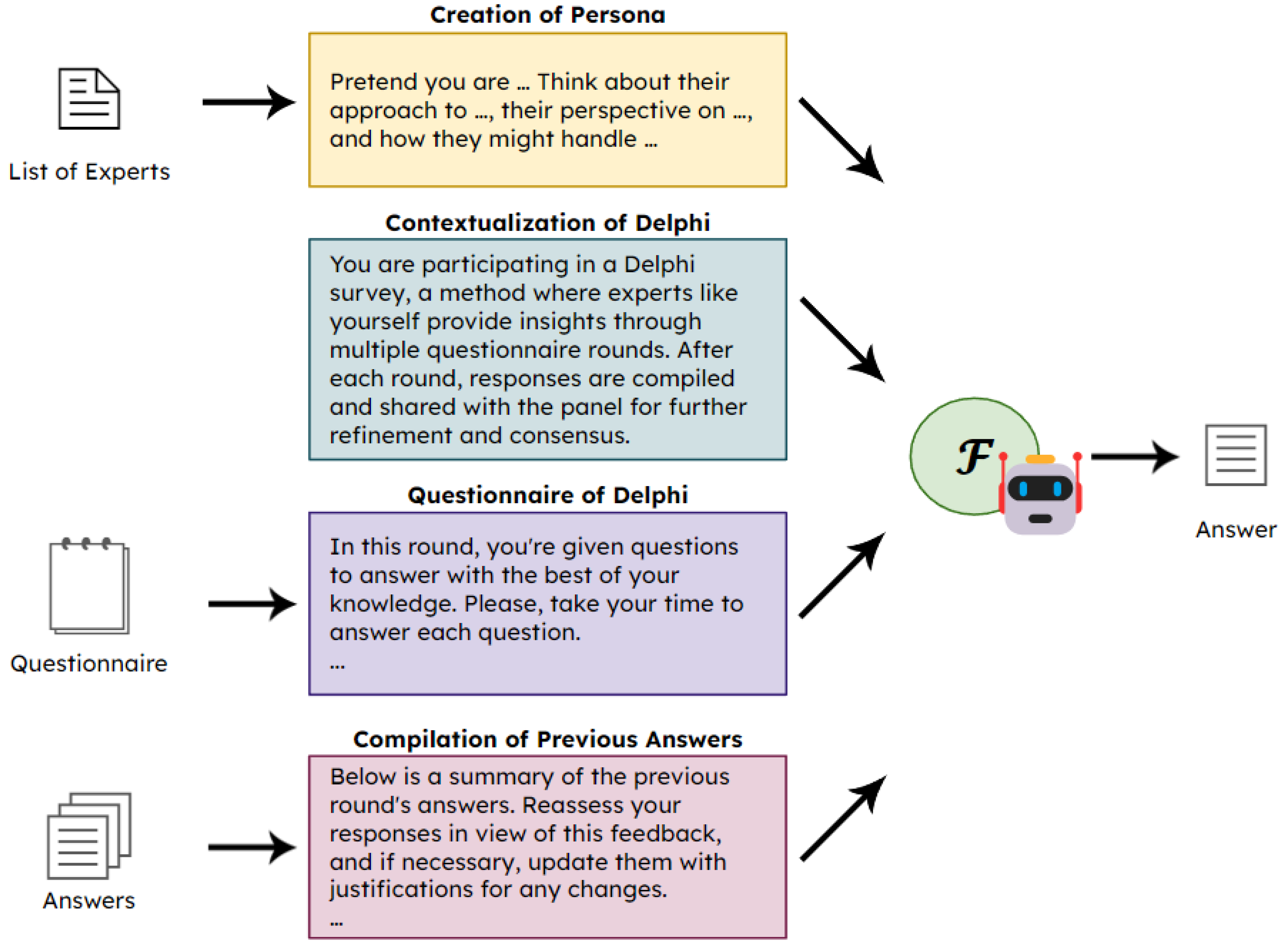

In all three AI Delphi models, expert simulation begins with a structured prompting process that defines the expert’s role, perspective, and reasoning scope. This prompt serves as an initialization layer for the LLM, guiding its interpretation of the questionnaire and formulation of responses. The level of persona conditioning varies across models—from emulating specific historical figures to adopting generic expert profiles or generating answers directly from the model’s intrinsic knowledge.

Figure 2 illustrates this process of expert creation, which forms the foundation of the three proposed approaches.
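As a rough illustration of this initialization layer, the sketch below assembles a system prompt from a role, a perspective, and a reasoning scope. The `PersonaSpec` fields, the `build_system_prompt` function, the prompt wording, and the example persona are all hypothetical; they do not reproduce the prompts used in this work.

```python
from dataclasses import dataclass


@dataclass
class PersonaSpec:
    """Hypothetical container for the three elements named in the text."""
    name: str          # a named thinker, a fictional profile, or "" for none
    role: str          # the expert's role
    perspective: str   # the viewpoint the persona should adopt
    scope: str         # the reasoning scope the answers should stay within


def build_system_prompt(spec: PersonaSpec) -> str:
    """Assemble an illustrative system prompt; the wording is an assumption."""
    identity = f"{spec.name}, {spec.role}" if spec.name else spec.role
    return (
        f"You are taking part in a Delphi study as {identity}. "
        f"Answer from this perspective: {spec.perspective}. "
        f"Keep your reasoning within this scope: {spec.scope}. "
        "Answer each question individually and justify any estimate you give."
    )


# Example in the style of a generic expert profile (fictional persona).
spec = PersonaSpec(
    name="Persona A",
    role="a labor economist",
    perspective="long-run effects of automation on employment",
    scope="the future of work between 2030 and 2060",
)
prompt = build_system_prompt(spec)
```

Leaving `name` empty yields a prompt with no named persona, and a named thinker or a fictional profile can be supplied in the same field, so one builder can cover different degrees of persona conditioning.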

The three AI Delphi models, each with distinct approaches, offer varying depths and scopes of expertise. The Iconic Minds model utilizes specific personas of renowned thinkers, providing specialized knowledge in focused areas. For instance, we can apply this model to renowned politicians, journalists, or philosophers. This contrasts with the Persona Panel, which employs generic personas representing a range of disciplines, allowing for broader insights. Finally, the Digital Oracle model diverges from human expert emulation, instead leveraging the capabilities of LLMs like ChatGPT and Google Gemini for a diverse and comprehensive analysis across multiple disciplines. This model’s reliance on AI-driven insights represents a significant shift from the more persona-focused approaches of the other two models.

Table 1 shows the comparison between these three models.

Even when the same language model generates all simulated experts, this approach does not entail a lack of diversity or independence among participants. Depending on the model configuration, diversity can emerge from multiple simulated personas, each with distinct disciplinary orientations, professional backgrounds, and reasoning patterns, or from the participation of different LLMs with varied architectures and training corpora. In both cases, the methodology enables the simulation of heterogeneous viewpoints and preserves the plurality typically observed in traditional Delphi panels.

While initially designed for the Delphi method, the AI Delphi models proposed in this work have broader applicability across expert participation methods. Their versatility enables application in various contexts where gathering and synthesizing expert opinions are crucial. Whether in brainstorming sessions, consensus-building meetings, or scenario planning exercises, these models can be effectively integrated, offering new dimensions of insight and analysis. This flexibility opens up possibilities for enhancing decision-making processes across diverse fields, leveraging each model’s unique strengths according to the specific needs of the situation.

In AI-based Delphi models, consensus is understood as the stabilization of expert opinions over successive rounds, rather than majority or full agreement. This reflects the Delphi method’s core principle: convergence toward a steady state of judgment. While the current model does not yet trace the influence of individual simulated agents, future enhancements may include mechanisms to monitor opinion shifts and identify key contributors to the consensus.

Although three different models that use LLMs as central participants in a Delphi process were presented in this work, only the Iconic Minds Delphi model, which uses personas of well-known researchers as experts, was implemented.

5. Experiments

5.1. Experiment 1—Future of Work

5.1.1. Problem

The intersection of technology, the economy, and societal values is reshaping professional engagement and labor markets in a rapidly evolving global landscape. Technological advancements, especially in artificial intelligence and automation, present opportunities and challenges, signaling a shift in the requirements and nature of work. These changes introduce new tools and fundamentally rethink work structures, skill requirements, and employment paradigms.

This evolution prompts a critical examination of what the future holds for the workforce. It raises questions about the balance between human and machine labor, the emergence of new job categories, and the potential obsolescence of traditional roles. The implications for workforce training, educational systems, and policy development are profound, as they need to adapt to a landscape where skills, jobs, and worker expectations are in constant flux.

Understanding these transitions is essential to navigating and shaping the future of work. It is not only about adapting to change but also about anticipating and influencing it to foster a future that balances technological advancements with human-centric employment and development. This understanding will guide the development of strategies and policies that maximize the benefits of these changes while mitigating potential disruptions, ensuring a resilient, adaptable workforce prepared for the challenges and opportunities ahead.

5.1.2. Method

In this experiment, we used the Iconic Minds Delphi model (Figure 1, left) and GPT-4 Turbo (gpt-4-1106-vision-preview) to emulate the participation of experts in the Delphi method. The primary goal of this experiment was to aggregate and refine perspectives on the “Future of Work” among the involved participants, which was carried out in two pre-defined rounds of discussions. This two-round design follows Delphi’s stability-based view of consensus. In this context, we replicated a Delphi questionnaire originally coordinated by the Millennium Project on Future Work/Technology 2050 [9]. However, we made a single adaptation in the questionnaire: we advanced the time frame from the original 2020–2050 period to 2030–2060 to reflect current perspectives and future projections.

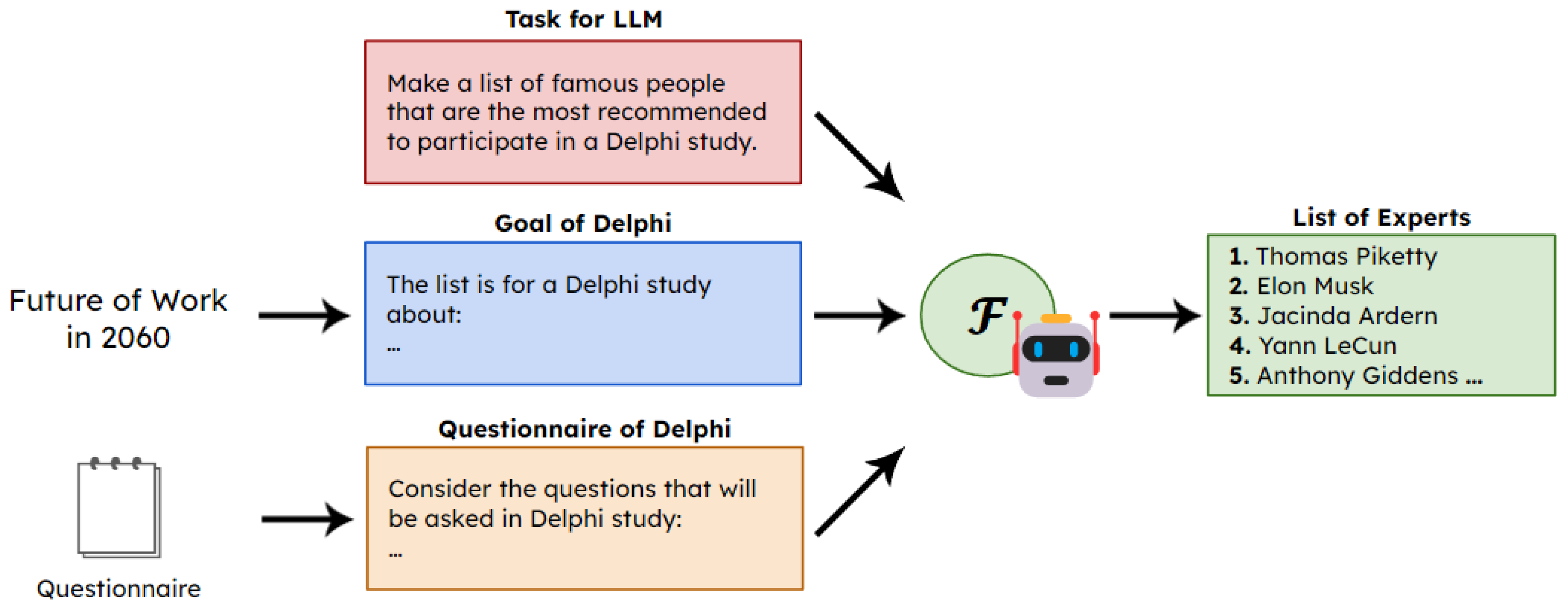

In the experiment, we first used the LLM to generate a list of potential experts to be used as personas in the Delphi, a process depicted in Figure 3. We provided the LLM with the study’s goals and questionnaire. Next, we carefully analyzed the proposed experts to select those whose expertise aligned with the study objectives and to ensure diverse perspectives. We then gathered the responses for each persona from the list of selected experts, as shown in Figure 4. This structured approach enhances the interaction process with the persona, enabling a degree of reproducibility. While LLMs may offer varying responses, they often follow recurring patterns specific to each persona. The complete set of prompts used throughout the experiment, including expert definition, persona creation, and Delphi execution, is provided in Appendix A for reference and reproducibility.

Therefore, the following questions were used in our work:

Question 1: If socio-political-economic systems stay the same around the world, and if technological acceleration, integration, and globalization continue, what percent of the world do you estimate could be unemployed—as we understand being employed today—during each of the following years: 2030; 2040; 2050; 2060?

Question 2: More jobs were created than replaced during the Industrial and Information Ages. However, many argue that the speed, integration, and globalization of technological changes over the next 35 years (by 2060) will cause massive structural unemployment. What are the technologies or factors that might make this true or false?

Question 3: What questions have to be resolved to answer whether AI and other future technologies create more jobs than they eliminate?

Question 4: How likely and effective could these actions be in creating new work and/or income to address technological unemployment by 2060?

Question 5: Will wealth from artificial intelligence and other advanced technologies continue to accumulate income to the very wealthy, increasing the income gaps?

Question 6: How necessary or important do you believe that some form of guaranteed income can be in place to end poverty, reduce inequality, and address technological unemployment?

Question 7: Do you expect that the cost of living will be reduced by 2060 due to future forms of AI, robotic and nanotech manufacturing, 3D/4D printing, future Internet services, and other future production and distribution systems?

Question 8: What big changes by 2060 could affect all this?

Question 9: What alternative scenario axes and themes should be written connecting today with 2060, describing cause-and-effect links and decisions that are important to consider today?

Additional Question: Other comments to improve this study?

The “iconic minds” to participate in this work were chosen with the help of ChatGPT: we prompted it to suggest names of renowned individuals who would be most recommended to participate in a Delphi study on the future of work in 2060. Specifically, ChatGPT was asked to list three names for each of the following knowledge areas: Economics, Entrepreneurship, Politics, Computer Science, Sociology, and Philosophy. The selection of experts from these diverse areas enabled a combination of global recognition and expertise in their respective fields. The final list of selected experts and their descriptions, as provided by ChatGPT, is presented in Table 2.

The analysis of the selected participants shows that 2 participants are from North America, 12 from Europe, 2 from the Asia-Pacific region, 1 from Oceania, and 1 from Africa. In terms of age, 3 participants are under 50, 7 are between 50 and 60, 3 are between 61 and 70, 2 are between 71 and 80, and 3 are over 80 years old. Regarding gender, there are twice as many male participants as female participants.

5.1.3. Results

Next, we explore the results of Experiment 1, presenting the average values of the quantitative responses and a summary of the participants’ comments. This analysis aims to highlight the consensus reached through the AI Delphi method and explore the range of perspectives and insights that emerged from the expert simulations.

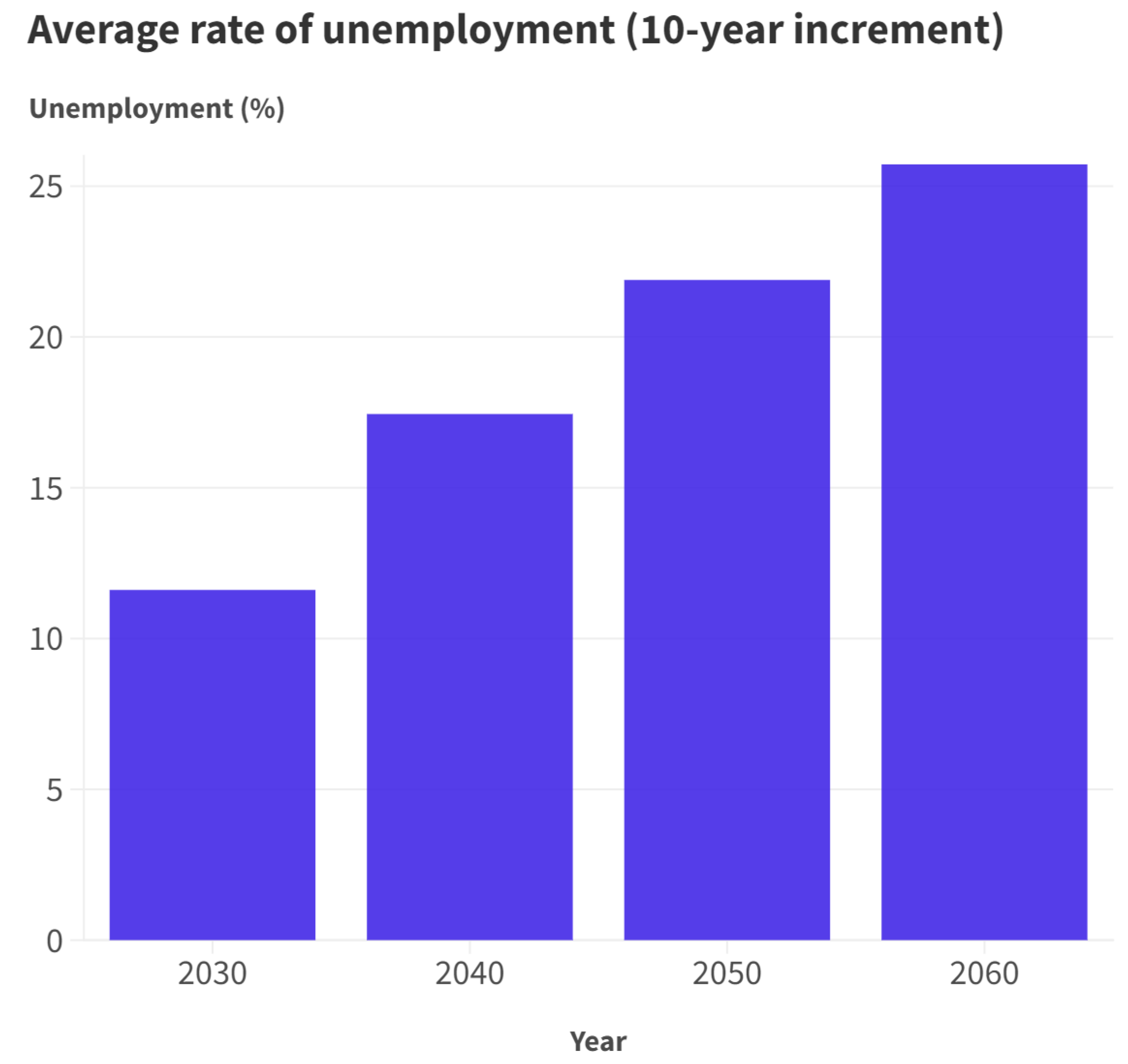

Question 1 focused on projecting global unemployment rates for 2030, 2040, 2050, and 2060. Figure 5 shows the averages of the 18 responses in 10-year intervals. These averages suggest that unemployment is perceived as a growing trend, given the ongoing technological advancements and unchanged socio-political and economic frameworks. The feedback highlighted the potential for technological innovations to create new employment sectors, the need for policy and educational reforms to mitigate job losses resulting from automation, and the crucial role of societal and economic adaptability in shaping future employment landscapes. Despite the positive outlook on job creation, there was a prevailing concern that technological advancements were outpacing the generation of new employment opportunities, highlighting the critical need for forward-thinking in workforce preparation.

By the Delphi stability criterion, in which consensus is indicated by the reduction of response variation between rounds rather than by majority voting, a consistent decline in the dispersion of results can be observed in Table 3. The standard deviation decreased across all time horizons: in 2030, from 2.3 to 1.9 (a 17.4% reduction); in 2040, from 3.7 to 2.9 (−21.6%); in 2050, from 4.7 to 3.6 (−23.4%); and in 2060, from 5.9 to 4.4 (−25.4%). On average, the standard deviation fell from 4.15 to 3.20 (an absolute decrease of 0.95; −22.9%). In simple terms, after the feedback provided in the second round, the responses became closer to one another, a clear indication of greater consensus within the panel.
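The percentage reductions quoted above follow directly from the reported standard deviations, as the short check below shows. Only the numbers stated in the text are used; the names `reported_sd` and `pct_reduction` are introduced here for illustration.

```python
from statistics import mean

# Standard deviations per horizon as reported in the text: (Round 1, Round 2).
reported_sd = {
    2030: (2.3, 1.9),
    2040: (3.7, 2.9),
    2050: (4.7, 3.6),
    2060: (5.9, 4.4),
}


def pct_reduction(before: float, after: float) -> float:
    """Relative decrease from `before` to `after`, in percent."""
    return (before - after) / before * 100


# Per-horizon reduction, rounded to one decimal as in the text.
reductions = {
    year: round(pct_reduction(r1, r2), 1)
    for year, (r1, r2) in reported_sd.items()
}

# Average dispersion per round and the overall relative reduction.
mean_r1 = mean(r1 for r1, _ in reported_sd.values())
mean_r2 = mean(r2 for _, r2 in reported_sd.values())
overall = round(pct_reduction(mean_r1, mean_r2), 1)

print(reductions)                                   # per-horizon reductions (%)
print(round(mean_r1, 2), round(mean_r2, 2), overall)
```

The relative reduction grows with the time horizon, from 17.4% for 2030 to 25.4% for 2060, so the feedback round narrowed the spread most where the initial dispersion was largest.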

This convergence is also evident in the coefficient of variation (CV), which relates dispersion to the mean. In Round 2, the CV remained within a narrow range of approximately 16–17% (2030: 16.4%; 2040: 16.7%; 2050: 16.4%; 2060: 17.1%). In Round 1, by contrast, not only were the values higher, but the CV increased as the time horizon extended (from about 18.9% in 2030 to 25.4% in 2060). In other words, the more distant the year, the greater the initial uncertainty; after the inter-round discussion, this uncertainty decreased and became more homogeneous among participants.
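For reference, the coefficient of variation used here can be written as follows, where the symbols are notation introduced for this note: $s_t^{(r)}$ is the standard deviation and $\bar{x}_t^{(r)}$ the mean of the panel's responses for horizon $t$ in round $r$.

```latex
\mathrm{CV}_t^{(r)} = \frac{s_t^{(r)}}{\bar{x}_t^{(r)}} \times 100\%
```

Rearranging gives $\bar{x}_t^{(r)} = s_t^{(r)} / \mathrm{CV}_t^{(r)}$. As a rough consistency check, the Round 2 values reported for 2030 (a standard deviation of 1.9 and a CV of 16.4%) would imply a mean estimate of roughly $1.9 / 0.164 \approx 11.6$, subject to rounding in the reported figures.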

It is worth noting that some individual reestimations were substantial for longer horizons (for example, some participants adjusted their 2050–2060 projections upward). Still, the aggregate effect was a compression of dispersion: even with individual changes, the group as a whole became more aligned. This is consistent with the notion of consensus in Delphi studies, which refers to the stabilization of responses across successive rounds.

Following the initial exploration, Question 2 raises the discussion on whether the rapid evolution of technology could lead to widespread structural unemployment by 2060. Experts universally recognize the dual impact of technological advancements, highlighting both the risks of job displacement due to AI, robotics, and automation, as well as the potential for creating new job opportunities. This question sparked a multifaceted conversation on the need for significant educational reforms and policy interventions to adapt to and mitigate the potential negative impacts of these technological shifts, highlighting a collective optimism for innovation-driven growth alongside caution regarding the challenges of globalization and digital monopolies.

Then, Question 3 probes into the unresolved inquiries essential for determining if AI and other futuristic technologies will create more jobs than they eliminate. This query surfaces a consensus on the need for clarity regarding the emergence of new job types, the adaptability of educational and training frameworks to future job markets, and the role of policy and economic frameworks in facilitating technological transitions. Experts also emphasize the importance of investing in new sectors, ensuring the equitable distribution of technology’s benefits, advocating for a multidisciplinary and multi-stakeholder approach, and highlighting the enduring value of human creativity and ethical judgment in the evolving employment landscape.

In Question 4, the discussion focuses on assessing the likelihood and effectiveness of various actions in generating new employment and income to mitigate technological unemployment by 2060.

Table 4 presents the average rating of the actions proposed by Glenn and Florescu [9] using the following scale: 5 (Solves the Problem), 4 (Very Effective), 3 (Effective), 2 (Little Impact), and 1 (Makes It Worse). The consensus highlights the potential of retraining programs, the integration of Science, Technology, Engineering, and Mathematics (STEM) education, and coding education, as well as the strategic emphasis on enhancing national and individual intelligence as key measures. Moreover, incentives for advanced, skilled jobs, national innovation programs, and the concept of a basic guaranteed income are debated for their effectiveness in navigating the challenges posed by automation and AI. This collective insight emphasizes the need for a multifaceted approach, combining education reform, policy innovation, and economic incentives to foster job creation and promote equitable growth in the face of rapid technological advancements.

On the other hand, Question 5 explores the critical issue of wealth distribution in the era of AI and advanced technologies. The unanimous feedback from participants underscores a growing concern over the exacerbation of income disparities, with technological progress primarily benefiting the already wealthy. This consensus underscores the pressing need for comprehensive policy interventions, including progressive taxation, antitrust measures, and wealth redistribution mechanisms, to ensure that the benefits of technological advancements contribute to a more equitable society. Moreover, the emphasis on equitable access to technology and the role of corporate social responsibility suggest that a multifaceted approach is vital for addressing these disparities, indicating a need for a reevaluation of current economic models to prioritize equitable growth and wealth distribution.

Still discussing income distribution, Question 6 addresses the significance of implementing a guaranteed income to combat poverty, inequality, and the effects of technological unemployment. The consensus underscores a shared acknowledgment of the societal challenges posed by technological advancements, viewing guaranteed income as an essential response. Its potential for promoting economic stability and social cohesion is highlighted, with a sense of urgency and importance placed on exploring and instituting such measures. This collective perspective emphasizes guaranteed income not just as a remedy but as part of a holistic strategy for fostering inclusive growth, reflecting a strong inclination towards its serious consideration and implementation to navigate future societal shifts.

Question 7 focuses on the anticipated impact of AI, robotics, nanotechnology, and 3D/4D printing on the cost of living by 2060. Participants share optimism that these technological advancements could significantly reduce the cost of living through enhanced efficiencies in production and distribution. However, this positive outlook is balanced with a strong emphasis on the necessity for comprehensive policy frameworks to ensure these benefits are widely distributed and accessible, highlighting the importance of policy in bridging technological potential with equitable societal gains.

Building on the discussion, Question 8 examines the significant changes by 2060 that could impact these dynamics. The responses suggest a convergence of transformative shifts across technology, society, economy, and the environment, emphasizing the critical roles of sustainable development, global cooperation, and adaptability. Key themes include the transition to green technologies, the importance of healthcare innovations, and the potential of space exploration, all underpinned by the need for anticipatory governance and ethical foresight to navigate future challenges and opportunities.

Building on insights from earlier discussions, Question 9 aims to identify crucial scenario axes and themes that bridge the present with 2060, focusing on cause-and-effect relationships and the pivotal decisions of today. Consensus emphasizes the transformative role of technology, the imperative of environmental sustainability, the evolution of global governance, labor market restructuring, the reshaping of education for lifelong learning, and the ethical deployment of AI. These areas highlight the interconnectedness of decisions across sectors, underscoring the urgency for comprehensive policy development, ethical considerations, and the adaptability of education systems to ensure a future where technological progress supports human and environmental well-being.

Finally, the additional question invites reflection on how to enhance the study, yielding insights that advocate for a multidisciplinary, inclusive approach, emphasizing the need for adaptability, resilience, and extensive stakeholder engagement. The feedback highlights the importance of global and ethical considerations in the societal impacts of technology, the crucial role of policy and institutional frameworks, and the necessity of preparing for unforeseen events. This collective input calls for collaborative, forward-thinking strategies that are flexible enough to adapt to rapid changes, ensuring that technological advancements contribute positively to an equitable and sustainable future.

5.1.4. Comparison Between Human-Based and AI-Based Delphi Studies

Considering that the questionnaire was adapted from the original Delphi study conducted by the Millennium Project on Future Work/Technology 2050, it is possible to compare the results obtained by the AI Delphi with those generated by human experts. Although the two studies differ in temporal scope—the original focusing on the 2020 to 2050 horizon and the AI Delphi on 2030 to 2060—their methodological foundations and thematic structures are comparable.

Both studies identify a likely increase in technological unemployment and the intensification of income inequality as major structural challenges in the coming decades. A shared expectation emerges regarding the central role of AI and automation in reshaping the labor market, the growing importance of guaranteed income mechanisms, and the potential for advanced technologies to reduce living costs, provided that equitable access is ensured.

Despite these convergences, notable differences emerge in tone, focus, and reasoning patterns. The AI Delphi responses tend to exhibit greater internal coherence and long-term consistency, often emphasizing ethical governance, inclusive planning, and institutional resilience as key mitigation strategies. In contrast, the human-based Delphi results reveal a broader range of uncertainty and skepticism, with participants frequently expressing concerns about political inertia, uneven technological adoption, and the risk of systemic collapse. These divergences suggest that, while human experts bring contextual sensitivity and social awareness, AI-generated personas offer a more structured and policy-oriented view of the future.

To make the comparison more objective, we also analyzed the quantitative results from both studies. For Question 1, the unemployment projections from the AI Delphi followed a similar growth pattern to those from the human-based Delphi between 2030 and 2050, the period common to both studies. The AI Delphi estimated an increase from around 12% to 22%, while the human study projected growth from 16% to 24%. To verify the similarity of these trends, a correlation analysis was conducted between the two datasets, yielding a coefficient of 0.98, which indicates a very strong positive relationship. In addition, the average annual growth rates were nearly identical, confirming that both panels identified the same long-term trajectory, with only minor differences in scale.

For Question 4, which asked participants to rate the likelihood and effectiveness of different actions to reduce technological unemployment, the AI Delphi gave slightly higher ratings on average (3.60 for likelihood and 3.57 for effectiveness) than the human-based Delphi (2.79 and 3.17, respectively), indicating a somewhat more optimistic view from the AI-generated personas. It is worth noting that the time horizons of the two studies were not identical, which may have influenced the differences in expectations. To assess the degree of alignment between the two panels, a Spearman rank-order correlation was calculated, yielding a coefficient of approximately 0.75. This strong positive correlation shows that both groups prioritized the actions in a very similar order.
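For readers who wish to reproduce this type of comparison, the sketch below shows one way to compute the Pearson coefficient (used for the trend comparison) and the Spearman rank-order coefficient (used for the action rankings) with the Python standard library only. The input lists are hypothetical toy values chosen for illustration; they are not the ratings from either study, and the function names are our own.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def average_ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank-order correlation: Pearson applied to the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical mean ratings of four actions by two panels (NOT study data).
toy_panel_a = [3.9, 3.1, 2.5, 3.6]
toy_panel_b = [3.5, 3.2, 2.0, 3.0]
print(round(spearman(toy_panel_a, toy_panel_b), 2))
```

Spearman's coefficient depends only on the ordering of the actions, so it remains informative even when one panel rates systematically higher than the other, as is the case here.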

From a methodological perspective, these results reinforce that AI-based Delphi processes can serve as a complementary instrument rather than a replacement for traditional expert panels. The ability of the AI Delphi to replicate key foresight trends and reasoning patterns observed among human experts suggests that it can effectively support large-scale, time-efficient consultations, especially when access to diverse experts is limited. At the same time, the observed tendency toward analytical optimism underscores the need for hybrid approaches that combine human judgment with AI-generated insights to strike a balance between depth, nuance, and interpretive rigor in foresight research.

5.2. Experiment 2—Future of the Higher Education in Brazil

5.2.1. Problem

The Higher Education landscape in Brazil is undergoing significant transformations driven by technological advancements, shifting societal values, and evolving economic conditions. These changes herald a future where traditional educational models may no longer be sufficient, and new paradigms will likely emerge. Technologies such as artificial intelligence, virtual reality, and online learning platforms are poised to transform the way education is delivered and consumed. These technological integrations promise personalized learning experiences, potentially leading to more effective and engaging education systems.

However, this evolution is not without its challenges. There is a growing concern about the digital divide and its impact on educational equality. As technology becomes more integrated into the educational system, ensuring equal access for all students becomes paramount. Additionally, educators must be prepared for this new landscape, equipping themselves with the necessary skills and tools to utilize technology effectively in teaching.

Understanding and anticipating these changes is crucial for policymakers, educators, and students. Studying the future of education enables proactive measures, ensuring that the education system evolves in a manner that benefits society as a whole. It is about adapting to changes and shaping them to create a more inclusive, effective, and forward-looking educational landscape.

5.2.2. Method

We adopted the Iconic Minds Delphi model (Figure 1, left) and GPT-4 Turbo (gpt-4-1106-vision-preview) in this experiment. Our goal was to use the Delphi process to refine and modify the initial scenarios for the “Future of the Higher Education in Brazil” and to create new ones, aiming for a comprehensive understanding of future trends in this field. The process was carried out in two pre-defined rounds of discussion among the involved experts. This two-round design follows Delphi’s stability-based view of consensus.

Initially, we performed several steps using a Foresight Supporting System [27] and a group of 13 researchers, starting with a bibliographic analysis, followed by brainstorming, futures wheel, roadmapping, and scenario development. This research methodology has been applied in studies of varied subjects. The first phase of the study concluded with the development of three educational scenarios. In the study’s second phase, we utilized the Iconic Minds Delphi method to refine and develop new scenarios. All prompts applied in this second experiment, covering expert selection, persona setup, and the iterative Delphi rounds, are fully documented in Appendix A to ensure methodological transparency and reproducibility.

These three distinct scenarios guide our research on the future of higher education in Brazil. Each reflects a possible educational trajectory, taking into account socioeconomic, technological, and political factors. We present a short description for each scenario:

Scenario 1—Technology and Empowerment: Advancing the Quality of Universities: This scenario for 2040 predicts a significant evolution in Brazilian universities, characterized by the widespread adoption of technology coupled with robust professor preparation. This optimistic scenario suggests considerable improvements in the quality of universities.

Scenario 2—Widespread Use of Technology, Deteriorating Teaching Quality with Ill-Prepared Professors: In this scenario, technology is widely adopted in universities, but paradoxically leads to the deterioration of teaching quality. Professors are relegated to secondary roles, serving more as monitors, while education is increasingly dominated by robots, particularly in the private sector, where profitability often takes precedence over educational quality.

Scenario 3—Slow Adoption of Technology due to Government Regulation, Lack of Interest from Educational Institutions, or Professors’ Lack of Preparation: This scenario describes a slow progression in the adoption of educational technologies by university institutions in Brazil, influenced by governmental regulations, lack of institutional interest, and inadequate professor preparation. Technological adoption is hindered by economic interests that prioritize low costs and high profits over teaching quality.

Following the presentation of the initial prospective scenarios, we applied the Delphi method, aiming to collect detailed feedback from the experts. This questionnaire, consisting of five standardized questions, was carefully crafted to address each of the three scenarios. These questions cover aspects such as the likelihood of the scenarios occurring, the desirability of these outcomes, additional events that could influence them, areas of potential disagreement among the experts, and general observations. Additionally, an extra question was included at the end of the process to deepen the analysis. This structure enabled a detailed and multifaceted evaluation of each scenario, providing valuable insights into the different perspectives of the involved experts. The specific questions of the questionnaire are as follows:

Question 1: Considering the scenario, how likely is it to occur in Brazil? Please choose from the options: Very Improbable, Improbable, Neutral, Probable, or Very Probable. If possible, justify your answer.

Question 2: Considering the above scenario, how desirable do you find it? Please choose from the options: Very Undesirable, Undesirable, Neutral, Desirable, or Very Desirable. If possible, justify your answer.

Question 3: What other events do you think might occur in the scenario?

Question 4: Do you disagree with any event within the scenario? If possible, justify your answer.

Question 5: Do you have any observations to make about the scenario?

Additional Question: Would you like to leave any comments or suggestions?

This questionnaire includes both closed-ended and open-ended questions, allowing for a structured quantitative assessment while also capturing qualitative insights. Although Zheng et al. [28] have identified selection biases in LLMs when dealing with multiple-choice questions, particularly related to positional and token ID preferences, such biases are unlikely to apply in our setup. Unlike traditional multiple-choice formats that rely on labeled options (e.g., A/B/C/D), our closed-ended questions employ semantically meaningful and balanced natural language choices (e.g., “Very Improbable” to “Very Probable”) without positional labels. This design minimizes the risk of token-level bias influencing the responses.
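To illustrate how such natural-language options can be converted into the five-point scores used in the quantitative analysis (5 for “Very Probable” down to 1 for “Very Improbable”), a minimal Python sketch follows. The function names are hypothetical, and the parser assumes that each response begins with the chosen option before any justification; this is an assumption of the sketch, not a documented property of the prompts in Appendix A.

```python
# Five-point likelihood scale: option text mapped to its numeric score.
LIKELIHOOD_SCALE = {
    "Very Improbable": 1,
    "Improbable": 2,
    "Neutral": 3,
    "Probable": 4,
    "Very Probable": 5,
}


def build_likelihood_question():
    """Compose Question 1 with unlabeled, natural-language options."""
    labels = list(LIKELIHOOD_SCALE)
    options = ", ".join(labels[:-1]) + ", or " + labels[-1]
    return (
        "Considering the scenario, how likely is it to occur in Brazil? "
        f"Please choose from the options: {options}. "
        "If possible, justify your answer."
    )


def score_response(text):
    """Map a response to its score, or None if no option leads the text.

    Assumes the chosen option appears at the start of the response.
    Matching on the start avoids confusing 'Improbable' with 'Probable',
    since no option is a prefix of another.
    """
    cleaned = text.strip().lower()
    for label, score in LIKELIHOOD_SCALE.items():
        if cleaned.startswith(label.lower()):
            return score
    return None


print(build_likelihood_question())
print(score_response("Improbable. Regulation tends to move slowly."))
```

Responses that return `None` would need manual review rather than automatic scoring.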

In the development of our study, we selected 15 participants following a hybrid approach. The GPT model automatically selected some experts based on their relevance to the theme and the proposed questionnaire. The remaining participants were chosen directly by the paper’s authors, focusing on experts that the GPT model recognizes as such. This hybrid process combined the efficiency of automated selection with the authors’ thoughtful consideration to ensure comprehensive and relevant representation in the expert group. A brief bio of each expert, generated by ChatGPT, is presented in Table 5, highlighting their primary areas of expertise and notable contributions.

The analysis of the selected participants reveals that, among the 15 experts, 12 are affiliated with Brazilian public universities, such as Unifesp, UFPE, USP, and UFRJ, and 3 with private or international institutions, including Fundação Getulio Vargas (FGV), Pontifícia Universidade Católica (PUC), Duke, Harvard, and the University of Geneva. In terms of age, 3 participants are under 60 years old, 4 are between 61 and 70 years old, 7 are between 71 and 80, and 1 is over 80. Regarding gender, there were twice as many male participants as female participants.

5.2.3. Results

Here, we discuss the outcomes obtained from the Delphi questionnaire, using AI Delphi to simulate expert participation, and emphasize the agreements and discrepancies noted in their analyses. Moreover, we present the enhanced scenarios and introduce new scenarios that emerged during this process. The persona emulation of Nelson Maculan Filho was validated by Maculan Filho himself, who reviewed the responses attributed to him to assess the relevance and accuracy of the conclusions. Although more experts need to be involved for a more comprehensive validation of the model, these results are crucial for assessing the efficiency of AI Delphi in emulating experts and supporting decision-making.

In the first scenario, “Technology and Empowerment: Advancing the Quality of Universities”, the personas rated the probability of realization as “Probable”, as shown in Table 6, revealing an optimistic perspective about the potential of technology and training in improving universities. Despite the predominantly “Neutral” view on the desirability of this scenario, as represented by Table 7, discussions emerged about the need for innovation and adaptation in educational systems, focusing on collaboration and inclusion. Notable were the disagreements that emphasized the importance of a balanced and inclusive approach, highlighting the necessity of addressing inequalities and upholding humanistic values in education.

In Scenario 2, “Widespread Use of Technology, Deteriorating Teaching Quality with Ill-Prepared Professors for Technology Adoption”, a consensus was reached on its probability, classified as “Probable”, as demonstrated by Table 6. However, the view on the desirability of this scenario was predominantly “Very Undesirable”, as observable in Table 7. The experts reflected on the complexities and challenges associated with the widespread implementation of technology in universities, highlighting critical issues such as mental health, ethics, and the need for balanced educational policies. Despite concerns, there was a consensus that enhancing teaching quality with appropriate educational policies and effective professor training is possible. This scenario underscores the importance of careful planning and institutional support in integrating technology with effective pedagogical methods, suggesting a balanced approach that harmonizes technological innovation with pedagogical, equitable, and inclusive considerations.

Finally, in Scenario 3, “Slow Adoption of Technology due to Government Regulation, Lack of Interest from Educational Institutions, or Professors’ Lack of Preparation for New Technologies”, the experts fluctuated between considering it “Probable” or “Neutral”, as illustrated by Table 6, with a general tendency towards the view “Undesirable”, as shown by Table 7. This scenario highlighted the need for innovative and adaptive solutions in the face of challenges posed by slow technological integration. Despite concerns, a consensus emerged that teaching quality can be maintained or improved with effective pedagogical methods, curricular reforms, adequate professor training, and a student-focused educational approach. The analysis highlights the importance of carefully integrating new technologies, striking a balance between innovation and educational quality, and considering the preparation and adaptation of professors to current challenges in higher education institutions.

Table 8 reveals a contrast between Scenarios 1 and 2, and Scenario 3. A five-point scale was used in this study (from 5 for “Very Probable” to 1 for “Very Improbable”). For Scenario 1 and Scenario 2, the panel begins and ends with perfect agreement at 4 (Probable) for every participant in both rounds. Hence, the mean remains 4.0 (Probable) and the standard deviation is 0.0 throughout. Because dispersion is already zero, the second round feedback has nothing to reconcile, and the consensus is “locked in” from the start.

By contrast, Scenario 3 exhibits the typical Delphi pattern of post-feedback convergence. The mean probability rises from 3.1 (just above Neutral) to 3.5 (between Neutral and Probable), indicating a shift toward greater perceived likelihood after feedback. Simultaneously, the standard deviation falls from 0.7 to 0.5, a 28.6% reduction that signals tighter agreement. The coefficient of variation declines from roughly 22.6% to 14.3% (a relative decrease of approximately 37%). All Round-1 ratings of 2 (Improbable) move up to 3 (Neutral), and the count of 4 (Probable) increases from 5 to 7 (from 33% to 47% of the panel), with no downward moves. Therefore, Scenarios 1 and 2 remain unchanged because they started at full consensus at “Probable”, whereas Scenario 3 shows a reduction in dispersion, which is evidence of increased consensus in the second round.
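The consensus indicators discussed for the three scenarios can be recomputed from the summary values quoted in the text. The sketch below is illustrative only: it uses the rounded means and standard deviations reported above rather than the individual ratings, and the helper name `coefficient_of_variation` is our own.

```python
import statistics

# Scenarios 1 and 2: all 15 personas rated 4 (Probable) in both rounds,
# so the mean is 4 and the dispersion is exactly zero.
unanimous = [4] * 15
print(statistics.mean(unanimous), statistics.pstdev(unanimous))


def coefficient_of_variation(mean, sd):
    """Dispersion relative to the mean, in percent."""
    return sd / mean * 100


# Scenario 3: reported mean and standard deviation in each round.
cv_r1 = coefficient_of_variation(mean=3.1, sd=0.7)
cv_r2 = coefficient_of_variation(mean=3.5, sd=0.5)

# Relative changes between Round 1 and Round 2, in percent.
sd_drop = (0.7 - 0.5) / 0.7 * 100
cv_change = (cv_r2 - cv_r1) / cv_r1 * 100

# Share of the 15-member panel answering "Probable" in each round.
share_r1 = 5 / 15 * 100
share_r2 = 7 / 15 * 100

print(round(cv_r1, 1), round(cv_r2, 1))
print(round(sd_drop, 1), round(cv_change))
print(round(share_r1), round(share_r2))
```

A coefficient of variation is undefined when the mean is zero, which cannot occur on a scale that starts at 1.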

Following the detailed analysis of the AI Delphi results, we utilized the collected insights to refine and improve these scenarios. This enhancement process was based on the agreements and discrepancies identified, focusing on areas that demanded greater attention and innovation. For example, in Scenario 1, adjustments were made to incorporate elements such as personalized education facilitated by AI. The redefinition of the professor’s role expanded the discussion about adapting universities to technological demands, highlighting the importance of inclusion and equity. In Scenario 2, balancing technology and educational quality made the narrative more realistic, emphasizing ethical policies and mental health. Finally, in Scenario 3, which emphasizes a holistic approach to technological adoption, including professor preparation, a more practical view of contemporary educational challenges was presented.

Moving forward in analyzing the results, we highlight the new scenarios developed based on the insights from the Delphi method. These scenarios reflect an evolution of the initial perspectives, offering new visions of the future of universities in Brazil. Next, we detail each new scenario.

Scenario 4—Transformed Education: A New Paradigm in Brazilian Universities: This scenario predicts a revolution in Brazilian universities, with profound technological and pedagogical transformations driven by socioeconomic changes and emerging global demands. It examines how these changes redefine the roles of educational institutions, educators, and students, establishing a new educational paradigm centered on innovation, inclusion, and sustainability.

Scenario 5—Technological Balance and Humanistic Development in Brazilian Universities: This scenario portrays Brazilian universities achieving an ideal balance between technology and traditional teaching, using digital technology and artificial intelligence to enrich learning without replacing human interaction. With a strong emphasis on the quality and integrity of teaching, this scenario reflects a revolution in professor training and a greater appreciation of education’s role in society, promoting equity in access to universities.

Scenario 6: Innovative and Connected Universities: This scenario is marked by the synergy between technological and pedagogical innovation. It is characterized by the strategic adoption of emerging technologies, such as artificial intelligence and virtual reality, integrated into curricula. The scenario emphasizes personalized teaching, flexible curricula, and a student-centered learning model. The evolution of the professor’s role from a traditional instructor to a facilitator, along with continuous professional development, is highlighted, as well as the expansion of alternative education and its integration with traditional teaching methods. Significant efforts are made to overcome financial challenges, reduce disparities, and align the curriculum with the needs of the labor market.

Nelson Maculan Filho played a crucial role in validating the results of the AI Delphi, conducting a meticulous assessment of his emulated persona. During this process, each response generated by the model was individually reviewed and compared with how he would personally answer the same question. The evaluations were categorized as fully consistent, partially consistent, or divergent, based on the degree to which the simulated answer reflected his own perspective and reasoning. This evaluation resulted in a high agreement rate of approximately 85%, underscoring the effectiveness of the AI Delphi in capturing his thoughts and judgments. Although some responses diverged in intensity, the vast majority aligned with his views. This agreement supports the validity of using AI Delphi to emulate experts, but further evaluation with additional simulated experts is necessary to strengthen the model’s validation and ensure its broader applicability.
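An agreement rate of this kind can be derived from the three review categories in several ways, depending on how partially consistent responses are counted. The sketch below makes that choice an explicit parameter. It is a hypothetical illustration: the text does not state how partial consistency was weighted, and the example labels are toy values, not the actual review outcomes.

```python
from collections import Counter

CATEGORIES = ("fully consistent", "partially consistent", "divergent")


def agreement_rate(labels, partial_weight=1.0):
    """Percentage of reviewed responses counted as agreeing.

    `partial_weight` is an assumption of this sketch: 1.0 counts a
    partially consistent response as full agreement, 0.0 ignores it.
    """
    counts = Counter(labels)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unexpected categories: {sorted(unknown)}")
    agreeing = (
        counts["fully consistent"]
        + partial_weight * counts["partially consistent"]
    )
    return agreeing * 100 / len(labels)


# Toy review outcomes for five responses (NOT the study's data).
toy_labels = [
    "fully consistent",
    "fully consistent",
    "fully consistent",
    "partially consistent",
    "divergent",
]
print(agreement_rate(toy_labels))
print(agreement_rate(toy_labels, partial_weight=0.5))
```

Reporting the weighting alongside the resulting percentage makes the agreement figure easier to compare across validation exercises.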

In addition to this validation, a comparative analysis was carried out between the AI Delphi responses and authentic opinion texts, interviews, and public statements from the same experts whose personas were emulated [29]. The results indicated a strong semantic correspondence, showing that the AI-generated responses reproduced the main ideas and terminology typically used by the real experts. This consistency reinforces the reliability of the simulated personas in reflecting genuine expert perspectives, even though further studies involving broader comparisons are still needed to consolidate the model’s validation.

6. Discussion

The ability of LLMs to make predictions related to the future of work and education, as demonstrated in our results, aligns with the research of Van Belkom [10], which examines the use of AI in investigating its future, addressing distinct scenarios of exploration, prediction, and future creation. Our findings suggest that LLMs have potential as a tool in Future Studies.

Regarding the future of education, our results demonstrate that advancements in AI and the creation of new technologies have significant direct and indirect impacts. This finding aligns with the investigations of Eloundou et al. [4] and Eisfeldt et al. [5], which focused on the U.S. job market, and extends the discussion initiated by Chen et al. [6] and Gmyrek et al. [7] regarding the global impact.

In our exploration of the future of education through the lens of technological advancements, the use of scenario-based planning aligns with the methodologies proposed by Ahmad [11] and Hammershøj [12], serving as a foundational approach to navigating and preparing for the technological shifts within educational frameworks. Additionally, our results echo the critical need for systemic reform in the educational sector, as also discussed by Kromydas [13] and Raich et al. [8], highlighting the imperative for educational models to evolve.

Although the alignment between the LLM-generated responses and the existing literature is noteworthy, it is also, to a certain extent, expected. LLMs are trained on massive corpora that encompass a wide range of textual sources, including academic papers, reports, expert opinions, and other relevant documents. Therefore, their ability to produce outputs that align with current academic discourse reflects the nature and composition of their training data.

Within the realm of the reasoning capabilities of LLMs, our results demonstrated how ChatGPT efficiently participated in the Delphi process, providing valuable insights and qualitative analyses. This aligns with the studies of Wang et al. [14] and Liang et al. [15], which explored the reasoning capabilities of LLMs in different frameworks. While our approach and methodology differ, the positive performance of the LLM in our research suggests the versatility of these models in adapting and providing insights across different research paradigms.

Concerning methodologies centered on the complex reasoning capabilities provided by LLMs, this work integrated personas to obtain different responses in the Delphi questionnaire. This approach finds parallels in the work of Wang et al. [18], where the implementation of multiple personas was crucial in transforming a single LLM into a cognitive synergist, thereby expanding its reasoning capabilities. Our results suggest that ChatGPT can generate more robust and varied outputs when prompted with multiple perspectives. The relevance of such models to futures research, and the caution required in applying them, are underscored by recent studies on potential LLM biases and their global impacts [6, 19].

The convergence of our results with those of various researchers in the literature suggests the growing capacity and applicability of LLMs in the field of Future Studies. Incorporating new approaches and adapting traditional methods, such as the Delphi method, illustrates the innovative potential and flexibility of these models. As we continue to explore LLMs, it is essential to maintain a critical stance, be attentive to potential biases, and consistently seek to improve their application to ensure accurate, relevant, and impactful results.

Transitioning to the ethical challenges of the research, we face a multifaceted landscape, particularly regarding the development of personas based on publicly known individuals. Prior to data generation, we established an ethical framework specifying eligibility criteria for personas (public figures with citable records), strict domain scoping, source curation rules privileging verifiable public materials, a misrepresentation risk analysis, and auditability requirements (versioned prompts/models and round logs).

While only Nelson Maculan Filho was directly contacted and provided explicit consent, the others are public personalities whose contributions are well documented in the public domain. All personas were constrained to information already available in the public record, and no private communications were made prior to the study.

In Iconic Minds, anonymization is neither feasible nor appropriate, as the personas are intentionally modeled after recognizable profiles. While using publicly available data about notable figures mitigates some privacy concerns, it raises critical ethical issues surrounding implicit consent, representational accuracy, and potential bias in projecting inferred opinions. Accordingly, we conducted a misrepresentation risk assessment that identified overreach, stereotyping, and unwarranted novelty as primary failure modes, and we put in place controls to detect and mitigate each.

The core ethical challenge is the risk of attributing views to individuals that they may not hold or endorse, especially when extrapolating from limited public records. This raises questions about the boundaries of acceptable extrapolation, since biased extrapolation can distort public perception and unintentionally reinforce stereotypes. When feasible, targeted post-hoc validation with the referenced experts (or knowledgeable proxies) should be incorporated to assess fidelity and to update guardrails where divergences are observed, improving the ethical framework over time.

Therefore, we propose a roadmap of four actionable steps to address these ethical concerns:

1. Limit persona inference strictly to domains of public expertise, avoiding extrapolation into unrelated or controversial areas (e.g., refraining from attributing political stances to a figure known solely for technological expertise);

2. Establish a review process involving ethics committees or expert panels, particularly when personas are derived from real individuals, to ensure that the scope of representation is justifiable and ethically sound;

3. Document and disclose potential biases in persona construction, including limitations of the data sources and the reasoning behind inferred viewpoints; and

4. Develop a protocol for retroactive consent or contestation, allowing public figures, if contacted later, to review and challenge how their personas have been represented.

These safeguards are expected to uphold the research’s ethical rigor while ensuring the respectful treatment of the individuals represented.

Reproducibility is another critical aspect in studies involving LLMs. The evolving nature of LLMs introduces challenges for replicating results over time, especially given the inherent variability in model outputs—even when using the same prompts and configurations. However, the increasing practice of releasing versioned models mitigates this issue. In this study, we used a clearly defined version, gpt-4-1106-vision-preview, which allows future researchers to access the same model configuration. While exact replication is not guaranteed, this approach enhances transparency and supports methodological consistency in AI-driven research.
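As an illustration of the versioning and logging practice described above, the following minimal Python sketch records the model identifier, a hash of the prompt, the sampling parameters, and the Delphi round for each persona query. The function name `make_run_record`, the field names, the placeholder prompt, and the `temperature` value are all hypothetical choices made for this example; they are not taken from the study's actual pipeline, and no model is called.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_run_record(model, prompt, params, round_no, response):
    """Build one audit-log entry for a single persona query (hypothetical schema)."""
    return {
        "model": model,  # pinned, versioned model identifier
        # Hashing the prompt lets a later reader verify that the same text was used.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "params": params,  # sampling settings used for the call
        "round": round_no,  # Delphi round number
        "response": response,  # raw model output, stored verbatim
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }


record = make_run_record(
    model="gpt-4-1106-vision-preview",
    prompt="Placeholder persona prompt for a Delphi questionnaire item.",
    params={"temperature": 0.0},  # assumed value, for illustration only
    round_no=1,
    response="(model output would be stored here)",
)
print(json.dumps(record, indent=2))
```

Storing one such record per query and per round would not remove the variability of model outputs, but it would make explicit which model version and prompt produced each response.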

Beyond reproducibility concerns, the use of LLMs also introduces potential biases that may have influenced how expert perspectives were represented in this study. Because the models rely on unevenly distributed public data, some viewpoints—especially from regions or disciplines with less digital visibility—are likely to be underrepresented. This imbalance can subtly shape how personas reason or prioritize certain issues. Moreover, as the model integrates diverse textual traces into coherent profiles, it may compress complex or even contradictory viewpoints into simplified narratives, creating an illusion of internal consistency. Finally, since the selection of “iconic minds” naturally favors prominent Western figures, the resulting panels may reflect global academic hierarchies more than genuine diversity of thought. These factors do not invalidate the approach but underscore that the AI Delphi reflects the informational landscape from which it emerges. Its results should, therefore, be interpreted with awareness of these structural limitations, which future research should explicitly measure and address.

This work was conceived as a proof of concept. Iconic Minds was foregrounded because it directly instantiates key methodological risks in applying LLMs to Delphi, while the two alternative designs are reserved for future work. The Delphi procedure comprised two pre-specified rounds, consistent with the method’s allowance for a predefined number of iterations until stabilization. Rather than extending the number of rounds, robustness was pursued via triangulation: close agreement with a comparable human-run Delphi and expert validation (85%). This design aims to limit analytical degrees of freedom, support transparency, and provide preliminary evidence of method performance at this stage, without introducing rounds that are unlikely to affect the stability criterion significantly. The other models (Persona Panel and Digital Oracle) are presented as a conceptual framework that shares the core mechanics exercised by Iconic Minds. Therefore, first validating a single model was an appropriate objective for this phase.
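One simple way to quantify agreement between a persona and the corresponding human expert is the share of questionnaire items on which both gave the same answer. The Python sketch below assumes this per-item matching definition, which may differ from the exact procedure used in this study; the function name `agreement_rate` and the answer lists are invented for illustration and are not the study's data.

```python
def agreement_rate(persona_answers, human_answers):
    """Return the fraction of items answered identically by persona and human."""
    if len(persona_answers) != len(human_answers):
        raise ValueError("Both answer lists must cover the same items.")
    if not persona_answers:
        raise ValueError("At least one item is required.")
    # Count positions where the two answers coincide exactly.
    matches = sum(p == h for p, h in zip(persona_answers, human_answers))
    return matches / len(persona_answers)


# Toy answers, invented for this example (not taken from the study).
persona = ["agree", "agree", "disagree", "neutral", "agree"]
human = ["agree", "agree", "disagree", "agree", "agree"]

rate = agreement_rate(persona, human)
print(f"Agreement: {rate:.0%}")
```

Exact matching is the strictest choice; for ordinal scales, a tolerance of one scale point or a chance-corrected statistic could be substituted, at the cost of an additional analytical decision to report.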

7. Conclusions

LLMs are general-purpose technologies capable of generating texts across a wide range of subjects and varying levels of complexity based on user-provided prompts. Traditional participatory decision-making methodologies, such as the Delphi method, which consults experts through iterative questionnaires to converge on consensus-based future scenarios, are widely used. However, access to these experts is a challenge because their time for consultation is limited. Consulting experts for predictions therefore requires substantial resources and can encounter obstacles that slow down the decision-making process.

In this work, we proposed three machine–machine collaboration models to streamline and accelerate Delphi execution by leveraging the extensive knowledge of LLMs. We then applied one of these models, the Iconic Minds Delphi, to run future-focused Delphi questionnaires. This work delved into the intersection between recent LLM advances and Futures Research. The primary goal of this research was to explore the possibility of leveraging LLMs to understand the future, especially considering the limitations and challenges of conventional approaches like the Delphi method. The results show 85% agreement between the responses of one emulated persona and those of the real person on whom it was modeled.

The main contribution of this work lies in the successful integration of traditional approaches with the emerging power of LLMs, creating a hybrid methodology that leverages the strengths of both worlds. The introduction of the AI Delphi model not only streamlines and expedites the process of consulting experts for future scenarios but also introduces a dimension of diversity and adaptability that was previously unattainable. By incorporating different personas and leveraging the vast knowledge of LLMs, we generated multifaceted scenarios that reflect a broader range of possibilities and perspectives. Although initially developed for the Delphi technique, the AI Delphi models proposed demonstrate their potential for broader application in various methods involving expert participation, marking another significant contribution of this work. Furthermore, by paving the way for the use of LLMs in Futures Research, this work serves as a milestone for researchers and professionals seeking to leverage AI.

Regarding the limitations and risks to the validity of the research, we acknowledge the 85% agreement between Nelson Maculan Filho’s responses and those of his persona as a promising indicator. Still, we emphasize the need to involve more experts for a more comprehensive validation of the model. Furthermore, we acknowledge that the training data of GPT-4 Turbo, which excludes data after a specific cutoff date, may lead to an incomplete or outdated understanding of certain topics. This limitation is crucial, as the model may not incorporate recent information, advances, or discoveries, which can affect the analysis. Despite these limitations, the observed agreement lends credibility to the results, while a more comprehensive evaluation remains necessary for a complete understanding.

For future work, it is essential to consider exploring and implementing alternative approaches that complement personas. A crowd-based approach is a potential solution to the limitation presented earlier. The approach could be systematically tested and used to compare human responses with those obtained by incorporating personas into LLMs. Additionally, it would be valuable to investigate other emerging AI technologies and methodologies that can provide deeper insights and nuances about the future. Furthermore, it would be interesting to extend the applicability of the AI Delphi model to other domains and sectors, assessing its effectiveness in dynamic and multifaceted environments.

In conclusion, our research has demonstrated that the synergy between Delphi and LLMs offers a new dimension for future research. Recognizing its limitations, we continue to advocate for the importance and relevance of combining human expertise with technology to create a more holistic and adaptable foresight of the future. Through ongoing innovation and refinement of our models, we aim to make further contributions to understanding and preparing for future scenarios in the professional world.