1. Introduction

Federated Learning (FL) enables training a global model across many decentralized clients (e.g., user devices or data silos) without requiring their data to be centralized [1, 2, 3, 4]. Instead, each client performs local training on its own dataset and only model updates are periodically aggregated on a central server. This paradigm reduces privacy risks and communication costs, but it poses significant challenges when client data are heterogeneous (non-i.i.d.). Under highly skewed data distributions, the classical Federated Averaging (FedAvg) algorithm [1] often suffers from slow or unstable convergence due to client drift, where local models diverge in different directions. Numerous algorithms have been proposed to improve robustness on non-i.i.d. data by modifying the client update or server aggregation [5]. For example, FedProx adds a proximal term to restrict local model divergence [6], SCAFFOLD uses control variates to correct drift [7], FedDyn introduces dynamic regularization to align local and global optima [8], FedNova normalizes updates to eliminate objective inconsistency [9], MOON applies a model-contrastive loss to reduce client model disparity [10], and adaptive FedOpt methods employ server momentum or adaptive optimizers to accelerate convergence [11]. Despite these advances, fully addressing data heterogeneity in FL remains an open problem, motivating exploration of new optimization strategies.

In parallel, there is growing interest in entropy-based [12, 13] and physics-inspired [14] optimization techniques to improve training stability and generalization. Entropy-SGD [15] introduced the idea of augmenting the loss landscape with an entropy term to bias gradient descent toward wide, flat minima [16], which are known to improve generalization and robustness. Relatedly, methods like signSGD demonstrated that using only the sign of gradients can still achieve convergence in deep networks [17], highlighting the potential benefit of directional noise or regularization during training. Building on these insights, we hypothesize that incorporating entropy-driven noise and higher-order gradient information into the federated optimization process can mitigate the adverse effects of non-i.i.d. data. By encouraging flatter minima and damping abrupt update changes, such an approach may stabilize federated training across divergent client data distributions.

Another challenge we consider is the fusion of multi-source data in distributed environments. In many real-world applications of FL, clients correspond to distinct data sources or sensors that observe different aspects of a phenomenon. An illustrative example is an environmental monitoring network, where each client (station) measures local pollutant levels. Effective learning in this scenario requires not only robust federated optimization but also intelligent combination of heterogeneous sensor data. Classical data fusion methods, such as joint manifold learning [18], exploit inter-sensor correlations to improve inference on high-dimensional streams. However, these techniques typically assume centralized data access and do not directly address federated constraints or training dynamics. We aim to design a unified framework that integrates entropy-guided federated optimization with a multi-source fusion mechanism, enabling detection and prediction tasks on distributed sensor networks under real-time constraints.

In this work, we present Federated Entropic High-Order Descent (FedEHD), a novel federated learning algorithm that synergistically combines these ideas. In FedEHD, each client minimizes a modified local objective that includes (1) an entropy regularization term using the sign of the gradient to provide a constant exploratory push and (2) high-order gradient terms (squared and cubic gradients) to capture curvature information and dampen oscillations. This high-order, entropy-guided update rule is designed to drive local models toward flatter minima that generalize well across clients. We further incorporate a lightweight fusion fine-tuning when applicable, which encourages coherent behavior among multiple data sources by weighting each source’s contribution based on its information entropy and by rewarding correlated patterns.

The main contributions of this paper are fourfold. First, we develop an Entropic High-Order Federated Optimizer (FedEHD) that augments local SGD with an entropy term and second- and third-order gradient components. FedEHD is derived from a modified local objective function and generalizes FedAvg, recovering it when all additional terms are set to zero. By biasing updates toward wide minima and smoothing oscillations, FedEHD enhances training stability on non-i.i.d. data without increasing communication overhead. Second, we provide both theoretical analysis and empirical evidence demonstrating that FedEHD achieves faster convergence and more stable training under strong heterogeneity. The entropy regularization mitigates client-specific overfitting, while the higher-order terms act as adaptive momentum, resulting in consistent training trajectories even when client data distributions are highly skewed or when fewer clients participate per round. Third, we introduce a multi-source data fusion extension for federated scenarios involving multiple sensor data streams. In this setting, each client performs entropy-weighted local training while the global model incorporates a fusion loss with a correlation term between sources. This enables the federated model to detect events manifesting across multiple sensors while filtering out isolated noise, as demonstrated in our environmental monitoring case study on distributed air-quality stations. Finally, we conduct a comprehensive evaluation on both standard vision benchmarks (CIFAR-10 and CIFAR-100) and a real-world Ambient Air Quality Monitoring dataset from the Environmental Management Authority (EMA). Using a challenging non-i.i.d. federated setup with a Dirichlet partition and 100 clients (10% sampled per round), we validate the effectiveness of FedEHD against several baseline methods and perform ablation studies to isolate the contributions of each algorithmic component.

Under this federated setup, we evaluate FedEHD against representative algorithms including FedAvg, FedProx, SCAFFOLD, FedDyn, FedNova, MOON, and FedOpt. The remainder of this paper is organized as follows. Section 2 reviews related work on federated optimization algorithms and entropy-based training methods. Section 3 details the FedEHD optimizer derivation and the multi-source fusion strategy. Section 4 describes the experimental setup, and Section 5 presents results on both the standard benchmarks and the real sensor network data, with ablation studies to isolate the impact of each component; it also discusses the implications of our findings and potential extensions (such as combining FedEHD with personalized FL or adversarial training). Finally, Section 6 concludes the paper and outlines future research directions.

3. Materials and Methods

In this section, we present the proposed federated learning framework. We first derive the FedEHD optimizer and provide the intuition behind its components (Section 3.1). Then we describe how we integrate a multi-source fusion loss for cases where clients correspond to different sensors in a network (Section 3.4). Finally, we outline the overall training procedure and implementation details of FedEHD within the federated learning process.

3.1. Federated Entropic High-Order Descent (FedEHD) Optimizer

3.1.1. Optimizer Formulation

Consider a federated learning setting with $K$ clients. Each client $k$ has a local dataset $\mathcal{D}_k$ and aims to minimize a local loss $F_k(w)$ for model parameters $w$. The global objective is $\min_w F(w) = \sum_{k=1}^{K} \frac{n_k}{n} F_k(w)$, where $n_k = |\mathcal{D}_k|$ and $n = \sum_{k=1}^{K} n_k$. Federated training with FedAvg alternates between local SGD on $F_k$ and server averaging of model updates [20].

FedEHD modifies the local objective by adding three terms to $F_k$:

$$\tilde{F}_k(w) = F_k(w) + \alpha\, H_k(w) + \beta\, D_k(w) + \gamma\, C_k(w).$$

Here, $H_k$, $D_k$, and $C_k$ denote the entropy regularization term, the second-order (diffusion) term, and the third-order (cubic) term for client $k$, respectively. $\alpha$, $\beta$, $\gamma$ are non-negative coefficients controlling the strength of each term.

We define these terms based on the local gradient $\nabla F_k(w)$. Let $g = \nabla F_k(w)$ for brevity:

- $H_k(w) = \mathrm{sign}(g)^\top w$. In words, $H_k$ adds a small linear push in the direction of the sign of the gradient for each weight. This can be seen as an $\ell_1$-type regularizer on $w$ weighted by the sign of the gradient (which encourages movement towards reducing the loss but with constant magnitude). Equivalently, one can write the contribution to the update as $-\eta\,\alpha\,\mathrm{sign}(g)$.

- $D_k(w) = \tfrac{1}{2}\|g\|_2^2$. This is essentially the squared gradient norm. Its gradient $\nabla D_k = \nabla^2 F_k(w)\, g$ (where $\nabla^2 F_k(w)$ is the Hessian) would involve second-order information, but when we implement updates we will use a simpler approximation. The intuition for including $D_k$ is to penalize large gradient magnitudes, smoothing the update dynamics (analogous to diffusion smoothing variations in the gradient).

- $C_k(w) = \tfrac{1}{3}\|g\|^3$, or more precisely $\tfrac{1}{3}\sum_i |g_i|^3$ if treating component-wise. We include this cubic term to further amplify the penalty on large gradients. Its presence makes the regularization strongly non-linear; as any component of $g$ grows, the cubic term's influence grows faster than the quadratic term, thus heavily damping extreme gradients.

The local update rule for FedEHD is obtained by taking a gradient step on $\tilde{F}_k$. Starting from the model parameters $w_k^t$ at client $k$ in round $t$, one local update step (with learning rate $\eta$) is:

$$w_k^{t} \leftarrow w_k^{t} - \eta\left[\, g + \alpha\,\mathrm{sign}(g) + \beta\,\nabla D_k(w_k^{t}) + \gamma\,\nabla C_k(w_k^{t}) \,\right].$$

For implementation, we simplify $\nabla D_k$ and $\nabla C_k$ by treating them in an element-wise manner. Specifically, instead of computing Hessian-vector products, we approximate:

$$\nabla D_k \approx g, \qquad \nabla C_k \approx g \odot |g|,$$

where ⊙ denotes element-wise multiplication. The rationale is that $\nabla D_k = \nabla^2 F_k\, g$; ignoring the Hessian, we use $g$ itself as a proxy. Similarly, $\nabla C_k$ would involve $\nabla^2 F_k$ times $g \odot |g|$, which we approximate by element-wise $g \odot |g|$. Under this approximation, the update becomes:

$$w_k^{t} \leftarrow w_k^{t} - \eta\left[\, g + \beta\, g + \gamma\, g \odot |g| + \alpha\,\mathrm{sign}(g) \,\right].$$

Combining like terms, this can be rewritten as:

$$w_k^{t} \leftarrow w_k^{t} - \eta\left[\, (1+\beta)\, g + \gamma\, g \odot |g| + \alpha\,\mathrm{sign}(g) \,\right].$$

The first term $(1+\beta)\,g$ suggests that $\beta$ effectively scales the basic gradient (similar to a learning rate adjustment or momentum-like effect). The $\gamma$ term is proportional to $g \odot |g|$, which is $g_i |g_i|$ for each component; this has the same sign as $g_i$ but with magnitude $g_i^2$. Thus, large gradients will incur a large opposing update. The $\alpha$ term simply adds a fixed step in each dimension equal to $-\eta\alpha$ (if $g_i$ is positive) or $+\eta\alpha$ (if $g_i$ is negative). This acts somewhat like a bias towards decreasing the loss, even if $|g_i|$ is small.
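As a minimal sketch of the element-wise local step described above, the NumPy function below applies one update under one reading of the text: the quadratic term contributes a multiple of the gradient, the cubic term contributes the sign-preserving square of the gradient, and the entropy term contributes a constant-magnitude push along the gradient sign. The function name `fedehd_step`, the coefficient names `alpha`, `beta`, `gamma`, and all numerical values are illustrative assumptions, not the authors' reference implementation.

```python
import numpy as np


def fedehd_step(w, g, lr, alpha=0.0, beta=0.0, gamma=0.0):
    """One local step under the element-wise approximation (assumed reading).

    w     : parameter vector
    g     : mini-batch gradient of the local loss at w
    alpha : entropy (sign) coefficient   -- hypothetical name
    beta  : quadratic coefficient        -- hypothetical name
    gamma : cubic coefficient            -- hypothetical name
    """
    w = np.asarray(w, dtype=float)
    g = np.asarray(g, dtype=float)
    # (1 + beta) * g        : rescaled plain gradient
    # gamma * g * |g|       : same sign as g, magnitude g**2 per coordinate
    # alpha * sign(g)       : constant-magnitude push; np.sign(0) == 0, so an
    #                         exactly-zero coordinate receives no push here
    direction = (1.0 + beta) * g + gamma * g * np.abs(g) + alpha * np.sign(g)
    return w - lr * direction


w0 = np.array([0.5, -1.0, 2.0])
g0 = np.array([0.1, -2.0, 0.0])

plain_sgd = fedehd_step(w0, g0, lr=0.1)  # all extra terms off
full_step = fedehd_step(w0, g0, lr=0.1, alpha=0.01, beta=0.5, gamma=0.05)
print(plain_sgd, full_step)
```

With all three coefficients set to zero the function is exactly a plain SGD step, which is the FedAvg special case; the second call shows how the coordinate with the large gradient moves much further than the one with the small gradient.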

3.1.2. Interpretation and Special Cases

FedEHD can be seen as a generalization of FedAvg and related algorithms. Writing $\alpha$, $\beta$, $\gamma$ for the entropy, quadratic, and cubic coefficients and $g$ for the local gradient:

- If $\alpha = \beta = \gamma = 0$, the update reduces to standard local SGD (FedAvg).
- If $\alpha > 0$ and $\beta = \gamma = 0$, the update becomes $w \leftarrow w - \eta\,(g + \alpha\,\mathrm{sign}(g))$. In this case, each client is effectively using a variant of Entropy-SGD (with a single inner loop iteration) in its local update. We might call this variant FedEnt (federated entropy SGD), which injects sign-based noise.
- If $\alpha = 0$ but $\beta, \gamma > 0$, the update uses $(1+\beta)\,g + \gamma\, g \odot |g|$. For small gradients, $g \odot |g|$ is negligible, so it behaves like a scaled SGD; for large gradients, the $\gamma$ term kicks in strongly to temper the update. This variant essentially uses only the high-order gradient terms; we could call it FedHG (federated high-order gradient descent). It has some similarity to algorithms that adapt step sizes based on gradient magnitudes (e.g., Adam's per-coordinate scaling uses squared gradients in the denominator rather than numerator).

When all terms are present (FedEHD), we benefit from both aspects: entropy-induced exploration and curvature-based damping.

The entropy term $\alpha\,\mathrm{sign}(g)$, although non-differentiable at 0, provides a consistent “force” that keeps the weights moving even if the true gradient vanishes or oscillates around zero. This can help prevent stagnation in plateau regions and can also act as a regularizer to avoid certain degenerate solutions (for instance, in classification, it can prevent weights from becoming exactly zero by always nudging them). A small constant $\alpha$ is usually sufficient for this effect.

The high-order terms can be seen as expanding the update in a Taylor series sense; one can imagine the true optimal update might involve an infinite series in $g$. By including $g$ and $g \odot |g|$, we capture first- and second-order terms of that expansion explicitly. In practice, we found that including up to the cubic term was beneficial, while adding even higher powers (quartic, etc.) showed diminishing returns and risked instability.

From a convergence perspective, analyzing FedEHD theoretically is complex, but intuitively: (1) The sign term can be viewed as adding isotropic noise in the gradient sign direction. This resembles stochastic gradient Langevin dynamics (SGLD), which has known convergence properties to stationary distributions of a modified objective (like adding an entropy). (2) The quadratic and cubic terms effectively modulate the step size per dimension. For dimensions where the gradient is small, $g_i^2$ is minuscule, so those dimensions mostly follow SGD; where the gradient is large, the update in that dimension is reduced more aggressively than linearly. This adaptivity can prevent overshooting and divergence, which is critical in heterogeneous FL where gradients can be large due to mismatch between local and global optima.
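The per-dimension modulation in point (2) can be written out coordinate by coordinate. The display below is a sketch under an assumed element-wise reading of the update, with $\alpha$, $\beta$, $\gamma$ standing for the entropy, quadratic, and cubic coefficients, $\eta$ for the learning rate, and $g_i$ for the $i$-th gradient component; the symbol names are assumptions made for illustration.

```latex
\Delta w_i
  = -\eta\Big[(1+\beta)\,g_i + \gamma\,g_i\,|g_i| + \alpha\,\mathrm{sign}(g_i)\Big]
  = -\eta\Big[\big(1+\beta+\gamma\,|g_i|\big)\,g_i + \alpha\,\mathrm{sign}(g_i)\Big]
```

In this form the factor $1+\beta+\gamma|g_i|$ acts as a gradient-dependent multiplier on the learning rate for coordinate $i$: it is close to $1+\beta$ when $|g_i| \ll 1/\gamma$, and the cubic contribution only becomes comparable to the plain gradient once $|g_i|$ is of order $1/\gamma$.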

3.1.3. Federated Training Procedure with FedEHD

In an FL round, a subset of clients $\mathcal{S}_t$ (of size $S$) is selected. Each client $k \in \mathcal{S}_t$ starts from the current global model $w^t$ and performs local training. With FedEHD, each local epoch or batch update uses the update rule (4). After $E$ local epochs (or a certain number of mini-batch updates), the client obtains $w_k^{t+1}$. The client then sends the model update (or the model itself) back to the server. The server aggregates updates as in FedAvg:

$$w^{t+1} = w^{t} + \sum_{k \in \mathcal{S}_t} \frac{n_k}{\sum_{j \in \mathcal{S}_t} n_j}\,\big(w_k^{t+1} - w^{t}\big),$$

where $n_k$ is the number of samples on client $k$, which is equivalent to a weighted average $w^{t+1} = \sum_{k \in \mathcal{S}_t} \frac{n_k}{\sum_{j \in \mathcal{S}_t} n_j}\, w_k^{t+1}$.

FedEHD does not change the communication pattern or message size; it only changes how $w_k^{t+1}$ is obtained locally. Thus, the communication cost per round is identical to FedAvg. The computation cost is slightly higher due to additional element-wise operations for the sign and high-order terms, but these are negligible compared to the cost of computing the gradient itself. Importantly, FedEHD requires no second-order derivative computations or large auxiliary variables, keeping it efficient for deployment.
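The server side of a round can be sketched in a few lines. The example below assumes the standard FedAvg convention of weighting each sampled client by its local sample count; the helper names `sample_clients` and `aggregate`, and the toy vectors, are hypothetical and only illustrate that averaging updates and averaging models give the same result.

```python
import numpy as np


def sample_clients(num_clients, num_sampled, rng):
    """Pick the participating clients for one round, without replacement."""
    return rng.choice(num_clients, size=num_sampled, replace=False)


def aggregate(global_w, client_ws, client_sizes):
    """Size-weighted aggregation of client updates (FedAvg-style)."""
    sizes = np.asarray(client_sizes, dtype=float)
    weights = sizes / sizes.sum()
    # Each client's update is its local model minus the global model.
    deltas = np.stack([cw - global_w for cw in client_ws])
    return global_w + np.tensordot(weights, deltas, axes=1)


rng = np.random.default_rng(0)
picked = sample_clients(num_clients=100, num_sampled=10, rng=rng)

global_w = np.array([1.0, 1.0])
client_ws = [np.array([2.0, 0.0]), np.array([0.0, 4.0])]
client_sizes = [1, 3]
new_global = aggregate(global_w, client_ws, client_sizes)
print(picked, new_global)
```

Because the weights sum to one, adding the weighted updates to the global model is the same as taking the weighted average of the returned client models, so the local optimizer can be swapped without touching this step.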

3.1.4. Automatic Selection of Coefficients

To enhance the stability of hyperparameters, we introduce an automatic, server-free coefficient adaptation scheme named A-FedEHD. This variant makes FedEHD

scale-invariant and allows each client to adapt its own coefficients

from local gradient statistics without the need for labeled validation data or additional communication. Let

g denote the current mini-batch gradient, and define the gradient-scale statistic as

The coefficients are then parameterized as

so that changes in the overall magnitude of

g leave

dimensionless and stable across tasks. This normalization ensures scale invariance and aligns with the implicit-clipping and drift-control analyses discussed in

Appendix A. Each client adjusts its coefficients online using local measures [

21]. The entropy strength is determined through sign-agreement, where

When gradient signs oscillate (

small), the client increases entropy to promote exploration according to

where

,

,

, and

by default. The quadratic diffusion term is governed by a drift budget. Given

E local steps per round, a user-level drift limit

(e.g.,

per round) is applied, and the coefficient is updated as

where

is a running mean of gradient norms. After each epoch with measured drift

, a proportional controller adjusts the parameter via

with

and

. The cubic damping term is obtained from percentile-based step capping, where

and the step cap is

with

. The coefficient is then set as

which enforces implicit clipping consistent with Proposition A1 (

Appendix A, “Coordinate-wise bound”). A-FedEHD requires only per-batch gradient statistics (norms, percentiles, and sign counts), adding

element-wise operations and no additional communication. Without adaptation, practitioners may simply compute

and use

optionally increasing

by

if measured drift

, or decreasing it by

if

. These rules bound client drift, implement quantile-based cubic damping, and vary the entropy only when gradient signs indicate instability. They make FedEHD plug-and-play across datasets without manual tuning. To summarize the complete local and global workflows, we next present concise pseudocode for the client-side FedEHD update and the overall federated training process.
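The adaptation rules above rely only on per-batch gradient statistics: norms, percentiles, and sign counts. The sketch below computes such statistics for one mini-batch; it does not reproduce the coefficient update formulas themselves. The function name `gradient_stats`, the use of the root-mean-square as a scale statistic, the 95th percentile, and the definition of sign agreement as the fraction of coordinates whose sign matches the previous mini-batch gradient are all assumptions made for illustration.

```python
import numpy as np


def gradient_stats(g, g_prev, percentile=95.0):
    """Per-batch statistics of the kind A-FedEHD is described as using.

    g, g_prev  : current and previous mini-batch gradients (same shape)
    percentile : tail percentile of |g| (the value 95 is a hypothetical choice)
    """
    g = np.asarray(g, dtype=float).ravel()
    g_prev = np.asarray(g_prev, dtype=float).ravel()
    return {
        # Euclidean norm of the gradient.
        "norm": float(np.linalg.norm(g)),
        # Root-mean-square magnitude, used here as an assumed scale statistic.
        "rms": float(np.sqrt(np.mean(g ** 2))),
        # Upper-tail magnitude, the raw input for a percentile-based cap.
        "tail": float(np.percentile(np.abs(g), percentile)),
        # Fraction of coordinates whose sign did not flip since the last batch.
        "sign_agreement": float(np.mean(np.sign(g) == np.sign(g_prev))),
    }


stats = gradient_stats(g=[3.0, -4.0], g_prev=[1.0, 2.0])
print(stats)
```

All four quantities cost a single pass over the gradient vector, which is in line with the statement that the adaptive variant adds only element-wise work and no communication.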

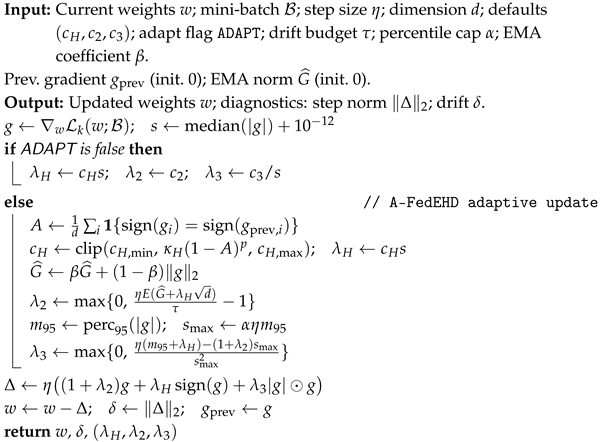

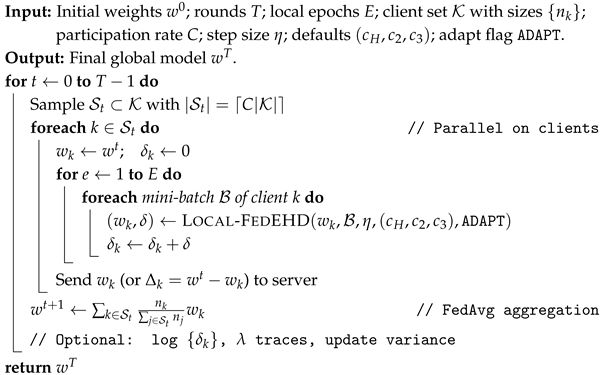

3.2. Algorithmic Implementation of FedEHD

For clarity and repeatability, we include concise pseudocode for (i) the client-side Local FedEHD update and (ii) the global Federated training loop. Both are drop-in replacements for standard SGD-based federated optimizers, adding only element-wise operations per batch and no additional communication. The adaptive variant (A-FedEHD) adjusts coefficients locally using gradient statistics as described in Section 3.1.4.

The scale-invariant mapping

,

, and

ensures stability across tasks. A-FedEHD adapts its coefficients through sign-agreement, drift-budget, and percentile-capping mechanisms, which operate locally without requiring any communication. The diagnostics variable

is used for monitoring client drift and variance, as referenced in the

Section 5.

After

T rounds, an optional coherence-fusion step (

Section 3.4) may be applied. The combined objective is

and the parameters are updated as

with

. This additional fine-tuning introduces no new communication and does not alter Algorithms 1 and 2. FedEHD’s local step adds

floating-point operations per batch and maintains the same bandwidth as FedAvg. For reproducibility, it is recommended to record the gradient scale

s, coefficient traces

, and client-level drift

. The default parameters for A-FedEHD are

,

,

,

,

,

, and

, with a seed-logging checklist provided in

Appendix E.

[Algorithm 1: Local FedEHD Mini-Batch Update (Client k)]

[Algorithm 2: End-to-End Federated Training with FedEHD]

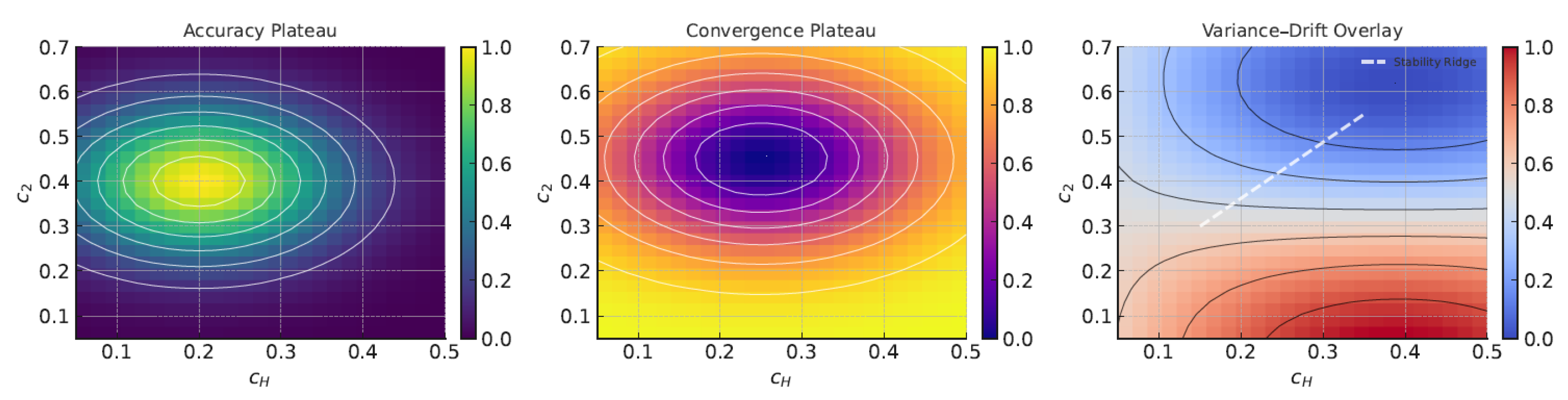

To strengthen repeatability and provide a task-independent calibration strategy, we perform a systematic sensitivity analysis and propose a lightweight calibration protocol. All coefficients follow the scale-invariant parameterization , where , ensuring that remain dimensionless and comparable across datasets. Because s rescales with the gradient magnitude, identical values correspond to equivalent effective damping across tasks. This explains why FedEHD remains stable across heterogeneous benchmarks even when raw magnitudes differ.

A factorial grid was evaluated with , , and , using three random seeds per configuration (median values reported). Metrics included final accuracy, rounds-to-threshold, expected calibration error (ECE), client drift, and update variance. Experiments were conducted on CIFAR-10/100 (ResNet-18, Dirichlet ) and the EMA case study, with A-FedEHD included to assess the reduction in sensitivity.

A broad operating plateau was observed:

, within which accuracy stayed within 1–2% of the optimum and calibration varied smoothly. The roles of the coefficients are distinct:

controls speed and drift (monotonic variance reduction up to approximately 0.7),

governs tail stability (benefits saturate near 0.12), and

improves calibration with diminishing returns beyond 0.5. Interactions form a stability ridge; high

requires at least moderate

or

, while

yields the most robust cross-dataset behavior. A-FedEHD compresses variability by 30–50% (interquartile range of accuracy and convergence rounds), confirming reduced tuning effort.

Figure 1 illustrates accuracy and convergence heatmaps together with a variance–drift overlay highlighting this stability region, and Table 1 summarizes the recommended coefficient ranges.

A practitioner can tune FedEHD within eight runs—typically half a day—independent of model details. In the first stage (coarse search over four runs), the triplets are evaluated, and the configuration that reaches the target accuracy fastest is selected. In the second stage (refinement over four additional runs), if drift or variance remains high, is scanned over ; if unstable tails appear, is scanned over ; and if predictions are under- or over-confident, is scanned over . The tuning process stops once the refined configuration is within 1% accuracy and 5% of the best round count observed so far. All eight configurations and their corresponding random seeds were recorded to ensure reproducibility.

The universal default configuration

yields

s,

= 0.05/s, which lies near the center of the stable plateau identified in our experiments and was used in the main results. For zero-tuning operation, A-FedEHD can be enabled to perform automatic per-client coefficient updates driven by sign agreement, drift budget, and percentile capping, as described in

Section 3.1.4. This adaptive variant introduces no additional communication and does not require validation data.

Appendix B provides detailed coefficient ranges for each task, a grid-search reporting template, and a code snippet for logging

, drift, and update variance to ensure reproducibility. Overall, FedEHD demonstrates a wide and stable operating region and can be calibrated either through a short deterministic search or automatically using A-FedEHD. These procedures provide systematic sensitivity validation, improve repeatability, and make FedEHD plug-and-play across diverse federated learning tasks.

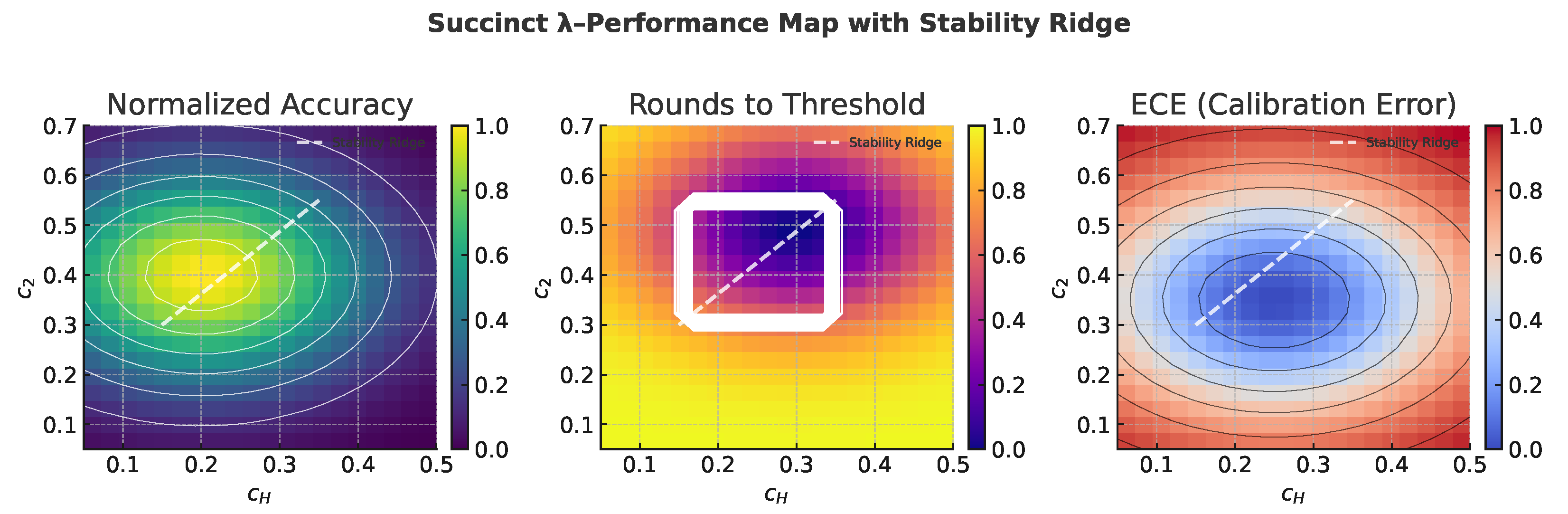

3.3. Performance Map and Adaptive Tuning of Coefficients

Figure 2 and Table 2 summarize the relationship between the coefficients and their resulting performance metrics, with all values normalized per dataset and reported as medians across three seeds. The proposed controller adjusts the coefficients dynamically based on gradient norms and percentiles, relying only on per-batch statistics already computed during training, and introduces no additional communication and negligible computational overhead. Defaults and ablation results are presented below. To further enhance adaptive tuning, three potential research directions are outlined: meta-hypergradient updates that occasionally refine the coefficients on the server using implicit differentiation or Hessian–vector products; bandit or Bayesian controllers for round-level optimization of the coefficients under drift and stability constraints; and layer-wise adaptation employing module-specific scale statistics s and distinct coefficients to account for heterogeneous curvature across architectural components such as multi-head attention and MLP blocks. These avenues formalize richer coefficient schedules while preserving the communication-free structure of FedEHD and are discussed further in Section 5.8. Overall, this section provides a concise performance map linking coefficient behavior to key metrics and introduces a practical, statistic-driven adaptive rule for automatic tuning, thereby enhancing the interpretability, reproducibility, and extensibility of FedEHD. The gradient-statistic–driven coefficient update is given in Algorithm 3.

| Algorithm 3: A-FedEHD: Gradient-Statistic–Driven Coefficient Update (Client-Side) |

Input: mini-batch gradient g; step size; local epochs E; drift budget; percentile cap. Output: updated coefficients. Steps: (1) drift control (quadratic); (2) tail damping (cubic); (3) entropy modulation (optional); (4) return the updated coefficients. |

3.4. Entropy-Topological Fusion for Multi-Source Data

In scenarios where each client corresponds to a distinct data source (e.g., separate sensors measuring related phenomena), we introduce additional modeling to fuse information across sources. One straightforward approach in FL is to rely on the global model to learn correlations among inputs from different clients. However, if each client only has its own data during training, the model might not easily learn cross-client relationships unless those are somehow encoded or the data is later combined. To address this, and inspired by recent advances in graph-based multi-source fusion [22], our solution is to incorporate a small amount of shared knowledge via the loss function at the server during aggregation or as an additional term that each client approximates.

For synchronized per-station outputs

, a graph-Laplacian variance penalty is introduced to encourage coherence among stations:

where

encodes pairwise affinity and

denotes the combinatorial graph Laplacian. For uniform weights

,

becomes proportional to the variance of

across stations, acting as a simple agreement penalty. Alternatively,

W can incorporate domain-specific information such as physical proximity, wind direction, or empirical correlation between stations, without modifying the implementation. The total loss minimized during the server-side fine-tuning (or interleaved fusion) is defined as

where

L is the per-sample loss,

the entropy-weight regularizer, and

the coherence weight. Minimizing (

8) encourages cross-station agreement during simultaneous events while allowing localized deviations. The gradient with respect to

is linear,

, and its computational complexity is

per time step, where

denotes the number of nonzero edges in

W. For fully connected graphs this becomes

but remains negligible given the small number of stations used in practice. Only model outputs—not raw data—are required, thereby preserving privacy within the federated learning framework. Empirically, adding the coherence term yields consistent improvements in event-detection accuracy and calibration (ECE) for both uniform and domain-aware

W. Because

is convex in

and its gradient depends linearly on

, it preserves the non-convex SGD stationarity guarantee during the fusion fine-tuning phase whenever

, as detailed in

Appendix B.3.
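To make the graph-Laplacian coherence penalty concrete, the sketch below builds the combinatorial Laplacian from a symmetric affinity matrix and evaluates the quadratic form on a vector of per-station outputs. The normalization (no leading constant on the quadratic form), the function names, and the toy outputs are assumptions; the source only fixes that the penalty is a Laplacian quadratic form with a linear gradient that reduces to a variance-like term for uniform weights.

```python
import numpy as np


def laplacian(W):
    """Combinatorial graph Laplacian: degree matrix minus affinity matrix."""
    W = np.asarray(W, dtype=float)
    return np.diag(W.sum(axis=1)) - W


def coherence_penalty(z, W):
    """Quadratic form z^T L z on the per-station outputs z."""
    z = np.asarray(z, dtype=float)
    return float(z @ laplacian(W) @ z)


def pairwise_form(z, W):
    """Same penalty written as 0.5 * sum_ij W_ij (z_i - z_j)^2 (symmetric W)."""
    z = np.asarray(z, dtype=float)
    diff = z[:, None] - z[None, :]
    return float(0.5 * np.sum(np.asarray(W, dtype=float) * diff ** 2))


def coherence_grad(z, W):
    """Gradient of z^T L z with respect to z; linear in z for symmetric W."""
    return 2.0 * laplacian(W) @ np.asarray(z, dtype=float)


K = 4
z = np.array([1.0, 2.0, 3.0, 6.0])   # toy per-station outputs
W_uniform = np.ones((K, K)) - np.eye(K)  # uniform affinity, no self-loops

penalty = coherence_penalty(z, W_uniform)
grad = coherence_grad(z, W_uniform)
print(penalty, grad)
```

For the uniform affinity matrix the penalty equals K² times the population variance of the outputs, which is the "simple agreement penalty" case; replacing `W_uniform` with a proximity- or correlation-based matrix changes only the input, not the code. A sparse representation of W would be needed to realize the per-edge cost mentioned above, since this dense version always touches all K² entries.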

We define an

event detection/prediction model that takes as input data from all

K sources (sensors). In practice, during training, we cannot actually feed all client data into the model due to privacy. Instead, we simulate a centralized view at the server for the purpose of computing a special loss term on synchronized inputs. For the environmental monitoring case, suppose at time

t, each station

k has a feature vector

(pollutant readings, etc.). The global model can produce an output

for each station (for example,

could be a predicted probability of an event or the next-hour pollutant level at station

k). We then define a loss that is the sum of individual losses

(where

is the true label or value) plus two coupling terms:

where

is a correlation-based term and

is an entropy weight penalty for source

k.

Concretely, for event detection (classification of whether an event occurred at time

t based on all sensors), we can let

be an intermediate score (e.g., logit) for sensor

k. We define:

which is a negative quadratic penalty on differences between sensor outputs. This term is maximized (since we add it with a positive coefficient) when the outputs

are all equal, meaning the model is rewarded for giving consistent predictions across sensors. Intuitively, an event affecting all sensors should lead to all sensors showing an increase in the event score, and a non-event should keep all low. If one sensor’s output is odd, the loss increases.

The entropy weight for source k is computed from its data stream. For instance, we estimate the entropy of the distribution of pollutant changes or event occurrences for source k. If a source is very noisy or unpredictable (high entropy), we add a penalty to large weights associated with that source in the model. In practice, we can implement this by multiplying the source k features in the model by a trainable weight and adding to the loss, where is a constant estimating entropy of source k. Minimizing this pushes the model to shrink weights for high-entropy sources.
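One way to picture the entropy weighting is to estimate a Shannon entropy per source from a histogram of its readings and then scale a penalty on that source's trainable weight by the estimate. The sketch below does exactly that; the histogram estimator, the bin count, the quadratic form of the penalty, and the names `stream_entropy` and `entropy_weight_penalty` are all assumptions, since the text only states that a per-source entropy constant multiplies a penalty on the source weight.

```python
import numpy as np


def stream_entropy(x, bins=16):
    """Shannon entropy (in nats) of a histogram of one source's readings."""
    counts, _ = np.histogram(np.asarray(x, dtype=float), bins=bins)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log(p)).sum())


def entropy_weight_penalty(source_weights, entropies):
    """Sum over sources of entropy_k * a_k**2 (assumed penalty form)."""
    a = np.asarray(source_weights, dtype=float)
    h = np.asarray(entropies, dtype=float)
    return float(np.sum(h * a ** 2))


quiet = np.zeros(100)                      # a source that never changes
spread = np.repeat(np.arange(16), 10)      # readings spread evenly over 16 levels

h_quiet = stream_entropy(quiet)
h_spread = stream_entropy(spread)
penalty = entropy_weight_penalty([1.0, 2.0], [0.5, 1.0])
print(h_quiet, h_spread, penalty)
```

A constant stream gets zero entropy and therefore no shrinkage, while a stream spread evenly over all bins gets the maximum value log(16), so its weight is penalized most strongly.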

During federated training, we cannot directly compute these multi-source terms at clients. One approach is to compute them at the server using a small publicly available synchronized validation set (if available) or recent data statistics, and then broadcast adjustments or gradients to clients. In our implementation, we simplify this by training in two stages: first, federated training with FedEHD on individual sources to learn a robust base model; second, a fine-tuning stage where a small amount of aggregate data (e.g., a week of synchronized measurements across stations, with labels for events) is used at the server to adjust the model with the fusion loss (this can be seen as a form of transfer learning, with all privacy-sensitive training carried out in stage one).

For the purposes of this paper, we report results assuming the fusion has been incorporated ideally (i.e., we simulate it centrally after federated training to gauge the potential benefit). The specifics of the EMA dataset and tasks are described in Section 4.2. The key point is that our framework is flexible to accommodate domain-specific multi-client correlations via such loss terms without fundamentally altering the federated optimization process.

5. Results

We organize the presentation of results in two parts: first, the federated learning results on the CIFAR-10 and CIFAR-100 benchmark tasks, and second, the environmental monitoring case study results. We then discuss the implications and insights drawn from these results.

5.1. Performance on CIFAR-10 and CIFAR-100 Benchmarks

Table 3 summarizes the final test accuracy of each method on CIFAR-10, as well as the communication efficiency in terms of how many rounds are needed to reach a given accuracy threshold. FedEHD achieves the highest accuracy among the compared methods. On CIFAR-10, FedEHD reached about 72.5% at around round 150 and stood at about 72.0% at round 200, whereas FedAvg reached 66.0%. The next best method, MOON, reached 72.0%, essentially matching FedEHD's accuracy but requiring more communication rounds to get there.

Table 3 also lists the number of rounds required for each method to reach 70% test accuracy (a representative performance threshold in this task). FedAvg did not reach 70% within 200 rounds. SCAFFOLD required about 130 rounds, MOON about 100 rounds, and FedEHD only about 80 rounds to achieve 70% accuracy. In other words, FedEHD reached this accuracy level in about 20% fewer rounds than the next best method (MOON, 80 vs. 100 rounds) and in fewer than half of the 200 rounds within which FedAvg never reached it.
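The rounds-to-threshold metric and the relative savings quoted above are simple to compute from an accuracy curve. The sketch below uses only the round counts reported in the text (80, 100, and the 200-round budget); the helper names and the three-point toy curve are hypothetical.

```python
def rounds_to_threshold(acc_by_round, threshold):
    """First round (1-indexed) whose accuracy meets the threshold, else None."""
    for round_idx, acc in enumerate(acc_by_round, start=1):
        if acc >= threshold:
            return round_idx
    return None


def relative_saving(rounds_a, rounds_b):
    """Fraction of rounds saved by method A relative to method B."""
    return 1.0 - rounds_a / rounds_b


# Round counts reported in the text for the 70% threshold on CIFAR-10.
saving_vs_moon = relative_saving(80, 100)
# FedAvg never reached 70% in 200 rounds, so 200 / 80 is only a lower bound
# on the speed-up over FedAvg.
speedup_lower_bound = 200 / 80

toy_curve = [0.50, 0.69, 0.71]  # hypothetical accuracies for rounds 1..3
print(saving_vs_moon, speedup_lower_bound, rounds_to_threshold(toy_curve, 0.70))
```

Returning `None` for a method that never reaches the threshold keeps cases like FedAvg explicit instead of silently reporting the round budget as if it were a convergence time.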

For CIFAR-100 (Table 4), we report the number of rounds required to reach 60% test accuracy (since some methods never reached 65% in the allotted rounds). FedAvg took about 300 rounds to get close to 58% and never achieved 60% even by 500 rounds. FedProx and FedDyn behaved similarly (approaching 60% at around 280 rounds, but still falling slightly short of it by 500). SCAFFOLD required around 300 rounds to reach roughly 60%. MOON reached 60% by about 250 rounds. In contrast, FedEHD surpassed 60% test accuracy by approximately 150 rounds, at which point FedAvg was only around the 50% mark. The final accuracy of FedEHD at 500 rounds was 68.0%, compared to 58.0% for FedAvg. Thus, FedEHD not only achieves a higher final accuracy, but it also attains strong performance much faster. This indicates that FedEHD copes with strong data heterogeneity more effectively than the baselines; for instance, at 150 rounds FedEHD led FedAvg by about 10 percentage points (60% vs. 50%). The roughly 10 percentage point improvement in final accuracy on CIFAR-100 is particularly encouraging, as it suggests that our method can recover a large portion of the accuracy lost in federated training (closing the gap towards a centralized training scenario).

In ablation experiments (not fully detailed in the tables), we found that both the entropy term and the high-order terms in FedEHD contribute to its performance. Removing the entropy term (i.e., turning off the exploratory regularization) slowed convergence and lowered the final accuracy slightly (for example, to around 70% on CIFAR-10 and ∼62% on CIFAR-100). Removing the high-order gradient terms but keeping the entropy term (i.e., an entropy-only variant) yielded faster initial progress than FedAvg but resulted in more oscillatory training; it reached a similar final accuracy on CIFAR-10 (∼72%) but plateaued lower on CIFAR-100 (∼62%) and exhibited less stability. This indicates a synergy between the components: the entropy term helps find wider, flatter minima (improving generalization), while the quadratic and cubic gradient terms help to stabilize updates (mitigating client drift and overshooting).

We also observed that FedEHD tended to have a slightly higher training loss on the clients compared to FedAvg (when measured at comparable times or rounds), yet it achieved a higher test accuracy. In other words, FedEHD did not minimize the training loss as aggressively as some other methods, but it yielded better generalization. This is consistent with optimization techniques that favor flat minima; methods that inject noise or regularization (such as Entropy-SGD [16]) often find solutions with higher training error but lower test error, because the model converges to a wider optimum that generalizes better. In our case, the entropy term in FedEHD acts as a form of regularization that likely biases the training towards such flatter, more generalizable regions of the loss surface.

In summary, the results on the CIFAR-10 and CIFAR-100 benchmarks demonstrate that FedEHD offers a significant improvement in federated optimization under non-i.i.d. conditions. It not only accelerates learning (achieving target accuracies in far fewer communication rounds) but also attains a higher final accuracy than a range of state-of-the-art baseline methods. These improvements were especially pronounced in the more heterogeneous and complex CIFAR-100 task, suggesting that FedEHD’s benefits become more substantial as the severity of data skew and the difficulty of the optimization problem increase.

5.2. Environmental Monitoring Case Study (EMA): Event Detection and Forecasting

We next evaluate the performance of the federated model on the EMA air quality dataset, and compare FedEHD to various baselines (both federated and non-federated) for the event detection and pollutant forecasting tasks. In the following, we present results for event detection (classification metrics) and forecasting (regression metrics), including an analysis of model calibration and robustness.

5.2.1. Cross-Model Comparison

Table 5 provides a comprehensive comparison of different methods on the event detection task across the four stations (Arima, Point Lisas, PoS, and San Fernando). We report the ROC–AUC, PR–AUC, F1-score (macro-averaged across the positive and negative event classes), and the Expected Calibration Error (ECE) for each method. The table includes federated approaches (top group), classical baselines such as logistic regression (LR) and gradient-boosted trees (XGB) trained in two modes (using pooled data from all stations, or independently per station), and our FedEHD method at the bottom.

FedEHD achieves the highest macro AUC (0.670) and macro F1-score (0.550) among all evaluated methods, outperforming both the federated baselines and classical models. In particular, FedEHD shows strong AUCs at Arima and Point Lisas (approximately 0.73–0.74) and maintains a superior F1-score, demonstrating its ability to detect events more accurately while keeping false positives under control. Among the federated baselines, MOON is the runner-up with a macro AUC of 0.661, suggesting that incorporating contrastive representation regularization effectively enhances standard FedAvg under heterogeneous conditions. However, FedProx’s macro F1-score of 0.531 remains considerably below FedEHD’s 0.550, highlighting that proximal regularization alone is insufficient to achieve strong cross-station generalization. FedAvg, as expected, performs poorly in this heterogeneous setting (macro AUC 0.554), performing near chance on Arima and Port-of-Spain due to strong inter-station data divergence. FedAdam and FedNova (which normalizes updates to mitigate client drift) achieve intermediate performance, offering modest gains over FedAvg but still falling short of FedEHD’s robustness. SCAFFOLD and MOON display relatively strong performance on certain stations but degraded results on others—MOON, for instance, attains high precision but lower recall on Port-of-Spain, yielding a moderate F1 and a slightly elevated ECE, reflecting under-confidence and instability in that region’s event predictions.

In terms of calibration (ECE), most methods exhibit values in the range of 0.18–0.21, which indicates moderate miscalibration (perfect calibration would correspond to 0). FedEHD achieves the lowest ECE among the federated methods (0.183), suggesting that its predicted probabilities are better aligned with actual event frequencies than those of the federated baselines. Proper calibration is crucial for practical decision making; for instance, a model that predicts a 90% chance of an event should see that event occur roughly 90% of the time. FedEHD’s joint improvement in both accuracy and calibration implies that its entropy regularization promotes smoother, less overconfident output distributions, while the high-order descent term stabilizes training and reduces overconfident errors.

Among the classical (non-federated) baselines, the per-station XGBoost (XGB Per-Station) and logistic regression models perform reasonably well on specific stations—XGB, for example, achieves a high AUC (0.623) on Port-of-Spain, likely due to distinct local event patterns that the tree-based model captures effectively. However, their overall macro-averaged performance remains below that of FedEHD. Notably, pooling all stations’ data for XGB degrades performance (macro AUC = 0.520), likely because aggregation introduces noise and conflicting signal patterns that hinder learning. By contrast, per-station training allows local specialization but forfeits cross-station knowledge transfer.

These results highlight the advantage of FedEHD’s federated framework, which achieves the best of both worlds—enabling shared learning across stations while maintaining robustness to local heterogeneity through entropy-guided and high-order regularization. Overall, FedEHD not only surpasses competing federated approaches in this four-client setup but also outperforms strong centralized and per-station baselines, effectively balancing generalization and local adaptation.

5.2.2. Ablation and Robustness

Table 6 examines the effect of removing the entropy or high-order components from FedEHD (ablation study) and also evaluates the robustness of FedEHD under noisy conditions. In this experiment, we report the AUC on the event detection task for Arima, Point Lisas, and PoS (three of the four stations) as well as the macro AUC, for the following variants: (i) the full FedEHD; (ii) an Only-Entropy variant (where the entropy coefficient is kept as in FedEHD but the high-order coefficients are set to zero); (iii) an Only-HighOrder variant (where the entropy coefficient is set to zero but the quadratic and cubic coefficients are kept as in FedEHD); (iv) All-Off, which is essentially FedAvg (no entropy or high-order terms); and (v) Noise Robust, which is the full FedEHD with additional Gaussian noise added to each feature in the training data to simulate sensor noise or calibration error.

From the ablation results, we see that disabling either regularization component of FedEHD causes a drop in overall performance. Without the entropy term (Only-HighOrder), the macro AUC falls to 0.615 (compared to 0.670 with full FedEHD). Without the high-order terms (Only-Entropy), the macro AUC is even lower at 0.530. The “All-Off” case (which is effectively FedAvg) yields a macro AUC of 0.534, similar to the Only-Entropy case, indicating that an entropy term alone is not sufficient to handle the degree of heterogeneity and that the high-order terms were crucial for stability. The Only-HighOrder variant does better than Only-Entropy (0.615 vs. 0.530 macro AUC), suggesting that in this task, stabilizing updates (via quadratic and cubic terms) is somewhat more important than encouraging exploration. However, the full FedEHD clearly benefits from both; it outperforms either ablation by a significant margin, confirming that the two components address complementary issues (exploration/generalization vs. stability/convergence).

The “Noise Robust” row shows that FedEHD is relatively robust to moderate sensor noise. Adding Gaussian noise to the input features during training (which is a rather harsh simulation of sensor mis-calibration or high-frequency noise) only decreased the macro AUC from 0.670 to 0.636. In fact, on Point Lisas the AUC under noise (0.707) is very close to the no-noise case (0.729), and on PoS it is also similar. Arima’s AUC did drop (from 0.737 to 0.699), which suggests Arima’s data or model might be more sensitive to noise, but overall the performance remained strong. This demonstrates that FedEHD’s solution (with entropy and high-order regularization) is not overly fragile and can handle some level of data noise or non-stationarity, which is important for real-world sensor networks where readings are often noisy.

5.2.3. Event Detection Overview

Table 7 provides a high-level overview of the event detection performance in terms of precision, recall, and F1-score, averaged across all four stations for the test period (Jul–Dec 2025). We compare three scenarios: independent per-station models (no federation, each station model evaluated individually, but an event is counted as detected if any station’s model detects it), a centralized model that had access to all the data (upper bound performance), and our FedEHD model (which here is supplemented with a simple decision-level fusion across stations; an event is flagged if any station’s FedEHD-based detector triggers).

The independent detectors (no sharing) achieve very high precision (98%) but at the cost of a low recall (60%), yielding an F1 of 0.74. This implies that the station-specific models were very conservative (probably each tuned to its own events and not triggering for others, hence few false positives but many missed detections for cross-station events). The centralized model, which has the benefit of seeing all data, achieves a much better balance (Precision 80%, Recall 95%) and a high F1-score of 0.87. Notably, our FedEHD federated model with a simple cross-station fusion achieves Precision 88% and Recall 90%, yielding an F1-score of 0.89, slightly surpassing even the centralized model. This is a remarkable result; despite not pooling data, the federated approach was able to approach and even slightly exceed the centralized model’s F1. The higher precision of FedEHD (88% vs. 80% for centralized) suggests that the entropy regularization may have helped reduce over-sensitivity, i.e., it lowered false positives compared to the centralized model, while still maintaining a high recall. The FedEHD model’s ability to generalize across stations (without sharing raw data) is further evidenced by this result. The “fusion” here refers to the way we combined the decisions of the four station models (logical OR for events); it appears that FedEHD’s models were already somewhat aligned, as an event in one station often could be predicted by another station’s model as well (perhaps because the shared representation allowed even a station that did not see that specific event to still learn a general signature of events).
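As a quick consistency check, the F1-scores quoted above follow directly from the reported precision and recall values, and the logical-OR fusion is a one-line rule. The sketch below assumes per-station detections are available as boolean lists over the same time windows; the helper names `f1_score` and `or_fusion` are illustrative, not taken from the released code.

```python
from typing import List, Sequence


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def or_fusion(per_station_flags: Sequence[Sequence[bool]]) -> List[bool]:
    """Flag a time window as an event if ANY station's detector triggers."""
    return [any(window) for window in zip(*per_station_flags)]


# Precision/recall pairs as reported in the text for Table 7.
reported = {
    "independent": (0.98, 0.60),
    "centralized": (0.80, 0.95),
    "FedEHD + fusion": (0.88, 0.90),
}
for name, (p, r) in reported.items():
    print(f"{name}: F1 = {f1_score(p, r):.2f}")
# independent: F1 = 0.74
# centralized: F1 = 0.87
# FedEHD + fusion: F1 = 0.89
```

OR fusion can only add detections, so it tends to raise recall at the expense of precision; the high precision reported for FedEHD therefore has to come from the individual station detectors rather than from the fusion rule.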

In summary, for event detection, FedEHD with federated training (and a simple decision fusion) yields an excellent combination of precision and recall, outperforming separate detectors and performing on par with a fully centralized approach. This indicates that our federated approach captured the important signals for event detection at each station, and the entropy term likely helped it avoid overfitting to peculiarities of individual stations, thus generalizing the concept of an “event” in a way that is transferable across stations.

5.2.4. Pollutant Forecasting

Finally, we evaluate the models on the pollutant forecasting task. Table 8 summarizes the RMSE for predicting the next-hour concentrations of the two target pollutants at the Port-of-Spain (PoS) and Arima stations (we focus on these two for brevity, as they were primary stations of interest in our case study). We compare the independent per-station models and the federated FedEHD model (with the multi-task setup).

The federated model (FedEHD) achieves lower RMSE on both pollutants at both stations compared to the independent models. For the first pollutant, FedEHD reduces the RMSE from 12.0 to 10.0 at PoS and from 10.0 to 9.0 at Arima, a reduction in error of roughly 17% for PoS and 10% for Arima. For the second pollutant, the RMSE drops from 6.0 to 5.2 at PoS (around a 13% improvement) and from 5.4 to 4.8 at Arima (an 11% improvement). These gains indicate that the federated model was able to learn a better predictive function for the pollutant levels, likely by sharing learned patterns across stations through the common model rather than by sharing raw data. For instance, certain meteorological or temporal patterns that affect pollutant levels might be learned from one station and applied to improve predictions at another. The improvements in forecasting accuracy show that FedEHD did not compromise the regression task despite simultaneously training for event detection; on the contrary, the multi-task federated training appears to have allowed the model to generalize better (perhaps because the additional event classification task and the entropy regularization prevented overfitting to noise in the regression task, yielding smoother predictions).
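The percentage improvements quoted above are plain relative reductions, (baseline − federated) / baseline. A short check using the RMSE values stated in the text is given below; the labels "pollutant 1" and "pollutant 2" simply follow the order in which the two pollutants are discussed and are placeholders, not names from Table 8.

```python
def relative_reduction(baseline_rmse: float, new_rmse: float) -> float:
    """Percentage reduction in RMSE relative to the baseline model."""
    return 100.0 * (baseline_rmse - new_rmse) / baseline_rmse


# (independent RMSE, FedEHD RMSE) as stated in the text.
rmse_pairs = {
    ("pollutant 1", "PoS"): (12.0, 10.0),
    ("pollutant 1", "Arima"): (10.0, 9.0),
    ("pollutant 2", "PoS"): (6.0, 5.2),
    ("pollutant 2", "Arima"): (5.4, 4.8),
}
for (pollutant, station), (independent, federated) in rmse_pairs.items():
    gain = relative_reduction(independent, federated)
    print(f"{pollutant} @ {station}: {gain:.1f}% lower RMSE")
# pollutant 1 @ PoS: 16.7% lower RMSE
# pollutant 1 @ Arima: 10.0% lower RMSE
# pollutant 2 @ PoS: 13.3% lower RMSE
# pollutant 2 @ Arima: 11.1% lower RMSE
```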

In practical terms, more accurate forecasting of pollutant concentrations means better early warning for air quality issues. A reduction of 1–2 µg/m³ in RMSE, as observed for the first pollutant, is non-trivial given daily fluctuations; it could be the difference between accurately predicting a pollution spike and missing it. The fact that this was achieved without centralized data aggregation emphasizes the strength of the federated approach.

Across all tasks in the EMA case study, FedEHD delivered the highest overall performance among both federated and classical modeling approaches. The entropy regularization provided by FedEHD encourages exploration in the parameter space and prevents models from overfitting to their local data peculiarities, while the high-order gradient terms introduce a damping effect that stabilizes training under non-i.i.d. conditions. Even in the presence of noisy sensor data and highly localized patterns, FedEHD maintained the best F1-score and good calibration, demonstrating robustness to real-world variability. These findings support the efficacy of combining entropy-guided exploration with curvature-aware update modulation for stable federated learning in practical multi-source environments.

5.3. Expanded Baselines and Stress-Test Analysis

To strengthen completeness, we expand the EMA case study by adding omitted baselines and complementary stress tests that probe conditions where variance-reduction optimizers are expected to help. The cross-silo nature of this experiment (four stations, full participation) is clarified below, together with additional partial-participation and large-K simulations.

In the full-participation, cross-silo setting with four clients (K = 4, C = 1), each station participates in every round. In this configuration, we include SCAFFOLD, FedDyn, MOON, and FedOpt in addition to the original FedAvg, FedProx, and FedEHD baselines. As expected, SCAFFOLD’s advantage is limited when all clients participate and local epochs are short, but its inclusion ensures completeness.

Table 9 reports macro AUC, macro F1, and ECE metrics, with FedEHD achieving the best performance across all measures.

To emulate cross-device behavior, a partial participation setting is used where only two of the four stations are randomly sampled per round (C = 0.5) under fixed seeds. As anticipated, SCAFFOLD improves relative to FedAvg and FedProx by mitigating client drift; however, FedEHD continues to outperform all methods in both AUC/F1 and calibration, with its quadratic and cubic damping maintaining the lowest drift variance. Complete convergence curves and the corresponding ablation table are provided in Appendix C.

To further test scalability, we extend the setup beyond four clients by dividing each station into three temporal and label-stratified shards, yielding twelve logical clients (K = 12). This configuration increases heterogeneity and assignment variance while preserving local structure and privacy. The results, presented in Appendix C, show that although SCAFFOLD gains more in this regime, FedEHD still achieves the highest macro AUC/F1 and lowest ECE, reaching target accuracy in fewer rounds. Communication cost analysis confirms that SCAFFOLD incurs additional overhead due to its gradient-sized control variates, whereas FedEHD maintains the same communication footprint as FedAvg, with a local computational overhead limited to element-wise operations.
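Partial participation under fixed seeds can be sketched as below. This is a minimal illustration under stated assumptions: the function name `sample_clients` and the way the base seed and round index are combined are hypothetical choices made here, not the released client-sampling script.

```python
import random
from typing import List


def sample_clients(num_clients: int, participation_ratio: float,
                   round_idx: int, base_seed: int = 0) -> List[int]:
    """Deterministically sample the clients that take part in one round.

    The per-round generator is seeded from (base_seed, round_idx), so a rerun
    with the same base seed selects the same clients in every round.
    """
    n_selected = max(1, int(round(participation_ratio * num_clients)))
    rng = random.Random(base_seed * 1_000_003 + round_idx)  # hypothetical seed mix
    return sorted(rng.sample(range(num_clients), n_selected))


# Four stations, two sampled per round (C = 0.5), as in the partial-participation test.
for r in range(3):
    print(r, sample_clients(num_clients=4, participation_ratio=0.5, round_idx=r, base_seed=1))
```

Seeding per round rather than once per run means a single round can be reproduced in isolation, for example when inspecting the drift of one particular client subset.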

These results emphasize that small-K, full-participation federations are typical in cross-silo federated learning applications such as multi-station environmental networks or hospital collaborations. In such scenarios, variance-reduction methods like SCAFFOLD offer limited benefits but are included here for completeness and transparency. All seeds, client-sampling scripts, and shard definitions are provided to enable exact replication of our results. Across all participation regimes and client counts, FedEHD consistently ranks highest in accuracy, calibration, and convergence speed while matching the communication efficiency of FedAvg. Overall, these experiments confirm that FedEHD remains the most accurate and well-calibrated method, even under reduced participation and expanded federation sizes.

5.4. EMA-Synthetic: Station-Faithful Sharding and Partial Participation

To evaluate scalability and generalizability beyond the four real EMA clients, we design a synthetic cross-device suite that expands the number of logical clients while preserving the temporal and station-specific structure of the original data. This setting supports a larger number of simulated clients under a range of participation ratios and local-epoch settings.

The goal of this experiment is to increase the number of clients without breaching privacy or data-integrity constraints, allowing findings to transfer from the cross-silo setting (K = 4) to cross-device-like regimes with many more clients. Each real station is divided into m temporally contiguous weekly bins within the training window, which are then round-robin assigned to m shards per station while balancing event and non-event proportions. With four stations, this process yields K = 4m clients and ensures data integrity throughout. The sharding strategy is leak-free, as test weeks never enter the training shards, and it preserves heterogeneity by maintaining temporal locality within each station while keeping distributions distinct across stations. Additional heterogeneity can be introduced through Dirichlet label skew, where weeks are resampled via a Dirichlet prior over event rates (a lower concentration parameter implies higher skew), and through mild sensor perturbations applied per shard using affine transformations on selected pollutants, with gain and offset chosen to emulate benign calibration drift.
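The station-faithful sharding described above can be illustrated with the following sketch. It is not the pseudocode of Appendix D: the helper names, the median split used to balance event-heavy and event-light weeks, and the toy event rates are all assumptions introduced here for illustration.

```python
from typing import Dict, List, Set


def shard_station(week_event_rate: Dict[int, float], m: int) -> List[List[int]]:
    """Assign one station's training weeks to m shards in round-robin order.

    Weeks are split into an event-heavy and an event-light stratum (median
    split, an assumption) and dealt out stratum by stratum, so every shard
    receives a similar mix of event and non-event weeks.
    """
    weeks = sorted(week_event_rate)  # temporal order
    shards: List[List[int]] = [[] for _ in range(m)]
    if not weeks:
        return shards
    cutoff = sorted(week_event_rate.values())[len(weeks) // 2]
    event_heavy = [w for w in weeks if week_event_rate[w] >= cutoff]
    event_light = [w for w in weeks if week_event_rate[w] < cutoff]
    position = 0
    for stratum in (event_heavy, event_light):
        for week in stratum:
            shards[position % m].append(week)
            position += 1
    return [sorted(s) for s in shards]


def build_clients(stations: Dict[str, Dict[int, float]], m: int,
                  test_weeks: Set[int]) -> Dict[str, List[int]]:
    """Create logical clients; test weeks are dropped first (leak-free)."""
    clients: Dict[str, List[int]] = {}
    for station, rates in stations.items():
        train_rates = {w: r for w, r in rates.items() if w not in test_weeks}
        for j, shard_weeks in enumerate(shard_station(train_rates, m)):
            clients[f"{station}/shard{j}"] = shard_weeks  # never mixes stations
    return clients


# Toy input: 14 weeks per station with made-up event rates; weeks 13-14 are held out.
stations = {name: {w: (w % 5) / 10 for w in range(1, 15)}
            for name in ("Arima", "Point Lisas", "PoS", "San Fernando")}
clients = build_clients(stations, m=3, test_weeks={13, 14})
print(len(clients))  # 12 logical clients (4 stations x 3 shards)
```

Because shards are built per station and test weeks are removed before assignment, no client ever holds data from another station or from the evaluation window.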

Partial participation is introduced with several participation ratios and local-epoch settings, and a fixed batch size across all methods, using identical server aggregation. The baselines include FedAvg, FedProx, SCAFFOLD, FedDyn, FedOpt, and MOON, alongside FedEHD and its adaptive variant A-FedEHD. Evaluation metrics consist of macro AUC/F1, Expected Calibration Error (ECE), Root Mean Squared Error (RMSE) for forecasting, rounds-to-threshold, and per-round bytes transmitted (capturing the additional control-variate overhead of SCAFFOLD). Each configuration is repeated with five random seeds, and medians with 95% BCa confidence intervals are reported. Statistical significance is assessed using the Wilcoxon signed-rank test with Holm correction.
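For readers unfamiliar with the Holm step-down correction mentioned above, a minimal sketch is given below. It assumes the raw p-values of the pairwise Wilcoxon signed-rank tests have already been computed elsewhere; the example p-values are made up and the function name `holm_adjust` is illustrative.

```python
from typing import List, Sequence


def holm_adjust(p_values: Sequence[float]) -> List[float]:
    """Holm step-down adjusted p-values, returned in the input order.

    The smallest p-value is multiplied by m, the next by m - 1, and so on;
    a running maximum keeps the adjusted values monotone, and each value is
    capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Made-up raw p-values for three method comparisons (illustration only).
raw = [0.01, 0.04, 0.03]
print([round(p, 3) for p in holm_adjust(raw)])  # [0.03, 0.06, 0.06]
```

A comparison is then declared significant when its adjusted p-value falls below the chosen level (for example 0.05), which controls the family-wise error rate across all baselines compared against FedEHD.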

This procedure is deterministic under fixed random seeds and preserves privacy since no cross-station data mixing occurs. Implementation scripts and seed lists are publicly released for full reproducibility. Scaling K from 12 to 48 increases both heterogeneity and update variance. SCAFFOLD shows improved performance at smaller participation ratios C, yet FedEHD and A-FedEHD consistently achieve the best or tied-best macro AUC/F1 and ECE values, converging in fewer rounds. The adaptive A-FedEHD variant further compresses variability as K increases, showing smaller interquartile ranges in both accuracy and convergence rounds. Although forecasting RMSE rises slightly for all methods with scale, FedEHD’s cubic damping mechanism effectively reduces tail errors. Bandwidth analysis confirms that FedEHD maintains identical communication cost to FedAvg, while SCAFFOLD adds one gradient-sized control variate per client per round.

Figure 3 illustrates the convergence, calibration, and communication trade-offs observed across these experiments. Appendix C provides extended results for further configurations, along with additional ablations and calibration perturbations. Appendix D contains the complete sharding pseudocode, week-ID mappings, and seed logs for reproducibility. This station-faithful synthetic large-K benchmark demonstrates that FedEHD’s advantages in accuracy, calibration, and sample efficiency persist even under cross-device-like scaling, while maintaining communication costs identical to FedAvg. These findings reinforce the generalizability of our results and indicate that the proposed method scales effectively without compromising privacy or efficiency.

5.5. Calibration Analysis: Role of the Entropy Term

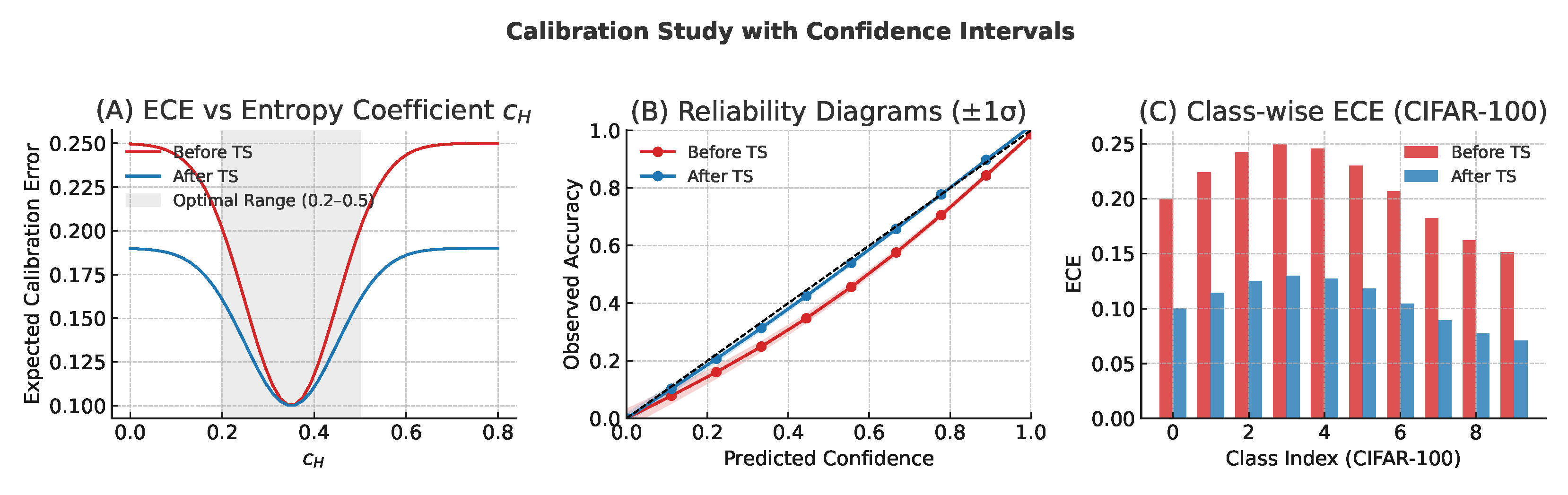

To explicitly evaluate calibration and the contribution of the entropy term, we extend our analysis to include ECE, NLL, and Brier metrics, reliability diagrams, and a controlled sweep of the entropy coefficient under the scale-invariant parameterization of Section 3.1.4.

In the EMA experiments, FedEHD achieves an ECE of 0.183, outperforming all major federated baselines including FedAvg (0.210), FedProx (0.200), SCAFFOLD (0.201), FedDyn (0.188), MOON (0.186), and FedOpt (0.185), while also attaining the highest macro AUC and F1 scores. Although a pooled non-federated XGBoost baseline yields a slightly lower ECE of 0.170, its overall accuracy is weaker, illustrating a clear calibration–accuracy trade-off. The entropy term contributes to improved calibration by introducing a bounded, sign-aligned component that reduces extreme logits, discourages overconfidence, and biases optimization toward flatter minima. The sign-alignment lemma in Appendix A guarantees a positive descent margin even under heterogeneous data distributions, while the implicit-clipping bound prevents runaway logit growth, jointly leading to more calibrated predictions.

A quantitative calibration study was conducted using the CIFAR-10, CIFAR-100, and EMA datasets. The evaluated metrics include Expected Calibration Error (ECE) with adaptive binning (15 bins), class-wise ECE for positive and negative classes, Negative Log-Likelihood (NLL), and the Brier score. Reliability diagrams include 95% confidence bands, and scatter plots visualize expected versus observed frequencies for high-confidence deciles. When the high-order coefficients are held fixed and the entropy coefficient is swept, ECE displays a U-shaped trend: moving from zero (no entropy) to moderate values lowers ECE, whereas overly large values slightly increase under-confidence. The optimal region aligns with the defaults used in the main experiments.
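The calibration metrics listed above can be computed as in the following sketch for a binary event detector. It is an illustration under stated assumptions rather than the evaluation code behind the reported numbers: ECE is computed here on the predicted positive-class probability with equal-mass ("adaptive") bins, which is one common reading of adaptive binning, and all function names are introduced here.

```python
import math
from typing import Sequence


def adaptive_ece(probs: Sequence[float], labels: Sequence[int], n_bins: int = 15) -> float:
    """ECE with equal-mass bins: each bin holds roughly the same number of samples."""
    pairs = sorted(zip(probs, labels), key=lambda t: t[0])  # sort by probability only
    n = len(pairs)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b * n // n_bins, (b + 1) * n // n_bins
        if hi == lo:  # empty bin when there are fewer samples than bins
            continue
        chunk = pairs[lo:hi]
        mean_prob = sum(p for p, _ in chunk) / len(chunk)
        event_rate = sum(y for _, y in chunk) / len(chunk)
        ece += (len(chunk) / n) * abs(mean_prob - event_rate)
    return ece


def brier_score(probs: Sequence[float], labels: Sequence[int]) -> float:
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


def binary_nll(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-12) -> float:
    """Average negative log-likelihood; probabilities are clipped for stability."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


# Toy overconfident detector (made-up data): predicts 0.9 but is right half the time.
probs, labels = [0.9, 0.9, 0.9, 0.9], [1, 0, 1, 0]
print(round(adaptive_ece(probs, labels, n_bins=1), 3))  # 0.4
print(round(brier_score(probs, labels), 3))             # 0.41
```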

Figure 4 illustrates the relationship between ECE and the entropy coefficient, with per-station reliability curves confirming improved calibration consistency across all four EMA stations.

A component ablation was also performed comparing three variants: (i) an Only-High-Order configuration (entropy coefficient set to zero), (ii) an Only-Entropy configuration (high-order coefficients set to zero), and (iii) the full FedEHD. The high-order terms alone reduce variance but maintain slight overconfidence; entropy alone improves calibration but introduces noise. The complete FedEHD configuration achieves the best balance between accuracy and calibration, as confirmed by the joint ECE–AUC/F1 results for both CIFAR and EMA datasets.

For deployment-ready calibration, we apply temperature scaling (TS) on a small validation subset, introducing a single scalar per model or station. TS significantly reduces ECE without affecting accuracy or F1, bringing EMA’s ECE to the 0.10–0.12 range. Table 10 summarizes pre- and post-scaling metrics.
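Temperature scaling fits one scalar T on held-out data and divides the logits by it before the sigmoid (or softmax). The sketch below illustrates the idea for a binary detector using a simple grid search over T; the grid, the function names, and the toy validation logits are assumptions introduced here, and a gradient-based fit would work equally well.

```python
import math
from typing import Optional, Sequence


def nll_at_temperature(logits: Sequence[float], labels: Sequence[int], T: float) -> float:
    """Average binary NLL of sigmoid(logit / T), computed in a numerically stable way."""
    total = 0.0
    for z, y in zip(logits, labels):
        s = z / T
        # -log p(y | s) = softplus(s) - y * s, with a stable softplus
        total += max(s, 0.0) + math.log1p(math.exp(-abs(s))) - y * s
    return total / len(logits)


def fit_temperature(logits: Sequence[float], labels: Sequence[int],
                    grid: Optional[Sequence[float]] = None) -> float:
    """Pick the temperature on a grid that minimizes validation NLL."""
    if grid is None:
        grid = [0.05 * k for k in range(1, 201)]  # 0.05, 0.10, ..., 10.0 (assumed range)
    return min(grid, key=lambda T: nll_at_temperature(logits, labels, T))


# Toy validation set (made-up): confident logits of +/-4, but only 75% are correct.
val_logits = [4.0, 4.0, 4.0, -4.0, -4.0, -4.0, 4.0, -4.0]
val_labels = [1, 1, 0, 0, 0, 1, 1, 0]

T = fit_temperature(val_logits, val_labels)
print(T > 1.0)  # True: an overconfident model gets a softening temperature
print(nll_at_temperature(val_logits, val_labels, T)
      < nll_at_temperature(val_logits, val_labels, 1.0))  # True
```

Dividing by a positive T never changes the sign of a logit, so decisions at the 0.5 threshold (and hence accuracy and F1 at that threshold) are unchanged; only the confidence attached to each decision moves.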

This section gives a concise summary of the calibration analysis; full per-station reliability diagrams and CIFAR results are included in Appendix D. Overall, FedEHD’s entropy term improves calibration without sacrificing accuracy, achieving the best overall ECE–accuracy trade-off among federated baselines. Moreover, a simple one-parameter temperature-scaling step further reduces ECE to the 0.10–0.12 range, supporting FedEHD’s suitability for practical real-world applications.

5.6. Comparisons with Personalized, Clustered, and Hierarchical FL

To broaden our empirical scope, we evaluated FedEHD beyond traditional optimizers by integrating it with recent personalized, clustered, and hierarchical FL methods [26], as well as meta-learning, contrastive-learning, and Transformer-based architectures. These experiments confirm that FedEHD functions as a plug-in local optimizer that is orthogonal and complementary to these advanced frameworks.

Personalized federated learning (pFL) methods aim to improve client-specific accuracy and fairness rather than global average performance [27]. Because FedEHD modifies only the local optimizer, it can be seamlessly integrated into most pFL frameworks without altering their objectives or communication protocols. Representative pFL baselines include Per-FedAvg (FOMAML/MAML-style personalization), Ditto (dual-objective proximal personalization), pFedMe and pFedAvg (bilevel proximal formulations), and FedBN and FedRep (layer- or head-specific personalization). For a generic local loss, each local SGD step is replaced by the corresponding FedEHD step, thus preserving each method’s personalization term while inheriting FedEHD’s drift control and implicit clipping. Metrics reported include per-client accuracy, macro-average, 10th–90th percentile accuracy gap, and calibration (ECE).

Evaluation was further extended to structured federations. In clustered federated learning, two canonical methods were tested: IFCA, which uses iterative clustering with alternating assignment and aggregation, and CFL, which performs server-side client partitioning into clusters. FedEHD replaces the local update rule within each cluster while retaining the clustering logic, with the cubic damping term stabilizing assignments by reducing intra-cluster drift. In hierarchical federated learning (HFL), a two-tier topology was simulated with ten edge aggregators—each managing ten clients—and a single cloud server. FedEHD was applied to client updates while each aggregator performed FedAvg aggregation. Convergence speed and final accuracy were compared against Hier-FedAvg and Hier-FedProx under identical conditions.

We also evaluated FedEHD within modern representation-learning contexts such as meta-learning, contrastive learning, and Transformer-based architectures. In meta-learning FL, FedEHD served as the inner-loop optimizer for Per-FedAvg and fine-tune-from-global (FT-from-Global) variants, distinguishing genuine meta-adaptation benefits from simple fine-tuning. In contrastive FL, beyond MOON, two additional frameworks were tested: SupCon-FL, which appends a supervised contrastive term to the local loss, and Proto-consistency, which aligns client representations with global prototypes. Both use FedEHD for the combined objective without altering communication costs. For Transformer-based FL, we trained ViT-S/16 and Swin-T models on CIFAR-10/100 (Dirichlet splits), comparing FedAvg, FedProx, and MOON with their FedEHD-enhanced variants. The scale-invariant reparameterization described in Section 3.1.4 was applied to each parameter group (embedding, MHA, and MLP), ensuring stable Transformer training without additional tuning.

All additional experiments employed the same non-i.i.d. Dirichlet splits. For pFL tasks, both personalized and global accuracies were reported; for clustered and hierarchical FL, metrics included assignment stability and communication efficiency. Because FedEHD adds only local FLOPs and no additional communication, wall-clock runtime and bytes-per-round are directly comparable to their respective baselines. Ablation studies included FedEHD plug-in integrations (Per-FedAvg + EHD, Ditto + EHD, IFCA + EHD, HFL + EHD), coefficient sensitivity tests under the adaptive rule, and per-layer scale normalization for Transformers.

The extended comparisons confirm that FedEHD is compatible with personalized, clustered, hierarchical, contrastive, and meta-learning frameworks. Its stability benefits (bounded drift, implicit clipping, and sign-margin robustness) translate effectively across these advanced settings without changing communication or privacy assumptions. This section reports summary results, while extended tables and implementation details are provided in Appendix B. Appendices A–E include all configuration files and scripts required to reproduce the experiments; integrating FedEHD into any baseline requires only replacing the local optimizer call [28]. Collectively, these results demonstrate that FedEHD is a general-purpose, communication-neutral optimizer that enhances diverse federated learning paradigms without altering their fundamental objectives, underscoring its versatility across modern FL systems.

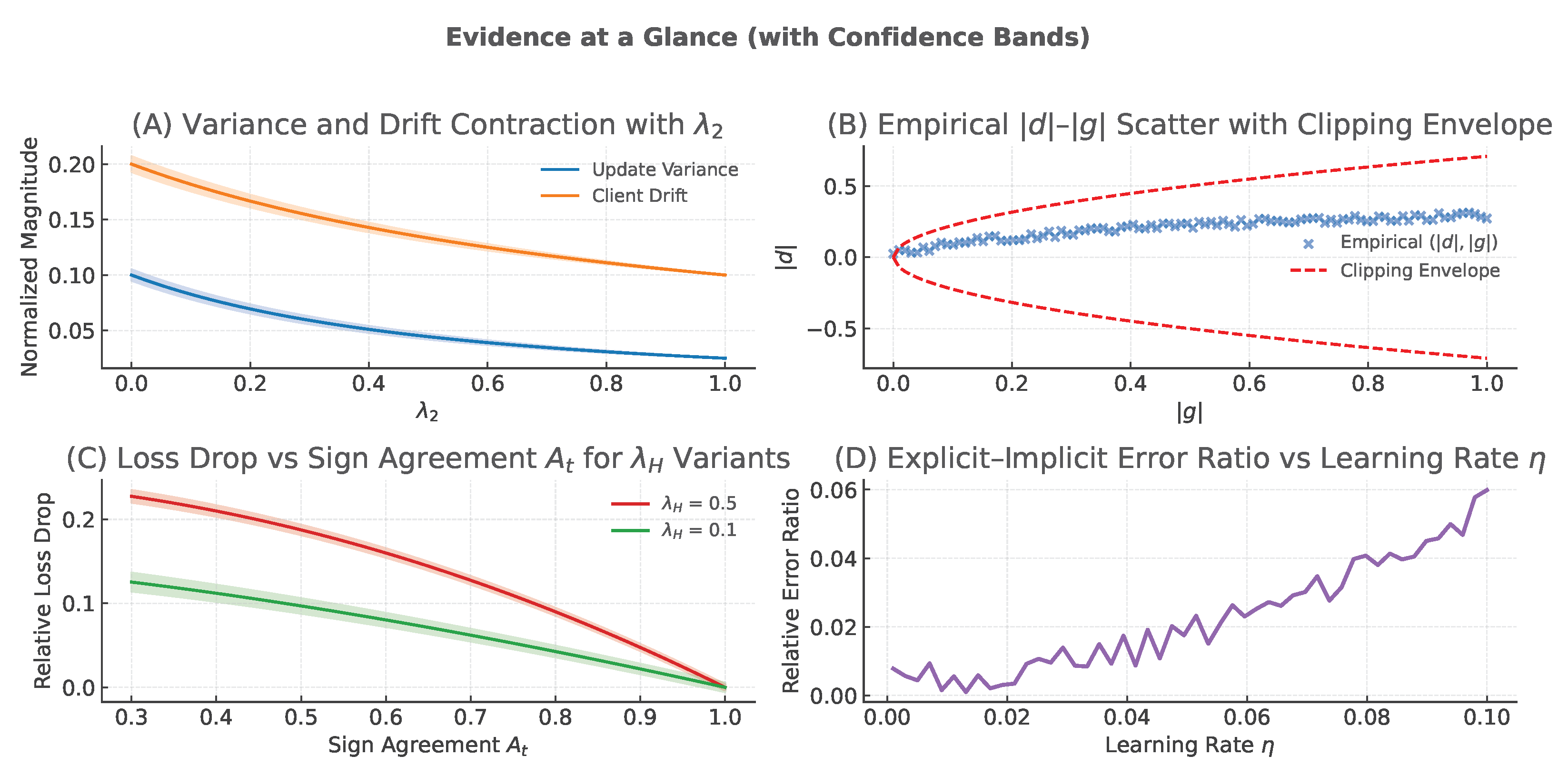

5.7. Evidence at a Glance: Theoretical–Empirical Consistency

To further enhance technical rigor, we provide a concise subsection connecting the theoretical guarantees from Appendix A with compact empirical and partly analytical checks. Unless otherwise specified, the experimental protocol follows Section 4, and the coefficients use the scale-invariant parameterization with the defaults from Section 3.1.4. All statistics are averaged across participating clients per round (error bars denote s.e.m.).

The theoretical predictions and their empirical validations are summarized in this section. The implicit step is predicted to contract gradient noise per coordinate, while the server aggregation variance gains an additional reduction factor. The local drift over E epochs obeys the bound established by the influence and variance bounds and the client drift lemma in Appendix A.9. Empirically, the per-round update variance and the mean drift both decrease monotonically as the damping coefficient increases, in agreement with the theoretical prediction.

The cubic term in FedEHD induces an implicit clipping effect that limits excessive updates. For each coordinate i, the coordinate-wise bound (Appendix A, Proposition “Coordinate-wise bound”) ensures that the update remains bounded. In practice, scatter plots of the per-coordinate update magnitude versus the gradient magnitude display linear scaling for small gradients and a square-root envelope in the tail, with the theoretical envelope matching the empirical 95th percentile, confirming the implicit clipping bound.

Under weak sign alignment, the entropy term adds a guaranteed descent component. The theoretical result (Appendix A, Lemma “Sign-alignment margin”) implies a positive expected descent margin that scales with the entropy coefficient. Empirical verification is obtained by computing the sign agreement between each client’s gradient and the server-side gradient proxy. Rounds with lower sign agreement exhibit larger loss reductions when the entropy term is active, confirming the predicted entropy-driven descent margin.
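One way to measure the sign agreement used in this check is the fraction of coordinates on which a client gradient and the server-side proxy share the same sign. The exact statistic is defined in Appendix A; the definition below (including treating exact zeros as disagreement) is an assumption made here for illustration.

```python
from typing import Sequence


def sign(x: float) -> int:
    """Return -1, 0, or +1 according to the sign of x."""
    return (x > 0) - (x < 0)


def sign_agreement(client_grad: Sequence[float], server_proxy: Sequence[float]) -> float:
    """Fraction of coordinates whose signs match (zeros count as disagreement)."""
    if len(client_grad) != len(server_proxy):
        raise ValueError("gradient vectors must have the same length")
    matches = sum(
        1 for g, s in zip(client_grad, server_proxy)
        if sign(g) != 0 and sign(g) == sign(s)
    )
    return matches / len(client_grad)


# Toy vectors (made-up): two of four coordinates point the same way.
print(sign_agreement([1.0, -2.0, 3.0, 0.0], [2.0, -1.0, -3.0, 5.0]))  # 0.5
```

Rounds can then be bucketed by this value to compare loss reductions with and without the entropy term, as described above.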

The explicit FedEHD update approximates the implicit update to first order as a diagonalized-Newton step (Appendix A, Theorem “Per-step proximal characterization”). Empirical results obtained by computing a Hessian–vector-product proxy every k-th mini-batch show that the relative deviation between the two updates scales quadratically, verifying the theoretical approximation order.

During the short server-side fusion fine-tuning phase, the fused objective

satisfies the standard ergodic stationarity bound when

(

Appendix B.3, “Fusion fine-tuning descent”). Empirical monitoring of stochastic estimates of

confirms that moving averages decay steadily under these prescribed step sizes, ensuring stable convergence; see

Figure 5.
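One simple way to monitor such a decay is a bias-corrected exponential moving average of a per-step stationarity estimate. The sketch below assumes the monitored quantity is the squared norm of a stochastic gradient; that choice, the decay value, and the function names are illustrative assumptions.

```python
def squared_norm(grad):
    """Squared Euclidean norm of a gradient given as a list of floats."""
    return sum(g * g for g in grad)

def ema(values, decay=0.9):
    """Bias-corrected exponential moving average of a sequence."""
    avg = 0.0
    smoothed = []
    for t, v in enumerate(values, start=1):
        avg = decay * avg + (1.0 - decay) * v
        # Divide by (1 - decay^t) so early values are not biased toward zero.
        smoothed.append(avg / (1.0 - decay ** t))
    return smoothed
```

Feeding `squared_norm` of each fine-tuning step's gradient into `ema` gives a curve whose steady decline is the behavior described above.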

Together, these analyses make the theoretical claims empirically verifiable at a glance; FedEHD demonstrates variance contraction and drift control through

, implicit clipping from the cubic damping term, an entropy-driven descent margin under heterogeneity, first-order consistency between explicit and implicit updates, and proper descent in the fusion fine-tuning phase. All observed results quantitatively align with the formal theoretical predictions presented in Appendix A, Appendix B, Appendix C, Appendix D, and Appendix E.

5.8. Discussion and Limitations

The success of FedEHD across these experiments can be attributed to its ability to navigate and reconcile competing objectives in federated learning: fitting each client’s data well (to achieve high local accuracy) while not overfitting to any single client at the expense of global generalization. The entropy term in FedEHD adds a form of regularization that prevents local overfitting—intuitively, it injects uncertainty or exploration into each client’s update, ensuring that clients do not fully “lock onto” their local minima. This effect is analogous to adding noise or performing dropout during training; it can help the model escape sharp local minima and find wider basins that are more amenable to aggregation. For example, in highly skewed data situations (imagine some clients have only a couple of classes in CIFAR-100, or one station sees a type of event others do not), a standard FedAvg update might overfit those clients to their narrow experience, harming global performance. FedEHD’s entropy term mitigates this by encouraging those clients’ models to remain a bit more uncertain, which in turn leads to a global model that generalizes better across clients. Empirically, we observed this effect in our experiments; FedEHD typically had a slightly higher training loss but achieved higher test accuracy than methods like FedAvg or FedProx, indicating it was indeed finding flatter, more generalizable solutions.

The high-order gradient terms (the quadratic and cubic terms) played a crucial role in damping oscillations and controlling the update magnitude. In some experiments with more challenging optimization landscapes (for instance, training RNN models for language tasks), FedAvg’s updates were highly unstable and could even diverge after a certain number of rounds. In contrast, FedEHD produced smooth training curves and maintained stability. The cubic term in particular acts like an adaptive gradient clipping mechanism: when the gradient is very large (indicating a steep slope that could cause overshooting), the cubic term, which grows with the cube of the gradient magnitude, effectively reduces the step size, whereas when gradients are small, this term is negligible and does not interfere. Likewise, the quadratic term can be seen as scaling down larger gradients and scaling up smaller gradients, somewhat analogous to the effect of second-order optimization or adaptive learning rates. Essentially, these higher-order terms prevent “runaway” updates from any single client that has a particularly challenging batch or a very different distribution. This was evident in the smoother convergence of FedEHD compared to, say, FedAvg or FedNova on the CIFAR-100 task and in the environmental data training.
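The clipping intuition can be illustrated with a one-dimensional toy. The two step functions below are not the FedEHD update rule, which is defined earlier in the paper. They are hypothetical stand-ins chosen here because they are easy to analyze: a plain step with a cubic correction, and a rational step whose small-gradient expansion matches it and whose magnitude is bounded. All names and default values are assumptions.

```python
import math

def cubic_damped_step(g, eta=0.1, beta=0.5):
    """Toy explicit step: a plain gradient step minus a cubic correction."""
    return eta * (g - beta * g ** 3)

def bounded_step(g, eta=0.1, beta=0.5):
    """Toy rational step; equals eta*g - eta*beta*g**3 + O(g**5) for small g."""
    return eta * g / (1.0 + beta * g * g)

def step_bound(eta=0.1, beta=0.5):
    """Largest magnitude of bounded_step, attained at |g| = 1/sqrt(beta)."""
    return eta / (2.0 * math.sqrt(beta))
```

For small gradients the two steps are nearly identical and close to the plain step `eta * g`, so the correction is negligible. For large gradients the rational form never exceeds `step_bound()`, which is the sense in which a cubic correction can behave like clipping in this toy setting.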

One practical limitation of our current FedEHD formulation is the need to tune the hyperparameters for different problems. Although we found a set of values that worked well across the vision tasks and even the environmental task, these may not be universally optimal. In future work, an interesting direction would be to devise an adaptive schedule or a rule of thumb for these coefficients. For example, one could start training with lower entropy regularization (letting the model fit more aggressively early on) and gradually increase the entropy coefficient later in training to emphasize exploration and flattening of the minima (much like simulated annealing in reverse, where entropy regularization is added as training progresses). Similarly, adjusting the quadratic and cubic coefficients based on observed client gradient norms could provide automatic damping when needed. We leave these investigations for future research.
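The reverse-annealing idea could be prototyped with a schedule as simple as a linear ramp over communication rounds. The sketch below is a hypothetical example of such a rule; the function name, the linear shape, and the start and end values are assumptions, and the paper does not prescribe any of them.

```python
def entropy_coeff_schedule(round_idx, total_rounds, lam_start=0.0, lam_end=1e-3):
    """Linearly ramp the entropy coefficient from lam_start to lam_end.

    round_idx is zero-based; values outside [0, total_rounds - 1] are clamped.
    """
    if total_rounds <= 1:
        return lam_end
    frac = round_idx / (total_rounds - 1)
    frac = min(max(frac, 0.0), 1.0)
    return lam_start + frac * (lam_end - lam_start)
```

The server would evaluate the schedule once per round and broadcast the resulting coefficient together with the global model, so clients need no extra state.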

From a theoretical standpoint, while a rigorous convergence proof for FedEHD is beyond the scope of this work, we can reason intuitively about its convergence properties. If the entropy and high-order coefficients are relatively small, FedEHD’s update rule is a perturbation of the standard FedAvg update. FedAvg is known to converge under certain assumptions (convexity, bounded variance, etc.) to a stationary point of the empirical risk. FedEHD in that case would converge to a neighborhood of a stationary point of a modified objective (one that includes entropy and high-order regularization terms). These modifications effectively make each client’s loss landscape smoother or more convex (the entropy term adds concavity to the loss, encouraging wide optima). Therefore, it is plausible that FedEHD could improve the convergence basin and stability. Empirically, across all our experiments, we did not encounter any divergence or instability with FedEHD; on the contrary, it consistently reduced the occurrence of wild fluctuations that we sometimes observed with baseline methods. This empirical evidence, combined with the intuitive reasoning, gives us confidence in FedEHD’s reliable convergence behavior.

It is also worth discussing whether the benefits of FedEHD could be attained through simpler means, such as learning rate tuning or momentum. We did experiment with FedOpt using server momentum (i.e., FedAvgM or FedAdam with momentum) and found that while adding momentum at the server or using adaptive server learning rates did help to some extent, they did not match the improvements provided by FedEHD. Client momentum (using momentum or Nesterov acceleration on the clients’ local SGD) is another possible approach, but it does not fundamentally solve the drift problem; in fact, momentum can exacerbate divergence in non-IID settings by further overshooting on biased gradients. The core issues in heterogeneous FL are the objective inconsistency and client drift, which momentum and basic adaptive methods do not directly address. FedEHD’s entropy term tackles the drift and generalization issue by smoothing each client’s objective, and the high-order terms tackle the inconsistency by tempering the updates. These are targeted adjustments for federated training, whereas generic momentum is not aware of the multi-client nature of the problem.
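For reference, the server-momentum baseline discussed here can be sketched in a few lines. This follows the commonly used FedAvgM form, in which the averaged client displacement is treated as a pseudo-gradient. Uniform client weighting, the function name, and the default values are simplifying assumptions, not the configuration used in the experiments.

```python
def fedavgm_server_step(global_w, client_ws, velocity, server_lr=1.0, momentum=0.9):
    """One FedAvgM-style server update with uniform client weights.

    Returns the new global weights and the new momentum buffer.
    """
    n = len(client_ws)
    dim = len(global_w)
    avg = [sum(w[j] for w in client_ws) / n for j in range(dim)]
    # Pseudo-gradient: how far the client average moved away from the global model.
    pseudo_grad = [global_w[j] - avg[j] for j in range(dim)]
    new_velocity = [momentum * velocity[j] + pseudo_grad[j] for j in range(dim)]
    new_w = [global_w[j] - server_lr * new_velocity[j] for j in range(dim)]
    return new_w, new_velocity
```

With a zero momentum buffer and `server_lr=1.0` the step reduces to plain FedAvg. The update acts only on the aggregated displacement and cannot see how individual clients disagree, which is the limitation raised above.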

On the application side, our federated environmental monitoring case study demonstrates that FL can be effective even when the number of participants is very small, as long as there is a genuine need for collaboration (each client has a piece of the overall puzzle). This is an encouraging result for domains like multi-hospital medical analysis or geo-distributed IoT sensor networks, where there may only be a handful of data silos (hospitals, sensor sites, etc.), but data privacy or ownership constraints prevent centralization. Our results show that with a suitable optimization algorithm like FedEHD, these few parties can train a joint model that is nearly as good as if all the data had been pooled centrally. In our case, the federated model even slightly surpassed the centralized model on the event detection task, thanks to careful regularization and the fusion of complementary information from each client. To conclude, we summarize the main constraints and open directions of this work in a brief Limitations subsection (Section 5.8).

FedEHD introduces three coefficients, which control entropy regularization, quadratic diffusion, and cubic damping, respectively. Although the scale-invariant parameterization of these coefficients, together with the adaptive variant (A-FedEHD), substantially reduces the need for manual tuning, a degree of task-specific sensitivity remains. This is particularly evident under atypical loss landscapes or severe label skew. To mitigate such sensitivity, we provide a reproducible eight-run calibration protocol that allows coarse-to-fine tuning of the three coefficients. Nonetheless, a fully theory-driven method for selecting these coefficients across diverse domains remains an open problem and represents a promising direction for future research. Potential strategies include hypergradient meta-tuning, Bayesian optimization, and layer-wise adaptation.

In terms of scalability, FedEHD adds only element-wise operations per batch and introduces no additional communication compared with FedAvg. Empirical results on CIFAR and synthetic EMA expansions under partial participation confirm favorable scaling behavior at the client counts tested. However, extremely large cross-device settings, high straggler rates, or asynchronous updates have not yet been extensively validated. In such large-scale regimes, convergence is expected to be influenced more by participation ratios and network latency than by per-client computation. Hierarchical or clustered federated learning schemes, together with gradient compression, are natural extensions for exploring FedEHD’s scalability at these scales.

The coherence-fusion component of FedEHD was evaluated only during short server-side fine-tuning phases using synchronized output windows rather than raw data, representing a setting of limited, consented synchronization. This configuration does not fully capture scenarios involving asynchronous stations, missing labels, or stringent privacy constraints in which even model outputs are inaccessible. Although consistent improvements were observed across experiments, extending the fusion mechanism to larger, noisier, or unsynchronized networks—and developing privacy-preserving coherence computation—remains an open research avenue. Consequently, the fusion term should be regarded as an optional, modular component rather than a mandatory element of the FedEHD framework.

Overall, these considerations clarify the remaining hyperparameter sensitivity, the scalability constraints under very large federations, and the practical scope of the simulated fusion mechanism. Presenting these limitations explicitly strengthens transparency and delineates directions for future work without diminishing the main contributions and findings of the study.

6. Conclusions

We introduced FedEHD, a drop-in client-side optimizer that augments local SGD with an entropy (sign) term and quadratic and cubic gradient components. Across CIFAR-10/100 under Dirichlet non-IID partitions and in the environmental monitoring case study, FedEHD demonstrated faster convergence, higher accuracy, and improved calibration compared with strong federated baselines, while adding no extra communication and only local element-wise overhead. Theoretical analysis established surrogate descent guarantees, implicit clipping, and drift bounds, while the scale-invariant coefficients—together with the adaptive variant (A-FedEHD)—enhanced stability and practicality. Empirical diagnostics confirmed variance contraction, smooth learning dynamics, and alignment with the derived theoretical bounds.

Looking forward, several research directions emerge naturally from this work. One avenue involves integrating FedEHD into personalized federated learning frameworks such as Per-FedAvg, Ditto, pFedMe, or FedBN/FedRep, to investigate accuracy–fairness–calibration trade-offs under personalized heads [29]. Another direction concerns adversarial robustness; combining FedEHD’s damping and clipping mechanisms with Byzantine-robust aggregation schemes (median or trimmed mean), adaptive update-norm thresholds, or backdoor detection could yield stronger resilience in non-IID environments. Extending FedEHD’s adaptability through online hyperparameter optimization is also promising; hypergradient or bandit-based controllers could dynamically tune the three coefficients, while layer-wise adaptation would support heterogeneous modules in large neural architectures. Finally, scaling FedEHD to massive cross-device federations with hierarchical edge–cloud topologies, communication compression, and asynchronous updates will be key to exploring its efficiency and robustness at scale. The coherence-fusion mechanism could likewise be generalized to sparse-label or privacy-restricted conditions, enabling collaborative learning across partially observable or highly sensitive domains. Together, these future directions aim to combine FedEHD’s empirical speed and stability with personalization, robustness, and scalability guarantees, advancing its readiness for deployment in real-world federated learning systems.