Abstract

It is natural and efficient to use human natural language (NL) directly to instruct robot task executions without prior user knowledge of instruction patterns. Currently, NL-instructed robot execution (NLexe) is employed in various robotic scenarios, including manufacturing, daily assistance, and health caregiving. It is imperative to summarize the current NLexe systems and discuss future development trends to provide valuable insights for upcoming NLexe research. This review categorizes NLexe systems into four types based on the robot’s cognition level during task execution: NL-based execution control systems, NL-based execution training systems, NL-based interactive execution systems, and NL-based social execution systems. For each type of NLexe system, typical application scenarios with advantages, disadvantages, and open problems are introduced. Then, typical implementation methods and future research trends of NLexe systems are discussed to guide the future NLexe research.

1. Introduction

1.1. Background

Human-robot cooperation facilitated by natural language (NL) has garnered increasing attention in human-involved robotics research. In this process, a human communicates with a robot using either spoken or written instructions for task collaboration [1,2,3,4]. The use of natural language enables the integration of human intelligence in high-level task planning with the robot’s physical capabilities, such as force, precision, and speed [5], in low-level task executions, resulting in intuitive task performance [6,7].

In a typical NL-instructed robot execution process, a human gives spoken instructions to a robot to modify its executions for improved performance. Sensors mounted on the robot capture the human voice, which is transcribed into written NL using speech recognition techniques. NL understanding is then performed to analyze the user’s intention so that the robot can carry out the task the human expects. The robot’s action is decided by multiple factors, including current robot status, dialog instructions, user intention, and robot memory. Using NL generation and speech synthesis, the robot answers the user’s questions and asks for help if needed. By combining speech input with environmental sensing, the human’s task understanding is integrated into robot decision-making, improving performance and reliability.

Human-instructed robot executions have also relied on other communication means. Tactile indications have many applications, such as identifying human-robot contact states (tactile states between robot and human body) based on contact location [8] and measuring hand control forces for robust stable control of a passive system [9]. Visual indications support applications such as intention estimation by understanding human attention based on gestures or body pose [10,11,12] and human behavior understanding based on motion detection for transferring skills to humanoid robots [13,14,15]. In comparison, robot executions using spoken NL indications have several advantages. First, NL makes human-instructed robot executions natural. With the aforementioned traditional methods, the human involved in robot executions must undergo training in order to use specific actions and poses that facilitate comprehension [16,17,18,19,20]. In NLexe, by contrast, even non-expert users without prior training can cooperate with a robot by using natural language intuitively [21,22]. Second, NL describes execution requests accurately. Traditional methods that rely on actions and poses offer only limited patterns to approximate execution requests, primarily due to the information loss inherent in the simplification of actions and poses (e.g., the use of markers to simplify actions) [23,24,25]. In NLexe, execution requests related to action, speed, tool and location are already defined in NL expressions [6,26,27]. With these expressions, execution requests for various task executions are described accurately. Third, NL transfers execution requests efficiently. The information-transferring method using actions/poses requires the design of various patterns for different execution requests [23,24,25]. Existing languages, such as English, Chinese and German, already have standard linguistic structures, which contain various expressions to serve as patterns [28,29]. NL-based methods therefore do not need specifically designed patterns for various execution requests, making human-instructed robot executions efficient. Lastly, since the instructions are delivered verbally rather than through physical involvement, human hands are set free to perform more important executions, such as “grasp knife and cut lemon” [30,31].

NLexe has been widely researched in the realm of automation due to its numerous advantages. Its applications span various areas, including task planning and handling of unexpected events for daily assistance [31,32], voice communication and control of knowledge-sharing robots in medical caregiving [33,34,35,36], NL-assisted manufacturing automation with failure learning to minimize human workload [6,37], parametrized indoor/outdoor navigation planning with human commands [2,38,39], and human-like interaction with emotion recognition for social companionship for children [40,41,42]. These scenarios are shown in Figure 1.

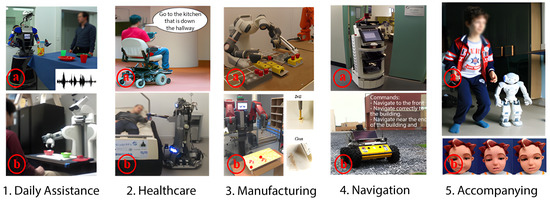

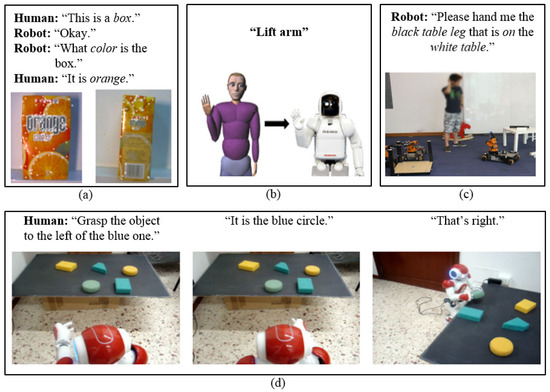

Figure 1.

Typical areas utilizing NLexe systems include various applications. NL-based robotic daily assistance is exemplified by studies such as (1a) [8] and (1b) [1]. NL-based healthcare applications are demonstrated in works like (2a) [33] and (2b) [34]. NL-based intelligent manufacturing is represented by research such as (3a) [37] and (3b) [6]. NL-based indoor/outdoor navigation is explored in studies like (4a) [38] and (4b) [39]. NL-based companion systems are investigated in research such as (5a) [43] and (5b) [41].

1.2. Two Pushing Forces: Natural Language Processing (NLP) and Robot Executions

From a realization perspective, the recent advancements in NLexe have been facilitated by the progress made in natural language processing (NLP) and robotic execution technologies.

Unlike NLP for text semantic analysis, NLP for robot executions needs to consider execution-specific situation conditions, such as human intentions, task progress, environmental context, and robot working statuses. Understanding human NL in a robot execution scenario is challenging for the following reasons. (1) Human NL requests are ambiguous: human-desired objects, such as ‘cup’ in the expression ‘a cup of water’, are referred to without specific descriptions of object location and physical attributes [44]. (2) Human NL requests are abstract: high-level plans, such as ‘prepare coffee, then deliver the coffee to me’, are usually instructed without robot execution details, such as hand pose and movement speed [45]. (3) Human NL requests are information-incomplete: key information, such as coffee type and cup placement location in the above example, is missing [46]. (4) Human NL requests can be inconsistent with the real world: a human-instructed object such as ‘cup’ may be unavailable in a practical situation [47]. To solve these problems, natural language processing (NLP) techniques, which automatically analyze the semantic meanings of human NL, such as speech and written text, are adopted. Supported by machine learning techniques in classification (assigning an object to one of a set of predefined classes), clustering (identifying similarities between objects in the scene) and feature extraction (selecting variables as features to represent the input and reducing the set of features to be processed), NLP has developed from purely syntax-driven processing, which builds syntax representations of sentence structures, to meaning-driven processing, which builds semantic networks for sentence meanings [48]. Improvements in NLP methods enable robots to understand human NL accurately, further enhancing the naturalness of robot executions.

Recent developments in robot execution have evolved significantly over time. Initially, it began with low-cognition-level action research, wherein actions were designed and selected based on human instructions. This phase primarily focused on executing predefined tasks without much autonomy. Subsequently, research progressed to a middle-cognition-level interaction stage, which involved a basic understanding of human motions, activities, tasks, and safety concerns [49]. The most recent advancements have focused on high-cognition-level human-centered engagement research. In this stage, the robot’s performance considers human psychological and cognitive states, such as attention, motivation, and emotion, to enhance the effectiveness and naturalness of human-directed robot actions [50]. The increasing emphasis on human factors in robot execution has led to a closer integration between robots and human users, thereby improving the intuitiveness of robot operations.

For a robot to collaborate with human users, techniques such as text generation, speech synthesis, and multi-domain sensor fusion were developed to enrich communication. Recent text generation techniques utilize generative models such as variational autoencoders (VAE) [51] and generative adversarial networks (GAN) [52] to generate NL sentences, which increases the naturalness of human-robot interaction (HRI) and facilitates users’ understanding. In NLexe scenarios such as a human asking a robot for information, these methods need to be combined with the robot’s specific tasks to generate text that carries pragmatic information from the robot’s knowledge in a form human users can understand [53]. To achieve fluent and intuitive human-robot interaction, robots need speech synthesis techniques that convert the generated text into speech that a human can hear easily, which is especially important for people with visual impairment [54]. A currently popular method, WaveNet [55], uses a probabilistic and autoregressive deep neural network to generate raw audio waveforms. Moreover, Ref. [56] further reduced the network’s complexity, making it possible to synthesize voice on a low-performance device. In a typical NLexe task, a robot accepting NL instructions from its user usually has sensors such as a camera and a distance sensor to assist task execution, which calls for multi-sensor fusion techniques. By combining the information from multiple sensors and instructions, the robot processes the information and decides on the best solution at the current moment [57,58,59].

1.3. Systematic Overview of NLexe Research

Advancements in NLP, such as modeling semantic meanings for complex NL expressions [60,61], interpreting implicit NL expressions by extracting the logic like “I need food” from commands like “I am hungry” [62,63], and enriching abstract NL expressions by associating them with extra commonsense knowledge [64,65], support accurate task understanding in NLexe. Advancements in robot execution capabilities, such as replicating or representing human actions [50,66], inferring and reacting to human intention based on reinforcement learning [67,68,69], and understanding a human’s implicit intention with the help of the environment and external knowledge [70,71], support intuitive task execution in NLexe. A common architecture for an NLexe system includes three key parts: instruction understanding, decision-making, and knowledge-world mapping. In instruction understanding, the user’s verbal instructions are acquired by sensors and processed by speech recognition and language understanding systems to perform a comprehensive semantic analysis. In decision-making, various algorithms, such as deep neural networks, collect and construct task-related knowledge using information from the robot knowledge base or NL knowledge, and support robot decision-making in various manners. In knowledge-world mapping, information patterns are contextualized within real-world scenarios, which allows gaps left by incomplete knowledge to be resolved and the NLexe process to be executed successfully. With supporting techniques from both NLP and robot execution, NLexe has developed from low-cognition-level symbol-matching control, such as using “yes” or “no” to control robotic arms, to high-cognition-level task understanding, such as identifying a plan from the description “go straight and turn left at the second cross”. Combining the advancements of both NLP and robot execution improves the effectiveness of both communication and execution in NLexe.
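The three-part architecture described above can be illustrated with a minimal sketch. It assumes the speech has already been transcribed to text; the `Intent` class, the `KNOWLEDGE_BASE` plan templates, the `WORLD` dictionary, and all function names are hypothetical placeholders chosen for illustration, not components of any surveyed system.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Intent:
    action: str
    target: Optional[str]


# Hypothetical knowledge base: one plan template per known action.
KNOWLEDGE_BASE: Dict[str, List[str]] = {
    "bring": ["locate {target}", "grasp {target}", "deliver {target}"],
}

# Hypothetical world model: objects currently perceived by the sensors.
WORLD: Dict[str, Tuple[float, float, float]] = {"cup": (0.4, 0.1, 0.0)}

FILLER_WORDS = {"the", "a", "an", "me"}


def understand(utterance: str) -> Intent:
    """Instruction understanding: toy parse of already-transcribed speech."""
    words = [w for w in utterance.lower().strip(".!?").split()
             if w not in FILLER_WORDS]
    if not words:
        return Intent(action="", target=None)
    return Intent(action=words[0], target=words[-1] if len(words) > 1 else None)


def decide(intent: Intent) -> List[str]:
    """Decision-making: instantiate the stored plan template for the action."""
    template = KNOWLEDGE_BASE.get(intent.action, [])
    return [step.format(target=intent.target) for step in template]


def ground(intent: Intent, plan: List[str]) -> List[str]:
    """Knowledge-world mapping: check the plan against what is perceived."""
    if not plan:
        return ["ask user: I do not know how to " + intent.action]
    if intent.target not in WORLD:
        # A knowledge gap is resolved by asking the human for help.
        return [f"ask user: where is the {intent.target}?"]
    return plan


def run(utterance: str) -> List[str]:
    intent = understand(utterance)
    return ground(intent, decide(intent))
```

In this sketch, `run("Bring the cup")` yields the three-step plan, while a request for an object missing from `WORLD` falls back to a clarification question, mirroring the robot asking for help when knowledge is incomplete.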

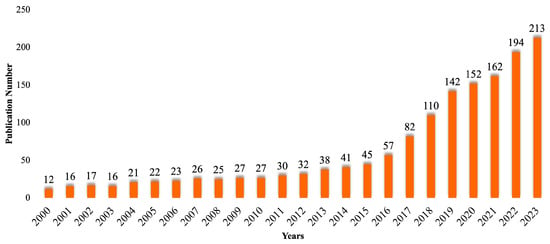

As a result of NLexe research, a substantial number of NLexe projects have been launched, including the following: (1) “collaborative research: jointly learning language and affordances” from Cornell University, which interprets objects by natural-language-described affordances, such as ‘water: drinkable; cup: containable’, to help robots with manipulation [72]; (2) “robots that learn to communicate with human through natural dialog” from University of Texas at Austin, which enables robots to learn task executions directly from NL instructions in a user-friendly manner [73]; (3) “collaborative research: modeling and verification of language-based interaction” from MIT, which uses NL to interpret human interactions and understand the human perspective in the physical world, with the final goal of integrating humans and robots [74]; (4) “language grounding in robotics” from University of Washington, which maps theoretical knowledge, such as object-related descriptions, to practical sensor values, such as sensor-captured object attributes [75]; (5) “semantic systems” from Lund University, which uses NL to describe industrial assembly processes [76]. NLexe research is frequently published in prestigious international journals, including International Journal of Robotics Research (IJRR), IEEE Transactions on Robotics (IEEE TRO), Artificial Intelligence (AIJ) and IEEE Transactions on Human-Machine Systems (IEEE THMS), and presented at international conferences, such as International Conference on Robotics and Automation (ICRA), International Conference on Intelligent Robots and Systems (IROS) and AAAI Conference on Artificial Intelligence (AAAI). Using the keywords NLP, human, robot, execution, speech, dialog, natural language, human-robot interaction, HRI, and social robotics, about 4410 papers were retrieved from Google Scholar. After narrowing the focus to NL-instructed robot executions, about 1390 papers were kept for plotting the publication trend shown in Figure 2. The steadily increasing publication numbers demonstrate the increasing significance of NLexe research.

Figure 2.

The annual number of NLexe-related publications since the year 2000. Over the past 23 years, the number of NLexe publications has steadily increased and reached a historical high.

2. Scope of This Review

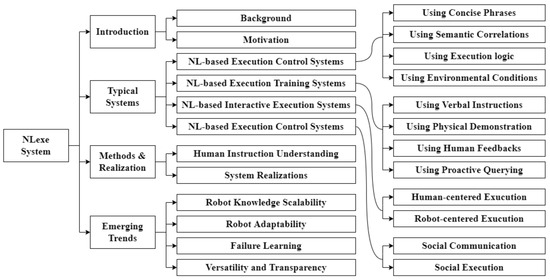

Compared with the existing review papers about human-instructed robot executions using communication means of gesture and pose [77], action and motion [78], and tactile [79], a comprehensive review of NLexe, which employs natural language for command delivery and execution planning, is currently lacking in the literature. Considering the significant potential and growing interest in NLexe, it is imperative to provide a summary of the state-of-the-art systems in this domain. Such a review would highlight the current research advancements and guide future research directions in NLexe. NLexe is a process of interactive information sharing and execution between humans and robots [7]. In this paper, the focus is on robotic execution systems with natural language facilitation. This review systematically presents the implementations of NLexe, encompassing topics such as motivations, typical systems, realization methods, and emerging trends, as illustrated in Figure 3.

Figure 3.

Organization of this review paper. This review systematically introduces NLexe implementations, encompassing topics such as motivations, typical systems, realization methods, and emerging trends. The NLexe systems are comprehensively categorized into four groups: NL-based execution control systems, NL-based execution training systems, NL-based interactive execution systems, and NL-based social execution systems. Within each category, typical application scenarios, knowledge manners, knowledge formats, as well as their respective advantages and disadvantages, are summarized.

NL is used as a communication means to realize interactive information sharing, which further facilitates interactive task executions between robots and humans. NL instructions during task executions can deliver information embedded in human speech, including human intentions, task execution methods, environmental conditions, and social norms. By extracting different contents from human NL instructions in different manners, different NLexe systems are designed to support human-guided robot task executions in various scenarios. To perform a simple task in a lab environment, a robot only needs low-level cognition to execute the control commands given by its human user. In this situation, the robot is not involved in decision-making because it is unnecessary; this represents an NL-based execution control system. As environments and users vary, robots need to understand different situations and human preferences, so they use middle-level cognition to learn new execution knowledge and adapt their logic; this represents an NL-based execution training system. Because the execution process is not always the same in real-life production scenarios, human users may actively take charge of the execution procedure or change their minds and execute other tasks. Robots may also produce errors and need help from human users, so the ability to describe the current situation is required. Robots therefore need a higher cognition level so that humans and robots can communicate to update plans or progress; this represents an NL-based interactive execution system. When robots are used by ordinary people in daily life, communicating via NL during execution becomes essential. Such robots need to understand commonsense as humans do, since ordinary people do not have expertise in robotics and may be confused when a robot behaves abnormally. Robots then need a remarkably high cognition level to understand what an ambiguous user instruction implies and to work out the optimal plan to execute or change their behavior pattern; this represents an NL-based social execution system.

Based on the level of robotic cognition during execution, NLexe-based robotic systems can be classified into four primary categories. (1) NL-based execution control systems. In these systems, NL is used to convey human commands to robots for remote control. The robots receive only NL-format control symbols, such as “start”, “stop”, and “speed up”, without comprehensive instruction understanding. Consequently, robots operate with a low cognition level, lacking execution planning. The human-robot role relation is “leader-follower”, where humans assume all cognitive burdens in task planning, while robots undertake all physical burdens during task execution. (2) NL-based robot execution training systems. In these systems, NL transfers human execution experiences to robots for knowledge accumulation, a process termed robot training. Robots must consider environmental conditions and human preferences to adjust execution plans, including action and position adjustments, thus exhibiting a middle cognition level. The human-robot role relation remains “leader-follower”, with humans handling the major cognitive burdens, such as defining sub-goals and execution procedures, while robots follow human instructions and assume minor cognitive burdens. (3) NL-based interactive execution systems. In these systems, NL is used to instruct and correct robot executions in real-world situations. Robots operate with a high cognition level, as they can infer human intentions and assess task execution progress. The human-robot role relation is “cooperator-cooperator”, with both robots and humans sharing major cognitive burdens. (4) NL-based social execution systems. In these systems, NL facilitates verbal interaction between humans and robots to achieve social purposes, such as storytelling. Robots require the highest cognition level to understand social norms and task execution methods. The human-robot role relation is “cooperator-cooperator”. 
Table 1 compares the cognition levels and execution manners of the four types of NLexe systems and shows the respective human and robot involvement for each type.

Table 1.

Comparison of typical NLexe systems.

3. NL-Based Execution Control Systems

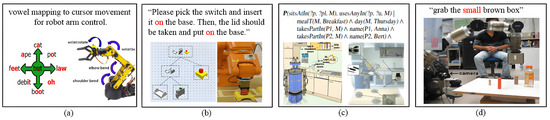

To alleviate the physical demands on humans and free their hands for other tasks, NL was initially employed to replace physical control mechanisms such as joysticks and remote controllers, which traditionally necessitated manual operation [31]. NL-based execution control is a single-direction NLexe in which a human gives NL control commands on task executions, and a robot follows the control commands to execute the plan. In NLexe control systems, only speech recognition and direct language pattern mapping are needed, without high-level semantic understanding. A robot receives simple user instructions and directly maps them into actions for task execution. These simple instructions include the following. (1) Symbolic words, the simplest instructions, which a robot identifies without semantic understanding by using a predefined instruction-action mapping. (2) Phrases (word groups) or short sentences, which are more complicated because the user gives semantic correlations to describe a task. (3) Logic structures, with which a user indicates an object with details such as its color or shape and specifies the execution steps of a task to guide robot executions. During the controlling process, NL functions as an information-delivering medium, conveying the robot actions desired by the human operator, as illustrated in Figure 4. The human user primarily makes decisions during this process, while the robot is directed by recognizing and executing commands expressed through NL.

Figure 4.

Typical NL-based execution control systems. (a) Control using concise phrases. The motion directions of a robot arm’s joints were controlled by mapping symbolic vowels from human spoken instructions [80]. (b) Control using semantic correlations. A robot was verbally directed by a human to perform assembly tasks by mapping semantic correlations of the components from spoken instructions [81]. (c) Control using execution logic. The execution of cooking tasks was logically defined. By mapping the logical structure of human spoken instructions, a robot was able to perform kitchen tasks [82]. (d) Control using environmental conditions. During task execution, a robot took into account practical environmental conditions, such as “object availability” and “objects’ relative sizes”, to perform tasks like “grabbing the small brown box” [31].

3.1. Typical NLexe Systems

According to NL command formats, systems for execution control based on NL can be primarily classified into four distinct categories.

3.1.1. NL-Based Execution Control Systems Using Concise Phrases

To realize simple NLexe, NL-based execution control systems were designed around short commands or keywords uttered by users to adjust robot executions. Such systems require speech recognition and word-to-action mapping, which brings two difficulties: recognizing words accurately and predefining the commands. They are designed for concise execution instructed with simple and straightforward words or phrases. Typical tasks include asking the robot to accelerate by using the word “faster” and confirming a command by saying “yes”. NL-based execution control for planning execution procedures involves a word-to-action mapping process. This process is discrete, with the associations between words and actions predefined in the robot’s database. In this framework, human operators are limited to providing the robot with symbolic and simple NL commands. The robot is required to accurately recognize the symbolic words in human speech and subsequently associate these words with the predefined actions or sequences of actions.
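The discrete word-to-action mapping described above can be sketched as a plain lookup table. The table contents and the action names on the right-hand side are hypothetical and stand in for whatever the robot’s database would predefine; speech recognition is assumed to have already produced text.

```python
from typing import Dict, List

# Hypothetical predefined associations between symbolic words and actions.
ACTION_TABLE: Dict[str, str] = {
    "faster": "increase_speed",
    "slower": "decrease_speed",
    "stop": "halt",
    "yes": "confirm",
    "no": "cancel",
}


def map_words_to_actions(utterance: str) -> List[str]:
    """Return the predefined action for every recognized symbolic word.

    Words outside the table are ignored: the robot performs no semantic
    understanding beyond matching symbols.
    """
    tokens = utterance.lower().replace(",", " ").split()
    return [ACTION_TABLE[token] for token in tokens if token in ACTION_TABLE]
```

Because the mapping is a fixed table, any word the designer did not anticipate is simply dropped, which illustrates why command predefinition is one of the stated difficulties of this system type.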

Typical symbol words involved in NLexe systems include action types, such as “bring, come, go, send, take” [83], motion types, such as “rotate, slower, accelerate, initialize” [84,85], spatial directions, such as “left, right, forward, backward” [86,87], confirmation and denial commands, such as “yes, no” [88], and objects, such as “cup, mug” [89].

Typical NLexe systems using symbolic word control include the following. (1) Robot motion behavior control by verbally describing motor skills, such as “find object, track object, give object, move, set velocity”, for daily object delivery [90]. (2) Robotic gripper pose control by verbally describing action types, such as “open or close the grip”, for teleoperation in manufacturing [91,92]. (3) Mobile robot navigation behavior control by using destination locations, such as “kitchen, there”, to plan the robot route [88,93].

Beyond selecting actions, NL-based execution control was also employed to specify detailed action-related parameters, including action direction, movement amplitude, motion speed, force intensity, and hand pose status. This NL-based execution control for action specification involved a word-to-value mapping process, which was continuous, with value ranges predefined in the robot database. During the development of NL-based execution control, two mainstream mapping rules were established: fuzzy mapping, which uses vague terms such as “move slowly, move far” [94], and strict mapping, which uses exact terms such as “rotate 180 degrees, go to the laser printer” [95].
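The difference between strict and fuzzy word-to-value mapping can be sketched as follows. This is only an illustration: the speed ranges, the regular expression, and the midpoint rule for choosing a value inside a fuzzy range are assumptions made here, not rules taken from the cited systems.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical value ranges (metres per second) predefined for fuzzy words.
FUZZY_SPEED_RANGES: Dict[str, Tuple[float, float]] = {
    "slowly": (0.05, 0.25),
    "quickly": (0.5, 1.0),
}


def strict_rotation(command: str) -> Optional[float]:
    """Strict mapping: read an exact angle from e.g. 'rotate 180 degrees'."""
    match = re.search(r"rotate\s+(-?\d+(?:\.\d+)?)\s+degrees", command.lower())
    return float(match.group(1)) if match else None


def fuzzy_speed(command: str) -> Optional[Tuple[float, float]]:
    """Fuzzy mapping: map a vague word such as 'slowly' to a value range."""
    for word in command.lower().split():
        if word in FUZZY_SPEED_RANGES:
            return FUZZY_SPEED_RANGES[word]
    return None


def pick_speed(speed_range: Tuple[float, float]) -> float:
    """Choose one value inside the range (assumed rule: the midpoint)."""
    low, high = speed_range
    return (low + high) / 2
```

Strict mapping returns a single value stated in the command, whereas fuzzy mapping only narrows the parameter to a predefined range, so a further rule is needed to pick the value actually sent to the controller.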

3.1.2. NL-Based Execution Control Systems Using Semantic Correlations

To perform relatively complex NLexe, NL-based execution control systems were designed to use semantic correlations among the verbal instructions that define a series of robot actions. Such systems require speech recognition and linguistic structure analysis, which brings the difficulty of correctly recognizing the relationships between words. They are designed for execution with an understanding of action-property-object-location relations. Typical tasks include giving a simple description of an object, such as “white cube”, from which the robot distinguishes the white cube from all other cubes. To enhance the robustness of NL-based execution control in human-guided task execution, semantic correlations among control symbols were investigated through an analysis of the linguistic structures of NL commands. The control symbols encompass various types of actions, hand poses, and objects.

Typical semantic correlations used for robot execution control include action-object relations, such as “put-straw”, object-location relations, such as “straw-cup” [89], object-property relations, such as “apple-red, apple-round” [96], action-action relations, such as “open–move” [97], and spatial-spatial relations, such as “hole constraint-shaft constraint” [81].

Typical NLexe systems using semantic correlation control include the following. (1) Flexible object grasping and delivery by using action-object-location relations, such as “put book on desk” [98]. By introducing semantic correlations into object-related executions, human daily commonsense about object usage was first brought into NL-supported systems. (2) Accurate object recognition by using object-property correlations, such as “move the black block” [96]. Semantic correlations reveal existing associations between object properties. (3) Assembly procedure planning for industrial robotic systems by defining general task execution procedures, such as “open gripper–move to gripper” [81]. (4) Flexible indoor navigation by using general motion planning patterns, such as “go-to location” [2], improving robots’ adaptability to instruction variety.
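The action-property-object-location relations discussed above can be sketched with a toy parser. The word lists, the dictionary layout, and the scene format are hypothetical simplifications; a real system would rely on proper linguistic structure analysis rather than word position.

```python
from typing import Dict, List, Optional

# Hypothetical vocabularies used only for this illustration.
ACTIONS = {"put", "move", "bring"}
PREPOSITIONS = {"on", "in", "to", "under"}
ARTICLES = {"the", "a", "an"}


def parse_relations(command: str) -> Dict[str, object]:
    """Split a short command into action, object, properties, and location."""
    tokens = [t for t in command.lower().split() if t not in ARTICLES]
    action: Optional[str] = tokens[0] if tokens and tokens[0] in ACTIONS else None
    rest = tokens[1:] if action else tokens
    location: Optional[str] = None
    for i, token in enumerate(rest):
        if token in PREPOSITIONS:
            # Everything after the preposition names the location.
            location = " ".join(rest[i + 1:]) or None
            rest = rest[:i]
            break
    obj = rest[-1] if rest else None
    return {"action": action, "object": obj,
            "properties": rest[:-1], "location": location}


def select_objects(scene: List[dict], parsed: Dict[str, object]) -> List[str]:
    """Use object-property relations to pick matching objects in the scene."""
    return [o["id"] for o in scene
            if o["type"] == parsed["object"]
            and all(p in o["properties"] for p in parsed["properties"])]
```

For example, parsing “move the black block” yields the property list `["black"]`, which `select_objects` then uses to distinguish the black block from other blocks in the scene.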

3.1.3. NL-Based Execution Control Systems Using Execution Logic

To flexibly adjust execution plans, NL-based execution control systems using execution logic were designed, in which hierarchical logic is verbally specified to define execution preconditions and procedures. Such systems require speech recognition and logic-level NL understanding, which brings the difficulty of accurately understanding how consecutive instructions are coordinated. They are designed for tasks with an ordered or a conditional execution. Typical tasks include instructing with logic words, as in “turn left, then go forward”, from which the robot works out the order: first turn left, then go forward. Despite the improvements in flexibility brought about by the integration of semantic correlation in NL execution mapping, control performance in dynamic situations remains limited due to the neglect of control logic within these semantic correlations. This oversight renders robots unable to adapt to environmental changes and hampers their ability to intuitively reason about execution plans. For instance, the NL instruction “fill the cup with water, deliver it to the user” inherently includes the logical sequence: “search for the cup, then use the water pot to fill the cup, and finally deliver the cup”. Ignoring this logic during the control process can lead to incorrect executions, such as “use the water pot to fill the cup, then search for the cup”, or can restrict proper executions, such as “deliver the cup, then use the water pot to fill the cup”, in dynamic environments. This results in the incorrect removal or addition of execution steps. To address this issue, logic correlations among controlling symbols, encompassing temporal logic, spatial logic, and ontological logic, have been explored to enhance the adaptability of NL-based execution control methods.

Typical NLexe systems that employ NL-described logic relations include the following. (1) Using NL commands, such as “if you enter a room, ask the light to turn on and then release the gripper”, to modify the robot trajectory in different situations with self-reflection on task executability [99,100,101]. (2) Designing robot manipulation poses based on fuzzy action type and speed requirements, exemplified by commands like “move up, then move down” [88,94]. (3) Serving meals by considering object function cooperation logic, such as the “foodType–vesselShape” relation [82,102]. (4) Assembling industrial parts by considering assembly logic, such as “first pick part, then place part” [81,103].
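The temporal logic in the cup-filling example above can be sketched as precondition checking plus ordering. The `PRECONDITIONS` table is a hypothetical encoding of that example, and the use of a topological sort is one simple way to recover a valid order under these assumptions, not the method of any cited system.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical preconditions: each step lists the steps that must come first.
PRECONDITIONS: Dict[str, Set[str]] = {
    "search for the cup": set(),
    "fill the cup": {"search for the cup"},
    "deliver the cup": {"fill the cup"},
}


def is_valid_order(steps: List[str]) -> bool:
    """Check that every step runs only after all of its preconditions."""
    done: Set[str] = set()
    for step in steps:
        if not PRECONDITIONS[step] <= done:
            return False
        done.add(step)
    return True


def order_steps(steps: List[str]) -> List[str]:
    """Reorder the given steps so that preconditions are respected."""
    wanted = set(steps)
    graph = {step: PRECONDITIONS[step] & wanted for step in steps}
    return list(TopologicalSorter(graph).static_order())
```

With this table, the order “fill the cup, then search for the cup” is rejected by `is_valid_order`, and `order_steps` restores the sequence search, fill, deliver regardless of the order in which the steps were mentioned.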

3.1.4. NL-Based Execution Control Systems Using Environmental Conditions

To realize human-robot executions that take the surrounding environment into account, NL-based execution control systems using environmental conditions were designed. They allow users to give verbal notifications that describe the environmental conditions the robot should consider, providing situation-aware control. Such systems require speech recognition, logic-level NL understanding, and knowledge-world mapping, which brings the difficulty of correctly mapping the descriptions in an instruction to environmental conditions. They are designed for tasks in which real-world conditions are included in the NL instructions. Typical tasks include asking a robot to identify environmental information such as “box under the table”, where the robot locates the table to understand which box the user is referring to. In addition to the logical relationships among control commands, it is imperative to consider environmental conditions for the practical execution of NL-based execution control in real-world scenarios. Achieving an intuitive NLexe requires a balance between human commands, robot knowledge, and environmental conditions.

Typical environment conditions verbally described for control include the following. (1) human preferences on object placement location, obstacle avoiding distances, and furniture set up manners [84,104]; (2) human safety-related context, such as arms’ reachable space and joint limit constraints [97]; (3) environmental conditions, such as ‘door is closed, don’t go to the lounge’ [19]; (4) object properties, such as “mug’s usage is similar to cup, mug’s weight and mug’s cylinder shape” [89]; (5) robot working statuses, such as “during movement (ongoing status), movement is reached (converged status)” [105].

Typical NLexe systems using practical environmental conditions include the following. (1) By using NL-described safety factors, such as “open hand before reaching, avoid collision”, a robotic arm was controlled for grasping with consideration of both robot capabilities, such as “open gripper, move gripper”, and runtime disturbances, such as “encountering a handle, distance with an obstacle is reduced” [84,97]. (2) An outdoor navigation robot planned its path by considering the matching between locations and buildings, such as “navigate to the building behind the people” [39]. (3) Food serving robots served customers with consideration of user locations [106] and path conditions [89]. In these systems, real-world conditions were embedded in NL commands to improve robot control accuracy.
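The knowledge-world mapping described above, in which a phrase like “box under the table” must be tied to one perceived object, can be illustrated with a minimal sketch. It assumes the NL understanding stage has already produced a target category, a spatial relation, and a landmark category, and that perception supplies object positions. The `WorldObject` type, the `is_under` test, and its `xy_tolerance` threshold are hypothetical illustrations and are not taken from any of the cited systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorldObject:
    name: str      # unique identifier, e.g. "box_1" (hypothetical naming)
    category: str  # e.g. "box", "table"
    x: float       # horizontal position in meters
    y: float       # horizontal position in meters
    z: float       # height of the object's center above the floor in meters


def is_under(obj, landmark, xy_tolerance=0.5):
    """Toy test for "obj is under landmark": horizontally close and lower in z."""
    horizontally_close = (abs(obj.x - landmark.x) <= xy_tolerance
                          and abs(obj.y - landmark.y) <= xy_tolerance)
    return horizontally_close and obj.z < landmark.z


def ground_reference(target_category, relation, landmark_category, world):
    """Return objects of target_category that satisfy relation with some landmark."""
    relations = {"under": is_under}  # only one relation is sketched here
    check = relations[relation]
    landmarks = [o for o in world if o.category == landmark_category]
    return [o for o in world
            if o.category == target_category
            and any(check(o, lm) for lm in landmarks)]


# Assumed perception output: one table and two boxes.
world = [
    WorldObject("table_1", "table", 2.0, 1.0, 0.75),
    WorldObject("box_1", "box", 2.1, 1.2, 0.10),  # on the floor beneath the table
    WorldObject("box_2", "box", 5.0, 4.0, 0.10),  # on the floor, far from the table
]

matches = ground_reference("box", "under", "table", world)
print([o.name for o in matches])  # prints ['box_1']
```

A real system would replace the geometric test with perception-driven relation models; the point of the sketch is only that the landmark must be located first, after which the referent can be selected.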

3.2. Open Problems

In the context of NL-based robot control, human-robot interaction was facilitated through verbal communication. The control commands in natural language were issued either individually or in a hybrid manner, wherein the NL commands were integrated with visual and haptic cues. A human was the only information source, guiding the whole control process. A robot was designed to simply map the human NL commands to the knowledge structure in robot databases, or to the real-world conditions perceived by the robot’s sensors. With physical and mental work assignments for robots and humans, current efforts in NL-based execution control focus on improving control accuracy, decreasing human users’ cognition burdens, and increasing robots’ cognition burdens. However, some open problems still exist. (1) The cognition burden on humans in NL-based execution control was high, while the robot cognition level was low. A human user was required to lead the execution and a robot was required to follow human instructions without understanding the task executions. The large cognition-level difference between a human and a robot restrained the intuitiveness and naturalness of NLexe with NL-based execution control systems. (2) The low robot cognition level leaves robots with limited reasoning capability, restraining the autonomy level of NLexe systems. (3) The robot knowledge scale was small, limiting a robot’s capabilities in dealing with unfamiliar or dynamic situations, where user varieties, task complexities, and real-world uncertainties disturbed the performance of NL-based execution control systems. Detailed comparisons among NL-based execution control systems are shown in Table 2, which presents the four types of systems and their corresponding instruction manner, instruction format, application, pros, and cons.

Table 2.

Summary of NL-based execution control systems.

4. NL-Based Execution Training Systems

To scale up robot knowledge for executing multiple tasks under various working environments, NL was used to train a robot. During the training, knowledge about task execution methods was transferred from a robot or a human expert to targeted robots. An NL-based execution training system uses human knowledge in the form of NL to train an inexperienced robot for task understanding and planning. In NLexe training systems, with the support of speech recognition technologies, robots have the capability of initial language understanding based on interpreting multiple sentences. User instructions include simple words like the name and property of an object and the action of robot motion and grasping. Human users give verbal descriptions, and robots extract information and task steps as well as their meaning according to the way instructions are interpreted. A robot also proactively asks its human users questions for object or action disambiguation, bringing the instruction complexity to a higher level since the robots need both language understanding and generation during training. Typical NL-based execution training systems are shown in Figure 5. Human commonsense knowledge was organized into executable task plans for robots with consideration of the robot’s physical capabilities, such as force, strength, physical structure, and speed, human preferences, such as motion and emotion, and real-world conditions, such as object availability, object distributions, and object locations. With the executable knowledge, a robot’s capabilities in task understanding, environment interpretation, and human-request reasoning were improved. Different from NL-based execution control, where robots were not involved in advanced reasoning, in NL-based execution training, robots were required to reason about human requirements during human-guided executions.

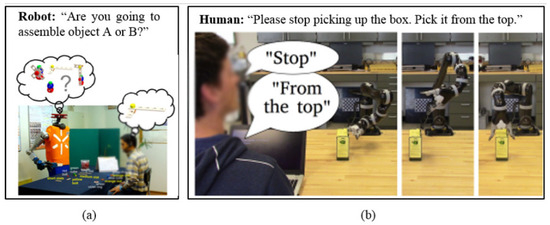

Figure 5.

Typical NL-based execution training systems. (a) Training using human instructions. By describing the physical properties of the objects, the robot-related knowledge was transferred from a human to a robot, enabling the robot to recognize objects in the future [107]. (b) Training using human demonstrations. With a human’s physical demonstrations of actions, a robot learns to perform an action by imitating the motion patterns, such as trajectory, action sequence, and motion speed [108]. (c) Training using proactive robot querying. A robot proactively detected its missing knowledge and proactively asked human users for knowledge support [109]. (d) Training using human feedback. During the execution process, a human proactively interfered with the execution and gave timely feedback to improve a robot’s performance [110].

4.1. Typical NLexe Systems

According to the knowledge learning manners, NL-based execution training systems are mainly categorized into four types.

4.1.1. NL-Based Execution Training Systems Using Verbal Instructions

To teach a robot with high-level execution plans, execution training systems that only use verbal instructions were designed. In this way, robots learn from these instructions without any physical participation or help from their users. In this type of system, speech recognition, NL understanding, learning, and reasoning mechanisms are required, which brings the difficulty of transforming high-level human instructions to real-world implementation. They are designed for training robots on how to execute certain tasks using command sequences. Typical tasks include training robots with action combinations like “lift, then move to location”, where robots learn this plan and execute it in the future. During the high-level knowledge grounding, human cognition processes on task planning and performance were modeled. The advantage of the training using human NL instructions is that a robot’s reasoning mechanisms during NLexe are initially developed; the disadvantage is that the execution methods directly learned from the human NL instructions are still abstract in that the sensor-value-level specifications for the NL commands are lacking, limiting the knowledge implementations in real-world situations.

Given that human verbal instructions can deliver basic information about task execution methods, NL-based execution training initially started with defining simple commonsense by using human NL instructions, enabling a robot with initial reasoning capability during execution [111]. Typical NLexe execution training systems using human NL instructions include (1) daily assistive robots with NL-trained object identities, such as “cup, mug, apple, laptop” [102,112]; (2) robots with NL-trained object physical attributes, such as “color, shape, weight” [107,113,114]; (3) robotic grippers with NL-trained actions, such as “grasp, move, lift” [115,116].

Instead of modeling correlations among task execution procedures, the knowledge involved in the low-level reasoning was merely piecemeal with only separate knowledge entities, such as “cup, cup color, grasp action”. Piecemeal knowledge enables robots with a shallow understanding of motivations and logic in task executions. As information and automation techniques improved, the low-level reasoning method was then evolved into a high-level reasoning method, in which complex NL expressions were grounded into hierarchical knowledge structures for motivation and logical understanding. With hierarchical knowledge, NLexe systems were enabled to learn complex task executions. Typical NLexe systems include the following. (1) Industrial robot grippers with NL-trained object grasping methods, such as “raise, then move-closer” [117]. (2) Executing tasks in unfamiliar situations with NL-trained spatial and temporal correlations, such as “landmark—trajectory—object, computer—on—table, mouse—leftOf—computer” [118,119,120,121]. (3) Daily assistive robots with NL-trained object delivery methods, such as “moved forward → moved right → moved forward” [122,123].
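As a rough illustration of how linked relations differ from piecemeal knowledge entities, the sketch below stores the two spatial relations quoted above (the computer is on the table, the mouse is left of the computer) as subject-relation-object facts and chains them to describe where an object is. The `RelationStore` class and its `locate` method are hypothetical names chosen for this example and do not reproduce the representation used in any cited work.

```python
class RelationStore:
    """Minimal store of (subject, relation, object) facts learned from NL."""

    def __init__(self):
        self._facts = {}  # subject -> list of (relation, object) pairs

    def add(self, subject, relation, obj):
        self._facts.setdefault(subject, []).append((relation, obj))

    def locate(self, entity):
        """Chain spatial facts from entity until no further fact is known."""
        path = []
        seen = {entity}
        current = entity
        while current in self._facts:
            relation, anchor = self._facts[current][0]  # first known fact only
            path.append((current, relation, anchor))
            if anchor in seen:  # guard against circular descriptions
                break
            seen.add(anchor)
            current = anchor
        return path


store = RelationStore()
store.add("computer", "on", "table")
store.add("mouse", "leftOf", "computer")

for subject, relation, anchor in store.locate("mouse"):
    print(subject, relation, anchor)
# prints:
# mouse leftOf computer
# computer on table
```

With separate entities alone, the robot would know that a mouse, a computer, and a table exist; with chained relations it can reason that finding the table leads to the computer and then to the mouse.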

4.1.2. NL-Based Execution Training Systems Using Physical Demonstration

To teach a robot with environment-specific execution details during NLexe, execution training systems using physical demonstration were designed to align NL instructions with real-world conditions, in which robots passively learn from their human users while human users need to participate physically. In this system, speech recognition, NL understanding, and sensor value association are required, which brings the difficulty of interpreting sensor data with abstract knowledge from a human. This system is designed for learning task execution methods and extending existing knowledge in real-world scenarios. Typical tasks include training robots with both human action and language instruction like pointing at an object in the room and telling the robot “that is a book”, so robots learn the relation between the object and “book”. With training in a physical demonstration manner, theoretical knowledge, such as “actions, action sequences, and object weight, object shape, and object color”, was associated with sensor values. This theory-practice association enabled a straightforward, sensor-data-based interpretation of the abstract task-related knowledge, improving robot execution capability by practically implementing the learned knowledge.

A general demonstration process was that a human physically performs a task and meanwhile verbally explains the execution intuitions for a robot. The robot was expected to associate the NL-extracted knowledge with sensor data to specify the task executions. Human demonstration enables a robot with a practical understanding of real-world task executions. Compared with robot training using instructions, robot training using demonstrations specified the abstract theoretical knowledge with the real-world conditions, making the learned knowledge executable [124]. However, a robot’s reasoning capability was not largely improved since demonstration-based training was actually a sensor-data-level imitation of human behaviors. And it ignored the “unobservable human behaviors”, such as a human’s subjective interpretation of real-world conditions, a human’s philosophy in execution, and a human cognitive process in decision-making.

Typical NL-based execution training systems using human demonstration include the following. (1) Learning object-manipulation methods by associating human NL expressions with sensor data, such as “touching force values, object color, object shape, object size, and visual trajectories” [117,125,126]. (2) Learning human-like gestures by associating NL speech, such as “bathroom is there”, with real-time human kinematic models and speeds [108,127]. (3) Learning object functional usages, such as “cup-like objects”, by simultaneously considering human voice behaviors, such as “take this”, motion behaviors, such as “coordinates of robot arms”, and environmental conditions, such as “human locations” [122]. (4) Learning abstract interpretations of environmental conditions by combining high-level human NL explanations, such as “the kitchen is down the hall”, with the corresponding sensor data patterns, such as “robot speed, robot direction, robot location” [20,127]. (5) Adapting to new situations by replacing NL-instructed knowledge, such as “vacuum cleaner”, with real-world-available knowledge, such as “rag” [6,33,128]. (6) Learning new robot-arm actions by interpreting NL instructions, such as “move the green box on the left to the top of the green block on the right”, with the help of visual perception from cameras [129,130].

4.1.3. NL-Based Execution Training Systems Using Human Feedback

To teach a robot to consider human preferences during NLexe, execution training systems using human feedback were designed, in which robots proactively learn from a human according to knowledge needs in specific task execution scenarios and a human decides what knowledge to learn. In this system, speech recognition, NL understanding, and real-time behavior correction are required, which brings the difficulty of proactively understanding human feedback and combining it with the current execution situation. This system is designed to change or improve the current behavior pattern using real-time human NL instructions. Typical tasks include correcting the robot’s wrong behavior with an instruction like “stop, and do something else”, where robots terminate the current wrong action and perform other tasks. This system aims to use human NL feedback to directly tell the robot “the unobservable human behaviors”. With human NL feedback, robot behaviors in human-guided execution were logically modified by adding and removing some operation steps [69,109,110] or by subjectively emphasizing certain executions [131,132,133]. Compared with training using human demonstrations, training using human feedback proactively and explicitly tells a robot the operation logic and decision-making mechanisms that its human users desire. Existing robot decision-making models are enriched with execution methods, such as execution logics and execution conditions, and execution details, such as potential action and tool usages, supporting better robot understanding of the human cognitive process in task execution. Based on human cognition, understanding, and environment perception, a robot’s surrounding environments in NLexe were interpreted as a human-centered situation. In this human-centered situation, task execution was interpreted from a human perspective, improving a robot’s reasoning capability in cooperating with a human. 
However, feedback-based learning requires frequent human involvement, imposing a heavy cognitive burden on a human. Moreover, the knowledge learned from human feedback was given by a human without considering the robot’s actual knowledge needs, limiting the robot’s adaptation to new environments where its knowledge shortage was waiting to be compensated for successful NLexe.

Typical execution training systems using human feedback include the following. (1) Object arrangement robots with consideration of human-desired object clusters, such as “yellow objects, rectangle objects” [110]. (2) Real-time robot behavior correction with real-time human NL feedback on hand pose and object selections [69]. (3) Self-improved robots with human-guided failure learning during industrial assembly [109]. (4) Human-sentiment-considered robot executions with subjective NL rewards and punishments, such as “joy, anger” [132]. (5) Human NL feedback, such as “yes, I think so, Okay, let’s do that”, was used to confirm the robot’s understanding, such as “We should send UAV to the upper region”, to achieve a consistent understanding between humans and robots [134].
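The idea that feedback logically modifies robot behavior by adding and removing operation steps can be sketched as a small plan-editing function. The sketch assumes that speech recognition and NL understanding have already turned an utterance into a structured tuple; the tuple formats, the step names, and the `apply_feedback` function are hypothetical and serve only to make the mechanism concrete.

```python
def apply_feedback(plan, feedback):
    """Return a new plan after applying one piece of already-parsed feedback.

    Assumed (hypothetical) feedback formats:
      ("remove", step)             drop every occurrence of a step
      ("add_after", anchor, step)  insert step right after the anchor step
      ("stop",)                    abandon all remaining steps
    """
    kind = feedback[0]
    if kind == "remove":
        return [s for s in plan if s != feedback[1]]
    if kind == "add_after":
        _, anchor, step = feedback
        if anchor not in plan:
            raise ValueError(f"unknown anchor step: {anchor!r}")
        i = plan.index(anchor)
        return plan[:i + 1] + [step] + plan[i + 1:]
    if kind == "stop":
        return []
    raise ValueError(f"unsupported feedback: {feedback!r}")


plan = ["reach", "grasp", "lift", "place"]
# e.g. the user says to verify the grip after grasping
plan = apply_feedback(plan, ("add_after", "grasp", "check_grip"))
# e.g. the user says lifting is not needed
plan = apply_feedback(plan, ("remove", "lift"))
print(plan)  # prints ['reach', 'grasp', 'check_grip', 'place']
```

Returning a new list rather than editing in place keeps the previous plan available, which is convenient if a later utterance retracts the correction.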

4.1.4. NL-Based Execution Training Systems Using Proactive Querying

To solve new-situation adaptation problems for further improving a robot’s reasoning ability, execution training systems using proactive querying were designed, in which robots proactively learn from a human according to knowledge needs in specific task execution scenarios and robots decide what knowledge to learn. In this system, speech recognition, NL understanding, and generation are required, which brings the difficulty of being aware of the current situation and generating a concise description of the knowledge a robot needs to learn. This system is designed for actively acquiring knowledge in uncertain conditions or physical help from a human. Typical tasks include a robot asking for the user’s confirmation if the instruction is ambiguous and then performing a task with consent. In the querying process, a robot used NL to proactively query its human users about its missing knowledge related to human-intention disambiguation, environment interpretations, and knowledge-to-world mapping. After the training, a robot was endowed with more targeted knowledge to adapt to previously-encountered situations, thereby improving a robot’s environmental adaptability. With a proactive querying manner, robots were endowed with an advanced self-improving capability during human-guided task execution. Supported by a never-ending learning mechanism, robot performances in NLexe were improved in the long term by continuous knowledge acquiring and refining [70].

For developing NL-based execution training systems using proactive querying, a challenging research problem is robot NL generation, which uses NL to generate questions to appropriately express a robot’s knowledge needs to a human. Robot NL generation is challenging for the following reasons. (1) Human decision-making in human-guided execution is uncertain due to limited robot observations on the human cognitive process [135]. It is difficult for a robot to accurately infer a human’s execution intentions [136]. (2) Self-evaluation mechanism for reasoning about a robot’s knowledge proficiency is missing. It is difficult for a robot to reason about its missing knowledge by itself [137]. (3) NL questions for expressing the robot’s task failures and knowledge needs are hard to organize in a concise and accurate way [138]. To address these problems, several solutions were proposed. (1) Use environmental context to reduce uncertainties in human execution request understanding, improving recognition accuracy of human intentions during human-instructed robot executions [71]. (2) Hierarchical knowledge structure representations were used for detecting missing knowledge in execution [6]. (3) Concise NL questions were generated by involving both human execution request understanding and missing knowledge filling [139].

Typical NL-based execution training systems using proactive robot querying include the following. (1) Ask for cognitive decisions on trajectory, action, and pose selections in tasks, such as “human-robot jointly carrying a bulky bumper” [140]. (2) Ask for knowledge disambiguation of human commands, such as confirming the human-attended object “the blue cup” [141,142]. (3) Ask for human physical assistance to deliver a missing object or to execute robot-incapable actions, such as “deliver a table leg for a robot” [109,139]. (4) Ask for additional information, such as “the object is yellow and rectangle”, from a human to help with robot perception [46,110].
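The disambiguation case above, where a robot confirms which object the human means, can be sketched as follows. The sketch assumes the command has already been reduced to attribute constraints and that perception provides a list of attributed objects; the function name `resolve_or_ask`, the attribute keys, and the question templates are hypothetical, and real NL generation would be far richer than these fixed strings.

```python
def resolve_or_ask(constraints, scene):
    """Select the unique object matching constraints, or build a question.

    Returns ("select", object_name) when exactly one object matches,
    otherwise ("ask", question_text).
    """
    category = constraints.get("category", "object")
    matches = [obj for obj in scene
               if all(obj.get(key) == value for key, value in constraints.items())]
    if len(matches) == 1:
        return ("select", matches[0]["name"])
    if not matches:
        return ("ask", f"I cannot find a matching {category}. "
                       "Can you describe it differently?")
    # Several candidates: look for an attribute whose values tell them apart.
    for attribute in ("color", "shape"):
        values = sorted({obj[attribute] for obj in matches if attribute in obj})
        if len(values) > 1:
            options = " or the ".join(values)
            return ("ask", f"Which {category} do you mean: the {options} one?")
    return ("ask", f"I see {len(matches)} similar candidates. "
                   "Can you point at the one you mean?")


# Assumed perception output for a tabletop scene.
scene = [
    {"name": "cup_1", "category": "cup", "color": "blue"},
    {"name": "cup_2", "category": "cup", "color": "red"},
    {"name": "plate_1", "category": "plate", "color": "blue"},
]

first = resolve_or_ask({"category": "cup"}, scene)
print(first)   # prints ('ask', 'Which cup do you mean: the blue or the red one?')
second = resolve_or_ask({"category": "cup", "color": "blue"}, scene)
print(second)  # prints ('select', 'cup_1')
```

The question is built from the attribute that actually separates the candidates, which reflects the requirement that robot questions be concise and targeted at the missing knowledge.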

4.2. Open Problems

During the development of training methods from instruction training to querying training, the human cognition burden gradually decreased, and the robot cognition level gradually improved. Robot training using the above-mentioned methods suffers from shortcomings in robot knowledge scalability and adaptability. The knowledge scalability problem, which is caused by limited knowledge sources and limited knowledge learning methods, has been solved to some degree, improving a robot’s execution intuitiveness and naturalness. However, some open problems for current NL-based execution training systems still exist. (1) Learning from a human is time-consuming and labor-intensive. It is challenging to substantially scale up robot knowledge in an economical manner. (2) Human knowledge is not always reliable, or at least different types of human knowledge have different degrees of reliability. It is challenging for a robot to assess knowledge reliability and to use knowledge differently across knowledge types, knowledge amounts, and knowledge implementation scenarios. Detailed comparisons among NL-based execution training systems, including verbal instruction, demonstration, human feedback, and proactive query, and their instruction methods, roles, applications, and advantages are shown in Table 3.

Table 3.

Summary of NL-based execution training systems.

5. NL-Based Interactive Execution Systems

To fully cooperate with robots in both interactive information sharing and interactive physical executions, instead of mainly information sharing (NL-based execution training systems) or physical execution (NL-based execution control systems), NL-based interactive execution systems were developed. An NL-based interactive execution system used NL in the stage of task execution and combined NL instructions and other sensor information to understand the optimal approach to complete a task. In NLexe interactive execution systems, speech recognition, natural language understanding, and dialog management are needed for establishing effective communication between a human and a robot. NL generation and speech synthesis are used to have a conversation with users. The instruction format is complex and based on interactive descriptions, which contain logic correspondence and the use of pronouns to refer to a previous element already mentioned in the conversation/dialog. Simple instructions are provided by robots to remind a user to help the execution process in robot-centered interactive execution systems. Instructions with multiple sentences about the execution target, logic, and environment are provided by users while guiding a robot. Different from NL-based execution training systems, in which human NL was helping a robot with its task understanding, in NL-based execution systems, human NL was helping a robot with its task executions, in which the understanding of execution requests, working statuses of both robots and humans and the task progress is focused for physically integrating both robots and humans. In NL-based training, a robot created a structure-completed and execution-specified knowledge representation. 
In NL-based task execution, however, besides understanding the task, the robot was also required to understand the surrounding environments, predict human intentions, and make optimal decisions satisfying the constraints from the environment, task executions, robot capabilities, and human requirements. Typical interactive execution systems using NL-based task execution are shown in Figure 6. Given that reasoning was strictly required in NL-based task execution, robot cognition levels in NL-based task execution were higher than those in NL-based execution training.

Figure 6.

Typical NL-based interactive execution systems. (a) A human-centered execution system. A human was performing tasks, such as “assemble a toy”. A robot was standing by and meanwhile prepared to provide help, ensuring the success and smoothness of the human’s task executions. A robot was expected to infer the human’s ongoing activities, detect human needs in a timely manner, and proactively provide the appropriate help, such as “a toy part” [145]. (b) A robot-centered execution system. A robot was autonomously performing a task. A human was standing by to monitor the robot executions. If abnormal executions or execution failures occurred, the human provided timely verbal corrections, such as “stop, grasp the top”, or physical assistance, such as “delivering the robot-needed object” [146].

5.1. Typical NLexe Systems

With respect to who is leading the execution, NL-based interactive execution systems are categorized into human-centered task execution systems and robot-centered task execution systems.

5.1.1. Human-Centered Task Execution Systems

To integrate human mental intelligence and robot physical executions, human-centered task execution systems were designed, in which a human mentally leads the task executions, and a robot mainly provides appropriate physical assistance for facilitating human executions. In this system, speech recognition, NL understanding, and multi-sensor fusion are required, which brings the difficulty of closely monitoring human task processes by combining both NL and multi-sensor data to provide help when needed. This system is mainly designed to provide appropriate physical help to human users when asked. Typical tasks include assembling a device and asking for help like “give me a wrench” or “what is the next step” where robots provide assistance accordingly. NL expressions in task execution deliver information, such as explanations of a human’s execution requests, descriptions of a human’s execution plan, and indications of a human’s urgent needs. With this information, a robot provides appropriate assistance timely. Correspondingly, a robot took on only physical responsibilities, such as “grasping and transferring the fragile objects” [147,148]. Both the human and the robot performed independent sub-tasks by sharing the same high-level task goal. The robot received fewer instructions for its tasks and meanwhile was expected to monitor the human’s task processes so that the robot provided appropriate assistance when the human needs it. This execution proposed a relatively high standard towards the robot cognition on providing appropriate assistance at the right location and time. Overall, in the human-centered NL-based task execution, a human was leading the execution at the cognition level, and a robot provided the appropriate assistance for saving the human’s time and energy, thereby enhancing the human’s physical capability.

Typical NLexe systems with a human-centered task execution manner include the following. (1) Performing tasks, such as “table assembly”, during which the human made task goals (assembly of a specific part) and plans (action steps, pose and tool usages) and partially executed tasks (assembling the parts together), while the robot provided human-desired assistance (tool delivery, part delivery, part holding) that was requested verbally by the human user [149,150]. During the executions, the human took both cognitive and physical responsibilities, and the robot took partial physical responsibilities. (2) Comprehensive human-centered execution was developed so that a human user was only burdened with cognitive responsibilities, such as “explaining the navigation routine” [151,152], “describing the needed objects, location and pose” [153,154], and “guiding the fine and rough processing” [6,155].

5.1.2. Robot-Centered Task Execution Systems

To further improve execution intuitiveness and decrease a human’s mental and physical burdens, robot-centered task execution systems were developed, in which a robot mentally leads the task executions, and the human mainly provides physical assistance for facilitating robot executions. In this system, speech recognition, NL understanding and generation, and multi-sensor fusion are required, which brings the difficulty of understanding the whole execution process and considering the current situation in order to ask for human assistance when needed. This system is mainly designed for acquiring human help when robots are incapable of some part of the task process. Typical tasks include robots moving heavy objects and asking human users for help with NL utterances like “not enough room to pass”. Different from human-centered NLexe systems, in which a human mainly took the cognitive and physical burdens while a robot gave human-needed assistance to facilitate human execution, in robot-centered systems, a robot mainly took the cognitive and physical burdens, while a human gave robot-needed physical assistance to facilitate the robot executions. NL expressions in the robot-centered systems were used for a robot to ask for assistance from a human. Compared with robots in human-centered NLexe systems, where a robot was required to comprehensively understand human behaviors, robots in robot-centered applications were required to comprehensively understand the limitations on robot knowledge, real-world conditions, and both humans’ and robots’ physical capabilities. The advantage was that the human was less involved and their hands and mind were partially set free.

Typical robot-centered NLexe systems include the following. (1) A robot led the industrial assembly, in which a human enhanced the robot’s physical capability by providing it with physical assistance, such as grasping [156] and fetching [157]. (2) A robot executed tasks, such as object moving and elderly navigation in unstructured outdoor environments, in which a human analyzed and overcame the environment limitations, such as object and space availability [158,159,160]. (3) By considering both human NL instructions, such as “go up, ascend, come back towards the tables and chairs”, and indoor environment conditions, such as “door is open that path is available, a large circular pillar is in front that it is an obstacle”, a micro-air vehicle (MAV) intuitively planned its path in an uncertain indoor environment with the assistance of human NL instructions [161].

5.2. Open Problems

NL-based robot execution enables a robot to practically implement its knowledge in complex execution situations. A robot becomes situation-aware in that it analyzes robots’ capability limits, human capability limits, and environmental constraints to facilitate the execution with a human. However, limited by current techniques in both robotics and artificial intelligence, some open problems in NLexe still exist. (1) Robot cognitive levels are still low and human involvement is still intensive, bringing heavy cognitive burdens for a human [146]. (2) It is difficult for a robot to understand human NL requests because human NL expressions are abstract and implicit [162]. It is difficult to interpret high-level abstract human execution requests, such as “bring me a cup of water”, into low-level robot-executable plans, such as “bring action: grasp; speed: 0.1 m/s; ….”. It is also challenging to understand implied meanings, such as “water type: iced water; placement location: table surface (x, y, z); …”, from implicit NL expressions, such as “bring me a cup of water” [44]. (3) Human intentions are difficult to infer because they are dynamic and implicit [163]. (4) It is challenging for a robot to generate NL execution requests. Execution issues related to task execution progress, human status, and robot knowledge gaps are hard to identify; appropriate NL questions covering execution issue description and solution querying are hard to generate [136]. Therefore, an NLexe system involving human cognitive process modeling, intelligent robot decision-making, autonomous robotic task execution, and human and robot physical capability consideration is urgently needed. As shown in Table 4, human-centered task execution and robot-centered task execution focus on different centers in NL-based interactive execution systems.

Table 4.

Summary of NL-based interactive execution systems.

6. NL-Based Social Execution Systems

To make the NLexe system socially natural and acceptable, NL-based social execution systems were developed by involving human social norms in both communication and task executions, as shown in Figure 7. An NL-based social execution system uses NL not only in task execution but also to communicate appropriately with human users under consideration of social norms. In NLexe social execution systems, speech recognition, natural language understanding with extra knowledge, dialog management, natural language generation, and speech synthesis are all required to understand both the user’s intention and social norms. The instruction format used in NLexe social execution systems is relatively more complex and based on an interactive conversation that contains logic correspondence and ambiguous descriptions that have implied referents. A robot needs to be accepted by a human, and it needs to understand what users say and react accordingly. Different users use different expressions, which further increases the complexity. Different from NL-based interactive execution systems, which merely consider task execution details, such as robot and human capabilities, working status, and task statuses, NL-based social execution systems consider social norms as well as execution details.

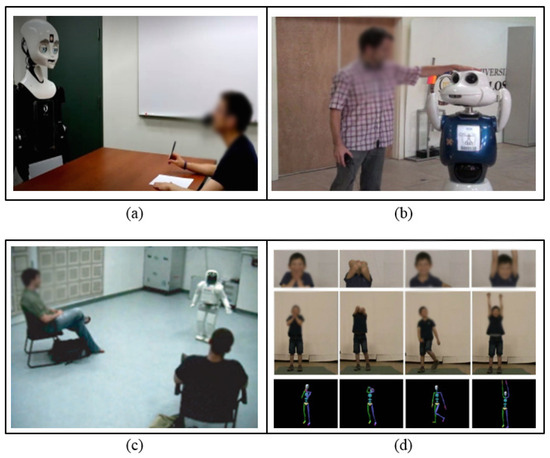

Figure 7.

Typical NL-based social execution systems. (a,b) show NL-based social communication. A robot learned to respond politely to a human’s request, such as “drawing a picture on the paper” [166] and “stop until I touch you” [167]. (c,d) show NL-based social execution. A robot learned to use appropriate body language while speaking, such as during storytelling [168,169].

6.1. Typical NLexe System

According to application scenarios of using social norms, NL-based social execution systems are categorized into social communication systems and social execution systems.

6.1.1. NL-Based Social Communication Systems

To make human-robot communication both correct and appropriate to social norms for natural NLexe, NL-based social communication systems were designed, in which social NL expressions are used to facilitate communication. In this system, speech recognition and highly capable NL understanding and generation are required, which brings the difficulties of exploiting commonsense knowledge to understand both human instructions and environmental conditions and of generating reasonable NL responses. This system is mainly designed for socially acceptable robot execution with consideration of human emotions. Typical tasks include robots using friendly expressions like “please” in conversations with users. Capturing social norms from humans’ NL expressions was helpful in several aspects, such as detecting human preferences in execution, specifying execution roles, such as “leader, follower, cooperator”, and increasing social acceptance. NL in social communications served as an information source, from which both the objective execution methods and the subjective human preferences were extracted.

Typical NL-based social communication systems include the following. (1) A receptionist robot increased its social acceptance in conference arrangements by using social dialogs with pleasant prosodic contours [170]. (2) Cooperative machine operations used social cues, such as a subtle head nod, perked ears, and friendly NL feedback “let’s learn …, can you …, do task x …,” to indicate human execution preferences, such as “please press the red button”, in task executions [171,172]. (3) Health-caregiving robots searched for and delivered objects by considering user speech confidences, user safety, and user roles, such as “primary user, bystander” [173]. (4) Robots adapted to unfamiliar users by using NL expressions with fuzzy emotion statuses, such as “fuzzy happiness, sadness, or anger” [174]. (5) Social NL communications in NLexe were modeled by defining human-robot relations, such as “love”, “friendship”, and “marriage” [175]. (6) A robotic doctor used friendly NL conversations to lead physiotherapy [43,176,177].

6.1.2. NL-Based Social Execution Systems

To make task executions appropriate to social norms for natural NLexe, NL-based social execution systems were designed, in which social NL expressions are used to facilitate executions. In this system, speech recognition, highly capable NL understanding, and environment understanding are required, which brings the difficulty of understanding implied user preferences in human NL and acting accordingly. This system is mainly designed to adapt a robot’s behaviors to the current environment based on human NL and to improve the robot’s social acceptance. Typical tasks include a robot slowing down in a crowded environment when reminded through NL. NL was used to indicate socially preferred executions for robots, enhancing robots’ understanding of the social motivations behind task executions and making robot executions more socially acceptable.

Typical applications using NL-based social executions include the following. (1) A navigation robot autonomously modified its motion behaviors (stop, slower, faster) by considering human density (crowded, dense) when reminded by human NL instructions (“go ahead to move”, “stop”) [178]. (2) A companion robot turned its head towards the human speaker according to the human’s NL tones [179]. (3) A storytelling robot told stories by mapping NL expressions to a human’s body motion behaviors to catch human attention [168,180]. (4) A bartender robot adjusted its serving order in a socially appropriate manner, such as “serve customers one by one, notify the new-coming customers, proactively greet and serve, keep appropriate distance with customers”, according to business situations [181]. (5) By detecting emotion statuses, such as “angry, confused, happy, and impatient”, in human NL instructions, such as “left, right, forward, backward, down, and up”, a surgical robot autonomously adjusted camera positions to prevent harm to patients during surgical operations [182,183].

6.2. Open Problems

NL-based social communication and NL-based social execution focus on two different aspects of NLexe. To develop socially intuitive NLexe systems, the two aspects need to be developed simultaneously. Even though the introduction of social norms in NLexe systems increased the systems’ social acceptance in human-guided task execution, some open problems still exist, impeding performance improvements of NL-based social execution systems. (1) Social norms are too variable to be summarized. Different regions, countries, cultures, and races have different social norms. It is difficult to summarize representative norms from various social norms for supporting robot executions [168]. (2) Social norms are too implicit to be learned easily. It is technically challenging to learn social norms from human behaviors [173]. (3) Social norms currently cannot be evaluated. It is challenging to assess the correctness of social norms because there are no clear standards for judging them. Different persons have different levels of acceptance and tolerance of social behavior [171]. Detailed comparisons, including the instruction manner and format, applications, and respective advantages of NL-based social execution systems, are shown in Table 5.

Table 5.

Summary of NL-based social execution systems.

7. Methods and Realizations for Human Instruction Understanding

To support natural communications between robots and humans during task executions, the key technical challenges are the methods of human instruction understanding and system realization. With instruction understanding, robots extract task-related information from human instructions; with system realization, speech recognition systems are integrated into robotic systems to design NLexe systems.

7.1. Models for Human Instruction Understanding

An accurate understanding of human execution requests influences the accuracy and intuitiveness of robot executions. Over recent decades, human instruction understanding has developed from shallow, literal request understanding to comprehensive, interpretive request understanding. According to the linguistic features involved in analyzing the semantic meanings of execution requests, the understanding models are mainly categorized into two types: literal understanding models and interpreted understanding models.

7.1.1. Literal Understanding Model

Literal understanding models for execution request understanding use literal linguistic features, such as words, Part-of-Speech (PoS), word dependencies, word references, and sentence syntax structures, which are explicitly mentioned in human NL expressions. PoS tagging is a common NLP technique that assigns each word in a text to a particular part of speech based on the word’s definition and its surrounding context. Word dependency indicates the semantic relation between words in a sentence and is used to identify the inner logic of the sentence. Word reference is introduced to resolve co-references, for example to understand what a pronoun refers to. Sentence syntax structures indicate the word order in a sentence, like “subject + verb + object”, and are used to analyze causal relations. According to how literal linguistic features are used, literal understanding models are mainly categorized into predefined models, grammar models, and association models. For predefined models, symbolic literal linguistic features, such as keywords and PoS, are manually defined to model NL requests’ meanings, realizing an initial interactive information sharing and execution between humans and robots.

Feature usage manners of predefined models include the following. (1) Using keywords as triggers to identify task-related targets, such as ‘book, person’ [188]. (2) Using PoS tags, such as ‘verb, noun’, to disambiguate word meanings, such as book (noun: reading materials; verb: buying tickets), in polysemy situations [189]. (3) Using features, such as ‘red color’, to improve robot understanding accuracy in identifying human-desired objects, such as ‘apple’ [190].

For grammar models, grammar patterns, such as execution procedure ‘V(go) + NN(Hallway), V(grasp) + NN(cup)’, logic relation ‘if(door open), then(turn right)’, and spatial relation ‘cup IN room’, were manually defined to model NL requests’ meanings, realizing a relatively improved robot adaptability towards various human NL expressions. Feature usage manners of grammar models include the following. (1) Using action-object relations to define robot grasping methods, such as ‘grasp(cup)’ [191]. (2) Using action-location relations to describe robot navigation behaviors, such as ‘go(Hallway), open(door), turn right (stairs)’ [22]. (3) Using logic relations to define execution preconditions, such as ‘if (door open), then (turn right)’ [192]. (4) Using spatial relations to give execution suggestions, such as ‘(cup)CloseTo(plate)’, to a robot [39].
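The keyword triggers and manually defined grammar patterns described above can be illustrated with a minimal Python sketch. It is not taken from any of the cited systems: the keyword table, the three regular-expression patterns, and the function names `find_targets` and `parse_request` are all hypothetical and chosen only to mirror the examples in the text.

```python
import re

# Hypothetical keyword triggers (predefined model): word -> target type.
KEYWORD_TARGETS = {"book": "object", "cup": "object", "person": "human"}

# Hypothetical hand-written grammar patterns (grammar model), tried in order.
GRAMMAR_PATTERNS = [
    # Logic relation: "if <condition>, then <action>"
    ("logic", re.compile(r"^if (?P<cond>.+?),? then (?P<act>.+)$")),
    # Spatial relation: "<object> in <place>"
    ("spatial", re.compile(
        r"^(?:the )?(?P<obj>\w+) (?:is )?in (?:the )?(?P<place>\w+)$")),
    # Execution procedure: "<verb> [to] [the] <noun>"
    ("action", re.compile(
        r"^(?P<verb>go|grasp|open) (?:to )?(?:the )?(?P<noun>\w+)$")),
]


def find_targets(request):
    """Return (keyword, target type) pairs for every trigger word found."""
    words = re.findall(r"[a-z]+", request.lower())
    return [(w, KEYWORD_TARGETS[w]) for w in words if w in KEYWORD_TARGETS]


def parse_request(request):
    """Match the request against the grammar patterns, first match wins."""
    text = request.lower().strip().rstrip(".")
    for kind, pattern in GRAMMAR_PATTERNS:
        match = pattern.match(text)
        if match:
            return kind, match.groupdict()
    return "unknown", {}


if __name__ == "__main__":
    print(find_targets("Bring the book to that person"))
    print(parse_request("Go to the hallway"))
    print(parse_request("If door open, then turn right"))
    print(parse_request("cup in room"))
```

Because every pattern is written by hand, a request phrased outside these templates falls through to `"unknown"`, which reflects the limited adaptability of such models to varied NL expressions.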

For association models, semantic meanings, such as empirical explanations ‘beverage: juice’, quantitative dynamic values, such as ‘1 m/s’ for NL expressions, such as ‘quickly, slowly’, and execution parameters, such as ‘driller, upper-left corner’, were manually defined to specify NL expressions, such as ‘drill a hole’ [6], scaling up robot knowledge and further improving robot cognition levels. Typical feature usage manners of association models include the following. (1) Using probabilistic correlations, such as ‘beverage-juice (probability 0.7)’, to recommend empirical explanations for disambiguating human NL requests, such as ‘deliver a beverage’ [151]. (2) Using quantitative dynamic values to translate subjective NL expressions, such as ‘quickly, slowly’, into sensor-measurable values, such as ‘0.5–1 m/s’ [193].
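A minimal sketch of the two association-model usages above, assuming simple lookup tables. Only the ‘beverage-juice (probability 0.7)’ pair and the ‘0.5–1 m/s’ range come from the text; the remaining probabilities, the assignment of that range to ‘quickly’, the range for ‘slowly’, and the function names are illustrative assumptions.

```python
# Hypothetical association tables. Only "beverage -> juice (0.7)" and the
# 0.5-1 m/s range appear in the text; all other values are made up.
EXPLANATIONS = {
    "beverage": {"juice": 0.7, "water": 0.2, "coffee": 0.1},
}
SPEED_RANGES_M_PER_S = {
    "quickly": (0.5, 1.0),  # assumed to be the word tied to 0.5-1 m/s
    "slowly": (0.1, 0.3),   # assumed value
}


def most_likely_explanation(term):
    """Return the most probable concrete meaning of an abstract term."""
    candidates = EXPLANATIONS.get(term)
    if not candidates:
        return None
    best = max(candidates, key=candidates.get)
    return best, candidates[best]


def speed_range(request):
    """Map the first subjective speed word in a request to a numeric range."""
    for word in request.lower().split():
        token = word.strip(".,!?")
        if token in SPEED_RANGES_M_PER_S:
            return SPEED_RANGES_M_PER_S[token]
    return None


if __name__ == "__main__":
    print(most_likely_explanation("beverage"))
    print(speed_range("Move quickly to the door"))
```

In a real system these tables would be learned or elicited rather than hard-coded; the sketch only shows how an association turns an abstract or subjective expression into a concrete, measurable parameter.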

7.1.2. Interpreted Understanding Model

Interpreted understanding models for execution request understanding use implicitly interpreted linguistic features, which are not explicitly mentioned in the human NL modality but are implicitly indicated by NL expressions. According to how interpreted features are used, interpreted understanding models are mainly categorized into single-modality models and multi-modality models.

In a single-modality model, interpreted linguistic features are only from the NL information modality. Implicit expressions, such as indicated objects/locations and commonsense-based logic, are inferred from explicit NL expressions for enriching information in human NL commands. Typical interpreted linguistic features include object function interpretations, such as ‘cup: containing liquid’, human fact interpretations, such as ‘preferred action, head motion’, and environment context interpretations, such as ‘elevator (at right side)’. Typical feature usage manners of single-modality models include the following. (1) Combined explicitly-mentioned execution goals, such as ‘drill, clean’, with implicitly-mentioned execution details, such as ‘drill: tool: driller. Action: move down, sweep, move up. Precondition: hole does not exist’, to specify human-instructed abstract plans as robot-executable plans [44]. (2) Combined explicitly-mentioned human NL requests, such as ‘take’, with human physical statuses, such as ‘human torso pose’, to understand human visual perspective, such as ‘gazed cup’ [122].
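The first usage above, combining an explicitly mentioned goal with implicitly indicated execution details, can be sketched as a lookup in a small knowledge base. The ‘drill’ entry is copied from the example in the text; the `TaskKnowledge` structure, the `expand_request` function, and the representation of the world state as a set of fact strings are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskKnowledge:
    """Implicit execution details attached to an explicit goal word."""
    tool: str
    actions: list
    precondition: str


# Hypothetical knowledge base; the "drill" entry mirrors the text's example.
KNOWLEDGE = {
    "drill": TaskKnowledge(
        tool="driller",
        actions=["move down", "sweep", "move up"],
        precondition="hole does not exist",
    ),
}


def expand_request(request, world_state):
    """Turn an abstract request into an executable plan.

    Returns None when the goal is unknown or its precondition is not
    among the facts in world_state.
    """
    words = request.lower().split()
    for goal, knowledge in KNOWLEDGE.items():
        if goal in words:
            if knowledge.precondition not in world_state:
                return None
            return {
                "goal": goal,
                "tool": knowledge.tool,
                "actions": list(knowledge.actions),
            }
    return None


if __name__ == "__main__":
    print(expand_request("drill a hole", {"hole does not exist"}))
    print(expand_request("drill a hole", set()))
```

The request itself names only the goal; the tool, action sequence, and precondition all come from stored knowledge, which is the sense in which the features are interpreted rather than literal.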

In a multi-modality model, interpreted linguistic features come from other modalities, such as the vision, motion, and tactile modalities, as well as from the NL modality. Mutual correlations among modalities enrich and confirm the information embedded in human NL commands. Typical interpreted linguistic features include human tactile indication (tactile modality), human hand/body pose (vision modality and motion dynamics modality), and environmental conditions (environment context modality). Typical feature usage manners include the following. (1) Combined human NL expression ‘person’ with real-time RFID sensor values to identify individual identities [194]. (2) Combined NL expressions, such as ‘hand over a glass of water’, with a tactile event, such as ‘handshaking’, to confirm object exchange between a robot and a human [156]. (3) Combined NL requests, such as ‘very good’, with facial expressions, such as ‘happy’, to enable a social human-robot interaction [170]. (4) Combined NL commands, such as ‘manipulate the screw on the table’, with visual cues, such as ‘screw color’, to perform cooperative object manipulation tasks [195].
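As a rough illustration of usage (4), the sketch below grounds an NL command in a list of visual detections by matching the object label and, when the command mentions one, the color. The `Detection` type, the `ground_object` function, and the confidence values are hypothetical; a real system would take detections from a vision pipeline and use a far richer language parser.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """A hypothetical output of a vision module."""
    label: str
    color: str
    confidence: float


def ground_object(command, detections):
    """Pick the detection that best matches the words of an NL command.

    Label matches are required; a color word in the command narrows the
    candidates further. Ties are broken by detector confidence.
    """
    words = set(command.lower().replace(",", " ").split())
    candidates = [d for d in detections if d.label in words]
    colored = [d for d in candidates if d.color in words]
    pool = colored or candidates
    return max(pool, key=lambda d: d.confidence, default=None)


if __name__ == "__main__":
    scene = [
        Detection("screw", "silver", 0.9),
        Detection("screw", "red", 0.6),
        Detection("cup", "red", 0.95),
    ]
    print(ground_object("manipulate the red screw on the table", scene))
    print(ground_object("manipulate the screw on the table", scene))
```

Here the visual color cue resolves an ambiguity that the word ‘screw’ alone leaves open, which is the kind of mutual confirmation between modalities that the paragraph describes.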

7.1.3. Model Discussion

Literal models are good at scenarios with simple execution procedures and clear work assignments, such as robot arm control and robot pose control. Interpreted models are good at scenarios that involve daily commonsense, human cognitive logic, and rich domain information, such as object searching assisted by object physical properties, intuitive machine-executable plan generation, and vision-verbal-motion-supported object delivery. However, a literal model relies on a large amount of training data to define correlations between NL expressions and practical execution details. Learning these correlations is time-consuming and labor-intensive. An interpreted model’s performance is limited by robot cognition levels and data fusion algorithms. Ref. [196] discussed the synergies between human knowledge and learnable knowledge, providing insights into the natural language model learning process.

7.2. System Realizations

7.2.1. NLP Techniques