1. Introduction

Artificial intelligence techniques draw inspiration from many different sources. One common source of inspiration is nature. Techniques have been inspired by insects, such as the artificial bee colony algorithm [1], the firefly algorithm [2], and ant colony optimization [3]. Other techniques have been inspired by birds, such as cuckoo search [4] and migrating birds optimization [5]. Particle swarm optimization (PSO) is also inspired by birds [6].

These techniques have found use in numerous problem domains. PSO, for example, has been demonstrated to be effective for antenna design, biomedical applications, communication network optimization, classification and control problems, engine design and optimization, fault diagnosis, forecasting, signal processing, power system control, and robotics [7]. Zhang, Wang, and Ji [8], in fact, reviewed nearly 350 papers on particle swarm optimization and identified over 25 variants of the technique, which have been applied in ten broad categories ranging from communications to civil engineering.

Nature is not the only possible source of inspiration. This paper presents a new technique that is inspired by the science fiction concept of time travel. Time travel is a common plot element in science fiction in which a person travels to a different point in time. If it were possible, time travel could be used to answer complex questions; however, it is only hypothetical and can, more practically, serve as a source of inspiration. The technique proposed in this paper applies the concept of time travel by returning to an impactful moment in a PSO run and making alterations there.

The proposed time-travel-inspired technique is an enhancement of PSO. PSO is a heuristic method used to solve complex optimization problems that cannot be solved easily with standard mathematical methods. One issue with PSO is that it often finds solutions that are not the global optimum of the search space. This happens when a particle becomes trapped in a local minimum and leads other particles to this suboptimal solution. Another issue is the time and computational resources that PSO consumes. Enhancements to the base PSO technique attempt to address these issues. Examples include center PSO [9], chaotic PSO [10], and the hybrid genetic algorithm and PSO [11]. Center PSO adds a particle at the center of the search space. This particle has no velocity and is used only for calculating the velocities of the other particles. The center particle is useful because it increases efficiency in the common case where the optimum lies near the center of the search space. Chaotic PSO adds chaos to traditional PSO, which helps the algorithm avoid local optima. The hybrid genetic algorithm and PSO combines PSO with a genetic algorithm to exploit the benefits of both algorithms.

The technique presented in this paper is a potential solution to the issue of being trapped in local minima. The algorithm can be broken down into eight steps, which are explained in detail in Section 3. It begins by running the PSO algorithm, without yet completing the run. The second step is to calculate the most impactful iteration of the run. The third step is to copy the swarm at this iteration. The algorithm continues, in the fourth step, by terminating the particle swarm run. The fifth step is to make an alteration to the copied swarm. The sixth step is to begin a new particle swarm run with the copied swarm. The seventh step is to end this run. Finally, the results of the two runs are compared and the better of the two solutions is chosen. In addition to its use with the base PSO algorithm, this technique has the potential to enhance variations of PSO. This could be achieved by running an enhanced PSO variant, instead of regular PSO, in the first and sixth steps of the algorithm.

This technique aims to change PSO so that it avoids suboptimal solutions. It is expected to find better solutions at the expense of using more computing resources. This paper explores multiple methods of implementing the proposed technique. Two methods for finding impactful iterations are discussed, as are multiple changes to the particle swarm at the impactful iteration. The experiment, outlined in Section 4, assesses the efficacy of combinations of four change variations and two impact calculation variations of the proposed technique on several complex functions representing optimization problems and on several benchmark functions.

This paper continues in Section 2 with background information on related topics, such as artificial intelligence, science fiction, time travel, and PSO. The proposed technique is described in Section 3, and the experimental design is reviewed in Section 4. Data are presented and analyzed in Section 5 (related to several problem domain applications) and Section 6 (related to common PSO evaluation functions). Finally, the conclusions and potential areas for future work are discussed in Section 7.

3. Technique Description

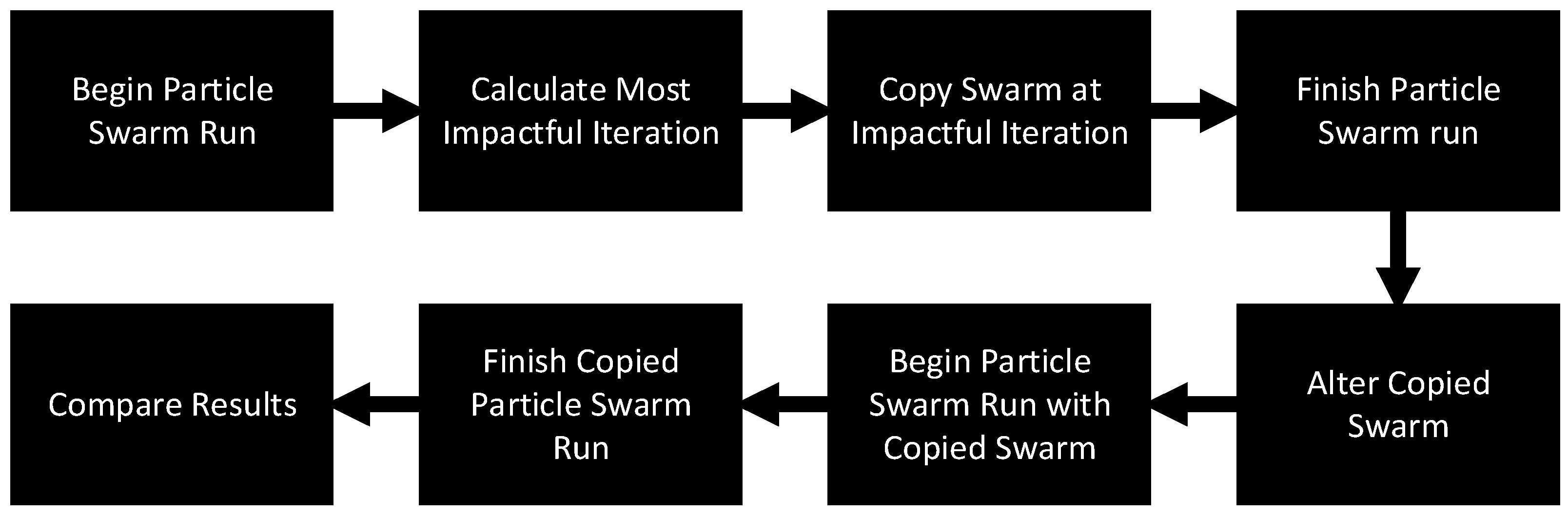

The technique proposed herein is an enhancement of PSO. For easier understanding, the technique is split into eight steps, which are discussed in turn below and depicted in Figure 1. The first step is to begin a particle swarm run. The second step is to calculate the most impactful iteration of this run. The third step is to make a copy of the swarm at the impactful iteration. The fourth step is to terminate the particle swarm run. The fifth step is to make a change to the particle swarm copied in step three. The sixth step is to begin a new particle swarm run with the copied particle swarm. The seventh step is to terminate this particle swarm run. The final step is to compare the results of the two particle swarm runs and choose the better result.
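The control flow of these eight steps can be summarized in a short Python sketch. It is a hypothetical outline, not the authors' implementation: `step`, `total_impact`, `alter`, and `best` are illustrative callables standing in for one PSO iteration, the summed impact of that iteration, the step-five change, and extraction of the best value and position. The sketch uses the first impact calculation method and assumes that the swarm is copied whenever a new most impactful iteration is found, so step three needs no stored history of earlier iterations.

```python
import copy
from typing import Any, Callable, Tuple

Result = Tuple[float, Any]  # (best value, best position)


def time_travel_pso(
    swarm: Any,
    n_iterations: int,
    step: Callable[[Any], None],
    total_impact: Callable[[Any], float],
    alter: Callable[[Any], None],
    best: Callable[[Any], Result],
) -> Result:
    # Steps 1-3: run the swarm, tracking the most impactful iteration
    # (impact method 1) and keeping a copy of the swarm at that iteration.
    greatest, snapshot, snapshot_iteration = 0.0, None, 0
    for iteration in range(1, n_iterations + 1):
        step(swarm)  # advance the first run by one iteration, in place
        impact = total_impact(swarm)
        if iteration > 1 and impact > greatest:  # iteration 1 is skipped
            greatest = impact
            snapshot = copy.deepcopy(swarm)
            snapshot_iteration = iteration
    # Step 4: the first run has reached its termination condition.
    first_result = best(swarm)
    if snapshot is None:  # no impactful iteration was found
        return first_result
    # Step 5: alter the copy; steps 6-7: rerun it to the same final iteration.
    alter(snapshot)
    for _ in range(snapshot_iteration + 1, n_iterations + 1):
        step(snapshot)
    second_result = best(snapshot)
    # Step 8: keep the better (lower) result, since the goal is minimization.
    return min(first_result, second_result, key=lambda r: r[0])
```

Because the copy is restarted from `snapshot_iteration`, the second run covers only the remaining iterations, which matches the iteration count described for step six.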

3.1. First Step: Particle Swarm Run Initiation

The process starts by initiating a particle swarm run. The proposed technique was tested with the base particle swarm optimization algorithm; however, it could easily be adapted for use with other PSO techniques. One minor variation between PSO implementations is the velocity update formula. Although many studies use the original formula, others include small changes. The original velocity update formula is [74]:

$$\mathbf{v}_{i+1} = \mathbf{v}_i + c_1 r_1 (\mathbf{p}_i - \mathbf{x}_i) + c_2 r_2 (\mathbf{g}_i - \mathbf{x}_i) \tag{1}$$

In this formula, bold letters denote vectors containing one element per dimension; scalar terms are not bold. The vector $\mathbf{v}_{i+1}$ contains the particle's velocity for the next iteration, and the vector $\mathbf{v}_i$ contains its velocity in the current iteration. The constants $c_1$ and $c_2$ are weights that set the influence of the particle's best position and the swarm's best position, respectively, on the new velocity. The variables $r_1$ and $r_2$ are pseudorandom numbers generated between zero (inclusive) and one (exclusive). The vectors $\mathbf{p}_i$ and $\mathbf{g}_i$ are the particle's best recorded position and the swarm's best recorded position, respectively. Finally, the vector $\mathbf{x}_i$ denotes the particle's position at the current iteration.

A minor adaptation of this velocity update formula adds one constant and changes nothing else. The added constant, denoted $w$, is called the inertia weight and scales the influence of the velocity at the current iteration. The remaining terms are denoted as before. This updated formula is [75]:

$$\mathbf{v}_{i+1} = w\,\mathbf{v}_i + c_1 r_1 (\mathbf{p}_i - \mathbf{x}_i) + c_2 r_2 (\mathbf{g}_i - \mathbf{x}_i) \tag{2}$$

The velocity update equation for the proposed technique is a further minor adaptation of Equation (2): the only change is the removal of both stochastic variables, $r_1$ and $r_2$. They were removed to simplify the formula slightly and to reduce randomness in the environment, so that fewer variables change the results of the proposed technique. The formula used for the proposed technique is shown here, with all terms denoted as before:

$$\mathbf{v}_{i+1} = w\,\mathbf{v}_i + c_1 (\mathbf{p}_i - \mathbf{x}_i) + c_2 (\mathbf{g}_i - \mathbf{x}_i) \tag{3}$$
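As a minimal illustration, the deterministic update of Equation (3) can be written per dimension in Python. This sketch assumes vectors are plain lists of floats; the default constants are the initial values $w = 0.65$ and $c_1 = c_2 = 1.15$ given for the fifth step, and the function name is hypothetical.

```python
from typing import List, Sequence


def velocity_update(
    v: Sequence[float],
    x: Sequence[float],
    p: Sequence[float],
    g: Sequence[float],
    w: float = 0.65,
    c1: float = 1.15,
    c2: float = 1.15,
) -> List[float]:
    """Deterministic velocity update of Equation (3), applied per dimension:
    v_next = w * v + c1 * (p - x) + c2 * (g - x), with no r1, r2 factors."""
    return [
        w * vd + c1 * (pd - xd) + c2 * (gd - xd)
        for vd, xd, pd, gd in zip(v, x, p, g)
    ]
```

For example, with $\mathbf{v}_i = (1, 0)$, $\mathbf{x}_i = (0, 0)$, $\mathbf{p}_i = (1, 2)$, and $\mathbf{g}_i = (2, -1)$, the update gives $(0.65 + 1.15 + 2.3,\ 2.3 - 1.15) = (4.1, 1.15)$, up to floating-point rounding. Because there are no random factors, the same inputs always produce the same new velocity.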

The particle swarm is initialized with ten particles, which begin at random locations in the search space. The swarm is then run for 100 iterations. The results of this run are saved for comparison with the second particle swarm run.
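The first run can be sketched as follows, under stated assumptions. The search bounds, the initial velocity range, and the `sphere` objective are hypothetical placeholders (the paper's test functions are not reproduced here). Positions are moved with the usual rule $\mathbf{x}_{i+1} = \mathbf{x}_i + \mathbf{v}_{i+1}$, and the global best is updated as soon as any particle improves on it; the text does not specify whether updates are synchronous or asynchronous. Ten particles and 100 iterations match the settings above.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple


def sphere(x: Sequence[float]) -> float:
    """Hypothetical test objective with its global minimum of 0 at the origin."""
    return sum(xi * xi for xi in x)


def run_pso(
    objective: Callable[[Sequence[float]], float],
    dim: int = 2,
    n_particles: int = 10,
    n_iterations: int = 100,
    bounds: Tuple[float, float] = (-5.0, 5.0),
    w: float = 0.65,
    c1: float = 1.15,
    c2: float = 1.15,
    seed: Optional[int] = None,
) -> Tuple[List[float], float, List[float]]:
    rng = random.Random(seed)
    lo, hi = bounds
    v_max = 0.1 * (hi - lo)  # assumed initial velocity range
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[rng.uniform(-v_max, v_max) for _ in range(dim)] for _ in range(n_particles)]
    pbest = [list(x) for x in xs]
    pbest_val = [objective(x) for x in xs]
    k = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = list(pbest[k]), pbest_val[k]
    history = [gbest_val]  # best value after initialization and each iteration
    for _ in range(n_iterations):
        for i in range(n_particles):
            # Deterministic velocity update (Equation (3)), then move the particle.
            vs[i] = [w * v + c1 * (p - x) + c2 * (g - x)
                     for v, x, p, g in zip(vs[i], xs[i], pbest[i], gbest)]
            xs[i] = [x + v for x, v in zip(xs[i], vs[i])]
            val = objective(xs[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = list(xs[i]), val
                if val < gbest_val:  # global best updated immediately
                    gbest, gbest_val = list(xs[i]), val
        history.append(gbest_val)
    return gbest, gbest_val, history
```

Since the personal and global bests are only ever replaced by lower values, the recorded best value never increases from one iteration to the next.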

3.2. Step Two: Impactful Iteration Identification

The second step of the proposed technique is to calculate the most impactful iteration. This step is performed during the first particle swarm run, which is not finished until the fourth step. To determine the most impactful iteration, a numerical measure of impact was used. Impact was defined as the extent to which a particle was influenced by other particles in one iteration. Impact was calculated for each particle separately using the equation:

$$m_i = \lVert \mathbf{g}_i - \mathbf{x}_i \rVert$$

Here, $m_i$ denotes the particle's impact at the current iteration, $\mathbf{g}_i$ represents the global best position of the swarm, and $\mathbf{x}_i$ represents the current position of the particle. This formula was derived from the definition given in the previous paragraph. The only point in the algorithm where a particle is affected by other particles is the velocity update, and the only term of the velocity update equation (Equation (3)) that contains effects from other particles during the current iteration is the third term, $c_2(\mathbf{g}_i - \mathbf{x}_i)$.

The velocity update equation has two other terms. The first includes only the inertia weight and the current velocity. Even though the current velocity contains information that was influenced by other particles, that information was incorporated in previous iterations. This term therefore reflects only the impact of previous iterations and is not considered when calculating the impact of the current iteration. The second term, $c_1(\mathbf{p}_i - \mathbf{x}_i)$, contains a constant and the displacement of the particle from its own best recorded position. This term includes no information from other particles and is therefore not included in the impact calculation. The last term, $c_2(\mathbf{g}_i - \mathbf{x}_i)$, contains a constant and the displacement of the particle from the current global best position. Since the global best position contains information from other particles, this term directly affects the particle's velocity update, and no other term carries influence from other particles in the current iteration. It is thus the only term that matters for calculating impact. Finally, because $c_2$ is a constant shared by every particle, it was removed from the impact calculation.
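A minimal sketch of the impact calculation, assuming that the impact of a particle is the Euclidean length of $\mathbf{g}_i - \mathbf{x}_i$ and that the total impact of an iteration is the sum over all particles; both function names are hypothetical. Because $c_2$ multiplies every particle's term equally, omitting it scales every total by the same factor and therefore does not change which iteration has the largest total, nor the relative increases used by the second method.

```python
import math
from typing import Sequence


def particle_impact(g: Sequence[float], x: Sequence[float]) -> float:
    """Impact m_i of one particle: the length of g_i - x_i (Euclidean norm assumed).
    c2 is omitted because it is the same for every particle."""
    return math.dist(g, x)


def iteration_total_impact(g: Sequence[float], positions: Sequence[Sequence[float]]) -> float:
    """Total impact of one iteration: the sum of every particle's impact."""
    return sum(particle_impact(g, x) for x in positions)


# Two particles with the global best at the origin: impacts 5.0 and 1.0.
total = iteration_total_impact([0.0, 0.0], [[3.0, 4.0], [0.0, 1.0]])
```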

The most impactful iteration, which is defined as the iteration where the most impact occurred, determines which iteration the algorithm will return to later.

To determine which iteration would be most effective to travel back to, the most impactful iteration was calculated using two different methods. In the first method, shown in Listing 1, the impacts of all particles are summed for each iteration, and these total impacts are compared; the iteration with the highest total impact is the most impactful iteration. The second method, shown in Listing 2, is similar to the first. The difference is that a more impactful iteration replaces the current most impactful iteration only if its total impact is greater by a certain amount. For this experiment, a comparison was performed during every iteration of the particle swarm run. The largest total impact recorded so far was subtracted from the current total impact, and the result was divided by the largest total impact recorded. This relative increase was compared with a set value of 0.05, which was chosen to ensure that the increase was large enough to be worth the computational cost of copying the swarm. If the relative increase was greater than 0.05, the current total impact replaced the recorded largest total impact; otherwise, the current total impact was ignored. For both methods, the largest total impact and the iteration at which it occurred are saved for use in the next step.

Listing 1. Impact Calculation Method 1.

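    def most_impactful_iteration_method1(iteration_impacts):
        # iteration_impacts[t] lists every particle's impact at iteration t
        # (0-based), so index 0 (iteration 1) is skipped as in the original listing.
        greatest_total_impact = 0.0
        most_impactful_iteration = None
        for t in range(1, len(iteration_impacts)):
            iteration_total_impact = sum(iteration_impacts[t])
            if iteration_total_impact > greatest_total_impact:
                greatest_total_impact = iteration_total_impact
                most_impactful_iteration = t
        return most_impactful_iteration, greatest_total_impact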

Listing 2. Impact Calculation Method 2.

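    IMPACT_THRESHOLD = 0.05  # minimum relative increase needed to replace the record

    def most_impactful_iteration_method2(iteration_impacts, threshold=IMPACT_THRESHOLD):
        # Same input layout as Listing 1; index 0 (iteration 1) is skipped.
        greatest_total_impact = 0.0
        most_impactful_iteration = None
        for t in range(1, len(iteration_impacts)):
            iteration_total_impact = sum(iteration_impacts[t])
            if most_impactful_iteration is None:
                # Nothing recorded yet; the relative increase would divide by zero.
                replace = iteration_total_impact > greatest_total_impact
            else:
                relative_increase = (iteration_total_impact - greatest_total_impact) / greatest_total_impact
                replace = relative_increase > threshold
            if replace:
                greatest_total_impact = iteration_total_impact
                most_impactful_iteration = t
        return most_impactful_iteration, greatest_total_impact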

3.3. Third Step: Swarm Duplication

The third step is to create a copy of the particle swarm at the most impactful iteration found in the previous step. Every variable is copied over. This includes the number of particles, the positions of the particles, the velocities of the particles, the individual best position found for each particle, and the global best position found by the swarm. This copied swarm will be used for the second particle swarm run.
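The duplication can be sketched with Python's `copy.deepcopy`. The `Swarm` dataclass and `duplicate_swarm` are hypothetical containers for the variables listed above (the number of particles is derived from the positions), not the authors' data structures. A deep copy matters here because the first run keeps modifying its own lists after the copy is taken.

```python
import copy
from dataclasses import dataclass
from typing import List


@dataclass
class Swarm:
    """Hypothetical container for the state that step three copies."""
    positions: List[List[float]]
    velocities: List[List[float]]
    personal_bests: List[List[float]]
    global_best: List[float]

    @property
    def n_particles(self) -> int:
        return len(self.positions)


def duplicate_swarm(swarm: Swarm) -> Swarm:
    # A deep copy gives the duplicate its own nested lists, so continuing the
    # first run cannot change the copy (a shallow copy would share the lists).
    return copy.deepcopy(swarm)
```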

3.4. Fourth Step: Particle Swarm Run Completion

The fourth step is to finish the first particle swarm run by reaching a termination condition. The most common termination condition for PSO, and the one used for this technique, is reaching a set number of iterations. An iteration limit is necessary because PSO typically does not find the global optimum, so a run cannot simply be stopped once that optimum is reached. The result obtained when this particle swarm run terminates is recorded for use in the last step.

3.5. Fifth Step: Duplicated Swarm Alteration

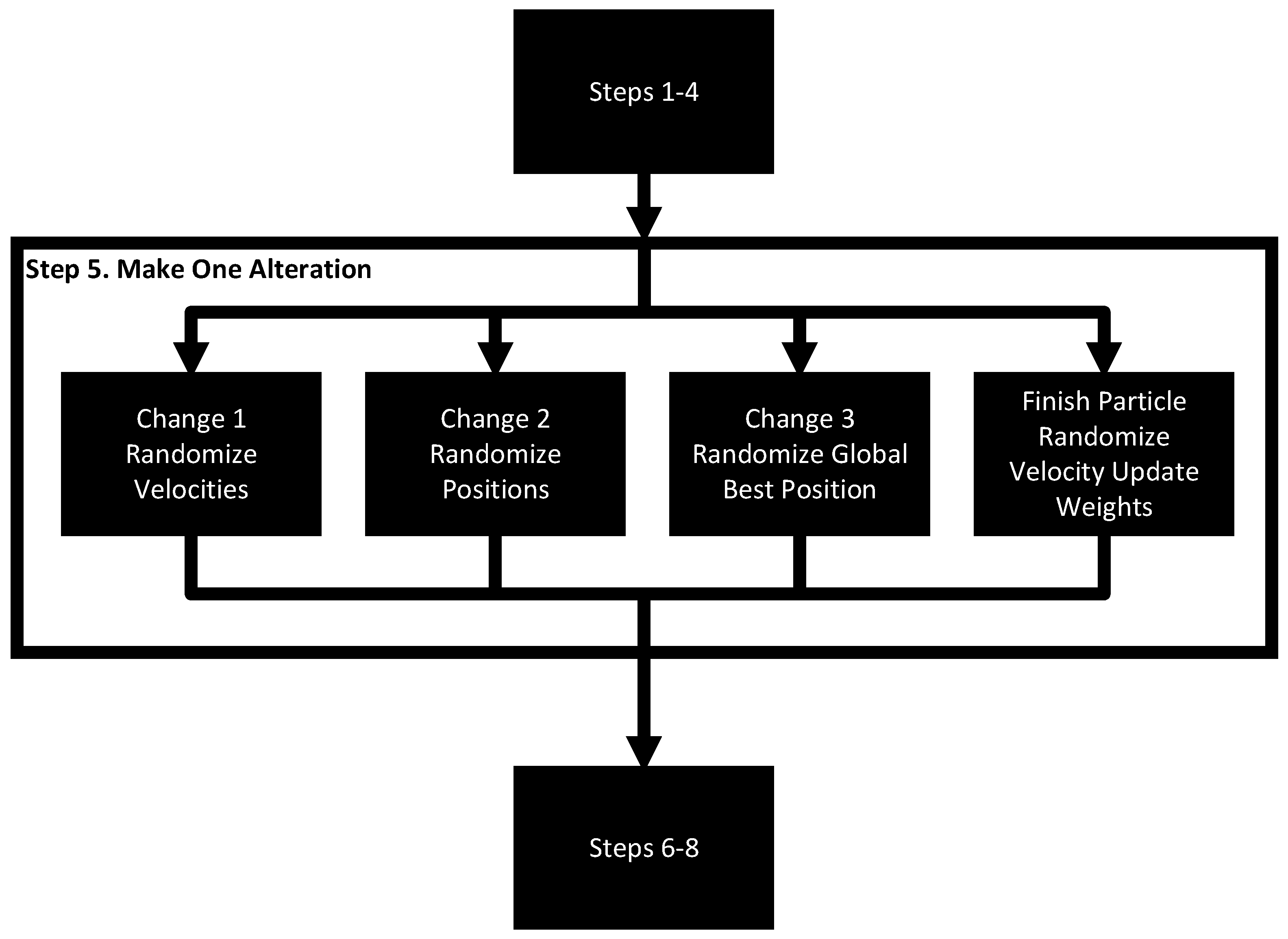

The fifth step, which is depicted in Figure 2, is to alter the copied swarm from step three. The original velocity update formula for PSO contains stochastic variables, so a swarm restarted from the copied iteration and terminated at the same iteration as the original would produce a different final result even without any deliberate change. One major goal of the proposed technique was for the particles to avoid being trapped by local optima. A concern with relying on these variables as the only difference between the original swarm and the copied swarm was that they might not provide a large enough change to escape a local optimum in the second particle swarm run. Instead, a deterministic velocity update formula was used, along with other changes, to solve this problem. The determinism of this formula allows the changes to be compared with fewer stochastic variables altering the results.

To test which form of alteration to the copied swarm would be most effective, four different types of changes were analyzed. The first change randomized the velocities of all particles, within the same range used for their initial randomization. New velocities at this point allow each particle to travel to a location very different from the one it reached in the initial particle swarm run. The particles then travel back toward the global best position and their own best recorded positions, allowing different areas of the search space to be explored. The second change randomized the position of each particle within the same range used for initialization. This also allowed each particle to explore areas of the search space it normally would not explore, while giving it a head start toward the global best position found so far and its own best recorded position. The third change randomized the global best position. This change was intended to pull particles toward parts of the search space that were not well explored, while allowing them to search for better locations along the way. The final change altered the constants used in the velocity update equation: the inertia weight ($w$), the individual weight ($c_1$), and the global weight ($c_2$). These weights began with the values 0.65, 1.15, and 1.15, respectively, and were randomized in this change within the ranges 0.55–0.65, 1.2–1.3, and 1.0–1.1, respectively.
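Each of the four changes can be expressed as a small function. This is a sketch under assumptions: the initialization ranges `v_range` and `x_range` are parameters because the text does not state them, the hypothetical functions return new values rather than modifying a swarm in place, and the text does not say whether change 3 also resets the stored global-best value, so only the position is replaced here. The constant ranges for change 4 are taken from the paragraph above.

```python
import random
from typing import Dict, List, Tuple

Range = Tuple[float, float]

# Change 4 ranges for (w, c1, c2), from the text above.
CONSTANT_RANGES: Dict[str, Range] = {"w": (0.55, 0.65), "c1": (1.2, 1.3), "c2": (1.0, 1.1)}


def randomize_velocities(velocities: List[List[float]], v_range: Range,
                         rng: random.Random) -> List[List[float]]:
    """Change 1: new velocities drawn from the initial velocity range."""
    return [[rng.uniform(*v_range) for _ in v] for v in velocities]


def randomize_positions(positions: List[List[float]], x_range: Range,
                        rng: random.Random) -> List[List[float]]:
    """Change 2: new positions drawn from the initialization range."""
    return [[rng.uniform(*x_range) for _ in x] for x in positions]


def randomize_global_best(global_best: List[float], x_range: Range,
                          rng: random.Random) -> List[float]:
    """Change 3: a random global best position (its stored value is not handled here)."""
    return [rng.uniform(*x_range) for _ in global_best]


def randomize_constants(rng: random.Random) -> Dict[str, float]:
    """Change 4: new w, c1, c2 drawn from CONSTANT_RANGES."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in CONSTANT_RANGES.items()}
```

Passing a seeded `random.Random` instance keeps each change reproducible, which fits the paper's aim of limiting uncontrolled randomness.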

3.6. Step Six: Altered Swarm Run Initiation

The proposed technique continues in the sixth step by initiating a new particle swarm run using the copied and altered particle swarm. This run begins at the iteration from which the swarm was copied in step three, and it terminates at the same iteration specified for the first particle swarm run. The second particle swarm run therefore runs for fewer iterations than the first. For example, if the first run was specified to stop at iteration ten and the swarm was copied at iteration four, the second particle swarm run will last six iterations. The only alteration to the copied swarm is the change made during the fifth step.

3.7. Step Seven: Swarm Run Completion

Step seven is to finish the second particle swarm run. This occurs automatically when the iteration count reaches the number specified by the termination condition. The best position and its value are then recorded.

3.8. Step Eight: Swarm Results Comparison

The final step compares the best positions recorded by the first and second particle swarm runs. The better of the two is used as the final output of the technique.

4. Experimental Design

An experiment was performed to test the efficacy of the proposed technique by comparing execution times, positions, and optimum values. The proposed technique was compared with basic PSO using the velocity update equation presented as Equation (3).

The effects of the four changes and the two impact calculation methods were compared and tested on four two-dimensional functions. These functions were chosen because they have a high number of local optima, which tests the proposed technique's effectiveness in finding new optima; because they have extremely large domains; and because they are difficult to optimize even with only two dimensions. Two-dimensional functions were chosen to allow visualization and easier understanding. The four functions used were:

Function 1:

Function 2:

Function 3:

Function 4:

The experiment tested each combination of change and impact calculation method on each function individually. The proposed algorithm was run 1000 times for each of these combinations, using a swarm of ten particles for 100 iterations. The time taken by the PSO portion of the algorithm was recorded for comparison with the total time of the algorithm, which was recorded in the same run. The final best positions and values were recorded both for the regular particle swarm run, before backtracking to the impactful iteration, and after the entire algorithm had finished. When all tests were completed, averages of these values were calculated; they are presented in tables in Section 5.

In Section 6, a similar procedure was used to collect performance data for several benchmark functions. For these functions, only 100 runs per experimental condition were performed.

5. Data and Analysis

This section presents the results of the experimentation performed using the four functions described in the previous section. Section 5.1 describes and compares the performance and speed of the proposed method with those of standard PSO. Section 5.2 describes how often, and to what extent, the proposed technique showed improvement over standard particle swarm optimization.

5.1. Performance and Time Comparison

This section compares the different speeds, changes in position, and changes in final value between standard PSO and the proposed technique. The data are organized into multiple tables to compare the efficacy of the technique with regards to different functions, changes, and impact calculations. The column headers for each table in this section are now described in detail.

“PSO Time” refers to the average time taken for particle swarm optimization to calculate 100 iterations for ten particles. “Total Time” is the average time taken for the entire proposed technique to run, including all steps described in Section 3. “Change in X1” is the average distance between the final best X1 position of the standard particle swarm run and that of the proposed technique. “Change in X2” is the same as “Change in X1”, but for the X2 positions. “Change in Value” is the average change from the final optimum value found by the PSO algorithm to the final optimum value found by the proposed technique; lower values in this column mean that the proposed technique improved the average value. “Value” is the average of the final optimum value discovered after 100 iterations. Lower (more negative) values indicate better optima, because the test aims to find the global minimum.
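The per-run quantities behind these columns could be aggregated as in the sketch below. It assumes, hypothetically, that each run is stored as a tuple of the two final best positions and values, that “distance” means the absolute difference along one axis, and that “Value” refers to the proposed technique's final value; `summarize_runs` is an illustrative name, and the timing columns are omitted.

```python
from statistics import mean
from typing import Dict, List, Sequence, Tuple

# One record per run: (PSO best position, PSO best value,
#                      technique best position, technique best value)
RunRecord = Tuple[Sequence[float], float, Sequence[float], float]


def summarize_runs(runs: List[RunRecord]) -> Dict[str, float]:
    """Average the Section 5.1 position and value columns over all runs."""
    return {
        "Change in X1": mean(abs(tp[0] - pp[0]) for pp, _, tp, _ in runs),
        "Change in X2": mean(abs(tp[1] - pp[1]) for pp, _, tp, _ in runs),
        "Change in Value": mean(tv - pv for _, pv, _, tv in runs),
        "Value": mean(tv for _, _, _, tv in runs),
    }
```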

The data in this section are divided into three groups to facilitate comparison; these groups show some of the same information from different perspectives. The first group, Table 1, Table 2, Table 3 and Table 4, presents the results for functions 1 to 4, respectively. The second group, Table 5, Table 6, Table 7 and Table 8, is organized by the different changes made in step 5 of the algorithm. Finally, Table 9 and Table 10 present the two different impact calculation methods.

Some trends can be seen in these data. Function 3 shows larger deviations from the mean values and larger changes in value than the other functions, even when change 3 is excluded from the calculations; functions 1, 2, and 4 are more consistent in this regard. When change 3 is not considered, functions 1 and 2 show much larger changes in position than functions 3 and 4. These large variations likely indicate that, for functions 1 and 2, the particles in the proposed technique ended up in different minima. The time taken for PSO and for the proposed technique varies by function.

When the data are compared from the perspective of changes instead of functions, some interesting trends stand out. First, and most notably, change 3 finds consistently worse values, by many orders of magnitude, in all tests, indicating that change 3 does not perform well at finding optimum values. The average time taken for PSO runs is consistent across the different changes, because the changes do not affect the PSO process. Another notable trend is that the total time taken varies very little between changes, indicating that the changes have similar computational costs. Apart from change 3, changes in position depend more on the function than on the type of change. This suggests that these changes are capable of finding new minima in functions 1 and 2, as was the goal of the technique.

The average PSO time for impact calculation 1 was approximately 2198.17, and for impact calculation 2 it was approximately 2273.43. The lack of a major difference here was expected, because standard PSO does not use the impact calculation. The average total time for impact calculation 1 was approximately 4231.63, while it was 4535.92 for impact calculation 2; this is also not a major difference. The differences in the other columns between the two impact calculation methods are also minor.

5.2. Improvement Comparison

This section evaluates how often the proposed technique produced an improvement and the magnitude of the improvement when it occurred. The table headers for the data presented in this section are now described in detail.

“Runs Improved” represents the percentage of runs, out of 1000, in which the proposed technique found a better solution than standard PSO. “Improvement” indicates the average change from the PSO's final optimum value to the proposed technique's final optimum value, over the runs in which improvement occurred. This is the same as “Change in Value” from Section 5.1, with the runs in which improvement did not occur excluded from the calculation.
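Both columns can be computed from the paired final values of each run. In this sketch, `improvement_stats` is a hypothetical helper; runs are assumed to be paired by index, ties count as not improved, and `None` is returned for “Improvement” when no run improved.

```python
from typing import Optional, Sequence, Tuple


def improvement_stats(
    pso_values: Sequence[float], technique_values: Sequence[float]
) -> Tuple[float, Optional[float]]:
    """Return ("Runs Improved" in percent, "Improvement") for paired runs.
    Minimization is assumed, so a negative change is an improvement."""
    changes = [t - p for p, t in zip(pso_values, technique_values)]
    improved = [c for c in changes if c < 0]
    runs_improved = 100.0 * len(improved) / len(changes)
    # Average change over improved runs only; None if no run improved.
    improvement = sum(improved) / len(improved) if improved else None
    return runs_improved, improvement
```

For instance, four runs with PSO values (1, 2, 3, 4) and technique values (0.5, 2, 1, 4) give 50% of runs improved and an average improvement of (−0.5 − 2)/2 = −1.25.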

The tables in this section are grouped as in Section 5.1. They are split into three groups, each containing all of the information in this section; the grouping allows for easier comparison of the data. Table 11, Table 12, Table 13 and Table 14 are grouped by function. Table 15, Table 16, Table 17 and Table 18 are grouped by the four changes. The last group consists of Table 19 and Table 20, which are organized by the two PSO impact calculation methods.

Several conclusions can be drawn from the first group of tables, which compare the different functions. The average values of the “Runs Improved” column for Table 11, Table 12, Table 13 and Table 14 are 42.7%, 36.2%, 38.9%, and 38.9%, respectively. This shows that the proposed technique was effective most often for function 1, less frequently for functions 3 and 4, and least often for function 2. The average values of the “Improvement” column for the same tables are −0.92, −0.20, −5.07, and −2.22, respectively. This means that when the proposed technique improved, it improved much more for function 3 than for the other functions. The technique shows the next best improvement for function 4, then function 1, and finally function 2. Taking both columns together, the technique performed worst for function 2 on both metrics.

There are also several notable trends in the second group of tables, which compare the efficacy of the changes. First, change 3 improved considerably less often than the other three changes: the average value of the “Runs Improved” column was 19.0% for change 3, compared with 44.3%, 43.1%, and 50.3% for changes 1, 2, and 4, respectively. Changes 1 and 2 improved about equally often, and change 4 improved most often. The average values of the “Improvement” column are −2.34, −2.49, −1.97, and −1.60 for changes 1, 2, 3, and 4, respectively. These data show that change 3 provided the second smallest improvement when improvement occurred. Combined with the finding that change 3 improved least often, this shows that the other changes were more effective than change 3. Changes 1 and 2 produced similar improvements when improvement occurred, and both improved considerably more than change 4, which produced the smallest improvement in runs where improvement occurred.

When comparing the results for the third group, there is little difference between the two impact calculation methods. The average value of the “Runs Improved” column is 39.4% for Table 19 and 39.1% for Table 20, while the “Improvement” values are −2.20 for Table 19 and −2.09 for Table 20. This shows that the two impact calculation methods lead to approximately the same frequency and magnitude of improvement by the proposed technique.

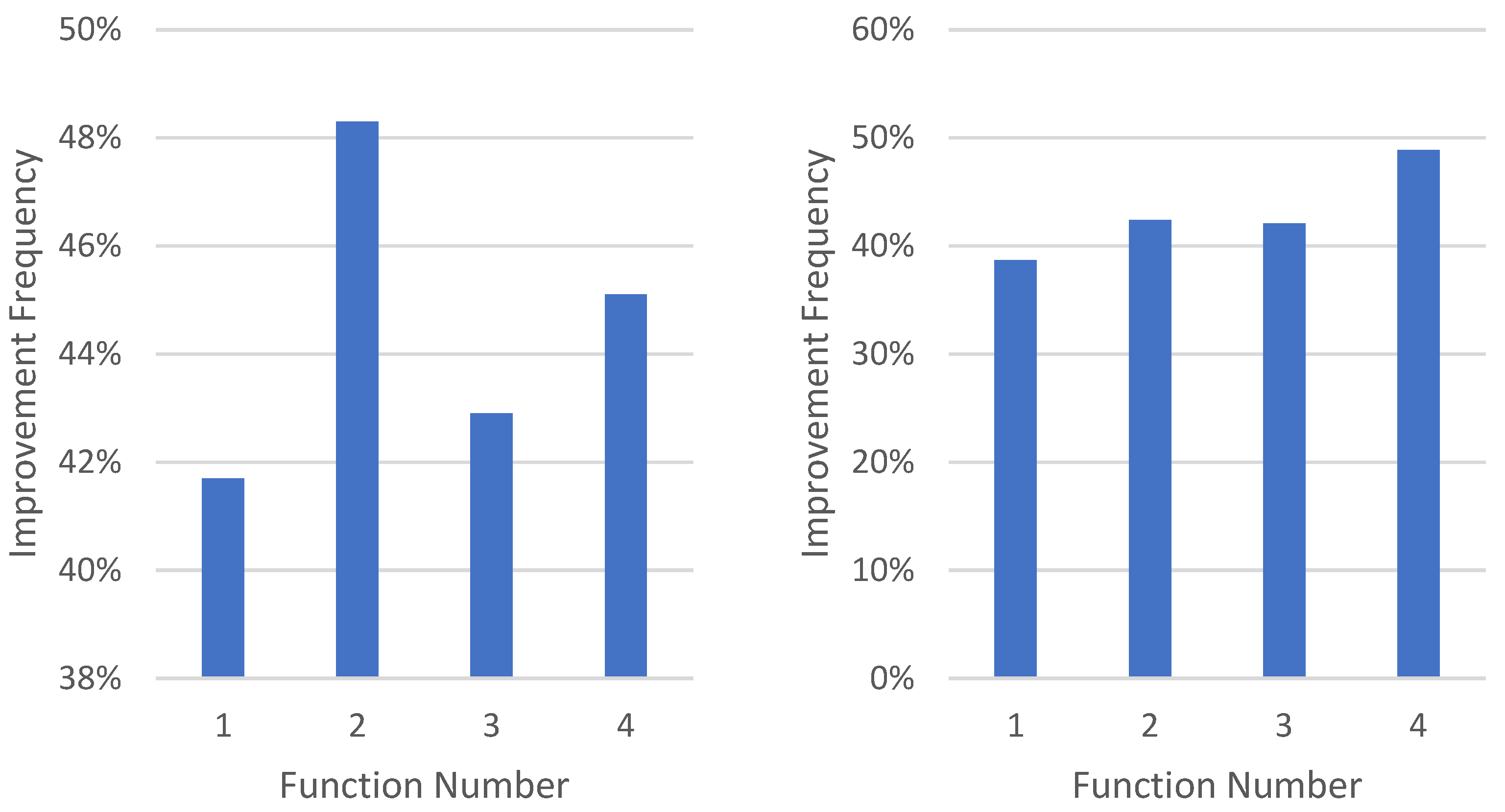

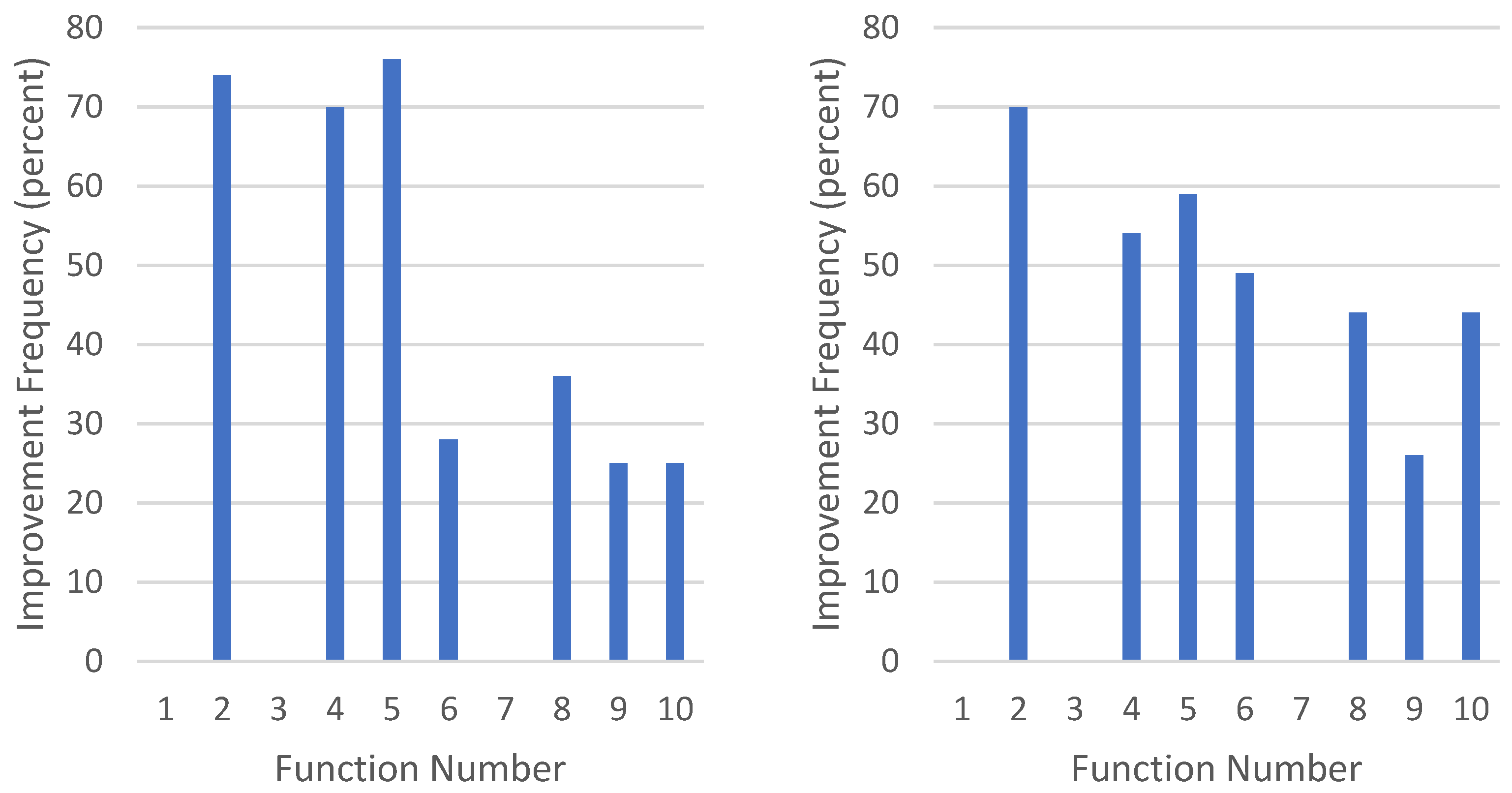

Figure 3 and Figure 4 show the frequency of improvement for each function, for all of the changes.

6. Evaluation with Standard PSO Benchmark Functions

The proposed technique was also tested with ten functions that have previously been used as benchmarks for testing PSO and new techniques based on PSO [76]. These data are presented in Table 21, Table 22, Table 23, Table 24, Table 25, Table 26, Table 27, Table 28, Table 29 and Table 30. Functions 1, 2, 4, 5, and 7 were used to test an improved PSO algorithm in [77]. Functions 1, 2, 3, 4, and 7 were also used to test another improved PSO algorithm in [78]. Additionally, functions 1, 2, 3, 4, 5, 7, and 10 have been considered when deciding which benchmark functions should become an accepted standard for testing PSO algorithms [79]. All of the functions used in this section were tested with 20 dimensions, except for functions 9 and 10. Functions 1, 3, 6, 8, 9, and 10 are known as the Rastrigin function, Griewank function, Schwefel function, Sine function, Himmelblau function, and Shubert function, respectively [76]. These functions are highly multimodal, which makes them effective choices for testing the efficacy of PSO-based techniques. Function 2 (the Spherical function) and function 4 have considerably fewer minima. Function 5 is of particular interest because it adds noise through a random variable, making it more difficult to optimize. These tests were performed in a similar manner to those in Section 5: for each combination of function, impact method, and change, 100 tests of ten particles over 100 iterations were conducted and averaged.

Several conclusions can be drawn from the results of the tests on the benchmark functions. Most notably, change 3 performed much better on these functions than on the functions discussed in Section 5. In Section 5, change 3 was outperformed by the other changes in both improvement frequency and improvement amount on most functions. In this section, change 3 achieved a considerably higher level of improvement than the other changes for functions 6, 8, and 9. It also improved about as often as the other changes for functions 2 and 9, and it performed well for functions 4 and 5, where it improved the most or second most often.

Change 2 performed on these functions much as it had on the functions in Section 5. On average, it improved more often than the other changes for most functions, which is similar to the results from Section 5. Change 1, however, behaved quite differently on these functions. Change 2 notably outperformed change 1 in frequency of improvement for functions 2, 4, and 5, whereas these two changes were roughly equal in performance in the tests in Section 5. Change 1 still outperformed change 2 for functions 9 and 10, although not by much.

Like change 2, change 4 performed about as well on the benchmark functions as it did in Section 5. Change 4 performed moderately well for the functions in Section 5 and repeated this trend for the benchmark functions. It was rarely the best or the worst change for the benchmark functions in terms of frequency of improvement. In terms of improvement amount, it tended to do worse than all other changes on average, although it did show the highest improvement on function 10.

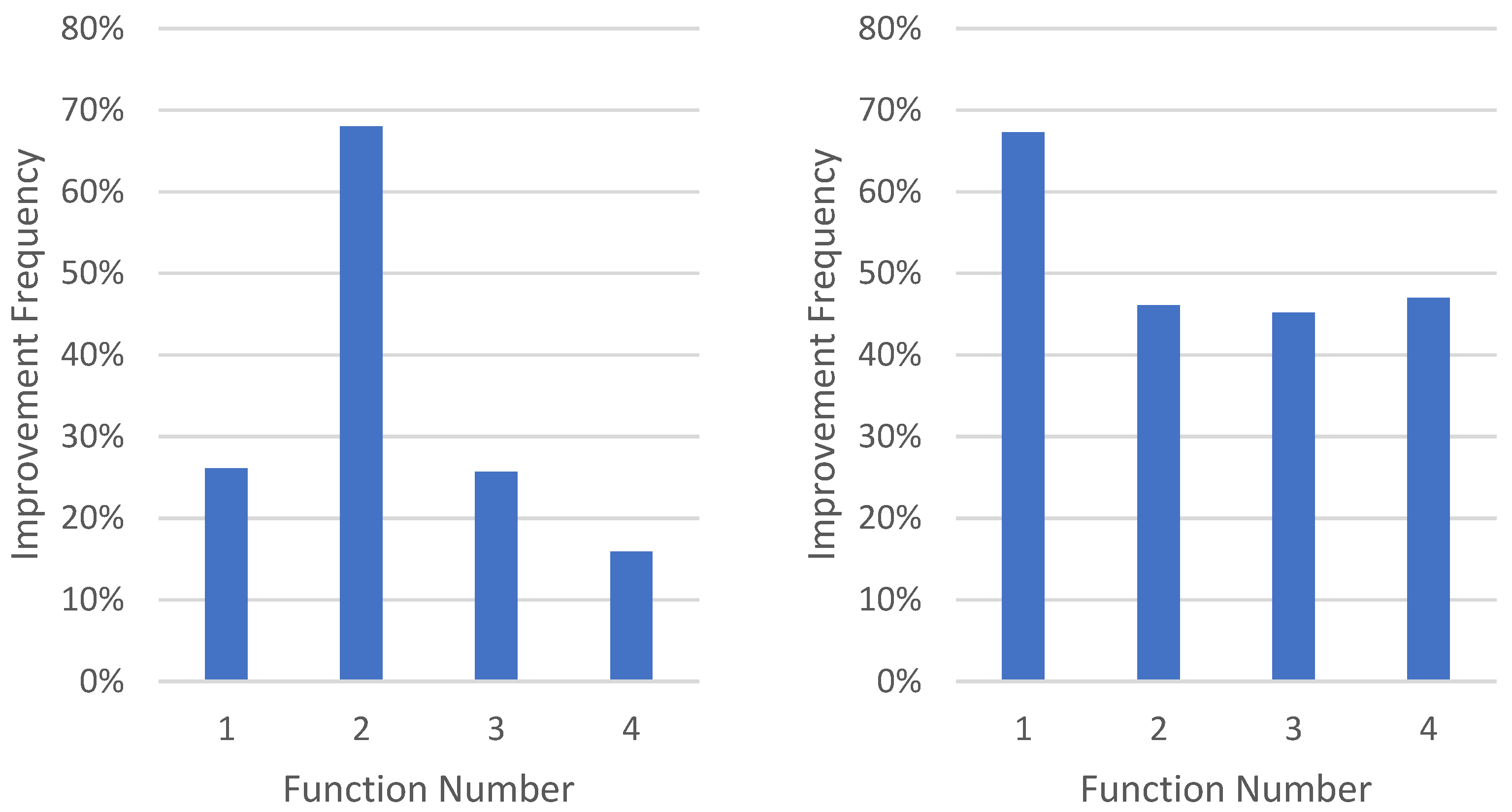

Figure 5 and Figure 6 show the frequency of improvement for each function, for all of the changes.

Compared with other algorithms that have been analyzed in prior work, the approaches proposed herein appear to outperform some and underperform others. Because the testing conditions used in prior work differ considerably, direct comparison has notable limitations. Engelbrecht [78], who noted the importance of swarm size in comparisons, performed evaluations with a swarm size of ten (as did the work presented herein), among other sizes. Performance with this swarm size ranged from 1.34 to 4.90, with overall values (across all swarm sizes) ranging from 0.00 to 5.08. Pant, Thangaraj, and Abraham [76], using four different algorithms and three different initialization techniques, found results as high as 22.34 and as low as nearly 0 for functions with an ideal value of 0. Yi [77] similarly compared four functions, returning values as high as 52.43 and as low as nearly 0 for functions with an ideal value of 0. This was also the case in the work of Uriarte, Melin, and Valdez [79], who compared two functions and reported average values as high as 4.26 and as low as 0 (again, for functions with an ideal value of 0). These prior studies, though, did not report the percentage or number of runs improved. Additionally, some studies reported far better base PSO results than were seen in this study, further impairing direct comparison of results. Because the techniques proposed herein could be added to other techniques to further improve their performance, additional study of the discussed techniques, and of the proposed technique's ability to enhance them, is a key area of potential future work.

7. Conclusions and Future Work

This paper has proposed a new method for solving optimization problems that is based upon particle swarm optimization and inspired by science-fiction time travel. It has also presented and analyzed experimentation showing the efficacy of the proposed technique. A description of the proposed technique was provided, the experimentation was described in detail, and the effectiveness of the technique was demonstrated using the data presented in Sections 5 and 6.

The method outlined in this paper, which is based on PSO, uses an eight-step process. It initiates a particle swarm run, calculates the most impactful iteration of the run, copies the swarm at that iteration, terminates the particle swarm run, makes a change to the copied swarm, begins a second particle swarm run with the copied swarm, terminates the second run, and, finally, compares the results of the two runs and selects the better solution.
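The eight steps can be sketched as a short Python program. This is a minimal illustration under stated assumptions, not the authors' implementation: the PSO parameters (`w`, `c1`, `c2`), the sphere objective, the rule used to pick the most impactful iteration (the largest single-iteration drop in the global best value), the choice to snapshot the swarm at the start of that iteration, and the example change `randomize_velocities` are all hypothetical. In this sketch the first run is completed before the impactful iteration is computed, and the second run continues from that iteration up to the same iteration limit.

```python
import copy
import random


def sphere(x):
    """Example objective to minimize (ideal value 0)."""
    return sum(v * v for v in x)


def init_swarm(f, n, dim, lo, hi, rng):
    """Random positions, zero velocities, personal bests at the start positions."""
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    vals = [f(p) for p in pos]
    g = min(range(n), key=vals.__getitem__)
    return {"pos": pos, "vel": [[0.0] * dim for _ in range(n)],
            "pbest": [list(p) for p in pos], "pbest_val": vals,
            "gbest": list(pos[g]), "gbest_val": vals[g]}


def run_pso(f, swarm, start, stop, rng, w=0.7, c1=1.5, c2=1.5):
    """Advance `swarm` in place from iteration `start` to `stop`.

    Returns the swarm and a snapshot taken at the start of each iteration.
    """
    history = []
    for _ in range(start, stop):
        history.append(copy.deepcopy(swarm))
        for i, x in enumerate(swarm["pos"]):
            v = swarm["vel"][i]
            for d in range(len(x)):
                r1, r2 = rng.random(), rng.random()
                v[d] = (w * v[d] + c1 * r1 * (swarm["pbest"][i][d] - x[d])
                        + c2 * r2 * (swarm["gbest"][d] - x[d]))
                x[d] += v[d]
            fx = f(x)
            if fx < swarm["pbest_val"][i]:
                swarm["pbest"][i], swarm["pbest_val"][i] = list(x), fx
                if fx < swarm["gbest_val"]:
                    swarm["gbest"], swarm["gbest_val"] = list(x), fx
    return swarm, history


def randomize_velocities(swarm, rng):
    """Hypothetical change: give every particle a new random velocity."""
    swarm["vel"] = [[rng.uniform(-1.0, 1.0) for _ in v] for v in swarm["vel"]]
    return swarm


def time_travel_pso(f, n=10, dim=2, iters=100, lo=-5.0, hi=5.0,
                    change=randomize_velocities, seed=0):
    rng = random.Random(seed)
    # Steps 1-4: complete a first PSO run, locate the most impactful iteration
    # (assumed: largest one-iteration drop in the global best), keep its snapshot.
    first, history = run_pso(f, init_swarm(f, n, dim, lo, hi, rng), 0, iters, rng)
    trace = [s["gbest_val"] for s in history] + [first["gbest_val"]]
    k = max(range(iters), key=lambda t: trace[t] - trace[t + 1])
    # Step 5: apply the change to a copy of the swarm as it was at iteration k.
    altered = change(copy.deepcopy(history[k]), rng)
    # Steps 6-7: rerun from iteration k up to the same iteration limit.
    second, _ = run_pso(f, altered, k, iters, rng)
    # Step 8: compare the two runs and keep the better (lower) solution.
    best = min(first, second, key=lambda s: s["gbest_val"])
    return best["gbest"], best["gbest_val"], first["gbest_val"], second["gbest_val"]


position, best, base, rerun = time_travel_pso(sphere, seed=1)
print(best <= base)  # True: step 8 never returns a worse result than the first run
```

Because the final step keeps the better of the two runs, the sketch can only match or improve on the first run; whether it improves depends on the change applied and the iteration chosen.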

The experimentation described in this paper characterized the efficacy of the proposed technique on four complex functions and ten standard benchmark functions, comparing it to the basic form of PSO. For the proposed technique, four types of changes were tested, along with two impact calculation methods.

The analysis of the proposed technique on the four functions presented in Section 5 shows that it is capable of improving PSO's performance with all four change types. Of these functions, function 1 improved most often, and function 3 improved the most when improvement occurred. Change 3 produced the weakest improvement results; however, it might be useful for finding new minima because of the substantial position change it incorporates. Change 4 caused improvement most often but produced the smallest amount of improvement. Changes 1 and 2 produced the largest improvements when improvement occurred but did not cause improvement as often as change 4. The different impact calculation methods were not shown to have a notable effect on the results.

Section 6 showed that all four changes were capable of improving performance for many of the benchmark functions. The changes caused improvement for functions 2, 4, 5, 6, 8, 9, and 10. They did not cause improvement for functions 1, 2, and 7. For all three functions where the technique did not provide an improvement, it produced the same results as basic PSO. In terms of the relative performance of the change types, the benchmark results differed somewhat from those for the four functions presented in Section 5. For the benchmark functions, change 3 was often more effective than change 4. Change 2 proved to be the most effective change for the benchmark functions, both in terms of the frequency of improvement and the amount of improvement when improvement occurred. Change 1 performed second best with regard to both of these metrics. Changes 3 and 4 excelled in different areas: change 3 performed better in terms of the amount of improvement produced, while change 4 performed better in terms of the frequency of improvement.

These findings indicate that each of the changes could have useful applications. Change 4 could be used in cases where a reliable slight improvement is more important than a lower chance of a large improvement. Changes 1 and 2 could be used in the opposite situation, where a chance at a large improvement matters more. Change 3 could be used for applications where exploring new areas of the search space is valued most. The PSO process could potentially be run multiple times, or different changes could be used in conjunction with one another to take advantage of their different strengths. For example, the first and second changes could first be used together on a particular application in pursuit of a larger improvement. If these fail to produce an improvement, change 4 could then be used to attempt a smaller improvement with a higher chance of success. Change 3 could also be added to explore new areas of the search space and find undiscovered minima, while offering a low chance of a large improvement as well. Combinations of the number of iterations and changes might also be beneficial. These, along with trying the technique with other PSO techniques, are all potential areas of future work.
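The staged combination suggested above can be expressed as a simple fallback loop. This is a sketch of one possible reading, not a tested procedure from this study: each stage holds one or more hypothetical reruns (zero-argument callables returning the best value of a time-travel rerun with a given change), stages are tried in order, and the loop stops at the first stage that beats the baseline. Placing change 3 last is an assumption; the text only says it could also be added.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical interface: a rerun returns the best (lowest) value found by one
# time-travel rerun with some change applied.
Rerun = Callable[[], float]


def staged_time_travel(baseline: float,
                       stages: Sequence[Sequence[Rerun]]) -> Tuple[float, Optional[int]]:
    """Try stages in order and stop at the first one that beats `baseline`.

    Each stage holds one or more reruns (e.g. changes 1 and 2 together); its
    result is the lowest value among them. Returns the value kept and the
    index of the stage that produced it, or None if no stage improved.
    """
    for index, stage in enumerate(stages):
        if not stage:
            raise ValueError(f"stage {index} is empty")
        stage_best = min(rerun() for rerun in stage)
        if stage_best < baseline:
            return stage_best, index
    return baseline, None


# Stand-in reruns returning fixed values, ordered as in the text:
# changes 1 and 2 first, then change 4, then change 3.
stages = [[lambda: 5.0, lambda: 4.0], [lambda: 2.5], [lambda: 1.0]]
print(staged_time_travel(3.0, stages))  # (2.5, 1)
```

Stopping at the first improving stage saves the cost of later reruns; running every stage and keeping the overall minimum would be the alternative when computation is cheap.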