Using Convolutional Neural Networks to Map Houses Suitable for Electric Vehicle Home Charging

Abstract

1. Introduction

2. Related Work

2.1. Deep Learning in Remote Sensing

2.2. Challenges and Limitations of Prior Research

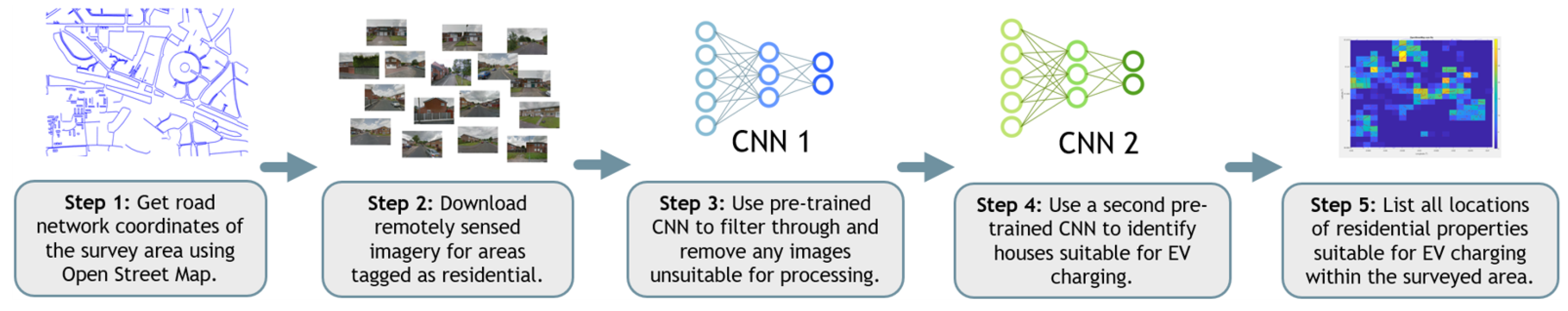

3. Methodology

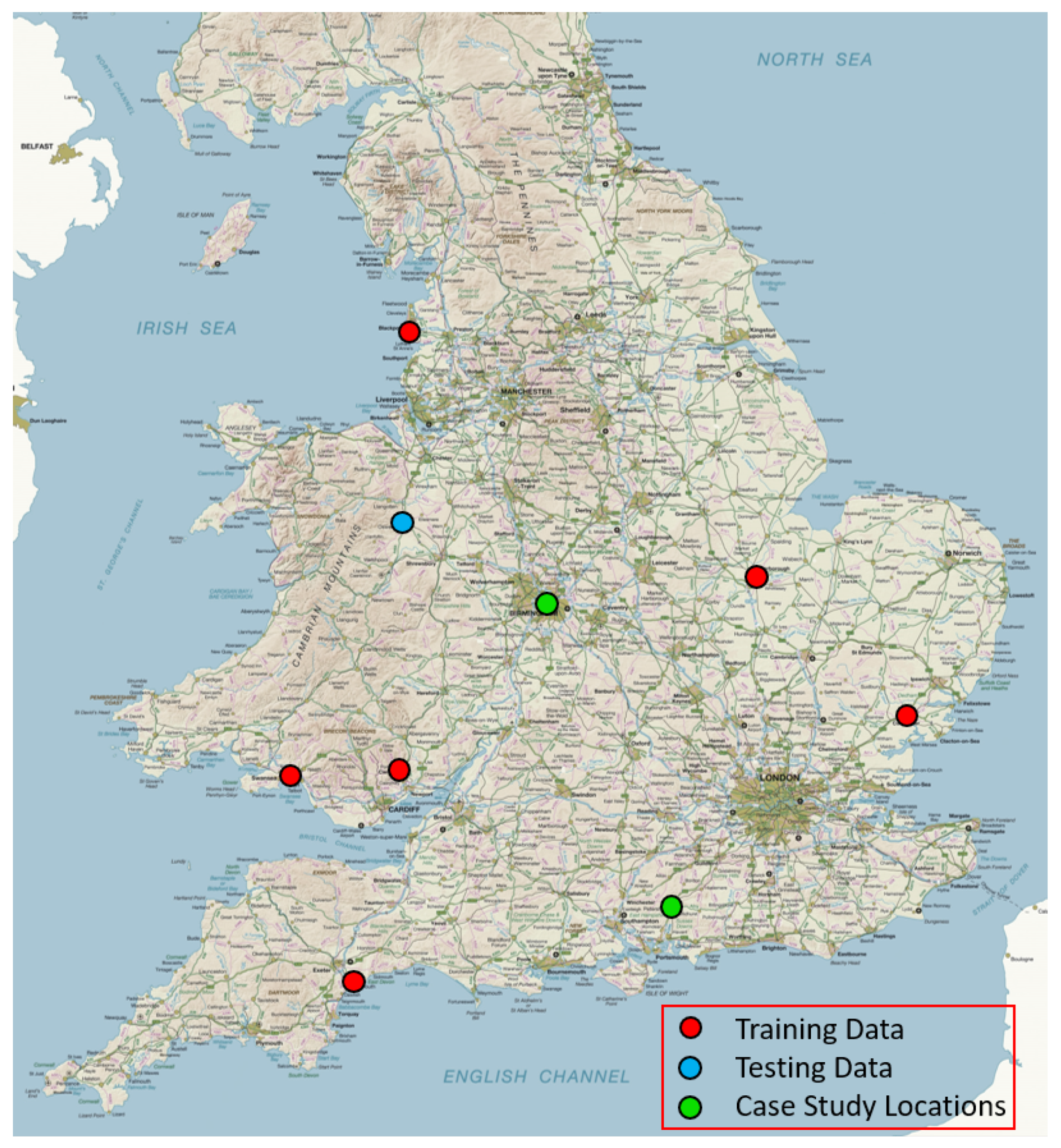

3.1. Training and Testing Data Acquisition

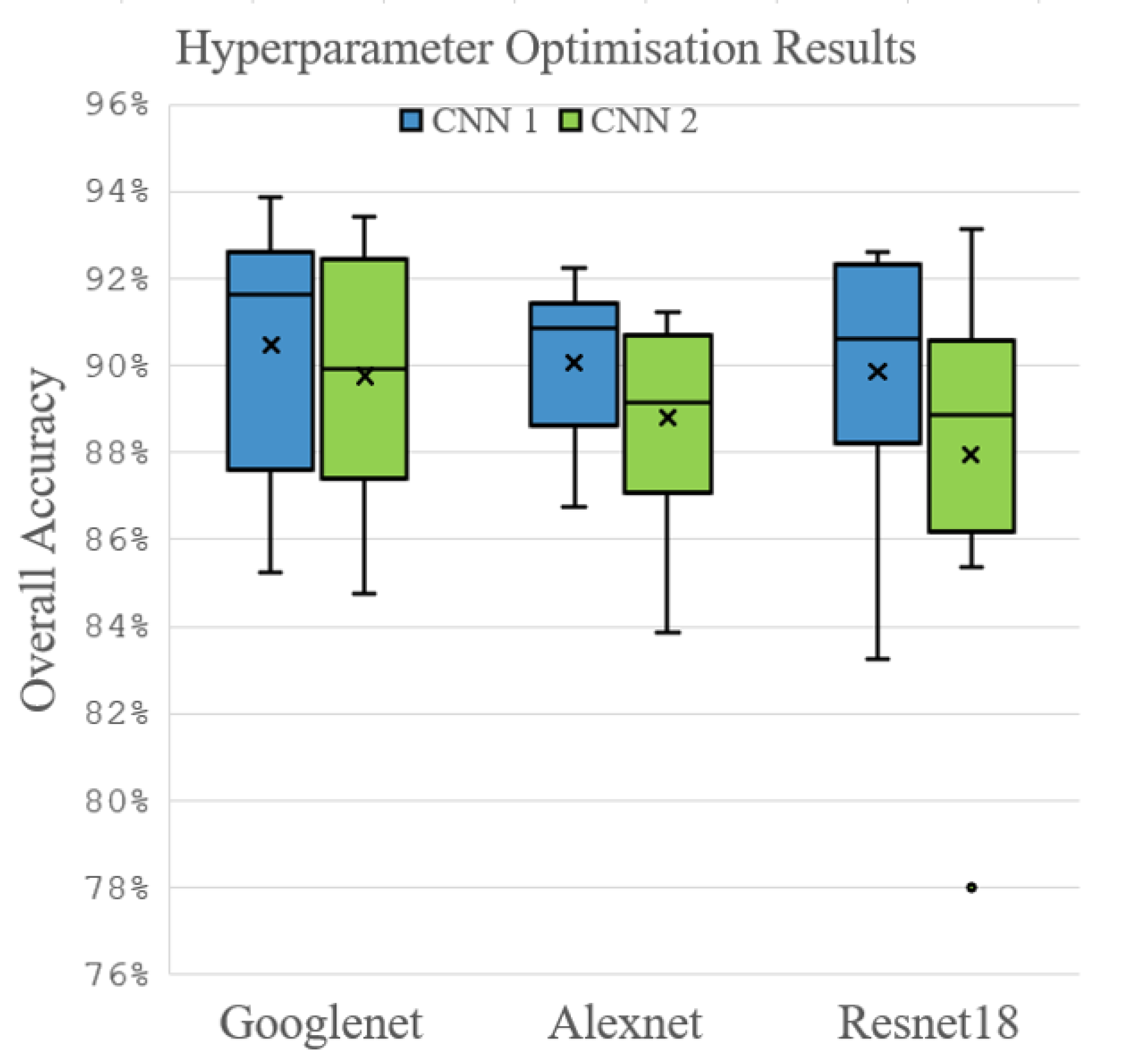

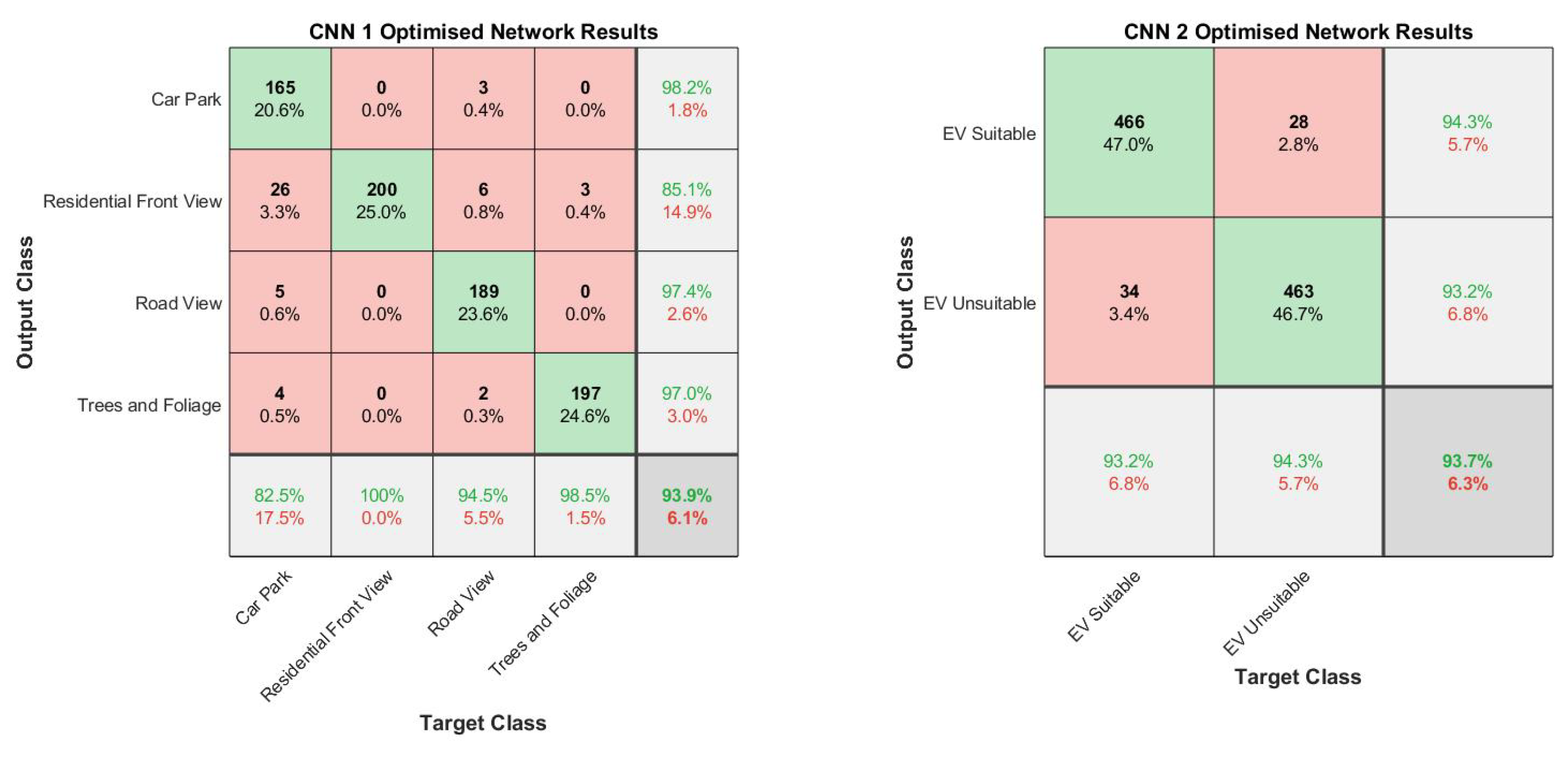

3.2. Network Selection

3.3. Data Post-Processing

4. Testing the Full Workflow

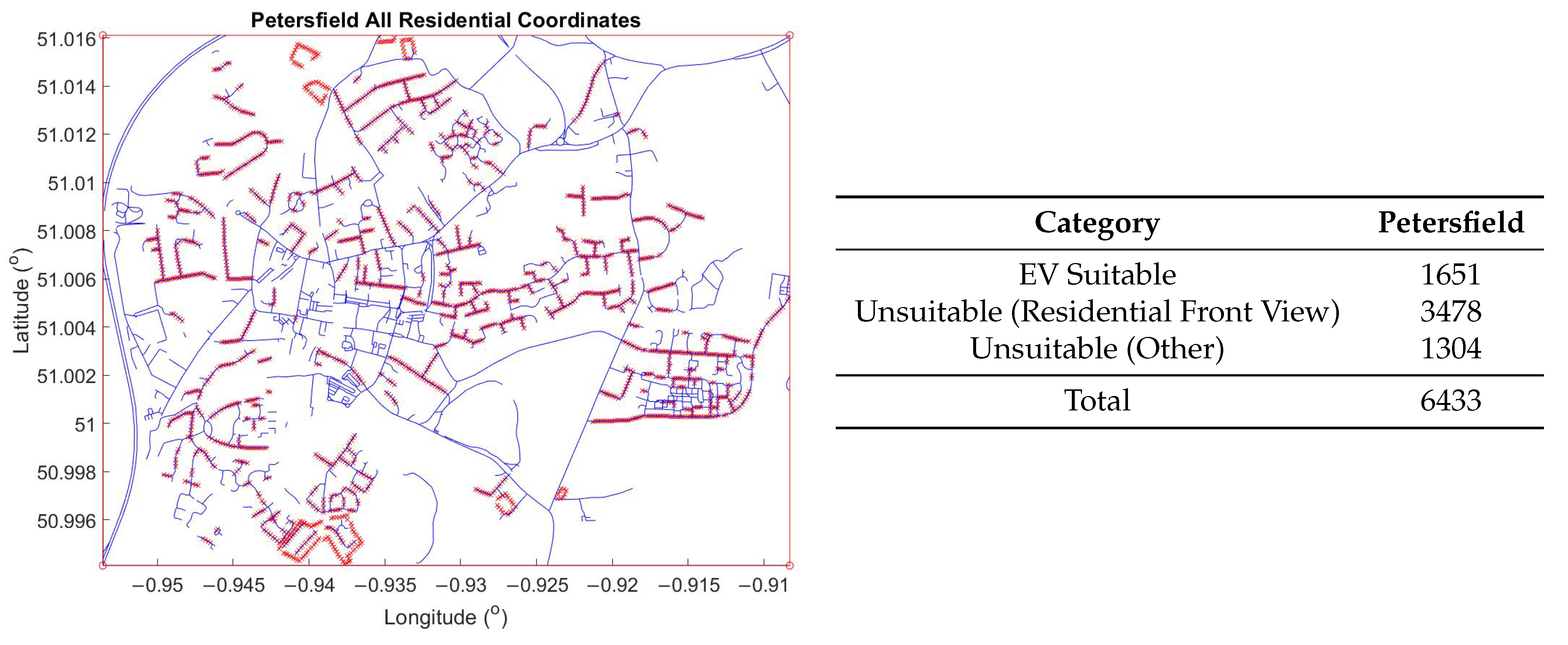

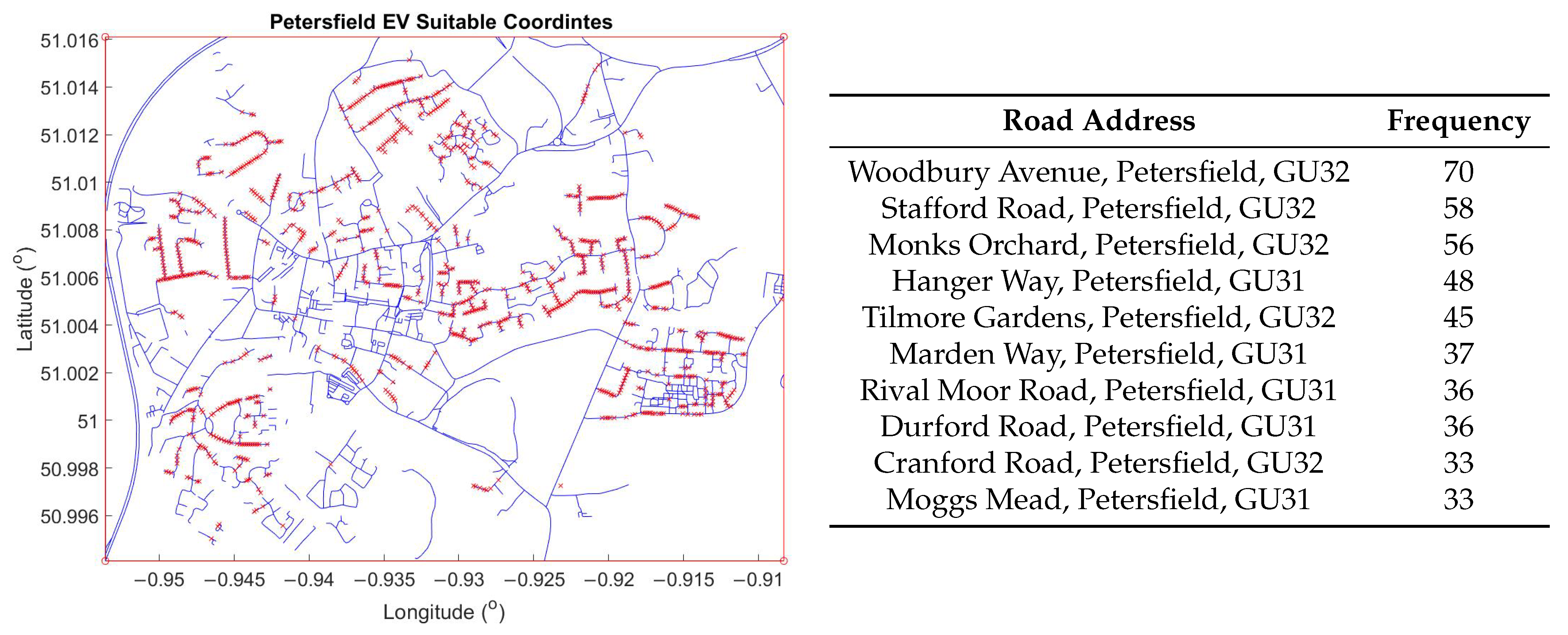

4.1. Test Area 1—Petersfield

4.1.1. Data Acquisition

4.1.2. Results

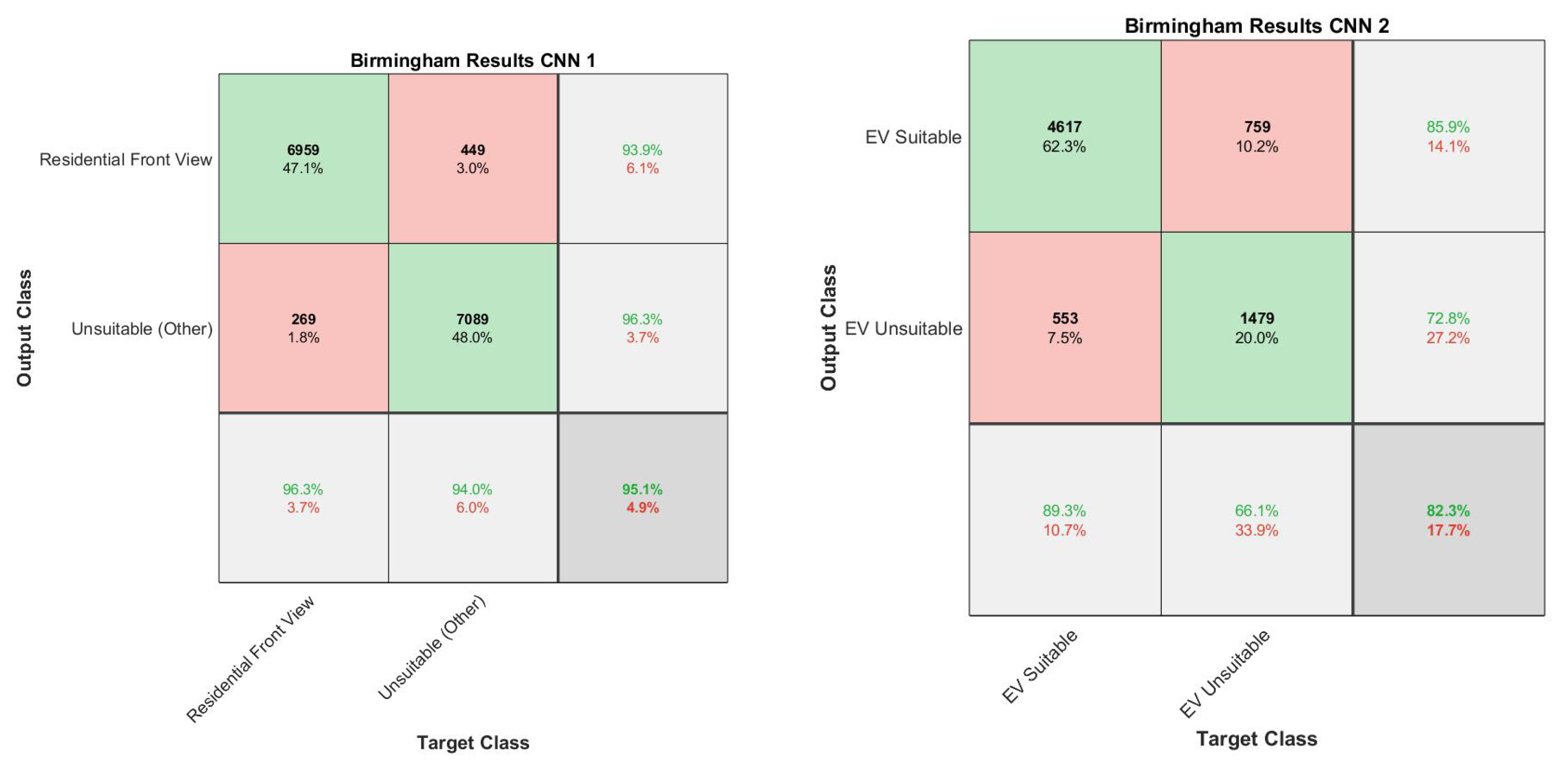

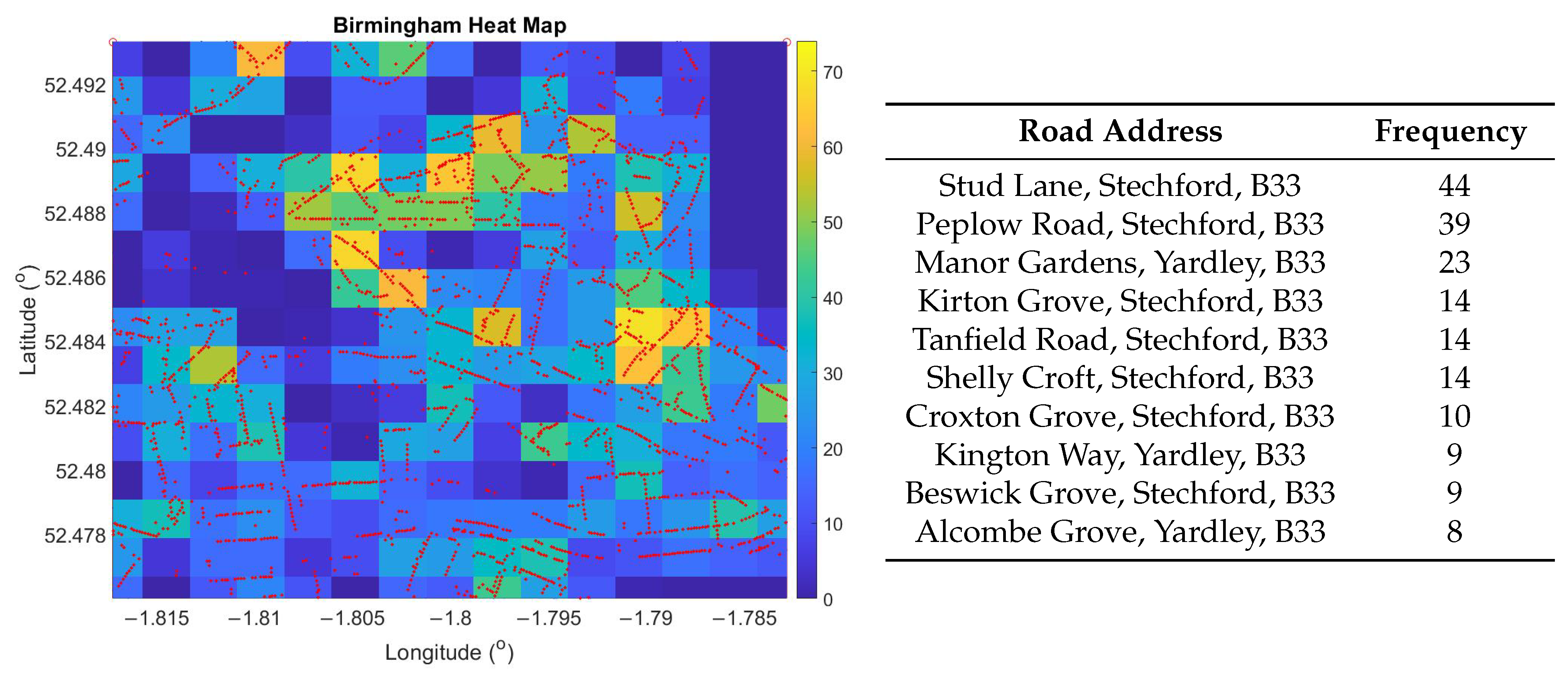

4.2. Test Area 2

4.2.1. Data Acquisition

4.2.2. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations



| List of Acronyms | |
|---|---|
| LULC | Land Use and Land Cover |
| EV | Electric Vehicle |
| OSM | OpenStreetMap |
| OS | Ordnance Survey |
| GSV | Google Street View |
| VGI | Volunteered Geographical Information |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |

| Category | B’pool | P’borough | S’sea | C’bran | Co’ster | Ex’th | Os’try | Totals |
|---|---|---|---|---|---|---|---|---|
| Car Parks | 349 | 424 | 308 | 405 | 90 | 117 | 200 | 1893 |
| Trees and Foliage | 318 | 1658 | 573 | 311 | 348 | 402 | 200 | 3810 |
| Road Views | 354 | 388 | 434 | 334 | 337 | 375 | 200 | 2422 |
| Residential Front View | 1251 | 937 | 947 | 1019 | 972 | 1033 | 200 | 6359 |
| Totals | 2272 | 3407 | 2262 | 2069 | 1747 | 1927 | 800 | 14,484 |

| Category | B’pool | P’borough | S’sea | C’bran | Co’ster | Ex’th | Os’try | Totals |
|---|---|---|---|---|---|---|---|---|
| EV Suitable | 627 | 743 | 295 | 451 | 898 | 759 | 500 | 4273 |
| EV Unsuitable | 542 | 379 | 411 | 94 | 322 | 415 | 500 | 3232 |
| Totals | 1169 | 1122 | 706 | 545 | 1220 | 1174 | 1000 | 7505 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Flynn, J.; Giannetti, C. Using Convolutional Neural Networks to Map Houses Suitable for Electric Vehicle Home Charging. AI 2021, 2, 135-149. https://doi.org/10.3390/ai2010009
