1. Introduction

Hard constrained optimisation problems (COPs) exist in many different applications. Examples include airline scheduling [

1] and CPU efficiency maximisation [

2]. Perhaps the most common approach for modelling and solving COPs in practice is mixed integer linear programming (MILP, or simply MIP). State-of-the-art MIP solvers perform sophisticated pre-solve mechanisms followed by branch-and-bound search with cuts and additional heuristics [

3].

A growing trend is to use machine learning (ML) to improve COP solving. Bengio et al. [

4] survey the potential of ML to assist MIP solvers. One promising idea is to improve node selection within a MIP branch-and-bound tree by using a learned policy. A policy is a function that maps states to actions, where in this context an action is the next node to select. However, research in ML-based node selection is scarce, as the only available literature is the work of He et al. [

5]. The objective of this article is to explore learning approximate node selection policies.

Node selection rules are important because the solver must find the balance between exploring nodes with good lower bounds, which tend to be found at the top of the branch-and-bound search tree, and finding feasible solutions fast, which often happens deeper into the tree. The former task allows for the global lower bound to improve (which allows us to prove optimality), whereas the latter is the main driver for pruning unnecessary nodes.

This article contributes a novel approach to MIP node selection by using an offline learned policy. We obtain a node selection and pruning policy with imitation learning, a type of supervised learning. In contrast to He et al. [

5], our policy learns solely to choose which of a node’s children it should select. This encourages finding solutions quickly, as opposed to learning a breadth-first search-like policy. Further, we generalise the expert demonstration process by sampling paths that lead to the best

k solutions, instead of only the top single solution. The motivation is to obtain different state-action pairs that lead to good solutions compared to only using the top solution, in order to aid learning within a deep learning context.

We study two settings: the first is heuristic that approximates the solution process by committing to pruning of nodes. In this way, the solver might find good or optimal solutions more quickly, however with the possibility of overlooking optimal solutions. This first setting has similarities to a diving heuristic. By contrast, the second setting is exact: when reaching a leaf the solver backtracks up the branch-and-bound tree to use a different path. In the first setting, the learned policy is used as an heuristic method. This is akin to a diving heuristic, with the difference that it uses the default branching rule as a variable fixing strategy. In the second setting, the learned policy is used as a child selector during plunging. The potential of learning here is to augment node selection rules, such that the child selection process is better informed than the simple heuristics that solvers typically use.

We apply the learned policy within the popular open-source solver SCIP [

3], in both settings. The results indicate that, while our node selector finds (optimal) solutions a little slower on average than the current default SCIP node selector,

BestEstimate, it does so much more quickly than the state-of-the-art in ML-based node selectors. Moreover, our heuristic method finds better initial solutions than

BestEstimate, albeit in a higher solving time. Overall, our heuristic policies have a consistently better optimality gap than all baselines if the accuracy of the predictive model adds value to prediction. Further, when a time limit is applied, our heuristic method finds better solutions than all the baselines (including

BestEstimate) in three of five problem classes tested and ties in a fourth problem class.

While these results indicate the potential of ML in MIP node selection, we uncover several limitations. This analysis comes from showing that the learned policies have imitated the SCIP baseline, but without the latter’s early plunge abort. Therefore our recommendation is that, despite the clear improvements over the literature, this kind of MIP child node selector is better seen in a broader approach to using learning in MIP branch-and-bound tree decisions.

The outline of this article is as follows:

Section 2 contains preliminaries,

Section 3 specifies the imitation learning approach to node selection and pruning,

Section 4 reports the results on benchmark MIP instances (with additional instances reported in the

Appendix A),

Section 5 discusses the results,

Section 6 reviews related work, and

Section 7 concludes with future directions.

2. Background

Mixed integer programming (MIP) is a familiar approach to constraint optimisation problems. A MIP requires one or more variables to decide on, a set of linear constraints that need to be met, and a linear objective function, which produces an objective value without loss of generality to be minimised. Thus we have, for

y the objective value and

x the vector of decision variables to decide on:

where

A is an

m ×

n constraint matrix with

m constraints and

n variables;

c is a

n × 1 vector.

At least one variable has integer domain in a MIP; if all variables have continuous domains then the problem is a linear program (LP). Since general MIP problems cannot be solved in polynomial time, the LP relaxation of (

1) relaxes the integer constraints. A series of LP relaxation can be leveraged in the MIP solving process. For minimisation problems, the solution of the relaxation provides a lower bound on the original MIP problem.

Equation (

1) is also referred to as the primal problem. The primal bound is the objective value of a solution that is feasible, but not necessarily optimal. This is referred to as a ‘pessimistic’ bound. The dual bound is the objective value of the solution of an LP relaxation, which is not necessarily feasible. This is referred to as an ‘optimistic’ bound. The

integrality gap is defined as:

where

is the primal bound,

is the dual bound, and

returns the sign of its argument. Note this definition is that used by the SCIP solver [

3], and requires care to handle the infinite gaps case.

Related to the integrality gap is the optimality gap: the difference between the objective function value of the best found solution and that of the optimal solution. Both the integrality gap and the optimality gap are monotonically reduced during the solving process. The solving process combines inference, notably in the form of inferred constraints (‘cuts’), and search, usually in a branch-and-bound framework.

Branch and bound [

6] is the most common constructive search approach to solving MIP problems. The state space of possible solutions is explored with a growing tree. The root node consists of all solutions. At every node, an unassigned integer variable is chosen to branch on. Every node has two children: candidate solutions for the lower and upper bound respectively of the chosen variable. Note that a node (and its entire sub-tree) is pruned when the solution of the relaxation at that node is worse than the current primal bound. The main steps of a standard MIP branch-and-bound algorithm are given in Algorithm 1.

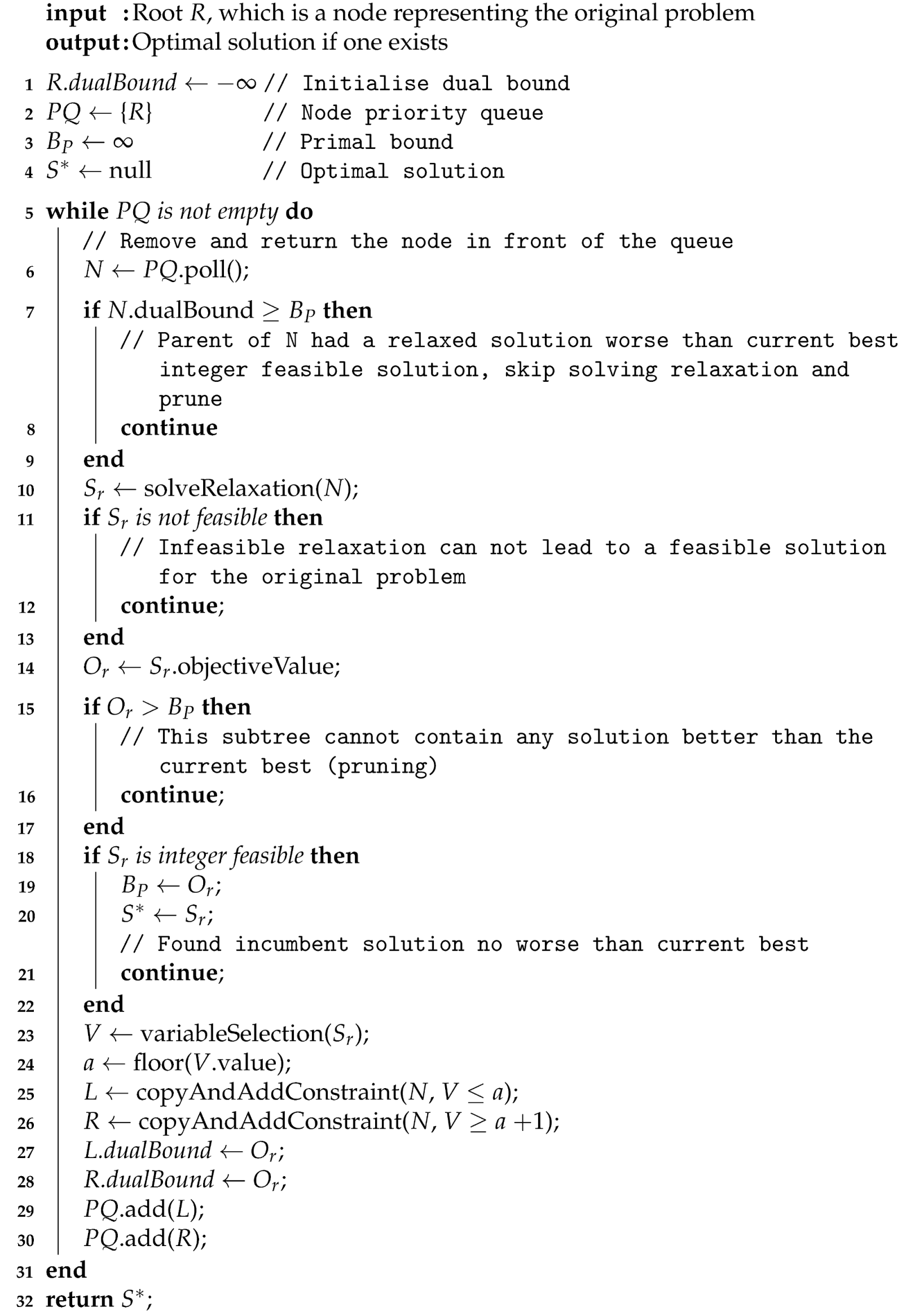

| Algorithm 1 Solve a minimisation MIP problem using branch and bound |

![Ai 02 00010 i001 Ai 02 00010 i001]() |

Choosing on which variable to branch is not trivial and affects the size of the resulting search tree, and therefore the time to find solutions and prove optimality. For example, in the SCIP solver, the default variable selection heuristic (the ‘brancher’) is hybrid branching [

7]. Other variable selection heuristics are for example pseudo-cost branching [

8] and reliability branching on pseudo-cost values [

9]. The brancher can inform the node selector which child it prefers; it is up to the node selector, however, to choose the child. The child preferred by the brancher, if any, is called the priority child.

SCIP’s brancher prefers the priority child by using the

PrioChild property. It is used as a feature in our work. The intuition is to maximise the number of inferences and pushing the solution away from the root node values. In more detail, the left child priority value is calculated by SCIP as:

and the right child priority value as:

where

(respectively

) is the average number of inferences at the left child (right child),

is the value of the relaxation of the branched variable at the root node and

V is the value of the relaxation of the branched variable at the current node. An inference is defined as a deduction of another variable after tightening the bound on a branched variable [

10]. If

, then the left child is prioritised over the right child, if

, then the right child is prioritised. If they are equal, then none are prioritised. Note that while this rule for priority does not necessarily hold for all branchers in general, it does hold for the standard SCIP brancher.

Choosing on which node to prioritise for exploration over another node is defined by the node selector. As is the case for branching, different heuristics exist for node selection. Among these are depth-first search (

DFS), breadth-first search (

BFS),

RestartDFS (restarting

DFS at the best bound node after a fixed amount of newly-explored nodes) and

BestEstimate. The latter is the default node selector in the SCIP solver from version 6. It uses an estimate of the objective function at a node to select the next node and it prefers diving deep into the search tree (see

www.scipopt.org/doc/html/nodesel__estimate_8c_source.php, accessed on 1 September 2020).

In more detail, BestEstimate assigns a score to each node, which takes into account the quality of its dual bound, plus an objective value penalty for lack of integrality. In particular, the penalty is calculated using the variable’s pseudo-costs, which act as an indicator of the per unit objective value increase for shifting a variable downwards or upwards. SCIP actually uses, BestEstimate with plunging, which applies depth-first search (selecting children/sibling nodes) until this is no longer possible or until a diving abort mechanism is triggered. SCIP then chooses a node according to the BestEstimate score.

Node selection heuristics can be grouped into two general strategies [

11]. The first is choosing the node with the best lower bound in order to increase the global dual bound. The second strategy is diving into the tree to search for feasible solutions and decrease the primal bound. This has the advantage to prune more nodes and decrease the search space. In this article we use the second strategy to develop a novel heuristic using machine learning, leveraging local variable, local node and global tree features, in order to predict as far as possible the best possible child node to be selected.

We can further leverage node pruning to create a heuristic algorithm. The goal is then to prune nodes that lead to bad solutions. Correctly pruning sub-trees that do not contain an optimal solution is analogous to taking the shortest path to an optimal solution, which obviously minimises the solving time. It is generally preferred to find feasible solutions quickly, as this enables the node pruner to prune more sub-trees (due to bounding), with the effect of decreasing the search space. There is no guarantee, however, that the optimal solution is not pruned.

3. Approach

Recall that our goal is to obtain a MIP node selection policy using machine learning, and to use it in a MIP solver. The policy should lead to promising solutions more quickly in the branch-and-bound tree, while pruning as few good solutions as possible.

Our approach is to obtain a node selector by imitation learning. A policy maps a state

to an action

. In our case

consists of features gathered within the branch-and-bound process. The features consist of branched variable features, node features and global features. The branched variable features are derived from Gasse et al. [

12]. See

Table 1 for the list of features. Note that we define a separate

left_node_lower_bound and

right_node_lower_bound, instead of a general node lower bound, because during experimentation, we obtained two different lower bounds among the child nodes.

The actions are the node selection decisions at a node. As opposed to unconstrained node selectors—which can choose any open node s- we constrain our node selector’s action space to the selection of direct child nodes. This leads to the restricted action space , where L is the left child, R is the right child and B are both children.

In order to train a policy by imitation learning, we require training data from the expert. We obtain this training data, in the form of sampled state-action pairs, by running a MIP solver (specifically SCIP), and recording the features from the search process and the node selection decisions at each sampled node. We now explain the process.

3.1. Data Collection and Processing

Our sampling process is similar to the prior work of He et al. [

5], with two major differences. The first is that our policy learns

only to choose which of a node’s children it should select; it does not consider the sub-tree below either child. This encourages finding solutions quickly, as opposed to attempting to learn a breadth-first search-like method.

The second difference from previous work is that we generalise the node selection process by sampling paths that lead to the best

k solutions, instead of only the top solution. The reason for this is to obtain different state-action pairs that lead to good solutions compared to only using the top solution, in order to aid learning within a deep learning context. In more detail, the best

k solutions are chosen in the same order as output from SCIP; we therefore adopt SCIP’s solution ranking which is based on the optimality gap. Thus, whereas He et al. [

5] check whether the current node is in the path to the best solution, we check whether the left and right children of the current node are in a path that leads to one of the best

k solutions. If that is the case, then we associate the label of the current node as ‘B’; if not, we check the left child or right child and associate the appropriate label (‘L’ or ‘R’ respectively). If neither are in such a path, then the node is not sampled.

Pre-processing of the dataset is done by removing features that do not change and standardising every non-categorical feature. We then feed it to the imitation learning component, described next, after the example.

3.2. Example of Data Collection

We here give an example of the data collection and processing on a toy problem with two variables. Let and suppose the known best 3 solutions are: . The sampling process begins by activating the solver.

The solver solves the relaxation of current (root) node and variable selection tells it to branch on variable with bounds and . In this case we know that both children are in the path of best 3 solutions, so the label here is ‘B’. Note that we just found a state-action pair and so we save it.

The solver continues its process as usual and decides to enter the left child.

Again, the solver solves the relaxation and applies variable selection, the following two child nodes are generated with bounds: and . This case is interesting, because only the left child can lead to best 3 solutions, so the label here is ’L’. Again we save this state-action pair.

Since we do not assume control of the solver in the sampling process, let us suppose the solver enters the right child (recall the best 3 solutions are not here, but the solver does not know that).

Two additional child nodes are generated: and . The left node has the following branched variable conditions in total: , and . and right node has: , and . None of these resolve to any of the best 3 solutions. Thus we do not sample anything here.

The solver continues to select the next node.

We continue to proceed with the above until the solver stops. Now we have sampled state-action pair from one training instance. This process is repeated for many training instances.

3.3. Machine Learning Model

Our machine learning model is a standard fully-connected multi-layer perceptron with

H hidden layers,

U hidden units per layer, ReLU activation layers and Batch Normalisation layers after each activation layer, following a Dropout layer with dropout rate

.

Figure 1 gives a visual overview of the operations within a hidden layer.

We obtain the model architecture parameters and learning rate

using the hyperparameter optimisation algorithm [

13]. Since during pre-processing features that have constant values are removed, the number of input units can change across different problems. For example, in a fully binary problem, the features

left_node_branch_bound and

right_node_branch_bound are constants (0 and 1 respectively), while for a general mixed-integer problem this is not the case. The number of output units is three. The cross-entropy loss is optimised during training with the Adam algorithm [

14].

During policy evaluation, the action

B (‘both’) can result in different operations, as seen in

Table 2. We define

PrioChild,

Second and

Random as possible operations.

PrioChild selects the priority child as indicated by the variable selection heuristic (i.e., the brancher);

Second selects the next best scoring action from the ML policy;

Random selects a random child. Additionally, when the solver is at a leaf and there is no child to select, then we define three more operations. These are

RestartDFS,

BestEstimate and

Score. The first two are baseline node selectors from SCIP [

3];

Score selects the node which obtained the highest score so far as calculated by our node selection policy.

Obtaining the node pruning policy is similar to obtaining the node selection policy. The difference is that the node pruning policy also prunes the child that is ultimately not selected by the node selection policy. If prune_on_both = True, then this results in diving only once and then terminating the search. Otherwise, the nodes initially not selected after the action B are still explored. The resulting solving process is thus approximate, since we cannot guarantee that the optimal solution is not pruned.

To summarise, as seen in

Figure 2, we use our learned policy in two ways: the first is heuristic by committing to pruning of nodes, whereas the second is exact: when reaching a leaf, we backtrack up the branch-and-bound tree to use a different strategy. Source code is available at:

www.doi.org/10.4121/14054330 (accessed on 1 September 2020); note that due to licensing restrictions, SCIP must be obtained separately from

www.scipopt.org (accessed on 1 September 2020).

4. Empirical Results

This section studies empirically the node selection policies described in

Section 3. The goal is to explore the effectiveness of the learning and of the learned policy within a MIP solver.

The following standard NP-hard problem classes were tested: set cover, maximum independent set, capacitated facility location and combinatorial auctions. The instances were generated by the generator of Gasse et al. [

12] with default settings. The classes differ from each other in terms of constraints structure, existence of continuous variables, existence of non-binary integer variables, and direction of optimisation.

For the MIP branch-and-bound framework we use SCIP version 6.0.2. (Note that SCIP version 7, released after we commenced this work, does not bring any major improvements to its MIP solving.) As noted earlier, SCIP is an open source MIP solver, allowing us access to its search process. Further, SCIP is regarded as the most sophisticated and fastest such MIP solver. The machine learning model is implemented in PyTorch [

15], and interfaces with SCIP’s C code via PySCIPOpt 2.1.6.

For every problem, we show the learning results, i.e., how well the policy is learned, and the MIP benchmarking results, i.e., how well the MIP solver does with the learned policy. We compare the policy evaluation results with various node selectors in SCIP, namely

BestEstimate with plunging (the SCIP default),

RestartDFS and

DFS. Additionally, we compare our results with the node selector and pruner from He et al. [

5], with both the original SCIP 3.0 implementation by those authors (

He) and with the a re-implementation in SCIP 6 developed by us (

He6). He et al. [

5] has three policies: selection only (S), pruning only (P) and both (B). For exact solutions, we only use (S). For the first solution found at a leaf, we compare (S) and (B). For the experiments with a time limit, we compare (P) and (B).

Table 3 summarises the node selectors compared.

We train on 200 training instances, 35 validation instances and 35 testing instances across all problems. These provide sufficient state-action pairs to power the machine learning model. The number of obtained samples (state-action pairs) differs per problem. The process of obtaining samples from each training instance was described in

Section 3. For every problem, we use the

best solutions to gather the state-action pairs; for maximum independent set we also experiment with

. The higher the value of

k, the more data we can collect and the better one can generalise the ML model; we discuss further in

Section 5. We selected

having tried lower values (

) and found inferior results in initial experiments. Due to computational limitations on training time, higher values of

k were not feasible across the board.

Based on initial trials and the hyperparameter optimisation, we use a batch size of 1024, dynamically lower the learning rate after 30 epochs and terminate training after another 30 epochs if no improvement was found. During training, the validation loss is optimised. The maximum number of epochs is 200.

We evaluate a number of different settings for our node selection and pruning policy, as seen in

Table 2. This leads to nine different configurations for the node selection policy and twelve different configurations for the node pruning policy. Note that for the node pruning policy, when

prune_on_both is true, then optimisation terminates when a leaf is found; thus the parameter value for

on_leaf does not matter. We refer to our policies as

ML_{on_both}{on_leaf}{prune_on_both}. For example,

ML_PB denotes the node pruning policy that uses

PrioChild for

on_both and

BestEstimate for

on_leaf.

Table 4 enumerates the set of configurations for the learned policies.

In the following we report three sets of experiments, and then a subsequent fourth experiment:

First solution. In the first experiment, we examine the solution quality in terms of the optimality gap and the solving time of the first solution found at a leaf node. Recall that the optimality gap is the difference between the objective function value of the best found solution and that of the optimal solution. Note that it is possible an infeasible leaf node is found, in that case, a solution is returned that was found prior to the branch-and-bound process, through heuristics in-built in SCIP.

Optimal solution. In the second experiment, we evaluate the policy on every problem in terms of the arithmetic mean solving time of each node selector. That is, the total time to find an optimal solution and prove its optimality.

Limited time. In the third experiment, we select one ML policy, based on the (lowest) harmonic mean between the solving time and optimality gap. For each instance, we run the solver on each baseline with a time limit equal to the solving time of the selected ML policy and present the obtained optimality gaps. We also report the initial optimality gap obtained by the solver before branch-and-bound is applied.

Imitation. In the fourth experiment, we analyze the behaviour of the learned policy during the first plunge, and compare it to SCIP’s default BestEstimate rule. We do this in detail on one set of instances.

By solving time we mean the gross difference in time between starting SCIP and terminating it. When we terminate SCIP depends on the task of the experiment:

Solve to optimality and record solving time: termination condition is finding an optimal solution.

Solve until we find a solution in a leaf node (or by SCIP’s built-in heuristics if the leaf node reached is infeasible) and record the solving time and optimality gap: termination condition is finding a first leaf node.

Solve until a certain time limit and record optimality gap: termination condition is the time limit itself.

We apply the policies on instances of two different difficulties.

First, easy instances, which can be solved within 15 min. Second, hard instances, where we set a solving time limit to one hour. Here, for all experiments we substitute the integrality gap for the optimality gap, because the optimal solution is not known for every hard instance. An alternative approach is to compare directly the primal solution quality (see

Section 5). Additionally with hard instances, for Experiment 1, instead of checking the solving time, we check the integrality gap.

Table 5 provides an overview of the machine learning parameters and results. The baseline accuracy (column 2) is what the accuracy would have been if each sample is classified as the majority class. The test accuracy (column 3) is the classification accuracy on the test dataset. Note that

is included in the maximum independent set instances: see

Appendix A.1. The best performing ML model is the model with the settings that achieve the lowest validation loss.

The experiments reported in

Section 4.2,

Section 4.3 and

Section 4.4 are run on a machine with an Intel i7 8770K CPU at 3.7–4.7 GHz, NVIDIA RTX 2080 Ti GPU and 32GB RAM. For the hard instances, the default SCIP solver settings are used. For the other instances, pre-solving and primal heuristics are turned off, to better capture the effect of the node selection policy. We use the shifted geometric mean (shift of 1) as the average across all metrics. This is standard practice for MIP benchmarks [

10].

4.1. Summary of Results

The experiments can be summarised as follows:

Set cover:BestEstimate has the lowest mean solving time.

ML_RB is second, but is not statistically significantly slower. The policies of He et al. [

5] are slow. When a time limit has imposed,

ML_SRF has the lowest harmonic mean of solving time and optimality gap; this is statistically significant compared to all others.

Other instances: On maximum independent set, DFS dominates and the ML policies are relatively poor. Only on this dataset are our policies slower (slightly) than He6; in all other cases they dominate. On capacity facility location and on combinatorial auctions, the ML policies and BestEstimate respectively are fastest, but not statistically significantly so.

Hard set cover:BestEstimate has the lowest mean solving time, but no ML policy was statistically significantly slower. When a time limit is imposed, ML_PST has the lowest harmonic mean of solving time and optimality gap; this is statistically significant compared to all others.

Imitation quality: When the policies are analysed in detail, the PrioChild policies ML_P·· are found to accurately imitate BestEstimate.

4.2. Set Cover Instances

These instances consist of 2000 variables and 1000 constraints forming a pure binary minimisation problem. We sampled 17,254 state-action pairs on the training instances, 2991 on the validation instances and 3218 on the test instances. The model achieves a testing accuracy of 76.4%, with a baseline accuracy of 57.5%.

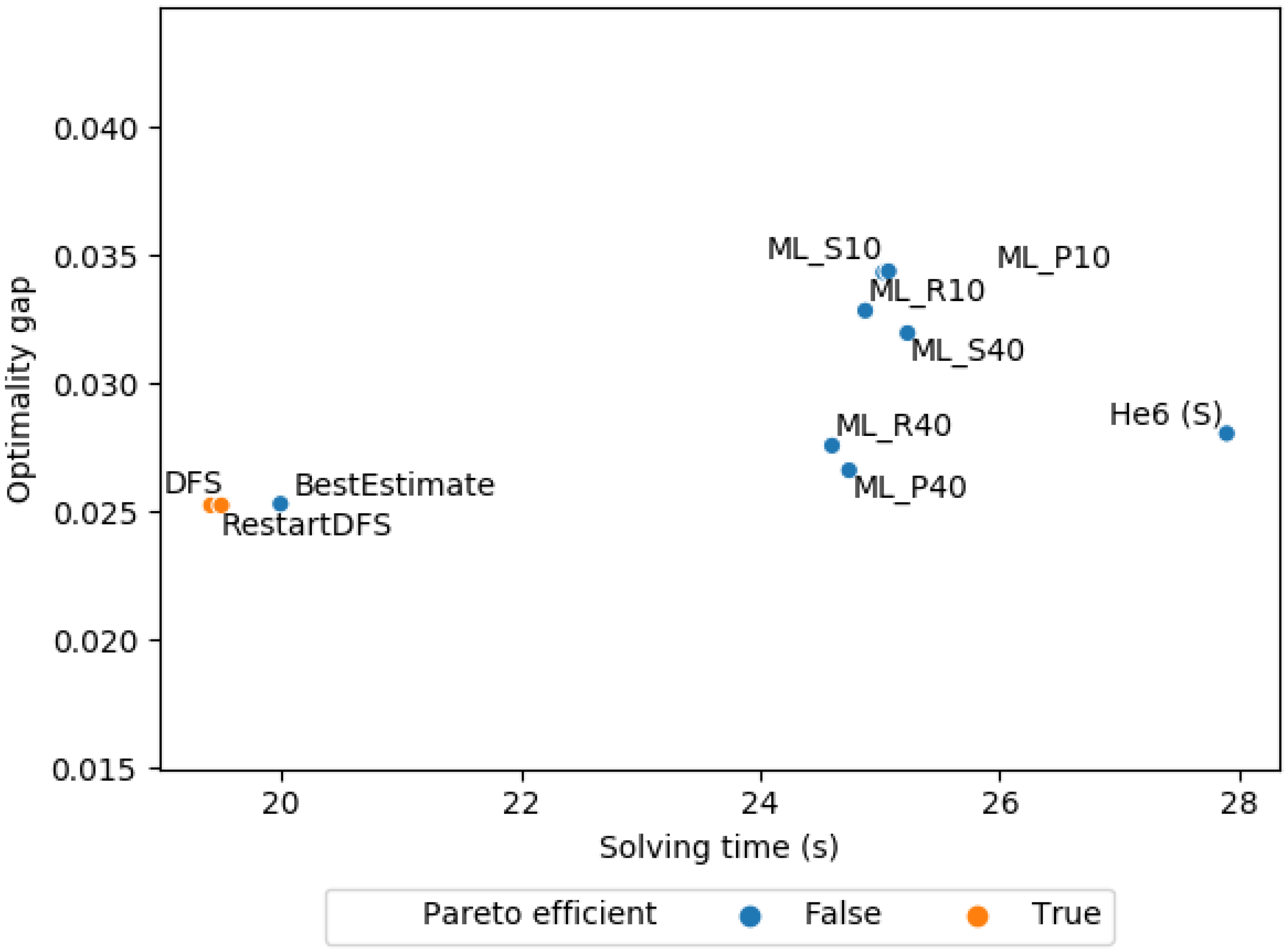

Figure 3 shows the mean solving time against the mean optimality gap of the first solution obtained by the baselines and ML policies at a leaf node. Note that the only parameter that is influential for the ML solver is the first parameter, i.e.,

on_both. (The second parameter

on_leaf and the third

prune_on_both do not influence the solving time or quality of the first solution, since the search terminates at the first found leaf that is found.) The policy of He et al. [

5] is not included here due to its substantial outliers. We see here that our ML policy obtains a lower optimality gap at the price of a higher solving time for the first solution.

Table 6 reports the mean solving time and explored nodes of various exact node selection strategies.

BestEstimate achieves the lowest mean solving time at 26.4 s;

ML_RB comes next at 35.7 s. The policies of He et al. [

5]—both the original and our re-implementation—are markedly slower than all other methods.

We conducted a pair-wise t-test between the mean solving time of BestEstimate and the mean solving time of the other policies. We can only reject the null hypothesis of equal means with p-value below 0.1 for ML_RR (p-value: 0.08). For the rest of our ML policies, we cannot reject the null hypothesis of equal means. We also conducted a pair-wise t-test between the mean number of explored nodes of BestEstimate and the mean number of explored nodes of the other policies. Again, we cannot reject the null hypothesis of equal means with p-value below 0.05 for any of our ML policies (lowest observed p-value: 0.34).

Table 7 reports the mean optimality gap of the baselines under a

time limit for each instance. The time limit for each instance is based on the solving time of the ML policy that achieved the lowest harmonic mean between the mean solving time and mean optimality gap across all instances. This time limit fosters comparison because it is certain to be neither excessively low, so that the different methods would accomplish little, nor excessively high, so that all methods would find an optimal solution. In the case of the set cover instances,

ML_SRF has the lowest harmonic mean and also achieves the lowest mean optimality gap. We conducted a pairwise

t-test between the mean optimality gap of our best ML policy and the mean optimality gap of each baseline. We can reject the null hypothesis of equal means with

p-value below 0.005 for all baselines. This shows that

ML_SRF’s smaller optimality gap is significant.

The initial optimality gap obtained by the solver before branch-and-bound is 2.746. This shows that applying branch-and-bound—with whatever node selection strategy—to find a solution has a significant difference.

4.3. Three Further Problem Classes

The appendix gives detailed results for the maximum independent set, capacitated facility location, and combinatorial auctions problems. Briefly, on maximum independent set, baseline DFS dominates, and the ML policies are relatively poor; this is the only problem class where He6 outperforms our policies slightly (although we outperform the original He easily). On capacitated facility location, our ML policies give the best results on solving time and on explored nodes, although the results are not significantly better than BestEstimate according to the t-test. On combinatorial auctions, BestEstimate dominates, although its explored nodes are not significantly fewer than our ML policies.

When a time limit is applied, our best ML policy either achieves the best optimality gap (as with set cover problems), or is Pareto-equivalent to the baseline which does (interestingly, this is DFS, never BestEstimate in these problems). This in in contrast to He6, which in all problems is significantly poorer in optimality gap obtained.

4.4. Set Cover: Hard Instances

To assess how the ML policies perform on hard instances, we use the same trained model of the ML policies that were previously trained on the easier set cover instances. The hard instances consist of 4000 variables and 2000 constraints, while the easier set cover instances had 2000 variables and 1000 constraints. We evaluated 10 hard instances (due to computational limitations) and focused on BestEstimate as a baseline on the node selection policy. Primal heuristics and pre-solving were enabled.

For the pruning policies,

Figure 4 shows the solving time plotted against the integrality gap of the first solution obtained by the baselines and ML policies. As before, we see the same trend where the ML policies find a lower gap at the cost of a higher solving time. See

Table 8 and

Figure 5 for the integrality gap of the baselines using a time limit for each instance. In this case,

ML_PST has the lowest harmonic mean between the mean solving time and mean integrality gap of all ML policies.

ML_PST achieves a significantly lower integrality gap compared to the baselines: we conducted a pairwise

t-test between the mean optimality gap of our best ML policy and the mean optimality gap of each baseline. We can reject the null hypothesis of equal means with

p-value below 0.001 for all baselines.

For the node selection policies, we set the time limit to one hour per problem.

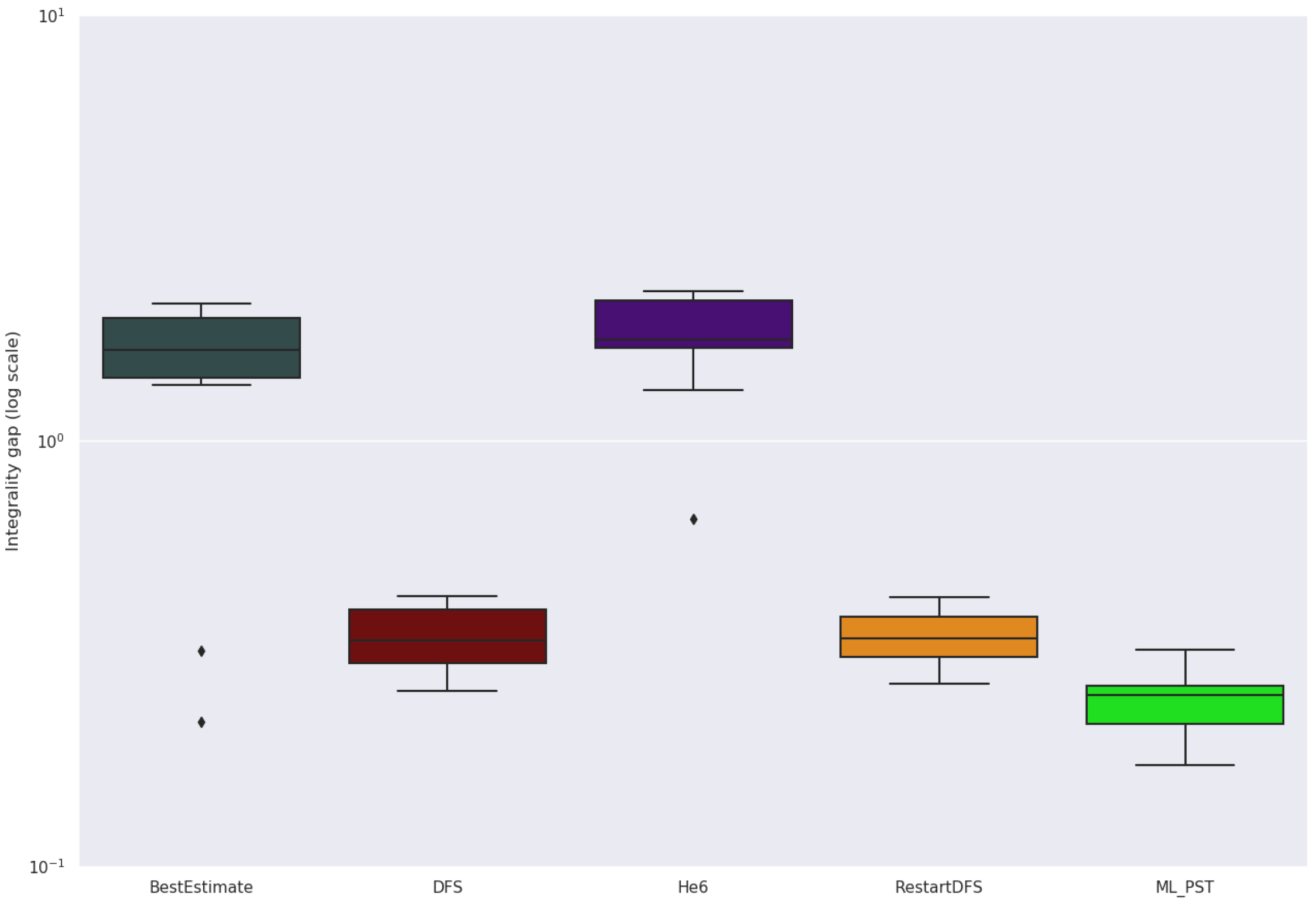

Figure 6 shows the number of solved instances per policy, and

Figure 7 shows boxplots of the integrality gaps for each policy. We use integrality gap here, because we do not know the optimal objective value for all instances.

Table 9 shows the mean solving time, explored nodes and integrality gap of various node selection strategies.

BestEstimate achieves the lowest mean solving time at 2256.7 s and integrality gap at 0.0481.

ML_SB has the lowest number of explored nodes at 109298. A caveat over the reduced number of nodes of the best ML policies versus

BestEstimate comes from the solver timing out on many instances—the instances being hard. Fewer nodes could be due to the overhead that the ML classifier requires at each node.

We conducted a pair-wise t-test between the mean solving time of BestEstimate and the mean solving time of the other policies. We cannot reject the null hypothesis of equal means with p-value below 0.1 for all our ML policies (lowest observed p-value: 0.64). We also conducted a pair-wise t-test between the mean number of explored nodes of BestEstimate and the mean number of explored nodes of the other policies. We can reject the null hypothesis of equal means with p-value below 0.05 for ML_PB, ML_RB and ML_SB. Lastly, we conducted a pair-wise t-test between the mean integrality gap of BestEstimate and the mean integrality gap of the other policies. We can reject the null hypothesis of equal means with p-value below 0.1 for ML_RS and ML_SS. Summarising, ML_SB has a statistically significant fewer number of nodes, while not having a statistically significant higher time or integrality gap than BestEstimate.

Unlike the easier set cover instances, in all the hard instances, the solver could only obtain an integrality gap of infinity on the first feasible solution. Hence we cannot compare the initial integrality gap to the found integrality gaps during branch and bound. A possible reason for the solver’s integrality gap of infinity could be that the first solution is found before the root LP relaxation was solved.

4.5. Success of the Learned Imitation

The results reported so far correspond to the first three experiments: optimality gap and solving time of first solution at a leaf node; total solving time to find an optimal solution and prove its optimality; and optimality gap within a fixed solving time. The results are on set cover (easy and hard instances), and three further problem classes.

We further analyze the behaviour of the learned policy during the first plunge, in detailed comparison to SCIP’s default

BestEstimate rule. Recall that, when plunging,

BestEstimate chooses the priority child according to the

PrioChild property (see

Section 2), which is one of the input features to our method. It is also important to note that, while the learned policy plunges until finding a leaf node,

BestEstimate may decide to abort the plunge early. This early abort decision is made according a set of pre-established parameters such as a maximum plunge depth (for more details we refer to the SCIP documentation).

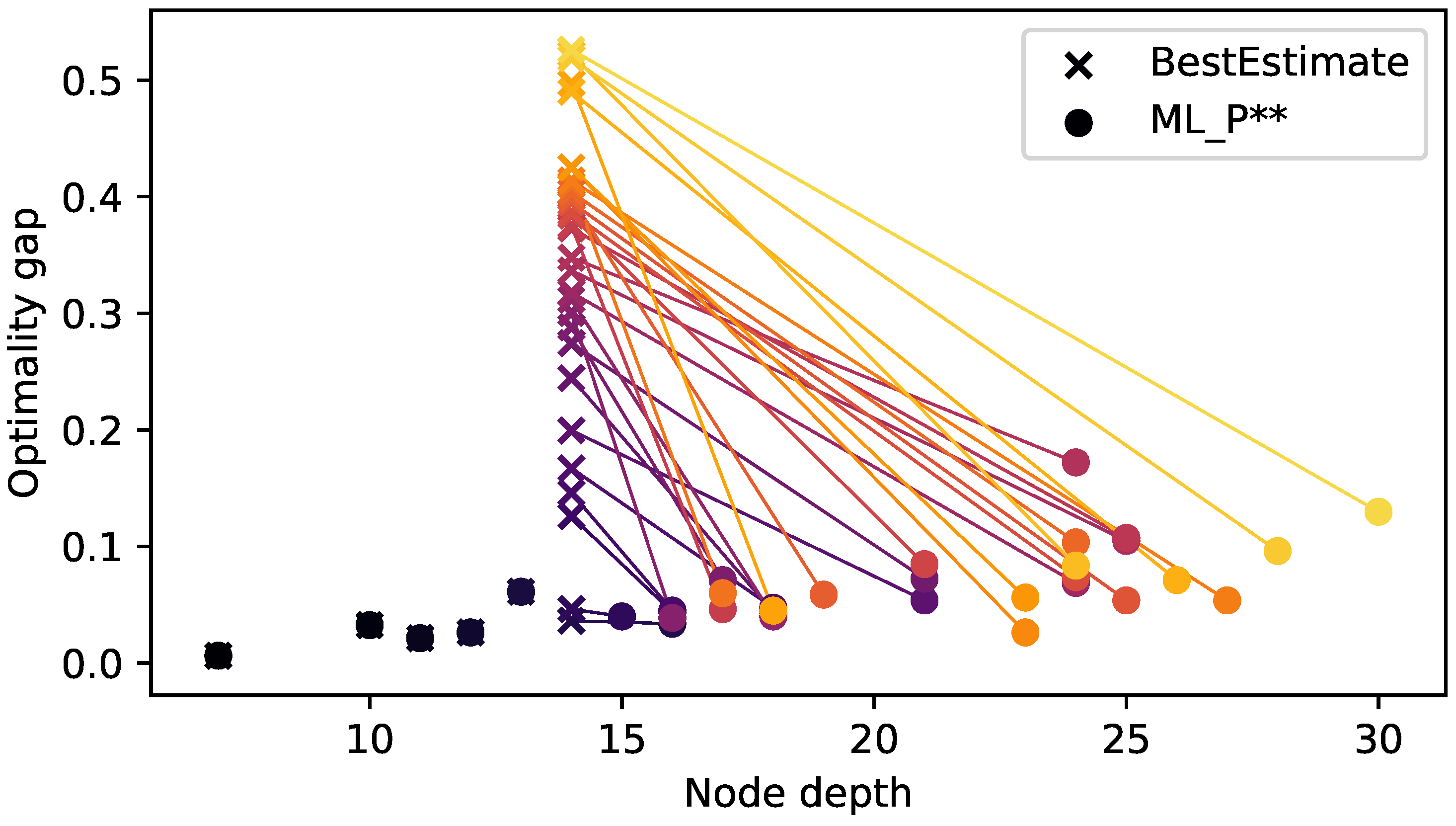

In order to study the trade-off between better feasible solutions and the computational cost of obtaining them, we analyze the attained optimality gap against depth of the final node in the first plunge. This in contrast to our previous experiment, where we considered solving time as the second metric. We compare BestEstimate and the learned policy with on_both set to PrioChild. Further, we present experiments per instance, instead of aggregating them.

Figure 8 shows the results for the 35 (small) test instances. The effect of

BestEstimate’s plunge abort becomes apparent. On instances where the first leaf has depth smaller than 14, both policies perform identically. On the contrary, on the remaining instances,

BestEstimate hits the maximum plunge depth, hence aborting the plunge. These results show that the better optimality gap achieved by the learned policy comes at the cost of processing more nodes, i.e., plunging deeper into the tree. We also note that these results were obtained setting on_both to

PrioChild, however no node was labelled with ‘both’ during our experiments. In spite of this, the learned policy chose the priority child on 99.6% of the occasions, demonstrating its strong imitation of that heuristic.

5. Discussion and Limitations

This section reflects on our experiments and results, and discusses design decisions and possible alternative to explore.

5.1. Experiments

Summarising

Section 4, we undertook three performance experiments—namely measuring the solving time and optimality gap for the first solution found at a leaf node in the branch and bound tree, measuring the total solving time for an exact solution, and setting a low instance-specific time limit to measure the optimality gap—and one exploratory experiment.

For the first experiment, the PrioChild ML policy (ML_P··) performed Pareto-equivalent on four of the five problem sets, performing inferior to the baselines only on maximum independent set instances. We turned off SCIP’s primal heuristics in order to see the ability of the methods to find feasible solutions.

For the second experiment, our method performed better than the baselines in terms of mean solving time for capacitated facility location problem, but worse on the three purely binary problems. The best ML policy is ML_RB, although ML_SB has only slightly higher time, while exploring fewer nodes.

For the third experiment, we chose ML_SRF on set cover, ML_PRF on maximum independent set, ML_SST on capacitated facility location, and ML_PST on combinatorial auctions and hard set cover instances, in order to measure how well these best ML policies perform against the baselines. These policies were chosen based on the (lowest) harmonic mean between the solving time and optimality gap. In four of the five problem sets, our policies had a statistically-significant lower optimality gap than the baselines, while on combinatorial auctions, ML_PST did not perform statistically worse than DFS and RestartDFS.

For Experiments 2 and 3, we kept primary heuristics off, except for hard instances. It would be interesting to repeat the easy instances with primal heuristics on. This is because these heuristics improve the primal side of the bounds improvement while the branching rule takes care of dual side—and the node selection must balance both depending on the situation. The interesting question, which is answered in part by looking at the hard set cover instances, is whether the balance of primal and dual will indicate that the node selection should restrain its diving.

Overall we conclude that the on_both = Random configuration of the policy usually performs worse than the other configurations. on_both ∈ {PrioChild, Second} both do well. The policies from both the on_leaf ∈ {Score, RestartDFS} configurations perform better than those from the on_leaf = BestEstimate configuration. For both prune_on_both configurations, the policy performed well. Recall that when prune_on_both is True, then the search is terminated after the first leaf, saving solving time but resulting in a higher optimality gap. That both prune_on_both configurations lead to effective policies means that we offer the user the choice between a lower optimality gap and higher solving time, or the other way around.

Our method is effective when the ML model is able to meaningfully classify optimal child nodes correctly. By contrast, in the case of the maximum independent set problem, the classification was poor (base acc.: 0.895, test acc.: 0.899, gain: 0.004). Hence, when the predictive model adds value to the prediction, there is potential for effective decision making using the policy; and contrariwise when it does not.

We note that the feature extraction was the biggest contributor to the overall solving time. Applying the predictor had a rather small impact. This means that it is possible to achieve lower solving times by incorporating the entire process in the original C code of SCIP, avoiding the Python interface. However, Experiment 4 suggests that even a lower overhead in invoking the ML policy will not pay off.

Second, instead of actually pruning nodes, one could only assign low priorities to children considered inferior, such that they are never selected after a backtrack. This would automatically make the solver with the learned policy exact since it would never commit to pruning. However, the objective for our work was to explore learning approximate node selection policies. Second, node pruning has the advantage that the search does not visit the subtree of that node at all. Third, by pruning we follow the direction of He et al. [

5] and can compare directly to the previous state-of-art in the literature. Indeed, as seen above, our approach easily outperforms that of He et al. [

5], in both their original implementation and a re-implementation in SCIP 6.

5.2. Using Best k Solutions

As discussed earlier, we set k = 10 for all our experiments bar one, with the hypotheses that, in general, higher values of k allows the ML model better potential to generalise. We found that lower k (below 10) gave inferior results in initial experiments. There is potential to vary k according to the problem class being solved. In particular, the distribution of nodes labelled ‘L’, ‘R’ and ‘B’. If k is too high, it could be the number of ‘B’s become too high, leading to a class imbalance in training the ML model. On the other hand, another artefact of k being too low can be class imbalance, as we saw for maximum independent set instances.

We make three further remarks. First, we rank solutions using SCIP’s solution ranking which is based on the optimality gap. It could be interesting to look also at the depth of the solutions in the tree, especially for the first setting of the heuristic policy use. Second, the k best solutions can vary in their objective value, possibly by relatively large amounts. An alternative to using any number of best solutions is to select an optimality gap bound. However, such an approach is more complicated than using the top-k. Third, in this article we trained on instances where the optimum was known. In the case it is not known, one could choose the best k solutions in terms of their integrality gap.

In

Section 7 we discusses additional possible further work for choosing

k.

5.3. Heuristic and Exact Settings

Recall that our learned policies were studied in two settings. In the first setting, the learned policy is used as a heuristic method. This is akin to a diving heuristic, with the difference that it uses the default branching rule as a variable fixing strategy. In more detail, this diving heuristic uses the branching rule to decide on which variable the disjunction will be made and then uses the child selector to choose the value to which the variable will be fixed (i.e., 0 or 1). In MIP branch-and-bound trees it is known that good branching rules are not good diving rules (attributed to T. Berthold). This is because the branching rule explicitly tries to balance the quality of the two children, in order to focus mostly on improving the lower bound, whereas a diving rule will try to construct an unbalanced (one-sided) tree. It remains to compare the learned policy against actual diving heuristics. We hypothesise that it will be inferior in terms of total time, because our heuristic uses the default branching rule as a variable fixing strategy.

In the second setting—the exact setting—the learned policy is used as a child selector during plunging. The potential of learning here is to augment node selection rules, such that the child selection process is better informed than the simple heuristics that solvers typically use. Recall that in the case of SCIP, the heuristic is BestEstimate with plunging. Indeed, our fourth experiment showed that, when used as a child selector, the learned policy acts almost exactly like SCIP’s PrioChild rule. The main difference comes from the fact that SCIP’s plunging has an abort mechanism. The policy could not learn this because policy learns to select a child, and then the node selection rule dives using this policy until no children are left. That the policy acts like PrioChild is no surprise given that this is the rule that was used to generate samples and it is also one of the features fed to the learner. Table shows that, in terms of solving time, the learned policy is not better than BestEstimate with plunging and its abort mechanism.

We conclude that that there is limited potential for improvement by selecting the best child during plunging. If the branching rule is working well, the two children should be quite balanced. By contrast, an interesting question is choosing a good node after plunging stops.

7. Conclusions

This article shows that approximate solving of mixed integer programs can be achieved by a node selection policy obtained with offline imitation learning. Node selection is important because through it, the solver balances between exploring search nodes with good lower bounds (crucial for proving global optimality), and finding feasible solutions fast. In contrast to previous work using imitation learning, our policy is focused on learning to choose which of its children it should select. We apply the policy within the popular open-source solver SCIP, in exact and heuristic settings.

Empirical results on five MIP datasets indicate that our node selector leads to solutions more quickly than the state-of-the-art in the literature [

5]. While our node selector is not as fast on average as the highly optimised state-of-practice in SCIP in terms of solving time on exact solutions, our heuristic policies have a consistently better optimality gap than all baselines if the accuracy of the predictive model is sufficient. Second, the results also indicate that our heuristic method finds better solutions within a given time limit than all baselines in the majority of the problem classes examined. Third, the results show that learned policies can be Pareto-equivalent or superior to state-of-practice MIP node selection heuristics. However, these results come with a caveat, summarized below.

This work adds to the body of literature that demonstrates how ML can benefit generic constraint optimisation problem solvers. In MIP terminology, our learned policy constitutes a diving rule, focusing on finding a good integer feasible solution. The performance on non-binary problem classes like capacitated facility location is particularly noteworthy. This is because, unlike purely binary problems, for non-binary instances, MIP primal heuristics struggle to obtain decent primal bounds [

11]. By contrast, in general for binary instances, the greater challenge is to close the dual bound, and our learned policy also performs well here.

The results are explained by the conclusion that the learned policies have imitated SCIP brancher’s preferred rule for node selection, but without the latter’s early plunge abort. This is a success for imitation learning, but does not overall improve the best state-of-practice baseline from which it has learned. Pareto-efficiency between total solving time and integrality gap is is important, yet total solving time and the primal integral quality are the most crucial in practice. However, an interesting question to pursue is learning to choose a good node after plunging stops.

Despite the clear improvements over the literature, then, this kind of MIP child selector is better seen in a broader approach to using learning in MIP branch-and-bound tree decisions [

25]. While forms of supervised learning find success [

4], reinforcement learning is also interesting [

12], and could be used for node selection.

Nonetheless, for future work on node selection by imitation learning, more study could be undertaken for choosing the meta-parameter k. Values too low add only few state-action pairs, which naturally degrades the predictive power of neural networks. On the other hand, values too high add noise, as paths to bad solutions add state-action pairs that are not useful. An interesting direction is to exploit an oracle (solver) to decide whether a node is ‘good’ or ‘bad’, e.g., if the node falls onto a path of a solution within say 5% of the optimum value. This more expensive data collection method might eliminate choosing a specific k.

The way in which the ML policies were trained can be explored further. For instance, one could consider the two components of PrioChild separately, or could train on standard SCIP with and without early plunge abort. Comparing learned policies with and without primal heuristics and with and without pre-solve also has scientific value.

Lastly,

Section 3 explained how during pre-processing certain features are removed which are constant throughout the entire dataset. This has the consequence of a different number of input units in the neural network architecture for every problem. Moreover, future work could include a method to unify a ML model that is effective for all problem classes. This would make ML-based node selection a more accessible feature for current MIP solvers such as SCIP; another promising direction in this regard is experimentation via the ‘gym’ of Prouvost et al. [

27].