Surface Defect Inspection in Images Using Statistical Patches Fusion and Deeply Learned Features

Abstract

1. Introduction

2. Motivation

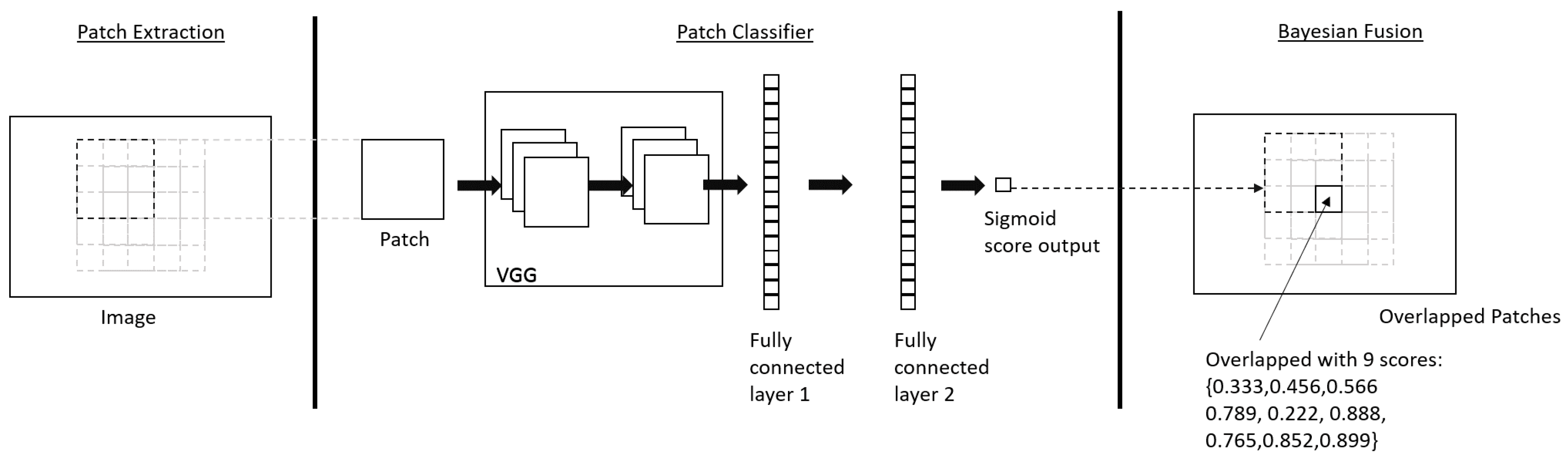

- The gist of the proposed approach is to break a large image containing multiple separate defects into small, overlapping patches, to detect whether each patch contains a defect using a convolutional neural network (CNN) classifier, and then to recombine the patch decisions into the final defect decision using a statistical data fusion approach.

- The proposed approach requires less defect-labelling effort for model training, since it only needs patch-level labels. In contrast, existing methods in the literature require region-based labelling (for defect object detection) or pixel-based labelling (for defect region segmentation).
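The patch decomposition described in the first point can be sketched with NumPy as follows. It assumes square patches of side `patch_size` taken at a fixed `stride` (patches overlap whenever `stride < patch_size`), and it derives each patch label from a pixel-wise mask using a hypothetical rule: a patch is labelled defective if more than `min_fraction` of its pixels are defective. The function names, patch size, stride and labelling rule are placeholders for illustration, not values or code from this work.

```python
import numpy as np

def extract_patches(image, patch_size, stride):
    """Slide a square window over a 2-D image and return the patches and
    their top-left corners. Overlap occurs whenever stride < patch_size.
    Border pixels not reached by a full window are skipped (assumption)."""
    h, w = image.shape[:2]
    patches, corners = [], []
    for top in range(0, h - patch_size + 1, stride):
        for left in range(0, w - patch_size + 1, stride):
            patches.append(image[top:top + patch_size, left:left + patch_size])
            corners.append((top, left))
    return np.stack(patches), corners

def patch_labels(mask, corners, patch_size, min_fraction=0.0):
    """Derive one binary label per patch from a pixel-wise ground-truth mask.
    Hypothetical rule: label 1 (defect) if the fraction of defective pixels
    inside the patch exceeds min_fraction, else 0 (defect-free)."""
    labels = []
    for top, left in corners:
        window = mask[top:top + patch_size, left:left + patch_size]
        labels.append(int(window.mean() > min_fraction))
    return np.array(labels)
```

For an 8 × 8 image with `patch_size=4` and `stride=2`, this yields a 3 × 3 grid of nine overlapping patches; a small defect near the top-left corner is then covered by (and labels) several of them.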

3. Proposed Defect Detection Approach

3.1. Patch Classifier

3.2. Bayesian Data Fusion of Overlapping Patch Classifiers
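One common way to fuse the outputs of overlapping patch classifiers is a naive-Bayes combination in log-odds form, broadly in the spirit of the naive Bayes fusion used in NB-CNN [22]. The sketch below is a simplified illustration under stated assumptions, not necessarily the formulation used in this work. Each patch classifier is assumed to output a posterior defect probability; the patches covering a pixel are assumed conditionally independent given that pixel's class; and the fused map is thresholded at a hypothetical `threshold`. The function name, `prior`, `threshold` and `eps` are illustrative choices.

```python
import numpy as np

def fuse_patch_probabilities(probs, corners, patch_size, image_shape,
                             prior=0.5, threshold=0.5, eps=1e-6):
    """Naive-Bayes fusion of overlapping patch posteriors into a pixel map.

    probs[k] is the classifier posterior P(defect | patch k), and corners[k]
    is that patch's (top, left) position. If the n patches covering a pixel
    are conditionally independent given the pixel's class, the fused log
    posterior odds are  sum_k logit(p_k) - (n - 1) * logit(prior)."""
    h, w = image_shape
    log_odds = np.zeros((h, w))
    counts = np.zeros((h, w))
    # Clip to avoid infinite logits for probabilities of exactly 0 or 1.
    p = np.clip(np.asarray(probs, dtype=float), eps, 1 - eps)
    logits = np.log(p / (1 - p))
    for (top, left), z in zip(corners, logits):
        log_odds[top:top + patch_size, left:left + patch_size] += z
        counts[top:top + patch_size, left:left + patch_size] += 1
    prior_logit = np.log(prior / (1 - prior))
    covered = counts > 0
    # Each patch posterior already contains the prior once; keep it only once.
    log_odds[covered] -= (counts[covered] - 1) * prior_logit
    posterior = np.full((h, w), float(prior))  # uncovered pixels keep the prior
    posterior[covered] = 1.0 / (1.0 + np.exp(-log_odds[covered]))
    return posterior, posterior > threshold
```

A useful property of this form is that patches reporting exactly the prior leave a pixel's posterior unchanged, while agreeing confident patches reinforce each other. For example, two overlapping patches each at 0.8 give 16/17 ≈ 0.94 in their shared region when `prior=0.5`.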

4. Experimental Results

4.1. Experimental Setup and Implementation Details

- Dataset I is the GDXray dataset [24]. It consists of 10 radiography images of metal pipes. Each image has a resolution of approximately … pixels and is provided with pixel-wise ground truth. The first 5 images are used for training and the last 5 images for testing.

- Dataset II is the Type-I RSDDs dataset [25], which contains 67 express-rail defect images. Each image has a resolution of … pixels and is provided with pixel-wise ground truth. Of these, 47 images are randomly chosen for training, and the remaining 20 images are used for testing.

- Dataset III is the NEU steel surface defect dataset [26], which consists of scratch defects on hot-rolled steel strip. It contains 300 images, each with a resolution of … pixels, and provides the coordinates of the ground-truth bounding boxes. The areas inside these bounding boxes are taken as the pixel-wise ground truth for this dataset. Of these, 200 images are randomly chosen for training, and the remaining 100 images are used for testing.
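The dataset preparation above involves two simple operations: turning bounding boxes into pixel-wise masks (Dataset III) and randomly splitting images into training and testing sets (Datasets II and III). A minimal NumPy sketch of both follows. The box coordinate convention, the function names and the random seed are assumptions, since the text does not specify them.

```python
import numpy as np

def boxes_to_mask(boxes, height, width):
    """Rasterise bounding boxes into a binary pixel mask, treating every pixel
    inside a box as defective. Boxes are assumed to be (x_min, y_min, x_max,
    y_max) in 0-based, inclusive pixel coordinates (an assumption; check the
    annotation convention of the dataset copy in use)."""
    mask = np.zeros((height, width), dtype=bool)
    for x_min, y_min, x_max, y_max in boxes:
        # Clip to the image and convert the inclusive end to a slice bound.
        mask[max(y_min, 0):min(y_max, height - 1) + 1,
             max(x_min, 0):min(x_max, width - 1) + 1] = True
    return mask

def random_split(n_images, n_train, seed=0):
    """Randomly partition image indices into training and testing sets,
    e.g. 47/20 for Dataset II or 200/100 for Dataset III. The seed is a
    hypothetical choice for reproducibility."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_images)
    return np.sort(order[:n_train]), np.sort(order[n_train:])
```

Fixing the seed makes the split reproducible across runs; a different seed gives a different but equally valid random partition.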

4.2. Experimental Results

4.2.1. Dataset I: GDXray Dataset

4.2.2. Dataset II: Type-I RSDDs Dataset

4.2.3. Dataset III: NEU Steel Surface Defect Dataset

4.3. Evaluation on Parameter Setting

4.3.1. Patch Size of the Proposed Approach

4.3.2. Threshold Parameter of the Proposed Approach

4.4. Evaluation on Computational Complexity

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Luo, Q.; Fang, X.; Su, J.; Zhou, J.; Zhou, B.; Yang, C.; Liu, L.; Gui, W.; Tian, L. Automated Visual Defect Classification for Flat Steel Surface: A Survey. IEEE Trans. Instrum. Meas. 2020, 69, 9329–9349.

2. Luo, Q.; Fang, X.; Liu, L.; Yang, C.; Sun, Y. Automated Visual Defect Detection for Flat Steel Surface: A Survey. IEEE Trans. Instrum. Meas. 2020, 69, 626–644.

3. Czimmermann, T.; Ciuti, G.; Milazzo, M.; Chiurazzi, M.; Roccella, S.; Oddo, C.M.; Dario, P. Visual-based defect detection and classification approaches for industrial applications—A survey. Sensors 2020, 20, 1459.

4. Wang, J.; Ma, Y.; Zhang, L.; Gao, R.X.; Wu, D. Deep learning for smart manufacturing: Methods and applications. J. Manuf. Syst. 2018, 48, 144–156.

5. Yu, H.; Li, Q.; Tan, Y.; Gan, J.; Wang, J.; Geng, Y.; Jia, L. A Coarse-to-Fine Model for Rail Surface Defect Detection. IEEE Trans. Instrum. Meas. 2018, 68, 656–666.

6. Cha, Y.J.; Choi, W.; Oral, B. Deep Learning-Based Crack Damage Detection Using Convolutional Neural Networks. Comput. Aided Civ. Infrastruct. Eng. 2017, 32, 361–378.

7. Makantasis, K.; Protopapadakis, E.; Doulamis, A.; Doulamis, N.; Loupos, C. Deep Convolutional Neural Networks for efficient vision based tunnel inspection. In Proceedings of the 2015 IEEE International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 3–5 September 2015; pp. 335–342.

8. Jahanshahi, M.R.; Masri, S.F.; Padgett, C.W.; Sukhatme, G.S. An innovative methodology for detection and quantification of cracks through incorporation of depth perception. Mach. Vis. Appl. 2013, 24, 227–241.

9. Zalama, E.; Gómez-García-Bermejo, J.; Medina, R.; Llamas, J. Road Crack Detection Using Visual Features Extracted by Gabor Filters. Comput. Aided Civ. Infrastruct. Eng. 2014, 29, 342–358.

10. Bu, G.; Chanda, S.; Guan, H.; Jo, J.; Blumenstein, M.; Loo, Y. Crack detection using a texture analysis-based technique for visual bridge inspection. J. Struct. Eng. 2015, 14, 41–48.

11. Huangpeng, Q.; Zhang, H.; Zeng, X.; Huang, W. Automatic Visual Defect Detection Using Texture Prior and Low-Rank Representation. IEEE Access 2018, 6, 37965–37976.

12. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

13. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

14. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

15. Zhang, L.; Yang, F.; Zhang, Y.D.; Zhu, Y.J. Road crack detection using deep convolutional neural network. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3708–3712.

16. Ferguson, M.; Ak, R.; Lee, Y.T.; Law, K.H. Automatic localization of casting defects with convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 1726–1735.

17. Wang, B.; Zhao, W.; Gao, P.; Zhang, Y.; Wang, Z. Crack Damage Detection Method via Multiple Visual Features and Efficient Multi-Task Learning Model. Sensors 2018, 18, 1796.

18. Chen, F.C.; Jahanshahi, M.R.; Wu, R.T.; Joffe, C. A Texture-Based Video Processing Methodology Using Bayesian Data Fusion for Autonomous Crack Detection on Metallic Surfaces. Comput. Aided Civ. Infrastruct. Eng. 2017, 32, 271–287.

19. Zhu, Z.; Wei, H.; Hu, G.; Li, Y.; Qi, G.; Mazur, N. A Novel Fast Single Image Dehazing Algorithm Based on Artificial Multiexposure Image Fusion. IEEE Trans. Instrum. Meas. 2021, 70, 1–23.

20. Li, H.; Wang, Y.; Yang, Z.; Wang, R.; Li, X.; Tao, D. Discriminative Dictionary Learning-Based Multiple Component Decomposition for Detail-Preserving Noisy Image Fusion. IEEE Trans. Instrum. Meas. 2020, 69, 1082–1102.

21. Zheng, M.; Qi, G.; Zhu, Z.; Li, Y.; Wei, H.; Liu, Y. Image Dehazing by an Artificial Image Fusion Method Based on Adaptive Structure Decomposition. IEEE Sens. J. 2020, 20, 8062–8072.

22. Chen, F.; Jahanshahi, M.R. NB-CNN: Deep Learning-Based Crack Detection Using Convolutional Neural Network and Naive Bayes Data Fusion. IEEE Trans. Ind. Electron. 2018, 65, 4392–4400.

23. Ren, R.; Hung, T.; Tan, K.C. A generic deep-learning-based approach for automated surface inspection. IEEE Trans. Cybern. 2018, 48, 929–940.

24. Mery, D.; Riffo, V.; Zscherpel, U.; Mondragón, G.; Lillo, I.; Zuccar, I.; Lobel, H.; Carrasco, M. GDXray: The Database of X-ray Images for Nondestructive Testing. J. Nondestruct. Eval. 2015, 34, 42.

25. Gan, J.; Li, Q.; Wang, J.; Yu, H. A Hierarchical Extractor-Based Visual Rail Surface Inspection System. IEEE Sens. J. 2017, 17, 7935–7944.

26. Song, K.; Yan, Y. A noise robust method based on completed local binary patterns for hot-rolled steel strip surface defects. Appl. Surf. Sci. 2013, 285, 858–864.

27. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

28. Jing, M.; Tang, Y. A new base basic probability assignment approach for conflict data fusion in the evidence theory. Appl. Intell. 2020.

29. Wu, D.; Liu, Z.; Tang, Y. A new classification method based on the negation of a basic probability assignment in the evidence theory. Eng. Appl. Artif. Intell. 2020, 96, 103985.

| Metric | Dataset I [24]: [23] | Dataset I [24]: Ours | Dataset II [25]: Ours | Dataset III [26]: [23] | Dataset III [26]: Ours |
|---|---|---|---|---|---|
| Accuracy | 0.855 | 0.923 | 0.977 | 0.993 | 0.881 |
| ROC | - | 0.826 | 0.910 | - | 0.891 |
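If the ROC row in the table above is read as the area under the ROC curve (an assumption, since the row label alone does not say so), that value can be computed from patch labels and classifier scores with the rank-based Mann-Whitney formulation sketched below. The function name is illustrative.

```python
import numpy as np

def roc_auc(labels, scores):
    """Area under the ROC curve via the Mann-Whitney U statistic: the
    probability that a randomly chosen defect sample scores higher than a
    randomly chosen defect-free sample, counting ties as one half."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative sample")
    # Compare every positive score with every negative score (O(P*N) memory).
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)
```

The pairwise comparison is simple and exact but quadratic in memory; for very large pixel or patch counts, a sort-based rank computation is the usual alternative.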

| Dataset | Image | Method | Accuracy | Mean IoU | Sensitivity | Specificity | Precision | Balanced accuracy | F1 score |
|---|---|---|---|---|---|---|---|---|---|
| Dataset I [24] | Image 1 | [23] | - | - | 0.760 | 0.970 | - | 0.865 | - |
| Dataset I [24] | Image 1 | Ours | 0.869 | 0.766 | 0.850 | 0.896 | - | 0.873 | - |
| Dataset I [24] | Image 2 | [23] | - | - | 0.960 | 0.720 | - | 0.840 | - |
| Dataset I [24] | Image 2 | Ours | 0.915 | 0.528 | 0.709 | 0.919 | - | 0.814 | - |
| Dataset I [24] | Image 3 | [23] | - | - | 0.990 | 0.760 | - | 0.875 | - |
| Dataset I [24] | Image 3 | Ours | 0.835 | 0.539 | 0.955 | 0.827 | - | 0.891 | - |
| Dataset I [24] | Image 4 | [23] | - | - | 0.890 | 0.660 | - | 0.775 | - |
| Dataset I [24] | Image 4 | Ours | 0.866 | 0.519 | 0.499 | 0.889 | - | 0.699 | - |
| Dataset I [24] | Image 5 | [23] | - | - | 0.800 | 0.940 | - | 0.870 | - |
| Dataset I [24] | Image 5 | Ours | 0.708 | 0.421 | 0.991 | 0.692 | - | 0.842 | - |
| Dataset II [25] | - | [25] | - | - | 0.854 | - | 0.911 | - | 0.882 |
| Dataset II [25] | - | [5] | - | - | 0.774 | - | 0.846 | - | 0.808 |
| Dataset II [25] | - | Ours | 0.928 | 0.571 | 0.963 | 0.928 | 0.922 | 0.946 | 0.942 |
| Dataset III [26] | - | Ours | 0.741 | 0.544 | 0.799 | 0.723 | - | 0.761 | - |
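The pixel-level metrics in the table above can be computed from a binary prediction mask and the ground-truth mask as sketched below, using the standard confusion-matrix definitions. Reading "Mean IoU" as the average of the defect-class and background-class IoU is an assumption about the definition used here; the function name is illustrative.

```python
import numpy as np

def pixel_metrics(pred, truth):
    """Pixel-level metrics for a binary defect map against ground truth.
    Undefined ratios (e.g. no predicted defects) come out as nan with a
    NumPy warning rather than raising an exception."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)
    tn = np.sum(~pred & ~truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    sensitivity = tp / (tp + fn)  # recall on defect pixels
    specificity = tn / (tn + fp)  # recall on defect-free pixels
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / pred.size,
        # Assumed definition: mean of defect IoU and background IoU.
        "mean_iou": (tp / (tp + fp + fn) + tn / (tn + fn + fp)) / 2,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }
```

As a consistency check on the reported values, the "Ours" row for Dataset II gives (0.963 + 0.928) / 2 = 0.9455 for balanced accuracy (reported as 0.946) and 2 · 0.922 · 0.963 / (0.922 + 0.963) ≈ 0.942 for the F1 score.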

| Test Image Resolution | Patch Classification (Only) | Proposed Approach |
|---|---|---|

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Eugene Chian, Y.T.; Tian, J. Surface Defect Inspection in Images Using Statistical Patches Fusion and Deeply Learned Features. AI 2021, 2, 17-31. https://doi.org/10.3390/ai2010002


Chicago/Turabian style: Eugene Chian, Yan Tao, and Jing Tian. 2021. "Surface Defect Inspection in Images Using Statistical Patches Fusion and Deeply Learned Features" AI 2, no. 1: 17-31. https://doi.org/10.3390/ai2010002
