Abstract

Although they are a common type of injury worldwide, burns are challenging to diagnose, particularly for untrained point-of-care clinicians. Given their visual nature, developments in artificial intelligence (AI) have sparked growing interest in the automated diagnosis of burns. This review aims to appraise the state of the evidence thus far, with a focus on the identification and severity classification of acute burns. Three publicly available electronic databases were searched to identify peer-reviewed studies on the automated diagnosis of acute burns, published in English since 2005. Of the 20 studies identified, three were excluded because they concerned animals or older burns, or lacked peer review. The remaining 17 studies, from nine different countries, were classified into three AI generations according to the type of algorithm developed and the images used. Whereas the algorithms for burn identification have not gained much in accuracy across generations, those for severity classification improved substantially (from 66.2% to 96.4%), especially in the latest generation (n = 8). Those eight studies were further assessed for methodological bias and applicability of the results using the Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2) tool. This highlighted the feasibility nature of the studies and their detrimental dependence on online databases of poorly documented images, which entails a substantial risk of bias in patient selection and limits applicability in the clinical setting. In moving past the pilot stage, future development work would benefit from greater input from clinicians, who could contribute essential point-of-care knowledge and perspectives.

1. Introduction

Burn injuries are the fourth most frequent cause of injury death worldwide [1]. Yet diagnosing burns accurately is a complex task that often relies on the knowledge and experience of burn specialists working in specialized burn centers. As a result, many burn injuries are inaccurately diagnosed at the point of care, with a consequent worsening of patient prognosis. A burn is characterized by its size (expressed as a percentage of total body surface area, TBSA) and its depth. Depth can be divided into up to four categories: superficial thickness (1st degree burns, e.g., sunburn), superficial partial thickness (healing within 14 days), mid-partial thickness (healing within 21 days), and deep partial and full thickness (which will not heal) [2]. Alternatively, burn severity can be dichotomized according to whether the burn requires grafting (deep partial and full thickness burns) or not (superficial to mid-partial thickness) [3].
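The depth categories and the grafting dichotomy above amount to a simple many-to-one mapping. The Python sketch below makes that mapping explicit, for instance to collapse multi-class depth labels into the binary grafting/no-grafting labels used by some of the reviewed studies. The enum and function names are hypothetical, chosen for illustration only.

```python
from enum import Enum


class BurnDepth(Enum):
    """Burn depth categories as listed in the text (following [2])."""
    SUPERFICIAL = "superficial thickness (1st degree)"
    SUPERFICIAL_PARTIAL = "superficial partial thickness"      # heals within 14 days
    MID_PARTIAL = "mid-partial thickness"                      # heals within 21 days
    DEEP_PARTIAL_OR_FULL = "deep partial and full thickness"   # will not heal


# Dichotomization following [3]: only deep partial and full thickness burns
# require grafting; superficial to mid-partial thickness burns do not.
REQUIRES_GRAFTING = {
    BurnDepth.SUPERFICIAL: False,
    BurnDepth.SUPERFICIAL_PARTIAL: False,
    BurnDepth.MID_PARTIAL: False,
    BurnDepth.DEEP_PARTIAL_OR_FULL: True,
}


def to_grafting_label(depth: BurnDepth) -> str:
    """Collapse one depth category into the binary grafting label."""
    return "grafting" if REQUIRES_GRAFTING[depth] else "no grafting"


def collapse(depths):
    """Collapse a list of depth labels (reference or predicted) into binary labels."""
    return [to_grafting_label(d) for d in depths]


if __name__ == "__main__":
    print(collapse([BurnDepth.SUPERFICIAL_PARTIAL, BurnDepth.DEEP_PARTIAL_OR_FULL]))
    # prints ['no grafting', 'grafting']
```

Applying the same mapping to both the reference labels and the predictions lets a multi-class algorithm be scored on the binary grafting decision as well.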

Given that there are few burn centers worldwide, and that telemedicine also has outreach limitations, efforts to provide accurate remote diagnostic assistance to point-of-care clinicians are welcome. Because of the visual nature of burn injuries, image-based diagnosis was one of the first candidates for telemedicine, followed by mHealth and, later, automated diagnosis using artificial intelligence [4,5,6,7]. Automated diagnosis has in fact been on the minds of clinicians and researchers for decades: as early as 1986, Siegel et al. suggested using computer assistance to calculate burn size [8]. The first attempts to develop algorithms for automated burn diagnosis relied on images collected locally at burn centers, using highly specialized medical cameras, commercially available digital cameras [4], or, as their built-in cameras improved, smartphones [6,9,10]. At present, automated burn diagnosis is the object of several international research efforts, building on images gathered either from international clinical collaborators or from online public databases [11].

Despite this, progress in the development of accurate algorithms remains slower than that in other medical fields, e.g., dermatology or ophthalmology, where accuracy levels are now comparable to those of experts [12,13,14]. For burns, two types of image-based algorithms are being developed—those that segment the burn, distinguishing burnt skin from healthy skin or from the background, and those that classify it, assessing its severity.

The latest review on machine learning in burn care dates from 2015; of the 15 studies identified then, only four focused on diagnosis, while the others used machine learning, in preference to conventional statistical analysis, to predict burn mortality, infection, or length of stay [15]. Regarding image-based automated diagnosis, methodological progress has been described in a few recent publications [16,17,18]; however, a systematic review and quality assessment of the related studies are lacking.

This study was undertaken to address the following research questions:

- What is the state of evidence on the accuracy of image-based artificial intelligence for burn injury identification and severity classification?

- What is the quality of the evidence at hand, considering both the risk of bias and the applicability of the findings?

2. Methods

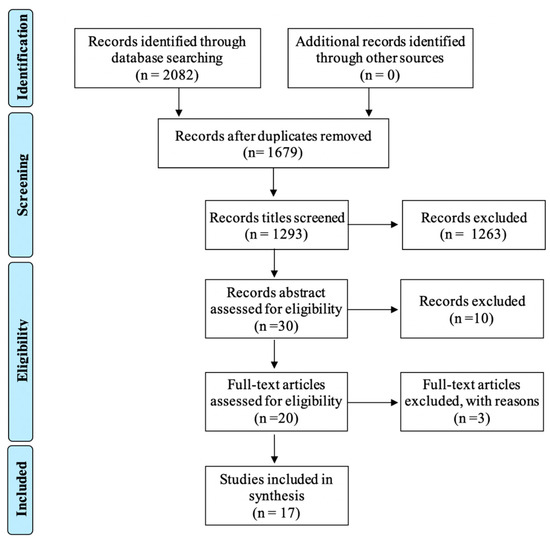

Relevant studies on the automated diagnosis of acute burn injuries were searched for in three publicly available electronic databases (Medline, Embase, and Web of Science) on 29 March 2021. The keywords used can be found in Supplementary Table S1. From an initial list of 1679 records identified across the databases, publications were selected based on the following inclusion criteria: published in English, published in 2005 or later, published in a peer-reviewed scientific journal, and based on photographs of human skin. Publications from conference proceedings that were not peer-reviewed were excluded.
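As a minimal illustration of how the four inclusion criteria act together as a conjunctive filter, the Python sketch below screens hypothetical publication records. The `Record` fields are assumptions made for this example; the actual screening in this review was done by the authors reading titles, abstracts, and full texts, not by code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical minimal description of one retrieved publication."""
    title: str
    language: str
    year: int
    peer_reviewed: bool            # False e.g. for non-peer-reviewed proceedings
    human_skin_photographs: bool   # study based on photographs of human skin


def meets_inclusion_criteria(r: Record) -> bool:
    """A record is kept only if it satisfies all four criteria at once."""
    return (
        r.language == "English"
        and r.year >= 2005
        and r.peer_reviewed
        and r.human_skin_photographs
    )


def screen(records):
    """Return the records that pass the inclusion criteria, in input order."""
    return [r for r in records if meets_inclusion_criteria(r)]
```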

The studies were screened according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram (Figure 1) [19]. After both authors had read the titles and abstracts, twenty articles were selected for full-text review, of which seventeen were retained for this review. The reasons for exclusion at that stage were: a study on animals [20], a study on the diagnosis of old burns and burn scars [21], and a study that largely duplicated information from an earlier study by a group of authors included in the review.

Figure 1.

PRISMA flow diagram of selected studies for review.

The data extracted for each study included: authors and year of publication; country of data collection; sample sizes of the training and validation sets; type of validation set; patient demographics and injuries involved (patient gender and age, burn type/mechanism, skin type, and body parts involved); imaging characteristics (type of camera used, resolution, color, and pre-processing methods); characteristics of the AI model (type of model used, type of classifier, and type of validation technique); reference standard (or comparator) for diagnosis; and diagnostic performance (accuracy, sensitivity, specificity, precision, and area under the receiver operating characteristic curve, AUROC).

In the first part of the review, for all studies, we report on the source of the images, whether the algorithm was meant for burn identification or classification, the number of images used, the type of training input, the reference standard, and the overall accuracy of the results. Accuracy was defined as the positive predictive value (PPV) or sensitivity (S) for burn identification, and as the success rate (A), i.e., the share of correctly classified cases, for burn classification. When these measures were not available, the Dice coefficient (DC) was reported.
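The measures above can all be computed from counts of true positives (TP), false positives (FP), and false negatives (FN): PPV = TP/(TP+FP), sensitivity = TP/(TP+FN), and DC = 2TP/(2TP+FP+FN). The Python sketch below assumes binary burn masks flattened into lists of 0/1 values (1 = burn pixel) and classification labels given as strings; these input formats are assumptions for illustration, not those of any reviewed study.

```python
def confusion_counts(truth, pred):
    """Count TP, FP, FN, TN for two equally long binary sequences,
    e.g. flattened burn masks (1 = burn pixel, 0 = not burn)."""
    if len(truth) != len(pred):
        raise ValueError("truth and pred must have the same length")
    tp = sum(1 for t, p in zip(truth, pred) if t and p)
    fp = sum(1 for t, p in zip(truth, pred) if not t and p)
    fn = sum(1 for t, p in zip(truth, pred) if t and not p)
    tn = sum(1 for t, p in zip(truth, pred) if not t and not p)
    return tp, fp, fn, tn


def ppv(tp, fp):
    """Positive predictive value: share of predicted burn pixels that are burn.
    Raises ZeroDivisionError if nothing was predicted as burn."""
    return tp / (tp + fp)


def sensitivity(tp, fn):
    """Share of true burn pixels found by the algorithm."""
    return tp / (tp + fn)


def dice(tp, fp, fn):
    """Dice coefficient 2|A and B| / (|A| + |B|); equal to the F1 score of the masks."""
    return 2 * tp / (2 * tp + fp + fn)


def success_rate(true_labels, predicted_labels):
    """Overall accuracy for classification: share of correctly classified burns."""
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return correct / len(true_labels)
```

For example, with reference mask `[1, 1, 1, 1, 0, 0, 0, 0]` and prediction `[1, 1, 1, 0, 1, 1, 0, 0]`, the counts are TP = 3, FP = 2, FN = 1, giving PPV = 0.6, sensitivity = 0.75, and DC of about 0.667: the same segmentation scores differently depending on the measure, which is one reason results reported with different measures are hard to compare.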

Studies were divided into three generations according to how the algorithms were built and, more specifically, the type and number of images processed for training. Studies of the first and second generations all used image-specific features defined by the user rather than the whole image, as is common in automated diagnosis nowadays. The third generation represents the most recent approach in AI development, in particular the use of transfer learning. For those studies, a quality assessment based on the Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2) tool [22] was performed, addressing in turn the risk of bias and the applicability of the results. As indicated in Table 1, QUADAS-2 considers three domains: patient selection, index test, and reference standard. Herein, the index test is the automated algorithm, whereas the reference standard is the diagnosis used to train the algorithm (to detect where the burn is in an image, and to assess its severity). QUADAS-2 suggests dichotomizing the judgment for each item. First, either bias is not evident or there is a high risk of bias; high risk includes cases where bias is not evidently accounted for as well as cases where it is obviously present. Second, either the results are clinically applicable or clinical applicability is doubtful or ruled out (☹). For the assessment, each author reviewed the studies independently and, in cases of disagreement, consensus was sought and reached.
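The dichotomized QUADAS-2 judgments can be represented as a small data structure: for each study, one judgment per domain, each carrying a risk-of-bias flag and an applicability flag. The Python sketch below is a hypothetical representation (the names `Domain`, `Judgment`, and the helper functions are illustrative, not part of QUADAS-2). It checks whether a study has a low risk of bias in all three domains and lists the domains on which two independent reviewers disagree and consensus must be sought.

```python
from dataclasses import dataclass
from enum import Enum


class Domain(Enum):
    PATIENT_SELECTION = "patient selection"
    INDEX_TEST = "index test"
    REFERENCE_STANDARD = "reference standard"


@dataclass(frozen=True)
class Judgment:
    # True when bias is not evidently accounted for, or is obviously present.
    high_risk_of_bias: bool
    # True when clinical applicability is doubtful or ruled out.
    applicability_concern: bool


def low_risk_in_all_domains(assessment):
    """assessment maps every Domain to a Judgment for one study."""
    return all(not assessment[d].high_risk_of_bias for d in Domain)


def disagreements(reviewer_a, reviewer_b):
    """Domains where two reviewers' judgments for the same study differ."""
    return [d for d in Domain if reviewer_a[d] != reviewer_b[d]]
```

Because `Judgment` is a frozen dataclass, two judgments compare equal exactly when both flags match, so a disagreement on either risk of bias or applicability is flagged.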

Table 1.

Adapted version of the risk of bias and applicability judgment according to QUADAS-2 assessment [22].

3. Review

3.1. Peer-Reviewed Scientific Articles over Time and by Location

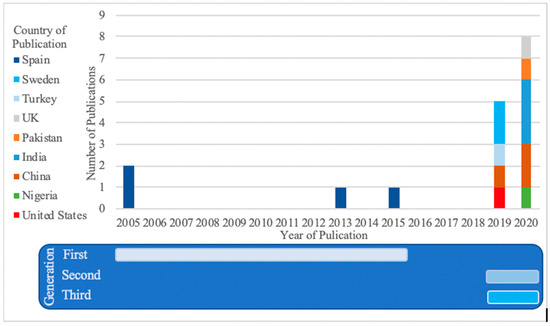

Figure 2 presents the selected articles according to their year and country of publication, split into three methodological generations. The first generation includes four studies, all from a Spanish team, spanning about 10 years [23,24,25,26]. These studies all addressed assisted diagnosis (rather than the fully automated systems of later generations), usually characterizing specific features through experiments with burn experts in order to replicate their diagnostic process. The methods used were Fuzzy ARTMAP and multidimensional scaling analysis. The second generation, a cluster of four studies published in 2019 and 2020 from four countries, takes advantage of increased computational power and uses more advanced algorithmic techniques (e.g., support vector machines) [18,27,28,29]. This generation still requires the images to be pre-processed so that the algorithm is fed image-specific features rather than the images themselves. The third generation, introduced by teams from five countries, several of which had not contributed to earlier generations, consists of studies from 2020 and 2021 that typically used transfer learning to compensate for small image sample sizes, and that used whole images as inputs [16,17,30,31,32,33,34,35,36]. Transfer learning makes this possible because an existing convolutional neural network (CNN), previously trained on a very large dataset of unrelated objects for a different task, can be fine-tuned using only a few burn images [37].
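The structure of feature-extraction transfer learning (a frozen, previously trained network plus a small new head trained on the few available burn images) can be sketched in plain Python. This is a toy under strong assumptions: `frozen_backbone` is a hypothetical stand-in that returns mean RGB values instead of the output of a real pretrained CNN, and the head is a logistic regression trained by stochastic gradient descent. In practice, the backbone would typically be a CNN pretrained on a large generic image collection, and some of its layers might also be fine-tuned.

```python
import math


def frozen_backbone(image):
    """Hypothetical stand-in for a CNN pretrained on a large, unrelated dataset.

    Its parameters are never updated during fine-tuning. Here it maps an image
    (a list of 0-255 RGB tuples) to a fixed feature vector: mean R, G, B in 0-1.
    """
    n = len(image)
    return [sum(px[c] for px in image) / (255.0 * n) for c in range(3)]


def _logit(weights, bias, features):
    return sum(w * x for w, x in zip(weights, features)) + bias


def fine_tune_head(images, labels, epochs=200, lr=0.5):
    """Train only a new logistic-regression head (burn = 1, not burn = 0)
    on top of the frozen features, using plain stochastic gradient descent."""
    features = [frozen_backbone(img) for img in images]  # computed once: backbone is frozen
    weights, bias = [0.0, 0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            p = 1.0 / (1.0 + math.exp(-_logit(weights, bias, x)))
            grad = p - y  # derivative of the log-loss with respect to the logit
            weights = [w - lr * grad * xi for w, xi in zip(weights, x)]
            bias -= lr * grad
    return weights, bias


def predict(head, image):
    """Classify one image with the frozen backbone and the trained head."""
    weights, bias = head
    return 1 if _logit(weights, bias, frozen_backbone(image)) > 0 else 0
```

Only `weights` and `bias` change during training; this small number of trainable parameters is what allows a handful of labeled images to suffice, at the cost of relying entirely on features learned for another task.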

Figure 2.

Number of publications per year and country (total number of publications reviewed n = 17).

3.2. Main Features of the Selected Studies

Table 2 presents an overview of the main characteristics of the 17 studies retained, considering the year of publication, the source of the images used, whether the algorithm focused on identification or classification, the number of images used, the training input, the use of transfer learning, and the accuracy obtained.

Table 2.

Summary table of the articles reviewed (n = 17) by generation.

Burn identification (through either segmentation or burn/non-burn classification) was addressed in ten of those studies, and burn classification in eleven, using either two classes (grafting/no grafting [18,25,26]) or more (most typically in the latest generation) [16,23,24,25,30,32,34,36,38]. Three studies looked at both identification and classification [23,24,29], two of which are from the first generation [23,24]. Burn identification has remained a subject of interest across generations, with four studies from the latest generation focusing specifically on it [17,31,34,35], even though it can be considered of lesser clinical importance (if there is no burn, clinicians do not need to be involved). Although this was not stated clearly in the studies, burn segmentation could be useful for estimating burn size; however, the diagnostic accuracy of burn size estimation was measured in only one study.

The table also reveals changes in sources of images over time, with images initially coming from hospitals and, more recently, above all, from online public databases [28,29,32,33,34]. The number of burn images used for training varied greatly, from 6 [27] to 1200 [16]. For the validation sets, all studies from the first generation had separate data, while only one had separate data in the second generation [18]. In fact, that study used an existing dataset from the first generation, and obtained similar accuracy results, despite using a more up-to-date methodology [18]. In the third generation, five studies split their data between training and validation prior to training [16,31,33,34,35], while the four others used cross-validation (i.e., multiple random splitting of their dataset) as a validation technique [17,30,32,36].
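The two validation strategies can be contrasted in a short Python sketch: a single hold-out split made before training, and k-fold cross-validation, shown here as one common variant of repeated splitting (the reviewed studies may have used other variants). Function names and default parameters are illustrative assumptions.

```python
import random


def holdout_split(items, val_fraction=0.2, seed=0):
    """Single split made before training: returns (train, validation)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def k_fold_splits(items, k=5, seed=0):
    """k-fold cross-validation: each item is used for validation exactly once
    and for training in the other k - 1 rounds."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]  # k disjoint folds covering all items
    for i in range(k):
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, folds[i]
```

Cross-validation uses every image for validation once, which helps with small datasets, but neither strategy by itself guarantees independence if several images come from the same patient.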

With regard to training inputs, all studies from the first generation used pre-defined features from the images. Likewise, in the second generation, image features such as the histogram of oriented gradients (HOG), hue, or chroma were extracted directly from the images and used as training inputs. In contrast with the first generation of studies [23,24,25,26], the algorithms from the second generation were fully automated, in that no human interaction was needed to specify where the burn injury was [18]. In the third generation, two studies used small, pre-extracted body regions of interest of a defined size [30,36], as opposed to the whole images used in the seven other studies [16,17,31,32,33,34,35].
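As an illustration of hand-crafted color features of the kind used in the second generation, the Python sketch below computes a mean hue and a mean chroma over a region of pixels. It assumes HSV hue from the standard-library `colorsys` module and chroma defined as max(R,G,B) minus min(R,G,B); the reviewed studies may have used other color spaces (e.g., CIELAB, where chroma is defined differently), and HOG features are not shown.

```python
import colorsys
import math


def hue_chroma_features(pixels):
    """Return (mean hue, mean chroma) for a list of 0-255 RGB tuples.

    Hue is the HSV hue in [0, 1), where values near 0 and near 1 are both red.
    It is averaged as an angle (a circular mean), because an arithmetic mean of
    hues on either side of red would land near cyan. Each pixel's hue is
    weighted by its chroma, so grey pixels (undefined hue) do not count.
    Chroma is max(R, G, B) - min(R, G, B) on the 0-1 scale.
    """
    sin_sum = cos_sum = chroma_sum = 0.0
    for r, g, b in pixels:
        rf, gf, bf = r / 255.0, g / 255.0, b / 255.0
        h, _, _ = colorsys.rgb_to_hsv(rf, gf, bf)
        chroma = max(rf, gf, bf) - min(rf, gf, bf)
        sin_sum += chroma * math.sin(2 * math.pi * h)
        cos_sum += chroma * math.cos(2 * math.pi * h)
        chroma_sum += chroma
    mean_hue = (math.atan2(sin_sum, cos_sum) / (2 * math.pi)) % 1.0
    return mean_hue, chroma_sum / len(pixels)
```

For two reddish pixels, `(255, 0, 25)` and `(255, 25, 0)`, the per-pixel hues are about 0.984 and 0.016; their arithmetic mean is 0.5 (cyan), whereas the circular mean returned here is red (0, up to floating-point rounding).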

The accuracy of the results was measured in several ways: typically as positive predictive value and sensitivity, but also as the Dice coefficient (DC) for burn identification and as accuracy for burn classification. Albeit with different measures, burns were segmented with relatively similar accuracy across generations, the highest level being reached in one study from the second generation [27]. However, it is difficult to conclude whether accuracy significantly improved, given that the training sets differed greatly. In any case, larger training and validation sets remain a prerequisite for these results to be regarded as robust.

As for burn classification, when using a grafting/no-grafting dichotomization, the overall accuracy ranged from 79.7% to 83.8%, and when three or more classes were used (mainly burn depth categories), it ranged from 66.2% to 91.5%. Burn classification seems to have improved, especially recently, with all studies from the third generation performing at over 80%, and three of them even performing at over 90%. Two of the studies analyzed did not report any measure of overall accuracy, reporting instead measures specific to different burn depths. Both obtained somewhat better sensitivity for superficial burns [31], and one of them reached higher sensitivity for deep burns [35]. Notably, the reference standard was not always specified, but when it was, diagnosis was mostly made a posteriori from images. Clinical diagnosis was used in only a few studies [18,23,24,25,26,27,28,29,30,32,36], which is troublesome given that algorithms can only be as good as the data they are trained with. However, obtaining the ultimate gold standard in burn care would require delaying grafting for at least 21 days (to observe whether the wound heals), which is ethically problematic, especially in parts of the world where aseptic rooms are not available. Laser Doppler imaging (LDI) might be an alternative, but it is only available in some settings and would therefore limit the training of algorithms to specific patient populations. In low- and middle-income countries (LMICs), the best alternative would be diagnosis by a local specialist, but even that was not available in all of the studies described.
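Why depth-specific measures matter can be shown with a small Python sketch: with imbalanced classes, overall accuracy can remain high while sensitivity for the rarer class (here, deep burns) is poor. The labels and counts used below are invented for illustration and do not come from any reviewed study.

```python
from collections import Counter


def per_class_sensitivity(true_labels, predicted_labels):
    """Sensitivity (recall) per reference class: the share of burns of a given
    depth that the algorithm assigned to that same depth."""
    totals = Counter(true_labels)
    hits = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {cls: hits[cls] / n for cls, n in totals.items()}


def overall_accuracy(true_labels, predicted_labels):
    """Share of all burns classified correctly, regardless of class."""
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return correct / len(true_labels)
```

With six superficial and two deep burns, where one deep burn is misclassified as superficial, overall accuracy is 0.875 while sensitivity for deep burns is only 0.5, the class that matters most for the grafting decision.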

Compared with the performance levels reached in other fields of image-based diagnosis (e.g., dermatology [12] or ophthalmology [39]), the values presented herein are relatively low. If the algorithms are to be employed in the clinical setting [40], better performance levels must be obtained. At present, the algorithms can be said to classify burn depth better than untrained clinicians [41], but whether this is sufficient needs further consideration, and what constitutes a reasonable level of performance remains to be established.

3.3. Quality of the Evidence Published—Latest Generation of Studies

Regarding the quality of the evidence, none of the studies reported following the CONSORT criteria [42], which are usually used for randomized controlled trials and diagnostic studies. This can be regarded as a shortcoming, considering the clinical value of the studies included, and reflects the fact that the focus remains largely on the technological side of the research. Table 3 shows that, although several studies using transfer learning were published recently, the basics required to limit the risk of bias and to ensure generalizability in diagnostic accuracy studies are not all taken into account: no study shows a low risk of bias in all three domains. For risk of bias, patient selection was considered problematic in all studies but one [17], whereas the index test and reference standard were regarded as adequately dealt with in most instances, especially in burn identification studies [30,31,32,34,36]. The biases found across the studies are related to the use of online images, or to a lack of clarity regarding how representative the images are of the patient caseload. Only one study used LDI as the reference standard [30], whereas another used existing patient charts, but only for part of the training set [17]. The one study with no obvious bias in patient selection was assessed as having a biased index test, since the images were pre-processed and all areas not relevant for burn identification were removed prior to training [17].

Table 3.

Specification in terms of patient selection, index test, and reference standard as well as risk of bias and generalizability of the results.

With regard to the applicability of the results, several issues arose. All studies but one raised concerns pertaining to patient selection; four studies raised concerns pertaining to the index test, and two pertaining to the reference standard. The limited patient selection, in terms of both size and diversity, accounts for the high number of concerns revealed in this review. Although this issue has been raised in several other medical fields of automated diagnosis [43,44], it is particularly relevant for burn injuries, where the skin type of the patient is critical for diagnosis and where the burden lies mostly in Sub-Saharan Africa and South-East Asia [1].

Further, the fact that the validation sets were not completely independent from the training sets makes the results less likely to be reproducible. Moreover, one study addressed the effect of skin type on algorithm performance and showed that accuracy was highest when using two separate models, one for each skin type, rather than one combined model with all images [17]. This is important to bear in mind when building further algorithms, although it has some implications in terms of equity between cases. It further underlines the crucial importance of large datasets that include diverse types of cases.
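One common way in which validation data fail to be independent is that images of the same patient appear in both the training and validation sets; we assume this as an example, since the exact overlap in the reviewed studies is not described here. The Python sketch below splits hypothetical `(patient_id, image)` pairs at the patient level so that no patient contributes to both sets.

```python
import random
from collections import defaultdict


def patient_level_split(images, val_fraction=0.25, seed=0):
    """Split (patient_id, image) pairs so that all images of a given patient
    end up in the same set, keeping validation independent of training."""
    by_patient = defaultdict(list)
    for patient_id, image in images:
        by_patient[patient_id].append((patient_id, image))
    patients = sorted(by_patient)            # deterministic order before shuffling
    random.Random(seed).shuffle(patients)
    n_val = max(1, round(len(patients) * val_fraction))
    val_ids = set(patients[:n_val])
    train = [pair for pid in patients if pid not in val_ids for pair in by_patient[pid]]
    val = [pair for pid in patients if pid in val_ids for pair in by_patient[pid]]
    return train, val
```

The same grouping idea extends to other units that should not straddle the split, for instance a study site or, as in [17], a skin type when the aim is to test generalization to an unseen group.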

3.4. Other Considerations

Automated diagnostic assistance is developing at an unprecedented pace. For burns, where the number of specialists is limited, automated diagnosis could be instrumental in initiating appropriate referral and treatment. Unlike other skin conditions, such as melanoma, burns are diagnosed in the clinical setting using not only visual wound attributes (such as color or texture) but also pain and perfusion. The latter information does not form part of the algorithms for automated diagnosis, which imposes more rigorous demands on their development.

Our findings reveal pressing research needs, among which is the need for studies based on larger numbers of images, representing a diversity of well-defined population groups, with appropriate reference standards, and the necessity of external validation on separate datasets, which are largely lacking at present. In fact, to go beyond “performance” (or feasibility) studies, there is a need for the publicly available image datasets to provide not only pictures of burns but also relevant data. This, in turn, may require collaborations with and between burn centers to a greater extent than that which seems to be the case at present.

One possible limitation of this review is its coverage, as it is uncertain whether all eligible studies were identified. Nonetheless, since we searched three publicly available repositories that index studies at the junction of medicine and technology, where studies of this kind can be expected to appear, we believe the coverage is good. We also scrutinized the reference lists of the selected articles. Additionally, limiting the review to studies published in English can be regarded as a restrictive criterion. However, several of the studies we report on were from non-English-speaking countries, which indicates that we have captured contributions beyond the native English-speaking community.

4. Conclusions

This systematic review sheds light on the progress made in the automated segmentation and classification of acute burn injuries over time and across three methodological generations of algorithm building. Interest in this health outcome, which is well suited to imaging, has grown remarkably in recent years, thanks to rapid developments in deep-learning technologies and easier access to online databases.

Despite the progress made in diagnostic accuracy, notably for burn severity classification, this increased interest comes at the expense of the transferability of the findings to the point of care. The feasibility nature of most studies published in recent years, with relatively limited numbers of pictures and weak implications for clinicians, leaves applicability issues largely unaddressed.

To move forward with the development of these algorithms and pave the way for their clinical implementation, it is imperative that user perspectives are incorporated [45], in a manner that attends to and integrates input from remote clinical users in order to safeguard both applicability and usability.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/ebj2040020/s1, Table S1: Documentation of Search Strategies.

Author Contributions

Conceptualization, C.B. and L.L.; methodology, C.B. and L.L.; article review and selection, C.B. and L.L.; analyses, C.B.; writing – original draft preparation, C.B.; writing – review and editing, L.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

The authors would like to thank the library services at Karolinska Institutet for their help with the search performed.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. World Health Organization. Global Health Estimates 2016: Estimated Deaths by Cause and Region, 2000 and 2016; World Health Organization: Geneva, Switzerland, 2017.

2. Karim, A.S.; Shaum, K.; Gibson, A.L.F. Indeterminate-Depth Burn Injury-Exploring the Uncertainty. J. Surg. Res. 2020, 245, 183–197.

3. Sjöberg, F. Pre-Hospital, Fluid and Early Management, Burn Wound Evaluation; Jeschke, M.G., Kamolz, L.-P., Sjöberg, F., Wolf, S.E., Eds.; Springer: New York, NY, USA, 2012; p. 105.

4. Roa, L.; Gómez-Cía, T.; Acha, B.; Serrano, C. Digital imaging in remote diagnosis of burns. Burns 1999, 25, 617–623.

5. Saffle, J.R. Telemedicine for acute burn treatment: The time has come. J. Telemed. Telecare 2006, 12, 1–3.

6. Wallis, L.A.; Fleming, J.; Hasselberg, M.; Laflamme, L.; Lundin, J. A smartphone App and cloud-based consultation system for burn injury emergency care. PLoS ONE 2016, 11, e0147253.

7. Acha, B.; Serrano, C.; Acha, J.I.; Roa, L.M. CAD tool for burn diagnosis. Inf. Process Med. Imaging 2003, 18, 294–305.

8. Siegel, J.B.; Wachtel, T.L.; Brimm, J.E. Automated documentation and analysis of burn size. J. Trauma-Inj. Infect. Crit. Care 1986, 26, 44–46.

9. Martinez, R.; Rogers, A.D.; Numanoglu, A.; Rode, H. The value of WhatsApp communication in paediatric burn care. Burns 2018, 44, 947–955.

10. den Hollander, D.; Mars, M. Smart phones make smart referrals. Burns 2017, 43, 190–194.

11. Hassanipour, S.; Ghaem, H.; Arab-Zozani, M.; Seif, M.; Fararouei, M.; Abdzadeh, E.; Sabetian, G.; Paydar, S. Comparison of artificial neural network and logistic regression models for prediction of outcomes in trauma patients: A systematic review and meta-analysis. Injury 2019, 50, 244–250.

12. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

13. Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A. Man against machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842.

14. Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. J. Am. Med. Assoc. 2016, 316, 2402–2410.

15. Liu, N.T.; Salinas, J. Machine learning in burn care and research: A systematic review of the literature. Burns 2015, 41, 1636–1641.

16. Pabitha, C.; Vanathi, B. Densemask RCNN: A Hybrid Model for Skin Burn Image Classification and Severity Grading. Neural Process. Lett. 2021, 53, 319–337.

17. Abubakar, A.; Ugail, H.; Bukar, A.M. Assessment of Human Skin Burns: A Deep Transfer Learning Approach. J. Med. Biol. Eng. 2020, 40, 321–333.

18. Yadav, D.P.; Sharma, A.; Singh, M.; Goyal, A. Feature extraction based machine learning for human burn diagnosis from burn images. IEEE J. Transl. Eng. Health Med. 2019, 7, 1–7.

19. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Moher, D. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

20. Dubey, K.; Srivastava, V.; Dalal, K. In vivo automated quantification of thermally damaged human tissue using polarization sensitive optical coherence tomography. Comput. Med. Imaging Graph. 2018, 64, 22–28.

21. Pham, T.D.; Karlsson, M.; Andersson, C.M.; Mirdell, R.; Sjoberg, F. Automated VSS-based burn scar assessment using combined texture and color features of digital images in error-correcting output coding. Sci. Rep. 2017, 7, 16744.

22. Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

23. Acha, B.; Serrano, C.; Acha, J.I.; Roa, L.M. Segmentation and classification of burn images by color and texture information. J. Biomed. Opt. 2005, 10, 034014.

24. Serrano, C.; Acha, B.; Gomez-Cia, T.; Acha, J.I.; Roa, L.M. A computer assisted diagnosis tool for the classification of burns by depth of injury. Burns 2005, 31, 275–281.

25. Acha, B.; Serrano, C.; Fondon, I.; Gomez-Cia, T. Burn depth analysis using multidimensional scaling applied to psychophysical experiment data. IEEE Trans. Med. Imaging 2013, 32, 1111–1120.

26. Serrano, C.; Boloix-Tortosa, R.; Gomez-Cia, T.; Acha, B. Features identification for automatic burn classification. Burns 2015, 41, 1883–1890.

27. Cirillo, M.D.; Mirdell, R.; Sjöberg, F.; Pham, T.D. Tensor Decomposition for Colour Image Segmentation of Burn Wounds. Sci. Rep. 2019, 9, 3291.

28. Şevik, U.; Karakullukçu, E.; Berber, T.; Akbaş, Y.; Türkyılmaz, S. Automatic classification of skin burn colour images using texture-based feature extraction. IET Image Process. 2019, 13, 2018–2028.

29. Khan, F.A.; Butt, A.U.R.; Asif, M.; Ahmad, W.; Nawaz, M.; Jamjoom, M.; Alabdulkreem, E. Computer-aided diagnosis for burnt skin images using deep convolutional neural network. Multimed. Tools Appl. 2020, 79, 34545–34568.

30. Cirillo, M.D.; Mirdell, R.; Sjöberg, F.; Pham, T.D. Time-Independent Prediction of Burn Depth Using Deep Convolutional Neural Networks. J. Burn Care Res. 2019, 40, 857–863.

31. Jiao, C.; Su, K.; Xie, W.; Ye, Z. Burn image segmentation based on Mask Regions with Convolutional Neural Network deep learning framework: More accurate and more convenient. Burn. Trauma 2019, 7, 6.

32. Abubakar, A.; Ugail, H.; Smith, K.M.; Bukar, A.M.; Elhmahmudi, A. Burns Depth Assessment Using Deep Learning Features. J. Med. Biol. Eng. 2020, 40, 923–933.

33. Chauhan, J.; Goyal, P. BPBSAM: Body part-specific burn severity assessment model. Burns 2020, 46, 1407–1423.

34. Chauhan, J.; Goyal, P. Convolution neural network for effective burn region segmentation of color images. Burns 2020, 12, 12.

35. Dai, F.; Zhang, D.; Su, K.; Xin, N. Burn images segmentation based on Burn-GAN. J. Burn Care Res. 2020, 18, 18.

36. Wang, Y.; Ke, Z.; He, Z.; Chen, X.; Zhang, Y.; Xie, P.; Li, T.; Zhou, J.; Li, F.; Yang, C.; et al. Real-time burn depth assessment using artificial networks: A large-scale, multicentre study. Burns 2020, 46, 1829–1838.

37. Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29.

38. Khan, F.A.; Butt, A.U.R.; Asif, M.; Aljuaid, H.; Adnan, A.; Shaheen, S.; ul Haq, I. Burnt Human Skin Segmentation and Depth Classification Using Deep Convolutional Neural Network (DCNN). J. Med. Imaging Health Inform. 2020, 10, 2421–2429.

39. Wong, T.Y.; Bressler, N.M. Artificial Intelligence with deep learning technology looks into diabetic retinopathy screening. JAMA 2016, 316, 2366–2367.

40. Lundin, J.; Dumont, G. Medical mobile technologies – What is needed for a sustainable and scalable implementation on a global scale? Glob. Health Action 2017, 10, 14–17.

41. Blom, L.; Boissin, C.; Allorto, N.; Wallis, L.; Hasselberg, M.; Laflamme, L. Accuracy of acute burns diagnosis made using smartphones and tablets: A questionnaire-based study among medical experts. BMC Emerg. Med. 2017, 17, 39.

42. Schulz, K.F.; Altman, D.G.; Moher, D. CONSORT 2010 Statement: Updated guidelines for reporting parallel group randomised trials. BMC Med. 2010, 8, 18.

43. Bellemo, V.; Lim, G.; Rim, T.H.; Tan, G.S.W.; Cheung, C.Y.; Sadda, S.V.; He, M.G.; Tufail, A.; Lee, M.L.; Hsu, W.; et al. Artificial Intelligence Screening for Diabetic Retinopathy: The Real-World Emerging Application. Curr. Diabetes Rep. 2019, 19, 72.

44. Reddy, C.L.; Mitra, S.; Meara, J.G.; Atun, R.; Afshar, S. Artificial Intelligence and its role in surgical care in low-income and middle-income countries. Lancet Digit. Health 2019, 1, e384–e386.

45. Laflamme, L.; Wallis, L.A. Seven pillars for ethics in digital diagnostic assistance among clinicians: Take-homes from a multi-stakeholder and multi-country workshop. J. Glob. Health 2020, 10, 010326.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).