Optimization Method of Energy Saving Strategy for Networked Driving in Road Sections with Frequent Traffic Flow Changes

Abstract

1. Introduction

2. Analysis of Mixed Traffic Flow Driving Scenarios

2.1. Analysis of Energy Saving Driving Strategies

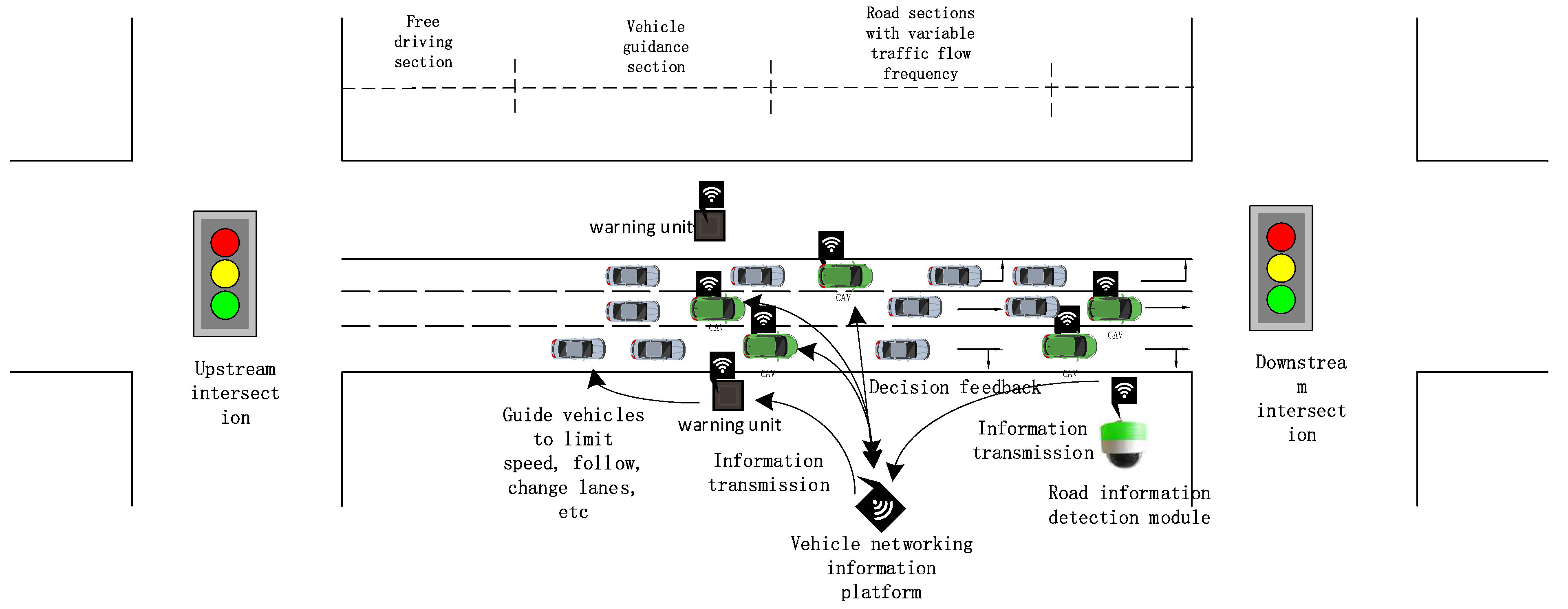

2.2. Energy Saving Framework for Connected Driving

3. A Driving Model for Networked Mixed Traffic Flow

3.1. Vehicle Following and Lane Changing Model

3.2. Hybrid Fleet Energy Consumption Model

3.3. Energy Saving Driving Model for Hybrid Fleet

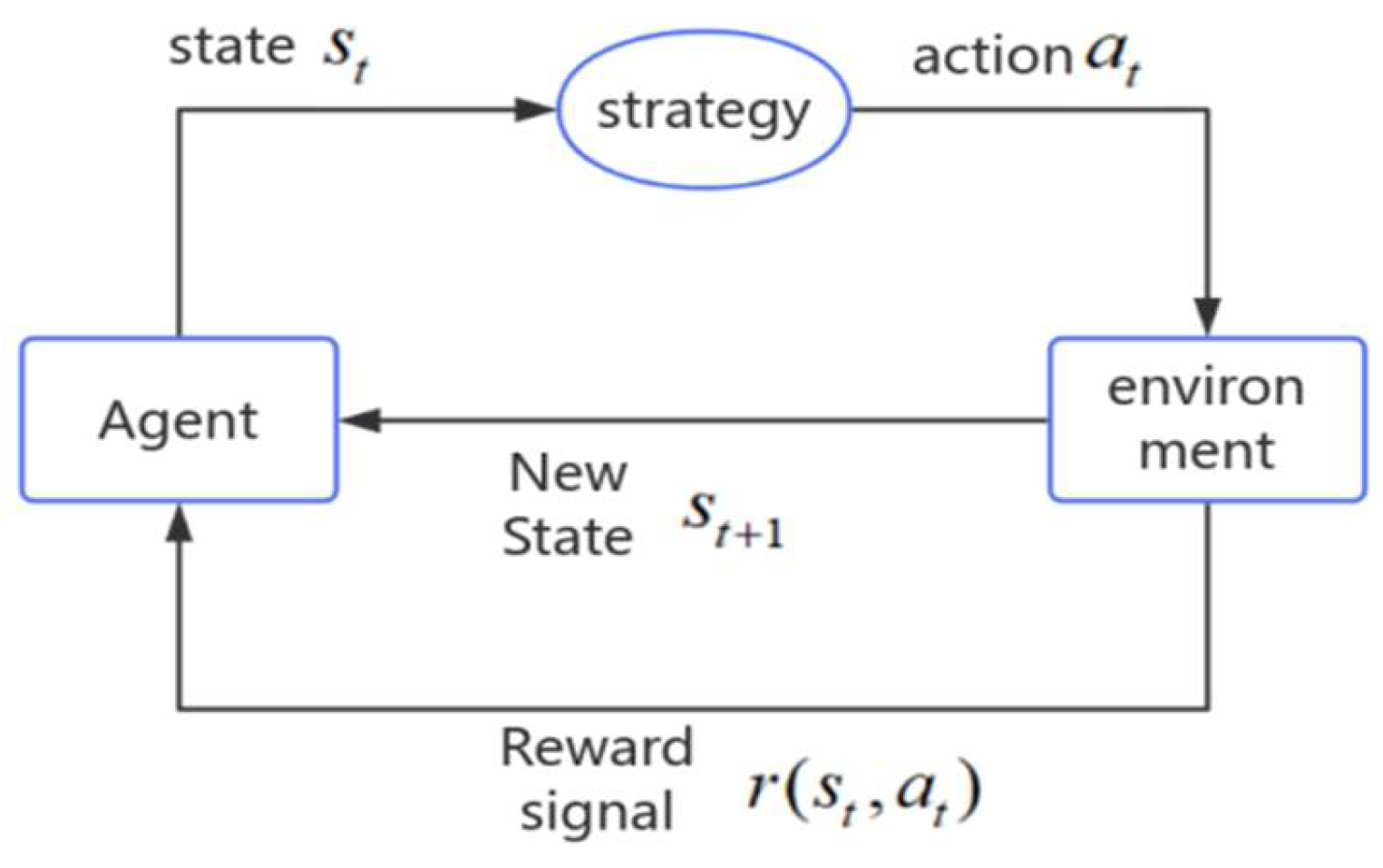

4. Energy Saving Driving Strategy Based on Deep Reinforcement Learning

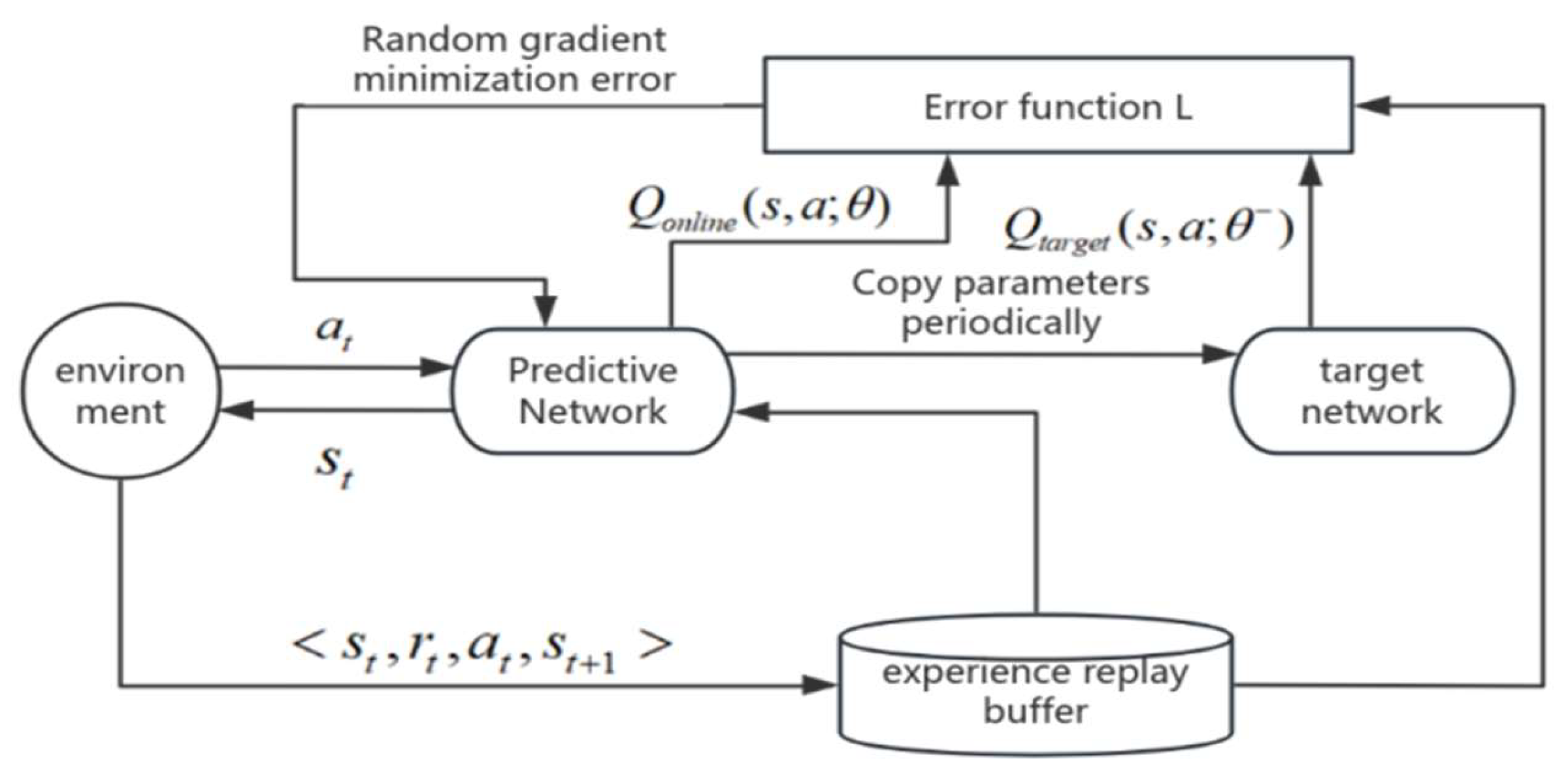

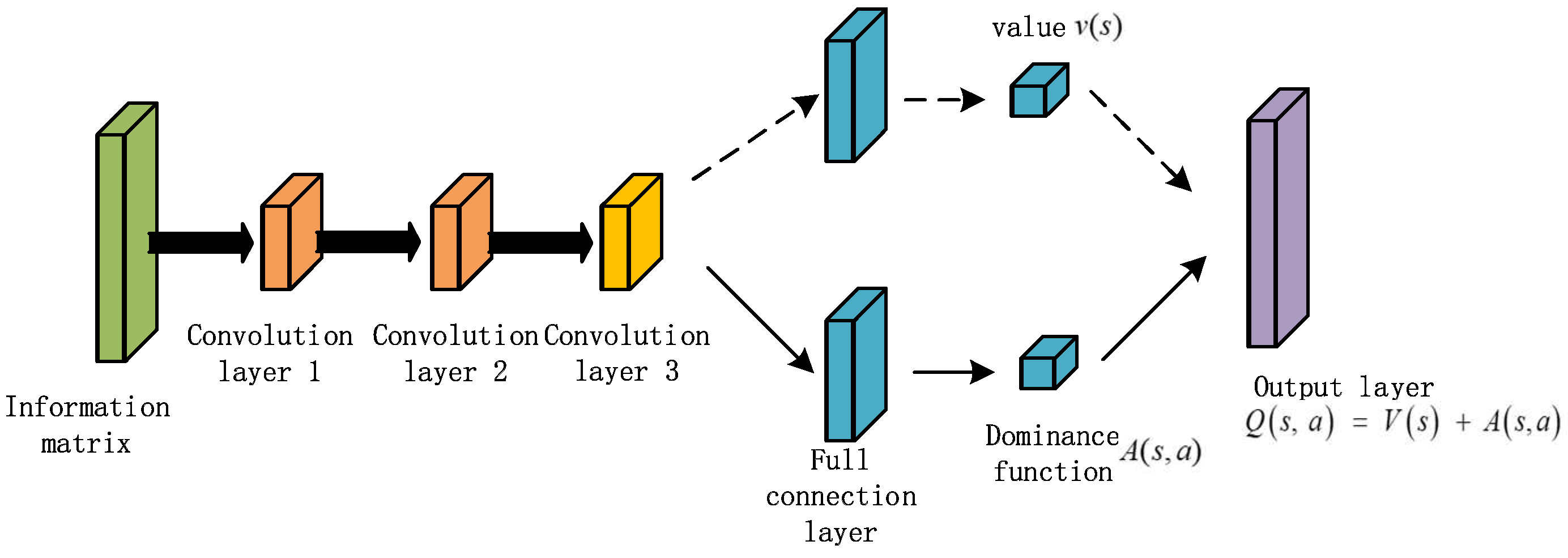

4.1. D3QN Algorithm

- (1) Double Q Network

- (2) Dueling Q Network
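The two components listed above can be sketched in a few lines. This is a minimal NumPy illustration of the standard dueling aggregation and the standard double-Q target, not the authors' implementation; the function names, array shapes, and the discount value used as a default are assumptions made for illustration.

```python
import numpy as np

def dueling_q(value, advantage):
    """Combine V(s) and A(s, a) into Q(s, a).

    Subtracting the mean advantage keeps V and A identifiable
    (the usual dueling-network aggregation).
    value: shape (batch, 1); advantage: shape (batch, n_actions).
    """
    value = np.asarray(value, dtype=float)
    advantage = np.asarray(advantage, dtype=float)
    return value + advantage - advantage.mean(axis=1, keepdims=True)

def double_q_target(reward, done, q_next_online, q_next_target, gamma=0.9):
    """Double-Q target: the online network selects the next action,
    the target network evaluates it.

    gamma=0.9 is an illustrative default, not a value confirmed by the text.
    """
    reward = np.asarray(reward, dtype=float)
    done = np.asarray(done, dtype=float)
    q_next_online = np.asarray(q_next_online, dtype=float)
    q_next_target = np.asarray(q_next_target, dtype=float)
    best_action = np.argmax(q_next_online, axis=1)           # selection
    rows = np.arange(q_next_target.shape[0])
    evaluated = q_next_target[rows, best_action]             # evaluation
    return reward + gamma * (1.0 - done) * evaluated         # no bootstrap at terminal states
```

Decoupling action selection from action evaluation is what reduces the over-estimation bias of a single Q network, while the dueling split lets the network learn how good a state is independently of the individual actions.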

4.2. Loss Function
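For orientation, the loss most commonly paired with D3QN is the mean-squared temporal-difference error against the double-Q target. The form below is that textbook version, written under the assumption of standard notation: θ for the online network parameters, θ⁻ for the target network parameters, γ for the discount factor, and 𝒟 for the experience replay buffer. It is not a transcription of the paper's own equation.

```latex
\begin{aligned}
y_t &= r_t + \gamma\, Q\bigl(s_{t+1},\ \arg\max_{a'} Q(s_{t+1}, a'; \theta);\ \theta^{-}\bigr),\\
L(\theta) &= \mathbb{E}_{(s_t, a_t, r_t, s_{t+1}) \sim \mathcal{D}}
\Bigl[\bigl(y_t - Q(s_t, a_t; \theta)\bigr)^{2}\Bigr].
\end{aligned}
```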

4.3. Reward Function
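As a rough illustration of how an energy term and a safety distance penalty can be combined into a single reward, consider the sketch below. Every name and weight in it (`fuel_rate`, `gap`, `safe_gap`, `w_fuel`, `w_safety`) is hypothetical; the paper's actual reward terms and coefficients are not reproduced here.

```python
def reward(fuel_rate, gap, safe_gap, w_fuel=1.0, w_safety=10.0):
    """Hypothetical reward: penalize fuel use, and penalize any shortfall
    of the headway `gap` below the required `safe_gap` (both in metres).

    The weights are illustrative placeholders, not calibrated values.
    """
    shortfall = max(0.0, safe_gap - gap)   # zero when the gap is already safe
    return -w_fuel * fuel_rate - w_safety * shortfall
```

With these placeholder weights, a vehicle that keeps a safe gap is rewarded only according to its fuel use, while closing to within the safe gap is penalized in proportion to the shortfall, so the agent cannot trade safety for energy savings cheaply.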

5. Simulation Experiment and Result Analysis

5.1. Simulation Experiment Design

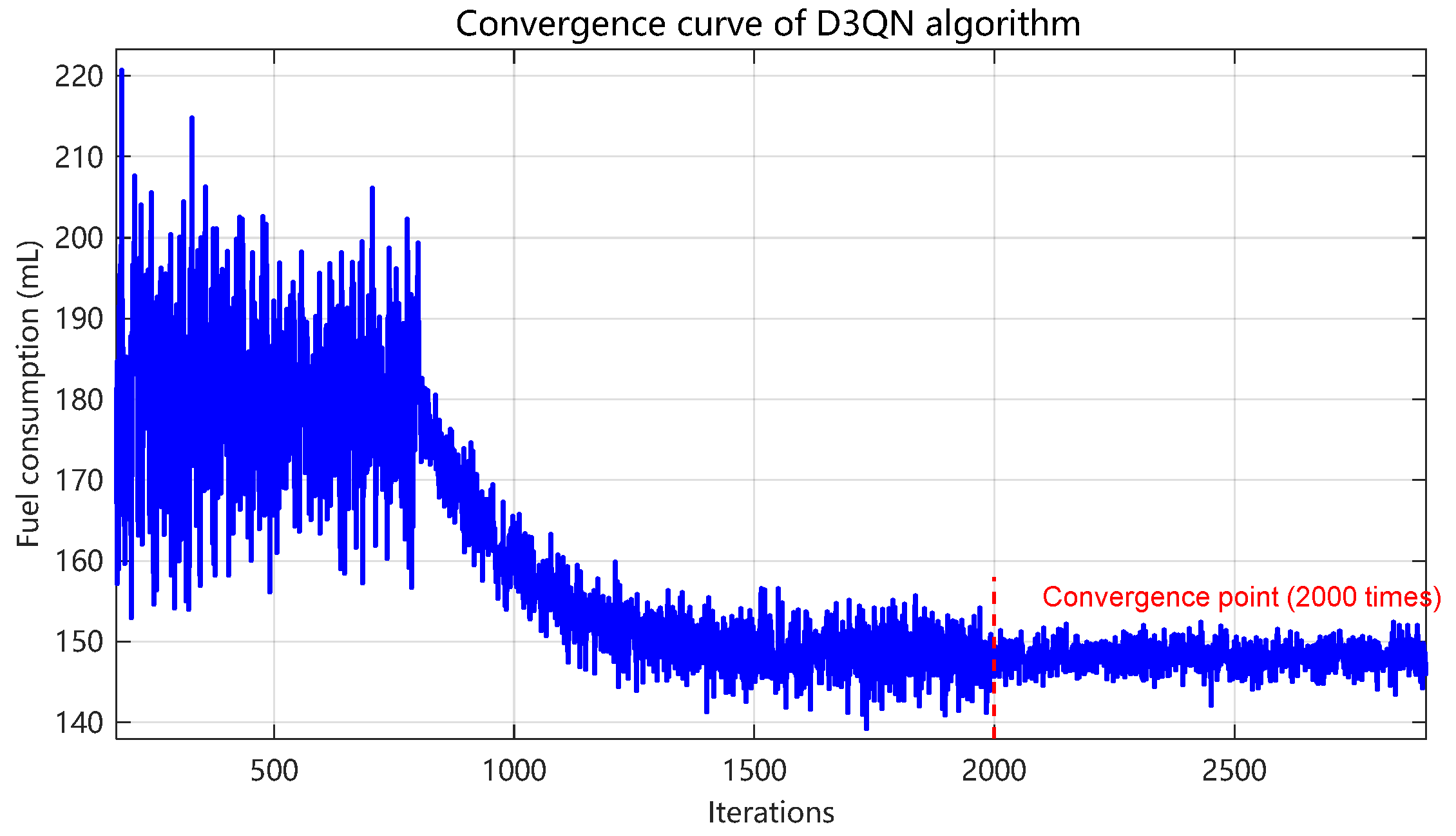

5.2. Solution of Energy Saving Driving Strategy

5.3. Simulation Result

6. Conclusions

- (1) Building on an in-depth analysis of road sections with frequent traffic flow changes and on Internet of Vehicles technology, an application framework for energy-saving driving strategies on such sections is constructed. At its core, connected and automated vehicles (CAVs) operate in a vehicle-road cooperative environment. Relying on the high-precision, low-latency real-time traffic information transmitted by the Internet of Vehicles platform, the cooperative decision-making module of the CAVs can generate globally or locally optimal lane-changing strategies, thereby jointly optimizing path planning and lane keeping/lane changing.

- (2) A car-following and lane-changing model for mixed traffic flow is constructed. By introducing a driver risk coefficient, an influence factor for the acceleration of the preceding vehicle, and a random slowdown probability model, it describes the behavioral differences between manually driven and intelligent vehicles. The unified energy consumption model integrates the energy consumption calculations for fuel, electric, and hybrid vehicles through a power type coefficient, which avoids the complexity of summing separate calculations for each vehicle type and provides a unified benchmark for cooperative optimization. Combined with real-time information exchange in the Internet of Vehicles environment (roadside detection, platform decision-making, vehicle cooperation), the model can dynamically adjust the driving strategy (such as stable car following or safe lane changing) according to the traffic state, balancing safety and energy conservation.

- (3) The D3QN deep reinforcement learning algorithm is introduced as the learning engine of the energy-saving driving strategy; it overcomes limitations of traditional reinforcement learning and improves the balance between energy saving and safety. By separating the state value function from the action advantage function, the algorithm prioritizes actions that save energy over the long term. It also introduces a safety distance penalty term and a random slowdown probability constraint, so that a CAV can pursue minimum fuel consumption while avoiding collision risk when guiding the hybrid fleet.

- (4)

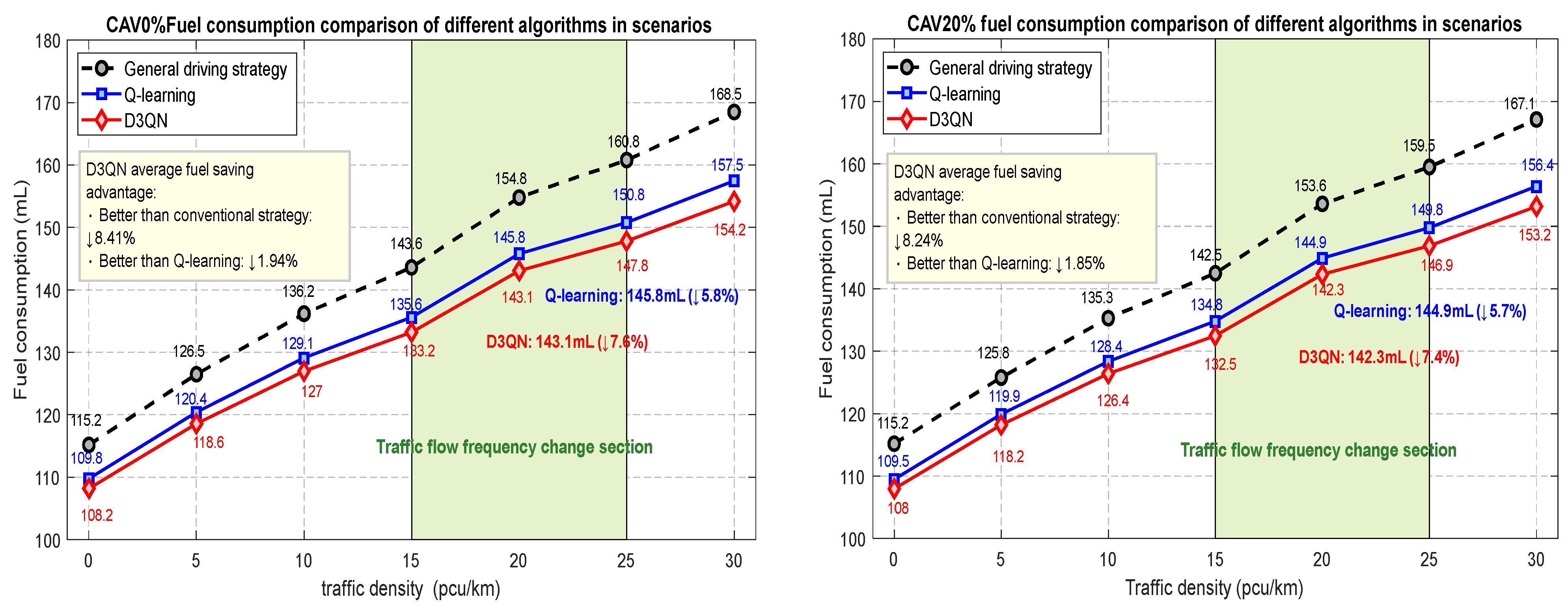

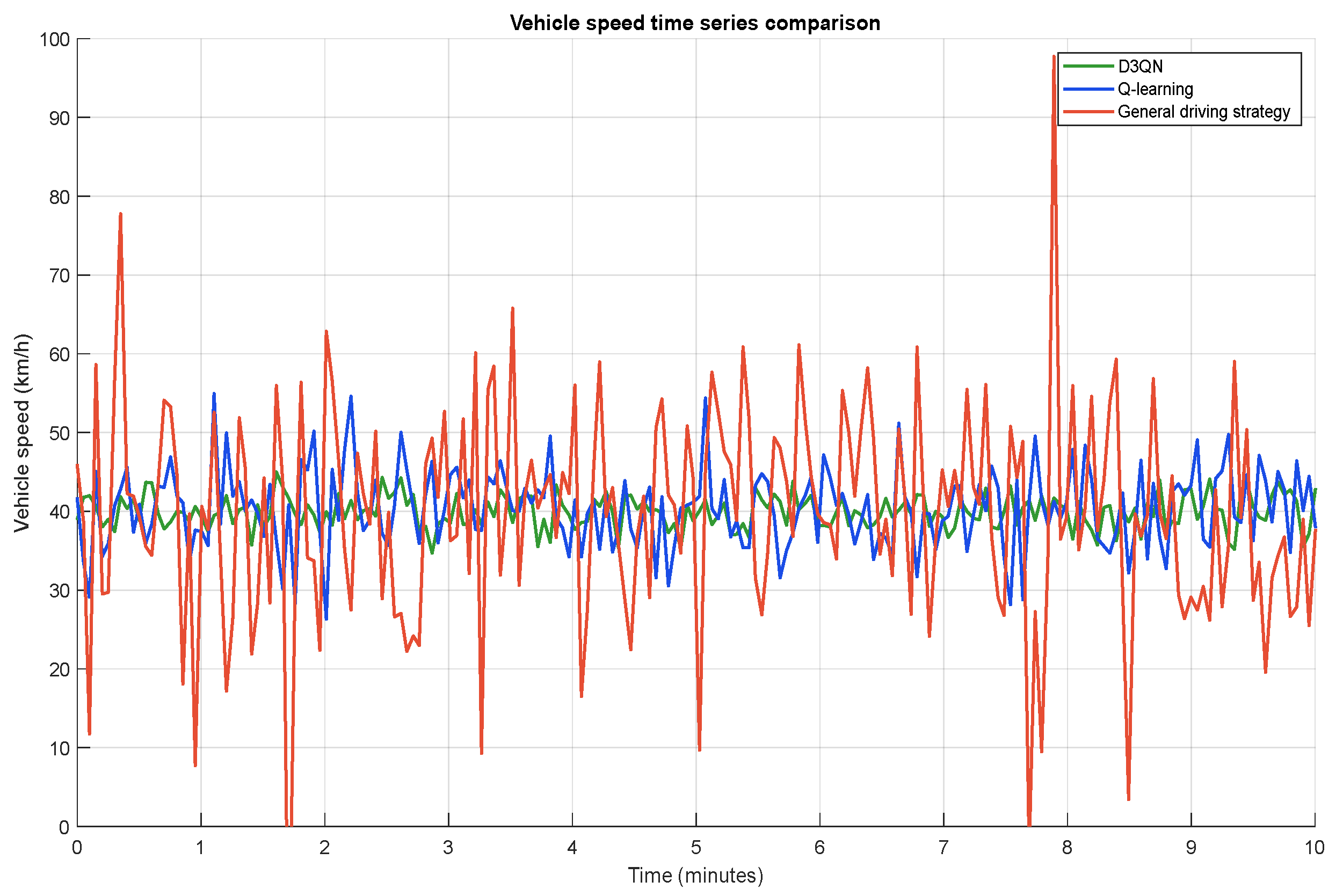

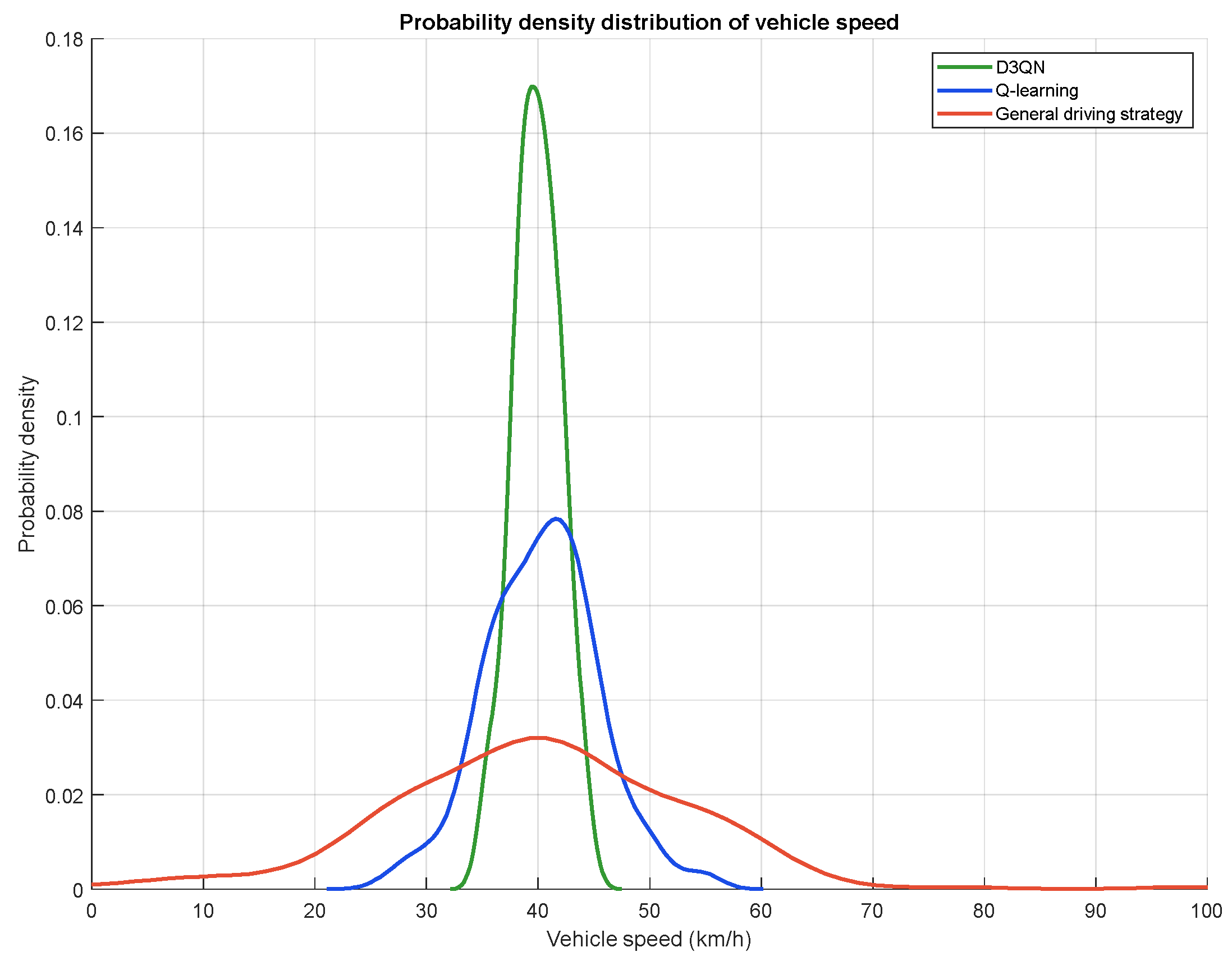

- The simulation scenario is from the intersection of Haigang road and Binhai Avenue in Qingdao to the intersection of dawangang road and Binhai Avenue. According to the simulation results, compared with the conventional strategy, D3QN strategy can save 8.41%~6.67% of fuel consumption under different proportions of intelligent connected vehicles (CAV); Compared with Q-learning strategy, it can still save 1.94%~1.5% of fuel consumption. And with the increase of traffic density, this energy-saving advantage is more obvious. When the traffic flow density increases from 0 to 30 pcu·km−1, the increase of fuel consumption with D3QN strategy is more gentle, indicating that it has stronger energy-saving adaptability in congestion scenarios. In terms of traffic flow stability, D3QN strategy can effectively reduce the speed fluctuation. Through the quantitative analysis of the standard deviation, the vehicle speed of the conventional strategy fluctuates violently in a short time. Although the Q-learning strategy has improved, the speed fluctuation of the D3QN strategy is the lowest. From the probability density distribution of vehicle speed, the D3QN strategy can centrally control the vehicle speed in the range of 30~50 km/h, significantly improve the stability of traffic flow, and reduce the energy consumption waste and traffic efficiency loss caused by frequent acceleration and deceleration.

- (5) The simulation in this study was conducted only on specific road sections in Qingdao, so the generality of the conclusions under different road topologies needs further verification. The current microscopic model also does not fully consider the interdependence between link costs and traffic flow. Future work will therefore focus on three points: (a) Establishing an integrated macro-micro model: coupling this microscopic simulation with a macroscopic transportation system model and evaluating the system-level net effect of energy-saving strategies through iterative feedback (for example, whether they trigger a shift in traffic volume). (b) Implementing data-driven model calibration: using floating car data and probe car data, together with advanced data fusion methods, to empirically calibrate the fundamental diagram of traffic flow, the car-following model parameters, and the energy consumption model parameters, improving the accuracy of the model in real environments. (c) Validating at an intelligent connected test field: following the example of advanced test fields such as Santander, obtaining high-quality trajectory, flow, and energy consumption data in real urban environments to validate and improve the practicality and robustness of the framework.
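The speed-stability comparison in conclusion (4) rests on two simple statistics: the standard deviation of a speed trace and the share of samples falling inside a target band. The sketch below shows how they might be computed; the function names are hypothetical and the two speed traces are synthetic values made up for illustration, not data from the simulation.

```python
import statistics

def speed_fluctuation(speeds_kmh):
    """Population standard deviation of a speed trace (km/h)."""
    return statistics.pstdev(speeds_kmh)

def share_in_band(speeds_kmh, low=30.0, high=50.0):
    """Fraction of samples whose speed lies within [low, high] km/h."""
    inside = sum(1 for v in speeds_kmh if low <= v <= high)
    return inside / len(speeds_kmh)

# Synthetic traces for illustration only (not simulation output).
steady = [40.0, 42.0, 38.0, 41.0, 39.0]
erratic = [20.0, 55.0, 30.0, 60.0, 35.0]

steady_std = speed_fluctuation(steady)
erratic_std = speed_fluctuation(erratic)
steady_share = share_in_band(steady)
erratic_share = share_in_band(erratic)
```

A lower standard deviation together with a higher in-band share corresponds to the smoother, more concentrated speed profile that the text attributes to the D3QN strategy.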

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Dai, S.; Qu, D.; Meng, Y.; Yang, Y.; Duan, Q. Evolutionary Game Mechanisms of Lane Changing for Intelligent Connected Vehicles on Traffic Flow Frequently Changing Sections. Complex Syst. Complex. Sci. 2024, 21, 128–135+153.

- Qu, D.; Liu, H.; Yang, Z.; Dai, S. Dynamic allocation mechanism and model of traffic flow in bottleneck section based on vehicle infrastructure cooperation. J. Jilin Univ. (Eng. Technol. Ed.) 2024, 54, 2187–2196.

- Wang, Z.; Wu, G.; Barth, M.J. Cooperative eco-driving at signalized intersections in a partially connected and automated vehicle environment. IEEE Trans. Intell. Transp. Syst. 2019, 21, 2029–2038.

- Xu, S.; Li, S.; Deng, K.; Li, S.; Cheng, B. A unified pseudospectral computational framework for optimal control of road vehicles. IEEE/ASME Trans. Mechatron. 2014, 20, 1499–1510.

- Sun, C.; Li, X.; Hu, H.; Yu, W. Eco-driving strategy for intelligent connected vehicles considering secondary queuing. J. Transp. Eng. Inf. 2023, 21, 92–102.

- Li, T.; Xie, B.; Liu, T.; Chen, H.; Wang, Z. A Rule-based Energy-saving Driving Strategy for Battery Electric Bus at Signalized Intersections. J. Transp. Syst. Eng. Inf. Technol. 2024, 24, 139–150.

- Dong, H.; Zhuang, W.; Chen, B. Enhanced eco-approach control of connected electric vehicles at signalized intersection with queue discharge prediction. IEEE Trans. Veh. Technol. 2021, 70, 5457–5469.

- Lakshmanan, V.K.; Sciarretta, A.; El Ganaoui-Mourlan, O. Cooperative eco-driving of electric vehicle platoons for energy efficiency and string stability. IFAC-Pap. 2021, 54, 133–139.

- Kim, Y.; Guanetti, J.; Borrelli, F. Compact cooperative adaptive cruise control for energy saving: Air drag modelling and simulation. IEEE Trans. Veh. Technol. 2021, 70, 9838–9848.

- Chen, J.; Li, S.E.; Tomizuka, M. Interpretable end-to-end urban autonomous driving with latent deep reinforcement learning. IEEE Trans. Intell. Transp. Syst. 2021, 23, 5068–5078.

- Saxena, D.M.; Bae, S.; Nakhaei, A.; Fujimura, K.; Likhachev, M. Driving in dense traffic with model-free reinforcement learning. In Proceedings of the IEEE International Conference on Robotics and Automation, Paris, France, 31 May–31 August 2020; pp. 5385–5392.

- Feng, Y.; Jing, S.; Hui, F.; Zhao, X.; Liu, J. Deep reinforcement learning-based lane-changing trajectory planning method of intelligent and connected vehicles. J. Automot. Saf. Energy 2022, 13, 705–717.

- Jiang, H.; Zhang, J.; Zhang, H.; Hao, W.; Ma, C. A Multi-objective Traffic Control Method for Connected and Automated Vehicle at Signalized Intersection Based on Reinforcement Learning. J. Transp. Inf. Saf. 2024, 42, 84–93.

- Guo, Q.; Angah, O.; Liu, Z.; Ban, X. Hybrid deep reinforcement learning based eco-driving for low-level connected and automated vehicles along signalized corridor. Transp. Res. Part C Emerg. Technol. 2021, 124, 102980.

- Zeng, X.; Zhu, M.; Guo, K.; Wang, Y.; Feng, D. Optimization of Energy-Saving Driving Strategy on Urban Ecological Road with Mixed Traffic Flows. J. Tongji Univ. (Nat. Sci.) 2024, 52, 1909–1918.

- Alonso, B.; Musolino, G.; Rindone, C.; Vitetta, A. Estimation of a fundamental diagram with heterogeneous data sources: Experimentation in the city of Santander. ISPRS Int. J. Geo-Inf. 2023, 12, 418.

- Ying, P.; Zeng, X.; Song, H.; Shen, T.; Yuan, T. Energy-efficient train operation with steep track and speed limits: A novel Pontryagin’s maximum principle-based approach for adjoint variable discontinuity cases. IET Intell. Transp. Syst. 2021, 15, 1183–1202.

| Parameter | Numerical Value |
|---|---|
| road length/m | 1610 |
| number of lanes | 3 |
| CAV length/m | 5 |
| car length/m | 5 |
| speed limit of road section/(km·h−1) | 30 |
| deceleration/(m·s−2) | 0.6 |
| maximum deceleration/(m·s−2) | 1 |
| starting position of road section/m | 415 |
| end position of road section/m | 125 |
| traffic density/(pcu·km−1) | 0~30 |
| random slowdown probability | 0.2~0.5 |
| phase interval/m | 10 |
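To make the role of the random slowdown probability concrete, the sketch below applies a generic Nagel-Schreckenberg-style speed update with a one-second time step. It is an assumption-laden illustration rather than the paper's car-following model: the function name, the acceleration argument, and the rule that the speed may not exceed the gap are all choices made here for the example.

```python
import random

def update_speed(speed, gap, v_max, accel, decel, p_slow, u):
    """One speed update (m/s) for a following vehicle, 1 s time step.

    speed  : current speed in m/s
    gap    : distance to the preceding vehicle in m
    v_max  : speed limit in m/s
    accel  : acceleration in m/s^2 (hypothetical parameter)
    decel  : deceleration applied on a random slowdown, in m/s^2
    p_slow : random slowdown probability
    u      : uniform random draw in [0, 1), supplied by the caller
    """
    v = min(speed + accel, v_max)   # accelerate toward the limit
    v = min(v, gap)                 # do not cover more than the gap in one step
    if u < p_slow:                  # random slowdown
        v = max(v - decel, 0.0)
    return v

# Example call using the 30 km/h limit and 0.6 m/s^2 deceleration from the table;
# the acceleration of 1.0 m/s^2 is an illustrative guess.
next_speed = update_speed(5.0, 100.0, 30.0 / 3.6, 1.0, 0.6, 0.3, random.random())
```

Passing the random draw `u` in explicitly keeps the rule deterministic for a given draw, which makes the slowdown branch easy to test and reproduce.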

| Parameter | Numerical Value |
|---|---|
| number of training episodes | 500 |
| pre-experiment steps | 500 |
| mini-batch size | 100 |
| | 0.90 |
| | 0.001 |
| | 0.5 |
| parameter update cycle | 50 steps |
| network layers | 3 |
| experience replay buffer size | 500 |
| state matrix size | 1 × 12 × 1 + 1 |
| action space size | 3 × 3 |
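Three of the settings above (a replay buffer of 500 transitions, batches of 100, and a parameter update every 50 steps) describe the usual experience replay and target-network bookkeeping. The sketch below shows one way to wire them together; the class and function names are hypothetical, and reading the 50-step cycle as a target-network synchronization period is an assumption.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-size experience replay buffer; oldest transitions are dropped first."""

    def __init__(self, capacity=500):
        self.storage = deque(maxlen=capacity)

    def push(self, transition):
        """Store one (state, action, reward, next_state, done) tuple."""
        self.storage.append(transition)

    def ready(self, batch_size=100):
        """True once enough transitions exist to draw a full batch."""
        return len(self.storage) >= batch_size

    def sample(self, batch_size=100):
        """Draw a batch uniformly without replacement.

        Raises ValueError if fewer than batch_size transitions are stored.
        """
        return random.sample(list(self.storage), batch_size)

    def __len__(self):
        return len(self.storage)

def should_sync_target(step, period=50):
    """Assumed rule: copy online weights to the target network every `period` steps."""
    return step > 0 and step % period == 0
```

Sampling uniformly from a buffer breaks the temporal correlation between consecutive driving states, and the periodic synchronization keeps the bootstrap target fixed between updates.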

| Traffic Flow Density/(pcu·km−1) | Strategy | CAV 0%: Fuel Use | CAV 0%: Effect | CAV 20%: Fuel Use | CAV 20%: Effect | CAV 40%: Fuel Use | CAV 40%: Effect | CAV 60%: Fuel Use | CAV 60%: Effect | CAV 80%: Fuel Use | CAV 80%: Effect | CAV 100%: Fuel Use | CAV 100%: Effect |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Q-learning | 109.80 | 4.69% | 109.50 | 4.95% | 109.30 | 5.12% | 109.10 | 5.30% | 108.90 | 5.47% | 108.70 | 5.64% |
| 0 | D3QN | 108.20 | 6.08% | 108.00 | 6.25% | 107.80 | 6.42% | 107.60 | 6.60% | 107.40 | 6.77% | 107.20 | 6.94% |
| 5 | Q-learning | 120.40 | 4.82% | 119.90 | 4.69% | 119.50 | 4.63% | 119.10 | 4.57% | 118.70 | 4.50% | 118.30 | 4.44% |
| 5 | D3QN | 118.60 | 6.25% | 118.20 | 6.04% | 117.80 | 5.99% | 117.40 | 5.93% | 117.00 | 5.87% | 116.60 | 5.82% |
| 10 | Q-learning | 129.10 | 5.21% | 128.40 | 5.10% | 127.90 | 5.05% | 127.40 | 5.00% | 126.90 | 4.94% | 132.90 | 4.89% |
| 10 | D3QN | 127.00 | 6.76% | 126.40 | 6.58% | 125.90 | 6.53% | 125.40 | 6.49% | 124.90 | 6.44% | 126.40 | 6.40% |
| 15 | Q-learning | 135.60 | 5.57% | 134.80 | 5.40% | 134.20 | 5.29% | 133.60 | 5.18% | 133.00 | 5.07% | 132.40 | 4.95% |
| 15 | D3QN | 133.20 | 7.24% | 132.50 | 7.02% | 131.90 | 6.91% | 131.30 | 6.81% | 130.70 | 6.71% | 130.10 | 6.60% |
| 20 | Q-learning | 145.80 | 5.81% | 144.90 | 5.67% | 144.10 | 5.63% | 143.30 | 5.60% | 142.50 | 5.57% | 144.70 | 5.53% |
| 20 | D3QN | 143.10 | 7.56% | 142.30 | 7.36% | 141.60 | 7.27% | 140.90 | 7.18% | 140.20 | 7.09% | 139.50 | 7.00% |
| 25 | Q-learning | 150.80 | 6.22% | 149.80 | 6.08% | 148.90 | 6.06% | 148.00 | 6.03% | 147.10 | 6.01% | 146.20 | 5.98% |
| 25 | D3QN | 147.80 | 8.08% | 146.90 | 7.90% | 146.10 | 7.82% | 145.30 | 7.75% | 144.50 | 7.67% | 143.70 | 7.59% |
| 30 | Q-learning | 157.50 | 6.53% | 156.40 | 6.40% | 155.50 | 6.33% | 154.60 | 6.25% | 153.70 | 6.17% | 152.80 | 6.09% |
| 30 | D3QN | 154.20 | 8.52% | 153.20 | 8.32% | 152.30 | 8.25% | 151.40 | 8.18% | 150.50 | 8.11% | 149.60 | 8.05% |
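The "Effect" column can be read as the fractional fuel saving relative to the conventional strategy, although that reading is an assumption since the baseline fuel use is not listed in the table. Under that assumption, the sketch below backs out the implied baseline from the first row pair (density 0, CAV ratio 0%) and computes the saving of D3QN relative to Q-learning; the helper names are hypothetical.

```python
def saving(reference_fuel, fuel):
    """Fractional fuel saving of `fuel` relative to `reference_fuel`."""
    return (reference_fuel - fuel) / reference_fuel

def implied_baseline(fuel, effect):
    """Baseline fuel use implied by a reported saving: fuel = baseline * (1 - effect)."""
    return fuel / (1.0 - effect)

# Values taken from the table at density 0 and CAV ratio 0%.
q_fuel, q_effect = 109.80, 0.0469
d_fuel, d_effect = 108.20, 0.0608

baseline_from_q = implied_baseline(q_fuel, q_effect)
baseline_from_d = implied_baseline(d_fuel, d_effect)
d3qn_vs_q = saving(q_fuel, d_fuel)
```

Both rows imply nearly the same conventional-strategy fuel use (about 115.2), which supports the assumed reading of the column, and the D3QN saving over Q-learning for this cell comes out at roughly 1.5%.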


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, M.; Qu, D.; Wang, K.; Chen, Y.; Zhan, J. Optimization Method of Energy Saving Strategy for Networked Driving in Road Sections with Frequent Traffic Flow Changes. Vehicles 2025, 7, 118. https://doi.org/10.3390/vehicles7040118