Abstract

The World Health Organization reports approximately 1.35 million fatalities annually due to road traffic accidents, with pedestrians constituting 23% of these deaths. This highlights the critical need to enhance pedestrian safety, especially given the significant role human error plays in road accidents. Autonomous vehicles present a promising solution to mitigate these fatalities by improving road safety through advanced prediction of pedestrian behavior. With the autonomous vehicle market projected to grow substantially and offer various economic benefits, including reduced driving costs and enhanced safety, understanding and predicting pedestrian actions and intentions is essential for integrating autonomous vehicles into traffic systems effectively. Despite significant advancements, replicating human social understanding in autonomous vehicles remains challenging, particularly in predicting the complex and unpredictable behavior of vulnerable road users like pedestrians. Moreover, the inherent uncertainty in pedestrian behavior adds another layer of complexity, requiring robust methods to quantify and manage this uncertainty effectively. This review provides a structured and in-depth analysis of pedestrian intention prediction techniques, with a unique focus on how uncertainty is modeled and managed. We categorize existing approaches based on prediction duration, feature type, and model architecture, and critically examine benchmark datasets and performance metrics. Furthermore, we explore the implications of the two uncertainty types, epistemic and aleatoric, and discuss their integration into autonomous vehicle systems. By synthesizing recent developments and highlighting the limitations of current methodologies, this paper aims to advance the understanding of pedestrian intention prediction and contribute to safer and more reliable autonomous vehicle deployment.

1. Introduction

According to the World Health Organization (WHO) report on road safety [1], approximately 1.35 million people are fatally injured in road crashes each year. Pedestrians account for 23% of all road traffic deaths globally, which is a disturbingly high percentage. As the most vulnerable road users (VRUs), pedestrians are essential participants in traffic and require robust protection. Given that human error is a significant contributor to most road traffic accidents [2], autonomous vehicles (AVs) have the potential to reduce these fatalities and improve road safety.

Autonomous vehicle technology offers numerous economic benefits, including reduced driving costs, improved fuel efficiency, and enhanced safety. By removing the human driver from the control loop, the technology aims to reduce human error and lower accident rates, creating a safer traffic environment for all road users, including pedestrians, and a less stressful experience for vehicle occupants. Moreover, AVs allow passengers to engage in productive or leisure activities during travel without needing to focus on road conditions [3,4].

However, a major challenge for AVs is replicating the human capacity to understand social cues and predict the behavior of VRUs, such as pedestrians, who are not protected by vehicle safety features. To ensure pedestrian safety, AVs must accurately anticipate pedestrian intentions early enough to allow for safe maneuvering. This task is challenging due to the high level of uncertainty in pedestrian behavior, which is influenced by factors such as demographics, traffic conditions, and the environment [5]. To account for this uncertainty, AVs often adopt safe driving strategies, such as reducing speed and avoiding complex interactions, which, while enhancing safety, can also disrupt traffic flow and reduce efficiency [4]. Furthermore, pedestrian intention is often shaped by the physical design of the road, visual cues like traffic lights and signage, environmental conditions like lighting or weather, and even local customs around jaywalking or right-of-way expectations. These external variables introduce added complexity to pedestrian modeling.

While action and trajectory prediction methods [6,7,8,9] can provide insights into pedestrian movements, they often fail to capture the underlying intentions due to the uncertainty and complexity of human behavior. A more accurate prediction of pedestrian intentions requires a deeper understanding of the pedestrian’s context, past behavior, and environmental factors [4].

This paper contributes a structured and comprehensive review of pedestrian intention prediction (PIP) methods, with an emphasis on the integration of uncertainty modeling. Unlike existing surveys, we offer a multi-dimensional classification based on prediction duration, feature types, and modeling approaches. Furthermore, we provide a critical analysis of how uncertainty is modeled and managed in prediction tasks, bridging a gap between theoretical modeling and real-world applicability in AV contexts.

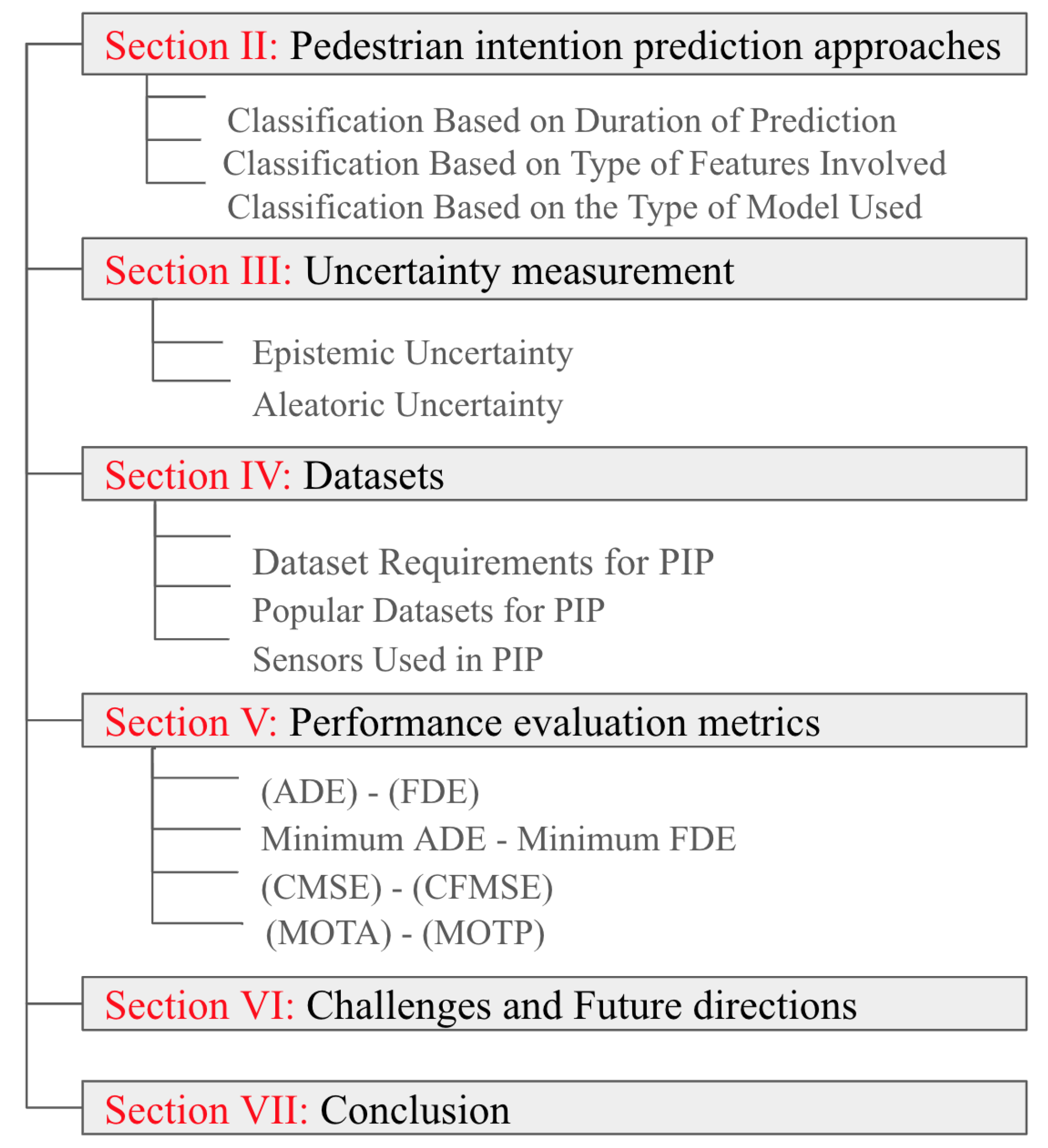

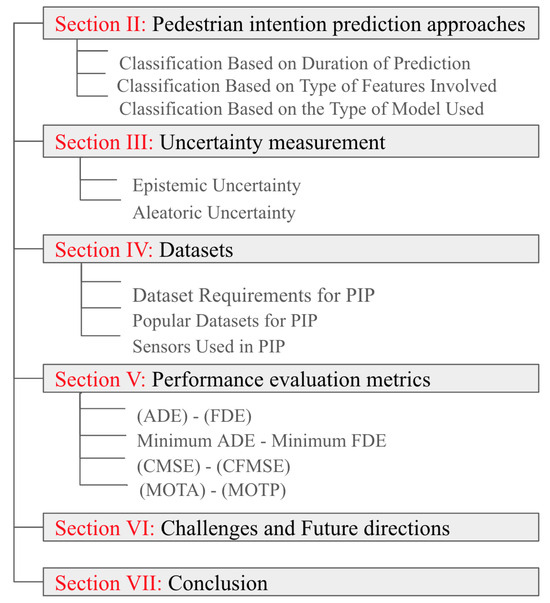

The paper is organized as follows: Section 2 reviews existing surveys on pedestrian intention prediction methods and highlights the role of uncertainty in these models. Section 3 classifies and analyzes various approaches for predicting pedestrian behavior, focusing on trajectory prediction and intention recognition models. Section 4 discusses uncertainty in PIP, exploring the different types of uncertainty (e.g., epistemic and aleatoric) and how they are handled in PIP. Section 5 describes key datasets and sensors used in PIP tasks. Section 6 discusses evaluation metrics for assessing the performance of PIP models. Section 7 discusses the challenges in PIP, such as the complexity of human behavior, the limitations of current models, and the integration of uncertainty. Additionally, it explores future research directions to address these challenges. Finally, Section 8 concludes the study by summarizing the main findings and suggesting further advancements in the field. The organization of this paper is visually outlined in Figure 1.

Figure 1.

A visual representation of the paper’s structure, which outlines the key sections and their organization.

2. Related Work

In the domain of AVs, predicting the intentions and behaviors of pedestrians is crucial for ensuring safe navigation in complex environments. In the following, we review key papers on pedestrian intention prediction and categorize them for a structured overview.

2.1. Pedestrian Intention and Behavior Estimation

Sharma et al. [4] comprehensively surveyed techniques for predicting pedestrian intentions in the context of AVs, emphasizing the challenges posed by the variability in pedestrian behavior and the social norms influencing road scenarios. They categorized prediction methods, reviewed datasets capturing complex human behavior in traffic environments, and conducted a comparative analysis of these approaches using benchmark datasets and evaluation metrics. Additionally, the authors identified key challenges and proposed directions for future research to enhance PIP and support safer AV implementation.

Ridel et al. [10] discuss the critical challenge of anticipating pedestrian actions for intelligent vehicles, highlighting its importance for safety. The authors review the complexities of predicting pedestrian behavior, which is influenced by diverse factors such as movement variability, occlusions, distractions, and external interactions. The paper surveys existing approaches and advancements in pedestrian intention estimation, noting progress in predicting positions shortly before crossing but emphasizing limitations in accurately forecasting when pedestrians will pause at curbs.

2.2. Trajectory Prediction in Crowd Scenarios

Korbmacher et al. [11] explored the challenges of predicting pedestrian trajectories in crowd scenarios, focusing on the influences of scene topology and pedestrian interactions. They reviewed classical knowledge-based models alongside recent deep learning approaches, driven by advancements in data science and collection technologies. Their comparison highlighted the higher accuracy of deep learning methods for local trajectory prediction while questioning the continued relevance of knowledge-based models. However, the study noted limitations in deep learning methods for large-scale simulations and capturing collective dynamics. The authors proposed hybrid approaches, combining the strengths of both methodologies, as a promising direction to address issues like the lack of explainability in deep learning models and to enhance predictive capabilities.

Sighencea et al. [12] reviewed recent advancements in pedestrian trajectory prediction, a critical aspect of computer vision in the automotive industry, particularly for advanced driver assistance systems and AVs. The study explored deep learning (DL)-based methods, noting their dependence on enhanced sensor systems and modern signal processing. It also provided an overview of key datasets, evaluation metrics, and practical applications. While noting significant progress, the authors identified research gaps and proposed future directions to address the remaining challenges in pedestrian trajectory prediction.

Galvão et al. [13] reviewed state-of-the-art algorithms designed to enhance behavior prediction systems in AVs, with a focus on predicting the trajectories and intentions of both pedestrians and vehicles. Despite significant advancements, AV systems remain limited, as evidenced by collision and near-miss reports involving AVs, such as those by Google. The authors highlighted that improving prediction capabilities is crucial for preventing such incidents. Their review synthesized findings from previous literature, recent studies, and experiments conducted on established datasets, offering insights into current progress and areas for improvement in AV behavior prediction.

Kim et al. [14] introduce a Multiple Stakeholder Perspective Model (MSPM) to enhance pedestrian trajectory prediction by incorporating both driver and pedestrian viewpoints. Their model integrates data from virtual reality simulations, achieving a 4.48% reduction in short- and mid-term trajectory errors and an 11.14% reduction in long-term errors. The study highlights the importance of head orientation data for accurate trajectory forecasting.

2.3. VRU Intention Estimation and Safety

Bighashdel et al. [15] focus on the analysis of VRU behavior, emphasizing its significance for applications like video surveillance and autonomous driving. They highlight the complexity of VRU movements, which has led to the development of diverse predictive models in the literature. Their paper provides a comprehensive review of path prediction methods, categorizes these approaches from multiple perspectives, and proposes a framework to enhance the understanding of various aspects of VRU path prediction challenges.

Ahmed et al. [16] review recent advancements in pedestrian and cyclist detection and intent estimation to enhance the safety of AVs. They emphasize the importance of understanding the intentions of VRUs to prevent accidents. The study explores deep learning (DL) techniques, including Fast R-CNN, Faster R-CNN, and SSD, which have significantly advanced pedestrian detection. They highlight the growing feasibility of DL due to advancements in hardware and its application in tracking, motion modeling, and pose estimation for intent prediction. While substantial progress has been made in pedestrian detection using vision-based approaches, the authors note a need for further focus on cyclist detection. Additionally, they recommend exploring sensor fusion and advanced intent estimation methods to improve VRU safety.

Xue et al. [17] explore the complexities of estimating VRU intentions by analyzing scene dynamics from the ego-vehicle’s perspective. Their multimodal PIP framework, which uses attention mechanisms and a novel MHAAdjMat-based graph convolutional network (GCN), outperforms state-of-the-art models, predicting pedestrian crossing intent with high accuracy up to 2.5 s before the event.

Rasouli et al. [18] analyze various factors influencing VRU behavior and their interconnected effects on intentions. They emphasize a multimodal approach to understanding complex human psychology, enhancing AI-driven vehicles’ scene reasoning and decision-making. The study also addresses the challenges in interpreting social interactions and their impact on VRU behavior.

Pandey et al. [19] explore the challenges AVs face in achieving full autonomy, particularly in urban environments. They emphasize the importance of effective communication and understanding the intentions of road users, including pedestrians, as critical for safe interactions. The authors highlight the intricacy of verbal and non-verbal social cues and stress that correctly identifying whether a pedestrian intends to cross the road can be a life-and-death decision. The paper discusses challenges in pedestrian-autonomous vehicle interactions and proposes a novel architecture for intention identification, integrating pedestrian detection, pose estimation, and classification algorithms. Additionally, the authors review various methods for these tasks, aiming to enhance safety and interaction norms in urban driving scenarios.

Zou et al. [20] explore how roadway centerline designs and AV signaling affect pedestrian behavior at unmarked midblock crossings. Using VR simulations, they find that roadway features and AV signals significantly impact waiting and crossing times. Older pedestrians tend to wait longer, and past behaviors have limited effects. The findings suggest improvements in AV communication strategies and roadway designs to enhance pedestrian safety.

2.4. Scene Understanding and Event Reasoning

Xue et al. [21] examine the progression towards full autonomy in vehicles, emphasizing the limitations of traditional low-level vision tasks like detection, tracking, and segmentation for understanding traffic scenes. They argue that comprehensive scene understanding requires insights into the past, present, and future behaviors of traffic participants. The paper explores autonomous driving through the lens of event reasoning, reviewing literature and advancements in scene representation, event detection, and intention prediction. The authors also discuss current challenges and propose potential solutions to bridge gaps in achieving fully automated driving systems.

Zhou and Zeng [22] propose a multi-task model for AVs that handles pedestrian detection, tracking, and attribute recognition. Their model, which operates in two stages, uses low-resolution images for initial tasks and high-resolution images for detailed attribute detection. The approach, trained on multiple datasets, shows significant resource savings and accurate detection.

2.5. Specialized Approaches and Case Studies

Haque et al. [23] investigate pedestrian signal violations at urban intersections in New Delhi, using video data from 11 sites. Their study identifies key factors like pedestrian speed and waiting time, modeling these behaviors with an ANN that achieved 85% accuracy, surpassing traditional regression models. Recommendations include site-specific facility design and shorter pedestrian signals.

Razali et al. [24] propose a vision-based system for real-time pedestrian localization, body pose estimation, and intention prediction using a neural network operating at 5 fps. Their model, which utilizes a 5-block ResNet-50 network with parallel convolutional heads, achieves a 20% improvement in intention prediction precision. Despite this, the multitask approach presents trade-offs, such as a minor reduction in pose detection performance. The source code is publicly available for integration into ADAS or traffic light management systems.

Chen et al. [25] examine drivers’ recognition of pedestrian crossing intentions using eye-tracking data. They find that experienced drivers are more conservative and engage in more detailed processing. Both experienced and novice drivers are quicker to detect and respond to pedestrians intending to cross, focusing on the upper body for intention recognition. The study outlines a two-phase intention recognition process involving initial detection and detailed evaluation.

2.6. Use of Historical Road Incident Data for Road Redesign Potential

Gkyrtis and Pomoni [26] explore the use of historical road incident data to assess the potential effectiveness of various road redesigns in improving traffic safety. Their data-driven methodology analyzes accident reports and historical traffic data to identify patterns and correlations between road features (e.g., road geometry, signage, lighting) and incident occurrence. They employ advanced statistical modeling techniques to quantify how specific design changes could impact accident rates, providing a valuable framework for future infrastructure improvements.

The findings of their study indicate that certain road features, such as improved lighting, better signage, and revised intersections, significantly reduce the likelihood of road incidents. Particularly, their analysis demonstrated that roads with poor visibility and complex intersections were prone to higher accident rates, suggesting that addressing these issues could enhance pedestrian safety.

However, they primarily focused on specific geographical regions, which may reduce the generalizability of the findings to other urban settings. Additionally, the authors note that driver behavior and external environmental factors (e.g., weather conditions or vehicle types) were not fully integrated into their analysis, which could affect the robustness of the results. Despite this, their study remains valuable for understanding how infrastructure design can be optimized to mitigate road incidents, particularly in urban environments.

This research ties closely with PIP efforts, as pedestrian safety is a critical component of overall road safety. Insights from Gkyrtis and Pomoni’s work suggest that enhancing road design could significantly lower the risk of accidents involving pedestrians, making these findings highly relevant to the development of more effective PIP models. For instance, pedestrian prediction algorithms could integrate factors like road geometry and signage quality into their models to better anticipate pedestrian movements and enhance safety measures in AVs. Moreover, their work highlights the importance of considering road design when implementing safety protocols for VRUs, which directly informs the decision-making processes of AV systems in complex traffic scenarios.

2.7. Unique Contributions of This Survey

Several existing surveys have explored aspects of PIP, but they often focus on isolated components without providing a holistic analysis of the field: some concentrate on model architectures without addressing sensor modalities, while others overlook the importance of datasets, which limits their applicability. In contrast, this survey covers a wide range of approaches and identifies critical gaps in the literature. We also emphasize the role of uncertainty in PIP, a crucial factor often neglected in previous studies. By integrating discussions on methodologies, sensor modalities, datasets, and uncertainty estimation, this survey provides a well-rounded perspective to guide future research in developing more reliable and safety-oriented PIP models for AVs.

3. Pedestrian Intention Prediction Approaches

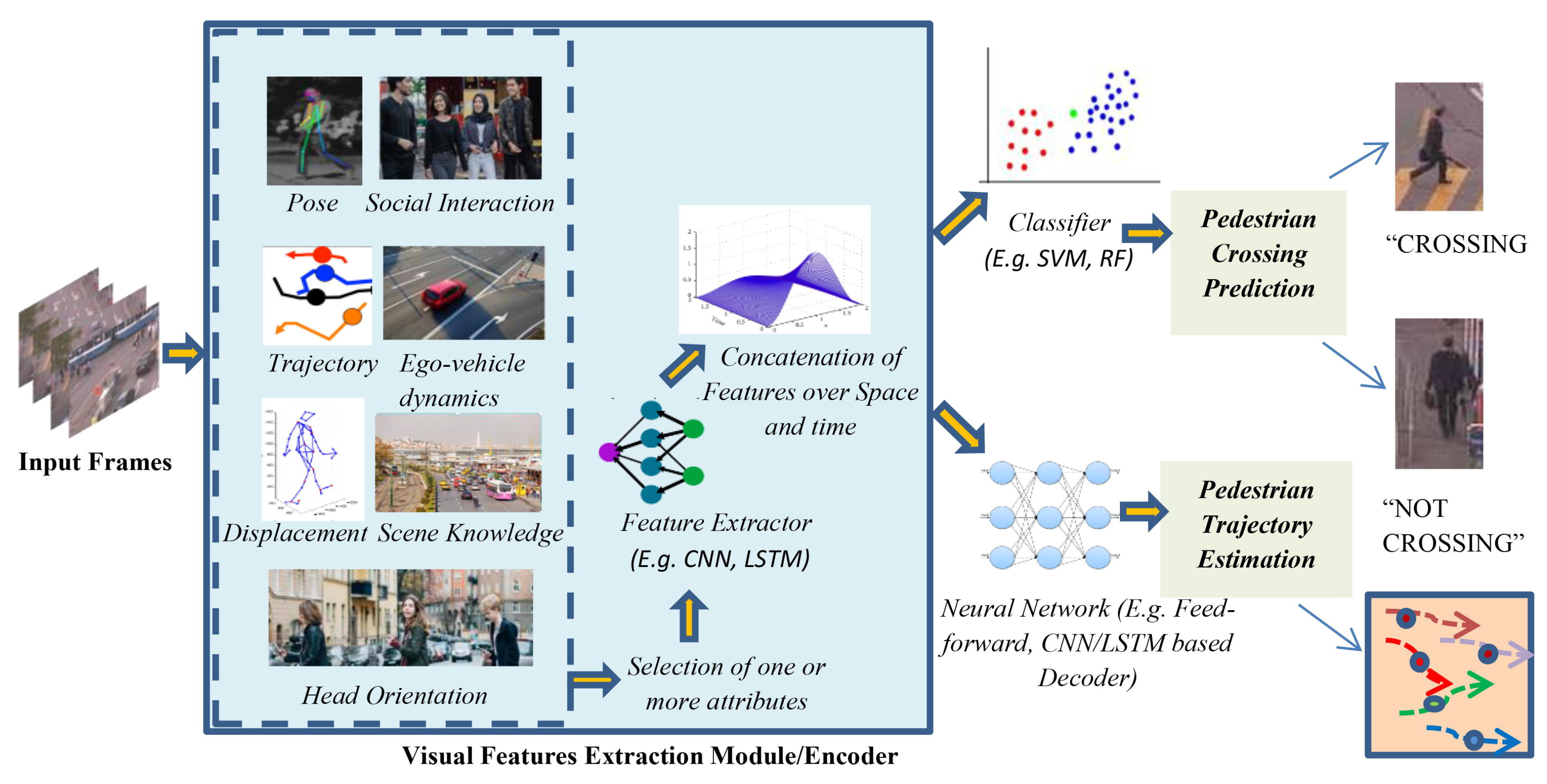

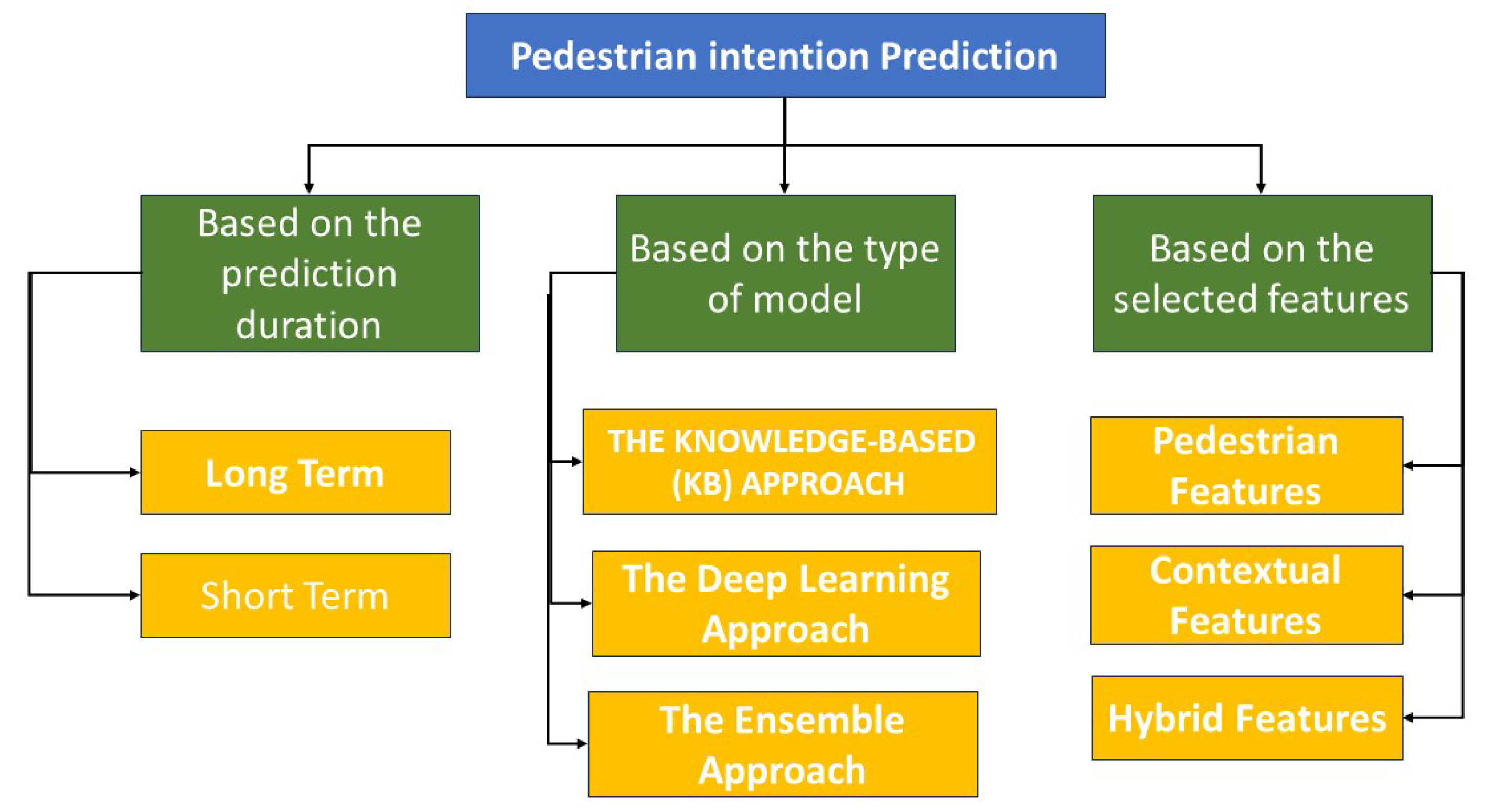

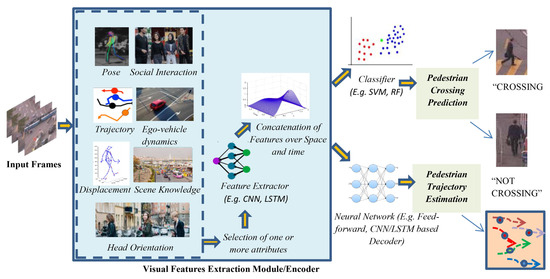

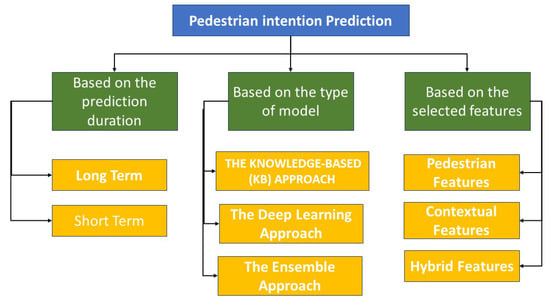

The prediction of pedestrian intentions typically follows three main stages: the input stage, the feature extraction and encoding stage, and the decoding or classification stage, depending on the required output, as illustrated in Figure 2. The input stage consists of frames extracted from video sequences, which can be obtained in real time or from pre-recorded footage captured by cameras positioned at different angles. During pre-processing, these frames are analyzed to extract the attributes required by the proposed algorithm, and feature extraction techniques then encode their spatial and temporal characteristics. In the final stage, a classifier or decoder, typically a neural network, predicts whether the pedestrian will cross or forecasts the future trajectory. The subsequent sections present a detailed categorization of pedestrian intention estimation approaches, covering a broad spectrum of techniques in the literature. This classification is structured around three primary factors: prediction duration, model type, and input feature type, as depicted in Figure 3 [4].

Figure 2.

Generalized framework for pedestrian intention prediction [4].

Figure 3.

Classification of pedestrian intention prediction.
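The three-stage pipeline of Figure 2 can be illustrated with a minimal Python sketch. It is not taken from any reviewed method: the function names (`extract_features`, `encode_sequence`, `classify`), the bounding-box input format, and the use of simple statistics in place of a learned temporal encoder are all assumptions made for illustration.

```python
import numpy as np

def extract_features(track):
    """Stage 2a: per-frame features from bounding boxes given as (x, y, w, h)."""
    boxes = np.asarray(track, dtype=float)
    centers = boxes[:, :2] + boxes[:, 2:] / 2.0
    # Frame-to-frame displacement of the box center; the first frame gets zero.
    velocity = np.diff(centers, axis=0, prepend=centers[:1])
    return np.hstack([centers, velocity])  # shape (T, 4)

def encode_sequence(features):
    """Stage 2b: temporal encoding. The mean and the last step stand in for
    a learned encoder such as an RNN or transformer (assumption)."""
    return np.concatenate([features.mean(axis=0), features[-1]])  # shape (8,)

def classify(encoding, weights, bias):
    """Stage 3: logistic classifier returning an estimate of P(crossing)."""
    return 1.0 / (1.0 + np.exp(-(encoding @ weights + bias)))

# Stage 1 (input): a hypothetical three-frame pedestrian track.
track = [(0, 0, 2, 4), (1, 0, 2, 4), (2, 0, 2, 4)]
encoding = encode_sequence(extract_features(track))
p_cross = classify(encoding, weights=np.zeros(8), bias=0.0)
```

In a real system the weights would be learned from an annotated dataset, and a trajectory decoder could replace the classifier when the required output is a future path rather than a crossing label.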

3.1. Classification Based on Duration of Prediction

Pedestrian intention prediction techniques can be categorized according to the duration over which predictions are made:

3.1.1. Short-Term Prediction

Short-term predictions focus on anticipating the behavior of pedestrians or cyclists within a few seconds. These approaches typically utilize features such as head orientation or body movement patterns. For pedestrians, commonly predicted intentions include walking, stopping, crossing, and waiting; for cyclists, they include lane changes, turning, and stopping. These methods are gaining popularity due to their practicality in real-time applications and lower computational demands [4,24,27,28,29,30].

3.1.2. Long-Term Prediction

Long-term predictions are goal-oriented, aiming to predict a pedestrian’s trajectory or final destination. These methods often incorporate contextual and environmental information to improve trajectory estimation accuracy. Long-term prediction approaches have received significant attention in recent years, particularly in the autonomous vehicle (AV) research community, as they are crucial for fostering trust in fully autonomous systems. Despite their complexity, these methods play a vital role in AV development [4,7,31,32,33,34].

3.2. Classification Based on the Selected Features

The features used in predicting pedestrian intention in traffic scenarios can be broadly classified into three main types [4]:

3.2.1. Pedestrian-Centric Features

These features are specific to the pedestrian and include pose information [5,35,36,37,38], past trajectory [39,40,41,42,43,44,45,46,47,48], and head orientation [4,49,50,51].

- Joints/Pose

Information about a pedestrian’s joints and skeleton provides more distinct features than RGB images, especially under varying lighting conditions. The absence of body dynamics and pose data can delay predictions of changes in crossing intentions. Notably, joints related to the shoulders and legs are more significant for estimating real-time pedestrian activities, while upper body joints, like arms, contribute less to action recognition accuracy [5,35,36,37,38,52]. However, the use of pose features is limited to pedestrians and cannot be generalized to other VRUs like cyclists [4,53].

- Trajectories

Past trajectories play a crucial role in predicting future movements or poses of pedestrians and other VRUs. Recent research suggests that incorporating the uncertainty of human actions through trajectory distribution mapping can improve prediction accuracy [4,40,54,55,56,57,58,59,60,61].

- Head Orientation

The direction in which a pedestrian is looking is a critical cue for assessing their intention. Integrating head orientation with other features, such as leg movement, improves the accuracy of crossing intention predictions. Combining head pose data with trajectory information has also yielded promising results in predicting future pedestrian trajectories [4,49,50,51,62,63,64].

- Displacement

The displacement of a pedestrian, observed through methods like motion history images (MHIs) from stereo data, helps differentiate between standing, stopping, and starting actions. However, displacement alone is insufficient and needs to be combined with joint information for more reliable predictions [4,35,65].
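As an illustration of the displacement cue, the following sketch computes a motion history image by frame differencing. It is a simplified assumption: it works on single-channel grayscale frames rather than the stereo data mentioned above, and the function name `update_mhi` and the parameters `tau` and `threshold` are hypothetical.

```python
import numpy as np

def update_mhi(mhi, prev_frame, frame, tau=10, threshold=25):
    """Update a motion history image (MHI) with one new frame.

    Pixels whose intensity changed by more than `threshold` are set to `tau`;
    all other pixels decay by one step toward zero, so recent motion is
    brighter than older motion.
    """
    # Cast to a signed type so the uint8 difference cannot wrap around.
    diff = np.abs(frame.astype(np.int32) - prev_frame.astype(np.int32))
    motion = diff > threshold
    decayed = np.maximum(mhi - 1, 0)
    return np.where(motion, tau, decayed)

# Hypothetical sequence: a bright pixel moving one column per frame.
frames = []
for t in range(4):
    f = np.zeros((5, 5), dtype=np.uint8)
    f[2, t] = 255
    frames.append(f)

mhi = np.zeros((5, 5), dtype=np.int32)
for prev, cur in zip(frames, frames[1:]):
    mhi = update_mhi(mhi, prev, cur)
```

The resulting gradient along the path of motion encodes direction and recency, which is what allows standing, stopping, and starting to be told apart when the MHI is combined with other features.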

3.2.2. Contextual Features

Contextual features pertain to the environment and include elements like scene infrastructure, road layout, and weather conditions. These features reduce the cognitive load on intelligent driving systems and enhance the accuracy of crossing behavior predictions [4,66].

- Social Interaction

The behavior of pedestrians is influenced by the actions of others around them. Thus, capturing social interactions, such as the movement of neighboring pedestrians, is essential for accurate intention prediction. Recent approaches incorporate the trajectories and actions of both immediate and distant neighbors to better understand the social dynamics that affect pedestrian behavior [4,7,9,39,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83].

- Scene Information

Understanding the scene context is crucial for predicting pedestrian behavior. This includes interactions with elements like zebra crossings, intersections, and waiting areas. Integrating scene information with pedestrian trajectories has been shown to improve prediction accuracy [4,34,42,52,84,85,86,87,88,89,90,91].

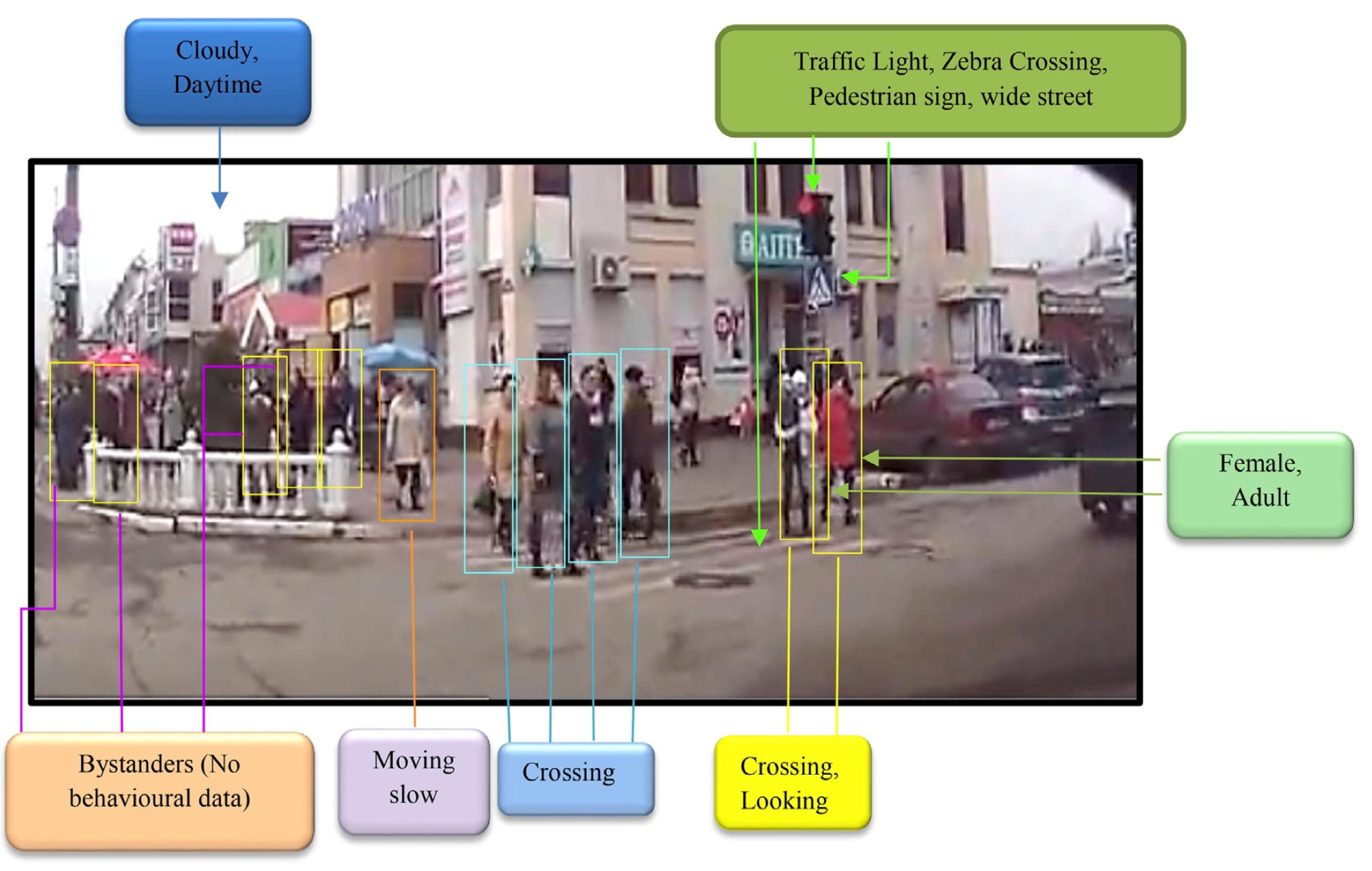

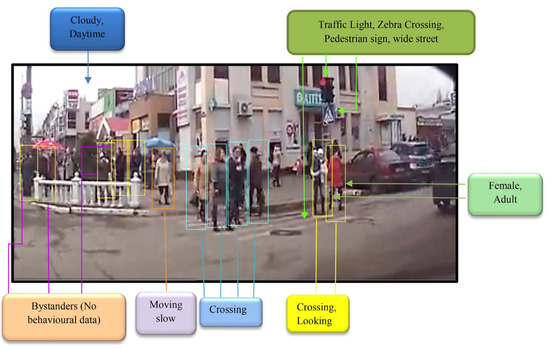

- Ego-Vehicle Information

Ego-vehicle dynamics, such as the speed of the ego-vehicle and its displacement relative to the target pedestrian, play a significant role in predicting pedestrian intentions. The fusion of ego-vehicle features with pedestrian-specific or scene-specific data provides a more comprehensive understanding of the scene and improves the accuracy of intention predictions [4,38,40,62,92,93,94]. Figure 4 illustrates an example of contextual data annotation [95] from an image in the JAAD dataset.

Figure 4.

An example from the JAAD dataset shows annotations including pedestrian bounding boxes with labels indicating whether they are crossing or not, along with contextual information about road infrastructure, weather conditions, and behavior labels [95].

3.2.3. Hybrid Features

Hybrid features combine pedestrian-centric and contextual information to enhance the understanding of human behavior in traffic environments. Many recent studies emphasize the need to integrate walking patterns, social interactions, and contextual details for more accurate predictions of crossing behavior [4,34,52,88,95,96,97,98,99,100,101].
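A hybrid feature vector of this kind might be assembled as in the sketch below. The specific features, their order, and the function names are assumptions for illustration and do not reproduce any particular reviewed model; encoding the head yaw as a sine and cosine pair is a common way to avoid the discontinuity at ±π.

```python
import math
import numpy as np

def pedestrian_features(track_xy, head_yaw_rad, dt):
    """Pedestrian-centric part: velocity from the last two positions and head yaw."""
    track = np.asarray(track_xy, dtype=float)
    velocity = (track[-1] - track[-2]) / dt
    return np.array([velocity[0], velocity[1],
                     math.sin(head_yaw_rad), math.cos(head_yaw_rad)])

def context_features(ego_speed_mps, ego_xy, pedestrian_xy, at_crosswalk):
    """Contextual part: ego speed, pedestrian position relative to the ego-vehicle,
    and a binary scene flag."""
    rel = np.asarray(pedestrian_xy, dtype=float) - np.asarray(ego_xy, dtype=float)
    return np.array([ego_speed_mps, rel[0], rel[1], float(at_crosswalk)])

def hybrid_features(ped, ctx):
    """Fuse both groups by concatenation (the simplest fusion strategy)."""
    return np.concatenate([ped, ctx])

ped = pedestrian_features([(0.0, 0.0), (0.5, 0.0)], head_yaw_rad=math.pi / 2, dt=0.5)
ctx = context_features(8.0, ego_xy=(0.0, 0.0), pedestrian_xy=(10.0, 2.0), at_crosswalk=True)
fused = hybrid_features(ped, ctx)
```

Concatenation is only one option; learned fusion schemes such as attention over modalities serve the same purpose with more capacity.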

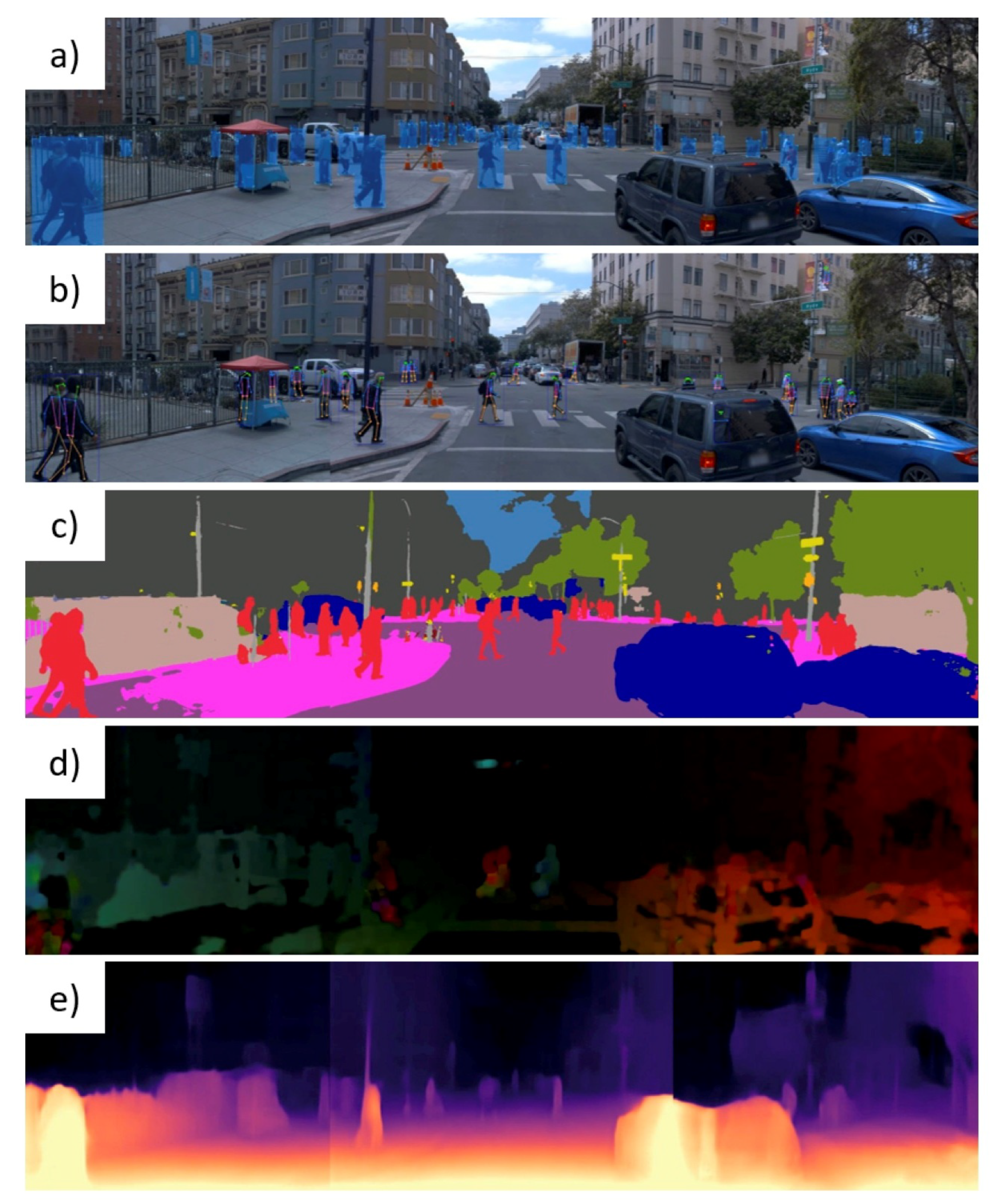

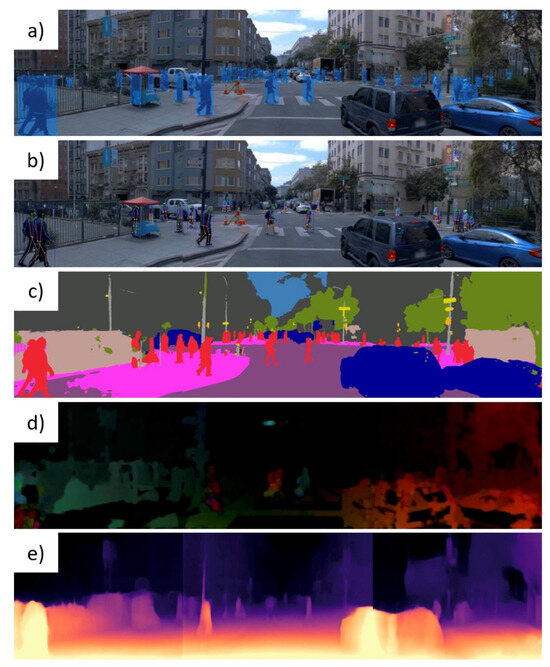

Figure 5 illustrates various features used in predicting pedestrian intention, including localization, pose estimation, object categorization, motion patterns, and depth estimation.

Figure 5.

Analyzing pedestrian features and traffic scene dynamics involves the following: (a) localizing pedestrians using bounding boxes; (b) understanding gestures through pose estimation; (c) categorizing objects using segmentation techniques; (d) comprehending overall motion patterns with optical flow; (e) calculating distances with a global depth heat-map [102].

3.3. Classification Based on the Type of Model

Pedestrian intention prediction models can be broadly categorized into two main types: the Knowledge-Based (KB) approach and the DL approach. This section provides an in-depth look at these two categories.

3.3.1. The Knowledge-Based Approach

In the early stages of pedestrian dynamics research, scholars primarily relied on direct observations, photographs, and time-lapse films to enhance their understanding of pedestrian behavior [103]. This understanding was instrumental in developing concepts such as level of service, designing elements for pedestrian facilities, and creating planning guidelines [104,105,106,107]. While these concepts and guidelines are valuable for understanding and managing pedestrian dynamics, they are not well-suited for predicting pedestrian flows or trajectories. As a result, researchers began developing simulation models, including force-based microscopic models [108], queuing models [109], the transition matrix model [110], and Henderson’s models [111,112], which proposed that pedestrian crowd behavior is analogous to the behavior of gases or fluids. These models, which focus on aggregated behaviors rather than individual pedestrian actions, are known as macroscopic models [11].

Today, KB pedestrian models span a range of scales, from macroscopic to mesoscopic and microscopic models, each capturing different aspects of pedestrian dynamics. Macroscopic and mesoscopic approaches are inspired by continuous fluid dynamics or gas-kinetic models, which describe dynamics at an aggregated level, while microscopic approaches focus on individual pedestrian movements. Numerous reviews in the literature explore the various modeling scales in pedestrian dynamics and the transitions between these scales [113,114,115,116,117]. Other reviews emphasize collective pedestrian dynamics [118,119,120,121] or discuss applications in layout design [11,122]. In the following section, we review KB pedestrian models with a focus on microscopic approaches and their use in predicting pedestrian trajectories.

- (A)

- Microscopic Pedestrian ModelsNumerous researchers have focused on modeling individual pedestrian movement using various microscopic approaches. A significant advantage of microscopic models over macroscopic ones is their ability to capture various behaviors. By considering each pedestrian individually, these models can attribute specific characteristics to each agent and accommodate behavioral diversity. However, microscopic models can be computationally demanding, which limits their use in large-scale simulations [11].Microscopic pedestrian models analyze individual behaviors and interactions among pedestrians. These interactions contribute to the emergent crowd dynamics at a macroscopic level [122]. These models are designed to replicate macroscopic features, such as fundamental diagrams or collective formations like band structures [123,124]. Such models, which focus on individual pedestrian dynamics, can predict pedestrian trajectories at various scales. The behavior of individual pedestrians is governed by specific rules based on physical, social, or psychological factors [117]. These rules are expressed through manually crafted dynamic equations based on Newton’s laws of motion. Given initial conditions such as position, velocity, and acceleration, these equations can simulate and predict future trajectories [11].The approach for determining a pedestrian’s new position can vary depending on the model’s inputs and outputs. Models that provide new velocity or acceleration, which are then used to calculate the new position, are classified as velocity-based or acceleration-based models, respectively. Conversely, models that determine position directly through specific rules without relying on differential equations are categorized as decision-based models [11].Acceleration-Based ModelsAcceleration-based models, particularly force-based models, describe pedestrian movement through the interaction of external forces [11]. 
These models generally include a relaxation term towards the desired direction and an interaction term that accounts for repulsion (social force) from neighbors and obstacles [125,126]. One of the earliest force-based models was introduced in the 1970s by Hirai and Tairu [108].The interaction forces in these models can vary in their mathematical representation: exponential in social force models [127], algebraic in centrifugal force models [128,129], or partly linear as in the optimal velocity model [130]. A vision field concept is often utilized to prioritize obstacles directly in front of the pedestrian. Since these models are of the second order, they require a fine discretization scheme and may encounter numerical challenges [131]. Many of the current developments in acceleration-based models are extensions of the social force model [11,132].Velocity-Based ModelsVelocity-based models, which gained prominence in the 2000s, are designed to model pedestrian dynamics using first-order differential equations [11,117]. These models focus on describing speed functions based on position differences with neighbors and obstacles. Unlike acceleration-based models, which describe inertial effects, velocity-based models are more concerned with collision avoidance, often utilizing techniques such as collision cones [11,133,134,135,136,137,138,139,140].Extensions of these models, like the Reciprocal Velocity Obstacle (RVO) [135] and Optimal Reciprocal Collision Avoidance (ORCA) [133], have been frequently used in computer graphics to simulate crowd behavior. Other velocity-based models are derived from concepts like bearing angle [141], gradient navigation [142], or time gap variables [143,144]. 
These models are generally formulated as optimization problems on the ensemble of feasible trajectories that avoid collisions [11,145,146].

Decision-Based Models and Cellular Automata

In decision-based or rule-based models, pedestrian behavior is not modeled using differential equations but instead is governed by rules or decisions that determine the new positions, velocities, and other states of agents [117]. Time is treated as a discrete variable in these models, meaning pedestrians make decisions for the next time step based on the system’s state at time t. The time step, which acts as a reaction time, has a direct physical meaning and can be used for model calibration [11].

A well-known type of decision-based model is the cellular automata (CA) model, where not only time but also space and pedestrian states (such as velocity) are discrete. In these models, pedestrians move on a lattice, typically square or hexagonal, with each cell representing a space of approximately 40 cm by 40 cm, which corresponds to a maximum density of 6.25 pedestrians per square meter [147]. The early pedestrian CA models were developed in the late 1990s [11,148,149,150,151].

In the floor field CA models, the rules and transition probabilities for moving to neighboring cells are derived from static and dynamic floor fields. The static floor field represents the pedestrian’s desired velocity, while the dynamic floor field models interactions with neighbors, inspired by the chemotaxis process observed in insects, like the use of pheromones by ants [152]. One critical aspect of CA models is handling conflicts, such as when two pedestrians simultaneously attempt to occupy the same cell.
Solutions to these conflicts include priority rules, which may be random [153], or friction probabilities, where no pedestrian reaches the desired cell if a conflict arises, helping to explain clogging effects at bottlenecks [11,154].

Recent advances in decision-based models have incorporated cognitive effects [155,156] and learning processes [157], further enhancing their ability to model pedestrian behavior in complex environments [11].
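To make the structure of the acceleration-based models described above concrete, the following minimal sketch advances one pedestrian by a single time step using a relaxation term toward a goal and an exponentially decaying repulsion from neighbors. It is an illustration only, not the formulation of any cited work: the function name `social_force_step` and all parameter values (`desired_speed`, `tau`, `A`, `B`, `dt`) are assumptions chosen for readability.

```python
import math

def social_force_step(pos, vel, goal, neighbors, dt=0.05,
                      desired_speed=1.3, tau=0.5, A=2.0, B=0.3):
    """Advance one pedestrian by one semi-implicit Euler step.

    pos, vel, goal: (x, y) tuples; neighbors: list of (x, y) positions.
    desired_speed [m/s], tau [s], A [m/s^2] and B [m] are illustrative
    values, not calibrated parameters.
    """
    # Relaxation term: steer toward the desired velocity within time tau.
    gx, gy = goal[0] - pos[0], goal[1] - pos[1]
    dist_goal = math.hypot(gx, gy) or 1.0
    ax = (desired_speed * gx / dist_goal - vel[0]) / tau
    ay = (desired_speed * gy / dist_goal - vel[1]) / tau

    # Interaction term: exponentially decaying repulsion from each neighbor.
    for nx, ny in neighbors:
        dx, dy = pos[0] - nx, pos[1] - ny
        d = math.hypot(dx, dy)
        if d > 0.0:
            f = A * math.exp(-d / B)
            ax += f * dx / d
            ay += f * dy / d

    # Second-order model: update velocity from acceleration, then position.
    new_vel = (vel[0] + ax * dt, vel[1] + ay * dt)
    new_pos = (pos[0] + new_vel[0] * dt, pos[1] + new_vel[1] * dt)
    return new_pos, new_vel
```

Calling the function repeatedly, for example `pos, vel = social_force_step(pos, vel, (10.0, 0.0), [(2.0, 0.1)])` inside a loop, produces a simulated trajectory. The small default `dt` reflects the need for fine discretization in second-order models noted above.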

- (B)

- Trends During the Past Decades

The study of pedestrian dynamics is a relatively recent field, with foundational research and models emerging in the 1960s and 1970s [103,108,110,111]. Significant advancements, however, have primarily occurred over the past three decades. During the 2010s, there was a notable increase in experimental studies conducted in laboratory settings, focusing on various pedestrian flow scenarios such as uni-directional flow, counter-flow, bottlenecks, and intersecting flows. An extensive data archive related to these experiments is available in Germany [11,158].

In parallel with these experimental efforts, a range of KB pedestrian models, spanning from microscopic to macroscopic scales, has been developed [113,114,117,119,159]. The microscopic social force model by Helbing and Molnár is particularly prominent and widely referenced in the literature. Although traditional KB approaches, such as cellular automata and models analogous to fluid or gas dynamics, appear to have plateaued, they remain relevant. Microscopic force-based models and collision avoidance techniques continue to be significant, often serving as benchmarks for evaluating new methods, including those based on deep learning [11].

- (C)

- Knowledge-Based Models for Understanding and Predicting

Knowledge-based models aim to elucidate the mechanisms and fundamental parameters that govern pedestrian dynamics. A key aspect of these models is the consideration of body exclusion effects, which are responsible for phenomena such as jamming, clogging, and maximal density. KB models often rely on the fundamental diagram, a phenomenological relationship between flow and density first highlighted in the 1960s. The shape and variability of this relationship continue to be subjects of active research [11,160,161,162,163,164,165,166].

Key parameters in KB models include the desired speed, agent size, and reaction time at microscopic scales, as well as maximal density and capacity at macroscopic scales. The estimation of these parameters and their number and nature are influenced by various factors such as flow type (e.g., uni-directional vs. bi-directional), context, and demographic characteristics like age and cultural background [118]. Simple microscopic rules can explain the macroscopic shapes of the fundamental diagram, with temporal parameters such as reaction time and time gaps being particularly relevant [11,143,167,168,169].

A significant highlight of KB models is their ability to identify self-organization phenomena and the emergence of coordinated dynamics at macroscopic scales. Multi-scale approaches help understand how individual microscopic behaviors lead to collective dynamics [123,124]. Examples of collective phenomena include lane formation [170,171], stop-and-go waves [172,173], freezing-by-heating effects [174,175], herding effects [153,174], and pattern formation at bottlenecks and intersections [104,176,177,178].
These self-organization phenomena are also observed in social systems and networks, such as opinion formation [11,179,180,181].

In the literature of statistical physics, similar phenomena are studied in non-equilibrium systems of self-driven or active particles, often referred to as active matter [114,182,183,184,185,186,187]. Understanding these complex non-linear dynamics across different scales remains a challenge and is an area of active research, particularly through data-based approaches [11,188,189,190,191,192].

In Table 1 and Table 2, we present a selection of important articles in the literature on knowledge-based pedestrian models, specifically focusing on microscopic approaches. These tables highlight the key contributions of each article to the field.

Table 1. Selection of important articles in the literature on knowledge-based pedestrian models (Part 1).

Table 2. Selection of important articles in the literature on knowledge-based pedestrian models (Part 2).

3.3.2. The Deep Learning Approach

Deep learning, a subfield of machine learning, has gained significant attention due to its ability to process large datasets, recognize complex patterns, and extract meaningful insights across various domains. Unlike traditional machine learning methods that rely on manual feature engineering, deep learning utilizes deep neural networks (DNNs) with multiple hidden layers, enabling automatic hierarchical feature learning from raw data [201].

Several deep learning-based approaches have been proposed for pedestrian intention prediction. This section categorizes the most commonly used methods based on their deep neural network architectures. The primary architectures employed for this task include the following [12]:

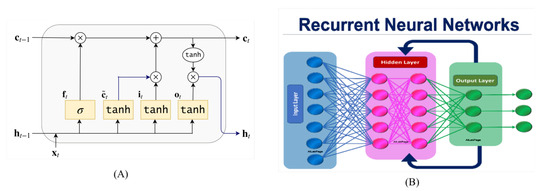

Recurrent Neural Networks (RNNs), often in the form of Long Short-Term Memory (LSTM) networks; Convolutional Neural Networks (CNNs); Generative Adversarial Networks (GANs); and Autoencoders.

Each of these architectures offers distinct advantages and challenges, which influence their application in various aspects of pedestrian intention prediction. RNNs and LSTMs are particularly valuable for capturing temporal dependencies in pedestrian behavior, CNNs excel in processing and analyzing visual data, GANs enhance the model’s ability to generate and simulate realistic pedestrian behaviors, and autoencoders contribute to efficient data representation and feature learning.

The integration of these methods into comprehensive prediction systems allows for a more nuanced understanding of pedestrian intentions, ultimately improving the safety and reliability of autonomous driving systems.

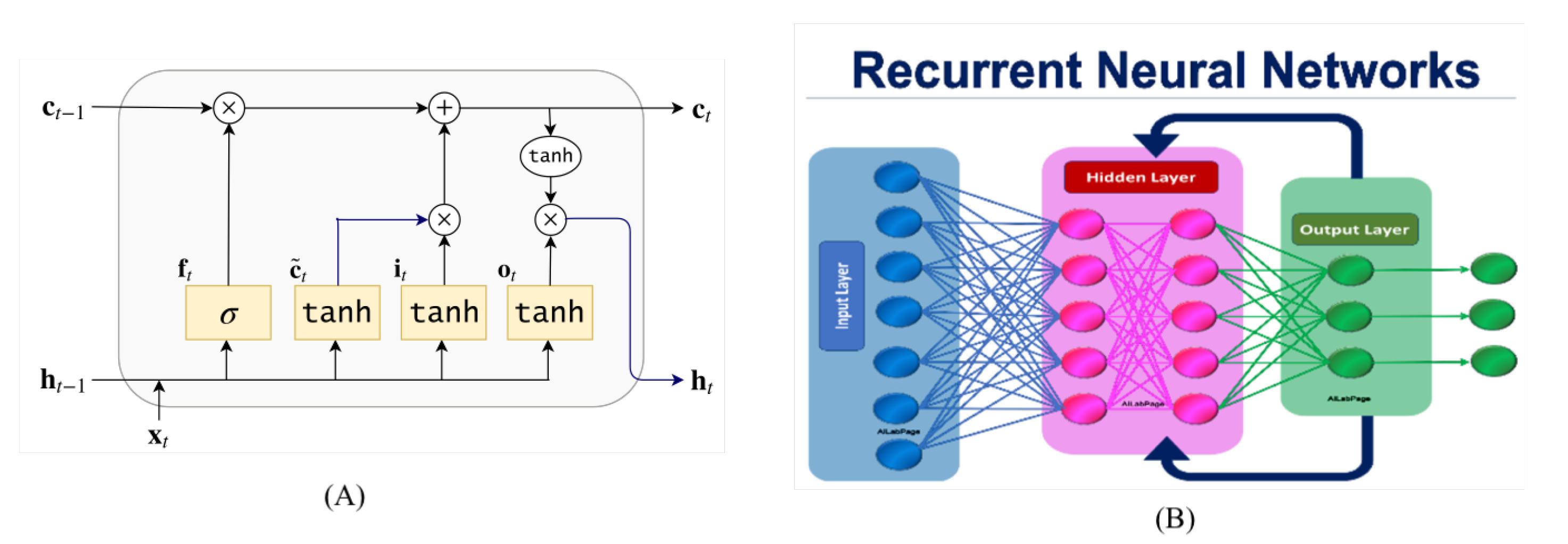

- (A)

- Pedestrian Behavior Prediction Using RNNs

Recurrent Neural Networks (RNNs), particularly in their basic form known as Vanilla RNNs, extend the capabilities of standard two-layer fully connected networks by incorporating feedback loops within the hidden layer (see Figure 6). This enhancement allows RNNs to process sequential data more effectively by considering both current input and information from previous time steps, which is preserved in the hidden neurons. RNNs are crucial in sequence-based predictions and have broad applications across various domains. To overcome the limitations in retaining long-term information, the Long Short-Term Memory (LSTM) architecture was introduced. Initially successful in natural language processing (NLP), LSTMs have also proven effective in pedestrian trajectory prediction [12].
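The feedback loop in a vanilla RNN can be sketched in a few lines. The example below is a minimal illustration, assuming plain Python lists for the weights; the names `rnn_step` and `encode_sequence` are hypothetical and do not correspond to any specific library or cited model. Each step mixes the current input with the previous hidden state, so the final hidden state depends on the order of the observations.

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One vanilla RNN update: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    h = []
    for i in range(len(b)):
        s = b[i]
        s += sum(W_x[i][j] * x[j] for j in range(len(x)))            # current input
        s += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))  # feedback loop
        h.append(math.tanh(s))
    return h

def encode_sequence(xs, W_x, W_h, b):
    """Run the cell over a sequence of inputs, starting from a zero state."""
    h = [0.0] * len(b)
    for x in xs:
        h = rnn_step(x, h, W_x, W_h, b)
    return h
```

In a trajectory setting, each `x` could be an observed displacement `(dx, dy)` and the final hidden state would be passed to an output layer. An LSTM replaces this single `tanh` update with gated updates to mitigate the long-term memory limitation mentioned above.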

Figure 6. (A) LSTM cell. (B) Deep RNN.

- (B)

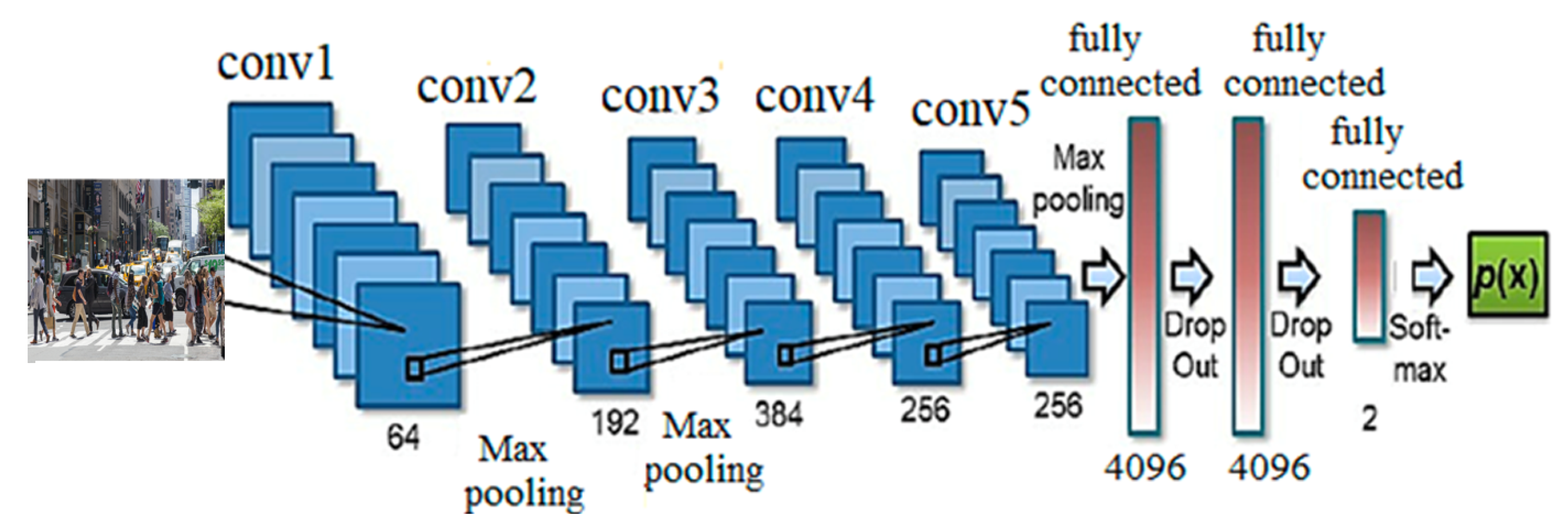

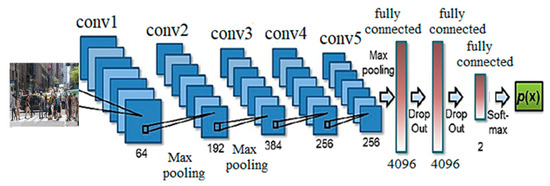

- Pedestrian Behavior Prediction Using CNNs

The convolutional neural network (CNN) is a type of deep neural network (DNN) known for its strong performance in various domains, including object classification and recognition, such as identifying handwritten digits, letters, and faces. As shown in Figure 7, a typical CNN architecture consists of multiple layers, including convolutional layers, non-linearity layers, pooling layers, dropout, batch normalization, and fully connected layers. Through the process of training and optimization, CNNs learn to extract object features. By carefully selecting the network architecture and parameters, these features can capture the most important discriminative information necessary for the accurate identification of the target objects [12].

Figure 7. Typical CNN architecture.

- (C)

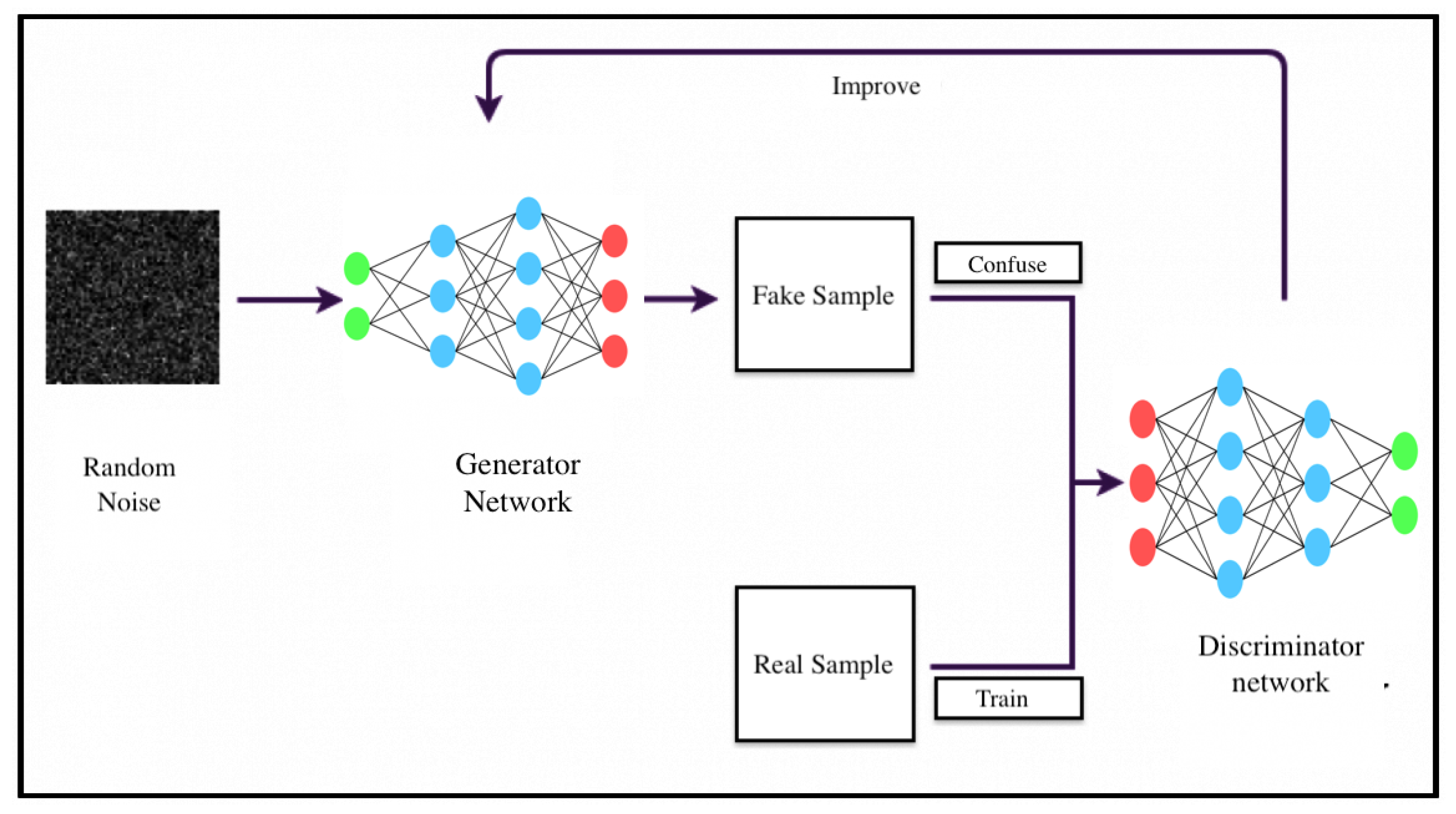

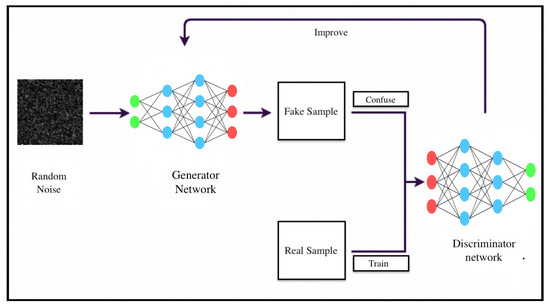

- Pedestrian Behavior Prediction Using Generative Adversarial Networks (GANs)

Generative adversarial networks (GANs) operate on a generator (G)–discriminator (D) framework, where the two networks are in constant competition: the generator tries to deceive the discriminator by creating fake data, while the discriminator adapts to recognize these forgeries. In a GAN setup, both models are trained simultaneously (as shown in Figure 8).
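The competition between generator and discriminator described above is commonly written as a two-player minimax game. The expression below is the standard objective from the original GAN formulation, given here for orientation; the symbols $p_{\text{data}}$ (distribution of real samples) and $p_z$ (distribution of the generator's noise input) are notation introduced for this illustration and do not appear elsewhere in this review.

```latex
\min_{G}\,\max_{D}\; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximizes $V$ by assigning high probability to real samples and low probability to generated ones, while the generator minimizes $V$ by producing samples that the discriminator accepts as real. In trajectory prediction, sampling different $z$ values is what yields multiple plausible futures.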

Figure 8. GAN architecture.

In the context of tracking, GANs help reduce the fragmentation often seen in conventional trajectory prediction models and lessen the need for computationally expensive appearance features. The generative component generates and updates candidate observations, discarding the least updated ones. To process and classify these candidate sequences, an LSTM component is used alongside the generative–discriminative model. This approach can produce highly accurate models of human behavior, especially in group settings, while being significantly more lightweight compared to traditional CNN-based solutions. Recently, GAN architectures have been employed by many researchers to achieve multi-modality in prediction outputs [12].

- (D)

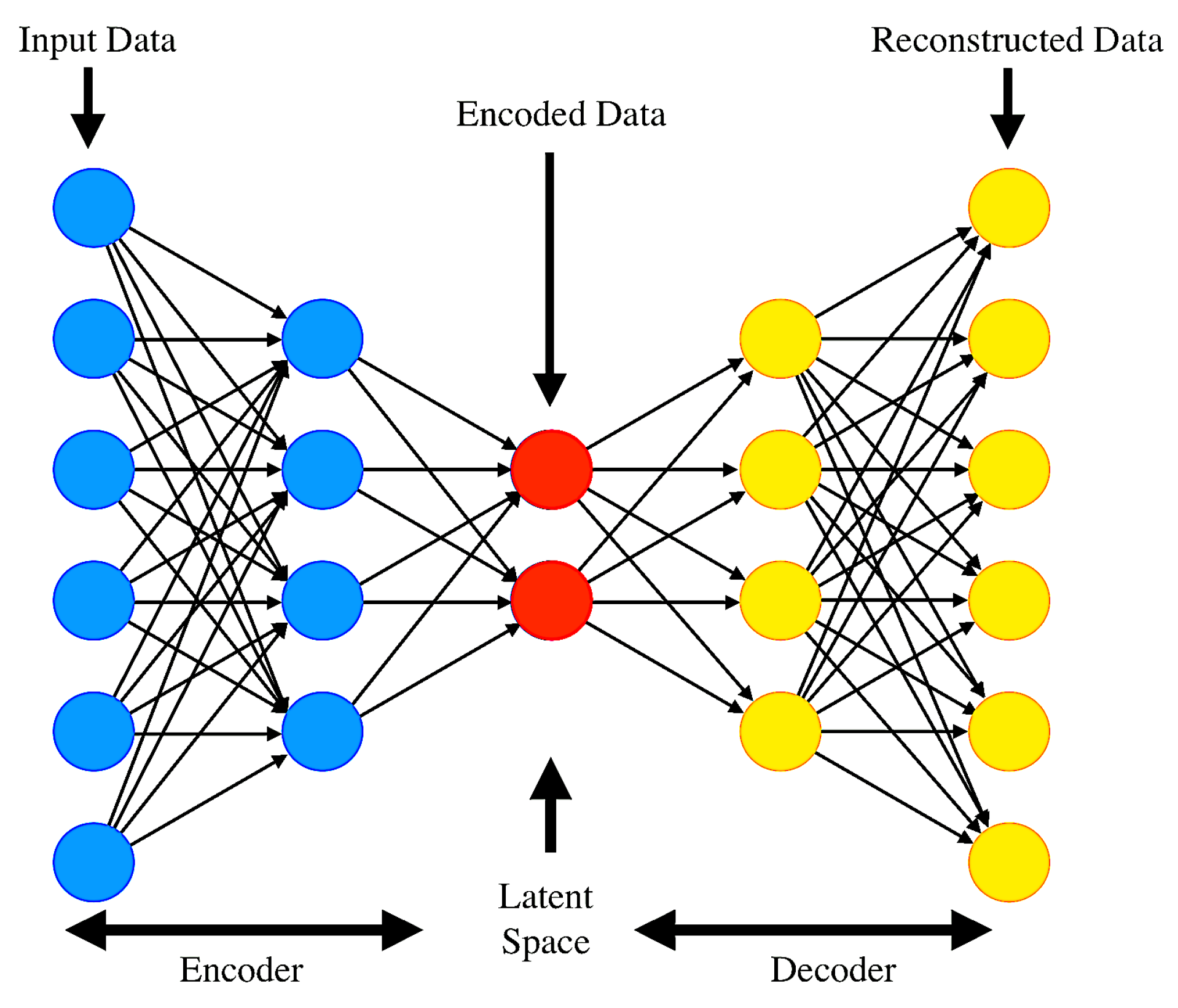

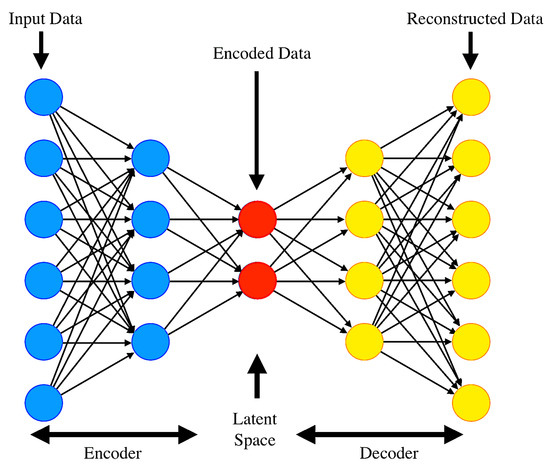

- Pedestrian Behavior Prediction Using Autoencoders

Autoencoders are neural networks designed to learn efficient representations of data, typically for the purpose of dimensionality reduction or feature learning. In the context of pedestrian behavior prediction, autoencoders are used to encode high-dimensional trajectory data into a lower-dimensional latent space, capturing the essential features of pedestrian movements (illustrated in Figure 9). This latent representation is then decoded to predict future behavior. Autoencoders are particularly useful for handling noisy data and extracting meaningful patterns that can improve prediction accuracy.

Figure 9. Autoencoder architecture.

3.3.3. Recent Advances in Deep Learning-Based Models

Xin et al. [202] tackled the challenge of long-horizon trajectory prediction for surrounding vehicles using an intention-aware LSTM architecture. Their model captures high-level spatiotemporal features of driver behavior and was trained on the NGSIM dataset, achieving longitudinal and lateral errors of less than 5.77 m and 0.49 m, respectively.

Lee et al. [98] introduced the DESIRE framework, which leverages deep stochastic inverse-optimal-control RNN encoders. This approach generates potential future trajectories using a conditional variational autoencoder and ranks them through an RNN model incorporating inverse optimal control. By considering scene context and multi-modal prediction, their method demonstrated strong performance on the KITTI and Stanford Drone datasets.

Zheng et al. [203] proposed a hierarchical policy model to predict both micro-actions and macro-goals. This method integrates recurrent convolutional neural networks with supervised learning and attention mechanisms. Zhan et al. [204] later extended this approach using variational RNNs.

Martinez et al. [205] developed an RNN architecture based on gated recurrent units (GRUs), which models velocities instead of absolute angles. This “residual architecture” emphasizes first-order motion derivatives, improving accuracy and generating smoother trajectory predictions.
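The idea of predicting first-order changes rather than absolute values can be illustrated with a short sketch. The example assumes a network that outputs per-step velocities for a 2D position; it is an analogy for trajectory data and not the implementation of Martinez et al., and the function name `rollout_residual` is hypothetical.

```python
def rollout_residual(last_pos, predicted_velocities, dt=1.0):
    """Integrate predicted per-step velocities into absolute positions.

    Each output is the previous position plus a predicted change, so a
    prediction of zero velocity keeps the agent where it was last observed.
    """
    x, y = last_pos
    path = []
    for vx, vy in predicted_velocities:
        x += vx * dt
        y += vy * dt
        path.append((x, y))
    return path
```

Because every predicted position is anchored to the previous one, the first predicted step cannot jump away from the last observation, which is one reason residual formulations tend to give smoother outputs.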

Salzmann et al. [206] proposed a trajectory forecasting method for diverse agents, such as pedestrians and vehicles, by integrating heterogeneous data and semantic classifications. Their approach was evaluated on the ETH, UCY, and nuScenes datasets, demonstrating its effectiveness in predicting future movements across different environments.

Refer to Table 3, Table 4, Table 5 and Table 6 for a summary of the experimental results from the deep learning models discussed above for predicting pedestrian trajectories.

Table 3. Selection of deep learning-based pedestrian models.

Table 4. Selection of DNN architectures for prediction methods (CNN).

Table 5. Selection of DNN architectures for prediction methods (GAN).

Table 6. Selection of DNN architectures for prediction methods (autoencoders).

3.3.4. The Ensemble Approach

The ensemble approach combines KB models and DL algorithms to leverage their respective strengths. KB models are advantageous for their interpretable parameters and low data requirements, while DL algorithms offer high predictive power but require extensive data. This synergy has been applied across various fields, such as climate pattern discovery [225,226], material science [227,228], quantum chemistry [229], and biomedical imaging [11,230,231].

Three main methods exist for integrating KB and DL approaches. The first involves using KB models to improve DL algorithms. For example, KB models can generate synthetic data for training neural networks, as seen in autonomous vehicle training [232,233]. Other strategies include knowledge-guided design of neural network architectures, such as embedding the social force model into a neural network for predicting human motion [234], and using knowledge-guided loss functions to ensure outputs adhere to physical laws [11,235,236].

The second method enhances KB models using DL algorithms. This includes residual modeling, where DL corrects the errors of KB models, and parameter calibration using DL, which has been applied in vehicle trajectory prediction [237] and pedestrian motion modeling [11,238,239,240].
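Residual modeling can be sketched as a knowledge-based baseline plus a learned correction. In the minimal example below, the baseline is a constant-velocity extrapolation and the "learned" part is simply the mean baseline error on training data, which stands in for a neural network. All function names are hypothetical, and tracks are assumed to be uniformly sampled lists of `(x, y)` positions.

```python
def constant_velocity(track):
    """KB baseline: extrapolate the last observed displacement by one step."""
    (x0, y0), (x1, y1) = track[-2], track[-1]
    return (x1 + (x1 - x0), y1 + (y1 - y0))

def fit_residual(tracks, targets):
    """Stand-in for a learned corrector: the mean error of the KB baseline."""
    n = len(tracks)
    ex = sum(t[0] - constant_velocity(tr)[0] for tr, t in zip(tracks, targets)) / n
    ey = sum(t[1] - constant_velocity(tr)[1] for tr, t in zip(tracks, targets)) / n
    return (ex, ey)

def hybrid_predict(track, residual):
    """Final prediction = KB baseline + learned correction."""
    bx, by = constant_velocity(track)
    return (bx + residual[0], by + residual[1])
```

In a realistic system the correction would depend on the input, for example a network that maps the observed track and scene context to a residual. The structure stays the same: the KB model supplies an interpretable first guess and the data-driven part only has to learn what the KB model gets wrong.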

Finally, the ensemble approach helps address the limitations of DL algorithms in scenarios with limited data by incorporating the structured knowledge from KB models, making it a promising method for improving pedestrian intention and trajectory predictions [11].

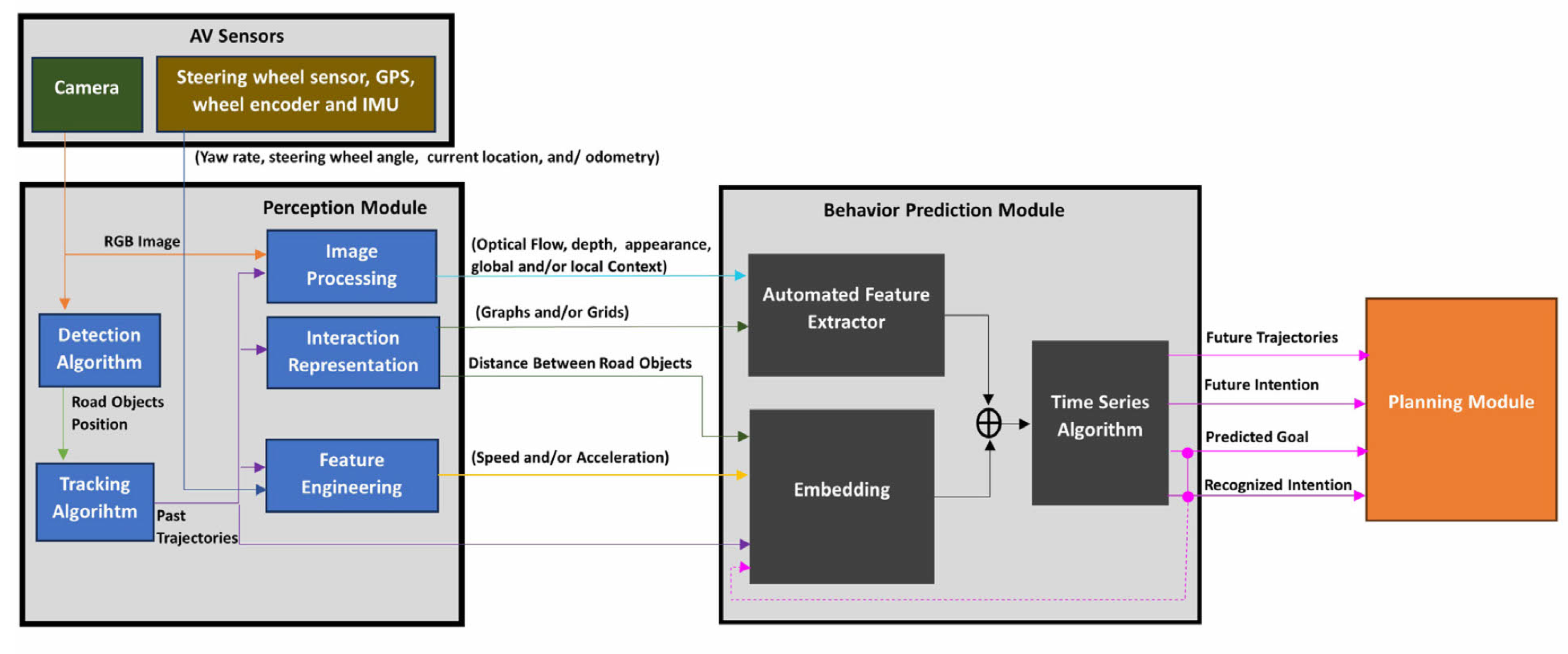

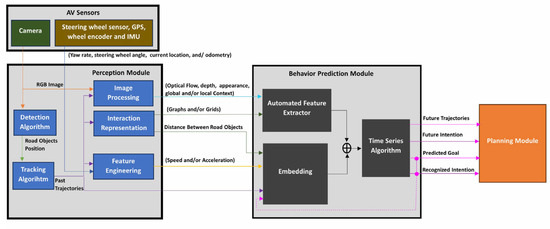

3.3.5. Visualization of PIP Classification Systems

In the previous sections, we provided a comprehensive overview of various pedestrian intention prediction classification methods. To help visualize a PIP classification system, a proposed overarching framework for a behavior prediction system is illustrated in Figure 10. This framework typically consists of several key components. Initially, a camera sensor captures RGB images that are processed by detection algorithms, which identify both static and dynamic objects on the road, such as vehicles, pedestrians, traffic lights, and road signs. Each detected object is then assigned a unique ID, enabling the system to track its past trajectories, which are essential for further analysis.

Figure 10. General behavior prediction framework. The behavior prediction module consists of an automated feature extractor (CNN, 3D-CNN, GCN, FCN, CVAE, GAN, etc.), an embedding layer (FCN and ANN), and a time series algorithm (RNN, GRU, and LSTM). This module relies on the perception module (detection, tracking, image processing, interaction representation, and feature engineering), which in turn depends on the ego vehicle sensors (camera, GPS, and wheel encoder). The outputs of the behavior prediction modules are subsequently sent to the planning module [13].

The image processing algorithms utilize the RGB images and past trajectories to generate various forms of data, including optical flow, depth, appearance, and both global and local context images. These images provide critical context, such as cropping specific areas around detected objects to focus on localized regions for deeper analysis. To better understand interactions between different traffic agents, the system employs interaction representation algorithms. These algorithms calculate distances between objects, construct graph networks, and generate grid maps that reflect these interactions. Additional features are derived from the objects’ trajectories and internal sensor data from the AV, such as steering angles and velocities.

The outputs of the perception module feed into the behavior prediction module, which includes automated feature extraction and embedding layers. These components generate feature vectors that capture the spatial and temporal properties of the inputs, which are then used to predict various aspects of object behavior, including future trajectories and intentions. Finally, these predictions are utilized by the AV’s planning module to make decisions that ensure safe navigation [13].

4. Uncertainty Measurement

While pedestrian intention prediction models have made significant advancements, they still face challenges due to the inherent uncertainty in human behavior and environmental conditions. Accurate predictions are often hindered by unpredictable pedestrian actions, sensor limitations, and varying external factors. To address these challenges, it is essential to understand and quantify uncertainty in predictions. This section explores different types of uncertainty—epistemic and aleatoric—and their implications for pedestrian intention prediction. It further discusses how uncertainty impacts autonomous vehicle decision-making and presents strategies for effectively handling uncertainty in predictive models.

Uncertainty is a common factor in many real-world scenarios, including financial investments, medical diagnoses, sports outcome predictions, and weather forecasting. In these domains, decisions are based on available data and the inherent uncertainty of that data. Machine learning and deep learning models are increasingly applied for decision-making and inference across various fields. Given the widespread adoption of artificial intelligence (AI), assessing the reliability and effectiveness of AI systems before deployment has become critical [241], as their predictions are often affected by noise and errors in model inference. Consequently, accurately and reliably representing uncertainty is essential in AI systems. Uncertainty principles play a fundamental role in machine learning [242] and deep learning, particularly in applications like pedestrian intention prediction, which are crucial for AVs [243,244,245].

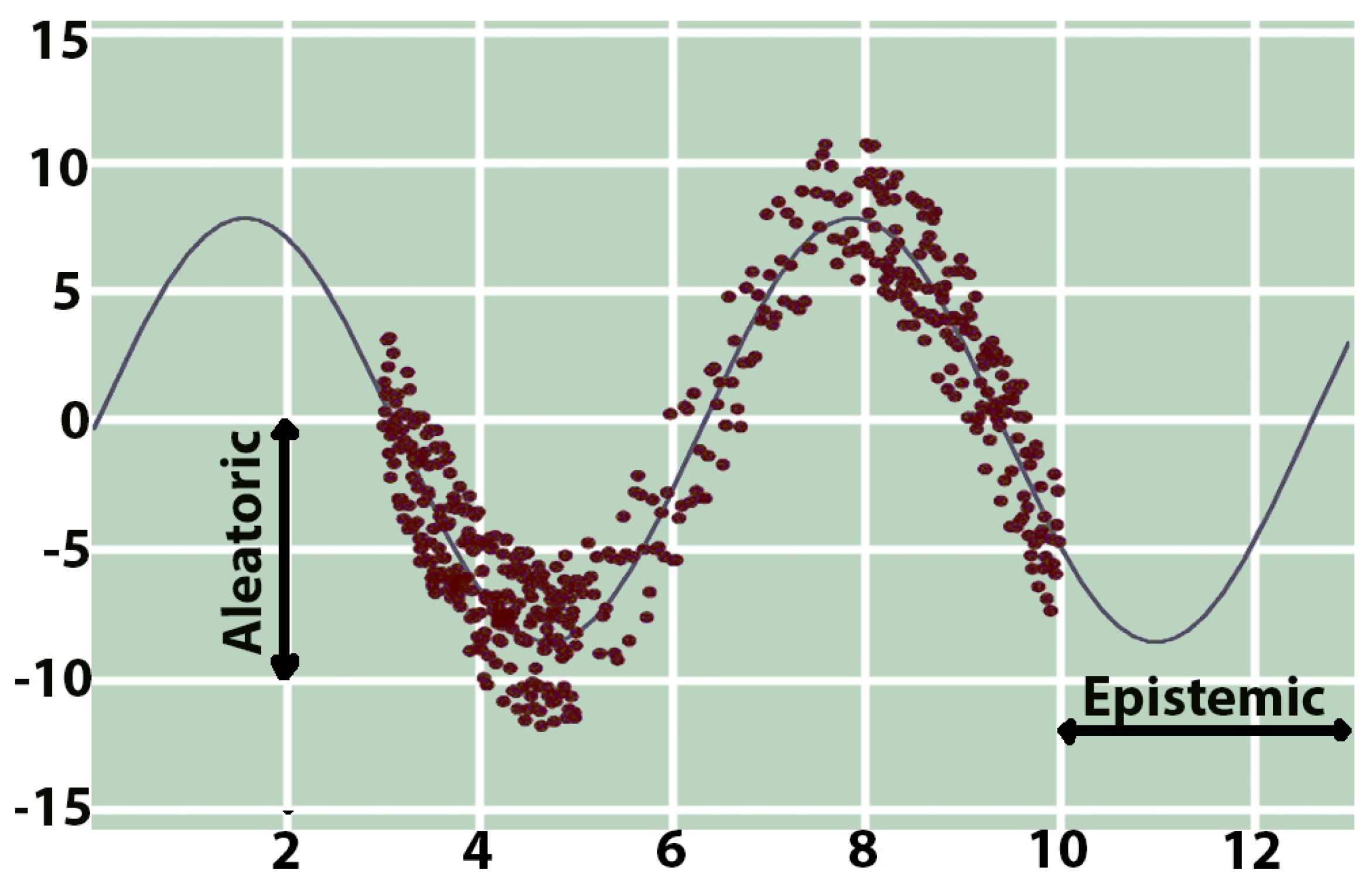

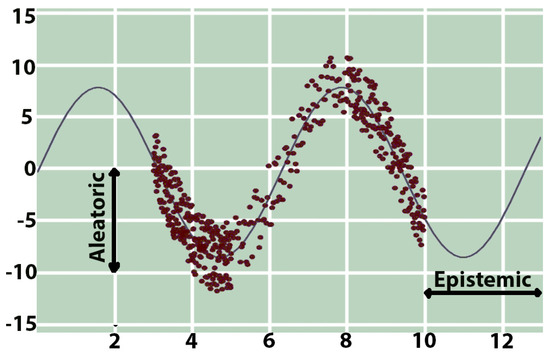

Uncertainty in pedestrian intention prediction refers to the inherent unpredictability and lack of precise knowledge about a pedestrian’s future actions or movements. This uncertainty arises from various factors, including the dynamic nature of pedestrian behavior, environmental conditions, and limitations in sensor data. These challenges make it difficult to accurately predict a pedestrian’s intentions, creating significant hurdles for autonomous vehicle systems. In general, as shown in Figure 11, uncertainty in pedestrian intention prediction can be categorized into epistemic uncertainty (model uncertainty) and aleatoric uncertainty (data or environmental uncertainty).

Figure 11. A visual representation highlighting the fundamental differences between aleatoric and epistemic uncertainties [245].

4.1. Epistemic Uncertainty (Model Uncertainty)

Epistemic uncertainty arises from a lack of knowledge or information about the system, which can potentially be reduced by gathering more data or improving the model’s design and training. This type of uncertainty reflects the limitations in the model’s understanding of the environment and pedestrian behavior.

This type of uncertainty may arise from the following:

Limited Contextual Information

When the model lacks access to all relevant information—such as the pedestrian’s past actions, intentions, or environmental conditions—its predictions become less certain.

Incomplete Modeling

Models may not fully capture the complex dynamics of pedestrian behavior, including social interactions or the influence of external factors such as weather, road conditions, or nearby traffic signals.

For example, consider a scenario where a pedestrian approaches an intersection. If the model is unaware of nearby road signs, the pedestrian’s history of behavior, or the presence of other pedestrians, it may struggle to predict whether the pedestrian intends to cross the street or wait for a signal. This gap in knowledge results in epistemic uncertainty.

4.2. Aleatoric Uncertainty (Data or Environmental Uncertainty)

Aleatoric uncertainty arises from inherent randomness or noise in the environment or the data. Unlike epistemic uncertainty, this type of uncertainty cannot be reduced by gathering more data, as it reflects the unpredictable and stochastic nature of the real world.

This type of uncertainty may arise from the following:

Human Behavior Randomness

Pedestrian movements are often highly unpredictable, especially in dynamic environments where social interactions, distractions, or personal intentions influence behavior.

Environmental Variability

Variations in environmental factors, such as road layout, traffic conditions, and weather, can introduce additional noise into predictions. External influences like the actions of nearby pedestrians or vehicles can further complicate prediction.

Sensor Noise

Inaccuracies in sensor data, caused by occlusions, low resolution, or adverse conditions (e.g., poor lighting, rain, or fog), can distort the input data and contribute to uncertainty in predictions.

For example, consider a pedestrian who suddenly stops or changes direction due to an unexpected distraction, such as receiving a phone call or reacting to another person’s actions. Such behavior introduces randomness that makes it challenging for predictive models to accurately anticipate their future trajectory.
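The distinction between the two uncertainty types can be made operational when several stochastic predictions are available for the same input, for example from ensemble members or repeated sampling. The sketch below uses one common information-theoretic decomposition for a binary "cross / not cross" output: the entropy of the averaged prediction is treated as total uncertainty, the average entropy of the individual predictions as the aleatoric part, and their difference as the epistemic part. This decomposition is an assumption introduced for illustration and is not taken from the studies reviewed here; the function names are hypothetical.

```python
import math

def binary_entropy(p):
    """Entropy (in nats) of a Bernoulli distribution with parameter p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))

def decompose_uncertainty(member_probs):
    """Split predictive uncertainty given crossing probabilities from M models.

    total     = entropy of the mean prediction
    aleatoric = mean entropy of the individual predictions
    epistemic = total - aleatoric (disagreement between the models)
    """
    mean_p = sum(member_probs) / len(member_probs)
    total = binary_entropy(mean_p)
    aleatoric = sum(binary_entropy(p) for p in member_probs) / len(member_probs)
    return total, aleatoric, total - aleatoric
```

If all models output 0.5, the uncertainty is entirely aleatoric: the models agree that the outcome is a coin flip. If half the models output 0 and half output 1, the uncertainty is entirely epistemic: each model is confident, but they disagree, which suggests that more data or a better model could help.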

4.3. Importance of Uncertainty in Pedestrian Intention Prediction

Understanding and handling uncertainty is essential because pedestrians’ intentions can change rapidly due to dynamic and unpredictable environmental factors. For AVs and pedestrian tracking systems, accounting for uncertainty provides several critical benefits:

Safer Decision-Making

Recognizing the uncertainty in predictions allows systems to adopt more cautious behaviors, minimizing the risk of accidents in complex or ambiguous scenarios.

Robustness in Real-Time Prediction

Handling uncertainty enables systems to maintain strong performance in real-time, even when they lack complete knowledge of all influencing factors, such as environmental changes or unseen pedestrian behavior patterns.

Improved Interaction with Other Road Users

By incorporating uncertainty, models can better predict how pedestrians might react to other vehicles, pedestrians, or environmental conditions. This ensures safer and more harmonious interactions among all road users.

4.4. Addressing Uncertainty in Pedestrian Intention Prediction

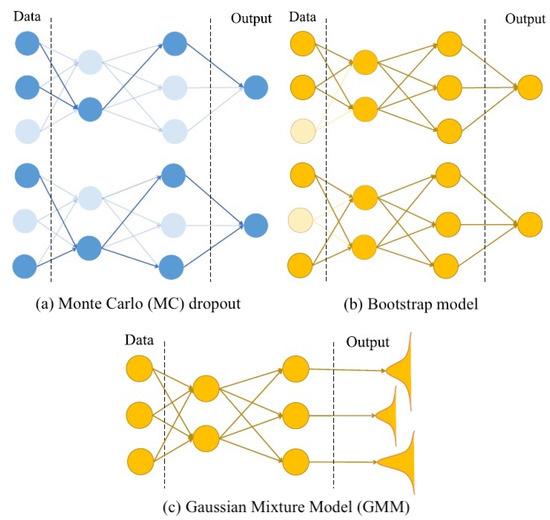

Deep Neural Networks (DNNs) have demonstrated remarkable success in diverse domains such as language modeling [246], speech recognition [247], and image classification [248]. However, while DNNs are widely used for image classification, their deployment in high-stakes applications like AVs [249] and healthcare [250] presents notable challenges. A key concern in these areas is the ability to estimate uncertainty, a capability not inherently provided by standard DNN training methods [251]. To address both epistemic and aleatoric uncertainty, several strategies can be employed:

Probabilistic Models

Models such as Gaussian processes or Bayesian networks can quantify uncertainty and predict a range of possible outcomes, rather than offering a single deterministic trajectory. These models provide more flexible predictions, accounting for various potential pedestrian behaviors.

Ensemble Methods

By combining multiple models with different underlying assumptions, ensemble methods help reduce overall uncertainty by averaging predictions. This approach allows the system to consider diverse scenarios and enhances robustness.
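A bootstrap ensemble is one simple way to realize the ensemble idea above. In the sketch below, each ensemble member is a deliberately trivial model (the mean observed step length) fitted on a different bootstrap resample of the data; in practice the members would be neural networks. The function names, the number of members, and the seed are assumptions made for illustration.

```python
import random
import statistics

def bootstrap_ensemble(displacements, n_models=50, seed=0):
    """Fit one trivial model per bootstrap resample of the observations.

    Each member estimates the mean step length from a resample drawn
    with replacement, so members differ only through the data they saw.
    """
    rng = random.Random(seed)
    n = len(displacements)
    members = []
    for _ in range(n_models):
        sample = [displacements[rng.randrange(n)] for _ in range(n)]
        members.append(sum(sample) / n)
    return members

def ensemble_prediction(members):
    """Average the member predictions and report their spread."""
    return statistics.fmean(members), statistics.pstdev(members)
```

The averaged output serves as the prediction, while the spread across members is often used as a proxy for model (epistemic) uncertainty: when the members agree, the spread is small, and it grows when the available data leave the model poorly constrained.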

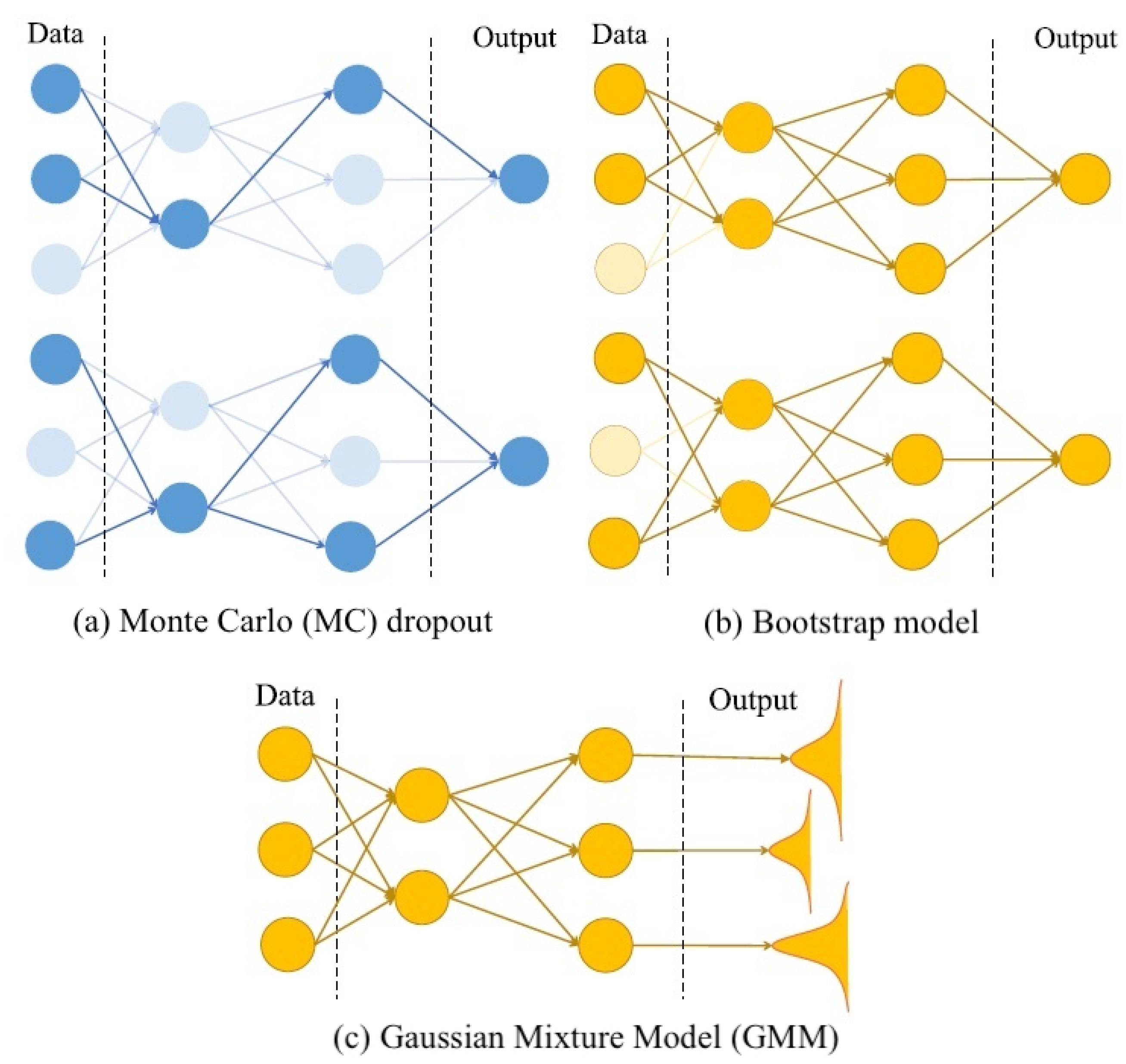

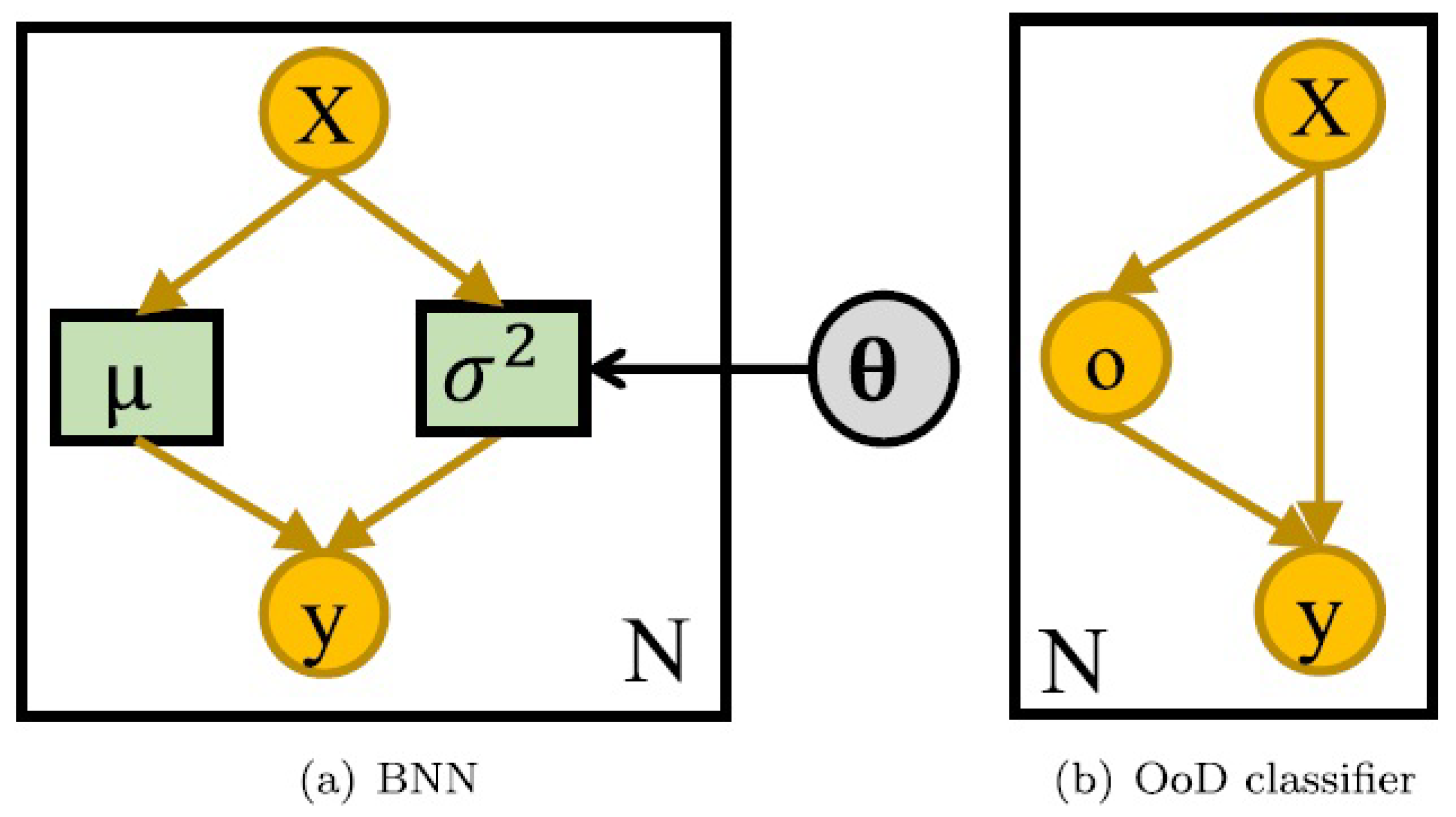

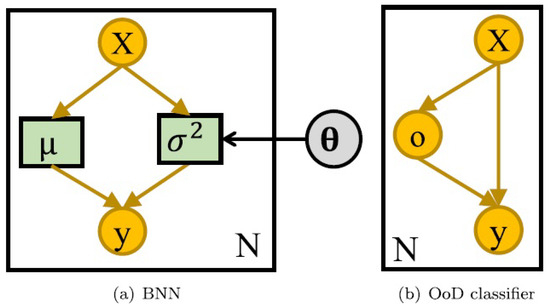

By incorporating these strategies, pedestrian intention prediction systems can deliver more reliable and safer predictions, especially in complex and dynamic environments. A schematic comparison of three different uncertainty models, namely MC dropout, the Bootstrap model, and the Gaussian Mixture Model (GMM), is presented in Figure 12. Additionally, Figure 13 shows two graphical representations of uncertainty-aware models: BNN and OoD (Out-of-Distribution). The next section provides a brief overview of various approaches to uncertainty quantification.

Figure 12. Diagram illustrating three distinct uncertainty models along with their corresponding network architectures [252].

Figure 13. A visual depiction of two distinct uncertainty-aware (UA) models. Source: Adapted from [253].

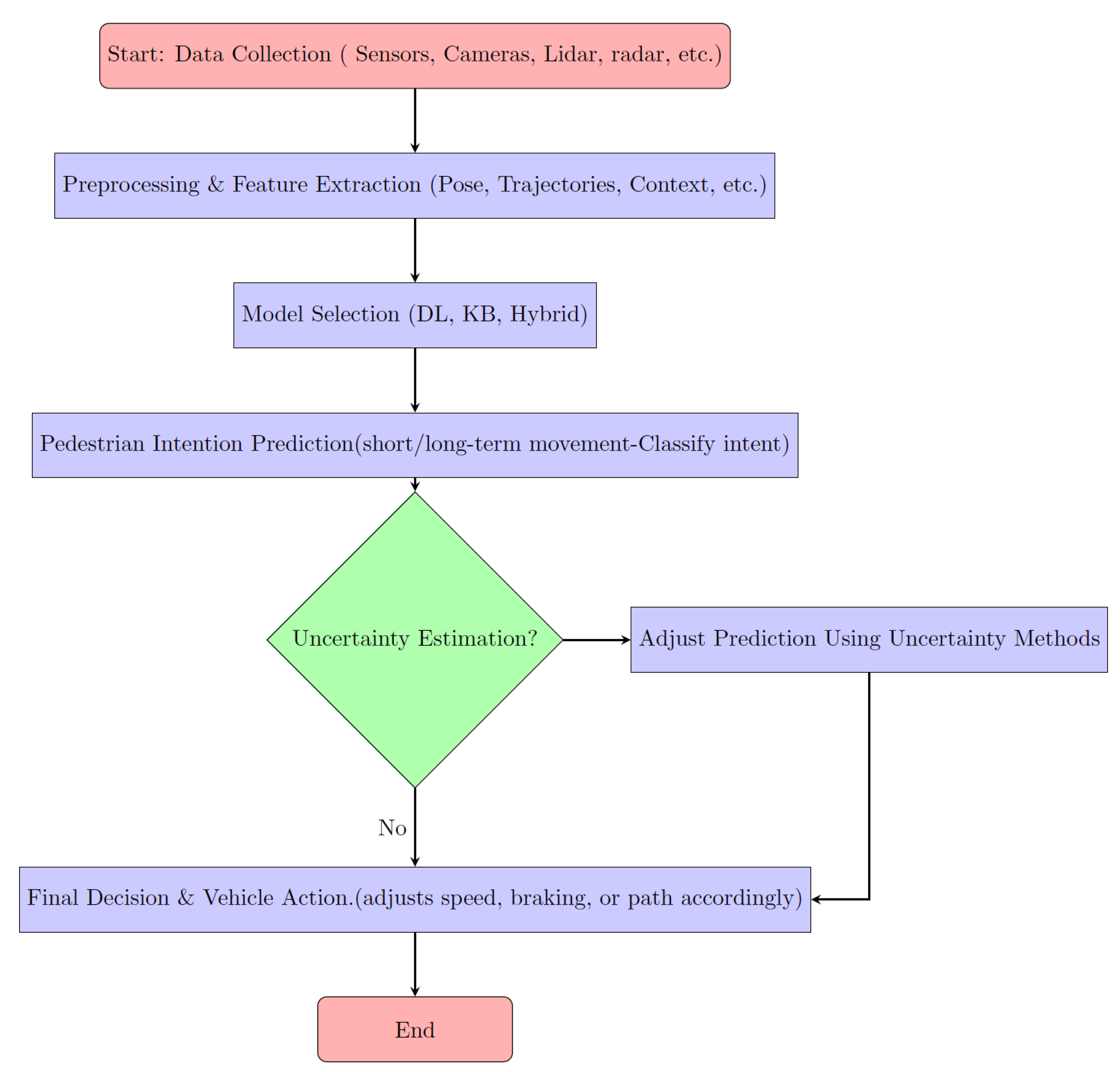

The hierarchical structure of PIP, including the uncertainty option, is presented as a block diagram in Figure 14. Additionally, Table 7 provides an overview of studies that utilize uncertainty approximation in PIP for various applications.

Figure 14. Block diagram of pedestrian intention prediction framework with feature categories and processing steps.

Table 7. Summary of studies applying uncertainty estimation in their PIP applications (sorted by year).

4.5. Balancing Computational Efficiency and Safety

In the context of pedestrian intention prediction, handling uncertainty requires not only accurate models but also the ability to balance computational demands with safety considerations. Achieving a balance between these two remains a significant challenge in the presence of both epistemic and aleatoric uncertainty. Several studies have explored different methods to address this trade-off:

Bayesian Neural Networks (BNNs)

These networks incorporate uncertainty to improve safety, but they are computationally expensive. Nayak et al. [260] applied BNNs for trajectory forecasting, which enhances predictive confidence but requires substantial computational resources.

Transformer-based Models

These models can combine high accuracy with real-time performance, offering a promising way to balance efficiency and safety. Xie et al. [261] introduced GTransPDM, a transformer-based model that efficiently decouples spatial information to predict pedestrian intention. The model achieves 92% accuracy on the PIE dataset with an inference time of just 0.05 ms, making it a viable option for real-time systems.

Real-time Tracking with Kalman Filter

For efficient real-time prediction, the Kalman filter remains a widely used technique due to its low computational overhead. Guo et al. [262] combined Camshift with the Kalman filter for pedestrian tracking, enabling real-time operation. However, while such systems are effective in simpler environments, they may struggle in highly dynamic or complex scenarios.

Multi-task Learning Frameworks

These frameworks combine trajectory and intention prediction, improving both efficiency and robustness. PTINet, developed by Munir and Kucner [263], simultaneously predicts pedestrian trajectory and intention. By incorporating contextual features, PTINet provides a more efficient solution while maintaining robust performance in various environments.

These techniques demonstrate that managing the trade-off between computational demands and safety is an ongoing challenge. Future research may focus on dynamic models that adjust their complexity based on context, balancing both computational efficiency and safety.
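The low computational overhead of the Kalman filter mentioned above is visible in a minimal sketch: one predict-and-update cycle for a one-dimensional constant-velocity model needs only a handful of arithmetic operations. This is a generic textbook filter, not the Camshift-based pipeline of Guo et al.; the function name `kalman_step` and the noise values `q` and `r` are assumptions chosen for illustration.

```python
def kalman_step(state, cov, z, dt=0.1, q=0.01, r=0.25):
    """One predict/update cycle of a 1D constant-velocity Kalman filter.

    state: (position, velocity); cov: 2x2 covariance as nested tuples;
    z: measured position. q (process noise added to both diagonal terms)
    and r (measurement noise variance) are illustrative values.
    """
    p, v = state
    (a, b), (c, d) = cov

    # Predict: x' = F x and P' = F P F^T + Q with F = [[1, dt], [0, 1]].
    p_pred, v_pred = p + dt * v, v
    a_p = a + dt * (b + c) + dt * dt * d + q
    b_p = b + dt * d
    c_p = c + dt * d
    d_p = d + q

    # Update: only the position is measured (H = [1, 0]).
    s = a_p + r                      # innovation variance
    k0, k1 = a_p / s, c_p / s        # Kalman gain
    y = z - p_pred                   # innovation
    new_state = (p_pred + k0 * y, v_pred + k1 * y)
    new_cov = (((1 - k0) * a_p, (1 - k0) * b_p),
               (c_p - k1 * a_p, d_p - k1 * b_p))
    return new_state, new_cov
```

The filter assumes linear motion with Gaussian noise, which explains both its speed and its weakness: a pedestrian who stops or turns abruptly violates the constant-velocity assumption, and the estimate lags until new measurements pull it back.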

4.6. Impact of Traffic Regulations on Pedestrian and Trajectory Prediction

Traffic regulations play a critical role in shaping pedestrian and vehicle behavior, and their integration into pedestrian intention prediction models can significantly reduce uncertainty and improve the safety of AVs. Pedestrian behavior is often influenced by external regulatory factors, such as crosswalk rules, traffic light patterns, and the right-of-way laws, which can serve as constraints in predicting pedestrian movements. Incorporating these regulatory constraints into prediction models helps in improving their accuracy and reliability by narrowing down the possible pedestrian intentions.

4.6.1. Key Regulatory Constraints

Crosswalk Rules

Pedestrians are generally expected to cross streets at designated crosswalks. Integrating this constraint allows prediction models to assume that pedestrians will follow this pattern, especially when the AV can detect the presence of a crosswalk. This reduces epistemic uncertainty, as the model can predict pedestrian behavior more confidently when it recognizes that a pedestrian is approaching a crosswalk.

Traffic Light Patterns

Pedestrians typically obey traffic signals, waiting for the green pedestrian light to cross. Accounting for these signals in pedestrian trajectory prediction models helps to reduce uncertainty by establishing predictable patterns for when pedestrians are likely to cross roads. This is particularly useful in urban environments, where AVs can interact with multiple traffic lights and pedestrian signals.

Right-of-Way Laws

Pedestrian right-of-way laws influence when pedestrians choose to cross streets. In many jurisdictions, pedestrians have the right of way at intersections, meaning they are more likely to begin crossing when traffic is stopped. This factor can be incorporated into models to enhance predictions of pedestrian behavior in regulated traffic environments.

Speed Limits and Vehicle Behavior

Regulations governing vehicle speed also impact pedestrian safety and behavior. Pedestrians may be more likely to cross streets when vehicles are moving at lower speeds, as they feel safer. Additionally, AVs can adjust their behavior in response to speed limits, ensuring that they respect the safety of pedestrians by slowing down or stopping when necessary.

4.6.2. Incorporating Regulations into Prediction Models

Incorporating these regulations into pedestrian intention prediction models offers several advantages:

Reduction in Epistemic Uncertainty

By embedding these constraints into models, AV systems can better anticipate pedestrian behavior, reducing the uncertainty associated with human unpredictability.

Context-Aware Prediction

Regulations provide additional context for understanding pedestrian behavior. For instance, if the pedestrian is at a crosswalk or waiting at a red pedestrian light, the system can more confidently predict that they will cross or wait, respectively.

Safer Autonomous Decision-Making

By adhering to traffic regulations, AVs can make safer decisions, anticipating pedestrian movements more accurately and adjusting their behavior accordingly to avoid accidents. As pedestrian behavior often follows patterns governed by these regulations, the integration of regulatory information into prediction models can enhance the AV’s ability to safely interact with pedestrians in complex environments.
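One simple way to picture how regulatory context can narrow a prediction is to treat each cue as a shift applied to the model's crossing probability in log-odds space. The sketch below is illustrative only: the cue names, the shift values in `CONTEXT_SHIFT`, and the function `adjust_crossing_probability` are assumptions made for this example and are not taken from any of the surveyed methods.

```python
import math
from typing import Iterable

def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))

def sigmoid(x: float) -> float:
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical log-odds shifts for regulatory cues (illustrative values,
# not calibrated on any dataset).
CONTEXT_SHIFT = {
    "at_crosswalk": 1.0,
    "pedestrian_signal_green": 1.5,
    "pedestrian_signal_red": -1.5,
}

def adjust_crossing_probability(p_model: float, context: Iterable[str]) -> float:
    """Shift a model's crossing probability using detected regulatory cues."""
    eps = 1e-6
    p = min(max(p_model, eps), 1.0 - eps)  # keep logit finite at 0 and 1
    shift = sum(CONTEXT_SHIFT.get(cue, 0.0) for cue in context)
    return sigmoid(logit(p) + shift)

# An undecided prediction (0.5) moves toward "cross" at a green-lit crosswalk
# and toward "wait" at a red pedestrian signal.
p_green = adjust_crossing_probability(0.5, ["at_crosswalk", "pedestrian_signal_green"])
p_red = adjust_crossing_probability(0.5, ["pedestrian_signal_red"])
```

In practice such shifts would be learned jointly with the prediction model rather than fixed by hand, and unknown cues are deliberately ignored here so that missing map or signal information leaves the model output unchanged.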

While incorporating regulations into pedestrian intention prediction models is beneficial, several challenges remain:

Variability in Regulations

Traffic regulations can vary across different regions, making it necessary for AV systems to adapt to local laws and rules.

Model Flexibility

Predictive models must be flexible enough to handle varying levels of enforcement of traffic regulations and the possibility that pedestrians may not always follow them.

Real-Time Adaptation

AVs must be capable of integrating real-time sensor data and adjusting predictions based on observed pedestrian behavior, which may deviate from expected regulatory patterns due to environmental factors, distractions, or individual pedestrian choices.
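The challenges above suggest that rule compliance should be treated as a quantity to be estimated rather than assumed. A minimal sketch, assuming compliance at a given location can be observed as a binary outcome, is a Beta-Bernoulli estimate that is updated online; the class name `ComplianceEstimate` and the uniform prior are assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class ComplianceEstimate:
    """Beta posterior over how often pedestrians obey a rule at one location."""
    alpha: float = 1.0  # prior pseudo-count of compliant observations
    beta: float = 1.0   # prior pseudo-count of non-compliant observations

    def update(self, complied: bool) -> None:
        """Fold one observed pedestrian into the posterior."""
        if complied:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    @property
    def mean(self) -> float:
        """Posterior mean compliance rate."""
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        """Posterior variance; shrinks as more pedestrians are observed."""
        n = self.alpha + self.beta
        return (self.alpha * self.beta) / (n * n * (n + 1.0))

estimate = ComplianceEstimate()
prior_variance = estimate.variance
for complied in [True, True, False, True]:
    estimate.update(complied)
```

The posterior mean could then scale how strongly a regulatory cue influences the prediction, so that a region with frequent jaywalking relies less on signal state than one with strict compliance.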

In conclusion, by incorporating regulatory constraints into pedestrian intention prediction, AV systems can make more accurate predictions, ultimately improving safety and decision-making. Future research should focus on developing more sophisticated models that integrate both traffic regulations and real-time sensor data, enabling AVs to navigate complex, regulated environments safely and efficiently.

5. Datasets

The foundation of any successful pedestrian intention prediction model lies in the quality and comprehensiveness of the datasets used for its development. In the domain of automated driving, where accurate and timely predictions of pedestrian behavior are critical for safety and efficiency, the choice of dataset becomes even more crucial. These datasets provide the raw data needed for training and testing models and shape the models’ performance and generalizability across different environments and scenarios.

This section examines the aspects that define a robust dataset for pedestrian intention prediction. We start by outlining the essential prerequisites that such datasets must meet to be effective in real-world applications. Following this, we review some of the most popular and widely used datasets in this field, highlighting their unique features and contributions to the advancement of pedestrian intention prediction. We then discuss the types of sensors commonly employed in creating these datasets, along with their advantages and limitations. Finally, we present a table summarizing key studies that have utilized these datasets, offering insights into their application and impact on the research community.

Refer to Table 8 for a comprehensive summary of the datasets frequently used in pedestrian intention prediction research.

Table 8.

Datasets used in pedestrian intention prediction and related works.

5.1. Dataset Requirements for Pedestrian Intention Prediction

The effectiveness of pedestrian intention prediction models heavily relies on the quality and characteristics of the datasets used for training and evaluation. Some of the essential prerequisites for datasets in this domain, particularly for applications in automated driving, are outlined as follows [4,275]:

Naturalistic Data

The dataset should consist of traffic agents that behave naturally, without being influenced by the method of data collection, such as visible sensors.

Size

Data-driven methods rely on large datasets for training to ensure accurate performance during testing. Consequently, the dataset should contain a sufficient number of trajectories or instances from diverse traffic agents.

Heterogeneity

The dataset should be collected from diverse locations and times, encompassing different types of road infrastructure, traffic densities, legal and implied traffic norms, and pedestrian social interactions.

Accuracy

The positional accuracy of the recorded trajectories should be high, with positioning errors not exceeding 0.1 m, to minimize errors in model predictions.
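The accuracy requirement can be checked directly when a higher-grade reference trajectory is available. The following sketch assumes 2D positions in meters and time-aligned samples; the helper names are hypothetical, and only the 0.1 m limit comes from the requirement stated above.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) position in meters

MAX_POSITION_ERROR_M = 0.1  # limit stated in the accuracy requirement

def position_errors(recorded: Sequence[Point], reference: Sequence[Point]) -> List[float]:
    """Euclidean error of each recorded point against its reference point."""
    if len(recorded) != len(reference):
        raise ValueError("trajectories must be time-aligned and of equal length")
    return [
        math.hypot(rx - gx, ry - gy)
        for (rx, ry), (gx, gy) in zip(recorded, reference)
    ]

def meets_accuracy_requirement(
    recorded: Sequence[Point],
    reference: Sequence[Point],
    limit_m: float = MAX_POSITION_ERROR_M,
) -> bool:
    """True if no recorded point deviates from the reference by more than limit_m."""
    return max(position_errors(recorded, reference), default=0.0) <= limit_m

reference_track = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
good_track = [(0.03, 0.04), (1.0, 0.05), (2.0, 0.0)]
bad_track = [(0.0, 0.0), (1.2, 0.0), (2.0, 0.0)]
```

Here `good_track` stays within the limit, while `bad_track` contains one point that is 0.2 m off and therefore fails the check.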

5.2. Popular Datasets for Pedestrian Intention Prediction

Several well-known datasets have been developed and widely adopted in the field of pedestrian intention prediction. These datasets offer a range of scenarios and challenges that are critical for advancing research and development in this domain. Below are descriptions of these datasets, highlighting their unique features and contributions:

JAAD (Joint Attention in Autonomous Driving)

The JAAD dataset focuses on the behavioral aspects of pedestrians in traffic scenarios, providing detailed annotations related to pedestrian intention, such as crossing and stopping. Despite its usefulness in studying pedestrian awareness, the action labels in JAAD can sometimes be ambiguous and may not always accurately represent pedestrian intentions. For example, if a pedestrian waits for an ego-vehicle to pass before crossing, this may be labeled as “not crossing”, which could be misleading [276].

PIE (Pedestrian Intention Estimation)

Created by the same team behind JAAD, the PIE dataset addresses ambiguities in JAAD by explicitly distinguishing between crossing and non-crossing intentions rather than merely annotating pedestrian actions. It provides rich visual context, including vehicle-pedestrian interactions, environmental factors, and temporal dynamics, making it a valuable resource for studying pedestrian behavior prediction in complex and dynamic settings [211].

STIP (Stanford-TRI Intent Prediction)

The STIP dataset is notable for its multi-camera setup, which includes cameras mounted on the front, left, and right of the ego-vehicle, offering a comprehensive view of the traffic scene. This wider perspective helps in better understanding pedestrian intentions in relation to the entire environment, making STIP a valuable resource for advanced PIP models. It provides annotations for pedestrian intentions in various scenarios, thereby widening the scope of research in this field [277].

TITAN (Trajectory Inference using Targeted Action priors Network)

TITAN provides a comprehensive collection of pedestrian trajectories in urban environments, accompanied by detailed annotations that facilitate both trajectory and intention prediction tasks. A key feature of TITAN is its unique 5-tier non-conflicting action labeling system, which ranges from basic postures to high-level contextual interpretations. This extensive annotation framework helps eliminate ambiguities in forecasting future trajectories by capturing a diverse range of pedestrian behaviors. Additionally, TITAN includes labels for transportive and communicative actions, further enhancing its utility for in-depth pedestrian behavior analysis [278].

DPDD (Driving-Pedestrian Dynamics Dataset)

The DPDD dataset is among the earliest to include annotated pedestrian actions and offers ground-truth data for pedestrian intention analysis. However, since it was recorded in a controlled environment with actors simulating pedestrian behavior, its realism is limited. Due to the absence of natural pedestrian density and common real-world occlusions, DPDD is less suitable for training models compared to more naturalistic datasets [279].

BDD100K (Berkeley DeepDrive 100K)

BDD100K is a large-scale, diverse dataset built to support a wide range of tasks, including object detection, tracking, and behavioral cue analysis. It captures a vast array of variations present in traffic scenarios, from different appearances and pose configurations of objects or people to various environmental conditions. BDD100K’s comprehensive coverage makes it an invaluable resource for training robust PIP models [280].

ETH/UCY

Although primarily used for trajectory prediction, the ETH and UCY datasets have been adapted for intention prediction by extracting pedestrian behavioral cues and contextual information from the scenes [136,281].

LOKI (Long Term and Key Intentions)

Recognizing the importance of human intention prediction for trajectory estimation, LOKI was released by Girase et al. [282] as a dataset targeting the anticipation of human intentions over both short-term and long-term temporal horizons. LOKI’s detailed annotations make it a key resource for improving the predictability of pedestrian actions in real-world scenarios [4].

PSI (Pedestrian Situated Intent)

To address limitations in existing datasets, such as the lack of human-level reasoning and the ability to handle sudden intention changes, Chen et al. [283] introduced the PSI dataset. PSI integrates visual and language cues to improve the explainability of human behavior prediction algorithms. This novel approach aims to significantly enhance the cognitive abilities of AI models in understanding pedestrian behaviors across diverse scenarios [4].

Waymo Open Dataset

The Waymo Open Dataset is a large-scale, high-quality dataset designed to advance research in autonomous driving, collected from Waymo’s fleet of self-driving vehicles. It includes a wide range of real-world driving scenarios and offers detailed sensor data such as camera images, LiDAR point clouds, and rich annotations for various road users, including pedestrians. The dataset is particularly valuable for pedestrian intention prediction as it provides comprehensive multi-sensor data from multiple perspectives around the vehicle, capturing diverse environmental conditions and complex interactions in urban and suburban settings. This makes the Waymo Open Dataset an essential resource for developing and evaluating models that aim to predict pedestrian behavior in dynamic, real-world environments [284].

While these datasets offer valuable resources for the PIP domain, they also highlight the ongoing challenges in this field. Datasets like DPDD, despite their early contributions, face limitations in realism and variability, which newer datasets like BDD100K and PSI aim to overcome. The evolution of these datasets reflects the growing complexity and demands of pedestrian intention prediction, pushing the boundaries of what AI models can achieve in understanding and predicting human behavior.
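The difference between action labels and intention labels, raised above for JAAD and PIE, is easier to see when the two are stored as separate fields. The record layout below is a hypothetical illustration and does not reproduce the actual annotation schema of either dataset.

```python
from dataclasses import dataclass
from enum import Enum

class Action(Enum):
    CROSSING = "crossing"
    NOT_CROSSING = "not_crossing"

@dataclass(frozen=True)
class PedestrianSample:
    """Hypothetical annotation record keeping action and intention apart."""
    track_id: str
    observed_action: Action  # what the pedestrian does in the observed frames
    intends_to_cross: bool   # whether the pedestrian wants to cross at all

def is_yielding(sample: PedestrianSample) -> bool:
    """A pedestrian who wants to cross but is currently waiting."""
    return sample.intends_to_cross and sample.observed_action is Action.NOT_CROSSING

# A pedestrian waiting for the ego-vehicle to pass: an action-only label
# would read "not crossing", although the intention is to cross.
waiting = PedestrianSample("ped_001", Action.NOT_CROSSING, intends_to_cross=True)
bystander = PedestrianSample("ped_002", Action.NOT_CROSSING, intends_to_cross=False)
```

With an action-only label, `waiting` and `bystander` are indistinguishable; keeping the intention field separate lets a model learn to tell them apart.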

5.3. Sensors Used in Pedestrian Intention Prediction Datasets

The creation of PIP datasets relies on various sensors that capture the necessary data for analysis and model training. Each sensor type has its advantages and limitations, which can impact the quality and applicability of the dataset. Below is an overview of the commonly used sensors:

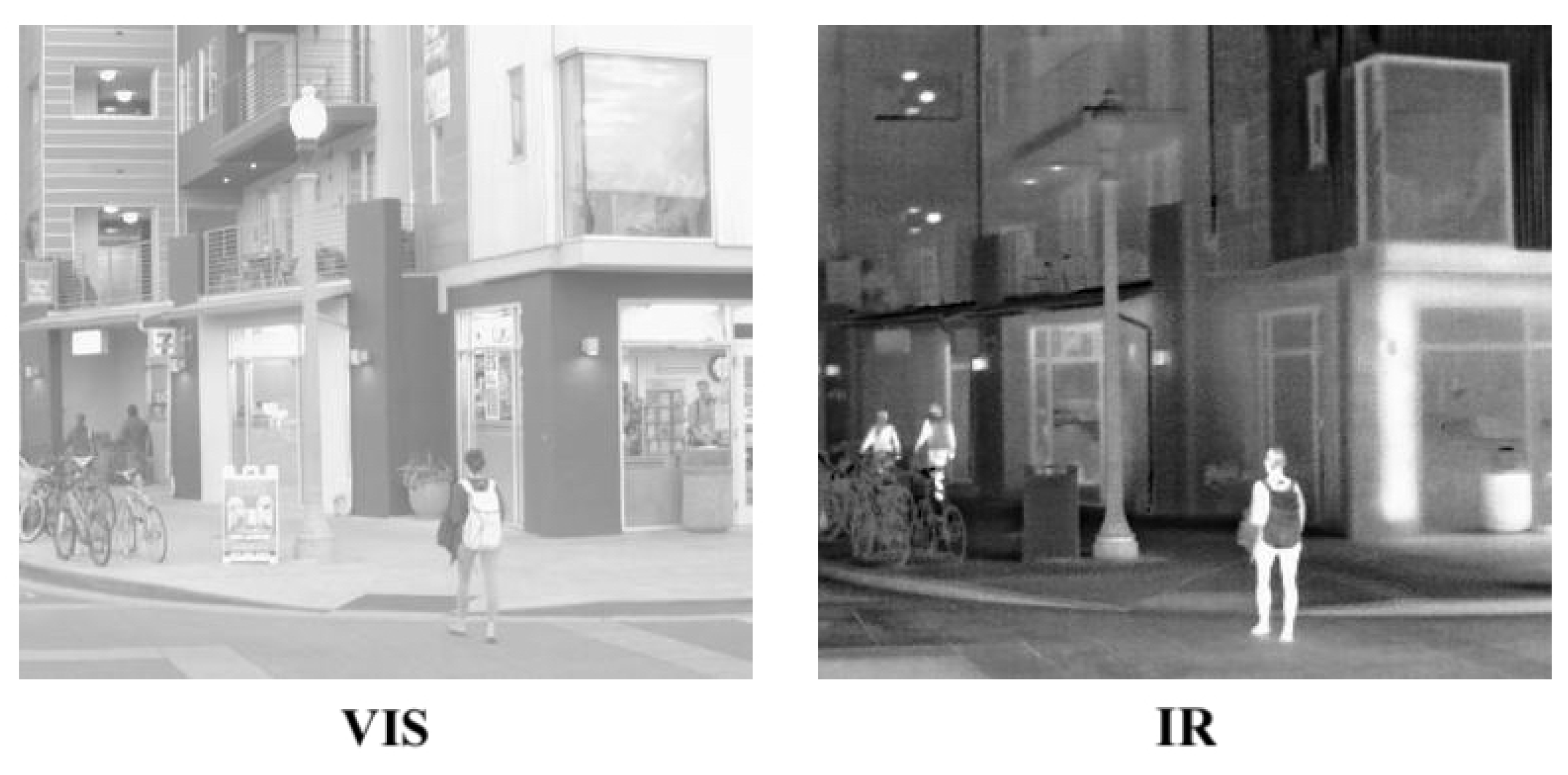

Camera

Cameras are one of the most widely used sensors for capturing pedestrian behavior in urban environments. They provide rich visual information that can be used to extract features such as body posture, gestures, and contextual cues (e.g., traffic lights, crosswalks). These features are essential for understanding pedestrian intentions and predicting their movements accurately.

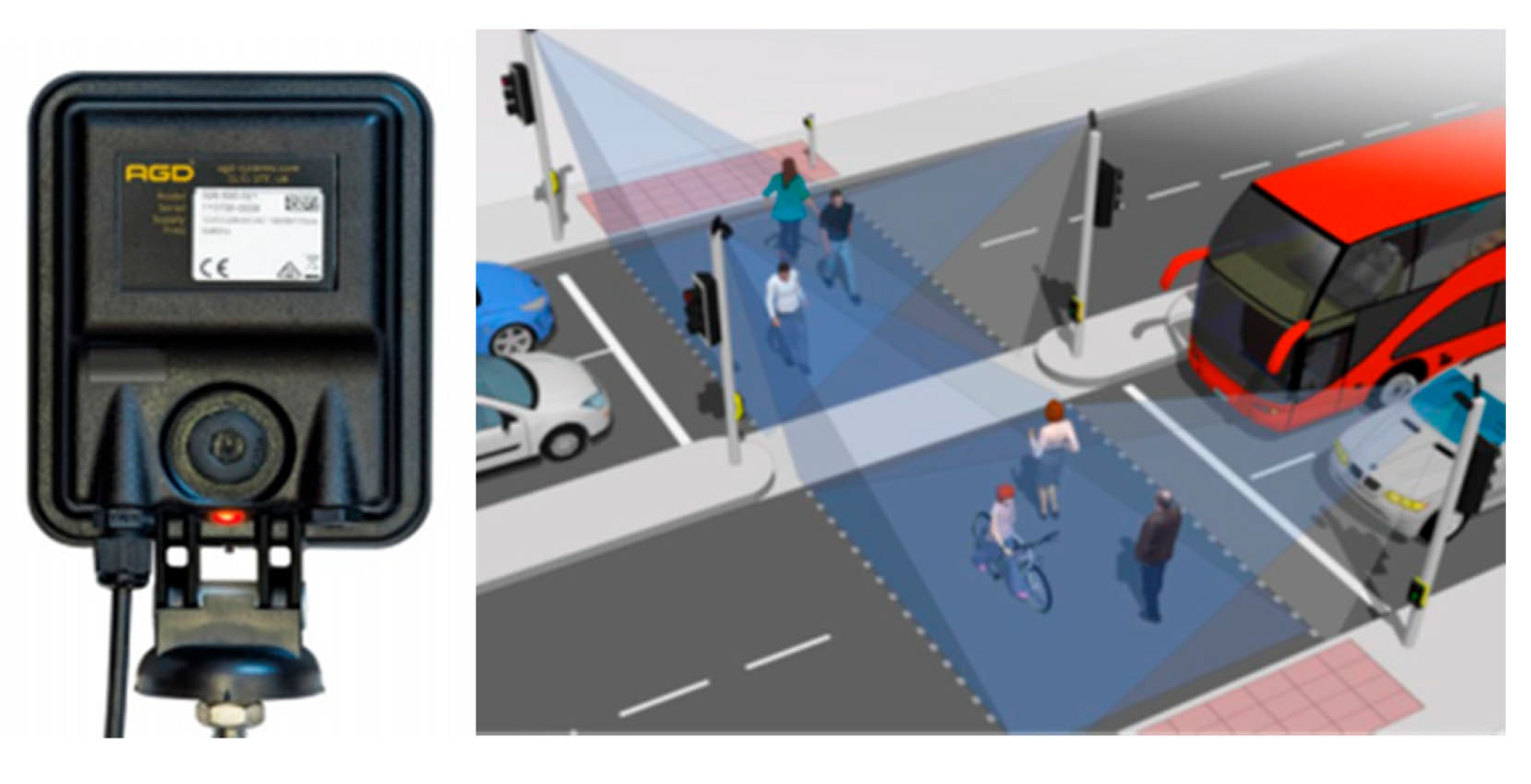

Figure 15 shows an example of a camera used for this purpose. It illustrates how cameras are positioned to maximize coverage and monitor pedestrian dynamics, so that the relevant data are captured for further analysis.

Figure 15.

Pedestrian detection with SVC cameras. A Valeo 360 surround view camera provides a three-dimensional perspective of the environment [12].

- Pros: High-resolution imagery, ability to capture contextual and environmental details, and compatibility with deep learning methods for visual recognition.

- Cons: Sensitive to lighting conditions (e.g., low light, glare), occlusions, and weather-related issues like rain or fog, which can degrade image quality.

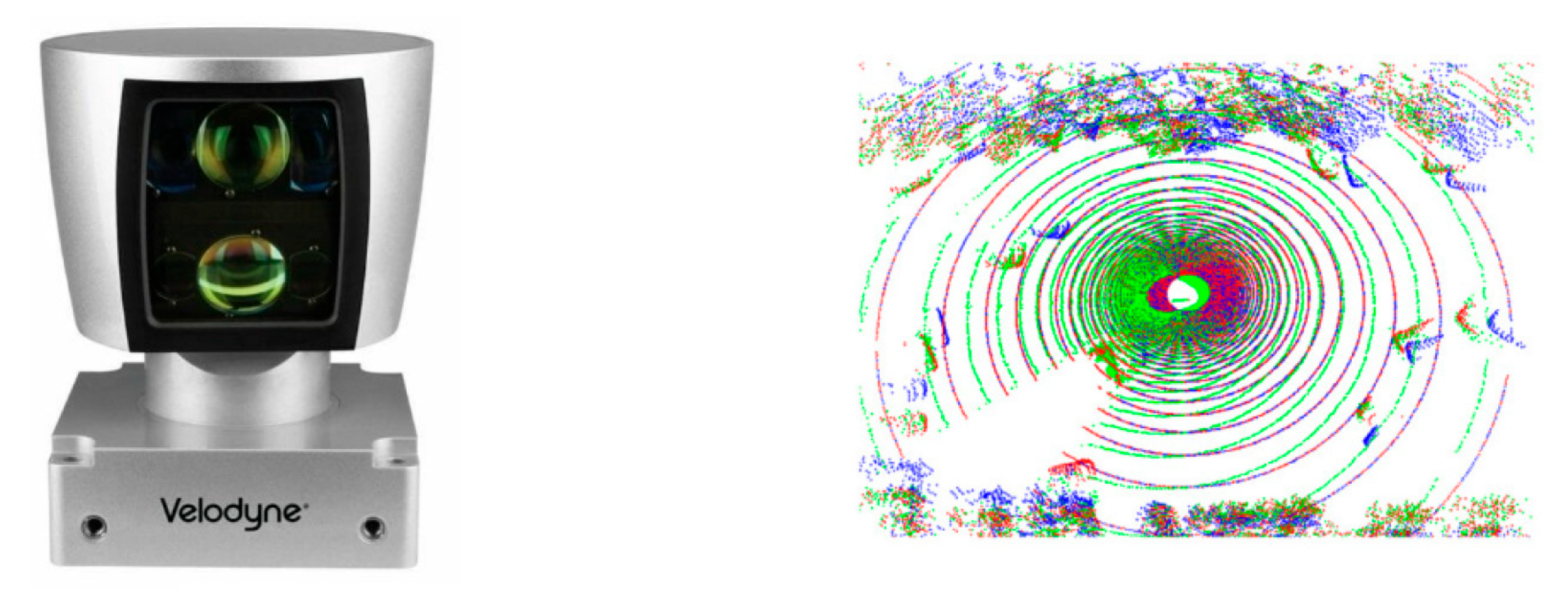

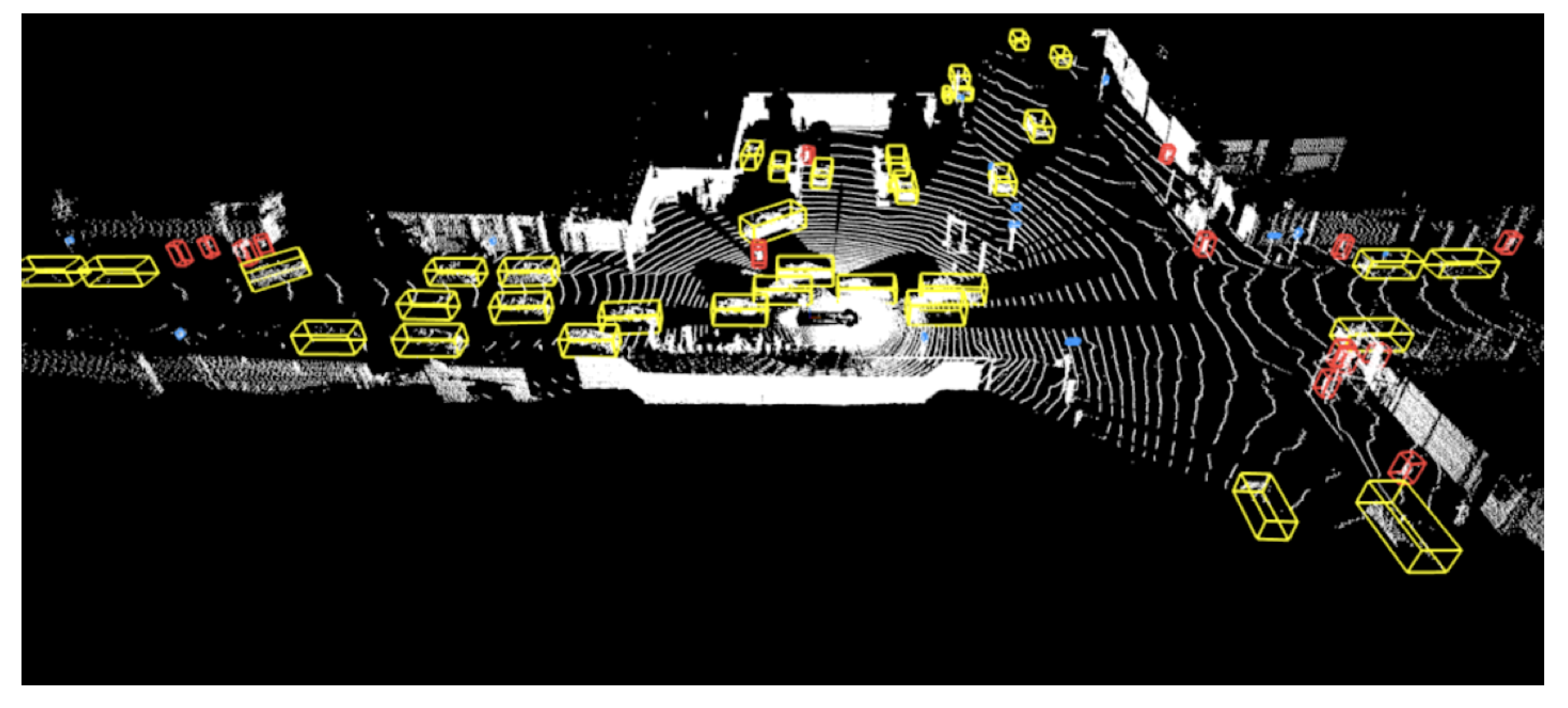

LiDAR

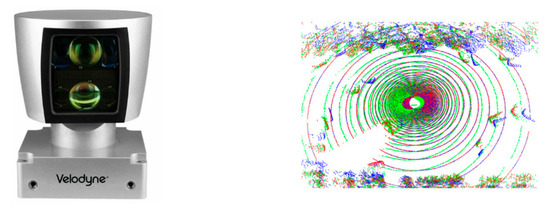

LiDAR sensors, as illustrated in Figures 16 and 17, emit laser pulses to measure distances and generate high-precision 3D maps of the environment. These sensors are crucial for accurately detecting the position, size, and shape of pedestrians and other objects, providing detailed spatial information that aids in understanding complex environments.

Figure 16.

A 3D-LiDAR sensor can be utilized for various ranges, including short, medium, telescopic, or combined ranges (such as dual short or dual medium). Depicted here is a Velodyne HDL-64E sensor and the corresponding point cloud data it produces [12].

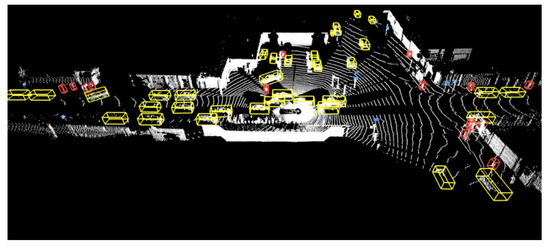

Figure 17.

LiDAR label example: yellow = vehicle, red = pedestrian, blue = sign, pink = cyclist [284].

- Pros: High accuracy in distance measurement, effective in low-light conditions, and capable of generating detailed 3D point clouds.

- Cons: High cost, large data storage requirements, and performance can be affected by weather conditions such as heavy rain or fog.
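The distance measurement described above rests on the time-of-flight principle for pulsed LiDAR: a pulse travels to the target and back, so the range is half the round-trip time multiplied by the speed of light. The sketch below is an idealized illustration that ignores sensor calibration and motion compensation; the function names and the spherical-to-Cartesian convention (azimuth in the horizontal plane, elevation above it) are assumptions for this example.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def tof_range_m(round_trip_time_s: float) -> float:
    """Range from a pulse's round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0

def to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float) -> Tuple[float, float, float]:
    """Convert one range and beam direction into an (x, y, z) point in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)

# A return received 200 ns after emission corresponds to a target about 30 m away.
range_m = tof_range_m(200e-9)
point = to_cartesian(range_m, azimuth_rad=0.0, elevation_rad=0.0)
```

Repeating this conversion for every beam direction in a scan yields the kind of 3D point cloud shown in Figure 16.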

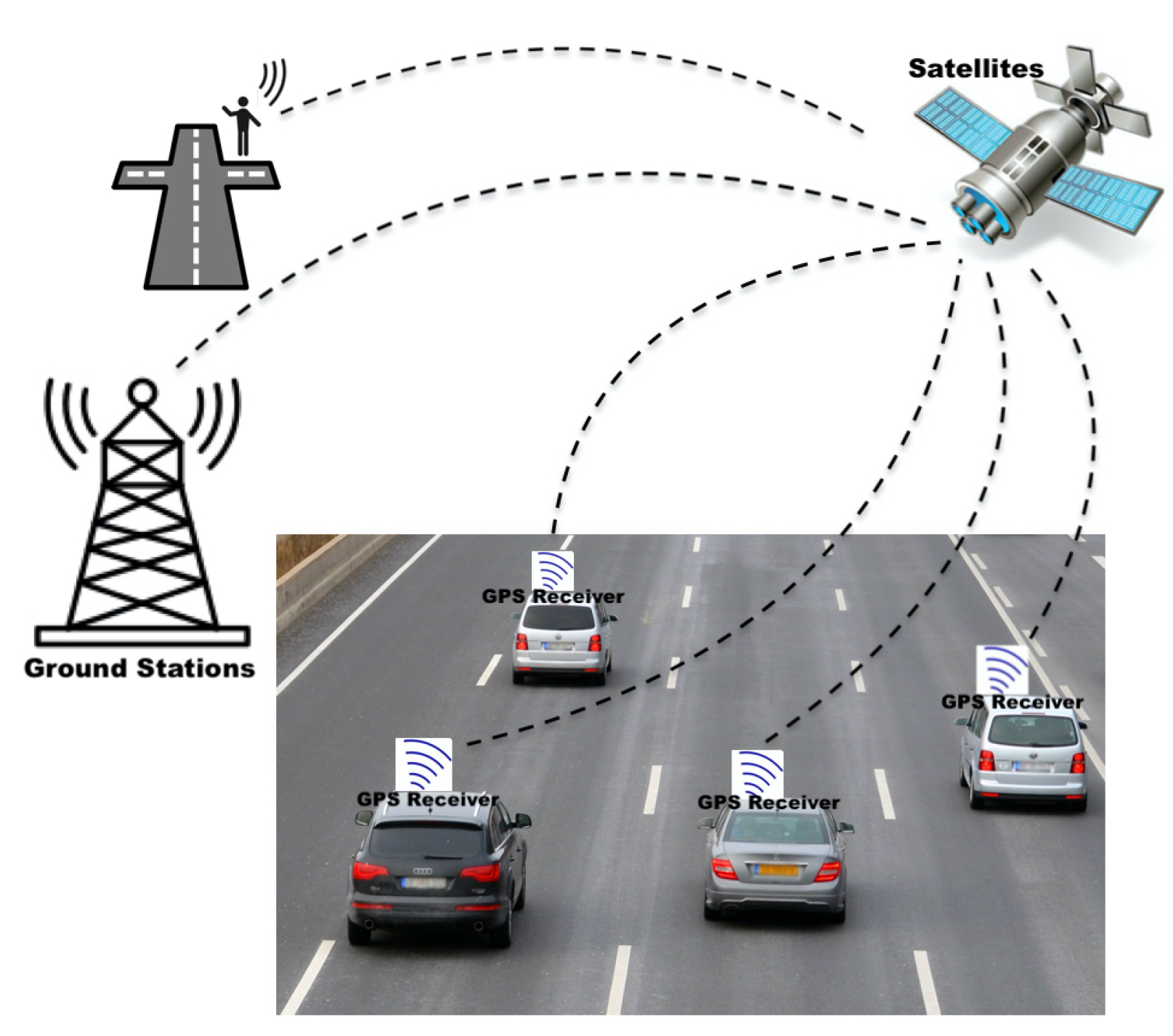

Radar