1. Introduction

Smart cities are being widely developed and have recently been investigated from various perspectives. Technological development helps to build and monitor these cities, which aim to improve the quality of life by improving services such as education, healthcare, and transportation. These services have been linked to technological innovation [1,2,3]. Traffic crises are among the most critical challenges in traditional cities, especially crowded ones. Modern technologies have emerged to provide solutions, particularly for enhancing safety on road networks. Emergency vehicle management is one of the most critical problems and requires sensitive, real-time solutions. Special driving rules apply to vehicles around emergency vehicles, such as clearing the way for them and giving them the highest priority when traversing a signalized road intersection.

Detecting emergency vehicles on the road network is the first step in reacting to them according to their location, speed, and other parameters. Traditionally, drivers have seen these emergency vehicles and responded visually. In more crowded and fast-moving road scenarios, emergency vehicles use sirens to alert surrounding drivers. Some drivers panic when they hear these sudden sirens, and sometimes they fail to determine the emergency vehicle’s exact location, speed, or other characteristics. As a result, they fail to respond promptly and adequately, threatening the safety of the road network. Given the advanced equipment and technologies of modern vehicles, an intelligent emergency vehicle detection system is needed. Models that use artificial intelligence can increase the efficiency of logistics transportation services, reduce response time, choose the most suitable and fastest routes, and alert surrounding vehicles to clear the way [4,5,6,7].

Artificial intelligence (AI) offers many varied solutions in this field. It has great potential in Internet applications that monitor and manage traffic and predict future events through machine learning (ML). Many studies have tried to address this topic by detecting and classifying vehicles on the roads, and some have specifically studied detecting emergency vehicles in photos and videos to inform and alert the relevant authorities [8,9]. However, problems have been reported with these previous studies concerning the method used, the dataset tested, or the accuracy of the resulting model.

Several studies in the literature have introduced intelligent emergency vehicle detection models to classify vehicles on road networks. The main weakness of these studies is the suitability of the datasets used. An unbalanced dataset in which emergency vehicles rarely appear is not the best choice for training machine learning models to detect emergency vehicles [10]. We have also noticed that some of the datasets used are unrealistic: their photos or videos are not taken from road scenarios (e.g., toys or vehicles on display at a dealership) [11,12,13]. In addition, accuracy is not always the most appropriate criterion on which to base the acceptance of results [14]. Another study, by Sheng et al. [15], proposed a learning-based approach to video temporal coherence, motivated by the inadequacy of recently devised techniques, specifically filters designed for improving, restoring, editing, and analyzing static images, when applied to video clips. The underlying insight is that a video is more than a mere sequence of individual images. This distinction poses a challenge for effectively detecting emergency vehicles moving on roads.

The main problem we may face in applying the proposed model is the dataset on which it will be trained. Several conditions must be met by the dataset to obtain precise and accurate detection of emergency vehicles, including its size, the quality of its images, and its class balance [16]. Consequently, this work aims to find a model that achieves these goals with the required accuracy standards. We also plan to produce a suitable new dataset that achieves acceptable accuracy using Generative Adversarial Networks (GANs), owing to their proven ability to enhance dataset quality and address specific challenges in vehicle detection. GANs have been successfully used to enhance datasets in many fields [17,18,19,20], and GAN-based image translation models have been used to improve images taken under poor lighting conditions, enhancing night images and increasing the accuracy of vehicle detection in the dark [21,22].

In addition, some GAN-based image restoration models have proven effective in mitigating the negative effects of occlusion on vehicle detection [23]. This supports the use of GANs to create synthetic images that enrich the dataset and simulate reality under various difficult circumstances while maintaining dataset quality, which ultimately leads to more accurate detection of emergency vehicles. Finally, we aim to test and verify the generated dataset.

Figure 1 illustrates the general steps of the proposed work, encompassing all the stages from obtaining the initial dataset and its refinement using GANs, to the evaluation and validation of the newly generated dataset.

The remainder of this paper is organized as follows: Section 2 reviews previous work in this field of research. Section 3 investigates the main characteristics of existing datasets that have been used to detect emergency vehicles on road networks and clarifies the main weaknesses and problems of each. Section 4 presents the steps of gathering, preparing, and augmenting the images for the dataset, and Section 5 details the steps of generating new images using GANs. The testing, verification, and comparison processes are explained in Section 6. Finally, Section 7 concludes the paper and recommends future studies.

3. Available Traffic Datasets Containing Emergency Vehicles

This section analyzes various datasets containing images of emergency vehicles, specifically, ambulances, fire trucks, and police vehicles. We assess the datasets based on the type, quantity, and quality of images, emphasizing their realism and the fact that they were captured from real-world scenarios. Furthermore, we investigate the image augmentation techniques used in these datasets, how researchers used them, and for what purposes. We aim to identify the limitations and shortcomings in the available datasets. Moreover, we validate our findings by testing the previously proposed models in this field on real-world images to determine their effectiveness.

3.1. Emergency Vehicle Detection

The "emergency vehicle detection" dataset [13] from Roboflow [31] contains 365 training images and 158 testing and validation images at a medium resolution of 640 × 640 pixels. However, the only emergency vehicles in the training set are ambulances; there are no other emergency vehicles, such as police vehicles or fire trucks. The dataset is also unbalanced, as only a few images contain ambulance vehicles. Additionally, no image augmentation techniques were applied. Dissanayake et al. [32] used this dataset with the YOLOv3 detection algorithm. The dataset was divided into 80% for training and 20% for testing and validation, with the aim of detecting emergency vehicles upon their arrival at a traffic light and giving them higher priority to pass through the signalized intersection. This study obtained an accuracy of 82%. When we tested the online model available on Roboflow [13] with several realistic images of emergency vehicles, its detection performance was poor. This suggests that the model is not trained to detect the many types of emergency vehicles outside its limited dataset.
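As a minimal illustration of the 80/20 partition used by Dissanayake et al. [32], the sketch below shuffles a list of image identifiers with a fixed seed and splits it. The helper `split_dataset`, the seed, and the image IDs are hypothetical; the original study's splitting procedure is not described here.

```python
import random


def split_dataset(items, train_frac=0.8, seed=42):
    """Shuffle a copy of `items` reproducibly and split it into training and hold-out parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> the same split on every run
    cut = int(len(shuffled) * train_frac)  # floor of the training share
    return shuffled[:cut], shuffled[cut:]


# Hypothetical IDs standing in for the 365 + 158 = 523 images of the Roboflow dataset
image_ids = [f"img_{i:04d}" for i in range(523)]
train_ids, holdout_ids = split_dataset(image_ids)
print(len(train_ids), len(holdout_ids))  # 418 105
```

Fixing the seed makes the split reproducible, so that later comparisons between models are made on the same hold-out images.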

3.2. “JanataHack_AV_ComputerVision” and “Emergency vs. Non-Emergency Vehicle Classification”

The second dataset, found in multiple locations on Kaggle as “JanataHack_AV_ComputerVision” [11] and “Emergency vs. Non-Emergency Vehicle Classification” [33], contains approximately 3300 images, including 1000 images of emergency vehicles such as ambulances, fire trucks, and police vehicles. It also contains around 1300 images of other vehicles, making it a nearly balanced dataset. However, the images are of poor quality, with a low resolution of 224 × 224 pixels. Kherraki and Ouazzani [34] used this dataset for emergency vehicle classification, achieving over 90% accuracy. Still, the primary issues remain the quality of the images and the limited use of data augmentation.

3.3. Ambulance Regression

The "Ambulance Regression" dataset [35] contains 307 images of ambulances in the YOLOv8 format, with 294 training images and 13 testing images. It applies only standard augmentation techniques such as rotation, cropping, and brightness adjustment. However, the dataset lacks real road images, and there are very few test images.

3.4. Ambulans

The "Ambulans" dataset [36] contains 2134 images of ambulances in the YOLOv8 format and uses only standard augmentation techniques, including rotation, cropping, brightness adjustment, exposure adjustment, and Gaussian blur. However, augmentation has reduced the quality of some images. In addition, many of the images are not from real roads but from exhibitions or the Internet.

3.5. Ambulance_detect

The "ambulance_detect" dataset [37] contains 1400 images of ambulances in the YOLOv8 format, divided into 980 training images, 140 test images, and 280 validation images, without any augmentation techniques applied. The main challenge is the shortage of real road images: some images in the dataset are from car exhibitions, whereas others were taken from real-world scenarios. In addition, the number of test images is relatively small compared to the number of training images, which may affect the model’s ability to generalize.

3.6. Emergency Vehicle Detection

The "Emergency Vehicle Detection" dataset [38] contains 1680 vehicle images in the YOLOv8 format and relies only on standard augmentation techniques such as horizontal flipping, random cropping, and salt noise. The dataset is divided into 1470 training images, 71 test images, and 139 validation images. The challenge here is that the images do not focus solely on emergency vehicles for training purposes. Most of the images were captured using video cameras placed in specific locations, which may limit the dataset’s ability to train a model to detect emergency vehicles in different locations. In addition, because the cameras are located far away, some of the captured images may not show the detailed features of emergency vehicles, making it difficult for the model to classify and detect emergency vehicles accurately.

3.7. FALCK

The "FALCK" dataset [12] contains 176 images of ambulances and firefighting vehicles in the YOLOv8 format, with no augmentation techniques applied. It has 140 training images, one testing image, and 35 validation images. The images are of good quality, but real road images are lacking, and a single test image is far too few relative to the number of training images.
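Several of the datasets above share the problem of a small test split. The following sketch tabulates the reported train/test/validation counts and flags datasets whose test share falls below a threshold. The 10% threshold and the function names are hypothetical choices for illustration; validation images count toward the total but not toward the test share.

```python
# (train, test, validation) counts as reported in the dataset descriptions above
SPLITS = {
    "Ambulance Regression": (294, 13, 0),
    "ambulance_detect": (980, 140, 280),
    "Emergency Vehicle Detection [38]": (1470, 71, 139),
    "FALCK": (140, 1, 35),
}

MIN_TEST_SHARE = 0.10  # hypothetical threshold, chosen only for illustration


def split_shares(train, test, val):
    """Return the fraction of all images that falls into each subset."""
    total = train + test + val
    return train / total, test / total, val / total


def audit(splits, min_test_share=MIN_TEST_SHARE):
    """Print each dataset's test share and return the names below the threshold."""
    flagged = []
    for name, (train, test, val) in splits.items():
        _, test_share, _ = split_shares(train, test, val)
        print(f"{name}: {train + test + val} images, test share {test_share:.1%}")
        if test_share < min_test_share:
            flagged.append(name)
    return flagged


flagged = audit(SPLITS)
print(flagged)
```

Under this illustrative threshold, only "ambulance_detect" (exactly 10% test images) passes; the other three keep well under 5% of their images for testing.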

3.8. Sirens

Similarly, the “Sirens” dataset [39] comprises 213 medium-quality images of ambulances, firefighting vehicles, and police vehicles in the YOLOv8 format, including 145 training images, 22 testing images, and 44 validation images. However, this dataset is very small, which limits its ability to support high-accuracy, realistic detection models. It also lacks real-world road images.

3.9. Smart Car

The “Smart car” dataset [40] was designed for detecting emergency vehicles, including ambulances, firefighting vehicles, and police vehicles. It includes 1152 medium-quality images pre-processed with auto-orientation and resizing to 640 × 640 (stretch), with no augmentation techniques, and is split into 921 training and 231 testing images. However, most of the images were not taken on the road, and some are unrealistic. Furthermore, the dataset contains no images of vehicles from other classes.

The datasets we reviewed exhibit various limitations and inadequacies:

1. Many available datasets lack realism: they comprise images not obtained from real-world scenarios. This may affect the models’ ability to generalize to practical situations.

2. Available datasets often suffer from class imbalance, with some types of emergency vehicles having a disproportionate number of images compared to others. Consequently, the models’ performance may be biased toward certain classes and suboptimal for others.

3. Most datasets have limited test data, making it challenging to assess the models’ performance accurately. Poor test data quality also makes it difficult to develop models that work effectively in real-world scenarios.

4. Some datasets have few images and lack comprehensive data augmentation, hindering the models’ generalization to different scenarios. Conversely, excessive augmentation may reduce image quality.

5. The limited usage of these datasets in published research suggests that they are not widely recognized or effective for ambulance detection.
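Class imbalance (item 2) also explains why accuracy alone can be a misleading acceptance criterion. The toy example below uses a hypothetical test set of 95 non-emergency and 5 emergency images and a degenerate detector that never predicts an emergency vehicle: its accuracy looks high, yet its recall on the emergency class is zero. The numbers are illustrative only and do not come from any of the reviewed datasets.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, false negatives, and true negatives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    return tp, fp, fn, tn


def accuracy_and_recall(y_true, y_pred, positive=1):
    """Overall accuracy and recall on the positive (emergency) class."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, recall


# Hypothetical test set: 95 non-emergency (0) and 5 emergency (1) images
y_true = [0] * 95 + [1] * 5
# A degenerate "detector" that never predicts an emergency vehicle
y_pred = [0] * 100
print(accuracy_and_recall(y_true, y_pred))  # (0.95, 0.0)
```

Reporting per-class recall (or precision and F1) alongside accuracy exposes this failure mode, which a balanced dataset also helps to avoid.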

Table 3 summarizes the main findings from the datasets examined. To address the limitations identified above, we explored the potential of Generative Adversarial Networks (GANs) for data augmentation, as discussed in the next section.

5. Generating New Images Using GANs

Generative Adversarial Networks (GANs) comprise a generator and a discriminator, which makes them adept at generating images that resemble real ones [46]. The generator produces synthetic data resembling real data, while the discriminator distinguishes between real and synthetic data [47]. The Deep Convolutional Generative Adversarial Network (DCGAN) is a type of GAN that employs convolutional neural networks (CNNs) in both the generator and the discriminator, allowing it to capture spatial dependencies in images and generate realistic images [48]. A DCGAN model can therefore help generate new images similar to real ones. The generated images can be added to the existing dataset, increasing its size and diversity and improving the performance of machine learning models trained on it.
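For reference, the adversarial game between the two networks is commonly written as the minimax objective of the original GAN formulation, where $D(x)$ is the discriminator's estimated probability that image $x$ is real and $G(z)$ is the image generated from noise vector $z$. This is the standard textbook form rather than a formula stated in this work; in binary cross-entropy implementations, the generator is often trained instead with the non-saturating loss in the second line.

$$\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$

$$\mathcal{L}_G = -\,\mathbb{E}_{z \sim p_z}\left[\log D(G(z))\right]$$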

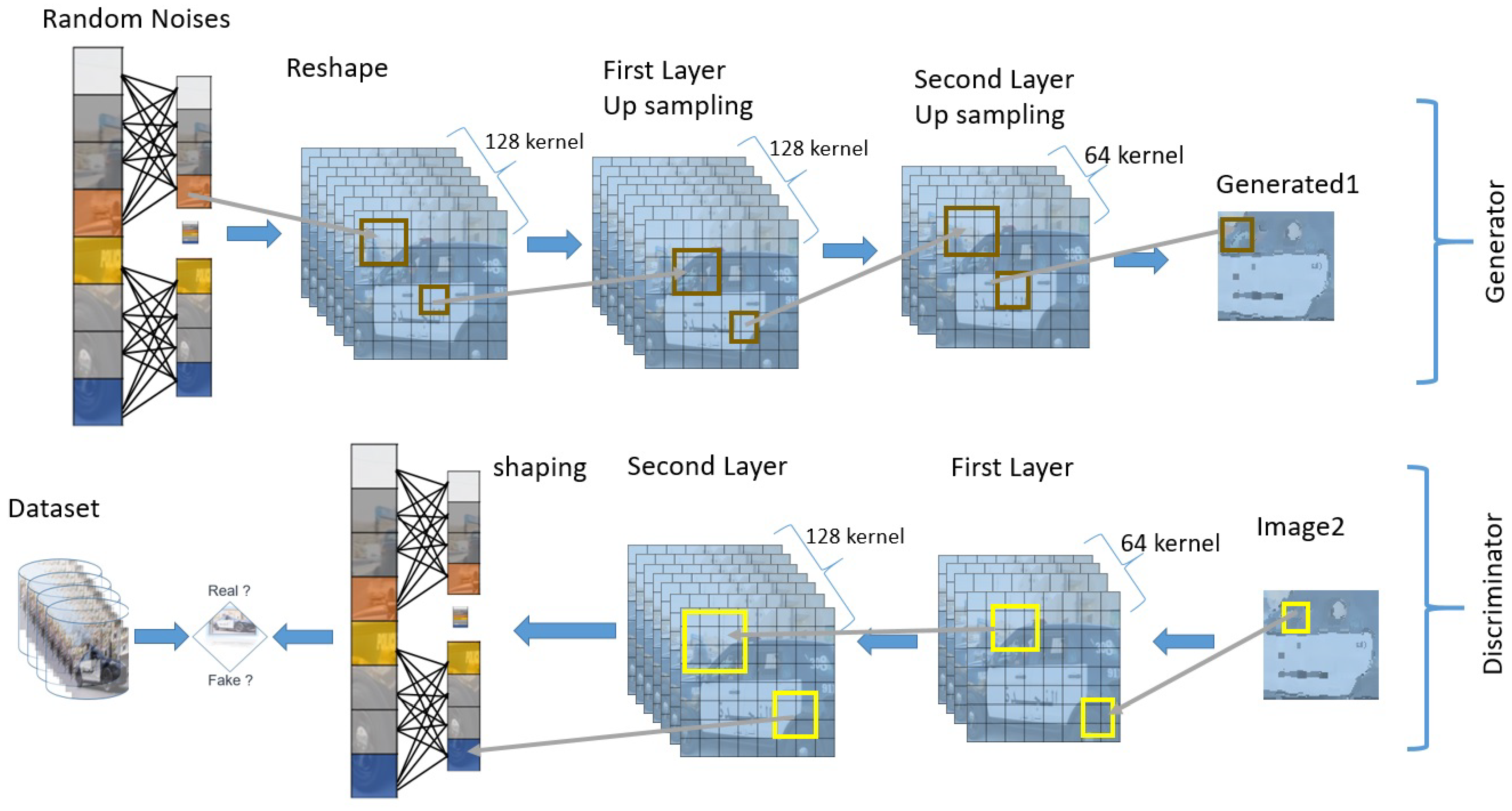

Figure 3 visually represents the image generation process using GANs. The generator takes random noise as input and progressively transforms it through multiple layers, ultimately producing the desired image. The discriminator, in turn, assesses the authenticity of the generated image: it takes the image as input and passes it through its layers to judge whether it is real, having learned what real images look like from the original dataset.

As discussed earlier, the GAN model consists of two networks: a generator and a discriminator.

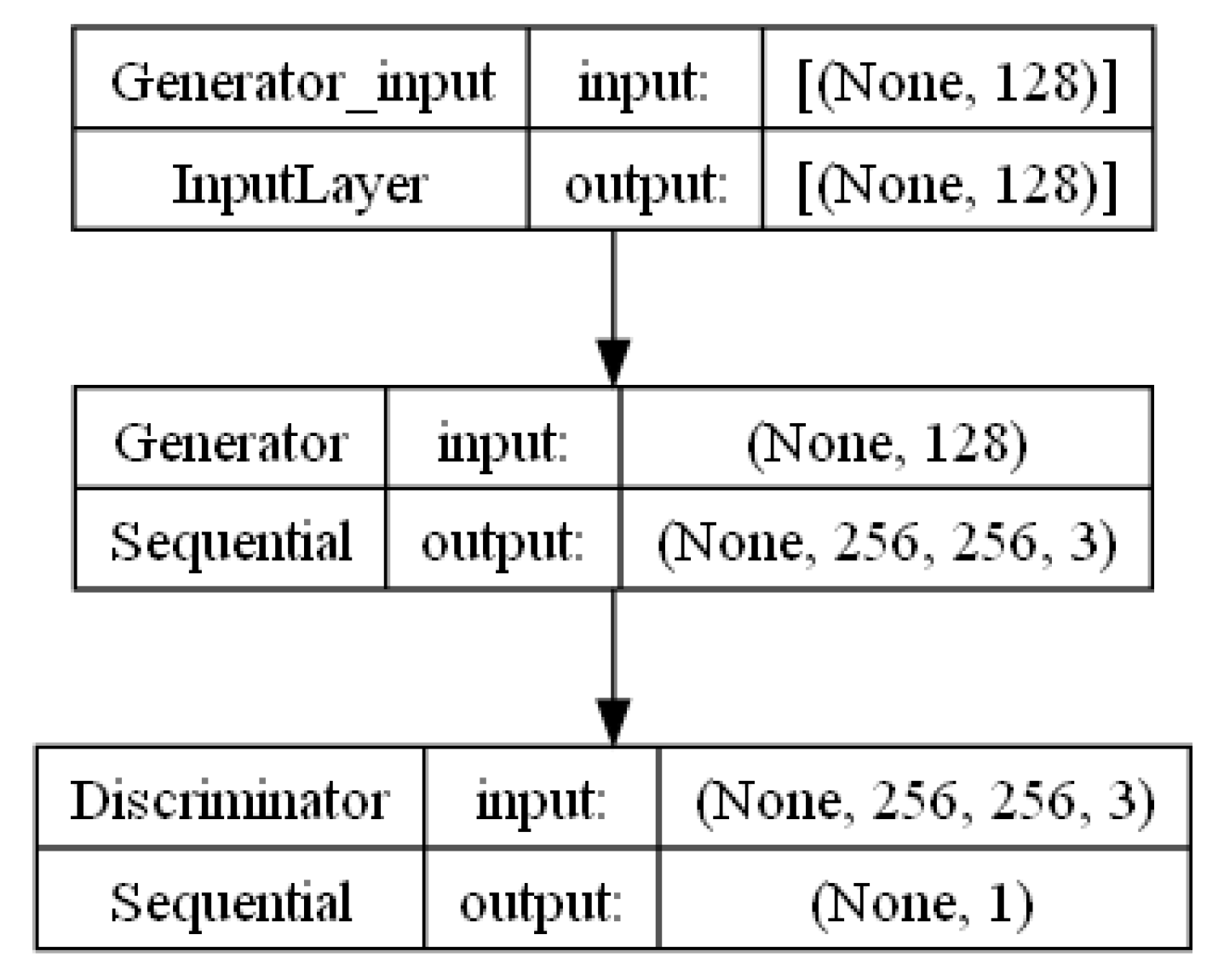

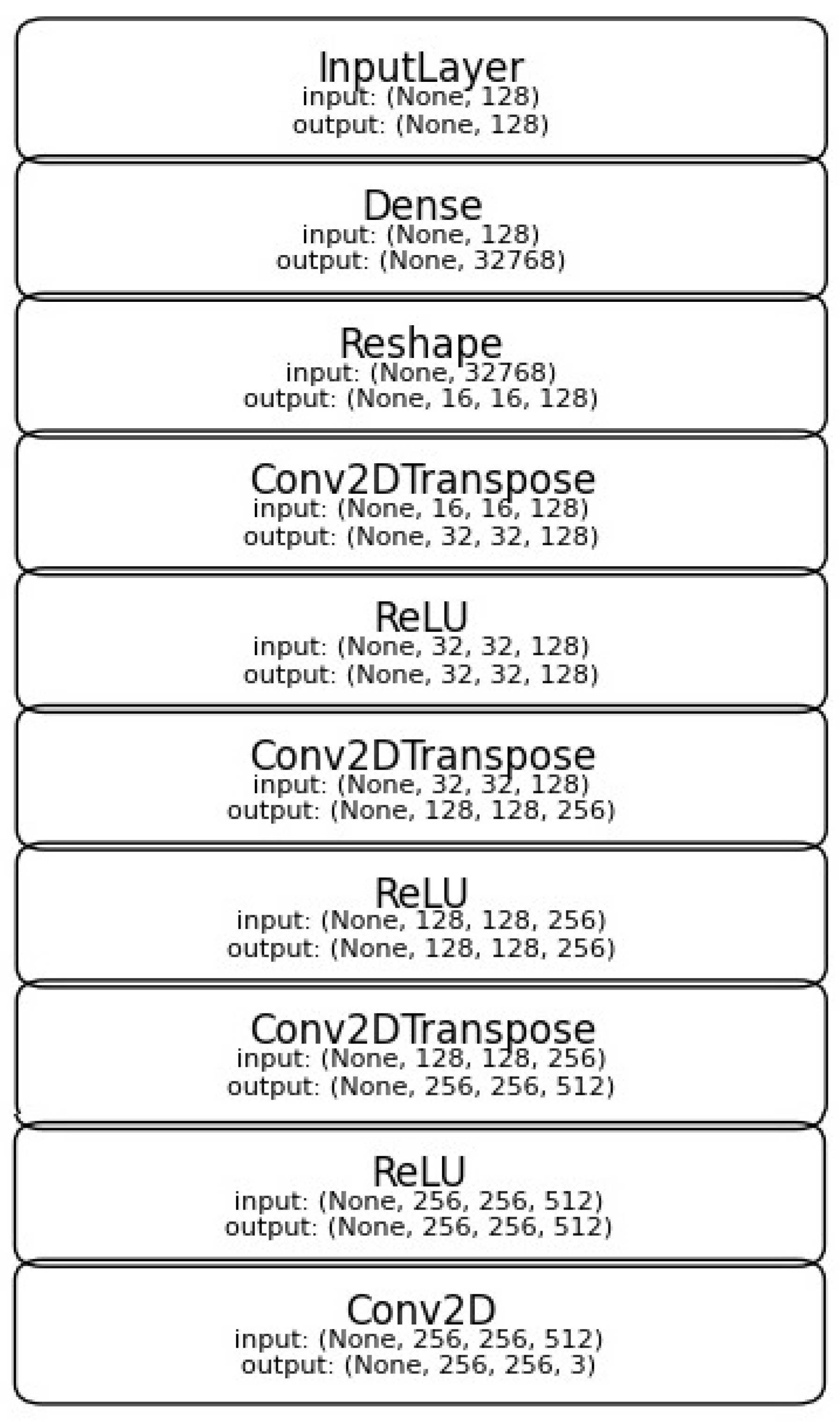

Figure 4 illustrates the general architecture of the GAN designed in our work, and the exact architecture of each network is presented in this section. First, the generator network contains four hidden layers and one output layer, as shown in Figure 5. These layers are explained in detail here:

Hidden Layer 1: The input to the generator is a random noise vector of size latent_dim. This fully connected layer has n_nodes = 16 × 16 × 128 = 32,768 nodes, and a reshape layer converts its output into a tensor of shape (16, 16, 128) per sample (a 4D tensor once the batch dimension is included).

Hidden Layer 2: This layer uses a transposed convolutional layer (Conv2DTranspose) to upsample the input from the previous layer to 32 × 32 pixels. It has 128 filters with a kernel size of (4, 4) and a stride of (2, 2). The ReLU activation function is used to introduce non-linearity.

Hidden Layer 3: This layer further upsamples the input to 128 × 128 pixels. It has 256 filters with a kernel size of (4, 4) and a stride of (4, 4). The ReLU activation function is again used.

Hidden Layer 4: This layer upsamples the input to 256 × 256 pixels. It has 512 filters with a kernel size of (4, 4) and a stride of (2, 2). The ReLU activation function is used.

Output Layer: The final output layer uses a convolutional layer (Conv2D) with three filters (one for each color channel of the image) and a kernel size of (5, 5), producing a 256 × 256 × 3 image. The tanh activation function yields pixel values in the range [−1, 1].
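The spatial sizes of the generator follow from its strides. The sketch below traces the tensor shapes layer by layer, assuming 'same' padding; the padding is not stated in the text, but it is consistent with the (256, 256, 3) training images. With 'same' padding, a Keras Conv2DTranspose layer multiplies the spatial size by its stride, and a stride-1 Conv2D keeps it unchanged. The function and constant names are hypothetical.

```python
def conv_transpose_same(size, stride):
    """Output size of a Conv2DTranspose layer with 'same' padding: size * stride."""
    return size * stride


def conv_same(size, stride=1):
    """Output size of a Conv2D layer with 'same' padding: ceil(size / stride)."""
    return -(-size // stride)


# (layer name, kind, filters, stride) for the generator layers described above
GENERATOR = [
    ("hidden_2", "deconv", 128, 2),
    ("hidden_3", "deconv", 256, 4),
    ("hidden_4", "deconv", 512, 2),
    ("output", "conv", 3, 1),
]


def trace_generator(start=16, start_channels=128):
    """Return (layer name, (height, width, channels)) starting from the reshaped noise."""
    size, channels = start, start_channels
    shapes = [("hidden_1", (size, size, channels))]
    for name, kind, filters, stride in GENERATOR:
        if kind == "deconv":
            size = conv_transpose_same(size, stride)
        else:
            size = conv_same(size, stride)
        channels = filters
        shapes.append((name, (size, size, channels)))
    return shapes


for name, shape in trace_generator():
    print(name, shape)
```

Under this assumption, the shapes run (16, 16, 128), (32, 32, 128), (128, 128, 256), (256, 256, 512), and finally (256, 256, 3).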

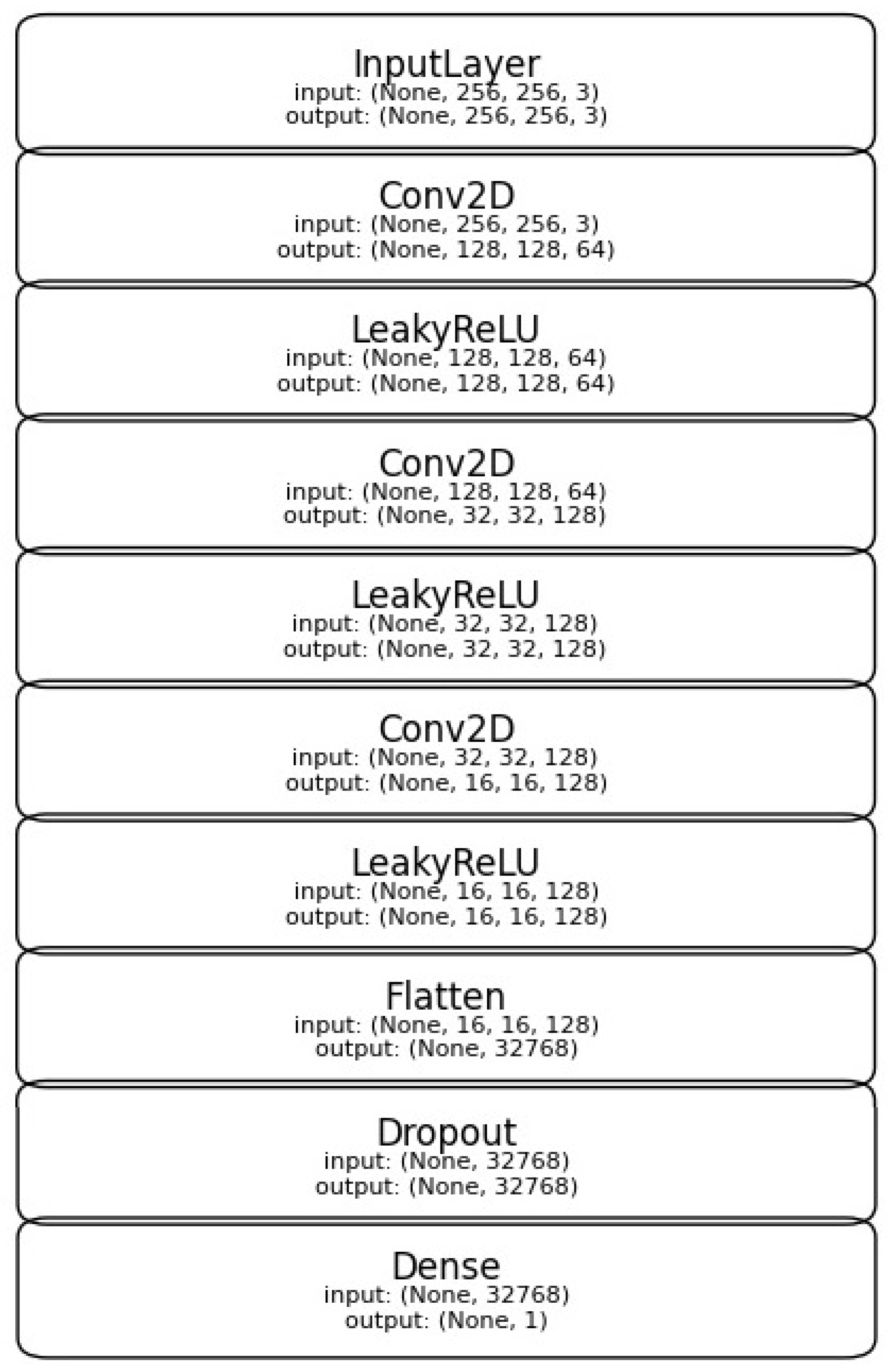

On the other hand, the discriminator network has three hidden layers and one output layer, as shown in Figure 6. The details of these layers are explained here:

Hidden Layer 1: This layer uses a convolutional layer (Conv2D) with 64 filters and a kernel size of (4, 4). It has a stride of (2, 2) and uses the LeakyReLU activation function with a negative slope of 0.2.

Hidden Layer 2: This layer uses a convolutional layer (Conv2D) with 128 filters and a kernel size of (4, 4). It has a stride of (4, 4) and uses the LeakyReLU activation function with a negative slope of 0.2.

Hidden Layer 3: This layer uses a convolutional layer (Conv2D) with 128 filters and a kernel size of (4, 4). It has a stride of (2, 2) and uses the LeakyReLU activation function with a negative slope of 0.2.

Flatten and Output Layer: This layer flattens the output of the previous layer into a 1D tensor and applies a dropout layer that randomly drops connections to improve generalization. The final output layer has a single node with a sigmoid activation function that outputs a probability between 0 and 1, indicating whether the input image is real or fake. Because the discriminator is trained to classify input images as real or fake, binary cross-entropy is used as the loss function during training.
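Assuming 'same' padding for the discriminator as well (again an assumption, not stated in the text), each Conv2D layer divides the spatial size by its stride, rounded up. The sketch below traces a 256 × 256 × 3 input through the three hidden layers and computes the length of the flattened vector that feeds the sigmoid output node. The names are hypothetical.

```python
import math

# (layer name, filters, stride) for the discriminator's hidden layers described above;
# every layer uses a (4, 4) kernel, which does not change the output size under 'same' padding
DISCRIMINATOR = [("hidden_1", 64, 2), ("hidden_2", 128, 4), ("hidden_3", 128, 2)]


def trace_discriminator(size=256, channels=3):
    """Return (layer name, (height, width, channels)) for each stage, assuming 'same' padding."""
    shapes = [("input", (size, size, channels))]
    for name, filters, stride in DISCRIMINATOR:
        size = math.ceil(size / stride)  # Conv2D with 'same' padding: ceil(size / stride)
        channels = filters
        shapes.append((name, (size, size, channels)))
    return shapes


shapes = trace_discriminator()
flatten_length = math.prod(shapes[-1][1])  # number of values after the Flatten layer

for name, shape in shapes:
    print(name, shape)
print("flattened:", flatten_length)
```

The last hidden layer therefore produces a 16 × 16 × 128 feature map, which flattens to 32,768 values.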

The key hyperparameters to be adjusted in the designed GAN model for training and generating the desired images are latent_dim, the learning rate, and the batch size. The latent_dim hyperparameter is specific to the generator: it determines the size of the generator's input and was set to 128 based on preliminary experiments and the literature, which suggests that this value provides a good balance between the diversity and quality of generated images [49]. We set the learning rate of the Adam (Adaptive Moment Estimation) optimizer [50] used by the discriminator model to 0.0002. Adam is a popular optimization algorithm that adapts per-parameter step sizes during training using running estimates of the first and second moments of the gradients; the learning rate of 0.0002, chosen for its widespread use in stabilizing GAN training and providing effective convergence, scales the size of these updates to the model’s parameters. The batch size, which defines the number of samples processed together in each training iteration, was set to 32, since smaller batch sizes can help reduce overfitting while keeping memory usage manageable [51]. The models were trained for 20,000 epochs (complete passes through the training dataset) to ensure thorough learning without overfitting. The dataset used in the experiment consisted of 1000 images of shape (256, 256, 3), with pixel values scaled to the range [−1, 1]. Additional parameters, namely beta_1 (set to 0.5), dropout_rate (0.3), and the filters, kernel_sizes, and strides, were chosen based on their proven effectiveness in improving GAN performance for image generation [52].
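The hyperparameters above can be gathered in one place, together with the pixel scaling to [−1, 1] that matches the generator's tanh output. The sketch below is a hypothetical configuration object (the class and function names are ours, not the authors'), and the scaling assumes 8-bit images with pixel values in [0, 255].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GANConfig:
    """Hypothetical container for the hyperparameters reported in the text."""
    latent_dim: int = 128           # size of the generator's noise input
    learning_rate: float = 0.0002   # Adam learning rate
    beta_1: float = 0.5             # Adam first-moment decay
    batch_size: int = 32
    epochs: int = 20_000
    dropout_rate: float = 0.3       # discriminator dropout before the output node
    image_shape: tuple = (256, 256, 3)
    n_images: int = 1000


def scale_pixels(value):
    """Map an 8-bit pixel value in [0, 255] to [-1, 1], the range of tanh."""
    return value / 127.5 - 1.0


def unscale_pixels(value):
    """Map a generator output in [-1, 1] back to [0, 255] for saving as an image."""
    return (value + 1.0) * 127.5


cfg = GANConfig()
print(cfg.batch_size, scale_pixels(0), scale_pixels(255))  # 32 -1.0 1.0
```

Keeping the training data in the same range as the generator's tanh output means the discriminator cannot separate real from fake images by their pixel range alone.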

Table 4 summarizes the parameters of the designed GAN model.

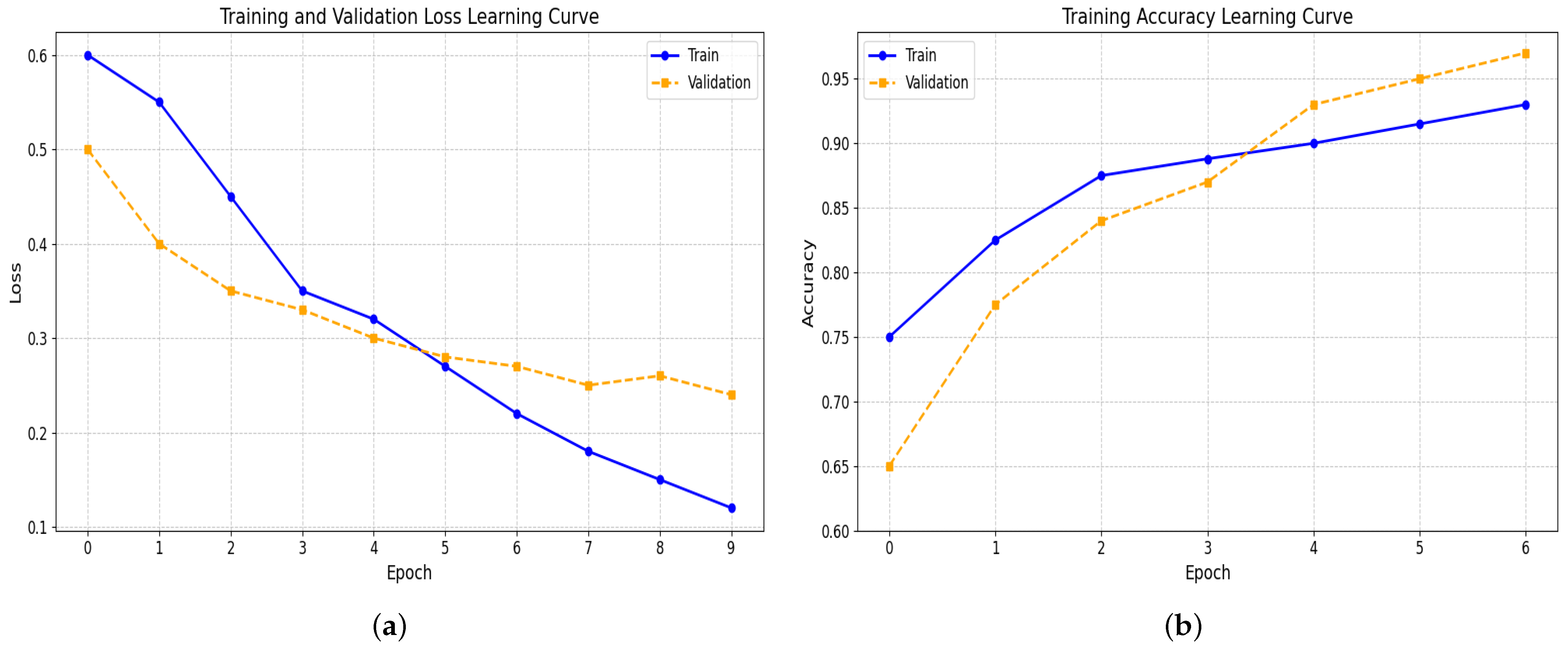

The DCGAN model was trained adversarially: the generator tries to produce images that deceive the discriminator, while the discriminator learns to distinguish real images from fake ones. Binary cross-entropy was used as the loss function during training. We recorded the discriminator and generator losses at each epoch to monitor training progress. The discriminator loss reflects how well the discriminator separates real images from fake ones, while the generator loss measures how well the generator deceives the discriminator. Additionally, after each training epoch, we evaluated the discriminator on both real and generated images. The discriminator's accuracy on real images indicates how reliably it recognizes real images as real, whereas its accuracy on generated images reflects, inversely, how well the generator deceives it: the lower this accuracy, the more convincing the generated images. The DCGAN model used in the experiments had 7,579,332 parameters in total, of which 7,149,955 were trainable. The discriminator and generator components had 429,377 and 7,149,955 trainable parameters, respectively, which is consistent with the discriminator's weights being frozen within the combined model so that only the generator is updated through it. The model’s performance was evaluated during training after 300 epochs.
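These parameter counts can be checked against the layer descriptions. The sketch below recomputes them, assuming that every layer has bias terms and that there is no batch normalization (none is mentioned); dropout and activation layers have no parameters, and the flattened length of 16 × 16 × 128 follows from 'same' padding. Under these assumptions, the totals agree with the reported figures, and the gap between the total and the trainable count equals the discriminator's parameters.

```python
def dense_params(n_in, n_out):
    """Fully connected layer: one weight per input/output pair plus one bias per output."""
    return n_in * n_out + n_out


def conv_params(k, c_in, filters):
    """Conv2D or Conv2DTranspose: a k x k kernel per (input, output) channel pair plus one bias per filter."""
    return k * k * c_in * filters + filters


generator = [
    dense_params(128, 16 * 16 * 128),  # latent_dim -> n_nodes
    conv_params(4, 128, 128),          # hidden layer 2 (Conv2DTranspose)
    conv_params(4, 128, 256),          # hidden layer 3 (Conv2DTranspose)
    conv_params(4, 256, 512),          # hidden layer 4 (Conv2DTranspose)
    conv_params(5, 512, 3),            # output layer (Conv2D)
]
discriminator = [
    conv_params(4, 3, 64),             # hidden layer 1
    conv_params(4, 64, 128),           # hidden layer 2
    conv_params(4, 128, 128),          # hidden layer 3
    dense_params(16 * 16 * 128, 1),    # flatten -> single sigmoid node
]

g_total, d_total = sum(generator), sum(discriminator)
print(g_total, d_total, g_total + d_total)  # 7149955 429377 7579332
```

The dense projection from the noise vector accounts for more than half of the generator's parameters (4,227,072 of 7,149,955).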

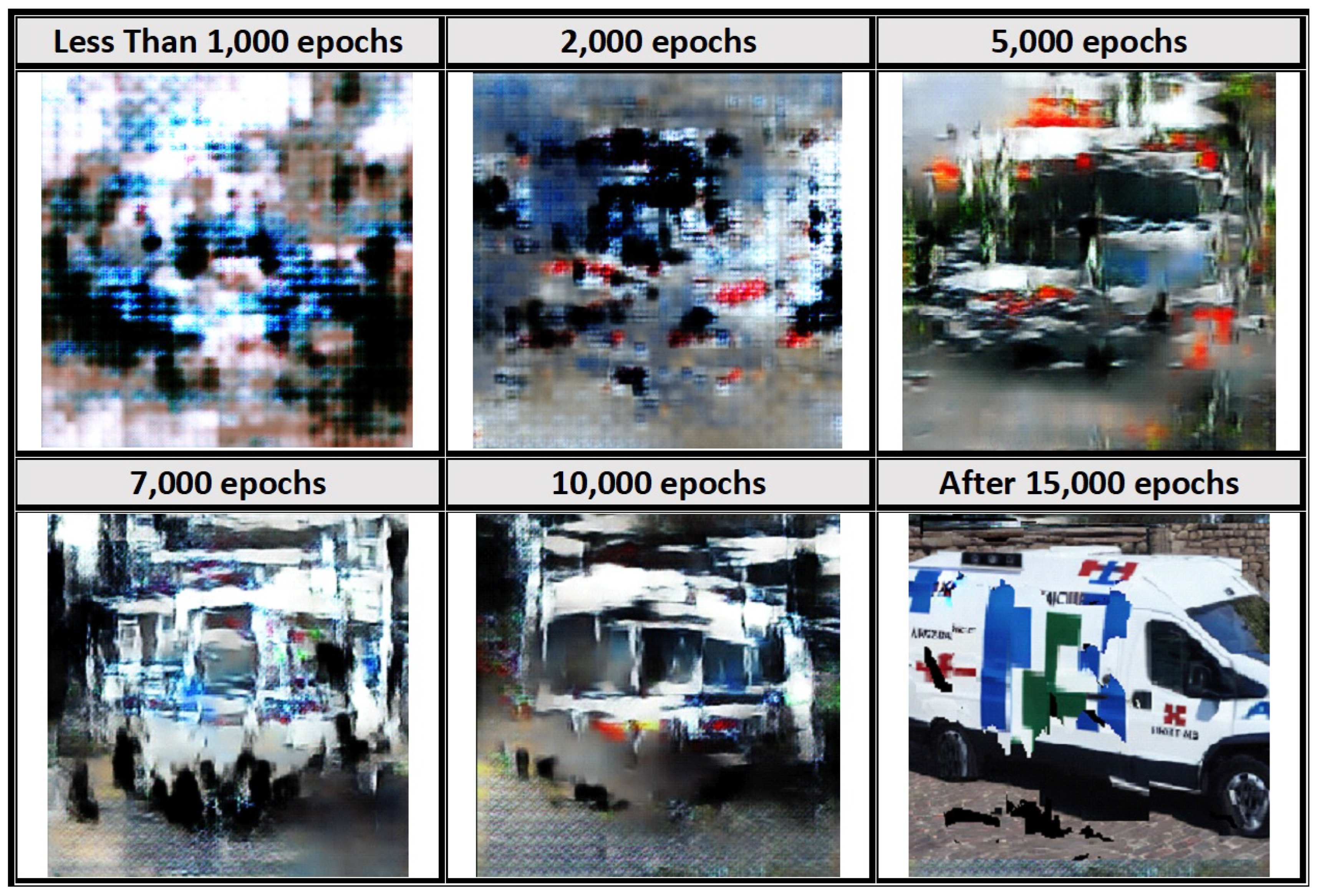

The results showed that, as training progressed, the discriminator and generator losses decreased while the discriminator’s accuracy on real and fake images increased. For instance, at epoch 300, the generator loss was 1.749, and the discriminator accuracy on real and fake images was 0.4 and 0.8, respectively. After 12,000 epochs, the generator loss had decreased to approximately 1, and the discriminator accuracy on real and fake images had improved to 0.94. These outcomes demonstrate the effectiveness of the DCGAN architecture in generating new images of emergency vehicles. The model’s performance improved gradually throughout training, albeit with some fluctuation. A set of sample images generated at various training epochs is displayed in Figure 7, and Figure 8 shows how the quality of the generated images progresses as the number of epochs increases.
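The loss and accuracy figures discussed above can be computed per batch along the following lines. This is a minimal plain-Python sketch with hypothetical discriminator outputs, not the authors' training code. It assumes a 0.5 decision threshold, averages the discriminator's binary cross-entropy over real (label 1) and generated (label 0) images, which is one common convention, and trains the generator against the "real" label.

```python
import math


def bce(targets, probs, eps=1e-7):
    """Mean binary cross-entropy between 0/1 targets and predicted probabilities."""
    total = 0.0
    for t, p in zip(targets, probs):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(targets)


def discriminator_accuracy(probs, label, threshold=0.5):
    """Fraction of images the discriminator assigns to `label` (1 = real, 0 = fake)."""
    hits = sum(1 for p in probs if (p >= threshold) == (label == 1))
    return hits / len(probs)


# Hypothetical discriminator outputs for one batch of real and one batch of generated images
d_real = [0.9, 0.8, 0.3, 0.7, 0.6]
d_fake = [0.2, 0.1, 0.6, 0.4, 0.05]

acc_real = discriminator_accuracy(d_real, label=1)
acc_fake = discriminator_accuracy(d_fake, label=0)  # lower values mean the generator fools D more often
d_loss = 0.5 * (bce([1] * len(d_real), d_real) + bce([0] * len(d_fake), d_fake))
g_loss = bce([1] * len(d_fake), d_fake)  # generator is rewarded when fakes are scored as real

print(acc_real, acc_fake, round(d_loss, 3), round(g_loss, 3))
```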

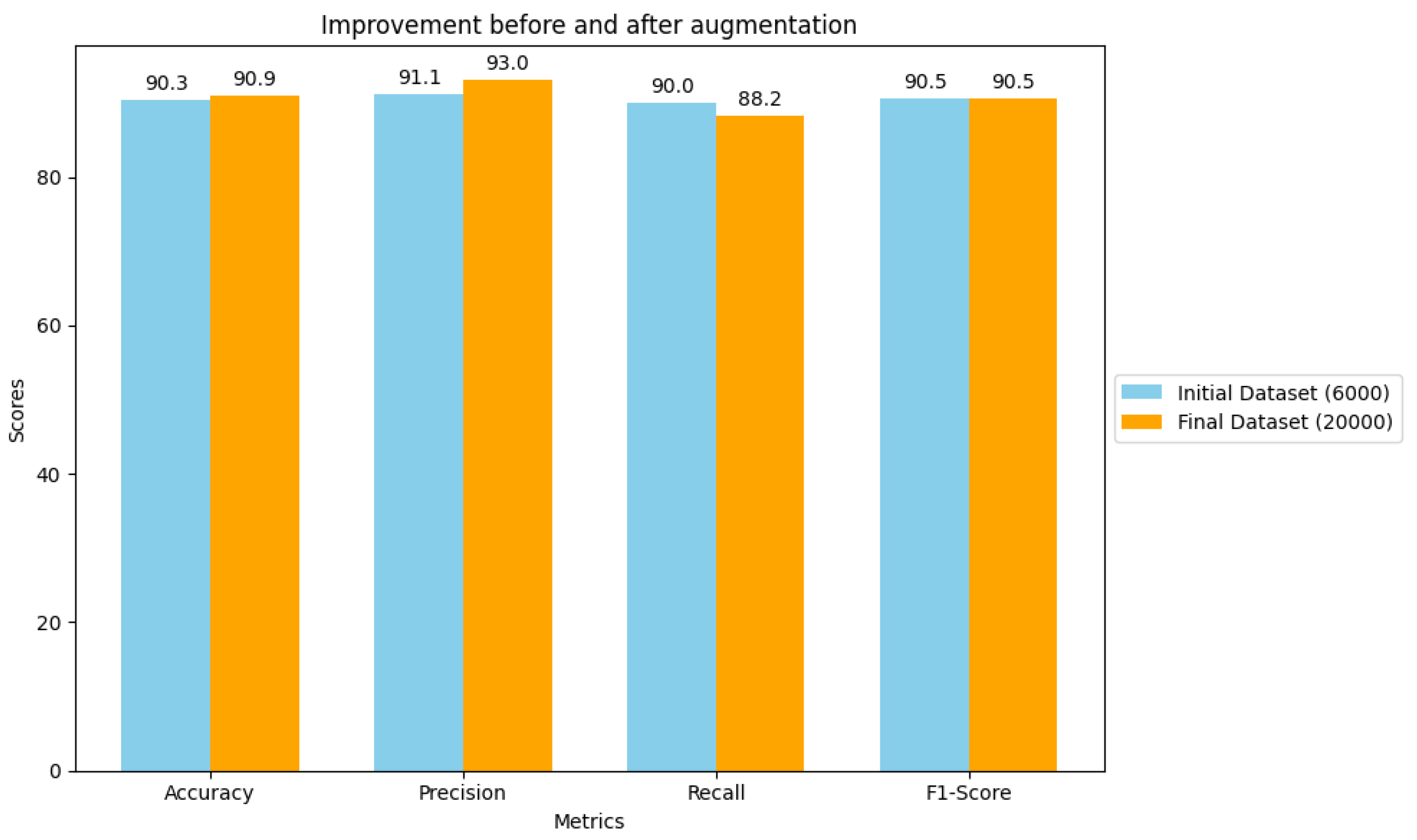

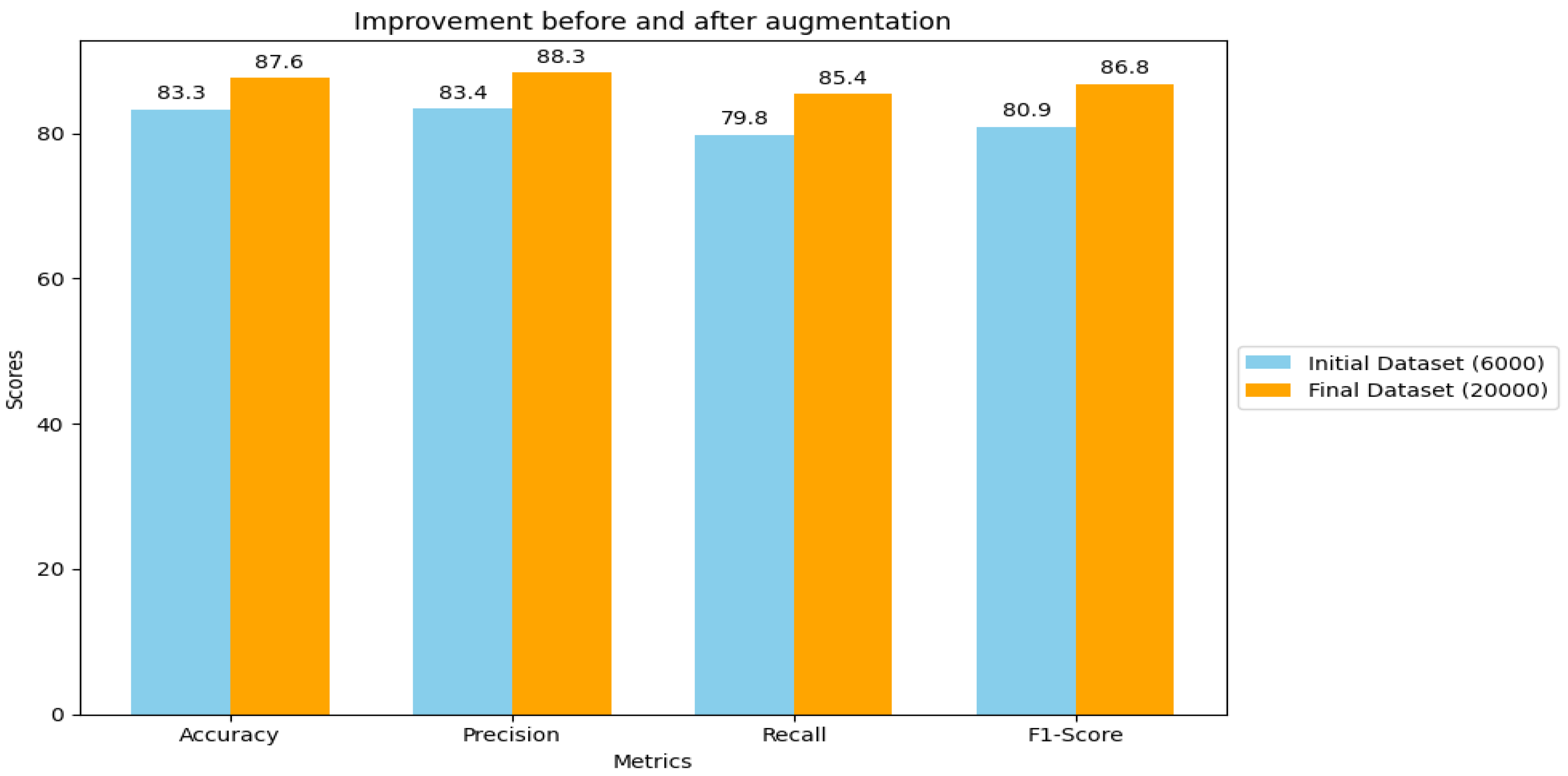

However, although the model produced many images during training, it still requires refinement, particularly with respect to the existing dataset. Further improvement is anticipated with continued training over more epochs, which may require a supercomputer or cloud computing to accelerate the training process. As a result of this stage, a new dataset of approximately 20,000 images was obtained. The new dataset is well balanced, comprising 10,000 images of emergency vehicles and 10,000 images of non-emergency vehicles. Most of the images depict real-life road scenarios, which makes the dataset suitable for detecting emergency vehicles on real roads.