Abstract

In the current scenario of fast technological advancement, increasingly characterized by widespread adoption of Artificial Intelligence (AI)-driven tools, the significance of autonomous systems like chatbots has been highlighted. Such systems, which are proficient in addressing queries based on PDF files, hold the potential to revolutionize customer support and post-sales services in the automotive sector, resulting in time and resource optimization. Within this scenario, this work explores the adoption of Large Language Models (LLMs) to create AI-assisted tools for the automotive sector, assuming three distinct methods for comparative analysis. For them, broad assessment criteria are considered in order to encompass response accuracy, cost, and user experience. The achieved results demonstrate that the choice of the most adequate method in this context hinges on the selected criteria, with different practical implications. Therefore, this work provides insights into the effectiveness and applicability of chatbots in the automotive industry, particularly when interfacing with automotive manuals, facilitating the implementation of productive generative AI strategies that meet the demands of the sector.

1. Introduction

In the current technological setting, Artificial Intelligence (AI) has emerged with notable achievements, marking a significant stage in its trajectory as a powerful tool to revolutionize a large set of applications [1]. Such remarkable advancement in recent years has been enhanced by the emerging of deep learning, a subfield of machine learning that employs deep neural networks to discern patterns in data [2]. As a result, generative AI tools have emerged [3], but their full potential remains untapped in certain areas. Actually, although the adoption of deep learning AI tools in different areas of the automotive industry has become more prominent lately, it is reasonable to expect that they will not only impact the development of autonomous vehicles but also how conventional vehicles have been used and perceived [4]. As a result, generative AI tools may bring new services to this sector that is already experiencing a significant transformation in its paradigms of engine operation and driving automation, potentially supporting a swift migration to new driving patterns.

By leveraging machine learning principles and analyzing substantial volumes of data, conventional vehicles may benefit from advanced driver assistance features and improved interactions with occupants [5]. This synergy between the computational competence of AI and anticipated automotive innovation underscores a pivotal moment, which highlights the potential of the application of Large Language Models (LLMs) in this scenario [6].

In general, the advent of large language models comes within the domain of Natural Language Processing (NLP) algorithms, which have gained momentum in multiple fields mainly embodied in tools like OpenAI’s ChatGPT [7,8]. These models represent a shift in natural language understanding and generation, pushing the boundaries of language-based AI approaches [8,9,10]. Through comprehensive textual data training, LLMs have achieved an unprecedented understanding of human language, enabling them to generate text in a way that is contextually coherent and remarkably close to human speech [11]. This transformative capability has culminated in the creation of intelligent chatbots capable of learning from human interactions and providing responses that exhibit an exceptional level of subtlety [3]. More specifically, a critical aspect of LLMs is their ability to extract information from complex sources such as technical manuals, establishing them as advanced knowledge dissemination tools [12,13].

The incorporation of LLMs in the automotive industry may play a particularly significant role in the context of conventional vehicle manuals [6]. With the continuous evolution of conventional vehicles (in opposition to the expected new generation of driverless electric vehicles), which has incorporated increasingly advanced systems and diversified functionalities, the efficient dissemination of information emerges as a pressing challenge [14,15]. Technical manuals represent the main repositories of knowledge about the general operation of the vehicles, guiding users in various tasks, from operation to maintenance and troubleshooting of vehicles [16,17]. However, traditional methods of disseminating information often fail to meet the varying needs of users, resulting in inefficiencies and difficulties in accessing relevant information [5]. In this context, LLMs have demonstrated an ability to navigate the complexities of technical documentation, extracting insights and providing users with simplified access to essential information [13].

While the adoption of LLMs as a tool used to support the access of traditional vehicular manuals (usually in the PDF format) may seem straightforward, its applicability may be very significant. For example, consider a scenario in which a conventional vehicle owner encounters an unexpected problem while driving. Traditionally, solving this problem has involved consulting complex manuals, a process that often results in frustration and delays. With the integration of LLMs, users can engage in natural language interactions by describing the problem to an AI-based assistant, which can quickly analyze the user query and provide relevant information or troubleshooting instructions clearly and effectively.

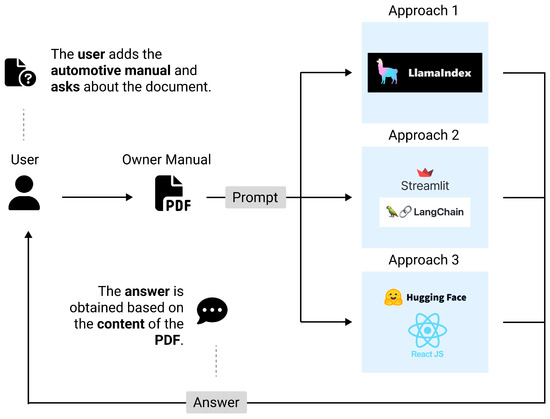

In this context, this work proposes a comprehensive exploration of LLMs as tools used to access relevant data in conventional vehicle manuals. Through a comparative analysis between available tools, this article aims to evaluate the effectiveness of different approaches based on LLMs, considering aspects such as accuracy of responses, cost efficiency, and overall user experience. The LLM approaches selected for evaluation were chosen to highlight the interpretation of technical documentation, the extraction of pertinent details, and the presentation of simplified access to information. Figure 1 provides a visual representation of these solutions in action.

Figure 1.

An overview of the evaluated approaches for AI-based reading of vehicular PDF manuals.

The aim of this article is to provide insights into the role that natural language processing, driven by LLMs, can play in streamlining and optimizing information retrieval from conventional vehicle manuals (usually with mechanical, maintenance, and operation instructions). This effort broadens horizons and opens new paths for the integration of LLMs in an automotive scenario in constant transformation. In this sense, this work aims to make the following contributions:

- (a)

- A comprehensive exploration of the potential of Large Language Models (LLMs) in improving access to information within conventional vehicle manuals;

- (b)

- A comparative analysis of different LLM-based approaches, assessing response accuracy, cost efficiency, and the overall user experience;

- (c)

- Visual representation of the covered solutions in action, providing insights into the practical implementation of LLMs in accessing technical vehicle documentation.

The remainder of this articles is organized as follows. Section 2 presents related works that contributed to the performed discussions and analysis. Section 3 describes the conducted case study carried out to compare the assessed solutions. Then, the main obtained results in this work are presented in Section 4. Finally, Section 5 presents the final considerations and suggests promising directions for future work, followed by conclusions and references.

2. Related Works

Natural language processing has shown exponential progress in the development of a great number of applications. This is largely attributed to the emergence of generative AI and Large Language Models (LLMs). These advancements have catalyzed the conception of chatbots capable of interacting with documents in PDF format, which is still an emerging research topic. While interesting studies have been conducted incorporating technologies such as Optical Character Recognition (OCR) with NLP techniques [18,19], in addition to applications using BERT (Bidirectional Encoder Representation from Transformers) [20], it is important to note that there is still limited research exploring the application of LLMs in the interaction of chatbots with PDF documents, which is the focus of this article. Through a comprehensive literature review and the study of applications developed in this field, studies that have contributed to the development of the proposed solution have been identified.

In the work in [21], the effectiveness of chatbots is explored by applying popular frameworks such as QnA Maker and Dialogflow, along with a Sentence BERT (SBERT) model, to respond to frequently asked questions from students. Although the study has limitations, particularly in terms of dataset diversity and the exploration of a wider range of chatbot frameworks, it was found that the SBERT model outperformed the others in terms of precision, achieving an F1 score of 0.99. That investigation is particularly relevant to the present study as it emphasizes the importance of chatbot accuracy in generating responses, albeit in different domains.

From another perspective, the study conducted in [22] addresses information extraction from resumes in various formats, including PDF. The authors propose an approach using an NLP library called spaCy to perform analyses and extract information from resumes in a manner analogous to a human recruiter. Although a chatbot was not implemented in that work, it explores techniques used to extract information from various types of documents with the aim of providing responses to a user as a corporate recruiter.

The study conducted in [5] addresses the development of a chatbot to provide information about automotive services, such as the nearest workshop’s address and the cost of specific repairs. The article explores the use of Artificial Neural Networks (ANNs) as an approach to train the chatbot, considering different types of ANNs such as Convolutional Neural Networks (CNNs) for efficient image recognition and modifications of Recurrent Neural Networks (RNNs), including Long Short-Term Memory (LSTM) and Bidirectional RNNs (BRNNs). These techniques are applied for text recognition. Thus, the authors concluded that the use of algorithms and methods from text recognition theory is important in the automotive field and that the proposed solution requires adjustments in the text detection part.

Furthermore, extending beyond the realm of automotive services, another pertinent area for NLP is in the field of vehicular defect investigation and analysis, as proposed in [16]. The National Highway Traffic Safety Administration (NHTSA) in the USA stands at the forefront of this domain, regularly receiving an overwhelming influx of reports related to potential defects, recalls, and manufacturer communications. However, the sheer volume and text-based nature of these reports make it challenging for analysts to efficiently identify defect trends. To expedite this process, a groundbreaking NLP application designed to identify key topics and similar defect reports was introduced, offering invaluable assistance to analysts and investigators. By combining the power of NLP with existing NHTSA datasets, the approach provides a method for identifying defect trends within vast text-based datasets.

The authors in [20] presented a heuristic algorithm with the aim of addressing specific challenges in human resources management within large organizations. The approach focused on performing semantic matching between data sources containing information about employees’ professional profiles and competence dictionaries such as O*NET and ESCO to establish a unified skills catalog. Additionally, their algorithm was designed to infer employees’ soft skills based on their resumes and online profiles, demonstrating promising results compared to conventional methods. The study contributed to enhancing human resources management with a data-driven approach, potentially benefiting resource allocation and professional development within companies in the past, also giving clues to the development of our approach.

The comparative Table 1 provides an analysis of key studies relevant to the interaction of chatbots with PDF documents. These studies showcase a variety of objectives and technological approaches used to address this emerging challenge. The table also highlights the primary objectives of each study, the key employed technologies, and some notable achieved results. This analysis serves to underscore the differences and similarities between the approaches, stating the significant contributions of each work to the field of chatbots and PDF document processing.

Table 1.

Research works that address AI-driven processing of PDF documents.

In this context, it is important to highlight the relevance and connection of the mentioned studies to the proposed work. By considering the limitations discussed earlier and the need to explore existing gaps, an opportunity arises to develop new solutions that contribute to new research and development areas. Within this panorama, our work proposes a comparative analysis of three solutions for response generation based on AI prompts for users in the automotive industry.

3. Case Study

Since we want to evaluate different performance metrics concerning three different AI-based approaches when interacting with PDF manuals, it was decided to define a case study for comparison purposes. Actually, the intended evaluation is centered on chatbots following three different approaches, which are used to provide responses to questions based on PDF files, particularly the ones that are vehicular manuals. To achieve this objective, the following subsections will discuss the related concepts and defined parameters.

3.1. Selected Approaches for Evaluation

The approaches to be compared are described as follows:

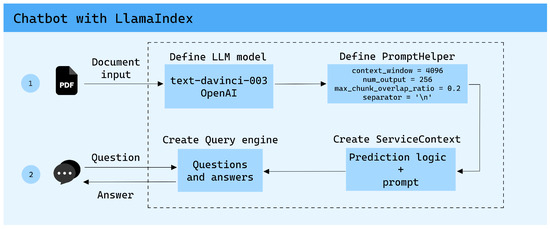

- Approach 1: Doc ChatBot [23]: the Doc ChatBot was developed using the LlamaIndex [24] and LangChain [25] libraries, along with the OpenAI API [26]. The text-davinci-003 model from this API was used to create the chatbot. In general, it consists of a Python notebook that allows for user interaction with PDF documents through code cells. Figure 2 illustrates the functioning of the application, explicitly demonstrating its integration of the LLM with external data sources through the LlamaIndex library;

Figure 2. Operation of the Doc ChatBot application.

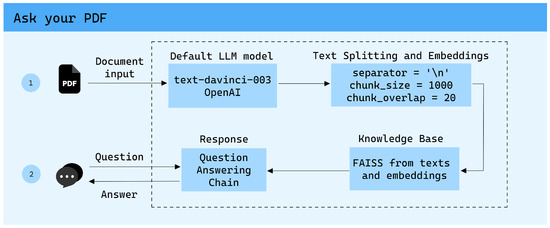

Figure 2. Operation of the Doc ChatBot application. - Approach 2: Ask your PDF [27]: it was developed using the LangChain library and the OpenAI API, utilizing the text-davinci-003 model from this API to create the chatbot. It also employed the Streamlit tool [28] to create an interface that enables user interaction with the chatbot. The overall functioning of this application is illustrated in Figure 3;

Figure 3. Operation of the Ask your PDF application.

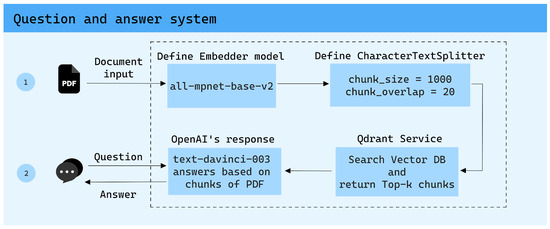

Figure 3. Operation of the Ask your PDF application. - Approach 3: Question and Answer System [29]: it was developed using the LangChain library, the OpenAI API, and the Sentence Transformers library [30], which was utilized for generating embeddings using the all-mpnet-base-v2 model. Tools were also employed for creating the interface, including React for the front-end and FastAPI along with a vector database called Qdrant for the back-end of the application. The functioning of this application is illustrated in Figure 4.

Figure 4. Operation of the question and answer system application.

Figure 4. Operation of the question and answer system application.

In summary, all three approaches utilize LLMs from the OpenAI API, particularly models from the GPT-3 family. However, only the last two approaches have user-friendly interfaces for interaction, whereas the first approach is based on code cells. Actually, the three applications in question were chosen due to their popularity rates. This selection was carried out in the context of a scenario in which a variety of applications in this domain were emerging. Furthermore, the issue of reproducibility was taken into account, considering that the authors provided both the source codes and the corresponding documentation for the approaches under analysis, thus facilitating their replication and fostering familiarity with the codes. With all these facts considered, these three chatbots were selected as references in this work.

Regarding the limitations related to the size of PDF files, the first approach does not have a clearly defined limitation, while the second approach supports files of up to 200 MB, and the third approach only supports files of up to 5 MB. These restrictions should be taken into consideration when applying the approaches in different scenarios.

3.2. Experimental Design

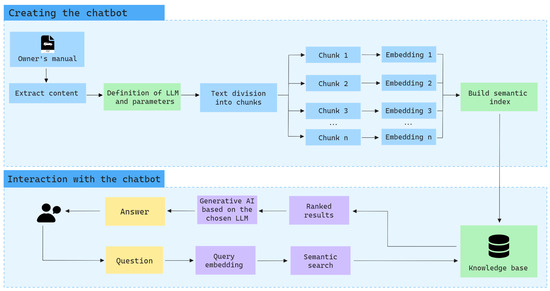

The evaluation of the three approaches utilizes a single flow, segmented into two parts: (1) logic for chatbot creation; and (2) interaction with the chatbot, as depicted in Figure 5.

Figure 5.

Processing flow of an LLM-based chatbot for interacting with PDF documents.

In the first part (1), the file is imported, and its content is extracted. In this step, the selected approach, which involves defining the LLM and appropriate parameterization, is applied to divide the text into chunks and convert them into vector representations known as embeddings. These embeddings are essential for constructing the semantic AI index, which will serve as the knowledge base.

The second part (2) of the process starts from this semantic AI index. When a question arises from the user, a query is made to the knowledge base, identifying the most relevant and related chunks to the question. The selected chunks are then used by the language model to construct an accurate and appropriate response to the question asked.

Thus, the process consists of importing the file, extracting the content, dividing the text into chunks, converting them into embeddings, constructing the semantic AI index as the knowledge base, and subsequently querying this base to find the most relevant chunks and generate responses with the assistance of the language model.

As an additional comment, the instrumentation process will begin with the configuration of the environment for the experiment. For that, a PDF text document is used for information extraction. In our case study, the Ford Fiesta 2015 vehicle manual is considered, which is approximately 4.8 MB in size. In this specific context, the document’s size is a relevant factor as one of the approaches supports only files up to 5 MB. Then, the selected approaches are evaluated, one by one.

3.3. Preparation

In order to ensure maximum consistency in the adopted approaches, an effort was made to standardize the parameters used in the three selected chatbots. In LLM models, it is crucial to specify the parameters responsible for controlling text input segmentation and overlapping during response generation. Therefore, the following parameters were uniformly defined:

- chunk_size: This parameter refers to the size of the chunks into which the input text (in this case, the PDF) is divided during the model’s inference process. After conducting calibration tests, it was decided to set the value of this parameter to 1000 for all three approaches;

- chunk_overlap: This parameter determines the amount of overlap between consecutive chunks. After testing, it was determined to set the value of this parameter to 20 for all three approaches.

These configurations were determined after conducting multiple tests and analyzing the obtained results. The aim was to ensure consistency and comparability across the approaches, enabling a more accurate and fair evaluation of the considered LLM models.

3.4. Evaluation Metrics

To comprehensively evaluate the performance of the three distinct chatbot approaches, test questions were formulated based on the form of the prompts: zero-shot, one-shot, and few-shot. These paradigms reflect the amount of contextual information given to the model before expecting an answer [31].

Prompt Definitions

- Zero-shot prompting: The chatbot is tasked to respond without any specific example in the given context;

- One-shot prompting: The chatbot is provided with a single example or template to guide its response;

- Few-shot prompting: The chatbot uses several examples to shape its response, though the number of these examples remains limited.

Within this evaluation scope, specific questions will be examined to probe the chatbot’s responsiveness under each of these paradigms, as described in Table 2, Table 3, Table 4 and Table 5:

Table 2.

Evaluating approaches for Question 1.

Table 3.

Evaluating approaches for Question 2.

Table 4.

Evaluating approaches for Question 3.

Table 5.

Evaluating approaches for Question 4.

These questions were selected to cover different aspects of the vehicle manual, assessing the ability of the chatbots to provide accurate and useful responses to users. It is worth noting that these questions aim to evaluate not only the quality of the answers provided by the chatbots but also other relevant aspects such as cost and user experience.

Regarding cost, an important consideration is the use of tokens in both questions and answers. Tokens are the basic units of processing in many AI models, including chatbots. Each word or piece of text that the model processes consumes a certain number of tokens. In many cases, the use of these tokens has an associated cost. This means that longer questions or answers, which use more tokens, can be more expensive to process. Therefore, taking into account the three evaluated approaches, it is necessary to consider the cost of two steps: (1) using the LLM, and (2) producing the embeddings.

Regarding the models usage, all three approaches utilize the OpenAI API. Each token in this context, especially for texts written in English, corresponds to approximately four characters, approximately translating to 0.75 words. In this way, 1000 tokens equate to about 750 words. According to the pricing details outlined on the official OpenAI website [32], for applications employing the GPT-3 model family, specifically the text-davinci-003 model, there is a cost of USD 0.02 for every batch of 1000 tokens, covering both input and output data.

As for costs associated with embeddings, approach 3, pertaining to the question and answer system, uses the sentence-transformers all-mpnet-base-v2 model, hosted on Hugging Face [33], which is freely available. On the other hand, the first two approaches utilize OpenAI’s embeddings, specifically the ada-v2 model, which incurs a cost of USD 0.0001 per batch of 1000 tokens.

On the other hand, the user experience is evaluated from the perspective of how easy it is to interact with the system. The analysis specifically considers whether the chatbot solution provides an intuitive interface. The presence of a user-friendly and easy-to-understand interface is essential for ensuring a satisfactory user experience.

Taking these criteria into account, questions were formulated for each approach that required responses in the form of a string or as an image. The next section presents the results of the answers provided by each chatbot, along with a performance comparison of each application.

We have made our methodology readily accessible for research and practical purposes. The detailed implementation of our method is publicly available on our GitHub repository. This open-access approach is intended to facilitate collaboration, replication, and further research endeavors within the academic and professional communities. To access the full implementation, please visit our GitHub repository at https://github.com/conect2ai/carbot-manual-compare (accessed on 11 October 2023).

4. Results

This section aims to discuss the results obtained from the defined case study described in Section 3. Thus, the objective is to present and analyze the responses obtained by each of the chatbot approaches, evaluating their coherence according to the car manual. Subsequently, the results will be compared based on the previously mentioned metrics.

4.1. Responses by Approaches to Question 1

- Zero-shot Response: (1) Doc Chatbot—There is no symbol for fuel pump reset given in the context information. (2) Ask your PDF—The symbol for fuel pump reset is the arrow adjacent to the fuel pump symbol on the fuel gauge. (3) Question and answer system—I don’t know.

- One-shot Response: (1) Doc Chatbot—The symbol for the fuel pump reset is usually a fuel pump icon, which looks like a simplified image of a fuel pump. (2) Ask your PDF—The symbol for the fuel pump reset is an arrow adjacent to the fuel pump symbol. (3) Question and answer system—I don’t know.

- Few-shot Response: (1) Doc Chatbot—The symbol for the fuel pump reset is a fuel pump icon, which looks like a simplified side view of a fuel pump. (2) Ask your PDF—I do not know, but you can find the symbol for the fuel pump reset in the manual for your car. (3) Question and answer system—I don’t know.

4.2. Responses by Approaches to Question 2

- Zero-shot Response: (1) Doc Chatbot—The doors will lock automatically after the vehicle has been moving at a speed greater than 12 mph (20 km/h) for more than two seconds. (2) Ask your PDF—The doors will relock automatically if you do not open a door within 45 s of unlocking the doors with the remote control. (3) Question and answer system—I don’t know.

- One-shot Response: (1) Doc Chatbot—The doors will lock automatically after two seconds if the vehicle has come to a stop and the ignition is switched off or to the accessory position. (2) Ask your PDF—The doors will relock automatically if you do not open a door within 45 s of unlocking the doors with the remote control. (3) Question and answer system—I don’t know.

- Few-shot Response: (1) Doc Chatbot—The doors will lock automatically about two seconds after the car has come to a stop and the ignition is switched off or to the accessory position. (2) Ask your PDF—The doors will lock automatically after 45 s if they are not opened. (3) Question and answer system—It depends on the car model, as some cars have an automatic locking feature that locks the doors after a certain amount of time, while other cars may require you to manually lock the doors.

4.3. Responses by Approaches to Question 3

- Zero-shot Response: (1) Doc Chatbot—Clock (E) and H and M buttons (outside the audio display). (2) Ask your PDF—You should use buttons H and M on the information and entertainment display to adjust the hours and minutes. (3) Question and answer system—You should use the +/− buttons to adjust the hours and minutes.

- One-shot Response: (1) Doc Chatbot—The MENU button should be used to access the clock settings menu, and then the left and right arrow buttons can be used to adjust the hours and minutes. (2) Ask your PDF—The Clock button should be used to set the clock, except when in phone mode. (3) Question and answer system—The buttons used to adjust the hours and minutes will vary depending on the car radio. If you can provide the make and model of the car radio, I may be able to provide a more specific answer.

- Few-shot Response: (1) Doc Chatbot—The ’MENU’ button should be pressed to access the clock settings menu, and then the ’up’ and ’down’ arrow buttons can be used to adjust the hours and minutes. (2) Ask your PDF—The button labeled “clock” should be used to adjust the hours and minutes. (3) Question and answer system—The buttons labeled with “hours” and “minutes” should be used to adjust the hours and minutes.

4.4. Responses by Approaches to Question 4

- Zero-shot Response: (1) Doc Chatbot—The symbol for hazard warning flashers is a triangle with an exclamation mark inside. (2) Ask your PDF—The symbol for hazard warning flashers is a triangle with an exclamation mark inside it. (3) Question and answer system—The symbol for hazard warning flashers is a triangle with an exclamation point inside.

- One-shot Response: (1) Doc Chatbot—The symbol for hazard warning flashers is a triangle with an exclamation point inside. (2) Ask your PDF—The symbol for hazard warning flashers is an exclamation mark inside a triangle. (3) Question and answer system—The symbol for hazard warning flashers is a triangle with an exclamation mark in the center.

- Few-shot Response: (1) Doc Chatbot—The symbol for hazard warning flashers is typically represented by a triangle with an exclamation point inside. (2) Ask your PDF—The symbol for hazard warning flashers is usually represented by an equilateral triangle with an exclamation mark inside. (3) Question and answer system—The symbol for hazard warning flashers is typically represented by a set of triangles arranged in a circle.

4.5. Comparison of Obtained Metrics

Overall, it was observed that the responses obtained through the “Ask your PDF” application were partially accurate, aligning precisely with the vehicle manual for two of the four questions when using the zero-shot prompt. The application boasts an intuitive user interface, enhancing the overall user experience. When evaluating the one-shot and few-shot prompts, this approach was the only one to accurately answer Question 2 across all prompt styles. However, the application failed to correctly identify the icons mentioned in Questions 1 and 4 across all prompts. Given that the assessment was based on a relatively small-sized PDF file (4.8 MB), it is pertinent to mention that the application’s performance may vary with substantially larger or more complex files.

On the other hand, “Doc Chatbot”, despite its moderate accuracy, lacks a user interface. This absence can negatively impact user experience, requiring users to directly manipulate code to input PDF files and pose questions. Hence, users might need a certain degree of programming knowledge, leading to a steeper learning curve. For the zero-shot prompt, it provided accurate answers for only one of the four questions. However, it failed to give correct answers for the one-shot and few-shot prompts for Question 3.

These errors are understandable, as these applications mainly focus on text processing and lack the capabilities to interpret visual elements, such as images and icons.

Regarding the “Question and answer system”, while it benefits from an integrated user interface, the accuracy of the responses was less than satisfactory across all prompts. Although it managed to provide answers for Questions 2, 3, and 4, none of them fully aligned with the information in the vehicle manual, failing to identify the mentioned buttons and symbols.

Furthermore, from an economic standpoint, “Ask your PDF” emerges as the best choice. Its costs predominantly range between USD 0.02 and USD 0.03 across various questions and prompts. In stark contrast, the “Doc Chatbot” consistently incurs higher expenses, particularly notable in one-shot and few-shot scenarios, averaging between USD 0.03 and USD 0.04. The financial advantage of the “Question and answer system” is evident, despite its diminished accuracy, maintaining costs primarily below USD 0.005. This positions it as the most cost-efficient option among the trio. Thus, while “Ask your PDF” and “Doc Chatbot” might offer superior results in specific scenarios, they demand a notably higher financial outlay compared to the “Question and answer system”.

Finally, a comparative summary was conducted, considering not only the quality of chatbot responses but also incorporating metrics highlighted in the experimental Section 3, taking into account the cost and user experience per application. The results of this comparative analysis are displayed in Table 6.

Table 6.

Evaluating approaches.

4.6. Practical Issues

The adoption of AI tools is expected to deeply transform the way we interact with our vehicles. In one sense, the huge amount of data generated by vehicles nowadays are valuable when indicating the achieved performance through many metrics, which may be valuable when guiding preventive maintenance procedures or when optimizing the way they move around a city. Actually, these are very anticipated benefits of vehicular analytics supported by artificial intelligence algorithms, with many research works addressing important issues in this area. On the other hand, AI tools may also be leveraged in other disruptive ways in a more user-centric perspective, with immediate benefits for the drivers.

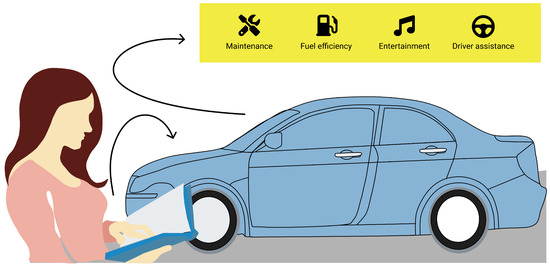

In general, vehicular manuals are the main direct source of information for the users, for different purposes, as illustrated in Figure 6. Users will read the manuals to solve particular questions, which may have different levels of complexities and urgency, especially during emergencies (a flat tire, attention lights on the panel, malfunctioning of the braking system, etc.). However, although the information needed is somehow there, finding it may be hard or time-consuming, which may a natural barrier for a large group of users, especially the ones with less experience.

Figure 6.

Vehicular manuals are the main source of information for most users.

In this context, the performed analyses are crucial for supporting a broader adoption of AI tools to facilitate access to such vital information. The analyzed chatbots aim to assist the interpretation of vehicle manuals, thereby offering individuals, especially those without technical expertise in automobiles, the ability to decipher and understand essential information, assisting them in navigating potentially risky situations. And, we intend to make it as simple as possible: upon introducing an automotive manual into the system (its PDF version), these tools process and translate its content into clear and accessible guidelines. In this way, users can obtain accurate and relevant answers quickly, promoting vehicle safety and proper maintenance.

As a final remark, it is important to say that the access to vehicular information by users with low computational skills may be as easy as accessing popular smartphone tools or even integrated IoT devices like Amazon Alexa. With short voice commands, such users could make questions that would be analyzed by some of the approaches investigated in this article. Although there are still some challenges to be addressed, some works have brought relevant contributions in this direction [34,35].

4.7. Research Limitations and Challenges

In this section, we will discuss some of the limitations identified in our research:

- (a)

- Limitation in Interpreting Visual Elements: The analyzed chatbot methods struggle to interpret visual elements such as icons and images present in PDF documents. This restricts their ability to provide accurate answers to questions that rely on visual information;

- (b)

- Restriction to PDF Document Format: The evaluated chatbots were specifically designed to handle PDF documents. This means that they may not be suitable for documents in other formats, limiting their applicability;

- (c)

- Need for User Interface Enhancement: The “Doc Chatbot” faces usability challenges due to the lack of a user-friendly interface. This can hinder access for users with limited technical experience;

- (d)

- Accuracy of Responses: Even the most effective chatbot, “Ask your PDF”, did not provide 100% accurate answers in all situations. This highlights the ongoing need for improvements to ensure correct and reliable responses in all scenarios;

- (e)

- Performance Variation with Document Size: Chatbot performance may vary based on the size and complexity of the PDF document. Results may not be as consistent when dealing with very large or extremely complex documents.

5. Conclusions

This work presents a comprehensive comparative analysis of three distinct chatbot approaches designed to derive answers from PDF documents. Based on the results, the “Ask your PDF” application emerges as the most optimal solution, satisfactorily blending cost-effectiveness, user experience enhancement, and laudable response accuracy. It positions itself as an appealing choice for those prioritizing an intuitive user interface coupled with precise answers without the need for extensive technical expertise.

In contrast, the “Doc Chatbot” method, while bearing higher costs, managed to deliver accurate answers for just one out of the four evaluated questions. This performance casts a shadow over its value for money, especially when juxtaposed against the efficiencies of the “Ask your PDF” application.

The “Question and answer system”, while notable for its economic edge, demonstrated a deficiency in answer accuracy, underscoring a clear need for further refinement, especially when targeting intricate documents like vehicle manuals.

Future work includes, but is not limited to:

- To improve the ability of chatbots to effectively handle complex PDF documents, including those with intricate formatting or non-standard layouts;

- To optimize the computational efficiency of chatbots, enabling them to provide quick responses without undue processing delays;

- To further evaluate the operational costs for the large-scale adoption of chatbots as an effective tool for the users;

- To explore ways to reduce the operational costs associated with maintaining and running chatbot systems, making them more cost-effective for businesses;

- To enhance the accuracy of chatbot responses, particularly for detailed technical questions, by refining the natural language understanding capabilities through access to reliable technical knowledge;

- To evaluate the user perceptions through the application of specific surveys, extending the performed comparisons with subjective evaluations by the users;

- Adding features that can interpret visual elements, such as graphics and icons, is crucial, and is expected for future works. This will enable chatbots to provide more comprehensive and contextually accurate responses.

Author Contributions

Formal analysis, T.M., M.M. and M.A.; project administration, I.S.; software, T.M., M.M. and M.A.; writing—original draft, M.S. and T.M.; research supervisors, I.S., M.S. and D.G.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financed in the Brazilian fostering agency CNPq (National Council for Scientific and Technological Development), Process No. 405531/2022-2.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

https://github.com/conect2ai/carbot-manual-compare (accessed on 11 October 2023).

Acknowledgments

We thank National Council for Scientific and Technological Development (CNPq).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

- The following abbreviations are used in this manuscript:

| AI | Artificial Intelligence |

| ANNs | Artificial Neural Networks |

| API | Application Programming Interface |

| BERT | Bidirectional Encoder Representations for Transformers |

| CNNs | Convolutional Neural Networks |

| GPT | Generative Pre-Trained Transformer |

| LLMs | Large Language Models |

| LSTM | Long Short-Term Memory |

| NHTSA | National Highway Traffic Safety Administration |

| NLP | Natural Language Processing |

| OCR | Optical Character Recognition |

| Portable Document Format | |

| RNNs | Recurrent Neural Networks |

References

- Muñoz-Basols, J.; Neville, C.; Lafford, B.A.; Godev, C. Potentialities of Applied Translation for Language Learning in the Era of Artificial Intelligence. Hispania 2023, 106, 171–194. [Google Scholar] [CrossRef]

- Shinde, P.P.; Shah, S. A review of machine learning and deep learning applications. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–6. [Google Scholar]

- Lee, P.; Fyffe, S.; Son, M.; Jia, Z.; Yao, Z. A paradigm shift from “human writing” to “machine generation” in personality test development: An application of state-of-the-art natural language processing. J. Bus. Psychol. 2023, 38, 163–190. [Google Scholar] [CrossRef]

- Ghosh, R.K.; Banerjee, A.; Aich, P.; Basu, D.; Ghosh, U. Intelligent IoT for Automotive Industry 4.0: Challenges, Opportunities, and Future Trends. In Intelligent Internet of Things for Healthcare and Industry; Springer: Cham, Switzerland, 2022; pp. 327–352. [Google Scholar]

- Rubleva, O.; Emelyanova, E.; Mozharova, T.; Polshakova, N.; Gavrilovskaya, N. Development of a chatbot for a car service. In Proceedings of the AIP Conference Proceedings; AIP Publishing: Melville, NY, USA, 2023; Volume 2700, p. 040016. [Google Scholar]

- Gao, Y.; Tong, W.; Wu, E.Q.; Chen, W.; Zhu, G.; Wang, F.Y. Chat with ChatGPT on interactive engines for intelligent driving. IEEE Trans. Intell. Veh. 2023, 8, 2034–2036. [Google Scholar] [CrossRef]

- Deng, J.; Lin, Y. The benefits and challenges of ChatGPT: An overview. Front. Comput. Intell. Syst. 2022, 2, 81–83. [Google Scholar] [CrossRef]

- Ray, P.P. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet Things-Cyber-Phys. Syst. 2023, 3, 121–154. [Google Scholar] [CrossRef]

- Abdullah, M.; Madain, A.; Jararweh, Y. ChatGPT: Fundamentals, applications and social impacts. In Proceedings of the 2022 Ninth International Conference on Social Networks Analysis, Management and Security (SNAMS), Milan, Italy, 28 November–1 December 2022; pp. 1–8. [Google Scholar]

- Gill, S.S.; Kaur, R. ChatGPT: Vision and challenges. Internet Things-Cyber-Phys. Syst. 2023, 3, 262–271. [Google Scholar] [CrossRef]

- Pavlick, E. Symbols and grounding in large language models. Philos. Trans. R. Soc. A 2023, 381, 20220041. [Google Scholar] [CrossRef] [PubMed]

- Boukhers, Z.; Bouabdallah, A. Vision and natural language for metadata extraction from scientific PDF documents: A multimodal approach. In Proceedings of the 22nd ACM/IEEE Joint Conference on Digital Libraries, Cologne, Germany, 20–24 June 2022; pp. 1–5. [Google Scholar]

- Qureshi, R.; Shaughnessy, D.; Gill, K.A.; Robinson, K.A.; Li, T.; Agai, E. Are ChatGPT and large language models “the answer” to bringing us closer to systematic review automation? Syst. Rev. 2023, 12, 72. [Google Scholar] [CrossRef] [PubMed]

- Diniz da Silva, M.B.; Vieira, E.; Silva, I.; Silva, D.; Ferrari, P.; Rinaldi, S.; Carvalho, D.F. A customer feedback platform for vehicle manufacturing in Industry 4.0. In Proceedings of the 2018 IEEE Symposium on Computers and Communications (ISCC), Natal, Brazil, 25–28 June 2018; pp. 01249–01254. [Google Scholar]

- Silva, M.; Flores, T.; Andrade, P.; Silva, J.; Silva, I.; Costa, D.G. An Online Unsupervised Machine Learning Approach to Detect Driving Related Events. In Proceedings of the IECON 2022—48th Annual Conference of the IEEE Industrial Electronics Society, Brussels, Belgium, 17–20 October 2022; pp. 1–6. [Google Scholar]

- Francis, J.; Cates, K.; Caldiera, G. SmarTxT: A Natural Language Processing Approach for Efficient Vehicle Defect Investigation. Transp. Res. Rec. 2023, 2677, 1579–1592. [Google Scholar] [CrossRef]

- Du, H.; Teng, S.; Chen, H.; Ma, J.; Wang, X.; Gou, C.; Li, B.; Ma, S.; Miao, Q.; Na, X.; et al. Chat with chatgpt on intelligent vehicles: An ieee tiv perspective. IEEE Trans. Intell. Veh. 2023, 8, 2020–2026. [Google Scholar] [CrossRef]

- Aljelawy, Q.M.; Salman, T.M. License plate recognition in slow motion vehicles. Bull. Electr. Eng. Inform. 2023, 12, 2236–2244. [Google Scholar] [CrossRef]

- Jindal, A.; Ghosh, R. Word and character segmentation in ancient handwritten documents in Devanagari and Maithili scripts using horizontal zoning. Expert Syst. Appl. 2023, 225, 120127. [Google Scholar] [CrossRef]

- Celsi, L.R.; Moreno, J.F.C.; Kieffer, F.; Paduano, V. HR-Specific NLP for the Homogeneous Classification of Declared and Inferred Skills. Appl. Artif. Intell. 2022, 36, 2145639. [Google Scholar] [CrossRef]

- Peyton, K.; Unnikrishnan, S. A comparison of chatbot platforms with the state-of-the-art sentence BERT for answering online student FAQs. Results Eng. 2023, 17, 100856. [Google Scholar] [CrossRef]

- Channabasamma; Suresh, Y.; Manusha Reddy, A. A Contextual Model for Information Extraction in Resume Analytics Using NLP’s Spacy. In Inventive Computation and Information Technologies; Lecture Notes in Networks and Systems; Springer: Singapore, 2021; Volume 173 LNNS, pp. 395–404. [Google Scholar]

- Huang, Y. How To Create A Doc ChatBot That Learns Everything For You, In 15 Minutes. Available online: https://levelup.gitconnected.com/how-to-create-a-doc-chatbot-that-learns-everything-for-you-in-15-minutes-364fef481307 (accessed on 23 August 2023).

- Liu, J. LlamaIndex. Available online: https://github.com/jerryjliu/llama_index (accessed on 23 August 2023).

- Documentation, L. Introduction. Available online: https://python.langchain.com/docs/get_started/introduction.html (accessed on 23 August 2023).

- API, O. OpenAI API. Available online: https://openai.com/blog/openai-api (accessed on 23 August 2023).

- AO, A. Langchain Ask PDF (Tutorial). Available online: https://github.com/alejandro-ao/langchain-ask-pdf (accessed on 23 August 2023).

- Documentation, S. Streamlit Documentation. Available online: https://docs.streamlit.io/ (accessed on 23 August 2023).

- Allahyari, M. Leveraging LangChain and Large Language Models for Accurate PDF-Based Question Answering. Available online: https://github.com/mallahyari/drqa (accessed on 23 August 2023).

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Toronto, ON, Canada, 2019; pp. 3982–3992. [Google Scholar]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165v4. [Google Scholar]

- Pricing, O. OpenAI Pricing. Available online: https://openai.com/pricing (accessed on 23 August 2023).

- Documentation, H.F. All-Mpnet-Base-v2. Available online: https://huggingface.co/sentence-transformers/all-mpnet-base-v2 (accessed on 23 August 2023).

- Jiménez, S.; Favela, J.; Quezada, Á.; Alanis, A.; Castillo, E.; Villegas, E. Alexa to support patients with dementia and family caregivers in challenging behaviors. In Proceedings of the World Conference on Information Systems and Technologies, Online, 12–14 April 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 336–345. [Google Scholar]

- Hong, D.; Cho, C.H. Factors affecting innovation resistance of smartphone AI Voice Assistants. Int. J. Hum. Comput. Interact. 2023, 39, 2557–2572. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).