Robust Chi-Square in Extreme and Boundary Conditions: Comments on Jak et al. (2021)

Abstract

:1. Introduction

2. Preliminaries

2.1. Derivative May Not Be Zero

2.2. Unstable Computation on the Border

2.3. Asymptotic Results Failure Due to Border Conditions

2.4. The Log-Likelihood Correction Factor

2.5. The Log-Likelihood Correction Factor Dependence on the Convergence Criteria

2.6. The Log-Likelihood Correction Factor for Poorly Identified or Unidentified Models

2.7. The Log-Likelihood Correction Factor Dependence on Sample Size

3. Mplus Estimation of Two-Level Models via the EM/EMA Algorithm

4. The Log-Likelihood Correction Factor

The New Log-Likelihood Correction Factor in Extreme and Boundary Solutions

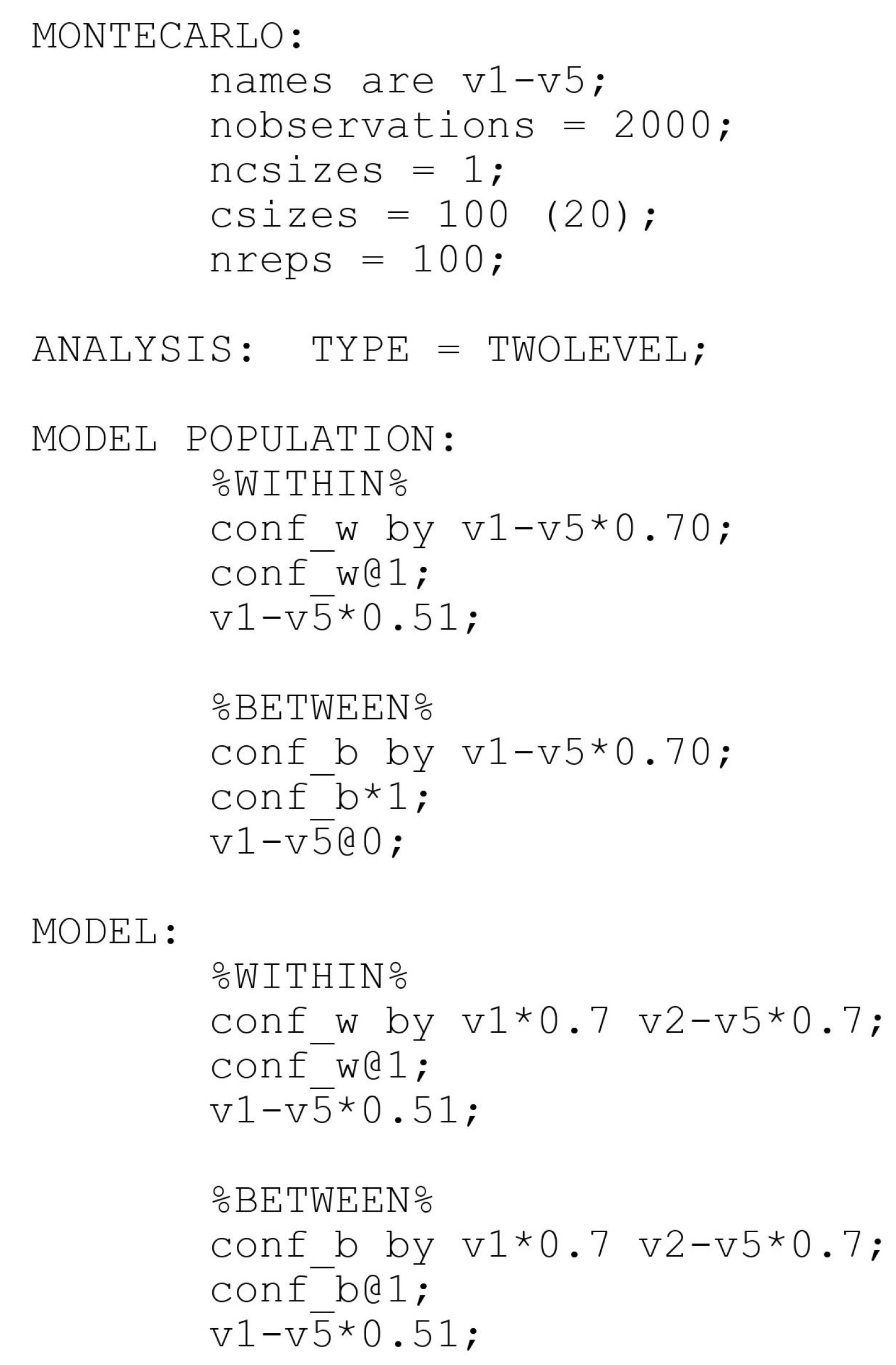

5. Simulation Study

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Jak, S.; Jorgensen, T.D.; Rosseel, Y. Evaluating cluster-level factor models with lavaan and Mplus. Psych 2021, 3, 134–152. [Google Scholar] [CrossRef]

- Muthén, B.O.; Satorra, A. Multilevel aspects of varying parameters in structural models. In Multilevel Analysis of Educational Data; Academic Press: Cambridge, MA, USA, 1989; pp. 87–99. [Google Scholar]

- Muthén, B.O. Mean and Covariance Structure Analysis of Hierarchical Data; UCLA Statistics Series #62; UCLA: Los Angeles, CA, USA, 1990. [Google Scholar]

- Muthén, L.K.; Muthén, B.O. Mplus User’s Guide, 8th ed.; Muthén & Muthén: Los Angeles, CA, USA, 2017. [Google Scholar]

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum Likelihood from Incomplete Data via the EM Algorithm. J. R. Stat. Soc. Ser. B 1977, 39, 1–38. [Google Scholar]

- Satorra, A.; Bentler, P.M. Scaling Corrections for Chi-Square Statistics in Covariance Structure Analysis. In Proceedings of the Business and Economic Statistics Section of the American Statistical Association, Atlanta, GA, USA, 24–28 August 1988; pp. 308–313. [Google Scholar]

- Yuan, K.; Bentler, P.M. Three Likelihood-Based Methods for Mean and Covariance Structure Analysis With Nonnormal Missing Data. Sociol. Methodol. 2000, 30, 167–202. [Google Scholar] [CrossRef]

- Asparouhov, T.; Muthén, B. Multivariate Statistical Modeling with Survey Data. In Proceedings of the Federal Committee on Statistical Methodology (FCSM) Research Conference, Washington, DC, USA, 14 November 2005. [Google Scholar]

- Muthén, L.K.; Muthén, B.O. How to use a Monte Carlo study to decide on sample size and determine power. Struct. Equ. Model. 2002, 4, 599–620. [Google Scholar] [CrossRef]

| Generating Model | Estimated Model | Estimated Model | Mplus 8.6 EMA | Mplus 8.7 EMA | Mplus 8.6 QN | Mplus 8.7 QN |

|---|---|---|---|---|---|---|

| Config | Uncon | 0.46 | 0.03 | - | - | |

| Config | Uncon | 0.17 | 0.03 | 0.17 | 0.03 | |

| Config | Config | 0.36 | 0.05 | - | - | |

| Config | Config | 0.10 | 0.03 | 0.10 | 0.03 | |

| Config | Shared | 0.45 | 0.06 | - | - | |

| Config | Shared | 0.17 | 0.07 | 0.11 | 0.05 | |

| Shared | Uncon | 0.46 | 0.03 | - | - | |

| Shared | Uncon | 0.17 | 0.03 | 0.17 | 0.03 | |

| Shared | Config | 0.35 | 0.05 | - | - | |

| Shared | Config | 0.09 | 0.03 | 0.09 | 0.03 | |

| Shared | Shared | 0.41 | 0.08 | - | - | |

| Shared | Shared | 0.19 | 0.05 | 0.10 | 0.03 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Asparouhov, T.; Muthén, B. Robust Chi-Square in Extreme and Boundary Conditions: Comments on Jak et al. (2021). Psych 2021, 3, 542-551. https://doi.org/10.3390/psych3030035

Asparouhov T, Muthén B. Robust Chi-Square in Extreme and Boundary Conditions: Comments on Jak et al. (2021). Psych. 2021; 3(3):542-551. https://doi.org/10.3390/psych3030035

Chicago/Turabian StyleAsparouhov, Tihomir, and Bengt Muthén. 2021. "Robust Chi-Square in Extreme and Boundary Conditions: Comments on Jak et al. (2021)" Psych 3, no. 3: 542-551. https://doi.org/10.3390/psych3030035

APA StyleAsparouhov, T., & Muthén, B. (2021). Robust Chi-Square in Extreme and Boundary Conditions: Comments on Jak et al. (2021). Psych, 3(3), 542-551. https://doi.org/10.3390/psych3030035