1. Introduction

Phishing attacks, particularly Smishing (SMS phishing), exploit human psychology by manipulating victims into compromising security measures, leading to the exposure of sensitive data [1, 2]. These attacks are particularly effective because they rely on trust and emotional triggers, often bypassing traditional cybersecurity defenses [3]. Recent reports indicate that Americans lost over $800 million to Smishing scams in 2022, underscoring the growing importance of effective detection strategies [4, 5]. Given the increasing prevalence of Smishing attacks, it is crucial to develop robust detection mechanisms. Current systems often struggle to identify and classify the diverse range of phishing attempts, especially those that are less frequent but equally harmful [6, 7]. This research explores integrating data augmentation and transformer-based embeddings to improve the effectiveness of multiclass Smishing detection models.

Despite advancements in machine learning and cybersecurity, conventional Smishing detection systems struggle to keep pace with the continuously evolving tactics of cybercriminals. This challenge is particularly significant when identifying Smishing in resource-constrained environments, which often have limited computational resources [8]. Many existing models focus solely on binary classification, neglecting the need to detect and classify the diverse variants of phishing within multiclass datasets [9]. Additionally, minority classes, representing less frequent but equally dangerous Smishing attempts, can go undetected due to the limitations of current models [10]. To address these gaps, this research builds upon the work of Mishra & Soni [8] by introducing a deep learning-based detection system designed to differentiate between legitimate messages, spam, and Smishing within a multiclass dataset. Utilizing the ‘SMS Phishing Dataset for Machine Learning and Pattern Recognition,’ this study highlights key features, such as URLs and email addresses, which are crucial for Smishing classification [8]. However, prior studies have been constrained by limited dataset diversity, binary classification schemes, and high computational overhead, all of which restrict their applicability to real-world resource-constrained environments. A new chain transformer model is proposed, integrating GPT-2 for synthetic data generation and BERT for embeddings to enhance model performance, particularly for minority classes [11, 12].

Through this research, the aim is to contribute to the field by developing a deep learning-based Smishing detection system that effectively classifies SMS messages into legitimate, spam, and phishing categories. This approach includes introducing a chain transformer model that leverages synthetic data generation and advanced embeddings to improve detection rates, especially for minority classes. Additionally, the performance of various deep learning architectures is evaluated to identify the most effective model for deployment on devices with limited computational resources [13]. While there has been some exploration of deep learning for Smishing detection, there is limited focus on how the choice of architecture impacts efficiency for multiclass classification [6]. This research intends to fill this gap by assessing how different deep learning models can enhance detection capabilities, particularly for underrepresented phishing types. The originality of this research lies in its comprehensive evaluation of ensemble models that combine deep learning transformer embeddings with traditional machine learning techniques, all with the goal of achieving both accuracy and efficiency.

The remainder of this paper is structured as follows. Section 2 reviews related research on Smishing detection using traditional and deep learning methods. Section 3 describes the dataset used in this research and the exploratory data analysis. Section 4 explains the design, implementation, and experimental setup of the proposed model. Section 5 presents the results and performance evaluations, and finally, Section 6 concludes the paper and outlines future research directions.

2. Related Works

The Smishing Detector model presented by Mishra & Soni [14] integrates URL behavior with SMS content analysis to enhance detection accuracy, making it highly effective in identifying Smishing attempts. The dual-analysis approach can be resource-intensive, posing challenges for performance in resource-constrained environments. Its effectiveness depends on the accuracy of the URL behavior analysis component. The model uses binary classification and achieves notable performance, with URL features being the most accurate at 94%. A neural network-based variant of the model [15] improved detection further, achieving a 97.93% accuracy by utilizing a backpropagation algorithm, though it remains computationally demanding. Other implementations focus on verifying URL authenticity and SMS content, achieving accuracy rates as high as 96.2%.

DSmishSMS [16] is a system designed to detect Smishing SMS messages by combining content analysis and machine learning. It focuses on extracting five features from SMS texts to classify messages, with phases for checking URL authenticity and analyzing SMS content. Although it is adaptive and can improve with new data, its reliance on machine learning requires regular updates and large datasets, which can lead to overfitting. Unlike neural network-based systems, DSmishSMS uses traditional classifiers to compare results, achieving an accuracy of 97.9% (for both Smishing and Spam combined). Despite its effectiveness, Smishing detection remains a challenge due to the limited information available in SMS messages.

SmiDCA [17] uses machine learning to detect Smishing attacks and can adapt as threats change over time. The model extracts features from Smishing messages and applies dimensionality reduction to select the 20 most relevant ones for classification. It employs correlation algorithms and machine learning techniques, achieving a 96.4% accuracy using a Random Forest classifier. However, the model needs large datasets for training, which may limit its effectiveness in real-world scenarios. SmiDCA uses binary classification.

The S-Detector model [18] detects Smishing messages by analyzing both SMS content and URL behavior. If a URL is included, it checks for Android package file downloads to identify potential Smishing attempts. If no URL is present, it applies keyword classification with the Naive Bayes algorithm to check for suspicious patterns. The model is lightweight and well-suited for resource-constrained environments, but it may have difficulty handling advanced evasion techniques used by attackers and might not perform well with unseen Smishing patterns. It uses binary classification to differentiate between Smishing and legitimate messages, offering reliable detection while focusing on efficiency.

The Compact On-device Pipeline Smishing detection model [19] is optimized for resource-constrained environments, providing real-time detection of Smishing attacks with minimal impact on performance. It uses a Disentangled Variational Autoencoder (VAE) to analyze both SMS content and URL features without the need for large URL databases, which is important for detecting short-lived malicious URLs. Its lightweight design makes it well suited to devices with limited computational resources. However, its compact structure may limit its effectiveness against more complex or evolving Smishing tactics. The model’s architecture balances performance efficiency and accuracy, addressing key security challenges in resource-constrained environments.

The Detection of Phishing in Mobile Instant Messaging model [20] analyzes message content using natural language processing, improving its ability to detect phishing attempts in mobile instant messaging. The integration of machine learning increases its adaptability. However, NLP models can be resource-heavy and typically require significant processing power [21]. The model’s accuracy relies on the quality of the training data and the effectiveness of the NLP techniques applied [22].

The paper on Investigating Evasive Techniques in SMS Spam Filtering [23] compares different machine learning models, focusing on their strengths and weaknesses in filtering SMS Spam. It provides useful insights into the effectiveness of various approaches. Because it is a comparative study, it does not offer a clear-cut solution but instead gives an overview of the different models. The performance of each model may vary based on the specific implementation and dataset used [24].

ExplainableDetector [25] uses transformer-based language models, which are highly effective at understanding and analyzing text. It also focuses on explainability, offering insights into how decisions are made. However, transformer models are resource-intensive and may not be suitable for all mobile devices [26]. The emphasis on explainability can add complexity to the model, which may affect its performance [7]. ExplainableDetector uses binary classification.

Privacy BERT-LSTM [27] combines BERT and LSTM to detect sensitive information in text, focusing on high accuracy in identifying privacy-related content. The combination of BERT and LSTM can be computationally intensive, requiring substantial processing power [28]. Privacy BERT-LSTM uses binary classification. In a recent study, researchers applied a Bidirectional LSTM within a federated learning framework to detect Smishing attacks, achieving an accuracy of 88.78% [29].

While the studies outlined above demonstrate progress in Smishing detection, most focus on binary classification or rely on computationally intensive architectures. This gap motivates the current work, which explores lightweight transformer combinations for multiclass detection.

Table 1 summarizes key characteristics and performance metrics of existing and proposed Smishing detection approaches.

4. Design and Implementation

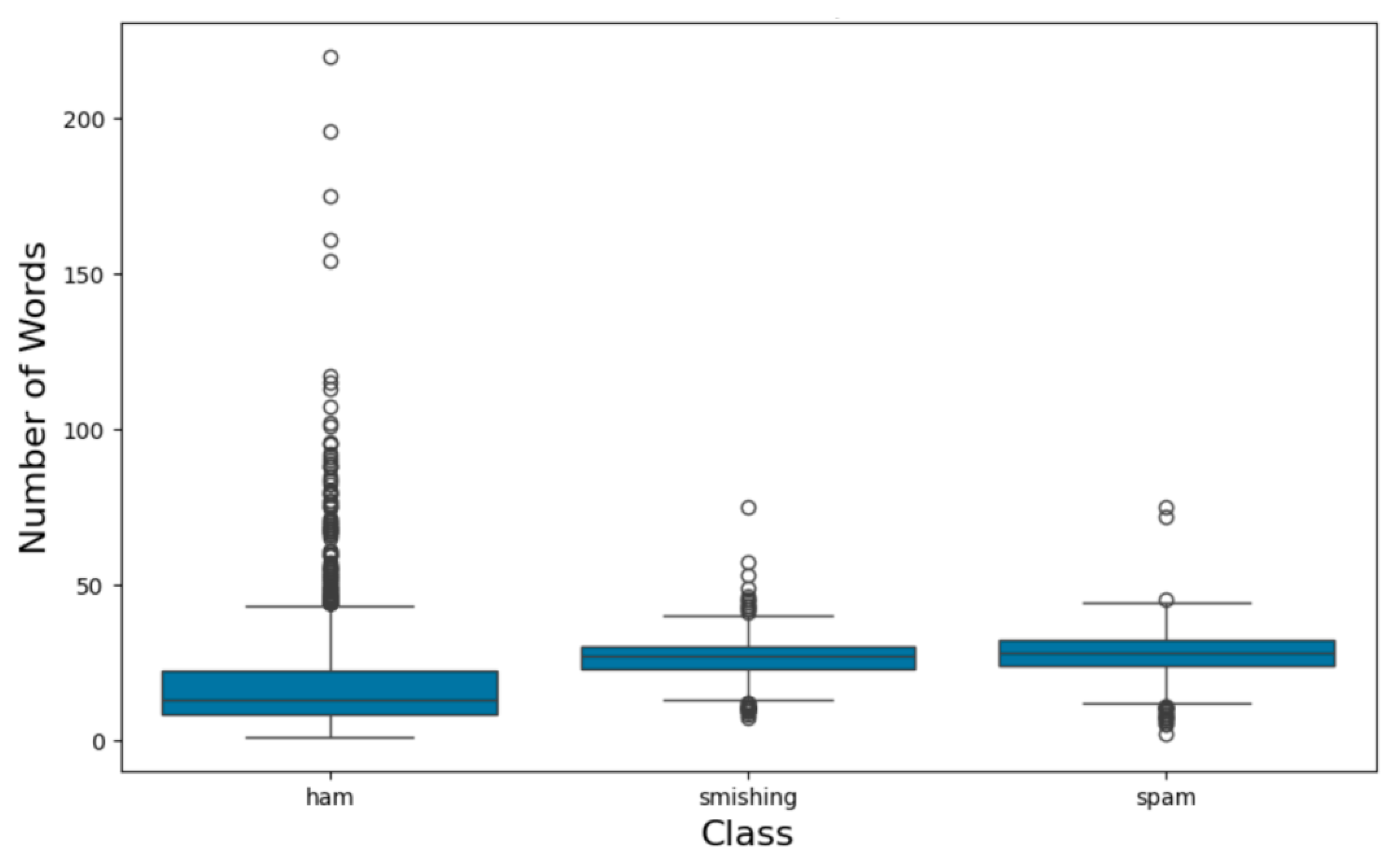

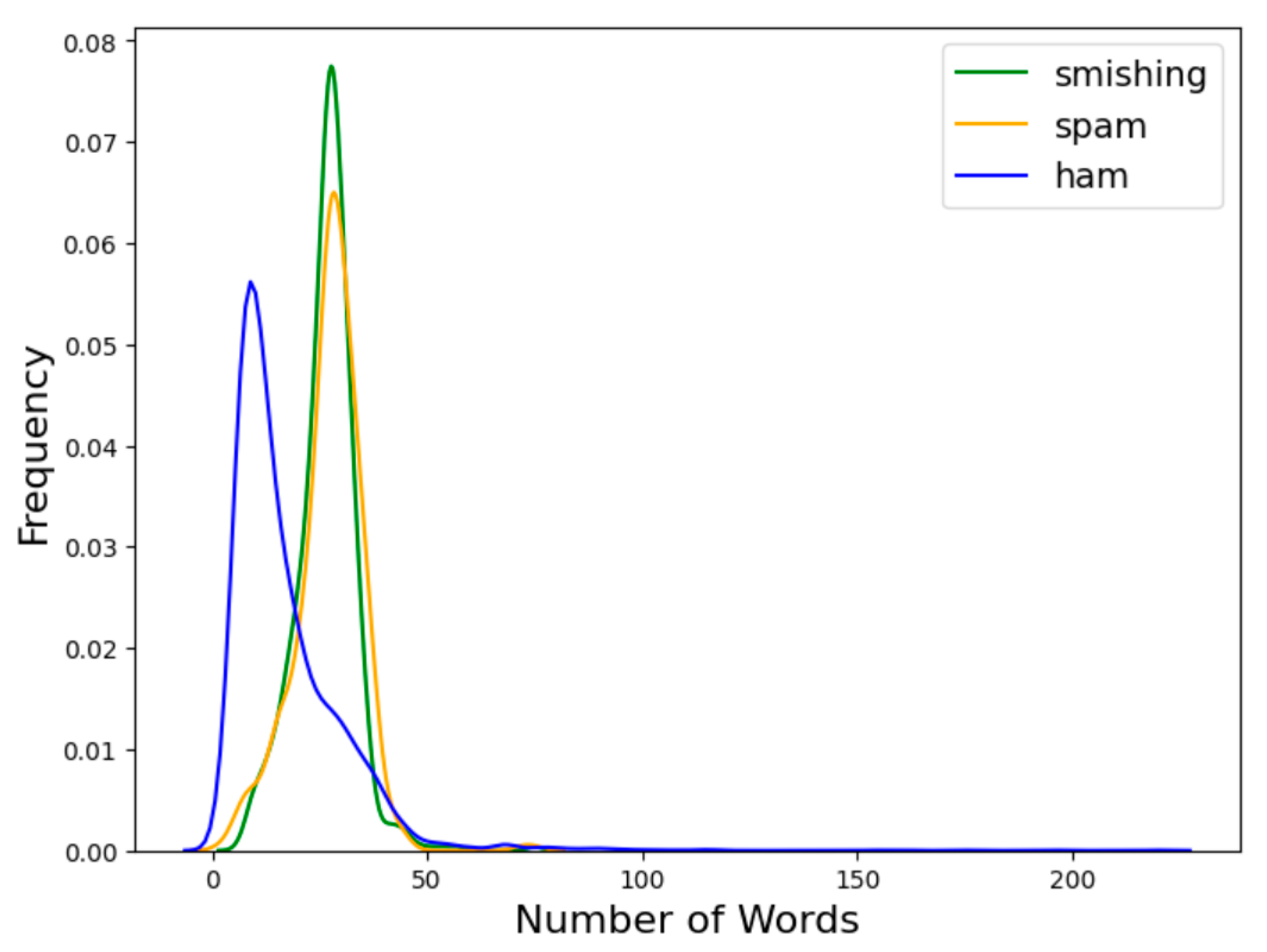

This research explores the effectiveness of deep learning models, particularly transformers, alongside traditional machine learning algorithms for detecting Smishing and Spam in text messages. By leveraging Python (v3.13) for generating synthetic data, fine-tuning transformer models, and conducting analysis and visualizations, this study aims to enhance the accuracy and efficiency of Smishing detection systems. The choice to employ scikit-learn version 1.5.2, rather than the newer 1.6.1 release from January 2025, ensures compatibility with existing methodologies while allowing for a focused evaluation of model performance. To enhance model performance, extensive preprocessing is applied to the dataset, including cleaning, tokenization, and handling missing values. Additionally, Exploratory Data Analysis (EDA) is conducted to assess data distribution, class imbalances, and key challenges, providing essential insights for model development and fine-tuning. The originality of this research lies in its comprehensive approach to integrating deep learning and traditional machine learning techniques, as well as its focus on addressing the unique challenges posed by minority classes in Smishing detection. By systematically evaluating the interplay between different model architectures and preprocessing techniques, this study contributes valuable insights to the field of cybersecurity, particularly in the context of phishing threats in resource-constrained environments.
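The cleaning and missing-value handling mentioned above can be illustrated with a minimal sketch. It assumes whitespace normalization as the cleaning step and uses simple regular expressions to flag URLs, email addresses, and phone numbers; the patterns and the names `clean_message` and `structural_flags` are hypothetical and are not taken from the study's code.

```python
import re

# Illustrative patterns only (assumptions, not the expressions used in the study).
URL_RE = re.compile(r"(https?://\S+|www\.\S+)", re.IGNORECASE)
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def clean_message(text):
    """Normalize whitespace; a missing value (None) becomes an empty string."""
    if text is None:
        return ""
    return re.sub(r"\s+", " ", str(text)).strip()


def structural_flags(text):
    """Flag whether a message contains a URL, an email address, or a phone number."""
    cleaned = clean_message(text)
    return {
        "has_url": bool(URL_RE.search(cleaned)),
        "has_email": bool(EMAIL_RE.search(cleaned)),
        "has_phone": bool(PHONE_RE.search(cleaned)),
    }
```

Such binary flags could be appended to the text features before classification; real SMS data would likely need stricter patterns than these.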

The dataset is divided into training and testing subsets, with data balancing techniques implemented on the training subset to address class imbalances. Feature selection plays a key role in optimizing efficiency by filtering out irrelevant features. Hyperparameter tuning and validation are performed on the training subset, while the testing subset is used as a benchmark to evaluate model performance and determine the most effective approach for Smishing detection. To ensure data integrity and prevent information leakage, all generated synthetic samples were confined strictly to the training subset and excluded from any validation and testing sets.
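The leakage-avoidance rule described above (split first, then balance only the training subset) can be sketched as follows. This is a minimal illustration, assuming scikit-learn's `train_test_split` with stratification. Random oversampling is used here purely as a simple stand-in for the SMOTE and GPT-2 balancing applied in the study, and the function name `split_then_balance` is hypothetical.

```python
from collections import Counter
import random

from sklearn.model_selection import train_test_split


def split_then_balance(texts, labels, test_size=0.2, seed=42):
    """Split first, then balance only the training portion."""
    X_train, X_test, y_train, y_test = train_test_split(
        texts, labels, test_size=test_size, stratify=labels, random_state=seed
    )
    rng = random.Random(seed)
    counts = Counter(y_train)
    target = max(counts.values())  # size of the largest training class
    X_bal, y_bal = list(X_train), list(y_train)
    for label, count in counts.items():
        pool = [x for x, y in zip(X_train, y_train) if y == label]
        # Stand-in for synthetic generation: resample existing training messages.
        extra = [rng.choice(pool) for _ in range(target - count)]
        X_bal.extend(extra)
        y_bal.extend([label] * len(extra))
    # The test subset is returned untouched, so no added sample can leak into it.
    return X_bal, y_bal, X_test, y_test
```

Because the added samples are drawn only from `X_train`, the class distribution of the test subset stays as it was in the original data.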

To improve Smishing detection, synthetic data generation is employed to balance the dataset. The GPT-2 Medium model, fine-tuned for minority classes, is used to create synthetic text messages that capture distinct linguistic patterns. GPT-2’s pre-trained language capabilities ensure that generated messages align with real-world patterns while not distorting the word count distribution of original training data, leading to superior model training and detection accuracy. To identify the best synthetic data generation method, SMOTE, GPT-2 Medium, and GPT-2 were evaluated using statistical metrics such as mean, standard deviation, minimum, and maximum values. While SMOTE and GPT-2-based balancing were used to address class imbalance, care was taken to minimize overfitting and semantic drift by applying controlled sampling ratios and temperature settings during text generation, ensuring that synthetic data remained representative of real-world patterns. GPT-2 Medium was selected for its ability to produce high-quality synthetic samples that accurately represent minority-class characteristics.
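The statistical comparison described above can be sketched with the standard library. The example below computes the mean, standard deviation, minimum, and maximum of per-message word counts, which could then be compared between the original and each synthetic dataset. Whitespace tokenization and the population standard deviation are assumptions, and `word_count_stats` is a hypothetical helper name.

```python
from statistics import mean, pstdev


def word_count_stats(messages):
    """Summarize the word-count distribution of a list of messages."""
    counts = [len(message.split()) for message in messages]
    return {
        "mean": mean(counts),
        "std": pstdev(counts),  # population standard deviation (an assumption)
        "min": min(counts),
        "max": max(counts),
    }
```

Calling the function on the original training messages and on each generated set yields comparable summaries, so a generator that shifts the mean or widens the range noticeably can be spotted quickly.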

This study enhances Smishing detection by generating synthetic data to create a more balanced dataset. The synthetic training data is generated using the GPT-2 Medium model and fine-tuned for each minority class. By using the model’s pre-training linguistic abilities, synthetic text messages are generated to capture the unique characteristics and semantic details of the minority class. This ensures that the generated messages not only align with the intended meaning but also maintain the word count distribution of the training data.

Table 2 presents the statistics of the dataset balanced using the SMOTE technique.

Table 3 displays the statistics of the GPT-2 Medium generated dataset. This is the dataset chosen for this research.

Table 4 shows the statistics of the GPT-2 generated dataset. There was no significant difference between datasets generated by GPT-2 medium and GPT-2, so the smaller model was chosen.

Shapley values are applied for feature selection due to their model-agnostic nature, allowing effective estimation of feature importance across different machine learning models. A major advantage of Shapley values is their ability to handle correlated features by assigning lower importance to redundant variables, ensuring that only unique contributions enhance model performance. In this study, Shapley values helped prioritize features while maintaining model accuracy and reducing classification time. The SHAP analysis revealed that structural indicators, such as the presence of URLs, email addresses, or phone numbers, contributed the most to classification, alongside semantic text embedding. To simplify interpretation, the mean absolute SHAP value was used, providing clear insights into the most influential attributes in the dataset.
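The mean absolute SHAP value used for ranking can be illustrated with a short sketch. It assumes the SHAP values have already been computed by an explainer and are available as a matrix with one row per sample and one column per feature. The function name and the feature names in the usage note are hypothetical.

```python
def mean_abs_shap(shap_values, feature_names):
    """Rank features by the mean absolute SHAP value across samples.

    shap_values: list of rows, one per sample, one value per feature.
    Returns (feature, score) pairs sorted from most to least influential.
    """
    n_samples = len(shap_values)
    scores = {
        name: sum(abs(row[j]) for row in shap_values) / n_samples
        for j, name in enumerate(feature_names)
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

For example, `mean_abs_shap([[0.4, -0.1], [-0.6, 0.1]], ["has_url", "word_count"])` ranks `has_url` first, because positive and negative contributions count equally once absolute values are taken.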

Following data preparation and splitting, text embeddings are generated using pre-trained transformer models, such as BERT, DistilBERT (Distilled BERT), and ELECTRA, from Hugging Face’s Transformers library. Although more recent transformer variants such as TinyBERT and MobileBERT are specifically optimized for mobile or edge deployment, this study focused on BERT, DistilBERT, and ELECTRA due to their well-established benchmark performance, broad availability of pretrained weights, and extensive prior validation in smishing and text classification research. These models provided a strong and reproducible foundation for comparative analysis without introducing additional variability from newer, less extensively evaluated architectures. These embeddings are extracted as high-dimensional feature vectors and serve as inputs for traditional machine learning algorithms such as logistic regression, random forest, and support vector machines [31]. The selection criteria for the machine learning algorithms were based on their proven effectiveness and computational efficiency in resource-constrained environments. Algorithms such as Support Vector Classifier (SVC) and Logistic Regression were chosen because they offer strong classification performance while maintaining relatively low memory and processing requirements, making them well-suited for deployment in lightweight or constrained settings. To enhance model robustness, k-fold cross-validation is employed, ensuring comprehensive performance evaluation while mitigating overfitting through iterative training on different data subsets [32]. Hyperparameter tuning is conducted using grid search, optimizing parameters such as learning rate, batch size, and regularization strength. The final model is evaluated using F1 score, precision, accuracy, and recall to ensure its ability to generalize effectively to new data [33].
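The combination of k-fold cross-validation and grid search described above can be sketched with scikit-learn. This is a minimal illustration under stated assumptions: randomly generated three-class vectors stand in for the transformer embeddings, only the regularization strength `C` of logistic regression is tuned, and the grid values, fold count, and macro F1 scoring are illustrative choices rather than the study's settings.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Stand-in for transformer embeddings: synthetic 3-class feature vectors.
X, y = make_classification(
    n_samples=300,
    n_features=32,
    n_informative=8,
    n_classes=3,
    random_state=0,
)

# Hypothetical grid over the regularization strength only.
param_grid = {"C": [0.1, 1.0, 10.0]}

# Stratified folds keep the class proportions similar in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid=param_grid,
    scoring="f1_macro",  # macro F1 weights each class equally
    cv=cv,
)
search.fit(X, y)

best_c = search.best_params_["C"]
best_f1 = search.best_score_  # mean cross-validated macro F1 of the best setting
```

In practice, `X` would be replaced by the embedding vectors of the training subset, and the same pattern applies to Random Forest or SVC with their own parameter grids.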

Overfitting happens when a model memorizes training data instead of learning patterns that generalize to new data, reducing effectiveness [34]. To prevent this, a small learning rate is adopted during training, allowing gradual updates and preventing drastic weight changes that can lead to overfitting [35].

Different synthetic data generation methods are combined with BERT-based language models and traditional machine learning algorithms to identify the best-performing model. The model development process begins with generating contextual embeddings from a pre-trained transformer model, effectively capturing the semantic meaning of input text. These embeddings serve as input features for traditional machine learning models such as logistic regression, random forest, and support vector machines [36]. By integrating the deep contextual understanding of transformers with the efficiency of traditional machine learning models, this hybrid approach enhances classification accuracy and interpretability [12]. The study incorporates synthetic data generation alongside BERT-based models and machine learning algorithms to optimize multi-class SMS classification.

This research evaluates 9 model variations by testing combinations of three transformer-based embeddings (BERT, Google ELECTRA, and DistilBERT) with three traditional machine learning models: Random Forest Classifier, Logistic Regression, and Support Vector Classifier (SVC). Each combination undergoes testing with various hyperparameter configurations, including batch size, epochs, and learning rates, to determine the most effective settings for improving overall model accuracy. To ensure reproducibility and clarity, the training and evaluation workflow of the proposed smishing detection model is summarized in pseudocode form.
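The nine embedding and classifier pairings described above can be enumerated with a short sketch. The string labels are illustrative names for the three embeddings and three classifiers, not identifiers from the study's code.

```python
from itertools import product

EMBEDDINGS = ["BERT", "ELECTRA", "DistilBERT"]
CLASSIFIERS = ["RandomForestClassifier", "LogisticRegression", "SVC"]


def model_variations():
    """Return every embedding/classifier pairing as a readable label."""
    return [f"{emb} + {clf}" for emb, clf in product(EMBEDDINGS, CLASSIFIERS)]
```

Each pairing would then be trained and scored under the hyperparameter configurations being compared.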

Algorithm 1 presents the main computational steps, including data preprocessing, model fine-tuning, and performance assessment of the chain transformer model.

Algorithm 1. Smishing Detection Workflow

Input: D_train, D_test, transformer model M

Output: performance metrics, saved results

1. Tokenize D_train and D_test using M’s tokenizer
2. Encode labels for all datasets
3. Load pretrained transformer model M with classification head
4. Fine-tune M on D_train
5. Evaluate M on D_test
6. Save metrics and generate ROC curves
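The evaluation step of Algorithm 1 can be illustrated with a small, dependency-free sketch that computes per-class precision, recall, and F1 from true and predicted labels. The function names and the label strings in the usage note are hypothetical. The study's own evaluation code is not shown in the paper, so this is only one way the metrics could be computed.

```python
def per_class_metrics(y_true, y_pred, labels):
    """Compute precision, recall, and F1 for each class label."""
    report = {}
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    return report


def macro_f1(report):
    """Average the per-class F1 scores so each class counts equally."""
    return sum(m["f1"] for m in report.values()) / len(report)
```

Reporting the metrics per class, for instance with `labels=["ham", "spam", "smishing"]`, makes it visible when a minority class such as Smishing is detected poorly even though overall accuracy is high.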

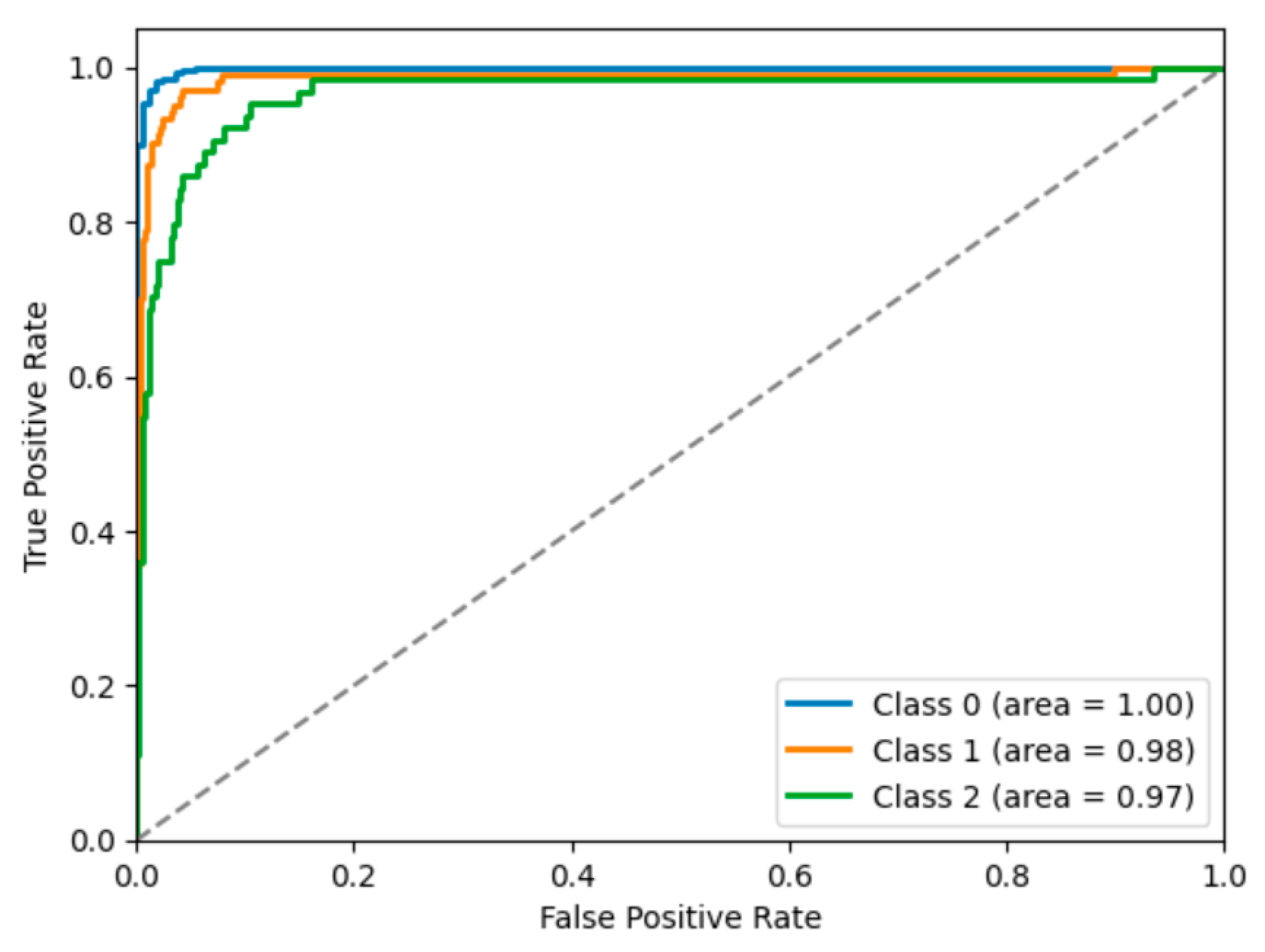

To identify the most suitable deep learning (DL) architecture for achieving the research objective, the following tables present a summary comparison of 47 evaluated architectures, highlighting their F1-Score, Precision, and Recall. The results indicate that the DL architecture integrating GPT-2 with BERT-uncased achieves the highest overall performance.

Table 5 presents the performance of models utilizing BERT embeddings alone, alongside those paired with ML algorithms such as SVC, Logistic Regression, and Random Forest. The results indicate that the BERT embedding without an ML algorithm, trained for 3 epochs, achieves the highest performance.

Table 6 presents the performance of models using DistilBERT embeddings, both without an ML algorithm and in combination with SVC, Random Forest, and Logistic Regression algorithms.

Table 7 presents the performance of models combining Google ELECTRA embeddings with no ML, Random Forest, Logistic Regression, and SVC ML algorithms.

Google ELECTRA models ran faster but unfortunately the results were not as accurate.

Finally, Table 8 shows the results of models that use no embeddings and rely only on the SVC, Logistic Regression, and Random Forest ML algorithms. Compared with these results, the models that included an embedding show improved performance.

Based on the results presented in Table 5, Table 6, Table 7 and Table 8, the deep learning architecture combining GPT-2 with BERT-uncased achieves better precision compared to models utilizing DistilBERT or Google ELECTRA. This architecture benefits from GPT-2’s ability to generate coherent and contextually relevant text. Also, it utilizes BERT-uncased’s proficiency in understanding the nuances of language through bidirectional context. By integrating these two transformer models, the architecture enhances the model’s capacity to capture complex linguistic patterns and improve classification accuracy in detecting Smishing and Spam messages. A full list of experiments can be provided upon request.

Precision and recall for the minority Smishing class are reported in Figure 4 and Figure 5, showing improved detection with the chosen model. However, additional research time is recommended to perform further hyperparameter tuning to enhance classification performance.

Establishing a clear threat model that distinguishes between Smishing, Spam, and Ham is essential for developing targeted detection strategies and improving user awareness, particularly in operational scenarios such as financial institutions or e-commerce platforms where users are frequently targeted by Smishing attacks. Smishing messages are specifically designed to deceive users into revealing sensitive information, while Spam consists of unsolicited advertisements that do not pose a direct threat. For instance, in a banking application, identifying Smishing messages can enable real-time alerts that warn users about potential phishing attempts, enhancing user trust and security. A precise definition of each class eliminates ambiguity in the classification logic, grounding the classification scheme in practical use cases, such as customer support systems that filter harmful messages while allowing legitimate communications. This strategic approach to multiclass framing provides actionable insights for improving detection systems, enabling organizations to tailor their responses based on the specific threat posed by each class and effectively address the unique challenges posed by minority classes in Smishing detection.