Threat Intelligence Extraction Framework (TIEF) for TTP Extraction

Abstract

1. Introduction

- Proposes a novel Threat Intelligence Extraction Framework (TIEF) that automatically extracts TTPs from unstructured threat reports, maps IOCs to MITRE ATT&CK sub-techniques, and generates threat intelligence in STIX 2.1 format for interoperability;

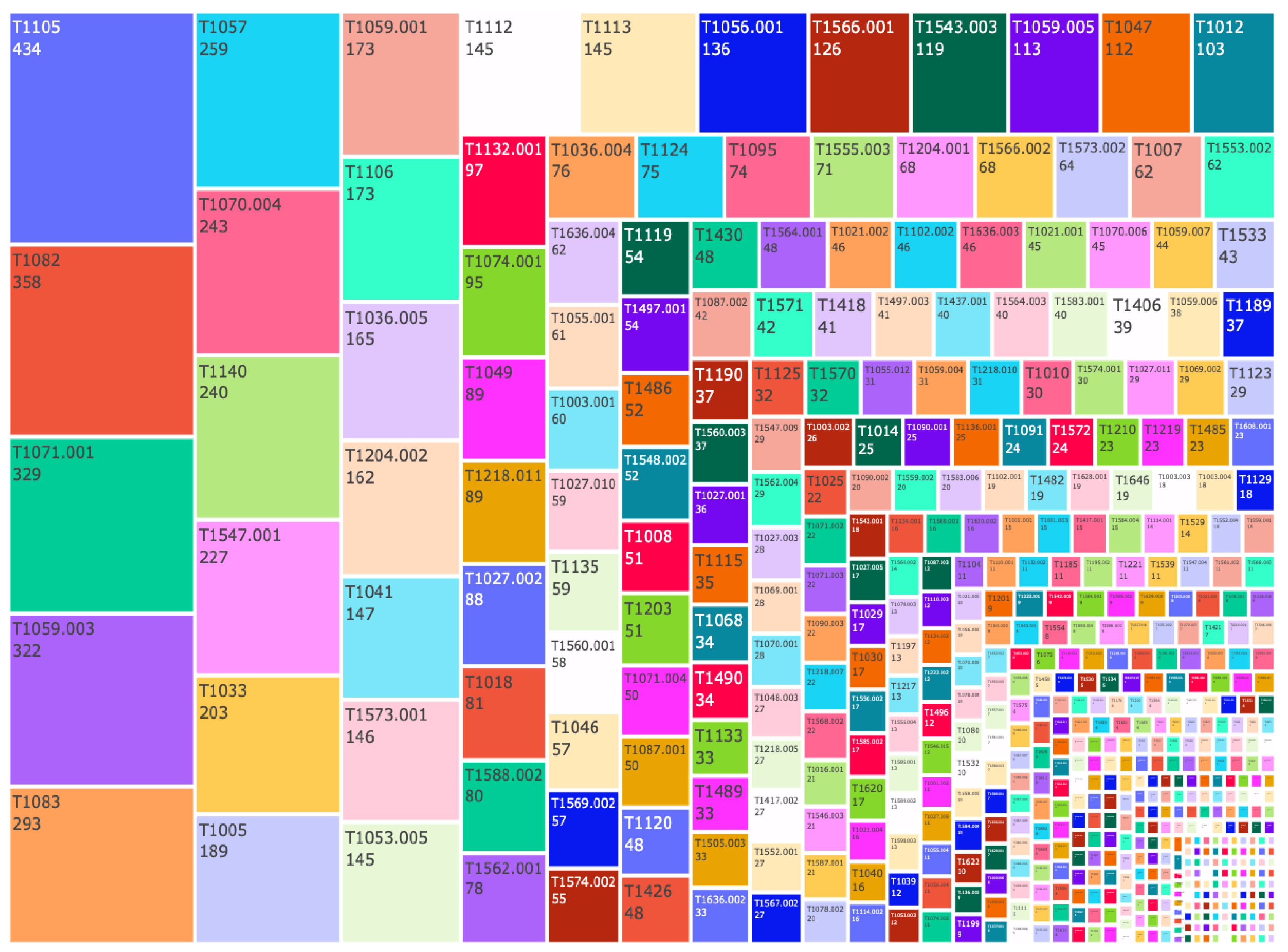

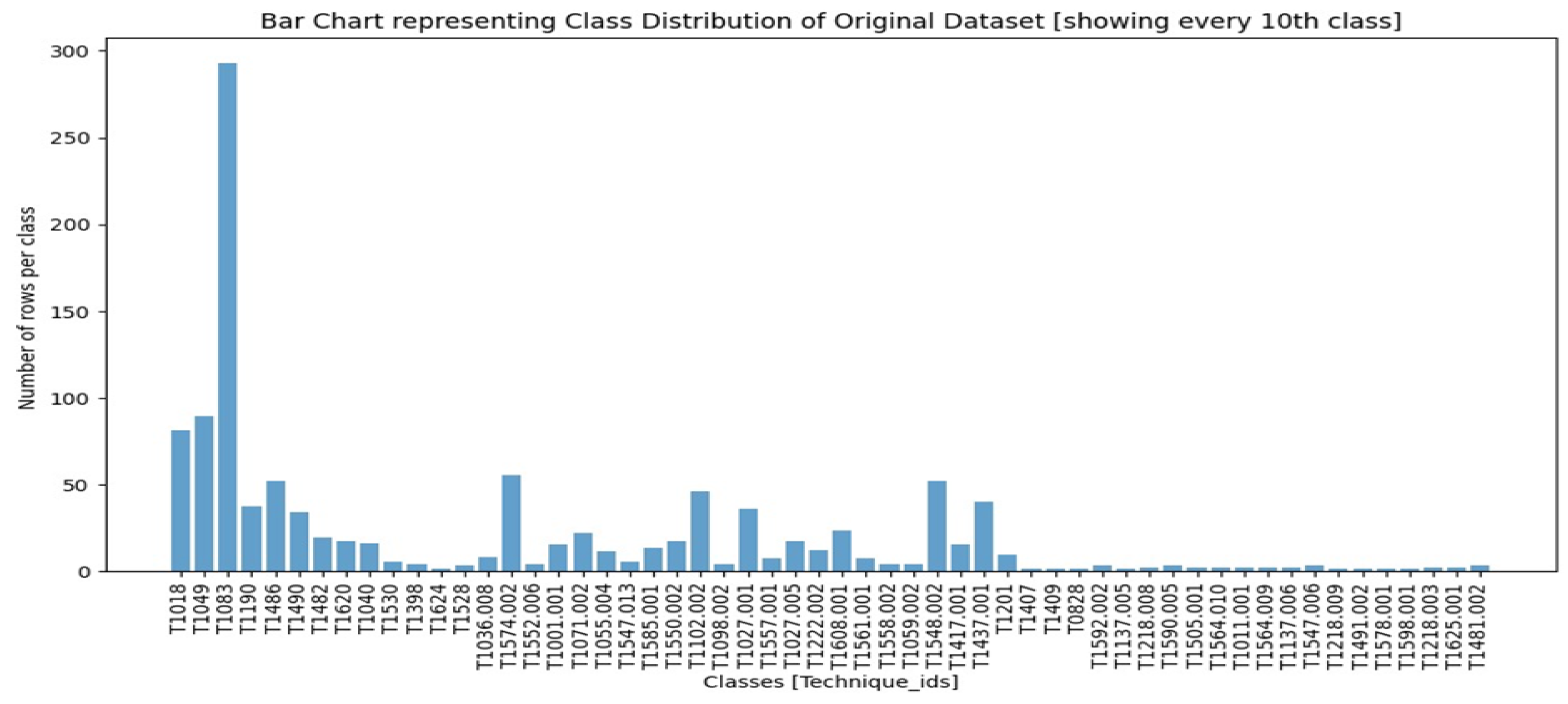

- Designs and implements a multi-class classification model capable of identifying 560 sub-techniques across the MITRE ATT&CK domains of ICS, Enterprise, and Mobile, enabling precise sub-technique-level identification of attacker behaviors;

- Constructs a TTP classifier dataset through the application of Large Language Model (LLM)-driven data generation and augmentation techniques that mitigate class imbalance and ensure equitable representation of low-frequency sub-techniques, enhancing the classifier’s robustness and granularity;

- Introduces a sentence grouping methodology based on semantic correlation rather than simple keyword matching that captures contextual relationships within threat reports, improving adaptability to evolving attack patterns.

2. Literature Review

3. Background and Preliminaries

- Structured Threat Information eXpression (STIX): STIX 2.1 is a standardized language and format for exchanging CTI, facilitating effective sharing, storage, and analysis of cyber threat information. The OASIS Cyber Threat Intelligence Technical Committee develops and maintains STIX. STIX 2.1 structures threat data in a JavaScript Object Notation (JSON) format and includes structured threat information, typically comprising various STIX objects such as Indicator, Attack Pattern, Threat Actor, Report, and their inter-relationships, as illustrated in Figure 1. The adoption of the STIX 2.1 format ensures that threat information is easily shareable and comprehensible across different security platforms, improving collaboration and threat response capabilities within the cybersecurity community.

- Indicators of Compromise (IOCs): IOCs are digital artifacts that serve as forensic evidence of a system or network breach. Cybersecurity professionals utilize these IOCs to identify and analyze security incidents, such as malware infections, data breaches, and unauthorized access. The specific IOCs used in this research are detailed in Table 1, which provides a basis for the identification of TTPs.

- Tactics, Techniques, and Procedures (TTPs): TTPs provide a structured framework to describe the behavior of a threat actor during a cyber-attack, detailing the specific methods they employ. Analyzing TTPs enables a shift from reactive defense mechanisms to a more proactive and strategic cybersecurity approach, allowing for improved anticipation and mitigation of potential threats.

- Tactics: Tactics represent the high-level objectives that an attacker seeks to accomplish, such as gaining initial access, escalating privileges, exfiltrating data, or disrupting operations. Understanding these high-level goals is crucial to developing models that can predict and mitigate potential attack strategies.

- Techniques: Techniques refer to the specific methods used by attackers to achieve their tactical objectives, analogous to tools in a toolbox. These methods may include exploiting vulnerabilities, employing social engineering tactics, such as phishing emails, or deploying malware. Accurate identification and classification of these techniques are essential to improve cybersecurity defenses.

- Procedures: Procedures are the detailed step-by-step instructions that attackers follow to execute a specific technique. Representing the most granular level of an attack, these procedures outline the precise actions taken during an attack.
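The tactic, technique, sub-technique, and procedure levels described above form a simple hierarchy. The following is a minimal sketch of that hierarchy as a data structure; the class names `Tactic` and `Technique` and the helper `flatten` are hypothetical and are not part of TIEF. The example entry uses the ATT&CK sub-technique T1547.001 (Registry Run Keys / Startup Folder), and the procedure text is illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class Technique:
    technique_id: str                                   # e.g. "T1547"
    name: str                                           # the method used to reach the goal
    sub_techniques: dict = field(default_factory=dict)  # sub-technique id -> name
    procedures: list = field(default_factory=list)      # free-text, step-level descriptions


@dataclass
class Tactic:
    name: str                                           # the attacker's high-level objective
    techniques: list = field(default_factory=list)


# Illustrative entry; the procedure sentence is invented for this sketch.
persistence = Tactic(
    name="Persistence",
    techniques=[
        Technique(
            technique_id="T1547",
            name="Boot or Logon Autostart Execution",
            sub_techniques={"T1547.001": "Registry Run Keys / Startup Folder"},
            procedures=["Malware adds an entry under a Run key so that it starts at logon."],
        )
    ],
)


def flatten(tactic):
    """Return (tactic, technique, sub-technique id) triples, one per sub-technique."""
    return [
        (tactic.name, tech.name, sub_id)
        for tech in tactic.techniques
        for sub_id in tech.sub_techniques
    ]


print(flatten(persistence))
```

Flattening the hierarchy into triples like this is one way to obtain the sub-technique-level labels that a classifier would predict.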

4. Threat Intelligence Extraction Framework (TIEF)

Algorithm 1: Algorithm for TTP extraction

4.1. Raw Report Ingestion and Text Preprocessing

4.2. Contextual Sentence Grouping via Topic Modeling

4.3. IOC Extraction and Soft Tagging

4.4. Multi-Label TTP Classification

4.4.1. Training Data Construction and Augmentation

4.4.2. Model Selection and Hyperparameter Optimization

4.5. STIX 2.1 Object Generation

- Attack Pattern Properties: Figure 9 represents the Attack Pattern STIX object.

- type: Implicitly set by creating an AttackPattern object;

- external_references: Mapped to a list containing source_name (“mitre-attack”) and external_id (technique_id);

- name: Combines technique_name and sub_tech_name;

- description: Mapped to the chunks variable, containing descriptive text for each attack pattern;

- aliases: Mapped to a list containing technique_name and sub_tech_name for alternative identification;

- kill_chain_phases: Mapped to the tactic variable (from tactic_name), with phase_name.
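The Attack Pattern mapping above can be sketched with the Python standard library alone, by building the STIX 2.1 object as a plain dictionary. This is an illustration under stated assumptions, not the authors' implementation: the function name `build_attack_pattern`, the `"technique: sub-technique"` name format, and the lowercase hyphenated `phase_name` are assumptions, while the variable names `technique_id`, `technique_name`, `sub_tech_name`, `tactic_name`, and `chunks` follow the property list.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp():
    """Current UTC time with millisecond precision, as STIX 2.1 timestamps require."""
    return datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"


def build_attack_pattern(technique_id, technique_name, sub_tech_name, tactic_name, chunks):
    """Assemble an Attack Pattern object from the classifier output (sketch)."""
    now = stix_timestamp()
    return {
        "type": "attack-pattern",
        "spec_version": "2.1",
        "id": f"attack-pattern--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        # Assumed format for combining the two names.
        "name": f"{technique_name}: {sub_tech_name}",
        "description": chunks,
        "aliases": [technique_name, sub_tech_name],
        "kill_chain_phases": [
            {
                "kill_chain_name": "mitre-attack",
                # Assumed normalization: "Privilege Escalation" -> "privilege-escalation".
                "phase_name": tactic_name.lower().replace(" ", "-"),
            }
        ],
        "external_references": [
            {"source_name": "mitre-attack", "external_id": technique_id}
        ],
    }


attack_pattern = build_attack_pattern(
    technique_id="T1547.001",
    technique_name="Boot or Logon Autostart Execution",
    sub_tech_name="Registry Run Keys / Startup Folder",
    tactic_name="Privilege Escalation",
    chunks="The sample writes a Run key so that it is launched at every logon.",
)
print(json.dumps(attack_pattern, indent=2))
```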

- Indicator Object Properties: Figure 10 denotes the Indicator STIX object.

- type: Implicitly set by creating an Indicator object;

- name: Mapped to name, dynamically generated as “ioc_type Indicator” (e.g., “ipv4 Indicator”);

- description: Mapped to description, containing ioc_type and ioc_value;

- indicator_types: Set to (“malicious-activity”), categorizing the Indicator as malicious;

- pattern: Mapped from ioc_mapping, formatted according to ioc_type and ioc_value;

- pattern_type: Set to “stix”, indicating that the STIX pattern language is used.
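As an illustration of how the pattern property might be derived from ioc_type and ioc_value, the sketch below formats a STIX pattern string and wraps it in an Indicator dictionary. The contents of `ioc_mapping`, the set of IOC types it covers, and the function names are assumptions made for this example; only the property names and the “ioc_type Indicator” naming rule come from the list above.

```python
import uuid
from datetime import datetime, timezone

# Hypothetical mapping from IOC type to a STIX pattern template.
ioc_mapping = {
    "ipv4": "[ipv4-addr:value = '{value}']",
    "domain": "[domain-name:value = '{value}']",
    "url": "[url:value = '{value}']",
    "email": "[email-addr:value = '{value}']",
    "md5": "[file:hashes.MD5 = '{value}']",
    "filename": "[file:name = '{value}']",
}


def format_pattern(ioc_type, ioc_value):
    """Build a STIX pattern; backslashes and single quotes in the value are escaped."""
    if ioc_type not in ioc_mapping:
        raise ValueError(f"no STIX pattern template for IOC type {ioc_type!r}")
    escaped = ioc_value.replace("\\", "\\\\").replace("'", "\\'")
    return ioc_mapping[ioc_type].format(value=escaped)


def build_indicator(ioc_type, ioc_value):
    """Assemble an Indicator object for a single extracted IOC (sketch)."""
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": f"{ioc_type} Indicator",
        "description": f"{ioc_type}: {ioc_value}",
        "indicator_types": ["malicious-activity"],
        "pattern": format_pattern(ioc_type, ioc_value),
        "pattern_type": "stix",
        "valid_from": now,  # required by STIX 2.1; set to creation time here
    }


indicator = build_indicator("ipv4", "192.168.1.1")
print(indicator["name"], indicator["pattern"])
```

Rejecting unknown IOC types keeps unsupported values from silently producing malformed patterns.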

- Report Object Properties: Figure 11 denotes the Report STIX object.

- type: Implicitly set by creating a Report object;

- name: Mapped to name, dynamically generated as “ioc_type Indicator”;

- description: Mapped to description, containing ioc_type and ioc_value;

- report_types: Set to (“threat-report”), indicating the report’s content type as a threat report;

- published: Automatically set to the current date and time;

- object_refs: Mapped to all_ids, a list containing the IDs of all Indicator, Relationship, and AttackPattern objects used.
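A minimal sketch of the Report object follows, again as a plain dictionary. The function name `build_report` is hypothetical, the report name and description are passed in by the caller rather than derived, and the identifiers in `all_ids` are freshly generated placeholders standing in for objects created earlier in the pipeline.

```python
import uuid
from datetime import datetime, timezone


def build_report(name, description, all_ids):
    """Assemble a Report that references every generated object (sketch)."""
    if not all_ids:
        # STIX 2.1 requires object_refs to be present and non-empty.
        raise ValueError("a Report must reference at least one object")
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"
    return {
        "type": "report",
        "spec_version": "2.1",
        "id": f"report--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        "description": description,
        "report_types": ["threat-report"],
        "published": now,           # current date and time, as in the list above
        "object_refs": list(all_ids),
    }


# Placeholder identifiers for the Indicator, Relationship, and Attack Pattern objects.
all_ids = [
    f"indicator--{uuid.uuid4()}",
    f"relationship--{uuid.uuid4()}",
    f"attack-pattern--{uuid.uuid4()}",
]
report = build_report("Sample threat report", "Objects extracted from one report.", all_ids)
print(report["report_types"], len(report["object_refs"]))
```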

- Relationship Object Properties: Figure 12 denotes the Relationship STIX object.

- type: Implicitly set by creating a Relationship object;

- relationship_type: Set to “indicates”, indicating the relationship between the Indicator and Attack Pattern;

- description: Mapped to description, which combines ioc_value, technique_name, and sub_tech_name for descriptive context;

- source_ref: Mapped to indicator.id, representing the Indicator as the source;

- target_ref: Mapped to attack_pattern.id, representing the Attack Pattern as the target;

- start_time: Set to datetime.now(), representing the current time as the start of the relationship.
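The Relationship mapping, and the final step of packaging objects for exchange, might look like the sketch below. The function names `build_relationship` and `build_bundle`, the description wording, and the identifier checks are assumptions; the identifiers themselves are placeholders. Wrapping the objects in a STIX bundle is shown as one common way to serialize them and is not confirmed as the authors' approach.

```python
import json
import uuid
from datetime import datetime, timezone


def build_relationship(indicator_id, attack_pattern_id, ioc_value, technique_name, sub_tech_name):
    """Link an Indicator (source) to an Attack Pattern (target) with 'indicates' (sketch)."""
    if not indicator_id.startswith("indicator--"):
        raise ValueError("source_ref must be an Indicator identifier")
    if not attack_pattern_id.startswith("attack-pattern--"):
        raise ValueError("target_ref must be an Attack Pattern identifier")
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"
    return {
        "type": "relationship",
        "spec_version": "2.1",
        "id": f"relationship--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "relationship_type": "indicates",
        "description": f"{ioc_value} indicates {technique_name}: {sub_tech_name}",
        "source_ref": indicator_id,
        "target_ref": attack_pattern_id,
        "start_time": now,
    }


def build_bundle(objects):
    """Wrap STIX objects in a bundle so they can be exchanged as one JSON document."""
    return {"type": "bundle", "id": f"bundle--{uuid.uuid4()}", "objects": list(objects)}


relationship = build_relationship(
    indicator_id=f"indicator--{uuid.uuid4()}",
    attack_pattern_id=f"attack-pattern--{uuid.uuid4()}",
    ioc_value="192.168.1.1",
    technique_name="Boot or Logon Autostart Execution",
    sub_tech_name="Registry Run Keys / Startup Folder",
)
bundle = build_bundle([relationship])
print(json.dumps(bundle, indent=2))
```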

5. Results and Discussion

5.1. TIEF Model Validation

5.2. Error Analysis

5.3. Limitations

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| APT | Advanced Persistent Threat |

| CTI | Cyber Threat Intelligence |

| CVE | Common Vulnerabilities and Exposures |

| IOC | Indicator of Compromise |

| LLM | Large Language Model |

| NLP | Natural Language Processing |

| ROC AUC | Receiver Operating Characteristic Area under the Curve |

| SIEM | Security Information and Event Management |

| SVM | Support Vector Machine |

| TTP | Tactics, Techniques, and Procedures |

| STIX | Structured Threat Information eXpression |

| JSON | JavaScript Object Notation |

| ICS | Industrial Control Systems |

| PDF | Portable Document Format |

| UMAP | Uniform Manifold Approximation and Projection |

| HDBSCAN | Hierarchical Density-Based Spatial Clustering of Applications with Noise |

| POS | Part-of-Speech |

Appendix A. Extra Figure References

References

- Abdullahi, M.; Baashar, Y.; Alhussian, H.; Alwadain, A.; Aziz, N.; Capretz, L.F.; Abdulkadir, S.J. Detecting cybersecurity attacks in internet of things using artificial intelligence methods: A systematic literature review. Electronics 2022, 11, 198.

- Chakraborty, A.; Biswas, A.; Khan, A.K. Artificial intelligence for cybersecurity: Threats, attacks and mitigation. Artif. Intell. Societal Issues 2023, 231, 3–25.

- Sun, Z.; Ni, T.; Yang, H.; Liu, K.; Zhang, Y.; Gu, T.; Xu, W. Flora+: Energy-efficient, reliable, beamforming-assisted, and secure over-the-air firmware update in lora networks. ACM Trans. Sens. Netw. 2024, 20, 1–28.

- Li, J.; Wu, S.; Zhou, H.; Luo, X.; Wang, T.; Liu, Y.; Ma, X. Packet-level open-world app fingerprinting on wireless traffic. In Proceedings of the 2022 Network and Distributed System Security Symposium (NDSS’22), San Diego, CA, USA, 24–28 April 2022.

- Ni, T.; Lan, G.; Wang, J.; Zhao, Q.; Xu, W. Eavesdropping mobile app activity via Radio-Frequency energy harvesting. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023.

- Berady, A.; Jaume, M.; Tong, V.V.T.; Guette, G. From TTP to IoC: Advanced persistent graphs for threat hunting. IEEE Trans. Netw. Serv. Manage. 2021, 18, 1321–1333.

- You, Y.; Jiang, J.; Jiang, Z.; Yang, P.; Liu, B.; Feng, H.; Wang, X.; Li, N. TIM: Threat context-enhanced TTP intelligence mining on unstructured threat data. Cybersecurity 2022, 5, 3.

- Highly Evasive Attacker Leverages SolarWinds Supply Chain to Compromise Multiple Global Victims With SUNBURST Backdoor. Available online: https://cloud.google.com/blog/topics/threat-intelligence/evasive-attacker-leverages-solarwinds-supply-chain-compromises-with-sunburst-backdoor (accessed on 15 April 2025).

- Tan, Z.; Parambath, S.P.; Anagnostopoulos, C.; Singer, J.; Marnerides, A.K. Advanced Persistent Threats Based on Supply Chain Vulnerabilities: Challenges, Solutions, and Future Directions. IEEE Internet Things J. 2025, 12, 6371–6395.

- Froudakis, E.; Avgetidis, A.; Frankum, S.T.; Perdisci, R.; Antonakakis, M.; Keromytis, A. Uncovering Reliable Indicators: Improving IoC Extraction from Threat Reports. arXiv 2025, arXiv:2506.11325.

- Badger, L.; Johnson, C.; Waltermire, D.; Snyder, J.; Skorupka, C. Guide to Cyber Threat Information Sharing. In National Institute of Standards and Technology (NIST); National Institute of Standards and Technology (NIST): Gaithersburg, MD, USA, 2016.

- MITRE ATT&CK Framework. Available online: https://attack.mitre.org (accessed on 15 November 2024).

- Husari, G.; Al-Shaer, E.; Ahmed, M.; Chu, B.; Niu, X. Ttpdrill: Automatic and accurate extraction of threat actions from unstructured text of cti sources. In Proceedings of the 33rd Annual Computer Security Applications Conference, Orlando, FL, USA, 4–8 December 2017.

- Legoy, V.; Caselli, M.; Seifert, C.; Peter, A. Automated Retrieval of ATT&CK Tactics and Techniques for Cyber Threat Reports. arXiv 2020, arXiv:2004.14322.

- Aghaei, E.; Niu, X.; Shadid, W.; Al-Shaer, E. Securebert: A domain-specific language model for cybersecurity. In Proceedings of the International Conference on Security and Privacy in Communication Systems, Kansas City, MO, USA, 17–19 October 2022.

- Park, Y.; You, W. A Pretrained Language Model for Cyber Threat Intelligence. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing: Industry Track, Resorts World Convention Centre, Singapore, 6–10 December 2023.

- Alves, P.M.; Geraldo Filho, P.; Gonçalves, V.P. Leveraging BERT’s Power to Classify TTP from Unstructured Text. In Proceedings of the 2022 Workshop on Communication Networks and Power Systems (WCNPS), Fortaleza, Brazil, 17–18 November 2022.

- Chen, S.S.; Hwang, R.H.; Sun, C.Y.; Lin, Y.D.; Pai, T.W. Enhancing cyber threat intelligence with named entity recognition using bert-crf. In Proceedings of the GLOBECOM 2023-2023 IEEE Global Communications Conference, Kuala Lumpur, Malaysia, 4–8 December 2023.

- Kim, H.; Kim, H. Comparative experiment on TTP classification with class imbalance using oversampling from CTI dataset. Secur. Commun. Netw. 2022, 2022, 5021125.

- Rani, N.; Saha, B.; Maurya, V.; Shukla, S.K. TTPHunter: Automated Extraction of Actionable Intelligence as TTPs from Narrative Threat Reports. In Proceedings of the 2023 Australasian Computer Science Week, Melbourne, Australia, 31 January–3 February 2023.

- Rani, N.; Saha, B.; Maurya, V.; Shukla, S.K. TTPXHunter: Actionable Threat Intelligence Extraction as TTPs from Finished Cyber Threat Reports. Dig. Threats Res. Pract. 2024, 5, 1–19.

- Castaño, F.; Gil Lerchundi, A.; Orduna Urrutia, R.; Fernandez, E.F.; Alaiz-Rodríguez, R. Automating Cybersecurity TTP Classification Based on Unstructured Attack Descriptions. In Proceedings of the IX Jornadas Nacionales de Investigación En Ciberseguridad, Sevilla, Spain, 27–29 May 2024.

- Albarrak, M.; Pergola, G.; Jhumka, A. U-BERTopic: An urgency-aware BERT-Topic modeling approach for detecting cyberSecurity issues via social media. In Proceedings of the First International Conference on Natural Language Processing and Artificial Intelligence for Cyber Security, Lancaster, UK, 29–30 July 2024.

- Zhong, X.; Zhang, Y.; Liu, J. PenQA: A Comprehensive Instructional Dataset for Enhancing Penetration Testing Capabilities in Language Models. Appl. Sci. 2025, 15, 2117.

- Demirol, D.; Das, R.; Hanbay, D. A Novel Approach for Cyber Threat Analysis Systems Using BERT Model from Cyber Threat Intelligence Data. Symmetry 2025, 17, 587.

- Li, Z.X.; Li, Y.J.; Liu, Y.W.; Liu, C.; Zhou, N.X. K-CTIAA: Automatic Analysis of Cyber Threat Intelligence Based on a Knowledge Graph. Symmetry 2023, 15, 337.

- Alam, M.T.; Bhusal, D.; Nguyen, L.; Rastogi, N. Ctibench: A benchmark for evaluating llms in cyber threat intelligence. Adv. Neural Inf. Process. Syst. 2024, 37, 50805–50825.

- Yong, J.; Ma, H.; Ma, Y.; Yusof, A.; Liang, Z.; Chang, E.C. AttackSeqBench: Benchmarking Large Language Models’ Understanding of Sequential Patterns in Cyber Attacks. arXiv 2025, arXiv:2503.03170.

- Zhang, J.; Wen, H.; Li, L.; Zhu, H. UniTTP: A Unified Framework for Tactics, Techniques, and Procedures Mapping in Cyber Threats. In Proceedings of the 2024 IEEE 23rd International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Sanya, China, 17–21 December 2024.

- Meng, C.; Jiang, Z.; Wang, Q.; Li, X.; Ma, C.; Dong, F.; Ren, F.; Liu, B. Instantiating Standards: Enabling Standard-Driven Text TTP Extraction with Evolvable Memory. arXiv 2025, arXiv:2505.09261.

- MITRE ATT&CK—Techniques. Available online: https://attack.mitre.org/techniques (accessed on 31 July 2025).

- APT_REPORT: Collection of APT Campaign Reports. Available online: https://github.com/blackorbird/APT_REPORT.git (accessed on 31 July 2025).

- CTI-HAL: Cyber Threat Intelligence—Hierarchical Attention Learning. Available online: https://github.com/dessertlab/CTI-HAL (accessed on 31 July 2025).

- CTI-Bench: A Benchmark Dataset for Cyber Threat Intelligence Evaluation. Available online: https://github.com/xashru/cti-bench (accessed on 31 July 2025).

| IOC Element | Extraction Technique | Example |

|---|---|---|

| IPv4 | Regex | 192.168.1.1 |

| Domain | Regex | example.com |

| Email | Regex | name@domain.com |

| URL | Regex | https://example.com |

| Query ASN | Regex | AS13335 |

| Filename | Regex | document.txt |

| File Hash | Regex | d41d8cd98f00b204e9800998ecf8427e |

| File Path | Regex | /home/user/…/document.txt |

| CVE | Regex | CVE-2023-45678 |

| Registry Key | Regex | HKEY_LOCAL_MACHINE\… |

| Encryption Algorithm | Gazetteer | AES, DES |

| Communication Protocols | Gazetteer | TCP, HTTP |

| Data Object | Gazetteer | Desktop |
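To make the two extraction techniques in the table above concrete, the following sketch applies regular expressions to a handful of the listed IOC types and a gazetteer (a fixed term list) to two others. The specific patterns, the gazetteer contents beyond the table's own examples, and the function name `extract_iocs` are assumptions for illustration; the expressions used in TIEF are not given here, and production-grade patterns would need to handle defanged values and many more edge cases.

```python
import re

# Illustrative regular expressions for a subset of the IOC types.
IOC_PATTERNS = {
    "ipv4": re.compile(
        r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b"
    ),
    "cve": re.compile(r"\bCVE-\d{4}-\d{4,}\b"),
    "md5": re.compile(r"\b[a-fA-F0-9]{32}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "asn": re.compile(r"\bAS\d{1,10}\b"),
}

# Gazetteer entries taken from the examples in the table.
GAZETTEER = {
    "encryption_algorithm": {"AES", "DES"},
    "communication_protocol": {"TCP", "HTTP"},
}


def extract_iocs(text):
    """Return a dict mapping IOC type to the sorted, de-duplicated values found in text."""
    found = {}
    for ioc_type, pattern in IOC_PATTERNS.items():
        matches = sorted(set(pattern.findall(text)))
        if matches:
            found[ioc_type] = matches
    # Gazetteer lookup: exact, case-sensitive match on alphanumeric tokens.
    tokens = set(re.findall(r"[A-Za-z0-9]+", text))
    for ioc_type, terms in GAZETTEER.items():
        hits = sorted(terms & tokens)
        if hits:
            found[ioc_type] = hits
    return found


sample = (
    "The dropper at 192.168.1.1 exploited CVE-2023-45678 and beaconed over HTTP "
    "using AES; hash d41d8cd98f00b204e9800998ecf8427e, contact name@domain.com, AS13335."
)
for ioc_type, values in extract_iocs(sample).items():
    print(ioc_type, values)
```

Regular expressions suit IOCs with a rigid syntax, whereas the gazetteer covers terms that have no distinguishing format and can only be recognized by membership in a known list.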

| Aspect | HDP | BERTopic | NER |

|---|---|---|---|

| Approach | Bayesian nonparametric model | BERT embeddings + HDBSCAN | Entity-driven topic extraction |

| Strengths | Adapts topic count dynamically | Rich semantics, dynamic topics | Granular, entity-focused topics |

| Limitations | Computationally intensive | Demands transformer knowledge | Misses non-entity topics |

| Topic generation approach | Topics generated automatically | Topics generated automatically | Number of topics must be specified in advance |

| Column Name | Explanation |

|---|---|

| Technique Id | A unique identifier assigned to each technique. |

| Sub-Technique Id | A unique identifier assigned to each sub-technique. |

| Technique Name | Represents the methods adversaries use to achieve their goals. |

| Sub-technique Name | Provides a more detailed view of the primary techniques. |

| Tactic Name | A broader category or goal under which each technique falls, explaining the adversary’s intent. |

| Platform | The platforms (e.g., Windows, Linux, Mobile) targeted by each technique. |

| Procedure Description | Detailed, step-by-step accounts of how adversaries execute techniques, providing critical operational context. |

| Metrics | Non-Augmented Data | Augmented Data |

|---|---|---|

| ROC AUC | 97.45% | 96.9% |

| F1 Score | 35% | 93% |

| Metrics | DistilBERT | SecBERT | SecureBERT |

|---|---|---|---|

| Accuracy | 93.29% | 69.57% | 87.27% |

| ROC AUC | 96.67% | 75.81% | 91.00% |

| Hamming loss | 0.00062 | 0.00500 | 0.00250 |

| Validation loss | 0.00500 | 0.15000 | 0.02300 |

| Learning rate | | | |

| Batch size | 8 | 12 | 12 |

| Epochs | 12 | 10 | 10 |
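For readers less familiar with the multi-label metrics in the table above, the sketch below computes Hamming loss and a micro-averaged F1 score on a tiny hand-made label matrix. The data are invented for illustration, and micro-averaging is an assumption, since the averaging scheme behind the reported F1 score is not stated here.

```python
def hamming_loss(y_true, y_pred):
    """Fraction of individual label entries (sample x class cells) that are wrong."""
    total = sum(len(row) for row in y_true)
    wrong = sum(
        t != p
        for true_row, pred_row in zip(y_true, y_pred)
        for t, p in zip(true_row, pred_row)
    )
    return wrong / total


def micro_f1(y_true, y_pred):
    """F1 computed from true/false positives and false negatives pooled over all classes."""
    tp = fp = fn = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            if t and p:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Three samples, four classes (rows are samples, columns are binary class labels).
y_true = [[1, 0, 0, 0], [0, 1, 1, 0], [0, 0, 0, 1]]
y_pred = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1]]

print(round(hamming_loss(y_true, y_pred), 4))  # 2 wrong cells out of 12
print(micro_f1(y_true, y_pred))                # tp=3, fp=1, fn=1
```

Because Hamming loss is averaged over every sample-class cell, it becomes very small when there are hundreds of classes and each sample carries only a few labels, so it is best read alongside F1 rather than on its own.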

| Epoch | Training Loss | Validation Loss | F1 Score | ROC AUC | Hamming Loss | Runtime (s) | Samples per Sec | Steps per Sec |

|---|---|---|---|---|---|---|---|---|

| 1 | 0.000000 | 0.002694 | 0.933747 | 0.964762 | 0.000394 | 22.8199 | 524.192 | 16.389 |

| 2 | 0.000000 | 0.002744 | 0.933857 | 0.964838 | 0.000393 | 22.2589 | 537.403 | 16.802 |

| 3 | 0.000000 | 0.002877 | 0.933568 | 0.964492 | 0.000395 | 22.1404 | 540.279 | 16.892 |

| 4 | 0.000000 | 0.002899 | 0.933941 | 0.964665 | 0.000393 | 22.2326 | 538.040 | 16.822 |

| Paper Title | Classification Technique | No. of TTP Classes | Topic Modeling Technique | STIX Object Notation | F1 Score | Advantage | Disadvantage |

|---|---|---|---|---|---|---|---|

| TTPHunter [20] | Multi-class classification with linear classifier | 177 | No | No | 88% | Efficient classification with linear model | Limited to narrative reports without standardized format |

| TTPDrill [13] | Multi-class classification using SVM | 187 | No | Yes | 82.98% | STIX integration for standardized sharing | Extracts separate techniques without comprehensive context |

| TIM [7] | Multi-class classification using TCENet | 6 | No | Yes | 94.1% | High accuracy for covered TTPs | Limited to only 6 TTPs, does not reflect the full spectrum of threats |

| TTPXHunter [21] | Multi-class classification using fine-tuned SecureBERT | 193 | No | Yes | 97.09% | Domain-specific language model | One-to-one classifier limits multi-TTP extraction from single sentences |

| TIEF | Multi-label classification using TIEF classifier | 560 | BERTopic | Yes | 93.39% | Handles a wide range of TTPs while maintaining classification accuracy | Lacks domain-specific model benefits |

| Description (CTI-Bench) | Predicted | Correct Technique | Error Category | Explanation of Overlap |

|---|---|---|---|---|

| IBM OpenPages with Watson 8.3 and 9.0 could provide attackers persistence via crafted configs… | t1456, t1398, t1404, t1221 | T1547.001 Registry Run Keys/Startup Folder | Insufficient Detail | The description hints at persistence but does not specify how, so the model guessed multiple persistence techniques. |

| Improper Neutralization of Input during Web Page Generation leads to reflected XSS… | t1489 | T1059.007 JavaScript | Semantic Overlap | XSS payloads are JavaScript, but the model mapped to phishing impact instead. |

| Out-of-Bounds Write in svc1td_x64.dll allows arbitrary code execution | t1221 | T1203 Exploitation for Client Execution | Label Noise | The training label had “defense evasion”, but the description clearly describes an exploit. |

| The Essential Addons for Elementor plugin allows privilege escalation via unquoted service path. | t1404, t1221 | T1068 Exploitation for Privilege Escalation | Semantic Overlap | Unquoted service paths are a known privilege-escalation exploit, not a code-execution flaw. |

| Server-Side Request Forgery (SSRF) vulnerability in URL fetch allows data exfiltration | t1404, t1105 | T1573.001 Server-Side Request Forgery | Semantic Overlap | SSRF was lumped under “exfiltration” instead of its own technique. |

| Use of hard-coded credentials in Java app’s config file leads to unauthorized account access. | t1136 | T1552.001 Credentials in Files and Locations | Semantic Overlap | The model saw “create account” instead of stolen creds from file. |

| DLL search order hijacking in MyApp.exe allows attacker-controlled DLL to load instead of legit library. | t1221 | T1574.001 DLL Search Order Hijacking | Semantic Overlap | Mapped to generic defense evasion rather than the specific DLL hijack. |

| Weak encryption (MD5) used for password hashing in PHP web app enables offline cracking. | t1486 | T1550.002 Unsecured Credentials: Password Hashes | Insufficient Detail | The model guessed ransomware impact (MD5 = malware), but it was really just weak creds. |

| Container escape via misconfigured Docker socket lets attacker get host-level root. | t1552 | T1611 Escape to Host | Semantic Overlap | The model assigned “credentials”, because Docker socket implies PR, but it is a container escape. |

| Misconfigured S3 bucket ACL publicly exposes sensitive files. | t1537 | T1537 Transfer Data to Cloud Account | Label Noise | The label was “ingress tool transfer”, but public-ACL is simply misconfiguration. |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Joy, A.; Chandane, M.; Nagare, Y.; Kazi, F. Threat Intelligence Extraction Framework (TIEF) for TTP Extraction. J. Cybersecur. Priv. 2025, 5, 63. https://doi.org/10.3390/jcp5030063
