Abstract

With the increasing sophistication of network attacks, machine learning (ML)-based methods have shown promising performance in attack detection. However, ML-based methods often suffer from high false positive and false negative rates when handling encrypted malicious traffic. To overcome this bottleneck, we propose EFTransformer, an encrypted flow transformer framework that combines semantic perception with multi-scale feature fusion to detect encrypted malicious traffic robustly and efficiently, compensating for the limited modeling ability and feature adequacy of existing ML methods. EFTransformer introduces a channel-level extraction mechanism based on quintuples and a noise-aware clustering strategy to improve the recognition of traffic patterns; adopts a dual-channel embedding method that uses Word2Vec and FastText to capture global semantics and subword-level variations; and uses a Transformer-based classifier with an attention pooling module to achieve dynamic feature-weighted fusion, thereby improving the robustness and accuracy of malicious traffic detection. Systematic experiments on the ISCX2012 dataset show that EFTransformer achieves the best detection performance, with an accuracy of up to 95.26%, a false positive rate (FPR) of 6.19%, and a false negative rate (FNR) of 5.85%.

1. Introduction

The exponential growth of network traffic, driven by cloud computing, the internet of things (IoT), and mobile edge applications, has led to a dramatic increase in encrypted communication. According to Let’s Encrypt, HTTPS usage surged from 39% to over 80% between 2015 and 2019 [1,2], while TLS 1.3 rapidly gained traction, being adopted in over 30% of all Chrome connections within a year [3]. In parallel, adversaries have advanced their tactics, leveraging encrypted channels to disguise attacks such as stealthy DDoS bursts, DNS over HTTPS (DoH), or lateral movement using zero-day exploits. These trends have rendered traditional plaintext-based detection approaches, such as deep packet inspection (DPI), increasingly ineffective under modern encryption protocols like TLS 1.3 and QUIC [4].

Despite decades of progress in traffic analysis, encrypted malicious traffic detection remains a fundamental unsolved challenge [5]. Traditional DPI techniques, which once dominated, have been ineffective due to encryption protocols that obscure payload content and prevent pattern matching [6,7]. Although machine learning (ML) methods have emerged as promising alternatives [8,9], they rely on statistical metadata rather than payloads, and they suffer from poor generalization in the presence of imbalanced labels and high FPR due to feature overlap between benign and malicious traffic. They have difficulty in capturing the long-range spatiotemporal semantics inherent in encrypted communications [10,11,12,13]. While large language models (LLMs) can provide better contextual understanding, they introduce high computational costs and instability in practical deployments [4,14,15]. The key question is how to build a model that combines expressiveness and detection efficiency while reducing false positives and maintaining performance under encrypted, unbalanced, and dynamic traffic conditions.

The limitations of traditional methods highlight the urgent need for a smarter and more adaptive detection framework. To this end, we aim to design a detection architecture that can parse cross-layer protocol semantics and adaptively learn evolving behavior patterns in long sessions. Our goal is to accurately identify advanced threats such as IoT malware without decrypting traffic, reduce FPR and FNR, lessen the burden of manual verification, provide enterprises with stronger encrypted traffic security monitoring, and improve the overall level of network boundary protection.

EFTransformer addresses the above problems in three steps. First, we build a traffic aggregation and extraction framework based on quintuples to quantify network flow activity, characterize the temporal behavior of traffic, and identify service types and communication characteristics, which improves the ability to understand complex attack behaviors. Second, to improve robustness to noise, we improve the density-based spatial clustering of applications with noise (DBSCAN) algorithm with adaptive parameters and design a noise redistribution mechanism, which avoids the loss of valuable information caused by simply removing noise points. Finally, through a multi-feature semantic fusion mechanism, we move beyond traditional methods that rely only on shallow statistical features and mine the deeper semantic information in encrypted traffic and the underlying logic of attack behaviors.

The main contributions of this work are as follows:

- By using quintuples, we propose a traffic aggregation extraction framework to quantify network flow activity, characterize traffic temporal patterns, and identify service types and communication features.

- The density-based spatial clustering of applications with noise (DBSCAN) algorithm is improved with adaptive parameters, and a noise reallocation mechanism is designed to enhance the model’s tolerance for noise, avoiding the loss of valuable information caused by simply eliminating noise points.

- A dual-channel embedding method based on Word2Vec and FastText is introduced to enhance generalization ability. Additionally, an embedding enhancement strategy is adopted to construct a 384-dimensional joint embedding space.

- A Transformer classifier for multi-scale feature fusion is designed. By using an attention pooling fusion mechanism, the model dynamically evaluates feature importance through a learned weight matrix, reducing false positive and false negative rates as well as computational overhead.

The rest of this work is structured as follows: Section 2 provides background research on the current IDS detection methods and the combination of LLMs and network traffic. Section 3 describes the joint embedding and Transformer model architecture and related theoretical knowledge. Section 4 details the experimental implementation. Section 5 analyzes the experimental results, and Section 6 draws conclusions and proposes prospects for future work.

2. Related Work

As encryption becomes ubiquitous, detecting malicious behavior concealed in encrypted traffic has emerged as a central challenge in cyber security [5,16]. The adoption of TLS/SSL protocols renders traditional payload-based intrusion detection ineffective, with reports indicating that nearly 70% of all cyberattacks now exploit encrypted channels [13]. To address this, detection strategies generally fall into two categories: one extracts observable side-channel features to detect threats without decryption [5], while the other analyzes endpoint behaviors such as system calls and session patterns [17]. While signature-based methods struggle with encrypted and evolving threats [18], statistical- and flow-based models can identify anomalies by learning from traffic-level characteristics like timing variance and size distribution [1]. Recent advances apply ML and deep learning to extract discriminative features from encrypted traffic [18], but their performance relies heavily on large labeled datasets and stable protocol conditions, limiting their adaptability in dynamic environments [17].

2.1. Signature-Based Detection for Encrypted Malicious Traffic

Signature-based inspection is one of the earliest approaches for detecting malicious traffic, relying on matching packet content against predefined byte patterns of known attacks [3]. While effective in plaintext environments, this method faces fundamental limitations in encrypted scenarios, where payload content is inaccessible to traditional pattern matching [2]. As a result, its detection capability significantly degrades when applied to TLS/SSL-protected traffic. Moreover, the effectiveness of signature-based detection heavily depends on large and continuously updated signature libraries [19,20]. In most systems, signatures are manually constructed and maintained, leading to slow adaptation to evolving threats and leaving detection gaps for zero-day attacks. Even recent enhancements such as abstract signature codes, dynamic rule updates, and multi-policy engines only marginally improve flexibility and do not resolve the inherent inability to handle unseen or encrypted threats [21]. In summary, while signature methods offer fast and interpretable detection with low FPR for known attacks [22], they lack generalization and scalability. Their limitations in encrypted traffic environments underscore the need for alternative approaches that can infer malicious intent from observable metadata or behavioral patterns [23].

2.2. Statistical Analysis-Based Detection for Encrypted Malicious Traffic

Statistical encrypted traffic detection methods avoid the need for signature libraries by characterizing each flow through aggregate metrics such as packet count, byte volume, flow duration, and timing statistics, flagging deviations that indicate potential malicious behavior [2]. By modeling the baselines of normal traffic, they can effectively detect high-volume anomalies such as DDoS through abrupt shifts in traffic patterns [19,20]. Unlike traditional DPI which fails under encryption conditions, their approaches rely on side-channel characteristics rather than payload content and thus remain viable even when traffic is encrypted [4]. In practice, encrypted flows can be clustered based on statistical traits, allowing abnormal flows, bursty behavior, irregular packet intervals, or excessive duration to be isolated via unsupervised methods [24]. As they adapt over time by updating thresholds or statistical models, such detectors require no prior knowledge of specific attacks and can generalize well to novel threats [25]. Their low computational overhead makes them suitable for real-time or resource-constrained settings [26,27]. However, these methods also face limitations. Rapid traffic changes or evolving attack strategies may lead to misclassification, as statistical baselines can lag behind new behaviors. Moreover, statistical features offer limited semantic insights [28] that reveal anomalies but not their root causes. As a result, deeper inspection or manual analysis is often required to interpret alarms [4]. Statistical-based detection offers an efficient and adaptive alternative for encrypted traffic scenarios. While it may sacrifice fine-grained interpretability, it complements traditional intrusion detection approaches and enhances encrypted traffic coverage [10].

2.3. Machine Learning-Based Detection for Encrypted Malicious Traffic

Machine learning (ML) and deep learning-based intrusion detection methods automatically learn latent patterns in encrypted traffic to identify anomalies [11]. Compared to signature-based and statistical methods, ML offers better adaptability, improved detection of unknown threats, and stronger handling of complex data, but it also introduces new challenges [29]. For instance, Lucia et al. applied a CNN to classify encrypted TLS traffic, achieving over 99% accuracy by incorporating early stopping to prevent overfitting [30]. CNNs reduce model parameters through convolution and pooling, facilitating high-dimensional input processing, but require the manual extraction of flow size and direction features, increasing labor cost [10]. The Whisper approach extracts flow sequence features via frequency domain analysis, improving detection throughput while maintaining accuracy for low-information flows. This addresses limitations in detecting zero-day attacks and mitigates the high processing overhead commonly associated with ML methods, though real-time detection remains challenging [31]. Despite the increasing adoption of text-based, temporal, and graph-structured models for encoding event dependencies, their complexity and optimization requirements still exceed those of traditional detection techniques [23]. Both supervised and unsupervised learning can achieve high accuracy in specific contexts. Deep learning methods that mine complex temporal correlations in traffic enable adaptive classification strategies to reduce false positives [25]. The integration of traditional and emerging methods, alongside optimized feature selection, supports maintaining IDS accuracy while minimizing false alarms, forming effective multi-modal fusion systems [17,29].
The use of generative adversarial networks (GANs) to synthesize diverse attack samples enhances model robustness, while federated learning and edge computing facilitate scalable distributed monitoring to counter large-scale attacks [3]. Adaptive ML models dynamically update features and rules to respond to evolving network conditions.

2.4. Large Language Model-Based Detection for Encrypted Malicious Traffic

In recent years, the integration of LLMs and intrusion detection technology has injected new vitality into the field of network security, especially in scenarios such as data scarcity, complex attack patterns, and encrypted traffic [32]. Compared with traditional methods that rely on fixed features or rules, LLMs have powerful context understanding and data generation capabilities that can solve the problems of uneven data distribution or insufficient training samples in ML and deep learning training and enhance model training by synthesizing high-quality network traffic data, thereby effectively alleviating data offset and category imbalance [1]. Generative model solutions represented by PAC-GPT demonstrate the feasibility of using GPT-3 to generate network traffic data to make up for the insufficient distribution of actual datasets [15]. This method not only improves the generalization ability and accuracy of intrusion detection models but also provides a more diverse source of training data for simulating real network attack behaviors. Especially in the encrypted traffic detection scenario, due to the inability to directly obtain plaintext content, traditional detection methods face great limitations in modeling capabilities and attack identification accuracy [3]. Therefore, LLMs can be used to construct richer contextual features based on the traffic data generated by early feature research, improving the detection ability of hidden attacks in encrypted communications. In addition, the study also showed that combining neural domain features with sequence modeling technology, such as LSTM-based detection models, has better performance than traditional methods in malware detection in encrypted HTTPS traffic, especially in identifying new or variant malware [23]. With the introduction of models such as BT-TPF and Can-SecureBERT, the performance of LLMs in the encrypted communication environment of the IoT has become increasingly prominent. 
Some models have achieved more than 98% detection accuracy and low FPR in complex scenarios [24,28]. Despite this, there are still certain challenges in the application of LLMs in intrusion detection systems. First, whether the generated synthetic data can truly and comprehensively reflect the complex and changeable attack patterns in the network lacks systematic verification [32]. Secondly, due to the limitations of resource utilization and actual scenario deployment, LLMs rely on a large number of training samples and historical data, making it difficult to meet the requirements of real-time detection and rapid responses [33]. In addition, while improving detection performance, LLMs also bring problems such as high computational overhead, high deployment cost, and long system response time, which limit its feasibility in resource-constrained environments or large-scale deployment scenarios [15]. At present, research is still mainly focused on the modeling and identification of known threat patterns. In the face of unknown or fuzzy attack traffic, the generalization ability of the model still has room for improvement [28]. Therefore, future research can focus on multi-source heterogeneous data fusion and statistical–semantic feature collaborative modeling, combined with a lightweight generation framework, to improve the system’s ability to adapt to complex threat behaviors in encrypted traffic, reduce the dependence on manual intervention, and thus achieve an efficient and scalable intelligent intrusion detection system [3].

3. Materials and Methods

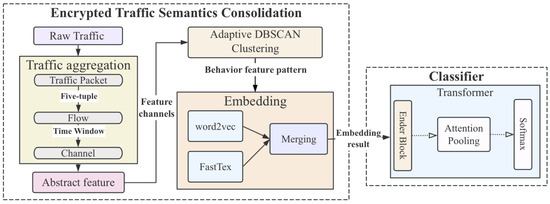

The proposed network intrusion detection framework of EFTransformer is shown in Figure 1. The EFTransformer consists of two main components: an encrypted traffic semantic consolidation module and a classifier. The traffic processing workflow includes three key stages: aggregation, clustering, and embedding. In the classifier, an adaptive attention pooling mechanism is employed to dynamically aggregate feature information. The mechanisms of each stage are illustrated in the following subsections with detailed workflow explanations and algorithms.

Figure 1.

Workflow and Framework of EFTransformer for Encrypted Traffic Analysis.

The logical description of each stage, in symbolic form, is given in Algorithm 1. Specifically, a packet is the basic unit of network traffic and is defined in Formula (1) as a triple consisting of the quintuple (source IP, source port, destination IP, destination port, protocol), the timestamp, and the payload.

A flow is a sequence of packets that share the same quintuple within a time window, as defined in Formula (2); all packets in a flow are ordered by time and represent a unidirectional or bidirectional traffic session.

A channel groups flows by common IP pairs (source and destination IPs), as shown in Formula (3), where the IP pair is symmetric, allowing bidirectional flow grouping.

Each channel is summarized by statistical metrics as a feature vector for downstream classification, as in Formula (4), which provides a structured and compressed representation of traffic behavior.
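One possible notation for the four definitions above is sketched here. Every symbol ($p_i$, $q_i$, $t_i$, $d_i$, $\tau$, $F_q$, $C_{\{a,b\}}$, $\mathbf{x}_C$, $\phi_k$) is an assumption chosen for illustration and may differ from the notation of Formulas (1) to (4).

```latex
\begin{align*}
p_i &= (q_i,\, t_i,\, d_i), \qquad
q_i = (\mathrm{ip}_{\mathrm{src}},\ \mathrm{port}_{\mathrm{src}},\ \mathrm{ip}_{\mathrm{dst}},\ \mathrm{port}_{\mathrm{dst}},\ \mathrm{proto}) \\
F_q &= \bigl(p_{i_1}, p_{i_2}, \dots, p_{i_n}\bigr), \qquad
q_{i_j} = q, \quad t_{i_1} \le t_{i_2} \le \dots \le t_{i_n}, \quad t_{i_n} - t_{i_1} < \tau \\
C_{\{a,b\}} &= \bigl\{\, F_q \;\bigm|\; \{\mathrm{ip}_{\mathrm{src}}(q),\ \mathrm{ip}_{\mathrm{dst}}(q)\} = \{a, b\} \,\bigr\} \\
\mathbf{x}_C &= \bigl(\phi_1(C),\ \phi_2(C),\ \dots,\ \phi_m(C)\bigr)
\end{align*}
```

Here $\tau$ stands for the time window, the unordered pair $\{a, b\}$ expresses the symmetry of the IP pair, and each $\phi_k$ stands for one statistical metric computed over the channel.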

| Algorithm 1 EFTransformer progress |
| --- |
| Require: Raw packet stream, time window, DBSCAN parameters |
| Ensure: Predicted labels |

3.1. Traffic Aggregation

To better distinguish benign from malicious traffic, we adopt a hierarchical aggregation mechanism that transforms fine-grained packets into semantically meaningful channel units. Initially, packets are grouped into flows based on quintuple features, including source IP, destination IP, source port, destination port, and protocol. A fixed time window is applied to ensure that only packets within a valid interval are merged, preserving temporal continuity and reducing fragmentation. Flows sharing the same source and destination IP addresses are then further aggregated into higher-level channels.
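The two aggregation steps described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the names `Packet`, `build_flows`, and `build_channels` are hypothetical, and the rule that a flow's time window opens at its first packet is an assumption, since the exact windowing rule is not specified here.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple

# (source IP, source port, destination IP, destination port, protocol)
Quintuple = Tuple[str, int, str, int, str]


@dataclass(frozen=True)
class Packet:
    quintuple: Quintuple
    timestamp: float
    payload: bytes = b""


def build_flows(packets: List[Packet], window: float) -> List[List[Packet]]:
    """Group packets by quintuple; a new flow starts once a packet falls
    outside the window opened by the current flow's first packet (assumption)."""
    flows: List[List[Packet]] = []
    open_flow: Dict[Quintuple, List[Packet]] = {}
    for pkt in sorted(packets, key=lambda p: p.timestamp):
        current = open_flow.get(pkt.quintuple)
        if current is None or pkt.timestamp - current[0].timestamp >= window:
            current = []
            flows.append(current)
            open_flow[pkt.quintuple] = current
        current.append(pkt)
    return flows


def build_channels(flows: List[List[Packet]]) -> Dict[FrozenSet[str], List[List[Packet]]]:
    """Merge flows into channels keyed by the unordered (symmetric) IP pair."""
    channels: Dict[FrozenSet[str], List[List[Packet]]] = defaultdict(list)
    for flow in flows:
        src_ip, _, dst_ip, _, _ = flow[0].quintuple
        channels[frozenset((src_ip, dst_ip))].append(flow)
    return dict(channels)
```

Using a `frozenset` of the two IP addresses as the channel key makes both directions of a conversation land in the same channel, which matches the symmetric IP pair described earlier.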

This multi-stage process constructs coherent and consistent units that capture both detailed flow attributes and broader communication patterns. The quintuple features encapsulate the identity, port information, and protocol semantics of communicating parties. By encoding and fusing these channel-level features in parallel, the system enhances the precision of malicious traffic detection and improves the overall pattern recognition capability. Operating on channel-based representations provides the classifier with more coherent and semantically enriched input, which helps to mitigate the impact of isolated or noisy flows.

Malicious traffic often exhibits behavioral similarity and aggregation within a single channel. For instance, single-node continuous attacks frequently reuse the same communication paths for port scanning or repeated access to targeted servers. A typical case is the Mirai botnet, which maintains regular connections with its command and control server, producing multiple flows within the same channel that share similar temporal and structural properties.

3.2. Clustering

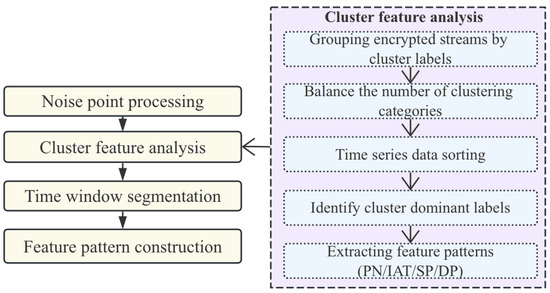

The clustering process is shown in Figure 2 and Algorithm 2. First, we slice the original network traffic with a fixed-length sliding time window to maintain the temporal continuity and local context of the feature patterns. This step limits the input range of the clustering operation and improves computational efficiency. Next, we apply a noise-aware variant of density-based spatial clustering of applications with noise (DBSCAN) that is based on local density differences, and we adaptively adjust its core parameter Eps according to the traffic density distribution, so that the algorithm retains good clustering ability and generalization in mixed traffic scenarios with different attack intensities and communication frequencies. Compared with traditional DBSCAN, whose fixed parameters limit traffic pattern recognition, our architecture improves clustering accuracy in identifying malicious traffic.
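To make the two ideas in this paragraph concrete, the sketch below shows one way to pick Eps from the data and one way to keep noise points instead of discarding them. Both rules are assumptions for illustration: the median k-th-nearest-neighbor distance is a common heuristic for Eps, and nearest-centroid reassignment is only one possible reading of the noise redistribution mechanism. The function names `adaptive_eps` and `reassign_noise` are hypothetical, and the clustering step itself (which produces `labels`, with -1 marking noise) is left out.

```python
import math
from typing import Dict, List, Sequence

Point = Sequence[float]


def adaptive_eps(points: List[Point], k: int = 4) -> float:
    """Median distance from each point to its k-th nearest neighbor
    (an assumed heuristic, not the rule used by EFTransformer)."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    kth_distances = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        kth_distances.append(dists[min(k, len(dists)) - 1])
    kth_distances.sort()
    return kth_distances[len(kth_distances) // 2]


def reassign_noise(points: List[Point], labels: List[int]) -> List[int]:
    """Move every noise point (label -1) to the cluster whose centroid is nearest."""
    members: Dict[int, List[Point]] = {}
    for p, lab in zip(points, labels):
        if lab != -1:
            members.setdefault(lab, []).append(p)
    if not members:  # nothing to reassign to
        return list(labels)
    centroids = {
        lab: [sum(coord) / len(pts) for coord in zip(*pts)]
        for lab, pts in members.items()
    }
    return [
        lab if lab != -1
        else min(centroids, key=lambda c: math.dist(p, centroids[c]))
        for p, lab in zip(points, labels)
    ]
```

Reassigning rather than dropping noise points keeps sparse but potentially informative flows in the training set, which is the motivation given for the noise-aware design.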

Figure 2.

Adaptive clustering processing. (Adaptive part: Encrypted streams are first grouped by their cluster labels, and category counts are balanced to prevent bias. The time-series data is then chronologically sorted, and each stream’s dominant cluster label is identified. Finally, key behavioral patterns such as PN, IAT, SP, and DP are extracted for subsequent modeling).

After completing the preliminary clustering, we modeled the channel-level structure of the traffic within each cluster. Based on the traffic data after the flow channels were merged, the traffic records in each channel were arranged in chronological order, and a set of key attributes describing the dynamic characteristics of the behavior were extracted: number of packets (PN), inter-arrival time (IAT), source port number (SP) and destination port number (DP). These features respectively describe the communication frequency, rhythm change and target orientation of the attack traffic, and have good behavior discrimination capabilities. To construct the final feature pattern sample, we spliced the sequences of all channels in the cluster in the time dimension to form a comprehensive feature expression pattern at the cluster level. This splicing process not only retains the continuity of the attack behavior within the channel, but also reveals the stage evolution path of the attack through time alignment across channels. In addition, we also introduced a behavior consistency measurement indicator based on sequence statistical characteristics to evaluate and screen each cluster, and eliminate low-quality clusters with highly discrete internal feature distribution or mixed multiple behavior patterns to ensure the label consistency and semantic purity of the final sample set.
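The per-channel feature extraction can be sketched as follows. The record type `FlowRecord` and the function `channel_pattern` are hypothetical names, and computing IAT between consecutive flow start times (with the first value set to 0) is an assumption, since the text does not state whether inter-arrival times are measured between packets or between flows.

```python
from typing import Dict, List, NamedTuple


class FlowRecord(NamedTuple):
    start_time: float
    packet_count: int
    src_port: int
    dst_port: int


def channel_pattern(flows: List[FlowRecord]) -> Dict[str, List[float]]:
    """Sort a channel's flows chronologically and extract the PN, IAT, SP, DP sequences."""
    ordered = sorted(flows, key=lambda f: f.start_time)
    starts = [f.start_time for f in ordered]
    # First inter-arrival time is 0 by convention (assumption).
    iat = [cur - prev for prev, cur in zip(starts[:1] + starts, starts)]
    return {
        "PN": [f.packet_count for f in ordered],
        "IAT": iat,
        "SP": [f.src_port for f in ordered],
        "DP": [f.dst_port for f in ordered],
    }
```

A cluster-level pattern would then be formed by concatenating the per-channel sequences along the time dimension, as described above.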

| Algorithm 2 Adaptive clustering for encrypted traffic |
| --- |
| Require: Input traffic T, window size W, neighborhood radius (Eps) |
| Ensure: Structured feature pattern channel P |

3.3. Embedding

In the task of encrypted traffic anomaly detection, the original traffic is mainly composed of numerical features, which cannot fully reflect the semantic intent of communication behaviors. The major issue can be summarized as:

- Packet differentiation: The number of packets in different traffic channels may differ by several orders of magnitude, and such large differences can significantly affect the detection results of the model. Normalization only partly solves this problem: because the number of packets has no theoretical upper limit and the distribution of real traffic fluctuates widely, the normalized data may still fail to represent the traffic characteristics accurately, which affects the stability of the model.

- Semantic perception error: Port numbers are discrete identifiers rather than continuous variables, and numerically adjacent port numbers do not necessarily correspond to semantically similar services. For example, ports 80 and 8080 are numerically far apart, yet both are commonly used for web services, whereas ports 22 and 23 are numerically adjacent but correspond to different protocols (SSH and Telnet, respectively). Therefore, modeling the raw numerical values directly may fail to learn the correct semantic relationships, reducing the ability to identify malicious traffic.

To address packet differentiation, EFTransformer uses a multi-scale feature fusion embedding to reduce the resulting bias in the model. The model extracts statistical and semantic features under different time windows through parallel channels and achieves cross-scale alignment in the spatial and temporal dimensions, thereby modeling the characteristics of encrypted traffic more comprehensively.

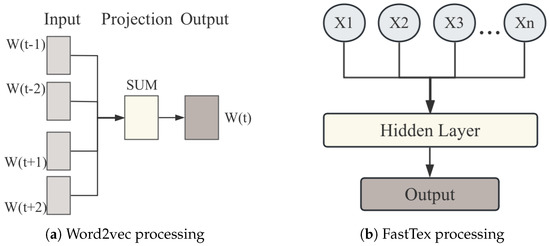

EFTransformer also introduces a semantic perception module to cope with the semantic perception error. The module encodes discrete attributes into continuous vector spaces through embedding techniques, enabling the model to learn semantic correlations that cannot be inferred directly from raw numerical values. As shown in Figure 3, Word2Vec and FastText are employed to better capture the characteristic patterns and malicious activity intentions in the traffic. Word2Vec (Figure 3a) captures the global semantic relationships of traffic patterns through a contextual learning mechanism and can effectively learn the macro characteristics of different attack types. However, since Word2Vec relies on a predefined vocabulary, it adapts poorly to out-of-vocabulary (OOV) samples and variant attack patterns. Therefore, the FastText model (Figure 3b) is introduced. Through subword embedding, it can better parse the fine-grained information of features such as port numbers and packet times, expand the feature embedding dimension, and generalize better.
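The subword idea can be illustrated with a small sketch of FastText-style character n-grams applied to port numbers written as strings. The helper `char_ngrams` is hypothetical and simplified: it only enumerates n-grams of the token wrapped in the boundary markers `<` and `>`, using fastText's default n-gram lengths of 3 to 6, and omits the hashing and vector lookup that a real FastText model performs.

```python
from typing import Set


def char_ngrams(token: str, n_min: int = 3, n_max: int = 6) -> Set[str]:
    """Enumerate character n-grams of a token wrapped in '<' and '>' boundary markers."""
    wrapped = f"<{token}>"
    return {
        wrapped[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(wrapped) - n + 1)
    }


# Ports 80 and 8080 share subword units even though the numbers are far apart,
# while the numerically adjacent ports 22 and 23 share none.
shared_web = char_ngrams("80") & char_ngrams("8080")
shared_ssh_telnet = char_ngrams("22") & char_ngrams("23")
```

Because a FastText vector is built from its subword vectors, tokens with overlapping n-grams start out with related representations, and tokens absent from the training vocabulary still receive a vector. Whether that overlap reflects a real semantic relationship is ultimately learned from context during training.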

Figure 3.

Word2Vec and FastText processing.

In the implementation, Word2Vec and FastText language models are trained separately on the clustered data to model traffic patterns at the global and local levels, respectively. To enhance the semantic representation of key discrete features such as port numbers and protocol types, this work adopts a dual-channel fusion strategy that leverages the contextual learning capability of Word2Vec and the subword-based modeling strength of FastText: the embedding vectors of the two models are obtained separately and concatenated across the four feature dimensions, so that the final embedding has 384 dimensions. This design enables the model to jointly capture global semantic structures and local granular patterns, thereby addressing the representation limitations of raw numerical features. In addition, to keep the embedding vectors consistent in size, zero padding and truncation are applied during embedding to avoid the adverse effects of extreme cases on model learning.
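A minimal sketch of the concatenation, padding, and truncation steps is given below. Several details are assumptions made only so that the arithmetic works out: each model is taken to produce 48-dimensional vectors, so that 4 features x 2 models x 48 = 384, the fixed sequence length `MAX_LEN` is arbitrary, and plain dictionaries stand in for the trained Word2Vec and FastText models. The names `joint_embedding` and `embed_sequence` are hypothetical.

```python
from typing import Dict, List

FEATURES = ("PN", "IAT", "SP", "DP")
DIM = 48      # assumed per-model vector size: 4 features x 2 models x 48 = 384
MAX_LEN = 4   # assumed fixed number of time steps per sample

Vector = List[float]
Lookup = Dict[str, Vector]  # stand-in for a trained embedding model


def joint_embedding(step: Dict[str, str], w2v: Lookup, ft: Lookup) -> Vector:
    """Concatenate the Word2Vec and FastText vectors of all four feature tokens."""
    zero = [0.0] * DIM
    out: Vector = []
    for name in FEATURES:
        token = step[name]
        out.extend(w2v.get(token, zero))  # global-semantics channel
        out.extend(ft.get(token, zero))   # subword channel
    return out


def embed_sequence(steps: List[Dict[str, str]], w2v: Lookup, ft: Lookup,
                   max_len: int = MAX_LEN) -> List[Vector]:
    """Truncate to max_len time steps, then zero-pad shorter sequences."""
    rows = [joint_embedding(s, w2v, ft) for s in steps[:max_len]]
    width = len(FEATURES) * 2 * DIM
    rows += [[0.0] * width for _ in range(max_len - len(rows))]
    return rows
```

Fixing both the sequence length and the vector width gives every sample the same shape, which the Transformer encoder requires for batched input.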

3.4. Classifier

The classifier of EFTransformer can be divided into three main parts: input layer, encoding layer, and classification layer.

In the multi-head attention mechanism, the input data (X) is split across multiple heads, each of which processes a portion of the input. Each head is computed independently: it projects the input into query, key, and value spaces through three weight matrices and then calculates the attention weights. Finally, the outputs of all heads are merged and passed through an additional linear layer to produce the output of the multi-head attention mechanism.
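For reference, the standard scaled dot-product and multi-head attention of the original Transformer can be written as follows. The symbols $W_i^{Q}$, $W_i^{K}$, $W_i^{V}$, $W^{O}$, $d_k$, and $h$ follow the usual convention and are assumed here rather than taken from this paper.

```latex
\begin{align*}
Q_i &= X W_i^{Q}, \qquad K_i = X W_i^{K}, \qquad V_i = X W_i^{V} \\
\mathrm{Attention}(Q_i, K_i, V_i) &= \mathrm{softmax}\!\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right) V_i \\
\mathrm{head}_i &= \mathrm{Attention}(Q_i, K_i, V_i), \qquad i = 1, \dots, h \\
\mathrm{MultiHead}(X) &= \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}
\end{align*}
```

Here $d_k$ is the dimension of the key vectors in each head, and dividing by $\sqrt{d_k}$ keeps the dot products in a range where the softmax does not saturate.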

- Input layer: The output after dual-channel semantic embedding processing is used as the input of Transformer. In this work, the feature pattern is the semantic information extracted based on the channel level, which is mainly used to characterize the attack intention. The model processes different features of network traffic through four parallel encoders: packet number timing pattern (pn_encoder), flow interval time distribution (iat_encoder), source port access topology (sp_encoder), destination port service feature (dp_encoder). The 8-head attention mechanism is used to capture the long-distance dependencies in the feature sequence, so the model can effectively extract the traffic features in depth and form a multi-modal embedding representation.

- Encoding layer: Each encoder block consists of two main sub-layers: multi-head attention and a feed-forward network. First, the importance score of each feature is calculated through a learnable weight matrix, and normalized attention weights are generated. This mechanism helps the model adaptively focus on the feature channels most relevant to the current feature pattern. Second, feature interaction is used in the encoding layer to establish nonlinear associations among the four feature dimensions through matrix multiplication, which mitigates the problem of spatial heterogeneity. Finally, to suppress noise, the model applies a Dropout layer and L2 regularization in feature weighting, providing two complementary forms of noise suppression. The self-attention mechanism is given in Formula (5), where Q is the query matrix, K is the key matrix, and V is the value matrix, each calculated from the input matrix X through its own learned weight matrix. The core of multi-head attention is to calculate multiple attention heads in parallel, as shown in Formula (6). Each head focuses on different semantic information in the input feature sequence, and the outputs of all heads are finally fused. Because different attention heads can focus on different positional and semantic information, this also enhances the robustness of the model, as shown in Formula (7), and avoids the overfitting or information bias caused by a single attention head. This multi-perspective modeling enriches the feature representation and improves the expressive ability of the model.

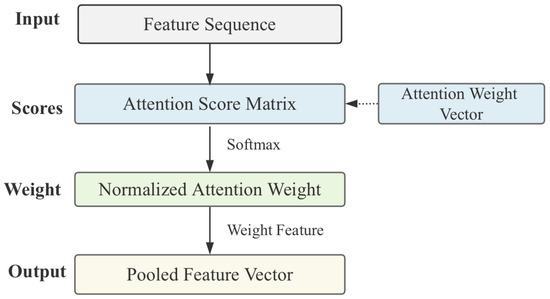

- Classification layer: The classification layer processes the encoder output with attention pooling, a dynamic weighted summation mechanism. The model learns the optimal feature combination for different scenarios end-to-end and maps the pooled feature vector to a two-dimensional vector through a nonlinear transformation to capture deeper nonlinear relationships between semantic features. The model then sums the vectors from the four feature modes to generate a comprehensive representation. The Softmax function is applied to this vector to calculate the final probability that the traffic is malicious or benign, thereby completing the classification task. Figure 4 shows the multi-scale attention pooling fusion framework, which combines multi-feature fusion with a dynamic attention mechanism. Compared with traditional pooling, attention pooling is data-dependent and learnable, so it can adaptively adjust the information aggregation strategy. The weights are allocated dynamically: the attention weight of each element is calculated from the input feature sequence itself rather than from fixed rules, which achieves content-aware aggregation. Specifically, the attention module computes importance weights across the time steps of the traffic sequence, enabling dynamic aggregation of temporal features. Important information receives more weight and irrelevant information is suppressed, which avoids the information loss of max pooling and the blurring of average pooling and retains fine-grained features. In this way, attention pooling highlights semantically rich segments of traffic patterns and provides the model with more discriminative input representations than traditional pooling methods.

Figure 4.

Attention pooling.

The attention score of each element is calculated using a learnable function, as shown in Formula (8), where each score denotes the importance of the i-th time step in the input feature sequence. The normalized weights enable the model to perform dynamic temporal aggregation by focusing on key segments and down-weighting uninformative or noisy inputs. The aggregated representation is computed as a weighted sum of input features, which preserves fine-grained discriminative cues. The weight matrix, bias term, and scoring vector are learnable parameters, enabling the model to adaptively optimize the feature weighting scheme based on the content of the input.
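The pooling step can be sketched in plain Python. The scoring function is assumed to take the common additive form $e_i = v^{\top} \tanh(W h_i + b)$ followed by a softmax over time steps, which is consistent with the weight matrix, bias term, and scoring vector mentioned above but is not confirmed to be the exact form of Formula (8). The name `attention_pool` and the symbols `H`, `W`, `b`, `v` are illustrative.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def attention_pool(H: Matrix, W: Matrix, b: Vector, v: Vector) -> Tuple[Vector, Vector]:
    """Pool a sequence of feature vectors H (one row per time step).

    Returns the weighted sum of the rows and the attention weights.
    Assumed scoring: e_i = v . tanh(W h_i + b), alpha = softmax(e).
    """
    scores = []
    for h in H:
        hidden = [
            math.tanh(sum(w * x for w, x in zip(row, h)) + bias)
            for row, bias in zip(W, b)
        ]
        scores.append(sum(vj * uj for vj, uj in zip(v, hidden)))
    # Numerically stable softmax over time steps.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    pooled = [sum(a * h[k] for a, h in zip(alphas, H)) for k in range(len(H[0]))]
    return pooled, alphas
```

In a trained model, `W`, `b`, and `v` would be learned jointly with the classifier, so the weights adapt to the content of each sequence instead of following a fixed rule as in max or average pooling.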

Overall, the coordinated design of these three layers enables EFTransformer to have powerful multi-level feature extraction, adaptive feature weighting, and classification capabilities, and it can cope with complex encrypted traffic detection scenarios.

4. Implementation

4.1. Implementation Setup

The specification of our implementation setup is shown in Table 1. The hardware environment comprises a CPU, a GPU, 8 GB of RAM, 110 GB of storage, and a network interface card (NIC). On the software side, the system runs CentOS 7.6.1810 (64-bit) as the operating system. The development environment is based on PyTorch version 1.12.1, with Python 3.9 as the primary programming language.

Table 1.

Implementation setup.

4.2. Dataset Description

The dataset [34] used in this work is ISCX2012, a classic network intrusion detection benchmark released by the Information Security Centre of Excellence (ISCX) at the University of New Brunswick, Canada. Although the dataset is relatively old, it still has significant advantages in terms of the completeness of its structured annotations, the authenticity of its scenario simulations, and the ease of experimental reproduction. ISCX2012 covers a variety of typical attack types, including brute force, PortScan, and remote access, with a wide and representative attack surface. The dataset generates traffic from user behavior scripts, with clear labels, a unified format, and support for complete two-way communication modeling. It therefore offers stronger interpretability and helps in the subsequent construction of similar experimental environments. It is particularly suitable for known-attack detection and semi-supervised modeling tasks and is also convenient for controllable experiments and repeated verification.

Table 2 describes the information of this dataset. This dataset has 360,000 benign traffic samples and 70,000 malicious traffic samples that are mainly used for network encrypted traffic detection research. There are detailed traffic features in the data packet, such as the source IP, destination IP, port, packet size, timestamp, and other metadata, which can be used to study malicious traffic detection and traffic classification and clustering.

Table 2.

Classification of traffic types in the ISCXIDS2012 dataset.

In the encrypted traffic dataset, class imbalance is an important issue that affects the performance of the malicious traffic detection model. Downsampling reduces the number of majority class samples so that the class distribution becomes more balanced, thereby alleviating the bias that class imbalance introduces into the model. Through downsampling, the model can learn the characteristics of each class in a balanced manner, avoid excessive bias toward majority class samples, and improve its ability to detect minority malicious traffic. In addition, downsampling reduces the amount of training data, which lowers the computational cost and improves training efficiency. To minimize sampling bias, we repeated each experimental setup ten times and used the average value as the final evaluation metric.
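As a minimal sketch of the downsampling step, the snippet below randomly reduces every class to the size of the smallest one. The function name `downsample_majority`, the seeding scheme, and the toy 36:7 class ratio (a scaled-down stand-in for 360,000 benign and 70,000 malicious samples) are assumptions for illustration; the exact sampling procedure used in the experiments is not specified.

```python
import random

def downsample_majority(samples, labels, seed=0):
    """Randomly downsample every class to the size of the smallest class."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    n_min = min(len(xs) for xs in by_class.values())
    balanced = []
    for y, xs in by_class.items():
        # Keep the minority class whole; sample without replacement otherwise
        kept = xs if len(xs) == n_min else rng.sample(xs, n_min)
        balanced.extend((x, y) for x in kept)
    rng.shuffle(balanced)
    return balanced

# Toy data with the same benign-to-malicious ratio as the dataset (36:7)
labels = ["benign"] * 36 + ["malicious"] * 7
samples = list(range(len(labels)))
balanced = downsample_majority(samples, labels, seed=1)

counts = {}
for _, y in balanced:
    counts[y] = counts.get(y, 0) + 1
```

Running the same procedure with ten different seeds and averaging the resulting metrics would be one way to realize the ten repetitions mentioned above.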

4.3. Experimental Evaluation Index Setting

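We evaluate the model using four indicators: the false negative rate (FNR), the false positive rate (FPR), the receiver operating characteristic (ROC) curve, and the area under the curve (AUC). The definitions of the FNR and FPR are as follows:

$$\mathrm{FNR} = \frac{FN}{FN + TP}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}$$

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively, with malicious traffic treated as the positive class.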

The ROC curve and AUC are important indicators for evaluating the performance of classification models. The closer the ROC curve is to the upper-left corner, the better the model performance. The AUC is the area under the ROC curve. The closer the value is to one, the stronger the model’s ability to distinguish between positive and negative samples. An AUC of 0.5 means that the model performs no better than random guessing. A higher AUC value means the model can achieve a higher true positive rate (that is, a lower FNR) while maintaining a lower FPR, which makes the AUC an effective summary of model performance.
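The following sketch computes these indicators for a small set of predictions, using the standard definitions FNR = FN / (FN + TP) and FPR = FP / (FP + TN) with malicious traffic as the positive class (label 1). The AUC is computed through its rank interpretation, the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one. The labels, scores, and the 0.5 threshold are illustrative assumptions.

```python
def fnr_fpr(y_true, y_pred):
    """Return (FNR, FPR) with label 1 as the positive (malicious) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return fn / (fn + tp), fp / (fp + tn)

def auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical labels and malicious-class scores
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.6, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

fnr, fpr = fnr_fpr(y_true, y_pred)   # one missed attack, one false alarm
area = auc(y_true, scores)           # 15 of 16 pairs ranked correctly
```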

During cluster analysis, the choice of the K value plays a crucial role in the accuracy and validity of the results. The choice usually requires joint consideration of the data distribution and the specific goals of the task. This article uses the elbow method: we plot the sum of squared clustering errors (SSE) against the number of clusters and take the “elbow point”, where the decrease in SSE slows markedly, as the approximately optimal K value. In addition, validating the K value with the silhouette coefficient helps to classify different traffic behaviors more accurately, thereby effectively distinguishing complex behaviors such as single-node continuous attacks and multi-node burst attacks.
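To make the elbow criterion concrete, the sketch below runs a small one-dimensional k-means for several values of K and records the SSE of each. The data, the deterministic quantile-based initialization, and the helper name `kmeans_sse` are assumptions chosen so the example is reproducible; the traffic features and clustering implementation used in the experiments are not shown here.

```python
def kmeans_sse(points, k, iters=20):
    """Run Lloyd's k-means on 1-D data and return the final SSE."""
    pts = sorted(points)
    # Deterministic initialization: evenly spaced quantiles of the data
    centers = [pts[int((i + 0.5) * len(pts) / k)] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in pts:
            nearest = min(range(k), key=lambda c: (p - centers[c]) ** 2)
            groups[nearest].append(p)
        # Keep the old center if a cluster ends up empty
        centers = [sum(g) / len(g) if g else centers[i]
                   for i, g in enumerate(groups)]
    return sum(min((p - c) ** 2 for c in centers) for p in pts)

# Toy data with three well-separated groups (hypothetical values)
points = [1.0, 1.2, 0.8, 5.0, 5.1, 4.9, 9.0, 9.2, 8.8]
sse = {k: kmeans_sse(points, k) for k in range(1, 6)}
```

On this toy data the SSE falls steeply up to K = 3 and barely changes afterwards, which is the elbow pattern the method looks for.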

In the setting of the ablation experiment of the embedding module, we focus on analyzing the impact of context information on model performance. The main function of the embedding layer is to combine the original features of the traffic behavior sequence with context information to generate high-dimensional feature representations so as to provide richer input features for the subsequent Transformer model. In the experiment, an ablation experiment is used to verify the impact of the embedding process on a malicious traffic monitoring system so as to prove the importance of context information for malicious traffic detection.

4.4. Baseline Algorithms

To fully explore and evaluate the contribution of EFTransformer, we utilize four baseline methods mentioned below for comparison. The C4.5 decision tree directly classifies the data after channel aggregation and serves as a traditional baseline. CIC-RF, following [35], extracts flow-level statistical features, merges channels, and uses a random forest for malicious traffic detection. FCM-SVM, based on [36], combines fuzzy C-means clustering with a Gaussian kernel SVM to first cluster and then classify the data. Enhanced-MLP, from [37], uses a multi-layer perceptron with a clustering algorithm and introduces Dropout to improve generalization. These methods were compared with the proposed EFTransformer.

C4.5 decision tree: For the data after channel aggregation, we use the C4.5 decision tree as the classifier for analysis.

CIC-Random Forest (RF) [35]: CIC-Flow extracts statistical flow features, including the time interval, packet count, and duration; performs channel merging based on this; and uses a random forest with 200 Bootstrap aggregate decision trees for malware detection.

FCM-SVM [36]: For the data after channel merging, a hybrid detection method combining fuzzy C-means (FCM) clustering and a radial basis function support vector machine (RBF-SVM) is adopted. FCM is responsible for clustering the input data, and the SVM with a Gaussian kernel shows strong nonlinear separation ability that is suitable for the detection of encrypted malicious traffic.

Enhanced-Multi-layer Perceptron (MLP) [37]: The enhanced version combines an MLP with a clustering algorithm and uses its deep fully connected architecture to effectively model nonlinear relationships in network traffic data. The MLP is usually implemented with a two-hidden-layer configuration, and the ReLU activation function is used to solve the common gradient vanishing problem in deep networks. In addition, a Dropout layer is introduced during the training process to randomly deactivate some neurons, thereby preventing overfitting and improving the generalization ability of the model.

5. Experimental Results

This section presents a structured validation of the proposed model through a progressive experimental framework, with each stage targeting a specific component of the detection pipeline. The evaluation begins with feature space visualization to illustrate the distributional separability between benign and malicious traffic, thereby providing a conceptual basis for downstream analysis. Subsequently, clustering parameter tuning is conducted to assess the model’s adaptability and stability across varying traffic scenarios. An ablation study is then performed in a hierarchical manner by progressively removing individual components, allowing for a quantitative assessment of the contribution of each component to classifier performance. Finally, comparative benchmarking against baseline models is conducted using standard metrics such as accuracy, FPR, and ROC curves.

Throughout the experiments, the embedding dimension is 384, the hidden layer dimension is 512, eight attention heads are used, the feed-forward network dimension is 384, and the model consists of eight encoder blocks. The model is trained for 25 epochs with a learning rate of 0.000006 and a weight decay of 0.0004 to prevent overfitting. The AdamW optimizer is used during training, and the OneCycleLR learning rate scheduler dynamically adjusts the learning rate. In addition, the model applies multi-head attention, layer normalization, and Dropout regularization, and it processes four different stream aggregation channels in parallel to enhance its feature extraction ability.
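The hyperparameters above can be collected in a single configuration object, as in the sketch below. The class name `TrainConfig` and its field names are hypothetical, and the assumption that multi-head attention operates on the 384-dimensional embedding (rather than the 512-dimensional hidden layer) is made only to illustrate the usual divisibility check between the model width and the number of heads.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters as reported in the text; field names are hypothetical."""
    embed_dim: int = 384
    hidden_dim: int = 512
    num_heads: int = 8
    ffn_dim: int = 384
    num_encoder_blocks: int = 8
    num_channels: int = 4          # parallel stream aggregation channels
    epochs: int = 25
    learning_rate: float = 6e-6
    weight_decay: float = 4e-4

    def head_dim(self) -> int:
        # Multi-head attention splits the model width evenly across heads
        if self.embed_dim % self.num_heads != 0:
            raise ValueError("embed_dim must be divisible by num_heads")
        return self.embed_dim // self.num_heads

cfg = TrainConfig()
per_head = cfg.head_dim()
```

In a PyTorch training script, values such as `learning_rate` and `weight_decay` would typically be passed to `torch.optim.AdamW`, and the peak learning rate to `torch.optim.lr_scheduler.OneCycleLR`.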

5.1. Dataset Analysis

Based on traffic data, this work extracts key features including source/destination IPs, ports, and protocol types to construct a unique, reversible flow identifier, supporting downstream analysis and feature engineering. A time window mechanism is introduced to dynamically aggregate packets, capturing both the continuity and burst characteristics of traffic. In addition to basic attributes like the duration, packet count, and total length, the model distinguishes upstream and downstream byte counts. A deduplication mechanism ensures that each bidirectional flow is recorded once, minimizing redundancy and computational load.
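A minimal sketch of the bidirectional flow identifier and per-flow aggregation described above is given below. The canonical ordering of endpoints, the packet tuple layout, the convention that "upstream" means the direction of the first observed packet, and the names `flow_key` and `aggregate` are assumptions for illustration; the time window mechanism is omitted for brevity.

```python
from collections import namedtuple

FlowKey = namedtuple("FlowKey", "ip_a port_a ip_b port_b proto")

def flow_key(src_ip, src_port, dst_ip, dst_port, proto):
    """Direction-independent key: both directions of a flow map to one key.

    All five fields are kept, so the endpoints can be read back from the key.
    """
    a, b = (src_ip, src_port), (dst_ip, dst_port)
    if a > b:
        a, b = b, a
    return FlowKey(a[0], a[1], b[0], b[1], proto)

def aggregate(packets):
    """Aggregate (ts, src_ip, src_port, dst_ip, dst_port, proto, length)."""
    flows = {}
    for ts, src_ip, src_port, dst_ip, dst_port, proto, length in packets:
        key = flow_key(src_ip, src_port, dst_ip, dst_port, proto)
        f = flows.get(key)
        if f is None:
            # First packet of the flow defines the upstream direction
            f = flows[key] = {"first": ts, "last": ts, "packets": 0,
                              "bytes_up": 0, "bytes_down": 0,
                              "initiator": (src_ip, src_port)}
        f["last"] = max(f["last"], ts)
        f["packets"] += 1
        if (src_ip, src_port) == f["initiator"]:
            f["bytes_up"] += length
        else:
            f["bytes_down"] += length
    return flows

# Hypothetical packets belonging to one TLS session
packets = [
    (0.0, "10.0.0.1", 50000, "10.0.0.2", 443, "TCP", 100),
    (0.5, "10.0.0.2", 443, "10.0.0.1", 50000, "TCP", 1500),
    (1.2, "10.0.0.1", 50000, "10.0.0.2", 443, "TCP", 60),
]
flows = aggregate(packets)
flow = next(iter(flows.values()))
```

Because both directions share one key, the three packets collapse into a single record, which is the deduplication effect described in the text.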

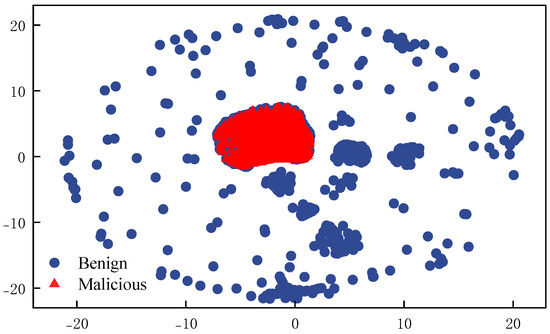

We employ t-SNE to reduce the dimensionality and map the high-dimensional traffic data onto a two-dimensional space. Figure 5 illustrates the distribution differences between malicious and benign traffic, where the red triangles represent malicious traffic and the blue points represent benign traffic. Malicious traffic shows tight clustering with narrow horizontal spread and pronounced vertical variation, reflecting tool-generated regularity. For instance, DDoS traffic exhibits periodic bursts, port scans reveal protocol anomalies, and brute force attacks concentrate around failed session attempts. Furthermore, attack types differ in vertical positioning: low-frequency scans cluster in the negative range, while high-frequency brute force attacks appear on the positive axis.

Figure 5.

t-SNE Visualization of traffic data distribution.

Benign traffic exhibits radial diffusion, reflecting its inherent heterogeneity. For instance, short HTTP sessions from web browsing coexist with long duration video streams, periodic email client interactions differ from the bursty patterns of instant messaging, and SSH tunnels and DNS queries display distinct payload characteristics across application layer protocols. The slight overlap between benign and malicious traffic is attributed to the ambiguity introduced by encrypted communication, which blurs distributional boundaries.

From a feature engineering perspective, relying on a single dimension such as the packet count is insufficient to distinguish APT attacks from normal encrypted behavior. However, constructing a feature space that integrates timing, protocol, and statistical characteristics enables the natural clustering of attack traffic during dimensionality reduction. This validates the effectiveness of multi-dimensional fusion in amplifying the visibility and separability of malicious behaviors.

5.2. Parameter Selection Strategy

5.2.1. Clustering Parameters

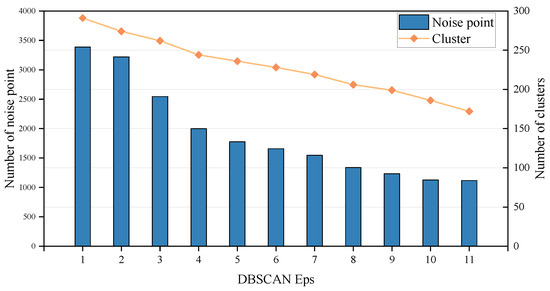

In the experiments, we found that the DBSCAN clustering configuration and the preprocessing strategies for noise and imbalanced data significantly affected the model’s detection performance. An adaptive algorithm adjusted the neighborhood radius (Eps) of DBSCAN from 1 to 11. As shown in Figure 6, the number of noise points dropped from 3386 to 1118, the number of clusters dropped sharply from 291 to 172, and the misclassification rate gradually decreased from 6.45% to 0.67%. This shows that although a larger Eps value effectively suppresses noise interference, it may also lead to the loss of fine-grained attack features in the traffic channel. Specifically, when Eps is in the range of 4–7, the misclassification rate stays between 0.98% and 2.14%, and the number of clusters stays between 219 and 244. Within this range, a balance is achieved between retaining the local density characteristics of malicious traffic and suppressing random noise. Therefore, Eps is set to 4 in the experiments. In the preprocessing stage, we re-label noise points as independent clusters instead of discarding them, which avoids information loss.

Figure 6.

Results on the Eps and noise point of clustering.
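The re-labeling of noise points can be sketched as follows. The snippet assumes the common convention (used, for example, by scikit-learn's DBSCAN) that noise points carry the label -1, and it assumes that each noise point becomes its own singleton cluster; the text does not state whether noise points are kept as singletons or grouped together, so this is one possible reading.

```python
def relabel_noise(labels, noise_label=-1):
    """Give every noise point a fresh cluster id instead of discarding it."""
    next_id = max((l for l in labels if l != noise_label), default=-1) + 1
    relabeled = []
    for l in labels:
        if l == noise_label:
            relabeled.append(next_id)   # new singleton cluster
            next_id += 1
        else:
            relabeled.append(l)
    return relabeled

# Hypothetical DBSCAN output: two clusters (0 and 1) and two noise points
cluster_labels = [0, 0, -1, 1, -1]
kept = relabel_noise(cluster_labels)
```

After re-labeling, every sample belongs to some cluster, so no traffic channel is dropped before the embedding stage.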

We also found that some unknown low-rate continuous-attack traffic may be misjudged as noise because its feature density overlaps with that of normal traffic. For example, when Eps is 6, the normal flow aggregation channel averages 3456 pkt/channel with a standard deviation of 1124 pkt/channel, while the attack flow aggregation channel averages 4567 pkt/channel with a standard deviation of 1456 pkt/channel. The two distributions differ significantly, but low-variance attack traffic may still be filtered out due to insufficient local density. In addition, to deal with the imbalanced dataset, we dynamically balance normal and attack samples to alleviate the class imbalance in the traffic data, and this approach shows good robustness in the experiments. In the time dimension, the adaptive algorithm combines a sliding window technique to capture the dynamic evolution of attack traffic. For DDoS attacks that last for several minutes, the algorithm can identify changes in traffic patterns at different stages; for short-term port scanning attacks, it preserves the continuity of the complete behavior sequence. This provides a richer feature representation for subsequent real-time detection.

5.2.2. Embedding Size

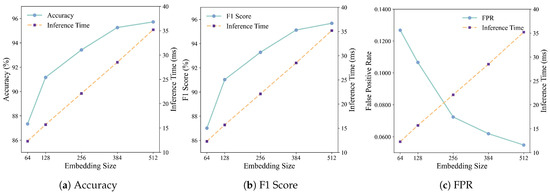

We use different embedding strategies based on dimensionality to optimize model performance, as shown in Table 3. For 64- and 128-dimensional embeddings, we exclusively employ Word2Vec. For 256-dimensional and higher configurations, we combine Word2Vec and FastText to enhance feature representation. In lower-dimensional spaces, the constrained vector space may lead to feature interference between models, resulting in mutual exclusion effects when applying dual embeddings. Consequently, we exclusively employ the Word2Vec strategy in this dimensional range. When the dimensionality exceeds 128, Word2Vec exhibits performance saturation, whereas the expanded vector space enables FastText to effectively complement the representation with subword-level features, ultimately enhancing the model’s overall ability. The concatenated vectors form a 384-dimensional comprehensive representation, retaining both the flow-level semantic context and substructure local features.

Table 3.

Performance comparison under different embedding sizes.

The 384-dimensional embedding achieves an optimal trade-off between detection performance and computational efficiency. It yields an accuracy of 95.26% and an F1 score of 95.12%, indicating robust discriminative capability. This performance stems from the embedding’s capacity to capture both global semantic dependencies and fine-grained local structures in the traffic data through the complementary encoding strengths of Word2Vec and FastText. The 384-dimensional dual-channel embedding plays a key role in reducing false positives and false negatives while maintaining a high overall accuracy. Compared with using Word2Vec alone in low dimensions, it significantly reduces the FPR to 6.19% and the FNR to 5.85%. Although the 512-dimensional configuration achieves better metrics (FPR: 5.48%, FNR: 2.71%), it comes with a higher computational cost. Therefore, the 384-dimensional embedding provides the best balance, ensuring efficiency while providing enough capacity to capture semantic and structural patterns and minimize the risk of misclassification in encrypted traffic detection.

In terms of resource overhead, the 384-dimensional configuration maintains real-time applicability as shown in Figure 7, with an inference time of 47.3 ms and a model size of 156 MB at an average batch size of 128. Compared to the 512-dimensional configuration, which only improved accuracy by 0.46%, the 384-dimensional version avoids unnecessary computational costs. Specifically, increasing to 512 dimensions results in a 23.5% increase in inference time from 47.3 ms to 58.4 ms, as well as a 31% increase in memory consumption, which significantly affects the feasibility of deployment in resource-constrained or edge environments. The 256-dimensional embedding provides a lightweight alternative, achieving 94.1% accuracy in an inference time of 35.2 ms, making it more suitable for latency sensitive scenarios.

Figure 7.

Comparison of different embedding sizes.

In addition, the dual embedding strategy improves feature expressiveness compared to a single Word2Vec model. For example, FastText can effectively capture the relationship between numeric tokens and better identify the similarity between the port numbers “80” and “8080”, while Word2Vec can better model temporal dependencies, such as packet arrival intervals. This complementarity reduces the false positive rate by 6.49% compared to a single embedding method under the same setting, which supports the high-dimensional dual embedding configuration.
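The port-number example can be illustrated with the character n-gram decomposition that FastText-style models rely on. The sketch below wraps each token in the boundary markers `<` and `>` and extracts n-grams of length 3 to 6, which matches FastText's default range; the helper name `char_ngrams` is hypothetical, and the actual tokenization and n-gram settings used in the experiments are not specified in the text.

```python
def char_ngrams(token, n_min=3, n_max=6):
    """Character n-grams of a token wrapped in FastText-style boundaries."""
    wrapped = f"<{token}>"
    return {wrapped[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(wrapped) - n + 1)}

grams_80 = char_ngrams("80")
grams_8080 = char_ngrams("8080")
grams_22 = char_ngrams("22")

shared = grams_80 & grams_8080           # subword units both ports have in common
jaccard = len(shared) / len(grams_80 | grams_8080)
unrelated = grams_80 & grams_22          # no overlap between "80" and "22"
```

Because "80" and "8080" share the subword units `<80` and `80>`, their FastText vectors are built partly from the same n-gram embeddings, whereas a purely word-level model treats the two ports as unrelated symbols unless they co-occur in similar contexts.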

Traditional network traffic detection methods usually feed discrete features directly as numerical inputs or use one-hot encoding. Numerical inputs artificially impose an order relationship between values: for example, the numerical difference between port “22” and port “80” does not reflect any actual semantic difference. One-hot encoding, in turn, cannot capture the contextual semantic association between features. Both lead to insufficient model generalization ability. To overcome these limitations, the dual-channel embedding method combines Word2Vec and FastText. Word2Vec learns the global semantic structure of traffic patterns through contextual co-occurrence relationships, which enables the model to identify potential behavioral commonalities between different attack types and enriches the semantic information of the traffic representation. FastText, by decomposing features into subword units for embedding training, effectively supplements the generalization ability of Word2Vec. This dual-channel embedding method combines the expression of global trends and local details, converting the originally sparse, discrete, and semantically inconsistent numerical features into high-dimensional vector representations with contextual semantics, which not only alleviates the representation bias of traditional methods but also improves the ability to identify malicious traffic. Our experimental results also verify that this method significantly improves detection performance. In summary, the dual-channel embedding strategy effectively bridges the semantic gap in traditional methods, improves the model’s ability to express and detect abnormal traffic, and is a substantial improvement over existing numerical modeling methods.

5.3. Ablation Experiment

The ablation experiments examine the synergy of components such as the multi-head attention mechanism, the feature fusion layer, attention pooling, and the residual connections. We ablate different modules to highlight the effects of individual components and embeddings, as shown in Table 4. This ablation study highlights the core components of EFTransformer by systematically evaluating the impact of removing key architectural modules. Notably, removing the multi-head attention module results in the most significant drop in accuracy, suggesting that it plays a crucial role in capturing diverse and global dependencies in encrypted traffic. The feature fusion layer is also critical as it enables the interaction of multiple behavioral channels, helping to unify the representation of network activity. Attention pooling enhances temporal focus and interpretability, especially in identifying short-lived or sparse anomalies. Residual connections, while often overlooked, support stable training and deep feature extraction. In addition, the performance of single-feature encoder variants drops significantly, further demonstrating the value of multi-semantic encodings. Taken together, these findings validate that each module of EFTransformer plays a synergistic role in achieving high-accuracy detection of complex encrypted traffic patterns.

Table 4.

Performance Comparison of different model variants.

Ablation experiments validate the critical design choices in EFTransformer. As shown in Table 4, the complete model achieves 95.26% accuracy, a 6.19% FPR, and a 95.12% F1 score, significantly outperforming all its variants. Among the ablations, removing the multi-head attention module causes the most substantial drop in accuracy, down 5.83%, underscoring its essential role in capturing global and diverse dependency patterns across feature subspaces. This module enables the model to effectively capture intricate behavioral correlations embedded in encrypted traffic.

The feature fusion layer is another cornerstone of the architecture. Its removal leads to a 3.98% decrease in accuracy. This layer aggregates information from four parallel behavior encoding channels, allowing cross-feature interaction and enhancing the model’s ability to capture complementary patterns. Without this fusion, the model struggles to form a unified understanding of network behavior.

The attention pooling mechanism, while less impactful in terms of absolute accuracy loss (2.14%), plays a unique role in refining feature representation. By computing importance weights over sequence positions, it enables the model to focus on key temporal segments and suppress irrelevant or redundant information. Attention Pooling dynamically calculates the attention weight of the output of each time step in the input feature sequence. This means that the model can adaptively evaluate the contribution of the feature content at each moment to the overall behavior, thereby focusing on key time segments rather than looking at all time steps equally. Compared with average pooling and maximum pooling, attention pooling retains the information of multiple key time segments through weighted fusion and gives them higher expression priority. This method can more effectively highlight the representative behavioral features in malicious communications and improve the model’s perception of changes in behavioral patterns. In short, attention pooling implements a dynamic aggregation strategy based on feature content, which not only improves the representation strength of key features but also avoids the shortcomings of traditional pooling methods in information utilization.

Removing residual connections results in a 4.84% drop in accuracy, confirming their importance in stabilizing training, mitigating gradient vanishing, and facilitating the learning of deep hierarchical features. As a standard design in deep networks, residual connections ensure smoother gradient flow and enhance convergence during training.

The four-behavior pattern encoding, combined with subsequent fusion, is crucial for constructing a holistic view of traffic behavior and identifying anomalies that manifest across multiple dimensions. While removing modules reduces the parameter count, it also leads to pronounced accuracy degradation. This confirms the effectiveness of EFTransformer’s multi-level architecture in achieving fine-grained, high-precision encrypted traffic detection.

EFTransformer minimizes the FPR and FNR by striking a balance between feature modeling and component control through a tightly integrated architectural design. By leveraging multi-head attention mechanisms and feature fusion on four behavioral channels, it captures temporal and semantic patterns in encrypted traffic, thereby achieving fine-grained differentiation between benign and malicious traffic. The design achieves a low FPR of 6.19% and an FNR of 5.85%, outperforming all ablation variants. Ablation studies confirm that removing key modules such as attention mechanisms, feature fusion, or residual connections significantly raises the FPR and FNR, to as much as 12.44% and 11.39%, highlighting the role of multi-dimensional encoding, dynamic aggregation, and gradient stabilization in suppressing false positives and false negatives. Together, these strategies ensure robust detection performance even under dynamic and unbalanced traffic conditions.

5.4. Baseline Experiment Comparison

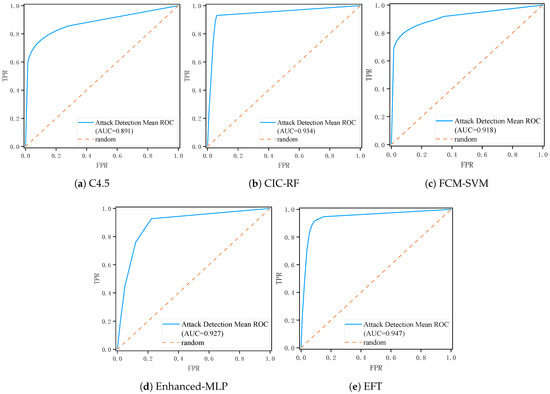

The C4.5 decision tree achieves an area under the ROC curve of 0.891, as shown in Figure 8, combined with an accuracy of 90.13%, a precision of 89.48%, and a recall of 84.16%. Its FPR of 12.03% and FNR of 15.84%, as shown in Table 5, reflect a model that excels at interpreting discrete protocols and categorical features yet suffers from overfitting to training traffic patterns. The resulting decision boundaries adapt closely to observed malicious signatures but exhibit limited robustness when presented with novel or slightly perturbed inputs.

Figure 8.

Comparison of the ROC for the baseline model.

Table 5.

Baseline model performance comparison.

Aggregating two hundred trees in the CIC-RF framework yields superior stability and discrimination capacity, as shown in Table 5. The ensemble records an AUC of 0.934, as shown in Figure 8, and an accuracy of 93.48%. Precision reaches 91.42%, while recall stands at 88.47%, yielding a low FPR of 10.26% and an FNR of 11.53%. Bootstrap sampling decorrelates individual trees and reduces variance at the expense of interpretability. The model’s dependence on high-dimensional continuous features necessitates dimensionality reduction or discretization during preprocessing which may obscure subtle temporal or sequential traffic characteristics.

The FCM-SVM secures an AUC of 0.918, as shown in Figure 8, and an accuracy of 92.83%. Its precision equals 91.77%, while its recall reaches 86.82%, leading to an FPR of 11.08% and an FNR of 13.18%. FCM performs initial data clustering, while the Gaussian kernel SVM captures nonlinear separability in feature space; however, hyperparameter sensitivity limits real-time deployment and requires extensive cross-validation to achieve optimal performance. Despite these limitations, the hybrid FCM-SVM approach exhibits higher accuracy and generalization ability than the standalone methods.

The Enhanced-MLP, composed of two hidden layers with rectified linear activations and Dropout regularization, records an AUC of 0.92, as shown in Figure 8, alongside an accuracy of 93.71%. It achieves a precision of 91.19% and a recall of 87.74%, resulting in an FPR of 10.52% and an FNR of 12.26%, as shown in Table 5. The MLP in the benchmark experiment is combined with clustering; it uses the ReLU activation function to alleviate the gradient vanishing problem and a Dropout layer to enhance generalization by randomly deactivating neurons during training. Although the layered abstraction can learn the complex associations in encrypted malicious traffic, and Dropout alleviates overfitting, the static fully connected topology still has limitations in modeling dynamic temporal dependencies when attack and benign traffic exhibit highly overlapping feature distributions.

The EFTransformer model demonstrates exceptional performance in attack detection, achieving an AUC of 0.947, as shown in Figure 8. The model sustains a recall of 94.15% while maintaining a low FPR of 6.19%. This balance yields a precision of 91.30%, an F1 score of 95.12%, and an overall accuracy of 95.26%, as shown in Table 5. These figures confirm that EFTransformer not only identifies the vast majority of malicious sessions but does so with few collateral alerts, making it well suited for deployment under strict operational thresholds. The model’s stable output across multiple rounds of experiments and ablation tests shows that it is highly robust under noisy conditions. Even under label distribution drift, the model relies on dual embedding representations and attention guidance to mitigate the bias caused by differences between the proportion of malicious traffic in the training set and that in the imbalanced test environment.

In terms of accuracy, EFTransformer outperforms the traditional ML detection models, with an overall accuracy of 95.26%, which is 1.55 percentage points higher than the best-performing baseline, Enhanced-MLP, and 2.43 and 1.78 percentage points higher than FCM-SVM and CIC-RF, respectively. EFTransformer’s performance on the two core indicators, FPR and FNR, is particularly strong. Its FPR is 6.19%, which is at least 4 percentage points lower than that of CIC-RF and FCM-SVM and indicates greatly improved false alarm control. At the same time, its FNR is 5.85%, well below the 11.53% to 15.84% of the baseline models, indicating that EFTransformer is less likely to miss real attack traffic and is more robust. In summary, EFTransformer not only leads in detection accuracy but also shows clear advantages in reducing the FPR and FNR, reflecting its practical value in encrypted malicious traffic scenarios.

The EFTransformer model achieved the highest accuracy of 95.26% and the lowest FPR and FNR in the experimental comparison, demonstrating that the dual-channel embedding module effectively captures the global semantics of traffic patterns through contextual co-occurrence relationships, thereby enriching the semantic representation of network traffic. It also confirms that the parallel encoding module enables semantic interactions across feature dimensions; the multi-head attention mechanism enhances deep dependencies between features, and the attention pooling mechanism, as a dynamic aggregation strategy, improves the model’s ability to focus on key behavioral features. This lays a solid foundation for future research. In addition to improving detection accuracy, further work will explore lightweight multi-channel architectures and adaptive attention mechanisms to enhance the model’s deployment efficiency and real-time detection capabilities in edge computing environments.

The detection accuracy of 95.26% indicates that EFTransformer can already identify most potential encrypted malicious behavior patterns, demonstrating strong practical value and stability in real-world applications. It reflects the model’s high reliability and decision-making capability when dealing with complex traffic scenarios. However, slightly under 5% of traffic is still misclassified, and the FNR of 5.85% shows that some attack traffic still goes undetected, suggesting that there is room for improvement in handling extreme or stealthy threats. Future research will continue to optimize the model to achieve higher detection coverage and accuracy.

6. Conclusions

This study proposes a novel architecture, EFTransformer, for encrypted malicious traffic detection, which integrates dual-semantic embedding, multi-dimensional feature encoding, and a self-attention mechanism. Experimental results demonstrate its robustness and reliability in complex encrypted environments. Overall, EFTransformer effectively addresses key challenges such as feature ambiguity, attack pattern diversity, and class imbalance. Its hybrid modeling framework—combining semantic representation, behavioral abstraction, and an adaptive attention mechanism—offers a scalable and deployable solution for encrypted traffic analysis. This work lays a solid foundation for future research on more lightweight and real-time detection systems and provides a structural blueprint for integrating LLM capabilities with fine-grained traffic behavior modeling.

As network threats continue to evolve and the technology ecosystem changes rapidly, future research should deepen exploration in the following directions. First, to meet the lightweight deployment requirements of edge computing and IoT scenarios, model compression and hardware acceleration should be further integrated, and adaptive model optimization based on neural architecture search (NAS) should be explored to balance detection accuracy and real-time performance in resource-constrained environments. Second, with the emergence of AI-driven adaptive attack methods, an online incremental learning mechanism can be introduced to build a dynamic defense system, so that the model can quickly identify unknown attack variants from a small number of samples and reduce its dependence on labeled data. Third, to address privacy compliance issues, federated learning and secure multi-party computation frameworks should be explored, taking into account both detection efficiency and user privacy protection. Fourth, at the technical integration level, digital twin technology can be used to build a high-fidelity network simulation environment that simulates multi-dimensional attack scenarios and enhances the generalization ability of the model; at the same time, a causal reasoning mechanism can be introduced to analyze potential correlations along the attack chain, thereby improving the efficiency of threat tracing and blocking at the root. Finally, we will promote the intelligent collaboration of detection systems: combining traffic analysis with threat intelligence maps and user behavior modeling; building an integrated detection, response, and defense ecosystem; and establishing an industry-level benchmark testing platform and adversarial sample library to promote the robust iteration of algorithms in complex real-world scenarios. These directions will not only advance malicious traffic detection technology but also provide theoretical support and practical paths for building an autonomous and evolving network security protection system.

Author Contributions

Conceptualization, Z.D. and W.F.; methodology, W.F.; software, S.T.; validation, S.T., F.D., and Z.D.; formal analysis, S.T.; investigation, S.T.; resources, Z.D.; data curation, S.T.; writing—original draft preparation, S.T.; writing—review and editing, F.D. and W.F.; visualization, S.T.; supervision, Z.D. and W.F.; project administration, Z.D. and W.F.; funding acquisition, W.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the XJTLU AI University Research Center, Jiangsu Province Engineering Research Center of Data Science and Cognitive Computation at XJTLU, and SIP AI innovation platform (YZCXPT2022103). This work was also supported in part by XJTLU Research Development Funding (RDF-21-02-012) and XJTLU Teaching Development Funding (TDF21/22-R24-177).

Data Availability Statement

The data are available upon request due to restrictions.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).