Data Privacy in the Internet of Things: A Perspective of Personal Data Store-Based Approaches

Abstract

1. Introduction

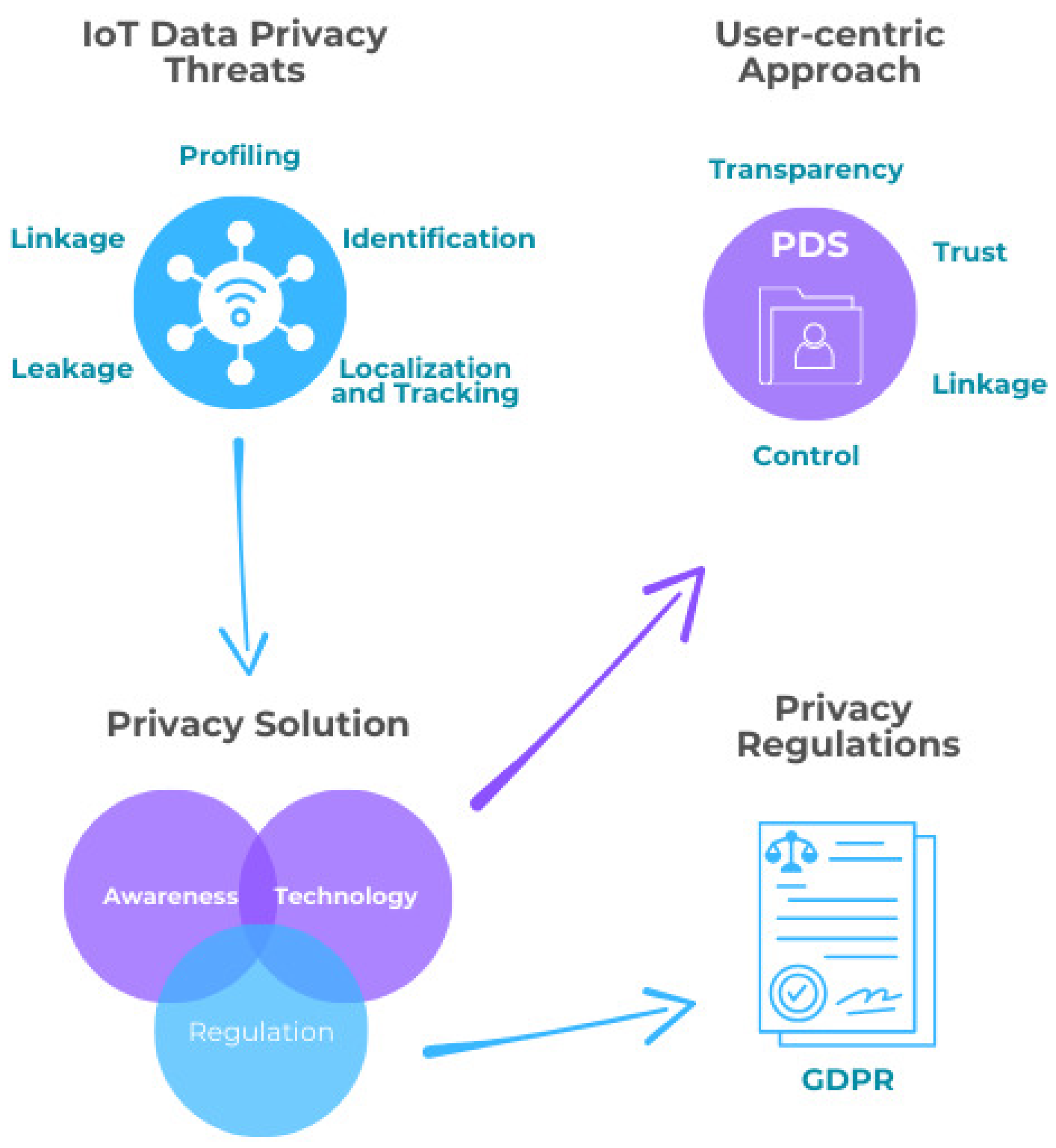

- Identifies and examines the critical privacy challenges in the context of the IoT, which arise from the increasing collection and sharing of large volumes of personal data;

- Discusses the triad of privacy solutions: privacy awareness, privacy regulation, and privacy-enhancing technologies;

- Introduces the Personal Data Store (PDS) concept and explores it as a promising way to mitigate privacy threats, presenting relevant works that follow this approach;

- Discusses the challenges of PDS implementation in relation to GDPR compliance.

2. Related Works

| Authors | Year | Domain | Cycle | User-Centric | Decentralized | GDPR | Data Control |
|---|---|---|---|---|---|---|---|
| Briggs et al. [17] | 2020 | Federated Learning | DP | ✗ | Partial | ✓ | Low |
| Kounoudes and Kapitsaki [16] | 2020 | Privacy Law | DS, DP, DSR, Processing | ✓ | ✗ | ✓ | Medium |
| Ogonji et al. [15] | 2020 | Taxonomy | DS, DP, DSR | ✗ | ✗ | ✗ | Low |
| Rodríguez et al. [14] | 2023 | Machine Learning | DP | ✗ | ✗ | Partial | Low |
| Kolevski and Michael [18] | 2024 | General | DG, DS, DP, DSR | ✗ | ✗ | ✗ | Medium |
| Abbas et al. [19] | 2024 | Federated Learning | DP | ✗ | Partial | ✓ | Low |
| Tudoran [20] | 2025 | General | DG, DS, DP, DSR | ✓ | ✗ | ✓ | Medium |
| This study | 2025 | Personal Data Store | DS, DP, DSR | ✓ | ✓ | ✓ | High |

3. Data Privacy in the Internet of Things

3.1. IoT Data

3.2. IoT Data Privacy

- Information privacy requires clear rules governing the collection and handling of personal data, such as the contents of data banks and medical or governmental records;

- Bodily privacy entails protecting a person’s physical self against invasive procedures, such as blood sampling, DNA collection, and genetic testing;

- Privacy of communications relates to the security of any form of communication, regardless of the technology used, such as mail, email, and telephone;

- Territorial privacy establishes boundaries against intrusion into domestic, work, and public spaces.

- Awareness of privacy risks imposed by smart things and services surrounding the data subject;

- Individual control over the collection and processing of personal information by surrounding smart things;

- Awareness and control of subsequent use and dissemination of personal information by those entities to any entity outside the subject’s personal control sphere.

3.3. Information Privacy Protection

3.3.1. Privacy Awareness

3.3.2. Privacy Regulation

- Lawfulness, fairness, and transparency imply that any personal data processing by a controller must have a legal basis, be fair towards the individual, and be transparent to individuals and regulators. Users must be informed in a concise, easily accessible, and easy-to-understand manner;

- Purpose limitation implies that personal data must be collected for specific, explicit, and legitimate purposes, and it should not be processed in ways that are incompatible with those purposes;

- Data minimization implies that personal data must be adequate, relevant, and limited to what is necessary for the purposes;

- Accuracy implies that controllers should ensure personal data are accurate and, where necessary, kept up to date;

- Storage limitation implies that controllers must hold personal data in a way that allows the identification of individuals for no longer than necessary for the specified purposes;

- Integrity and confidentiality imply that personal data must be processed securely, ensuring protection against unauthorized or unlawful use and accidental loss, destruction, or damage;

- Accountability implies that the controller shall be responsible for and able to demonstrate compliance with the principles mentioned above.
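Three of these principles (purpose limitation, data minimization, and storage limitation) lend themselves to a small illustration. The following is a minimal sketch under stated assumptions, not a compliance mechanism: the `PURPOSES` registry, the field names, and the retention periods are hypothetical values chosen only for this example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical purpose registry (an assumption for this sketch): the fields
# each declared purpose may use and how long its records may be retained.
PURPOSES = {
    "heating_control": {
        "fields": {"temperature", "timestamp"},
        "retention": timedelta(days=30),
    },
    "energy_billing": {
        "fields": {"energy_kwh", "timestamp"},
        "retention": timedelta(days=365),
    },
}


def minimize(reading: dict, purpose: str) -> dict:
    """Purpose limitation and data minimization: keep only the fields
    that the declared purpose needs, and reject undeclared purposes."""
    if purpose not in PURPOSES:
        raise ValueError(f"undeclared purpose: {purpose}")
    allowed = PURPOSES[purpose]["fields"]
    return {key: value for key, value in reading.items() if key in allowed}


def is_expired(collected_at: datetime, purpose: str, now: datetime) -> bool:
    """Storage limitation: a record expires once the retention period
    declared for its purpose has elapsed."""
    return now - collected_at > PURPOSES[purpose]["retention"]


# Example: an IoT reading that carries more than the heating service needs.
reading = {
    "temperature": 21.5,
    "timestamp": "2025-01-10T08:00:00Z",
    "occupant_name": "Alice",
    "energy_kwh": 0.4,
}
print(minimize(reading, "heating_control"))
```

In this sketch, the occupant name and the energy reading never reach the heating service, and a periodic job could call `is_expired` to decide which stored records to delete.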

- Right to be informed about the data collection and its purposes (Art. 13).

- Right of access: to obtain from the controller confirmation as to whether or not personal data concerning him or her are being processed and, if so, access to those data (Art. 15).

- Right to rectification of inaccurate personal data concerning him or her and to have incomplete data completed (Art. 16).

- Right to erasure (the “right to be forgotten”) of personal data concerning him or her held by the controller, including where consent is withdrawn (Art. 17).

- Right to restrict processing, placing limitations on the way that organizations use data (Art. 18).

- Right to portability of data about him or her. Data subjects have the right to have data transferred to themselves or a third party in a structured, commonly used, and machine-readable format (Art. 20).

- Right to object to personal data processing at any time and under specific circumstances (Art. 21).

- Right not to be subject to automated decision-making and profiling (Art. 22).
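Several of the rights listed above map naturally onto operations that a controller’s storage layer would need to expose. The sketch below is illustrative only: `ControllerStore` and its method names are hypothetical, the store is kept in memory, and JSON is used as one possible structured, machine-readable export format for Art. 20.

```python
import json


class ControllerStore:
    """Hypothetical in-memory store of personal data held by a controller."""

    def __init__(self):
        self._records = {}        # subject_id -> dict of personal data
        self._restricted = set()  # subjects who restricted processing

    def collect(self, subject_id, data):
        self._records.setdefault(subject_id, {}).update(data)

    def access(self, subject_id):
        """Art. 15: confirm whether data are processed and return a copy."""
        data = self._records.get(subject_id)
        return {"processed": data is not None, "data": dict(data or {})}

    def rectify(self, subject_id, field_name, value):
        """Art. 16: correct an inaccurate field or complete a missing one."""
        if subject_id not in self._records:
            raise KeyError(subject_id)
        self._records[subject_id][field_name] = value

    def erase(self, subject_id):
        """Art. 17: delete everything held about the subject."""
        self._records.pop(subject_id, None)
        self._restricted.discard(subject_id)

    def restrict(self, subject_id):
        """Art. 18: keep the data but stop further processing."""
        self._restricted.add(subject_id)

    def may_process(self, subject_id):
        return subject_id in self._records and subject_id not in self._restricted

    def export(self, subject_id):
        """Art. 20: return the subject's data in a machine-readable format."""
        return json.dumps(self._records.get(subject_id, {}), sort_keys=True)


store = ControllerStore()
store.collect("alice", {"heart_rate": 72, "city": "Salvdor"})
store.rectify("alice", "city", "Salvador")  # fix the misspelled value
print(store.export("alice"))
```

A real deployment would also need identity verification, response deadlines, and propagation of erasure to downstream recipients, none of which this sketch attempts.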

3.3.3. Privacy-Enhancing Technologies

4. PDS-Based Solution for IoT Privacy

4.1. System Model

4.1.1. Personal Data Store Definition

4.1.2. PDS Benefits and Drawbacks

- The ability to collect, analyze, manage, and share data with others;

- Complete and granular control over data processing; i.e., users need to give their consent and be informed about it;

- Users’ consent based on better-informed decisions because they will have more information about the processing (e.g., potential risks, real-time logs, audits, monitoring, and visualization);

- A more effective architecture (including controlled collection and processing on the PDS) against inappropriate access by third parties;

- Higher levels of security and privacy, since users can decide which information to share, with whom, and for what purposes;

- Incentive to service providers to adopt more privacy-friendly approaches;

- Power to choose between different platforms or services without losing control or ownership over data, fostering competition and innovation among service providers;

- Ways and opportunities for users to monetize their personal data;

- Means to execute individual analysis and gain insights about themselves.
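The first three benefits (granular control, consent, and informed decisions supported by logs) can be sketched as a consent check combined with an audit trail. This is a toy model under stated assumptions: `PersonalDataStore` and `Consent` are hypothetical names, the store is in memory, and the code does not reflect the API of Solid, Mydex, or any other real platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Consent:
    """One consent grant: who may read which data type, and for what purpose."""
    requester: str
    data_type: str
    purpose: str


@dataclass
class PersonalDataStore:
    """Hypothetical in-memory PDS used only for illustration."""
    data: dict = field(default_factory=dict)       # data_type -> list of values
    consents: set = field(default_factory=set)     # active Consent grants
    audit_log: list = field(default_factory=list)  # one entry per request

    def store(self, data_type, value):
        self.data.setdefault(data_type, []).append(value)

    def grant(self, requester, data_type, purpose):
        self.consents.add(Consent(requester, data_type, purpose))

    def revoke(self, requester, data_type, purpose):
        self.consents.discard(Consent(requester, data_type, purpose))

    def request(self, requester, data_type, purpose):
        """Release data only under a matching consent; log every attempt."""
        allowed = Consent(requester, data_type, purpose) in self.consents
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "requester": requester,
            "data_type": data_type,
            "purpose": purpose,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(
                f"no consent for {requester} to use {data_type} for {purpose}"
            )
        return list(self.data.get(data_type, []))


pds = PersonalDataStore()
pds.store("heart_rate", 72)
pds.grant("clinic_app", "heart_rate", "health_monitoring")
print(pds.request("clinic_app", "heart_rate", "health_monitoring"))
```

Because consent is keyed on requester, data type, and purpose together, the same requester asking for the same data under a different purpose is refused, and the refusal still appears in `audit_log` for the user to inspect.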

4.2. PDS Technical Overview

4.3. PDS-Based Solution for Privacy Threats

4.4. GDPR Compliance

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Kemp, S. Digital 2023: Global Statshot Report. DataReportal—Global Digital Insights. 2023. Available online: https://datareportal.com/reports/digital-2023-global-overview-report (accessed on 13 January 2025).

- Laoutaris, N. Data Transparency: Concerns and Prospects. Proc. IEEE 2018, 106, 1867–1871.

- Alessi, M.; Camillò, A.; Giangreco, E.; Matera, M.; Pino, S.; Storelli, D. A Decentralized Personal Data Store based on Ethereum: Towards GDPR Compliance. J. Commun. Softw. Syst. 2019, 15, 79–88.

- Grothaus, M. The Biggest Data Scandals and Breaches of 2018. 2018. Available online: https://www.businessinsider.com/data-hacks-breaches-biggest-of-2018-2018-12 (accessed on 13 January 2025).

- Sinha, S. Number of Connected IoT Devices Growing 16% to 16.7 Billion Globally. 2023. Available online: https://iot-analytics.com/number-connected-iot-devices/ (accessed on 11 January 2025).

- ONU. População Mundial Atinge 8 Bilhões de Pessoas|ONU News. 2022. Available online: https://shorturl.at/fWwkd (accessed on 10 January 2025).

- Lueth, K.L. State of the IoT 2020: 12 Billion IoT Connections. 2020. Available online: https://iot-analytics.com/state-of-the-iot-2020-12-billion-iot-connections-surpassing-non-iot-for-the-first-time/ (accessed on 16 January 2025).

- Duarte, F. Number of IoT Devices (2023–2030). 2023. Available online: https://explodingtopics.com/blog/number-of-iot-devices (accessed on 10 January 2025).

- Joshi, S. 70 IoT Statistics to Unveil the Past, Present, and Future of IoT. 2023. Available online: https://learn.g2.com/iot-statistics#top-stats (accessed on 10 January 2025).

- Majumder, S.; Aghayi, E.; Noferesti, M.; Memarzadeh-Tehran, H.; Mondal, T.; Pang, Z.; Deen, M.J. Smart Homes for Elderly Healthcare—Recent Advances and Research Challenges. Sensors 2017, 17, 2496.

- Ashraf, S. A proactive role of IoT devices in building smart cities. Internet Things Cyber-Phys. Syst. 2021, 1, 8–13.

- Garg, D.; Alam, M. Smart agriculture: A literature review. J. Manag. Anal. 2023, 10, 359–415.

- Kokolakis, S. Privacy attitudes and privacy behaviour: A review of current research on the privacy paradox phenomenon. Comput. Secur. 2017, 64, 122–134.

- Rodríguez, E.; Otero, B.; Canal, R. A Survey of Machine and Deep Learning Methods for Privacy Protection in the Internet of Things. Sensors 2023, 23, 1252.

- Ogonji, M.M.; Okeyo, G.; Wafula, J.M. A survey on privacy and security of Internet of Things. Comput. Sci. Rev. 2020, 38, 100312.

- Kounoudes, A.D.; Kapitsaki, G.M. A mapping of IoT user-centric privacy preserving approaches to the GDPR. Internet Things 2020, 11, 100179.

- Briggs, C.; Fan, Z.; Andras, P. A Review of Privacy Preserving Federated Learning for Private IoT Analytics. arXiv 2020.

- Kolevski, D.; Michael, K. Edge Computing and IoT Data Breaches: Security, Privacy, Trust, and Regulation. IEEE Technol. Soc. Mag. 2024, 43, 22–32.

- Abbas, S.R.; Abbas, Z.; Zahir, A.; Lee, S.W. Federated Learning in Smart Healthcare: A Comprehensive Review on Privacy, Security, and Predictive Analytics with IoT Integration. Healthcare 2024, 12, 2587.

- Tudoran, A.A. Rethinking privacy in the Internet of Things: A comprehensive review of consumer studies and theories. Internet Res. 2025, 35, 514–545.

- Perera, C.; Zaslavsky, A.; Christen, P.; Georgakopoulos, D. Context Aware Computing for The Internet of Things: A Survey. IEEE Commun. Surv. Tutor. 2014, 16, 414–454.

- Ding, X.; Wang, H.; Li, G.; Li, H.; Li, Y.; Liu, Y. IoT data cleaning techniques: A survey. Intell. Converg. Netw. 2022, 3, 325–339.

- Renaud, K.; Gálvez-Cruz, D. Privacy: Aspects, definitions and a multi-faceted privacy preservation approach. In Proceedings of the 2010 Information Security for South Africa, Johannesburg, South Africa, 2–4 August 2010; pp. 1–8.

- Westin, A. Privacy and Freedom; Athenaeum: New York, NY, USA, 1968.

- Margulis, S.T. On the Status and Contribution of Westin’s and Altman’s Theories of Privacy. J. Soc. Issues 2003, 59, 411–429.

- Banisar, D. Privacy and Human Rights—An International Survey of Privacy Laws and Practice. 2007. Available online: https://gilc.org/privacy/survey/intro.html (accessed on 16 January 2025).

- Clarke, R. Internet privacy concerns confirm the case for intervention. Commun. ACM 1999, 42, 60–67.

- Atlam, H.F.; Wills, G.B. IoT Security, Privacy, Safety and Ethics. In Digital Twin Technologies and Smart Cities, Internet of Things; Springer: Cham, Switzerland, 2020; pp. 123–149.

- Al-Sharekh, S.I.; Al-Shqeerat, K.H.A. An Overview of Privacy Issues in IoT Environments. In Proceedings of the 2019 International Conference on Advances in the Emerging Computing Technologies (AECT), Al Madinah Al Munawwarah, Saudi Arabia, 10 February 2020; pp. 1–6.

- Safa, N.S.; Mitchell, F.; Maple, C.; Azad, M.A.; Dabbagh, M. Privacy Enhancing Technologies (PETs) for connected vehicles in smart cities. Trans. Emerg. Telecommun. Technol. 2022, 33, e4173.

- Ziegeldorf, J.H.; Morchon, O.G.; Wehrle, K. Privacy in the Internet of Things: Threats and challenges. Secur. Commun. Netw. 2014, 7, 2728–2742.

- Pinto, G.P.; Donta, P.K.; Dustdar, S.; Prazeres, C. A Systematic Review on Privacy-Aware IoT Personal Data Stores. Sensors 2024, 24, 2197.

- European Union. General Data Protection Regulation (GDPR)—Official Legal Text. 2016. Available online: https://gdpr-info.eu/ (accessed on 31 October 2024).

- Oomen, I.; Leenes, R. Privacy Risk Perceptions and Privacy Protection Strategies. In Policies and Research in Identity Management; de Leeuw, E., Fischer-Hübner, S., Tseng, J., Borking, J., Eds.; Springer: Boston, MA, USA, 2008; pp. 121–138.

- Conrad, S.S. Protecting Personal Information and Data Privacy: What Students Need to Know. J. Comput. Sci. Coll. 2019, 35, 77–86.

- Tavani, H.T.; Moor, J.H. Privacy protection, control of information, and privacy-enhancing technologies. ACM SIGCAS Comput. Soc. 2001, 31, 6–11.

- Soumelidou, A.; Tsohou, A. Towards the creation of a profile of the information privacy aware user through a systematic literature review of information privacy awareness. Telemat. Inform. 2021, 61, 101592.

- Pötzsch, S. Privacy Awareness: A Means to Solve the Privacy Paradox? In The Future of Identity in the Information Society; Matyáš, V., Fischer-Hübner, S., Cvrček, D., Švenda, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 226–236.

- Mugariri, P.; Abdullah, H.; García-Torres, M.; Parameshchari, B.D.; Sattar, K.N.A. Promoting Information Privacy Protection Awareness for Internet of Things (IoT). Mob. Inf. Syst. 2022, 2022, 4247651.

- Ghazinour, K.; Matwin, S.; Sokolova, M. YourPrivacyProtector: A Recommender System for Privacy Settings in Social Networks. Int. J. Secur. Priv. Trust Manag. 2013, 2, 11–25.

- Paspatis, I.; Tsohou, A.; Kokolakis, S. AppAware: A policy visualization model for mobile applications. Inf. Comput. Secur. 2020, 28, 116–132.

- Fatima, R.; Yasin, A.; Liu, L.; Wang, J.; Afzal, W.; Yasin, A. Sharing information online rationally: An observation of user privacy concerns and awareness using serious game. J. Inf. Secur. Appl. 2019, 48, 102351.

- UNCTAD. Data Protection and Privacy Legislation Worldwide|UNCTAD. 2021. Available online: https://unctad.org/page/data-protection-and-privacy-legislation-worldwide (accessed on 31 October 2024).

- Aljeraisy, A.; Barati, M.; Rana, O.; Perera, C. Privacy Laws and Privacy by Design Schemes for the Internet of Things. ACM Comput. Surv. 2021, 54, 1–38.

- Cha, S.C.; Hsu, T.Y.; Xiang, Y.; Yeh, K.H. Privacy enhancing technologies in the internet of things: Perspectives and challenges. IEEE Internet Things J. 2019, 6, 2159–2187.

- Shen, Y.; Pearson, S. Privacy Enhancing Technologies: A Review. 2011. Available online: https://bit.ly/3cfpAKz (accessed on 31 October 2024).

- Alsheikh, M.A. Five Common Misconceptions About Privacy-Preserving Internet of Things. IEEE Commun. Mag. 2023, 61, 151–157.

- CISCO. Consumer Privacy Survey: The Growing Imperative of Getting Data Privacy Right. 2019. Available online: https://www.cisco.com/c/dam/global/en_uk/products/collateral/security/cybersecurity-series-2019-cps.pdf (accessed on 16 January 2024).

- Zheng, S.; Apthorpe, N.; Chetty, M.; Feamster, N. User perceptions of smart home IoT privacy. Proc. ACM Hum.-Comput. Interact. 2018, 2, 200.

- Schwab, K.; Marcus, A.; Oyola, J.R.; Hoffman, W.; Luzi, M. Personal Data: The Emergence of a New Asset Class; Technical report; World Economic Forum: Cologny, Switzerland, 2011.

- Van Kleek, M.; O’Hara, K. The Future of Social Is Personal: The Potential of the Personal Data Store. In Social Collective Intelligence: Combining the Powers of Humans and Machines to Build a Smarter Society; Springer International Publishing: Cham, Switzerland, 2014; Chapter 7; pp. 125–128.

- Jones, W. Chapter Two—A personal space of information. In Keeping Found Things Found; Jones, W., Ed.; The Morgan Kaufmann Series in Multimedia Information and Systems; Morgan Kaufmann: San Francisco, CA, USA, 2008; pp. 22–53.

- Wang, J.; Wang, Z. A Survey on Personal Data Cloud. Sci. World J. 2014, 2014, 969150.

- Verborgh, R. Re-Decentralizing the Web, For Good This Time. In Linking the World’s Information: Essays on Tim Berners-Lee’s Invention of the World Wide Web; Seneviratne, O., Hendler, J., Eds.; ACM: New York, NY, USA, 2023; pp. 215–230.

- Fallatah, K.U.; Barhamgi, M.; Perera, C. Personal Data Stores (PDS): A Review. Sensors 2023, 23, 1477.

- Moiso, C.; Antonelli, F.; Vescovi, M. How Do I Manage My Personal Data?—A Telco Perspective. In Proceedings of the International Conference on Data Technologies and Applications—DATA, Rome, Italy, 25–27 July 2012; INSTICC; SciTePress: Setúbal, Portugal, 2012; pp. 123–128.

- Janssen, H.; Cobbe, J.; Norval, C.; Singh, J. Decentralized data processing: Personal data stores and the GDPR. Int. Data Priv. Law 2020, 10, 356–384.

- Bus, J.; Nguyen, C. Personal Data Management—A Structured Discussion. In Digital Enlightenment Yearbook 2013; Ios Press: London, UK, 2013; pp. 270–287.

- Hummel, P.; Braun, M.; Dabrock, P. Own Data? Ethical Reflections on Data Ownership. Philos. Technol. 2021, 34, 545–572.

- Shanmugarasa, Y.; Paik, H.Y.; Kanhere, S.S.; Zhu, L. Towards Automated Data Sharing in Personal Data Stores. In Proceedings of the 2021 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops), Kassel, Germany, 22–26 March 2021; pp. 328–331.

- Verbrugge, S.; Vannieuwenborg, F.; Van der Wee, M.; Colle, D.; Taelman, R.; Verborgh, R. Towards a personal data vault society: An interplay between technological and business perspectives. In Proceedings of the 2021 60th FITCE Communication Days Congress for ICT Professionals: Industrial Data—Cloud, Low Latency and Privacy (FITCE), Vienna, Austria, 29–30 September 2021; pp. 1–6.

- Solid Project. Solid Specification. 2025. Available online: https://solidproject.org/specification (accessed on 21 March 2025).

- Mydex CIC. Mydex Charter. Available online: https://mydex.org/about-us/mydex-charter/ (accessed on 21 March 2025).

- Digi.me. Digi.me—Your Personal Data, Your Rules. 2025. Available online: https://digi.me/ (accessed on 11 April 2025).

- Hub of All Things. What Is the HAT. 2024. Available online: https://www.hubofallthings.com/main/what-is-the-hat (accessed on 11 April 2025).

- de Montjoye, Y.A.; Shmueli, E.; Wang, S.S.; Pentland, A.S. openPDS: Protecting the Privacy of Metadata through SafeAnswers. PLoS ONE 2014, 9, e98790.

- Pinto, G.P.; Prazeres, C. Towards data privacy in a fog of things. Internet Technol. Lett. 2024, 7, e512.

- Vescovi, M.; Moiso, C.; Pasolli, M.; Cordin, L.; Antonelli, F. Building an eco-system of trusted services via user control and transparency on personal data. IFIP Adv. Inf. Commun. Technol. 2015, 454, 240–250.

- Esteves, B. Challenges in the Digital Representation of Privacy Terms. In International Workshop on AI Approaches to the Complexity of Legal Systems; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2021; Volume 13048, pp. 313–327.

- Sun, C.; van Soest, J.; Dumontier, M. Analyze Decentralized Personal Health Data using Solid, Digital Consent, and Federated Learning. In Proceedings of the 14th International Conference on Semantic Web Applications and Tools for Health Care and Life Sciences, Basel, Switzerland, 13–16 February 2023; Volume 3415, pp. 169–170.

- Komeiha, F.; Cheniki, N.; Sam, Y.; Jaber, A.; Messai, N.; Devogele, T. Towards a Privacy Conserved and Linked Open Data Based Device Recommendation in IoT. In International Conference on Service-Oriented Computing; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer International Publishing: Cham, Switzerland, 2021; Volume 12632, pp. 32–39.

- Boi, B.; De Santis, M.; Esposito, C. A Decentralized Smart City Using Solid and Self-Sovereign Identity. In Proceedings of the Computational Science and Its Applications—ICCSA 2023 Workshops, Athens, Greece, 3–6 July 2023; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2023; Volume 4109, pp. 149–161.

| Type of PET | Privacy Threats Addressed |
|---|---|
| Control over data | Lifecycle transitions, privacy-violating interaction and presentation |
| Anonymization/Pseudonymization | Identification, profiling, linkage |
| Anonymous authorization | Identification, localization and tracking, profiling, privacy-violating interaction and presentation, linkage |
| Partial data disclosure | Identification, localization and tracking, profiling, privacy-violating interaction and presentation, linkage |
| Policy enforcement | Inventory attacks, linkage, profiling, privacy-violating interaction and presentation |
| Personal data protection | Profiling, lifecycle transitions, inventory attacks, privacy-violating interaction and presentation, linkage |
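Two of the PET types in this table, pseudonymization and partial data disclosure, can be illustrated briefly. The sketch below is an assumption-laden example rather than a recommended design: the function names and the choice of HMAC-SHA256 are ours, and a keyed pseudonym still counts as personal data because anyone holding the key can re-link it to the original identifier.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Pseudonymization: replace an identifier with a keyed hash.
    The same identifier and key always yield the same pseudonym, so
    records stay linkable for the key holder but not for outsiders."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def coarsen_location(lat: float, lon: float, decimals: int = 1) -> tuple:
    """Partial data disclosure: round coordinates so that only an
    approximate area, not an exact position, is shared."""
    return (round(lat, decimals), round(lon, decimals))


# Hypothetical key and device identifier, for illustration only.
key = b"example-key-kept-inside-the-pds"
print(pseudonymize("device-42", key))
print(coarsen_location(-12.9714, -38.5014))
```

Using a different key per recipient would give each service a different pseudonym for the same device, which limits linkage across services.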

| Platform | Architecture Type | Processing | GDPR Compliance | User Control | Data Monetization |
|---|---|---|---|---|---|
| Solid | Decentralized | Local | Yes | High | Possible |
| Mydex | Centralized | Cloud | Yes | Medium | No |
| Digi.me | Centralized | Cloud | Yes | Medium | Possible |
| HAT | Hybrid | Local/Cloud | Yes | High | Yes |
| openPDS | Hybrid | Local/Cloud | Partial | High | No |

| Criterion | Centralized Systems | PDS Solutions |
|---|---|---|
| User Control | Low: data are managed by third parties | High: users define access and usage policies |
| Privacy Risk | High: single points of failure and uncontrolled data reuse | Low: user-centric control and distributed access |
| Computational Overhead | Low: cloud-based processing at scale | Medium to High: some rely on local or distributed processing |
| Monetization Capability | Absent: user data monetized by providers | Possible: some PDS platforms support data sharing with compensation |
| Transparency | Limited: access and processing logs are not always available | Moderate to High: PDS can provide dashboards, logs, and audit tools |
| Scalability | High: mature and consolidated architectures | Variable: depends on platform design (local/cloud, centralized/decentralized) |
| User Experience | High: refined and integrated interfaces | Medium: usability remains a challenge for broader adoption |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pinto, G.P.; Prazeres, C. Data Privacy in the Internet of Things: A Perspective of Personal Data Store-Based Approaches. J. Cybersecur. Priv. 2025, 5, 25. https://doi.org/10.3390/jcp5020025