Navigating Through Human Rights in AI: Exploring the Interplay Between GDPR and Fundamental Rights Impact Assessment

Abstract

1. Introduction

1.1. Research Objectives

1.2. Research Questions

- Q1.

- What are the similarities and differences in the scopes, objectives, and methodologies of DPIA and FRIA within the realm of AI?

- Q2.

- How can we assess and merge existing methodologies for DPIA and FRIA into a cohesive framework that ensures thorough risk evaluation?

- Q3.

- What advantages and obstacles might stakeholders in the EU encounter when embracing such a unified DPIA/FRIA framework?

- Q4.

- How does the proposed framework contribute to enhancing transparency, accountability, and public confidence in AI technologies across diverse industries?

1.3. Structure of the Paper

1.4. AI Techniques

1.5. Ethical Challenges: AI and Human Rights

1.6. Legal Framework

1.6.1. General Data Protection Regulation (GDPR)

1.6.2. AI Act

- Deceptive AI Practices: AI systems exploiting vulnerabilities of particular groups (e.g., due to age or physical or mental disability) to substantially distort a person’s behavior, potentially resulting in physical or psychological harm to that individual or others;

- Government Social Scoring: Use of AI systems by governments to assess individuals’ trustworthiness over time based on their social behavior or personal traits, leading to negative outcomes for specific individuals or groups in social settings unrelated to the context in which the data were originally collected;

- Real-time Biometric Identification Systems for Law Enforcement: The use of AI systems to identify individuals remotely through biometric data in public spaces is generally prohibited, except in certain cases (such as searching for crime victims, preventing a specific and imminent terrorist threat, or identifying and prosecuting a criminal offender or suspect).

- Unacceptable Risk: AI systems that threaten safety, well-being, and rights are prohibited in the EU. This includes AI exploiting vulnerabilities in specific groups (e.g., age and disabilities) to alter behavior harmfully and government social scoring systems.

- High-Risk AI Systems (HRAIS): These systems, used in sectors such as transportation, education, employment, public services, law enforcement, and justice, must meet strict regulatory requirements for safety and transparency before they are placed on the market.

- Limited Risk: AI systems with limited risk must meet transparency requirements, e.g., chatbots must disclose their AI nature to users.

- Minimal Risk: These systems, such as AI-powered video games or spam filters, do not require additional legal obligations under the AI Act, as existing laws address their risks adequately.

The Notion of the Fundamental Rights Impact Assessment (FRIA)

1.6.3. A Comparison Between DPIA and FRIA

2. Materials and Methods

2.1. Previous Work on DPIA

DPIA Methodologies

- UK Information Commissioner’s Office (ICO) DPIA Guidance and Template [40]: The ICO provides comprehensive guidance and a template for conducting DPIAs. The template simplifies documentation but may not fully accommodate the intricacies of diverse processing activities, so complex scenarios may require additional resources.

- International Association of Privacy Professionals (IAPP) DPIA Tool [41]: The IAPP offers resources and tools for privacy professionals, including a DPIA tool designed to streamline the process of conducting impact assessments. This tool typically includes checklists and questionnaires to help identify and assess privacy risks associated with data processing activities. With its practical checklists and a supportive community of privacy professionals, it offers a flexible approach to DPIAs. However, some IAPP resources are available only to members.

- French Data Protection Authority (CNIL) DPIA Tool [42]: The CNIL provides an open-source DPIA tool and methodology to guide organizations through the assessment process. The tool is designed to help identify processing operations that require a DPIA, evaluate the risks to the rights and freedoms of natural persons, and determine measures to mitigate those risks, although its emphasis is on security risks. The CNIL’s approach includes detailed guidance on when a DPIA is required, how to carry it out, and what elements it should contain. The CNIL also provides templates and supporting documents that make the DPIA process more accessible and manageable for organizations of all sizes.

2.2. Previous Work on FRIA

FRIA Methodologies

- The AIA tool aims to guide federal institutions in evaluating the impact of using an automated decision system. It helps identify potential risks to privacy, human rights, and transparency, and proposes measures to mitigate these risks before these systems are deployed.

- The AIA consists of a questionnaire that assesses the design, use, and deployment of an algorithmic system. Based on the responses, it generates a risk score that indicates the level of impact the system may have on individuals or society. This score helps determine the level of oversight and measures needed to mitigate potential risks.

- The AIA tool and the broader framework for the responsible use of AI in government are subject to ongoing review and improvement. This iterative approach allows the government to adapt to new challenges and developments in AI technology, ensuring that its use remains aligned with public values and legal obligations.
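The questionnaire-to-score step described above can be sketched in a few lines of Python. This is a minimal illustration, not the AIA's actual scoring logic: the `Answer` structure, the 15% mitigation discount, the 80% mitigation threshold and the four equal-width impact bands are all hypothetical parameters chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    """One questionnaire response (hypothetical structure)."""
    points: int      # points awarded for the selected response
    max_points: int  # highest number of points the question can award


def impact_level(risk, mitigation, discount=0.15, mitigation_threshold=0.8):
    """Return (score, level) for a completed questionnaire.

    `discount`, `mitigation_threshold` and the level boundaries are
    illustrative assumptions, not official AIA parameters.
    """
    raw = sum(a.points for a in risk)
    raw_max = sum(a.max_points for a in risk)
    mit = sum(a.points for a in mitigation)
    mit_max = sum(a.max_points for a in mitigation)

    score = float(raw)
    # Strong mitigation lowers the raw risk score by a fixed percentage.
    if mit_max > 0 and mit / mit_max >= mitigation_threshold:
        score = raw * (1 - discount)

    share = score / raw_max if raw_max > 0 else 0.0
    # Map the share of the maximum possible score onto four impact levels.
    for level, upper in enumerate((0.25, 0.50, 0.75), start=1):
        if share <= upper:
            return score, level
    return score, 4


if __name__ == "__main__":
    risk = [Answer(3, 4), Answer(2, 3), Answer(1, 3)]  # 6 of 10 risk points
    mitigation = [Answer(5, 5), Answer(4, 5)]          # 9 of 10 mitigation points
    score, level = impact_level(risk, mitigation)
    print(f"score={score:.2f}, impact level={level}")  # score=5.10, impact level=3
```

Keeping the thresholds as parameters makes it easy to see how sensitive the resulting impact level is to choices that, in a real tool, would be fixed by the responsible authority.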

2.3. The Proposed DPIA—FRIA Framework

3. Results

3.1. Components of the New DPIA—FRIA Framework

3.1.1. The DPIA Part of the Framework

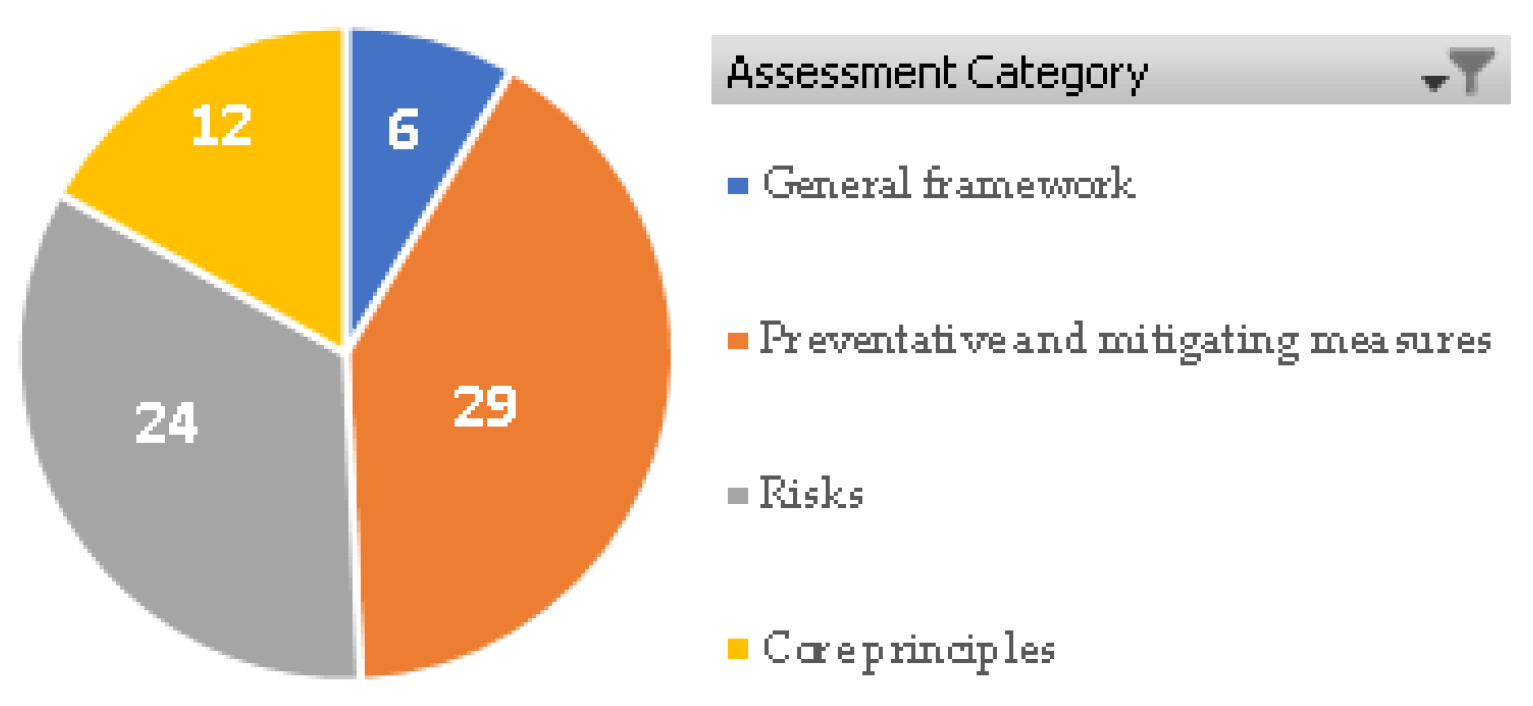

Assessment Category 1: General Framework

- Overview

- 1.

- What is the processing under consideration? (D)

- 2.

- What are the responsibilities linked to data processing? Describe the responsibilities of the data controller and processor. (D), →FRIA: Q10, Q13

- 3.

- Are there standards applicable to the processing? List all relevant standards and references governing the processing that are mandatory for compliance. (D)

- Data, Procedures, and Support Elements

- 4.

- What types of personal data are processed? (D), ←FRIA: Q34

- 5.

- What is the operational lifecycle for data and processes? (D)

- 6.

- What are the supporting elements for the data? (D)

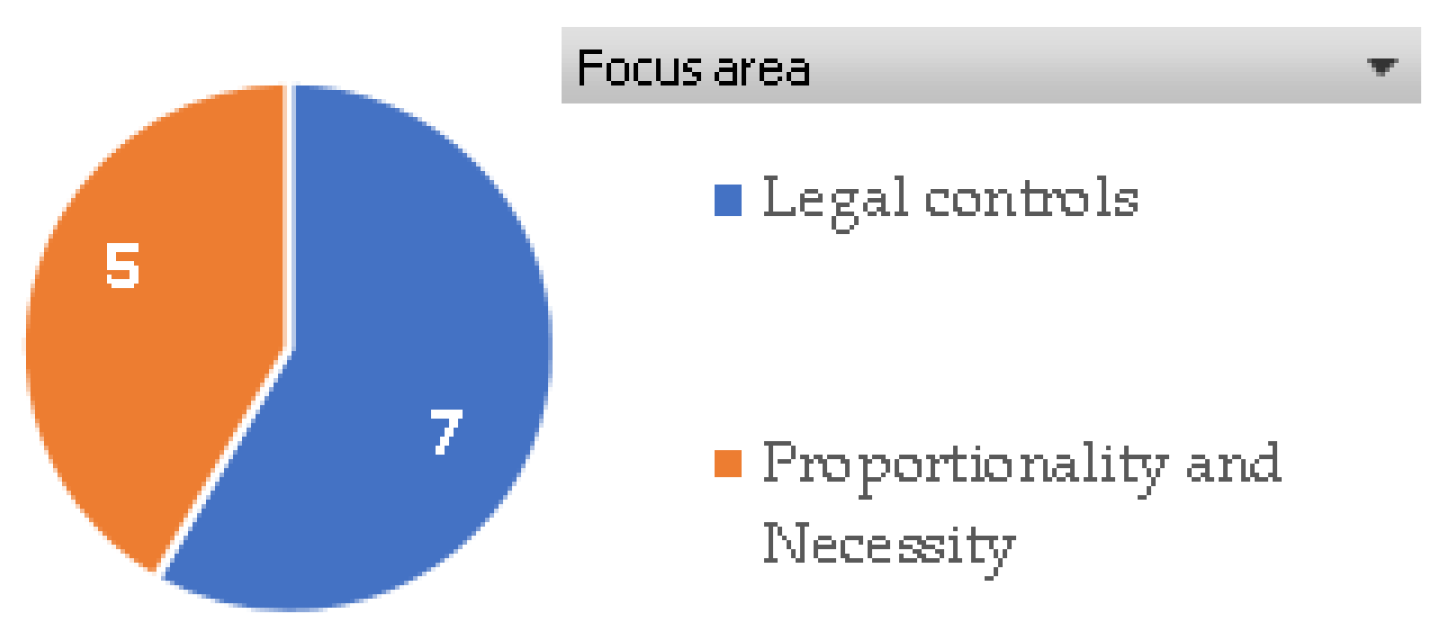

Assessment Category 2: Core Principles

- Proportionality and Necessity

- 7.

- Are the goals of the processing clear, explicit, and lawful? (D)

- 8.

- What legal foundation justifies the processing? (D), →FRIA: Q123

- 9.

- Do you apply the principle of data minimization? (D), ←FRIA: Q35

- 10.

- Are the data adequate and up-to-date? (D), ← FRIA: Q18

- 11.

- What is the duration of data storage? (D)

- Legal controls

- 12.

- By what means are data subjects notified about the processing? (D), ← FRIA: Q106–Q108

- 13.

- If applicable, how do you obtain the data subjects’ consent? (D)

- 14.

- In what ways can data subjects exercise their rights to access and data portability? (D), →FRIA:Q124.

- 15.

- By what means can data subjects request correction or erasure of their personal data? (D), → FRIA:Q124.

- 16.

- By what means can data subjects exercise their rights to restriction and objection? (D), →FRIA:Q124.

- 17.

- Are the responsibilities of the processing personnel clearly specified and contractually governed? (D), ← FRIA: Q52.

- 18.

- When transferring data outside the EU, are the data protection measures sufficient? (D)
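The arrows after each question (for example, DPIA Q2 "→FRIA: Q10, Q13" and FRIA Q10 "← DPIA: Q2") can be read as directed links between the two questionnaires. Assuming that "→" means the answer feeds the other questionnaire's question and "←" means the question draws on it (our reading of the notation), a short script can check that every link declared on one side is also declared on the other. The sketch encodes the links attached to DPIA Q1 to Q18 and their FRIA counterparts; the "D"/"F" prefixes are a hypothetical encoding.

```python
def edges(annotations):
    """Turn (question, arrow, other_question) annotations into directed edges.

    Assumed reading: "->" means the question's answer feeds `other_question`;
    "<-" means the question draws on `other_question`.
    """
    result = set()
    for question, arrow, other in annotations:
        if arrow == "->":
            result.add((question, other))
        elif arrow == "<-":
            result.add((other, question))
        else:
            raise ValueError(f"unknown arrow {arrow!r}")
    return result


# Links printed next to DPIA Q1-Q18 ("D" = DPIA, "F" = FRIA; hypothetical encoding).
DPIA_SIDE = [
    ("D2", "->", "F10"), ("D2", "->", "F13"), ("D4", "<-", "F34"),
    ("D8", "->", "F123"), ("D9", "<-", "F35"), ("D10", "<-", "F18"),
    ("D12", "<-", "F106"), ("D12", "<-", "F107"), ("D12", "<-", "F108"),
    ("D14", "->", "F124"), ("D15", "->", "F124"), ("D16", "->", "F124"),
    ("D17", "<-", "F52"),
]

# The matching links as printed next to the FRIA questions.
FRIA_SIDE = [
    ("F10", "<-", "D2"), ("F13", "<-", "D2"), ("F34", "->", "D4"),
    ("F123", "<-", "D8"), ("F35", "->", "D9"), ("F18", "->", "D10"),
    ("F106", "->", "D12"), ("F107", "->", "D12"), ("F108", "->", "D12"),
    ("F124", "<-", "D14"), ("F124", "<-", "D15"), ("F124", "<-", "D16"),
    ("F52", "->", "D17"),
]


def unmatched_links(side_a, side_b):
    """Edges declared on only one side; an empty set means the links are reciprocal."""
    return edges(side_a) ^ edges(side_b)


if __name__ == "__main__":
    print(unmatched_links(DPIA_SIDE, FRIA_SIDE))  # set()
```

Extending the two lists to all questions would flag any link that was added to one questionnaire but not mirrored in the other.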

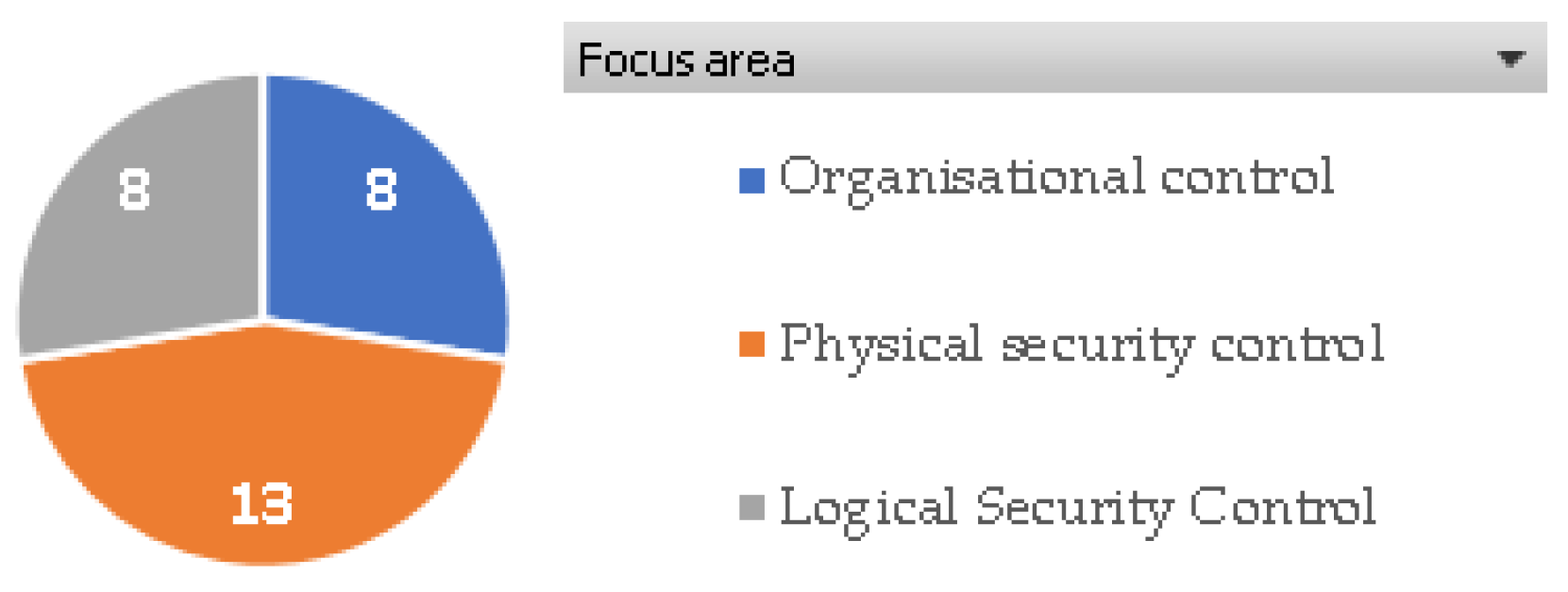

Assessment Category 3: Preventative and Mitigating Measures

- Organizational control

- 19.

- Is there a designated individual ensuring compliance with privacy regulations? Is there a dedicated oversight body (or equivalent) offering guidance and monitoring actions related to data protection? (D)

- 20.

- Have you implemented a data protection policy? (D), ←FRIA: Q28

- 21.

- Have you implemented a risk management policy? (D), ←FRIA: Q28

- 22.

- Have you implemented a policy for integrating privacy protection in all new projects? (D), ←FRIA: Q28

- 23.

- Is there an operational entity capable of identifying and addressing incidents that could impact data subjects’ privacy and civil liberties? (D), ←FRIA: Q28

- 24.

- Do you have a policy in place that outlines the procedures for educating new employees about data protection, and the measures taken to secure data when someone with data access leaves the company? (D), ←FRIA:Q28,Q84

- 25.

- Are there policies and procedures in place to minimize the risk to data subjects’ privacy and rights when third parties have legitimate access to their personal data? (D), ←FRIA: Q28

- 26.

- Does your organization have policies and procedures in place to ensure the management and control of the personal data it holds? (D), ←FRIA: Q28, Q115

- Physical security control

- 27.

- Have measures been put in place to minimize the likelihood and impact of risks affecting assets that handle personal data? (D), ←FRIA: Q28

- 28.

- Have protective measures been established on workstations and servers to defend against malicious software when accessing untrusted networks? (D), ←FRIA: Q28

- 29.

- Have safeguards been put in place on workstations to mitigate the risk of software exploitation? (D), ←FRIA: Q28

- 30.

- Have you implemented a backup policy? (D), ←FRIA: Q28

- 31.

- Have you implemented the recommendations of ANSSI (the French national cybersecurity agency) for securing websites? (D)

- 32.

- Have you implemented policies for the physical maintenance of hardware? (D), ←FRIA: Q28

- 33.

- How do you ensure and document that your subcontractors provide sufficient guarantees in terms of data protection, particularly regarding data encryption, secure data transmission, and incident management, as required for a comprehensive DPIA? (D), ←FRIA: Q28, Q52

- 34.

- How do you ensure network security? (D), ←FRIA: Q28, Q51

- 35.

- Have you implemented policies for physical security? (D), ←FRIA: Q28

- 36.

- How do you monitor network activity? (D), ←FRIA: Q28

- 37.

- Which controls have you implemented for the physical security of servers and workstations? (D), ←FRIA: Q28

- 38.

- How do you assess and document environmental risk factors, such as susceptibility to natural disasters and proximity to hazardous materials, at the chosen project site to ensure safety and compliance with regulatory requirements? (D), ←FRIA: Q28

- 39.

- Have you implemented processes for protection from non-human risk sources? (D), ←FRIA: Q28

- Logical Security Control

- 40.

- Have adequate measures been established to maintain the confidentiality of stored data along with a structured process for handling encryption keys? (D), ←FRIA: Q28

- 41.

- Are there anonymization measures in place? If so, what methods are used, and what is their intended purpose? (D), ←FRIA: Q28, Q33, Q53

- 42.

- Is data partitioning carried out or planned? (D), ←FRIA: Q28

- 43.

- Have you implemented authentication mechanisms? If so, which ones, and what rules apply to passwords? (D), ←FRIA: Q31

- 44.

- Have you implemented policies that define traceability and log management? (D), ←FRIA: Q28, Q32

- 45.

- What are the archive management procedures under your responsibility, including delivery, storage, and access? (D), ←FRIA: Q28, Q30

- 46.

- If paper documents containing data are used in the processing, what procedures are followed for printing, storage, disposal, and exchange? (D), ←FRIA: Q28

- 47.

- Which methods have you implemented for personal data minimization? (D), ←FRIA: Q35, Q36
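Questions 41 and 47 ask about anonymization and minimization measures. One common building block is keyed hashing of direct identifiers, sketched below with Python's standard `hmac` module. Note that this is pseudonymization rather than anonymization in the GDPR sense: whoever holds the key can re-link records, so the output remains personal data. The record layout and field names are hypothetical.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same identifier and key always give the same pseudonym, so records
    can still be linked; without the key the mapping cannot be recomputed.
    """
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def pseudonymize_records(records, fields, key):
    """Return copies of the records with the listed fields pseudonymized."""
    out = []
    for record in records:
        copy = dict(record)  # leave the caller's records untouched
        for name in fields:
            if name in copy:
                copy[name] = pseudonymize(str(copy[name]), key)
        out.append(copy)
    return out


if __name__ == "__main__":
    # The key must be stored separately from the data, e.g. in a key vault.
    key = secrets.token_bytes(32)
    rows = [{"email": "alice@example.org", "age": 34}]  # hypothetical record
    print(pseudonymize_records(rows, ["email"], key))
```

Because the pseudonym is deterministic for a given key, rotating or destroying the key is what ultimately controls re-identification, which ties this measure to the key-management question in Q40.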

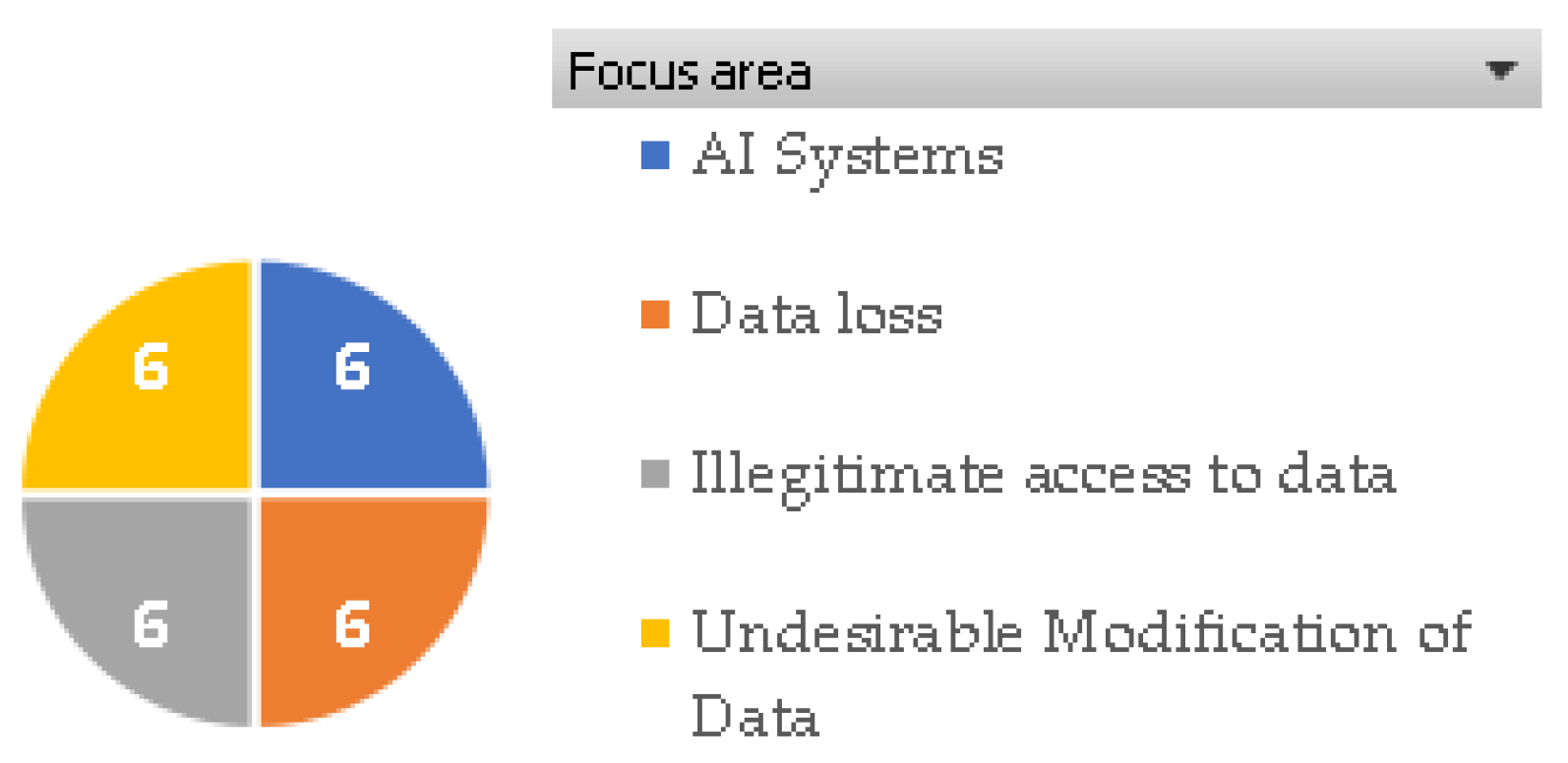

Assessment Category 4: Risks

- 66.

- What would be the primary impact on data subjects if the risk materialized? (D), ← FRIA:Q8, Q69–Q82, Q122–Q128

- 67.

- What are the primary threats that could cause this risk to materialize? (D), ← FRIA: Q9–Q16, Q18–Q37, Q44, Q48–Q50, Q52, Q64

- 68.

- What are the risk sources? (D), ← FRIA: Q9–Q37, Q44, Q48–Q53, Q56, Q59–Q68, Q83–Q146

- 69.

- Which of the implemented or proposed controls help mitigate the risk? (D), ← FRIA: Q9–Q37, Q44, Q48–Q53, Q59–Q68, Q83–Q121, Q129–Q146

- 70.

- How is the level of risk determined, considering both its potential consequences and the effectiveness of existing mitigation measures? (D)

- 71.

- How is the probability of the risk assessed, considering potential threats, risk origins, and mitigation measures? (D)
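Questions 70 and 71 ask how the severity and the likelihood of a risk are estimated once controls are taken into account. The sketch below rates both on the four-level scale used in the CNIL PIA methodology (negligible, limited, significant, maximum); the rule that multiplies the two levels, the rating thresholds, and the idea of crediting a control with a one-step reduction are simplifying assumptions for illustration only.

```python
from enum import IntEnum


class Level(IntEnum):
    """Four-level scale used for both severity and likelihood."""
    NEGLIGIBLE = 1
    LIMITED = 2
    SIGNIFICANT = 3
    MAXIMUM = 4


def residual(level, steps):
    """Lower a level by the number of steps credited to mitigating controls (assumption)."""
    return Level(max(int(Level.NEGLIGIBLE), int(level) - steps))


def risk_rating(severity, likelihood):
    """Combine severity and likelihood into a coarse rating (illustrative thresholds)."""
    score = int(severity) * int(likelihood)  # ranges from 1 to 16
    if score >= 9:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


if __name__ == "__main__":
    severity, likelihood = Level.SIGNIFICANT, Level.SIGNIFICANT
    print(risk_rating(severity, likelihood))  # high
    # Suppose the implemented controls are credited with one step of likelihood reduction.
    print(risk_rating(severity, residual(likelihood, 1)))  # medium
```

Separating the inherent levels from the residual ones mirrors the distinction in Q69 between identifying controls and judging how much they actually reduce the risk.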

3.1.2. The FRIA Part of the Framework

Assessment Category 1: Purpose Assessment

- Rationale and problem statement

- 1.

- Describe your plan for implementing the AI system. What specific issue is it designed to address? What is the underlying motivation or trigger for its use? (D)

- Objective

- 2.

- What goal is the AI system expected to accomplish? What is its main purpose, and what additional goals does it aim to fulfill? (D)

- 3.

- What requirements does the Deployer have, and how will the system effectively satisfy them? (P/D)

- 4.

- How effectively is the system likely to meet the Deployer’s needs? (P)

- 5.

- Have alternative non-automated processes been considered? (D)

- 6.

- What impact would result from not implementing the system? (D)

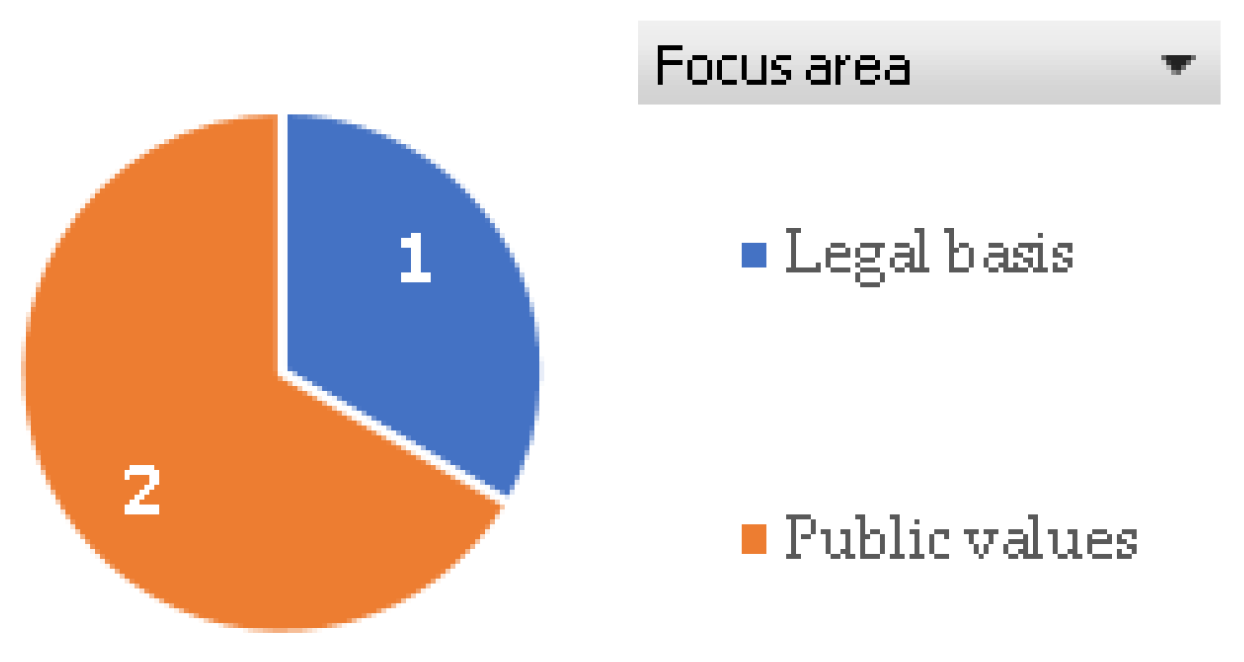

Assessment Category 2: Public Values and Legal Basis

- Public values

- 7.

- What societal principles justify the implementation of the system? If multiple values drive its adoption, can they be prioritized? (D)

- 8.

- Which societal values could be negatively affected by the implementation of the system? (D), →DPIA Q66

- Legal basis

- 9.

- On what legal grounds is the system’s implementation based, and what legitimizes the decisions it facilitates? (D), → DPIA: Q67 or Q68 or Q69, depending on the answer.

Assessment Category 3: Stakeholders Engagement and Responsibilities

- Involved Parties and Roles:

- 10.

- Which organizations and individuals contribute to the system’s creation, deployment, operation, and maintenance? Have clear responsibilities been assigned? (P/D), ← DPIA: Q2, → DPIA: Q67 or Q68 or Q69, depending on the answer.

- 11.

- Have the duties related to the system’s development and implementation been clearly defined? What measures ensure that these responsibilities remain transparent after deployment and during its operational phase? (P/D), → DPIA: Q67 or Q68 or Q69, depending on the answer.

- 12.

- Who is ultimately responsible for the AI system? (P/D), → DPIA: Q67 or Q68 or Q69, depending on the answer.

- 13.

- Which internal and external stakeholders will be engaged during consultations? (P/D), ← DPIA: Q2, → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 14.

- If the system was created by a third party, have definitive arrangements been made concerning its ownership and governance? What do these arrangements specify? (D), → DPIA: Q67 or Q68 or Q69, depending on the answer.

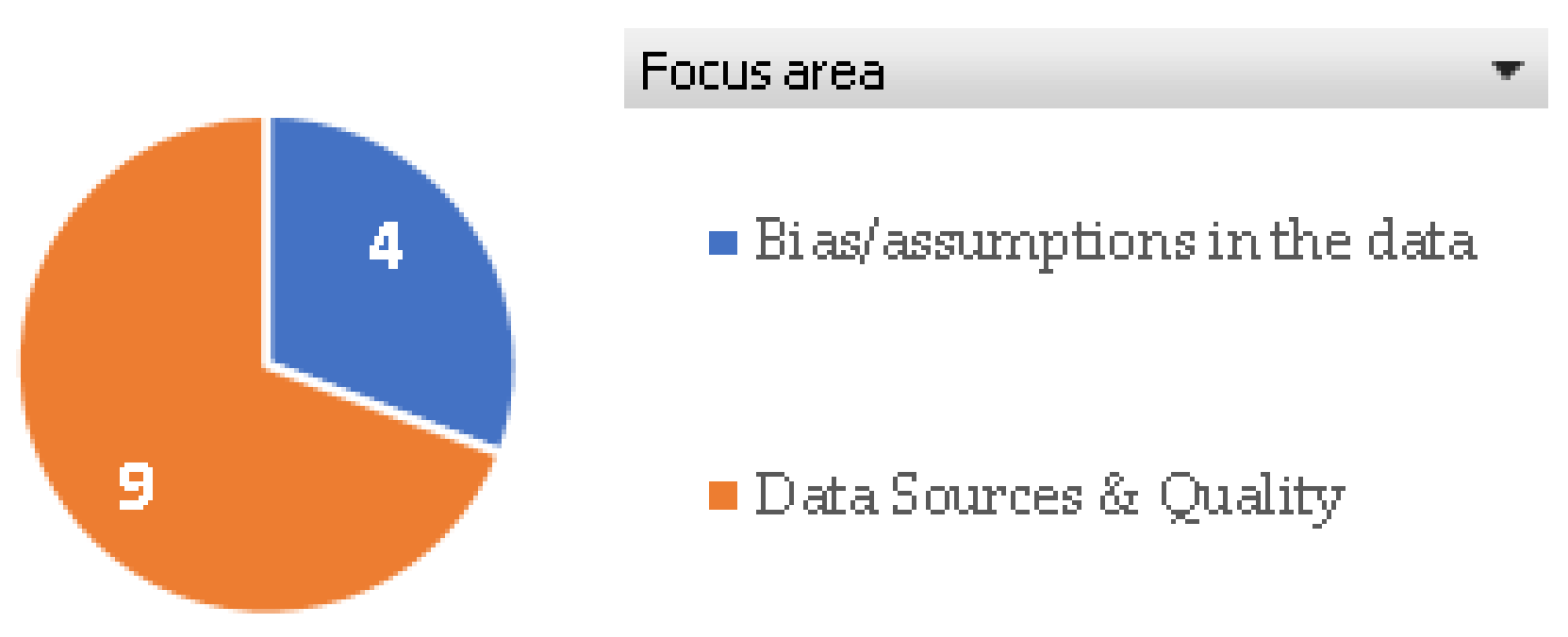

Assessment Category 4: Data Sources and Quality

- Data sources and quality

- 15.

- What categories of data will be used as input for the system, and what are their sources? What is the method of collection? (D), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 16.

- Does the data possess the necessary accuracy and trustworthiness for its intended application? (P/D), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 17.

- Are you planning to establish a process for recording how data quality issues were addressed during the design phase? Will this information be made publicly accessible? (P), → DPIA: Q68 or Q69 depending on the answer.

- 18.

- Will you establish a documented procedure to mitigate the risk of outdated or inaccurate data influencing automated decisions? Do you plan to make this information publicly accessible? (P/D), → DPIA: Q10 and Q67 or Q68 or Q69 depending on the answer

- 19.

- Who controls the data? (D), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 20.

- Will the system integrate data from various sources? (D), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 21.

- Who was responsible for gathering the data used to train the system? (P), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 22.

- Who was responsible for gathering the input data utilized by the system? (D), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 23.

- Does the system rely on analyzing unstructured data to produce recommendations or decisions? If so, what specific types of unstructured data are involved? (D), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- Bias/assumptions in the data

- 24.

- What underlying presumptions and biases are present in the data? What measures are in place to detect, counteract, or reduce their impact on the system’s outcomes? (P), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 25.

- If the system relies on training data, does it appropriately represent the environment in which it will be deployed? (P), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 26.

- Will there be formal procedures in place to assess datasets for potential biases and unintended consequences? (P), → DPIA: Q67 or Q68 or Q69 depending on the answer.

- 27.

- Will you undertake a Gender-Based Analysis Plus (GBA Plus) of the data? Will you make this information publicly available? (P), → DPIA: Q67 or Q68 or Q69 depending on the answer.
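Questions 24 to 26 ask whether the training data represent the deployment environment and how biases are detected. A simple first check compares each group's share in the training data with its share in the population the system will serve; if gaps appear, reweighting (a technique chosen here for illustration, alongside alternatives such as resampling) can compensate for groups that are present but under- or over-represented. The group labels and population shares are hypothetical.

```python
from collections import Counter


def group_shares(labels):
    """Share of each group label in a list of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def representation_gaps(train_labels, population_shares):
    """Training share minus deployment-population share, per group.

    Positive values mean over-representation, negative values under-representation.
    """
    train = group_shares(train_labels)
    return {g: train.get(g, 0.0) - p for g, p in population_shares.items()}


def reweighting_factors(train_labels, population_shares):
    """Per-group sample weights so the weighted training data match the population shares.

    Groups absent from the training data cannot be fixed by reweighting; they are
    omitted here and show up as negative gaps in representation_gaps instead.
    """
    train = group_shares(train_labels)
    return {g: population_shares[g] / train[g] for g in train if g in population_shares}


if __name__ == "__main__":
    train = ["urban"] * 80 + ["rural"] * 20   # hypothetical training labels
    population = {"urban": 0.6, "rural": 0.4}  # hypothetical deployment shares
    print(representation_gaps(train, population))
    print(reweighting_factors(train, population))
```

Share comparisons only cover attributes that are recorded; biases in labels or proxies for protected characteristics need the separate assessment asked for in Q24.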

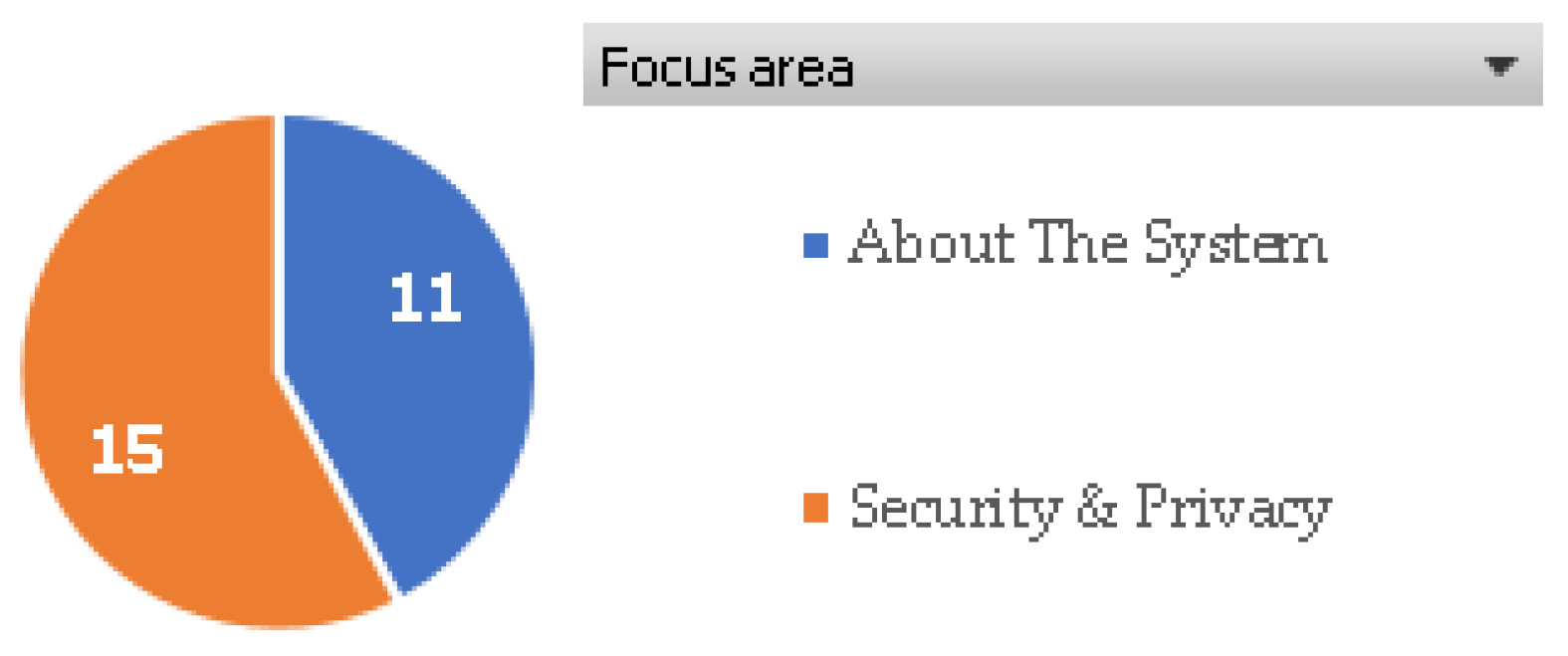

Assessment Category 5: System Specifications

- Security and privacy

- 28.

- Is the data within the system adequately protected? Consider security measures separately for both input and output data. (P/D), →DPIA: Q20–Q30, Q32–Q42, Q44–Q46, Q67, or Q68, or Q69, depending on the answer.

- 29.

- Is access to the system data supervised? (P/D), →DPIA: Q67 or Q68 or Q69 depending on the answer.

- 30.

- Does the system adhere to applicable regulations regarding data archiving? (P/D), → DPIA: Q45, Q67 or Q68 or Q69 depending on the answer.

- 31.

- Have proper controls been established to regulate access for authorized users or groups within the system? Will a procedure be implemented to authorize, monitor, and withdraw access privileges? (P/D), → DPIA: Q43, Q67 or Q68 or Q69 depending on the answer.

- 32.

- Is a logging system in place to track data access and usage, ensuring threat detection, prevention, and investigation within the organization? (P/D), → DPIA: Q44, Q67, or Q68, or Q69, depending on the answer.

- 33.

- Have adequate safeguards been implemented to protect the identities of individuals in the system data, for example through anonymization or pseudonymization of personal information? (P/D), → DPIA: Q41, Q67, or Q68, or Q69, depending on the answer.

- 34.

- Will the Automated Decision System rely on personal data for its input? (D), → DPIA:Q4, Q67 or Q68 or Q69 depending on the answer.

- 35.

- Have you confirmed that personal data are used solely for purposes directly linked to the delivery of the program or service? (D), → DPIA: Q9, Q47, Q67, or Q68, or Q69, depending on the answer.

- 36.

- Does the decision-making process utilize individuals’ personal data in a way that directly impacts them? (D), → DPIA: Q47, Q67 or Q68, or Q69, depending on the answer.

- 37.

- Have you ensured that the system’s use of personal data aligns with: (a) the existing Personal Information Banks (PIBs) and Privacy Impact Assessments (PIAs) for your programs, or (b) any planned or implemented updates to PIBs or PIAs that reflect new uses and processing methods? (P/D), →DPIA: Q67 or Q68, or Q69, depending on the answer.

- About The System

- 38.

- Does the system support image and object recognition? (P)

- 39.

- Does the system support text and speech analysis? (P)

- 40.

- Does the system support risk assessment? (P)

- 41.

- Does the system support content generation? (P)

- 42.

- Does the system support process optimization and workflow automation? (P)

- 43.

- Do you plan for the system to be fully or partially automated? Please explain how the system contributes to the decision-making process. (D)

- 44.

- Will the system be responsible for making decisions or evaluations that require subjective judgment or discretion? (D), → DPIA: Q67 or Q68, or Q69, depending on the answer.

- 45.

- Can you explain the standards used to assess citizens’ data and the procedures implemented to process it? (D)

- 46.

- Can you outline the system’s output and any essential details required to understand it in relation to the administrative decision-making process? (D)

- 47.

- Will the system carry out an evaluation or task that would typically require human involvement? (D)

- 48.

- Is the system utilized by a department other than the one responsible for its development? (P/D), →DPIA: Q67 or Q68 or Q69, depending on the answer.

- 49.

- If your system processes or generates personal information, will you perform or have you already performed a Privacy Impact Assessment, or revised a previous one? (P/D), →DPIA: Q67 or Q68 or Q69, depending on the answer.

- 50.

- Will you integrate security and privacy considerations into the design and development of your systems from the initial project phase? (P/D), →DPIA: Q67 or Q68 or Q69, depending on the answer.

- 51.

- Will the information be used within a standalone system with no connections to the Internet, intranet, or any other external systems? (P/D), →DPIA: Q34, Q68, or Q69, depending on the answer.

- 52.

- If personal information is being shared, have appropriate safeguards and agreements been implemented? (P/D), →DPIA: Q17, Q33, Q67, or Q68, or Q69, depending on the answer.

- 53.

- Will the system remove personal identifiers at any point during its lifecycle? (P/D), →DPIA: Q41, Q68, or Q69, depending on the answer.
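Questions 29, 31 and 32 ask whether access to system data is supervised, whether privileges can be authorized, monitored and withdrawn, and whether access is logged. A minimal in-memory sketch of such a registry follows; a production system would rely on the organization's identity and access management infrastructure, and the user, actor and permission names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AccessRegistry:
    """Tracks who may access which system data and logs every access decision."""
    grants: dict = field(default_factory=dict)  # user -> set of permissions
    log: list = field(default_factory=list)     # (UTC timestamp, actor, action, user, permission)

    def _record(self, actor, action, user, permission):
        self.log.append((datetime.now(timezone.utc).isoformat(), actor, action, user, permission))

    def grant(self, actor, user, permission):
        """Authorize `user` for `permission`; `actor` is whoever approved it."""
        self.grants.setdefault(user, set()).add(permission)
        self._record(actor, "grant", user, permission)

    def revoke(self, actor, user, permission):
        """Withdraw a permission; revoking one that was never granted is a no-op but is still logged."""
        self.grants.get(user, set()).discard(permission)
        self._record(actor, "revoke", user, permission)

    def check(self, user, permission):
        """Return whether access is allowed and log the attempt for monitoring."""
        allowed = permission in self.grants.get(user, set())
        self._record(user, "allow" if allowed else "deny", user, permission)
        return allowed


if __name__ == "__main__":
    reg = AccessRegistry()
    reg.grant("dpo", "analyst1", "read:case_files")
    print(reg.check("analyst1", "read:case_files"))  # True
    reg.revoke("dpo", "analyst1", "read:case_files")
    print(reg.check("analyst1", "read:case_files"))  # False
```

Logging denied attempts as well as granted ones is what makes the log useful for the threat detection and investigation mentioned in Q32.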

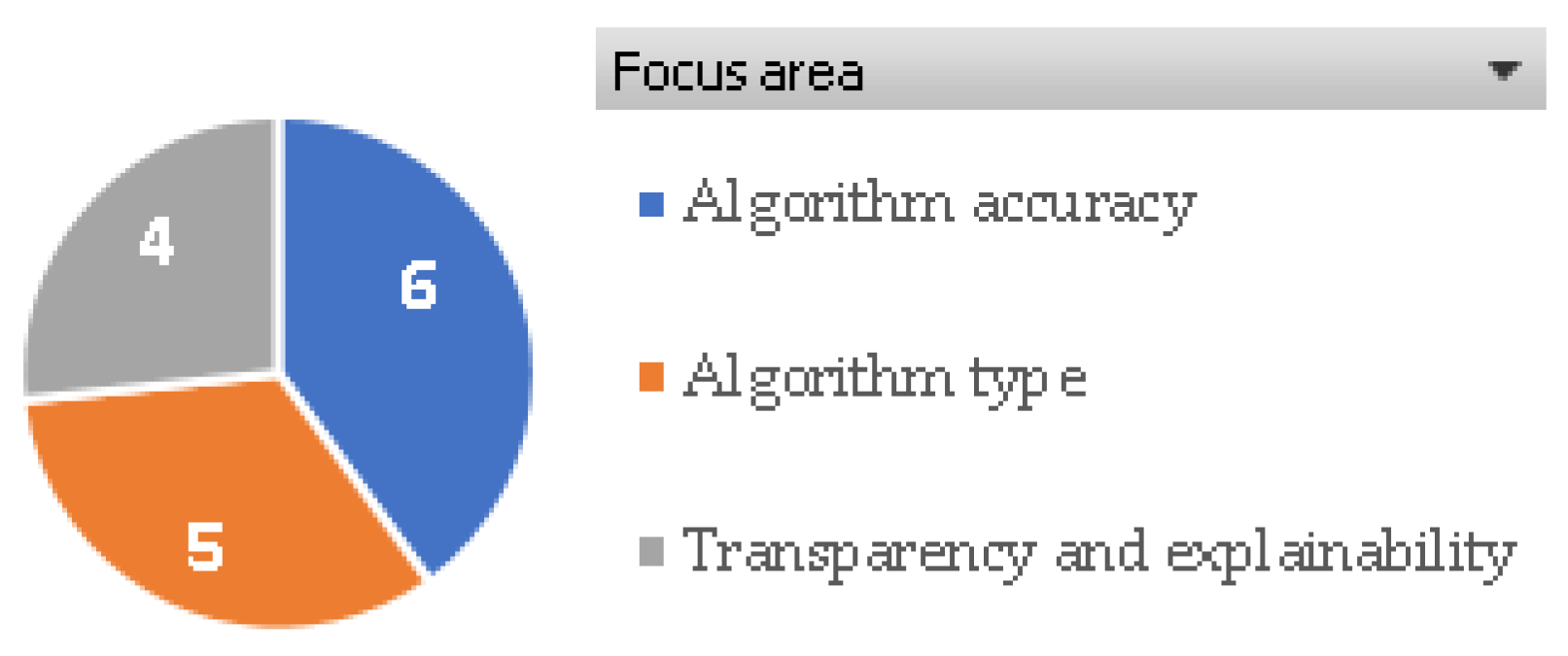

Assessment Category 6: Algorithm Specifications

- Algorithm type:

- 54.

- What type of algorithm will be used (self-learning or non-self-learning)? (P)

- 55.

- Why was this type of algorithm chosen? (P)

- 56.

- Will the algorithm have any of the following traits: a) being considered a proprietary or confidential process, or b) being complex or challenging to interpret or clarify? (P), →DPIA: Q68

- 57.

- What makes this algorithm the most appropriate choice for meeting the goals outlined in Question 2? (D)

- 58.

- What other options exist, and why are they less suitable or effective? (D)

- Algorithm accuracy:

- 59.

- How accurate is the algorithm, and what evaluation criteria are used to determine its accuracy? (P), →DPIA: Q68 or Q69, depending on the answer.

- 60.

- Does the algorithm’s accuracy meet the standards required for its intended application? (D), →DPIA: Q68 or Q69, depending on the answer.

- 61.

- How is the algorithm tested? (D/P), →DPIA: Q68 or Q69, depending on the answer.

- 62.

- What steps can be implemented to prevent the replication or intensification of biases (e.g., different sampling strategy, feature modification, etc.)? (P), →DPIA: Q68 or Q69, depending on the answer.

- 63.

- What underlying assumptions were made in choosing and weighting the indicators? Are these assumptions valid? Why or why not? (P), →DPIA: Q68 or Q69, depending on the answer.

- 64.

- What is the error rate of the algorithm? For example, how many false positives or false negatives occur, or what is the R-squared value? (D/P), →DPIA: Q67, Q68, or Q69, depending on the answer.

- Transparency and explainability:

- 65.

- Is it clear what the algorithm does, how it does this, and on what basis (what data) it does this? (D/P), →DPIA: Q68 or Q69, depending on the answer.

- 66.

- Which individuals or groups, both internal and external, will be informed about how the algorithm operates, and what methods will be used to achieve this transparency? (D/P), →DPIA: Q68 or Q69, depending on the answer.

- 67.

- For which target groups must the algorithm be explainable? (D/P), →DPIA: Q68 or Q69, depending on the answer.

- 68.

- Can the algorithm’s operation be described clearly enough for the target groups mentioned in Question 67? (D/P), →DPIA: Q68 or Q69, depending on the answer.
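Questions 59 to 64 ask for the algorithm's accuracy and error rate, naming false positives, false negatives and R-squared as examples. The helper functions below compute these quantities from true and predicted values using only the standard library; the example data are invented. Computing the same metrics separately for each population group is one way to gather evidence for Question 62 on the replication of bias.

```python
def classification_errors(y_true, y_pred):
    """False positives/negatives and error rates for binary labels (0 or 1, 1 = positive)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = len(pairs) - tp - fp - fn  # valid because labels are restricted to 0/1
    return {
        "false_positives": fp,
        "false_negatives": fn,
        "error_rate": (fp + fn) / len(pairs),
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


def r_squared(y_true, y_pred):
    """Coefficient of determination for a regression model."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R-squared is undefined when all true values are equal")
    return 1 - ss_res / ss_tot


if __name__ == "__main__":
    print(classification_errors([1, 0, 1, 1, 0, 0], [1, 1, 0, 1, 0, 0]))
    print(r_squared([1, 2, 3, 4], [1, 2, 3, 5]))
```

Reporting false positive and false negative rates separately matters because, depending on the decision, one kind of error may be far more harmful to individuals than the other.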

Assessment Category 7: Effects of the AI System

- Impact Assessment

- 69.

- What impact will the AI system have on citizens, and how will the “human measure” factor into decision-making? (D), →DPIA: Q66

- 70.

- What risks might arise, such as stigmatization, discrimination, or other harmful outcomes, and what measures will be taken to address or reduce them? (D), →DPIA: Q66

- 71.

- How will the anticipated outcomes help resolve the original issue that led to the AI’s creation and deployment, and how will they support achieving the intended goals? (D), →DPIA: Q66

- 72.

- In what ways do the anticipated effects align with the underlying values, and how will the potential for undermining those values be managed? (D), →DPIA: Q66

- 73.

- Is the project in a field that attracts significant public attention (e.g., privacy concerns) or that is frequently challenged through legal action? (D), →DPIA: Q66

- 74.

- Are citizens in this line of business particularly vulnerable? (D), →DPIA: Q66

- 75.

- Are the stakes of the decisions very high? (D), →DPIA: Q66

- 76.

- Will this project significantly affect staff levels or the nature of their responsibilities? (D), →DPIA: Q66

- 77.

- Will the implementation of the system introduce new obstacles or intensify existing challenges for individuals with disabilities? (D), →DPIA: Q66

- 78.

- Does the project require obtaining new policy authority? (D), →DPIA: Q66

- 79.

- Are the consequences of the decision reversible? (D), →DPIA: Q66

- 80.

- How long will the impact from the decision last? (D), →DPIA: Q66

Assessment Category 8: Procedural Fairness and Governance

- Decision process and human role

- 81.

- What is done with the AI system’s results, and what decisions rely on these outcomes? (D), → DPIA: Q66

- 82.

- Does the decision pertain to any of the following categories: health-related services, economic interests, social assistance, access and mobility, licensing and issuance of permits, and employment? (D), → DPIA: Q66

- 83.

- What is the human role in decisions made using the system’s output, and how can individuals make responsible choices based on that output? (D), → DPIA: Q68 or Q69, depending on the answer.

- 84.

- Are there enough trained personnel available to oversee, evaluate, and refine the AI system as required, both now and in the future? (D), → DPIA: Q24, Q68, or Q69, depending on the answer.

- Feedback mechanism

- 85.

- Will a feedback collection mechanism be implemented for system users? (D), → DPIA: Q68 or Q69, depending on the answer.

- Appeal process

- 86.

- Will a formal process be in place for citizens to appeal and challenge decisions made by the system? (D), → DPIA: Q68 or Q69, depending on the answer.

- Audit Trails and Documentation:

- 87.

- Does the audit trail inform the authority or delegated authority as specified by the applicable legislation? (D), → DPIA: Q68 or Q69, depending on the answer.

- 88.

- Will the system generate an audit trail documenting every recommendation or decision it produces? (D), → DPIA: Q68 or Q69, depending on the answer.

- 89.

- Will the audit trail clearly indicate all critical decision points? (D), → DPIA: Q68 or Q69 depending on the answer.

- 90.

- What procedures will be in place to ensure that the audit trail remains accurate and tamper-proof? (D), → DPIA: Q68 or Q69, depending on the answer.

- 91.

- Could the audit trail produced by the system support the generation of a decision notification? (D), → DPIA: Q68 or Q69, depending on the answer.

- 92.

- Will the audit trail specify the exact system version responsible for each supported decision? (D), → DPIA: Q68 or Q69, depending on the answer.

- 93.

- Will the audit trail identify the authorized individual responsible for the decision? (D), → DPIA: Q68 or Q69, depending on the answer.

- Accountability

- 94.

- What processes have been established for making decisions using the AI system? (D), → DPIA: Q68 or Q69, depending on the answer.

- 95.

- How are the different stakeholders incorporated into the decision-making process? (D), → DPIA: Q68 or Q69, depending on the answer.

- 96.

- What measures are in place to ensure that decision-making procedures adhere to principles of good governance, good administration and legal protection? (D), → DPIA: Q68 or Q69, depending on the answer.

- Human oversight process

- 97.

- Will the system include an option for human intervention to override its decisions? (D), → DPIA: Q68 or Q69 depending on the answer.

- 98.

- Will there be a procedure to track instances where overrides occur? (D), → DPIA: Q68 or Q69 depending on the answer.

- System logic and change management

- 99.

- Will all critical decision points in the automated logic be tied to relevant laws, policies, or procedures? (D), → DPIA: Q68 or Q69 depending on the answer.

- 100.

- Will you keep a detailed record of every change made to the model and the system? (D), → DPIA: Q68 or Q69 depending on the answer.

- 101.

- Will the audit trail contain change control processes to document adjustments to how the system functions or performs? (D), → DPIA: Q68 or Q69 depending on the answer.

- Transparency and explainability

- 102.

- Will the system have the capability to provide explanations for its decisions or recommendations upon request? (D), → DPIA: Q68 or Q69 depending on the answer.
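Questions 88 to 93, 97 and 98 describe an audit trail that records every decision, the system version, the responsible individual and any human override, and that remains tamper-proof. One way to make a log tamper-evident is to chain entries by hash, so that altering any earlier entry breaks verification, as sketched below. Hash chaining detects tampering but does not by itself prevent it: someone able to rewrite the entire log could recompute every hash, so in practice the latest hash would also be anchored or signed outside the system. Field names and example values are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    entries: list = field(default_factory=list)

    def append(self, decision_id, system_version, decided_by, outcome, overridden=False):
        """Record one decision, linking it to the hash of the previous entry."""
        body = {
            "decision_id": decision_id,
            "system_version": system_version,  # Q92: exact version behind the decision
            "decided_by": decided_by,          # Q93: authorized individual
            "outcome": outcome,
            "overridden": overridden,          # Q97-Q98: human override flag
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        """Return False if any entry was altered, removed or reordered."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def overrides(self):
        """Decision IDs where a human overrode the system."""
        return [e["decision_id"] for e in self.entries if e["overridden"]]


if __name__ == "__main__":
    trail = AuditTrail()
    trail.append("case-001", "v1.2.0", "officer_a", "approved")
    trail.append("case-002", "v1.2.0", "officer_b", "rejected", overridden=True)
    print(trail.verify(), trail.overrides())  # True ['case-002']
```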

Assessment Category 9: Contextual Factors

- Context:

- 103.

- When will the AI system be operational, and for how long will it remain in use? (D), → DPIA Q68 or Q69 depending on the answer.

- 104.

- In what specific locations or circumstances will the AI system be applied? Does it target a particular geographic region, group of people, or cases? (D), → DPIA Q68 or Q69 depending on the answer.

- 105.

- Will the algorithm remain functional if the context shifts or if it is deployed in a different environment than initially intended? (P), → DPIA Q68 or Q69 depending on the answer.

Assessment Category 10: Communication Strategy

- Information dissemination

- 106.

- To what extent can the operation of the AI system be made transparent, given its objectives and deployment context? (D), → DPIA: Q12, Q68, or Q69, depending on the answer.

- 107.

- What approach will you take to communicate about the AI system’s use? (D), → DPIA: Q12, Q68, or Q69, depending on the answer.

- 108.

- Will the system’s output be displayed in a visual format? If so, does the visualization accurately reflect the system’s results and provide clarity for different user groups? (D), → DPIA: Q12, Q68, or Q69, depending on the answer.

Assessment Category 11: Evaluation, Auditing and Safeguarding

- Accountability and review

- 109.

- Have suitable tools been made available for the evaluation, audit, and protection of the AI system? (D), → DPIA: Q68 or Q69 depending on the answer.

- 110.

- Are there sufficient mechanisms to ensure the system’s operation and outcomes remain accountable and transparent? (D), → DPIA: Q68 or Q69, depending on the answer.

- 111.

- What options exist for auditors and regulators to enforce formal actions related to the government’s use of the AI system? (D), → DPIA: Q68 or Q69, depending on the answer.

- 112.

- How frequently and at what intervals should the AI system’s use be reviewed? Does the organization have the appropriate personnel in place to carry out these evaluations? (D), → DPIA: Q68 or Q69, depending on the answer.

- 113.

- What processes can be implemented to ensure the system remains relevant and effective in the future? (D), → DPIA: Q68 or Q69, depending on the answer.

- 114.

- How can validation tools be implemented to confirm that decisions and actions continue to align with the system’s purpose and objectives, even as the application context evolves? (D), → DPIA: Q68 or Q69, depending on the answer.

- 115.

- What safeguards are in place to maintain the system’s integrity, fairness, and effectiveness throughout its lifecycle? (D), → DPIA: Q68 or Q69, depending on the answer.

- 116.

- Have the human capital and infrastructure requirements specified in Q72 and Q73 been fulfilled? (D), → DPIA: Q68 or Q69, depending on the answer.

- 117.

- For self-learning algorithms, have monitoring processes and systems been established (for example, concerning data drift, concept drift and accuracy)? (D), → DPIA: Q68 or Q69, depending on the answer.

- 118.

- Are there adequate means to modify the system, or to change how it is used, if it no longer serves its original purpose or goals? (D), → DPIA: Q68 or Q69, depending on the answer.

- 119.

- Is there an external auditing and oversight mechanism in place? (D), → DPIA: Q68 or Q69, depending on the answer.

- 120.

- Is sufficient information provided about the system to enable external supervision? (D), → DPIA: Q68 or Q69, depending on the answer.

- 121.

- Is the audit frequency and practice clearly communicated? (D), → DPIA: Q68 or Q69, depending on the answer.
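Question 117 asks whether drift monitoring is in place for self-learning systems. As an illustration only, one widely used data-drift indicator is the Population Stability Index (PSI), which compares the distribution of an input feature or model score in a recent window against a reference window. The function names, the decile binning, the `eps` floor for empty bins, and the 0.1/0.25 alert thresholds below are assumptions: the thresholds are a common industry rule of thumb rather than a regulatory standard, and concept-drift and accuracy monitoring would additionally require labelled outcomes, which this sketch does not cover.

```python
import math
from bisect import bisect_right


def quantile_edges(reference: list[float], n_bins: int = 10) -> list[float]:
    """Interior cut points taken from the sorted reference (e.g., training-time) sample."""
    ordered = sorted(reference)
    return [ordered[len(ordered) * k // n_bins] for k in range(1, n_bins)]


def bin_shares(values: list[float], edges: list[float], eps: float = 1e-6) -> list[float]:
    """Share of values per bin; empty bins are floored at eps (an assumption) so the log is defined."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(reference: list[float], current: list[float], n_bins: int = 10) -> float:
    """Population Stability Index: sum over bins of (actual - expected) * ln(actual / expected)."""
    edges = quantile_edges(reference, n_bins)
    expected = bin_shares(reference, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(score: float, moderate: float = 0.1, significant: float = 0.25) -> str:
    """Map a PSI value to an alert level; the thresholds are an assumed rule of thumb."""
    if score < moderate:
        return "stable"
    if score < significant:
        return "moderate drift: review"
    return "significant drift: escalate"


if __name__ == "__main__":
    # Hypothetical data: a reference window and a later window shifted upwards.
    reference = [float(x) for x in range(1000)]
    shifted = [x + 500.0 for x in reference]
    print(drift_status(psi(reference, reference)))  # stable
    print(drift_status(psi(reference, shifted)))    # significant drift: escalate
```

In a deployment, such a check would typically run per feature and per output score at the review interval chosen under Q112, with escalations informing the DPIA answers to Q68 and Q69.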

Assessment Category 12: Core Human Rights

- Fundamental rights

- 122.

- Does the system impact any human rights? (D), → DPIA: Q66

- Applicable laws and standards

- 123.

- Are there particular legal regulations or standards that address violations of human rights? (D), ← DPIA: Q8, → DPIA: Q66

- Defining seriousness

- 124.

- To what extent does the system affect human rights? (D), ← DPIA: Q14–Q16, → DPIA: Q66

- 125.

- Which objectives are pursued by using the system? (D), → DPIA: Q66

- 126.

- Is the system a suitable means to achieve the stated objectives? (D), → DPIA: Q66

- Necessity and subsidiarity

- 127.

- Is using this specific system necessary to achieve this objective, or are there alternative or mitigating measures that could achieve it? (D), → DPIA: Q66

- 128.

- Does the use of the system result in a reasonable balance between the objectives pursued and the fundamental rights that will be infringed? (D), → DPIA: Q66

Assessment Category 13: Preventative and Mitigating Measures

- During the development and implementation of the AI system

- 129.

- Have actions been taken to reduce bias risks through instance class adjustment, instance filtering, or instance weighting? (P), → DPIA: Q68 or Q69, depending on the answer.

- 130.

- Have you employed tools like “gender tagging” to highlight how protected personal characteristics influence the system’s operation? (P), → DPIA: Q68 or Q69, depending on the answer.

- 131.

- Is the developed system “fairness-aware”? (P), → DPIA: Q68 or Q69, depending on the answer.

- 132.

- Have “in-processing” or “post-processing” methods of “bias mitigation” been implemented? (P), → DPIA: Q68 or Q69, depending on the answer.

- 133.

- Have mechanisms for “ethics by design”, “equality by design”, and “security by design” been implemented? (P), → DPIA: Q68 or Q69, depending on the answer.

- 134.

- Have testing and validation been performed? (P), → DPIA: Q68 or Q69, depending on the answer.

- 135.

- Have methods to enhance the system’s openness and interpretability, such as a “crystal box” or “multi-stage” approach, been implemented? (P), → DPIA: Q68 or Q69, depending on the answer.

- Administrative measures

- 136.

- Did the initial development involve a trial phase with a limited dataset and restricted access, followed by gradual expansion once the results proved successful? (P), → DPIA: Q68 or Q69, depending on the answer.

- 137.

- Have requirements been set for regular reporting and auditing to periodically review the system’s effects and its impact on human rights? (P/D), → DPIA: Q68 or Q69, depending on the answer.

- 138.

- Have you adopted a ban or moratorium on specific uses of the system? (P/D), → DPIA: Q68 or Q69, depending on the answer.

- 139.

- Have you formulated upper limits for the use of specific AI systems? (P/D), → DPIA: Q68 or Q69, depending on the answer.

- 140.

- Have you established “codes of conduct”, “professional guidelines”, or “ethical standards” for stakeholders who will operate the system? (P/D), → DPIA: Q68 or Q69, depending on the answer.

- 141.

- Have you established a formal ethical commitment for the system’s Providers and Deployers? (P/D), → DPIA: Q68 or Q69, depending on the answer.

- 142.

- Do you provide education or training on data-ethics awareness? (P/D), → DPIA: Q68 or Q69, depending on the answer.

- 143.

- Are the systems standardized, accredited, and certified according to standards for credible performance? (P), → DPIA: Q68 or Q69, depending on the answer.

- 144.

- Are checklists available for decision-making based on the system’s output? (D), → DPIA: Q68 or Q69, depending on the answer.

- 145.

- How do you ensure that affected citizens have opportunities to engage and participate? (D), → DPIA: Q68 or Q69, depending on the answer.

- 146.

- Have you created a strategy to phase out the system if it is determined to be no longer appropriate? (D), → DPIA: Q68 or Q69, depending on the answer.
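Question 129 refers to instance weighting as a pre-processing bias-mitigation measure. A minimal sketch of one well-known variant, the “reweighing” scheme of Kamiran and Calders, is shown below: each training instance receives the weight P(group)·P(label) / P(group, label), so that group membership and the target label become statistically independent in the weighted data. The group labels, the toy dataset, the function names, and the use of the weighted positive-label rate per group (a demographic-parity style check) are illustrative assumptions; which fairness metric is appropriate is itself a normative choice that the FRIA should document.

```python
from collections import Counter
from fractions import Fraction


def reweighing_weights(groups: list[str], labels: list[int]) -> list[Fraction]:
    """Weight each instance by P(g) * P(y) / P(g, y), using exact fractions."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        Fraction(group_counts[g], n)
        * Fraction(label_counts[y], n)
        / Fraction(joint_counts[(g, y)], n)
        for g, y in zip(groups, labels)
    ]


def positive_rate(groups: list[str], labels: list[int],
                  weights: list[Fraction], group: str) -> Fraction:
    """Weighted share of positive labels (label == 1) within one group."""
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return positive / total


if __name__ == "__main__":
    # Hypothetical training data: group "A" is labelled positive far more often than group "B".
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    unit = [Fraction(1)] * len(labels)          # unweighted baseline
    weights = reweighing_weights(groups, labels)

    gap_before = positive_rate(groups, labels, unit, "A") - positive_rate(groups, labels, unit, "B")
    gap_after = positive_rate(groups, labels, weights, "A") - positive_rate(groups, labels, weights, "B")
    # Prints: positive-rate gap before: 0.50, after: 0.00
    print(f"positive-rate gap before: {float(gap_before):.2f}, after: {float(gap_after):.2f}")
```

Exact fractions are used here only to keep the toy arithmetic free of rounding noise; for training, the weights would be converted with `float()` and passed to a learner that accepts per-sample weights. Reweighing is a pre-processing technique, so it complements rather than replaces the in-processing and post-processing methods referred to in Q132.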

3.2. Synergies Between DPIA and FRIA

- Stakeholder responsibilities: The DPIA questions on responsibilities for data processing activities feed into the corresponding FRIA questions that address the allocation of responsibility and accountability throughout the system’s design, development, operational use, and ongoing maintenance.

- Personal data processing: Understanding whether, and which, personal data the AI system uses provides essential details for the DPIA, enabling a clear definition of the scope and nature of the personal data processing. Accordingly, the relevant FRIA questions supply valuable input to the DPIA question concerning the processing of personal data.

- Legal basis: The DPIA questions that identify the lawful basis for data processing are linked to the corresponding FRIA questions on specific legislation and standards (DPIA Q8 feeds into FRIA Q123).

- Data security and privacy: The FRIA questions concerning data security and privacy offer valuable input to the DPIA regarding the preventative and mitigating measures needed to safeguard personal data. For instance, implementing encryption, anonymization, traceability, and logical access control mitigates risks in both the DPIA and the FRIA contexts.

- Contracts/agreements: The DPIA question on contracts, which seeks to understand how subcontractors are bound and managed with respect to data protection practices, is linked to the corresponding FRIA question on whether agreements or arrangements with appropriate safeguards are in place when personal data are processed.

- Network security: The network security questions in the FRIA part provide important input to the corresponding DPIA questions, particularly concerning network security practices and their effectiveness.

- Data anonymization and minimization: The answers to the corresponding FRIA questions may provide essential input to the DPIA for identifying, categorizing, and addressing risks, threats, and mitigation measures.

- Logical access control: The FRIA questions on who has access to the system, and on how access rights are granted, monitored, and revoked, feed into the relevant DPIA questions, helping to identify the potential risks and vulnerabilities associated with unauthorized access to, or misuse of, personal data.

- Effects of the AI system: The FRIA questions on the effects of the AI system (the roadmap for protecting fundamental rights, mitigation measures, data quality, stakeholder responsibilities, security, privacy, and system accuracy) will significantly shape the answers to the DPIA questions on potential threats, sources of risk, and the measures to be adopted.
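These synergies are made concrete in the connection table between FRIA and DPIA questions. As a hypothetical sketch (not part of the authors’ framework), a few of its rows can be encoded as data and inverted, so that an assessor about to answer a given DPIA question can list every FRIA answer that should be consulted first. Only a subset of rows is transcribed, and the names `FRIA_TO_DPIA`, `expand`, and `fria_inputs_by_dpia` are illustrative.

```python
import re
from collections import defaultdict

# Subset of rows transcribed from the FRIA -> DPIA connection table.
# Layout (FRIA question spec, DPIA target question numbers) is an illustrative assumption.
FRIA_TO_DPIA: list[tuple[str, list[int]]] = [
    ("Q34", [4]),                       # personal data processing
    ("Q35", [9]),                       # data minimization principle
    ("Q35–Q36", [47]),                  # data minimization principle
    ("Q18", [10]),                      # data quality
    ("Q106–Q108", [12]),                # communication
    ("Q51", [34]),                      # network security
    ("Q8, Q69–Q82, Q122–Q128", [66]),   # AI system implications
]


def expand(spec: str) -> list[int]:
    """Turn a spec such as 'Q8, Q69–Q82' into [8, 69, 70, ..., 82]."""
    numbers: list[int] = []
    for part in spec.split(","):
        bounds = [int(d) for d in re.findall(r"\d+", part)]
        if len(bounds) == 1:            # single question, e.g. 'Q8'
            numbers.append(bounds[0])
        elif len(bounds) == 2:          # inclusive range, e.g. 'Q69–Q82'
            numbers.extend(range(bounds[0], bounds[1] + 1))
        else:
            raise ValueError(f"unrecognized question spec: {part!r}")
    return numbers


def fria_inputs_by_dpia(rows: list[tuple[str, list[int]]]) -> dict[int, set[int]]:
    """Invert the table: DPIA question number -> FRIA question numbers that feed it."""
    inverted: dict[int, set[int]] = defaultdict(set)
    for fria_spec, dpia_targets in rows:
        for target in dpia_targets:
            inverted[target].update(expand(fria_spec))
    return dict(inverted)


if __name__ == "__main__":
    inputs = fria_inputs_by_dpia(FRIA_TO_DPIA)
    print(sorted(inputs[47]))  # [35, 36]
    print(len(inputs[66]))     # 22
```

Transcribing the remaining rows in the same way would also allow simple automated checks, for example that every FRIA question is routed to at least one DPIA question.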

4. Case Study

4.1. The Dutch Childcare Benefits Scandal

- Racial profiling and discrimination: The risk-scoring algorithm employed by the Dutch Tax and Customs Administration inherently discriminated against people based on nationality and ethnicity, flagging non-Dutch nationals and people with dual citizenship for harsher scrutiny. This led to significant financial and personal distress for the affected families, many of whom belonged to minority groups.

- Consequences for families: The scandal had devastating impacts on the lives of thousands of families. Many experienced debt, unemployment, evictions, mental health issues, and even family breakdowns due to the false fraud accusations and the subsequent financial burdens placed upon them.

- Political and institutional response: The Dutch Government’s admission that institutional racism within the Dutch Tax and Customs Administration was the root cause of the scandal marked a pivotal moment in acknowledging the serious issues within the ADM systems used. It highlighted the need for systemic change to prevent such injustices from recurring.

- Calls for reform: Organizations such as Amnesty International have described the scandal as a wake-up call, urging an immediate ban on the use of nationality and ethnicity data in risk scoring for law enforcement and public service delivery. They advocate a framework that ensures ADM systems are transparent and accountable and that they safeguard human rights.

- Inefficiency in achieving the objectives of the deployment and use.

- Discrimination and racial profiling; inadequate validation of data sources and data quality.

- Black-box systems, self-learning algorithms in the public sector, and lack of human oversight in the decision-making process.

- Lack of transparency and explainability.

- Lack of an FRIA.

- Lack of stakeholder consultation and of accountability.

- Lack of effective remedies and redress.

- Lack of review and auditing.

- GDPR violations.

4.2. Theoretical Application of the Integrated Framework

5. Discussion

- Q1.

- Both the DPIA (in the AI domain) and the FRIA aim to proactively identify and mitigate risks to human rights. The DPIA focuses on data protection and privacy and follows a structured, regulation-driven methodology, whereas the FRIA broadens the scope to encompass a range of fundamental rights beyond privacy and adopts a more principle-based approach. Moreover, a DPIA may be mandatory even for processing operations that do not involve AI systems.

- Q2.

- Merging the DPIA and FRIA methodologies into a unified framework involves harmonizing their structured and principle-based approaches to ensure comprehensive risk evaluation. Such an integrated framework needs to account for their complementary strengths and their strong interconnections, enabling a thorough assessment that covers both data protection and other fundamental rights. In any case, key elements include a detailed analysis of the AI system’s impact on fundamental rights, a clear documentation process, and mechanisms for continuous monitoring and updating in response to new insights or system changes.

- Q3.

- By adopting such a framework, stakeholders in the EU would gain several advantages, including a comprehensive approach to risk assessment that simultaneously addresses multiple regulatory requirements and ethical standards, potentially enhancing public trust in AI systems. The integrated framework supports compliance with both the GDPR and the AI Act by providing a single tool for evaluating data protection and fundamental rights impacts, thereby promoting the ethical use of AI systems. This approach ensures that AI systems are developed and deployed in a manner consistent with EU regulatory frameworks and ethical considerations. However, obstacles may arise, such as the complexity of integrating the methodologies, resource constraints, and the need for specialized knowledge to apply the framework effectively; moreover, as this analysis has shown, the Provider of the system plays a crucial role in enabling a proper assessment.

- Q4.

- By fostering a comprehensive evaluation of transparency, accountability, and impacts on fundamental rights, including data protection and privacy, the proposed framework aims to contribute significantly to enhancing public confidence in AI technologies. It may thereby encourage the development of AI systems that are not only compliant with legal standards but also aligned with ethical principles, supporting the responsible use of AI across diverse industries. Making the output of the FRIA/DPIA publicly available would increase overall transparency and user trust, while also holding the relevant stakeholders to account for actually implementing the measures they have committed to.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Aggarwal, K.; Mijwil, M.M.; Al-Mistarehi, A.-H.; Alomari, S.; Gök, M.; Alaabdin, A.M.Z.; Abdulrhman, S.H. Has the Future Started? The Current Growth of Artificial Intelligence, Machine Learning, and Deep Learning. Iraqi J. Comput. Sci. Math. 2022, 3, 115–123.

- Chugh, R. Impact of Artificial Intelligence on the Global Economy: Analysis of Effects and Consequences. Int. J. Soc. Sci. Econ. Res. 2023, 8, 1377–1385.

- Bezboruah, T.; Bora, A. Artificial Intelligence: The Technology, Challenges and Applications. Trans. Eng. Comput. Sci. 2020, 8, 44–51.

- Poalelungi, D.G.; Musat, C.L.; Fulga, A.; Neagu, M.; Neagu, A.I.; Piraianu, A.I.; Fulga, I. Advancing Patient Care: How Artificial Intelligence Is Transforming Healthcare. J. Pers. Med. 2023, 13, 1214.

- Zamponi, M.E.; Barbierato, E. The Dual Role of Artificial Intelligence in Developing Smart Cities. Smart Cities 2022, 5, 728–755.

- Khurana, D.; Koli, A.; Khatter, K.; Singh, S. Natural Language Processing: State of the Art, Current Trends and Challenges. Multimed. Tools Appl. 2023, 82, 3713–3744.

- Etzioni, A.; Etzioni, O. Incorporating Ethics into Artificial Intelligence. J. Ethics 2017, 21, 403–418.

- Mökander, J.; Morley, J.; Taddeo, M.; Floridi, L. Ethics-Based Auditing of Automated Decision-Making Systems: Nature, Scope, and Limitations. Sci. Eng. Ethics 2021, 27, 44.

- Selbst, A.D. An Institutional View of Algorithmic Impact Assessments. Harv. J. Law Technol. Harv. JOLT 2021, 35, 117–191.

- Papernot, N.; McDaniel, P.; Wu, X.; Jha, S.; Swami, A. Distillation as a Defense to Adversarial Perturbations Against Deep Neural Networks. In Proceedings of the 2016 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 23–25 May 2016; pp. 582–597.

- European Union. Regulation—EU—2024/1689—EN—EUR-Lex. Available online: https://eur-lex.europa.eu/eli/reg/2024/1689/oj (accessed on 16 July 2024).

- Heikkilä, M. Five Things You Need to Know About the EU’s New AI Act. Available online: https://www.technologyreview.com/2023/12/11/1084942/five-things-you-need-to-know-about-the-eus-new-ai-act/ (accessed on 27 March 2024).

- Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation) (Text with EEA Relevance); 2016; Volume 119. Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj/eng (accessed on 17 December 2024).

- Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of the Regions Artificial Intelligence for Europe; 2018. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex:52018DC0237 (accessed on 17 December 2024).

- European Parliament Legislative Resolution of 13 March 2024 on the Proposal for a Regulation of the European Parliament and of the Council on Laying down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts (COM(2021)0206—C9-0146/2021—2021/0106(COD)); 9_TA. Available online: https://www.europarl.europa.eu/doceo/document/TA-9-2024-0138_EN.html (accessed on 17 December 2024).

- Brock, D.C. Learning from Artificial Intelligence’s Previous Awakenings: The History of Expert Systems. AI Mag. 2018, 39, 3–15.

- Grosan, C.; Abraham, A. Rule-Based Expert Systems. In Intelligent Systems: A Modern Approach; Grosan, C., Abraham, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 149–185. ISBN 978-3-642-21004-4.

- Buiten, M.C. Towards Intelligent Regulation of Artificial Intelligence. Eur. J. Risk Regul. 2019, 10, 41–59.

- Woschank, M.; Rauch, E.; Zsifkovits, H. A Review of Further Directions for Artificial Intelligence, Machine Learning, and Deep Learning in Smart Logistics. Sustainability 2020, 12, 3760.

- Cao, L. AI in Finance: Challenges, Techniques and Opportunities. arXiv 2021, arXiv:2107.09051.

- Tan, E.; Jean, M.P.; Simonofski, A.; Tombal, T.; Kleizen, B.; Sabbe, M.; Bechoux, L.; Willem, P. Artificial Intelligence and Algorithmic Decisions in Fraud Detection: An Interpretive Structural Model. Data Policy 2023, 5, e25.

- Heins, C. Artificial Intelligence in Retail—A Systematic Literature Review. Foresight 2023, 25, 264–286.

- Lacroux, A.; Martin-Lacroux, C. Should I Trust the Artificial Intelligence to Recruit? Recruiters’ Perceptions and Behavior When Faced With Algorithm-Based Recommendation Systems During Resume Screening. Front. Psychol. 2022, 13, 895997.

- Brooks, C.; Gherhes, C.; Vorley, T. Artificial Intelligence in the Legal Sector: Pressures and Challenges of Transformation. Camb. J. Reg. Econ. Soc. 2020, 13, 135–152.

- Ethics Guidelines for Trustworthy AI|Shaping Europe’s Digital Future. Available online: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai (accessed on 23 November 2023).

- European Union. Charter of Fundamental Rights of the European Union. 2010. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:12012P/TXT (accessed on 17 December 2024).

- Gaud, D. Ethical Considerations for the Use of AI Language Model. Int. J. Res. Appl. Sci. Eng. Technol. 2023, 11, 6–14.

- Fjeld, J.; Achten, N.; Hilligoss, H.; Nagy, A.; Srikumar, M. Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI. SSRN Electron. J. 2020, 2020-1.

- Art. 29 Working Party. Justice and Consumers Article 29—Guidelines on Data Protection Impact Assessment (DPIA) (Wp248rev.01). Available online: https://ec.europa.eu/newsroom/article29/items/611236 (accessed on 26 January 2025).

- Söderlund, K.; Larsson, S. Enforcement Design Patterns in EU Law: An Analysis of the AI Act. Digit. Soc. 2024, 3, 41.

- Novelli, C.; Casolari, F.; Rotolo, A.; Taddeo, M.; Floridi, L. Taking AI Risks Seriously: A New Assessment Model for the AI Act. AI Soc. 2023, 39, 2493–2497.

- Mantelero, A. The Fundamental Rights Impact Assessment (FRIA) in the AI Act: Roots, Legal Obligations and Key Elements for a Model Template. Comput. Law Secur. Rev. 2024, 54, 106020.

- Getting the Future Right—Artificial Intelligence and Fundamental Rights|European Union Agency for Fundamental Rights. Available online: https://fra.europa.eu/en/publication/2020/artificial-intelligence-and-fundamental-rights (accessed on 29 January 2025).

- Ivanova, Y. The Data Protection Impact Assessment as a Tool to Enforce Non-Discriminatory AI. In Privacy Technologies and Policy, Proceedings of the 8th Annual Privacy Forum, APF 2020, Lisbon, Portugal, 22–23 October 2020; Lecture Notes in Computer Science (LNCS, Volume 12121); Springer: Cham, Switzerland, 2020; pp. 3–24.

- Lazcoz, G.; de Hert, P. Humans in the GDPR and AIA Governance of Automated and Algorithmic Systems. Essential Pre-Requisites against Abdicating Responsibilities. Comput. Law Secur. Rev. 2023, 50, 105833.

- Kaminski, M.E.; Malgieri, G. Algorithmic Impact Assessments under the GDPR: Producing Multi-Layered Explanations. Int. Data Priv. Law 2021, 11, 125–144.

- Kazim, E.; Koshiyama, A. The Interrelation between Data and AI Ethics in the Context of Impact Assessments. AI Ethics 2021, 1, 219–225.

- Mitrou, L. Data Protection, Artificial Intelligence and Cognitive Services: Is the General Data Protection Regulation (GDPR) ‘Artificial Intelligence-Proof’? SSRN Electron. J. 2018.

- Kloza, D.; Van Dijk, N.; Casiraghi, S.; Maymir, S.V.; Roda, S.; Tanas, A.; Konstantinou, I. Towards a Method for Data Protection Impact Assessment: Making Sense of GDPR Requirements. d.pia.lab Policy Brief 2019, 1, 1–8.

- Data Protection Impact Assessments (DPIAs). Available online: https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/accountability-and-governance/data-protection-impact-assessments-dpias/ (accessed on 29 February 2024).

- Data Protection and Privacy Impact Assessments. Available online: https://iapp.org/resources/topics/privacy-impact-assessment-2/ (accessed on 30 January 2025).

- The Open Source PIA Software Helps to Carry Out Data Protection Impact Assessment. Available online: https://www.cnil.fr/en/open-source-pia-software-helps-carry-out-data-protection-impact-assessment (accessed on 29 February 2024).

- Bertaina, S.; Biganzoli, I.; Desiante, R.; Fontanella, D.; Inverardi, N.; Penco, I.G.; Cosentini, A.C. Fundamental Rights and Artificial Intelligence Impact Assessment: A New Quantitative Methodology in the Upcoming Era of AI Act. Comput. Law Secur. Rev. 2025, 56, 106101.

- Gerards, J.; Schäfer, M.T.; Muis, I.; Vankan, A. Impact Assessment Fundamental Rights and Algorithms—Report—Government.Nl. Available online: https://www.government.nl/documents/reports/2022/03/31/impact-assessment-fundamental-rights-and-algorithms (accessed on 30 January 2025).

- Waem, H.; Dauzier, J.; Demircan, M. Fundamental Rights Impact Assessments Under the EU AI Act: Who, What and How? Available online: https://www.technologyslegaledge.com/2024/03/fundamental-rights-impact-assessments-under-the-eu-ai-act-who-what-and-how/ (accessed on 31 March 2024).

- Human Rights Impact Assessment of Digital Activities|the Danish Institute for Human Rights. Available online: https://www.humanrights.dk/publications/human-rights-impact-assessment-digital-activities (accessed on 5 March 2024).

- Treasury Board of Canada Secretariat Algorithmic Impact Assessment Tool. Available online: https://www.canada.ca/en/government/system/digital-government/digital-government-innovations/responsible-use-ai/algorithmic-impact-assessment.html (accessed on 5 March 2024).

- Xenophobic Machines: Discrimination Through Unregulated Use of Algorithms in the Dutch Childcare Benefits Scandal. Available online: https://www.amnesty.org/en/documents/eur35/4686/2021/en/ (accessed on 22 March 2024).

- Dutch House of Representatives Endorses Mandatory Use of Human Rights and Algorithms Impact Assessment—News—Utrecht University. Available online: https://www.uu.nl/en/news/dutch-house-of-representatives-endorses-mandatory-use-of-human-rights-and-algorithms-impact (accessed on 26 January 2025).

- European Data Protection Board; Statement 3/2024 on Data Protection Authorities’ Role in the Artificial Intelligence Act Framework. Available online: https://www.edpb.europa.eu/our-work-tools/our-documents/statements/statement-32024-data-protection-authorities-role-artificial_en (accessed on 29 January 2025).

| | DPIA | FRIA |
|---|---|---|
| Legal Basis | GDPR | AI Act |
| When? | For any personal data processing likely to result in a high risk to individuals (regardless of whether an AI system is used). | For high-risk AI systems, as defined in the AI Act. |
| Who? | Data controllers | AI Deployers (who are typically expected to be data controllers). The role of AI Providers (providing feedback) is also important if they are different entities from the AI Deployers. |
| Scope | Focuses mainly on the rights to privacy and personal data protection (and other rights possibly affected by the misuse of personal data). | Encompasses all fundamental rights, such as equality, non-discrimination, freedom of speech, and human dignity. |
| People affected | Data subjects (i.e., the individuals whose data are processed in the processing operation concerned), e.g., customers and employees. | Individuals whose data are used for training purposes, individuals who use the systems, and individuals about whom AI systems make decisions. More generally, there is a broader societal impact, especially on vulnerable populations or marginalized groups. |
| Engagement of Authorities | Consultation with the data protection authority if high risks remain after the mitigation measures identified through the DPIA. | The output of the FRIA is communicated to the market surveillance authority, regardless of whether high risks remain after the mitigation measures. |

| Requirement | DPIA | FRIA |
|---|---|---|
| Purpose | The purpose of the processing, together with the relevant legal basis under Art. 6 of the GDPR. | The purposes for which the Deployer uses the AI system. |
| Description of Processing/System | Detailed description of the personal data being processed, including data flows, recipients, etc. | Description of the AI system’s operation, context, and intended societal or operational goals. Specific information on the design of the AI system is also needed. |
| Necessity and Proportionality | Assess whether the processing is necessary and proportionate to achieve the intended purpose. | Evaluate whether the use of an AI system is necessary and whether its benefits outweigh the risks to human rights. |
| Risks Identified | Risks to privacy and data protection (e.g., breaches, profiling, unauthorized access, and collection of personal data excessive for the intended purposes). | Risks to fundamental rights arising from the use of the AI system (e.g., discrimination, bias, and threats to human dignity and freedom of expression). Societal risks are also considered. |
| Mitigation Measures | Technical and organizational measures (e.g., encryption, pseudonymization, and access controls). | Ethical, legal, and technical safeguards, including safeguards related to the internal workings of the AI system (e.g., bias detection, fairness audits, redress mechanisms, and explainability). |
| Time Period and Frequency | Expected duration of the processing. | A description of the intended timeframe and frequency of use of the high-risk AI system. |

| Description of Connection | Notes |
|---|---|
| DPIA: Q2 → FRIA: Q10, Q13 | Stakeholder responsibilities |
| FRIA: Q34 → DPIA: Q4 | Personal data processing |
| DPIA: Q8 → FRIA: Q123 | Legal basis |
| FRIA: Q35 → DPIA: Q9; FRIA: Q35–Q36 → DPIA: Q47 | Data minimization principle |
| FRIA: Q18 → DPIA: Q10 | Data quality |
| FRIA: Q106–Q108 → DPIA: Q12 | Communication |
| DPIA: Q14–Q16 → FRIA: Q124 | Fundamental rights |
| FRIA: Q52 → DPIA: Q17; FRIA: Q52 → DPIA: Q33 | Contracts/agreements |
| FRIA: Q84 → DPIA: Q24 | Personnel management |
| FRIA: Q115 → DPIA: Q26 | Accountability and review |
| FRIA: Q28 → DPIA: Q20–Q30, Q32–Q42, Q44–Q46, and Q67, Q68, or Q69, depending on the answer | Data security |
| FRIA: Q51 → DPIA: Q34 | Network security |
| FRIA: Q33, Q53 → DPIA: Q41 | Data anonymization |
| FRIA: Q31 → DPIA: Q43 | Authentication methods |
| FRIA: Q32 → DPIA: Q44 | Logging |
| FRIA: Q30 → DPIA: Q45 | Archiving |
| FRIA: Q8, Q69–Q82, Q122–Q128 → DPIA: Q66 | AI system implications |
| FRIA: Q9–Q16, Q18–Q37, Q44, Q48–Q50, Q52, Q64 → DPIA: Q67 | AI system threats |
| FRIA: Q9–Q37, Q44, Q48–Q53, Q56, Q59–Q68, Q83–Q146 → DPIA: Q68 | AI system sources of risk |
| FRIA: Q9–Q37, Q44, Q48–Q53, Q59–Q68, Q83–Q121, Q129–Q146 → DPIA: Q69 | AI system measures |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Thomaidou, A.; Limniotis, K. Navigating Through Human Rights in AI: Exploring the Interplay Between GDPR and Fundamental Rights Impact Assessment. J. Cybersecur. Priv. 2025, 5, 7. https://doi.org/10.3390/jcp5010007