A Security and Privacy Scoring System for Contact Tracing Apps

Abstract

1. Introduction

1.1. Contact Tracing

1.2. Digital Contact Tracing Methods

1.2.1. Bluetooth-Based Contact Tracing Methods

1.2.2. GPS-Based Contact Tracing Methods

1.2.3. Other Contact Tracing Methods

1.3. Dangers of Privacy Loss

1.4. Research Method

1.4.1. Privacy

1.4.2. Security

1.5. Contribution

2. Methodology

2.1. Motivation of Methodology

2.2. Methodology of the Privacy Review

2.2.1. Privacy Principles of Contact Tracing

- Independent expert review: the app should be reviewed by privacy and security experts prior to deployment.

- Simple design: the average user should be able to understand how the app performs its functions.

- Minimal functionality: the app should only perform contact tracing.

- Data minimization: the app should collect as little data as possible to function.

- Trusted data governance: who controls the data, who has access to them, and how they are used should be known and subject to public review.

- Cyber security: best practices should be used for data storage and transmission throughout the system.

- Minimum data retention: collected data should be stored only for the infectious period of the individual, as defined by the WHO.

- Protection of derived data and meta-data: these data types should be deleted as soon as they are created in the system, as they are not required for contact tracing.

- Proper disclosure and consent: users should be provided with easy-to-understand information on the functions of the app and all data it uses and stores. Users must consent to all data collection.

- Provision to sunset: there should be a timeline in place for when the app will no longer be used and all data will be deleted.

2.2.2. Criteria for Privacy Principles

2.3. Methodology of the Vulnerability Analysis

2.3.1. Vulnerability Rubric

- Exploitability metrics: these are made up of characteristics of the vulnerable component and the attack against it.

  - (a) Access: level of access (privilege) to the system required to perform the exploit.

  - (b) Knowledge: level of knowledge required to implement the exploit.

  - (c) Complexity: the technical requirements of the exploit, namely the computing power and the workers required.

    - i. Technology: the computing power required to perform or create the exploit.

    - ii. Build: the number of workers it would take to build this attack and the time frame within which they could build it.

  - (d) Effort: how much work it would take to operate the exploit.

    - i. Planning: the number of components that have to work together to perform the exploit (and that cannot simply be automated to work perfectly).

    - ii. Human: the number of people required to work together to perform the exploit and whether they are aware of their involvement.

- Impact metrics: these focus on the outcome that an exploit of the vulnerability could achieve.

  - (a) Scope: the proportion of the user base that this exploit could affect.

  - (b) Impact: severity of what the exploit allows an attacker to do, such as obtaining data logs or inputting their own data.

    - i. Data: the kind of data that an attacker could access and how immediately useful the data are to the attacker, or how far into the system the attacker is able to place false data.

    - ii. Trust: how the use of this exploit would affect the user base’s willingness to use the system, or a similar system in the future.

  - (c) Detection: how easy it would be for someone to realize that this exploit has been used on the system.

  - (d) Repairability: how easy it would be to fix what the attacker did.

    - i. System: level of effort required to return the system to the safe operating state it was in before the attack.

    - ii. User: how the exploit would affect the individuals impacted by this attack in the long run.

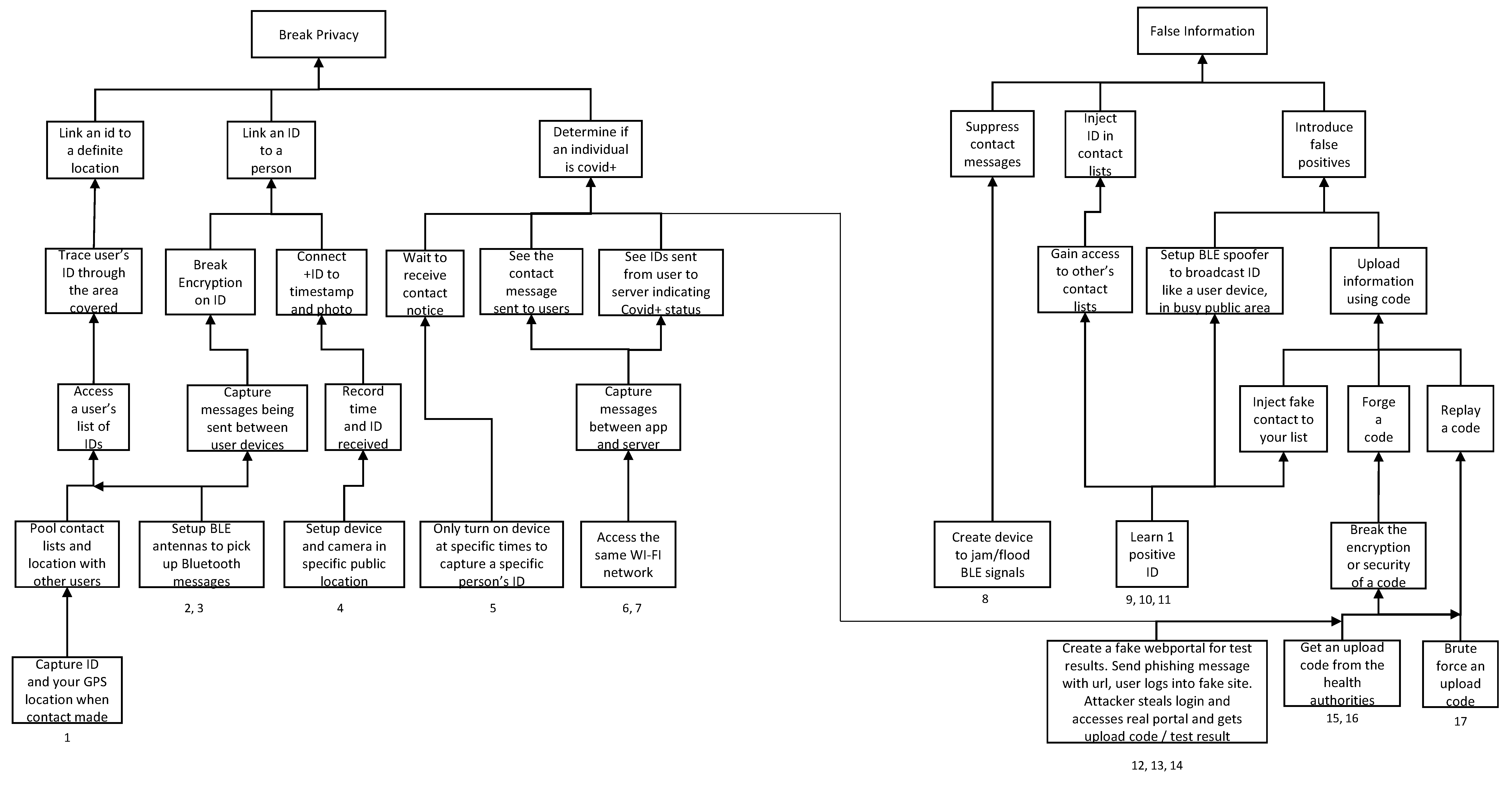

2.3.2. Attack Tree for Assessing Contact Tracing Application Vulnerability

3. Results

3.1. Selected Applications

3.2. Privacy Review

3.2.1. Privacy Metric

3.2.2. Full Privacy Review

3.3. Analysis of the Vulnerability of Contact Tracing Applications

3.3.1. Vulnerability Review

- Canada Covid Alert—Medium.

- France TousAntiCovid—Medium.

- Iceland Rakning C-19—High.

- India Aarogya Setu—Low.

- Singapore TraceTogether—Medium.

3.3.2. Vulnerability Ranking

3.4. Privacy and Security Rankings

4. Discussion and Conclusions

- GAEN framework: both apps build on the Google Apple Exposure Notification (GAEN) framework, which uses decentralized Bluetooth as the base of its design.

- Minimalism: although the German app does allow the user to scan a QR code they were given to see the results of a COVID-19 test, both apps otherwise contain no added functionality, collect almost no information about the user, and any data they do hold or send are deleted within 14 days.

- Transparency: both released the source code for their apps, and Canada also sought out experts to perform a review prior to release. Both have comprehensive privacy statements and policies that clarify all the relevant information.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |

|---|---|

| BLE | Bluetooth Low Energy |

| CVSS | Common Vulnerability Scoring System |

| GAEN | Google Apple Exposure Notification |

| OS | Operating System |

| SIG | Special Interest Group |

Appendix A. App Attack Analysis Example, Canada Covid Alert

Attack 1

- Access: 2/10. Nothing in the protocol prevents someone from building a tool that collects GPS location data whenever the phone makes a BLE connection. The attack requires either circumventing the app to log the contact information outside of it or rooting the attacker’s device to access the logged contact information. The bottleneck of attack 1 is obtaining the victim’s temporary IDs to trace through the system. The Covid Alert documentation says only that the temporary IDs are “securely stored”, without clearly defining what that means [22]. It is assumed that, to see the temporary IDs, the attacker would need to root the victim’s device; on that basis, this attack would be marked as a 1. However, because the IDs of positive users are made available through the server, an attacker could collect all of this information, recreate the list of IDs of positive users, find matches, and follow them. This possibility is why the score is a 2.

- Knowledge: 3/10. The attack would require writing the GPS logging code, detecting that the device has logged the Bluetooth information, and rooting the attacker’s own device and potentially the victim’s, or pulling the list from the server and recreating the IDs of positive users.

- Complexity: 6/10.

  - Technology: 7/10. The tools required for the exploit can be created on a standard computer, and the analysis can be done on a consumer computer.

  - Build: 5/10. The attack requires one or two tools covering the GPS logger, access to the contact list, and the method of collecting the IDs to track. The program then matches each ID with every GPS coordinate logged for it and maps them together. One person could build this in more than a month, or a few people could build it in about a month. All of these components have been created before for GPS-based data attacks and would need to be put together for this one.

- Effort: 1.5/10.

  - Planning: 1/10. This is high effort because the code has to be placed on many phones to collect all of the required information. Then, the data have to be compiled.

  - Human: 2/10. To effectively cover an area, quite a few people would need to be involved. Once the software is on their phones, however, they would not necessarily have to do anything out of the ordinary; they could just go through their usual routine.

- Scope: 4/10. This would affect the group for whom the IDs could be identified: people close to any of those involved, whose devices could be accessed to obtain the IDs, or people in the community who test positive. This is smaller than everyone that an attacker knows and does not include every positive individual, as it is limited to the area covered.

- Impact: 4/10.

  - Data: 4/10. The contact log information itself is not easy to use, but the GPS data allow the creation of a map of where someone has been, which could then reveal where they live or work, or other personal information.

  - Trust: 4/10. This would damage trust in the system. There is the potential that a politically motivated group could use this to single out certain people, especially if they can target those who have the virus.

- Detection: 8/10. It is possible that this would not be noticed. The code that collects the information does not need to interfere with the app, only monitor it; however, the number of people involved would require considerable secrecy. The attack could also involve access to someone’s phone to retrieve the IDs, which would increase the risk of detection.

- Repairability: 2.5/10.

  - System: 1/10. The code added to the group’s devices would have to be removed, but otherwise, the system itself has not truly been touched.

  - User: 4/10. The damage from this vulnerability alone would not be permanent, but it would be harmful to someone’s privacy, as they would have been tracked.
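The composite scores in this example are consistent with each composite metric being the arithmetic mean of its two sub-metrics (for instance, Complexity 6 from Technology 7 and Build 5). The Python sketch below records the Attack 1 scores and applies that rule. The averaging rule is inferred from the numbers above rather than stated in the rubric, and the names `SUB_SCORES`, `DIRECT_SCORES`, and `composite` are illustrative.

```python
from statistics import mean

# Sub-metric scores for Attack 1 (Canada Covid Alert), copied from the list above.
SUB_SCORES = {
    "Complexity": {"Technology": 7, "Build": 5},
    "Effort": {"Planning": 1, "Human": 2},
    "Impact": {"Data": 4, "Trust": 4},
    "Repairability": {"System": 1, "User": 4},
}

# Metrics that are scored directly, without sub-metrics.
DIRECT_SCORES = {"Access": 2, "Knowledge": 3, "Scope": 4, "Detection": 8}


def composite(sub_scores: dict[str, float]) -> float:
    """Assumed rule: a composite metric is the mean of its sub-metrics."""
    return mean(sub_scores.values())


# Combine direct and composite scores into one record for the attack.
attack_1 = dict(DIRECT_SCORES)
for metric, subs in SUB_SCORES.items():
    attack_1[metric] = composite(subs)

for metric, score in attack_1.items():
    print(f"{metric}: {score}/10")
```

Under this assumption the computed composites reproduce the values reported above for Complexity, Effort, Impact, and Repairability. How the eight metrics are combined into a single vulnerability score is not covered by this sketch.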

References

- Lomas, N. Norway Pulls Its Coronavirus Contacts-Tracing App after Privacy Watchdog’s Warning. TechCrunch 2020. Available online: https://techcrunch.com/2020/06/15/norway-pulls-its-coronavirus-contacts-tracing-app-after-privacy-watchdogs-warning/ (accessed on 10 June 2021).

- Government of Singapore. Blue Trace Protocol. Bluetrace.io 2020. Available online: https://bluetrace.io/ (accessed on 10 May 2021).

- Apple Inc. Exposure Notification Framework. Apple Dev. Doc. 2020. Available online: https://developer.apple.com/documentation/exposurenotification (accessed on 10 May 2021).

- Luccio, M. Using contact tracing and GPS to fight spread of COVID-19. GPS World, 3 June 2020.

- UK NHS. What the App Does. NHS COVID-19 App Support 2020. Available online: https://covid19.nhs.uk/what-the-app-does.html (accessed on 10 June 2021).

- Mozur, P.; Zhong, R.; Krolik, A. In Coronavirus Fight, China Gives Citizens a Color Code, With Red Flags. The New York Times, 1 March 2020.

- Johns Hopkins Coronavirus Resource Center. COVID-19 Map. 2020. Available online: https://coronavirus.jhu.edu/map.html (accessed on 10 March 2021).

- Sweeney, L. Simple Demographics Often Identify People Uniquely; Carnegie Mellon University: Pittsburgh, PA, USA, 2000.

- Tockar, A. Riding with the Stars: Passenger Privacy in the NYC Taxicab Dataset; Neustar Research: Sterling, VA, USA, 2014.

- Drakonakis, K.; Ilia, P.; Ioannidis, S.; Polakis, J. Please Forget Where I Was Last Summer: The Privacy Risks of Public Location (Meta)Data. CoRR 2019, abs/1901.00897.

- Díaz, C.; Seys, S.; Claessens, J.; Preneel, B. Towards Measuring Anonymity. In Privacy Enhancing Technologies; Dingledine, R., Syverson, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 54–68.

- Serjantov, A.; Danezis, G. Towards an Information Theoretic Metric for Anonymity. In Privacy Enhancing Technologies; Dingledine, R., Syverson, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 41–53.

- Alderson, E. Aarogya Setu: The Story of a Failure. Medium 2020. Available online: https://medium.com/@fs0c131y/aarogya-setu-the-story-of-a-failure-3a190a18e34 (accessed on 10 June 2021).

- Amnesty International. Major Security Flaw Uncovered in Qatar’s Contact Tracing App. Amnesty Int. 2020. Available online: https://diaspora.evforums.net/posts/ecc5380081860138a774005056264835 (accessed on 26 May 2020).

- Hamilton, I.A. Cybersecurity Experts Found Seven Flaws in the UK’s Contact-Tracing App. Bus. Insid. 2020. Available online: https://www.businessinsider.com/cybersecurity-experts-find-security-flaws-in-nhs-contact-tracing-app-2020-5 (accessed on 20 May 2020).

- Goodes, G. REPORT: Most Government-Sanctioned Covid-19 Tracing Apps Risk Exposing Users’ Data and Privacy. 2020. Available online: https://www.guardsquare.com/blog/report-proliferation-covid-19-contact-tracing-apps-exposes-significant-security-risks (accessed on 16 June 2020).

- Krehling, L.; Essex, A. Support Document for “A Security and Privacy Scoring System for Contact Tracing Applications”. Mendeley Data 2021, 1.

- Wikipedia. COVID-19 Apps. Available online: https://www.wikipedia.org/ (accessed on 13 March 2021).

- Rahman, M. Here Are the Countries Using Google and Apple’s COVID-19 Contact Tracing API. 2020. Available online: https://www.xda-developers.com/google-apple-covid-19-contact-tracing-exposure-notifications-api-app-list-countries/ (accessed on 13 March 2021).

- FIRST. CVSS v3.1 Specification Document. FIRST 2015. Available online: https://www.first.org/cvss/v3.1/specification-document (accessed on 10 April 2021).

- Kerschbaum, F.; Barker, K. Coronavirus Statement. Waterloo Cybersecur. Priv. Inst. 2020. Available online: https://uwaterloo.ca/cybersecurity-privacy-institute/news/coronavirus-statement (accessed on 10 April 2021).

- Office of the Privacy Commissioner of Canada. A Framework for the Government of Canada to Assess Privacy-Impactful Initiatives in Response to COVID-19; Office of the Privacy Commissioner of Canada: Ottawa, QC, Canada, 2020.

- Gillmor, D.K. ACLU White Paper—Principles for Technology-Assisted Contact-Tracing; American Civil Liberties Union: New York, NY, USA, 2020.

- Chaos Computer Club. 10 Requirements for the Evaluation of “Contact Tracing” Apps. 2020. Available online: https://www.ccc.de/en/updates/2020/contact-tracing-requirements (accessed on 10 April 2021).

- Ministry of Electronics & Information Technology. AarogyaSetu Bug Bounty Programme (for Android App). Bug Bounty Program 2020. Available online: https://static.mygov.in/rest/s3fs-public/mygov_159057669351307401.pdf (accessed on 10 June 2021).

- Health Canada. Canada’s Exposure Notification App. 2020. Available online: https://www.canada.ca/en/public-health/services/diseases/coronavirus-disease-covid-19/covid-alert.html (accessed on 10 June 2021).

- The Directorate of Health and The Department of Civil Protection and Emergency Management (Iceland). Privacy policy Rakning C-19—App. Upplýsingar um Covid-19 á Íslandi. 2020. Available online: https://www.covid.is/app/protection-of-personal-data (accessed on 1 June 2021).

- National Informatics Center of India. Aarogya Setu 2020. Available online: https://aarogyasetu.gov.in/technical-faqs/ (accessed on 10 June 2021).

- PRIVATICS Team—Inria and Fraunhofer AISEC. ROBust and privacy-presERving proximity Tracing protocol. 2020. Available online: https://github.com/ROBERT-proximity-tracing/documents (accessed on 1 May 2021).

- Aranja. Rakning-c19-App. GitHub 2020. Available online: https://github.com/aranja/rakning-c19-app (accessed on 10 June 2021).

- The Government of Canada. COVID Alert Privacy Notice (Google-Apple Exposure Notification). Canada.ca. 9 February 2021. Available online: https://www.canada.ca/en/public-health/services/diseases/coronavirus-disease-covid-19/covid-alert/privacy-policy.html (accessed on 1 May 2021).

- Office of the Privacy Commissioner of Canada. Privacy Review of the COVID Alert Exposure Notification Application; Office of the Privacy Commissioner of Canada: Ottawa, QC, Canada, 2020.

- Government of France. TousAntiCovid Application. Gouvernement.fr. 11 May 2021. Available online: https://www.gouvernement.fr/info-coronavirus/tousanticovid (accessed on 1 May 2021).

- Government of France. Help for Using TousAntiCovid. Tousanticovid.stonly. 5 March 2021. Available online: https://tousanticovid.stonly.com/kb/fr/donnees-personnelles-26615 (accessed on 1 May 2021).

- National Informatics Center of India. Aarogya Setu FAQ’s. Aarogya Setu 2020. Available online: https://aarogyasetu.gov.in/faq/ (accessed on 10 June 2021).

- Clarance, A. Aarogya Setu: Why India’s Covid-19 Contact Tracing App Is Controversial. BBC News, 15 May 2020.

- Government of India. Aarogya Setu. 2020. Available online: https://www.aarogyasetu.gov.in/ (accessed on 10 June 2021).

- Government of Singapore. OpenTrace. 2020. Available online: https://github.com/OpenTrace-community (accessed on 10 June 2021).

- Asher, S. TraceTogether: Singapore turns to wearable contact-tracing Covid tech. BBC News, 5 July 2020.

- Government of Singapore. TraceTogether Privacy Safeguards. TraceTogether, 1 April 2020.

- Google; Apple Inc. Exposure Notifications: Using Technology to Help Public Health Authorities Fight COVID-19. Covid-19 Information & Resources. Available online: https://www.google.com/search?q=privacyinformationgain&rlz=1C1CHBF_enCA960CA961&oq=privacyinformationgain&aqs=chrome..69i57j33i160.3632j1j7&sourceid=chrome&ie=UTF-8 (accessed on 10 June 2021).

- Sun, R.; Wang, W.; Xue, M.; Tyson, G.; Camtepe, S.; Ranasinghe, D. Vetting Security and Privacy of Global COVID-19 Contact Tracing Applications. CoRR 2021, arXiv:2006.10933v3.

- Sowmiya, B.; Abhijith, V.; Sudersan, S.; Sundar, R.S.J.; Thangavel, M.; Varalakshmi, P. A Survey on Security and Privacy Issues in Contact Tracing Application of Covid-19. SN Comput. Sci. 2021, 2, 136.

| Metric (Privacy Principle) | Met | Not Met | Partially Met |

|---|---|---|---|

| Independent Expert Review | Reviewed prior to release | No formal review process or public documentation | No formal review process but documentation made public |

| Simple Design | Protocol or design is public | No documentation of design | Some design information |

| Minimal Functionality | Only contact tracing | Function outside of contact tracing | Related functions require no extra data |

| Data Minimization | No personal data collected | Detailed health, personal, GPS data | Minimal extra data not for identifying purposes |

| Trusted Data Governance | Only trusted public entity has access, data stored in country | No stated ownership or outside entity can access | Ownership known, unknown if outside entities have access |

| Cyber Security | Encryption best practices, audits | Unknown or not best practice | Statements indicating security is used |

| Minimum Data Retention | WHO 14 day infectious period | Unknown or over years | Longer than infectious period, less than a year |

| Protection of Derived Data and Meta-Data | Data never created or deleted | Unknown or given to third party | Some data stated to be deleted |

| Proper Disclosure and Consent | Voluntary use, data release. App can be paused or deleted, available privacy policy | Mandatory use, data release. No privacy policy | Privacy policy unclear or missing expected information |

| Provision to sunset | Clearly stated when and how to sunset | Unknown or implied to never sunset | Stated but without definite time |

| Metric | Score 0 | Score 1 | Score 4 | Score 7 | Score 10 |

|---|---|---|---|---|---|

| Access | Prevented at any privilege level | High level of access required, need root access | Medium level of access required, need higher access than regular user but not full root access | Some level of access required, need access beyond the user interfaces | Lowest level of access required, regular users could do this without higher privilege |

| Knowledge | Full knowledge of the system does not give someone the tools to attack it | Expert level of knowledge required, expert in the field with many years of experience in a specific subject | Advanced level of knowledge required, individual with career in the field | Intermediate level of knowledge required, graduate level student | Novice or fundamental level of knowledge, undergraduate level student |

| Complexity (Technology) | Not possible with modern computing power | Highly complex and resource intensive, this requires a large amount of computing power | Medium complexity, requires high end computers | Low complexity, requires an average consumer computer and some tools need to be created | No complexity, this can be performed with minimal computing power, directly on a phone, with a single-board computer, etc. |

| Complexity (Build) | Not reasonably possible for any group to design | Large group actor would be required | Multiple people could create with a month of time invested | Single person could create with a month of time invested | Single person could create in less than a month |

| Effort (Planning) | Extremely high effort, many components with very specific timing that cannot be automated | High effort, many components that all need to work perfectly | Medium level of effort, several components required | Low effort, a couple of components required | Very low effort, minimal components or steps |

| Effort (Human) | Would require a large percentage of the user population to work together | Many people required to help (wittingly or unwittingly) | Multiple people required to help (wittingly or unwittingly) | One or two people working together | One person can perform alone |

| Scope | Does not affect anything in the system | Affects a single person; attacker can gain information for one person that they may know | Affects a small group; attacker could target everyone they know | Affects a large group; attacker could target an entire section of the user base or a demographic | Could affect everyone in the system |

| Impact (Data) | Attacker gains no access to alter or view the system | Attacker gains access to de-identified data that are not practically re-identifiable or introduces false data on the device | Attacker gains access to de-identified data that could with effort be re-identified or introduces false data on the server that will be deleted | Attacker gains access to data that with minimal effort can be re-identified or introduces false data that are persistent in the system | Attacker gains access to personal information and identities or introduces false data that are persistent and indistinguishable from real data |

| Impact (Trust) | Attack has no effect on the trust in the system | Temporarily lowers trust in the system | Lowers trust in the system | Damages trust in the system | Destroys trust in the system |

| Detection | Is actively monitored for and prevented | Is monitored for and would be noticed on a daily report | Could take up to a week to recognize | Could take a month or more before it is recognized that the attack was implemented | It is possible and likely that the attack would not be noticed until the attackers revealed their actions publicly (releasing a dataset, making public claims) |

| Repairability (System) | The attacker did nothing to the system that requires action | Requires minor code fixes to prevent future attacks | Requires alterations to the system and/or removal of the attacker’s code | The system requires significant alteration/repair | The system requires significant code updates and/or changes in the underlying protocol |

| Repairability (User) | The attacker did nothing to individuals that requires action | The users are affected but no action is required | Some users will have to perform a one-time action to mitigate the effect | The attacker gained access to user information that cannot be altered easily | The repercussions from this attack will affect people on a continual basis and are difficult to quantify |

| Country | App | Ind.Review | Design | Min.Func. | Data Min. | Data Gov. | Security | Retention | Meta-data | Disclosure | Sunset |

|---|---|---|---|---|---|---|---|---|---|---|---|

| CAN | Covid Alert | ● | ● | ● | ● | ◑ | ● | ● | ◑ | ● | ◑ |

| FRA | TousAntiCovid | ◑ | ● | ● | ❍ | ❍ | ◑ | ● | ◑ | ◑ | ◑ |

| ISL | Rakning C-19 | ◑ | ● | ● | ❍ | ● | ● | ● | ❍ | ● | ❍ |

| IND | Aarogya Setu | ● | ● | ❍ | ❍ | ❍ | ● | ◑ | ❍ | ❍ | ◑ |

| SGP | TraceTogether | ◑ | ● | ● | ❍ | ◑ | ◑ | ◑ | ❍ | ● | ◑ |
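The symbol rows above can be tallied mechanically. The Python sketch below assumes that ● means met, ◑ partially met, and ❍ not met; this legend is not given in the table itself, but it is consistent with the per-app counts reported in the full privacy review. The names `LEGEND`, `REVIEWS`, and `tally` are illustrative.

```python
from collections import Counter

# Assumed legend (not stated in the table): met, partially met, not met.
LEGEND = {"●": "met", "◑": "partial", "❍": "not_met"}

# Rows copied from the table above, in column order
# (Ind. Review, Design, Min. Func., Data Min., Data Gov.,
#  Security, Retention, Meta-data, Disclosure, Sunset).
REVIEWS = {
    "Covid Alert": "● ● ● ● ◑ ● ● ◑ ● ◑",
    "TousAntiCovid": "◑ ● ● ❍ ❍ ◑ ● ◑ ◑ ◑",
    "Rakning C-19": "◑ ● ● ❍ ● ● ● ❍ ● ❍",
    "Aarogya Setu": "● ● ❍ ❍ ❍ ● ◑ ❍ ❍ ◑",
    "TraceTogether": "◑ ● ● ❍ ◑ ◑ ◑ ❍ ● ◑",
}


def tally(row: str) -> dict[str, int]:
    """Count how many of the ten principles fall into each rating."""
    counts = Counter(LEGEND[symbol] for symbol in row.split())
    return {label: counts[label] for label in LEGEND.values()}


for app, row in REVIEWS.items():
    print(app, tally(row))
```

For Covid Alert, for example, this gives seven principles met, three partially met, and none not met.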

| Country | App Name | Requirements Met | Requirements Partially Met | Requirements Not Met | Score | Rank |

|---|---|---|---|---|---|---|

| Australia | COVIDSafe | 4 | 6 | 0 | 7 | Medium |

| Austria | Stopp Corona | 3 | 4 | 3 | 5 | Medium |

| Azerbaijan | e-Tabib | 0 | 1 | 9 | 0.5 | Low |

| Bahrain | BeAware Bahrain | 0 | 0 | 10 | 0 | Low |

| Bangladesh | Corona Tracer BD | 1 | 4 | 5 | 3 | Low |

| Canada | Covid Alert | 7 | 3 | 0 | 8.5 | High |

| China | Health Code | 2 | 0 | 8 | 2 | Low |

| Colombia | CoronApp | 0 | 5 | 5 | 2.5 | Low |

| Czech Republic | eRouška (eFacemask) | 4 | 4 | 2 | 6 | Medium |

| Denmark | Smittestop | 4 | 3 | 3 | 5.5 | Medium |

| Ecuador | ASI (SO) | 1 | 5 | 4 | 3.5 | Low |

| Estonia | Hoia | 6 | 1 | 3 | 6.5 | Medium |

| Ethiopia | Debo | 0 | 1 | 9 | 0.5 | Low |

| France | TousAntiCovid | 3 | 5 | 2 | 5.5 | Medium |

| Fiji | careFIJI | 4 | 2 | 4 | 5 | Medium |

| Finland | Koronavilkku | 3 | 4 | 3 | 5 | Medium |

| Germany | Corona-Warn-App | 7 | 2 | 1 | 8 | High |

| Ghana | GH Covid-19 Tracker App | 0 | 1 | 9 | 0.5 | Low |

| Gibraltar | Beat Covid Gibraltar | 2 | 0 | 8 | 2 | Low |

| Guatemala | Alerta Guate | 0 | 0 | 10 | 0 | Low |

| Hungary | VirusRadar | 2 | 2 | 6 | 3 | Low |

| Iceland | Rakning C-19 | 6 | 1 | 3 | 6.5 | Medium |

| India | Aarogya Setu | 3 | 2 | 5 | 4 | Low |

| Ireland | COVID Tracker | 5 | 4 | 1 | 7 | Medium |

| Israel | HaMagen | 3 | 3 | 4 | 4.5 | Low |

| Italy | Immuni | 5 | 3 | 2 | 6.5 | Medium |

| Japan | COVID-19 Contact Confirming Application | 6 | 2 | 2 | 7 | Medium |

| Jordan | AMAN APP—Jordan | 3 | 2 | 5 | 4 | Low |

| Kazakhstan | eGovbizbirgemiz mobile app | 4 | 2 | 4 | 5 | Medium |

| Kuwait | Shlonik | 0 | 1 | 9 | 0.5 | Low |

| Latvia | Apturi Covid | 4 | 2 | 4 | 5 | Medium |

| Malaysia | MyTrace | 1 | 5 | 4 | 3.5 | Low |

| Netherlands | CoronaMelder | 5 | 3 | 2 | 6.5 | Medium |

| New Zealand | NZ COVID Tracer | 2 | 5 | 3 | 4.5 | Low |

| North Macedonia | Stop Korona! | 3 | 1 | 6 | 3.5 | Low |

| Northern Ireland | StopCOVID NI | 5 | 4 | 1 | 7 | Medium |

| Norway | Smittestopp | 1 | 2 | 7 | 2 | Low |

| Poland | ProteGO Safe | 3 | 4 | 3 | 5 | Medium |

| Portugal | STAYAWAY COVID | 4 | 4 | 2 | 6 | Medium |

| Qatar | Ehteraz App | 0 | 0 | 10 | 0 | Low |

| Russia (Moscow) | Social Monitoring Service | 0 | 2 | 8 | 1 | Low |

| Russia | Contact Tracer | 1 | 0 | 9 | 1 | Low |

| Saudi Arabia | Tabaud | 3 | 3 | 4 | 4.5 | Low |

| Scotland | Protect Scotland | 5 | 3 | 2 | 6.5 | Medium |

| Singapore | TraceTogether | 3 | 5 | 2 | 5.5 | Medium |

| Slovenia | #OstaniZdrav | 6 | 1 | 3 | 6.5 | Medium |

| South Africa | COVID Alert South Africa | 4 | 2 | 4 | 5 | Medium |

| Spain | Radar COVID | 6 | 2 | 2 | 7 | Medium |

| Switzerland | SwissCovid App | 6 | 2 | 2 | 7 | Medium |

| United Kingdom | NHS COVID-19 | 3 | 3 | 4 | 4.5 | Low |

| United States | CoEpi | 1 | 3 | 6 | 2.5 | Low |

| United States | CovidSafe/CommonCircle Exposures | 3 | 3 | 4 | 4.5 | Low |

| United States (Ariz.) | Covid Watch | 3 | 3 | 4 | 4.5 | Low |

| United States (Calif.) | California COVID Notify | 5 | 0 | 5 | 5 | Medium |

| United States (N.Dak. and Wyo.) | Care19 Alert | 3 | 2 | 5 | 4 | Low |

| Ranking | Privacy Score |

|---|---|

| High | 8–10 |

| Medium | 5–7.5 |

| Low | 0–4.5 |
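The rows of the privacy review table are consistent with a score of one point per principle met and half a point per principle partially met. The Python sketch below assumes that rule (it is inferred from the table, not quoted from the methodology) and applies the ranking bands above. `privacy_score` and `privacy_rank` are hypothetical helper names.

```python
def privacy_score(met: int, partial: int, not_met: int) -> float:
    """Assumed scoring: 1 point per principle met, 0.5 per partially met."""
    if met + partial + not_met != 10:
        raise ValueError("expected ratings for all ten privacy principles")
    return met + 0.5 * partial


def privacy_rank(score: float) -> str:
    """Ranking bands from the table above: High 8-10, Medium 5-7.5, Low 0-4.5."""
    if not 0 <= score <= 10:
        raise ValueError("privacy score must lie between 0 and 10")
    if score >= 8:
        return "High"
    if score >= 5:
        return "Medium"
    return "Low"


# Example: Covid Alert meets 7 principles, partially meets 3, and fails none.
score = privacy_score(met=7, partial=3, not_met=0)
print(score, privacy_rank(score))
```

Because scores under this rule move in steps of 0.5, the three bands leave no gaps: 7.5 is the highest Medium score and 8 the lowest High score.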

| Ranking | Highest Severity of a Vulnerability |

|---|---|

| High | 0–3.9 |

| Medium | 4–7.9 |

| Low | 8–10 |

| App | Privacy Score | Vulnerability Score |

|---|---|---|

| Covid Alert | 8.5 | 7.313 |

| TousAntiCovid | 5.5 | 7.063 |

| Rakning C-19 | 6.5 | 0 |

| Aarogya Setu | 4 | 8.375 |

| TraceTogether | 5.5 | 7.375 |
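The vulnerability ranking runs in the opposite direction from the privacy ranking: a lower severity score yields a higher ranking. The Python sketch below applies the severity bands (High 0–3.9, Medium 4–7.9, Low 8–10) to the five vulnerability scores above. `vulnerability_rank` is a hypothetical helper, and treating the bands as the intervals [0, 4), [4, 8), and [8, 10] is an assumption made so that every score falls into exactly one band.

```python
# Vulnerability scores copied from the table above.
VULNERABILITY_SCORES = {
    "Covid Alert": 7.313,
    "TousAntiCovid": 7.063,
    "Rakning C-19": 0.0,
    "Aarogya Setu": 8.375,
    "TraceTogether": 7.375,
}


def vulnerability_rank(severity: float) -> str:
    """Map a severity score to a ranking; lower severity ranks higher."""
    if not 0 <= severity <= 10:
        raise ValueError("severity must lie between 0 and 10")
    if severity < 4:
        return "High"
    if severity < 8:
        return "Medium"
    return "Low"


rankings = {app: vulnerability_rank(s) for app, s in VULNERABILITY_SCORES.items()}
for app, rank in rankings.items():
    print(f"{app}: {rank}")
```

The resulting rankings agree with those listed in the vulnerability review: Medium for Covid Alert, TousAntiCovid, and TraceTogether, High for Rakning C-19, and Low for Aarogya Setu.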


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Krehling, L.; Essex, A. A Security and Privacy Scoring System for Contact Tracing Apps. J. Cybersecur. Priv. 2021, 1, 597-614. https://doi.org/10.3390/jcp1040030
