Abstract

Quantitative Information Flow (QIF) and Differential Privacy (DP) are both concerned with the protection of sensitive information, but they are rather different approaches. In particular, QIF considers the expected probability of a successful attack, while DP (in both its standard and local versions) is a max-case measure, in the sense that it is compromised by the existence of a possible attack, regardless of its probability. Comparing systems is a fundamental task in these areas: one wishes to guarantee that replacing a system A by a system B is a safe operation that is the privacy of B is no worse than that of A. In QIF, a refinement order provides strong such guarantees, while, in DP, mechanisms are typically compared w.r.t. the privacy parameter in their definition. In this paper, we explore a variety of refinement orders, inspired by the one of QIF, providing precise guarantees for max-case leakage. We study simple structural ways of characterising them, the relation between them, efficient methods for verifying them and their lattice properties. Moreover, we apply these orders in the task of comparing DP mechanisms, raising the question of whether the order based on provides strong privacy guarantees. We show that, while it is often the case for mechanisms of the same “family” (geometric, randomised response, etc.), it rarely holds across different families.

1. Introduction

The enormous growth in the use of internet-connected devices and the big-data revolution have created serious privacy concerns, and motivated an intensive area of research aimed at devising methods to protect the users’ sensitive information. During the last decade, two main frameworks have emerged in this area: Differential Privacy (DP) and Quantitative Information Flow (QIF).

Differential privacy (DP) [1] was originally developed in the area of statistical databases, and it aims at protecting the individuals’ data while allowing the release of aggregate information through queries. This is obtained by obfuscating the result of the query via the addition of controlled noise. Naturally, we need to assume that the curator, namely the entity collecting and storing the data and handling the queries, is honest and capable of protecting the data from security breaches. Since this assumption cannot always be guaranteed, a variant has been proposed: local differential privacy (LDP) [2], where the data are obfuscated individually before they are collected.

Both DP and LPD are subsumed by -privacy [3], and, in this paper, we will use the latter as a unifying framework. The definition of -privacy assumes an underlying metric structure on the data domain . An obfuscation mechanism K for is a probabilistic mapping from to some output domain , namely a function from to probabilistic distributions over . We will use the notation to represent the probability that K on input x gives output y. The mechanism K is -private, where is a parameter representing the privacy level, if

Standard DP is obtained from this definition by assuming to be a set of all datasets and the Hamming distance between two datasets, seen as vectors or records (i.e., the number of positions in which the two datasets differ). Note that the more common definition of differential privacy assumes that are adjacent, i.e., their Hamming distance is 1, and requires . It is easy to prove that the two definitions are equivalent. As for LDP, it is obtained by considering the so-called discrete metric which assigns distance 0 to identical elements, and 1 otherwise.

The other framework for the protection of sensitive information, quantitative information flow (QIF), focuses on the potentialities and the goals of the attacker, and the research on this area has developed rigorous foundations based on information theory [4,5]. The idea is that a system processing some sensitive data from a random variable X and releasing some observable data as a random variable Y can be modelled as an information-theoretic channel with input X and output Y. The leakage is then measured in terms of correlation between X and Y. There are, however, many different ways to define such correlation, depending on the notion of adversary. In order to provide a unifying approach, Ref. [6] has proposed the theory of g-leakage, in which an adversary is characterized by a functional parameter g representing its gain for each possible outcomes of the attack.

One issue that arises in both frameworks is how to compare systems from the point of view of their privacy guarantees. It is important to have a rigorous and effective way to establish whether a mechanism is better or worse than another one, in order to guide the design and the implementation of mechanisms for information protection. This is not always an obvious task. To illustrate the point, consider the following examples.

Example 1.

Let and be the programs illustrated in Table 1, whereHis a “high” (i.e., secret) input andLis a “low” (i.e., public) output. We assume thatHis a uniformly distributed 32-bit integer with range . All these programs leak information aboutHviaL, in different ways: revealsHwhenever it is a multiple of 8 (H mod 8represents the integer division ofHby 8), and reveals nothing otherwise. does the same thing wheneverHis a multiple of 4. reveals the last 8 bits ofH(note thatH represents the bitwise conjunction betweenHand a string of 24 bits “0” followed by 8 bits “1”). Analogously, reveals the last 4 bits ofH. Now, it is clear that leaks more than , and that leaks more than , but how to compare, for instance, , and ? It is debatable which one is worse because their behavior is very different: reveals nothing in most cases, but when it does reveal something, it reveals everything. , on the other hand, always reveals part of the secret. Clearly, we cannot decide which situation is worse, unless we have some more information about the goals and the capabilities of the attacker. For instance, if the adversary has only one attempt at his disposal (and no extra information), then the program is better because even after the output ofLthere are still 24 bits ofHthat are unknown. On the other hand, if the adversary can repeat the attacks on program similar to , then eventually it will uncover the secret entirely all the times.

Table 1.

Programs that take in input a secret H and leak information about H via the output L.

Example 2.

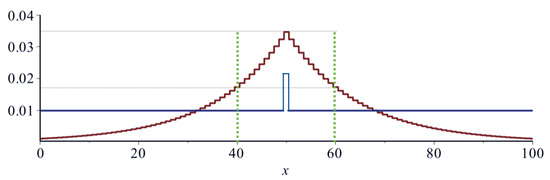

Consider the domain of the integer numbers between 0 and 100, and consider a geometric mechanism (cf. Definition 9) on this domain, with . Then, consider a randomized response mechanism (cf. Definition 13) still on the same domain and . The two mechanisms are illustrated in Figure 1. They both satisfy -privacy, but for different : in the first case is the standard distance between numbers, while in the second case it is the discrete metric. Clearly, it does not make sense to compare these mechanisms on the basis of their respective privacy parameters ε because they represent different privacy properties, and it is not obvious how to compare them in general: The geometric mechanism tends to make the true value indistinguishable from his immediate neighbors, but it separates it from the values far away. The randomized response introduces the same level of confusion between the true value and any other value of the domain. Thus, which mechanism is more private depends on the kind of attack we want to mitigate: if the attacker is trying to guess an approximation of the value, then the randomized response is better. If the attacker is only interested in identifying the true value among the immediate neighbors, then the geometric is better. Indeed, note that in the subdomain of the numbers between 40 and 60 the geometric mechanism also satisfies -privacy with being the discrete metric and , and in any subdomain smaller than that it satisfies the discrete metric -privacy with .

Figure 1.

Comparison between the geometric (red) and the randomized response (blue) mechanisms. The area between 40 and 60, delimited by the green lines, represents the sub-domain where the geometric mechanism satisfies also the discrete metric -privacy with .

In this respect, the QIF approach has led to an elegant theory of refinement (pre)order (In this paper, we call and the other refinement relations “orders”, although, strictly speaking, they are preorders.) , which provides strong guarantees: means that B is safer than A in all circumstances, in the sense that the expected gain of an attack on B is less than on A, for whatever kind of gain the attacker may be seeking. This means that we can always substitute the component A by B without compromising the security of the system. An appealing aspect of this particular refinement order is that it is characterized by a precise structural relation between the stochastic channels associated with A and B [6,7], which makes it easy to reason about, and relatively efficient to verify. It is important to remark that this order is based on an average notion of adversarial gain (vulnerability), defined by mediating over all possible observations and their probabilities. We call this perspective average-case.

At the other end of the spectrum, DP, LDP and -privacy are max-case measures. In fact, by applying the Bayes theorem to (1), we obtain:

where, for , is the probability of and is the posterior probability of given y. We can interpret and as knowledge about : they represent how much more likely is with respect to , before (prior) and after (posterior) observing y, respectively. Thus, the property expresses a bound on how much the adversary can learn from each individual outcome of the mechanism (The property (2) is also the semantic interpretation of the guarantees of the Pufferfish framework (cf. [8], Section 3.1). The ratio is known as odds ratio).

In the literature of DP, LDP and -privacy, mechanisms are usually compared on the basis of their -value (In DP and LDP is a parameter that usually appears explicitly in the definition of the mechanism. In d-privacy, it is an implicit scaling factor.), which controls a bound on the log-likelihood ratio of an observation y given two “secrets” and : smaller means more privacy. In DP and LDP, the bound is itself, while in -privacy it is We remark that the relation induced by in -privacy is fragile, in the sense that the definition of -privacy assumes an underlying metric structure on the data, and whether a mechanism B is “better” than A depends in general on the metric considered.

Average-case and max-case are different principles, suitable for different scenarios: the former represents the point of view of an organization, for instance an insurance company providing coverage for risks related to credit cards, which for the cost–benefit analysis is interested in reasoning in terms of expectation (expected cost of an attack). The max-case represents the point of view of an individual, who is interested in limiting the cost of any attack. As such, the max-case seems particularly suitable for the domain of privacy.

In this paper, we combine the max-case perspective with the robustness of the QIF approach, and we introduce two refinement orders:

- , based on the max-case leakage introduced in [9]. This order takes into account all possible privacy breaches caused by any observable (like in the DP world), but it quantifies over all possible quasi-convex vulnerability functions (in the style of the QIF world).

- , based on -privacy (like in the DP world), but quantified over all metrics .

To underline the importance of a robust order, let us consider the case of the oblivious mechanisms for differential privacy: These mechanisms are of the form , where is a query, namely a function from datasets in to some answer domain , and H is a probabilistic mechanism implementing the noise. The idea is that the system first computes the result of the query (true answer), and then it applies H to y to obtain a reported answer z. In general, if we want K to be -DP, we need to tune the mechanism H in order to take into account the sensitivity of f, which is the maximum distance between the results of f on two adjacent databases, and as such it depends on the metric on . However, if we know that is -DP, and that for some other mechanism , then we can safely substitute H by as it is because one of our results (cf. Theorem A5) guarantees that is also -DP. In other words, implies that we can substitute H by in an oblivious mechanism for whatever query f and whatever metric on , without the need to know the sensitivity of f and without the need to do any tuning of . Thanks to Theorems 3 and 4, we know that this is the case also for and . We illustrate this with the following example.

Example 3.

Consider datasets of records containing the age of people, expressed as natural numbers from 0 to 100, and assume that each dataset in contains at least 100 records. Consider two queries, and , which give the rounded average age and the minimum age of the people in x, respectively. Finally, consider the truncated geometric mechanism (cf. Definition 10), and the randomized response mechanism (cf. Definition 13). It is easy to see that is ε-DP, and it is possible to prove that (cf. Theorem 14). We can then conclude that is ε-DP as well, and that in general it is safe to replace by for whatever query. On the other hand, , so we cannot expect that it is safe to replace by in any context. In fact, is ε-DP, but isnotε-DP, despite the fact that both mechanisms are constructed using the same privacy parameter ε. Hence, we can conclude that a refinement relation based only on the comparison of the ε parameters would not be robust, at least not for a direct replacement in an arbitrary context. Note that is -DP. In order to make it ε-DP, we should divide the parameter ε by the sensitivity of g (with respect to the ordinary distance on natural numbers), which is 100, i.e., use . For , this is not necessary because it is defined using the discrete metric on , and the sensitivity of g with respect to this metric is 1.

The robust orders allow us to take into account different kinds of adversaries. The following example shows what the idea is.

Example 4.

Consider the following three LDP mechanisms, represented by their stochastic matrices (where each element is the conditional probability of the outcome of the mechanism, given the secret value). The secrets are three possible economic situations of an individual, p, a and r, standing for “poor”, “average” and “rich”, respectively. The observable outcomes are r and , standing for “rich” and “not rich”.

Let us assume that the prior distribution π on the secrets is uniform. We note that A is -LDP while B is -LDP. Hence, if we only look at the value of ε, we would think that B is better than A from the privacy point of view. However, there are attackers that gain more from B than from A (which means that, with respect to those attackers, the privacy of B is worse). For instance, this is the case when the attacker is only interested in discovering whether the person is rich or not. In fact, if we consider a gain 1 when the attacker guesses the right class (r versus (either p or a)) and 0 otherwise, we have that the highest possible gain in A is , while in B is , which is higher than . This is consistent with our orders: it is possible to show that none of the three orders hold between A and B, and that therefore we should not expect B to be better (for privacy) than A with respect to all possible adversaries.

On the other hand, the mechanism C is also -LDP, and in this case we have that the relation holds, implying that we can safely replace A by C. We can also prove that the reverse does not hold, which means that C is strictly better than A.

A fundamental issue is how to prove that these robust orders hold: Since and involve universal quantifications, it is important to devise finitary methods to verify them. To this purpose, we will study their characterizations as structural relations between stochastic matrices (representing the mechanisms to be compared), along the lines of what was done for .

We will also study the relation between the three orders (the two above and ), and their algebraic properties. Finally, we will analyze various mechanisms for DP, LDP, and -privacy to see in which cases the order induced by is consistent with the three orders above.

1.1. Contribution

The main contributions of this paper are the following:

- We introduce two refinement orders for the max case, and , that are robust with respect to a large class of adversaries.

- We give structural characterizations of both and in terms of relations on the stochastic matrices of the mechanisms under comparison. These relations help the intuition and open the way to verification.

- We study efficient methods to verify the structural relations above. Furthermore, these methods are such that, when the verification fails, they produce counterexamples. In this way, it is possible to pin down what the problem is and try to correct it.

- We show that .

- We apply the three orders (, , and ) to the comparison of some well-known families of -private mechanisms: geometric, exponential and randomised response. We show that, in general, (and thus all the refinement orders between A and B) holds within the same family whenever the of B is smaller than that of A.

- We show that, if A and B are mechanisms from different families, then, even if the of B is smaller than that of A, the relations and do not hold, and in most cases does not hold either. We conclude that a comparison based only on the value of the ’s is not robust across different families, at least not for the purposes illustrated above.

- We study lattice-properties of these orders. In contrast to , which was shown to not be a lattice, we prove that suprema and infima exist for and , and that therefore these orders form lattices.

1.2. Related Work

We are not aware of many studies on refinement relations for QIF. Yasuoka and Terauchi [10] and Malacaria [11] have explored strong orders on deterministic mechanisms, focusing on the fact that such mechanisms induce partitions on the space of secrets. They showed that the orders produced by min-entropy leakage [5] and Shannon leakage [12,13] are the same and, moreover, they coincide with the partition refinement order in the Lattice of Information [14]. This order was extended to the probabilistic case in [6], resulting in the relation mentioned in Section 2. The same paper [6] proposed the theory of g-leakage and introduced the corresponding order . Furthermore, [6] proved that and conjectured that also the reverse should hold. This conjecture was then proved valid in [7]. The max-case leakage, on which the relation is based, was introduced in [9], but and its properties were not investigated. Finally, is a novel notion introduced in this paper.

In the field of differential privacy, on the other hand, there have been various works aimed at trying understand the operational meaning of the privacy parameter and at providing guidelines for the choice of its values. We mention, for example [15,16], which consider the value of from an economical point of view, in terms of cost. We are not aware, however, of studies aimed at establishing orders between the level of privacy of different mechanisms, except the one based on the comparison of the ’s.

The relation between QIF and DP, LDP, and -privacy is based on the so-called semantic interpretation of the privacy notions that regard these properties as expressing a bounds on the increase of knowledge (from prior to posterior) due to the answer reported by the mechanism. For -privacy, the semantic interpretation is expressed by (2). To the best of our knowledge, this interpretation was first pointed out (for the location privacy instance) in [17]. The seminal paper on -privacy, [3], also proposed a semantic interpretation, with a rather different flavor, although formally equivalent. As for DP, as explained in the Introduction, (2) instantiated to databases and Hamming distance corresponds to the odds ratio on which the semantics interpretation is based provided in [8]. Before that, another version of semantic interpretation was presented in [1] and proved equivalent to a form of DP called -indistinguishability. Essentially, in this version, an adversary that queries the database, and knows all the database except one record, cannot infer too much about this record from the answer to the query reported by the mechanism. Later on, an analogous version of semantic interpretation was reformulated in [18] and proved equivalent to DP. A different interpretation of DP, called semantic privacy, was proposed by [19]. This interpretation is based on a comparison between two posteriors (rather between the posterior and the prior), and the authors show that, within certain limits, it is equivalent to DP.

A short version of this paper, containing only some of the proofs, appeared in [20].

1.3. Plan of the Paper

In the next three sections, Section 2, Section 3 and Section 4, we define the order refinements , and , respectively, and we study their properties. In Section 5.1, we investigate methods to verify them. In Section 6, we consider various mechanisms for DP and its variants, and we investigate the relation between the parameter and the orders introduced in this paper. In Section 7, we show that and form a lattice. Finally, Section 8 provides conclusions.

Note: In order to make the reading of the paper more fluid, we have moved all the proofs to the appendix at the end of the paper.

2. Average-Case Refinement

We recall here some basic concepts from the literature. Table 2 lists the symbols used for the main concepts through the paper.

Table 2.

Definitions and symbols used in this paper.

2.1. Vulnerability, Channels, and Leakage

Quantitative Information Flow studies the problem of quantifying the information leakage of a system (e.g., a program, or an anonymity protocol). A common model in this area is to consider that the user has a secret x from a finite set of possible secrets , about which the adversary has some probabilistic knowledge ( denoting the set of probability distributions over ). A function is then employed to measure the vulnerability of our system: quantifies the adversary’s success in achieving some desired goal, when his knowledge about the secret is .

Various such functions can be defined (e.g., employing well-known notions of entropy), but it quickly becomes apparent that no single vulnerability function is meaningful for all systems. The family of g-vulnerabilities [6] tries to address this issue by parametrizing V in an operational scenario: first, the adversary is assumed to possess a set of actions ; second, a gain function models the adversary’s gain when choosing action w and the real secret is x. g-vulnerability can be then defined as the expected gain of an optimal guess: . Different adversaries can be modelled by proper choices of and g. We denote by the set of all gain functions.

A system is then modelled as a channel: a probabilistic mapping from the (finite) set of secrets to a finite set of observations , described by a stochastic matrix C, where is the probability that secret x produces the observation y. When the adversary observes y, he can transform his initial knowledge into a posterior knowledge . Since each observation y is produced with some probability , it is sometimes conceptually useful to consider that the result of running a channel C, on the initial knowledge , is a “hyper” distribution : a probability distribution on posteriors , each having probability .

It is then natural to define the (average-case) posterior vulnerability of the system by applying V to each posterior , then averaging by its probability of being produced:

when defining vulnerability in this way, it can be shown [9] that V has to be convex on π; otherwise, fundamental properties (such as the data processing inequality) are violated. Any continuous and convex function V can be written as for a properly chosen g, so, when studying average-case leakage, we can safely restrict to using g-vulnerability.

Leakage can be finally defined by comparing the prior and posterior vulnerabilities, e.g., as . (Comparing vulnerabilities “multiplicatively” is also possible but is orthogonal to the goals of this paper.)

2.2. Refinement

A fundamental question arises in the study of leakage: can we guarantee that a system B is no less safe than a system A? Having a family of vulnerability functions, we can naturally define a strong order on channels by explicitly requiring that B leaks (Note that comparing the leakage of is equivalent to comparing their posterior vulnerability, so we choose the latter for simplicity.) no more than A, for all priors and all gain functions : (Note also that quantifying over is equivalent to quantifying over all continuous and convex vulnerabilities.)

Although is intuitive and provides clear leakage guarantees, the explicit quantification over vulnerability functions makes it hard to reason about and verify. Thankfully, this order can be characterized in a “structural” way that is as a direct property of the channel matrix. We first define the refinement order on channels by requiring that B can be obtained by post-processing A by some other channel R that is:

A fundamental result [6,7] states that and coincide.

We read as “A is refined by B”, or “B is as safe as A”. When holds, we have a strong privacy guarantee: we can safely replace A by B without decreasing the privacy of the system, independently from the adversary’s goals and his knowledge. However, refinement can be also useful in case ; namely, we can conclude that some adversary must exist, modelled by a gain function g, and some initial knowledge , such that the adversary actually prefers to interact with A rather than interacting with B. (Whether this adversary is of practical interest or not is a different issue, but we know that one exists.) Moreover, we can actually construct such a “counter-example” gain function; this is discussed in Section 5.

3. Max-Case Refinement

Although provides a strong and precise way of comparing systems, one could argue that average-case vulnerability might underestimate the threat of a system. More precisely, imagine that there is a certain observation y such that the corresponding posterior is highly vulnerable (e.g., the adversary can completely infer the real secret), but y happens with very small probability . In this case, the average-case posterior vulnerability can be relatively small, although is large for that particular y.

If such a scenario is considered problematic, we can naturally quantify leakage using a max-case (called “worse”-case in some contexts, although the latter is more ambiguous, “worse” can refer to a variety of factors.) variant of posterior vulnerability, where all observations are treated equally regardless of their probability of being produced:

Under this definition, it can be shown [9] that V has to be quasi-convex on (instead of convex), in order to satisfy fundamental properties (such as the data processing inequality). Hence, in the max-case, we no longer restrict to g-vulnerabilities (which are always convex), but we can use any vulnerability , where denotes the set of all continuous quasi-convex functions .

Inspired by , we can now define a corresponding max-case leakage order.

Definition 1.

The max-case leakage order is defined as

Similarly to its average-case variant, provides clear privacy guarantees by explicitly requiring that B leaks no more than A for all adversaries (modelled as a vulnerability V). However, this explicit quantification makes the order hard to reason about and verify. We would thus like to characterize by a refinement order that depends only on the structure of the two channels.

Given a channel C from to , we denote by the channel obtained by normalizing C’s columns (if a column consists of only zeroes, it is simply removed.) and then transposing:

Note that the row y of can be seen as the posterior distribution obtained by C under the uniform prior. Note also that is non-negative and its rows sum up to 1, so it is a valid channel from to . The average-case refinement order required that B can be obtained by post-processing A. We define the max-case refinement order by requiring that can be obtained by pre-processing .

Definition 2.

The max-case refinement order is defined as iff for some channel R.

Our goal now is to show that and are different characterizations of the same order. To do so, we start by giving a “semantic” characterization of that is, expressing it, not in terms of the channel matrices A and B, but in terms of the posterior distributions that they produce. Thinking of as a (“hyper”) distribution on the posteriors produced by and C, its support supp is the set of all posteriors produced with non-zero probability. We also denote by ch S the convex hull of S.

Theorem 1.

Let. If, then the posteriors of B (under) are convex-combinations of those of A, that is,

Moreover, if (A1) holds andis full support, then.

Note that, if (A1) holds for any full-support prior, then it must hold for all priors.

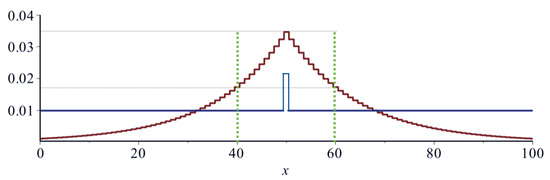

Theorem A1 has a nice geometric intuition (cf. Figure 2) that we are going to illustrate in the following example.

Figure 2.

The simplex and the convex hulls of the posterior distributions in Example 5.

Example 5.

Consider the following systems.

Consider the prior . The set of the posterior distributions generated by A under π are:

while those generated by B are:

These posteriors, and the convex hulls that they generate, are illustrated in Figure 2. The pink area is the ch supp and the purple area is the ch supp. We can see that suppch supp, or, equivalently, ch suppch supp.

We are now ready to give the main result of this section.

Theorem 2.

The ordersandcoincide.

Similarly to the average case, gives us a strong leakage guarantee: can safely replace A by B, knowing that, for any adversary, the max-case leakage of B can be no-larger than that of A. Moreover, in case , we can always find an adversary, modelled by a vulnerability function V, who prefers (wrt the max-case) interacting with A that with B. Such a function is discussed in Section 5.

Finally, we resolve the question of how and are related.

Theorem 3.

is strictly stronger than.

This result might appear counter-intuitive at first; one might expect to imply . To understand why it does not, note that the former only requires that, for each output of B, there exists some output of that is at least as vulnerable, regardless of how likely and are to happen (this is max-case, after all). We illustrate this with the following example.

Example 6.

Consider the following systems:

Under the uniform prior, the posteriors for both channels are the same, namely , and , respectively. Thus, the knowledge that can be obtained by each output of B can be also obtained by some output of A (albeit with a different probability). Hence, from Theorem A1, we get that . However, we can check (see Section 5.1) that B cannot be obtained by post-processing A, that is, .

The other direction might also appear tricky: if B leaks no more than A in the average-case, it must also leak no more than B in the max-case. The quantification over all gain functions in the average-case is powerful enough to “detect” differences in max-case leakage. The above result also means that could be useful even if we are interested in the max-case, since it gives us for free.

4. Privacy-Based Refinement

Thus far, we have compared systems based on their (average-case or max-case) leakage. In this section, we turn our attention to the model of differential privacy and discuss new ways of ordering mechanisms based on that model.

4.1. Differential Privacy and -Privacy

Differential privacy relies on the observation that some pairs of secrets need to be indistinguishable from the point of view of the adversary in order to provide some meaningful notion of privacy; for instance, databases differing in a single individual should not be distinguishable, otherwise the privacy of that individual is violated. At the same, other pairs of secrets can be allowed to be distinguishable in order to provide some utility; for instance, distinguishing databases differing in many individuals allows us to answer a statistical query about those individuals.

This idea can be formalized by a distinguishability metric . (To be precise, is an extended pseudo metric, namely one in which distinct secrets can have distance 0, and distance is allowed.) Intuitively, models how distinguishable we allow these secrets to be. A value 0 means that we require x and to be completely indistinguishable to the adversary, while means that she can distinguish them completely.

In this context, a mechanism is simply a channel (the two terms will be used interchangeably), mapping secrets to some observations . Denote by the set of all metrics on . Given , we define -privacy as follows:

Definition 3.

A channel C satisfies -privacy iff

Intuitively, this definition requires that the closer x and are (as measured by ), the more similar (probabilistically) the output of the mechanism on these secrets should be.

Remark 1.

Note that the definition of -privacy given in (1) is slightly different from the above one because of the presence of ε in the exponent. Indeed, it is common to scale by a privacy parameter , in which case can be thought of as the “kind” and ε as the “amount” of privacy. In other words, the structure determined by on the data specifies how we want to distinguish each pair of data, and ε specifies (uniformly) the degree of the distinction. Note that is itself a metric, so the two definitions are equivalent.

Using a generic metric in this definition allows us to express different scenarios, depending on the domain on which the mechanism is applied and the choice of . For instance, in the standard model of differential privacy, the mechanism is applied to a database x (i.e., is the set of all databases), and produces some observation y (e.g., a number). The Hamming metric —defined as the number of individuals in which x and differ—captures standard differential privacy.

4.2. Oblivious Mechanisms

In the case of an oblivious mechanism, a query is first applied to database x, and a noise mechanism H from to is applied to , producing an observation z. In this case, it is useful to study the privacy of H wrt some metric on . Then, to reason about the -privacy of the whole mechanism , we can first compute the sensitivity of f wrt :

and then use the following property [3]:

For instance, the geometric mechanism satisfies -privacy (where denotes the Euclidean metric), hence it can be applied to any numeric query f: the resulting mechanism satisfies -differential privacy. The sensitivity wrt the Hamming and Euclidean metrics reduces to where denotes .

4.3. Applying Noise to the Data of a Single Individual

There are also scenarios in which a mechanism C is applied directly to the data of a single individual (that is, is the set of possible values). For instance, in the local model of differential privacy [2], the value of each individual is obfuscated before sending them to an untrusted curator. In this case, C should satisfy -privacy, where is the discrete metric, since any change in the individual’s value should have negligible effects.

Moreover, in the context of location-based services, a user might want to obfuscate his location before sending it to the service provider. In this context, it is natural to require that locations that are geographically close are indistinguishable, while far away ones are allowed to be distinguished (in order to provide the service). In other words, we wish to provide -privacy, for the Euclidean metric on , called geo-indistinguishability in [17].

4.4. Comparing Mechanisms by Their “Smallest ” (For Fixed )

Scaling by a privacy parameter allows us to turn -privacy (for some fixed ) into a quantitative “leakage” measure, by associating each channel to the smallest ε by which we can scale without violating privacy.

Definition 4.

The privacy-based leakage (wrt ) of a channel C is defined as

Note that iff there is no such ; also iff C satisfies -privacy.

It is then natural to compare two mechanisms A and B based on their “smallest ”.

Definition 5.

Define iff .

For instance, means that B satisfies standard differential privacy for at least as small as the one of A.

4.5. Privacy-Based Leakage and Refinement Orders

When discussing the average- and max-case leakage orders , we obtained strong leakage guarantees by quantifying over all vulnerability functions. It is thus natural to investigate a similar quantification in the context of -privacy. Namely, we define a stronger privacy-based “leakage” order, by comparing mechanisms not on a single metric , but on all metrics simultaneously.

Definition 6.

The privacy-based leakage order is defined as iff for all .

Similarly to the other leakage orders, the drawback of is that it quantifies over an uncountable family of metrics. As a consequence, our first goal is to characterize it as a property of the channel matrix alone, which would make it much easier to reason about or verify.

To do so, we start by recalling an alternative way of thinking about -privacy. Consider the multiplicative total variation distance between probability distributions , defined as:

If we think of C as a function (mapping every x to the distribution ), C satisfies -privacy iff , in other words iff C is non-expansive (1-Lipschitz) wrt .

Then, we introduce the concept of the distinguishability metric induced by the channel C, defined as

Intuitively, expresses exactly how much the channel distinguishes (wrt ) the secrets . It is easy to see that is the smallest metric for which C is private; in other words, for any :

We can now give a refinement order on mechanisms, by comparing their corresponding induced metrics.

Definition 7.

The privacy-based refinement order is defined as iff , or equivalently iff B satisfies -privacy.

This achieves our goal of goal of characterizing .

Proposition 1.

The ordersandcoincide.

We now turn our attention to the question of how these orders relate to each other.

Theorem 4.

is strictly stronger than, which is strictly stronger than.

The fact that is stronger than is due to the fact than can be seen as a max-case information leakage, for a properly constructed vulnerability function . This is discussed in detail in Section 4.7. This implication means that can be useful even if we “only” care about -privacy.

4.6. Application to Oblivious Mechanisms

We conclude the discussion on privacy-based refinement by showing the usefulness of our strong order in the case of oblivious mechanisms.

Theorem 5.

Let be any query and be two mechanisms on . If , then .

This means that replacing A by B is the context of an oblivious mechanism is always safe, regardless of the query (and its sensitivity) and regardless of the metric by which the privacy of the composed mechanism is evaluated.

Assume, for instance that we care about standard differential privacy, and we have properly constructed A such that satisfies -differential privacy for some . If we know that (several such cases are discussed in Section 6), we can replace A by B without even knowing what f does. The mechanism is guaranteed to also satisfy -differential privacy.

Note also that the above theorem fails for the weaker order . Establishing for some metric gives no guarantees that for some other metric of interest . It is possible that replacing A by B in that case is not safe (one would need to re-examine the behavior of B, and possibly reconfigure it to the sensitivity of f).

Table 3 summarizes the relations between the various orderings.

Table 3.

Comparison of leakage and refinement orders. All implications are strict.

4.7. Privacy as Max-Case Capacity

In this section we show that -privacy can be expressed as a (max-case) information leakage. Note that this provides an alternative proof that is stronger than . We start this by defining a suitable vulnerability function:

Definition 8.

The -vulnerability function is defined as

Note the difference between (a vulnerability function on distributions) and (a “leakage” measure on channels).

A fundamental notion in QIF is that of capacity: the maximization of leakage over all priors. In turns out that, for , the capacity-realizing prior is the uniform one. In the following, denotes the additive max-case leakage, namely:

and denotes the additive max-case -capacity, namely:

Theorem 6.

is always achieved on a uniform prior. Namely

This finally brings us to our goal of expressing in terms of information leakage (for a proper vulnerability function).

Theorem 7.

[DP as max-case capacity] C satisfies -privacy iff . In other words: .

5. Verifying the Refinement Orders

We now turn our attention to the problem of checking whether the various orders hold, given two explicit representations of channels A and B (in terms of their matrices). We show that, for all orders, this question can be answered in time polynomial in the size of the matrices. Moreover, when one of the order fails, we discuss how to obtain a counter-example (e.g., a gain function g or a vulnerability function V), demonstrating this fact. All the methods discussed in the section have been implemented in a publicly available library, and have been used in the experimental results of Section 6.

5.1. Average-Case Refinement

Verifying can be done in polynomial time (in the size of ) by solving the system of equations , with variables R, under the linear constraints that R is a channel matrix (non-negative and rows sum up to 1). However, if the system has no solution (i.e., ), this method does not provide us with a counter-example gain function g.

We now show that there is an alternative efficient method: define , the set of all channels obtainable by post-processing C. The idea is to compute the projection of B on . Clearly, the projection is B itself iff ; otherwise, the projection can be directly used to construct a counter-example g.

Theorem 8.

Letbe the projection ofBon.

- 1.

- If , then .

- 2.

- Otherwise, let . The gain function provides a counter-example to , which is , for uniform .

Since , the projection of y to a convex set can be written as for . This is a quadratic program with Q being the identity matrix, which is positive definite, hence it can be solved in polynomial time.

Note that the proof that is stronger than (the “coriaceous” theorem of [7]) uses the hyperplane-separation theorem to show the existence of a counter example g in case . The above technique essentially computes such a separating hyperplane.

5.2. Max-Case Refinement

Similarly to , we can verify directly using its definition, by solving the system under the constraint that R is a channel.

In contrast to , when , the proof of Theorem 2 directly gives us a counter-example:

where ch supp and is any full-support prior. For this vulnerability function, it holds that .

5.3. Privacy-Based Refinement

The order can be verified directly from its definition, by checking that . This can be done in time , by computing for each pair of secrets. If , then provides an immediate counter-example metric, since B satisfies -privacy, but A does not.

6. Application: Comparing DP Mechanisms

In differential privacy, it is common to compare the privacy guarantees provided by different mechanisms by ‘comparing the epsilons’. However, it is interesting to ask to what extent -equivalent mechanisms are comparable wrt the other leakage measures defined here—or we might want to know whether reducing in a mechanism also corresponds to a refinement of it. This could be useful if, for example, it is important to understand the privacy properties of a mechanism with respect to any max-case leakage measure, and not just the DP measure given by .

Since the -based order given by is (strictly) the weakest of the orders considered here, it cannot be the case that we always get a refinement (wrt other orders). However, it may be true that, for particular families of mechanisms, some (or all) of the refinement orders hold.

We investigate three families of mechanisms commonly used in DP or LDP: geometric, exponential and randomized response mechanisms.

6.1. Preliminaries

We define each family of mechanisms in terms of their channel construction. We assume that mechanisms operate on a set of inputs (denoted by ) and produce a set of outputs (denoted ). In this sense, our mechanisms can be seen as oblivious (as in standard DP) or as LDP mechanisms. (We use the term ‘mechanism’ in either sense). We denote by a mechanism parametrized by , where is defined to be the same as . For the purposes of comparison, we make sure that we use the best possible for each mechanism. In order to compare mechanisms, we restrict our input and output domains of interest to sequences of non-negative integers. We assume are finite unless specified. In addition, as we are operating in the framework of -privacy, it is necessary to provide an appropriate metric defined over ; here, it makes sense to use the Euclidean distance metric .

Definition 9.

A geometric mechanism is a channel , parametrized by constructed as follows:

where and . Such a mechanism satisfies -privacy.

In practice, the truncated geometric mechanism is preferred to the infinite geometric. We define the truncated geometric mechanism as follows.

Definition 10.

A truncated geometric mechanism is a channel , parametrized by with constructed as follows:

where and . Such a mechanism satisfies -privacy.

It is also possible to define the ‘over-truncated’ geometric mechanism whose input space is not entirely included in the output space.

Definition 11.

An over-truncated geometric mechanism is a channel , parametrized by with constructed as follows:

- 1.

- Start with the truncated geometric mechanism .

- 2.

- Sum up the columns at each end until the output domain is reached.

Such a mechanism satisfies -privacy.

For example, the set of inputs to an over-truncated geometric mechanism could be integers in the range , but the output space may have a range of or perhaps . In either of these cases, the mechanism has to ‘over-truncate’ the inputs to accommodate the output space.

We remark that we do not consider the over-truncated mechanism a particularly useful mechanism in practice. However, we provide results on this mechanism for completeness since its construction is possible, if unusual.

Definition 12.

An exponential mechanism is a channel , parametrized by constructed as follows:

where are normalizing constants ensuring . Such a mechanism satisfies -privacy where (which can be calculated exactly from the channel construction).

Note that the construction presented in Definition 12 uses the Euclidean distance metric since we only consider integer domains. The general construction of the exponential mechanism uses arbitrary domains and arbitrary metrics. Note that its parameter does not correspond to the true (best-case) -DP guarantee that it provides. We will denote by the exponential mechanism with ‘true’ privacy parameter rather than the reported one, as our intention is to capture the privacy guarantee provided by the channel in order to make reasonable comparisons.

Definition 13.

A randomized response mechanism is a channel , parametrized by constructed as follows:

where and is the discrete metric (that is, and for ). Such a mechanism satisfies -privacy.

We note that the randomized response mechanism also satisfies -privacy.

Intuitively, the randomized response mechanism returns the true answer with high probability and all other responses with equal probability. In the case where the input x lies outside (that is, in ‘over-truncated’ mechanisms), all of the outputs (corresponding to the outlying inputs) have equal probability.

Example 7.

The following are examples of each of the mechanisms described above, represented as channel matrices. For this example, we set for the geometric and randomized response mechanisms, while, for the exponential mechanism, we use .

Note that the exponential mechanism here actually satisfies -privacy even though it is specified by .

We now have three families of mechanisms which we can characterize by channels, and which satisfy -privacy. For the remainder of this section, we will refer only to the parameter and take as given, as we wish to understand the effect of changing (for a fixed metric) on the various leakage measures.

6.2. Refinement Order within Families of Mechanisms

We first ask which refinement orders hold within a family of mechanisms. That is, when does reducing for a particular mechanism produce a refinement? Since we have the convenient order , it is useful to first check if holds as we get the other refinements ‘for free’.

For the (infinite) geometric mechanism, we have the following result.

Theorem 9.

Let be geometric mechanisms. Then, iff . That is, decreasing produces a refinement of the mechanism.

This means that reducing in an infinite geometric mechanism is safe against any adversary that can be modelled using, for example, max-case or average-case vulnerabilities.

For the truncated geometric mechanism, we get the same result.

Theorem 10.

Let be truncated geometric mechanisms. Then, iff . That is, decreasing produces a refinement of the mechanism.

However, the over-truncated geometric mechanism does not behave so well.

Theorem 11.

Let be over-truncated geometric mechanisms. Then, for any . That is, decreasing does not produce a refinement.

We can think of this last class of geometrics as ‘skinny’ mechanisms, that is, corresponding to a channel with a smaller output space than input space.

Intuitively, this theorem means that we can always find some (average-case) adversary who prefers the over-truncated geometric mechanism with the smaller .

We remark that the gain function we found can be easily calculated by treating the columns of channel A as vectors, and finding a vector orthogonal to both of these. This follows from the results in Section 5.1. Since the columns of A cannot span the space , it is always possible to find such a vector, and, when this vector is not orthogonal to the ‘column space’ of B, it can be used to construct a gain function preferring B to A.

Even though the refinement does not hold, we can check whether the other refinements are satisfied.

Theorem 12.

Let be an over-truncated geometric mechanism. Then, reducing does not produce a refinement; however, it does produce a refinement.

This means that, although a smaller does not provide safety against all max-case adversaries, it does produce a safer mechanism wrt d-privacy for any choice of metric we like.

Intuitively, the order relates mechanisms based on how they distinguish inputs. Specifically, if , then, for any pair of inputs , the corresponding output distributions are ‘further apart’ in channel A than in channel B, and thus the inputs are more distinguishable using channel A. When fails to hold, it means that there are some inputs in A which are more distinguishable than in B, and vice versa. This means an adversary who is interested in distinguishing some particular pair of inputs would prefer one mechanism to the other.

We now consider the exponential mechanism. In this case, we do not have a theoretical result, but, experimentally, it appears that the exponential mechanism respects refinement, so we present the following conjecture.

Conjecture 1.

Let be an exponential mechanism. Then, decreasing ε in E produces a refinement. That is, iff .

Finally, we consider the randomized response mechanism.

Theorem 13.

Let be a randomized response mechanism. Then, decreasing in R produces a refinement. That is, iff .

In conclusion, we can say that, in general, the usual DP families of mechanisms are ‘well-behaved’ wrt all of the refinement orders. This means that it is safe (wrt any adversary we model here) to replace a mechanism from a particular family with another mechanism from the same family with a lower .

6.3. Refinement Order between Families of Mechanisms

Now, we explore whether it is possible to compare mechanisms from different families. We first ask: can we compare mechanisms which have the same ? We assume that the input and output domains are the same, and the intention is to decide whether to replace one mechanism with another.

Theorem 14.

Let R be a randomized response mechanism, E an exponential mechanism and TG a truncated geometric mechanism. Then, and . However, does not hold between and .

Proof.

We present a counter-example to show and . The remainder of the proof is in Appendix D.

Consider the following channels:

Channel A represents an exponential mechanism and channel B a randomized response mechanism. Both have (true) of . (Channel A was generated using . However, as noted earlier, this corresponds to a lower true .) However, and . Thus, A does not satisfy -privacy, nor does B satisfy -privacy. □

Intuitively, the randomized response mechanism maintains the same () distinguishability level between inputs, whereas the exponential mechanism causes some inputs to be less distinguishable than others. This means that, for the same (true) , an adversary who is interested in certain inputs could learn more from the randomized response than the exponential. In the above counter-example, points in the exponential mechanism of channel A are less distinguishable than the corresponding points in the randomized response mechanism B.

As an example, let’s say the mechanisms are to be used in geo-location privacy and the inputs represent adjacent locations (such as addresses along a street). Then, an adversary (your boss) may be interested in how far you are from work, and therefore wants to be able to distinguish between points distant from (your office) and points within the vicinity of your office, without requiring your precise location. Your boss chooses channel A as the most informative. However, another adversary (your suspicious partner) is more concerned about where exactly you are, and is particularly interested in distinguishing between your expected position (, the boulangerie) versus your suspected position (, the brothel). Your partner chooses channel B as the most informative.

Regarding the other refinements, we find (experimentally) that in general they do not hold between families of mechanisms. (Recall that we only need to produce a single counter-example to show that a refinement doesn’t hold, and this can be done using the methods presented in Section 5.)

We next check what happens when we compare mechanisms with different epsilons. We note the following.

Theorem 15.

For any (truncated geometric, randomized response, exponential) mechanisms , if for any of our refinements (), then for .

Proof.

This follows directly from transitivity of the refinement relations, and our results on refinement with families of mechanisms. (We recall however that our result for the exponential mechanism is only a conjecture.) □

This tells us that, once we have a refinement between mechanisms, it continues to hold for reduced in the refining mechanism.

Corollary 1.

Let be the geometric, truncated geometric, randomized response and exponential mechanisms respectively. Then, for all , we have that , , and .

Thus, it is safe to ‘compare epsilons’ wrt if we want to replace a geometric mechanism with either a randomized response or exponential mechanism. (As with the previous theorem, note that the results for the exponential mechanism are stated as conjecture only, and this conjecture is assumed in the statement of this corollary.) What this means is that if, for example, we have a geometric mechanism that operates on databases with distance measured using the Hamming metric and satisfying -privacy, then any randomized response mechanism R parametrized by will also satisfy -privacy. Moreover, if we decide we’d rather use the Manhattan metric to measure distance between the databases, then we only need to check that also satisfies -privacy, as this implies that R will too.

The following Table 4, Table 5 and Table 6 summarize the refinement relations with respect to the various families of mechanisms.

Table 4.

The refinements respected by families of mechanisms for decreasing . We recall that the results in the grey cells are based on Conjecture 1.

Table 5.

Comparing different families of mechanisms with respect to the different refinements under the same .

Table 6.

Comparing different families of mechanisms with differing . We recall that the results in the grey cells are based on Conjecture 1.

6.4. Asymptotic Behavior

We now consider the behavior of the relations when approximates 0, which represents the absence of leakage. We start with the following result:

Theorem 16.

Every (truncated geometric, randomized response, exponential) mechanism is ‘the safest possible mechanism’ when parametrized by . That is, for all mechanisms (possibly from different families) and .

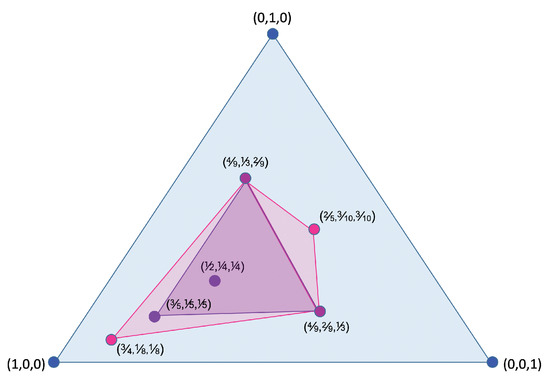

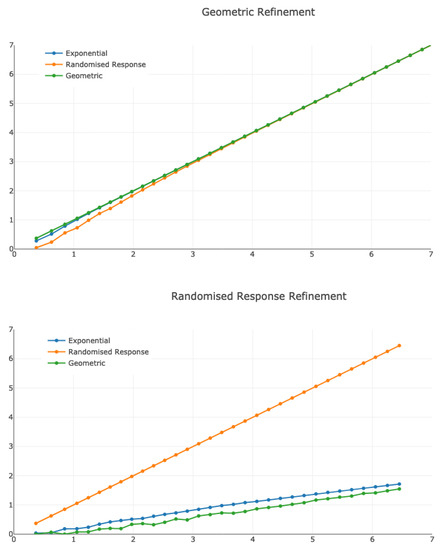

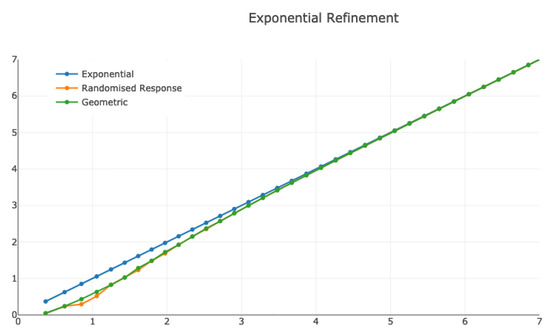

While this result may be unsurprising, it means that we know that refinement must eventually occur when we reduce . It is interesting then to ask just when this refinement occurs. We examine this question experimentally by considering different mechanisms and investigating for which values of average-case refinement holds. For simplicity of presentation, we show results for matrices, noting that we observed similar results for experiments across different matrix dimensions, at least wrt the coarse-grained comparison of plots that we do here. The results are plotted in Figure 3.

Figure 3.

Refinement of mechanisms under for channels. The x-axis represents the on the LHS of the relation, and the y-axis represents the one on the RHS. The top graph represents refinement of the truncated geometric mechanism (that is, ), the middle graph is refinement of randomized response (), and the bottom graph is refinement of the exponential mechanism ().

The plots show the relationship between (x-axis) and (y-axis) where parametrizes the mechanism being refined and parametrizes the refining mechanism. For example, the blue line on the top graph represents . We fix and ask for what value of do we get a refinement. Notice that the line corresponds to the same mechanism in both axes (since every mechanism refines itself).

We can see that refining the randomized response mechanism requires much smaller values of epsilon in the other mechanisms. For example, from the middle graph, we can see that (approximately) whereas, from the top graph, we have . This means that the randomized response mechanism is very ‘safe’ against average-case adversaries compared with the other mechanisms, as it is much more ‘difficult’ to refine than the other mechanisms.

We also notice that, for ‘large’ values of , the exponential and geometric mechanisms refine each other for approximately the same values. This suggests that, for these values, the epsilons are comparable (that is, the mechanisms are equally ‘safe’ for similar values of ). However, smaller values of require a (relatively) large reduction in to obtain a refinement.

6.5. Discussion

At the beginning of this section, we asked whether it is safe to compare differential privacy mechanisms by ‘comparing the epsilons’. We found that it is safe to compare epsilons within families of mechanisms (except in the unusual case of the over-truncated geometric mechanism). However, when comparing different mechanisms, it is not safe to just compare the epsilons, since none of the refinements hold in general. Once a ‘safe’ pair of epsilons has been calculated, then reducing epsilon in the refining mechanism is always safe. However, computing safe epsilons relies on the ability to construct a channel representation, which may not always be feasible.

7. Lattice Properties

The orders and are all reflexive and transitive (i.e., preorders), but not anti-symmetric (i.e., not partial orders). This is due to the fact that there exist channels that have “syntactic” differences but the same semantics; e.g., two channels having their columns swapped. However, if we are only interested in a specific type of leakage, then all channels such that (where ⊑ is one of ) have identical leakage, so we can view them as the “same channel” (either by working on the equivalence classes of or by writing all channels in some canonical form).

Seeing now ⊑ as a partial order, the natural question is whether it forms a lattice that is whether suprema and infima exist. If it exists, the supremum has an interesting property: it is the “least safe” channel that is safer than both A and B (any channel C such that and would necessarily satisfy ). If we wanted a channel that is safer than both A and B, would be a natural choice.

In this section, we briefly discuss this problem and show that—in contrast to —both and do have suprema and infima (i.e., they form a lattice).

7.1. Average-Case Refinement

In the case of , “equivalent” channels are those producing the exact same hypers. However, even if we identify such channels, it is known [7] that two channels do not necessarily have a least upper bound wrt , hence does not form a lattice.

7.2. Max-Case Refinement

In the case of , “equivalent” channels are those producing the same posteriors (or more generally the same convex hull of posteriors). However, in contrast to , if we identify such channels that is if we represent a channel only by the convex hull of its posteriors, then becomes a lattice.

First, note that given a finite set of posteriors , such that ch , i.e., such that , it is easy to construct a channel C producing each posterior with output probability . It suffices to take .

Thus, can be simply constructed by taking the intersection of the convex hulls of the posteriors of . This intersection is a convex polytope itself, so it has (finitely many) extreme points, so we can construct as the channel having exactly those as posteriors. , on the other hand, can be constructed as the channel having as posteriors the union of those of A and B.

Note that computing the intersection of polytopes is NP-hard in general [21], so might be hard to construct. However, efficient special cases do exist [22]; we leave the study of the hardness of as future work.

7.3. Privacy-Based Refinement

In the case , “equivalent” channels are those producing the same induced metric, i.e., . Representing channels only by their induced metric, we can use the fact that does form a lattice under ≥. We first show that any metric can be turned into a corresponding channel.

Theorem 17.

For any metric , we can construct a channel such that .

Then, will be simply the channel whose metric is , where ∨ is the supremum in the lattice of metrics, and similarly for .

Note that the infimum of two metrics is simply the max of the two (which is always a metric). The supremum, however, is more tricky, since the min of two metrics is not always a metric: the triangle inequality might be violated. Thus, we first need to take the min of , then compute its “triangle closure”, by finding the shortest path between all pairs of elements, for instance using the well-known Floyd–Warshall algorithm.

8. Conclusions

We have investigated various refinement orders for mechanisms for information protection, combining the max-case perspective typical of DP and its variants with the robustness of the QIF approach. We have provided structural characterizations of these preorders and methods to verify them efficiently. Then, we have considered various DP mechanisms, and investigated the relation between the -based measurement of privacy and our orders. We have shown that, while within the same family of mechanisms, a smaller implies the refinement order, this is almost never the case for mechanisms belonging to different families.

Author Contributions

Conceptualization, K.C., N.F. and C.P.; methodology, K.C., N.F. and C.P.; software, K.C. and N.F.; validation, K.C. and N.F.; formal analysis, K.C. and N.F.; investigation, K.C. and N.F.; resources, C.P.; data curation, K.C. and N.F.; writing—original draft preparation, K.C. and N.F.; writing—review and editing, K.C., N.F. and C.P.; visualization, K.C. and N.F.; supervision, K.C. and C.P.; project administration, K.C. and C.P.; funding acquisition, C.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme, Grant agreement No. 835294, by the project ANR-16-CE25-0011 REPAS, and by the Equipe Associée LOGIS.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proofs of Results about the Max-Case Refinement

Theorem A1.

Let. If, then the posteriors ofB(under) are convex-combinations of those ofA, that is,

Moreover, if (A1) holds and is full support, then .

Proof.

Note that seeing as a row vector, and are the output distributions of A and B, respectively. Denote by and the posteriors of and , respectively; we have as many posteriors as the elements in the support of the output distributions, that is, for each supp supp . (A1) can be written as

The proof consists of two parts: first, we show that (A2) for uniform is equivalent to . Second, we show that (A2) for full-support implies (A2) for any other prior.

For the first part, letting be uniform, we show that (A2) is equivalant to . This is easy to see since since the y-th row of is and the z-th row of is . Hence, we can construct R from the convex coefficients, and vice versa, as .

For the second part, let be full-support and be arbitrary. Since supp supp, we necessarily have supp supp for any channel C. Assume that (A1) holds for and let be the corresponding convex coefficients. Fixing an arbitrary supp, define

We first show that

From this, it follows that .

Finally, denote by and the posteriors of and , respectively; we show that (A1) holds for . Fixing , we have that

□

Theorem A2.

The orders and coincide.

Proof.

Fix some arbitrary and denote by and the posteriors of and , respectively. Assuming , from Theorem A1, we get that each can be written as a convex combination . Hence,

from which follows.

Now, assume that , let ch supp and define a vulnerability function that maps every prior to its Euclidean distance from S, that is,

Since S is a convex set, it is well known that is convex on (hence also quasi-convex). Note that for all and strictly positive anywhere else.

By definition of S, we have that and hence for all posteriors of A, as a consequence . On the other hand, since , from Theorem A1, we get that there exists some posterior of B such that . As a consequence, , which implies that . □

Theorem A3.

is strictly stronger than .

Proof.

The “stronger” part is essentially the data-processing inequality for max-case vulnerability [9] (Prop. 14). To show it directly, assume that , that is, for some channel R, and define a channel S from to as

It is easy to check that S is a valid channel, i.e., that for all y. Moreover, we have that

hence .

The “strictly” part has already been shown in the body of the paper: The two matrices A and B in (14) provide an example in which , while . □

Appendix B. Proofs of the Results about the Privacy-Based Refinement

Proposition A1.

The orders and coincide.

Proof.

Assuming , recall that a channel C satisfies -privacy iff . Note also that . Setting , we get , which implies that B satisfies -privacy, hence .

Assuming , to show that , it is equivalent to show that A satisfies -privacy only if B also satisfies it. Let , if A satisfies -privacy, then ; hence, B also satisfies -privacy, concluding the proof. □

Theorem A4.

is strictly stronger than, which is strictly stronger than.

Proof.

The “stronger” part is a direct consequence of the fact that can be expressed as max-case capacity for a suitable vulnerability measure (more concretely, a consequence of Theorems 2, 6 and 7). This is discussed in detail in Section 4.7.

For the “strictly” part, consider the following counter-example:

The only difference between A and B is that the two rows have been swapped. Hence, , which implies . However, the posteriors of (for uniform prior) are (written in columns):

Since cannot be written as a convex combination of and , and similarly cannot be written as a convex combination of and , from Theorem A1, we conclude that . □

Theorem A5.

Let be any query and be two mechanisms on . If , then .

Proof.

Define , and similarly for . Then, we have:

□

Theorem A6.

is always achieved on a uniform prior . Namely

Proof.

Fix , and let and be the outer and inners of and , respectively. Since and , we have that:

Moreover, it holds that:

Finally, we have that:

which concludes the proof. □

Theorem A7.

[DP as max-case capacity] C satisfies -privacy iff . In other words: .

Proof.

Let denote the inners of . From Theorem 6, we have that

which, from the definition of , holds iff for all . □

Appendix C. Proofs of the Results on the Refinement Verification

Proposition A2

(Projection theorem, [23] (Prop. 1.1.9)). Let be closed and convex and let . There exists a unique that minimizes over , called the projection of z on C. Moreover, a vector is the projection of z on C iff

Theorem A8.

Letbe the projection ofBon.

- 1.

- If, then.

- 2.

- Otherwise, let. The gain functionprovides a counter-example to, which is, for uniform.

Proof.

(1) is immediate from the definition of . For (2), we first show that

(in other words that is a hyperplane with normal G, separating B from ). For the left-hand inequality, we have . Moreover, since is closed and convex, from the projection theorem (Proposition A2), we get that for all , from which directly follows.

The proof continues similarly to the one of [7] (Theorem 9). We write posterior vulnerability (for uniform prior) as a maximization over all remapping strategies for respectively, namely

Then, follows from (A13) and the fact that (the identity is a remapping strategy). □

Appendix D. Proofs of Results about Refinement Comparison

We call a truncated geometric mechanism ‘square’ if is has the same input and output space (that is, the channel representation is a square matrix).

We first show that geometric mechanisms and truncated geometric mechanisms are equivalent to square mechanisms under .

Lemma A1.

Let be a geometric mechanism. Then, the reduced (abstract) channel form of is the square channel .

Proof.

First, note that the square channel is obtained from the (infinite) geometric by summing up all the ‘extra’ columns (i.e., those columns in ). Now, note that these ‘extra’ columns are scalar multiples of each other, since each column has the form

for increasing values of k. Thus, the ‘summing up’ operation is a valid reduction operation, and so the infinite geometric is reducible to the corresponding square channel. □

Lemma A2.

Let be a truncated geometric mechanism. Then, the reduced (abstract) channel form of is the square channel .

Proof.

First, note that the truncated geometric is obtained from the infinite geometric by summing up columns at the ends of the matrix. This is exactly the ‘reduction’ step noted above. We can continue, as above, to sum up ‘extra’ columns until we get a square matrix. □

Corollary A1.

Any refinement that holds for a square geometric mechanism also holds for any truncated geometric mechanism or (the) geometric mechanism having domain .

Note that we only define truncation as far as the square geometric matrix, since at this point the columns of the matrix are linearly independent and can no longer be truncated via matrix reduction operations. We now show that refinement holds for the square geometric mechanisms.

Lemma A3.

Let be a square geometric mechanism. Then, decreasing ε produces a refinement of it. That is, iff .

Proof.

The square geometric mechanism has the following form:

where , and similarly for with .

Now, this matrix is invertible and the inverse has the following form:

Recalling that

for some channel R, we can construct a suitable R using , namely . It suffices to show that R is a valid channel.

It is clear that the rows of R sum to 1, since it is the product of matrices with rows summing to 1.

Multiplying out the matrix R yields:

where and . The only way that any of these matrix entries can be less than 0 is if , or . Thus, R is a valid channel precisely when and so as required. □

The following theorems now follow from the previous lemmas.

Theorem A9.

Letbe geometric mechanisms. Then,iff. That is, decreasingproduces a refinement of the mechanism.

Proof.

Using Lemma A1, we can express as a square channel and from Lemma A3 it follows that the refinement holds. □

Theorem A10.

Let be truncated geometric mechanisms. Then, iff . That is, decreasing produces a refinement of the mechanism.

Proof.

As above, using Lemmas A2 and A3. □

Theorem A11.

Let be over-truncated geometric mechanisms. Then, for any . That is, decreasing does not produce a refinement.

Proof.

Consider the following counter-example:

Channels and are over-truncated geometric mechanisms parametrized by , , respectively. We expect to be safer than , that is, . However, under the uniform prior , the gain function

yields and , thus leaks more than for this adversary. (In fact, for this gain function, we have and so the adversary learns nothing from observing the output of ). □

Theorem A12.

Let be an over-truncated geometric mechanism. Then, reducing does not produce a refinement; however, it does produce a refinement.

Proof.

We show the first part using a counter-example. Consider the following channels:

Channels and are over-truncated geometric mechanisms using , , respectively. We can define the (prior) vulnerability V as the usual (convex) g-vulnerability. Then, under a uniform prior , the gain function given by:

yields and . Thus, .

For the second part, we first note that for any square geometric channel we have exactly when are adjacent rows in the matrix (this can be seen from the construction of the square channel). Now, the over-truncated geometric is obtained by summing columns of the square geometric. By construction, the square geometric A has adjacent elements satisfying when and x is above (or on) the diagonal of the channel matrix; otherwise, . This means that each (over)-truncation step maintains the ratio except when and occur on diagonal elements, in which case their sum is between and . Since this affects only two elements in each row, we still have that (until the final truncation step to produce a single 1 vector). Therefore, since holds for the square matrix, and it holds under truncation, we must have that it holds for over-truncated geometric mechanisms. Thus, reducing corresponds to refinement under as required. □

We show that the randomized response mechanism behaves well with respect to by considering three cases.

Firstly, we consider the case where . We use the following lemmas:

Lemma A4.

Let , be ‘square’ randomized response mechanisms. Then, is a randomized response mechanism with parameter where is the dimension of , and B.

Proof.

Observe that can be factorised as where R has the form:

and similarly for . Multiplying out gives the matrix:

(Note that the constant co-efficient factorised out the front does not affect the calculation for the channel). This is exactly the randomized response mechanism required. □

Lemma A5.

For any , the function

defined for is increasing and has range .

Proof.

We can see that is continuous for the given domain and the derivative is for all .

Additionally, at , the function is defined and equal to 1. In addition,

□

Lemma A6.

Let , be randomized response mechanisms represented by square matrices (that is, ). Then, iff .

Proof.

Note first that , are in reduced (abstract channel) form and so the partial order of holds.

From Lemma A4, we know that the composition of two randomized response mechanisms is another randomized response mechanism. Therefore, for the reverse direction, if then Lemma A5 tells us that we can find a randomized response mechanism such that . In the case of equality, we can choose the identity mechanism.

For the forward direct, we show the contrapositive. If , then we know there exists an R such that . However, this means that . Since the matrices are reduced (as channels), then is a partial order and so this implies . Thus, we must have . □

Interestingly, we can use this result to show the second case where we consider ‘over-truncated’ mechanisms.

Lemma A7.

Let , be randomized response mechanisms with . Then, iff .

Proof.

Notice that this ‘over-truncated’ randomized response mechanism is just a square randomized response mechanism ‘glued’ onto a matrix containing all values .

Denote the corresponding square mechanisms by (note the parameters are the same) and denote by N the matrix containing only (note that this is the same matrix for both and ).