Computer Vision-Based Multiple-Width Measurements for Agricultural Produce

Abstract

1. Introduction

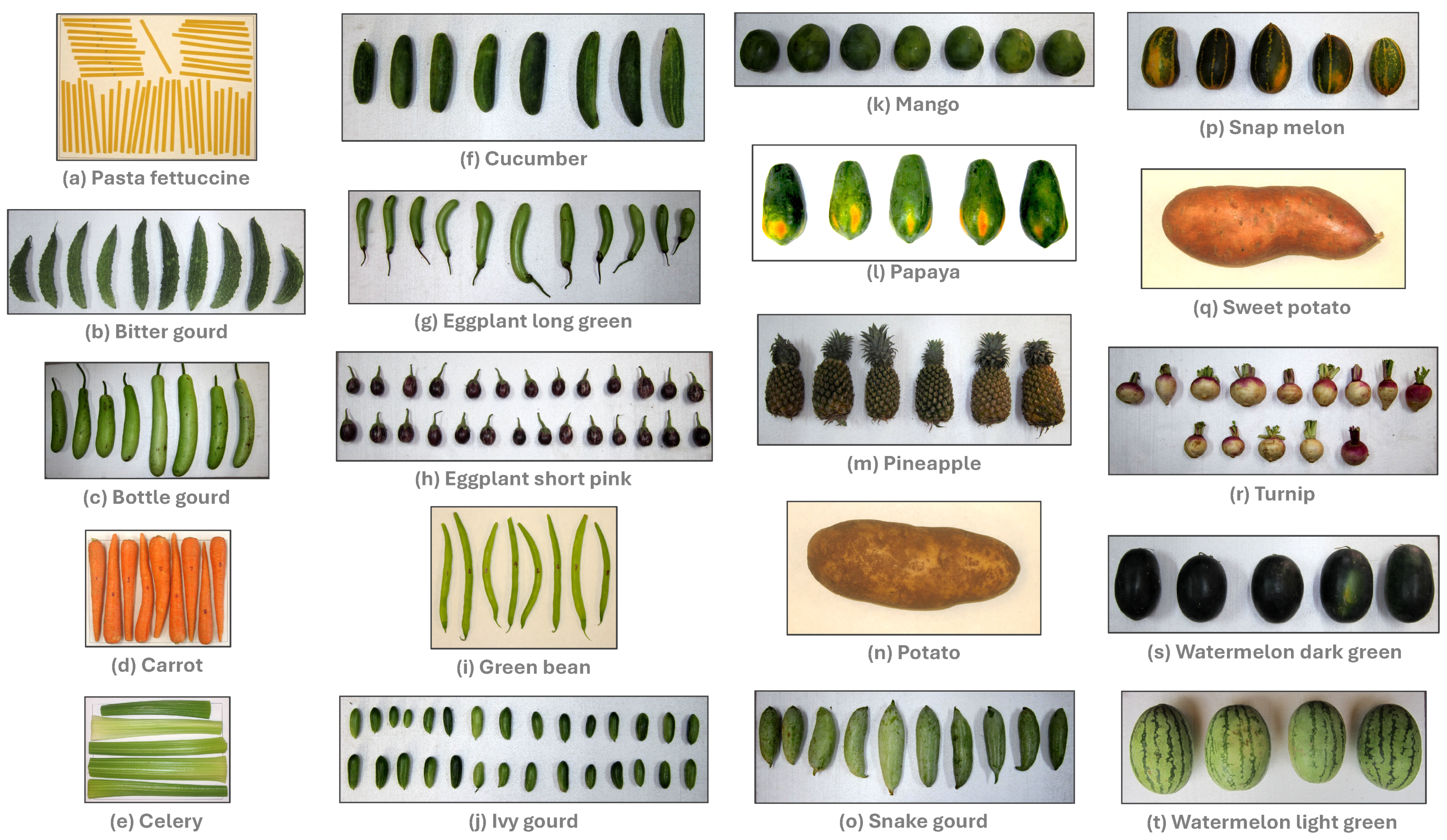

2. Materials and Methods

2.1. Test Material

2.2. Overall Workflow of the Research Work

2.3. Image Acquisition of Agricultural Produce Samples

2.4. Computer Vision Image Analysis Framework Used

2.5. Image Preprocessing

2.6. Plugin Development and Description of Methodology

2.6.1. Plugin’s User Input Front Panel

2.6.2. Methodology of Multiple-Width and -Length Measurements

2.7. Statistical Analysis of Multiple Widths

3. Results and Discussion

3.1. Features of the Developed Plugin

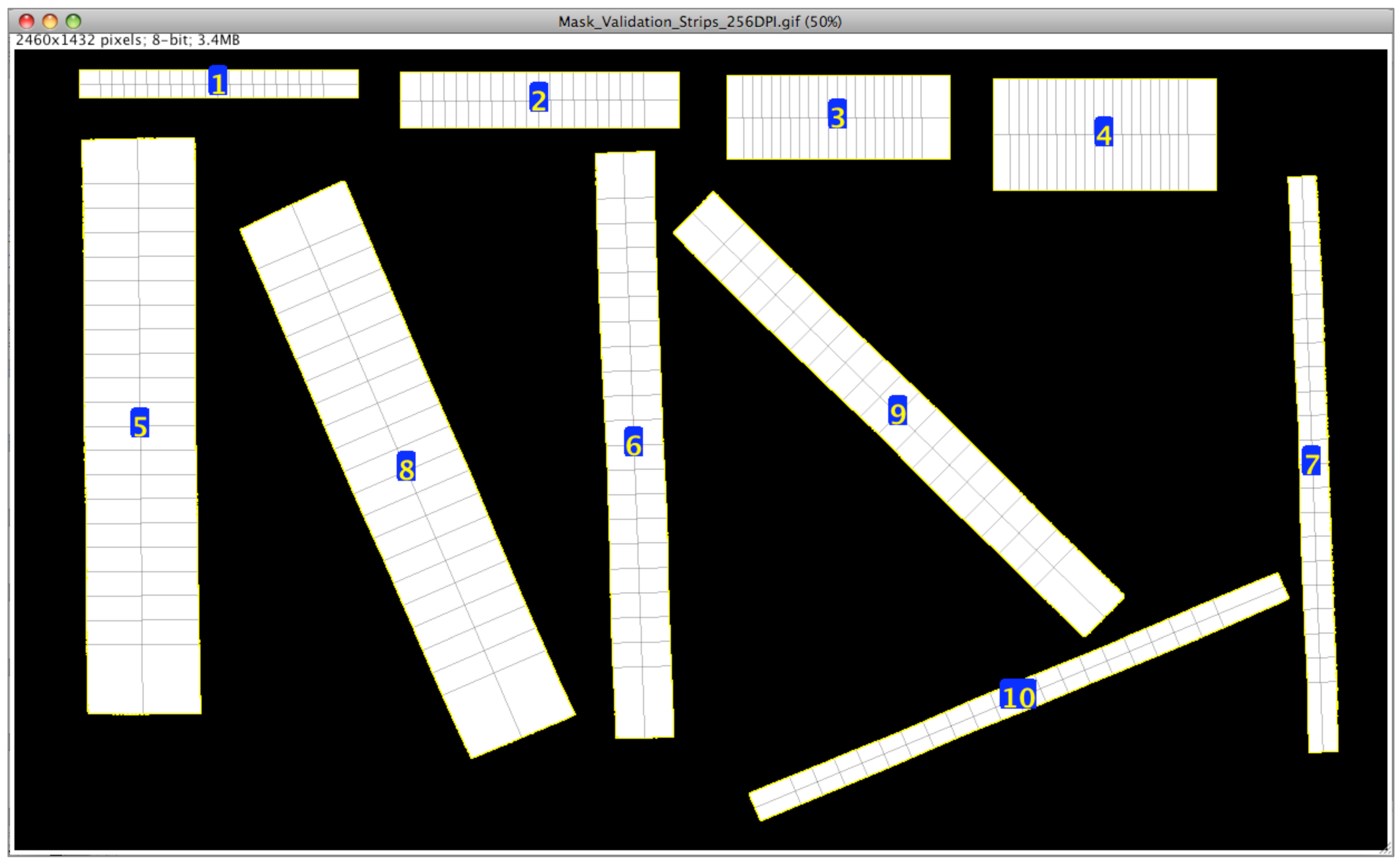

3.2. Plugin Validation

3.3. Multiple Width Results of Agricultural Produce

3.4. Effect of Number of Width Measurements and Significance

3.5. Deviation with Single Dimensions

3.6. Computational Speed

3.7. Limitations and Recommendations for Future Work

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Object | DPI | Actual length (mm) | Actual width (mm) | Measured length (mm) | Measured width, min (mm) | Measured width, max (mm) | Measured width, mean (mm) | Measured width, STD (mm) | Length accuracy (%) # | Width accuracy (%) # |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 * | 256 | 500 | 50 | 500.00 | 50.010 | 50.010 | 50.01 | 0.00 | 100.00 | 99.98 |
| 2 * | 256 | 500 | 100 | 500.00 | 100.005 | 100.005 | 100 | 0.00 | 100.00 | 100.00 |
| 3 * | 256 | 400 | 150 | 400.00 | 150.003 | 150.003 | 150 | 0.00 | 100.00 | 100.00 |
| 4 * | 256 | 400 | 200 | 400.00 | 200.003 | 200.003 | 200 | 0.00 | 100.00 | 100.00 |
|  | 256 | 100 | 20 | 102.50 | 19.74 | 20.04 | 19.85 | 0.09 | 97.50 | 99.25 |
|  | 256 | 100 | 10 | 104.25 | 10.12 | 10.42 | 10.33 | 0.10 | 95.75 | 96.70 |
|  | 256 | 100 | 5 | 102.36 | 4.96 | 5.46 | 5.21 | 0.11 | 97.64 | 95.80 |
|  | 256 | 100 | 20 | 103.23 | 20.00 | 20.57 | 20.33 | 0.17 | 96.77 | 98.35 |
|  | 256 | 100 | 10 | 102.79 | 10.31 | 10.59 | 10.43 | 0.10 | 97.21 | 95.70 |
|  | 256 | 100 | 5 | 102.03 | 4.98 | 5.38 | 5.20 | 0.10 | 97.97 | 96.00 |
|  | 165 | 279.4 | 108.9 | 278.66 | 107.61 | 109.46 | 108.50 | 0.56 | 99.74 | 99.63 |
|  | 165 | 279.4 | 107.4 | 278.94 | 106.53 | 107.76 | 107.18 | 0.41 | 99.84 | 99.80 |
|  | 165 | 279.4 | 215.9 | 278.84 | 214.17 | 216.33 | 215.19 | 0.67 | 99.80 | 99.67 |
|  | 265 | 279.4 | 215.9 | 279.78 | 215.56 | 216.14 | 215.74 | 0.16 | 99.86 | 99.93 |
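The footnote defining the accuracy columns did not survive in this copy. The tabulated values are, however, consistent with accuracy being 100 × (1 − |measured − actual| / actual). The sketch below assumes that definition and checks it against a few rows of the validation table; the function name `accuracy_percent` is hypothetical and is not part of the plugin.

```python
def accuracy_percent(actual_mm: float, measured_mm: float) -> float:
    """Assumed definition: 100 * (1 - |measured - actual| / actual)."""
    if actual_mm <= 0:
        raise ValueError("actual_mm must be positive")
    return 100.0 * (1.0 - abs(measured_mm - actual_mm) / actual_mm)


# (actual, measured, reported accuracy) triples copied from the validation table.
rows = [
    (100.0, 102.50, 97.50),   # length of a 100 mm object
    (20.0, 19.85, 99.25),     # mean width of a 20 mm wide object
    (10.0, 10.33, 96.70),     # mean width of a 10 mm wide object
    (279.4, 278.66, 99.74),   # length of a 279.4 mm object
]

computed = [accuracy_percent(actual, measured) for actual, measured, _ in rows]

for (actual, measured, reported), value in zip(rows, computed):
    print(f"actual={actual} measured={measured} "
          f"computed={value:.2f} reported={reported:.2f}")
```

Under this assumed definition, over- and under-estimates are penalized equally, which matches rows where the measured length exceeds the actual length (for example, 102.50 mm against 100 mm).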

| N | Image_File * | Scientific Name | # Objects | Length, minimum (mm) | Length, maximum (mm) | Length, mean ± STD (mm) | Width, minimum ± STD (mm) | Width, maximum ± STD (mm) | Width, mean ± STD (mm) | W/L † |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | PastaFettuccine_252DPI | — | 48 | 69.95 | 99.04 | 85.10 ± 6.26 | 4.45 ± 0.03 | 4.98 ± 0.08 | 4.71 ± 0.05 | 0.06 |
| 2 | BitterGourds_109DPI | Momordica charantia | 10 | 159.64 | 290.62 | 237.24 ± 41.97 | 45.49 ± 3.87 | 58.06 ± 7.55 | 50.42 ± 5.58 | 0.22 |
| 3 | BottleGourds_109DPI | Lagenaria siceraria | 8 | 265.48 | 449.76 | 349.17 ± 61.44 | 60.41 ± 1.20 | 78.32 ± 12.11 | 67.47 ± 5.07 | 0.20 |
| 4 | Carrots_169DPI | Daucus carota | 9 | 179.76 | 210.27 | 196.98 ± 8.55 | 18.99 ± 3.51 | 28.80 ± 7.17 | 22.56 ± 5.92 | 0.11 |
| 5 | CeleryHearts_236DPI | Apium graveolens var. dulce | 5 | 206.97 | 263.76 | 247.11 ± 21.09 | 26.27 ± 1.49 | 41.21 ± 4.73 | 30.80 ± 2.89 | 0.12 |
| 6 | Cucumbers_109DPI | Cucumis sativus | 8 | 130.43 | 209.34 | 172.43 ± 23.09 | 40.05 ± 3.06 | 48.27 ± 4.99 | 43.79 ± 4.07 | 0.28 |
| 7 | EggplantLongGreen_109DPI | Solanum melongena | 11 | 96.94 | 198.4 | 151.30 ± 27.90 | 25.60 ± 2.65 | 35.26 ± 6.77 | 30.12 ± 4.54 | 0.20 |
| 8 | EggplantShortPink_109DPI | Solanum melongena | 27 | 62.89 | 96.07 | 75.25 ± 9.33 | 30.86 ± 2.75 | 45.42 ± 9.66 | 37.72 ± 4.29 | 0.55 |
| 9 | GreenBeans_244DPI | Phaseolus vulgaris | 7 | 79.36 | 123.09 | 104.79 ± 14.38 | 7.65 ± 0.15 | 10.33 ± 0.77 | 8.79 ± 0.34 | 0.08 |
| 10 | IvyGourds_109DPI | Coccinia indica | 29 | 39.89 | 74.6 | 60.79 ± 7.90 | 16.21 ± 2.08 | 25.15 ± 3.36 | 21.66 ± 2.72 | 0.39 |
| 11 | Mangos_109DPI | Mangifera indica | 7 | 114.7 | 132.57 | 121.79 ± 5.73 | 86.52 ± 11.10 | 101.15 ± 14.03 | 94.79 ± 12.35 | 0.88 |
| 12 | Papayas_109DPI | Carica papaya | 5 | 213.69 | 238.91 | 228.30 ± 9.74 | 98.35 ± 12.70 | 106.75 ± 17.27 | 103.21 ± 15.01 | 0.50 |
| 13 | Pineapple_109DPI | Ananas comosus | 6 | 234.67 | 276.97 | 260.26 ± 15.83 | 100.16 ± 4.24 | 121.59 ± 6.66 | 113.54 ± 5.05 | 0.45 |
| 14 | Potato_193DPI | Solanum tuberosum |  | 177.38 | 177.51 | 177.42 ± 0.06 | 64.80 ± 6.65 | 64.90 ± 6.92 | 64.86 ± 6.74 | 0.40 |
| 15 | SnakeGourdsShort_109DPI | Trichosanthes cucumerina | 10 | 169.71 | 271.48 | 202.59 ± 31.52 | 50.14 ± 1.67 | 65.14 ± 12.28 | 57.18 ± 7.94 | 0.31 |
| 16 | SnapMelon_109DPI | Cucumis melo var. momordica | 5 | 166.64 | 200.2 | 177.82 ± 11.95 | 90.09 ± 10.83 | 103.92 ± 16.47 | 98.87 ± 13.02 | 0.62 |
| 17 | SweetPotato_246DPI | Ipomoea batatas |  | 175.32 | 175.63 | 175.53 ± 0.15 | 60.19 ± 5.73 | 60.34 ± 6.09 | 60.28 ± 5.86 | 0.36 |
| 18 | Turnips_109DPI | Brassica rapa var. rapa | 14 | 95.5 | 150.35 | 117.67 ± 15.07 | 48.37 ± 6.98 | 99.14 ± 13.40 | 68.26 ± 10.28 | 0.66 |
| 19 | WaterMelonDarkGreen_109DPI | Citrullus lanatus | 5 | 201.52 | 232.1 | 214.87 ± 11.63 | 122.87 ± 14.23 | 143.46 ± 18.50 | 134.43 ± 16.07 | 0.70 |
| 20 | WaterMelonLightGreen_109DPI | Citrullus lanatus | 4 | 271.97 | 297.61 | 284.93 ± 9.12 | 171.94 ± 18.65 | 196.43 ± 24.00 | 187.28 ± 21.71 | 0.73 |

| #Widths † | Pasta fettuccine | Bitter gourd | Bottle gourd | Carrot | Celery | Cucumber | Eggplant long green |
|---|---|---|---|---|---|---|---|
| 1 | 4.713 ± 0.00 A | 53.0 ± 0.05 A | 69.3 ± 0.10 C | 22.1 ± 0.02 B | 30.0 ± 0.03 A | 47.8 ± 0.02 B | 30.0 ± 0.04 B |
| 3 | 4.705 ± 0.00 A | 45.7 ± 0.04 B | 62.4 ± 0.10 A | 22.1 ± 0.02 B | 30.8 ± 0.03 A | 38.9 ± 0.02 D | 28.5 ± 0.03 A |
| 5 | 4.709 ± 0.00 A | 47.9 ± 0.05 E | 64.4 ± 0.10 AB | 22.2 ± 0.02 AB | 30.6 ± 0.03 A | 41.4 ± 0.02 E | 29.3 ± 0.04 AB |
| 7 | 4.706 ± 0.00 A | 48.6 ± 0.05 DE | 65.2 ± 0.10 AB | 22.3 ± 0.02 AB | 30.5 ± 0.03 A | 42.2 ± 0.02 C | 29.5 ± 0.04 B |
| 10 | 4.711 ± 0.00 A | 49.4 ± 0.05 CDE | 65.7 ± 0.10 ABC | 22.7 ± 0.02 A | 30.6 ± 0.03 A | 42.4 ± 0.02 C | 29.2 ± 0.04 AB |
| 15 | 4.708 ± 0.00 A | 49.7 ± 0.05 CD | 65.9 ± 0.10 ABC | 22.4 ± 0.02 AB | 30.4 ± 0.03 A | 43.2 ± 0.02 A | 29.8 ± 0.04 B |
| 20 | 4.710 ± 0.00 A | 50.1 ± 0.05 CD | 66.8 ± 0.10 BC | 22.6 ± 0.02 A | 30.4 ± 0.03 A | 43.3 ± 0.02 A | 29.6 ± 0.04 B |
| 25 | 4.711 ± 0.00 A | 50.1 ± 0.05 CD | 66.2 ± 0.10 BC | 22.4 ± 0.02 AB | 30.4 ± 0.03 A | 43.5 ± 0.02 A | 29.9 ± 0.04 B |
| 50 | 4.710 ± 0.00 A | 50.4 ± 0.05 C | 66.5 ± 0.10 BC | 22.5 ± 0.02 AB | 30.4 ± 0.03 A | 43.7 ± 0.02 A | 29.9 ± 0.04 B |
| 75 | 4.709 ± 0.00 A | 50.4 ± 0.05 C | 66.4 ± 0.10 BC | 22.4 ± 0.02 AB | 30.4 ± 0.03 A | 43.8 ± 0.02 A | 30.0 ± 0.04 B |
| 100 | 4.709 ± 0.00 A | 50.4 ± 0.05 C | 66.5 ± 0.10 BC | 22.5 ± 0.02 AB | 30.4 ± 0.03 A | 43.8 ± 0.02 A | 29.9 ± 0.04 B |
| 150 | 4.709 ± 0.00 A | 50.4 ± 0.05 C | 66.5 ± 0.10 BC | 22.5 ± 0.02 AB | 30.4 ± 0.03 A | 43.8 ± 0.02 A | 29.9 ± 0.04 B |
| 200 | 4.709 ± 0.00 A | 50.4 ± 0.05 C | 66.5 ± 0.10 BC | 22.4 ± 0.02 AB | 30.3 ± 0.03 A | 43.9 ± 0.02 A | 30.0 ± 0.04 B |
| #SigWidths ‡ | 1 ⇔ 1 | 50 ⇔ 7 | 20 ⇔ 3 | 10 ⇔ 3 | 1 ⇔ 1 | 15 ⇔ 10 | 7 ⇔ 3 |

| #Widths † | Eggplant short pink | Green bean | Ivy gourd | Mango | Papaya | Pineapple | Potato |
|---|---|---|---|---|---|---|---|
| 1 | 41.2 ± 0.02 F | 8.8 ± 0.01 B | 23.8 ± 0.01 C | 107.6 ± 0.01 G | 114.9 ± 0.03 G | 118.0 ± 0.03 D | 70.3 ± 0.01 J |
| 3 | 33.5 ± 0.01 G | 8.5 ± 0.01 A | 18.2 ± 0.01 F | 81.7 ± 0.02 F | 87.3 ± 0.02 F | 107.7 ± 0.03 E | 56.4 ± 0.01 I |
| 5 | 35.6 ± 0.01 D | 8.6 ± 0.01 AB | 20.0 ± 0.01 D | 88.4 ± 0.02 D | 95.5 ± 0.02 E | 110.3 ± 0.03 F | 60.7 ± 0.01 G |
| 7 | 36.3 ± 0.01 C | 8.7 ± 0.01 AB | 20.6 ± 0.01 B | 90.7 ± 0.02 C | 98.4 ± 0.03 D | 111.7 ± 0.03 CF | 62.3 ± 0.01 H |
| 10 | 36.5 ± 0.01 C | 8.7 ± 0.01 AB | 20.7 ± 0.01 B | 91.5 ± 0.02 C | 99.2 ± 0.03 D | 111.9 ± 0.03 BC | 62.9 ± 0.01 F |
| 15 | 37.1 ± 0.01 A | 8.7 ± 0.01 AB | 21.2 ± 0.01 E | 93.3 ± 0.02 E | 101.6 ± 0.03 C | 112.8 ± 0.03 ABC | 64.0 ± 0.01 E |
| 20 | 37.2 ± 0.01 AE | 8.7 ± 0.01 AB | 21.3 ± 0.01 E | 93.7 ± 0.02 E | 102.0 ± 0.03 BC | 112.7 ± 0.03 ABC | 64.2 ± 0.01 D |
| 25 | 37.4 ± 0.01 AB | 8.7 ± 0.01 B | 21.4 ± 0.01 AE | 94.1 ± 0.02 AE | 102.5 ± 0.03 ABC | 113.0 ± 0.03 ABC | 64.5 ± 0.01 C |
| 50 | 37.5 ± 0.01 AB | 8.7 ± 0.01 B | 21.5 ± 0.01 A | 94.7 ± 0.02 AB | 103.1 ± 0.03 AB | 113.3 ± 0.03 AB | 64.9 ± 0.01 A |
| 75 | 37.6 ± 0.01 BE | 8.8 ± 0.01 B | 21.6 ± 0.01 A | 94.9 ± 0.02 AB | 103.4 ± 0.03 A | 113.4 ± 0.03 AB | 65.0 ± 0.01 AB |
| 100 | 37.6 ± 0.01 BE | 8.8 ± 0.01 B | 21.6 ± 0.01 A | 95.0 ± 0.02 AB | 103.4 ± 0.03 A | 113.4 ± 0.03 AB | 65.0 ± 0.01 AB |
| 150 | 37.6 ± 0.01 B | 8.8 ± 0.01 B | 21.6 ± 0.01 A | 95.1 ± 0.02 B | 103.5 ± 0.03 A | 113.4 ± 0.03 A | 65.1 ± 0.01 B |
| 200 | 37.6 ± 0.01 B | 8.8 ± 0.01 B | 21.6 ± 0.01 A | 95.1 ± 0.02 B | 103.6 ± 0.03 A | 113.5 ± 0.03 A | 65.1 ± 0.01 B |
| #SigWidths ‡ | 75 ⇔ 15 | 25 ⇔ 3 | 50 ⇔ 20 | 50 ⇔ 20 | 75 ⇔ 20 | 150 ⇔ 10 | 150 ⇔ 50 |

| #Widths † | Snake gourd | Snap melon | Sweet potato | Turnip | Watermelon dark green | Watermelon light green |
|---|---|---|---|---|---|---|
| 1 | 62.7 ± 0.06 D | 109.8 ± 0.06 F | 63.2 ± 0.01 A | 76.6 ± 0.05 F | 150.1 ± 0.03 A | 208.5 ± 0.04 F |
| 3 | 47.7 ± 0.05 C | 83.9 ± 0.05 E | 52.7 ± 0.01 H | 57.3 ± 0.05 E | 114.4 ± 0.03 G | 160.6 ± 0.03 C |
| 5 | 52.6 ± 0.05 A | 91.8 ± 0.06 D | 56.8 ± 0.01 G | 62.5 ± 0.05 C | 124.7 ± 0.03 F | 174.4 ± 0.03 A |
| 7 | 54.2 ± 0.05 AB | 94.3 ± 0.06 CD | 58.1 ± 0.01 F | 64.2 ± 0.05 BC | 128.3 ± 0.03 E | 179.2 ± 0.03 G |
| 10 | 54.7 ± 0.05 BE | 95.9 ± 0.06 BC | 58.4 ± 0.01 E | 64.9 ± 0.05 BD | 129.3 ± 0.03 E | 180.6 ± 0.03 G |
| 15 | 56.1 ± 0.05 EF | 97.2 ± 0.06 AB | 59.6 ± 0.01 D | 66.1 ± 0.05 AB | 132.2 ± 0.03 D | 184.4 ± 0.04 E |
| 20 | 56.2 ± 0.05 EF | 98.0 ± 0.06 AB | 59.7 ± 0.01 D | 66.3 ± 0.05 AD | 132.7 ± 0.03 CD | 185.1 ± 0.04 DE |
| 25 | 56.6 ± 0.05 EF | 98.1 ± 0.06 AB | 60.0 ± 0.01 C | 67.7 ± 0.04 A | 133.4 ± 0.03 BCD | 185.9 ± 0.04 BDE |
| 50 | 57.0 ± 0.05 F | 98.8 ± 0.06 A | 60.3 ± 0.01 B | 67.1 ± 0.05 A | 134.2 ± 0.03 BC | 187.0 ± 0.04 BD |
| 75 | 57.1 ± 0.05 F | 98.9 ± 0.06 A | 60.4 ± 0.01 B | 67.2 ± 0.05 A | 134.5 ± 0.03 B | 187.4 ± 0.04 BD |
| 100 | 57.1 ± 0.05 F | 99.1 ± 0.06 A | 60.4 ± 0.01 B | 67.3 ± 0.05 A | 134.7 ± 0.03 B | 187.6 ± 0.04 B |
| 150 | 57.2 ± 0.05 F | 99.2 ± 0.06 A | 60.5 ± 0.01 B | 67.4 ± 0.05 A | 134.8 ± 0.03 B | 187.8 ± 0.04 B |
| 200 | 57.2 ± 0.05 F | 99.2 ± 0.06 A | 60.5 ± 0.01 B | 67.4 ± 0.05 A | 134.9 ± 0.03 B | 187.9 ± 0.04 B |
| #SigWidths ‡ | 50 ⇔ 10 | 50 ⇔ 10 | 50 ⇔ 25 | 25 ⇔ 10 | 75 ⇔ 20 | 100 ⇔ 20 |
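One way to read the mean-width tables above is to compare the single-width mean (#Widths = 1) with the mean obtained from 200 widths. The sketch below computes that difference as a percentage of the 200-width mean for a few produce types. The choice of the 200-width mean as the reference and the helper name `percent_deviation` are assumptions made for illustration; the article's own deviation formula is not available in this copy.

```python
# Mean widths (mm) copied from the tables above: (1 width, 200 widths).
mean_width_mm = {
    "Pasta fettuccine": (4.713, 4.709),
    "Bitter gourd": (53.0, 50.4),
    "Mango": (107.6, 95.1),
    "Watermelon light green": (208.5, 187.9),
}


def percent_deviation(single: float, reference: float) -> float:
    """Signed deviation of `single` from `reference`, as a percentage (assumed form)."""
    if reference == 0:
        raise ValueError("reference must be non-zero")
    return 100.0 * (single - reference) / reference


deviations = {
    name: percent_deviation(single, reference)
    for name, (single, reference) in mean_width_mm.items()
}

for name, value in deviations.items():
    print(f"{name}: {value:+.2f}% relative to the 200-width mean")
```

For uniform shapes such as pasta fettuccine the two means nearly coincide, whereas for bulged shapes such as mango the single-width value is noticeably larger than the 200-width mean.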

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Igathinathane, C.; Visvanathan, R.; Bora, G.; Rahman, S. Computer Vision-Based Multiple-Width Measurements for Agricultural Produce. AgriEngineering 2025, 7, 204. https://doi.org/10.3390/agriengineering7070204
