1. Introduction

In swine production, respiratory disease morbidity can reach up to 25%, with mortality reaching 2% [1]. Consequently, neglecting respiratory health can lead to productivity and financial losses [2]. Many pathogens can cause respiratory diseases in pigs (e.g., Mycoplasma hyopneumoniae (MH), porcine reproductive and respiratory syndrome virus (PRRS), and swine influenza virus [3]); however, the clinical manifestations are similar across pathogens. Cough, fever, lethargy, and reduced feed intake are common clinical signs of respiratory diseases [3,4].

The development of precision livestock farming (PLF) technologies to monitor clinical signs of respiratory diseases has been widely explored [5,6,7]. The term PLF refers to the use of sensors for continuous and automated monitoring of animals to assist with farm management [8]. Past research on PLF technologies for monitoring respiratory diseases has mainly used microphones to detect pig coughs [5,9,10]. Cough monitoring can serve as an early warning of respiratory diseases, helping farmers to start treatment early and potentially increasing treatment success [11].

Before a new technology is implemented on a commercial farm, it is imperative to assess its effectiveness, which can be achieved by evaluating the performance measures of the PLF technology. However, assessing performance alone is not sufficient, as other key points (e.g., gold standard and study conditions) might hinder performance under field conditions [12].

In the case of technologies developed for monitoring cough, the observation of cough events is used as the gold standard, but many studies identify these cough events from audio files previously recorded at the animals’ site [5,6,13]. Aerts et al. [14] compared the identification of cough events from audio recordings with that of a live observer and concluded that remote labelling underestimated cough events, which could lead to a less reliable dataset. Thus, labelling cough sounds with a live observer at the animals’ site is a preferable gold standard.

Therefore, testing PLF technologies under field conditions using a reliable gold standard is essential to accurately assess their effectiveness. A systematic review identified only one study in the literature that met both conditions, namely field testing and the use of a reliable gold standard [12].

Furthermore, scientific validation of commercially available PLF technologies remains limited. A systematic review found that, among 83 identified technologies, only 12 had any testing documented in the scientific literature [15]. Publishing such evaluations is crucial to demonstrate whether a technology works in real-world settings.

Thus, this study aims to evaluate the performance of a commercially available technology for monitoring cough sounds in pig production under field conditions, using an on-site observer as the reference for cough sound labelling.

2. Materials and Methods

2.1. Animal Use

This study was carried out in accordance with the animal care protocols of the Comissão de Ética em Pesquisa no Uso de Animais (CEUA, protocol #2283 of 2022) of the Pontifícia Universidade Católica do Paraná (PUCPR). Data collection was conducted on a commercial pig farm located in Oliveira, Minas Gerais, Brazil. Data were collected in June 2021 over 6 days. A total of 256 pigs (Landrace × Large White) were involved in the study. Data were collected from 16 pens (8 growing and 8 finishing), divided between two barns, with 16 animals per pen. Animals received water and feed ad libitum. Feed was provided by an automatic feeding system and water by nipple drinkers. A farm worker visually inspected the animals once a day and intervened when animals were hurt or diseased. The research team did not conduct a clinical assessment of the pigs, as the main functionality of the technology being tested is monitoring cough sounds.

2.2. Live Data Collection and Labelling

Data from both barns were collected in the same period. Sixteen microphones (SmartMic, PecSmart, Florianópolis, Brazil) were used to record bioacoustic data in the pens. Each microphone captured sounds within a 10 m radius at a sampling frequency of 44.1 kHz. The microphones were distributed along the barn, each positioned in front of a separate pen. Recordings were carried out in a different pen during each live labelling session.

Data collection was conducted with an on-site observer to assist in identifying cough sounds. This approach aimed to improve the efficiency of cough detection, as remote labelling could lead to an underestimation of labelled cough events [

14]. For collecting coughs events, a cough induction methodology was applied [

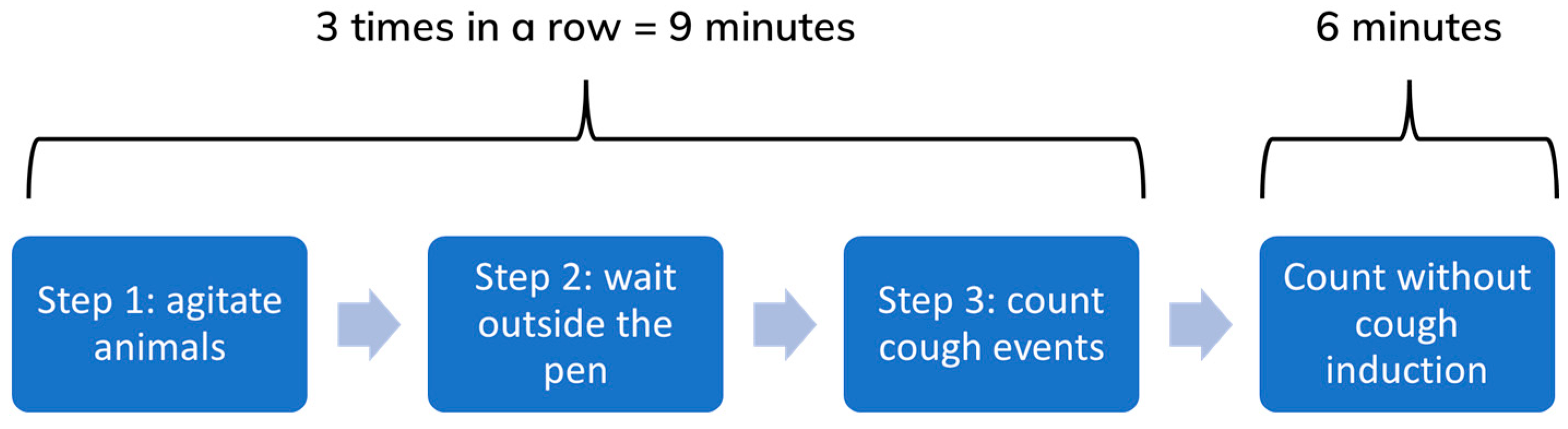

16]. The methodology was applied to induce cough sounds to increase the size of the dataset to train the algorithm. Briefly, the methodology consists of 3 steps that last 1 min each. In step 1, animals were agitated inside the pen (by an observer that walked and emitted sounds by clapping). In step 2, the observer waited a minute outside the pen. In step 3, cough events were annotated by the observer during the third minute. This methodology was repeated 3 times in a row, reaching a total of 9 min. In addition, recordings lasted 6 more minutes to collect cough sounds without the cough induction methodology. Therefore, each recording lasted 15 min. An illustration of the cough induction methodology is presented in

Figure 1.
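The timing of one recording session can be laid out as a simple schedule. The sketch below only restates the durations given in the text; the function name and phase labels are illustrative and not part of the original protocol description.

```python
def induction_schedule(cycles=3, step_min=1, free_min=6):
    """Build (start_min, end_min, phase) tuples for one 15 min recording."""
    steps = ["agitate animals", "observer waits outside", "annotate coughs"]
    schedule = []
    t = 0
    for cycle in range(1, cycles + 1):
        for name in steps:
            schedule.append((t, t + step_min, f"cycle {cycle}: {name}"))
            t += step_min
    # Final block recorded without cough induction.
    schedule.append((t, t + free_min, "recording without induction"))
    return schedule


schedule = induction_schedule()
for start, end, phase in schedule:
    print(f"{start:2d}-{end:2d} min  {phase}")
```

With the default arguments, the induced part ends at minute 9 and the recording ends at minute 15, matching the durations reported above.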

Data were collected in two periods of the day, 8 am to noon and 2 pm to 6 pm. In each period, data collection was conducted in one barn, and the cough induction methodology was applied in all 8 pens of that barn. On the same day, data were collected in both barns, in different periods. For example, if data were collected once in all 8 pens of the finishing barn from 8 am to noon, data were collected once in all 8 pens of the growing barn from 2 pm to 6 pm. Therefore, data from all 16 pens were collected on the same day. The barn order was switched from one day to the next, ensuring that cough sounds from both barns were collected in the morning and in the afternoon.

Every cough event heard was annotated live by one trained observer during data collection. These cough events were classified as “continuous coughs” or “isolated cough”. Cough events were distinguished in this way to simplify the labelling process.

A “continuous coughs” event was recorded when an animal started to cough and continued to cough repeatedly. Thus, when an animal started to cough and coughed again within 5 s, the whole series was considered a single “continuous cough” event. An “isolated cough” event was recorded when the pig coughed only once and did not cough again within the following 5 s.
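The 5 s rule can be expressed as a small grouping routine. This is a minimal sketch under assumptions that are not in the study: it supposes that a timestamp (in seconds) is available for each individual cough of one animal, whereas the observer annotated events live, and it treats a gap of exactly 5 s as belonging to the same event.

```python
from typing import List, Tuple

GAP_S = 5.0  # maximum gap between coughs of one event, taken from the text


def group_cough_events(cough_times: List[float],
                       gap_s: float = GAP_S) -> List[Tuple[str, List[float]]]:
    """Group cough timestamps into 'continuous' or 'isolated' events."""
    events: List[List[float]] = []
    for t in sorted(cough_times):
        if events and t - events[-1][-1] <= gap_s:
            events[-1].append(t)   # still within 5 s of the previous cough
        else:
            events.append([t])     # a new event starts
    return [("continuous" if len(e) > 1 else "isolated", e) for e in events]


# Hypothetical timestamps, for illustration only.
example = group_cough_events([12.0, 14.5, 17.0, 40.0, 63.0, 66.5])
for kind, times in example:
    print(kind, times)
```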

The cough events identified by the live observer were later labelled remotely into cough sounds for training and validating the algorithm. The algorithm is a hybrid of a Convolutional Neural Network and a Recurrent Neural Network (CNN-RNN) that aims to identify cough sounds. A diagram of the proposed algorithm is presented in Figure 2.

The algorithm was trained after three steps: noise elimination, labelling cough events into cough sounds, and feature extraction.

After data collection, recordings went through a noise elimination process. This step removes any noise that might interfere with the identification of a cough sound during labelling.

In the second step, cough sounds were manually labelled. As previously mentioned, the live observer classified cough events into “continuous coughs” and “isolated cough”. These annotations were used as a reference for manually labelling cough events into cough sounds. This step is necessary because the cough sounds labelled from the cough events were used for training the algorithm. For “isolated cough” events, the single cough emitted by the animal was labelled as one cough sound. In contrast, every cough in a “continuous cough” event was labelled as a separate cough sound (e.g., a “continuous cough” event comprising 4 coughs was labelled as 4 independent cough sounds).

The recordings of labelled cough sounds were transformed into spectrogram images that were later used as data for training the algorithm. A spectrogram is a representation of sound that makes it possible to visualize how the sound changes in the time-frequency domain [17]. Examples of spectrograms are presented in Figure 3. The last step consisted of feature extraction. From the spectrogram images, features were extracted as tensors that were used as input for training the CNN-RNN.
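To illustrate what a spectrogram contains, the sketch below computes a log-magnitude spectrogram with a short-time Fourier transform in NumPy. Only the 44.1 kHz sampling frequency comes from the study; the window length, hop length, Hann window, and the synthetic 1 kHz test tone are assumptions made for this example, and the spectrogram settings actually used are not reported.

```python
import numpy as np

SAMPLE_RATE = 44_100  # Hz, sampling frequency reported for the microphones


def log_spectrogram(signal, n_fft=1024, hop=512):
    """Return a (frequency bins x frames) log-magnitude spectrogram in dB."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    # Slice the signal into overlapping, windowed frames.
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window
                       for i in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1))  # (frames, n_fft // 2 + 1)
    return 20.0 * np.log10(magnitude.T + 1e-10)      # small offset avoids log(0)


# Synthetic 1 s, 1 kHz tone standing in for a recorded segment.
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
tone = np.sin(2 * np.pi * 1000.0 * t)
spec = log_spectrogram(tone)
print(spec.shape)
```

Each column of the resulting array describes the frequency content of one short time frame, which is the time-frequency view referred to above.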

2.3. Dataset

An open-world classification approach [18] was used for training the algorithm. With the open-world classification we sought to identify target classes (i.e., cough sounds) while grouping other sounds into a single “other sounds” category. To do so, we labelled sounds from open-source datasets to enhance the diversity of sounds in the dataset for training. The sounds from open-source datasets were labelled within the sound class “other sounds”. The open-source datasets used were as follows:

ESC-50: dataset with environmental sounds such as dog bark (800 samples), rain (700 samples), and engine noise (500 samples) [19];

UrbanSound8K: dataset with urban noise such as siren (800 samples) and car horn (700 samples) [20];

AudioSet: dataset with sounds such as human voice (700 samples) and crowd noise (738 samples) [21].

The final dataset used consisted of 10048 labelled sounds, of which 1110 were “cough sounds” and 8938 were “other sounds”. The subdivision of the “other sounds” class is as follows: farm ambient recordings from the study’s period (4000 samples), ESC-50 (2000 samples), UrbanSound8K (1500 samples), and AudioSet (1438 samples).

The “other sounds” class is heterogeneous, aggregating both farm ambient sounds and samples from open-source repositories, and it is much larger than the “cough sounds” class, which introduces class imbalance. The “other sounds” class thus over-represents the acoustic variability of real-world farms. This imbalance was accepted deliberately to support robust model training, and several measures were used to counter its effects. Class weighting was implemented during mini-batch sampling to give proportionally higher importance to the minority class. Focal loss was used to reduce the contribution of easily classified examples in the “other sounds” class. Finally, data augmentation techniques and transfer learning from pre-trained large-scale models were employed to enrich the feature representation of the target class (i.e., cough sounds).
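The two imbalance measures can be illustrated with the class sizes reported above. This is a sketch under stated assumptions: the inverse-frequency weighting formula and the focusing parameter gamma = 2 are common choices, not values reported for this study, and the function names are illustrative.

```python
import math

# Class sizes reported for the final dataset.
COUNTS = {"cough sounds": 1110, "other sounds": 8938}


def inverse_frequency_weights(counts):
    """Weight each class by total / (number of classes * class size)."""
    total = sum(counts.values())
    return {name: total / (len(counts) * n) for name, n in counts.items()}


def focal_loss(p_true, gamma=2.0, weight=1.0):
    """Focal loss for one example; p_true is the predicted probability
    of the true class. With gamma = 0 this reduces to cross-entropy."""
    return -weight * (1.0 - p_true) ** gamma * math.log(p_true)


weights = inverse_frequency_weights(COUNTS)
easy = focal_loss(0.95)  # confidently correct example, strongly down-weighted
hard = focal_loss(0.30)  # poorly classified example, keeps most of its loss
print(weights, easy, hard)
```

Under this weighting, a cough sample counts roughly eight times as much as an “other sounds” sample, and the focal term makes the many easy “other sounds” examples contribute little to the total loss.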

2.4. Feature Extraction

We evaluated 34 features extracted from 1 s audio segments, optimizing their parameters to maximize classification performance while maintaining computational efficiency. The features fell into three groups: well-established descriptors with extensive validation in the literature, features developed by other researchers but with unclear utility in this specific task, and proprietary feature extraction methods designed in-house.

The first group consisted of seven features explored in previous studies and widely used in audio classification: Mel-Frequency Cepstral Coefficients (MFCC) [22,23], Spectral Centroid [23], Spectral Bandwidth [23], Zero Crossing Rate [23], Chroma Features [23], Spectral Contrast [24], and Tonnetz [25]. Given their well-documented effectiveness, their names have been retained in our analysis. The second group consists of 15 features developed by external researchers that have not been extensively validated for swine cough classification. Their use in this study required empirical assessment; thus, their names were anonymized in the results. The third group consists of 12 feature extraction methods that were developed specifically for this classification task and remain confidential to protect the intellectual property of PecSmart® (Florianópolis, Brazil).
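Two of the named descriptors are simple enough to sketch directly. The functions below follow the textbook definitions of zero crossing rate and spectral centroid computed over a whole 1 s segment; the parameter settings used in the study are undisclosed, and the synthetic 1 kHz tone is only a test input.

```python
import numpy as np


def zero_crossing_rate(x):
    """Fraction of consecutive sample pairs whose sign differs."""
    signs = np.signbit(x)
    return float(np.mean(signs[1:] != signs[:-1]))


def spectral_centroid(x, sample_rate):
    """Magnitude-weighted mean frequency of the segment, in Hz."""
    magnitude = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sample_rate)
    return float(np.sum(freqs * magnitude) / np.sum(magnitude))


sample_rate = 44_100
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000.0 * t)  # synthetic 1 s, 1 kHz tone

zcr = zero_crossing_rate(tone)
centroid = spectral_centroid(tone, sample_rate)
print(zcr, centroid)
```

For a pure tone the centroid sits at the tone frequency and the zero crossing rate is about twice the frequency divided by the sampling rate, which gives a quick sanity check of both functions.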

To optimize the performance of each extracted feature, we applied a Bayesian hyperparameter tuning process using Weights & Biases Sweeps. This involved systematically adjusting relevant parameters to identify configurations that enhanced classification accuracy while maintaining computational efficiency. For example, the MFCC feature extraction process was conducted by tuning the number of coefficients, window length, hop length, and filter bank. Optimization was applied to all features, although specific parameter values remain undisclosed to protect proprietary configurations.
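A Bayesian sweep of this kind is described by a configuration dictionary. The sketch below shows what such a configuration might look like for the MFCC example; the parameter names, candidate values, and metric name are hypothetical, since the actual search space is undisclosed.

```python
# Hypothetical sweep configuration; names and ranges are illustrative only.
sweep_config = {
    "method": "bayes",  # Bayesian optimization over the search space
    "metric": {"name": "val_accuracy", "goal": "maximize"},
    "parameters": {
        "n_mfcc": {"values": [13, 20, 40]},         # number of coefficients
        "win_length": {"values": [512, 1024, 2048]},  # window length, samples
        "hop_length": {"values": [128, 256, 512]},    # hop length, samples
        "n_mels": {"min": 32, "max": 128},            # filter bank size
    },
}

print(sorted(sweep_config["parameters"]))
```

A dictionary of this shape would typically be registered with `wandb.sweep` and executed with `wandb.agent`, with the training function logging the metric named in the configuration.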

The feature extraction process was further accelerated by a proprietary algorithm designed to identify, within 30 s audio recordings, the 1 s segments that are most likely to contain a cough event. Splitting recordings into 1 s segments and pre-selecting those likely to contain cough sounds significantly reduces processing time. Thus, only the most relevant audio segments pass through feature extraction, contributing to computational efficiency.
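The pre-selection algorithm itself is proprietary, so the sketch below substitutes a deliberately simple stand-in: ranking 1 s segments by root-mean-square energy and keeping the loudest ones. The criterion, the function name, the number of segments kept, and the synthetic signal are all assumptions used only to show the idea of pre-selection.

```python
import numpy as np


def top_energy_segments(signal, sample_rate, segment_s=1.0, keep=3):
    """Return indices of the `keep` segments with the highest RMS energy."""
    seg_len = int(segment_s * sample_rate)
    n_segments = len(signal) // seg_len
    segments = signal[:n_segments * seg_len].reshape(n_segments, seg_len)
    rms = np.sqrt(np.mean(segments ** 2, axis=1))
    loudest = np.argsort(rms)[::-1][:keep]
    return sorted(int(i) for i in loudest)


# Synthetic 30 s recording: quiet background with three loud 1 s bursts.
rng = np.random.default_rng(0)
sample_rate = 8000  # reduced rate to keep the example light
recording = 0.01 * rng.standard_normal(30 * sample_rate)
for second in (4, 17, 25):
    start = second * sample_rate
    recording[start:start + sample_rate] += 0.5 * rng.standard_normal(sample_rate)

selected = top_energy_segments(recording, sample_rate)
print(selected)
```

Only the selected segments would then be passed to feature extraction, which is where the saving in processing time comes from.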

The effectiveness of each feature was evaluated using a 10-fold cross-validation procedure. The dataset was divided into ten subsets; in each fold, nine subsets were used for training while one served as the validation set. A custom composite score was computed for each feature, relating the feature’s impact on classification accuracy to its extraction runtime. This evaluation made it possible to identify features with a positive or negative impact on classification performance (Figure 4). Features with negative scores were excluded from the final set.
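The fold structure of a 10-fold cross-validation can be sketched in a few lines. This example covers only the splitting of sample indices; it does not stratify by class, and the composite score is not modelled because its formula is not disclosed. The seed and function name are illustrative.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (training, validation) index lists for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k nearly equal subsets
    for i in range(k):
        validation = folds[i]
        training = [idx for j, fold in enumerate(folds) if j != i
                    for idx in fold]
        yield training, validation


# 10,048 is the size of the final dataset reported above.
splits = list(k_fold_indices(10048))
for training, validation in splits[:2]:
    print(len(training), len(validation))
```

Every sample appears in exactly one validation set, so each feature is scored on data that was not used to fit the model in that fold.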

The final model retained eight features, selected for their impact on classification performance and computational efficiency. The optimized feature extraction process achieved a runtime of 0.04 s per 1 s audio segment.

2.5. Final Model

Several architectures were trained to identify how each hyperparameter correlated with the validation metrics. The final architecture was chosen based on the hyperparameters that showed the highest correlation with the validation metrics. Python (version 3.8) was used for all coding, and Weights & Biases Sweeps was used to optimize the hyperparameters automatically.

The final model is a hybrid architecture that integrates several deep learning components. Two-dimensional convolutional layers were employed to extract patterns from features with a two-dimensional structure, such as spectrogram representations. One-dimensional convolutional layers were used to capture local temporal dependencies from sequential or window-based features. A Recurrent Neural Network (RNN) processes features in a regression-like structure and is tasked with modelling long-term temporal dynamics. The final fully connected layer uses the Softmax activation function to map the output logits into “cough sounds” or “other sounds”. The number of layers, neurons, and other hyperparameters are confidential.
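The last step of the model, mapping two output logits to a class, can be sketched as follows. The Softmax function is standard; the ordering of the classes, the decision threshold of 0.5, and the example logits are assumptions for illustration, as the values used by the technology are not reported.

```python
import math

CLASSES = ("cough sounds", "other sounds")  # class order is an assumption


def softmax(logits):
    """Convert logits to probabilities that sum to 1."""
    peak = max(logits)  # subtracting the maximum avoids overflow
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, threshold=0.5):
    """Label a segment as a cough if its cough probability reaches the threshold."""
    p_cough = softmax(logits)[0]
    return CLASSES[0] if p_cough >= threshold else CLASSES[1]


print(softmax([2.0, -1.0]), classify([2.0, -1.0]))
```

Raising the threshold would trade recall for specificity, which is why the choice of threshold matters for the number of false alarms.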

2.6. Performance

The performance of the algorithm was assessed by comparing the gold standard (labelled coughs from cough events annotated by the live observer) with the output of the algorithm. A threshold was defined for considering a sound as “cough sound” or “other sounds”. True positives (TP) and true negatives (TN) were sounds that both the algorithm and the gold standard identified as cough (TP) or as non-cough (TN). False negatives (FN) were cases in which the gold standard identified a “cough sound” but the algorithm did not. False positives (FP) were cases in which the algorithm identified a “cough sound” that the gold standard did not.
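These four outcomes can be tallied by comparing the two label sequences element by element. The sketch below assumes that gold standard and algorithm labels are available as aligned Boolean lists (True meaning “cough sound”); the example labels are made up.

```python
from collections import Counter


def confusion_counts(gold, predicted):
    """Count TP, FP, FN, and TN from aligned Boolean label lists."""
    counts = Counter()
    for g, p in zip(gold, predicted):
        if g and p:
            counts["TP"] += 1   # both say cough
        elif g and not p:
            counts["FN"] += 1   # cough missed by the algorithm
        elif not g and p:
            counts["FP"] += 1   # algorithm reports a cough that was not one
        else:
            counts["TN"] += 1   # both say non-cough
    return {key: counts[key] for key in ("TP", "FP", "FN", "TN")}


# Hypothetical labels, for illustration only.
gold = [True, True, False, False, True, False]
predicted = [True, False, False, True, True, False]
counts = confusion_counts(gold, predicted)
print(counts)
```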

The performance measures used for analyzing the technology effectiveness were recall, specificity (Sp), precision, accuracy, and F1-score. The algorithm was considered efficient for field application if all performance measures were high (>90%). Each performance measure is calculated by the following equations:
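Assuming the standard confusion-matrix definitions, which are consistent with the TP, TN, FP, and FN described above, the five measures can be written as:

```latex
\begin{align}
\text{Recall} &= \frac{TP}{TP + FN} \\
\text{Sp} &= \frac{TN}{TN + FP} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{F1-score} &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
\end{align}
```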

3. Results

The performance was assessed by comparing the labelled data with the results of the algorithm. Based on the confusion matrix (Table 1) and the equations for recall, Sp, precision, accuracy, and F1-score, the results were 98.6%, 99.7%, 98.8%, 99.6%, and 98.6%, respectively.
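To show how such percentages follow from a confusion matrix, the sketch below computes the five measures from four counts and applies the >90% criterion. The counts are hypothetical and are not the values of Table 1; the formulas are the standard definitions, assumed to match those used in the study.

```python
def performance_measures(tp, fp, fn, tn):
    """Compute the five performance measures from confusion-matrix counts."""
    recall = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"recall": recall, "specificity": specificity,
            "precision": precision, "accuracy": accuracy, "f1": f1}


# Hypothetical counts, for illustration only.
measures = performance_measures(tp=95, fp=5, fn=5, tn=895)
meets_criterion = all(value > 0.90 for value in measures.values())
for name, value in measures.items():
    print(f"{name}: {100 * value:.1f}%")
```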

The algorithm achieved high performance, with all measures > 90% [12]. Usually, PLF technologies for monitoring respiratory diseases emit alarms based on the occurrence of coughs. Therefore, the correct identification of cough events and non-cough events is important for the technology to emit trustworthy alarms. The high Sp indicates that few false alarms are emitted, since the number of sounds mistakenly identified as cough sounds is very low. The high recall also supports the efficiency of the technology, since it indicates that cough sounds are correctly identified and that the probability of missing a cough is very low, reducing the chance that the technology fails to classify a cough sound as a clinical sign.

4. Discussion

Precision livestock farming technologies for monitoring respiratory diseases have been researched for over two decades [5,9,10,13,26], which has allowed studies to improve the technology’s performance over time by exploring different machine learning methods.

The performance measures achieved by the CNN-RNN explored in this article were similar to those of other algorithms found in the literature [5,6,13,25]. These studies were conducted under field conditions, and the algorithms achieved high performance (>90% [12]). However, the gold standard used in these studies is based on remotely labelling sounds from audio files. The issue with this gold standard is that an underestimation of cough sounds may occur [14], leading to a less reliable dataset for training and validating the algorithm.

In our study, data were collected in both the growing and finishing phases so that the algorithm could be applied in different production phases. Unfortunately, we were unable to assess whether the performance of the algorithm differed significantly between phases, because once a sound was in the dataset it was not possible to identify the barn in which it had been recorded. However, the low number of FN and FP indicates that the algorithm should be equally efficient in both phases. Other technical points, such as the selection of features, could play an important role in differences in performance; however, due to the confidentiality of the proposed technology, we were not able to assess the differences in technical aspects.

The technology achieved a high specificity (99.7%), indicating that few sounds were misclassified as coughs. However, even with a low false positive rate (0.3%), practical applications—such as continuous 24 h monitoring—may accumulate a significant number of false detections over time. These false positives should not be disregarded, as they could trigger false alarms regarding cough incidence or compromise analyses related to the pig’s respiratory health. Future studies should explore strategies to mitigate false positives, such as applying temporal filtering based on cough patterns or establishing thresholds for the number of coughs detected within specific time windows to trigger alerts.
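The scale of the problem and one possible mitigation can be sketched numerically. The estimate below assumes that every 1 s segment of a day is classified and that none contains a cough, so it is an upper bound; the window length, the minimum number of coughs, and the detection times are hypothetical values chosen for illustration.

```python
from collections import deque

FALSE_POSITIVE_RATE = 0.003      # 0.3%, from the reported specificity of 99.7%
SEGMENTS_PER_DAY = 24 * 60 * 60  # assumes every 1 s segment is classified

expected_false_coughs_per_day = FALSE_POSITIVE_RATE * SEGMENTS_PER_DAY


def alert_times(detection_times, window_s=600.0, min_coughs=5):
    """Return detection times at which at least `min_coughs` detections
    fall within the preceding `window_s` seconds."""
    recent = deque()
    alerts = []
    for t in sorted(detection_times):
        recent.append(t)
        while t - recent[0] > window_s:
            recent.popleft()  # drop detections that left the window
        if len(recent) >= min_coughs:
            alerts.append(t)
    return alerts


scattered = alert_times([0, 3000, 6000, 9000])    # sparse detections
clustered = alert_times([100, 150, 200, 250, 300])  # burst of detections
print(expected_false_coughs_per_day, scattered, clustered)
```

Under these assumptions, up to about 259 false detections per microphone per day could accumulate, whereas a windowed threshold ignores sparse detections and raises an alert only when detections cluster in time.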

The construction of an “other sounds” class with a broad spectrum of non-target sounds serves two purposes. First, it reflects the acoustic heterogeneity of field conditions. Second, it challenges the classifier to distinguish subtle features of pig coughs from a wide array of ambient sounds. The integration of the open-source datasets with distinct sound classes enhanced the robustness of the training process. The adoption of class weighting, focal loss, and data augmentation further mitigates the adverse effects of class imbalance, thereby improving model generalizability.

It is important to note that this study was conducted on only one farm. In machine learning, over-fitting is a problem that occurs when the algorithm achieves high performance with training data but low performance under different environments or conditions. A varied training dataset is required to prevent over-fitting [27]. Although an open-world approach was used to minimize this effect, it is necessary to test the algorithm on different farms, which may have different sound variability.

The technology tested in the current study is a tool that can give the farmer insights into the respiratory health of their animals. The cough detection system empowers farmers to investigate the underlying causes of the cough, whether due to environmental factors, infections, or other health concerns.

As more studies present technologies with high efficiency in monitoring cough sounds, we see that diagnosing specific types of disease based on bioacoustics could be further explored. Information regarding the type of disease affecting animals could improve decision-making on prevention and treatment, which could help farmers to better manage livestock health. Some studies have tested the possibility of using bioacoustic data to diagnose the etiology of the disease (e.g., PRRS, postweaning multisystemic wasting syndrome (PMWS), Mycoplasma hyopneumoniae (MH), swine influenza virus, Actinobacillus pleuropneumoniae, and porcine circovirus 2 (PCV2)) [28,29,30].

Cough monitoring may also be used to identify problems other than respiratory diseases. Some studies in the literature have investigated the use of cough monitoring for assessing air quality [31,32]. This could be problematic for some technologies, as some coughs may be caused by poor air quality and not by respiratory diseases, leading to an increase in the number of false alarms. The development of technologies able to differentiate between the coughs of sick animals and the coughs of healthy animals could decrease false alarms. Such a system could be developed by collecting cough data from sick and healthy animals and using these data to train an algorithm able to differentiate both types of coughs.

Our study has some limitations that need to be explored in the future. One such limitation is that the technology used is not able to identify the primary stressor provoking the clinical sign. It is important to point out that a technology able to identify specific causes of coughs is required to have a more impactful role in the pig industry. Furthermore, as clinical assessment was not conducted, it is not possible to determine whether the coughs collected were indeed caused by respiratory diseases; the technology only indicates that the number of coughs is increasing in the herd. As conducted in some studies [29,30], pathogen identification would be necessary to indicate whether the technology is able to identify coughs that were caused by respiratory diseases. Another limitation of our approach is that cough events were annotated by a single observer. Although the observer received prior training in identifying cough sounds, it was not possible to independently verify potential bias or confirm that all annotated events were indeed true coughs. Ensuring the quality and accuracy of annotated data is critical for reliably evaluating the performance of the technology. This limitation could be addressed by involving multiple annotators and assessing inter-observer agreement to ensure consistency and reliability in the annotations.