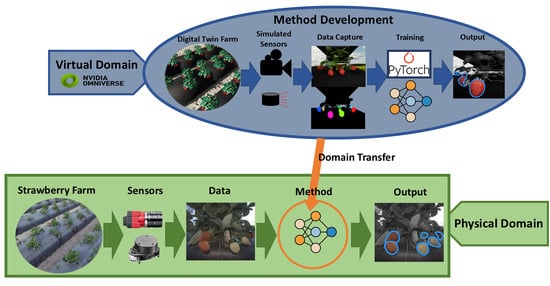

From Simulation to Field Validation: A Digital Twin-Driven Sim2real Transfer Approach for Strawberry Fruit Detection and Sizing

Abstract

1. Introduction

- Develop a virtual representation of a plasticulture strawberry farm containing beds, plants, and environment for robotic sensing applications.

- Gather RGB stereo imaging data in the virtual and physical domains for the tasks of fruit count and fruit size.

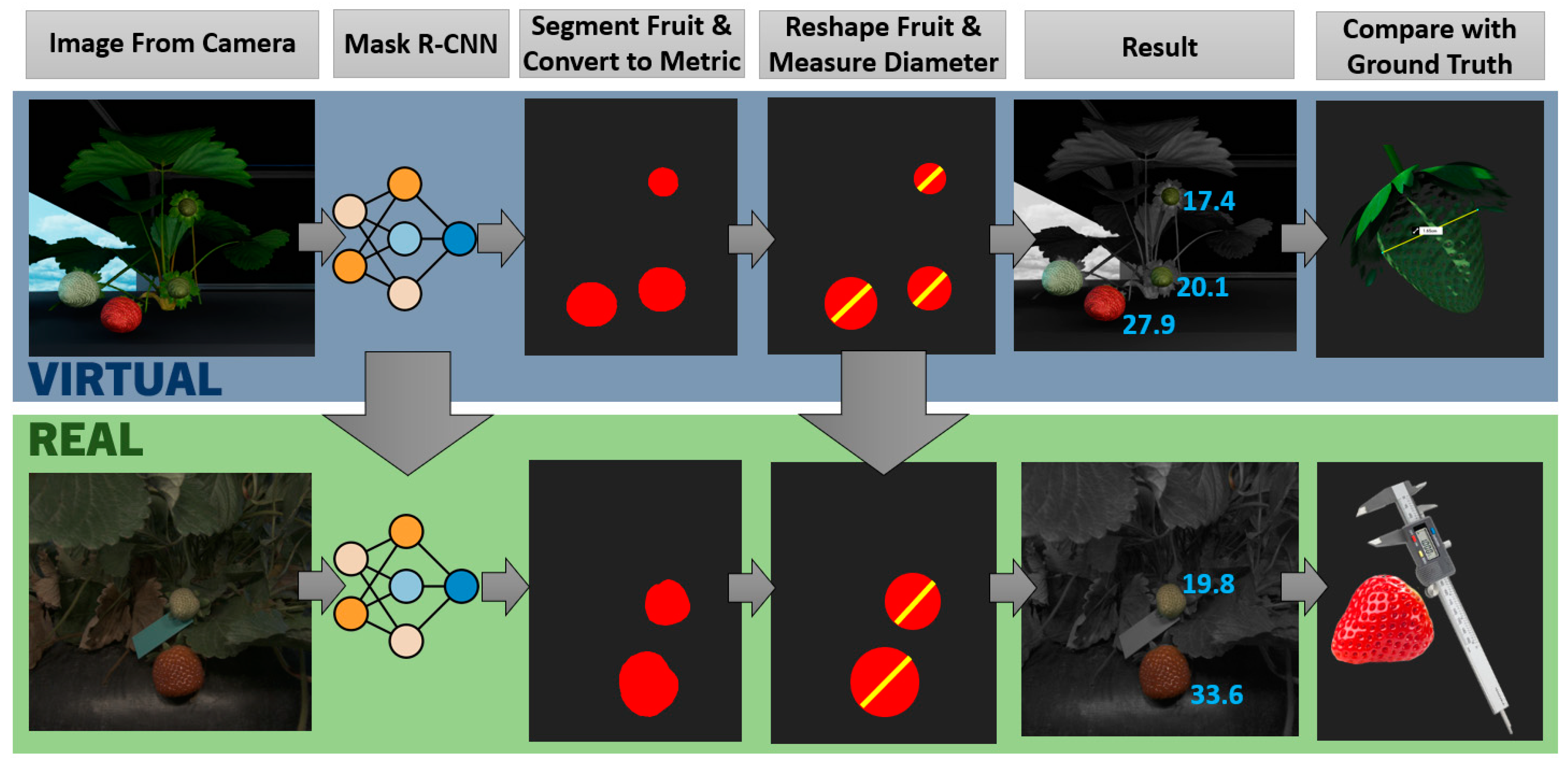

- Implement fruit detection and sizing algorithms in simulation using the digital twin and apply them to field data.
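
The stereo-based sizing objective above can be illustrated with the standard pinhole-camera relations. This is a minimal sketch and not the authors' pipeline: it assumes a rectified stereo pair, and the function names, focal length, baseline, disparity, and pixel width are all hypothetical values chosen for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point seen in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def metric_width(pixel_width: float, depth_m: float, focal_px: float) -> float:
    """Metric width (m) of an object spanning pixel_width pixels at depth_m: W = w * Z / f."""
    return pixel_width * depth_m / focal_px


# Hypothetical camera and detection values (not taken from the study).
FOCAL_PX = 1400.0    # focal length in pixels
BASELINE_M = 0.12    # distance between the two cameras in metres

depth = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity_px=280.0)
width = metric_width(pixel_width=70.0, depth_m=depth, focal_px=FOCAL_PX)
print(f"depth = {depth:.3f} m, fruit width = {width * 1000:.1f} mm")
```

Because the same relations hold for a simulated camera and a physical one, a sizing routine of this kind can in principle be checked against known fruit dimensions in simulation before it is applied to field images.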

2. Materials and Methods

2.1. Field Data Collection

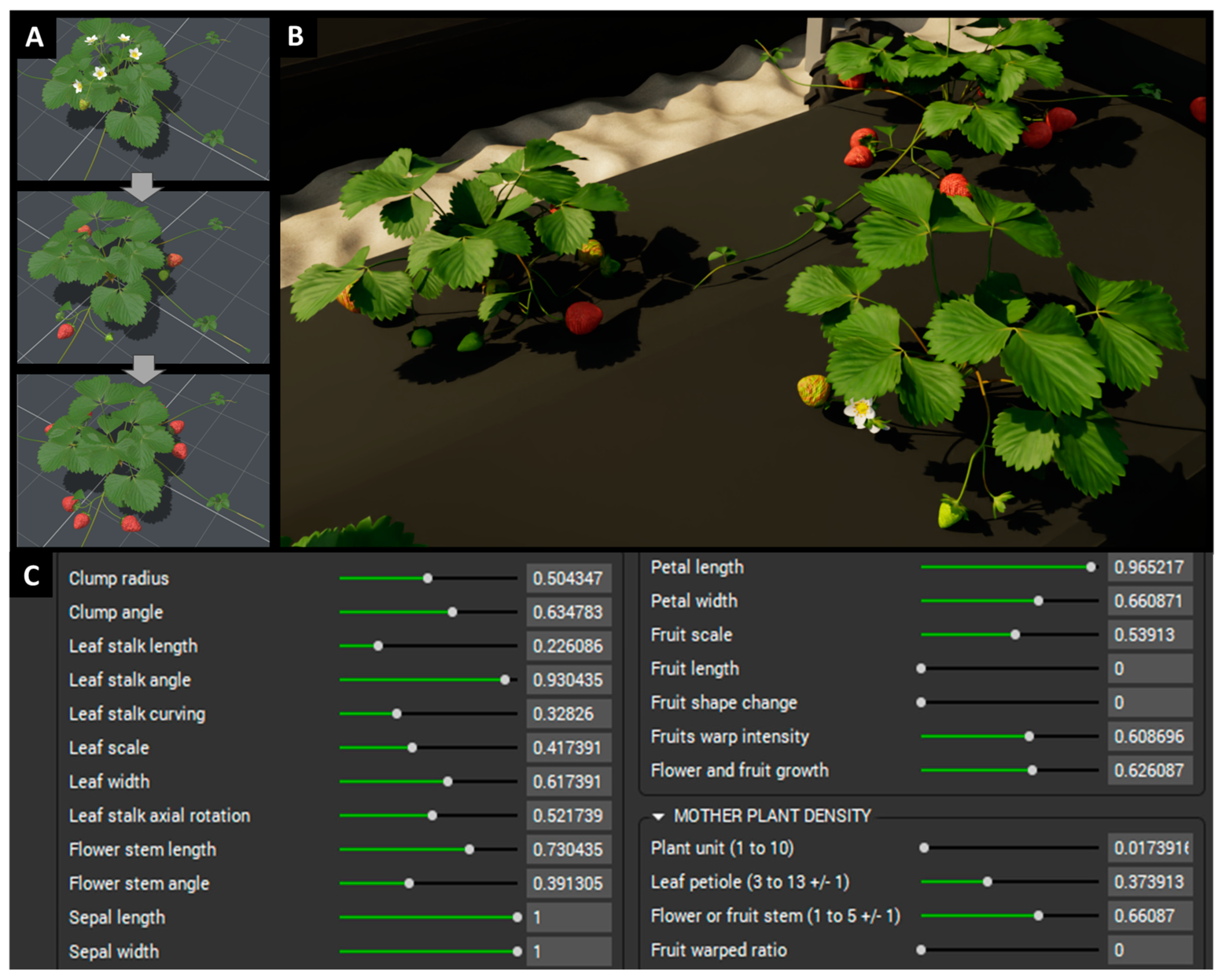

2.2. Virtual Strawberry Farm Implementation

2.2.1. Strawberry Farm Scene

2.2.2. Ground Vehicle and Camera System

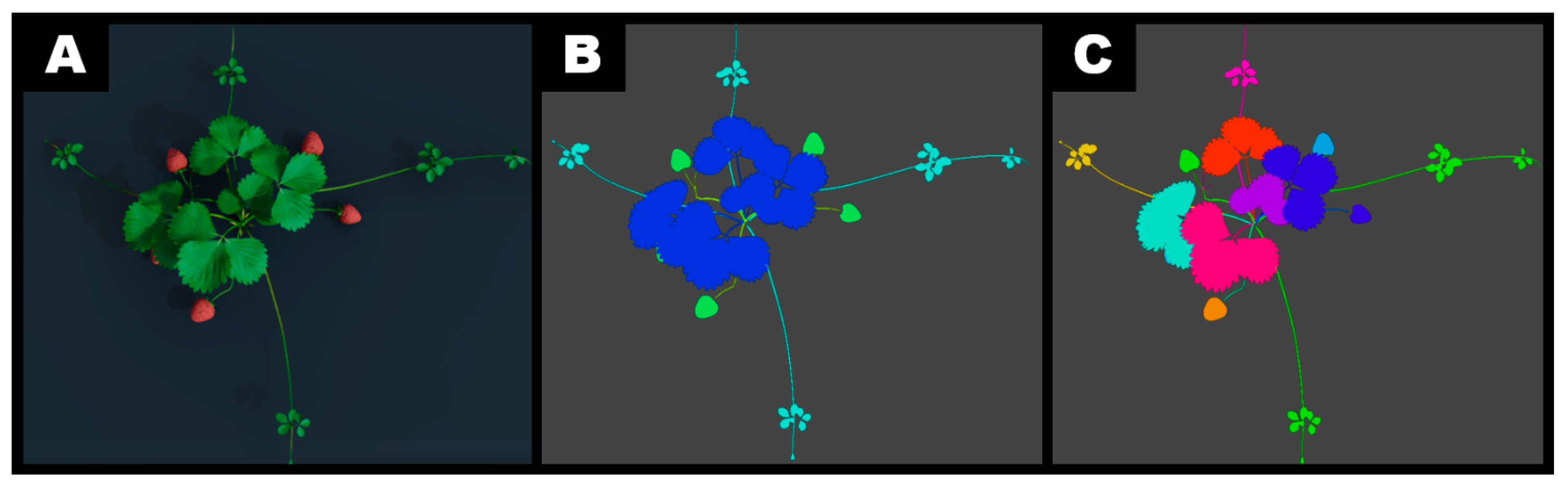

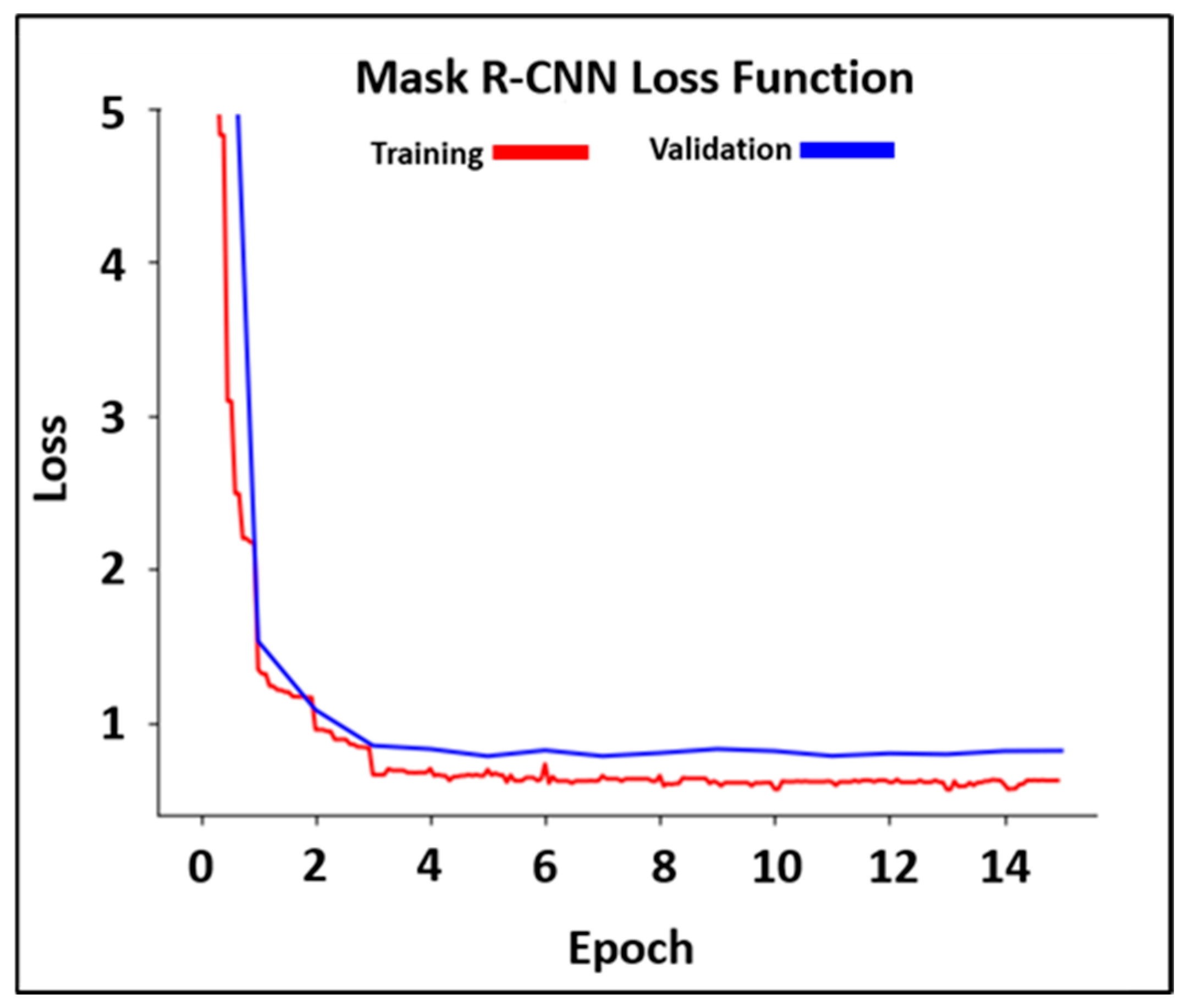

2.3. Fruit Detection Model Training Using Synthetic Data

2.4. Validating Strawberry Fruit Sizing Pipeline with Synthetic Data

3. Results and Discussions

3.1. Comparison of Virtual and Real Strawberry Farms

3.2. Fruit Detection Model Performance Using Synthetic Data

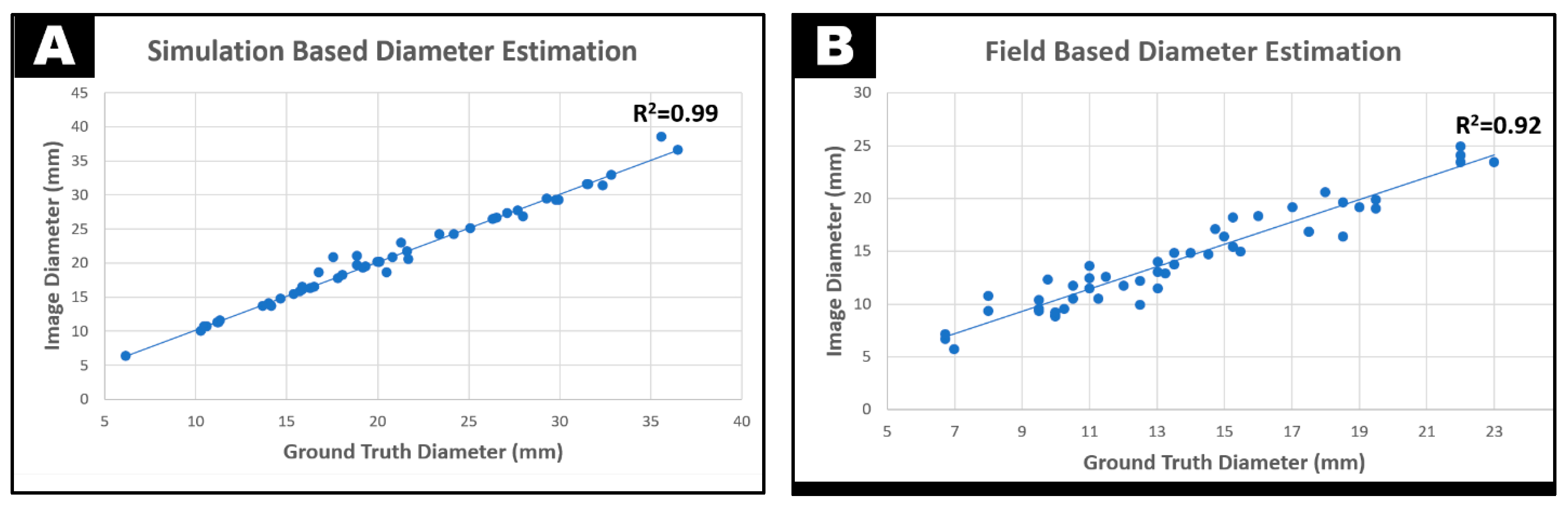

3.3. Fruit Size Estimation Using Synthetic and Field Data

3.4. Limitations and Future Recommendations

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| R-CNN | Region-based CNN |
| Sim2real | Simulation-to-Reality |
| GCREC | Gulf Coast Research and Education Center |
| URDF | Unified Robot Description Format |
| GPS | Global Positioning System |
| RGB | Red–Green–Blue |
| LED | Light-Emitting Diode |
| RMSE | Root Mean Squared Error |
| ROS | Robot Operating System |


| Field Experiment Date (2023) | Data Type Collected | Number of Plants Imaged | Number of Images Processed |
|---|---|---|---|
| 16 March | Images | 50 | 75 |
| 20 March | Images + Ground-Truth Fruit Size | 50 | 60 |

| Fruit Detection Neural Network Model | | | Fruit Sizing Method | |
|---|---|---|---|---|
| Training Synthetic Images | Validation Synthetic Images | Testing Real Images | Validation Synthetic Images | Testing Real Images |
| 315 | 50 | 135 | 50 | 50 |

| Label | Description | Simulated Lidar Sensor (m) | Real Lidar Sensor (m) | Ground-Truth Measurement (m) |
|---|---|---|---|---|
| (W) | Bed Spacing | 0.50 | 0.53 | 0.51 |
| (X) | Bed Width | 0.72 | 0.71 | 0.74 |
| (Y) | Bed Height | 0.26 | 0.29 | 0.25 |
| (Z) | Front Wheel Returns | Sensor proximity effect | Sensor proximity effect | N/A |
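
The agreement between the simulated and real lidar measurements in the table above can be summarized numerically. The sketch below is illustrative only: it copies the three bed dimensions from the table and computes per-dimension errors and an RMSE. The RMSE over these three values is not a statistic reported in the source, and the names `BED_DIMENSIONS` and `rmse` are assumptions introduced here.

```python
import math

# Bed dimensions in metres, copied from the table above.
# Each tuple is (simulated lidar, real lidar, ground truth).
BED_DIMENSIONS = {
    "Bed Spacing": (0.50, 0.53, 0.51),
    "Bed Width": (0.72, 0.71, 0.74),
    "Bed Height": (0.26, 0.29, 0.25),
}


def rmse(estimates, references):
    """Root mean squared error between two equal-length sequences."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimates, references)]
    return math.sqrt(sum(squared) / len(squared))


simulated = [row[0] for row in BED_DIMENSIONS.values()]
real = [row[1] for row in BED_DIMENSIONS.values()]
truth = [row[2] for row in BED_DIMENSIONS.values()]

sim_rmse = rmse(simulated, truth)
real_rmse = rmse(real, truth)
sim_vs_real_rmse = rmse(simulated, real)

for name, (sim, rl, gt) in BED_DIMENSIONS.items():
    print(f"{name}: simulated error {sim - gt:+.2f} m, real error {rl - gt:+.2f} m")
print(f"RMSE vs ground truth: simulated {sim_rmse:.3f} m, real {real_rmse:.3f} m")
print(f"RMSE simulated vs real: {sim_vs_real_rmse:.3f} m")
```

With only three dimensions this is a sanity check rather than a statistic. Every tabulated lidar value, simulated or real, lies within 0.04 m of the ground-truth measurement.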

| | Simulation Trial | | | | Field Trial 1 (16 March) | | | | Field Trial 2 (20 March) | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | C | P | R | F1 | C | P | R | F1 | C | P | R | F1 |
| All Fruit | 329 | 0.92 | 0.90 | 0.91 | 295 | 0.95 | 0.89 | 0.92 | 134 | 0.89 | 0.75 | 0.81 |
| Red Fruit | 139 | 0.97 | 1.0 | 0.99 | 167 | 0.95 | 0.99 | 0.97 | 39 | 0.87 | 0.95 | 0.91 |
| White Fruit | 87 | 0.93 | 0.92 | 0.92 | 61 | 1.00 | 0.87 | 0.93 | 52 | 0.98 | 0.81 | 0.89 |
| Green Fruit | 103 | 0.89 | 0.79 | 0.83 | 67 | 0.94 | 0.72 | 0.82 | 43 | 0.83 | 0.58 | 0.68 |
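
The F1 column in the table above can be reproduced from the P and R columns. The sketch below assumes that P and R denote precision and recall and that F1 is their harmonic mean, which is the usual convention for detection metrics. The function name `f1_score` and the selection of rows are illustrative choices made here.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from selected rows of the table above.
ROWS = {
    "Simulation Trial, All Fruit": (0.92, 0.90, 0.91),
    "Field Trial 1, All Fruit": (0.95, 0.89, 0.92),
    "Field Trial 2, All Fruit": (0.89, 0.75, 0.81),
    "Field Trial 2, Green Fruit": (0.83, 0.58, 0.68),
}

recomputed = {name: f1_score(p, r) for name, (p, r, _) in ROWS.items()}
for name, (p, r, reported) in ROWS.items():
    print(f"{name}: P={p:.2f}, R={r:.2f}, F1={recomputed[name]:.2f} (reported {reported:.2f})")
```

Recomputing from the rounded P and R values can differ from a reported F1 in the last digit, presumably because the reported scores were derived from unrounded values. The lower recall for green fruit in Field Trial 2 (0.58) is what pulls that row's F1 down to 0.68.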

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mirbod, O.; Choi, D.; Schueller, J.K. From Simulation to Field Validation: A Digital Twin-Driven Sim2real Transfer Approach for Strawberry Fruit Detection and Sizing. AgriEngineering 2025, 7, 81. https://doi.org/10.3390/agriengineering7030081
