Abstract

Smart agricultural machinery builds on traditional agricultural equipment and integrates modern information technologies to achieve automation, precision, and intelligence in agricultural production. Significant progress has been made in the autonomous operation and monitoring technologies of smart agricultural machinery in China. However, challenges remain, including poor adaptability to complex environments, high equipment costs, and difficulties with system implementation, standardization, and integration. To help industry professionals quickly understand the current state of the field and to promote the rapid development of smart agricultural machinery, this paper provides an overview of the key technologies related to autonomous operation and monitoring in China’s smart agricultural equipment. These technologies include environmental perception, positioning and navigation, autonomous operation and path planning, agricultural machinery status monitoring and fault diagnosis, and field operation monitoring. Each of these key technologies is discussed with examples and analyses. On this basis, the paper analyzes the main challenges facing the development of autonomous operation and monitoring technologies in China’s smart agricultural machinery sector and explores future directions for their development. This research is important for promoting the transition of China’s agricultural production towards automation and intelligence, improving agricultural production efficiency, and reducing reliance on human labor.

1. Introduction

Agricultural mechanization is the main pathway and fundamental direction for the development of modern agriculture, while the intelligence of agricultural machinery is a key task and advanced goal in improving the current level of agricultural mechanization. With the continuous advancement of agricultural mechanization and informatization, China has now entered a critical stage of accelerating the transition from traditional to modern agriculture. Driven by intelligent technologies, the automation, precision, and efficiency of agricultural machinery have significantly enhanced the efficiency and quality of agricultural production. Smart agricultural machinery is 50% to 60% more efficient than conventional machinery, and during the crop planting phase its use can increase per-acre yield by 5% to 10%. Although the total number of agricultural machines in China has reached 200 million units, with a total power of 1.1 billion kilowatts and an overall mechanization rate of over 74%, high-end intelligent agricultural machinery accounts for less than 10% of the total fleet and relies primarily on imports. At present, China faces an aging rural labor force, labor shortages in rural areas, and an imbalance between supply and demand in the agricultural labor market. To achieve modernization, labor reduction, and automation in agricultural production, the development of agricultural machinery intelligence is crucial [1].

The “14th Five-Year Plan” points out that the development of smart agriculture is a key direction for China’s continued high-quality agricultural development. As a key component of smart agriculture, intelligent agricultural machinery improves the quality and efficiency of agricultural production and enhances the precision and intelligence of agricultural practices [2]. Fully integrating new-generation information technology into agricultural mechanization, and using advanced information technologies and sensors to upgrade traditional agricultural machinery, transforms agricultural machinery operations, significantly improving production efficiency and reducing reliance on manual labor [3]. Autonomous operation and monitoring are the core features of intelligent agricultural machinery. These systems autonomously plan paths for agricultural operations while intelligently perceiving and precisely monitoring their own status and operational processes. The level of development in these technologies determines the degree of intelligence in agricultural machinery. Currently, China continues to increase investment in the research and development of autonomous operation and monitoring technologies for intelligent agricultural machinery. Significant progress has been made in this field, with initial results in precise operations and intelligent management across various stages of agricultural production. However, there remains a gap compared to advanced international levels, and further breakthroughs are urgently needed.

Some countries began the process of agricultural modernization early and have developed a higher level of intelligent agricultural machinery, with well-established research systems for autonomous operation and monitoring technologies. Internationally, the primary focus for achieving the autonomous operation of agricultural machinery is through research of key technologies such as obstacle avoidance and path planning [4]. In Italy, Reina et al. [5] developed a multi-sensor perception system to enhance the environmental awareness of agricultural vehicles in farmland. By integrating various onboard sensor technologies, including stereovision, LiDAR, radar, and thermal imaging, the system automatically detects obstacles and distinguishes between passable and impassable areas. In Germany, Höffmann et al. [6] divided complex agricultural areas into simpler units, each equipped with a guide rail, forming a fixed track system. The subsequent stages of route planning and smooth path planning calculate a path that adheres to path constraints, optimally navigating through the units while aligning with the track system. This laid a solid foundation for accurate and efficient agricultural coverage path planning. Multi-sensor perception provides real-time environmental data, allowing the obstacle avoidance system to take obstacles into account during path planning and optimize the route. Path planning technology dynamically adjusts the working route based on real-time environmental data, ensuring that agricultural machinery not only avoids obstacles in complex farmland environments but also completes tasks autonomously and efficiently.

In the process of operation monitoring, the focus is on integrating artificial intelligence technology with agricultural machinery, applied to various agricultural processes such as tilling, planting, and harvesting [7]. Intelligent operation monitoring aims to improve the accuracy and efficiency of field operations, thereby increasing crop yields and promoting the advancement of agricultural machinery equipment [8,9]. In Iran, Karimi et al. [10] designed and built a new seeder monitoring system based on an infrared seed sensor developed earlier. Hall-effect sensors measure ground speed, and location information provided by a GPS module is used to record seeding data. Ultrasonic sensors continuously measure the seed and fertilizer levels in the hopper. In South Korea, Kim [11] employed various image processing techniques, including Hough transformation, hue-saturation-value (HSV) color space conversion, image morphological techniques, and Gaussian blur, to accurately track seeding rates and locate missed seeding areas in mechanical seeders with planting trays. The integration of multi-sensor and image processing technologies enhances monitoring capabilities during the operation process, ensuring the accuracy of fieldwork under various environmental conditions. This provides more reliable data support for agricultural production.

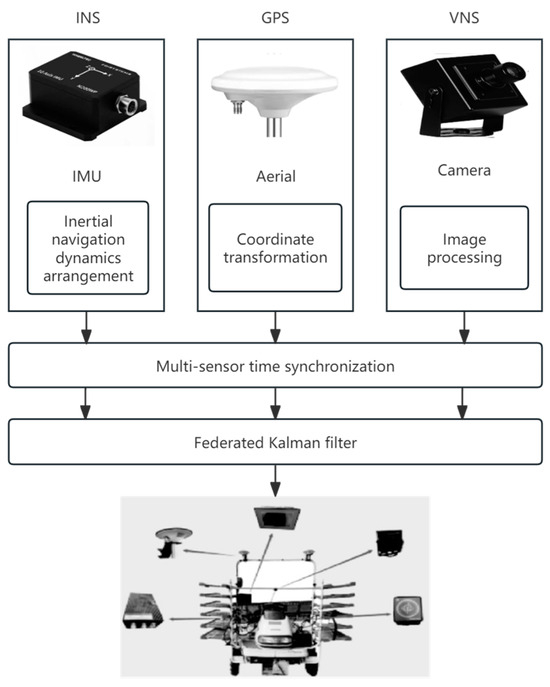

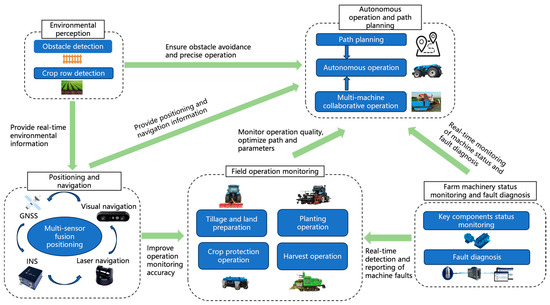

This paper reviews the current development status of key technologies in the autonomous operation and monitoring of intelligent agricultural machinery in China. It provides a comprehensive analysis of the main research achievements across the entire process, from environmental perception technology, positioning and navigation technology, autonomous operation and path planning technology to agricultural machinery status monitoring and fault diagnosis technology, as well as the monitoring technologies for tilling, planting, crop protection, and harvesting operations in the field. The system architecture diagram is shown in Figure 1. By examining these key technologies, the paper discusses the issues that still need further research and solutions. It also looks ahead to the development trends of autonomous operation and monitoring technologies, offering suggestions for future directions toward developing intelligent agricultural machinery in China.

Figure 1.

Agricultural autonomous unmanned operation system architecture diagram.

2. Environmental Perception Technology

With the rapid development of agricultural mechanization and automation, the demand for agricultural machinery to achieve autonomous operation in complex and variable field environments is increasing. Environmental perception technology, as a critical component of unmanned agricultural machinery in achieving autonomous operation, directly impacts operational accuracy. Environmental perception mainly involves obstacle detection and recognition, as well as crop row detection.

2.1. Obstacle Detection and Recognition

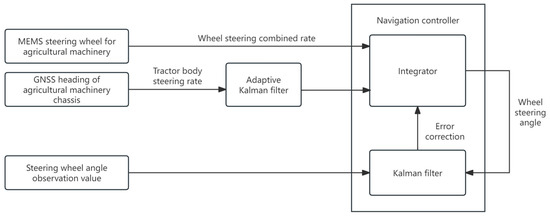

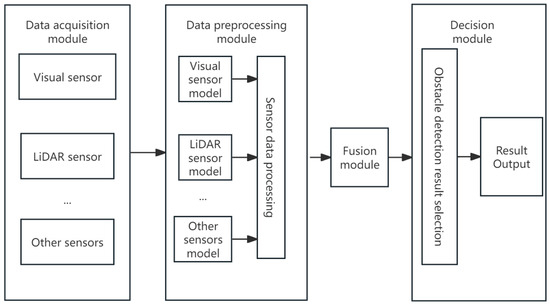

With the development of intelligent agricultural machinery, automatic navigation technology has been widely applied in production. To ensure the safe operation of agricultural machinery, it is essential to continuously detect and identify obstacles, ensuring the reliability and safety of autonomous operation. Obstacle detection in agricultural machinery primarily relies on technologies such as Light Detection and Ranging (LiDAR), millimeter-wave radar, ultrasonic sensors, and visual sensors [12]. LiDAR provides a large amount of distance information through relatively high-frequency signals and can operate in all weather conditions. Millimeter-wave radar, with its longer wavelength, has stronger penetration capabilities, especially through smoke, and can measure not only the precise distance to a target but also its relative speed. Ultrasonic sensors are suitable for low-speed, short-range sensing. Visual sensors detect obstacles automatically from images and videos, using the corresponding algorithms to determine the location of the target obstacle [13]. In practical applications, a single sensor cannot guarantee reliable results in every scenario, so most researchers combine multiple sensors and perform obstacle detection through sensor fusion. The specific process is shown in Figure 2.

Figure 2.

Obstacle detection method using multi-sensor fusion.

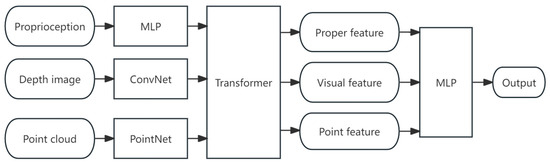

Li Xinghao [14] proposed an obstacle detection and recognition system that integrates LiDAR and machine vision. The LiDAR sensor determines information such as the distance, location, and size of obstacles, while the visual camera identifies the number and category of obstacles. The complementary functions of these two sensors provide a solution for obstacle detection in agricultural fields. Lv Youhao et al. [15] proposed a multi-modal information fusion Transformer architecture, with the model structure shown in Figure 3. This combines the proprioceptive states from robot state estimation, inertial measurement unit readings, and motor encoders with inputs from visual cameras and LiDAR. This approach enables the complementary and collaborative reasoning of three types of modalities. By utilizing end-to-end reinforcement learning, the robot is trained to autonomously avoid obstacles and traverse dynamically unknown complex terrains.

Figure 3.

Multi-modal information fusion neural network model.

2.2. Crop Row Detection

Crop row detection is crucial for the precise operation of agricultural machinery, with its core being crop row identification and row control. Unmanned agricultural machinery achieves tasks such as precise inter-row weeding, spraying, and harvesting by detecting the position and direction of crop rows and continuously adjusting lateral offsets. The main sensors used for this purpose are visual sensors and LiDAR, with common methods primarily relying on vision-based image processing techniques and multi-sensor fusion for crop row detection.

Vision-based image processing techniques primarily identify crop rows by analyzing features such as color and texture in the images. With the development of machine learning and deep learning technologies, learning crop row features through pre-training on large datasets has become an important method for crop row detection. Li Hongbo et al. [16] proposed a crop row detection method based on YOLOv8-G. The YOLOv8-G object detection algorithm is used to extract the central position of maize seedlings, followed by clustering analysis using an affinity propagation clustering algorithm. Finally, the crop rows are fitted using the least squares method. This detection method can quickly and accurately identify crops in the field and fit the target crop rows, even under complex lighting conditions and interference from weeds.
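The final least-squares step of such a pipeline can be illustrated with a minimal sketch. It assumes that seedling centers have already been detected and grouped into rows; the detector and the clustering step of [16] are not reproduced. The function name `fit_row_line` and the sample coordinates are hypothetical. Because crop rows in a forward-looking image tend to run close to vertical, the sketch fits x as a function of y, which is an assumption made here to avoid near-infinite slopes.

```python
def fit_row_line(points):
    """Fit x = a*y + b by ordinary least squares to (x, y) seedling centers."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points to fit a line")
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    # Spread of y and covariance of x with y around the means.
    syy = sum((p[1] - mean_y) ** 2 for p in points)
    if syy == 0:
        raise ValueError("all points share one y value; x = a*y + b is undefined")
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points)
    a = sxy / syy
    b = mean_x - a * mean_y
    return a, b


# Hypothetical, already-clustered seedling centers in pixel coordinates.
rows = {
    0: [(100, 0), (102, 50), (104, 100)],
    1: [(300, 0), (300, 60), (300, 120)],
}
lines = {row_id: fit_row_line(pts) for row_id, pts in rows.items()}
```

Each entry of `lines` holds the slope and intercept of one fitted row line, from which a lateral offset relative to the image center could be derived.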

The fusion of LiDAR and vision can effectively capture both the three-dimensional and color information of target objects, addressing the problem of poor adaptability associated with single sensors. Jiang Qing et al. [17] proposed a corn crop row detection method based on the fusion of solid-state LiDAR and an RGB camera. The method involves preprocessing the acquired corn crop row images and point cloud data. The preprocessed image and point cloud data are then fused to achieve “point cloud coloring”. Using the “colored” point cloud, clustering is performed, and the crop planting agronomic standards (such as row spacing) are incorporated to validate the usability of the point cloud information and color information. The optimal data are selected to complete the clustering of the crop row region of interest. Finally, the clustering of the target point cloud’s feature points is determined by dividing the point cloud into horizontal strips, from which the crop row feature points are extracted. The crop row detection line is then fitted using the least squares method.
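The “point cloud coloring” step can be sketched as a projection of each LiDAR point into the image followed by a color lookup. The sketch below is not the implementation of [17]. It assumes an ideal pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and it assumes the points have already been transformed into the camera frame by an extrinsic calibration that is not shown.

```python
def color_point_cloud(points_cam, image, fx, fy, cx, cy):
    """Attach an RGB color to every 3-D point that projects inside the image.

    points_cam: iterable of (X, Y, Z) in the camera frame, Z pointing forward.
    image: list of pixel rows, each a list of (R, G, B) tuples.
    """
    height, width = len(image), len(image[0])
    colored = []
    for X, Y, Z in points_cam:
        if Z <= 0:
            continue  # point lies behind the camera
        # Pinhole projection to pixel coordinates (u: column, v: row).
        u = int(round(fx * X / Z + cx))
        v = int(round(fy * Y / Z + cy))
        if 0 <= u < width and 0 <= v < height:
            colored.append((X, Y, Z, image[v][u]))
    return colored


# Hypothetical 4x4 image with a single green pixel, and four sample points.
image = [[(0, 0, 0)] * 4 for _ in range(4)]
image[2][2] = (0, 255, 0)
points = [(0, 0, 1), (1, 0, 1), (0, 0, -1), (-0.5, 0.5, 1)]
colored = color_point_cloud(points, image, fx=2, fy=2, cx=2, cy=2)
```

Points that fall outside the image or behind the camera are dropped, so only points carrying both geometry and color reach the later clustering stage.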

4. Autonomous Operation and Path Planning Technology

Autonomous operation and path planning technology refers to the ability of robots to autonomously navigate and plan paths in an unknown environment by using their own sensors to gather environmental information, enabling them to complete specific tasks. With the rapid development of agricultural machinery autonomous navigation technologies, intelligent agricultural equipment is playing an increasingly important role in agricultural production, and farms are entering the era of automation [46].

Autonomous operation allows agricultural machinery to complete specific tasks without human intervention, reducing the reliance on manual labor. It enables uninterrupted work, reduces labor costs, and significantly increases agricultural production efficiency. The autonomous operation system can also dynamically adjust its operational strategy based on real-time data and environmental changes, enhancing the flexibility and adaptability of the work. Path planning algorithms are designed to generate the optimal path that covers the entire work area for agricultural machinery. Path planning technology helps find the best route from the starting point to the destination, saving time and resources. It also helps avoid obstacles and find feasible paths. Multi-machine collaborative operation refers to the efficient and collaborative completion of agricultural tasks in the same working scenario by multiple agricultural machines, achieved through intelligent technology, communication technologies, and collaborative control algorithms.

In modern agriculture, achieving autonomous operation and efficient path planning for agricultural machinery is of great significance for improving production efficiency and reducing costs. For instance, full-coverage path planning for multi-machine collaborative operations greatly enhances the overall work efficiency of agricultural machinery [47], and the autonomous tracking and obstacle avoidance functions of agricultural machinery provide new solutions for operations in complex environments [48]. Autonomous operation technology, path planning algorithms, and multi-machine collaborative operation work together to improve agricultural work efficiency, reduce labor costs, and optimize resource utilization. These are the core components of the intelligent development of agricultural machinery in modern agriculture.

4.1. Autonomous Operation Technology

With the continuous development of China’s economy, the decreasing number of agricultural laborers, and the rising labor costs, the traditional agricultural production model can no longer meet the current demands for automation, low cost, and high efficiency. In recent years, research related to autonomous operation of agricultural machinery has become an important focus in the field of agricultural mechanization. This research encompasses several key technologies, including obstacle avoidance technology, road defect detection technology, and path planning technology.

Agricultural machinery obstacle avoidance technology refers to how agricultural machines precisely identify and effectively avoid obstacles during autonomous operation, ensuring the safety of both the equipment and personnel. Field road defect detection technology aims to promptly identify issues such as mud, water accumulation, and other problems on farm roads, preventing the machine from becoming stuck in depressions or holes. Agricultural machinery path planning technology is designed to ensure that machines travel along the optimal path during operations, thereby improving work efficiency, reducing energy consumption, and protecting crops [49].

Ma Wenqiang [50] conducted research on the autonomous operation control model for transplanting robots. Based on a kinematic model, the study utilized a pure pursuit method to decompose the motion of a four-wheel steering chassis. To address the drawback of the pure pursuit method, which cannot dynamically adjust the lookahead distance in real-time, a particle swarm optimization-based pure pursuit method was proposed, enabling dynamic adjustment of the lookahead distance during the tracking process. Chen Zeyu [49] discussed and researched the implementation of obstacle avoidance technology in autonomous agricultural operations and how edge deployment can be achieved. For issues such as pedestrian detection in fields and the detection of road defects, lightweight models were optimized and designed using machine learning techniques. The edge deployment of obstacle avoidance technology for agricultural machinery was implemented through the Jetbot edge intelligence carrier on Jetson Nano. Meng Liwen et al. [51] elaborated on methods for the precise positioning and alignment of robots during the pruning and shaping process of single plants such as spheres, columns, and cones under complex environmental conditions. They analyzed the robot’s self-adaptive alignment method based on image recognition, as well as the features and advantages of a mapping method that integrates multi-line LiDAR and monocular vision. They also pointed out potential optimization improvements. Additionally, they researched and described a pruning robot for autonomous operations, including methods for seedling recognition, mapping, and alignment, significantly improving the automation and intelligence of pruning equipment. Li Mingchun [52] designed a binocular vision-based perception system that enables bulldozers to autonomously identify obstacles and provide real-time feedback on their depth information while performing leveling operations along a planned path. 
The system can also estimate earthwork information based on point clouds, facilitating construction process planning. Cai Daoqing [53] achieved the fusion of vision and millimeter-wave radar in both time and space. Using valid targets selected by the millimeter-wave radar as seed points, the system completed the task of detecting obstacle dimensions in the visual depth map. Bai Xiaoping [54] proposed a comprehensive distributed intelligent control solution for the autonomous harvesting operations of agricultural machinery. He developed core devices with independent intellectual property rights for intelligent harvesting control systems, including a header profiling device, an online grain breakage rate detection device, a foreign material loss rate detection device, a sieve opening adjustment device, and a concave clearance adjustment device.
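The pure pursuit method mentioned above for path tracking can be illustrated with a minimal sketch. It uses the standard bicycle-model steering law, delta = atan(2 L sin(alpha) / l_d), rather than the four-wheel steering decomposition of [50]. The particle swarm tuning of the lookahead distance is also not reproduced; a simple speed-proportional rule with hypothetical gains stands in for it. All function names and parameter values are assumptions for illustration.

```python
import math


def lookahead_distance(speed, k=0.8, min_ld=1.0, max_ld=5.0):
    """Stand-in rule: lookahead grows with speed, clamped to [min_ld, max_ld]."""
    return max(min_ld, min(max_ld, k * speed))


def pure_pursuit_steering(pose, target, wheelbase, ld):
    """Steering angle (rad) that drives a bicycle model toward the target point.

    pose: (x, y, yaw) of the rear axle; target: (x, y) lookahead point;
    ld: lookahead distance, assumed to be the distance from pose to target.
    """
    x, y, yaw = pose
    # Angle between the vehicle heading and the line to the lookahead point.
    alpha = math.atan2(target[1] - y, target[0] - x) - yaw
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


ld = lookahead_distance(speed=2.5)
straight = pure_pursuit_steering((0.0, 0.0, 0.0), (ld, 0.0), wheelbase=1.0, ld=ld)
left = pure_pursuit_steering((0.0, 0.0, 0.0), (0.0, ld), wheelbase=1.0, ld=ld)
```

A target straight ahead yields zero steering, while a target to the left yields a positive angle. A shorter lookahead makes tracking more aggressive, which is why adjusting it online matters.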

4.2. Path Planning Algorithm

Path planning algorithms aim to generate the optimal path that covers the entire working area for agricultural machinery. In agriculture, path planning is often integrated into agricultural robots. Agricultural robot path planning refers to the use of autonomous navigation technologies and intelligent path planning algorithms to enable agricultural robots to effectively avoid obstacles during operations, thereby autonomously planning efficient, safe, and low-energy work paths [55,56]. Figure 6 shows a scenario where the intelligent agricultural machine performs autonomous turning and U-turns using a path optimization algorithm.

Figure 6.

Autonomous U-turn operation using the path optimization algorithm.

Common algorithms used in agricultural machinery path planning include graph-based search algorithms (such as the A* algorithm and Dijkstra’s algorithm), sampling-based algorithms (such as the RRT algorithm [57]), and swarm optimization algorithms (such as Particle Swarm Optimization (PSO)). These algorithms take into account the shape of the farmland, the location of the obstacles, and the motion characteristics of the machinery to generate efficient operational paths. They are widely used to address issues such as pathfinding and obstacle avoidance in agricultural machinery operations [58].

The A* algorithm is frequently used for optimal path planning in static and complex environments. By combining the cost of the path traveled so far with a heuristic estimate of the remaining cost to the goal, it can efficiently find the optimal path [59]. Dijkstra’s algorithm finds the shortest path in weighted graphs, and in agricultural robot path planning it has been improved and adapted to practical scenarios. The basic idea of the PSO algorithm is to transform the optimization problem into a search problem in a multidimensional space, where particles move through this space in search of the optimal solution. The RRT algorithm is a sampling-based random search algorithm for path planning, and it has been widely applied in agricultural machinery path planning. Each of these algorithms has its advantages and disadvantages. A comparative analysis of the current mainstream path planning algorithms for agricultural machinery is shown in Table 2.

Table 2.

Comparative analysis of mainstream path planning algorithms for agricultural machinery.
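To make the graph-search family concrete, the following is a minimal A* sketch on a 4-connected occupancy grid, where cells marked 1 are obstacles. The grid, the unit step cost, and the Manhattan-distance heuristic are illustrative assumptions and are not taken from any of the cited works.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (blocked) cells."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g_cost = {start: 0}
    parent = {start: None}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if g > g_cost[cell]:
            continue  # stale entry; a cheaper route to this cell was found
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    parent[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable


# Hypothetical field map: a row of obstacles with a single gap.
field = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = astar(field, (0, 0), (2, 0))
```

Setting the heuristic to zero turns the same loop into Dijkstra’s algorithm, which shows how closely the two graph-based methods are related.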

With the advancement of technology, the integration of multi-sensor and multi-path planning technologies will help improve the efficiency and precision of agricultural production, and the application prospects of path planning technology in the agricultural sector are becoming increasingly broad [60]. Jiang Xinbo et al. [61] proposed a path planning algorithm that combines an improved A* algorithm with the Artificial Potential Field (APF) algorithm. By optimizing the heuristic function of the A* algorithm, introducing intermediate nodes, and employing non-uniform cubic spline interpolation, global path planning is achieved. Additionally, by modifying the gravitational potential field function and integrating the simulated annealing algorithm, the obstacle avoidance capability of the APF method is enhanced. The key points of the global path are extracted as sub-goal points, and the APF algorithm is applied for secondary planning. Xin Peng et al. [62], addressing the issues of high randomness and slow convergence speed in RRT, introduced the APF method into RRT. They proposed a method that guides the random tree growth using obstacles and the target, which reduces the randomness of tree growth and accelerates the convergence speed. Wang Yu et al. [63], considering the problem of repeated searching in RRT, proposed a coverage search method to avoid redundant sampling, significantly reducing the number of nodes maintained by the random tree, which facilitates the algorithm’s rapid convergence. Zou Qijie et al. [64] incorporated reinforcement learning into RRT, using it to optimize the direction of random tree growth and process the initial path, resulting in a more optimal path. Deng Yizhao et al. [65] proposed an improved RRT algorithm, which accelerates the convergence speed by modifying the random expansion approach of the traditional RRT algorithm, thereby avoiding local optima. 
They also introduced a one-step memory mechanism mathematically and improved the sampling method of traditional RRT. A random rotation handling mechanism for collision nodes was introduced to overcome the local optimum trap, and a bidirectional tree growth mechanism was incorporated to accelerate the algorithm’s convergence. Li Zhaoying et al. [66] integrated Deep Q-Networks (DQN) into RRT, and they proposed the concept of a variable step size. By using DQN to learn the optimal growth direction and step size, they improved the planning efficiency of the algorithm. Luo Ronghao et al. [67] proposed a static full-coverage path planning method and path optimization scheme. Based on information about rectangular fields, obstacles, the minimum turning radius of agricultural machines, and tool widths, the method automatically selects parameters such as work direction, work spacing, headland turning area, and turning mode. Furthermore, the algorithm was optimized to meet the needs for a high coverage rate and low repetition rate in field operations, based on the shape of obstacles and the requirement for a high coverage-to-work path ratio.
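The idea of full-coverage planning on a rectangular field can be sketched as a back-and-forth pattern of parallel passes. This is a simplified illustration and not the method of [67]: obstacles, turning radius, and turning-mode selection are ignored, and the function name and all dimensions are hypothetical.

```python
import math


def coverage_passes(field_width, field_length, tool_width, headland):
    """Return parallel passes as ((x, y_start), (x, y_end)) in travel order."""
    if not 0 < tool_width <= field_width:
        raise ValueError("tool_width must be positive and fit inside the field")
    if 2 * headland >= field_length:
        raise ValueError("headlands leave no room for working passes")
    n_passes = math.ceil(field_width / tool_width)
    y_low, y_high = headland, field_length - headland
    passes = []
    for i in range(n_passes):
        # Center each pass on its swath; pull the last pass inside the field,
        # which trades a small overlap for complete coverage.
        x = min((i + 0.5) * tool_width, field_width - tool_width / 2)
        if i % 2 == 0:
            passes.append(((x, y_low), (x, y_high)))
        else:
            passes.append(((x, y_high), (x, y_low)))
    return passes


passes = coverage_passes(field_width=10.0, field_length=50.0,
                         tool_width=3.0, headland=5.0)
# Overlapped width relative to field width, a simple repetition-rate proxy.
repetition = (len(passes) * 3.0 - 10.0) / 10.0
```

Alternating the travel direction means that each pass ends next to the start of the following one, so only a headland turn is needed between passes.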

PSO, A*, and other classical algorithms perform well in simple environments but have limitations in complex and large-scale scenarios. With the development of artificial intelligence and the rise of machine learning, deep learning and reinforcement learning have been applied in path planning, enabling them to handle complex environments and large-scale data. This has significantly improved the adaptability and intelligence of path planning.

Deep learning-based path planning is a technology that uses deep neural networks to model and optimize environmental perception, path decision making, and trajectory planning. By learning the inherent patterns of path planning samples, it enables agricultural machinery to autonomously learn and plan feasible movement paths. With its strong environmental perception ability, adaptability, and high efficiency and precision, deep learning-based path planning is being widely applied in agricultural machinery. The Transformer, as an important architecture in deep learning, is specifically designed to handle complex sequential data. With its self-attention mechanism, it demonstrates powerful feature extraction and global modeling capabilities across various domains. Not only has it revolutionized traditional Natural Language Processing (NLP) methods, but it has also been successfully applied in fields such as computer vision and audio processing. By incorporating Transformer networks into path planning, the self-attention mechanism captures temporal dependencies in long sequences without relying on traditional sequential information processing methods. This allows for more accurate inference of the current state and selection of the optimal path [68].
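The self-attention mechanism referred to above can be illustrated with a minimal scaled dot-product sketch. It omits the learned query, key, and value projections, multiple heads, and positional encoding of a real Transformer; the inputs are used directly as queries, keys, and values, which is an assumption made only to keep the example short. The waypoint values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Hypothetical sequence of 2-D waypoint features attending to itself.
waypoints = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]]
context = self_attention(waypoints, waypoints, waypoints)
```

Every output row mixes information from all positions in one step, which is how long-range dependencies are captured without processing the sequence element by element.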

Dai Shengtang et al. [69] addressed the collaborative path planning problem in multi-UAV systems by utilizing deep reinforcement learning methods. They designed an efficient path planning framework and developed kinematic models for differential drive unmanned vehicles and mathematical models for collaborative obstacle avoidance scenarios. Based on this, they further analyzed the challenges of deep reinforcement learning in handling complex dynamic environments with high-dimensional state spaces and continuous action spaces, such as slow training speeds, low sampling efficiency, and poor adaptability. This work provides a theoretical foundation for multi-UAV collaborative path planning research. Yang Bu et al. [70] proposed an improved deep deterministic policy gradient algorithm for the path planning of the mechanical arm of intelligent weeding robots, addressing the lack of active weed avoidance path planning in the field of robotic weeding. Zhuang Jinwei et al. [71] proposed an optimal global coverage path planning method aimed at minimizing vehicle losses along the route. Based on the DQN algorithm, they created a reward strategy based on the vehicle’s real trajectory during operation, optimizing losses such as reducing the number of turns, U-turns, and overlapping work areas. They also designed the RLP-DQN algorithm for this purpose. Luo Xiangwen et al. [72] designed a real-time obstacle target recognition system based on the Swin Transformer network. Combining this with the RRT* algorithm and Bézier curve-based path fitting algorithm, they proposed a Swin Transformer-based autonomous driving path planning algorithm. Using video frames as the data source, they constructed an obstacle image dataset through data augmentation. After obstacle recognition, path smoothing optimization was applied to complete path planning. Li Juan et al. [73] proposed a path planning method for crop detection robots based on a lightweight Transformer. 
They replaced the softmax function with a cosine function to sidestep the costly softmax computation while retaining the key features of attention computation. This approach further reduces time complexity. Experiments conducted in farmland at different scales showed that the robot’s path length was shortened by 5.91%, inference time was reduced by 50% compared to the Transformer model, and training time was reduced by 75%. Xiong Chunyu et al. [74] proposed a path planning method for citrus harvesting robotic arms in unstructured environments that combines deep reinforcement learning (DRL) with artificial potential fields, to overcome the low efficiency and low success rate of picking path planning when deep reinforcement learning is used alone in unstructured environments.
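The artificial potential field idea that recurs in several of the planners above can be sketched in its classical form: the goal attracts, and obstacles within an influence radius repel. The sketch below is a plain 2-D version with hypothetical gains `k_att` and `k_rep` and influence radius `d0`. It is not the modified potential function of any cited work and does not include the reinforcement learning component.

```python
import math


def apf_step(pos, goal, obstacles, k_att=1.0, k_rep=1.0, d0=2.0, step=0.1):
    """Move one fixed-length step along the combined potential-field force."""
    # Attractive force pulls linearly toward the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0 < d < d0:
            # Repulsive force grows sharply as the obstacle gets closer.
            mag = k_rep * (1.0 / d - 1.0 / d0) / d ** 2
            fx += mag * dx / d
            fy += mag * dy / d
    norm = math.hypot(fx, fy)
    if norm == 0:
        return pos  # zero net force: at the goal or in a local minimum
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)


free_step = apf_step((0.0, 0.0), (10.0, 0.0), [])
pushed_step = apf_step((0.0, 0.0), (10.0, 0.0), [(1.0, -0.5)])
```

The zero-force case is the local-minimum weakness of the basic method, which helps explain why the works above pair it with a global planner, simulated annealing, or a learned policy.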

4.3. Multi-Machine Collaborative Operation

Multi-machine collaborative operation refers to the use of intelligent technologies, communication technologies, and collaborative control algorithms to enable multiple agricultural machines to efficiently and cooperatively complete agricultural production tasks in the same operational scenario. This approach involves the following two core aspects: task allocation and path planning [75]. Multi-machine collaborative operations can enhance work efficiency, save operational time, and reduce food waste caused by untimely harvesting of crops [76].

With the development of smart agriculture, multi-machine collaborative operation in agricultural machinery is increasingly applied in large-scale field production processes, contributing to enhanced production efficiency. Agricultural machinery path planning is a critical foundational technology in the implementation of smart agriculture. Depending on the number of operating machines, path planning can be divided into single-machine operation path planning and multi-machine collaborative operation path planning. Currently, in large-scale farmland operations, multi-machine collaborative operation is gradually replacing single-machine operation, becoming the main method of operation in field agriculture [77]. Figure 7 shows the collaborative operation scene of the unmanned radish harvester and transport vehicle. By integrating multi-machine collaboration with path planning, it becomes possible to effectively promote efficient agricultural production under complex and dynamic environmental conditions.

Figure 7.

Collaborative operation of unmanned radish harvester and transport vehicle.

Wu Jian et al. [78], addressing the issue of poor endurance of single small inter-row weeding robots that are unable to independently complete large-scale weeding tasks in rice fields, proposed a multi-machine collaborative path planning method based on a multi-chromosome optimized genetic algorithm (MGA). They established a multi-machine collaborative path planning model. Xie Jinyan et al. [79], in order to improve the work efficiency of multiple unmanned mowing machines during collaborative operation in a new apple orchard, proposed an improved genetic algorithm (IGA) to assign and optimize operation paths for each mower. Based on the actual operation of unmanned mowers, they constructed a multi-machine operation path optimization model with total turning time and operation duration as comprehensive optimization objectives. Ma Haojie [80] aimed to minimize the movement distance of the most labor-intensive spiral propulsion vehicle and addressed the multi-machine collaborative weeding operation problem by establishing a multi-machine collaborative task allocation model. Liu Xiaoming [81] proposed a 5G-based multi-machine collaborative system for plant protection drones, where the cloud serves as the core for multi-machine networking. Through 5G and onboard nodes, the cloud can directly control the plant protection drones. A data transmission protocol between the plant protection drones and the cloud control terminal was designed to enable the cloud to acquire field information and monitor/control the drones during the multi-machine collaboration process. Additionally, a genetic algorithm-based task allocation method for multi-machine plant protection operations was proposed, allowing the cloud to rationally allocate and schedule tasks across multiple machines, thus improving overall operational efficiency. Gao Wenjie [77] proposed a U-shaped and bow-shaped turning path selection strategy for a rule-based farmland operation scenario. 
He designed a multi-machine collaborative operation path planning method for both regional and full-region operations and conducted field validation tests. Tang Can et al. [82] addressed the multi-drone path planning problem under the condition of farmland blocks with obstacles and proposed a complete multi-drone collaborative operation path optimization algorithm solution. Li Han et al. [83] designed and developed a WebGIS-based multi-machine collaborative navigation service platform to provide map and navigation service support for multi-machine agricultural machinery collaborative operations.
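
Several of the studies above frame multi-machine task allocation as a genetic algorithm search. The following is a minimal sketch of that idea, not the method of any cited work: it assumes each plot has a known working time, that every machine works at the same speed, and that the objective is simply the working time of the busiest machine. The function names, the one-point crossover, and all parameter values are illustrative assumptions.

```python
import random


def makespan(assignment, plot_hours, n_machines):
    """Return the working time of the busiest machine for one assignment."""
    loads = [0.0] * n_machines
    for plot, machine in enumerate(assignment):
        loads[machine] += plot_hours[plot]
    return max(loads)


def allocate_tasks_ga(plot_hours, n_machines, pop_size=40, generations=200,
                      mutation_rate=0.1, seed=0):
    """Assign each plot to one machine with a basic genetic algorithm.

    A chromosome is a list whose i-th gene is the machine index of plot i.
    The cost of a chromosome is its makespan (lower is better).
    Requires at least two plots (one-point crossover needs a cut position).
    """
    rng = random.Random(seed)
    n_plots = len(plot_hours)
    population = [[rng.randrange(n_machines) for _ in range(n_plots)]
                  for _ in range(pop_size)]

    def cost(chromosome):
        return makespan(chromosome, plot_hours, n_machines)

    for _ in range(generations):
        population.sort(key=cost)
        elite = population[: pop_size // 2]       # keep the better half unchanged
        children = []
        while len(elite) + len(children) < pop_size:
            parent_a, parent_b = rng.sample(elite, 2)
            cut = rng.randrange(1, n_plots)        # one-point crossover
            child = parent_a[:cut] + parent_b[cut:]
            for i in range(n_plots):               # per-gene random mutation
                if rng.random() < mutation_rate:
                    child[i] = rng.randrange(n_machines)
            children.append(child)
        population = elite + children

    best = min(population, key=cost)
    return best, cost(best)


# Hypothetical example: six plots (working hours) shared by three machines.
hours = [4, 3, 3, 2, 2, 2]
plan, busiest = allocate_tasks_ga(hours, n_machines=3)
print("plot -> machine:", plan, "| busiest machine hours:", busiest)
```

A practical model would add the terms mentioned in the cited studies, such as turning time, travel distance between plots, and battery endurance, to the cost function.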

5. Farm Machinery Status Monitoring and Fault Diagnosis

In the process of modern agricultural mechanization, real-time monitoring of agricultural machinery’s operational status and fault diagnosis are crucial for improving operational efficiency and ensuring safe equipment operation. By leveraging advanced sensor technologies, data analysis methods, and intelligent diagnostic systems, it is possible to monitor the status of key components of agricultural machinery and provide early fault warnings. Fault diagnosis can predict and prevent potential mechanical, electrical, and hydraulic failures by monitoring the status of critical parts, determining the fault location, and providing technical support for troubleshooting. The application of operational status monitoring and fault diagnosis technologies in agricultural machinery helps achieve intelligent equipment management, improves operational efficiency, and reduces maintenance costs.

5.1. Key Components Status Monitoring

In agricultural production, due to the impact of working conditions and the intensity of tasks, agricultural machinery is prone to wear and tear, which can lead to malfunctions. Monitoring the operational status and key components of agricultural machinery can serve as an early warning system, transforming passive responses into proactive measures, thus effectively reducing the impact of faults. Monitoring the status of critical components involves using sensors to collect information on the condition of these parts, providing real-time monitoring to assess the overall operational state and performance of the machinery. Fault monitoring can track the current state of the machinery, perform diagnostic analysis, and enable remote data transmission through a data transmission module, providing essential data support for health management and fault diagnosis.

Wen Xin et al. [84] developed a remote monitoring system for a corn fertilizing machine, using the STM32103 main controller for data processing and conversion. The BC20 wireless communication module was responsible for data transmission. Through the OneNet platform, real-time remote monitoring of key parameters such as the fertilizing machine’s speed, coordinates, and fertilizer distribution shaft status was achieved on both PC and mobile platforms. Xiao Fengming [85] installed various sensors on critical parts of agricultural machinery to collect data on their operation, performance, and status. These data were then uploaded to a cloud platform via industrial control computers and communication networks. Additionally, a deep learning-based fault prediction model was developed to automatically analyze state changes and assess the likelihood of faults, enabling automatic alerts for typical mechanical failures.

5.2. Fault Diagnosis Technology

During sowing and harvesting operations, agricultural machinery typically operates under high intensity and full-load conditions, making it highly susceptible to mechanical failures. By monitoring key components of agricultural machinery and collecting information on the operating status of critical parts, real-time monitoring and fault diagnosis of the machine’s operation can be achieved through various detection modules and intelligent monitoring terminals. Currently, common fault diagnosis technologies include vibration signal feature extraction technology, intelligent fault diagnosis technology, and multi-parameter and multi-fault diagnosis techniques.

Vibration signal feature extraction is a crucial method in mechanical fault diagnosis. It involves measuring the machine’s vibration and noise signals and processing the fault signals using techniques such as time-domain analysis, frequency-domain analysis, and time-frequency analysis to extract fault features. With the rapid development of sensor technology, monitoring, and diagnostic techniques, intelligent fault diagnosis has attracted widespread research attention. Intelligent fault diagnosis typically utilizes fault association models based on simulation dynamics, big data, and neural network-driven fault analysis and health assessment, combined with fuzzy theory, regression prediction, and time series analysis techniques, to establish a comprehensive diagnostic system [86]. The multi-parameter and multi-fault diagnosis method integrates various types of information, such as vibration signals, electromagnetic signals, radiation, and stress, to provide comprehensive monitoring and fault diagnosis of agricultural machinery [87].

Zhang Yaping et al. [88] proposed an adaptive selection method for the Laplace wavelet parameters and damping parameters of the wavelet model to address the fault diagnosis of rolling bearings in agricultural machinery. They established a Laplace wavelet model that is the most suitable for the impact characteristics of the signal. Qi Meng et al. [89] proposed a bearing fault diagnosis method based on the short-time Fourier transform (STFT) time-frequency spectrogram and Vision Transformer (ViT). By applying the short-time Fourier transform, the original vibration signal is converted into a two-dimensional time-frequency image. This time-frequency image is then used as a feature map input into the ViT network for training, with the goal of constructing the optimal model structure to achieve fault diagnosis. Zhang Weipeng [90] analyzed common fault phenomena of combine harvesters during field operations and verified the fault diagnosis classification effect through simulation of field operations. By combining the sparrow search algorithm (SSA) with the BP neural network, a training set and test set were constructed, and the SSA-BP neural network algorithm was trained and validated, achieving an average recognition rate of 98.56%. The superiority of the SSA-BP algorithm was verified through its comparison with algorithms such as SVM and BP-SVM. Song Enzhe et al. [91] addressed the issue of reduced fault diagnosis accuracy caused by noise interference during motor operation. By extracting low-frequency information from noise signals using Mel Frequency Cepstral Coefficient (MFCC) dynamic feature extraction and combining the adaptive adjustment capability of the convolutional attention module with a multi-feature fusion strategy, they further reduced the impact of noise on fault diagnosis.
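
The step of converting a one-dimensional vibration signal into a two-dimensional time-frequency representation, as used in the STFT-based approach, can be illustrated with a short sketch. The code below is an assumption-laden illustration rather than the implementation of the cited work: it uses a naive discrete Fourier transform from the Python standard library, a Hann window, and a synthetic signal in which a hypothetical 160 Hz component appears halfway through the record to imitate the onset of a fault. The frame length, hop size, and sampling rate are arbitrary choices.

```python
import cmath
import math


def stft_magnitude(signal, frame_len=128, hop=64):
    """Return a list of magnitude spectra, one per Hann-windowed frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_len - 1))
              for n in range(frame_len)]
    n_bins = frame_len // 2 + 1                 # non-negative frequencies only
    # Precompute the DFT basis once so it is reused for every frame.
    basis = [[cmath.exp(-2j * math.pi * k * n / frame_len)
              for n in range(frame_len)] for k in range(n_bins)]
    spectrogram = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_len)]
        spectrum = [abs(sum(x * b for x, b in zip(frame, basis[k])))
                    for k in range(n_bins)]
        spectrogram.append(spectrum)
    return spectrogram


def dominant_frequency(spectrum, fs, frame_len):
    """Frequency in Hz of the strongest non-DC bin of one spectrum."""
    k = max(range(1, len(spectrum)), key=lambda i: spectrum[i])
    return k * fs / frame_len


# Synthetic one-second record: a 64 Hz component throughout, plus a stronger
# 160 Hz component during the second half (hypothetical fault signature).
fs = 1024
signal = [math.sin(2 * math.pi * 64 * t / fs)
          + (2.0 * math.sin(2 * math.pi * 160 * t / fs) if t >= fs // 2 else 0.0)
          for t in range(fs)]
spec = stft_magnitude(signal)
print("first frame:", dominant_frequency(spec[0], fs, 128), "Hz")
print("last frame:", dominant_frequency(spec[-1], fs, 128), "Hz")
```

Each row of `spec` corresponds to one time step of the time-frequency image; in a learning-based pipeline such an image would be the input to the classifier.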

6. Field Operation Monitoring Technology

Agricultural machinery field operation monitoring technology utilizes multiple sensors to collect real-time operational data from the field. Through data processing and analysis, the working status of the machinery during operations is obtained for monitoring and analysis. By monitoring and analyzing the operational process, the accuracy and efficiency of field work are improved, promoting the sustainable development of agricultural production. This section reviews the monitoring of key field operation states, including tillage depth, surface leveling, soil fragmentation rate, sowing, transplanting, crop protection, and harvesting. The monitoring technologies for various field operations are shown in Table 3.

Table 3.

Field operation monitoring technologies.

6.1. Tillage and Land Preparation Operation Monitoring Technology

Soil tillage is a fundamental process in agricultural production, and the quality of its operation has a significant impact on subsequent stages. To ensure the quality of tillage, modern agriculture employs various monitoring technologies to track and control key parameters during the tillage process. These include monitoring the plowing depth, surface leveling, and soil fragmentation rate. Through data fusion and intelligent algorithms, the machine’s operating parameters are adjusted in real time to accommodate different soil conditions and operational requirements, ensuring optimal tillage results.

6.1.1. Tillage Depth Monitoring

Tillage depth refers to the depth at which a tillage machine operates in the soil, directly affecting the soil’s loosening degree and the growth space for plant roots. Traditional tillage depth measurement relies on manual measurement and recording, which is inefficient and limited in accuracy. Modern tillage depth monitoring technology mainly uses sensors and information technology to achieve real-time and precise monitoring. Sensors are installed on the beam of tillage machinery or the tractor’s three-point hitch mechanism. By fitting the sensor data to the relationship model of tillage depth, the actual tillage depth is calculated.

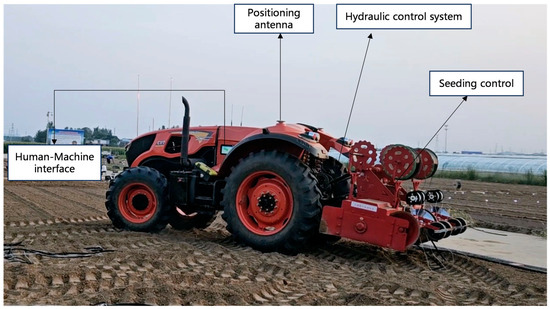

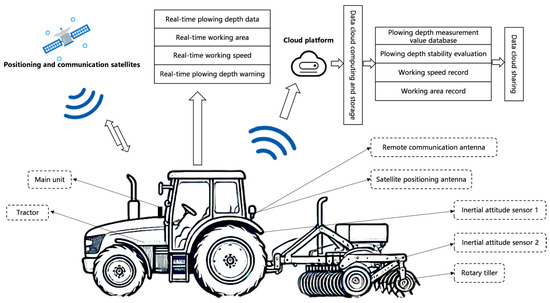

Wang Hongwei et al. [92] used the CAN bus and sensors to read the position of the tractor’s three-point hitch and then convert the data into soil penetration depth. They fitted the data through experiments to achieve real-time tillage depth monitoring. Du Xinwu et al. [93] analyzed the suspension posture of the rotary tiller and determined the mathematical relationship between the tillage depth and suspension posture. They incorporated factors such as the deformation of the rotary tiller and the lower limit of the tires to establish a three-parameter nonlinear tillage depth measurement model, which enables real-time monitoring of the tillage depth based on suspension posture. The overall scheme of the tilling depth monitoring system is shown in Figure 8. Jiang Xiaohu et al. [94] installed ultrasonic and infrared sensors on the machine frame. The ultrasonic sensor measures the tillage depth using the time-of-flight method, while the infrared sensor employs the triangulation method to measure the depth. The data from both sensors are filtered and fused in real time using the Kalman filtering method to monitor the tillage depth continuously.

Figure 8.

Overall scheme of the tilling depth monitoring system.
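
The real-time fusion of two depth sensors with a Kalman filter can be sketched as follows. This is a minimal scalar illustration under stated assumptions, not the filter used in the cited study: the tillage depth is modelled as a slowly varying random walk, the two sensors are assumed to have independent noise with known variances, and all numerical values (variances, true depth, number of samples) are hypothetical.

```python
import random


def fuse_depth(ultrasonic, infrared, var_ultra, var_infra,
               process_var=1e-4, init_depth=0.0, init_var=1.0):
    """Fuse two depth sensors with a scalar Kalman filter.

    State: tillage depth in metres, modelled as a slow random walk.
    Each time step runs one prediction followed by two sequential updates,
    one per sensor.
    """
    depth, var = init_depth, init_var
    estimates = []
    for z_ultra, z_infra in zip(ultrasonic, infrared):
        var += process_var                        # predict: uncertainty grows
        for z, r in ((z_ultra, var_ultra), (z_infra, var_infra)):
            gain = var / (var + r)                # Kalman gain for this sensor
            depth += gain * (z - depth)           # correct with the residual
            var *= (1.0 - gain)                   # posterior variance shrinks
        estimates.append(depth)
    return estimates


# Hypothetical data: true depth 0.20 m, noisier infrared than ultrasonic.
rng = random.Random(1)
true_depth = 0.20
ultra = [true_depth + rng.gauss(0.0, 0.01) for _ in range(100)]
infra = [true_depth + rng.gauss(0.0, 0.02) for _ in range(100)]
fused = fuse_depth(ultra, infra, var_ultra=0.01 ** 2, var_infra=0.02 ** 2)
print("last fused estimate (m):", round(fused[-1], 4))
```

Because the gain depends on the ratio of the current estimate variance to the sensor variance, the less noisy sensor automatically receives more weight.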

6.1.2. Surface Leveling Monitoring

The surface leveling of the field affects the uniformity of planting and the efficiency of irrigation. Traditional assessments of surface leveling mainly rely on manual measurements, which are subjective and inefficient. Modern surface leveling monitoring technologies use sensors and data processing to quantify the surface leveling, with the main techniques being laser leveling and ultrasonic monitoring. Laser leveling technology utilizes laser transmitters and receivers to measure surface height differences, guiding leveling machinery to make adjustments to ensure the surface is even. Ultrasonic sensors, installed at the front of the machinery, can measure surface height changes in real time, feeding the data back to the control system, which automatically adjusts the machinery’s operational parameters to maintain a leveled surface.

Zhou Hao et al. [95] designed a laser-controlled paddy field leveling machine that adjusts the depth of the leveling blade based on the laser signal received by the laser receiver. The signal controls an electromagnetic valve that adjusts the elevation cylinder, automatically regulating the working depth of the blade. Wang Ling [96] mounted several sensors on an experimental vehicle, including attitude sensors, magnetostrictive displacement sensors, ultrasonic sensors, and GPS sensors. The displacement sensor is connected to the sliding rod via a connecting rod, with rollers installed on the sliding rod. The rollers move vertically with the surface contours, providing measurement values. The ultrasonic sensor calculates the distance from the transmitter to the soil surface based on the time difference between the emitted and received signals. The attitude sensor, mounted on a vertical plane, measures the vehicle’s attitude angle. The GPS module, mounted on the vehicle’s moving surface, provides real-time location data. Using the measured length of the connecting rod and its initial position, the angle between the sliding rod and the connecting rod is corrected to improve measurement accuracy.
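
The ultrasonic time-of-flight calculation described above, and one possible way of turning the resulting height profile into a single leveling indicator, can be sketched as follows. The constant speed of sound, the sensor mounting height, and the use of the standard deviation of surface heights as the indicator are assumptions made for illustration; the cited studies may use other corrections (for example temperature or vehicle attitude) and other metrics.

```python
import statistics

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed constant)


def echo_to_distance(echo_time_s, speed=SPEED_OF_SOUND):
    """Sensor-to-soil distance from the round-trip echo time."""
    return speed * echo_time_s / 2.0


def surface_heights(echo_times_s, sensor_height_m):
    """Surface height relative to the plane the sensor height is measured from."""
    return [sensor_height_m - echo_to_distance(t) for t in echo_times_s]


def leveling_std(heights_m):
    """Standard deviation of surface heights; smaller means a flatter field."""
    return statistics.pstdev(heights_m)


# Hypothetical echo times (s) recorded along one pass, sensor 0.70 m high.
echoes = [0.00400, 0.00410, 0.00395, 0.00420, 0.00405]
heights = surface_heights(echoes, sensor_height_m=0.70)
print("heights (m):", [round(h, 4) for h in heights])
print("leveling std (m):", round(leveling_std(heights), 4))
```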

6.1.3. Soil Fragmentation Rate Monitoring

The soil fragmentation rate refers to the degree to which the soil is broken up after cultivation, which affects seed germination rates and transplanting quality. Traditional measurement of the soil fragmentation rate requires collecting soil samples and manually conducting sieving and analysis, a process that is cumbersome. Modern technology, through optimized mechanical design and real-time monitoring, enables the control and evaluation of the soil fragmentation rate. The main methods used include image processing techniques and online fragmentation rate detection systems. The image processing method involves capturing post-cultivation soil surface images using camera equipment and applying image processing algorithms to analyze the size distribution of soil particles, thus calculating the fragmentation rate. However, this method can only measure the surface layer of soil and does not represent the fragmentation rate of the entire soil layer, leading to larger errors in the results. The online fragmentation rate detection system simulates manual measurement, automatically performing the steps of soil sampling, weighing, sieving, re-weighing, and calculation, enabling the automated measurement of the soil fragmentation rate.

Xia Qicheng [97] mounted a camera on a small tillage machine to capture images of the soil after cultivation. The images are used to measure the area of soil clods, and the fragmentation rate is calculated from the ratio of the area of clods with a diameter greater than 5 cm to the sample area. Yang Xulong [98] developed an online soil fragmentation rate monitoring system, which consists mainly of a soil sampling unit, a sieving unit, a weighing unit, and the corresponding software and hardware. The soil sampling unit collects soil and delivers it to the sieving unit for classification. The soil is then transferred into different bins of the weighing unit. When the total mass of soil in the weighing unit reaches a preset threshold, the system interrupts the soil transport using an active overbridge. It then calculates the fragmentation rate of the sampling point and unloads the weighed soil.
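
The final calculation step of a sieve-and-weigh system can be illustrated with a short sketch. It assumes that the fragmentation rate is expressed as the percentage of sampled soil mass falling into the size classes regarded as sufficiently fine; the bin names, size limits, and masses below are hypothetical and are not taken from the cited system.

```python
def fragmentation_rate(bin_masses_kg, fine_bins):
    """Percentage of the sampled soil mass that lies in the 'fine' size classes.

    bin_masses_kg: mapping from size-class name to weighed mass in kg.
    fine_bins: names of the size classes counted as adequately fragmented.
    """
    total = sum(bin_masses_kg.values())
    if total <= 0:
        raise ValueError("no soil was weighed")
    fine = sum(bin_masses_kg[name] for name in fine_bins)
    return 100.0 * fine / total


# Hypothetical weighing result for one sampling point.
masses = {"<4cm": 3.0, "4-8cm": 1.5, ">8cm": 0.5}
print("fragmentation rate (%):", fragmentation_rate(masses, fine_bins=("<4cm",)))
```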

6.2. Planting Operation Monitoring Technology

In modern agriculture, the quality of planting operations directly impacts crop yield and quality. To achieve precision agriculture, monitoring technologies for sowing and transplanting operations have been widely applied. Through the real-time adjustment of the machine’s operational parameters, these technologies adapt to varying soil conditions and operational requirements, ensuring optimal planting results. The main areas of focus include sowing operation monitoring and transplanting operation monitoring.

6.2.1. Sowing Operation Monitoring Technology

Sowing is a fundamental step in agricultural production, and the quality of sowing directly affects crop growth and development. Monitoring the sowing process can effectively address issues such as blockages, missed seeds, and seed shortages, allowing for timely corrective actions to minimize losses. Commonly used methods include piezoelectric monitoring, photoelectric monitoring, capacitance monitoring, and machine vision methods [99,100,101].

The piezoelectric monitoring method uses various piezoelectric film materials. When a seed strikes the film, the film deforms and generates an electrical signal, which allows the seed to be detected. This is a contact-based monitoring technique with relatively high accuracy. However, because seeds must collide with the sensing material to generate a signal, the method is susceptible to vibration interference. Additionally, since the sensor is typically installed in the seed distribution tube, it can also affect the trajectory of the seeds. The photoelectric monitoring method works by detecting the interruption of the light beam of a photoelectric sensor by falling seeds, which generates a voltage signal. This signal is then processed and converted into a pulse signal that can be recognized by the controller, enabling seed monitoring. This method has a simple structure and is easy to apply. It achieves good accuracy for larger seeds, such as corn, but its accuracy is lower for smaller seeds. The capacitive monitoring method uses a capacitive sensor, in which seeds passing between the two plates of the sensor generate a signal. This signal is processed into a recognizable digital signal for seed monitoring. This method is more sensitive to larger seeds but less effective for smaller seeds. The machine vision method uses high-speed photography of falling seeds, followed by image processing, to obtain information such as the position and quantity of seeds, enabling real-time monitoring. While this method offers higher monitoring accuracy, it is more expensive than the other methods.

Ding Youchun et al. [102] designed a substrate-based piezoelectric film sensing structure, which reduced the collision signal attenuation time from 9 ms to 1 ms, thus improving the time resolution for high-frequency seed flow detection. This design also effectively mitigates interference from mechanical vibrations. Additionally, Ding Youchun and colleagues developed a medium- and small-sized seed flow monitoring device based on a thin-layer laser emitter module with a light thickness of approximately 1 mm and the photovoltaic effect of silicon photodiodes. This device enables the non-collisional detection of medium- and small-sized seed flows. Experimental results indicated that the monitoring accuracy for rapeseed was no less than 98.6%, and for wheat seeds, it was no less than 95.8%, with light conditions and equipment vibrations having no impact on monitoring accuracy. Xu Luochuan et al. [103] developed a finger-type capacitive cotton seed hole-planting monitoring system. Using the Pcap02 micro-capacitive acquisition module, it collects the capacitive output values and processes the data to accurately determine normal single-seed planting, replanting, and missed planting situations. Zhao Zhengbin et al. [104] applied machine vision technology to detect the planting performance of a precision seed-planting machine for seed trays. A photoelectric sensor detects the position of the seed tray, triggering a camera, and dual cameras scan and capture the images of the seed tray row by row. Visual algorithms were then used to analyze and process the images, identifying the planting conditions based on the image data.
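
The pulse-based principle shared by the photoelectric and piezoelectric methods can be sketched as follows: each seed produces one pulse, and the time between successive pulses indicates normal, multiple, or missed seeding. This is an illustrative sketch rather than any cited system. The voltage threshold, sampling rate, and the interval limits of 0.5 and 1.5 times the expected interval are assumptions chosen for the example.

```python
def detect_seed_times(voltages, sample_rate_hz, threshold):
    """Return the time in seconds of each rising edge (one edge per seed)."""
    times = []
    above = False
    for i, v in enumerate(voltages):
        if v >= threshold and not above:
            times.append(i / sample_rate_hz)
        above = v >= threshold
    return times


def classify_intervals(seed_times, expected_interval_s):
    """Count normal, multiple, and missed seedings from pulse intervals.

    Assumed rule: an interval of at most 0.5x the expected value counts as a
    multiple, an interval above 1.5x counts as a miss, anything else is normal.
    """
    counts = {"normal": 0, "multiple": 0, "miss": 0}
    for prev, cur in zip(seed_times, seed_times[1:]):
        ratio = (cur - prev) / expected_interval_s
        if ratio <= 0.5:
            counts["multiple"] += 1
        elif ratio > 1.5:
            counts["miss"] += 1
        else:
            counts["normal"] += 1
    return counts


# Hypothetical sensor trace sampled at 1 kHz with four two-sample pulses.
trace = [0.0] * 500
for start in (100, 200, 210, 400):
    trace[start] = trace[start + 1] = 5.0
times = detect_seed_times(trace, sample_rate_hz=1000, threshold=2.5)
print(times, classify_intervals(times, expected_interval_s=0.1))
```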

6.2.2. Transplanting Operation Monitoring Technology

Transplanting is the process of transferring seedlings to the field, and the quality of this operation directly affects the survival rate and growth status of the crops. The monitoring of transplanting quality mainly focuses on parameters such as the mis-planting rate. The quality monitoring system for transplanting operations typically involves installing industrial cameras and other sensors on the transplanter. These sensors capture real-time images of the seedlings being transplanted in the field. The images are then processed and analyzed to calculate the precise planting distance by measuring the geometric center of the seedlings’ leaves. By comparing the actual planting distance with the theoretical distance, the system can identify quality issues such as missing seedlings, lodged seedlings, and exposed seedlings.

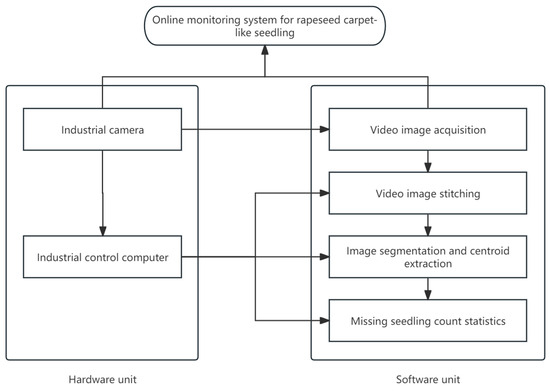

Han Hongfei et al. [105] designed a monitoring system for transplanting machine operation quality and working trajectory information. The system uses industrial cameras combined with absolute encoders to capture image data of the transplanted seedlings. The images are then analyzed to identify whether seedlings are missing at any planting points, and the positions of these points are stored. A GIS system was created to display the transplanting machine’s operating trajectory and missing seedling information on the map. Jiang Zhan et al. [106] designed a real-time monitoring system for transplanting missing seedlings based on video image stitching, as shown in Figure 9. The system collects real-time videos of rapeseed blanket seedlings during field transplanting operations. Using parameters such as video-to-frame rate, the number of image stitches, and corner detection algorithms, and evaluating based on image quality scores and image stitching efficiency, the optimal image stitching parameter combination was obtained through orthogonal experimental design and optimization methods. The system then uses image segmentation and centroid fitting algorithms, along with criteria based on the distance between adjacent projection points and column slopes, to calculate the number of missing seedlings.

Figure 9.

Online monitoring system for rapeseed carpet-like seedlings.
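
The comparison of actual and theoretical planting distance described in this subsection can be sketched in a few lines. The example assumes that image processing has already produced the along-row centroid position of each detected seedling; the tolerance of half a spacing and the sample coordinates are hypothetical, and the cited systems additionally use criteria such as column slopes that are not modelled here.

```python
def count_missing_seedlings(centroids_m, theoretical_spacing_m, tolerance=0.5):
    """Estimate the number of empty planting positions along one row.

    A gap larger than (1 + tolerance) times the theoretical spacing is taken
    to contain round(gap / spacing) - 1 missing seedlings.
    """
    positions = sorted(centroids_m)
    missing = 0
    for prev, cur in zip(positions, positions[1:]):
        gap = (cur - prev) / theoretical_spacing_m
        if gap > 1.0 + tolerance:
            missing += round(gap) - 1
    return missing


# Hypothetical centroid positions (m) with a theoretical spacing of 0.15 m.
row = [0.00, 0.15, 0.31, 0.60, 0.76, 1.20]
print("missing seedlings:", count_missing_seedlings(row, 0.15))
```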

6.3. Crop Protection Operation Monitoring Technology

Crop protection operation monitoring technology involves the real-time monitoring of crop information in the field, transmitting the data to a control unit for analysis and decision making on variable control. This allows for management based on the actual conditions of crops and soil, achieving precise pesticide application, improving operational efficiency and quality, and reducing pesticide waste. As shown in Figure 10, the smart agricultural machinery is performing crop protection monitoring operations. Currently, precision spraying technology primarily focuses on targeted pesticide application. Sensors are used to detect features of the target canopy, including the presence of the target, its appearance and outline, leaf area index, and pest or disease information, providing data support for the pesticide application decision model. The main sensors used include ultrasonic sensors, infrared sensors, LiDAR sensors, optical sensors, and machine vision. Ultrasonic sensors detect distances by measuring the echo time; infrared sensors calculate distance by measuring the time between emission and return of infrared light; LiDAR sensors detect distance either by measuring the time of flight between the emitted and reflected signal or by analyzing the phase difference between incident and reflected laser beams; optical sensors use image processing techniques to analyze images captured from different light positions for detection; machine vision detects by capturing and analyzing image feature information [107].

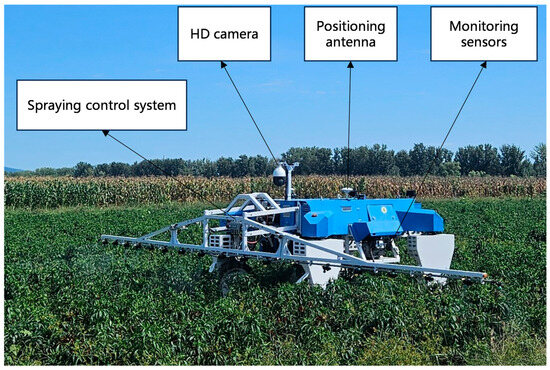

Figure 10.

Crop protection operation monitoring.

Jiang Honghua et al. [108] proposed a fast method for field weed identification based on deep convolutional networks and binary hash codes. By utilizing a trained model to extract the fully connected layer features and hash features of input images, they compared these features with those in the database, calculating the Hamming distance and Euclidean distance, respectively. The K most similar images were identified, and their labels were counted. The most frequent label was assigned to the image, achieving the classification and recognition goal. The experimental results showed that this method achieved an accuracy of 98.6% for field weed identification. Liu Liming et al. [109] integrated laser sensors and ultrasonic sensors for canopy information collection. The experiments demonstrated that this approach provided higher accuracy compared to using a single-sensor array. Gu Chenchen et al. [110] developed a mobile experimental platform equipped with LiDAR for canopy leaf area detection. They established a detection model using partial least squares regression and BP neural network algorithms. The experiments showed that the detection model had high accuracy for dense canopy detection but lower accuracy for sparse canopy detection. Song Ling et al. [111] proposed a cassava leaf disease detection model based on an improved YOLOX network. By employing methods such as data augmentation, multi-scale feature extraction modules, and channel attention mechanisms, along with a quality focal loss function as the classification loss function to assist the network’s convergence, the experimental results showed an average precision of 93.53%, which was 6.02 percentage points higher than the baseline model. Its comprehensive detection capability outperformed several mainstream models.
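
The retrieval step of a hash-based identification scheme, in which a query image is labelled by the majority class among its nearest database entries, can be sketched as follows. The sketch covers only the Hamming-distance comparison of binary hash codes, stored here as Python integers; the network that produces the codes, the Euclidean comparison of fully connected layer features, and the example codes and labels are outside its scope or hypothetical.

```python
from collections import Counter


def hamming_distance(code_a, code_b):
    """Number of differing bits between two hash codes stored as integers."""
    return bin(code_a ^ code_b).count("1")


def classify_by_hash(query_code, database, k=3):
    """Label a query by majority vote among its k nearest hash codes.

    database: list of (hash_code, label) pairs.
    """
    nearest = sorted(database,
                     key=lambda item: hamming_distance(query_code, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 8-bit hash codes for a tiny labelled database.
db = [
    (0b11110000, "weed"),
    (0b11100000, "weed"),
    (0b11111000, "weed"),
    (0b00001111, "crop"),
    (0b00011111, "crop"),
]
print(classify_by_hash(0b11110001, db, k=3))
```

Comparing short binary codes with a bitwise XOR is much cheaper than comparing high-dimensional floating-point features, which is the main reason hash codes are attractive for fast retrieval.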

6.4. Harvest Operation Monitoring Technology

Harvest operation monitoring technology enhances harvesting efficiency, ensures operational quality, and reduces losses through the real-time monitoring of the working status and operational parameters of the harvesting machinery.

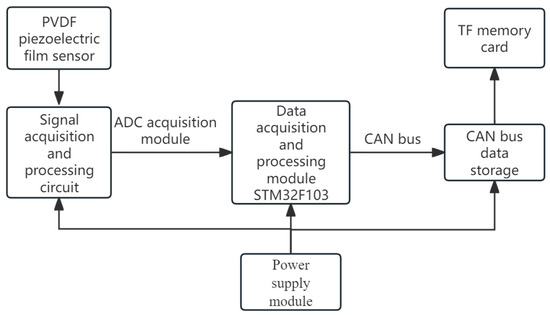

Guo Hui et al. [112] designed a cleaning loss monitoring device suitable for sunflower combine harvesters, as shown in Figure 11. The device mainly consists of a PVDF piezoelectric film, a signal acquisition and processing circuit board, and an installation adjustment device, enabling the real-time monitoring of sunflower cleaning loss rates. Du Yuefeng et al. [113] developed a cleaning loss monitoring sensor based on the minimal energy criterion EMD denoising method, which achieved the separation of vibration, operational noise, and other unwanted signals from the collected data. Chen Man et al. [114] proposed an online monitoring method for soybean mechanized harvest quality based on machine vision. By effectively segmenting soybean images and selecting RGB and HSV color space feature values, they constructed a quantitative evaluation model. Experimental results showed that this method is consistent with manual detection.

Figure 11.

Schematic diagram of the monitoring device.

7. Problems Faced and Suggested Measures

7.1. Problems Faced

- (1) Impact of Complex Environments on Multi-Sensor Perception Technology

Currently, environmental perception technologies have made some progress in terms of recognition accuracy and response speed. The fusion of multiple sensors (such as LiDAR, millimeter-wave radar, and visual sensors) has been proven to enhance recognition accuracy. However, there is still significant room for improvement in the construction of precise maps for farmland. For instance, issues such as fuzzy farmland boundaries may cause deviations between the navigation recognition and the operation path. Changes in lighting conditions and adverse weather conditions can also affect the accuracy of environmental perception [115]. The irregular terrain and crop planting in farmland further complicate crop row monitoring, leading to a decline in recognition rates of image processing and multi-sensor fusion detection methods. Uneven farmland terrain and agricultural machinery vibrations can cause changes in the scanning direction of sensors, creating blind spots. Additionally, agricultural work environments tend to generate large amounts of dust, which can cover the sensor surfaces and result in false signals.

- (2) Accuracy and Real-Time Issues of Navigation and Positioning Technology

In scenarios with signal obstructions, such as mountainous areas and orchards, GNSS accuracy significantly decreases, leading to path deviations [116]. The drift error accumulation problem of INS has not been fully resolved, and even when fused with the GNSS, it struggles to provide sustained high-precision positioning in complex environments. Visual navigation is influenced by crop growth stages, lighting conditions, and obstacle occlusions, making its accuracy unstable. While LiDAR navigation equipment offers high precision, its high cost limits large-scale application on agricultural machinery. The real-time availability and synergy of multi-source data obtained from multiple sensors are insufficient, and current data fusion algorithms lack both real-time computational capacity and accuracy in complex farmland environments. The spatial complexity of narrow, cluttered, and large-scale scenarios increases the technical difficulty, and the combination of spatial complexity and temporal uncertainty has become a key technological bottleneck that urgently needs to be overcome in agricultural machinery navigation technology.

- (3) Technical Bottlenecks in Autonomous Operation and Multi-Machine Collaboration

Farmland environments typically feature an undulating terrain and irregularly distributed obstacles, which complicate path planning and navigation. In dynamic environments with numerous obstacles, obstacle avoidance technologies face issues such as delays and misjudgments, making it difficult to adjust autonomous operation strategies in real time. Traditional path planning algorithms (such as A* and RRT) perform poorly when dealing with dynamic environments and multi-objective tasks. While deep learning-based path planning algorithms offer adaptability, they require pre-training, which is time-consuming and has limited generalization capabilities for large-scale scenarios. The high real-time demands for environmental perception and path planning in multi-robot coordination algorithms increase system complexity. Additionally, the lack of efficient coordination mechanisms for communication and task allocation in multi-robot collaboration leads to problems such as information transmission delays and resource waste.

- (4) Challenges in Monitoring and Diagnostics of Key Components

Most agricultural field environments are harsh, and agricultural machinery often encounters various malfunctions during operation. Without real-time tracking and monitoring, these malfunctions lead to abnormal machine operation and, in some cases, equipment damage. The arrangement of key component monitoring sensors for agricultural machinery is complex. Currently, there are few self-developed agricultural sensors, and their stability is poor. They also lack sufficient anti-interference capabilities when facing vibrations, dust, and high-temperature conditions in field operations. Some domestic manufacturers have developed monitoring systems based on mature foreign products, but these systems face issues such as low monitoring accuracy, complex operating systems, and unintuitive interfaces. Additionally, they are expensive, which makes them difficult to popularize. Most domestic monitoring systems are still in the experimental stage and cannot be widely deployed for large-scale use [117]. Monitoring data lacks unified standards, and the interoperability between devices is poor, which affects the accuracy of data analysis. Traditional vibration signal analysis methods rely on manual experience and are unable to adapt to the complexity of various fault modes. Intelligent diagnostic algorithms, such as those using deep learning methods for diagnosis, heavily depend on labeled data, making it difficult to cover all fault scenarios that may occur in actual operations.

- (5) Inadequate Adaptability of Operation Monitoring Technology

Some domestic agricultural fields are relatively small and have complex environments, with soft and uneven soil. Domestic tillage and leveling machinery often uses a single sensor to monitor tillage depth and surface leveling. Such systems are easily affected by surface undulations and soil quality, leading to poor monitoring results [118]. Monitoring technologies for parameters such as leveling accuracy and soil pulverization rate struggle to balance dynamic real-time performance with precision. Multi-parameter collaborative optimization algorithms are still immature, so monitoring results do not align well with actual operational requirements. There is also a lack of domestically produced high-performance sensors, leaving a technological gap relative to international standards. For example, domestic seed-level-resolution sowing monitoring sensors and precision devices for monitoring small-seed sowing (e.g., photoelectric sensors and capacitive sensors) have relatively low accuracy. Additionally, under harsh environmental conditions, missed-planting monitoring and row-spacing control technologies for transplanting operations are not yet stable enough.

- (6) Standardization and Efficiency Issues in Technology Integration

Currently, there is a lack of unified interface standards between various technical systems, making it difficult to integrate hardware and software efficiently. Without a standardized platform, systems developed by different manufacturers and research teams are rarely compatible. As a result, integrating different technologies and algorithms becomes complex, which limits system flexibility and interoperability and increases development and maintenance costs [119]. Incompatible cross-platform data formats and transmission protocols, along with the difficulty of unifying field communication protocols, hinder the seamless fusion, processing, and reprocessing of heterogeneous data. Research in this area is still insufficient, limiting the deeper integration of multiple technologies. Additionally, algorithms such as deep learning and SLAM require significant computational power, but the embedded hardware in agricultural machinery often struggles to run complex algorithms in real time. Some data acquisition devices also face bandwidth limitations when transmitting high-frequency sampling data, which affects real-time processing. Given these limitations in real-time performance and hardware capability, one effective response to the spatial complexity challenge is to minimize the number of samples taken while retaining as much spatial information as possible. There is also a lack of low-cost, high-efficiency solutions to promote large-scale applications.

7.2. Suggested Measures

- (1) Impact of Complex Environments on Multi-Sensor Perception Technology

To address the impact of complex environments on multi-sensor perception technology, dynamic sensor calibration and adaptive compensation techniques can be employed to enhance the system’s ability to adapt to variations in light, weather, and terrain. This can be combined with deep learning-based multi-modal data fusion technologies that integrate data from LiDAR, millimeter-wave radar, and visual sensors at the feature level, improving perception accuracy and stability in complex agricultural environments. Additionally, to minimize dust interference, a gas jet cleaning system and hydrophobic coatings can be applied to the sensors. A gyroscope correction module can further adjust the sensor orientation dynamically, preventing blind spots caused by vibrations.

- (2) Accuracy and Real-Time Issues of Navigation and Positioning Technology

To improve the accuracy and real-time performance of navigation and positioning technologies, GNSS/INS fusion algorithms should be enhanced by adopting factor graph optimization and unbiased Kalman filtering techniques to correct navigation errors. In areas with signal blockage, positioning accuracy can be compensated by combining low-cost GNSS RTK modules with satellite-based augmentation systems (SBAS). Additionally, lightweight deep learning-based semantic segmentation algorithms can optimize visual navigation, while low-cost 2D LiDAR can address positioning challenges in low-light or obstructed environments. Furthermore, a distributed real-time data processing framework should be implemented to enhance the efficiency and real-time performance of multi-sensor collaborative fusion, ensuring reliable navigation in complex scenarios.
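The basic idea behind GNSS/INS fusion can be illustrated with a one-dimensional sketch. The code below is a plain linear Kalman filter, not the factor graph or unbiased variants mentioned above: INS acceleration drives the prediction step, and a GNSS position fix corrects the estimate whenever one is available. The function names, noise variances, and the position/velocity state layout are all assumptions made for illustration.

```python
def kalman_predict(state, cov, accel, dt, accel_var):
    """Propagate [position, velocity] using an INS acceleration reading."""
    pos, vel = state
    state = (pos + vel * dt + 0.5 * accel * dt * dt, vel + accel * dt)
    (p00, p01), (p10, p11) = cov
    # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and acceleration-driven Q.
    n00 = p00 + dt * (p01 + p10) + dt * dt * p11 + accel_var * dt ** 4 / 4
    n01 = p01 + dt * p11 + accel_var * dt ** 3 / 2
    n10 = p10 + dt * p11 + accel_var * dt ** 3 / 2
    n11 = p11 + accel_var * dt ** 2
    return state, ((n00, n01), (n10, n11))

def kalman_update(state, cov, gnss_pos, gnss_var):
    """Correct the estimate with a GNSS position fix (H = [1, 0])."""
    pos, vel = state
    (p00, p01), (p10, p11) = cov
    innovation = gnss_pos - pos
    innovation_var = p00 + gnss_var
    k0, k1 = p00 / innovation_var, p10 / innovation_var  # Kalman gain
    state = (pos + k0 * innovation, vel + k1 * innovation)
    cov = (((1 - k0) * p00, (1 - k0) * p01),
           (p10 - k1 * p00, p11 - k1 * p01))
    return state, cov

def fuse(accels, gnss_fixes, dt, accel_var=1.0, gnss_var=0.25):
    """Return fused positions; a GNSS fix of None models signal blockage."""
    state, cov = (0.0, 0.0), ((1.0, 0.0), (0.0, 1.0))
    track = []
    for accel, fix in zip(accels, gnss_fixes):
        state, cov = kalman_predict(state, cov, accel, dt, accel_var)
        if fix is not None:
            state, cov = kalman_update(state, cov, fix, gnss_var)
        track.append(state[0])
    return track

if __name__ == "__main__":
    dt, steps = 0.1, 100
    truth = [0.25 * ((k + 1) * dt) ** 2 for k in range(steps)]  # 0.5 m/s^2
    biased_ins = [0.6] * steps                  # assumed 0.1 m/s^2 INS bias
    fixes = [None if 40 <= k < 60 else truth[k] for k in range(steps)]
    fused = fuse(biased_ins, fixes, dt)
    dead_reckoning = fuse(biased_ins, [None] * steps, dt)
    print(abs(fused[-1] - truth[-1]), abs(dead_reckoning[-1] - truth[-1]))
```

In this toy setting, pure dead reckoning drifts steadily because of the accelerometer bias, whereas the fused estimate is pulled back toward the true position as soon as GNSS fixes resume after the simulated outage.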

- (3) Technical Bottlenecks in Autonomous Operation and Multi-Machine Collaboration

In autonomous operations and multi-machine collaboration, reinforcement learning-based path planning algorithms can be used to adjust paths dynamically in areas with dense obstacles, combined with local optimization methods to enhance short-term obstacle avoidance. By leveraging the multi-modal data processing capabilities of the Transformer model, data from different sensors, such as vision sensors and LiDAR, can be integrated to make environmental perception more comprehensive and accurate, thereby improving path planning and obstacle avoidance. A distributed task scheduling protocol based on blockchain technology can be developed to ensure efficient task allocation and state synchronization between machines. By sharing LiDAR and GNSS data, a global 3D agricultural field model can be constructed to enhance the overall efficiency of collaborative operations and improve path planning accuracy, while reducing the resource waste caused by redundant modeling.
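A minimal illustration of reinforcement learning-based path planning is tabular Q-learning on a small grid. The sketch below is a toy example under stated assumptions: the grid size, obstacle layout, reward of -1 per move, and all function names are hypothetical, and the learning rate is fixed at 1 because the simulated environment is deterministic. It does not represent any specific algorithm from the reviewed literature.

```python
import random

ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right

def move(state, action, size, obstacles):
    """Apply an action; blocked moves leave the machine where it is."""
    row, col = state[0] + action[0], state[1] + action[1]
    if not (0 <= row < size and 0 <= col < size) or (row, col) in obstacles:
        return state
    return (row, col)

def train_q_table(size, obstacles, goal, episodes=2000, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Learn action values with epsilon-greedy Q-learning (reward -1 per move)."""
    rng = random.Random(seed)
    free_cells = [(r, c) for r in range(size) for c in range(size)
                  if (r, c) not in obstacles]
    q = {(cell, a): 0.0 for cell in free_cells for a in range(4)}
    for _ in range(episodes):
        state = rng.choice(free_cells)           # random start each episode
        for _ in range(200):                     # cap on episode length
            if state == goal:
                break
            if rng.random() < epsilon:
                action = rng.randrange(4)        # explore
            else:
                action = max(range(4), key=lambda a: q[(state, a)])  # exploit
            nxt = move(state, ACTIONS[action], size, obstacles)
            future = 0.0 if nxt == goal else max(q[(nxt, a)] for a in range(4))
            q[(state, action)] = -1.0 + gamma * future  # learning rate of 1
            state = nxt
    return q

def greedy_path(q, start, goal, size, obstacles, max_steps=50):
    """Follow the highest-valued action from start until the goal is reached."""
    path, state = [start], start
    while state != goal and len(path) <= max_steps:
        action = max(range(4), key=lambda a: q[(state, a)])
        state = move(state, ACTIONS[action], size, obstacles)
        path.append(state)
    return path

if __name__ == "__main__":
    blocked = {(1, 1), (1, 2), (1, 3), (3, 1), (3, 2), (3, 3)}
    table = train_q_table(5, blocked, goal=(4, 4))
    print(greedy_path(table, (0, 0), (4, 4), 5, blocked))
```

Unlike a one-shot search, the learned value table encodes a response for every cell, so the machine can continue from wherever it ends up after a deviation. The cost is the training time, which is the pre-training burden discussed in Section 7.1.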

- (4) Challenges in Monitoring and Diagnostics of Key Components

To address the challenges in monitoring and diagnosing critical components, high-temperature-resistant, vibration-proof, and dust-resistant MEMS sensors can be deployed at key nodes of agricultural machinery. These sensors can be integrated into a multi-physical field collaborative monitoring system to comprehensively analyze vibration and temperature data. By leveraging a Transformer-based few-shot learning framework and self-supervised algorithms, the reliance on labeled data can be reduced, enhancing the diagnostic capability for complex fault patterns. Additionally, high-precision signal analysis algorithms can be used to accurately locate faults and predict trends in key components, improving the operational stability of the equipment.
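As a much simpler stand-in for the learning-based diagnosis described above, the sketch below computes two classical vibration indicators, RMS and kurtosis, and flags a signal as impulsive when its kurtosis exceeds 3 (the value for Gaussian noise). The synthetic signals, the threshold, and the function names are illustrative assumptions. A real diagnostic system would need measured data and validated thresholds.

```python
import math

def rms(signal):
    """Root-mean-square amplitude, a coarse indicator of vibration energy."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))

def kurtosis(signal):
    """Fourth standardized moment; periodic impacts push it well above 3."""
    n = len(signal)
    mean = sum(signal) / n
    m2 = sum((x - mean) ** 2 for x in signal) / n
    m4 = sum((x - mean) ** 4 for x in signal) / n
    return m4 / (m2 * m2)

def looks_impulsive(signal, kurtosis_limit=3.0):
    """Assumed rule of thumb: kurtosis above the Gaussian value of 3."""
    return kurtosis(signal) > kurtosis_limit

def synthetic_vibration(n=1000, impulse_every=None, impulse_amp=5.0):
    """Sinusoidal shaft vibration, optionally with periodic impact spikes."""
    signal = [math.sin(2 * math.pi * 10 * k / n) for k in range(n)]
    if impulse_every:
        for k in range(0, n, impulse_every):
            signal[k] += impulse_amp
    return signal

if __name__ == "__main__":
    healthy = synthetic_vibration()
    faulty = synthetic_vibration(impulse_every=100)
    for name, sig in (("healthy", healthy), ("faulty", faulty)):
        print(name, round(rms(sig), 3), round(kurtosis(sig), 2),
              looks_impulsive(sig))
```

Hand-crafted indicators of this kind are cheap enough for embedded hardware but cover only a narrow set of fault signatures, which is the gap that few-shot and self-supervised methods aim to close.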

- (5) Inadequate Adaptability of Operation Monitoring Technology

To address the issue of insufficient adaptability in operation monitoring technology, a multi-parameter dynamic optimization model based on genetic algorithms can be developed to adjust key parameters such as planting depth and soil leveling in real time, adapting to various terrains and soil conditions. Additionally, a seed monitoring device combining photoelectric and capacitive sensing technologies can be designed to improve monitoring accuracy for single-seed and small-seed sowing. In the operation data processing stage, an edge computing architecture can be deployed to run dynamic prediction models, enabling fast analysis and precise feedback of monitoring data and thus meeting the high adaptability requirements of field operations.
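The genetic algorithm idea can be sketched as follows. The cost function, the two parameters (working depth and travel speed), their bounds, and the GA settings are all hypothetical placeholders chosen for illustration. In practice the cost would come from field measurements or a calibrated model of operation quality.

```python
import random

def operation_cost(depth_cm, speed_kmh):
    """Hypothetical quadratic cost with its minimum at 5 cm depth and 6 km/h."""
    return (depth_cm - 5.0) ** 2 + 0.5 * (speed_kmh - 6.0) ** 2

def run_ga(cost, bounds, pop_size=30, generations=40, mutation_rate=0.2, seed=0):
    """Minimize cost over box-bounded real parameters with a simple GA."""
    rng = random.Random(seed)
    population = [[rng.uniform(lo, hi) for lo, hi in bounds]
                  for _ in range(pop_size)]
    best_history = []

    def tournament():
        # Selection: the cheapest of three randomly drawn individuals.
        return min(rng.sample(population, 3), key=lambda ind: cost(*ind))

    for _ in range(generations):
        elite = min(population, key=lambda ind: cost(*ind))
        best_history.append(cost(*elite))
        offspring = [elite[:]]                    # elitism keeps the best so far
        while len(offspring) < pop_size:
            parent_a, parent_b = tournament(), tournament()
            weight = rng.random()                 # blend crossover weight
            child = []
            for gene_a, gene_b, (lo, hi) in zip(parent_a, parent_b, bounds):
                gene = weight * gene_a + (1.0 - weight) * gene_b
                if rng.random() < mutation_rate:  # Gaussian mutation
                    gene += rng.gauss(0.0, 0.1 * (hi - lo))
                child.append(max(lo, min(hi, gene)))  # clip to bounds
            offspring.append(child)
        population = offspring
    best = min(population, key=lambda ind: cost(*ind))
    best_history.append(cost(*best))
    return best, best_history

if __name__ == "__main__":
    limits = [(2.0, 10.0), (2.0, 12.0)]           # assumed parameter ranges
    best_params, history = run_ga(operation_cost, limits)
    print(best_params, history[0], history[-1])
```

Elitism guarantees that the best cost never gets worse from one generation to the next, which is convenient when the optimizer is rerun periodically as soil conditions change.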

- (6) Standardization and Efficiency Issues in Technology Integration

To address the issues of standardization and efficiency in technology integration, a unified communication protocol based on OPC UA should be developed to optimize data compatibility between multiple technological systems. Additionally, by incorporating lightweight deep learning algorithms and utilizing GPU and FPGA co-processors, the performance of embedded hardware in complex algorithm processing can be enhanced. Moreover, by applying compressed sensing and adaptive sampling technologies, the bandwidth usage for data transmission can be reduced, improving the efficiency of transmitting and processing high-frequency sampling data. These efforts will promote the rapid development of agricultural engineering technologies in terms of standardization and large-scale application.
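One of the simplest forms of adaptive sampling is send-on-delta transmission, in which a sample is sent only when it differs from the last transmitted value by at least a chosen threshold. The sketch below illustrates this idea only. It is not compressed sensing, and the threshold, test signal, and function names are assumptions made for illustration.

```python
import math

def send_on_delta(samples, delta):
    """Return the (index, value) pairs that would actually be transmitted."""
    sent = [(0, samples[0])]
    last_sent = samples[0]
    for index in range(1, len(samples)):
        if abs(samples[index] - last_sent) >= delta:
            sent.append((index, samples[index]))
            last_sent = samples[index]
    return sent

def hold_reconstruct(sent, length):
    """Receiver side: hold the last received value until the next one arrives."""
    values = []
    position = 0
    for index in range(length):
        while position + 1 < len(sent) and sent[position + 1][0] <= index:
            position += 1
        values.append(sent[position][1])
    return values

if __name__ == "__main__":
    # Hypothetical slowly varying sensor signal sampled at a high rate.
    signal = [math.sin(2 * math.pi * k / 1000) for k in range(1000)]
    sent = send_on_delta(signal, delta=0.05)
    rebuilt = hold_reconstruct(sent, len(signal))
    worst = max(abs(a - b) for a, b in zip(signal, rebuilt))
    print(len(sent), "of", len(signal), "samples sent; worst error", worst)
```

By construction, the reconstruction error stays below the threshold, so the threshold directly trades bandwidth against fidelity.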

8. Conclusions and Development Prospects

8.1. Conclusions

This paper explores in depth the key technologies behind the development of intelligent agricultural machinery in China, covering autonomous operation and path planning, state monitoring and fault diagnosis, and field operation monitoring. The integration of global navigation satellite system (GNSS), inertial navigation, and laser navigation technologies has driven precise positioning and efficient navigation of agricultural machinery. Path planning technology uses intelligent algorithms to optimize the operation paths of agricultural machines, improving operational efficiency and resource utilization. Meanwhile, multi-machine collaborative operations have demonstrated significant potential in large-scale farmland applications. State monitoring and fault diagnosis technologies support the intelligent management of equipment, with real-time monitoring and fault prediction helping to reduce equipment failures and improve production efficiency. In field operation monitoring, real-time monitoring technologies for tasks such as tillage, sowing, and harvesting have enhanced operational accuracy and quality, further advancing the application of precision agriculture.

The key technologies are closely interconnected, forming a highly integrated intelligent system. Environmental perception technology, through the fusion of multiple sensors, provides precise environmental data, supporting positioning and navigation technologies so that agricultural machinery can accurately locate itself and navigate autonomously in complex farmland environments. Path planning technology generates the optimal path based on real-time environmental information, effectively avoiding obstacles and improving operational efficiency. State monitoring and fault diagnosis technologies track the machinery’s status in real time during operations and predict faults, ensuring the stability of the system. Finally, field operation monitoring technology precisely tracks the quality of operations in tasks such as tillage, sowing, and harvesting, thereby improving operational accuracy and crop yield. The synergy of these technologies enables autonomous unmanned agricultural systems to achieve efficient, intelligent, and precise field operations, driving agriculture toward a more modern and intelligent direction.

8.2. Development Prospects

- (1) Autonomous Agricultural Machinery Operations and Sensor Development in Complex Environments

With the successful deployment of China’s BeiDou Satellite Navigation System, positioning and navigation technology has found widespread application in agricultural production. To mitigate the impact of complex environments, key technologies for autonomous operations in agricultural machinery, particularly automated navigation and environmental sensing, require breakthroughs. Because the agricultural environment is diverse and uncontrollable, it is essential to further optimize environmental sensing and positioning/navigation technologies, develop sensors that are robust to interference and widely adaptable, and use information fusion algorithms to integrate multi-source data for improved precision. At the same time, research should explore how to plan paths dynamically in the ever-changing agricultural environment, responding in real time to factors such as crop growth stages, field obstacles, and weather changes, in order to chart safe and efficient routes. High-precision sensing devices (such as LiDAR) and intelligent algorithms are costly to develop and apply, which makes them difficult for farmers to adopt. Therefore, developing low-cost, high-efficiency domestic sensors with independent intellectual property rights is crucial for promoting large-scale application and the widespread adoption of autonomous operation technologies in agricultural machinery.

- (2) Multi-Parameter Monitoring and Comprehensive Fault Diagnosis of Smart Agricultural Machinery

The development of agricultural machinery state monitoring and fault diagnosis, as well as field operation monitoring technologies, will further promote the realization of intelligent and precise agricultural machinery. To achieve comprehensive monitoring of key components of agricultural machinery, a combination of vibration, temperature, acoustic, and other sensors can be used, while optimizing sensor anti-interference technology to enhance the equipment’s adaptability in harsh farmland environments. Regarding fault diagnosis technology, a multi-fault diagnosis platform can be built using big data and artificial intelligence, and through unsupervised learning and transfer learning algorithms, the dependency on labeled data in deep learning methods can be reduced.