Deep Learning-Driven Plant Pathology Assistant: Enabling Visual Diagnosis with AI-Powered Focus and Remediation Recommendations for Precision Agriculture

Abstract

1. Introduction

- We implemented a dual-augmentation pipeline that integrates an adaptive MixUp to strengthen inter-class separability with a plant-specific Albumentations strategy, effectively preserving subtle disease features and enhancing robustness to variations in illumination, viewpoint, and occlusion.

- We implemented a class-aware weighted sampling strategy to elevate the representation of minority classes, improving recognition of rare diseases and ensuring balanced feature learning across all categories.

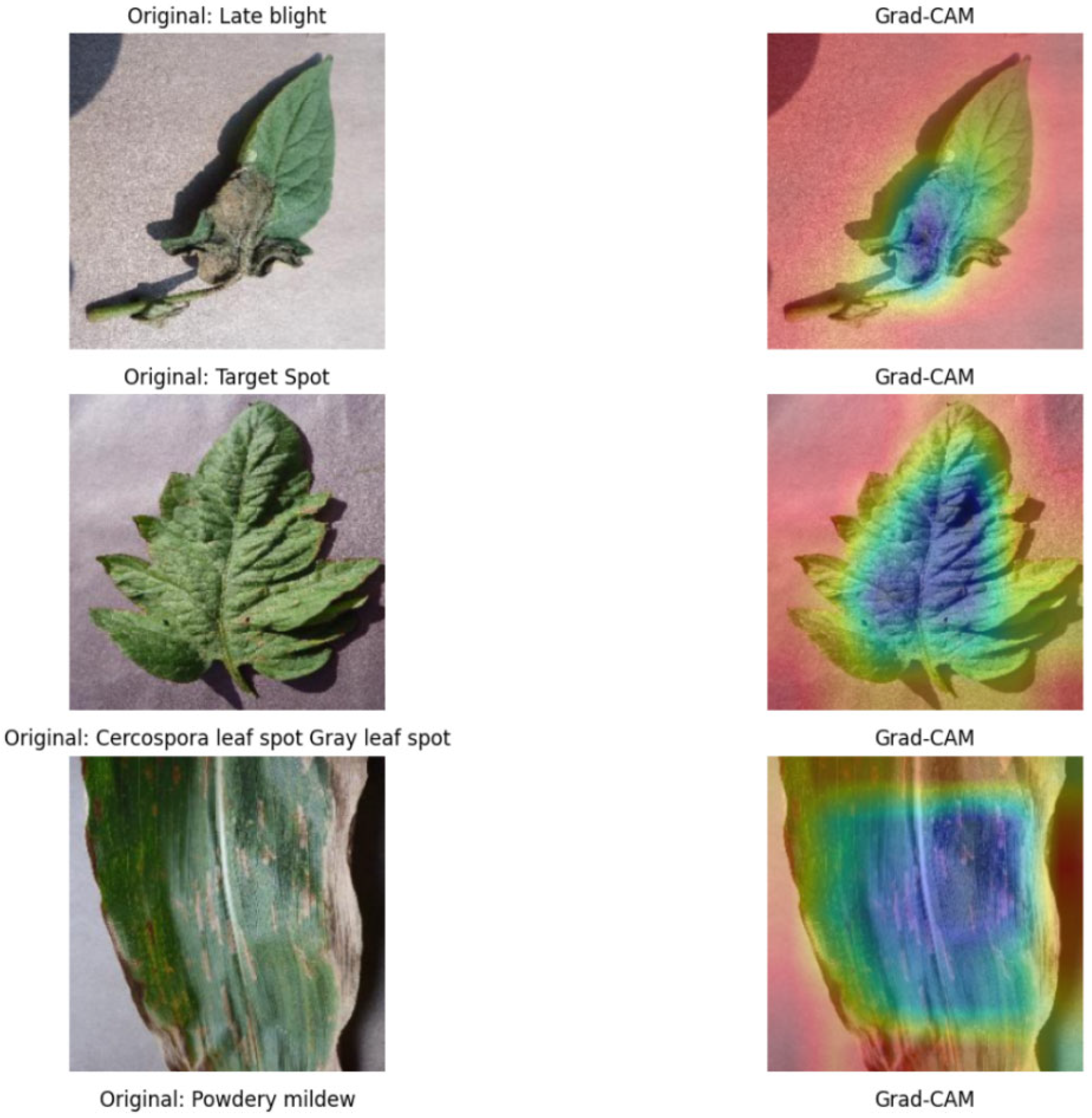

- We integrated Grad-CAM to generate high-resolution class activation heatmaps, enabling precise localization of disease regions within plant images. This visualization approach not only enhances model transparency but also provides agricultural practitioners with intuitive and actionable insights. For instance, farmers can rapidly identify infected leaf areas from the heatmaps, allowing for targeted interventions such as precise pesticide application or pruning, thereby improving disease management efficiency.

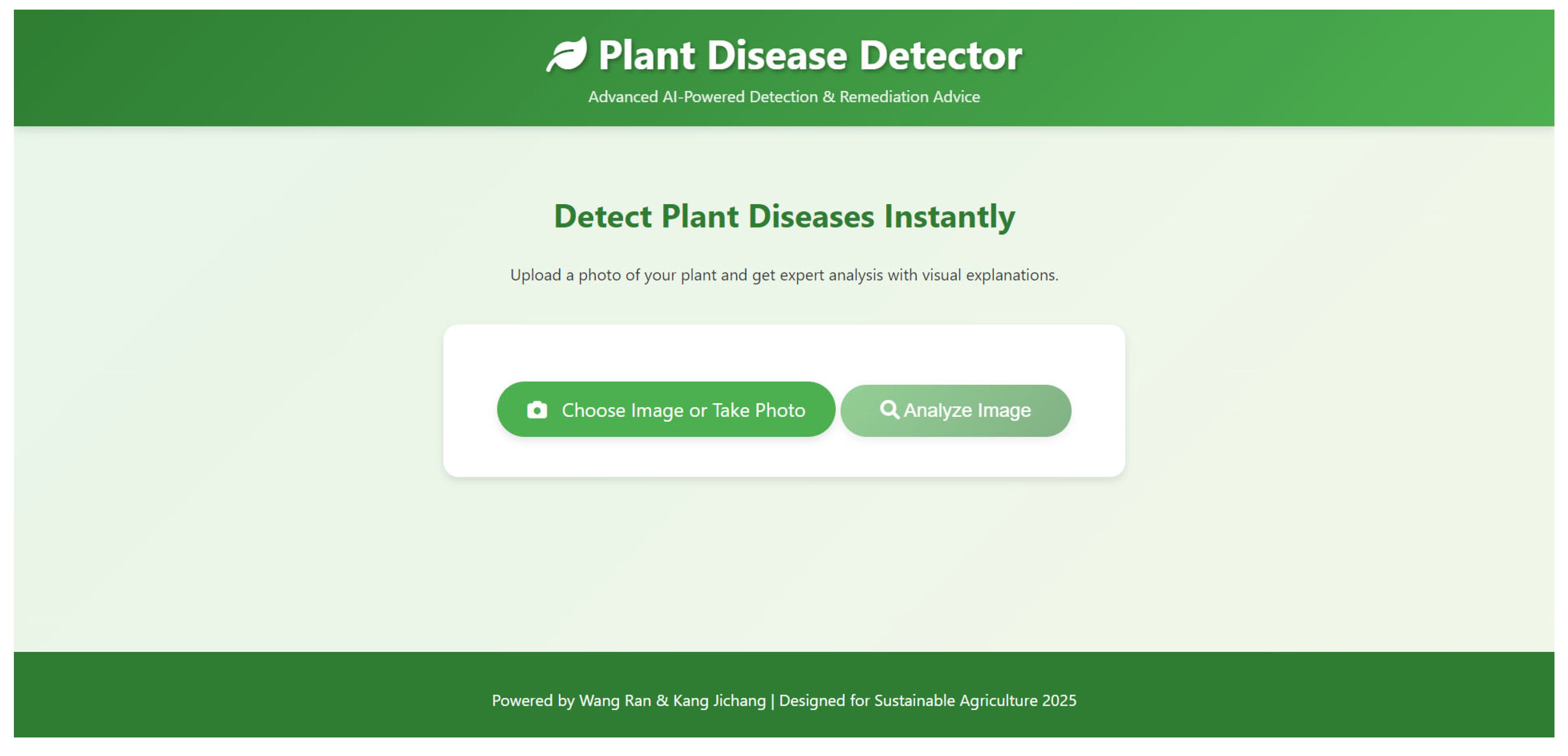

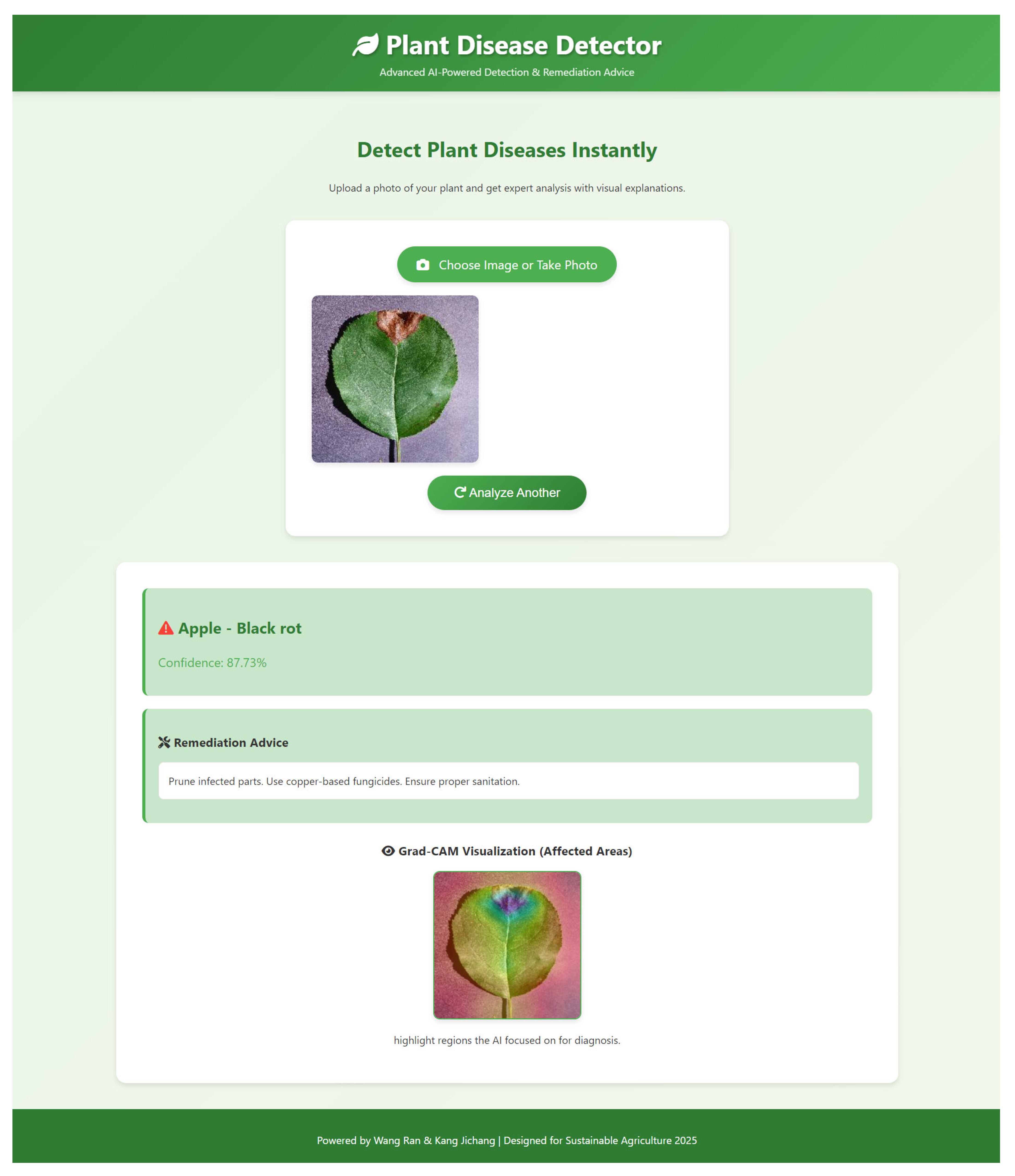

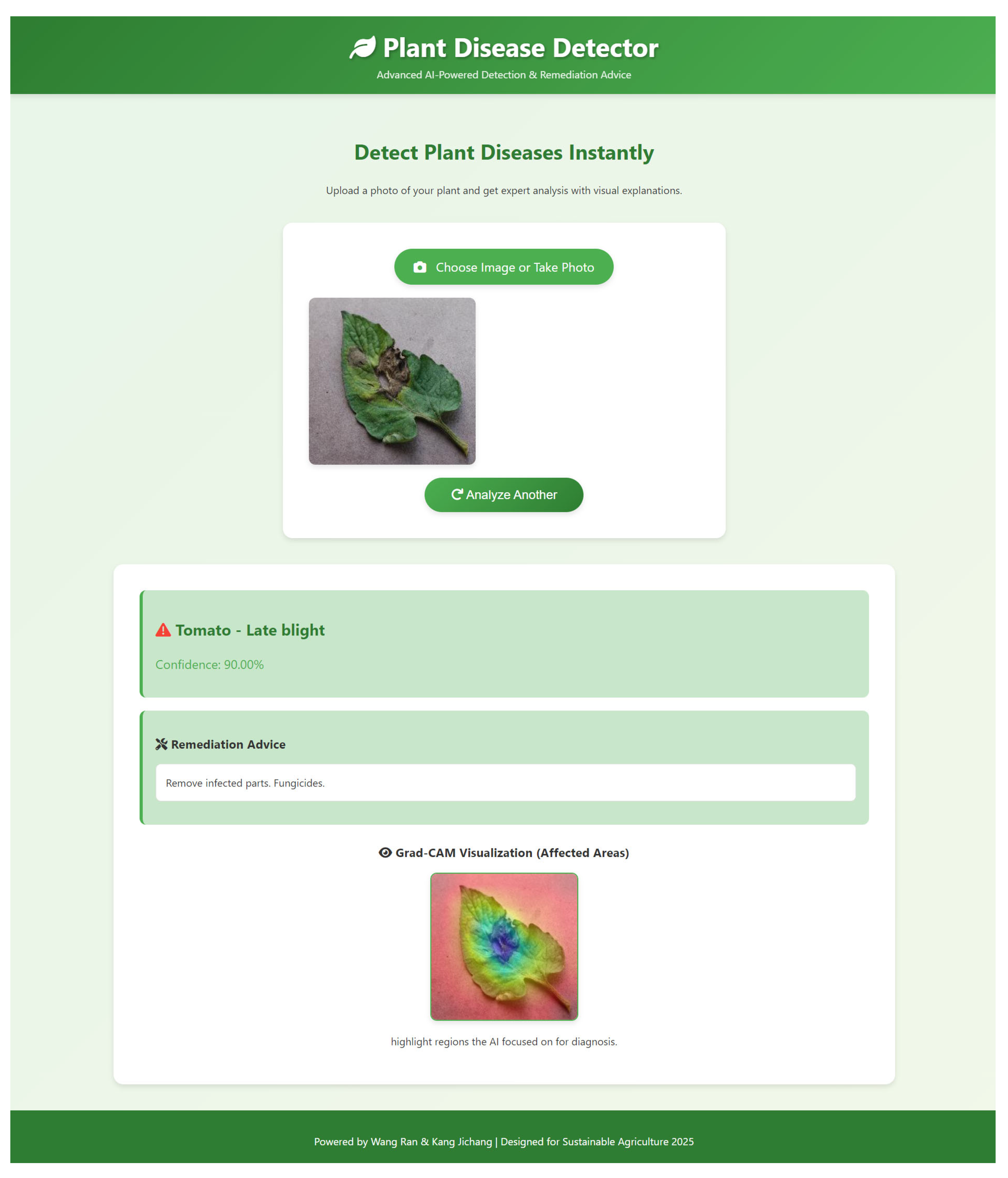

- To enhance practical applicability, we developed an integrated platform combining the SDA-CAH model with an intuitive front-end interface. The system enables real-time image upload, disease diagnosis, Grad-CAM visualization, and remediation guidance, thereby lowering technical barriers and empowering practitioners to leverage AI-driven decision support in precision agriculture.

2. Related Work

3. Materials and Methods

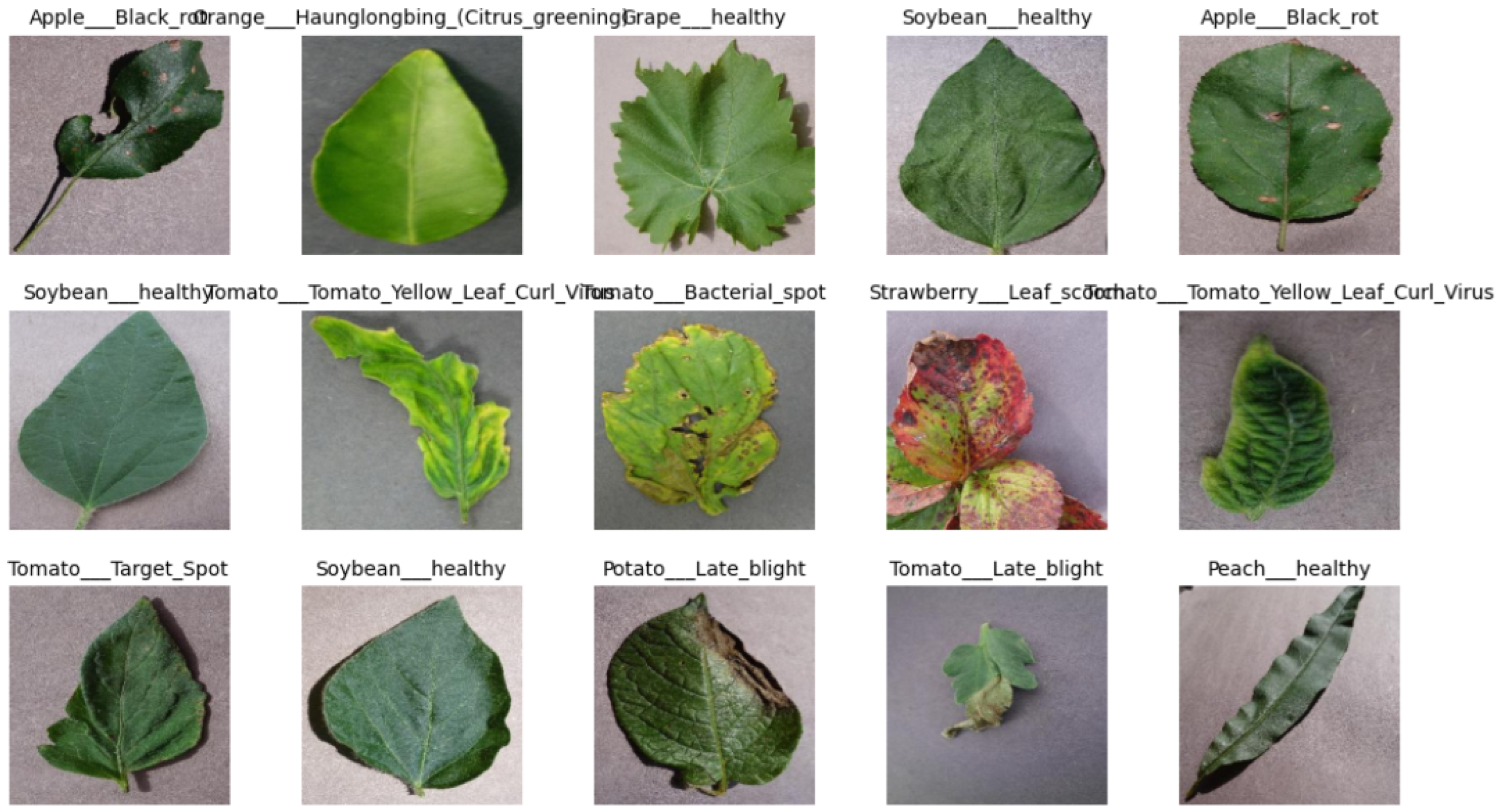

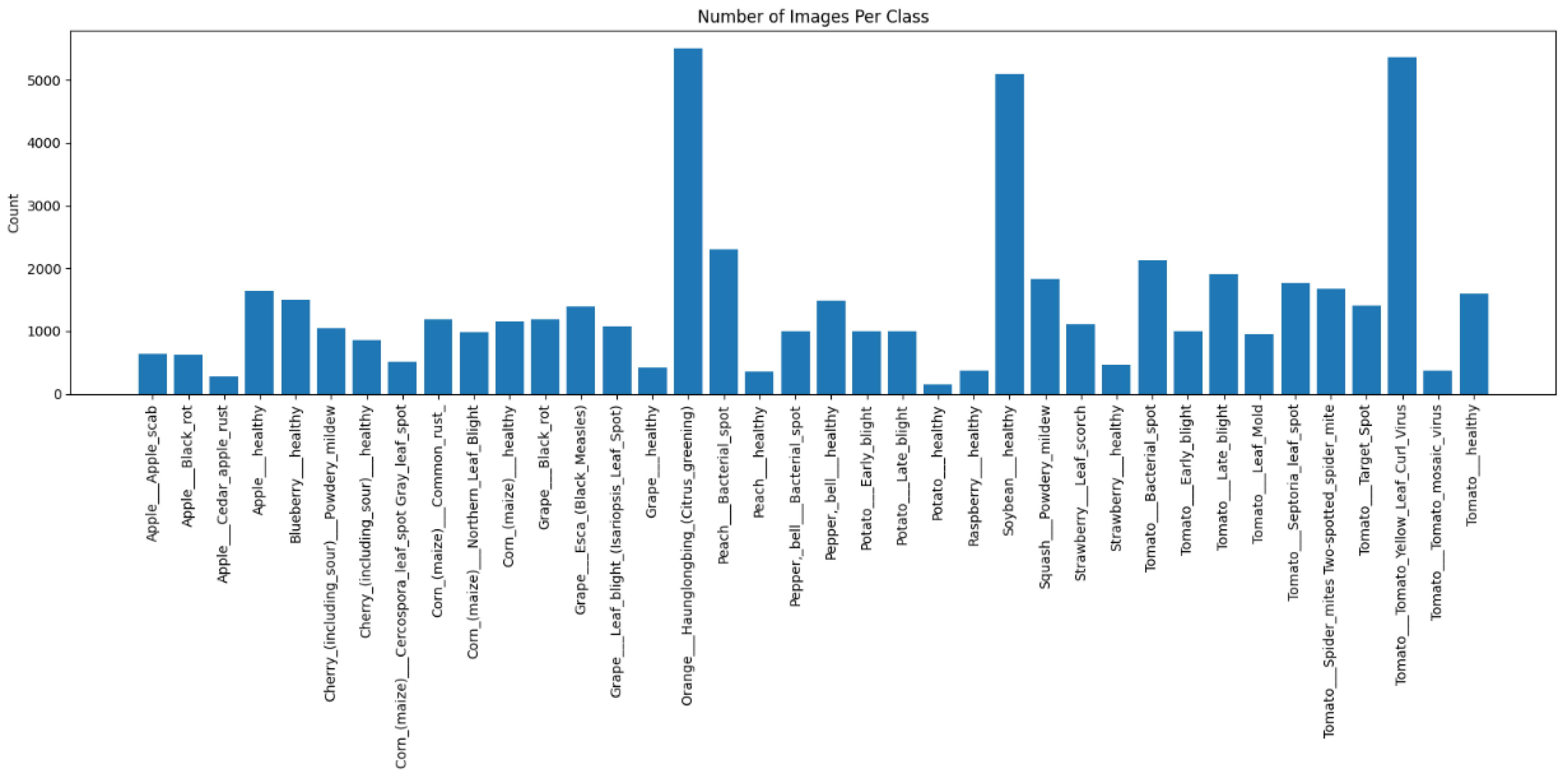

3.1. Dataset

3.2. Methodology

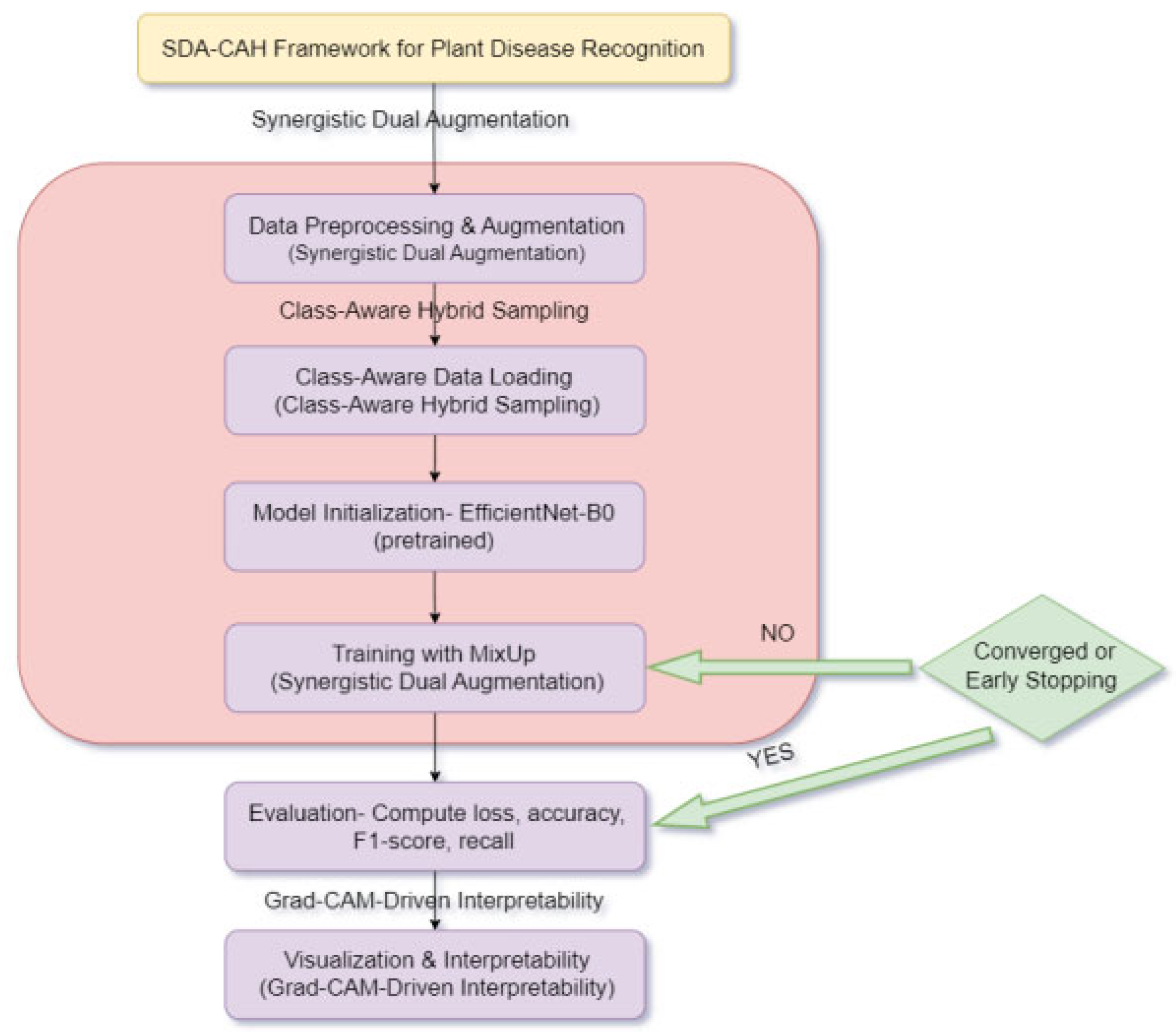

3.2.1. Overview of SDA-CAH Framework

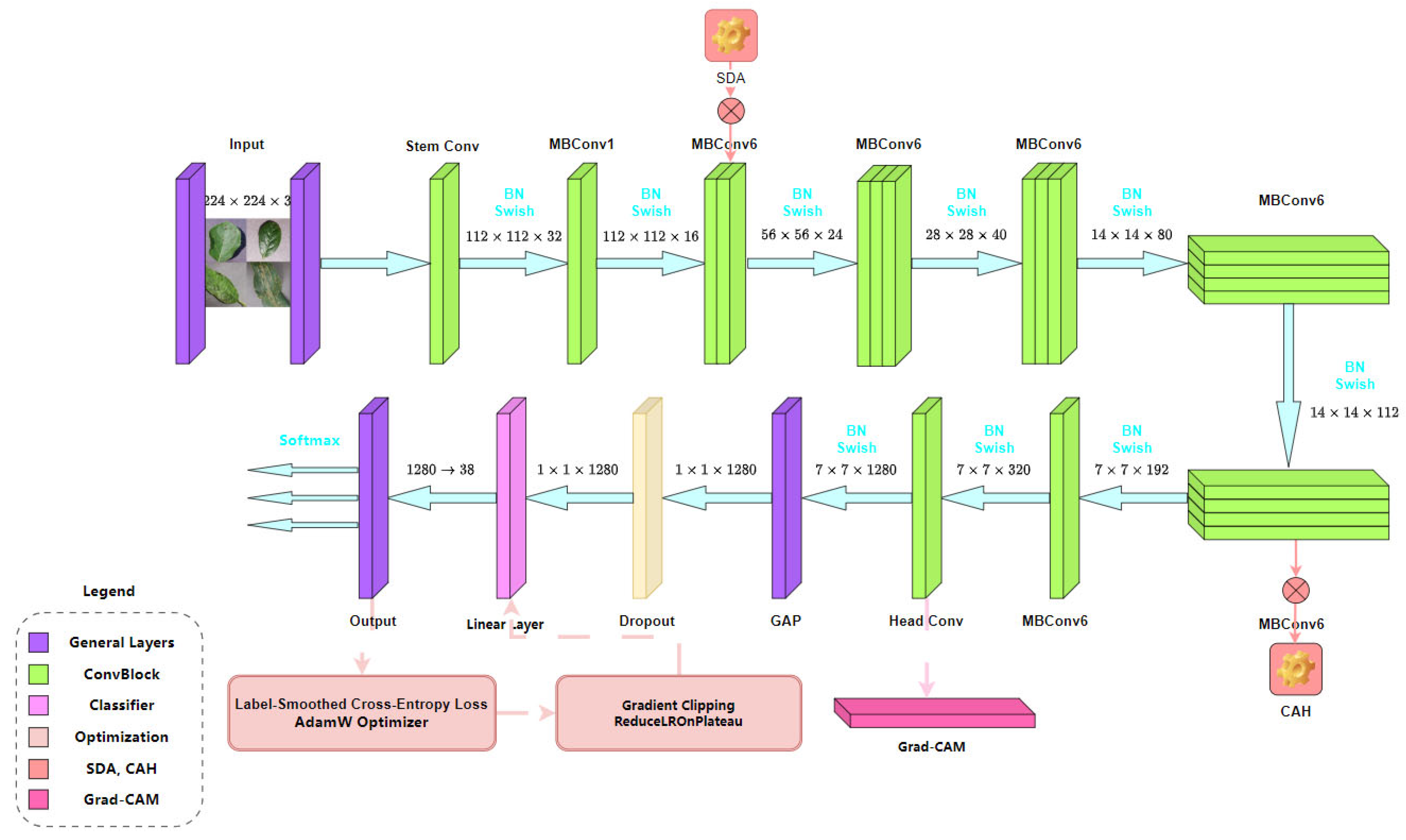

3.2.2. Synergistic Dual Augmentation

- Traditional MixUp generates synthetic samples through linear interpolation, which may blur localized disease features critical for plant disease identification. To address this, we propose a dynamic MixUp implementation that employs an adaptive sampling strategy based on a Beta distribution to dynamically select the mixing coefficient $\lambda$, ensuring both intra-class feature diversity and inter-class boundary clarity. Specifically, for a pair of input images $(x_i, x_j)$ and their corresponding labels $(y_i, y_j)$, we generate a mixed sample (Equation (1)) and a mixed label (Equation (2)):

  $$\tilde{x} = \lambda x_i + (1 - \lambda) x_j \tag{1}$$

  $$\tilde{y} = \lambda y_i + (1 - \lambda) y_j \tag{2}$$

  where the mixing coefficient $\lambda \sim \mathrm{Beta}(\alpha, \alpha)$. The probability density function of the Beta distribution is defined as Equation (3):

  $$p(\lambda; \alpha, \alpha) = \frac{\Gamma(2\alpha)}{\Gamma(\alpha)^2}\, \lambda^{\alpha - 1} (1 - \lambda)^{\alpha - 1}, \quad \lambda \in [0, 1] \tag{3}$$

  By setting $\alpha = 0.1$, the Beta distribution is configured to favor values close to 0 or 1, thereby preserving more features from the original images while introducing controlled diversity. This adaptive sampling strategy enhances the model’s sensitivity to disease-specific features, balancing intra-class variability and inter-class discriminability, and significantly improving recognition performance for rare categories. During training, we incorporate a mixed loss function to ensure the model optimizes contributions from both labels:

  $$\mathcal{L}_{\mathrm{mix}} = \lambda\, \mathcal{L}_{\mathrm{CE}}(f(\tilde{x}), y_i) + (1 - \lambda)\, \mathcal{L}_{\mathrm{CE}}(f(\tilde{x}), y_j) \tag{4}$$

  where $\mathcal{L}_{\mathrm{CE}}$ denotes the cross-entropy loss, and $f(\tilde{x})$ represents the model’s output. Compared to traditional MixUp, our optimized approach better preserves the integrity of disease features through dynamic sampling, providing robust generalization support for complex agricultural scenarios.

- To address the visual characteristics of plant imagery, we developed a tailored Albumentations augmentation pipeline that integrates selective geometric and photometric transformations to simulate the complexity of field environments while preserving the integrity of disease symptoms. The geometric transformations include RandomRotate90, HorizontalFlip, and VerticalFlip to emulate the natural orientations of plants from various angles. The photometric transformations encompass RandomBrightnessContrast and selective blur/noise operations, including GaussianBlur and GaussNoise, to build robustness to variations in illumination and to noise interference. The brightness transformation can be modeled as Equation (5):

  $$I' = a \cdot I + b \tag{5}$$

  where $I$ is the original pixel value, $a$ controls contrast, and $b$ adjusts brightness. To safeguard critical disease features, such as lesions and discoloration, we reduced the frequency of CoarseDropout to prevent excessive occlusion of symptomatic regions. This carefully calibrated combination of transformations enhances the model’s adaptability to real-world image variability. Ultimately, the synergistic dual augmentation strategy, combining optimized MixUp with the customized Albumentations pipeline, achieves an effective balance between feature preservation and robustness, ensuring superior performance in complex agricultural scenarios.
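The MixUp step described above can be illustrated with a minimal, standard-library sketch. It assumes flattened images and one-hot labels stored as plain lists, and the helper names (`sample_lambda`, `mixup_pair`, `mixup_loss`) are hypothetical; it is not the authors' implementation, which would more likely operate on PyTorch tensors.

```python
import math
import random


def sample_lambda(alpha: float, rng: random.Random) -> float:
    """Draw the mixing coefficient from Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)


def mixup_pair(x_i, x_j, y_i, y_j, lam):
    """Linearly interpolate two flattened images and their one-hot labels."""
    x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_i, y_j)]
    return x_mix, y_mix


def cross_entropy(probs, target_index):
    """Cross-entropy of predicted class probabilities against one class index."""
    return -math.log(max(probs[target_index], 1e-12))


def mixup_loss(probs, idx_i, idx_j, lam):
    """Weight the two cross-entropy terms by lam and (1 - lam)."""
    return lam * cross_entropy(probs, idx_i) + (1.0 - lam) * cross_entropy(probs, idx_j)


rng = random.Random(42)
lam = sample_lambda(0.1, rng)  # alpha = 0.1, as in the hyperparameter settings
x_mix, y_mix = mixup_pair([0.0, 1.0], [1.0, 0.0], [1.0, 0.0], [0.0, 1.0], lam)
loss = mixup_loss([0.7, 0.3], 0, 1, lam)  # toy model output for the mixed sample
```

With a small shape parameter such as 0.1, most draws of `lam` land near 0 or 1, so each mixed sample stays close to one of its two source images.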

3.2.3. Class-Aware Hybrid Sampling

- We designed a novel sampling strategy that assigns inverse class frequency weights to each disease category, where $n_c$ denotes the number of samples for category $c$. This approach allocates higher sampling probabilities to rare categories, ensuring more frequent exposure to minority class samples during training iterations, thereby enhancing the learning of underrepresented disease features. The weight calculation is defined as Equation (6):

  $$w_c = \frac{1}{n_c}, \quad c = 1, \dots, C \tag{6}$$

  where $C$ represents the total number of categories ($C = 38$ for the PlantVillage dataset). To ensure stability in the sampling distribution, we normalize the weights as follows:

  $$\hat{w}_c = \frac{w_c}{\sum_{k=1}^{C} w_k} \tag{7}$$

  The normalized weights are used to construct a weighted random sampler, where the sampling probability for each sample $i$ is proportional to $\hat{w}_{y_i}$, with $y_i$ denoting the class label of sample $i$. Unlike traditional oversampling methods, which often introduce noise or information loss, our proposed sampler mitigates data redundancy and stabilizes the sampling distribution through dynamic weight adjustment and normalization, while incorporating random perturbations to avoid local optima. This class-aware strategy effectively addresses the severe class imbalance in the PlantVillage dataset, prioritizing the learning of rare category features and significantly enhancing the model’s generalization capability in complex agricultural environments. This approach demonstrates substantial innovation in tackling class imbalance challenges.

- To achieve efficient and stable batch processing, we integrate the class-aware sampler into the data loading framework and use weighted random sampling based on the inverse class frequency to generate a multinomial distribution sampling sequence to ensure balanced category representation in each batch. The batch size is set to 64, and multithreading and prefetching mechanisms are combined to optimize data loading efficiency and minimize I/O bottlenecks during training. The data loading throughput can be approximated as Equation (8), where $B$ is the batch size, $N$ is the total number of samples in the dataset, $T_{\mathrm{load}}$ is the data loading time, and $T_{\mathrm{transfer}}$ is the time to transfer data to the GPU. Both $T_{\mathrm{load}}$ and $T_{\mathrm{transfer}}$ are significantly reduced through parallelization and memory pinning. This efficient integration scheme supports high-throughput processing of large-scale datasets while maintaining category balance. Experimental verification on the PlantVillage dataset confirms its effectiveness and significantly improves the performance of the SDA-CAH framework in class-imbalanced environments.
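As a rough illustration of inverse-frequency sampling, the sketch below computes normalized class weights and draws a batch with replacement using only the standard library. The function names and the toy label set are assumptions made for the example; in a PyTorch pipeline the per-sample weights would typically be handed to `torch.utils.data.WeightedRandomSampler` instead of `random.choices`.

```python
import random
from collections import Counter


def class_weights(labels):
    """Inverse-frequency weight per class, normalized to sum to 1."""
    counts = Counter(labels)
    raw = {c: 1.0 / n for c, n in counts.items()}
    total = sum(raw.values())
    return {c: w / total for c, w in raw.items()}


def sample_weights(labels):
    """Give every sample the normalized weight of its class."""
    weights = class_weights(labels)
    return [weights[y] for y in labels]


def draw_batch(labels, batch_size, rng):
    """Draw sample indices with replacement, proportional to per-sample weight."""
    weights = sample_weights(labels)
    return rng.choices(range(len(labels)), weights=weights, k=batch_size)


# Toy imbalanced dataset: 90 majority-class samples and 10 rare-class samples.
labels = ["healthy"] * 90 + ["rare_blight"] * 10
rng = random.Random(42)
batch = draw_batch(labels, 64, rng)
```

Because each sample is weighted by the inverse of its class count, every class receives the same total probability mass, so the rare class fills roughly half of each batch in this two-class toy case.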

3.2.4. Model Architecture

3.2.5. Explainable Visualization

- To enhance the interpretability of the SDA-CAH framework, we developed a customized Grad-CAM module based on the final convolutional layer of EfficientNet-B0. This module extracts activation maps and gradient information to compute the contribution of each channel to the prediction, generating precise disease localization heatmaps. For a target class $c$, let the activation map of the last convolutional layer be $A^k \in \mathbb{R}^{H \times W}$, where $A^k_{ij}$ denotes the activation of the $k$-th feature channel at spatial location $(i, j)$, with dimensions $H$ (height) and $W$ (width). The activation map is obtained through forward propagation, and the gradient of the target class score $y^c$ with respect to the activation map is computed via backpropagation:

  $$G^{k}_{ij} = \frac{\partial y^c}{\partial A^k_{ij}} \tag{9}$$

  where $(i, j)$ represents the spatial position. The global average pooling of these gradients yields the weight for each feature channel:

  $$\alpha^c_k = \frac{1}{H W} \sum_{i=1}^{H} \sum_{j=1}^{W} \frac{\partial y^c}{\partial A^k_{ij}} \tag{10}$$

  Using these weights $\alpha^c_k$, the activation maps are weighted and combined, followed by ReLU activation to generate the initial heatmap:

  $$L^c = \mathrm{ReLU}\left( \sum_{k} \alpha^c_k A^k \right) \tag{11}$$

  To align with the 224 × 224 resolution of plant images, the heatmap is upsampled to the target size using bilinear interpolation:

  $$L^c_{\mathrm{up}}(u, v) = \sum_{i=1}^{H} \sum_{j=1}^{W} L^c_{ij}\, \mathcal{K}(u, v; i, j) \tag{12}$$

  where $\mathcal{K}$ denotes the bilinear interpolation kernel. The final heatmap is normalized as Equation (13):

  $$L^c_{\mathrm{norm}} = \frac{L^c_{\mathrm{up}} - \min(L^c_{\mathrm{up}})}{\max(L^c_{\mathrm{up}}) - \min(L^c_{\mathrm{up}})} \tag{13}$$

  This optimized Grad-CAM implementation, tailored for EfficientNet-B0, effectively captures subtle disease features (e.g., spots, discoloration), significantly improving the heatmap’s adaptability to agricultural scenarios compared to standard Grad-CAM methods.

- To enhance interpretability for end-users, we designed an innovative heatmap overlay process. Initially, the input image is de-standardized to restore its RGB format. Let the standardized image be $I_{\mathrm{std}}$; the de-standardization process is defined as Equation (14):

  $$I_{\mathrm{rgb}} = I_{\mathrm{std}} \odot \sigma + \mu \tag{14}$$

  where $\mu$ and $\sigma$ represent the mean and standard deviation vectors, respectively. Subsequently, the normalized heatmap is converted to a pseudo-color representation using the JET colormap:

  $$H_{\mathrm{color}} = \mathrm{JET}(L^c_{\mathrm{norm}}) \tag{15}$$

  The final output image is generated via a weighted overlay formula:

  $$I_{\mathrm{out}} = \gamma\, H_{\mathrm{color}} + (1 - \gamma)\, I_{\mathrm{rgb}} \tag{16}$$

  where $\gamma$ is the transparency parameter, ensuring clear correspondence between disease-affected regions and plant structures. The output image is normalized to ensure pixel values fall within the range [0, 255]:

  $$I_{\mathrm{final}} = \mathrm{clip}(I_{\mathrm{out}}, 0, 255) \tag{17}$$

  This process not only preserves the details of the original image but also highlights disease-affected areas through the heatmap, providing actionable insights for farmers, such as localizing infected leaves to guide precise pesticide application.
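The core Grad-CAM arithmetic (gradient pooling, weighted sum with ReLU, min-max normalization, and a per-pixel blend) can be sketched on small nested lists as follows. This is an illustrative sketch under stated assumptions: the function names and the default blend factor of 0.4 are hypothetical, bilinear upsampling and the JET colormap are omitted, and a real module would read activations and gradients from EfficientNet-B0 through framework hooks.

```python
def channel_weights(gradients):
    """Global-average-pool each channel's gradient map into one weight."""
    weights = []
    for grad_map in gradients:
        values = [g for row in grad_map for g in row]
        weights.append(sum(values) / len(values))
    return weights


def grad_cam(activations, gradients):
    """ReLU of the gradient-weighted sum of activation maps."""
    weights = channel_weights(gradients)
    height, width = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for w, act_map in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += w * act_map[i][j]
    return [[max(v, 0.0) for v in row] for row in cam]


def min_max_normalize(cam, eps=1e-8):
    """Rescale the heatmap to [0, 1]; eps guards against a flat map."""
    flat = [v for row in cam for v in row]
    lo, hi = min(flat), max(flat)
    return [[(v - lo) / (hi - lo + eps) for v in row] for row in cam]


def overlay(heat_rgb, image_rgb, gamma=0.4):
    """Blend one heatmap pixel over one image pixel and clip to [0, 255]."""
    return tuple(
        min(255, max(0, round(gamma * h + (1.0 - gamma) * p)))
        for h, p in zip(heat_rgb, image_rgb)
    )


# Two 2x2 channels: the first supports the class, the second opposes it.
activations = [[[1.0, 2.0], [3.0, 4.0]], [[4.0, 3.0], [2.0, 1.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
cam = grad_cam(activations, gradients)
heat = min_max_normalize(cam)
pixel = overlay((255, 0, 0), (0, 0, 255))
```

In the toy input, the ReLU zeroes the top row where the opposing channel dominates, so only the bottom row remains highlighted after normalization.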

3.2.6. System Implementation

- The platform employs a client–server architecture, comprising a frontend user interface and a backend server. The frontend, built with HTML5, CSS, and JavaScript (V8 12.7.224.18), provides an intuitive interface that supports image uploads, real-time result visualization, and Grad-CAM heatmap display. Bootstrap is utilized to implement a responsive design, ensuring a consistent user experience across smartphones, tablets, and desktop devices—critical for diverse connectivity conditions in rural field settings. The backend is built on Flask and is responsible for image preprocessing, model inference, Grad-CAM generation, and response formatting. To optimize technical performance, the platform implements batch processing and asynchronous request handling, ensuring low latency and efficient concurrent inference. Model weights are loaded into memory for rapid prediction, and GPU acceleration is supported when available. The architecture also incorporates input validation and secure API endpoints to maintain reliability and safety. This design allows scalable deployment on cloud platforms such as AWS or Heroku, facilitating broad accessibility and robust performance across different devices and environments. Figure 5 illustrates the complete system architecture.

- The platform operationalizes the SDA-CAH framework as a fully integrated end-to-end diagnostic workflow, combining algorithmic precision with engineering optimization. Users can submit plant images via file upload or camera capture; the images are then preprocessed (resized, normalized, and color-adjusted) to align with the model’s training pipeline. Preprocessing and inference are executed asynchronously and in parallel, leveraging GPU acceleration when available, with model weights preloaded into memory to minimize latency. Disease predictions are derived from softmax probabilities, while Grad-CAM heatmaps are computed by backpropagating the predicted class score to the final convolutional layer; the resulting map is resized and overlaid on the original image for intuitive and interpretable visualization. Each disease class is associated with expert-curated remediation guidance, which is retrieved dynamically for immediate presentation. The frontend displays predictions, confidence scores, Grad-CAM overlays, and actionable recommendations through a responsive interface with asynchronous rendering, supporting low-latency, scalable, and interpretable deployment across diverse devices. This architecture integrates high-performance modeling, efficient inference, and user-centric visualization, facilitating practical application in real-world agricultural scenarios.

- The platform is fully containerized using Docker (version 20.10.7), enabling consistent and reproducible deployment across diverse environments, and utilizes NGINX (version 1.24.0) as a reverse proxy to handle load balancing, SSL termination, and request routing in the production environment. Usability testing, simulating real-world user scenarios, confirmed the interface’s intuitiveness; planned extensions include multi-language support and Service Worker-based offline caching to maintain functionality under intermittent network conditions. From a technical perspective, the deployment pipeline integrates automated build and continuous integration workflows, with GPU-enabled containers for efficient model inference and memory optimization. Logging, monitoring, and input validation mechanisms are incorporated to ensure robustness, security, and maintainability. This implementation not only democratizes access to the SDA-CAH framework but also enables anonymized collection of user data for iterative model fine-tuning, supporting continuous improvement. By combining scalable, secure deployment with real-time, explainable AI, the platform translates research into a practical tool for precision agriculture, advancing global food security and sustainable crop management.
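The step from model output to user-facing result (softmax confidence plus a remediation lookup) might look roughly like the sketch below. Everything here is an assumption for illustration: the `REMEDIATION` table, its placeholder strings, the class names, and the `build_response` helper are hypothetical and do not reproduce the platform's actual API or its expert-curated guidance.

```python
import math

# Hypothetical lookup table; the strings are placeholders, not agronomic advice.
REMEDIATION = {
    "Tomato___Late_blight": "placeholder guidance for late blight",
    "Tomato___healthy": "placeholder: no action needed",
}


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build_response(logits, class_names, remediation):
    """Format the top prediction, its confidence, and the matching guidance."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    label = class_names[best]
    return {
        "label": label,
        "confidence": round(probs[best], 4),
        "remediation": remediation.get(label, "no guidance available"),
    }


class_names = ["Tomato___Late_blight", "Tomato___healthy"]
response = build_response([2.0, 0.5], class_names, REMEDIATION)
```

A backend handler would typically serialize such a dictionary to JSON and return it alongside the Grad-CAM overlay image.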

3.2.7. Training and Evaluation

- To ensure consistency in data distribution, experimental reproducibility, and prevention of potential data leakage, a stratified sampling strategy was employed on the PlantVillage dataset (38 classes, over 50,000 images), partitioning it at the image level into 70% training, 15% validation, and 15% testing sets using a fixed random seed of 42. The splitting process was conducted in two stages: first, the dataset was divided into a training set and a temporary set; the latter was then proportionally split into validation and test sets, effectively preserving the distribution of rare classes (each comprising less than 2% of the data). Crucially, all subsets were mutually exclusive: no image or its augmented variant appeared across multiple subsets, ensuring that model performance reflects genuine learning rather than memorization of duplicated samples. Table 3 details the dataset split. This rigorous partitioning strategy not only supports the class-aware hybrid sampling mechanism but also guarantees fairness across classes during training, tuning, and evaluation. All experiments were conducted exclusively on the PlantVillage dataset to maintain controlled comparability with prior studies. While this dataset provides consistent illumination and background conditions, it does not fully capture the complexity of real-world field scenarios. Consequently, the reported accuracy primarily reflects strong in-domain performance rather than cross-domain generalization, and the framework’s robustness and interpretability should be understood within this controlled experimental scope.
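The two-stage stratified split can be sketched with the standard library as shown below. The `stratified_split` helper is hypothetical and only approximates the described procedure; the original pipeline may well have relied on a library routine such as scikit-learn's `train_test_split` with its `stratify` argument.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=42):
    """Split sample indices per class so every subset keeps the class mix."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, val, test = [], [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Stage one: carve off the training share of this class.
        n_train = round(fractions[0] * len(indices))
        # Stage two: divide the remaining "temporary" indices between val and test.
        temp = indices[n_train:]
        n_val = round(len(temp) * fractions[1] / (fractions[1] + fractions[2]))
        train += indices[:n_train]
        val += temp[:n_val]
        test += temp[n_val:]
    return train, val, test


# Toy dataset with one common and one rare class.
labels = ["a"] * 100 + ["b"] * 20
train_idx, val_idx, test_idx = stratified_split(labels)
```

Splitting inside each class keeps rare classes represented in all three subsets, and the fixed seed makes the partition reproducible. Augmentation would then be applied only after the split so that no augmented variant crosses subsets.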

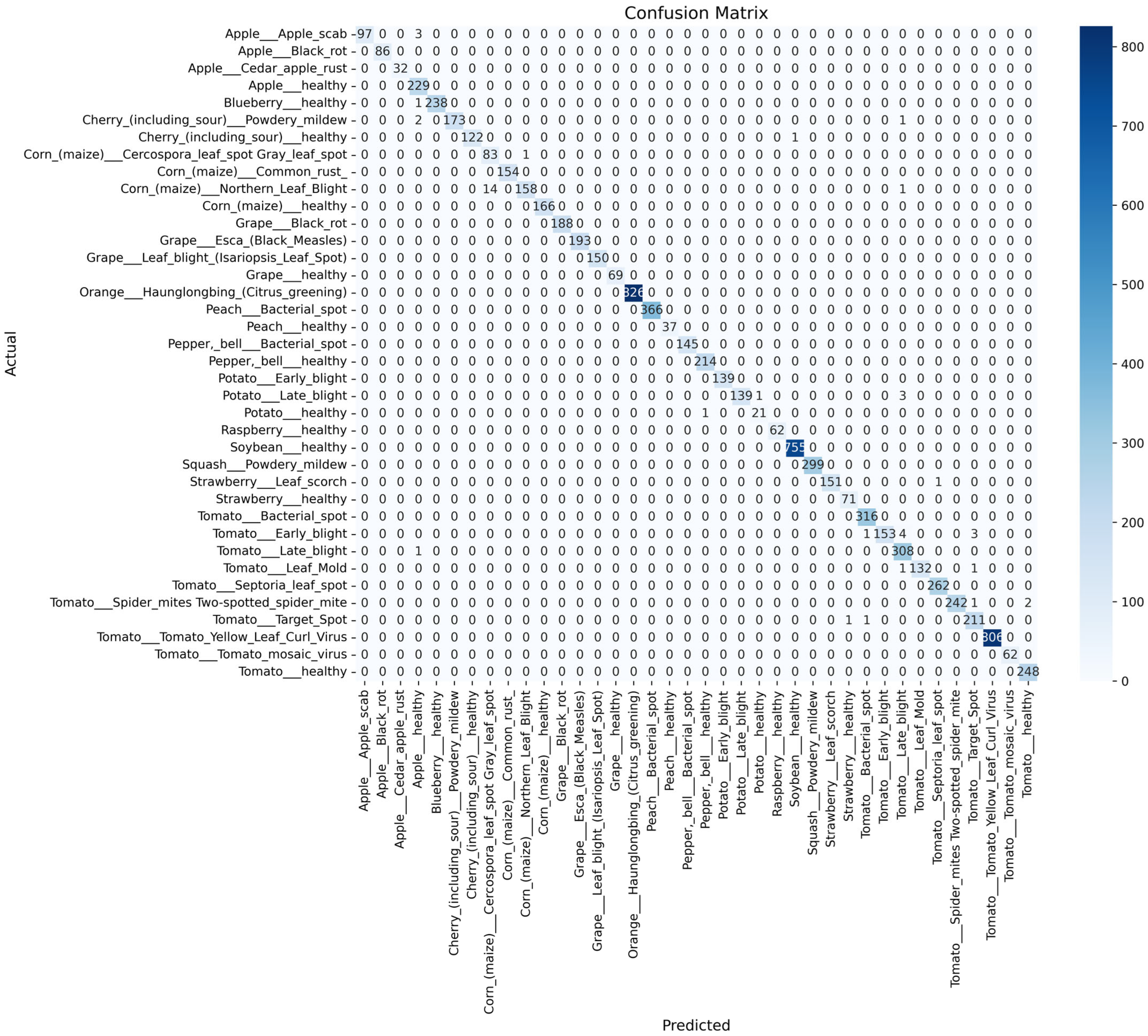

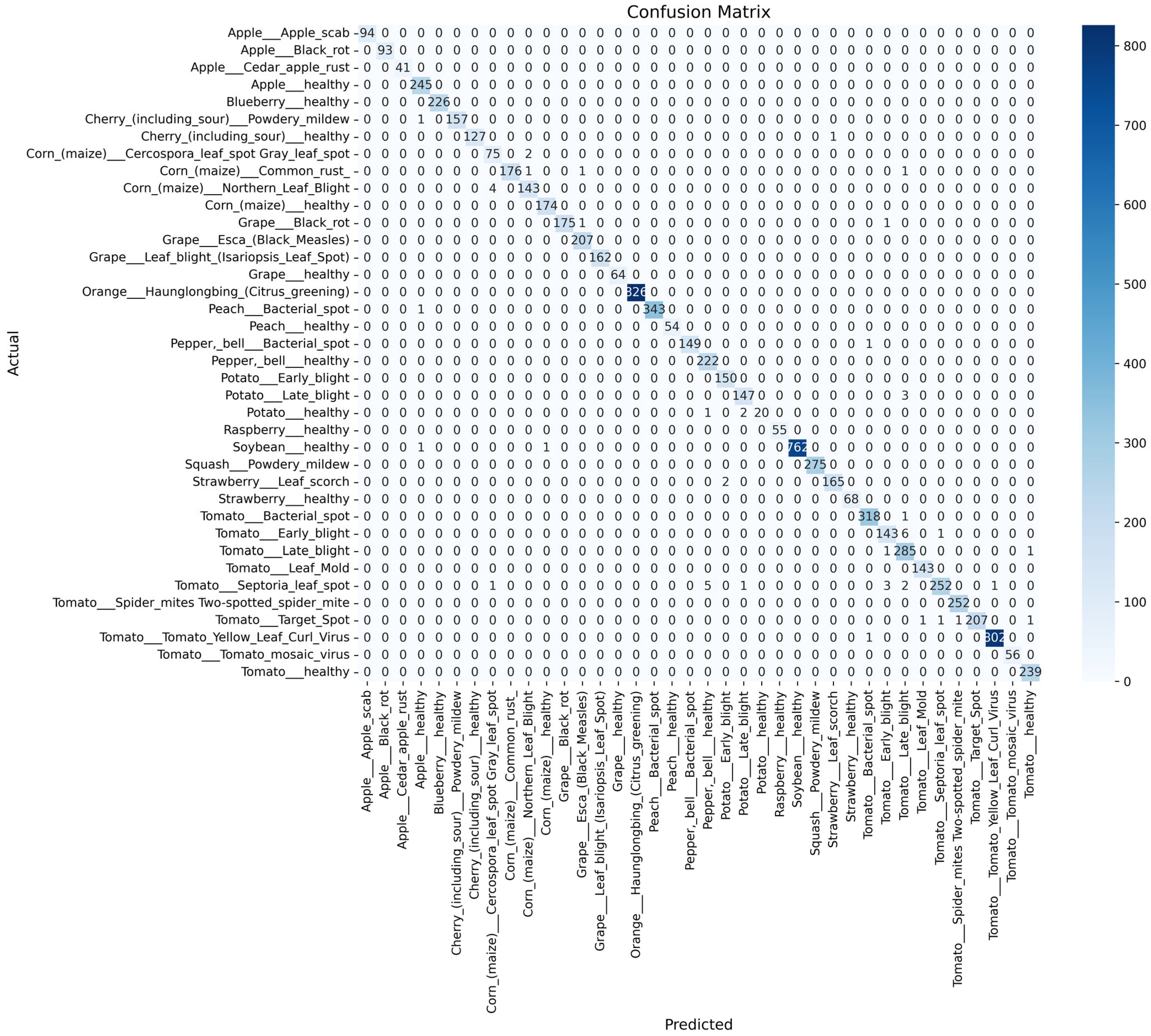

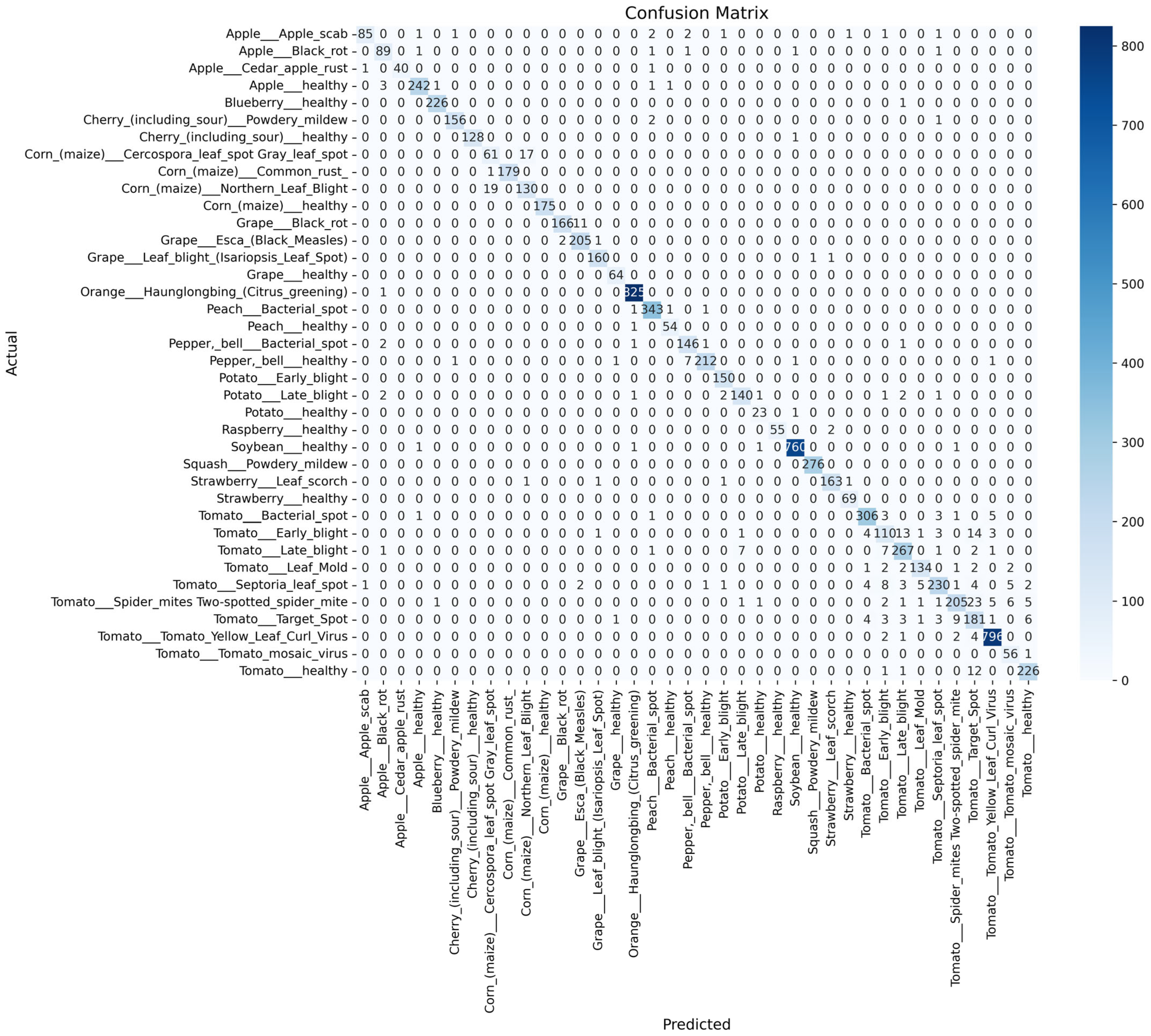

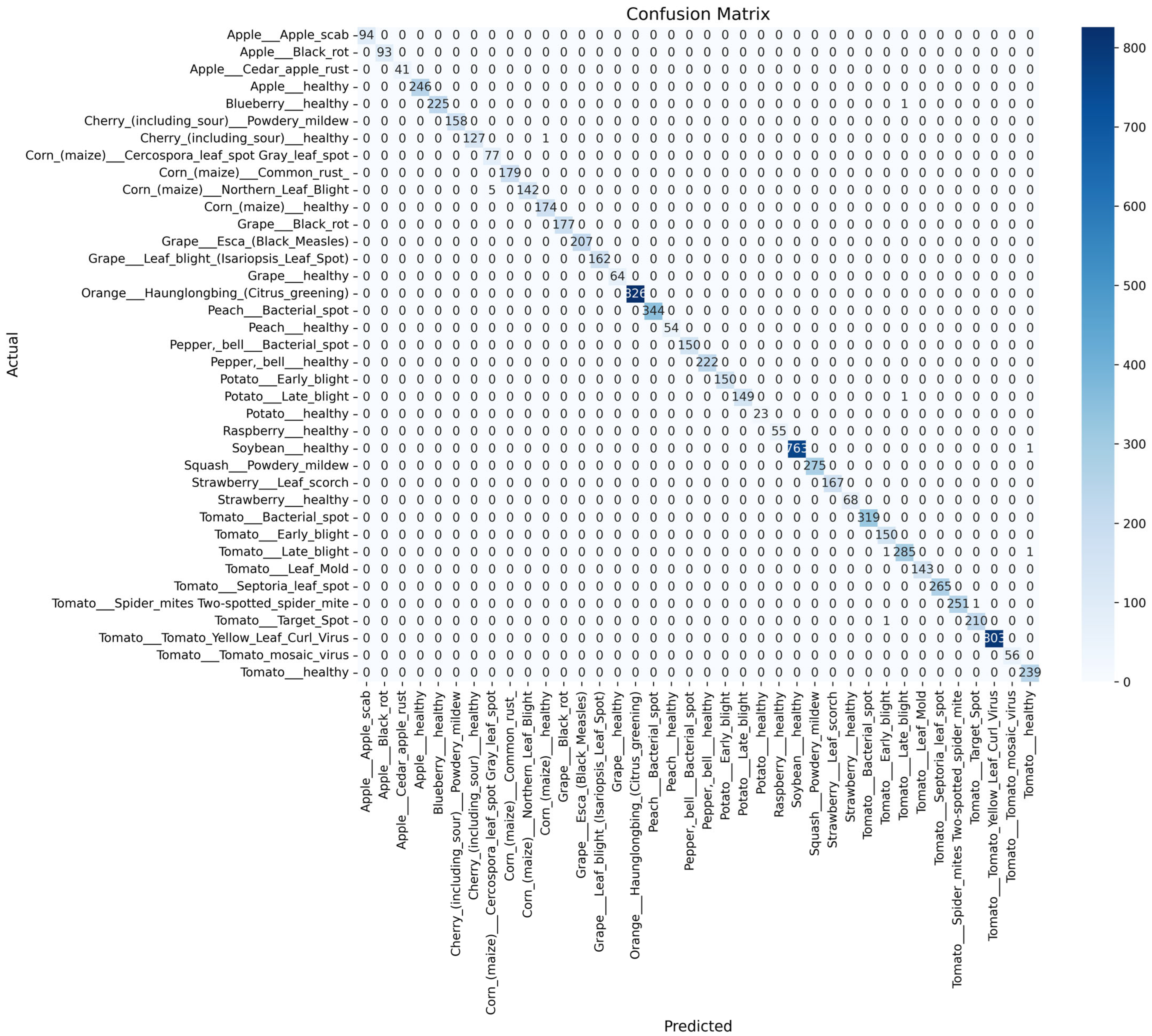

- To comprehensively assess the performance of the SDA-CAH framework, we employ multiple evaluation metrics, including accuracy (Equation (18)), weighted F1 score (Equation (19)), weighted recall (Equation (20)), and confusion matrix analysis. Accuracy measures the overall classification correctness, while the weighted F1 score and recall, by accounting for class sample sizes, are particularly suited for imbalanced datasets, emphasizing the model’s ability to recognize rare classes. The confusion matrix further elucidates inter-class misclassification patterns, providing clear insights into the model’s classification granularity.
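For reference, accuracy and the support-weighted recall and F1 score can be computed from label lists as in the following sketch. The `weighted_metrics` helper is a hypothetical stand-in written for illustration; it follows the usual support-weighted definitions rather than any code from the study.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted recall and F1 over all classes."""
    support = Counter(y_true)
    total = len(y_true)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / total
    recall_w = f1_w = 0.0
    for cls, n in support.items():
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = n - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn)
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        # Each class contributes in proportion to its share of the samples.
        recall_w += (n / total) * recall
        f1_w += (n / total) * f1
    return accuracy, recall_w, f1_w


accuracy, recall_w, f1_w = weighted_metrics([0, 0, 0, 1], [0, 0, 1, 1])
```

Under this definition, support-weighted recall coincides with overall accuracy for single-label classification, since the class supports cancel in the weighted sum; the weighted F1 score is the metric that additionally reflects per-class precision.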

3.3. Experimental Setup

3.3.1. Implementation Details

- This study was conducted on a dual NVIDIA T4 GPU setup (2 × 16 GB VRAM). The T4 GPUs, based on the NVIDIA Turing architecture, provide powerful tensor computation capabilities and low power consumption, making them particularly suitable for parallel computing optimization in large-scale image classification tasks. The experimental environment utilized Python 3.10.12, with the PyTorch ecosystem as the core deep learning framework, complemented by Torchvision for pretrained models and image processing tools, enabling a complete workflow from feature extraction to model fine-tuning. To fully leverage the hardware capabilities, we employed PyTorch’s standard device-agnostic coding strategy, which ensures automatic switching between GPU and CPU, achieving high cross-platform portability and flexibility. For the PlantVillage dataset, the allocated GPU memory sufficiently meets the high computational and memory demands of large-scale image augmentation and batch training. Furthermore, by integrating PyTorch’s parallel data-loading mechanisms, we achieved asynchronous concurrency during training and preprocessing, effectively improving I/O throughput. Overall, this hardware environment provides a robust computational foundation for the proposed SDA-CAH framework, enabling the coordinated optimization of dual data augmentation and class-aware sampling mechanisms under stable and efficient conditions. Table 4 summarizes the software and hardware configuration of the SDA-CAH framework, demonstrating its efficiency and portability.

- To optimize model performance, all hyperparameters were meticulously fine-tuned through controlled experimentation. To ensure a fair and unbiased comparison while emphasizing the effectiveness of the proposed SDA-CAH framework, identical hyperparameter configurations were employed for both SDA-CAH + EfficientNet-B0 and the baseline EfficientNet-B0 model. The batch size was set to 64 to balance computational efficiency and gradient stability, and training was conducted for 20 epochs with an early-stopping mechanism (patience = 5) to prevent overfitting. The MixUp coefficient (α = 0.1) dynamically adjusted the sample-mixing ratio, enhancing inter-class feature discrimination and improving robustness to data variability. These settings were integrated with the AdamW optimizer (learning rate = 0.0005, weight decay = 1 × 10⁻³), gradient clipping (max_norm = 1.0), and a ReduceLROnPlateau scheduler (decay factor = 0.4, patience = 2) to maintain stable optimization dynamics and promote efficient convergence. Table 5 summarizes the complete hyperparameter configuration, highlighting the methodological rigor of the training strategy and its appropriateness for plant disease recognition tasks.
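How early stopping and plateau-based learning-rate decay interact can be seen by replaying a sequence of validation losses, as in the simplified sketch below. The `run_schedule` function is hypothetical: it uses the stated settings (learning rate 0.0005, decay factor 0.4, scheduler patience 2, early-stopping patience 5) but ignores the improvement threshold and cooldown options of PyTorch's `ReduceLROnPlateau`, and the exact stopping convention is an assumption.

```python
def run_schedule(val_losses, lr=5e-4, factor=0.4, lr_patience=2, stop_patience=5):
    """Replay validation losses; return (epochs_run, final_lr)."""
    best = float("inf")
    since_best = 0   # epochs since the best loss, used for early stopping
    since_decay = 0  # non-improving epochs since the last learning-rate decay
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            since_best = 0
            since_decay = 0
        else:
            since_best += 1
            since_decay += 1
            # Decay once the plateau outlasts the scheduler patience.
            if since_decay > lr_patience:
                lr *= factor
                since_decay = 0
        # Stop after stop_patience consecutive epochs without improvement.
        if since_best >= stop_patience:
            return epoch, lr
    return len(val_losses), lr


# Toy loss curve that plateaus after the second epoch.
epochs_run, final_lr = run_schedule([1.0, 0.8, 0.9, 0.9, 0.9, 0.9, 0.9, 0.7])
```

In this toy curve the learning rate is decayed once, at the third stagnant epoch, and training halts at epoch 7 before the later improvement is ever seen, which illustrates the trade-off that the patience values control.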

3.3.2. Comparative Experiment

4. Results and Discussion

4.1. Quantitative Results

- On the PlantVillage test set, the SDA-CAH framework achieved an accuracy of 99.95%, a weighted F1-score of 99.89%, and a weighted recall of 99.89%, markedly surpassing state-of-the-art methods across multiple key performance indicators. Figure 6 illustrates the training and validation loss and accuracy curves, confirming the model’s rapid and stable convergence. To further validate its effectiveness, we benchmarked SDA-CAH against three mainstream baselines: Xception, standard EfficientNet-B0, and MobileNetV2. Specifically, Xception achieved 99.42% accuracy, 99.39% weighted F1-score, and 99.41% weighted recall; standard EfficientNet-B0 attained 99.35%, 99.32%, and 99.33% on the same metrics; and MobileNetV2 recorded 95.77%, 94.52%, and 94.77%, respectively. In comparison, SDA-CAH, built upon the EfficientNet-B0 backbone, delivered gains of approximately 0.53, 0.50, and 0.48 percentage points over Xception; 0.60, 0.57, and 0.56 percentage points over standard EfficientNet-B0; and 4.18, 5.37, and 5.12 percentage points over MobileNetV2, demonstrating consistently superior classification capability. These improvements stem primarily from the synergistic dual-augmentation strategy, which enhances adaptability to image diversity and disease-variant complexity, and the class-aware sampling mechanism, which effectively addresses learning challenges in rare categories (each comprising less than 2% of the data). Table 8 presents a detailed comparison of SDA-CAH and the three baselines on the PlantVillage test set, including not only core performance metrics but also two additional indicators, Inference Speed (ms) and Training Time, to comprehensively assess computational efficiency and scalability.
Beyond this, a systematic comparison with several representative state-of-the-art plant disease recognition methods from recent years is summarized in Table 9, which includes extended metrics such as Accuracy (%), F1 Score (%), Recall (%), Params (M), and Model Size (MB), enabling a more holistic evaluation of model accuracy, generalization, and computational cost. Although the proposed SDA-CAH framework achieved up to 99.95% accuracy on the PlantVillage test set, such near-perfect results must be interpreted with caution. The PlantVillage dataset consists of laboratory-acquired images with minimal background noise and highly visible lesions—conditions that inherently facilitate elevated performance. No cross-dataset or field validation was conducted in this study; thus, the reported metrics primarily reflect strong in-domain performance rather than real-world robustness. Future evaluations on field-acquired datasets under diverse environmental conditions are essential to rigorously assess cross-domain generalization and practical deployability. Nonetheless, within the controlled scope of this benchmark, these results not only affirm SDA-CAH’s robustness and precision in handling highly imbalanced data but also establish a new performance standard for plant disease recognition, underscoring its significant potential for scalable deployment in precision agriculture.

- To further substantiate the practical advantages of the proposed SDA-CAH framework beyond classification accuracy, we conducted a comprehensive efficiency and resource utilization analysis to assess computational complexity, model compactness, and inference latency relative to the baseline models. As summarized in Table 8 and Table 9, the SDA-CAH + EfficientNet-B0 framework preserves a comparable parameter count (5.33 M) and lightweight storage footprint (≈21 MB) to the standard EfficientNet-B0, while delivering markedly superior recognition accuracy (99.95% vs. 99.50%) and F1-score (99.89% vs. 99.45%). Despite integrating the synergistic dual-augmentation and class-aware hybrid sampling modules, the framework keeps training time close to that of the baseline while maintaining performance; any overhead is primarily attributable to the additional data transformation operations during training. Crucially, inference latency remains nearly identical at ≈70 ms per image when evaluated on an NVIDIA T4 GPU, confirming that the training-time augmentation and sampling modules do not compromise deployment efficiency. This well-balanced trade-off between accuracy, computational efficiency, and energy economy highlights the SDA-CAH framework’s strong potential for scalable deployment in resource-constrained or energy-sensitive environments, such as mobile-based diagnostic platforms, edge computing devices, or embedded agricultural monitoring systems. By achieving state-of-the-art precision with minimal hardware overhead, the proposed method offers a cost-effective and environmentally sustainable AI solution for next-generation precision agriculture applications.
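As a quick consistency check, the margins between SDA-CAH and each baseline can be recomputed as percentage-point differences from the test-set scores quoted in Section 4.1. The dictionary below simply restates those reported values; the `gains_over` helper is a hypothetical name introduced for this example.

```python
# Scores as reported in the text for the PlantVillage test set (percent):
# (accuracy, weighted F1-score, weighted recall).
REPORTED = {
    "SDA-CAH": (99.95, 99.89, 99.89),
    "Xception": (99.42, 99.39, 99.41),
    "EfficientNet-B0": (99.35, 99.32, 99.33),
    "MobileNetV2": (95.77, 94.52, 94.77),
}


def gains_over(baseline, reference="SDA-CAH"):
    """Percentage-point gain of the reference model on each metric."""
    return tuple(
        round(r - b, 2) for r, b in zip(REPORTED[reference], REPORTED[baseline])
    )


for name in ("Xception", "EfficientNet-B0", "MobileNetV2"):
    print(name, gains_over(name))
```

Expressing the margins in percentage points rather than percent avoids ambiguity between absolute and relative improvement when the baselines already exceed 99%.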

4.2. Qualitative Results

4.3. System Demonstration and Case Studies

4.3.1. User Operation Workflow

4.3.2. Interface Demonstration

5. Conclusions

5.1. Summary of Contributions

5.2. Limitations and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ristaino, J.B.; Anderson, P.K.; Bebber, D.P.; Brauman, K.A.; Cunniffe, N.J.; Fedoroff, N.V.; Wei, Q. The persistent threat of emerging plant disease pandemics to global food security. Proc. Natl. Acad. Sci. USA 2021, 118, e2022239118. [Google Scholar] [CrossRef]

- Singh, B.K.; Delgado-Baquerizo, M.; Egidi, E.; Guirado, E.; Leach, J.E.; Liu, H.; Trivedi, P. Climate change impacts on plant pathogens, food security and paths forward. Nat. Rev. Microbiol. 2023, 21, 640–656. [Google Scholar] [CrossRef]

- Upadhyay, A.; Chandel, N.S.; Singh, K.P.; Chakraborty, S.K.; Nandede, B.M.; Kumar, M.; Elbeltagi, A. Deep learning and computer vision in plant disease detection: A comprehensive review of techniques, models, and trends in precision agriculture. Artif. Intell. Rev. 2025, 58, 92. [Google Scholar] [CrossRef]

- Bagga, M.; Goyal, S. Image-based detection and classification of plant diseases using deep learning: State-of-the-art review. Urban Agric. Reg. Food Syst. 2024, 9, e20053. [Google Scholar] [CrossRef]

- Islam, M.; Azad, A.K.M.; Arman, S.E.; Alyami, S.A.; Hasan, M.M. PlantCareNet: An advanced system to recognize plant diseases with dual-mode recommendations for prevention. Plant Methods 2025, 21, 52. [Google Scholar] [CrossRef] [PubMed]

- Hu, C.; Sapkota, B.B.; Thomasson, J.A.; Bagavathiannan, M.V. Influence of image quality and light consistency on the performance of convolutional neural networks for weed mapping. Remote Sens. 2021, 13, 2140. [Google Scholar] [CrossRef]

- Amara, J.; König-Ries, B.; Samuel, S. Explainability of Deep Learning-Based Plant Disease Classifiers Through Automated Concept Identification. arXiv 2024, arXiv:2412.07408. [Google Scholar] [CrossRef]

- Hughes, D.P.; Salathé, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics through machine learning and crowdsourcing. arXiv 2015, arXiv:1511.08060. [Google Scholar]

- Ngugi, H.N.; Akinyelu, A.A.; Ezugwu, A.E. Machine Learning and Deep Learning for Crop Disease Diagnosis: Performance Analysis and Review. Agronomy 2024, 14, 3001. [Google Scholar] [CrossRef]

- Cap, Q.H.; Uga, H.; Kagiwada, S.; Iyatomi, H. Leafgan: An effective data augmentation method for practical plant disease diagnosis. IEEE Trans. Autom. Sci. Eng. 2020, 19, 1258–1267. [Google Scholar] [CrossRef]

- Gopalan, K.; Srinivasan, S.; Singh, M.; Mathivanan, S.K.; Moorthy, U. Corn leaf disease diagnosis: Enhancing accuracy with resnet152 and grad-cam for explainable AI. BMC Plant Biol. 2025, 25, 440. [Google Scholar] [CrossRef]

- Khare, O.; Mane, S.; Kulkarni, H.; Barve, N. LeafNST: An improved data augmentation method for classification of plant disease using object-based neural style transfer. Discov. Artif. Intell. 2024, 4, 50. [Google Scholar] [CrossRef]

- Alhwaiti, Y.; Ishaq, M.; Siddiqi, M.H.; Waqas, M.; Alruwaili, M.; Alanazi, S.; Khan, F. Early Detection of Late Blight Tomato Disease using Histogram Oriented Gradient based Support Vector Machine. arXiv 2023, arXiv:2306.08326. [Google Scholar] [CrossRef]

- Yao, J.; Tran, S.N.; Sawyer, S.; Garg, S. Machine learning for leaf disease classification: Data, techniques and applications. Artif. Intell. Rev. 2023, 56 (Suppl. S3), 3571–3616. [Google Scholar] [CrossRef]

- Demilie, W.B. Plant disease detection and classification techniques: A comparative study of the performances. J. Big Data 2024, 11, 5. [Google Scholar] [CrossRef]

- Pai, P.; Amutha, S.; Basthikodi, M.; Ahamed Shafeeq, B.M.; Chaitra, K.M.; Gurpur, A.P. A twin CNN-based framework for optimized rice leaf disease classification with feature fusion. J. Big Data 2025, 12, 89. [Google Scholar] [CrossRef]

- Rodríguez-Lira, D.C.; Córdova-Esparza, D.M.; Álvarez-Alvarado, J.M.; Terven, J.; Romero-González, J.A.; Rodríguez-Reséndiz, J. Trends in Machine and Deep Learning Techniques for Plant Disease Identification: A Systematic Review. Agriculture 2024, 14, 2188. [Google Scholar] [CrossRef]

- Rizwan, M.; Bibi, S.; Haq, S.U.; Asif, M.; Jan, T.; Zafar, M.H. Automatic plant disease detection using computationally efficient convolutional neural network. Eng. Rep. 2024, 6, e12944. [Google Scholar] [CrossRef]

- Gohil, M.K.; Bhattacharjee, A.; Rana, R.; Lal, K.; Biswas, S.K.; Tiwari, N.; Bhattacharya, B. A Hybrid Technique for Plant Disease Identification and Localisation in Real-time. arXiv 2024, arXiv:2412.19682. [Google Scholar] [CrossRef]

- Kuswidiyanto, L.W.; Noh, H.H.; Han, X. Plant disease diagnosis using deep learning based on aerial hyperspectral images: A review. Remote Sens. 2022, 14, 6031. [Google Scholar] [CrossRef]

- Ngugi, H.N.; Ezugwu, A.E.; Akinyelu, A.A.; Abualigah, L. Revolutionizing crop disease detection with computational deep learning: A comprehensive review. Environ. Monit. Assess. 2024, 196, 302. [Google Scholar] [CrossRef]

- Hong, P.; Luo, X.; Bao, L. Crop disease diagnosis and prediction using two-stream hybrid convolutional neural networks. Crop Prot. 2024, 184, 106867. [Google Scholar] [CrossRef]

- Kaya, Y.; Gürsoy, E. A novel multi-head CNN design to identify plant diseases using the fusion of RGB images. Ecol. Inform. 2023, 75, 101998. [Google Scholar] [CrossRef]

- Sun, X.; Li, G.; Qu, P.; Xie, X.; Pan, X.; Zhang, W. Research on plant disease identification based on CNN. Cogn. Robot. 2022, 2, 155–163. [Google Scholar] [CrossRef]

- Shoaib, M.; Shah, B.; Ei-Sappagh, S.; Ali, A.; Ullah, A.; Alenezi, F.; Ali, F. An advanced deep learning models-based plant disease detection: A review of recent research. Front. Plant Sci. 2023, 14, 1158933. [Google Scholar]

- Ray, S.K.; Hossain, M.A.; Islam, N.; Hasan, M.A.F.M.R. Enhanced plant health monitoring with dual head CNN for leaf classification and disease identification. J. Agric. Food Res. 2025, 21, 101930. [Google Scholar] [CrossRef]

- González-Briones, A.; Florez, S.L.; Chamoso, P.; Castillo-Ossa, L.F.; Corchado, E.S. Enhancing Plant Disease Detection: Incorporating Advanced CNN Architectures for Better Accuracy and Interpretability. Int. J. Comput. Intell. Syst. 2025, 18, 120. [Google Scholar] [CrossRef]

- Saddami, K.; Nurdin, Y.; Zahramita, M.; Safiruz, M.S. Advancing Green AI: Efficient and Accurate Lightweight CNNs for Rice Leaf Disease Identification. arXiv 2024, arXiv:2408.01752. [Google Scholar] [CrossRef]

- Mazumder, M.K.A.; Kabir, M.M.; Rahman, A.; Abdullah-Al-Jubair, M.; Mridha, M.F. DenseNet201Plus: Cost-effective transfer-learning architecture for rapid leaf disease identification with attention mechanisms. Heliyon 2024, 10, e35625. [Google Scholar] [CrossRef]

- Alghamdi, H.; Turki, T. PDD-Net: Plant disease diagnoses using multilevel and multiscale convolutional neural network features. Agriculture 2023, 13, 1072. [Google Scholar] [CrossRef]

- Song, Y.; Yang, C. DS_FusionNet: Dynamic Dual-Stream Fusion with Bidirectional Knowledge Distillation for Plant Disease Recognition. arXiv 2025, arXiv:2504.20948. [Google Scholar] [CrossRef]

- Nieradzik, L.; Stephani, H.; Sieburg-Rockel, J.; Helmling, S.; Olbrich, A.; Keuper, J. Challenging the black box: A comprehensive evaluation of attribution maps of CNN applications in agriculture and forestry. arXiv 2024, arXiv:2402.11670. [Google Scholar] [CrossRef]

- Antwi, K.; Bennin, K.E.; Pobi, D.K.; Tekinerdogan, B. On the application of image augmentation for plant disease detection: A systematic literature review. Smart Agric. Technol. 2024, 9, 100590. [Google Scholar] [CrossRef]

- Xu, M.; Kim, H.; Yang, J.; Fuentes, A.; Meng, Y.; Yoon, S.; Park, D.S. Embracing limited and imperfect training datasets: Opportunities and challenges in plant disease recognition using deep learning. Front. Plant Sci. 2023, 14, 1225409. [Google Scholar] [CrossRef] [PubMed]

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412. [Google Scholar]

- Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. CutMix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6023–6032.

- Lee, H.; Park, Y.S.; Yang, S.; Lee, H.; Park, T.J.; Yeo, D. A Deep Learning-Based Crop Disease Diagnosis Method Using Multimodal Mixup Augmentation. Appl. Sci. 2024, 14, 4322.

- Li, L.H.; Tanone, R. Improving robustness using mixup and cutmix augmentation for corn leaf diseases classification based on convmixer architecture. J. ICT Res. Appl. 2023, 17, 167–180.

- Harris, E.; Marcu, A.; Painter, M.; Niranjan, M.; Prügel-Bennett, A.; Hare, J. FMix: Enhancing mixed sample data augmentation. arXiv 2020, arXiv:2002.12047.

- Ojo, M.O.; Zahid, A. Improving deep learning classifiers performance via preprocessing and class imbalance approaches in a plant disease detection pipeline. Agronomy 2023, 13, 887.

- Talab, H.K.; Mohammadzamani, D.; Parashkoohi, M.G. Investigating the accuracy of classification in unbalanced data in order to diagnose two common potato leaf diseases (early blight and late blight) using image processing and machine learning. Discov. Appl. Sci. 2024, 6, 286.

- Sinha, S.; Ohashi, H.; Nakamura, K. Class-wise difficulty-balanced loss for solving class-imbalance. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

- Liu, Y.; Yang, G.; Qiao, S.; Liu, M.; Qu, L.; Han, N.; Peng, Y. Imbalanced data classification: Using transfer learning and active sampling. Eng. Appl. Artif. Intell. 2023, 117, 105621.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Ennab, M.; Mcheick, H. Advancing AI Interpretability in Medical Imaging: A Comparative Analysis of Pixel-Level Interpretability and Grad-CAM Models. Mach. Learn. Knowl. Extr. 2025, 7, 12.

- Hulsman, G.W.; van der Heijden, J.A.; da Silva Torres, R. Automated Detection and Localization of Potato Blackleg Using a Convolutional Neural Network and Activation Maps. Potato Res. 2025, 68, 3339–3356.

- Karim, M.J.; Goni, M.O.F.; Nahiduzzaman, M.; Ahsan, M.; Haider, J.; Kowalski, M. Enhancing agriculture through real-time grape leaf disease classification via an edge device with a lightweight CNN architecture and Grad-CAM. Sci. Rep. 2024, 14, 16022.

- Alhammad, S.M.; Khafaga, D.S.; El-Hady, W.M.; Samy, F.M.; Hosny, K.M. Deep learning and explainable AI for classification of potato leaf diseases. Front. Artif. Intell. 2025, 7, 1449329.

- Zhu, H.; Huang, X.; Gao, H.; Jiang, M.; Que, H.; Mu, L. A Wireless Collaborated Inference Acceleration Framework for Plant Disease Recognition. In Proceedings of the International Conference on Intelligent Computing, Ningbo, China, 26–29 July 2025; Springer: Singapore, 2025; pp. 331–341.

- Kondaveeti, H.K.; Simhadri, C.G. Evaluation of deep learning models using explainable AI with qualitative and quantitative analysis for rice leaf disease detection. Sci. Rep. 2025, 15, 31850.

- Hu, W.J.; Fan, J.; Du, Y.X.; Li, B.S.; Xiong, N.; Bekkering, E. MDFC–ResNet: An agricultural IoT system to accurately recognize crop diseases. IEEE Access 2020, 8, 115287–115298.

- Anandhan, K.; Singh, A.S. Detection of paddy crops diseases and early diagnosis using faster regional convolutional neural networks. In Proceedings of the 2021 International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 4–5 March 2021; IEEE: New York City, NY, USA, 2021; pp. 898–902.

- Amin, H.; Darwish, A.; Hassanien, A.E.; Soliman, M. End-to-end deep learning model for corn leaf disease classification. IEEE Access 2022, 10, 31103–31115.

- Ravi, V.; Acharya, V.; Pham, T.D. Attention deep learning-based large-scale learning classifier for Cassava leaf disease classification. Expert Syst. 2022, 39, e12862.

- Widiyanto, S.; Nugroho, D.P.; Daryanto, A.; Yunus, M.; Wardani, D.T. Monitoring the growth of tomatoes in real time with deep learning-based image segmentation. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 353–358.

- Saleem, M.H.; Khanchi, S.; Potgieter, J.; Arif, K.M. Image-based plant disease identification by deep learning meta-architectures. Plants 2020, 9, 1451.

- Wei, S.J.; Al Riza, D.F.; Nugroho, H. Comparative study on the performance of deep learning implementation in the edge computing: Case study on the plant leaf disease identification. J. Agric. Food Res. 2022, 10, 100389.

- Kaur, P.; Harnal, S.; Tiwari, R.; Upadhyay, S.; Bhatia, S.; Mashat, A.; Alabdali, A.M. Recognition of leaf disease using hybrid convolutional neural network by applying feature reduction. Sensors 2022, 22, 575.

- Hukkeri, G.S.; Soundarya, B.C.; Gururaj, H.L.; Ravi, V. Classification of various plant leaf disease using pretrained convolutional neural network on ImageNet. Open Agric. J. 2024, 18, e18743315305194.

| Category Name | Number of Images | Proportion (%) |
|---|---|---|
| Apple___Apple_scab | 630 | 1.16 |
| Apple___Black_rot | 621 | 1.14 |
| Apple___Cedar_apple_rust | 275 | 0.51 |
| Apple___healthy | 1645 | 3.03 |
| Blueberry___healthy | 1502 | 2.77 |
| Cherry_(including_sour)___Powdery_mildew | 1052 | 1.94 |
| Cherry_(including_sour)___healthy | 854 | 1.57 |
| Corn_(maize)___Cercospora_leaf_spot Gray_leaf_spot | 513 | 0.94 |
| Corn_(maize)___Common_rust_ | 1192 | 2.20 |
| Corn_(maize)___Northern_Leaf_Blight | 985 | 1.81 |
| Corn_(maize)___healthy | 1162 | 2.14 |
| Grape___Black_rot | 1180 | 2.17 |
| Grape___Esca_(Black_Measles) | 1383 | 2.55 |
| Grape___Leaf_blight_(Isariopsis_Leaf_Spot) | 1076 | 1.98 |
| Grape___healthy | 423 | 0.78 |
| Orange___Haunglongbing_(Citrus_greening) | 5507 | 10.14 |
| Peach___Bacterial_spot | 2297 | 4.23 |
| Peach___healthy | 360 | 0.66 |
| Pepper,_bell___Bacterial_spot | 997 | 1.84 |
| Pepper,_bell___healthy | 1478 | 2.72 |
| Potato___Early_blight | 1000 | 1.84 |
| Potato___Late_blight | 1000 | 1.84 |
| Potato___healthy | 152 | 0.28 |
| Raspberry___healthy | 371 | 0.68 |
| Soybean___healthy | 5090 | 9.37 |
| Squash___Powdery_mildew | 1835 | 3.38 |
| Strawberry___Leaf_scorch | 1109 | 2.04 |
| Strawberry___healthy | 456 | 0.84 |
| Tomato___Bacterial_spot | 2127 | 3.92 |
| Tomato___Early_blight | 1000 | 1.84 |
| Tomato___Late_blight | 1909 | 3.52 |
| Tomato___Leaf_Mold | 952 | 1.75 |
| Tomato___Septoria_leaf_spot | 1771 | 3.26 |
| Tomato___Spider_mites Two-spotted_spider_mite | 1676 | 3.09 |
| Tomato___Target_Spot | 1404 | 2.59 |
| Tomato___Tomato_Yellow_Leaf_Curl_Virus | 5357 | 9.86 |
| Tomato___Tomato_mosaic_virus | 373 | 0.69 |
| Tomato___healthy | 1591 | 2.93 |
| Total | 54,305 | 100 |
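
The class counts above span a wide range, which is the situation the class-aware weighted sampling strategy is meant to address. The following is a minimal sketch of one common weighting scheme, inverse class frequency, using a handful of counts from the table. The exact weighting used by the authors is not given in this section, so the formula, the helper name `draw_classes`, and the seed are assumptions made for illustration only. In PyTorch, per-sample weights of this kind are typically passed to `torch.utils.data.WeightedRandomSampler`.

```python
import random
from collections import Counter

# Image counts for a few classes, copied from the table above
# (the largest and smallest classes are both included).
class_counts = {
    "Orange___Haunglongbing_(Citrus_greening)": 5507,
    "Soybean___healthy": 5090,
    "Apple___Cedar_apple_rust": 275,
    "Potato___healthy": 152,
}

# Assumption: per-sample weight = 1 / (size of the sample's class), so every
# class carries the same total probability mass under weighted sampling.
sample_weight = {name: 1.0 / n for name, n in class_counts.items()}

# Ratio between the largest and the smallest class in the table.
imbalance_ratio = max(class_counts.values()) / min(class_counts.values())


def draw_classes(num_draws, seed=0):
    """Draw class labels with replacement according to the per-sample weights."""
    rng = random.Random(seed)
    names = list(class_counts)
    # Total mass of a class = class size * per-sample weight (1.0 for each class here).
    class_mass = [class_counts[n] * sample_weight[n] for n in names]
    return Counter(rng.choices(names, weights=class_mass, k=num_draws))


drawn = draw_classes(4000)
print(f"imbalance ratio: {imbalance_ratio:.2f}")
for name, count in drawn.items():
    print(f"{name}: {count} draws")
```

With these weights, a class of 152 images is drawn about as often as one of 5507 images, at the cost of repeating minority-class images more often within an epoch.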

| Statistical Indicators | Height (Pixels) | Width (Pixels) |
|---|---|---|
| Average value | 224.00 | 224.00 |
| Minimum | 200.00 | 200.00 |
| Maximum | 256.00 | 256.00 |

| Dataset | Sample Size | Proportion (%) | Classes |
|---|---|---|---|
| Training set | 38,066 | 70 | 38 |
| Validation set | 8150 | 15 | 38 |
| Test set | 8150 | 15 | 38 |
| Total | 54,366 | 100 | 38 |
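
A 70/15/15 partition in which every subset contains all 38 classes can be produced by splitting each class separately. The sketch below shows one way to do that with the standard library. Whether the authors stratified by class, and which seed they used, is not stated in this section, so the function `stratified_split`, its defaults, and the toy labels are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=42):
    """Split sample indices into train/val/test while keeping class ratios."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(len(indices) * fractions[0])
        n_val = round(len(indices) * fractions[1])
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Toy labels: three hypothetical classes with 100, 40 and 20 samples.
labels = ["a"] * 100 + ["b"] * 40 + ["c"] * 20
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Because rounding is applied per class, subset sizes on a real dataset can differ by a few samples from an exact 70/15/15 split of the total.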

| Category | Item | Configuration Details |
|---|---|---|
| Hardware | GPU | NVIDIA T4 (2 × 16 GB) |
| | CPU | Multi-core processors |
| | Storage | High-speed SSD |
| | Dataset size | 54,305 RGB images |
| Software | Python | 3.10.12 |
| | PyTorch | 2.5.0 |
| | torchvision | 0.20.0 |

| Hyperparameter Categories | Hyperparameters | Setting Value |
|---|---|---|
| Training settings | Batch size | 64 |
| | Epochs | 20 |
| | Early Stop Patience Value | 5 |
| Optimizer Settings | Optimizer | AdamW |
| | Learning Rate | 0.0005 |
| | Weight decay | 1 × 10⁻³ |
| | Gradient Clipping | max_norm = 1.0 |
| Learning rate scheduling | Scheduler | ReduceLROnPlateau |
| | Attenuation Factor | 0.4 |
| | Patience | 2 |
| Loss Function | Label smoothing | 0.1 |
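
To make the interaction between the scheduler patience (2), the attenuation factor (0.4), and the early-stop patience (5) concrete, the following sketch simulates both rules on a made-up validation-loss curve. It is a simplified stand-in written in plain Python, not PyTorch's `ReduceLROnPlateau`, which additionally supports threshold, cooldown, and minimum learning rate options. The function name `simulate_schedule`, the loss values, and the choice to monitor validation loss are assumptions.

```python
def simulate_schedule(val_losses, lr=5e-4, factor=0.4, lr_patience=2, stop_patience=5):
    """Return [(epoch, lr)] under simplified plateau decay and early stopping."""
    best = float("inf")
    lr_bad = 0    # epochs without improvement since the last LR change
    stop_bad = 0  # consecutive epochs without improvement
    history = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, lr_bad, stop_bad = loss, 0, 0
        else:
            lr_bad += 1
            stop_bad += 1
        # Decay once the number of stalled epochs exceeds the scheduler patience.
        if lr_bad > lr_patience:
            lr *= factor
            lr_bad = 0
        history.append((epoch, lr))
        # Assumed early-stopping rule: stop after `stop_patience` stalled epochs.
        if stop_bad >= stop_patience:
            break
    return history


# Hypothetical validation losses: improvement for 3 epochs, then a plateau.
losses = [0.90, 0.50, 0.40, 0.41, 0.42, 0.43, 0.44, 0.45, 0.46, 0.47]
history = simulate_schedule(losses)
for epoch, lr in history:
    print(f"epoch {epoch}: lr = {lr:.6f}")
```

On this curve the learning rate drops from 0.0005 to 0.0002 at epoch 6, the third stalled epoch, and training stops at epoch 8 after five epochs without improvement.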

| Hyperparameter Categories | Hyperparameters | Setting Value |
|---|---|---|
| Training settings | Batch size | 32 |
| | Epochs | 20 |
| | Early Stop Patience Value | - |
| Optimizer Settings | Optimizer | Adamax |
| | Learning Rate | 0.001 |
| | Weight decay | - |
| | Gradient Clipping | - |
| Learning rate scheduling | Scheduler | Fixed learning rate |
| | Attenuation Factor | - |
| | Patience | - |
| Loss Function | Categorical Cross-Entropy | - |

| Hyperparameter Categories | Hyperparameters | Setting Value |
|---|---|---|
| Training settings | Batch size | 32 |
| | Epochs | 20 |
| | Early Stop Patience Value | - |
| Optimizer Settings | Optimizer | Adam |
| | Learning Rate | 0.001 |
| | Weight decay | - |
| | Gradient Clipping | - |
| Learning rate scheduling | Scheduler | Fixed learning rate |
| | Attenuation Factor | - |
| | Patience | - |
| Loss Function | Categorical Cross-Entropy | - |

| Model | SDA-CAH | Accuracy (%) | F1 Score (%) | Recall (%) | Inference Speed (ms) | Training Time |
|---|---|---|---|---|---|---|
| Xception | × | 99.42 | 99.39 | 99.41 | 178 | 2 h 34 m 56 s |
| EfficientNet-B0 | × | 99.35 | 99.32 | 99.33 | 70 | 1 h 21 m 19 s |
| MobileNetV2 | × | 95.77 | 94.52 | 94.77 | 81 | 1 h 50 m 35 s |
| EfficientNet-B0 | √ | 99.95 | 99.89 | 99.89 | 70 | 1 h 19 m 16 s |
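
The accuracy, recall, and F1 columns above can all be derived from a confusion matrix. The sketch below computes them with macro averaging, where each class counts equally regardless of its size. This section does not say whether the reported scores are macro- or weighted-averaged, so the averaging mode, the function name `macro_metrics`, and the toy matrix are assumptions.

```python
def macro_metrics(confusion):
    """Return (accuracy, macro recall, macro F1) from a square confusion matrix.

    confusion[i][j] = number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    recalls, f1s = [], []
    for i in range(n):
        tp = confusion[i][i]
        fn = sum(confusion[i]) - tp
        fp = sum(confusion[j][i] for j in range(n)) - tp
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        denom = precision + recall
        f1s.append(2 * precision * recall / denom if denom else 0.0)
        recalls.append(recall)
    return correct / total, sum(recalls) / n, sum(f1s) / n


# Toy 3-class example with one small class (10 samples) that is harder to predict.
toy = [
    [50, 0, 0],
    [1, 48, 1],
    [0, 2, 8],
]
accuracy, macro_recall, macro_f1 = macro_metrics(toy)
print(f"accuracy={accuracy:.4f} macro_recall={macro_recall:.4f} macro_f1={macro_f1:.4f}")
```

In the toy matrix the small class lowers macro recall below overall accuracy, which is why macro-averaged scores are informative on an imbalanced dataset.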

| References | Dataset | Classifier | Accuracy (%) | F1 Score (%) | Recall (%) | Params (M) | Size (MB) |
|---|---|---|---|---|---|---|---|
| [22] | PlantVillage | Enhanced VGG-16 | 98.89 | - | - | 138.36 | 528.00 |
| [23] | PlantVillage | DenseNet | 98.17 | 98.12 | 98.17 | 7.98 | 32.00 |
| [24] | New Plant Diseases Dataset | FL-EfficientNet | 99.72 | - | 99.70 | - | - |
| [26] | PlantVillage | DH-CNN | 99.26 | - | - | 87.86 | 335.13 |
| [27] | PlantVillage | EfficientNetB0-Attn | 99.39 | - | - | 5.33 | 21.00 |
| [51] | Crop Diseases | MDFC-ResNet | 85.22 | 82.33 | 82.24 | 25.56 | 98.00 |
| [52] | Paddy Crops Diseases | Mask R-CNN | 95.83 | - | - | 45.00 | 175.00 |
| [53] | Corn plant diseases | CNN | 98.56 | - | - | 16.20 | 64.80 |
| [54] | Cassava leaf disease | EfficientNet | 87.08 | - | - | - | - |
| [55] | Tomato leaf disease | CNN | 96.60 | - | - | 44.55 | 170.00 |
| [56] | PlantVillage | SSD | 73.07 | - | - | 11.20 | 45.00 |
| [57] | PlantVillage | DenseNet121 | 96.40 | 95.70 | 95.90 | 7.98 | 32.00 |
| [58] | PlantVillage | EfficientNet B7 | 98.70 | 99.00 | 97.00 | 66.66 | 256.00 |
| [59] | PlantVillage | EfficientNet | 97.50 | 93.00 | 93.00 | - | - |
| Comp-1 | PlantVillage | Xception | 99.42 | 99.39 | 99.41 | 22.91 | 88.00 |
| Comp-2 | PlantVillage | EfficientNet-B0 | 99.35 | 99.32 | 99.33 | 5.33 | 21.00 |
| Comp-3 | PlantVillage | MobileNetV2 | 95.77 | 94.52 | 94.77 | 3.47 | 14.00 |
| Ours | PlantVillage | SDA-CAH+EfficientNet-B0 | 99.95 | 99.89 | 99.89 | 5.33 | 21.00 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, J.; Wang, R.; Zhao, L. Deep Learning-Driven Plant Pathology Assistant: Enabling Visual Diagnosis with AI-Powered Focus and Remediation Recommendations for Precision Agriculture. AgriEngineering 2025, 7, 386. https://doi.org/10.3390/agriengineering7110386
