Abstract

Plant disease recognition is a critical technology for ensuring food security and advancing precision agriculture. However, challenges such as class imbalance, heterogeneous image quality, and limited model interpretability remain unresolved. In this study, we propose a Synergistic Dual-Augmentation and Class-Aware Hybrid (SDA-CAH) model designed to achieve robust and interpretable recognition of plant diseases. Our approach introduces two innovative augmentation strategies: (1) an optimized MixUp method that dynamically integrates class-specific features to enhance the representation of minority classes; and (2) a customized augmentation pipeline that combines geometric transformations with photometric perturbations to strengthen the model’s resilience against image variability. To address class imbalance, we further design a class-aware hybrid sampling mechanism that incorporates weighted random sampling, effectively improving the learning of underrepresented categories and optimizing feature distribution. Additionally, a Grad-CAM–based visualization module is integrated to explicitly localize lesion regions, thereby enhancing the transparency and trustworthiness of the predictions. We evaluate SDA-CAH on the PlantVillage dataset using a pretrained EfficientNet-B0 as the backbone network. Systematic experiments demonstrate that our model achieves 99.95% accuracy, 99.89% F1-score, and 99.89% recall, outperforming several strong baselines, including an optimized Xception (99.42% accuracy, 99.39% F1-score, 99.41% recall), standard EfficientNet-B0 (99.35%, 99.32%, 99.33%), and MobileNetV2 (95.77%, 94.52%, 94.77%). For practical deployment, we developed a web-based diagnostic system that integrates automated recognition with treatment recommendations, offering user-friendly access for farmers. 
Overall, the experimental evaluations indicate that SDA-CAH outperforms the compared approaches in predictive accuracy while offering an interpretable and scalable approach to plant disease recognition for precision agriculture.

1. Introduction

Plant diseases constitute a significant threat to global food security, accounting for 20–40% of annual crop losses and undermining agricultural sustainability, particularly in developing regions [1,2]. Prompt and accurate disease detection is essential for precision agriculture, efficient resource allocation, and minimizing economic losses. Recent advances in deep learning have opened promising avenues for automated and high-performance plant disease recognition [3]. CNN-based models have demonstrated exceptional performance in large-scale plant disease image analysis [4]. Nevertheless, their real-world deployment is constrained by persistent challenges: class imbalance, which hampers recognition of rare diseases [5]; variability in image quality due to illumination changes, occlusion, and blur [6]; and the inherent “black-box” nature of deep models, limiting transparency and actionable interpretability for agricultural practitioners [7].

Class imbalance remains a critical challenge in plant disease recognition. In datasets such as PlantVillage [8], minority disease categories are frequently underrepresented, leading models to overfit dominant classes while underperforming on rare ones [9]. This imbalance undermines the reliability of predictions in real-world agricultural scenarios. Moreover, field-acquired images are subject to variability in illumination, viewing angles, occlusion, and resolution, imposing stringent demands on model robustness. Conventional data augmentation techniques (e.g., random cropping, flipping) offer limited mitigation, as they often fail to preserve the subtle lesion textures and intricate leaf structures essential for accurate diagnosis [10]. Compounding these issues is the inherent “black-box” nature of deep learning models, which restricts interpretability and diminishes user trust. Agricultural practitioners require transparent and explainable decision-support tools to enable rapid, targeted interventions, yet most existing approaches fall short, limiting their practical utility in precision agriculture [11].

Recent advances in explainable artificial intelligence (XAI) have opened new possibilities for enhancing model transparency. Techniques such as Gradient-weighted Class Activation Mapping (Grad-CAM) generate intuitive heatmaps that reveal the regions driving model decisions [12]. Yet, their application in plant disease recognition remains limited, with few studies integrating interpretability and high-performance modeling in agricultural contexts [11]. Moreover, existing methods addressing class imbalance and image variability are often isolated rather than unified, constraining robustness under complex field conditions [13]. Hence, developing a framework that jointly achieves accuracy, resilience, and interpretability holds significant academic and practical value for precision agriculture.

To tackle these challenges, this study introduces the Synergistic Dual-Augmentation and Class-Aware Hybrid (SDA-CAH) framework for robust, interpretable, and high-performance plant disease recognition. By unifying advanced augmentation, class-aware sampling, and explainable visualization, SDA-CAH systematically addresses the shortcomings of existing methods through four key innovations:

- We implement a dual-augmentation pipeline that combines an adaptive MixUp, which strengthens inter-class separability, with a plant-specific Albumentations strategy, which preserves subtle disease features and enhances robustness to variations in illumination, viewpoint, and occlusion.

- We implement a class-aware weighted sampling strategy to elevate the representation of minority classes, improving recognition of rare diseases and ensuring balanced feature learning across all categories.

- We integrate Grad-CAM to generate high-resolution class activation heatmaps, enabling precise localization of disease regions within plant images. This visualization enhances model transparency and provides agricultural practitioners with intuitive and actionable insights. For instance, farmers can rapidly identify infected leaf areas from the heatmaps and apply targeted interventions such as precise pesticide application or pruning, thereby improving disease management efficiency.

- To enhance practical applicability, we developed an integrated platform combining the SDA-CAH model with an intuitive front-end interface. The system enables real-time image upload, disease diagnosis, Grad-CAM visualization, and remediation guidance, thereby lowering technical barriers and empowering practitioners to leverage AI-driven decision support in precision agriculture.

The SDA-CAH framework leverages a pretrained EfficientNet-B0 with AdamW, label smoothing, gradient clipping, and adaptive learning rates for stable, robust training. On PlantVillage, it achieves 99.95% accuracy, 99.89% F1-score, and 99.89% recall. Grad-CAM visualizations enhance interpretability, while a Flask-based web platform enables real-time inference, visual explanations, and targeted remediation, supporting deployment in agriculture and related domains.

This paper is structured as follows: Section 2 reviews related work in plant disease recognition, highlighting strengths and limitations; Section 3 details the SDA-CAH methodology, including dual-augmentation, class-aware sampling, Grad-CAM visualization, and web application development; Section 4 describes the experimental setup, dataset, and evaluation metrics; Section 5 presents results and discusses performance advantages and practical implications; finally, Section 5.2 concludes and outlines future directions, including application to other datasets and mobile deployment.

2. Related Work

Plant disease recognition is pivotal for precision agriculture, food security, and sustainable development. Despite advances in machine learning and deep learning, challenges such as class imbalance, image variability, and limited model interpretability hinder deployment in complex agricultural settings. This section reviews recent progress in data augmentation, class-balancing, and interpretability, and introduces the SDA-CAH framework as a solution.

Traditional plant disease recognition methods use handcrafted features—such as color, texture, or morphological descriptors (Hu moments, SIFT, HOG)—with classical classifiers like SVM, Random Forest, or KNN [9]. Alhwaiti et al. (2023) combined HOG features with a linear SVM for tomato late blight, achieving high accuracy in early-stage detection [13]. Yao et al. reviewed traditional descriptors and classifiers, emphasizing their utility in low-quality images or resource-constrained settings like mobile diagnostic systems [14]. Demilie et al. found that Hu moments outperform HOG for distinct morphological differences, while KNN is more stable for small-sample categories [15]. However, due to the subtle and diverse nature of plant disease symptoms, manual feature engineering often misses key discriminative cues and scales poorly on large datasets. Pai et al. highlighted the limits of handcrafted features for large-scale disease analysis and proposed a dual-stream CNN with feature fusion to improve accuracy and efficiency [16]. Similarly, Rodríguez-Lira et al. demonstrated that traditional color-, texture-, and morphology-based methods underperform on large datasets, necessitating CNNs for robust feature extraction [17]. Rizwan et al. noted that manual feature engineering on datasets like PlantVillage is inefficient, especially under challenging conditions such as strong illumination or cluttered backgrounds [18]. These methods are highly sensitive to variable lighting, occlusion, and resolution. To improve real-time detection, Gohil et al. proposed a quadtree-based hybrid approach, enhancing accuracy and convergence on high-resolution images [19]. Kuswidiyanto et al. emphasized that deep learning combining spectral, spatial, and texture features outperforms traditional extraction in hyperspectral plant disease detection under challenging environmental conditions [20]. 
Conventional methods struggle with environmental variability, while deep CNNs enhance field adaptability via multi-scale feature extraction [21]. Bagga et al. and Upadhyay et al. similarly highlighted that CNNs and Vision Transformers outperform traditional approaches in feature extraction and robustness under complex field conditions [3,4].

The advent of deep learning has markedly advanced plant disease recognition, with Convolutional Neural Networks (CNNs) demonstrating exceptional performance. Hong et al. developed a two-stream hybrid CNN based on VGG16, incorporating Inception modules, batch normalization, and ELU activation, achieving 98.89% accuracy on the PlantVillage dataset [22]. Kaya et al. proposed a multi-head DenseNet architecture integrating RGB and segmented images, attaining 98.17% accuracy across 38 disease categories [23]. Sun et al.’s EfficientNet-based FL-EfficientNet reached 99.72% accuracy on a dataset encompassing 10 diseases across five crops [24], while Pai et al.’s dual-stream CNN with feature fusion achieved 96.8% accuracy on 6,763 rice leaf images [16]. Shoaib et al. reported that deep CNNs consistently achieve 99–99.2% accuracy in leaf disease classification [25]. Ray et al.’s dual-head CNN obtained 99.71% accuracy for leaf classification and 99.26% for disease-specific recognition across 13,576 images [26]. González-Briones et al. enhanced CNN performance by integrating pre-trained models with attention mechanisms, reaching 99.39% accuracy [27], whereas Saddami et al. demonstrated that EfficientNet-B0 achieved 99.8% in rice disease identification [28]. Similarly, Mazumder et al. employed transfer learning with attention mechanisms, achieving 99.0% overall accuracy and an average F1-score of 98.7% [29]. Despite these advances, three key challenges hinder real-world deployment. Class imbalance limits recognition of rare diseases, with some PlantVillage categories representing only 1–2% of samples [30]. Model generalization suffers under real-world variability such as illumination changes, occlusion, or blur [31]. Additionally, the “black-box” nature of deep models reduces interpretability, constraining practitioner trust and adoption [32]. These issues highlight the need for robust, high-performance, and interpretable plant disease recognition frameworks.

Data augmentation plays a pivotal role in enhancing the generalization of deep learning models for plant disease recognition, addressing variations in illumination, viewing angles, and occlusion [33]. Conventional techniques—such as flipping, cropping, scaling, and color jittering—expand the diversity of training data but often fail to capture subtle disease-specific textures and intricate leaf structures [34]. Advanced methods, including MixUp [35] and CutMix [36], improve inter-class discrimination and robustness to partial occlusion [37,38]; however, MixUp can obscure critical lesion details, and CutMix may compromise structural continuity essential for accurate diagnosis [39]. Existing approaches remain insufficiently tailored to plant imagery, lacking optimizations that preserve fine-grained symptomatic features or realistically emulate field conditions.

Class imbalance is a pervasive challenge in plant disease datasets such as PlantVillage, where underrepresented categories lead models to overfit dominant classes [9]. Conventional approaches—including oversampling, undersampling, and loss reweighting—have notable limitations: oversampling (e.g., SMOTE) can introduce noise or exacerbate overfitting [40]; undersampling reduces majority-class samples at the cost of discarding valuable data [41]; and weighted loss functions redirect attention to minority classes but require careful tuning and may underperform under severe imbalance [42]. More recent dynamic strategies, such as weighted random sampling, adjust class sampling probabilities during training [43]; however, these methods are generally task-agnostic and insufficiently optimized for the specific challenges of plant disease datasets.

The “black-box” nature of deep learning constrains its application in precision agriculture. Explainable AI (XAI) methods, such as Grad-CAM [44], produce class activation heatmaps that highlight salient regions and have demonstrated effectiveness in medical and industrial settings [45]. In plant disease recognition, Grad-CAM has been applied with EfficientNetV2 [46], lightweight CNNs on edge devices [47], and VGG16-based transfer learning models [48] to visualize lesions and bolster user trust. Nonetheless, these heatmaps often lack disease-specific precision, integration with state-of-the-art architectures is limited, and actionable, user-friendly interfaces remain insufficiently developed.

Advances in IoT, edge computing, and deep learning have enabled intelligent crop disease detection via an “edge–cloud–user” framework. Lightweight CNNs with pruning, quantization, and hardware acceleration achieve near-cloud accuracy and millisecond-level inference on edge devices [47]. Edge–cloud collaboration addresses bandwidth and privacy constraints by combining rapid local screening with high-precision cloud inference, delivering visualized diagnoses and tailored recommendations [49]. Grad-CAM integration enhances interpretability and transparency of diagnostic outputs [50].

Recent studies prioritize not only accuracy but also model efficiency, real-time performance, and interpretability to facilitate large-scale field deployment. The SDA-CAH framework delivers high accuracy while providing end-to-end diagnostic and treatment recommendations through a web-based platform, offering a scalable and interpretable solution for precision agriculture.

3. Materials and Methods

3.1. Dataset

To evaluate the SDA-CAH framework for plant disease recognition, we employed the publicly available PlantVillage dataset, whose large scale and multi-class composition make it a suitable benchmark for assessing model robustness and generalization capability. We analyzed the class distribution and image properties of the dataset to inform the implementation of class-aware hybrid sampling and synergistic dual augmentation. These analyses ground the contribution of the framework to precision agriculture and to the integration of intelligent recognition with sustainable crop management.

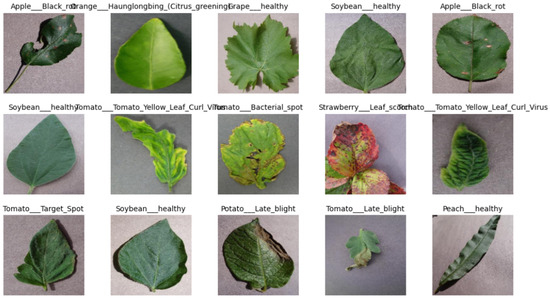

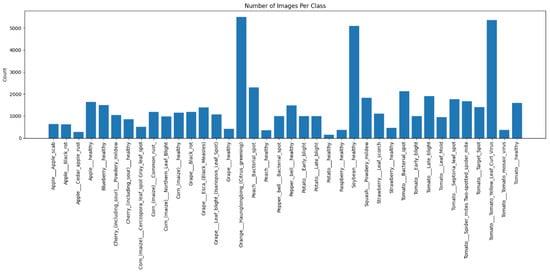

The PlantVillage dataset comprises 54,305 color images spanning 38 disease categories across 14 crop species (e.g., tomato, maize, grape) and multiple disease types (e.g., leaf spot, rust). Figure 1 presents representative sample images from the dataset. The distribution of samples is highly imbalanced: several rare categories each contain fewer than 2% of the total images, creating a challenging scenario for research on class imbalance. Using the Counter tool, we analyzed the category distribution, which revealed substantial discrepancies between dominant categories (e.g., tomato late blight) and rare categories (e.g., grape black rot). Figure 2 illustrates the distribution of the 38 disease classes, while Table 1 provides detailed statistical counts, underscoring the pronounced imbalance inherent in the dataset. This imbalance motivates the class-aware sampling mechanism of SDA-CAH. All images are stored in RGB format. Owing to its openness and diversity, the PlantVillage dataset has been widely adopted in agricultural image classification research, which supports the reproducibility and comparability of experimental results.

Figure 1. PlantVillage dataset example images.

Figure 2. Distribution of 38 disease categories.

Table 1. PlantVillage dataset category distribution.
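
The category-distribution analysis described above can be sketched as follows. This is a minimal illustration that assumes the "Counter tool" refers to Python's `collections.Counter`; the function names, the 2% threshold argument, and the toy labels are hypothetical and are not taken from the original implementation.

```python
from collections import Counter


def class_distribution(labels):
    """Return (class, count, share) tuples sorted from most to least frequent."""
    counts = Counter(labels)
    total = sum(counts.values())
    return [(name, n, n / total) for name, n in counts.most_common()]


def rare_classes(labels, threshold=0.02):
    """Return the classes whose share of the dataset falls below `threshold`."""
    return [name for name, _, share in class_distribution(labels) if share < threshold]


# Toy labels standing in for per-image class names (hypothetical values).
toy_labels = ["A"] * 90 + ["B"] * 9 + ["C"] * 1

for name, n, share in class_distribution(toy_labels):
    print(f"{name}: {n} images ({share:.1%})")
print("Rare classes:", rare_classes(toy_labels))
```

In practice, the label list would be built from the dataset's folder structure or annotation file, and the resulting counts would feed both the distribution plot and the sampling weights used later.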

To characterize the visual properties of the dataset, we randomly sampled 100 images for size analysis. The sampled images have an average dimension of 224 × 224 pixels, with a minimum of 200 × 200 pixels and a maximum of 256 × 256 pixels. The analysis, whose progress was tracked with the tqdm tool, showed that the size distribution is concentrated with a small standard deviation, making the images suitable as deep learning model input. To meet the input requirements of EfficientNet-B0, all images were uniformly resized to 224 × 224 pixels while preserving the integrity of disease features. Table 2 presents the image size analysis of the PlantVillage dataset, providing a rationale for our preprocessing strategy. This analysis also informs the customization of the Albumentations augmentation pipeline and helps ensure that the synergistic dual-augmentation strategy handles size variability. The same analysis and preprocessing steps can be extended to other agricultural image datasets.

Table 2. Image size analysis of the PlantVillage dataset.

3.2. Methodology

3.2.1. Overview of SDA-CAH Framework

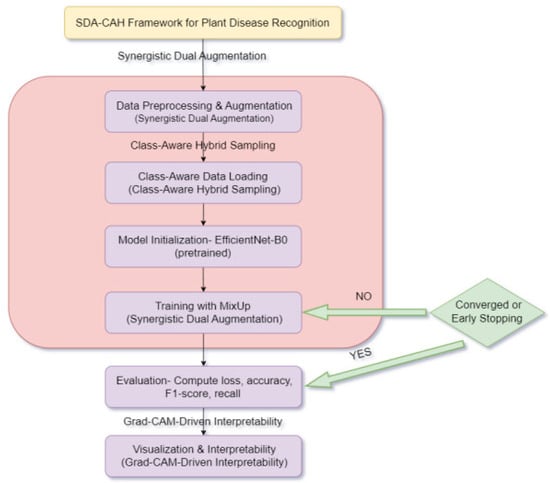

To address the critical challenges in plant disease recognition for precision agriculture—namely, class imbalance, image variability, and decision transparency—this study proposes a Synergistic Dual-Augmentation and Class-Aware Hybrid model (SDA-CAH). The framework builds upon a pre-trained EfficientNet-B0 backbone and incorporates optimized strategies, including the AdamW optimizer, label smoothing, gradient clipping, and dynamic learning rate scheduling, to ensure stable training and efficient convergence. The innovations of SDA-CAH are threefold: (i) a collaborative augmentation mechanism that integrates dynamic weighted MixUp with a customized Albumentations pipeline, effectively enhancing feature discriminability and model robustness; (ii) a class-aware hybrid sampling strategy that emphasizes minority disease classes, mitigating the challenges of data imbalance; and (iii) Grad-CAM–based visualization, enabling precise localization of disease regions and improving model interpretability and practical utility. Figure 3 illustrates the overall workflow of SDA-CAH, encompassing data augmentation, class-aware loading, model training, and visualization analysis, thereby establishing a plant disease recognition framework that is highly accurate, robust, and transparent in its decision-making.

Figure 3. Overall process of SDA-CAH framework.

3.2.2. Synergistic Dual Augmentation

To address common image variability challenges in plant disease recognition—such as illumination changes, occlusion, and viewpoint deviations—this study proposes a synergistic dual-augmentation strategy that integrates an optimized MixUp approach with a customized Albumentations augmentation pipeline. This method is deeply tailored to the characteristics of plant images, balancing the preservation of critical disease features with realistic variability simulation. By overcoming the limitations of conventional augmentation techniques, it substantially enhances model robustness and generalization in complex environments, demonstrating significant potential for applications in precision agriculture.

- Traditional MixUp generates synthetic samples through linear interpolation, which may blur localized disease features critical for plant disease identification. To address this, we propose a dynamic MixUp implementation that employs an adaptive sampling strategy based on a Beta distribution to dynamically select the mixing coefficient $\lambda$, ensuring both intra-class feature diversity and inter-class boundary clarity. Specifically, for a pair of input images $(x_i, x_j)$ and their corresponding labels $(y_i, y_j)$, we generate a mixed sample $\tilde{x}$ (Equation (1)) and a mixed label $\tilde{y}$ (Equation (2)):

  $$\tilde{x} = \lambda x_i + (1 - \lambda)\, x_j \tag{1}$$

  $$\tilde{y} = \lambda y_i + (1 - \lambda)\, y_j \tag{2}$$

  where the mixing coefficient $\lambda \sim \mathrm{Beta}(\alpha, \alpha)$. The probability density function of the Beta distribution is defined as Equation (3):

  $$p(\lambda; \alpha, \alpha) = \frac{\lambda^{\alpha - 1} (1 - \lambda)^{\alpha - 1}}{B(\alpha, \alpha)}, \quad \lambda \in (0, 1) \tag{3}$$

  where $B(\cdot, \cdot)$ is the Beta function. By setting $\alpha < 1$, the Beta distribution is configured to favor values close to 0 or 1, thereby preserving more features from the original images while introducing controlled diversity. This adaptive sampling strategy enhances the model’s sensitivity to disease-specific features, balancing intra-class variability and inter-class discriminability, and significantly improving recognition performance for rare categories. During training, we incorporate a mixed loss function to ensure the model optimizes contributions from both labels:

  $$\mathcal{L}_{\text{mix}} = \lambda\, \mathcal{L}_{CE}\big(f(\tilde{x}), y_i\big) + (1 - \lambda)\, \mathcal{L}_{CE}\big(f(\tilde{x}), y_j\big) \tag{4}$$

  where $\mathcal{L}_{CE}$ denotes the cross-entropy loss, and $f(\tilde{x})$ represents the model’s output. Compared to traditional MixUp, our optimized approach better preserves the integrity of disease features through dynamic sampling, providing robust generalization support for complex agricultural scenarios.

- To address the visual characteristics of plant imagery, we developed a tailored Albumentations augmentation pipeline that integrates selective geometric and photometric transformations to simulate the complexity of field environments while preserving the integrity of disease symptoms. The geometric transformations include RandomRotate90, HorizontalFlip, and VerticalFlip to emulate the natural orientations of plants from various angles. The photometric transformations encompass RandomBrightnessContrast and selective blur/noise operations, including GaussianBlur and GaussNoise, to mitigate variations in illumination and noise interference. The brightness transformation can be modeled as Equation (5):

  $$I' = \alpha_c \cdot I + \beta_b \tag{5}$$

  where $I$ is the original pixel value, $\alpha_c$ controls contrast, and $\beta_b$ adjusts brightness. To safeguard critical disease features, such as lesions and discoloration, we reduced the frequency of CoarseDropout to prevent excessive occlusion of symptomatic regions. This carefully calibrated combination of transformations enhances the model’s adaptability to real-world image variability. Ultimately, the synergistic dual augmentation strategy, combining optimized MixUp with the customized Albumentations pipeline, achieves an effective balance between feature preservation and robustness, ensuring superior performance in complex agricultural scenarios.
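
The MixUp step and mixed loss described in the first item above can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the value `alpha=0.4`, the function names, and the toy tensors are hypothetical, and random logits stand in for the model output.

```python
import numpy as np


def mixup_batch(images, labels_onehot, alpha, rng):
    """Mix a batch with a shuffled copy of itself (mixed sample and mixed label)."""
    lam = rng.beta(alpha, alpha)            # lambda ~ Beta(alpha, alpha)
    perm = rng.permutation(len(images))     # partner index j for each sample i
    mixed_x = lam * images + (1.0 - lam) * images[perm]
    mixed_y = lam * labels_onehot + (1.0 - lam) * labels_onehot[perm]
    return mixed_x, mixed_y, perm, lam


def cross_entropy(logits, targets):
    """Mean cross-entropy between softmax(logits) and (possibly soft) targets."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(targets * log_probs).sum(axis=1).mean())


def mixup_loss(logits, y_i, y_j, lam):
    """Lambda-weighted sum of the losses against both original label sets."""
    return lam * cross_entropy(logits, y_i) + (1.0 - lam) * cross_entropy(logits, y_j)


rng = np.random.default_rng(0)
images = rng.random((4, 3, 8, 8))           # toy batch: four 3-channel 8x8 images
labels = np.eye(3)[[0, 1, 2, 0]]            # toy one-hot labels for 3 classes
# alpha=0.4 is an illustrative value below 1, not the value used in the paper.
mixed_x, mixed_y, perm, lam = mixup_batch(images, labels, alpha=0.4, rng=rng)
logits = rng.normal(size=(4, 3))            # stand-in for the model output
loss = mixup_loss(logits, labels, labels[perm], lam)
```

Because cross-entropy is linear in the target distribution, the mixed loss equals the cross-entropy computed directly against the mixed labels, which is a convenient sanity check when implementing either form.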

3.2.3. Class-Aware Hybrid Sampling

To address the severe class imbalance in plant disease recognition, this study proposes a class-aware hybrid sampling mechanism. By constructing a weighted sampler based on inverse class frequencies, it dynamically increases the training emphasis on rare classes, ensuring equitable feature learning across all categories. Efficiently integrated into the data-loading pipeline, this mechanism enables stable batch processing and balanced training, effectively mitigating model prediction bias and enhancing recognition performance for minority classes. This approach holds significant practical value and theoretical relevance.

- We designed a novel sampling strategy that assigns inverse class frequency weights $w_c$ to each disease category, where $n_c$ denotes the number of samples for category $c$. This approach allocates higher sampling probabilities to rare categories, ensuring more frequent exposure to minority class samples during training iterations, thereby enhancing the learning of underrepresented disease features. The weight calculation is defined as Equation (6):

  $$w_c = \frac{1}{n_c}, \quad c = 1, \dots, C \tag{6}$$

  where $C$ represents the total number of categories ($C = 38$ for the PlantVillage dataset). To ensure stability in the sampling distribution, we normalize the weights as follows:

  $$\hat{w}_c = \frac{w_c}{\sum_{k=1}^{C} w_k} \tag{7}$$

  The normalized weights are used to construct a weighted random sampler, where the sampling probability for each sample $i$ is given by $p_i \propto \hat{w}_{y_i}$, with $y_i$ denoting the class label of sample $i$. Unlike traditional oversampling methods, which often introduce noise or information loss, our proposed sampler mitigates data redundancy and stabilizes the sampling distribution through dynamic weight adjustment and normalization, while incorporating random perturbations to avoid local optima. This class-aware strategy effectively addresses the severe class imbalance in the PlantVillage dataset, prioritizing the learning of rare category features and significantly enhancing the model’s generalization capability in complex agricultural environments. This approach demonstrates substantial innovation in tackling class imbalance challenges.

- To achieve efficient and stable batch processing, we integrate the class-aware sampler into the data loading framework. Weighted random sampling based on the inverse class frequency generates a multinomial sampling sequence, which ensures balanced class representation in each batch. The batch size is set to 64, and multithreading and prefetching mechanisms are combined to optimize data loading efficiency and minimize I/O bottlenecks during training. The data loading throughput can be approximated as Equation (8):

  $$\text{Throughput} \approx \frac{N}{\lceil N / B \rceil \,\big(t_{\text{load}} + t_{\text{transfer}}\big)} \tag{8}$$

  where $B$ is the batch size, $N$ is the total number of samples in the dataset, $t_{\text{load}}$ is the data loading time per batch, and $t_{\text{transfer}}$ is the time to transfer a batch to the GPU. Both $t_{\text{load}}$ and $t_{\text{transfer}}$ are significantly reduced through parallelization and memory pinning. This efficient integration scheme supports high-throughput processing of large-scale datasets while maintaining class balance. Experimental verification on the PlantVillage dataset confirms its effectiveness and significantly improves the performance of the SDA-CAH framework in class-imbalanced environments.
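
The inverse-frequency weighting and weighted random sampling described in this subsection can be sketched with the Python standard library as follows. This is a minimal illustration under stated assumptions: the function names and toy labels are hypothetical, and the sketch omits the random perturbations and data-loader integration mentioned in the text.

```python
import random
from collections import Counter


def normalized_class_weights(labels):
    """Inverse-frequency weight per class, normalized so the weights sum to 1."""
    counts = Counter(labels)
    raw = {c: 1.0 / n for c, n in counts.items()}
    total = sum(raw.values())
    return {c: w / total for c, w in raw.items()}


def sample_indices(labels, num_samples, seed=0):
    """Draw sample indices with replacement, each weighted by its class weight."""
    class_w = normalized_class_weights(labels)
    per_sample_w = [class_w[y] for y in labels]
    rng = random.Random(seed)
    return rng.choices(range(len(labels)), weights=per_sample_w, k=num_samples)


# Toy imbalanced dataset: class 0 dominates, class 2 is rare (hypothetical).
labels = [0] * 900 + [1] * 90 + [2] * 10
weights = normalized_class_weights(labels)
drawn = sample_indices(labels, num_samples=6000)
drawn_counts = Counter(labels[i] for i in drawn)
print(drawn_counts)
```

Each class contributes the same total weight, so the drawn indices are approximately balanced across classes despite the 90:9:1 imbalance. If the training pipeline is built on PyTorch (an assumption; the text does not name the framework), the per-sample weights could instead be passed to `torch.utils.data.WeightedRandomSampler` and combined with a `DataLoader` using multiple workers and pinned memory.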

3.2.4. Model Architecture

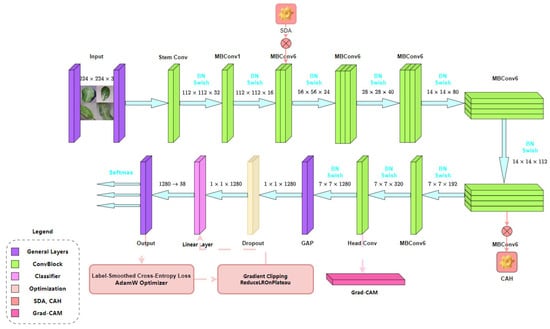

The proposed SDA-CAH architecture is conceived as a modular and interpretable visual framework that seamlessly integrates efficient feature extraction, synergistic data augmentation, class-aware hybrid sampling, and adaptive optimization strategies into a unified pipeline (Figure 4). The overall workflow comprises five core stages: input preprocessing, dual augmentation, balanced sampling, deep feature extraction, and stability-oriented optimization.

Figure 4. Overall architecture of the SDA-CAH framework.

Each image is first resized to 224 × 224 pixels and normalized according to ImageNet statistics. The preprocessed images are then processed by the Synergistic Dual Augmentation (SDA) module, which fuses geometric and photometric transformations—including rotation, flipping, brightness adjustment, and coarse dropout—to enhance intra-class diversity while preserving lesion morphology. This dual augmentation generates complementary image pairs, enabling the model to learn invariant and robust representations under diverse illumination and occlusion conditions.

Following augmentation, the CAH module applies stratified sampling with adaptive weighting based on class frequency. Minority categories are upsampled through weighted random sampling, while majority classes are proportionally downsampled. This balanced sampling strategy ensures equitable exposure of all disease classes during training, effectively mitigating the class imbalance commonly observed in agricultural datasets.

The augmented and balanced samples are subsequently fed into a pre-trained EfficientNet-B0 backbone, chosen for its superior balance between computational efficiency and representational power. The original fully connected layer is replaced with a customized linear layer (1280 input features to 38 output dimensions) tailored to the PlantVillage dataset. Leveraging ImageNet pretraining allows the network to retain robust low-level visual priors while fine-tuning domain-specific features associated with plant disease symptoms.

To ensure reliable convergence, several stabilization mechanisms are incorporated. A label-smoothing cross-entropy loss function mitigates overconfidence and reduces overfitting; the AdamW optimizer decouples weight decay from gradient updates to enhance regularization; gradient clipping constrains update norms to prevent divergence; and a dynamic learning rate scheduler automatically reduces the learning rate when validation loss plateaus. These optimization techniques work synergistically to accelerate convergence and improve generalization stability.
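
The stabilization mechanisms listed above can be illustrated with a framework-independent NumPy sketch. It is not the authors' training code: the smoothing factor, clipping threshold, reduction factor, and patience are hypothetical values, and the helper names are introduced only for illustration. The AdamW update itself is omitted.

```python
import numpy as np


def label_smoothing_ce(logits, targets, smoothing=0.1):
    """Cross-entropy against one-hot targets softened by `smoothing`."""
    n, num_classes = logits.shape
    soft = np.full((n, num_classes), smoothing / num_classes)
    soft[np.arange(n), targets] += 1.0 - smoothing
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(soft * log_probs).sum(axis=1).mean())


def clip_by_global_norm(grads, max_norm):
    """Rescale gradient arrays so that their joint L2 norm is at most max_norm."""
    total = float(np.sqrt(sum(float((g ** 2).sum()) for g in grads)))
    scale = min(1.0, max_norm / (total + 1e-12))
    return [g * scale for g in grads], total


class PlateauScheduler:
    """Multiply the learning rate by `factor` once the validation loss has
    failed to improve for more than `patience` consecutive epochs."""

    def __init__(self, lr, factor=0.5, patience=2):
        self.lr, self.factor, self.patience = lr, factor, patience
        self.best, self.bad_epochs = float("inf"), 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr *= self.factor
                self.bad_epochs = 0
        return self.lr


logits = np.array([[4.0, 0.0, 0.0], [0.0, 3.0, 0.0]])
targets = np.array([0, 1])
smoothed = label_smoothing_ce(logits, targets, smoothing=0.1)
plain = label_smoothing_ce(logits, targets, smoothing=0.0)
clipped, norm_before = clip_by_global_norm([np.array([3.0, 4.0])], max_norm=1.0)
scheduler = PlateauScheduler(lr=1e-3)
lrs = [scheduler.step(v) for v in [1.0, 0.9, 0.9, 0.9, 0.9]]
```

On confidently correct predictions the smoothed loss is larger than the plain cross-entropy, which is the mechanism that discourages overconfidence; the scheduler only lowers the rate after the loss has stalled for more than `patience` epochs.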

Figure 4 schematically depicts the end-to-end data flow of the SDA-CAH framework—from raw input images through dual augmentation, class-aware sampling, and feature extraction to final classification. The diagram emphasizes the coordinated interaction between the SDA and CAH modules and their integration with the EfficientNet backbone and adaptive optimization loop. This cohesive visualization elucidates how each component contributes to model robustness, interpretability, and reproducibility, providing a transparent overview of the overall system architecture.

Through this unified design, the SDA-CAH framework achieves stable convergence and remarkable accuracy on the PlantVillage dataset, while maintaining a clear, interpretable visual structure that facilitates both reproducibility and real-world deployment in precision agriculture.

3.2.5. Explainable Visualization

To address the need for decision transparency in plant disease recognition, a Grad-CAM–based interpretable visualization mechanism was integrated. This approach generates saliency maps from the final convolutional layer of EfficientNet-B0, producing high-resolution heatmaps overlaid on the original images to provide intuitive localization of disease regions. This method significantly enhances the model’s interpretability and user trust, thereby increasing the practical utility of the SDA-CAH framework in precision agriculture.

- To enhance the interpretability of the SDA-CAH framework, we developed a customized Grad-CAM module based on the final convolutional layer of EfficientNet-B0. This module extracts activation maps and gradient information to compute the contribution of each channel to the prediction, generating precise disease localization heatmaps. For a target class $c$, let the activation map of the last convolutional layer be $A^k \in \mathbb{R}^{H \times W}$, where $A^k_{ij}$ denotes the activation of the $k$-th feature channel at spatial location $(i, j)$, with dimensions $H$ (height) and $W$ (width). The activation map is obtained through forward propagation, and the gradient of the target class score $y^c$ with respect to the activation map is computed via backpropagation:

  $$\frac{\partial y^c}{\partial A^k_{ij}} \tag{9}$$

  where $(i, j)$ represents the spatial position. The global average pooling of these gradients yields the weight for each feature channel:

  $$\alpha^c_k = \frac{1}{H \cdot W} \sum_{i=1}^{H} \sum_{j=1}^{W} \frac{\partial y^c}{\partial A^k_{ij}} \tag{10}$$

  Using these weights $\alpha^c_k$, the activation maps are weighted and combined, followed by ReLU activation to generate the initial heatmap:

  $$L^c = \mathrm{ReLU}\left( \sum_{k} \alpha^c_k A^k \right) \tag{11}$$

  To align with the 224 × 224 resolution of plant images, the heatmap is upsampled to the target size using bilinear interpolation:

  $$L^c_{\text{up}}(u, v) = \sum_{i=1}^{H} \sum_{j=1}^{W} L^c_{ij}\, K(u, v; i, j) \tag{12}$$

  where $K$ denotes the bilinear interpolation kernel. The final heatmap is normalized as Equation (13):

  $$\hat{L}^c = \frac{L^c_{\text{up}} - \min\big(L^c_{\text{up}}\big)}{\max\big(L^c_{\text{up}}\big) - \min\big(L^c_{\text{up}}\big)} \tag{13}$$

  This optimized Grad-CAM implementation, tailored for EfficientNet-B0, effectively captures subtle disease features (e.g., spots, discoloration), significantly improving the heatmap’s adaptability to agricultural scenarios compared to standard Grad-CAM methods.

- To enhance interpretability for end-users, we designed an innovative heatmap overlay process. Initially, the input image is de-standardized to restore its RGB format. Let the standardized image be $\tilde{I}$; the de-standardization process is defined as Equation (14):

  $$I = \tilde{I} \odot \sigma + \mu \tag{14}$$

  where $\mu$ and $\sigma$ represent the mean and standard deviation vectors, respectively. Subsequently, the normalized heatmap is converted to a pseudo-color representation using the JET colormap (Equation (15)). The final output image is generated via a weighted overlay of the pseudo-color heatmap and the de-standardized image (Equation (16)), in which a transparency parameter controls the blend, ensuring clear correspondence between disease-affected regions and plant structures. The output image is normalized to ensure pixel values fall within the range [0, 255] (Equation (17)).

  This process not only preserves the details of the original image but also highlights disease-affected areas through the heatmap, providing actionable insights for farmers, such as localizing infected leaves to guide precise pesticide application.
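The overlay steps can be sketched as follows. This is an illustrative NumPy version under stated assumptions, not the authors' implementation: the ImageNet channel statistics, the blend form `alpha * heatmap + (1 - alpha) * image`, the default `alpha = 0.4`, and both function names are hypothetical choices. The JET pseudo-coloring is assumed to have been applied beforehand by a plotting or imaging library, so the heatmap arrives as an RGB array.

```python
import numpy as np

# Assumed ImageNet channel statistics (RGB), commonly paired with pretrained backbones.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])

def destandardize(image_chw, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Invert per-channel standardization (x * std + mean); returns HWC layout."""
    image_hwc = np.transpose(np.asarray(image_chw, dtype=np.float64), (1, 2, 0))
    return image_hwc * std + mean

def overlay_heatmap(image_rgb, heatmap_rgb, alpha=0.4):
    """Blend a pseudo-color heatmap onto an RGB image (both HWC, values in [0, 1])."""
    blended = alpha * np.asarray(heatmap_rgb) + (1.0 - alpha) * np.asarray(image_rgb)
    # Scale to 8-bit and clip so that every pixel value lies in [0, 255].
    return np.clip(np.rint(blended * 255.0), 0, 255).astype(np.uint8)
```

A larger `alpha` makes the heatmap more dominant, while a smaller value keeps more of the leaf texture visible; the appropriate setting depends on how the overlay is presented to users.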

3.2.6. System Implementation

To bridge the gap between advanced AI models and practical agricultural applications, we developed a user-friendly plant disease diagnosis platform that deploys the SDA-CAH framework in real-world settings. This platform enables farmers, agronomists, and agricultural extension personnel to upload plant images and receive real-time disease diagnosis results, visual Grad-CAM heatmaps, and targeted remediation recommendations. To ensure practical feasibility, the system was optimized for computational efficiency—achieving an average inference time of 0.42 s per image on an NVIDIA GeForce GTX 1650. These results demonstrate that the platform can provide rapid, accurate, and resource-efficient disease detection suitable for deployment in real-world agricultural environments.

- The platform employs a client–server architecture, comprising a frontend user interface and a backend server. The frontend, built with HTML5, CSS, and JavaScript (V8 12.7.224.18), provides an intuitive interface that supports image uploads, real-time result visualization, and Grad-CAM heatmap display. Bootstrap is utilized to implement a responsive design, ensuring a consistent user experience across smartphones, tablets, and desktop devices—critical for diverse connectivity conditions in rural field settings. The backend is built on Flask and is responsible for image preprocessing, model inference, Grad-CAM generation, and response formatting. To optimize technical performance, the platform implements batch processing and asynchronous request handling, ensuring low latency and efficient concurrent inference. Model weights are loaded into memory for rapid prediction, and GPU acceleration is supported when available. The architecture also incorporates input validation and secure API endpoints to maintain reliability and safety. This design allows scalable deployment on cloud platforms such as AWS or Heroku, facilitating broad accessibility and robust performance across different devices and environments. Figure 5 illustrates the complete system architecture.
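The input validation step mentioned above can be sketched with the standard library alone. The sketch assumes uploads are restricted to JPEG and PNG and checks the leading signature bytes rather than trusting the file extension; the function names and the 5 MB size limit are hypothetical and are not taken from the platform's code.

```python
JPEG_MAGIC = b"\xff\xd8\xff"          # start-of-image marker of a JPEG file
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"      # fixed 8-byte PNG signature

def detect_image_format(data: bytes):
    """Return "jpeg", "png", or None based on the leading signature bytes."""
    if data.startswith(JPEG_MAGIC):
        return "jpeg"
    if data.startswith(PNG_MAGIC):
        return "png"
    return None

def validate_upload(data: bytes, max_bytes: int = 5 * 1024 * 1024):
    """Reject empty, oversized, or non-JPEG/PNG payloads; return the format."""
    if not data:
        raise ValueError("empty upload")
    if len(data) > max_bytes:
        raise ValueError("file too large")
    fmt = detect_image_format(data)
    if fmt is None:
        raise ValueError("unsupported format: expected JPEG or PNG")
    return fmt
```

A signature check of this kind is cheap enough to run before any image decoding, so malformed uploads can be rejected before they reach preprocessing or the model.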

Figure 5. Application system architecture diagram.

- The platform operationalizes the SDA-CAH framework as a fully integrated end-to-end diagnostic workflow, seamlessly combining algorithmic precision with engineering optimization. Users can submit plant images via file upload or camera capture, which are then preprocessed—resized, normalized, and color-adjusted—to align with the model’s training pipeline. Both preprocessing and inference are executed in parallel and asynchronously, leveraging GPU acceleration when available, with model weights preloaded into memory to minimize latency. Disease predictions are generated through softmax probabilities, while Grad-CAM heatmaps are computed by backpropagating gradients from the final convolutional layer, resized, and overlaid on the original image for intuitive and interpretable visualization. Each disease class is associated with expert-curated remediation guidance, dynamically retrieved for immediate presentation. The frontend dynamically displays predictions, confidence scores, Grad-CAM overlays, and actionable recommendations through a responsive interface and asynchronous rendering, ensuring low-latency, scalable, and interpretable deployment across diverse devices. This architecture exemplifies the synergistic integration of high-performance modeling, efficient inference, and user-centric visualization, facilitating practical application in real-world agricultural scenarios.

- The platform is fully containerized using Docker (version 20.10.7), enabling consistent and reproducible deployment across diverse environments, and uses NGINX (version 1.24.0) as a reverse proxy to handle load balancing, SSL termination, and request routing in the production environment. Usability testing that simulated real-world user scenarios confirmed the interface’s intuitiveness. Planned extensions include multi-language support and Service Worker-based offline caching to maintain functionality under intermittent network conditions. From a technical perspective, the deployment pipeline integrates automated build and continuous integration workflows, with GPU-enabled containers for efficient model inference and memory optimization. Logging, monitoring, and input validation mechanisms are incorporated to ensure robustness, security, and maintainability. This implementation not only democratizes access to the SDA-CAH framework but also enables anonymized collection of user data for iterative model fine-tuning, supporting continuous improvement. By combining scalable, secure deployment with real-time, explainable AI, the platform translates research into a practical tool for precision agriculture, advancing global food security and sustainable crop management.

3.2.7. Training and Evaluation

To validate the efficiency and reliability of the SDA-CAH framework in plant disease recognition, we adopt a training strategy that combines stratified dataset partitioning with multidimensional evaluation metrics. The strategy is designed to address class imbalance and complex agricultural scenarios, and thereby to improve the model’s applicability and generalization capacity in automated precision agriculture diagnostics.

- To ensure consistent data distribution, experimental reproducibility, and prevention of data leakage, a stratified sampling strategy was applied to the PlantVillage dataset (38 classes, over 50,000 images), partitioning it at the image level into 70% training, 15% validation, and 15% testing sets using a fixed random seed of 42. The split was performed in two stages: the dataset was first divided into a training set and a temporary set, and the temporary set was then split evenly into validation and test sets, which preserves the distribution of rare classes (each comprising less than 2% of the data). Crucially, all subsets were mutually exclusive: no image or augmented variant of it appeared in more than one subset, so that model performance reflects genuine learning rather than memorization of duplicated samples. Table 3 details the dataset split. This partitioning supports the class-aware hybrid sampling mechanism and keeps class proportions comparable across training, tuning, and evaluation. All experiments were conducted exclusively on the PlantVillage dataset to maintain controlled comparability with prior studies. While this dataset provides consistent illumination and background conditions, it does not fully capture the complexity of real-world field scenarios. Consequently, the reported accuracy primarily reflects strong in-domain performance rather than cross-domain generalization, and claims about robustness and interpretability should be read within this controlled experimental scope.
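The two-stage stratified split can be sketched in pure Python as shown below. The tooling actually used for the split is not specified in the text, so this is an illustrative re-implementation: the function name `stratified_split` and the per-class rounding rule are assumptions, while the 70/15/15 proportions and the seed of 42 follow the description above.

```python
import random
from collections import defaultdict

def stratified_split(labels, train_frac=0.70, val_frac=0.15, seed=42):
    """Split sample indices into disjoint train/val/test lists, class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, val, test = [], [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Stage 1: carve the training portion out of this class.
        n_train = round(train_frac * len(indices))
        temp = indices[n_train:]
        # Stage 2: divide the remainder between validation and test.
        n_val = round(len(temp) * val_frac / (1.0 - train_frac))
        train += indices[:n_train]
        val += temp[:n_val]
        test += temp[n_val:]
    return train, val, test
```

Because every class is shuffled and sliced separately, each subset keeps approximately the same class proportions as the full dataset, and the fixed seed makes the partition reproducible. Augmentation would then be applied only after the split, so that no augmented variant crosses subsets.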

Table 3. PlantVillage dataset stratified segmentation statistics.

- To comprehensively assess the performance of the SDA-CAH framework, we employ multiple evaluation metrics, including accuracy (Equation (18)), weighted F1 score (Equation (19)), weighted recall (Equation (20)), and confusion matrix analysis. Accuracy measures the overall classification correctness, while the weighted F1 score and recall, by accounting for class sample sizes, are particularly suited for imbalanced datasets, emphasizing the model’s ability to recognize rare classes. The confusion matrix further elucidates inter-class misclassification patterns, providing clear insights into the model’s classification granularity.

Here, for each class evaluated one-vs-rest, TP denotes samples of that class that are correctly identified, FP represents samples of other classes misclassified as that class, FN indicates samples of that class erroneously assigned to another class, and TN corresponds to correctly rejected samples of other classes.
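To make the class-size weighting concrete, the following sketch computes accuracy, weighted recall, and weighted F1 from true and predicted labels. It assumes the common support-weighted definition, in which each per-class score is weighted by that class's share of the samples; the function name `weighted_metrics` is hypothetical, and the paper's own Equations (18) to (20) may be written differently.

```python
from collections import Counter

def weighted_metrics(y_true, y_pred):
    """Return (accuracy, weighted recall, weighted F1) for multi-class labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    n = len(y_true)
    support = Counter(y_true)                 # number of samples per true class
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fn[t] += 1                        # class t missed one of its samples
            fp[p] += 1                        # class p received a wrong sample

    accuracy = sum(tp.values()) / n
    recall_w = 0.0
    f1_w = 0.0
    for cls, n_cls in support.items():
        weight = n_cls / n                    # class share of the data
        recall_w += weight * tp[cls] / (tp[cls] + fn[cls])
        denom = 2 * tp[cls] + fp[cls] + fn[cls]
        f1_w += weight * (2 * tp[cls] / denom if denom else 0.0)
    return accuracy, recall_w, f1_w
```

For example, `weighted_metrics(["a", "a", "a", "b"], ["a", "a", "b", "b"])` evaluates a four-sample toy problem in which one sample of class `a` is assigned to class `b`.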

3.3. Experimental Setup

3.3.1. Implementation Details

To ensure the efficiency and reproducibility of the SDA-CAH framework in plant disease recognition, we meticulously designed the experimental implementation details, encompassing hardware configuration, software environment, and hyperparameter settings. These details, supported by innovative optimization strategies and modern computational frameworks, guarantee high performance and stable training, highlighting the pioneering contributions of this study in the field of precision agriculture.

- This study was conducted on a dual NVIDIA T4 GPU setup (2 × 16 GB VRAM). The T4 GPUs, based on the NVIDIA Turing architecture, provide powerful tensor computation capabilities and low power consumption, making them particularly suitable for parallel computing optimization in large-scale image classification tasks. The experimental environment utilized Python 3.10.12, with the PyTorch ecosystem as the core deep learning framework, complemented by Torchvision for pretrained models and image processing tools, enabling a complete workflow from feature extraction to model fine-tuning. To fully leverage the hardware capabilities, we employed PyTorch’s standard device-agnostic coding strategy, which ensures automatic switching between GPU and CPU, achieving high cross-platform portability and flexibility. For the PlantVillage dataset, the allocated GPU memory sufficiently meets the high computational and memory demands of large-scale image augmentation and batch training. Furthermore, by integrating PyTorch’s parallel data-loading mechanisms, we achieved asynchronous concurrency during training and preprocessing, effectively improving I/O throughput. Overall, this hardware environment provides a robust computational foundation for the proposed SDA-CAH framework, enabling the coordinated optimization of dual data augmentation and class-aware sampling mechanisms under stable and efficient conditions. Table 4 summarizes the software and hardware configuration of the SDA-CAH framework, demonstrating its efficiency and portability.

Table 4. SDA-CAH framework hardware and software configuration.

- To optimize model performance, all hyperparameters were meticulously fine-tuned through controlled experimentation. To ensure a fair and unbiased comparison while emphasizing the effectiveness of the proposed SDA-CAH framework, identical hyperparameter configurations were employed for both SDA-CAH + EfficientNet-B0 and the baseline EfficientNet-B0 model. The batch size was set to 64 to balance computational efficiency and gradient stability, and training was conducted for 20 epochs with an early-stopping mechanism (patience = 5) to prevent overfitting. The MixUp coefficient (α = 0.1) dynamically adjusted the sample-mixing ratio, enhancing inter-class feature discrimination and improving robustness to data variability. These settings were integrated with the AdamW optimizer (learning rate = 0.0005, weight decay = 1 × 10⁻³), gradient clipping (max_norm = 1.0), and a ReduceLROnPlateau scheduler (decay factor = 0.4, patience = 2) to maintain stable optimization dynamics and promote efficient convergence. Table 5 summarizes the complete hyperparameter configuration, highlighting the methodological rigor of the training strategy and its appropriateness for plant disease recognition tasks.
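How the plateau-based learning-rate decay and early stopping interact can be illustrated by replaying a sequence of validation losses. The function below is a simplified pure-Python simulation, not PyTorch's `ReduceLROnPlateau` itself: it ignores improvement thresholds and cooldown, and the early-stopping convention (stop once `stop_patience` consecutive epochs bring no improvement) is an assumption. The default values mirror the settings listed above; the function name is hypothetical.

```python
def simulate_schedule(val_losses, lr=5e-4, factor=0.4, lr_patience=2, stop_patience=5):
    """Replay validation losses; return (per-epoch learning rates, stop epoch or None)."""
    best = float("inf")
    since_best = 0     # epochs since the best validation loss (early stopping)
    bad_epochs = 0     # non-improving epochs since the last learning-rate change
    lrs = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, since_best, bad_epochs = loss, 0, 0
        else:
            since_best += 1
            bad_epochs += 1
        if bad_epochs > lr_patience:   # plateau has outlasted the patience window
            lr *= factor
            bad_epochs = 0
        lrs.append(lr)
        if since_best >= stop_patience:
            return lrs, epoch
    return lrs, None
```

With a patience of 2 for the scheduler and 5 for early stopping, a stalled run first receives a reduced learning rate (0.0005 to 0.0002) and is terminated only if the lower rate also fails to improve the validation loss.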

Table 5. Hyperparameter configurations for the SDA-CAH + EfficientNet-B0 and baseline EfficientNet-B0 frameworks.

3.3.2. Comparative Experiment

To comprehensively evaluate the superiority of the SDA-CAH framework in plant disease recognition, we selected three representative state-of-the-art convolutional neural networks as baselines: Xception, EfficientNet-B0, and MobileNetV2. All models employed ImageNet pre-trained weights, underscoring the transferability of learned features and emphasizing the distinct contribution of the proposed strategies. This comparative design not only validates the performance advantage of SDA-CAH but also demonstrates its potential for real-world precision agriculture applications.

The Xception model, constructed around depthwise separable convolutions, substantially reduces parameter complexity while enhancing representational capacity, achieving superior accuracy in fine-grained visual recognition tasks. Its architectural configuration and detailed hyperparameter settings are presented in Table 6. Xception is particularly adept at capturing subtle textural variations and lesion morphology, making it highly effective for differentiating visually similar disease categories.

Table 6. Hyperparameter configurations for the Xception model.

The standard EfficientNet-B0 baseline, known for its balanced compound scaling strategy, offers strong parameter efficiency and robustness in resource-constrained scenarios. However, it lacks specialized adaptations for addressing the inherent variability and inter-class imbalance typical of plant disease datasets, thereby limiting its potential to fully exploit information from rare disease classes.

To further extend the comparative evaluation, the MobileNetV2 model was incorporated as an additional lightweight benchmark. Unlike Xception and EfficientNet-B0, which emphasize representational depth and scaling balance, respectively, MobileNetV2 leverages an inverted residual structure and linear bottlenecks to achieve an optimal trade-off between accuracy and computational efficiency. The detailed hyperparameter configuration for MobileNetV2 is provided in Table 7.

Table 7. Hyperparameter configurations for the MobileNetV2 model.

Importantly, to ensure that the comparison reflects each model’s best achievable performance rather than an artificial constraint of identical settings, we did not enforce uniform hyperparameter configurations across all models. Xception and MobileNetV2 were each trained with their own empirically optimized settings (learning rate, optimizer, batch size, and regularization; Tables 6 and 7), determined through extensive pilot experiments, whereas the standard EfficientNet-B0 baseline shares the configuration of SDA-CAH + EfficientNet-B0 (Table 5) so that the contribution of the proposed strategies can be isolated. This design ensures a fair yet performance-maximized comparison and provides a more realistic assessment of the SDA-CAH framework’s advantages.

To adapt all models to the PlantVillage dataset (38 classes, 54,306 images), we replaced their original classification heads with fully connected layers configured to output 38 dimensions, while uniformly enforcing an input resolution of 224 × 224 pixels.

4. Results and Discussion

4.1. Quantitative Results

To rigorously validate the superior performance of the proposed SDA-CAH framework in plant disease recognition, we conducted a comprehensive evaluation on the PlantVillage test set, employing accuracy, F1-score, and recall as the primary performance metrics, supplemented by confusion matrix analysis to examine the model’s classification capability across diverse disease categories. The experimental findings highlight the transformative efficacy of SDA-CAH’s integrated design—combining synergistic dual augmentation, class-aware hybrid sampling, and Grad-CAM–based visualization—which collectively yield substantial improvements in handling data imbalance and enhancing robustness under complex agricultural conditions.

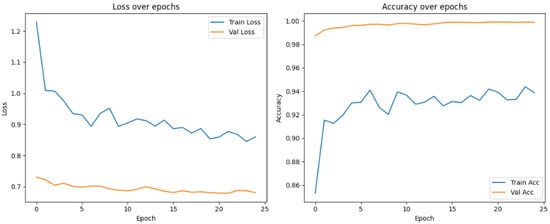

- On the PlantVillage test set, the SDA-CAH framework achieved an accuracy of 99.95%, a weighted F1-score of 99.89%, and a weighted recall of 99.89%, surpassing all three baselines on every metric. Figure 6 illustrates the training and validation loss and accuracy curves, confirming the model’s rapid and stable convergence. To further validate its effectiveness, we benchmarked SDA-CAH against three mainstream baselines: Xception, standard EfficientNet-B0, and MobileNetV2. Specifically, Xception achieved 99.42% accuracy, 99.39% weighted F1-score, and 99.41% weighted recall; standard EfficientNet-B0 attained 99.35%, 99.32%, and 99.33% on the same metrics; and MobileNetV2 recorded 95.77%, 94.52%, and 94.77%, respectively. In comparison, SDA-CAH, built upon the EfficientNet-B0 backbone, delivered gains of 0.53, 0.50, and 0.48 percentage points over Xception; 0.60, 0.57, and 0.56 percentage points over standard EfficientNet-B0; and 4.18, 5.37, and 5.12 percentage points over MobileNetV2. These improvements are attributed primarily to the synergistic dual-augmentation strategy, which enhances adaptability to image diversity and disease-variant complexity, and to the class-aware sampling mechanism, which addresses learning challenges in rare categories (each comprising less than 2% of the data). Table 8 presents a detailed comparison of SDA-CAH and the three baselines on the PlantVillage test set, including not only the core performance metrics but also two efficiency indicators, Inference Speed (ms) and Training Time, to assess computational efficiency and scalability.
Beyond this, a systematic comparison with several representative state-of-the-art plant disease recognition methods from recent years is summarized in Table 9, which includes extended metrics such as Accuracy (%), F1 Score (%), Recall (%), Params (M), and Model Size (MB), enabling a more holistic evaluation of model accuracy, generalization, and computational cost. Although the proposed SDA-CAH framework achieved up to 99.95% accuracy on the PlantVillage test set, such near-perfect results must be interpreted with caution. The PlantVillage dataset consists of laboratory-acquired images with minimal background noise and highly visible lesions—conditions that inherently facilitate elevated performance. No cross-dataset or field validation was conducted in this study; thus, the reported metrics primarily reflect strong in-domain performance rather than real-world robustness. Future evaluations on field-acquired datasets under diverse environmental conditions are essential to rigorously assess cross-domain generalization and practical deployability. Nonetheless, within the controlled scope of this benchmark, these results not only affirm SDA-CAH’s robustness and precision in handling highly imbalanced data but also establish a new performance standard for plant disease recognition, underscoring its significant potential for scalable deployment in precision agriculture.

Figure 6. Loss and accuracy curves for training and validation.

Table 8. Performance comparison of the contrast models on the PlantVillage test set.

Table 9. Performance comparison between the proposed method and existing advanced methods.

- To further substantiate the practical advantages of the proposed SDA-CAH framework beyond classification accuracy, we analyzed efficiency and resource utilization, assessing computational complexity, model compactness, and inference latency relative to the baseline models. As summarized in Table 8 and Table 9, the SDA-CAH + EfficientNet-B0 framework preserves a parameter count (5.33 M) and lightweight storage footprint (≈21 MB) comparable to the standard EfficientNet-B0, while delivering higher recognition accuracy (99.95% vs. 99.35%) and F1-score (99.89% vs. 99.32%). Because the synergistic dual-augmentation and class-aware hybrid sampling modules act only during training, they add no parameters to the deployed model; the difference in training time reported in Table 8 is primarily attributable to the additional data transformation operations. Crucially, inference latency remains nearly identical at ≈70 ms per image when evaluated on an NVIDIA T4 GPU, confirming that the proposed training strategies do not compromise deployment efficiency. This balance between accuracy, computational efficiency, and energy economy highlights the SDA-CAH framework’s strong potential for scalable deployment in resource-constrained or energy-sensitive environments, such as mobile-based diagnostic platforms, edge computing devices, or embedded agricultural monitoring systems. By achieving high precision with minimal hardware overhead, the proposed method offers a cost-effective and environmentally sustainable AI solution for next-generation precision agriculture applications.
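The reported parameter count and storage footprint are mutually consistent if the weights are stored as 32-bit floats, which is an assumption here rather than something the text states. A short check, with a hypothetical helper name and ignoring file-format metadata:

```python
def model_size_mb(num_params: float, bytes_per_param: int = 4) -> float:
    """Storage needed for the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6

# 5.33 M parameters stored as float32 (4 bytes each).
size = model_size_mb(5.33e6)
```

Under this assumption, `size` evaluates to about 21.3 MB, in line with the ≈21 MB footprint quoted above; half-precision storage (`bytes_per_param=2`) would roughly halve it.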

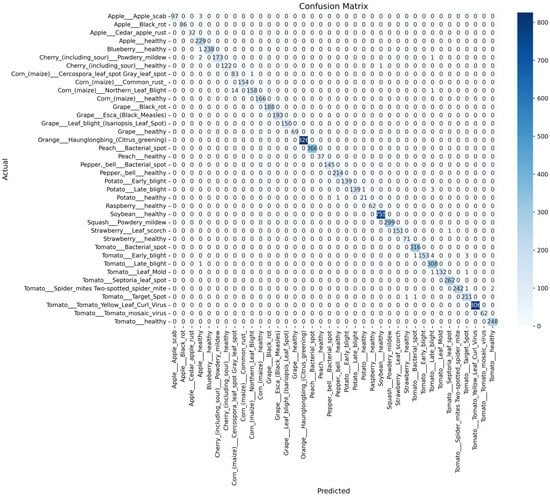

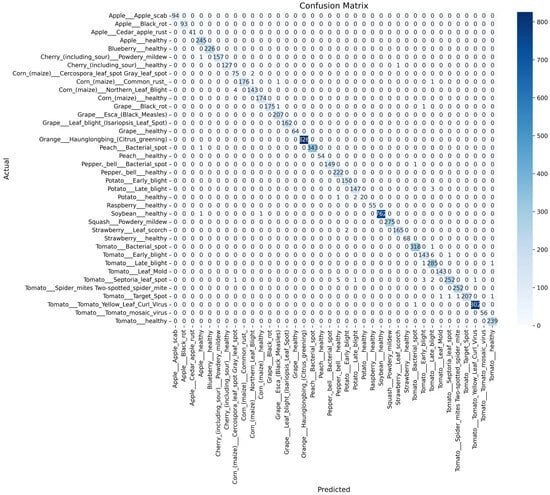

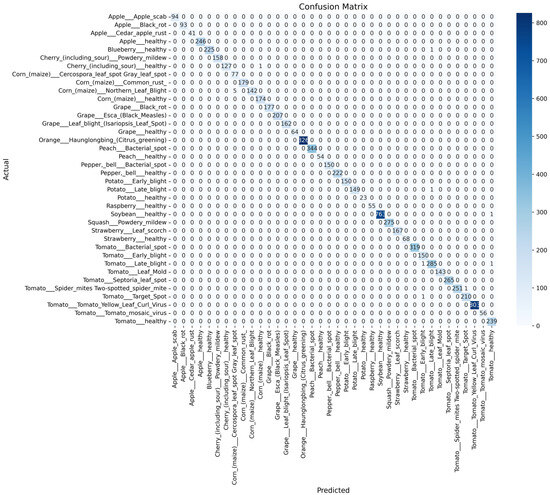

To gain deeper insights into the model’s classification capability, confusion matrices were generated on the test set. The results demonstrate that SDA-CAH exhibits consistently robust performance across diverse plant disease categories (e.g., tomato late blight, grape black rot, and maize gray leaf spot), showing near-perfect values along the main diagonal and an exceptionally low misclassification rate (<0.1%). Even among visually similar classes—such as different variants of leaf spot—the model achieved highly accurate discrimination. Figure 7 illustrates the confusion matrix of Xception on the test set, Figure 8 presents that of the standard EfficientNet-B0, Figure 9 displays the confusion matrix of MobileNetV2, and Figure 10 shows the confusion matrix of SDA-CAH combined with EfficientNet-B0. When comparing Figure 8 and Figure 10, the proposed framework demonstrates a comprehensive improvement across almost all classes, with particularly notable gains in Potato___healthy, Tomato___Septoria_leaf_spot, and Tomato___Early_blight. These results confirm that the synergistic dual-augmentation and class-aware hybrid sampling mechanisms substantially enhance the model’s capacity to learn balanced and discriminative representations. While these findings highlight the strong in-domain generalization of SDA-CAH on the PlantVillage dataset, it is important to acknowledge that this dataset comprises laboratory-captured images with uniform lighting and clean backgrounds. In real-world agricultural environments, field images often contain background clutter, partial occlusion, varying illumination, and even unseen disease types, which can challenge the robustness of visual recognition systems. Given its integrated augmentation strategies and class-aware sampling design, SDA-CAH is expected to maintain resilient feature learning under such noisy and heterogeneous conditions. 
Nevertheless, future work will involve evaluating the framework on field-collected datasets and cross-domain benchmarks to rigorously assess its adaptability to real-world variability and unseen disease phenotypes.
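The confusion matrix analysis can be reproduced from label pairs with a few lines of standard-library Python. The sketch below builds the matrix, computes the overall misclassification rate (the share of off-diagonal entries), and lists the most frequent confusions; the function names are hypothetical, and the class identifiers used in any example follow the PlantVillage naming style quoted above.

```python
def confusion_matrix(y_true, y_pred, classes):
    """Rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

def misclassification_rate(matrix):
    """Fraction of samples that fall outside the main diagonal."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return (total - correct) / total

def most_confused_pairs(matrix, classes, top=3):
    """Return up to `top` (count, true class, predicted class) off-diagonal entries."""
    n = len(classes)
    pairs = [
        (matrix[i][j], classes[i], classes[j])
        for i in range(n) for j in range(n)
        if i != j and matrix[i][j] > 0
    ]
    return sorted(pairs, reverse=True)[:top]
```

Listing the largest off-diagonal entries is a compact way to identify which visually similar classes still get mixed up, complementing the per-figure comparison described above.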

Figure 7. Confusion matrix of Xception on the test set.

Figure 8. Confusion matrix of standard EfficientNet-B0 on the test set.

Figure 9. Confusion matrix of MobileNetV2 on the test set.

Figure 10. Confusion matrix of SDA-CAH+EfficientNet-B0 on the test set.

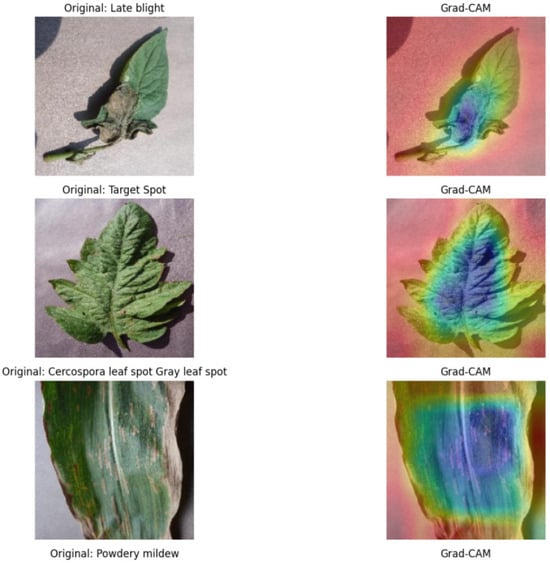

4.2. Qualitative Results

To assess the interpretability of the proposed SDA-CAH framework in plant disease recognition, we performed a qualitative analysis on the PlantVillage test set using an enhanced Grad-CAM–based visualization technique. This method produced high-resolution heatmaps that accurately highlighted disease-affected regions, demonstrating the model’s capability to precisely localize pathological features. By incorporating synergistic dual augmentation and class-aware sampling strategies, the visualization process further enhanced the transparency, reliability, and practical interpretability of the framework. These results establish SDA-CAH as a robust and explainable diagnostic solution with significant potential for precision agriculture applications.

We randomly selected three representative images from the PlantVillage test set, each corresponding to a distinct disease type, and applied Grad-CAM to the final convolutional layer of EfficientNet-B0 to generate disease localization heatmaps. The heatmaps were computed by weighting the activation maps with gradients globally averaged over the target class, thereby enabling precise delineation of the affected regions. These activation maps were subsequently superimposed onto the original images using a JET colormap, yielding an intuitive and visually interpretable representation of the infected areas. The visualization results demonstrate that SDA-CAH effectively localizes disease features with high spatial accuracy, outperforming conventional approaches that often produce blurred or spatially misaligned activation regions. This enhanced localization capability primarily arises from the customized augmentation strategies, which preserve fine-grained pathological textures, and the class-aware sampling mechanism, which ensures adequate representation of rare categories during model training. Figure 11 illustrates the Grad-CAM visualizations of SDA-CAH, highlighting its precise localization of pathological regions.

Figure 11. Grad-CAM visualization.

The SDA-CAH framework demonstrates clear advantages over conventional architectures not only in classification performance but also in interpretability and computational efficiency. Its synergistic augmentation and class-aware sampling strategies enhance representation learning without increasing parameter overhead, while Grad-CAM-based visualization ensures transparent and trustworthy predictions. Together, these features position SDA-CAH as a high-performance yet energy-efficient solution for plant disease diagnosis, bridging the gap between academic research and practical precision agriculture systems.

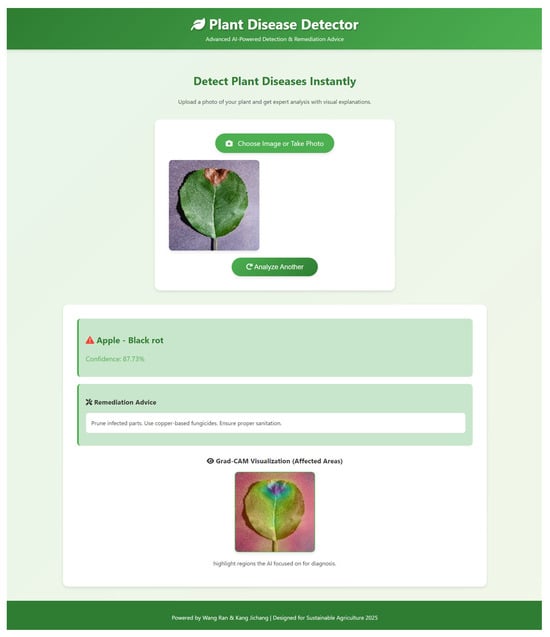

4.3. System Demonstration and Case Studies

The platform interface was intentionally designed for simplicity and intuitiveness, featuring a responsive layout that ensures seamless accessibility across mobile devices, even under rural or field deployment conditions. As illustrated in Figure 12, the main interface comprises four core components: an image upload area, an analysis trigger button, a progress indicator, and a results display panel. Developed using the Bootstrap framework, the interface provides cross-device compatibility and incorporates contextual explanatory text to enhance user understanding and engagement. A dedicated back-end server underpins the system’s real-time responsiveness, with end-to-end latency typically maintained below two seconds in practice. The design prioritizes user-centered interaction, deliberately avoiding technical jargon and instead adopting agriculture-oriented, user-friendly language, thereby promoting inclusivity and accessibility in precision agriculture applications.

Figure 12. Main Interface of the Application System.

4.3.1. User Operation Workflow

The platform’s operational workflow is streamlined into four steps, ensuring both efficiency and usability: (i) Image Upload: Users upload or capture plant leaf images via the “Choose Image or Take Photo” button. The system automatically verifies the format (JPEG/PNG) and displays a preview on the front end. (ii) Analysis Trigger: Upon clicking “Analyze Image,” the image is transmitted to the backend server. The preprocessing module, using Albumentations, resizes and normalizes the image to prepare it for model input. (iii) Real-Time Processing and Output: The backend invokes the SDA-CAH model for inference, generating the predicted class, confidence score, Grad-CAM heatmap, and actionable remediation suggestions. Results are returned in JSON format: predicted class (e.g., “Apple—Black Rot”) and confidence are displayed on the summary panel; Grad-CAM overlay images highlight diseased regions in red alongside the original image; remediation steps are listed for user guidance. (iv) User Interaction and Feedback: Users can review detailed explanations or re-upload images as needed. The platform supports logging to facilitate subsequent data collection and iterative model improvement. This workflow emulates in-field diagnostic scenarios, emphasizing zero-configuration deployment so that users can access the system without installing any software.
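Step (iii) of the workflow can be sketched as a small response builder. This is an illustration under stated assumptions rather than the platform's actual code: the JSON field names, the `heatmap_url` parameter, the function names, and the class identifier in the usage example are hypothetical, and only the standard library is used so that the softmax and payload logic stay self-contained.

```python
import json
import math

def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def build_response(logits, class_names, remediation, heatmap_url):
    """Assemble the JSON payload returned to the frontend for one image."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    label = class_names[best]
    payload = {
        "predicted_class": label,
        "confidence": round(100.0 * probs[best], 2),   # percent
        "heatmap": heatmap_url,
        "remediation": remediation.get(label, []),
    }
    return json.dumps(payload)
```

A call such as `build_response([2.0, 0.0], ["Apple___Black_rot", "Apple___healthy"], {"Apple___Black_rot": ["Prune infected parts."]}, "/static/cam.png")` returns a JSON string that the frontend can parse to fill the summary panel, the overlay image, and the remediation list.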

4.3.2. Interface Demonstration

Case 1: Apple Black Rot (Apple—Black rot), An apple leaf image exhibiting black spots and necrotic leaf edges was input. The platform predicted the class as “Apple—Black rot” with a confidence score of 87.73%. The Grad-CAM heatmap (see Figure 13) highlighted the darkened regions along the leaf edges, precisely localizing the lesions. The model focused on texture variations rather than background noise. Recommended remediation: “Prune infected parts. Use copper-based fungicides. Ensure proper sanitation.” This case demonstrates the platform’s accuracy for common diseases and the interpretability of Grad-CAM, with the heatmap enabling rapid identification of infected areas and supporting precise pesticide application.

Figure 13. Real-Time Diagnostic Example: Apple Black Rot.

Case 2: Tomato Late Blight (Tomato—Late blight), A tomato leaf image exhibiting blurred spots and slight occlusion was input. The platform predicted the class as “Tomato—Late blight” with a confidence score of 90.00%. The Grad-CAM heatmap (see Figure 14) concentrated on the dark brown lesions at the leaf center, effectively excluding irrelevant regions such as the stem. Recommended remediation: “Remove infected parts. Fungicides.” This example highlights the robustness of the collaborative dual augmentation strategy, demonstrating the model’s ability to handle image variability while Grad-CAM provides actionable guidance, potentially reducing chemical overuse.

Figure 14. Real-Time Diagnostic Example: Tomato Late Blight.

5. Conclusions

5.1. Summary of Contributions

The proposed SDA-CAH framework (Synergistic Dual Augmentation with Class-Aware Hybrid sampling) and its accompanying plant disease diagnosis platform provide a high-performance, interpretable, and practical solution for plant disease recognition. Algorithmically, SDA-CAH integrates optimized MixUp augmentation with a customized Albumentations pipeline, dynamically combining class-specific features with geometric and photometric transformations to preserve subtle lesion characteristics. This synergy significantly enhances robustness to variations in illumination, occlusion, and disease morphology. The class-aware hybrid sampling mechanism further mitigates class imbalance through weighted random sampling, ensuring reliable recognition of rare disease categories. On the publicly available PlantVillage dataset, SDA-CAH, built upon EfficientNet-B0, achieved 99.95% accuracy, 99.89% F1-score, and 99.89% recall, outperforming state-of-the-art baselines such as Xception (99.42%, 99.39%, 99.41%), standard EfficientNet-B0 (99.35%, 99.32%, 99.33%), and MobileNetV2 (95.77%, 94.52%, 94.77%). These results demonstrate superior overall accuracy, improved class balance, and enhanced detection of rare diseases. Grad-CAM visualizations produce high-resolution heatmaps that precisely localize infected regions, thereby improving interpretability and providing actionable diagnostic insights for practitioners. In addition to its strong predictive performance, the SDA-CAH framework also delivers economic and energy efficiency, achieving this level of accuracy with a lightweight configuration (5.33 M parameters, 21 MB), minimal inference delay (70 ms per image), and reduced computational overhead. These characteristics ensure low power consumption and cost-effective deployment on mobile and edge devices, making the system both environmentally sustainable and scalable for agricultural applications.
At the system level, the integrated platform combines real-time inference, visual explanations, and expert-curated remediation recommendations within a mobile-friendly, responsive interface. This design lowers technical barriers and supports the adoption of intelligent, data-driven, and sustainable agricultural practices. Collectively, these findings establish SDA-CAH as a strong benchmark for both recognition accuracy and practical deployability, surpassing the compared baselines in accuracy, interpretability, and energy-efficient implementation for next-generation precision agriculture.
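The two algorithmic ingredients summarized in this section, weighted random sampling against class imbalance and MixUp blending, can be illustrated with a minimal standard-library sketch. It is not the authors' implementation. It shows plain inverse-frequency sampling and the standard MixUp rule (a Beta-distributed mixing coefficient), not the optimized, class-specific MixUp variant described in the paper. The function names, the toy 9:1 label distribution, and `alpha = 0.4` are illustrative assumptions.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Return one sampling weight per example: 1 / (size of its class)."""
    counts = Counter(labels)
    return [1.0 / counts[y] for y in labels]


def weighted_sample_indices(labels, num_samples, rng):
    """Draw indices with replacement so every class is equally likely per draw."""
    weights = inverse_frequency_weights(labels)
    return rng.choices(range(len(labels)), weights=weights, k=num_samples)


def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list of floats."""
    return [1.0 if c == label else 0.0 for c in range(num_classes)]


def mixup_pair(x_a, y_a, x_b, y_b, num_classes, alpha, rng):
    """Standard MixUp: blend two inputs and their one-hot labels.

    lam is drawn from Beta(alpha, alpha); alpha is a hypothetical setting here.
    """
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    t_a, t_b = one_hot(y_a, num_classes), one_hot(y_b, num_classes)
    y = [lam * a + (1.0 - lam) * b for a, b in zip(t_a, t_b)]
    return x, y, lam


if __name__ == "__main__":
    rng = random.Random(0)

    # Toy imbalanced label set: 90 majority-class and 10 minority-class examples.
    labels = [0] * 90 + [1] * 10
    idx = weighted_sample_indices(labels, 2000, rng)
    minority_share = sum(labels[i] for i in idx) / len(idx)
    print(f"minority share after resampling: {minority_share:.2f}")

    # Blend two toy "images" (flattened to two pixels each) and their labels.
    x, y, lam = mixup_pair([1.0, 0.0], 0, [0.0, 1.0], 1, 2, 0.4, rng)
    print(f"lam={lam:.3f}, mixed input={x}, soft label={y}")
```

In a PyTorch training pipeline, for instance, the same per-example weights would typically be passed to `torch.utils.data.WeightedRandomSampler` rather than sampled by hand. Whether the authors combine the two steps in exactly this order is not specified in this section.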

5.2. Limitations and Future Work

The SDA-CAH framework has several limitations: the lack of cross-dataset or in-field validation, reliance on the laboratory-biased PlantVillage dataset, the computational demands of the EfficientNet-B0 backbone, and network-dependent platform functionality. Even so, it demonstrates clear advantages over the compared baselines, including higher accuracy, a higher F1-score, better recognition of rare diseases, and improved interpretability through Grad-CAM visualizations. The current experiments were conducted exclusively on the PlantVillage dataset, which consists of highly curated, homogeneous samples with controlled illumination and minimal background noise. While this facilitates rigorous benchmarking, the reported results may overestimate real-world performance. Future work will prioritize validating the SDA-CAH framework on heterogeneous field data, such as real-time image streams from agricultural monitoring systems, to evaluate its generalization capability under natural, variable conditions. Additional directions include model compression or adoption of lightweight architectures for mobile deployment, offline application support, integration of multimodal data and expanded crop coverage, advanced explainable AI techniques, GIS-based region-specific treatment recommendations, and IoT-enabled real-time field monitoring. These advancements are expected to further enhance SDA-CAH’s robustness, practical utility, and global applicability, strengthening its role in advancing precision agriculture and sustainable crop management.

Author Contributions

Conceptualization, J.K. and R.W.; methodology, J.K.; software, J.K.; validation, J.K., R.W. and L.Z.; formal analysis, J.K.; investigation, J.K.; resources, J.K.; data curation, J.K.; writing—original draft preparation, J.K.; writing—review and editing, J.K. and R.W.; visualization, J.K.; supervision, R.W. and L.Z.; project administration, J.K.; funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Industrial Internet Ecological Intelligence Innovation and Application Platform (Grant No. 2020SNPT0055).

Data Availability Statement

Due to a confidentiality agreement, the dataset cannot be fully disclosed at present. Researchers who need access may contact the authors by email to request it.

Conflicts of Interest

The authors declare no conflicts of interest.
