Adaptive CNN Ensemble for Apple Detection: Enabling Sustainable Monitoring Orchard

Abstract

1. Introduction

- We propose a flexible framework for automated ensemble selection and optimization of CNN inference, specifically tailored to agricultural applications under variable environmental conditions.

- We integrate and benchmark eleven ensemble methods, dynamically configured via Pareto-based multi-objective optimization, an approach not yet fully explored for fruit detection.

- We introduce a novel decision-support framework for pre-deployment benchmarking that enables data-driven selection of neural network models and ensemble strategies before field integration. Instead of relying on conventional trial-and-error, it uses multi-objective Pareto optimization to identify the configuration that offers the best trade-off between accuracy and computational efficiency for a specific operational scenario (e.g., time of day, weather conditions, hardware constraints).

2. Related Works

2.1. Advancements in Single-Model Architectures

2.2. Ensemble and Advanced Methods

2.3. The Identified Research Gap and Our Contribution

3. Materials and Methods

3.1. Dataset Description

3.2. Algorithms and Models

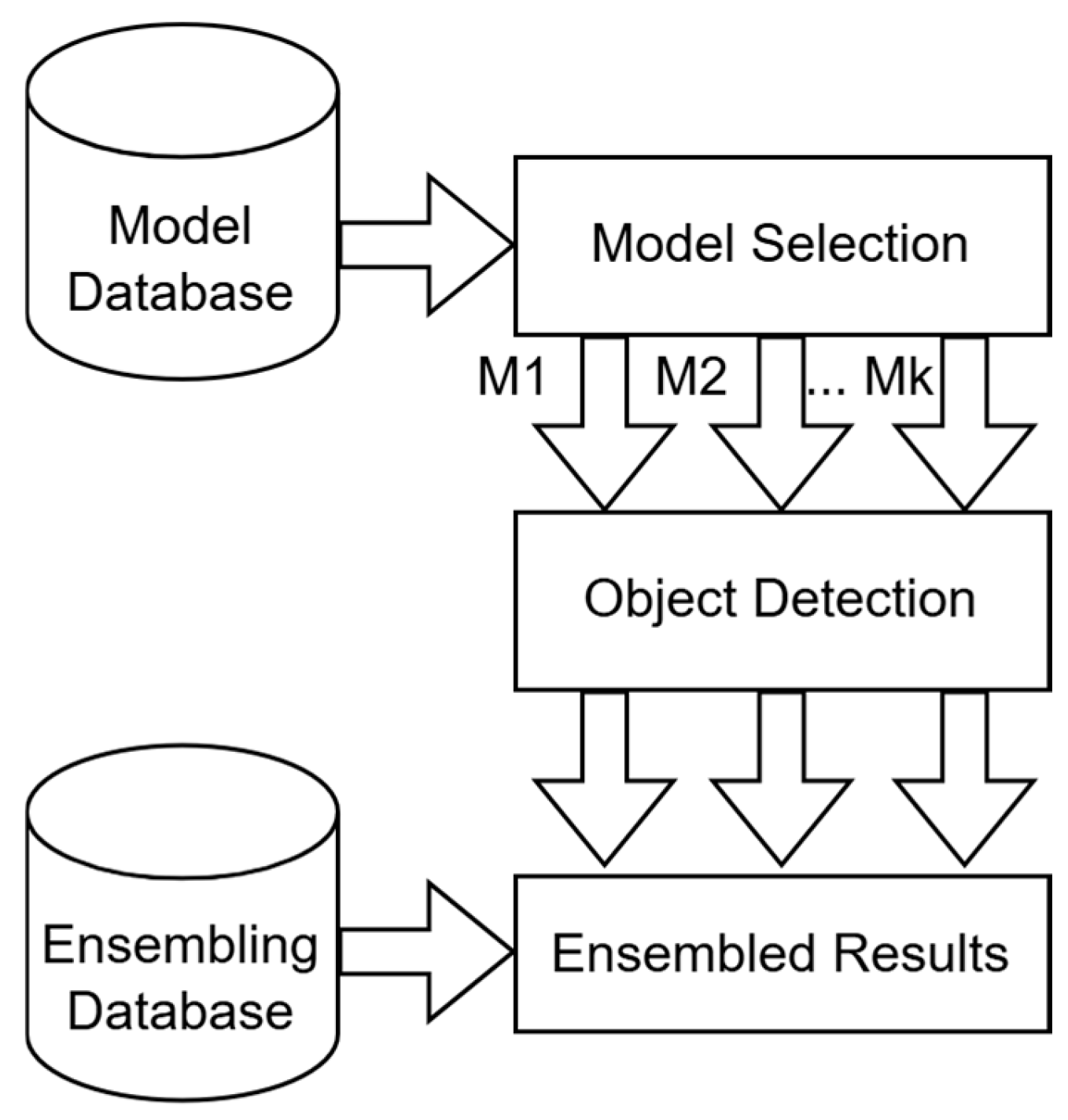

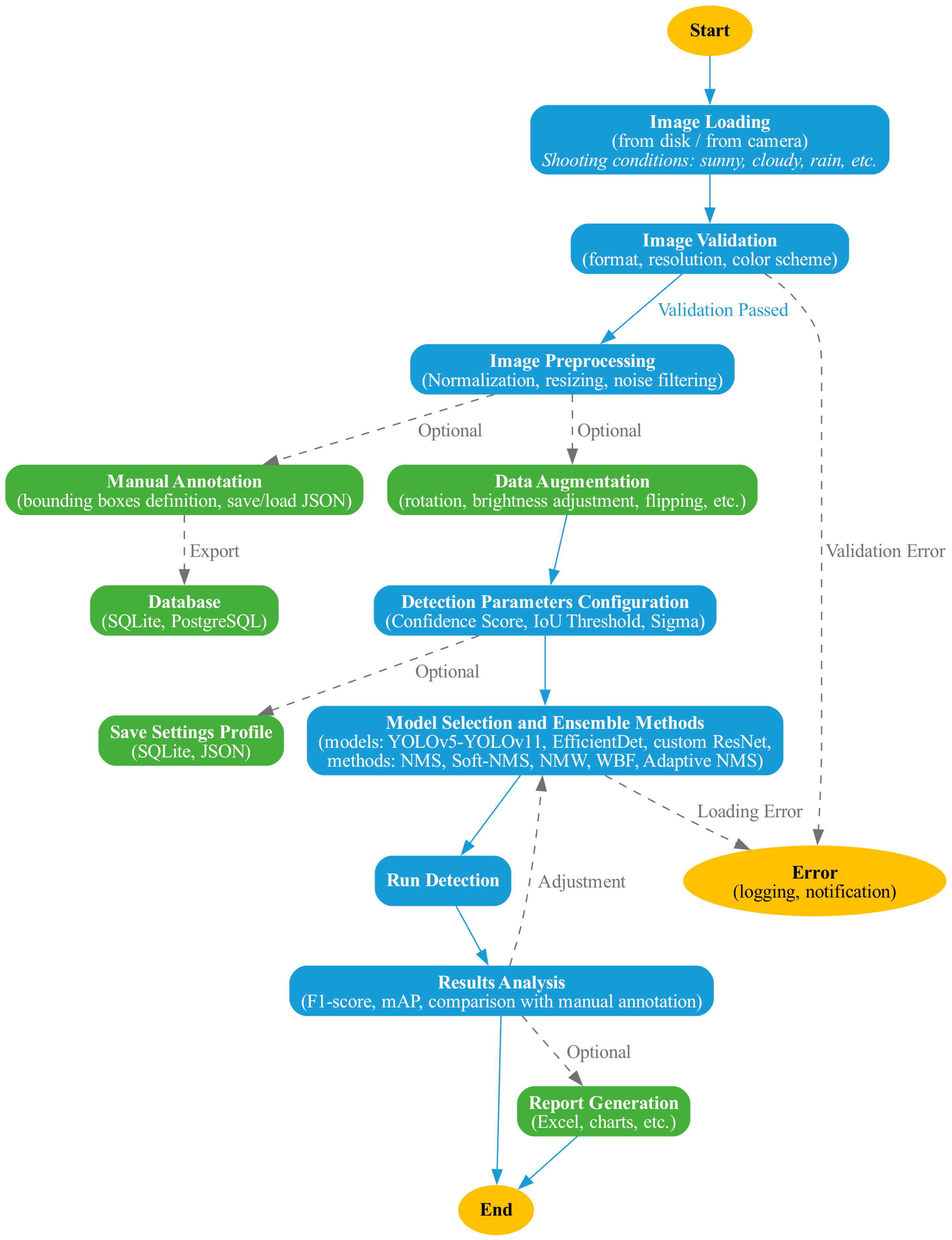

- Pre-deployment Benchmarking and Optimization Phase. In this phase, the system exhaustively evaluates a portfolio of individual models (e.g., YOLOv8, EfficientDet) and their combinations under 11 ensemble methods (NMS, Soft NMS, Non-Maximum Weighted (NMW), Weighted Boxes Fusion (WBF), Score Averaging, Weighted Averaging, IoU Voting, Consensus Fusion, Adaptive NMS, Test-Time Augmentation (TTA), and Bayesian Ensembling). The evaluation uses a small, representative sample from the target environment (e.g., a single image or a short video clip) to simulate the expected operating conditions. The optimal configuration (a single model, or a specific ensemble method with tuned hyperparameters) is then selected by Pareto-based multi-objective optimization that balances accuracy (mAP) against inference speed (FPS).

- Runtime Deployment Phase. Only the single best configuration identified in the first phase is deployed for continuous, real-time monitoring. Because the exhaustive evaluation of all candidate models and fusion algorithms is performed only once, during setup, field operation bears only the cost of the selected configuration, which keeps throughput high and latency low.
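The Pareto-based selection in the first phase can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: each candidate configuration is reduced to two objectives (mAP@0.5 and FPS), dominated candidates are discarded, and one configuration is then picked from the remaining front. The candidate figures are the mAP@0.5 and inference-time values reported in the model comparison table of this article, with FPS approximated as 1000 / latency in ms; the `Candidate` type, the `min_fps` budget, and the rule "most accurate front member that meets the budget" are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    map50: float       # mAP@0.5 in percent (higher is better)
    latency_ms: float  # per-image inference time in ms (lower is better)

    @property
    def fps(self) -> float:
        # Approximate throughput from single-image latency
        return 1000.0 / self.latency_ms


def dominates(a: Candidate, b: Candidate) -> bool:
    """a dominates b if it is no worse on both objectives and strictly better on one."""
    no_worse = a.map50 >= b.map50 and a.fps >= b.fps
    strictly_better = a.map50 > b.map50 or a.fps > b.fps
    return no_worse and strictly_better


def pareto_front(cands):
    """Keep only candidates that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands if o is not c)]


def select_config(cands, min_fps):
    """Hypothetical rule: the most accurate Pareto-optimal candidate that meets the FPS budget."""
    feasible = [c for c in pareto_front(cands) if c.fps >= min_fps]
    return max(feasible, key=lambda c: c.map50) if feasible else None


# mAP@0.5 and inference time taken from the model comparison table
CANDIDATES = [
    Candidate("YOLOv8n", 91.8, 12.8),
    Candidate("Rep-ViG-Apple", 92.7, 16.5),
    Candidate("AD-YOLO", 93.8, 14.7),
    Candidate("Adaptive Ensemble", 95.8, 18.9),
]

print([c.name for c in pareto_front(CANDIDATES)])  # ['YOLOv8n', 'AD-YOLO', 'Adaptive Ensemble']
print(select_config(CANDIDATES, min_fps=60).name)   # AD-YOLO
```

With these figures, Rep-ViG-Apple is dominated by AD-YOLO (higher mAP and lower latency), so the front contains YOLOv8n, AD-YOLO, and the ensemble. Tightening the FPS budget moves the choice from the ensemble toward the faster single models, which is the kind of scenario-dependent trade-off the benchmarking phase is meant to expose.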

The software implementation of the framework includes the following functions:

- auto_threshold_tuning: Automatically selects detection thresholds based on image characteristics and object density;

- extract_labels_and_scores: Extracts class labels and probabilities from model outputs;

- convert_json: Converts annotation formats, supporting JSON-to-CSV and vice versa;

- evaluate_detector: Evaluates a model on a validation subset and computes key metrics (e.g., precision, recall, and mAP);

- compute_map_metric: Calculates mean Average Precision (mAP) across multiple IoU thresholds;

- generate_recommendations: Provides decision support by analyzing the computed metrics and recommending the optimal models and ensembling strategies.
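As an illustration of what a function such as compute_map_metric calculates, here is a minimal single-class sketch (apple is the only class). It matches detections to ground truth greedily in order of descending score, computes all-point interpolated AP at each IoU threshold, and averages over the COCO-style thresholds 0.50:0.05:0.95. The function names and the (x1, y1, x2, y2) box format are assumptions, not the authors' code; established toolkits such as pycocotools use 101-point interpolation plus extra rules (crowd regions, area ranges, detection limits), so their values can differ slightly.

```python
import numpy as np


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, thr):
    """AP for one class at one IoU threshold.

    dets: list of (image_id, score, box); gts: dict image_id -> list of boxes.
    """
    n_gt = sum(len(v) for v in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {k: [False] * len(v) for k, v in gts.items()}
    tp = []
    for img, _, box in sorted(dets, key=lambda d: -d[1]):
        # Match to the unmatched ground-truth box with the highest IoU >= thr
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts.get(img, [])):
            o = iou(box, g)
            if o >= best_iou and not matched[img][j]:
                best_j, best_iou = j, o
        if best_j >= 0:
            matched[img][best_j] = True
        tp.append(1.0 if best_j >= 0 else 0.0)
    tp = np.array(tp, dtype=float)
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = tp_cum / n_gt
    precision = tp_cum / np.maximum(tp_cum + fp_cum, 1e-12)
    # All-point interpolation: precision envelope, then area under the PR curve
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def map_over_thresholds(dets, gts, thresholds=np.linspace(0.5, 0.95, 10)):
    """mAP@0.5:0.95 for a single class: mean AP over the IoU thresholds."""
    return float(np.mean([average_precision(dets, gts, t) for t in thresholds]))


gts = {"img1": [(0.0, 0.0, 10.0, 10.0)]}
print(map_over_thresholds([("img1", 0.9, (0.0, 0.0, 10.0, 7.2))], gts))  # IoU 0.72 -> 0.5
```

In the usage line, the detection overlaps the ground truth with IoU 0.72, so it counts as a true positive only at the five thresholds from 0.50 to 0.70, which is why mAP@0.5:0.95 is 0.5 even though mAP@0.5 would be 1.0.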

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cembrowska-Lech, D.; Krzemińska, A.; Miller, T.; Nowakowska, A.; Adamski, C.; Radaczyńska, M.; Mikiciuk, G.; Mikiciuk, M. An Integrated Multi-Omics and Artificial Intelligence Framework for Advance Plant Phenotyping in Horticulture. Biology 2023, 12, 1298.

- Korchagin, S.A.; Gataullin, S.T.; Osipov, A.V.; Smirnov, M.V.; Suvorov, S.V.; Serdechnyi, D.V.; Bublikov, K.V. Development of an Optimal Algorithm for Detecting Damaged and Diseased Potato Tubers Moving along a Conveyor Belt Using Computer Vision Systems. Agronomy 2021, 11, 1980.

- Andriyanov, N.A.; Dementiev, V.E.; Kargashin, Y.D. Analysis of the impact of visual attacks on the characteristics of neural networks in image recognition. Procedia Comput. Sci. 2021, 186, 495–502.

- Al Mudawi, N.; Qureshi, A.M.; Abdelhaq, M.; Alshahrani, A.; Alazeb, A.; Alonazi, M.; Algarni, A. Vehicle Detection and Classification via YOLOv8 and Deep Belief Network over Aerial Image Sequences. Sustainability 2023, 15, 14597.

- Kutyrev, A.; Andriyanov, N. Apple Flower Recognition Using Convolutional Neural Networks with Transfer Learning and Data Augmentation Technique. E3S Web Conf. 2024, 493, 01006.

- Wang, Y.; Lin, X.; Xiang, Z.; Su, W.-H. VM-YOLO: YOLO with VMamba for Strawberry Flowers Detection. Plants 2025, 14, 468.

- Yang, X.; Gao, Y.; Yin, M.; Li, H. Automatic Apple Detection and Counting with AD-YOLO and MR-SORT. Sensors 2024, 24, 7012.

- Sun, X.; He, L.; Jiang, H.; Li, R.; Mao, W.; Zhang, D.; Majeed, Y.; Andriyanov, N.; Soloviev, V.; Fu, L. Morphological estimation of primary branch length of individual apple trees during the deciduous period in modern orchard based on PointNet++. Comput. Electron. Agric. 2024, 220, 108873.

- Dai, M.; Dorjoy, M.M.H.; Miao, H.; Zhang, S. A New Pest Detection Method Based on Improved YOLOv5m. Insects 2023, 14, 54.

- Rathar, A.S.; Choudhury, S.; Sharma, A.; Nautiyal, P.; Shah, G. Empowering vertical farming through IoT and AI-Driven technologies: A comprehensive review. Heliyon 2024, 10, 1014.

- Muhammed, D.; Ahvar, E.; Ahvar, S.; Trocan, M.; Montpetit, M.J.; Ehsani, R. Artificial Intelligence of Things (AIoT) for smart agriculture: A review of architectures, technologies and solutions. J. Netw. Comput. Appl. 2024, 228, 103905.

- Khan, A.T.; Jensen, S.M. LEAF-Net: A Unified Framework for Leaf Extraction and Analysis in Multi-Crop Phenotyping Using YOLOv11. Agriculture 2025, 15, 196.

- Olguín-Rojas, J.C.; Vasquez, J.I.; López-Canteñs, G.d.J.; Herrera-Lozada, J.C.; Mota-Delfin, C. A Lightweight YOLO-Based Architecture for Apple Detection on Embedded Systems. Agriculture 2025, 15, 838.

- Mirhaji, H.; Soleymani, M.; Asakereh, A.; Mehdizadeh, S.A. Fruit detection and load estimation of an orange orchard using the YOLO models through simple approaches in different imaging and illumination conditions. Comput. Electron. Agric. 2021, 191, 106533.

- Nix, S.; Sato, A.; Madokoro, H.; Yamamoto, S.; Nishimura, Y.; Sato, K. Detection of Apple Trees in Orchard Using Monocular Camera. Agriculture 2025, 15, 564.

- Han, B.; Lu, Z.; Zhang, J.; Almodfer, R.; Wang, Z.; Sun, W.; Dong, L. Rep-ViG-Apple: A CNN-GCN Hybrid Model for Apple Detection in Complex Orchard Environments. Agronomy 2024, 14, 1733.

- Sekharamantry, P.K.; Melgani, F.; Malacarne, J.; Ricci, R.; de Almeida Silva, R.; Marcato Junior, J. A Seamless Deep Learning Approach for Apple Detection, Depth Estimation, and Tracking Using YOLO Models Enhanced by Multi-Head Attention Mechanism. Computers 2024, 13, 83.

- Amantay, N.; Mohamad, K. Deep Learning Based Apple Detection: A Comparative Analysis of CNN Architectures. In Proceedings of the 2025 IEEE 5th International Conference on Smart Information Systems and Technologies (SIST), Astana, Kazakhstan, 14–16 May 2025; pp. 1–7.

- Ma, L.; Zhao, L.; Wang, Z.; Zhang, J.; Chen, G. Detection and Counting of Small Target Apples under Complicated Environments by Using Improved YOLOv7-tiny. Agronomy 2023, 13, 1419.

- Lv, M.; Xu, Y.; Miao, Y.; Su, W. A Comprehensive Review of Deep Learning in Computer Vision for Monitoring Apple Tree Growth and Fruit Production. Sensors 2025, 25, 2433.

- Xiao, F.; Wang, H.; Xu, Y.; Zhang, R. Fruit Detection and Recognition Based on Deep Learning for Automatic Harvesting: An Overview and Review. Agronomy 2023, 13, 1625.

- Khosravi, H.; Saedi, S.I.; Rezaei, M. Real-Time Recognition of on-Branch Olive Ripening Stages by a Deep Convolutional Neural Network. Sci. Hortic. 2021, 287, 110252.

- Wang, D.; Li, C.; Song, H.; Xiong, H.; Liu, C.; He, D. Deep Learning Approach for Apple Edge Detection to Remotely Monitor Apple Growth in Orchards. IEEE Access 2020, 8, 26911–26925.

- Fu, L.; Gao, F.; Wu, J.; Li, R.; Karkee, M.; Zhang, Q. Application of Consumer RGB-D Cameras for Fruit Detection and Localization in Field: A Critical Review. Comput. Electron. Agric. 2020, 177, 105687.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. arXiv 2021, arXiv:2103.14030.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16×16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. arXiv 2024, arXiv:2401.09417.

- Abulfaraj, A.W.; Binzagr, F. A Deep Ensemble Learning Approach Based on a Vision Transformer and Neural Network for Multi-Label Image Classification. Big Data Cogn. Comput. 2025, 9, 39.

- Vyas, R.; Williams, B.M.; Rahmani, H.; Boswell-Challand, R.; Jiang, Z.; Angelov, P.; Black, S. Ensemble-Based Bounding Box Regression for Enhanced Knuckle Localization. Sensors 2022, 22, 1569.

- Andriyanov, N.; Tashlinsky, A.; Dementiev, V. Zero-Shot Detection in Satellite Images. In Proceedings of the 2025 Systems of Signal Synchronization, Generating and Processing in Telecommunications (SYNCHROINFO), Tyumen, Russia, 30 June–3 July 2025; pp. 1–5.

- Jung, S.; Song, A.; Lee, K.; Lee, W.H. Advanced Building Detection with Faster R-CNN Using Elliptical Bounding Boxes for Displacement Handling. Remote Sens. 2025, 17, 1247.

- Sun, L.; Chen, J.; Feng, D.; Xing, M. Parallel Ensemble Deep Learning for Real-Time Remote Sensing Video Multi-Target Detection. Remote Sens. 2021, 13, 4377.

- Abdullah, L.N.; Sidi, F.; Kurmashev, I.G.; Iklassova, K.E. Ensemble Deep Learning Approach for Apple Fruitlet Detection from Digital Images. Kozybayev N. Kazakhstan Univ. 2024, 4, 183–194.

- Suo, R.; Gao, F.; Zhou, Z.; Fu, L.; Song, Z.; Dhupia, J.; Li, R.; Cui, Y. Improved multi-classes kiwifruit detection in orchard to avoid collisions during robotic picking. Comput. Electron. Agric. 2021, 182, 106052.

- Zhao, Z.-A.; Wang, S.; Chen, M.-X.; Mao, Y.-J.; Chan, A.C.-H.; Lai, D.K.-H.; Wong, D.W.-C.; Cheung, J.C.-W. Enhancing Human Detection in Occlusion-Heavy Disaster Scenarios: A Visibility-Enhanced DINO (VE-DINO) Model with Reassembled Occlusion Dataset. Smart Cities 2025, 8, 12.

- Yang, Z.-X.; Li, Y.; Wang, R.-F.; Hu, P.; Su, W.-H. Deep Learning in Multimodal Fusion for Sustainable Plant Care: A Comprehensive Review. Sustainability 2025, 17, 5255.

- Melnychenko, O.; Ścisło, Ł.; Savenko, O.; Sachenko, A.; Radiuk, P. Intelligent Integrated System for Fruit Detection Using Multi-UAV Imaging and Deep Learning. Sensors 2024, 24, 1913.

- Sapkota, R.; Ahmed, D.; Karkee, M. Comparing YOLOv8 and Mask R-CNN for Instance Segmentation in Complex Orchard Environments. Artif. Intell. Agric. 2024, 13, 84–99.

- Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote Sens. 2021, 13, 1619.

- Chen, J.; Liu, H.; Zhang, Y.; Zhang, D.; Ouyang, H.; Chen, X. A Multiscale Lightweight and Efficient Model Based on YOLOv7: Applied to Citrus Orchard. Plants 2022, 11, 3260.

- Li, J.; Tang, Y.; Zou, X.; Lin, G.; Wang, H. Detection of Fruit-Bearing Branches and Localization of Litchi Clusters for Vision-Based Harvesting Robots. IEEE Access 2020, 8, 117746–117758.

- Barbedo, J.G.A. Impact of Dataset Size and Variety on the Effectiveness of Deep Learning and Transfer Learning for Plant Disease Classification. Comput. Electron. Agric. 2018, 153, 46–53.

- Quiroz, I.A.; Alférez, G.H. Image Recognition of Legacy Blueberries in a Chilean Smart Farm through Deep Learning. Comput. Electron. Agric. 2020, 168, 105044.

- Vasconez, J.P.; Delpiano, J.; Vougioukas, S.; Auat Cheein, F. Comparison of Convolutional Neural Networks in Fruit Detection and Counting: A Comprehensive Evaluation. Comput. Electron. Agric. 2020, 173, 105348.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 87–110.

- Kambhatla, C.; Sharma, M. A Comprehensive Review of YOLO Architectures in Computer Vision. Vision 2024, 5, 83.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L. Soft-NMS—Improving Object Detection with One Line of Code. arXiv 2017, arXiv:1704.04503.

- Solovyev, R.; Wang, W.; Gabruseva, T. Weighted boxes fusion: Ensembling boxes from different object detection models. Image Vis. Comput. 2021, 107, 104117.

- Liu, Y.; Zhang, X.; Wang, Y. Multi-Attribute NMS: An Enhanced Non-Maximum Suppression for Crowded Scenes. Appl. Sci. 2023, 13, 8073.

- Sharifuzzaman, S.A.S.M.; Tanveer, J.; Chen, Y.; Chan, J.H.; Kim, H.S.; Kallu, K.D.; Ahmed, S. Bayes R-CNN: An Uncertainty-Aware Bayesian Approach to Object Detection in Remote Sensing Imagery for Enhanced Scene Interpretation. Remote Sens. 2024, 16, 2405.

- Chen, Y.; Shi, J.; Ye, Z.; Mertz, C.; Ramanan, D.; Kong, S. Multimodal Object Detection via Probabilistic Ensembling. arXiv 2021, arXiv:2104.02904.

- Vilhelm, A.; Limbert, M.; Audebert, C.; Ceillier, T. Ensemble Learning techniques for object detection in high-resolution satellite images. arXiv 2022, arXiv:2202.10554.

- Khort, D.O.; Kutyrev, A.; Smirnov, I.; Andriyanov, N.; Filippov, R.; Chilikin, A.; Astashev, M.E.; Molkova, E.A.; Sarimov, R.M.; Matveeva, T.A.; et al. Enhancing Sustainable Automated Fruit Sorting: Hyperspectral Analysis and Machine Learning Algorithms. Sustainability 2024, 16, 10084.

| Shooting Condition | Number of Images | Average Apples per Image | Condition Features |
|---|---|---|---|
| Clear, Morning | 850 | 12.3 | Soft morning light, long shadows, moderate contrast |
| Clear, Day | 920 | 16.7 | High contrast, sunlight glare, saturated colors |
| Overcast | 780 | 14.9 | Diffused lighting, no shadows, reduced color gradient |
| Fog | 510 | 9.1 | Blurred contours, low local contrast, light veil |
| Rain (Light/Heavy) | 620 | 10.2 | Glare, raindrops on lens, partial dimming |
| Evening/Sunset | 470 | 13.5 | Warm spectrum, pronounced shadows |
| Night | 350 | 7.6 | Black-and-white silhouettes, no color information |
| Wind/Cloudy | 390 | 12.0 | Shifted contours, motion blur, unstable background |
| Total | 4890 | ~12 | - |
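The "~12" in the Total row can be checked directly against the per-condition rows. The short sketch below computes both the plain mean of the eight per-condition averages and the image-weighted mean; it assumes the per-condition averages are exact values rather than rounded figures, and the variable names are illustrative only.

```python
# (number of images, average apples per image) per shooting condition, from the dataset table
CONDITIONS = {
    "Clear, Morning": (850, 12.3),
    "Clear, Day": (920, 16.7),
    "Overcast": (780, 14.9),
    "Fog": (510, 9.1),
    "Rain (Light/Heavy)": (620, 10.2),
    "Evening/Sunset": (470, 13.5),
    "Night": (350, 7.6),
    "Wind/Cloudy": (390, 12.0),
}

total_images = sum(n for n, _ in CONDITIONS.values())
# Plain mean of the per-condition averages: every condition counts equally
unweighted_mean = sum(avg for _, avg in CONDITIONS.values()) / len(CONDITIONS)
# Image-weighted mean: estimated total number of apples divided by total images
weighted_mean = sum(n * avg for n, avg in CONDITIONS.values()) / total_images

print(total_images)              # 4890
print(f"{unweighted_mean:.2f}")  # 12.04
print(f"{weighted_mean:.2f}")    # 12.70
```

The image count matches the Total row, and "~12" agrees with the unweighted mean; weighting by the number of images gives about 12.7 apples per image, because the well-populated daytime conditions also have the densest scenes.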

| Method | Disadvantages | Advantages |
|---|---|---|
| NMS | Loss of detections for partially occluded objects | Simplicity, speed |
| Soft NMS | Dependence on parameter σ | Preservation of overlapping objects |
| NMW | Sensitivity to confidence score values, computational complexity | Weighted averaging of coordinates, precise box positioning |
| WBF | Computational complexity | Accounting for model weights, coordinate accuracy |
| Score Averaging | Ignoring differences in model reliability | Ease of implementation, stabilization of scores |
| Weighted Averaging | Requires prior weight calibration | Considering model reliability via weighting |
| IoU Voting | Ineffective at low IoU values | Noise robustness |
| Consensus Fusion | Loss of detections with insufficient agreement | Improved reliability through detection agreement |
| Adaptive NMS | Requires parameter calibration | Adaptiveness to object density |
| TTA | Increased processing time | Improved detection robustness via augmentation |
| Bayesian Ensembling | Computational and parameter tuning complexity | Accounting for uncertainty, adjusting model confidence |
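The first two rows of this table can be made concrete with a NumPy sketch of classic NMS and the Gaussian variant of Soft NMS described by Bodla et al. NMS deletes every box whose IoU with a higher-scoring box exceeds a threshold, which is how partially occluded neighbouring apples get lost; Soft NMS instead decays their scores by exp(-IoU²/σ), so its behaviour depends on σ. The box format (x1, y1, x2, y2), the 0.5 IoU threshold, σ = 0.5, and the 0.001 score cut-off are illustrative defaults, not the settings used in this study.

```python
import numpy as np


def iou_one_to_many(box, boxes):
    """IoU between one box and an (N, 4) array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def nms(boxes, scores, iou_thr=0.5):
    """Classic NMS: keep the top box, drop every remaining box that overlaps it too much."""
    order = np.argsort(-scores)
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        order = rest[iou_one_to_many(boxes[i], boxes[rest]) <= iou_thr]
    return keep


def soft_nms(boxes, scores, sigma=0.5, score_thr=0.001):
    """Gaussian Soft-NMS: decay overlapping scores instead of deleting the boxes."""
    scores = np.array(scores, dtype=float)  # work on a copy
    idx = np.arange(len(scores))
    keep = []
    while idx.size > 0:
        top = idx[np.argmax(scores[idx])]
        keep.append(int(top))
        idx = idx[idx != top]
        if idx.size == 0:
            break
        ious = iou_one_to_many(boxes[top], boxes[idx])
        scores[idx] *= np.exp(-(ious ** 2) / sigma)
        idx = idx[scores[idx] > score_thr]  # discard boxes whose score has decayed away
    return keep, scores


# Two overlapping apples (IoU about 0.67) and one isolated apple
boxes = np.array([[0, 0, 10, 10], [2, 0, 12, 10], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))       # [0, 2]
print(soft_nms(boxes, scores)[0])  # [0, 2, 1]
```

Here NMS discards the second apple because its IoU with the first exceeds 0.5, whereas Soft NMS keeps it with its score lowered from 0.8 to about 0.33; a smaller σ would suppress it more aggressively.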

| Shooting Conditions | NMS | Soft NMS | NMW | WBF | Score Averaging | Weighted Averaging | IoU Voting | Consensus Fusion | Adaptive NMS | TTA | Bayesian Ensembling |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Wind, cloudiness | 0.859 | 0.893 | 0.864 | 0.924 | 0.865 | 0.890 | 0.858 | 0.887 | 0.889 | 0.908 | 0.917 |
| Evening/Sunset | 0.861 | 0.874 | 0.855 | 0.915 | 0.889 | 0.897 | 0.883 | 0.893 | 0.884 | 0.927 | 0.943 |
| Rain (Light/Heavy) | 0.881 | 0.887 | 0.872 | 0.957 | 0.916 | 0.922 | 0.943 | 0.963 | 0.966 | 0.949 | 0.935 |
| Night | 0.867 | 0.859 | 0.74 | 0.937 | 0.943 | 0.943 | 0.948 | 0.935 | 0.957 | 0.968 | 0.946 |
| Overcast | 0.885 | 0.905 | 0.912 | 0.923 | 0.874 | 0.921 | 0.856 | 0.923 | 0.870 | 0.944 | 0.909 |
| Fog | 0.862 | 0.932 | 0.903 | 0.959 | 0.865 | 0.909 | 0.865 | 0.854 | 0.947 | 0.887 | 0.966 |
| Clear, Day | 0.966 | 0.950 | 0.875 | 0.872 | 0.872 | 0.887 | 0.913 | 0.902 | 0.885 | 0.923 | 0.867 |
| Clear, Morning | 0.869 | 0.969 | 0.857 | 0.935 | 0.954 | 0.922 | 0.954 | 0.922 | 0.895 | 0.938 | 0.852 |
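The per-condition table is what motivates adaptive selection: no single fusion method wins everywhere. The sketch below reads the table as data and compares the best fixed method (highest mean over all conditions) with picking the best method separately for each condition. The extracted header does not name the metric, so the values are treated simply as "higher is better" accuracy scores; this is an accuracy-only view and ignores the speed objective that the framework's Pareto step also weighs. All names are illustrative.

```python
METHODS = ["NMS", "Soft NMS", "NMW", "WBF", "Score Averaging", "Weighted Averaging",
           "IoU Voting", "Consensus Fusion", "Adaptive NMS", "TTA", "Bayesian Ensembling"]

# Per-condition scores copied from the table (one value per method, same order as METHODS)
TABLE = {
    "Wind, cloudiness":   [0.859, 0.893, 0.864, 0.924, 0.865, 0.890, 0.858, 0.887, 0.889, 0.908, 0.917],
    "Evening/Sunset":     [0.861, 0.874, 0.855, 0.915, 0.889, 0.897, 0.883, 0.893, 0.884, 0.927, 0.943],
    "Rain (Light/Heavy)": [0.881, 0.887, 0.872, 0.957, 0.916, 0.922, 0.943, 0.963, 0.966, 0.949, 0.935],
    "Night":              [0.867, 0.859, 0.74, 0.937, 0.943, 0.943, 0.948, 0.935, 0.957, 0.968, 0.946],
    "Overcast":           [0.885, 0.905, 0.912, 0.923, 0.874, 0.921, 0.856, 0.923, 0.870, 0.944, 0.909],
    "Fog":                [0.862, 0.932, 0.903, 0.959, 0.865, 0.909, 0.865, 0.854, 0.947, 0.887, 0.966],
    "Clear, Day":         [0.966, 0.950, 0.875, 0.872, 0.872, 0.887, 0.913, 0.902, 0.885, 0.923, 0.867],
    "Clear, Morning":     [0.869, 0.969, 0.857, 0.935, 0.954, 0.922, 0.954, 0.922, 0.895, 0.938, 0.852],
}


def best_method(condition):
    """Highest-scoring method for one shooting condition."""
    row = TABLE[condition]
    i = max(range(len(row)), key=row.__getitem__)
    return METHODS[i], row[i]


def best_fixed_method():
    """Single method with the highest mean score across all conditions."""
    means = {m: sum(row[j] for row in TABLE.values()) / len(TABLE) for j, m in enumerate(METHODS)}
    name = max(means, key=means.get)
    return name, means[name]


def oracle_mean():
    """Mean score when the best method is chosen separately for every condition."""
    return sum(best_method(c)[1] for c in TABLE) / len(TABLE)


for cond in TABLE:
    print(f"{cond:20s} -> {best_method(cond)}")
print(best_fixed_method(), round(oracle_mean(), 3))
```

With the table values, the best fixed method is TTA with a mean of about 0.93, while choosing per condition (WBF, Bayesian Ensembling, Adaptive NMS, TTA, NMS, or Soft NMS depending on the scene) raises the mean to about 0.96.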

| Model | Precision (%) | Recall (%) | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Inference Time (ms) |
|---|---|---|---|---|---|
| YOLOv8n (2024) | 91.2 ± 0.8 (95% CI [90.1, 92.3]) | 89.5 ± 1.0 (95% CI [87.6, 91.4]) | 91.8 ± 0.7 (95% CI [90.6, 93.0]) | 69.4 ± 1.2 (95% CI [67.1, 71.7]) | 12.8 |
| Rep-ViG-Apple (2024) | 92.5 ± 0.6 (95% CI [91.4, 93.6]) | 90.1 ± 0.9 (95% CI [88.5, 91.7]) | 92.7 ± 0.8 (95% CI [91.2, 94.2]) | 71.3 ± 1.1 (95% CI [69.2, 73.4]) | 16.5 |
| AD-YOLO (2024) | 93.0 ± 0.9 (95% CI [91.3, 94.7]) | 91.7 ± 0.8 (95% CI [90.1, 93.3]) | 93.8 ± 0.7 (95% CI [92.5, 95.1]) | 72.6 ± 1.0 (95% CI [70.6, 74.6]) | 14.7 |
| Proposed Adaptive Ensemble (Ours) | 95.4 ± 0.5 (95% CI [94.5, 96.3]) | 94.1 ± 0.6 (95% CI [93.0, 95.2]) | 95.8 ± 0.4 (95% CI [95.0, 96.6]) | 74.8 ± 0.7 (95% CI [73.5, 76.1]) | 18.9 |

| Model | Precision (%) | Recall (%) | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Significance |
|---|---|---|---|---|---|
| YOLOv8n (2024) | 91.2 ± 0.8 (95% CI [90.1, 92.3]) | 89.5 ± 1.0 (95% CI [87.6, 91.4]) | 91.8 ± 0.7 (95% CI [90.6, 93.0]) | 69.4 ± 1.2 (95% CI [67.1, 71.7]) | – |
| EfficientDet-D1 (2024) | 92.8 ± 0.6 (95% CI [91.7, 93.9]) | 90.4 ± 0.8 (95% CI [88.9, 91.9]) | 93.1 ± 0.5 (95% CI [92.1, 94.1]) | 71.8 ± 0.9 (95% CI [70.0, 73.6]) | – |
| RT-DETR (R-50) | 90.5 ± 1.1 (95% CI [88.4, 92.6]) | 88.1 ± 1.2 (95% CI [85.7, 90.5]) | 91.0 ± 0.8 (95% CI [89.4, 92.6]) | 74.1 ± 1.0 (95% CI [72.1, 76.1]) | – |
| Proposed Adaptive Ensemble | 95.4 ± 0.5 (95% CI [94.5, 96.3]) | 94.1 ± 0.6 (95% CI [93.0, 95.2]) | 95.8 ± 0.4 (95% CI [95.0, 96.6]) | 74.8 ± 0.7 (95% CI [73.5, 76.1]) | p < 0.05 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kutyrev, A.; Andriyanov, N.; Khort, D.; Smirnov, I.; Zubina, V. Adaptive CNN Ensemble for Apple Detection: Enabling Sustainable Monitoring Orchard. AgriEngineering 2025, 7, 369. https://doi.org/10.3390/agriengineering7110369