A Review of Crop Attribute Monitoring Technologies for General Agricultural Scenarios

Abstract

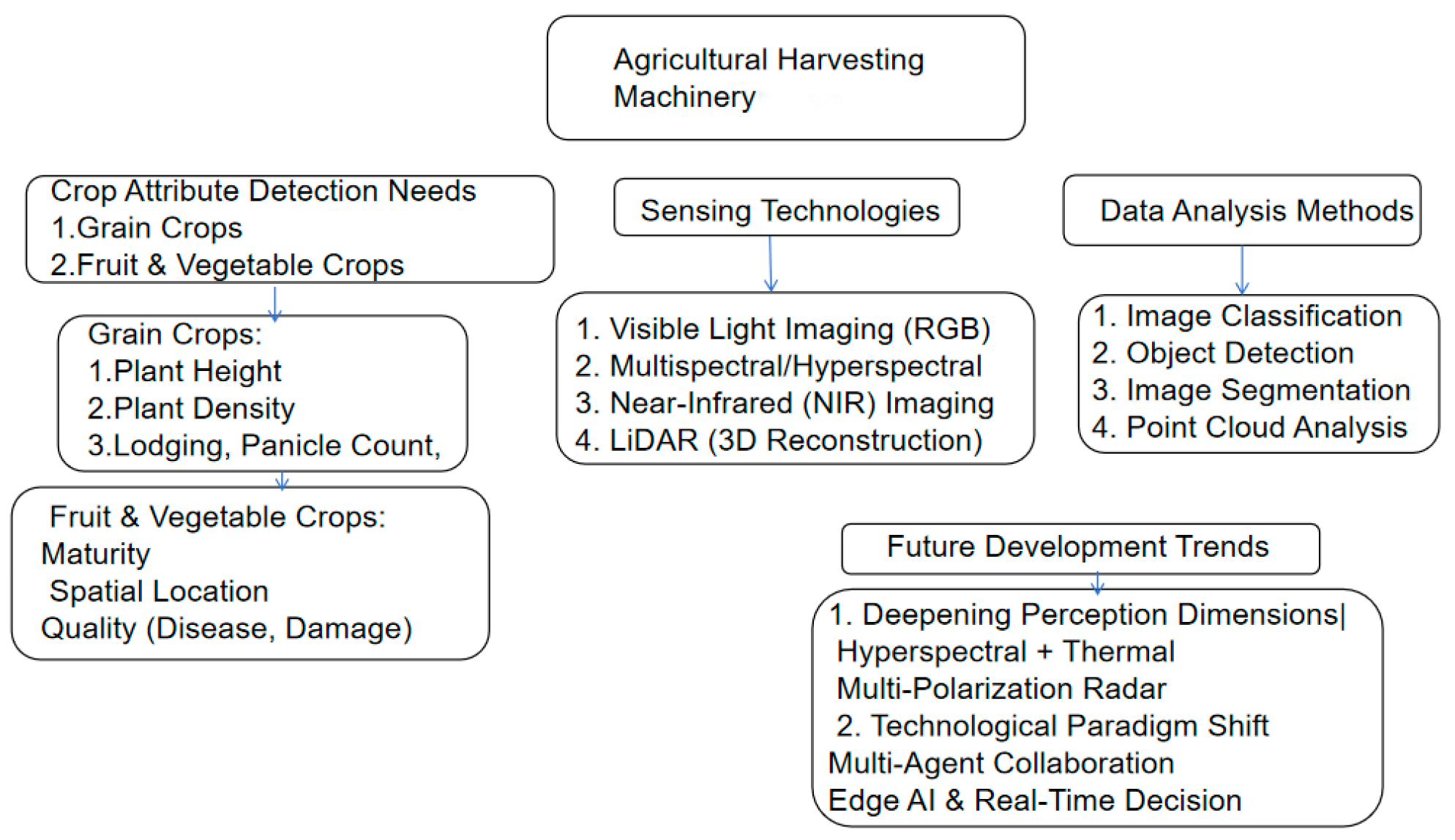

1. Introduction

2. Crop Attributes

2.1. Grain Crop Attributes

2.1.1. Crop Height

2.1.2. Plant Density

2.2. Fruit and Vegetable Crop Attributes

2.2.1. Maturity

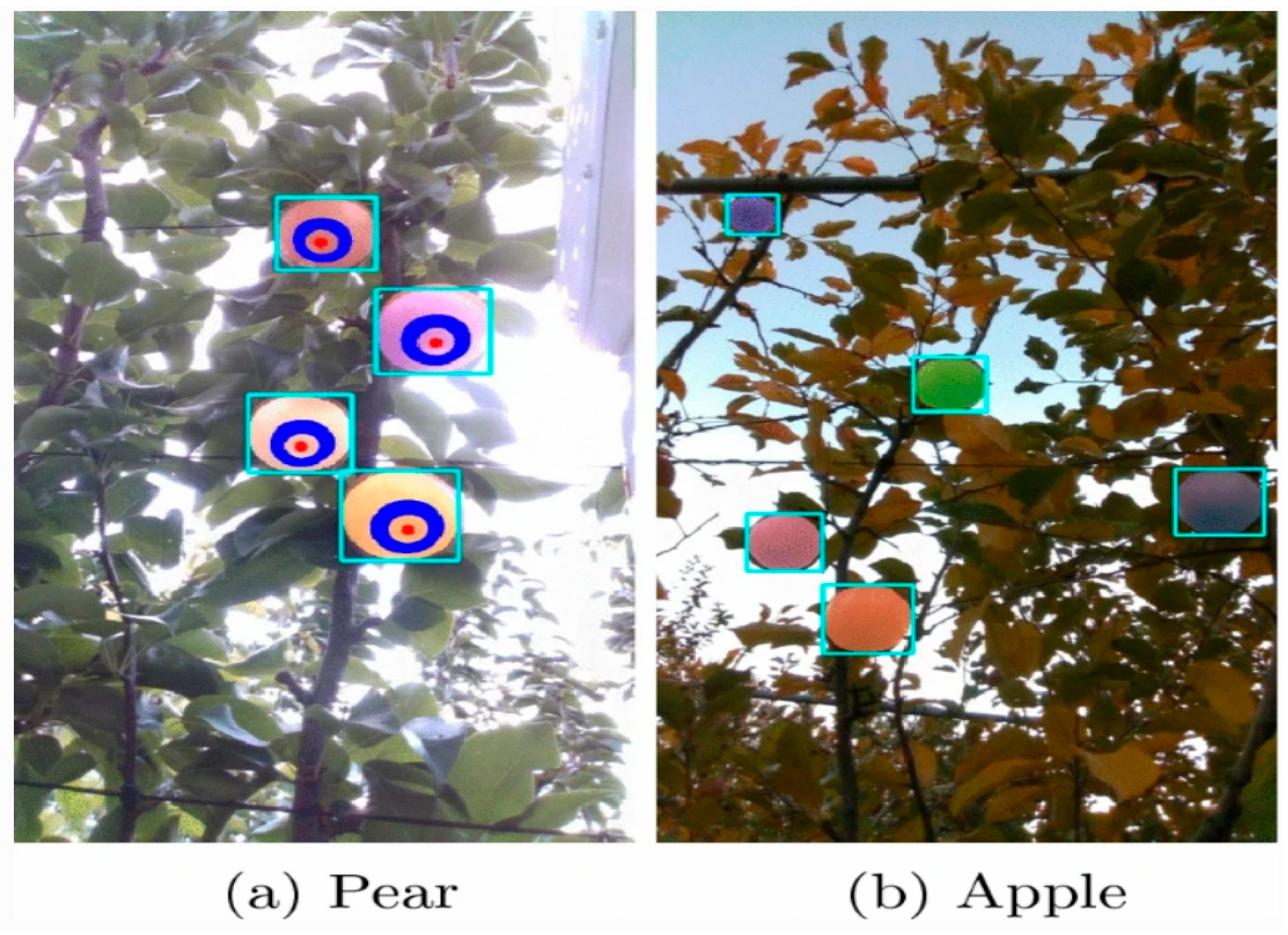

2.2.2. Plant and Fruit Location

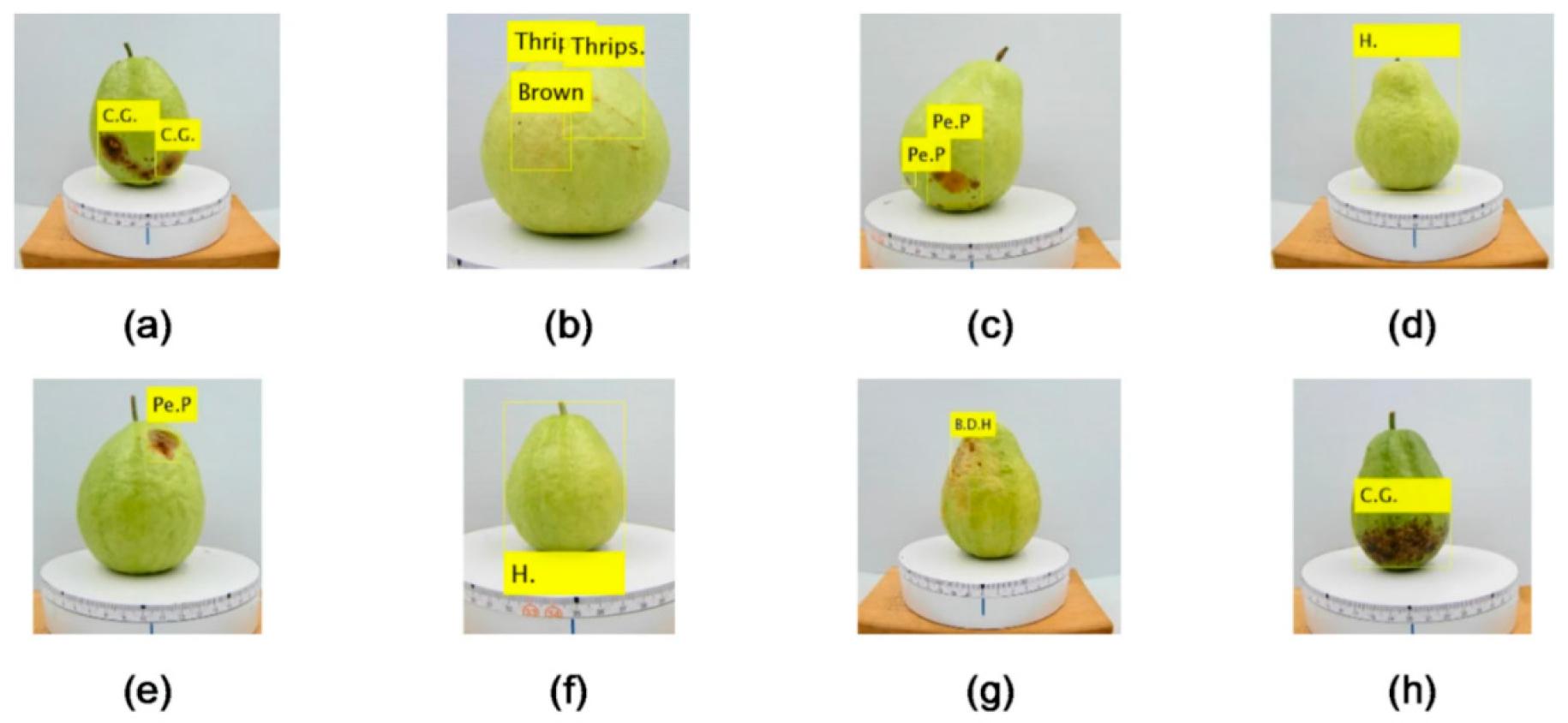

2.2.3. Crop Quality

3. Techniques for Crop Attribute Sensing

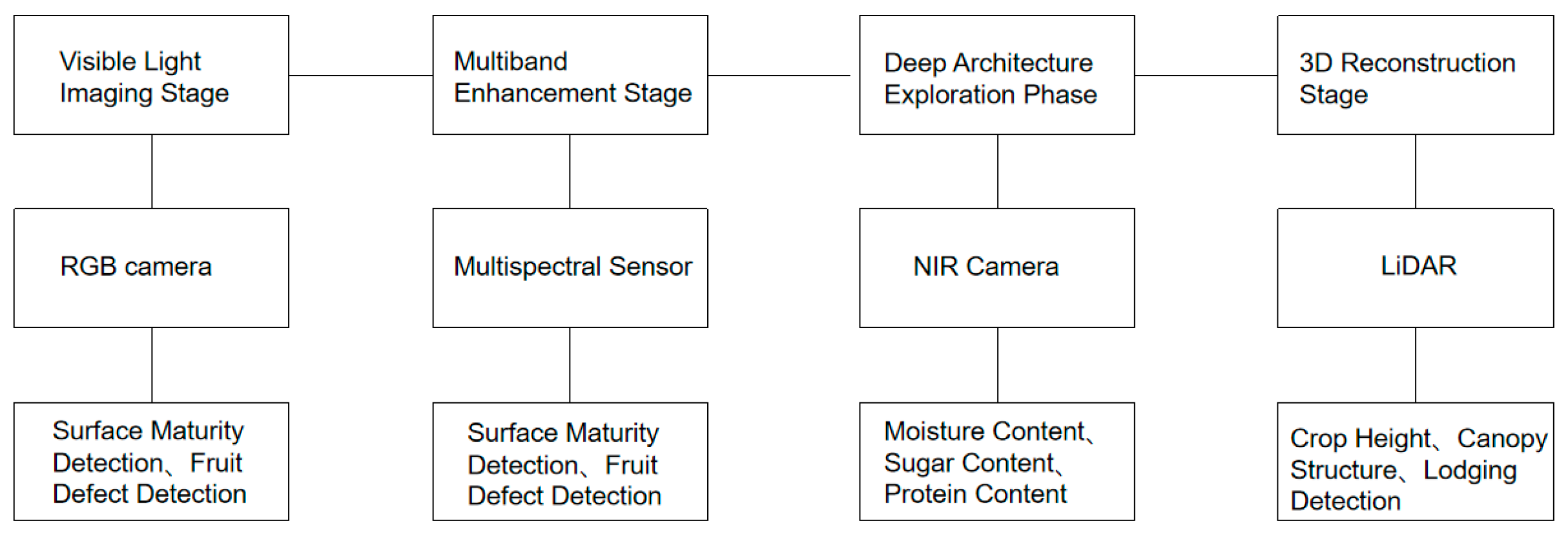

3.1. Data Sensor

3.1.1. Visible Light Imaging Stage: RGB Camera

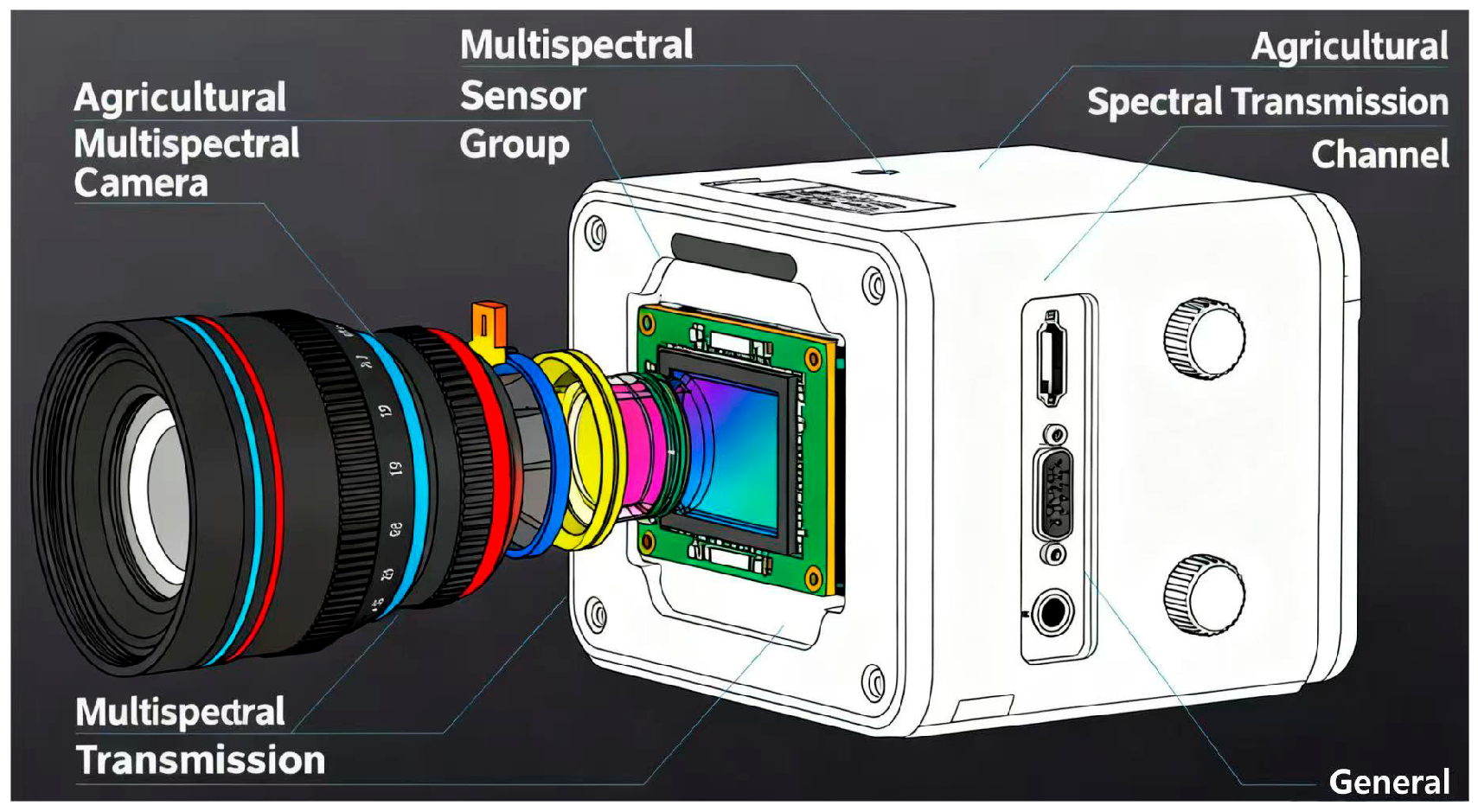

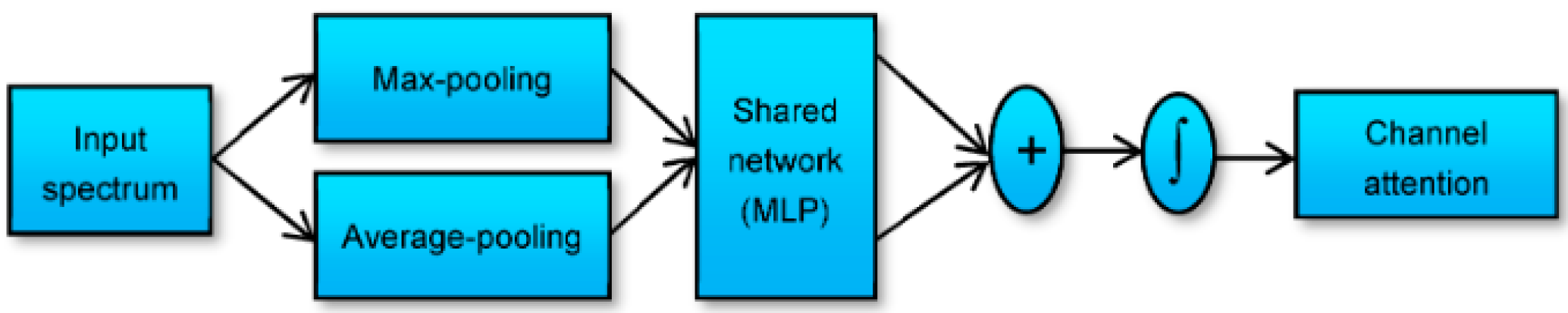

3.1.2. Multiband Enhancement Stage: Multispectral and Hyperspectral Sensors

3.1.3. Subsurface Structure Probing Stage: Near-Infrared (NIR) Imaging

3.1.4. Three-Dimensional Reconstruction Stage: LiDAR

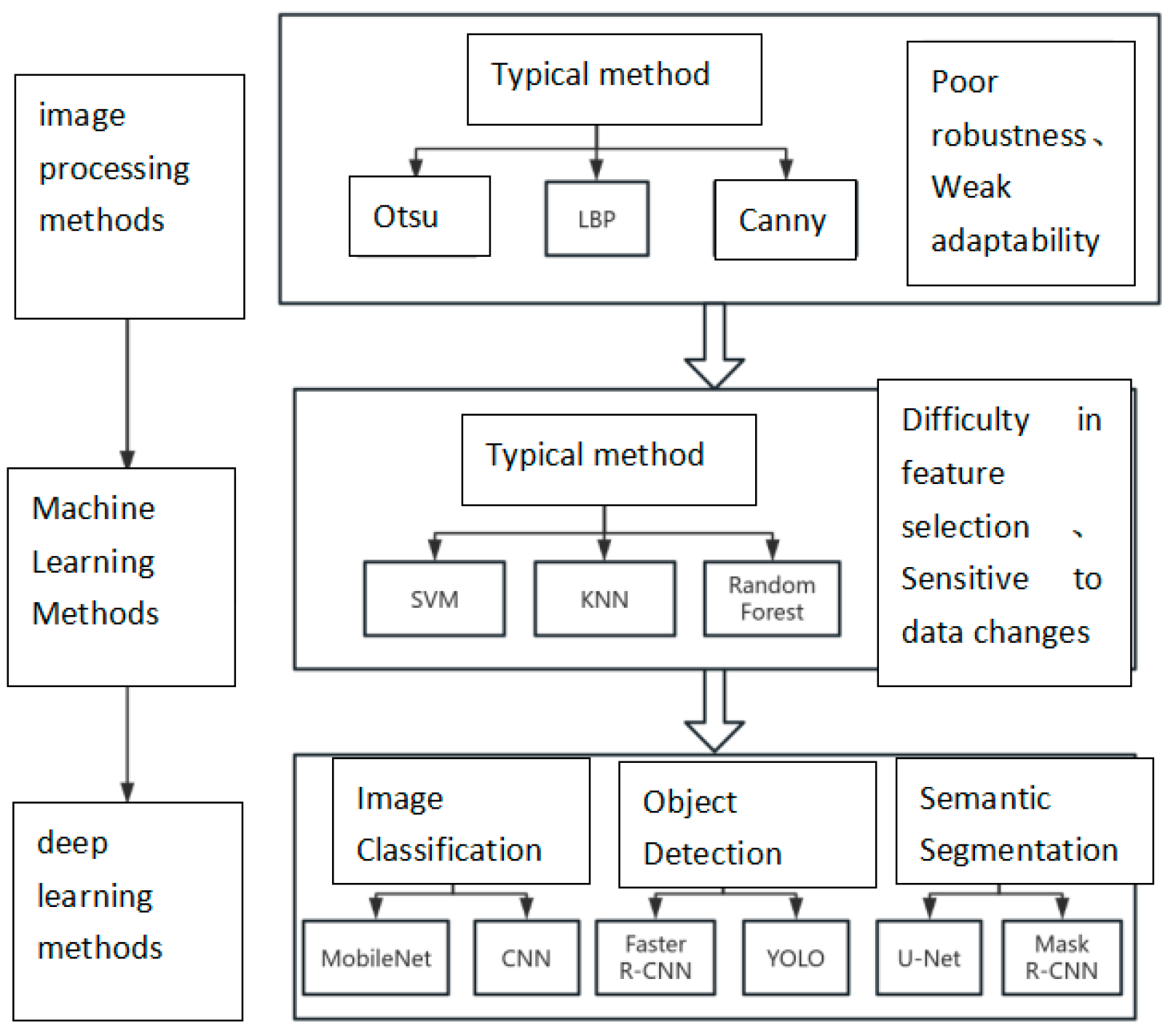

3.2. Data Analysis

3.2.1. Image Classification

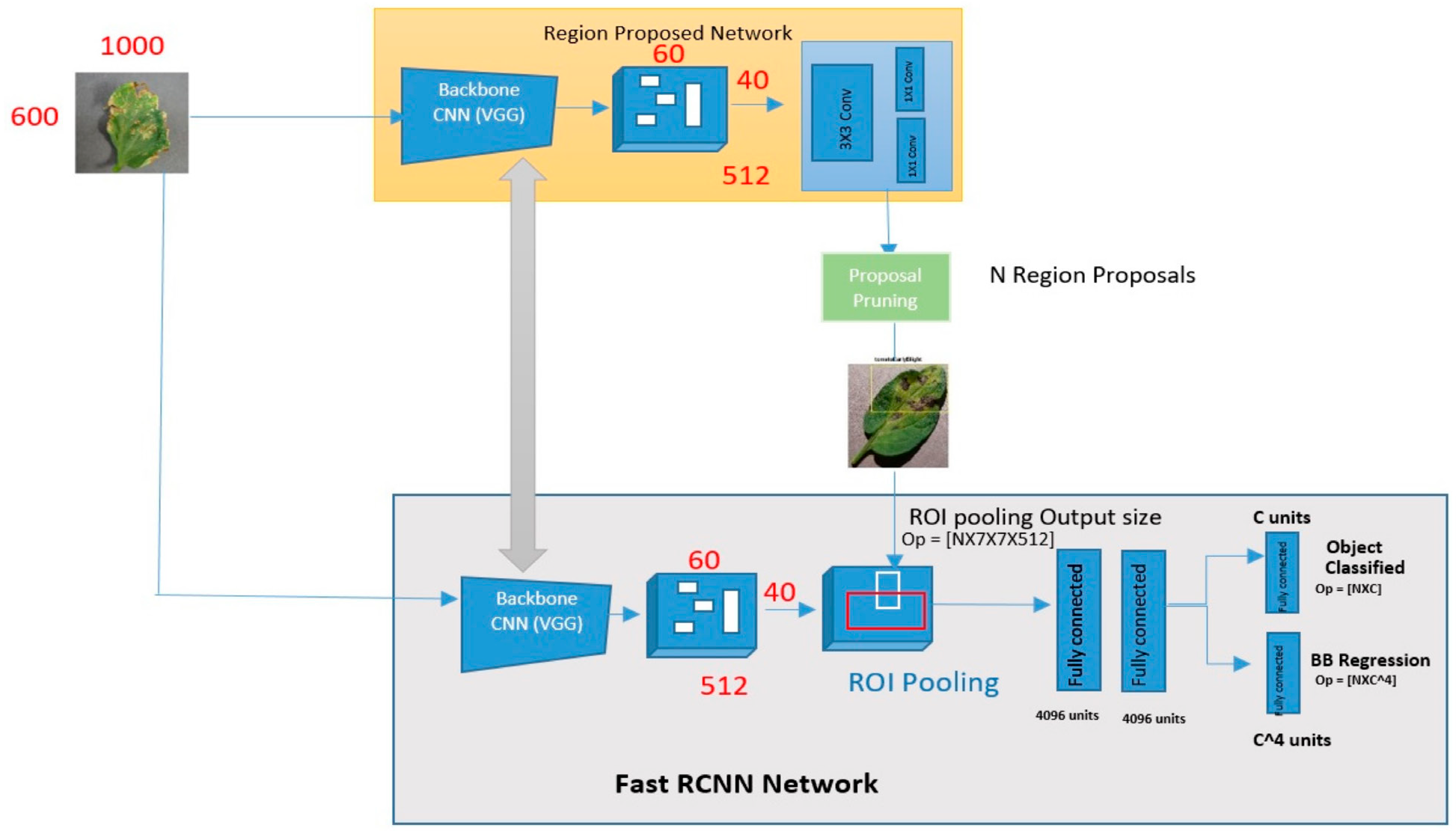

3.2.2. Object Detection

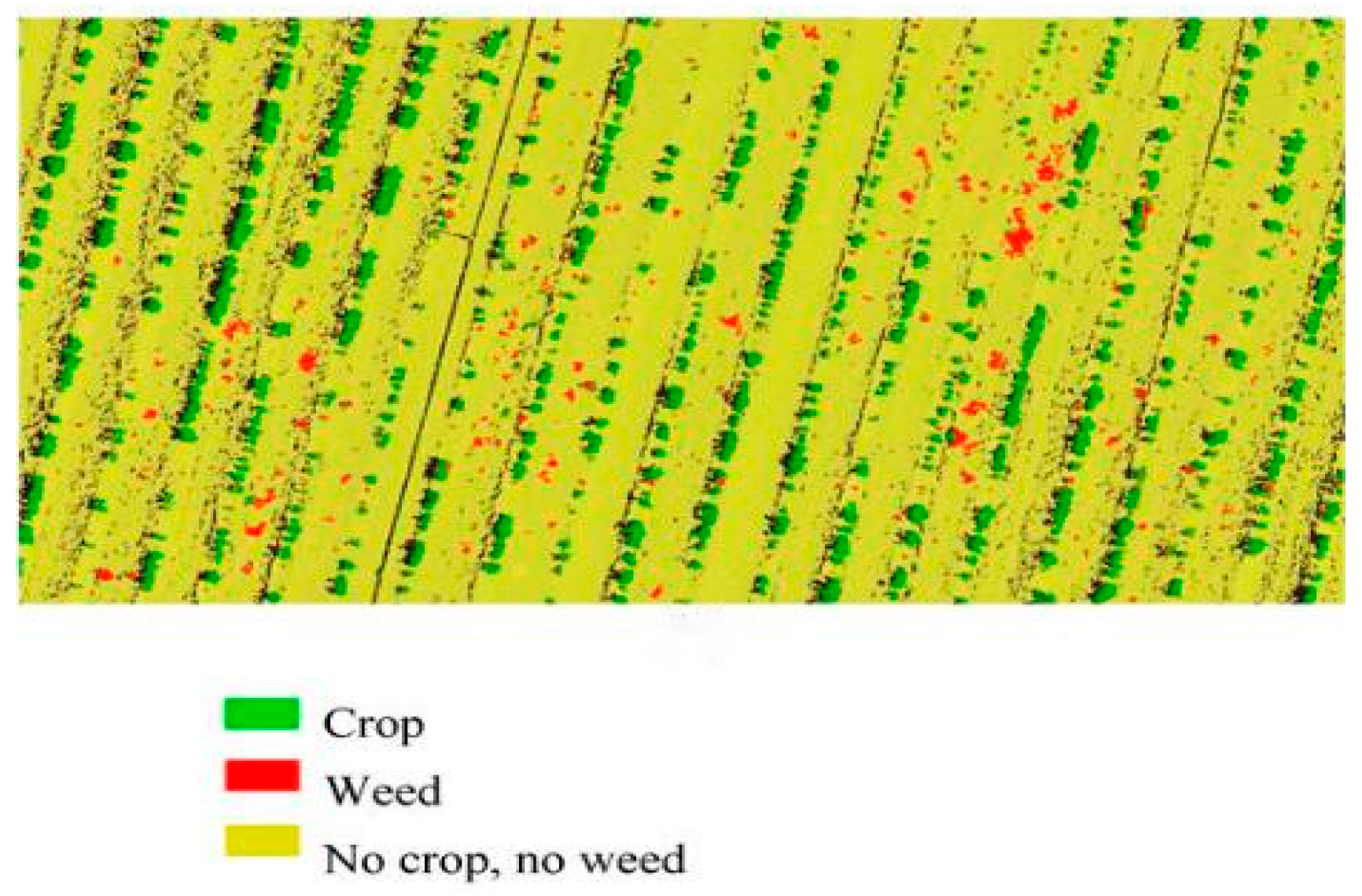

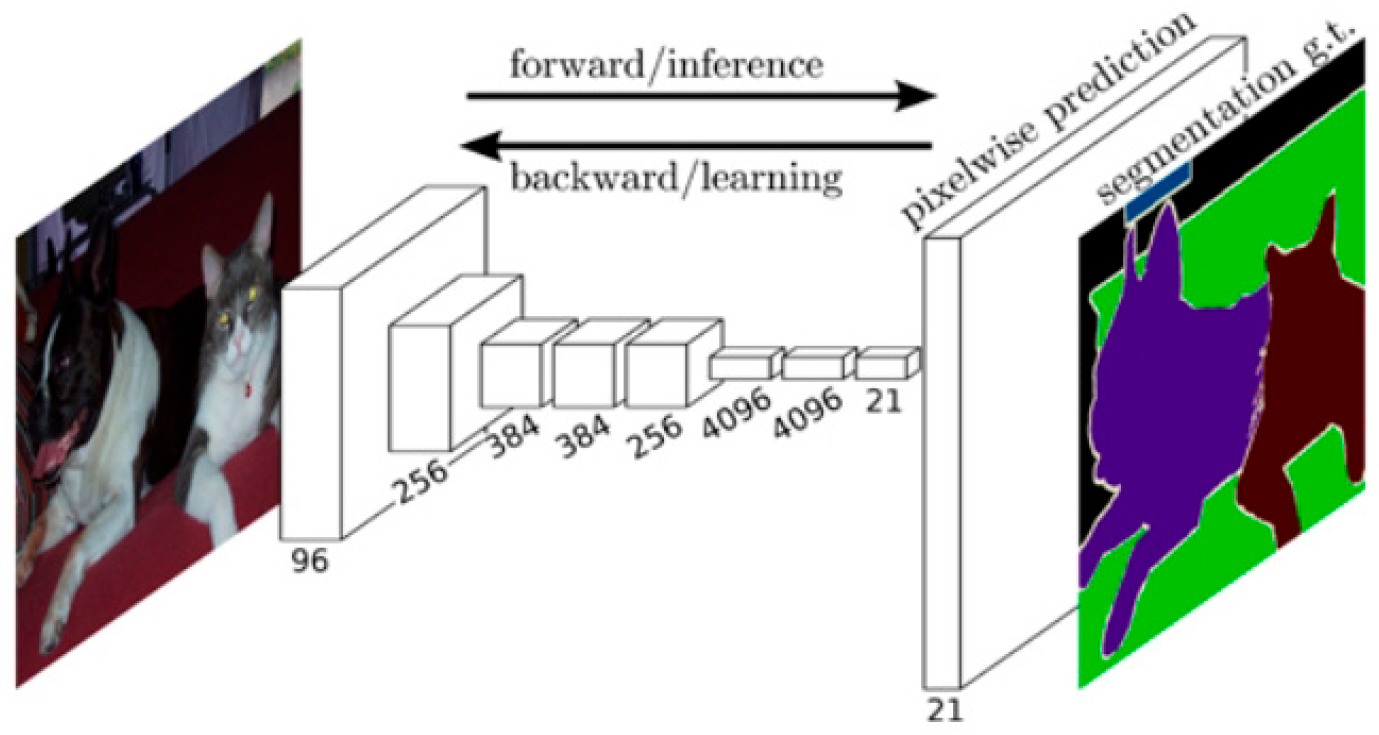

3.2.3. Image Segmentation

3.2.4. Point Cloud Analysis

3.2.5. Other Methods

4. Future Development Trends

4.1. Deepening of Perception Dimensions: From Macroscopic Phenomena to Microscopic Mechanisms

4.1.1. Hyperspectral Imaging + Fluorescence/Thermal Imaging Fusion

- From hyperspectral images, extract the reflectance of specific bands, vegetation indices (such as NDVI, PRI), and biochemical parameters (chlorophyll content, anthocyanin concentration, water content, etc.) obtained through spectral inversion models;

- From fluorescence images, acquire key indicators reflecting photosynthetic efficiency, such as the maximum quantum yield (Fv/Fm) of photosystem II (PSII) and non-photochemical quenching (NPQ);

- From thermal images, obtain features closely related to transpiration and water status, such as canopy temperature distribution and temperature stress index.

- Hyperspectral data provide rich information on chemical composition, enabling fine discrimination between the spectral fingerprints of different substances;

- Fluorescence data sensitively and dynamically reveal the operating status of the photosynthetic apparatus and its immediate response to stress;

- Thermal data intuitively reflect the transpiration cooling effect of crop canopies and the degree of water deficit from the perspective of energy balance.
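The indicators named in the lists above have widely used closed-form definitions. The sketch below, in plain Python, computes them for a single pixel or canopy region and collects them into one fused feature record. The function names, the 531 nm and 570 nm bands used for PRI, the sample values, and the choice of the crop water stress index (CWSI) as the "temperature stress index" are illustrative assumptions, not details taken from the reviewed studies.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def ndvi(nir, red):
    """Normalized difference vegetation index from NIR and red reflectance."""
    return normalized_difference(nir, red)


def pri(r531, r570):
    """Photochemical reflectance index (assumed bands: 531 nm and 570 nm)."""
    return normalized_difference(r531, r570)


def fv_fm(f0, fm):
    """Maximum quantum yield of PSII: (Fm - F0) / Fm, assuming fm > 0."""
    return (fm - f0) / fm


def npq(fm, fm_prime):
    """Non-photochemical quenching: (Fm - Fm') / Fm', assuming fm_prime > 0."""
    return (fm - fm_prime) / fm_prime


def cwsi(t_canopy, t_wet, t_dry):
    """Crop water stress index from canopy, wet- and dry-reference temperatures.

    Assumes t_dry > t_wet. Values near 0 suggest a well-watered canopy,
    values near 1 suggest strong water deficit.
    """
    return (t_canopy - t_wet) / (t_dry - t_wet)


def fuse_features(nir, red, r531, r570, f0, fm, fm_prime, t_canopy, t_wet, t_dry):
    """Collect hyperspectral, fluorescence, and thermal indicators in one record."""
    return {
        "ndvi": ndvi(nir, red),
        "pri": pri(r531, r570),
        "fv_fm": fv_fm(f0, fm),
        "npq": npq(fm, fm_prime),
        "cwsi": cwsi(t_canopy, t_wet, t_dry),
    }


if __name__ == "__main__":
    # Hypothetical measurements for one canopy region.
    record = fuse_features(
        nir=0.5, red=0.1, r531=0.12, r570=0.08,
        f0=200.0, fm=1000.0, fm_prime=500.0,
        t_canopy=28.0, t_wet=24.0, t_dry=32.0,
    )
    for name, value in record.items():
        print(f"{name}: {value:.3f}")
```

A record of this kind is only the simplest form of feature-level fusion; a downstream model would still need to weight or combine the three sources.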

4.1.2. Multi-Polarization and Multi-Band Penetrating Perception

4.2. Technological Paradigm Shift: Agricultural Intelligent Agents with a “Perception-Decision-Execution” Closed Loop

4.2.1. Multi-Agent Collaborative Perception System

4.2.2. Edge Computing and Real-Time Decision-Making

- Adopting compact network architectures: Replacing traditional deep networks with compact architectures (e.g., MobileNet, ShuffleNet, and SqueezeNet) to drastically reduce the number of parameters and computational load;

- Pruning technology: Removing redundant connections or channels in the model while preserving its core feature extraction capabilities;

- Quantization technology: Converting the weights and activation values of the model from 32-bit floating-point numbers to 8-bit integers, which cuts storage roughly fourfold and reduces computational overhead, usually with only a small loss of accuracy.
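Two of the compression steps listed above can be illustrated on a flat list of weights. The following is a minimal sketch in plain Python, assuming unstructured magnitude pruning and symmetric per-tensor int8 quantization. Both are common variants chosen here for illustration; the function names and sample weights are hypothetical, and real deployments would rely on a framework's own pruning and quantization tools.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127].

    Returns the integer codes and the scale needed to recover the floats.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    # Hypothetical weights from one small layer.
    weights = [0.5, -0.01, 0.02, -1.27, 0.9, 0.003]
    pruned = prune_by_magnitude(weights, sparsity=0.5)
    codes, scale = quantize_int8(pruned)
    restored = dequantize(codes, scale)
    worst = max(abs(a - b) for a, b in zip(pruned, restored))
    print("pruned:  ", pruned)
    print("codes:   ", codes)
    print("max reconstruction error:", worst)
```

With this scheme, the rounding error of each retained weight is bounded by half the scale, which is one way to see why accuracy often degrades only slightly.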

5. Conclusions

6. Review Methodology

6.1. Databases and Search Strategy

6.2. Keywords

6.3. Selection Criteria

6.3.1. Inclusion Criteria

6.3.2. Exclusion Criteria

6.4. Time Span

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sensing Technology | Core Working Principle | Advantages | Disadvantages | Typical Applications |
|---|---|---|---|---|
| RGB Camera | Captures visible light (R/G/B bands) to generate color images; extracts appearance features (color, shape, texture) | Low cost and easy to operate | Limited to visible spectrum (cannot detect internal crop attributes) | Corn stem diameter detection (combines RGB-D depth information to extract contours) |
| | | High image clarity for surface feature recognition | Susceptible to lighting variations (e.g., shadows in hilly terrain) and background clutter | Corn disease classification (removes background interference via deep learning) |
| | | Widely compatible with edge devices (e.g., harvesters, UAVs) | | Fruit counting and surface defect identification (e.g., tomato skin blemishes) |
| Multispectral Sensor | Captures 4–10 discrete bands (including NIR); calculates vegetation indices (e.g., NDVI) to invert crop status | Balances data richness and computational efficiency | Discrete bands limit fine-grained attribute detection (e.g., cannot distinguish sugar-acid ratio) | Sugarcane hail damage mapping (uses NDVI threshold model) |
| | | Suitable for large-scale field monitoring (e.g., UAV-mounted) | Accuracy declines under dense canopy occlusion (common in hilly orchards) | Wheat group health assessment (inverts nitrogen content via NDVI) |
| | | Low power consumption (long endurance for hilly terrain surveys) | | Hilly field crop distribution mapping (UAV-based multispectral imaging) |
| Hyperspectral Sensor | Captures dozens to hundreds of continuous narrow bands; obtains “spectral fingerprints” for biochemical composition analysis | High spectral resolution (detects early stress and internal attributes) | High cost and large data volume (heavy computational burden) | Processing tomato maturity grading (combines NIR hyperspectral imaging with RNN, R² > 0.87) |
| | | Enables non-destructive testing (e.g., fruit sugar content, hidden lesions) | Slow imaging speed (hard to match high-speed harvest operations) | Early apple disease detection (extracts lesion reflectance features across bands) |
| | | | Sensitive to atmospheric scattering (hilly fog affects data quality) | Wheat protein content inversion (spectral absorption at specific wavelengths) |
| Near-Infrared (NIR) Camera | Detects 700–1400 nm band; uses absorption characteristics of water, sugar, and protein to invert internal physiological parameters | Penetrates crop tissues (detects internal attributes like hidden bruises) | Limited penetration depth (ineffective for thick-skinned crops like citrus) | Corn leaf water/nitrogen content in situ imaging (uses NIR + interference filters) |
| | | Fast response (suitable for real-time harvest monitoring) | Susceptible to ambient temperature (hilly diurnal temperature variation interferes with data) | Apple early bruise detection (combines NIR imaging with adaptive threshold segmentation) |
| | | Lower cost than hyperspectral sensors | | Grain moisture content measurement (guides harvester drying system adjustment) |
| LiDAR (Light Detection and Ranging) | Emits laser pulses; calculates distance via time-of-flight to generate 3D point clouds for spatial structure modeling | High spatial precision (cm-level accuracy for plant height, fruit location) | High cost (especially multi-line LiDAR) | Soybean plant height estimation (UAV-LiDAR point cloud + ground truth validation) |
| | | Unaffected by lighting (works in low-light hilly mornings/evenings) | Point cloud noise (hilly terrain vibrations cause data distortion) | Apple orchard organ segmentation (PointNeXt network, branch counting accuracy 93.4%) |
| | | Captures 3D canopy structure (resolves occlusion issues) | Large data storage requirements (needs edge computing for real-time processing) | Wheat lodging angle detection (analyzes point cloud surface normal variation) |
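The multispectral rows above rely on vegetation indices such as NDVI and on an "NDVI threshold model" for damage mapping. As a minimal sketch of that idea, the snippet below computes the standard index NDVI = (NIR − Red) / (NIR + Red) per pixel and flags pixels that fall below a cutoff. The function names and the cutoff value are assumptions made for illustration; they are not taken from the cited studies, and a production pipeline would normally operate on georeferenced rasters with an array library rather than nested lists.

```python
def ndvi(nir, red):
    """NDVI for one pixel: (NIR - Red) / (NIR + Red).

    Returns 0.0 when both reflectances are zero to avoid division by zero.
    """
    total = nir + red
    return (nir - red) / total if total != 0 else 0.0


def ndvi_map(nir_band, red_band):
    """Apply NDVI pixel by pixel to two equally sized 2D reflectance grids."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


def below_threshold(index_map, threshold):
    """Flag pixels whose index is below the cutoff (hypothetical value,
    to be calibrated against field observations for each crop)."""
    return [[value < threshold for value in row] for row in index_map]


# Toy 1x2 scene: one vigorous pixel and one pixel with equal NIR and red
nir_band = [[0.8, 0.3]]
red_band = [[0.2, 0.3]]
index = ndvi_map(nir_band, red_band)
flags = below_threshold(index, threshold=0.4)  # 0.4 is an illustrative cutoff
```

In this toy scene the first pixel has an NDVI of about 0.6 and is not flagged, while the second has an NDVI of 0 and is flagged as potentially damaged or non-vegetated.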

| Data Analysis Method | Core Task Objective | Advantages | Disadvantages | Typical Applications |
|---|---|---|---|---|
| Image Classification | Maps entire images to predefined categories (e.g., “mature/immature,” “healthy/diseased”) | Simple model structure (easy to deploy on edge devices) | Cannot locate targets (only outputs overall image labels) | Tomato maturity grading (MobileNet model + Lab color space b channel) |
| | | Fast inference speed (meets harvest real-time requirements, <100 ms) | Poor performance in complex backgrounds (hilly weed interference reduces accuracy) | Wheat variety identification (adds attention mechanism to resist background clutter) |
| | | Effective for batch attribute screening | | Crop health status batch screening (UAV RGB image classification) |
| Object Detection | Locates multiple targets and outputs “category + bounding box” (e.g., fruit position, spike count) | Integrates localization and classification (supports harvest path planning) | Higher computational cost than image classification | Grape cluster counting (pruned YOLOv5s, balances speed and accuracy) |
| | | Adaptable to multi-target scenarios (e.g., dense fruit clusters) | Small target detection accuracy declines (e.g., wheat spikelets in hilly wind-blown canopies) | Corn ear localization (Faster R-CNN, resolves leaf occlusion) |
| Image Segmentation | Performs pixel-level semantic labeling (e.g., “fruit/leaf/branch,” “diseased area/healthy area”) | High spatial resolution (extracts fine-grained regions like small lesions) | Complex model training (needs large-scale pixel-level annotations) | Wheat stripe rust lesion segmentation (Attention U-Net, accuracy > 90%) |
| | | Enables quantitative analysis (e.g., lodging area ratio) | Slow inference (hard to match high-speed harvesters) | Rice lodging area mapping (semantic segmentation + lodging angle statistics) |
| | | | | Overlapping apple separation (Mask R-CNN, supports robotic arm grasping) |
| Point Cloud Analysis | Processes 3D point clouds to extract spatial structure attributes (e.g., plant height, canopy porosity) | Captures 3D geometric features (resolves 2D image occlusion issues) | High data preprocessing requirements (needs denoising, downsampling) | Corn canopy structure analysis (voxel grid division + porosity calculation) |
| | | Unaffected by color/lighting (stable in hilly variable environments) | Deep learning models are complex (e.g., PointNet requires large training datasets) | Fruit tree height prediction (CHM from LiDAR DSM-DTM, R² = 0.987) |
| Tensor Decomposition | Processes multi-dimensional data (e.g., time-series hyperspectral + meteorological data) to extract coupled features | Preserves spatiotemporal correlations (captures dynamic crop growth trends) | Requires prior knowledge of tensor structure (poor adaptability to new crops) | Corn ear development monitoring (3D tensor: time + space + spectrum) |
| | | Reduces data dimensionality (alleviates computational burden) | Sensitive to missing data (hilly sensor malfunctions cause errors) | Multi-modal data fusion (visible light + thermal infrared + fluorescence images) |
| Graph Neural Networks (GNNs) | Models spatial topological relationships (e.g., fruit-branch connections, plant competition) | Captures non-Euclidean data features (e.g., grape cluster adjacency) | Dependent on graph construction quality (poor topology leads to errors) | Winter wheat yield prediction (ASTGNN, fuses remote sensing + soil data, R² = 0.70) |
| | | Supports small-sample learning (reduces annotation cost) | Slow inference for large-scale fields (hilly large orchards need parallel computing) | Grape berry integrity assessment (constructs fruit connection graphs) |
| | | | | Crop population competition analysis (models plant interaction via graph edges) |
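Two of the point cloud entries above lend themselves to a short worked example: the canopy height model (CHM) obtained as the difference between a digital surface model (DSM) and a digital terrain model (DTM), and a voxel-grid porosity measure. The sketch below is illustrative only. The function names are hypothetical, and the porosity definition used here (the share of empty voxels inside the bounding grid of the points) is an assumption, since the table does not specify how the cited work computes it.

```python
import math


def canopy_height_model(dsm, dtm):
    """CHM = DSM - DTM per grid cell, with negative heights clamped to 0.

    dsm and dtm are equally sized 2D grids of elevations in metres.
    """
    return [
        [max(surface - terrain, 0.0) for surface, terrain in zip(s_row, t_row)]
        for s_row, t_row in zip(dsm, dtm)
    ]


def voxel_porosity(points, voxel_size):
    """Fraction of empty voxels in the axis-aligned grid spanned by the points.

    Assumed definition: porosity = 1 - occupied_voxels / total_voxels.
    points is a non-empty sequence of (x, y, z) coordinates.
    """
    mins = [min(p[i] for p in points) for i in range(3)]
    # Map every point to the integer index of the voxel that contains it
    occupied = {
        tuple(math.floor((p[i] - mins[i]) / voxel_size) for i in range(3))
        for p in points
    }
    dims = [max(v[i] for v in occupied) + 1 for i in range(3)]
    total = dims[0] * dims[1] * dims[2]
    return 1.0 - len(occupied) / total


# Toy data: a 1x2 elevation grid and a two-point cloud
chm = canopy_height_model([[105.0, 103.0]], [[100.0, 104.0]])
porosity = voxel_porosity([(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)], voxel_size=1.0)
```

With these toy inputs the CHM is 5 m in the first cell and 0 m in the second (the negative difference is clamped), and the two points occupy 2 of the 8 voxels in their bounding grid, giving a porosity of 0.75. Real point clouds would first need the denoising and downsampling noted in the table.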

| Dimension | Keywords |
|---|---|
| Study Context | Hilly regions, Mountainous areas, Uneven terrain |
| Crop Attributes | Crop attribute monitoring, Maturity detection, Plant height, Fruit location, Crop quality, Lodging detection |
| Technical Methods | Sensing technology (LiDAR, NIR spectroscopy, Hyperspectral imaging, RGB-D), Deep learning (CNN, YOLO, PointNet), Machine learning (Random Forest, SVM) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Z.; Wang, R.; Ding, R. A Review of Crop Attribute Monitoring Technologies for General Agricultural Scenarios. AgriEngineering 2025, 7, 365. https://doi.org/10.3390/agriengineering7110365
