SCS-YOLO: A Lightweight Cross-Scale Detection Network for Sugarcane Surface Cracks with Dynamic Perception

Abstract

1. Introduction

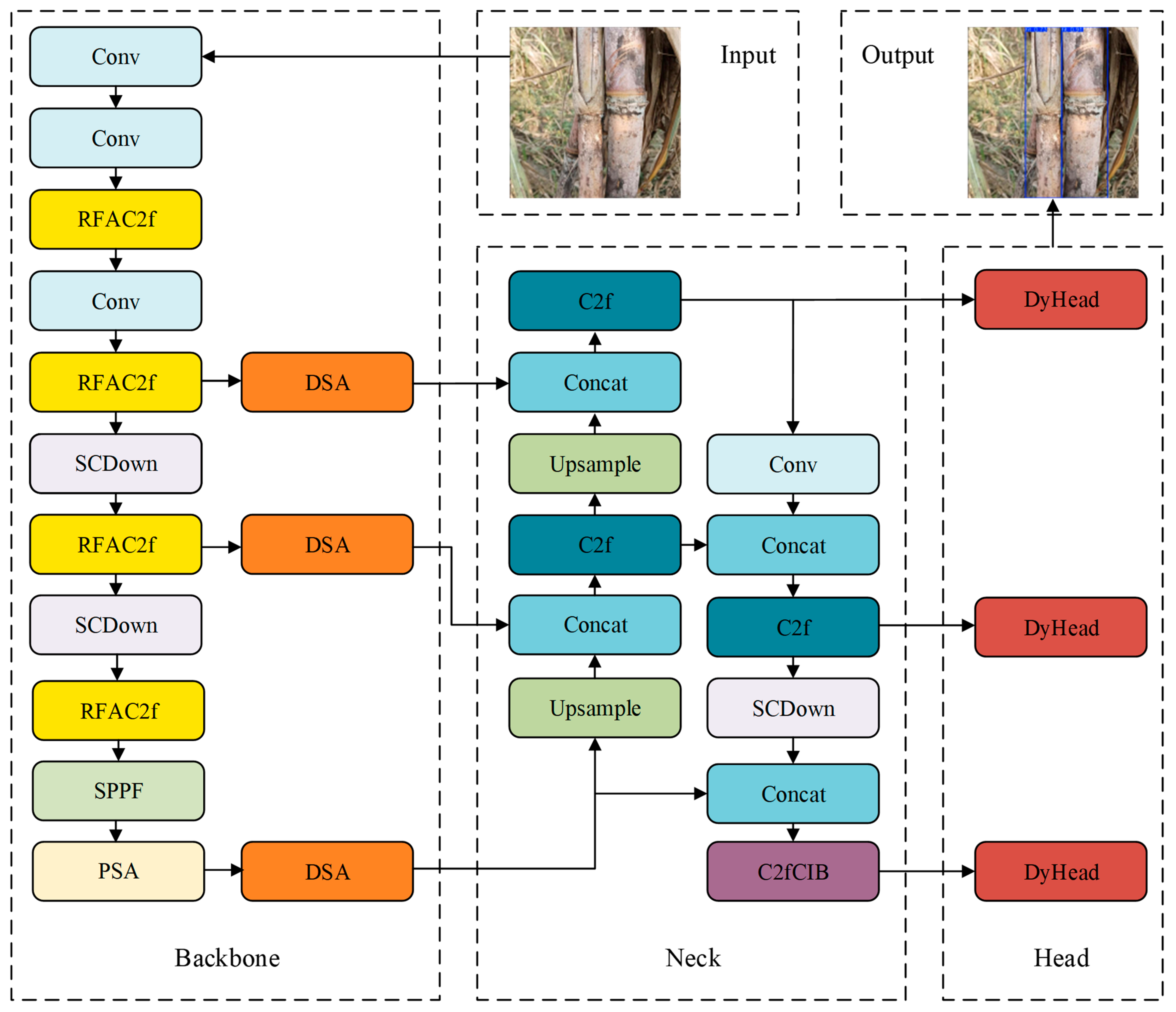

- Multi-scale Receptive Field Attention Bottleneck Module (RFAC2f): To address feature loss on slender cracks and the wide range of crack scales, this module integrates the receptive-field attention mechanism of RFA convolution (RFAConv) with the multi-branch structure of C2f. This gives the model stronger spatial feature perception and cross-scale feature fusion, making it suitable for detecting slender, low-contrast, multi-scale targets such as sugarcane cracks.
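The core idea of receptive-field attention can be illustrated with a small sketch. A standard k×k convolution applies the same weights at every location; RFAConv instead rescales the kernel at each location with a softmax over the k×k positions of that location's receptive field. The code below is a simplified single-channel illustration, not the authors' RFAC2f implementation. It assumes that the unfolded window values serve directly as the receptive-field features and that the k² attention logits come from a k×k average pool followed by a per-position affine map (`att_w`, `att_b`, hypothetical stand-ins for the 1×1 group convolution). The surrounding C2f multi-branch wrapper is omitted.

```python
import math


def avg_pool_same(x, k):
    """k x k average pooling, stride 1, zero padding; output keeps the input size."""
    h, w, r = len(x), len(x[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        total += x[ii][jj]
            out[i][j] = total / (k * k)  # padded zeros count toward the mean
    return out


def softmax(v):
    m = max(v)
    e = [math.exp(t - m) for t in v]
    s = sum(e)
    return [t / s for t in e]


def rfa_conv(x, kernel, att_w, att_b):
    """Single-channel receptive-field attention convolution (stride 1, 'same' padding).

    x            : H x W list of floats
    kernel       : shared k*k weights, flattened row-major
    att_w, att_b : hypothetical per-position scale and bias standing in for the
                   1x1 group convolution that produces the k*k attention logits
    """
    k = math.isqrt(len(kernel))
    r = k // 2
    h, w = len(x), len(x[0])
    pooled = avg_pool_same(x, k)
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # One softmax-normalized weight per position of this k x k receptive field
            a = softmax([att_w[p] * pooled[i][j] + att_b[p] for p in range(k * k)])
            acc, p = 0.0, 0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    v = x[ii][jj] if 0 <= ii < h and 0 <= jj < w else 0.0
                    # The shared kernel is re-weighted differently at every location
                    acc += kernel[p] * a[p] * v
                    p += 1
            out[i][j] = acc
    return out


x = [[0.0, 1.0, 0.0], [1.0, 5.0, 1.0], [0.0, 1.0, 0.0]]
y = rfa_conv(x, kernel=[1.0] * 9, att_w=[0.0] * 9, att_b=[0.0] * 9)
```

With all attention parameters at zero, the softmax is uniform and the layer reduces to an ordinary convolution scaled by 1/k², so `y[1][1]` is approximately 1.0 here. Learned attention parameters let each location emphasize different positions within its window, which is what allows the operator to follow thin, irregular structures such as cracks.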

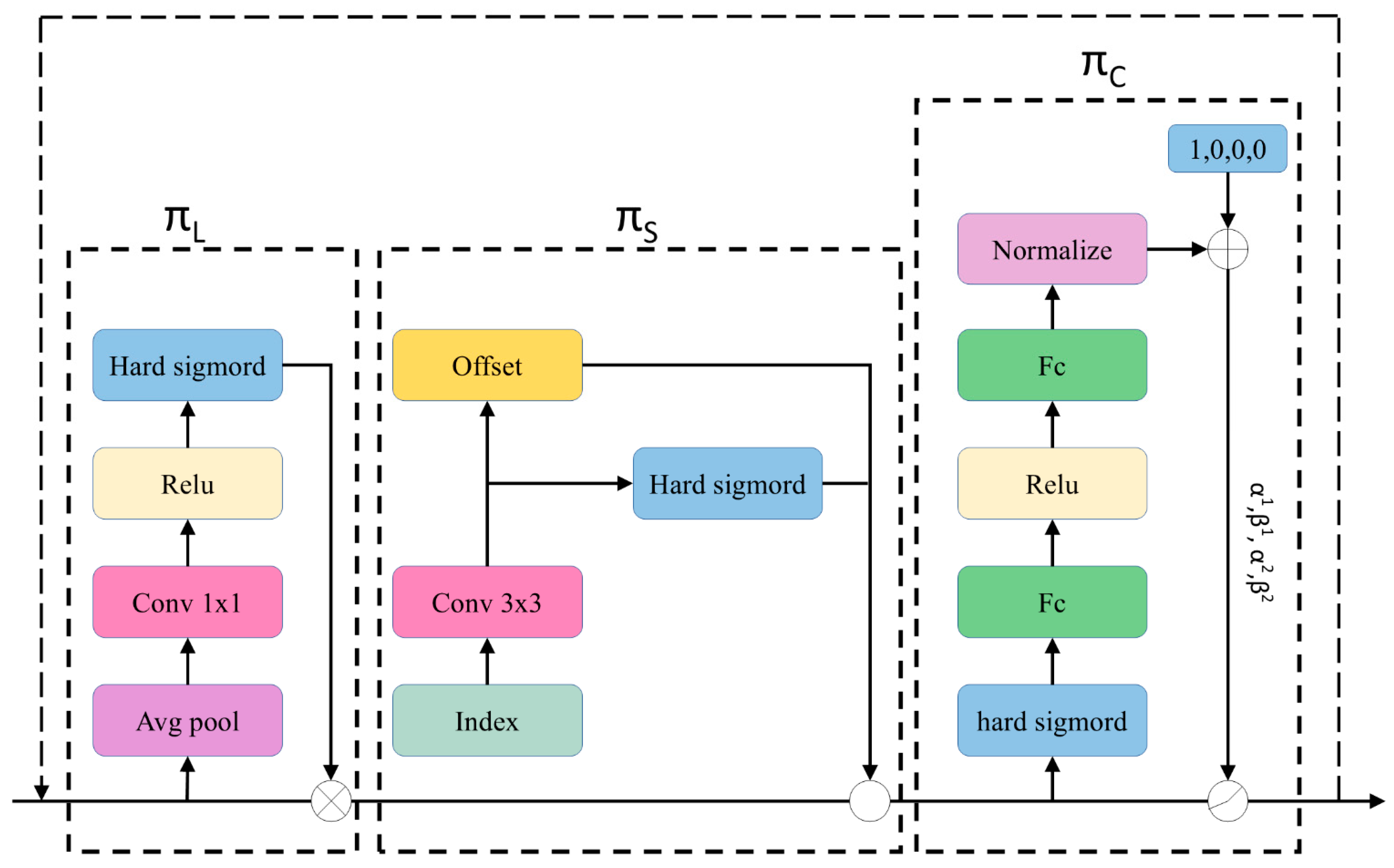

- Dynamic Spatial Attention Mechanism (DSA): This module integrates dynamically generated convolution kernels with a parameter-free spatial attention mechanism, capturing the overall contour of the sugarcane surface while focusing on crack texture details. It strengthens the feature extraction network’s ability to gather information from different perspectives and improves the model’s representation of the spatial distribution of multi-scale crack features.

- Dynamic Detection Head Optimization Strategy: Traditional YOLO detection heads suffer from feature loss, inadequate multi-scale adaptation, and computational redundancy, so they are replaced with DyHead. DyHead fuses multi-scale features adaptively through attention-weight allocation and dynamic convolution, which enhances spatial perception of elongated crack features while reducing the parameter count and computational load.
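The DSA and DyHead bullets build on three published components: dynamic convolution (Chen et al.), the parameter-free SimAM attention (Yang et al.), and DyHead (Dai et al.). The sketch below shows the core computation of each on single-channel Python lists so that it runs without a deep-learning framework. It is a hedged illustration only. This section does not specify how DSA combines dynamic kernels with SimAM, so the two are shown separately. The gating parameters `gate_w`, `gate_b`, `a`, and `b` are hypothetical stand-ins for learned layers. Only DyHead's scale-aware attention is shown; its spatial-aware (deformable) and task-aware parts are omitted.

```python
import math


def dynamic_kernel(x, kernels, gate_w, gate_b, temperature=1.0):
    """Dynamic convolution: blend K candidate kernels with input-dependent softmax weights.

    gate_w, gate_b are hypothetical stand-ins for the learned gating layers.
    """
    gap = sum(v for row in x for v in row) / (len(x) * len(x[0]))  # global average pool
    logits = [(gw * gap + gb) / temperature for gw, gb in zip(gate_w, gate_b)]
    m = max(logits)
    e = [math.exp(z - m) for z in logits]
    s = sum(e)
    pi = [t / s for t in e]  # attention over the K kernels, sums to 1
    return [sum(pi[k] * kern[i] for k, kern in enumerate(kernels))
            for i in range(len(kernels[0]))]


def simam(x, e_lambda=1e-4):
    """Parameter-free SimAM weighting of one H x W channel."""
    vals = [v for row in x for v in row]
    mu = sum(vals) / len(vals)
    n = len(vals) - 1
    var = sum((v - mu) ** 2 for v in vals) / n
    out = []
    for row in x:
        new_row = []
        for v in row:
            # Inverse minimal energy: pixels that stand out from the channel mean score higher
            inv_energy = (v - mu) ** 2 / (4 * (var + e_lambda)) + 0.5
            new_row.append(v / (1.0 + math.exp(-inv_energy)))  # v * sigmoid(inv_energy)
        out.append(new_row)
    return out


def hard_sigmoid(t):
    """Hard sigmoid as defined in the DyHead paper: max(0, min(1, (t + 1) / 2))."""
    return max(0.0, min(1.0, (t + 1.0) / 2.0))


def scale_aware_attention(levels, a, b):
    """DyHead-style scale-aware attention: one scalar weight per pyramid level.

    a, b are hypothetical parameters of the linear map applied to each level's mean.
    """
    out = []
    for f in levels:
        mean = sum(v for row in f for v in row) / (len(f) * len(f[0]))
        weight = hard_sigmoid(a * mean + b)
        out.append([[weight * v for v in row] for row in f])
    return out
```

In SimAM, a pixel that differs strongly from its channel mean receives a sigmoid weight above the flat-map value sigmoid(0.5), which is how the module highlights fine texture such as crack edges without adding any parameters. Dynamic convolution and the DyHead scale weights, by contrast, are input-dependent but cheap, because they are computed from pooled statistics rather than full feature maps.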

2. Data Preprocessing

2.1. Experimental Data Acquisition

2.2. Data Augmentation

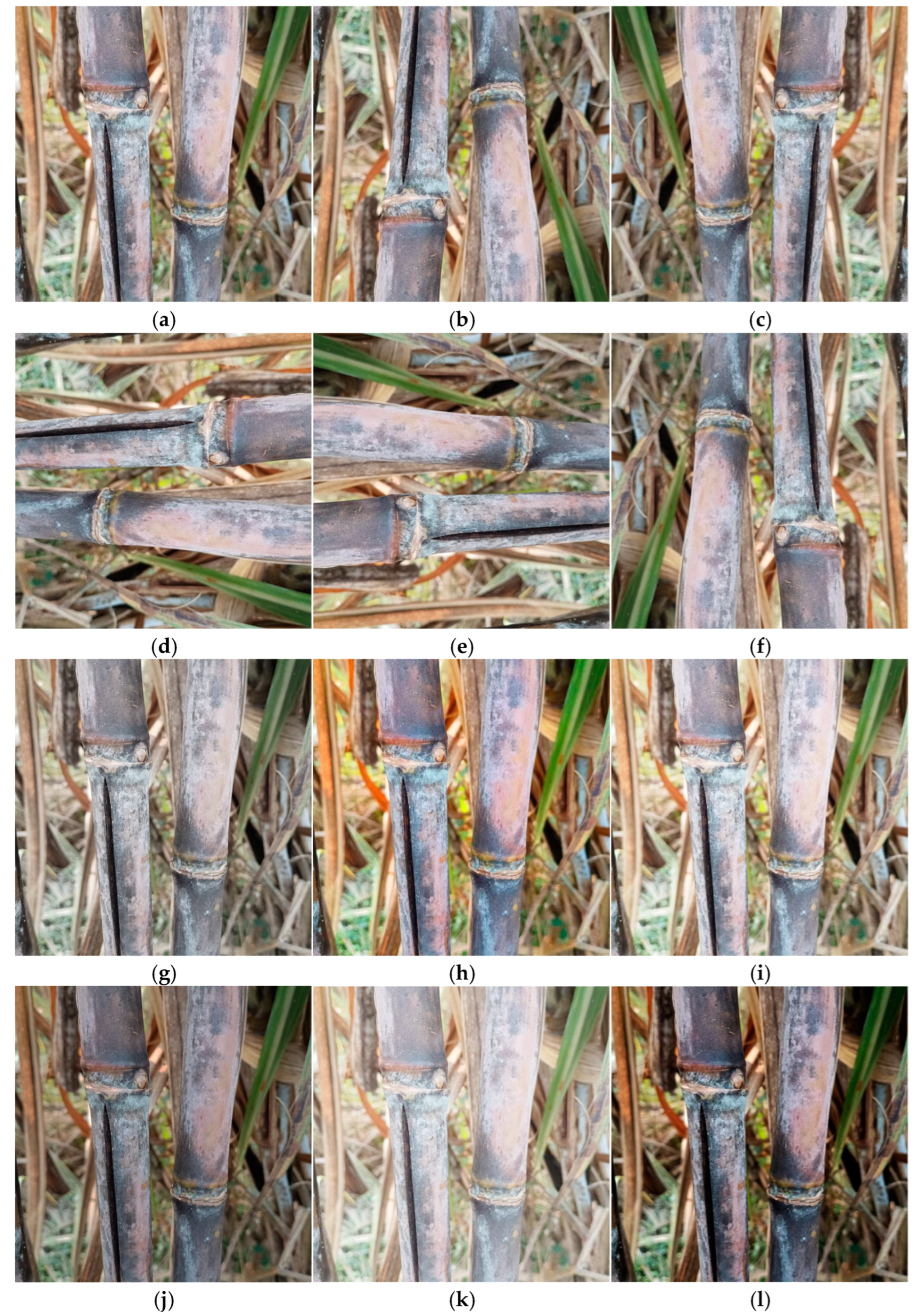

- (1) Geometric transformations: horizontal flip (Figure 2b), vertical flip (Figure 2c), clockwise rotation (Figure 2d), counterclockwise rotation (Figure 2e), and inverted rotation (Figure 2f). These help the model adapt to the random spatial orientation of sugarcane stalks and cracks in real field scenes;

- (2) Color and luminance adjustment: saturation −30% (Figure 2g), saturation +30% (Figure 2h), brightness +25% (Figure 2i), brightness −25% (Figure 2j), exposure +15% (Figure 2k), and exposure −15% (Figure 2l). These simulate the variable lighting of agricultural environments, such as intense sunlight and overcast skies, and reduce the model’s sensitivity to lighting interference.

3. Network Model Improvement and Training

3.1. SCS-YOLO Model

3.1.1. Receptive-Field Attention C2f

3.1.2. Dynamic SimAM Attention Module

3.1.3. Dynamic Head

3.2. Experimental Environment and Evaluation Criteria

3.2.1. Experimental Environment

3.2.2. Evaluation Criteria

4. Experimental Results and Analysis

4.1. Ablation Experiment

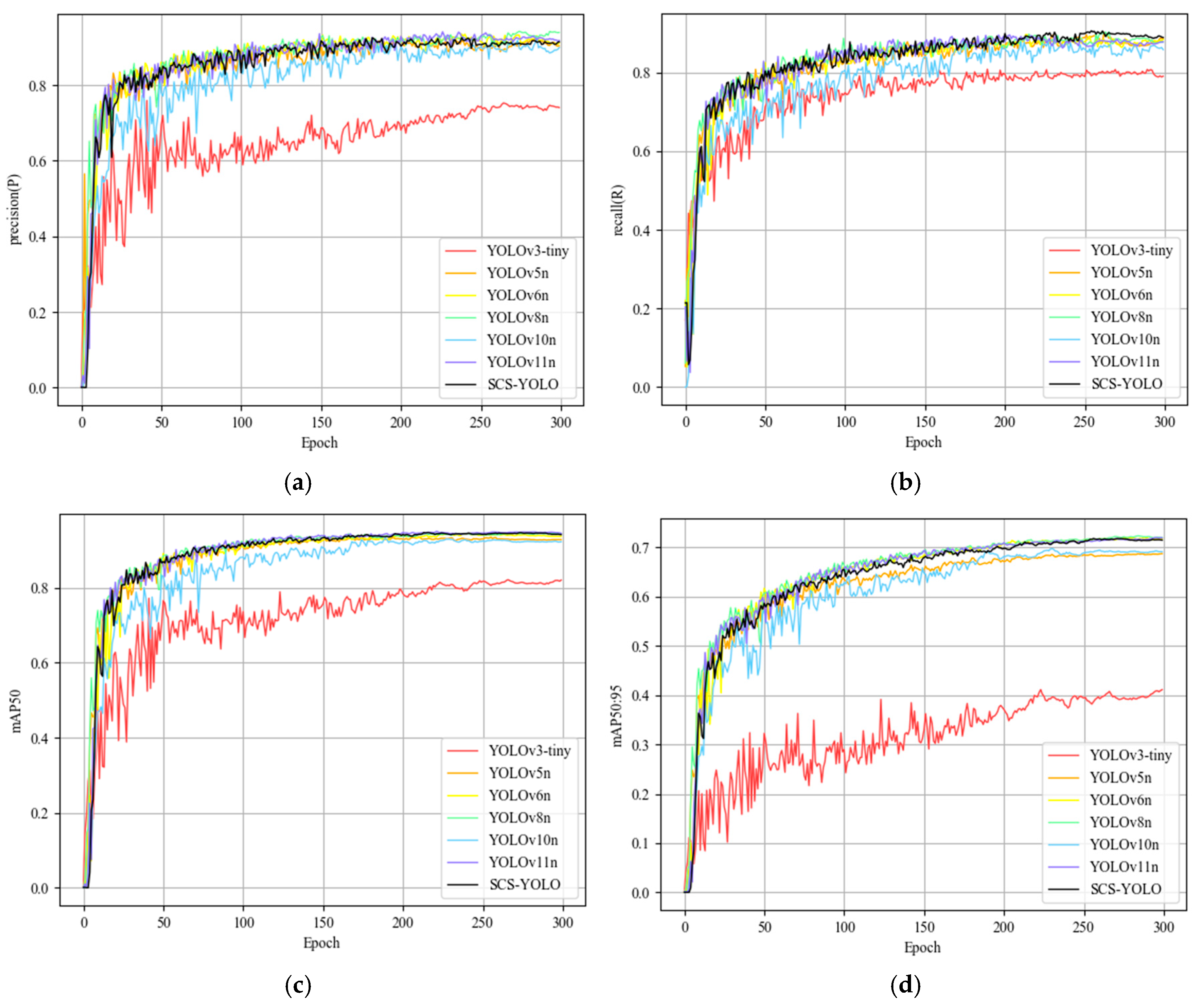

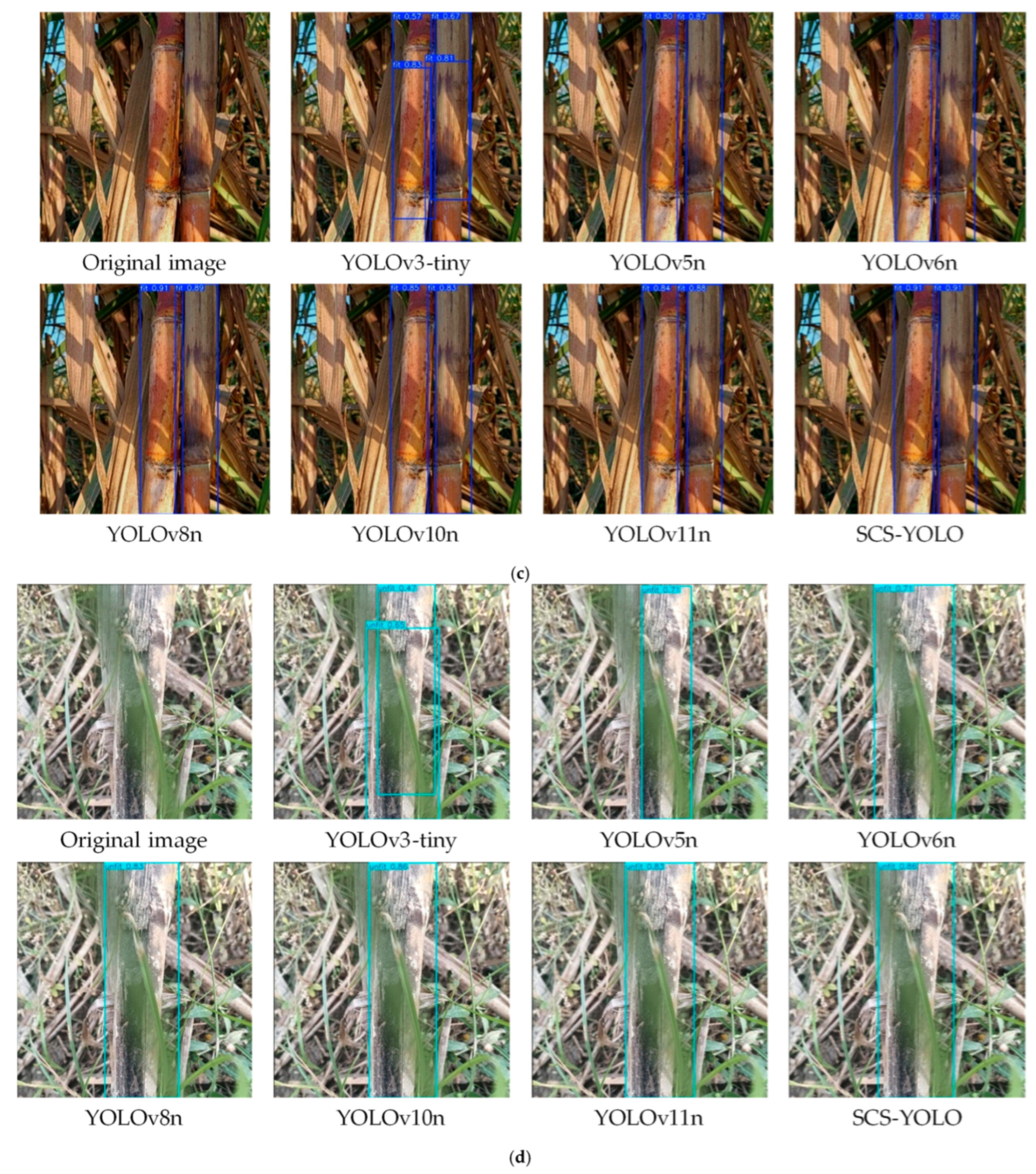

4.2. Comparative Test

5. Discussion

6. Conclusions

- (1) The proposed RFAC2f module builds a multi-branch feature-processing network with adaptive receptive-field modeling to extract crack features at different scales. Compared with the YOLOv10n baseline, it reduced the interference of complex field backgrounds on crack recognition and raised precision from 87.3% to 90.2% on the Sugarcane Crack Dataset v3.1 benchmark.

- (2) To better capture fine crack features, the DSA module combines a spatial attention mechanism with a dynamic weight-allocation method that adaptively adjusts the weight distribution of the feature maps. In the experiments, it raised recall to 87.8% (+0.8 percentage points) and precision to 88.5% (+1.2 percentage points), improving the model’s ability to recognize intricate crack patterns.

- (3) Through dynamic parameter sharing and a lightweight convolution design, the DyHead detection head reduces the parameter count to about 2.07 M, 23.41% fewer than the baseline model, while maintaining stable detection performance. With DyHead, the model fits the resource limits of edge computing devices, offering a practical solution for accurate in-field detection.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wang, H.; Pan, Y.-B.; Wu, M.; Liu, J.; Yang, S.; Wu, Q.; Que, Y. Sugarcane genetics: Underlying theory and practical application. Crop J. 2024, 13, 328–338.

- Maitra, S.; Dien, B.; Eilts, K.; Kuanyshev, N.; Cortes-Pena, Y.R.; Jin, Y.-S.; Guest, J.S.; Singh, V. Resourceful and economical designing of fermentation medium for lab and commercial strains of yeast from alternative feedstock: ‘transgenic oilcane’. Biotechnol. Biofuels Bioprod. 2025, 18, 14.

- Maitra, S.; Viswanathan, M.B.; Park, K.; Kannan, B.; Alfanar, S.C.; McCoy, S.M.; Cahoon, E.B.; Altpeter, F.; Leakey, A.D.B.; Singh, V. Bioprocessing, Recovery, and Mass Balance of Vegetative Lipids from Metabolically Engineered “Oilcane” Demonstrates Its Potential as an Alternative Feedstock for Drop-In Fuel Production. ACS Sustain. Chem. Eng. 2022, 10, 16833–16844.

- Maitra, S.; Cheng, M.-H.; Liu, H.; Cao, V.D.; Kannan, B.; Long, S.P.; Shanklin, J.; Altpeter, F.; Singh, V. Sustainable co-production of plant lipids and cellulosic sugars from transgenic energycane at an industrially relevant scale: A proof of concept for alternative feedstocks. Chem. Eng. J. 2024, 487, 150450.

- Cao, V.D.; Kannan, B.; Luo, G.; Liu, H.; Shanklin, J.; Altpeter, F. Triacylglycerol, total fatty acid, and biomass accumulation of metabolically engineered energycane grown under field conditions confirms its potential as feedstock for drop-in fuel production. GCB Bioenergy 2023, 15, 1450–1464.

- Gomathi, R.; Gururaja Rao, P.N.; Chandran, K.; Selvi, A. Adaptive Responses of Sugarcane to Waterlogging Stress: An Over View. Sugar Tech 2015, 17, 325–338.

- Garcia, A.; Crusciol, C.A.C.; Rosolem, C.A.; Bossolani, J.W.; Nascimento, C.A.C.; McCray, J.M.; Dos Reis, A.R.; Cakmak, I. Potassium-magnesium imbalance causes detrimental effects on growth, starch allocation and Rubisco activity in sugarcane plants. Plant Soil 2022, 472, 225–238.

- Yao, S.; Wang, B.; Liu, D.L.; Li, S.; Ruan, H.; Yu, Q. Assessing the impact of climate variability on Australia’s sugarcane yield in 1980–2022. Eur. J. Agron. 2025, 164, 127519.

- Zhou, B.; Ma, S.; Li, W.; Peng, C.; Li, W. Study on sugarcane chopping and damage mechanism during harvesting of sugarcane chopper harvester. Biosyst. Eng. 2024, 243, 1–12.

- Flack-Prain, S.; Shi, L.; Zhu, P.; Da Rocha, H.R.; Cabral, O.; Hu, S.; Williams, M. The impact of climate change and climate extremes on sugarcane production. GCB Bioenergy 2021, 13, 408–424.

- Li, A.-M.; Chen, Z.-L.; Liao, F.; Zhao, Y.; Qin, C.-X.; Wang, M.; Pan, Y.-Q.; Wei, S.L.; Huang, D.-L. Sugarcane borers: Species, distribution, damage and management options. J. Pest Sci. 2024, 97, 1171–1201.

- Misra, V.; Mall, A.K.; Shrivastava, A.K.; Solomon, S.; Shukla, S.P.; Ansari, M.I. Assessment of Leuconostoc spp. invasion in standing sugarcane with cracks internode. J. Environ. Biol. 2019, 40, 316–321.

- Satpathi, A.; Chand, N.; Setiya, P.; Ranjan, R.; Nain, A.S.; Vishwakarma, D.K.; Saleem, K.; Obaidullah, A.J.; Yadav, K.K.; Kisi, O. Evaluating statistical and machine learning techniques for sugarcane yield forecasting in the tarai region of North India. Comput. Electron. Agric. 2025, 229, 109667.

- Shang, X.-K.; Wei, J.-L.; Liu, W.; Nikpay, A.; Pan, X.-H.; Huang, C.-H. Integrated Pest Management of Sugarcane Insect Pests in China: Current Status and Future Prospects. Sugar Tech 2025, 27, 299–317.

- Djenouri, Y.; Belbachir, A.N.; Michalak, T.; Belhadi, A.; Srivastava, G. A Knowledge-Enhanced Object Detection for Sustainable Agriculture. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 728–740.

- Hu, X.; Du, Z.; Wang, F. Research on detection method of photovoltaic cell surface dirt based on image processing technology. Sci. Rep. 2024, 14, 16842.

- Tahir, N.U.A.; Zhang, Z.; Asim, M.; Chen, J.; ELAffendi, M. Object Detection in Autonomous Vehicles under Adverse Weather: A Review of Traditional and Deep Learning Approaches. Algorithms 2024, 17, 103.

- Yang, W.; Chen, X.-D.; Wang, H.; Mao, X. Edge detection using multi-scale closest neighbor operator and grid partition. Vis. Comput. 2024, 40, 1947–1964.

- Qiao, L.; Liu, K.; Xue, Y.; Tang, W.; Salehnia, T. A multi-level thresholding image segmentation method using hybrid Arithmetic Optimization and Harris Hawks Optimizer algorithms. Expert Syst. Appl. 2024, 241, 122316.

- Li, Z.; Xiang, J.; Duan, J. A low illumination target detection method based on a dynamic gradient gain allocation strategy. Sci. Rep. 2024, 14, 29058.

- He, Z.; Chen, X.; Yi, T.; He, F.; Dong, Z.; Zhang, Y. Moving Target Shadow Analysis and Detection for ViSAR Imagery. Remote Sens. 2021, 13, 3012.

- Chen, R.; Tian, X. Gesture Detection and Recognition Based on Object Detection in Complex Background. Appl. Sci. 2023, 13, 4480.

- Xu, F.; Zhu, Z.; Feng, C.; Leng, J.; Zhang, P.; Yu, X.; Wang, C.; Chen, X. An object planar grasping pose detection algorithm in low-light scenes. Multimed. Tools Appl. 2024, 84, 5583–5604.

- Agrawal, S.; Natu, P. OBB detector: Occluded object detection based on geometric modeling of video frames. Vis. Comput. 2025, 41, 921–943.

- Wang, Y.; Wang, X.; Qiu, S.; Chen, X.; Liu, Z.; Zhou, C.; Yao, W.; Cheng, H.; Zhang, Y.; Wang, F.; et al. Multi-Scale Hierarchical Feature Fusion for Infrared Small-Target Detection. Remote Sens. 2025, 17, 428.

- Li, Z.; Miao, Y.; Li, X.; Li, W.; Cao, J.; Hao, Q.; Li, D.; Sheng, Y. Speed-Oriented Lightweight Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5601014.

- Zhao, B.; Qin, Z.; Wu, Y.; Song, Y.; Yu, H.; Gao, L. A Fast Target Detection Model for Remote Sensing Images Leveraging Roofline Analysis on Edge Computing Devices. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 19343–19360.

- Oksuz, K.; Cam, B.C.; Kalkan, S.; Akbas, E. Imbalance Problems in Object Detection: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3388–3415.

- Wei, W.; Cheng, Y.; He, J.; Zhu, X. A review of small object detection based on deep learning. Neural Comput. Appl. 2024, 36, 6283–6303.

- Shi, W.; Lyu, X.; Han, L. An Object Detection Model for Power Lines With Occlusions Combining CNN and Transformer. IEEE Trans. Instrum. Meas. 2025, 74, 5007012.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo Algorithm Developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Luo, Y.; Ni, L.; Cai, F.; Wang, D.; Luo, Y.; Li, X.; Fu, N.; Tang, J.; Xue, L. Detection of Agricultural Pests Based on YOLO. J. Phys. Conf. Ser. 2023, 2560, 012013.

- Yang, M.; Peng, L.; Liu, L.; Wang, Y.; Zhang, Z.; Yuan, Z.; Zhou, J. LCSED: A low complexity CNN based SED model for IoT devices. Neurocomputing 2022, 485, 155–165.

- Guo, X.; Jiang, Q.; Pimentel, A.D.; Stefanov, T. Model and system robustness in distributed CNN inference at the edge. Integration 2025, 100, 102299.

- Ruiz-Barroso, P.; Castro, F.M.; Guil, N. Real-time unsupervised video object detection on the edge. Future Gener. Comput. Syst. 2025, 167, 107737.

- Vijayakumar, A.; Vairavasundaram, S. YOLO-based Object Detection Models: A Review and its Applications. Multimed. Tools Appl. 2024, 83, 83535–83574.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Lawal, O.M. YOLOv5-LiNet: A lightweight network for fruits instance segmentation. PLoS ONE 2023, 18, e0282297.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Ma, N.; Su, Y.; Yang, L.; Li, Z.; Yan, H. Wheat Seed Detection and Counting Method Based on Improved YOLOv8 Model. Sensors 2024, 24, 1654.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Zou, C.; Yu, S.; Yu, Y.; Gu, H.; Xu, X. Side-Scan Sonar Small Objects Detection Based on Improved YOLOv11. J. Mar. Sci. Eng. 2025, 13, 162.

- Wei, M.; Chen, K.; Yan, F.; Ma, J.; Liu, K.; Cheng, E. YOLO-ESFM: A multi-scale YOLO algorithm for sea surface object detection. Int. J. Nav. Archit. Ocean Eng. 2025, 17, 100651.

- Mittal, P. A comprehensive survey of deep learning-based lightweight object detection models for edge devices. Artif. Intell. Rev. 2024, 57, 242.

- Pitts, H. Warehouse Robot Detection for Human Safety Using YOLOv8. In Proceedings of the SoutheastCon 2024, Atlanta, GA, USA, 20–24 March 2024; pp. 1184–1188.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Guo, C.; Huang, H. Enhancing camouflaged object detection through contrastive learning and data augmentation techniques. Eng. Appl. Artif. Intell. 2025, 141, 109703.

- Chen, R.; Zhang, D.; Liu, Q.; Li, J. Robust 3D Object Detection Based on Point Feature Enhancement in Driving Scenes. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 2791–2798.

- Zhang, X.; Liu, C.; Yang, D.; Song, T.; Ye, Y.; Li, K.; Song, Y. RFAConv: Innovating Spatial Attention and Standard Convolutional Operation. arXiv 2024, arXiv:2304.03198.

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic Convolution: Attention Over Convolution Kernels. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11027–11036.

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the 38th International Conference on Machine Learning, Virtually, 18–24 July 2021.

- Kim, W.; Tanaka, M.; Sasaki, Y.; Okutomi, M. Deformable element-wise dynamic convolution. J. Electron. Imaging 2023, 32, 053029.

- Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic Head: Unifying Object Detection Heads with Attentions. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 7369–7378.

- Tang, P.; Ding, Z.; Jiang, M.; Xu, W.; Lv, M. LBT-YOLO: A Lightweight Road Targeting Algorithm Based on Task Aligned Dynamic Detection Heads. IEEE Access 2024, 12, 180422–180435.

- Park, I.; Kim, S. Performance Indicator Survey for Object Detection. In Proceedings of the 2020 20th International Conference on Control, Automation and Systems (ICCAS), Busan, Republic of Korea, 13–16 October 2020; pp. 284–288.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized Gradient-Based Visual Explanations for Deep Convolutional Networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

- Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.; Ye, C.; Akin, B.; et al. MobileNetV4—Universal Models for the Mobile Ecosystem. arXiv 2024, arXiv:2404.10518.

- Pino, A.F.S.; Apraez, L.S.C. Artificial intelligence and multispectral imaging in coffee production: A systematic literature review. Eur. J. Agron. 2025, 170, 127725.

- Saeed, F.; Tan, C.; Liu, T.; Li, C. 3D neural architecture search to optimize segmentation of plant parts. Smart Agric. Technol. 2025, 10, 100776.

| YOLOv10n | RFAC2f | DSA | DyHead | P/% | R/% | mAP50/% | mAP50:95/% | F1-Score | Param/M | GFLOPs | Size/MB | FPS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| √ | × | × | × | 87.3 | 87.0 | 92.9 | 69.7 | 87.15 | 2.707820 | 8.4 | 5.51 | 91 |
| √ | √ | × | × | 90.2 | 85.8 | 92.8 | 69.4 | 87.95 | 2.694764 | 8.4 | 5.48 | 129 |
| √ | × | √ | × | 88.5 | 87.8 | 93.0 | 65.6 | 88.15 | 2.822124 | 9.2 | 5.80 | 96 |
| √ | × | × | √ | 89.4 | 91.4 | 94.3 | 71.0 | 90.38 | 2.073790 | 5.2 | 4.25 | 86 |
| √ | √ | √ | × | 91.6 | 87.3 | 93.8 | 70.2 | 89.39 | 3.584108 | 5.6 | 7.22 | 116 |
| √ | √ | × | √ | 89.6 | 89.3 | 94.2 | 70.7 | 89.45 | 2.060734 | 5.2 | 4.22 | 95 |
| √ | × | √ | √ | 91.4 | 89.1 | 94.7 | 72.3 | 90.23 | 2.188094 | 6.0 | 4.54 | 103 |
| √ | √ | √ | √ | 90.9 | 89.8 | 94.7 | 71.8 | 90.35 | 2.175038 | 6.0 | 4.51 | 122 |
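The F1-Score column is consistent with the standard harmonic mean of precision and recall, F1 = 2PR/(P + R). The sketch below recomputes it from the P and R values in the ablation table. The results match the reported F1 to within 0.01; the small residuals presumably come from P and R being rounded to one decimal place before publication.

```python
def f1_score(p, r):
    """Harmonic mean of precision and recall (both in %)."""
    return 2.0 * p * r / (p + r)


# (configuration, P/%, R/%, reported F1) copied from the ablation table
ABLATION_ROWS = [
    ("YOLOv10n", 87.3, 87.0, 87.15),
    ("+RFAC2f", 90.2, 85.8, 87.95),
    ("+DSA", 88.5, 87.8, 88.15),
    ("+DyHead", 89.4, 91.4, 90.38),
    ("+RFAC2f +DSA", 91.6, 87.3, 89.39),
    ("+RFAC2f +DyHead", 89.6, 89.3, 89.45),
    ("+DSA +DyHead", 91.4, 89.1, 90.23),
    ("SCS-YOLO (all three)", 90.9, 89.8, 90.35),
]

for name, p, r, reported in ABLATION_ROWS:
    print(f"{name:22s} computed {f1_score(p, r):.3f}  reported {reported:.2f}")
```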

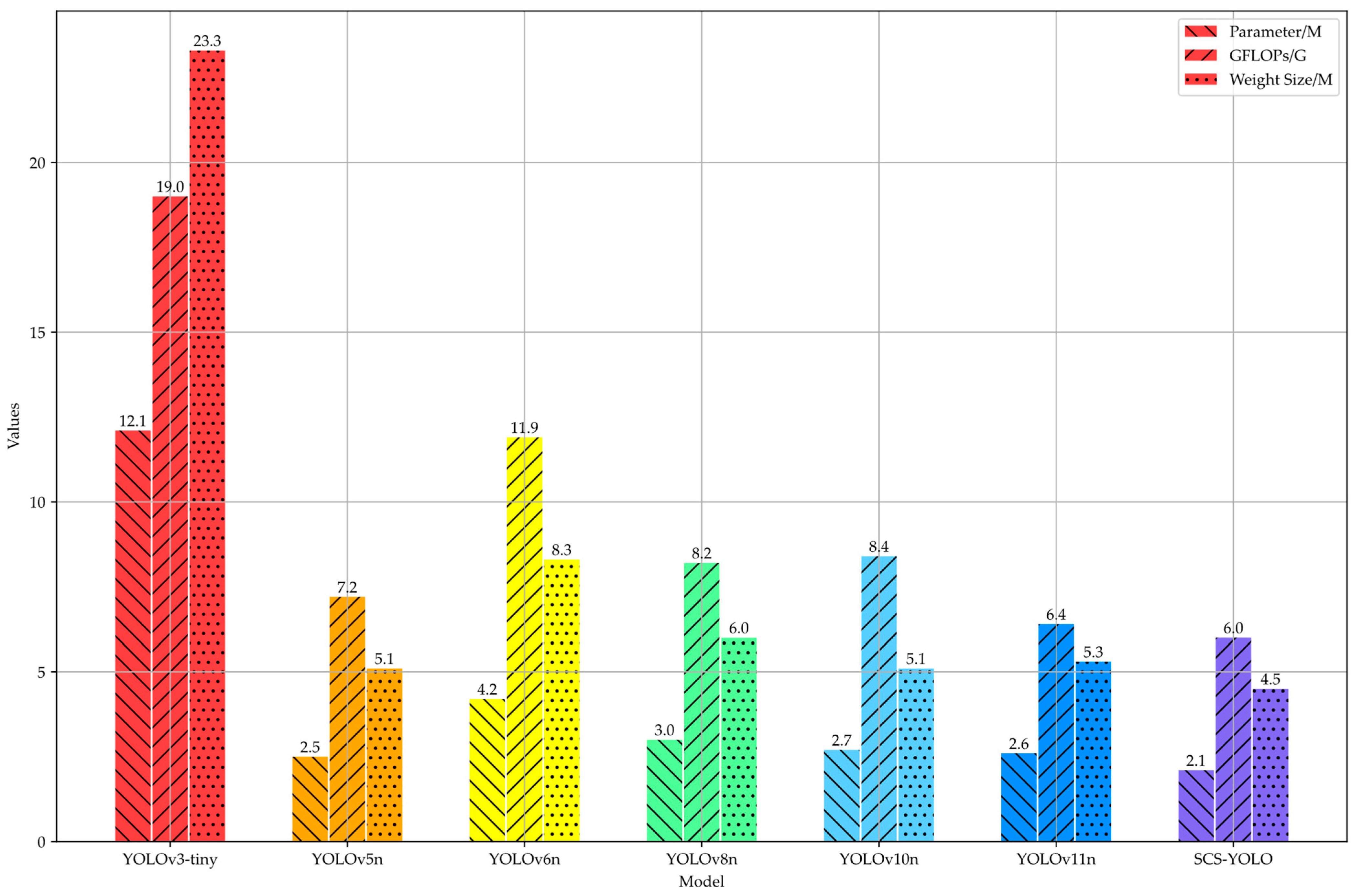

| Model | P/% | R/% | mAP50/% | mAP50:95/% | F1-Score | Parameters/M | GFLOPs | Size/MB | FPS |
|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | 80.4 | 75.8 | 94.1 | 69.3 | 78.03 | 137.098724 | 370.2 | 108.22 | 0.38 |
| YOLOv3-tiny | 74.4 | 78.9 | 82.1 | 41.2 | 76.58 | 12.133156 | 19.0 | 23.3 | 69 |
| YOLOv5n | 90.5 | 88.4 | 92.9 | 68.8 | 89.43 | 2.508854 | 7.2 | 5.1 | 129 |
| YOLOv6n | 91.3 | 88.1 | 94.1 | 72.0 | 89.67 | 4.238342 | 11.9 | 8.3 | 75 |
| YOLOv8n | 93.0 | 88.8 | 94.4 | 72.4 | 90.85 | 3.011222 | 8.2 | 6.0 | 109 |
| YOLOv10n | 87.3 | 87.0 | 92.9 | 69.7 | 87.15 | 2.707820 | 8.4 | 5.1 | 91 |
| YOLOv11n | 92.1 | 87.4 | 94.8 | 72.0 | 89.69 | 2.590230 | 6.4 | 5.3 | 111 |
| SCS-YOLO | 90.9 | 89.8 | 94.7 | 71.8 | 90.35 | 2.175038 | 6.0 | 4.5 | 122 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, M.; Ding, X.; Wang, J.; Luo, R. SCS-YOLO: A Lightweight Cross-Scale Detection Network for Sugarcane Surface Cracks with Dynamic Perception. AgriEngineering 2025, 7, 321. https://doi.org/10.3390/agriengineering7100321

Li M, Ding X, Wang J, Luo R. SCS-YOLO: A Lightweight Cross-Scale Detection Network for Sugarcane Surface Cracks with Dynamic Perception. AgriEngineering. 2025; 7(10):321. https://doi.org/10.3390/agriengineering7100321

Chicago/Turabian Style: Li, Meng, Xue Ding, Jinliang Wang, and Rongxiang Luo. 2025. "SCS-YOLO: A Lightweight Cross-Scale Detection Network for Sugarcane Surface Cracks with Dynamic Perception" AgriEngineering 7, no. 10: 321. https://doi.org/10.3390/agriengineering7100321

APA Style: Li, M., Ding, X., Wang, J., & Luo, R. (2025). SCS-YOLO: A Lightweight Cross-Scale Detection Network for Sugarcane Surface Cracks with Dynamic Perception. AgriEngineering, 7(10), 321. https://doi.org/10.3390/agriengineering7100321