Machine Learning in the Classification of RGB Images of Maize (Zea mays L.) Using Texture Attributes and Different Doses of Nitrogen

Abstract

1. Introduction

2. Material and Methods

2.1. Study Site

2.2. Characterization of the Experiment and Image Acquisition

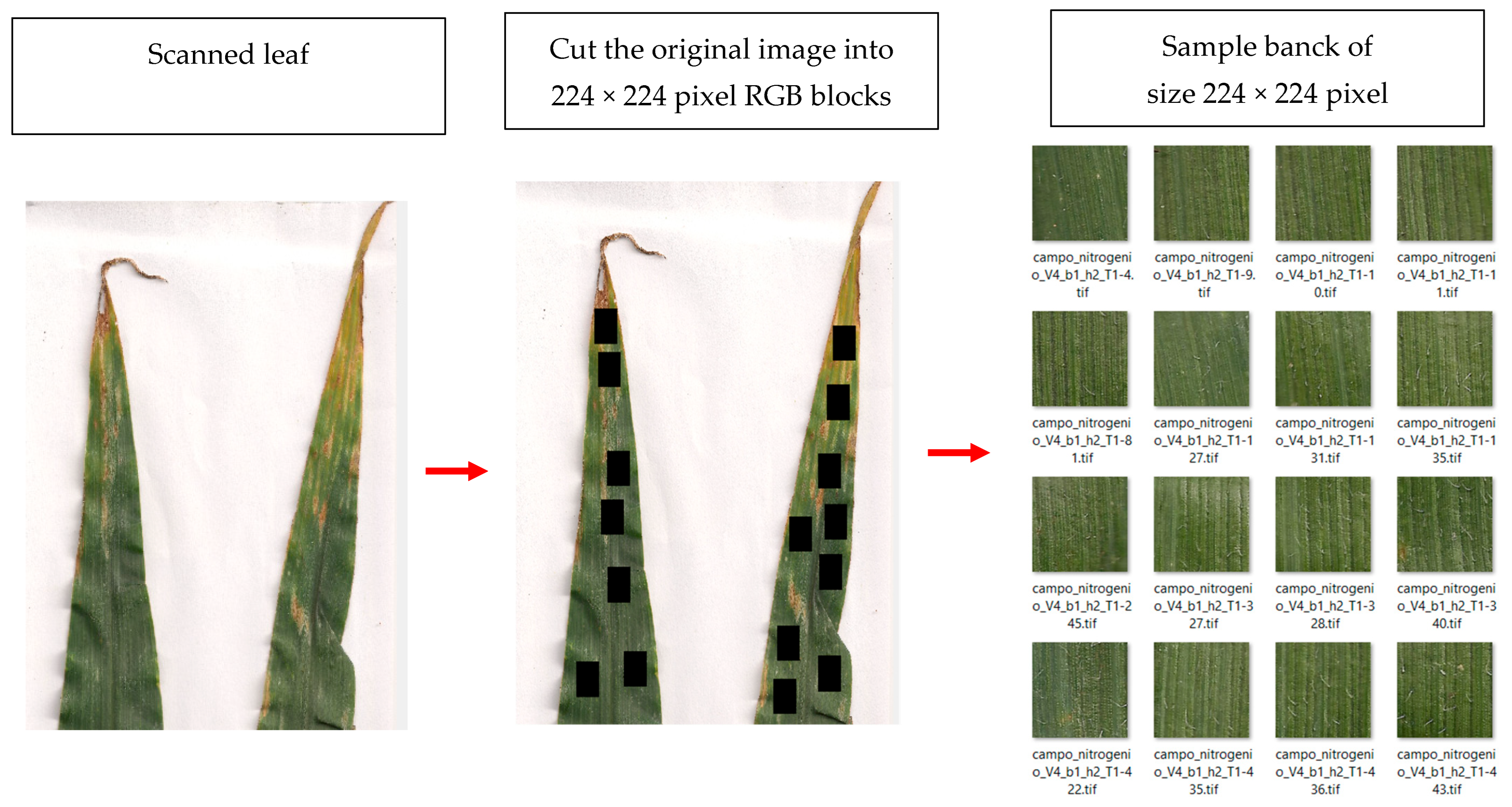

2.3. Image Pre-Processing

2.4. Texture Analysis

2.5. Classification

3. Results

3.1. Chemical Analysis

3.2. ReliefF Feature Ranking

3.3. Algorithm Performance

3.4. Confusion Matrix and ROC Curves

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Stage | Stage Characterization |
|---|---|
| Vegetative phase | |
| V0 or VE | Germination and emergence: imbibition, digestion of reserve substances in the caryopsis, cell division, and growth of seminal roots. |
| V2 | Second leaf emergence: emergence of primary and seminal roots, onset of photosynthesis with two fully expanded leaves. |
| V4 | Fourth leaf emergence: determination of yield potential. |
| V6 | Sixth leaf emergence: increase in stem diameter, acceleration of tassel development, and determination of the number of kernel rows on the ear. |
| V8 | Eighth leaf emergence: beginning of plant height and stem thickness determination. |
| V12 | Twelfth leaf emergence: onset of ear number and size determination. |
| V14 | Fourteenth leaf emergence. |
| Reproductive phase | |
| VT | Tassel emergence and opening of male flowers. |
| R1 | Full flowering: onset of yield confirmation. |
| R2 | Milk stage. |
| R3 | Dough stage. |
| R4 | Floury stage. |
| R5 | Dent stage. |
| R6 | Physiological maturity: maximum dry matter accumulation and maximum seed vigor, appearance of the black layer at the base of the kernel. |

| Algorithm | Phenological Stage | Model Hyperparameters | Optimized Hyperparameters | Hyperparameter Search Range |
|---|---|---|---|---|
| Decision Trees | V4, R1 | Optimizable Tree; Surrogate decision splits: Off. | Maximum number of splits: 583; Split criterion: Maximum deviance reduction. | Maximum number of splits: 1–3599; Split criterion: Maximum deviance reduction, Twoing rule, Gini’s diversity index. |
| Naive Bayes | V4, R1 | Optimizable Naive Bayes; Support: Unbounded. | Distribution names: Kernel; Kernel type: Box. | Distribution names: Gaussian, Kernel; Kernel type: Gaussian, Box, Epanechnikov, Triangle. |
| K-Nearest Neighbors | V4 | Optimizable KNN. | Number of neighbors: 28; Distance metric: Mahalanobis; Distance weight: Squared inverse; Standardize data: No. | Number of neighbors: 1–1800; Distance metric: Mahalanobis, City block, Chebyshev, Correlation, Cosine, Euclidean, Hamming, Jaccard, Minkowski (cubic), Spearman; Standardize data: Yes, No. |
| K-Nearest Neighbors | R1 | Optimizable KNN. | Number of neighbors: 12; Distance metric: Mahalanobis; Distance weight: Squared inverse; Standardize data: Yes. | Number of neighbors: 1–1800; Distance metric: Mahalanobis, City block, Chebyshev, Correlation, Cosine, Euclidean, Hamming, Jaccard, Minkowski (cubic), Spearman; Standardize data: Yes, No. |
| Support Vector Machines | V4 | Optimizable SVM; Kernel function: Gaussian; Kernel scale: Automatic. | Box constraint level: 10; Multiclass method: one-vs-one; Standardize data: Yes. | Multiclass method: one-vs-all, one-vs-one; Box constraint level: 0.001–1000; Standardize data: Yes, No. |
| Support Vector Machines | R1 | Optimizable SVM; Kernel function: Quadratic; Kernel scale: Automatic. | Box constraint level: 2.1544; Multiclass method: one-vs-all; Standardize data: No. | Multiclass method: one-vs-all, one-vs-one; Box constraint level: 0.001–1000; Standardize data: Yes, No. |
| Neural Network | V4 | Optimizable Neural Network; Iteration limit: 1000. | Number of fully connected layers: 1; Activation: ReLU; Regularization strength (Lambda): 3.5876 × 10⁻⁸; Standardize data: Yes; First layer size: 24. | Number of fully connected layers: 1–3; Activation: ReLU, Tanh, Sigmoid, None; Standardize data: Yes, No; Regularization strength (Lambda): 2.7778 × 10⁻⁹–27.7778; First layer size: 1–300; Second layer size: 1–300; Third layer size: 1–300. |
| Neural Network | R1 | Optimizable Neural Network; Iteration limit: 1000. | Number of fully connected layers: 1; Activation: Tanh; Regularization strength (Lambda): 7.7293 × 10⁻⁵; Standardize data: Yes; First layer size: 13. | Number of fully connected layers: 1–3; Activation: ReLU, Tanh, Sigmoid, None; Standardize data: Yes, No; Regularization strength (Lambda): 2.7778 × 10⁻⁹–27.7778; First layer size: 1–300; Second layer size: 1–300; Third layer size: 1–300. |
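
The box-constraint and regularization ranges listed above span many orders of magnitude, so candidate values for such ranges are usually spaced on a logarithmic scale. The following Python sketch is illustrative only: the helper `log_grid` and the choice of ten points are assumptions, and this section does not state which search strategy was used. Under those assumptions, the reported optima (box constraint 10 and 2.1544; Lambda 3.5876 × 10⁻⁸ and 7.7293 × 10⁻⁵) coincide with points of a ten-point log-spaced grid over the listed ranges.

```python
import math


def log_grid(low, high, points=10):
    """Return `points` values evenly spaced on a log10 scale from low to high.

    Hypothetical helper for illustration; not taken from the paper.
    """
    lo, hi = math.log10(low), math.log10(high)
    step = (hi - lo) / (points - 1)
    return [10 ** (lo + i * step) for i in range(points)]


# Search ranges copied from the hyperparameter table.
box_grid = log_grid(0.001, 1000)            # SVM box constraint level
lambda_grid = log_grid(2.7778e-9, 27.7778)  # neural network Lambda

# Print each candidate so it can be compared with the optimized values.
for name, grid in (("box constraint", box_grid), ("lambda", lambda_grid)):
    print(name, [f"{value:.5g}" for value in grid])
```

A coarse log-spaced grid of this kind trades resolution for coverage: it samples each decade of the range roughly equally, which is why optima reported from it can look oddly specific.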

| Algorithm | Accuracy (%) | Total Cost | F1-Score (%) | Precision (%) | Sensitivity (%) | Prediction Speed (obs/s) | Training Time (s) |
|---|---|---|---|---|---|---|---|
| V4 | | | | | | | |
| Decision Trees | 61.5 | 154 | 60.7 | 60.4 | 61.5 | 31,000 | 56,524 |
| Naive Bayes | 49.0 | 204 | 43.5 | 46.7 | 49.0 | 150 | 94.506 |
| Support Vector Machines | 78.7 | 85 | 78.6 | 78.7 | 78.7 | 12,000 | 60,687 |
| K-Nearest Neighbors | 77.7 | 89 | 75.6 | 77.8 | 77.7 | 700 | 17.324 |
| Neural Network | 80.7 | 77 | 80.7 | 80.7 | 80.7 | 25,000 | 66,648 |
| R1 | | | | | | | |
| Decision Trees | 70.7 | 117 | 70.7 | 71.3 | 70.7 | 55,000 | 28.527 |
| Naive Bayes | 57.7 | 169 | 57.0 | 56.7 | 57.7 | 680 | 112.54 |
| Support Vector Machines | 87.0 | 52 | 86.9 | 86.9 | 87.0 | 11,000 | 4.3941 × 10⁵ |
| K-Nearest Neighbors | 79.7 | 81 | 79.1 | 80.4 | 79.7 | 650 | 2844.7 |
| Neural Network | 86.5 | 54 | 86.5 | 86.8 | 86.5 | 19,000 | 4.1896 × 10⁵ |
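
Accuracy and total cost in the table above are two views of the same count when every misclassification carries unit cost. The Python sketch below checks this relation for the reported rows. It rests on two assumptions not stated in this section: that total cost equals the number of misclassified observations, and that each stage was evaluated on 400 observations (a value inferred because it reproduces the reported accuracies to within rounding).

```python
# (stage, algorithm) -> (reported accuracy in %, reported total cost),
# copied from the performance table.
reported = {
    ("V4", "Decision Trees"): (61.5, 154),
    ("V4", "Naive Bayes"): (49.0, 204),
    ("V4", "Support Vector Machines"): (78.7, 85),
    ("V4", "K-Nearest Neighbors"): (77.7, 89),
    ("V4", "Neural Network"): (80.7, 77),
    ("R1", "Decision Trees"): (70.7, 117),
    ("R1", "Naive Bayes"): (57.7, 169),
    ("R1", "Support Vector Machines"): (87.0, 52),
    ("R1", "K-Nearest Neighbors"): (79.7, 81),
    ("R1", "Neural Network"): (86.5, 54),
}

N_OBS = 400  # assumption: inferred evaluation-set size, not stated in the text


def accuracy_from_cost(total_cost, n_obs):
    """Accuracy (%) when total cost is the number of misclassified observations."""
    return 100.0 * (1.0 - total_cost / n_obs)


# Largest gap between reported and derived accuracy across all rows.
max_gap = max(
    abs(accuracy_from_cost(cost, N_OBS) - acc) for acc, cost in reported.values()
)

for (stage, model), (acc, cost) in reported.items():
    derived = accuracy_from_cost(cost, N_OBS)
    print(f"{stage} {model}: reported {acc:.1f} %, derived {derived:.2f} %")
```

If the assumed observation count were wrong, the derived and reported accuracies would drift apart by more than one rounding step, so `max_gap` serves as a quick consistency check on the table.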

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Silva, T.L.d.; Devechio, F.d.F.d.S.; Tavares, M.S.; Regazzo, J.R.; Sardinha, E.J.d.S.; Altão, L.M.R.; Pagin, G.; Tech, A.R.B.; Baesso, M.M. Machine Learning in the Classification of RGB Images of Maize (Zea mays L.) Using Texture Attributes and Different Doses of Nitrogen. AgriEngineering 2025, 7, 317. https://doi.org/10.3390/agriengineering7100317
