Abstract

In agriculture, measuring leaf area is crucial for crop management. Various techniques exist for this measurement, ranging from direct to indirect approaches and from destructive to non-destructive techniques. Non-destructive approaches are favored because they preserve the plant’s integrity. Among these, several methods use leaf dimensions, such as width and length, to estimate leaf area with models that account for the particular shape of the leaves. Although this approach does not damage plants, it is labor-intensive because leaf dimensions must be measured manually. In contrast, some indirect non-destructive techniques that leverage convolutional neural networks can predict leaf area more quickly and autonomously. In this paper, we propose a new direct method that uses 3D point clouds constructed from semantic RGB-D (Red Green Blue and Depth) images, which are generated from RGB-D images by a semantic segmentation neural network. The key idea is that the leaf area is quantified by the number of points depicting the leaves. The method demonstrates high accuracy, with an R² value of 0.98 and an RMSE (Root Mean Square Error) of 3.05 cm². Here, the neural network’s role is to separate leaves from other plant parts so that the leaf area represented by the point clouds can be measured accurately, rather than to predict the total leaf area of the plant. The method is direct, precise, and non-invasive to sweet pepper plants, and it makes leaf area calculation easy. It can be implemented on laptops for manual use or integrated into robots for automated periodic leaf area assessments. This method holds promise for advancing our understanding of plant responses to environmental changes. We verified the method’s reliability and superior performance through experiments on individual leaves and whole plants.

1. Introduction

Chlorophyll pigments in leaves play an important role in plant photosynthesis. Leaves are primarily responsible for capturing light, exchanging gases, and regulating thermal conditions. Changes in environmental factors such as light and temperature can lead to changes in leaf area [1,2,3]. Whole-plant leaf area results from the interaction between the genotype and environmental conditions [4,5]. In addition, leaves are an important factor in determining crop yield [6,7]. Therefore, estimating leaf area is a fundamental task in plant science and plays a vital role in agriculture, ecology, and various research and practical applications. Accurate leaf area measurements contribute to better-informed decisions [5,8,9].

Methods for measuring leaf area fall into two types: destructive and non-destructive. Although destructive methods have the disadvantage of destroying the plant, they remain the reference and the most accurate approaches. In these methods, the leaves are detached, and every single leaf is then measured with boards or machines. Other popular approaches, used to estimate the area of a pile of leaves at once, are based on leaf weight, because there is a strong relationship between leaf area and dry or fresh weight [10]. A similar method that uses a different property, volume, was published recently [11]. This method achieved an R² of 0.99 and an RMSE of 2.7 mm. However, a destructive method cannot obtain the leaf area of the same plant at different times; it is only suitable for research or as a reference for other methods. Therefore, non-destructive methods receive more attention. Many researchers have studied the relationship between leaf area and the width and height of leaves for different plant types [6,12,13,14,15,16,17]. Mathematical models were built on this relationship, and they differ depending on the leaf morphology. These methods do not destroy leaves, but obtaining the whole leaf area of a plant takes time. Other non-destructive methods based on camera sensors and image processing are also noteworthy [18,19,20,21]. These methods can estimate the area not only of a single leaf but also of all of a plant’s leaves. Leaves are detected by the difference between their color and the colors of other objects. The weakness of these methods is that they cannot determine the area of obscured leaves. In addition, leaf colors may vary with the ambient light or leaf age.

With the development of deep learning, some methods have applied convolutional neural networks to obtain more accurate results [20,22,23]. In these studies, cameras were mounted above the plants and looked down at them. Convolutional neural networks were used to extract image features and return the leaf area (LA) values. The training datasets included RGB-D images and LA values obtained via the destructive method. The latest research on estimating sweet pepper LA, by T. Moon et al., reached an R² of 0.93 and an RMSE of 0.12 m² [23].

Besides applying neural networks, other studies are based on 3D reconstruction [24,25,26,27,28,29]. The main steps of these studies are: capturing many RGB-D images around the plants, removing the background by discarding non-green segments, segmenting leaves with various methods that do not use neural networks, and reconstructing the plants in 3D by SfM (structure from motion). Finally, LA values were estimated by counting the number of voxels of the 3D point clouds or by converting them to a surface area via a mesh-based calculation. Because the cameras were moved around the plants or multiple cameras were placed around them, most occluded leaves were detected. Although this approach has a high accuracy, with R² = 0.99 and RMSE = 3.23 cm², it takes a lot of time, and moving cameras around plants is impossible in sweet pepper green farms.

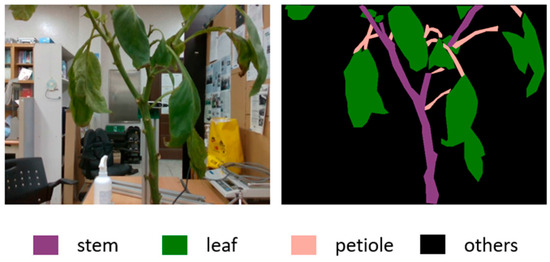

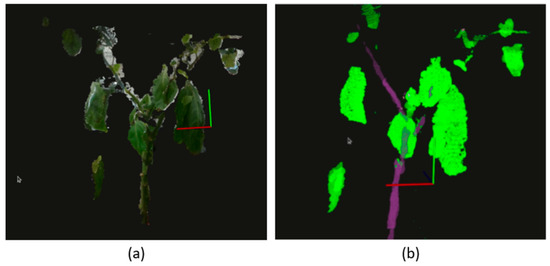

Following this trend, we developed a leaf area estimation method based on depth cameras and semantic segmentation neural networks. The advantages of neural networks and 3D reconstruction are combined to create a new high-performance system. Unlike previous studies, LA values are not predicted directly by the neural network. First, the neural network is used to recognize leaves and to create semantic images. Then, LA values are calculated from a semantic point cloud that is created from the semantic images and their corresponding depth images. This method achieves an R² of 0.98 and an RMSE of 3.05 cm². Figure 1 shows an RGB image and its semantic image created by the semantic segmentation neural network. Figure 2a shows an RGB point cloud and Figure 2b a semantic point cloud of a sweet pepper plant. Green, pink, and purple represent leaves, petioles, and stems, respectively. These point clouds reflect the sweet pepper plant’s true size at a specific resolution. In our application, we used a resolution of 0.001 m. Therefore, the number of green points can express the leaf area. This is the key idea of the method.

Figure 1. Semantic segmentation of sweet pepper plant parts.

Figure 2. 3D point clouds. (a) RGB point cloud, and (b) semantic 3D point cloud.

Our method can estimate the leaf area quickly, and it does not depend on leaf morphology. Its accuracy depends on the accuracy of the semantic segmentation neural network and of the depth camera. We reviewed the literature on semantic segmentation neural networks before choosing a suitable one. DeepLab V3+ is a well-known, high-performance neural network [30]; however, it is demanding in time and memory. In addition, this program will be installed on a robotic system to obtain the leaf area automatically, so a neural network that can run in real time is required. The MobileNet series can run in real time; however, their results did not meet our requirements [31,32,33]. In the task of recognizing plant parts, we recognize leaves, stems, and petioles, as in Figure 1. Based on the features of the plant parts, such as their thin and long shapes, and on the small number of objects to recognize, we proposed a semantic segmentation neural network that can run in real time with the same high performance as in previous research [34]. In addition, this neural network can leverage both RGB and depth images to enhance the results. The right image in Figure 1 is called a semantic image.

The depth images store the distance of objects from the camera. A point cloud can be created from the RGB and depth images by Equation (1). The values x, y, and z are the coordinates in 3D space. The scaling_factor and focal_length are intrinsic camera values. Figure 2 shows an example of an RGB point cloud created from RGB and depth images and a semantic point cloud created from semantic and depth images. The semantic point cloud is very useful, as it can help us find the petiole pruning point [35]. In this research, we leverage the semantic point cloud to estimate the leaf area.
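A common way to turn a depth image into 3D points is the pinhole camera model. The sketch below is a minimal illustration under that assumption and is not necessarily the exact form of Equation (1). The names `scaling_factor` and `focal_length` follow the text; the principal point `cx`, `cy`, the function name, and all numeric values are hypothetical.

```python
def depth_to_points(depth, labels, focal_length, cx, cy, scaling_factor):
    """Back-project a depth image into labeled 3D points (pinhole model).

    depth  : 2D list of raw depth values (0 means no measurement)
    labels : 2D list of the same shape with one class label per pixel
    Returns a list of (x, y, z, label) tuples in meters.
    """
    points = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, raw in enumerate(row):        # u: pixel column
            if raw == 0:                     # skip pixels without depth
                continue
            z = raw / scaling_factor         # raw depth units -> meters
            x = (u - cx) * z / focal_length
            y = (v - cy) * z / focal_length
            points.append((x, y, z, labels[v][u]))
    return points


# Toy 2x2 example; every number here is made up for illustration.
depth = [[1000, 0], [1000, 2000]]
labels = [["leaf", "stem"], ["leaf", "petiole"]]
cloud = depth_to_points(depth, labels, focal_length=500.0, cx=0.0, cy=0.0,
                        scaling_factor=1000.0)
```

Feeding the semantic image in as `labels` instead of the RGB colors is what would turn an RGB point cloud into a semantic one in this sketch.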

2. Materials and Methods

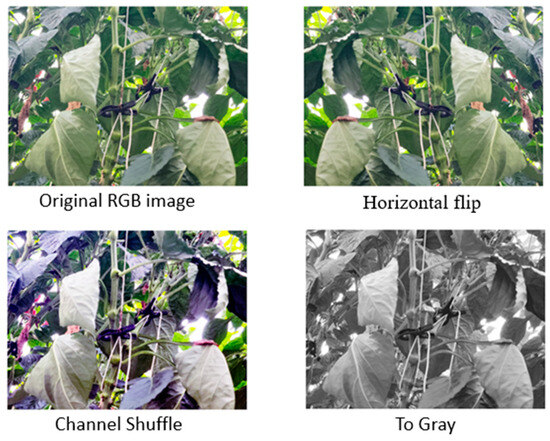

2.1. Building Dataset and Training Semantic Segmentation Neural Network

Before a semantic point cloud can be created to measure the leaf area, the neural network must be well trained. Sweet pepper is the study object in this research, so a sweet pepper dataset of more than 500 images was captured with a RealSense D435 camera for the training phase. These images were taken in green farms and of plants in the lab, from infancy to maturity. Each image had to include a stem and leaves. After obtaining the mask images, we applied various augmentation techniques to the RGB images, including blurring, horizontal flipping, scaling, graying, color channel noise, and brightness adjustments. Figure 3 presents an example of the augmentation techniques used for this dataset. Our training dataset comprises more than 6000 RGB images and 6000 depth images.
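Two of the listed augmentations can be sketched in a few lines. This is an illustrative sketch only, not the authors' pipeline: the helper names and pixel values are hypothetical, and it assumes that geometric changes such as flipping are applied identically to the RGB image, the depth image, and the mask so that pixels stay aligned, while photometric changes touch only the RGB image.

```python
def hflip(image):
    """Mirror a 2D image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def adjust_brightness(rgb, factor):
    """Scale every channel of an RGB image and clamp to the 0..255 range."""
    return [[tuple(min(255, max(0, round(c * factor))) for c in px) for px in row]
            for row in rgb]


# One-row toy images; values are invented for illustration.
rgb = [[(10, 20, 30), (200, 210, 220)]]
depth = [[500, 800]]
mask = [[1, 0]]

# Geometric change: the same flip is applied to RGB, depth, and mask.
rgb_f, depth_f, mask_f = hflip(rgb), hflip(depth), hflip(mask)
# Photometric change: only the RGB image is altered.
rgb_b = adjust_brightness(rgb, 1.5)
```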

Figure 3. Example of augmentation to generate training dataset.

The semantic segmentation neural network has two stages [34]. The first stage is a simple convolutional neural network that removes the background and detects easily recognized pixels. The second stage takes the first stage’s output as its input and generates the final output. Bottleneck residual blocks [32] and an Atrous Spatial Pyramid Pooling block [36] are employed in this stage.

The training program was written in Python 3.9 and uses the PyTorch 1.8 and TorchVision 1.10 libraries. The model was trained and tested on a machine with an Nvidia GeForce RTX 3090 GPU and CUDA version 11.2. Each stage was trained for 50 epochs, and the model reached a best IoU (intersection over union) of 0.65 at 138.2 fps (frames per second).
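For readers unfamiliar with the metric, the sketch below shows how intersection over union is commonly computed for a segmentation result. It is a generic illustration with hypothetical labels; whether the reported value is a per-class or class-averaged IoU is not stated in the text, so both are shown.

```python
def class_iou(pred, truth, cls):
    """IoU of one class between two flattened label sequences."""
    inter = sum(1 for p, t in zip(pred, truth) if p == cls and t == cls)
    union = sum(1 for p, t in zip(pred, truth) if p == cls or t == cls)
    return inter / union if union else float("nan")


def mean_iou(pred, truth, classes):
    """Average IoU over the classes that occur in pred or truth."""
    scores = [class_iou(pred, truth, c) for c in classes]
    scores = [s for s in scores if s == s]  # drop NaN (class absent from both)
    return sum(scores) / len(scores)


# Toy labels: 0 = background, 1 = leaf, 2 = stem (hypothetical encoding).
truth = [0, 1, 1, 1, 2, 2]
pred = [0, 1, 1, 0, 2, 1]
leaf_iou = class_iou(pred, truth, 1)          # 2 shared pixels / 4 in the union
overall = mean_iou(pred, truth, [0, 1, 2])
```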

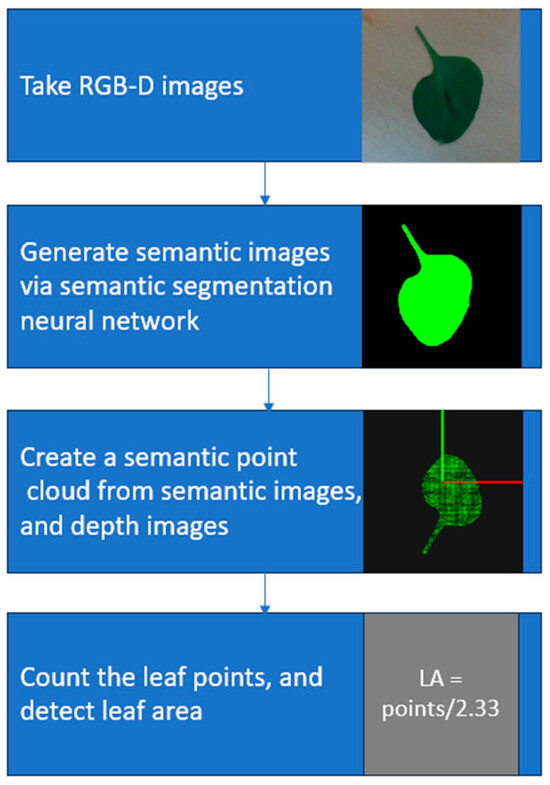

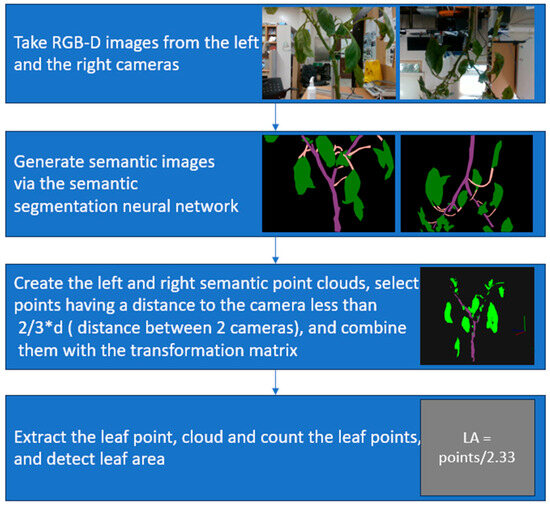

2.2. Estimating Single Leaf Area

The trained model was then used in the leaf area estimation process. To obtain the area of a single leaf, an RGB-D camera was used. This process is described in Figure 4. First, the camera takes RGB-D images, which are passed to the semantic segmentation neural network to obtain the semantic images. Then, the semantic point cloud is created from the semantic image and the corresponding depth image. We used the PCL library to store this point cloud [37]. With the resolution set to 0.001 m, neighboring points are spaced 1 mm apart. Therefore, the number of green points is proportional to the leaf area, with a constant ratio. In other words, the area of the leaf can be found by counting the leaf points.
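The counting step can be illustrated with a simple voxel grid. The sketch below is a pure-Python stand-in for a voxel-grid filter and is not the authors' PCL code; the function name, the label strings, and the sample coordinates are assumptions made for illustration.

```python
def count_leaf_points(points, resolution=0.001, leaf_label="leaf"):
    """Count occupied voxels of size `resolution` that contain leaf points.

    points: iterable of (x, y, z, label) tuples in meters.
    """
    voxels = set()
    for x, y, z, label in points:
        if label != leaf_label:
            continue
        # Integer voxel index; points falling in the same cell collapse to one entry.
        voxels.add((int(x // resolution), int(y // resolution), int(z // resolution)))
    return len(voxels)


# Hypothetical points: the first two leaf points share a 1 mm cell.
sample = [
    (0.0002, 0.0002, 0.5001, "leaf"),
    (0.0007, 0.0003, 0.5004, "leaf"),
    (0.0015, 0.0002, 0.5001, "leaf"),
    (0.0015, 0.0002, 0.5001, "stem"),
]
n_leaf = count_leaf_points(sample)
```

Because each occupied cell is counted once, the result depends on the surface covered by the leaf rather than on how densely the camera happened to sample it.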

Figure 4. Steps to estimate leaf area. The red and green lines are the Ox- and Oy-axes of the 3D coordinates.

2.3. Estimating Leaf Area for Both Sides of the Plant

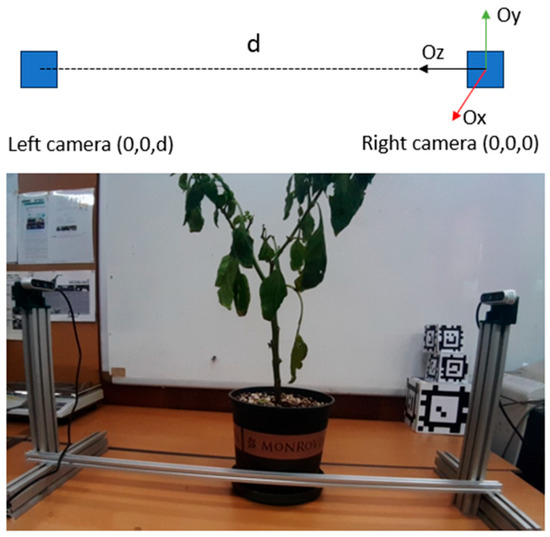

With one camera, leaf area can be estimated easily regardless of the leaf shape, because the semantic segmentation neural network can recognize leaves in various forms. However, obtaining the whole leaf area of a plant by adding up the areas of individual leaves takes a lot of time. Moreover, one camera can capture only one side of the plant at a time. Building a 3D point cloud by moving the camera around the plant may accumulate errors during 3D reconstruction and may not be feasible in a green farm. We therefore propose a method that uses two RGB-D cameras facing each other to capture both sides of a plant at the same time, as shown in Figure 5. If the right camera is positioned at (0, 0, 0) of its coordinate system, the left camera is placed at (0, 0, d), where d is the distance between the two cameras. To ensure that both cameras capture the plant, the left camera is rotated by 180 degrees. This enables us to calculate the transformation matrix between the two cameras in Equation (2).
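A transformation of this kind can be written as a 4x4 homogeneous matrix. The sketch below is one plausible construction, not a reproduction of Equation (2): it assumes the 180-degree rotation is about the camera's vertical (y) axis, which the text does not state, and the function names are hypothetical.

```python
import math


def left_to_right_transform(d, angle_deg=180.0):
    """4x4 homogeneous transform: rotation about the y-axis plus a shift of d along z."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [
        [c, 0.0, s, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [-s, 0.0, c, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(T, point):
    """Apply a 4x4 homogeneous transform to an (x, y, z) point."""
    x, y, z = point
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


T = left_to_right_transform(d=0.93)            # 930 mm camera spacing, in meters
p_right = apply_transform(T, (0.1, 0.2, 0.3))  # approximately (-0.1, 0.2, 0.63)
```

Under this assumption a point seen by the left camera keeps its height, has its horizontal coordinate mirrored, and has its depth measured from the opposite end of the baseline.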

Figure 5. Experimental setup of leaf area estimation using two RGB-D cameras.

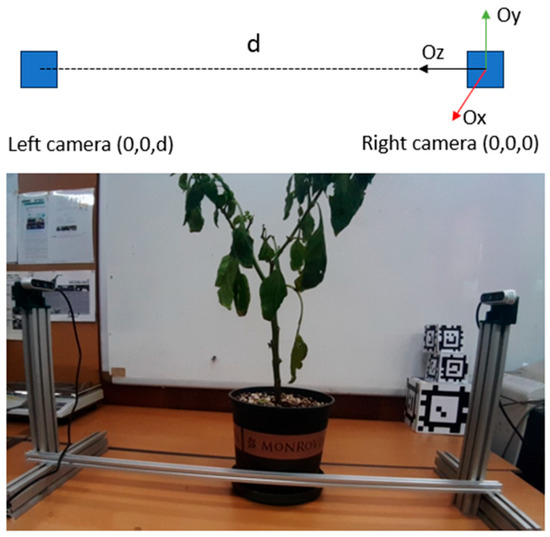

Figure 6 shows the steps to estimate the leaf area of the whole plant. Images are taken by both cameras and passed through the neural network module to obtain semantic images. The semantic images are then combined with their respective depth images to create semantic 3D point clouds. During this process, only points at a distance of less than 2/3*d are kept. This removes the background and retains the information about the plant between the two cameras. Then, the two separate semantic point clouds are merged using the transformation matrix. Because the cameras capture the two sides of the plant, the two point clouds share many of the same leaves and other objects, but seen from opposite sides. Therefore, after integration there can be a small gap between the two point clouds of the same object. To improve the integration, we increased the point cloud resolution value after merging, so that the points describing the two sides of an object collapse into one point. We then extracted the leaf points; the number of leaf points obtained is proportional to the leaf area and depends on the point cloud resolution. To summarize, we used two cameras to capture images of a plant from both sides, and a transformation matrix was calculated to merge the two semantic 3D point clouds obtained from the images. This allowed us to estimate the whole leaf area of the plant.
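The merging steps can be sketched end to end as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the distance test uses the depth coordinate z, the left camera is assumed to be rotated 180 degrees about the y-axis, and the function name, labels, and sample points are hypothetical.

```python
def merge_leaf_count(right_pts, left_pts, d, resolution, leaf_label="leaf"):
    """Merge two labeled clouds and count occupied leaf voxels.

    right_pts / left_pts: lists of (x, y, z, label) in each camera's own frame (meters).
    """
    max_range = 2.0 * d / 3.0                 # keep only points closer than 2/3 * d
    merged = []
    for x, y, z, label in right_pts:
        if z < max_range:
            merged.append((x, y, z, label))
    for x, y, z, label in left_pts:
        if z < max_range:
            merged.append((-x, y, d - z, label))   # left frame -> right frame
    voxels = set()
    for x, y, z, label in merged:
        if label == leaf_label:
            voxels.add((int(x // resolution), int(y // resolution), int(z // resolution)))
    return len(voxels)


# The same leaf spot seen from both sides, about 2 mm apart after merging,
# plus one background point and one stem point (all values invented).
right = [(0.0101, 0.0201, 0.4601, "leaf"), (0.0, 0.0, 0.9, "leaf"),
         (0.0101, 0.0201, 0.4601, "stem")]
left = [(-0.0102, 0.0202, 0.4678, "leaf")]

fine = merge_leaf_count(right, left, d=0.93, resolution=0.001)
coarse = merge_leaf_count(right, left, d=0.93, resolution=0.005)
```

In this toy case the 1 mm grid keeps the two sides of the leaf as separate points, while the coarser 5 mm grid collapses them into one, which is the effect the merging step relies on.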

Figure 6. Process to estimate the whole leaf area. The red and green lines are the Ox- and Oy-axes of 3D coordinates.

3. Experiments and Results

3.1. Experiment on Single Leaf

We used an Intel RealSense D435 camera to take RGB-D images. We wrote a program based on ROS with three modules. The first module controlled the camera, took images, and published them through a camera topic. The second module read the RGB images, converted them to semantic images, and published them to the semantic image topic. The third module created semantic 3D point clouds from the semantic and depth images and counted the leaf points. The 3D point clouds were displayed using the PCL library.

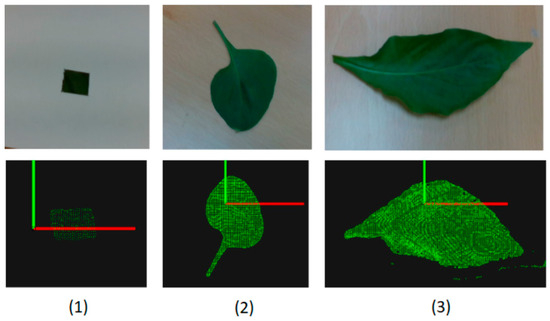

Our experiments focus on the relationship between the number of points and the actual area. We set up experiments to estimate leaf area with 21 samples. For the first sample, we cut a 400 mm² piece from a leaf and counted its points with the application. The second sample was a flat leaf, and the third was a curved leaf. Figure 7 shows that each sample’s semantic 3D point cloud closely resembles the real leaf, so the prediction error is negligible. Table 1 shows the number of points in the semantic 3D point cloud of the three experiments. There is a correlation between the number of points and the leaf area measured with the board. In the third experiment, the curved leaf was difficult to measure with the board, so the board-based area could be inaccurate. In contrast, the RGB-D images can describe curved leaves as well as flat leaves. Therefore, the number of points in the 3D point cloud was much higher than the board-based area would suggest. We conducted more experiments to estimate the average ratio between the number of points and the real area, using leaves of different sizes to obtain a reliable result.

Figure 7. Three samples for single-leaf area estimation experiments. The red and green lines are the Ox- and Oy-axes of the 3D coordinates. (1)–(3) are the first three samples: (1) is a 2 cm² rectangular leaf piece, (2) is a flat leaf, and (3) is a curved leaf.

Table 1. Leaf area estimation and number of points in 3D semantic point clouds.

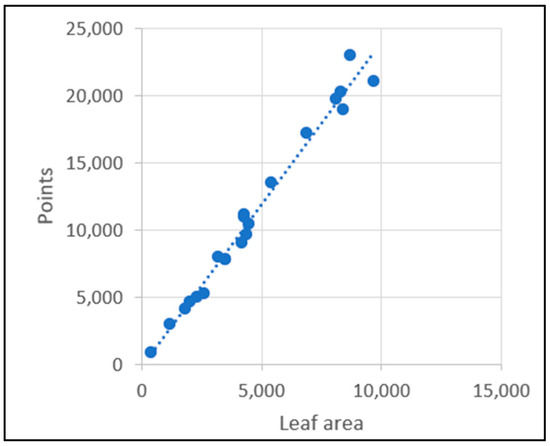

Figure 8 shows the relationship between the number of points and the leaf area values as a linear function. The best ratio NP/LA, where NP is the number of points, is 2.33. With this ratio, the leaf area in these experiments can be obtained quickly by the equation LA = NP/2.33. The leaf area values estimated from the semantic point cloud with this equation are listed in the last column of Table 1. Taking the LA measured with the board as the true leaf area, the RMSE of this method is 3.05 cm² and R² = 0.98.
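One way to obtain such a ratio and the two error measures is sketched below. It assumes a least-squares line through the origin, which the text does not specify, and it uses invented numbers rather than the values from Table 1; all function and variable names are hypothetical.

```python
import math


def fit_ratio(points, areas):
    """Least-squares slope k of NP = k * LA for a line through the origin."""
    return sum(n * a for n, a in zip(points, areas)) / sum(a * a for a in areas)


def rmse(truth, estimate):
    """Root mean square error between reference and estimated values."""
    return math.sqrt(sum((t - e) ** 2 for t, e in zip(truth, estimate)) / len(truth))


def r_squared(truth, estimate):
    """Coefficient of determination of the estimates against the reference."""
    mean = sum(truth) / len(truth)
    ss_res = sum((t - e) ** 2 for t, e in zip(truth, estimate))
    ss_tot = sum((t - mean) ** 2 for t in truth)
    return 1.0 - ss_res / ss_tot


# Hypothetical measurements, NOT the values from Table 1.
board_area = [10.0, 20.0, 40.0]     # reference leaf area from the board
num_points = [23.0, 47.0, 93.0]     # leaf points counted in the cloud

k = fit_ratio(num_points, board_area)       # plays the role of NP/LA
estimated = [n / k for n in num_points]     # LA = NP / k
error = rmse(board_area, estimated)
fit_quality = r_squared(board_area, estimated)
```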

Figure 8. Regressed leaf areas.

3.2. Experiment for the Whole Leaf Area

These experiments focus on the stability of using two cameras to estimate the leaf area. We used one plant but moved it around, and we compared the number of points obtained in each case. The experiments were set up as in Figure 5. The distance between the two cameras was 930 mm.

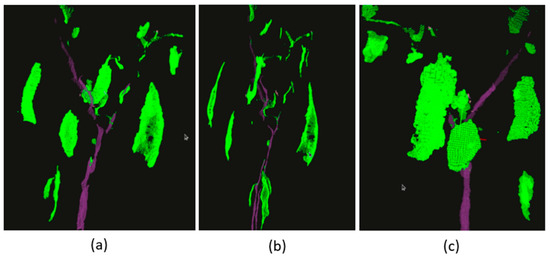

Figure 9b shows the semantic 3D point cloud created by the two cameras. The errors in integrating the two point clouds are visibly negligible. The final semantic 3D point cloud represents both sides of the plant and reveals many leaves that would be hidden when viewed from only one side. Table 2 presents the number of leaf points in three cases. The differences between them were small, which indicates that the proposed method using two cameras can estimate the leaf area reliably.

Figure 9. The visual result of creating a semantic 3D point cloud from two RGB-D cameras. Images (a–c) are the front, left, and back viewpoints, respectively.

Table 2. Leaf area estimation results using 3D semantic point clouds.

3.3. Discussion

The experiments have demonstrated that using a 3D semantic point cloud to estimate leaf area has several advantages. Firstly, there is no need to cut down leaves. By simply taking photos and performing simple operations, leaf area can be estimated. If one camera is used, the total leaf area can be estimated by summing the area of each leaf. However, this process is time-consuming and must be completed by humans. In contrast, using two cameras makes it easy to estimate the leaf area of the whole plant. This type of system can be installed on a robot to automatically obtain the whole plant leaf area. Measuring leaf area is usually completed weekly, as it is not only time-consuming but also requires a lot of effort. Moreover, it is typically only used for research purposes. However, with this method, we have the opportunity to create an automatic leaf area estimation system that is more accurate and can obtain leaf area in a shorter period. This, in turn, enables farmers to determine the plant’s response to current environmental changes and predict future productivity. This can help them make timely decisions. Leaf area estimation is no longer only used in research but can also be employed in smart farms to help take better care of plants.

Our method uses neural networks for a different purpose than other research. Here, the convolutional neural network is used to extract features for leaf recognition at the pixel level, which is a strength of convolutional neural networks. Many well-known semantic segmentation neural networks perform this task, whereas using convolutional neural networks to return a numerical value directly is less common. We attribute the higher R² value of this method, compared with other methods using convolutional neural networks, to this difference.

An RGB-D camera combines a standard RGB camera with a depth sensor in a single device. The depth sensor typically uses time-of-flight, structured light, or stereo-vision technology to measure the distance to objects in the scene. In this research, the RealSense D435 camera, which measures depth by active infrared stereo vision, is used to obtain all leaf information. Leaves can be reconstructed into a 3D point cloud from the depth information. Therefore, the more accurate the depth sensor, the more precise the 3D point cloud. With the help of the semantic segmentation neural network, the leaf point cloud can be extracted and the leaf area value can be calculated by counting the points, regardless of leaf morphology.

The second factor that can have a significant impact on the leaf area value is the performance of the semantic segmentation neural network. Errors in leaf recognition directly affect leaf area values. However, this is a strong point for this method since it makes this method independent of leaf morphologies. We can apply this method to various types of plants by changing the training dataset, whereas other methods require numerous experiments to find new mathematical formulas.

The equation for computing leaf area changes with the point cloud resolution. The larger the resolution value (that is, the coarser the point spacing), the lower the number of points. The ratio between the number of points and the leaf area must therefore be determined again for each new resolution.
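The dependence on resolution can be seen on a synthetic example. The sketch below samples an idealized flat 20 mm x 20 mm patch, which is an assumption made purely for illustration, and counts the occupied voxels at three hypothetical resolution values.

```python
def occupied_voxels(points, resolution):
    """Number of distinct voxels of size `resolution` hit by the points."""
    return len({(int(x // resolution), int(y // resolution), int(z // resolution))
                for x, y, z in points})


# Synthetic flat 20 mm x 20 mm patch sampled every 0.25 mm
# (half-step offset keeps samples away from voxel borders).
step = 0.00025
patch = [((i + 0.5) * step, (j + 0.5) * step, 0.5001)
         for i in range(80) for j in range(80)]

counts = {res: occupied_voxels(patch, res) for res in (0.001, 0.002, 0.004)}
# counts -> {0.001: 400, 0.002: 100, 0.004: 25}
```

For this flat patch, doubling the resolution value divides the point count by four, so a ratio calibrated at one resolution cannot be reused at another. Real leaves are curved and noisy, so the scaling is only approximate there.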

Although two cameras can gather information on two sides of the plant, they cannot obtain all the plant information if the plant is tall. Thus, it is necessary to develop a method that can create a 3D point cloud based on image sequences taken from cameras while they are moving upwards to gather complete plant information.

Throughout the history of scientific attempts to estimate leaf area, there has been a need to flatten individual leaves and analyze them one at a time. This process is time-consuming and labor-intensive, even when high-speed sensors or equipment are used. Additionally, errors can occur when humans carry out such operations over a long period, for example when measuring the area of dense samples. These practical errors are difficult to quantify. With our proposed technique, an automatic system that determines leaf area on the farm can be built. Results are returned periodically, and manual errors are eliminated.

4. Conclusions

We have proposed a novel method for estimating the leaf area of sweet pepper plants. It is a direct method based on deep learning and 3D reconstruction. A semantic segmentation neural network was used to recognize leaves and generate semantic images. Then, with the two-camera system, a point cloud containing only leaves was built. The leaf area was estimated from the number of points.

Our experiments have demonstrated a correlation between the number of points and the actual leaf area. The method shows higher performance than other methods using convolutional neural networks. It also has several other advantages. First, it saves time because the process of building a 3D point cloud of the leaves can run in real time. The whole process is done by machine, which reduces the errors that humans often make when working with large numbers of samples. Second, it is independent of leaf morphology, making it applicable to a wide variety of plant types. The leaves do not have to be removed for measurement, which is a significant benefit. Finally, this method paves the way for a robotic system that can measure leaf area automatically, similar to other environmental sensors.

In the future, we plan to conduct more experiments with various types of leaf area measurements to determine the optimal point-to-area ratio for the best results. Furthermore, we are dedicated to enhancing this application by enabling it to estimate the total leaf area of a plant through the upward movement of cameras.

Author Contributions

Conceptualization, T.T.H.G. and Y.-J.R.; data curation, T.T.H.G.; formal analysis, T.T.H.G.; methodology, T.T.H.G.; programming, T.T.H.G.; investigation, Y.-J.R.; resources, Y.-J.R.; supervision, Y.-J.R.; review and editing, T.T.H.G. and Y.-J.R.; project administration, Y.-J.R.; funding acquisition, Y.-J.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Korea Institute of Planning and Evaluation for Technology in Food, Agriculture and Forestry (IPET) and Korea Smart Farm R&D Foundation (KosFarm) through the Smart Farm Innovation Technology Development Program, funded by the Ministry of Agriculture, Food and Rural Affairs (MAFRA), and Ministry of Science and ICT (MSIT), Rural Development Administration (RDA) (421032-04-2-HD060).

Data Availability Statement

The data presented in this study are available on request from the corresponding author (the data will not be available until the project is finished).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Fanourakis, D.; Hyldgaard, B.; Giday, H.; Bouranis, D.; Körner, O.; Nielsen, K.L.; Ottosen, C.-O. Differential effects of elevated air humidity on stomatal closing ability of Kalanchoë blossfeldiana between the C3 and CAM states. Environ. Exp. Bot. 2017, 143, 115–124.

2. Fauset, S.; Freitas, H.C.; Galbraith, D.R.; Sullivan, M.J.; Aidar, M.P.; Joly, C.A.; Phillips, O.L.; Vieira, S.A.; Gloor, M.U. Differences in leaf thermoregulation and water use strategies between three co-occurring Atlantic forest tree species. Plant Cell Environ. 2018, 41, 1618–1631.

3. Zou, J.; Zhang, Y.; Zhang, Y.; Bian, Z.; Fanourakis, D.; Yang, Q.; Li, T. Morphological and physiological properties of indoor cultivated lettuce in response to additional far-red light. Sci. Hortic. 2019, 257, 108725.

4. Honnaiah, P.A.; Sridhara, S.; Gopakkali, P.; Ramesh, N.; Mahmoud, E.A.; Abdelmohsen, S.A.; Alkallas, F.H.; El-Ansary, D.O.; Elansary, H.O. Influence of sowing windows and genotypes on growth, radiation interception, conversion efficiency and yield of guar. Saudi J. Biol. Sci. 2021, 28, 3453–3460.

5. Jo, W.J.; Shin, J.H. Effect of leaf-area management on tomato plant growth in greenhouses. Hortic. Environ. Biotechnol. 2020, 61, 981–988.

6. Keramatlou, I.; Sharifani, M.; Sabouri, H.; Alizadeh, M.; Kamkar, B. A simple linear model for leaf area estimation in Persian walnut (Juglans regia L.). Sci. Hortic. 2015, 184, 36–39.

7. Li, S.; van der Werf, W.; Zhu, J.; Guo, Y.; Li, B.; Ma, Y.; Evers, J.B. Estimating the contribution of plant traits to light partitioning in simultaneous maize/soybean intercropping. J. Exp. Bot. 2021, 72, 3630–3646.

8. Huang, J.; Tian, L.; Liang, S.; Ma, H.; Becker-Reshef, I.; Huang, Y.; Su, W.; Zhang, X.; Zhu, D.; Wu, W. Improving winter wheat yield estimation by assimilation of the leaf area index from Landsat TM and MODIS data into the WOFOST model. Agric. For. Meteorol. 2015, 204, 106–121.

9. Fiorani, F.; Schurr, U. Future scenarios for plant phenotyping. Annu. Rev. Plant Biol. 2013, 64, 267–291.

10. Huang, W.; Su, X.; Ratkowsky, D.A.; Niklas, K.J.; Gielis, J.; Shi, P. The scaling relationships of leaf biomass vs. leaf surface area of 12 bamboo species. Glob. Ecol. Conserv. 2019, 20, e00793.

11. Haghshenas, A.; Emam, Y. Accelerating leaf area measurement using a volumetric approach. Plant Methods 2022, 18, 61.

12. Sala, F.; Arsene, G.G.; Iordănescu, O.; Boldea, M. Leaf area constant model in optimizing foliar area measurement in plants: A case study in apple tree. Sci. Hortic. 2015, 193, 218–224.

13. Sriwijaya, U.; Sriwijaya, U. Non-destructive leaf area (Capsicum chinense Jacq.) estimation in habanero chili. Int. J. Agric. Technol. 2022, 18, 633–650.

14. Wei, H.; Li, X.; Li, M.; Huang, H. Leaf shape simulation of castor bean and its application in nondestructive leaf area estimation. Int. J. Agric. Biol. Eng. 2019, 12, 135–140.

15. Koubouris, G.; Bouranis, D.; Vogiatzis, E.; Nejad, A.R.; Giday, H.; Tsaniklidis, G.; Ligoxigakis, E.K.; Blazakis, K.; Kalaitzis, P.; Fanourakis, D. Leaf area estimation by considering leaf dimensions in olive tree. Sci. Hortic. 2018, 240, 440–445.

16. Yu, X.; Shi, P.; Schrader, J.; Niklas, K.J. Nondestructive estimation of leaf area for 15 species of vines with different leaf shapes. Am. J. Bot. 2020, 107, 1481–1490.

17. Cho, Y.Y.; Oh, S.; Oh, M.M.; Son, J.E. Estimation of individual leaf area, fresh weight, and dry weight of hydroponically grown cucumbers (Cucumis sativus L.) using leaf length, width, and SPAD value. Sci. Hortic. 2007, 111, 330–334.

18. Campillo, C.; García, M.I.; Daza, C.; Prieto, M.H. Study of a non-destructive method for estimating the leaf area index in vegetable crops using digital images. HortScience 2010, 45, 1459–1463.

19. Liu, H.; Ma, X.; Tao, M.; Deng, R.; Bangura, K.; Deng, X.; Liu, C.; Qi, L. A plant leaf geometric parameter measurement system based on the android platform. Sensors 2019, 19, 1872.

20. Schrader, J.; Pillar, G.; Kreft, H. Leaf-IT: An Android application for measuring leaf area. Ecol. Evol. 2017, 7, 9731–9738.

21. Easlon, H.M.; Bloom, A.J. Easy Leaf Area: Automated digital image analysis for rapid and accurate measurement of leaf area. Appl. Plant Sci. 2014, 2, 1400033.

22. Zhang, L.; Xu, Z.; Xu, D.; Ma, J.; Chen, Y.; Fu, Z. Growth monitoring of greenhouse lettuce based on a convolutional neural network. Hortic. Res. 2020, 7, 124.

23. Moon, T.; Kim, D.; Kwon, S.; Ahn, T.I.; Son, J.E. Non-Destructive Monitoring of Crop Fresh Weight and Leaf Area with a Simple Formula and a Convolutional Neural Network. Sensors 2022, 22, 7728.

24. Xia, C.; Wang, L.; Chung, B.K.; Lee, J.M. In situ 3D segmentation of individual plant leaves using a RGB-D camera for agricultural automation. Sensors 2015, 15, 20463–20479.

25. Zhang, Y.; Teng, P.; Shimizu, Y.; Hosoi, F.; Omasa, K. Estimating 3D leaf and stem shape of nursery paprika plants by a novel multi-camera photography system. Sensors 2016, 16, 874.

26. Bailey, B.N.; Mahaffee, W.F. Rapid measurement of the three-dimensional distribution of leaf orientation and the leaf angle probability density function using terrestrial LiDAR scanning. Remote Sens. Environ. 2017, 194, 63–76.

27. Itakura, K.; Hosoi, F. Automatic leaf segmentation for estimating leaf area and leaf inclination angle in 3D plant images. Sensors 2018, 18, 3576.

28. Ando, R.; Ozasa, Y.; Guo, W. Robust surface reconstruction of plant leaves from 3D point clouds. Plant Phenomics 2021, 2021, 3184185.

29. Paulus, S. Measuring crops in 3D: Using geometry for plant phenotyping. Plant Methods 2019, 15, 103.

30. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2018; Volume 11211, pp. 833–851.

31. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

32. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

33. Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.-C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

34. Giang, T.T.H.; Khai, T.Q.; Im, D.; Ryoo, Y. Fast Detection of Tomato Sucker Using Semantic Segmentation Neural Networks Based on RGB-D Images. Sensors 2022, 22, 5140.

35. Giang, T.T.H.; Ryoo, Y.J.; Im, D.Y. 3D Semantic Point Clouds Construction based on ORB-SLAM3 and ICP Algorithm for Tomato Plants. In Proceedings of the 2022 Joint 12th International Conference on Soft Computing and Intelligent Systems and 23rd International Symposium on Advanced Intelligent Systems (SCIS&ISIS), Ise, Japan, 29 November–2 December 2022; pp. 2–4.

36. Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 40, 834–848.

37. Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; Volume 74, pp. 1–4.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).