Abstract

Precision agriculture requires accurate methods for classifying crops and soil cover in agricultural production areas. This study evaluates three machine learning-based classifiers for identifying intercropped forage cactus in irrigated areas using Unmanned Aerial Vehicle (UAV) imagery. A comparative analysis between multispectral and visible Red-Green-Blue (RGB) sample spaces was conducted, followed by an analysis of the efficiency of the Gaussian Mixture Model (GMM), K-Nearest Neighbors (KNN), and Random Forest (RF) algorithms. The classification targets, in the Brazilian semiarid region, were exposed soil, mulch-covered soil, developed and underdeveloped forage cactus, moringa, and gliricidia. The KNN and RF algorithms outperformed GMM and did not differ significantly from each other according to the kappa index in either the multispectral or the RGB sample space. GMM showed lower performance, with kappa index values of 0.82 and 0.78 (multispectral and RGB, respectively), compared with 0.86 and 0.82 for both RF and KNN. KNN and RF achieved individual accuracy rates above 85% in both sample spaces. Overall, KNN performed best in the RGB sample space, whereas RF performed best in the multispectral sample space. Although multispectral images yielded better performance, machine learning algorithms applied to RGB samples produced promising results for crop classification.

1. Introduction

Water scarcity and food security are recurring challenges in the Brazilian semiarid region, where reusing wastewater in agriculture shows high potential [1,2,3]. In addition, conservation soil management and intercropping allow better use of water from rainfall and irrigation systems [2,4]. Intercropping can improve water use efficiency, enhance the sustainability of agricultural production, and increase the protein bank [5]. Among the crops with high potential and resilience in the Brazilian semiarid environment, forage cactus stands out: when irrigated and managed with conservation techniques, it grows vigorously and is highly productive, with positive results when intercropped with other crop species [6].

Accurate identification of crop types is necessary for estimating cultivated area, predicting yield, and determining crop water requirements [7]. Detailed monitoring of agricultural lands is pivotal in precision agriculture, contributing to higher crop production and water conservation [8]. Within precision agriculture, remote sensing provides crucial information for monitoring natural resources, including crop growth, land use, soil moisture, plant health, and crop forecasting [9]. For widespread adoption in agriculture, it is essential to rely on measured data and to integrate data sources to ensure practical robustness [10]. Because of their low flight altitude, Unmanned Aerial Vehicles (UAVs) capture aerial images with high spatial resolution [12,15,16,17], and UAVs equipped with multispectral (MS) sensors acquire high-resolution data that capture the spatial variability of attributes and crops [11,12,13]. Cao et al. [14] compared visible Red-Green-Blue (RGB) and multispectral UAV imagery for Stay Green (SG) phenotyping of diversified wheat germplasm and found that, although RGB images provided valuable information, spectral indices containing the red edge or near-infrared band were more effective for proper crop classification.

The adoption of Artificial Intelligence (AI) techniques can optimize productivity and irrigation management by identifying zones requiring intervention and reducing water wastage [18]. Machine learning can also save resources, for example in autonomous irrigation, where the algorithm adjusts irrigation volume and timing to crop needs, thereby enhancing water productivity and agricultural sustainability [19]. Such applications are increasingly strategic for achieving the Sustainable Development Goals [20].

Studies such as Yadav et al. [21] highlight the potential of remote sensing for monitoring crop health, controlling weeds, and estimating evapotranspiration. Iqbal et al. [22] developed machine learning (ML) applications for crop classification to identify invasive plants in Pakistan, demonstrating the high performance of the Gaussian Mixture Model (GMM), support vector machine (SVM), and Random Forest (RF) algorithms. Sivakumar and TYJ [23] detected weeds in high-resolution images, emphasizing the potential of UAVs in agricultural research. Zhang et al. [24] used UAV hyperspectral data to investigate methods for crop classification (16 crop species) and growth status monitoring (tea plants and rice), including K-Nearest Neighbors (KNN), RF, and a genetic algorithm coupled with a support vector machine (GA-SVM). The authors highlight that mixed crop planting or mixed crop growth status represents a complex scenario, particularly because overlapping leaves, stems, and other plant structures in intercropping make it difficult to distinguish different crops in the image. This complexity may cause confusion during classification and thus requires machine learning algorithms for proper image analysis.

Using spectral responses for crop classification allows management strategies to be adjusted to enhance productivity, particularly in intercropped systems. Recent research has employed multispectral UAV remote sensing, such as the study by Lourenço et al. [12] in highly heterogeneous fields, and has integrated machine learning with remote sensing for agricultural mapping, as in Iqbal et al. [22]. Nevertheless, studies that jointly apply artificial intelligence and UAV images to crop classification are still at an early stage. In particular, Machine Learning (ML) applications are needed to interpret multispectral and RGB UAV images for the automatic classification of intercropped forage cactus in irrigated areas of the Brazilian semiarid region.

In this context, this study aims to: (i) evaluate the performance of Machine Learning (ML) algorithms for the automatic classification of intercropped fields in the Brazilian semiarid region and compare multispectral and RGB sample spaces; and (ii) classify different crops and development levels of forage cactus.

2. Materials and Methods

2.1. The Study Area

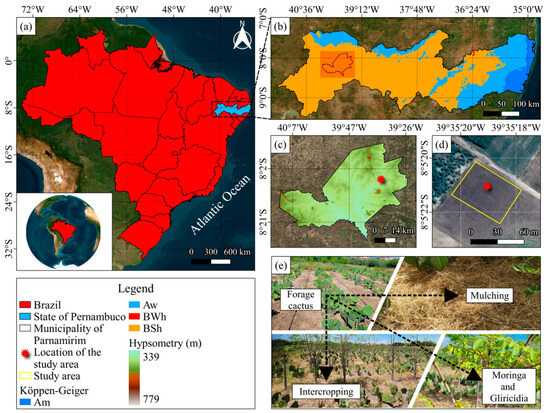

The research was conducted at Primavera Farm, in the municipality of Parnamirim, in the semiarid region of Pernambuco State, Brazil, within the Brígida River Basin (Figure 1). The soil is classified as a Fluvisol [25], the topography is flat, and the region lies in the Caatinga biome. Figure 1d shows the 0.25 ha experimental plot, and Figure 1e shows the intercropping of forage cactus (Opuntia stricta (Haw.) Haw.) with gliricidia (Gliricidia sepium) and moringa (Moringa oleifera). The experimental area is part of a scientific project carried out by the Federal Rural University of Pernambuco addressing water reuse for irrigation and entrepreneurial activities, helping local farmers cope with water scarcity in the Brazilian semiarid region.

Figure 1.

Location of the study area (a), climatic classification (b), hypsometry (c), experimental plot (d), and images of the intercropped system (e). Aw: tropical savanna climate with a dry winter season; BWh: hot arid climate; BSh: hot semiarid tropical climate with a defined dry season.

Forage cactus was planted in May 2021 and initially grown without irrigation. Irrigation with treated wastewater began in November 2021, when the intercropped moringa and gliricidia were also planted. Forage cactus was planted at a spacing of 2.5 × 0.5 m (double rows), totaling ~13,300 plants ha−1. Gliricidia and moringa were planted with a 1.0 m spacing between rows, totaling ~4000 plants ha−1. These crops were arranged in rows positioned 1.25 m from the forage cactus rows in the intercropped plots. The plot comprised eleven 6 m long rows: six of forage cactus and five of intercrops. Two soil cover conditions were considered: bare soil and mulch composed of plant-residue straw applied at 8 Mg ha−1. Treated domestic effluent from the Sewage Treatment Plant operated by the “Companhia Pernambucana de Saneamento” (COMPESA) was used for irrigation.

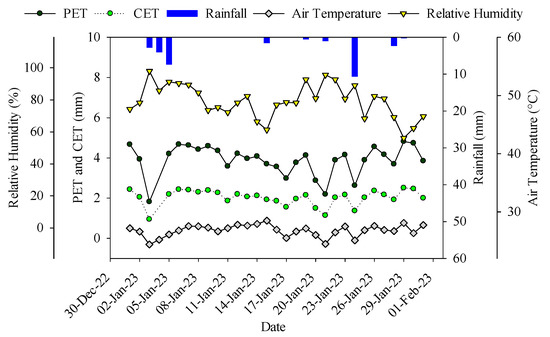

The regional climate is classified as tropical semiarid (BShw), with a wet season from November to April [26]. The average temperature is 26 °C, mean annual precipitation is 569 mm, and annual potential evapotranspiration is approximately 1600 mm [27]. The remote sensing survey was conducted on 31 January 2023; climate data for the period, recorded by a Campbell Scientific weather station near the experimental area, are presented in Figure 2. Crop evapotranspiration (CET) was calculated by multiplying the potential evapotranspiration (PET) by a crop coefficient (Kc) of 0.52, with PET estimated using the Penman–Monteith method standardized in FAO Irrigation and Drainage Paper 56 [28].
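As a worked illustration of this step, the following Python sketch implements the standard FAO-56 Penman–Monteith equation for daily reference evapotranspiration (the quantity the text calls PET) and multiplies it by Kc = 0.52. It is a minimal sketch, not the authors' processing chain: the input values are illustrative rather than the Parnamirim station record, and raw station measurements would first have to be converted to net radiation, soil heat flux, 2 m wind speed, and actual vapour pressure.

```python
import math


def sat_vapour_pressure(t_c):
    """Saturation vapour pressure (kPa) at air temperature t_c (deg C)."""
    return 0.6108 * math.exp(17.27 * t_c / (t_c + 237.3))


def fao56_et0(tmax, tmin, rn, g, u2, ea, elevation):
    """Daily reference evapotranspiration (mm/day), FAO-56 Penman-Monteith.

    rn, g: net radiation and soil heat flux (MJ m-2 day-1); u2: wind speed at 2 m (m/s);
    ea: actual vapour pressure (kPa); elevation: station elevation (m).
    """
    tmean = (tmax + tmin) / 2
    # Mean saturation vapour pressure from daily extremes
    es = (sat_vapour_pressure(tmax) + sat_vapour_pressure(tmin)) / 2
    # Slope of the saturation vapour pressure curve at tmean
    delta = 4098 * sat_vapour_pressure(tmean) / (tmean + 237.3) ** 2
    # Atmospheric pressure (kPa) from elevation, then psychrometric constant
    pressure = 101.3 * ((293 - 0.0065 * elevation) / 293) ** 5.26
    gamma = 0.665e-3 * pressure
    numerator = 0.408 * delta * (rn - g) + gamma * (900 / (tmean + 273)) * u2 * (es - ea)
    return numerator / (delta + gamma * (1 + 0.34 * u2))


def crop_et(et0, kc=0.52):
    """Crop evapotranspiration: crop coefficient times reference (PET) evapotranspiration."""
    return kc * et0


# Illustrative daily inputs (hypothetical, not the study's weather data)
et0 = fao56_et0(tmax=21.5, tmin=12.3, rn=13.28, g=0.0, u2=2.078, ea=1.409, elevation=100)
cet = crop_et(et0)
print(f"PET = {et0:.2f} mm/day, CET = {cet:.2f} mm/day")
```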

Figure 2.

Climate information of the study area. Period from 31 December 2022 to 1 February 2023. Parnamirim, Pernambuco State, Brazil. PET: Potential Evapotranspiration; CET: Crop Evapotranspiration.

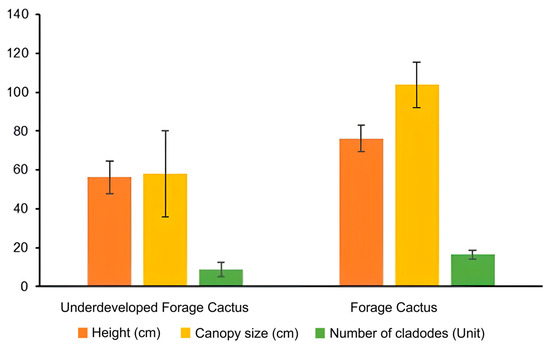

On 31 January 2023, nearly 15 months after starting the irrigation, moringa and gliricidia presented mean values of crop height, stem diameter, and maximum canopy width of 2.13 m, 53.24 mm, and 0.8 m; 1.42 m, 25.75 mm, and 1.23 m, respectively. For forage cactus, the mean values for height, canopy size, and number of cladodes were 0.66 m, 0.80 m, and 12.6 cladodes, respectively.

2.2. Image Acquisition

Multispectral and RGB images were acquired on 31 January 2023 with an Unmanned Aerial Vehicle (UAV), the DJI Phantom 4 Multispectral RTK. Its high-spatial-resolution imaging system simultaneously captures six images: one RGB composite and five monochrome images in the blue (B), green (G), red (R), red edge (RE), and near-infrared (NIR) bands, each with a resolution of 2 MP.

Flight parameters were defined according to technical recommendations and the regulations of ANAC (National Civil Aviation Agency), the regulatory body responsible for flight regulations in Brazil. The flight was performed at a constant speed of 2 m/s and a height of 40 m, as an automatic survey mission with 75% frontal and side overlap. Photogrammetric processing, including image alignment, stacking, and radiometric correction with a reflectance calibration panel, was carried out in Agisoft Metashape (Version 2.1).
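To relate the 40 m flight height to image resolution, the sketch below estimates the ground sample distance (GSD) and the along-track spacing between captures for a given overlap. It assumes the manufacturer's published relation for the P4 Multispectral, GSD ≈ H/18.9 cm per pixel (H in metres), and a 1300-pixel image side oriented along track; both are assumptions taken from the DJI specification sheet, not values stated in this study. Under these assumptions the GSD at 40 m is about 2.1 cm, close to the pixel size reported in the Discussion.

```python
def gsd_cm(height_m, k=18.9):
    """Ground sample distance in cm/pixel; k = 18.9 is an assumed manufacturer constant."""
    return height_m / k


def capture_spacing_m(height_m, pixels_along_track, overlap):
    """Along-track distance (m) between consecutive captures for a given forward overlap."""
    footprint_m = pixels_along_track * gsd_cm(height_m) / 100  # ground footprint along track
    return footprint_m * (1 - overlap)


height = 40.0  # flight height used in the study (m)
speed = 2.0    # flight speed used in the study (m/s)
gsd = gsd_cm(height)
# 1300 px along track is an assumption about image size and orientation
spacing = capture_spacing_m(height, pixels_along_track=1300, overlap=0.75)
interval = spacing / speed  # seconds between captures at constant speed
print(f"GSD = {gsd:.2f} cm/pixel, spacing = {spacing:.2f} m, interval = {interval:.2f} s")
```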

2.3. Machine Learning Algorithms

The images were analyzed and classified in QGIS (version 3.28.2) with the Dzetsaka plugin (version 3.70) [29]. The classification performed by Dzetsaka is object-oriented, and the plugin has been applied in various areas, such as monitoring deforestation progress, illegal road opening, and the conversion of pasture areas [30,31]. Three classification algorithms were tested: Gaussian Mixture Model, K-Nearest Neighbors, and Random Forest.

2.3.1. Gaussian Mixture Model (GMM)

The Gaussian Mixture Model (GMM) algorithm is a probabilistic algorithm used to represent normally distributed subpopulations within a general population. Typically, it is employed for unsupervised learning to automatically learn subpopulations and their assignment. It is also used for supervised learning or classification to learn the boundaries of subpopulations.

In this supervised setting, each potential class is represented by a Gaussian function whose mean and standard deviation are initially set equal to those of the corresponding class in the training samples. This representation is iteratively refined, or trained, by the Expectation-Maximization algorithm, and each instance is assigned the label of the Gaussian that best represents it. The Gaussian Mixture Model is a weighted sum of M component Gaussian densities:

$$p(\mathbf{x}) = \sum_{i=1}^{M} \omega_i \, g(\mathbf{x} \mid \boldsymbol{\mu}_i, \boldsymbol{\Sigma}_i), \qquad \sum_{i=1}^{M} \omega_i = 1$$

where x is a D-dimensional vector of continuous data, ωi (i = 1, …, M) are the mixture weights, and g(x|µi, Σi) (i = 1, …, M) are the component Gaussian densities. Each component density is a D-variate Gaussian function:

$$g(\mathbf{x} \mid \boldsymbol{\mu}_i, \boldsymbol{\Sigma}_i) = \frac{1}{(2\pi)^{D/2} \lvert \boldsymbol{\Sigma}_i \rvert^{1/2}} \exp\left[ -\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu}_i)^{\top} \boldsymbol{\Sigma}_i^{-1} (\mathbf{x} - \boldsymbol{\mu}_i) \right]$$

with mean vector μi and covariance matrix Σi.

The model assumes that all data points are obtained from a mixture of finite Gaussian distributions with unknown parameters [32].
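The supervised use described above can be sketched in NumPy: one Gaussian component per class, with ωi, μi, and Σi estimated directly from the labeled pixels, and each pixel assigned to the component with the highest ωi g(x|μi, Σi). This is a minimal illustration, not the Dzetsaka code: the Expectation-Maximization refinement is omitted, the small ridge term `reg` that keeps Σi invertible is an assumption, and the three-band "pixels" are synthetic.

```python
import numpy as np


def fit_class_gaussians(X, y, reg=1e-6):
    """One Gaussian component per class: weight (omega_i), mean (mu_i), covariance (Sigma_i)."""
    classes = np.unique(y)
    params = []
    for c in classes:
        Xc = X[y == c]
        weight = len(Xc) / len(X)                  # mixture weight omega_i
        mean = Xc.mean(axis=0)                     # mean vector mu_i
        # Covariance Sigma_i plus a small ridge (assumption) to keep it invertible
        cov = np.cov(Xc, rowvar=False) + reg * np.eye(X.shape[1])
        params.append((weight, mean, cov))
    return classes, params


def log_weighted_density(X, weight, mean, cov):
    """log(omega_i) + log g(x | mu_i, Sigma_i) for every row x of X."""
    d = X.shape[1]
    diff = X - mean
    _, logdet = np.linalg.slogdet(cov)
    # Quadratic form (x - mu)^T Sigma^-1 (x - mu), row by row
    mahalanobis = np.einsum("ij,ij->i", diff @ np.linalg.inv(cov), diff)
    return np.log(weight) - 0.5 * (d * np.log(2 * np.pi) + logdet + mahalanobis)


def predict(X, classes, params):
    """Assign each pixel to the component with the highest weighted density."""
    scores = np.column_stack([log_weighted_density(X, *p) for p in params])
    return classes[np.argmax(scores, axis=1)]


# Usage with synthetic three-band "pixels" (hypothetical values, not study data)
rng = np.random.default_rng(0)
X = np.vstack([rng.normal([0.10, 0.12, 0.15], 0.02, size=(200, 3)),
               rng.normal([0.05, 0.30, 0.45], 0.02, size=(200, 3))])
y = np.array([0] * 200 + [1] * 200)
classes, params = fit_class_gaussians(X, y)
accuracy = (predict(X, classes, params) == y).mean()
print(f"training accuracy: {accuracy:.3f}")
```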

2.3.2. K-Nearest Neighbors (KNN)

The K-nearest Neighbors algorithm [33] is a method that classifies data based on their proximity to the nearest neighbors. It uses a labeled dataset, and when a new point needs to be classified, the algorithm identifies the K-nearest points based on a distance measure. Then, the algorithm assigns the new point the label of the most frequent class among these K neighbors. The value of K is predefined or optimized in advance and influences the smoothness of the decision boundary.
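The procedure can be written in a few lines of Python. This sketch assumes Euclidean distance in feature space (for example, the band values of a pixel) and a simple majority vote; the distance measure and K used by Dzetsaka are not stated here, and the labels and feature values below are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Label `query` with the most frequent class among its k nearest training samples."""
    # Rank training samples by Euclidean distance to the query in feature space
    ranked = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-band training pixels
train_X = [[0.10, 0.12], [0.11, 0.10], [0.09, 0.11],
           [0.40, 0.55], [0.42, 0.50], [0.38, 0.52]]
train_y = ["bare soil"] * 3 + ["moringa"] * 3
print(knn_predict(train_X, train_y, [0.12, 0.11]))
```

An odd K avoids ties in two-class votes; larger K smooths the decision boundary at the cost of blurring small classes.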

2.3.3. Random Forest (RF)

The Random Forest (RF) algorithm employs multiple decision trees for classification or regression. Each tree is constructed from a random sample of the training data and a random selection of features. Each tree makes an independent prediction, and the final result combines the individual predictions by voting (classification) or averaging (regression). The key idea of Random Forest is that combining many trees reduces overfitting to the training data and improves the model's generalization ability [34].
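The bagging-and-voting scheme can be illustrated with a minimal pure-Python sketch. It is not the Dzetsaka or scikit-learn implementation: the number of trees, the maximum depth, the number of features drawn at each split (`n_feats`), and the toy two-band data are illustrative assumptions. Each tree is grown on a bootstrap sample, each split considers a random subset of features and minimizes the weighted Gini impurity of the child nodes, and the forest predicts by majority vote.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum(p_i^2) of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y, features):
    """Return (weighted_gini, feature, threshold) of the best split, or None."""
    best = None
    for f in features:
        for t in sorted(set(row[f] for row in X)):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(X, y, max_depth, n_feats, rng):
    """Grow a tree; leaves are class labels (assumed to be str or int), nodes are tuples."""
    if max_depth == 0 or len(set(y)) == 1:
        return Counter(y).most_common(1)[0][0]
    features = rng.sample(range(len(X[0])), n_feats)  # random feature subset per split
    split = best_split(X, y, features)
    if split is None:
        return Counter(y).most_common(1)[0][0]
    _, f, t = split
    left = [(r, l) for r, l in zip(X, y) if r[f] <= t]
    right = [(r, l) for r, l in zip(X, y) if r[f] > t]
    return (f, t,
            build_tree([r for r, _ in left], [l for _, l in left], max_depth - 1, n_feats, rng),
            build_tree([r for r, _ in right], [l for _, l in right], max_depth - 1, n_feats, rng))


def predict_tree(node, row):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if row[f] <= t else right
    return node


def fit_forest(X, y, n_trees=25, max_depth=3, n_feats=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 max_depth, n_feats, rng))
    return forest


def predict_forest(forest, row):
    """Majority vote over the individual tree predictions."""
    return Counter(predict_tree(tree, row) for tree in forest).most_common(1)[0][0]


# Hypothetical two-band samples
X = [[0.1, 0.9], [0.2, 0.8], [0.15, 0.85], [0.8, 0.1], [0.9, 0.2], [0.85, 0.15]]
y = ["soil", "soil", "soil", "veg", "veg", "veg"]
forest = fit_forest(X, y)
print(predict_forest(forest, [0.1, 0.9]), predict_forest(forest, [0.9, 0.1]))
```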

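The Random Forest classifier uses the Gini index (G) as the impurity measure for selecting, at each node, the feature and split that best separate the classes. For a given training set T, if one instance (pixel) is selected at random and said to belong to some class Ci, the Gini index can be written as:

$$G = \sum_{i} \sum_{j \neq i} \left( \frac{f(C_i, T)}{\lvert T \rvert} \right) \left( \frac{f(C_j, T)}{\lvert T \rvert} \right)$$

where f(Ci, T) is the number of pixels in T that belong to class Ci, |T| is the number of pixels in T, and f(Ci, T)/|T| is the probability that the selected pixel belongs to class Ci [35].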
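Writing p_i = f(C_i, T)/|T| for the class probabilities of a node, the double-sum form G = Σ_i Σ_{j≠i} p_i p_j reduces, because the probabilities sum to one, to the more familiar form of the Gini impurity:

$$G = \sum_{i} p_i \sum_{j \neq i} p_j = \sum_{i} p_i \,(1 - p_i) = 1 - \sum_{i} p_i^{2}$$

For example, a node with 60 bare-soil and 40 mulch pixels (hypothetical counts) gives G = 1 − (0.6² + 0.4²) = 1 − 0.52 = 0.48, whereas a pure node gives G = 0; splits that lower the weighted G of the child nodes are therefore preferred.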

2.4. Application of the Algorithms

The application of the classification algorithms was performed for two distinct sample spaces:

(I) Multispectral sample space: the input mosaic was composed of rasters of the multispectral bands R, G, B, RE, and NIR, plus the Normalized Difference Vegetation Index (NDVI) [36], which was included to enhance classification accuracy [37].

(II) RGB sample space: the input rasters were the visible-spectrum bands R, G, and B, plus the Visible-band Difference Vegetation Index (VDVI), which is well suited to extracting vegetation vigor from UAV images containing only the visible (RGB) bands [38].
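The two index layers can be computed per pixel from reflectance bands. The NumPy sketch below assumes the usual definitions NDVI = (NIR − R)/(NIR + R) and VDVI = (2G − R − B)/(2G + R + B); the function names, the zero fallback for a zero denominator, and the band-stack order (chosen to follow the order listed above) are illustrative assumptions, not the study's processing script.

```python
import numpy as np


def ndvi(nir, red):
    """Normalized Difference Vegetation Index; 0 where NIR + R == 0."""
    nir, red = np.asarray(nir, dtype=float), np.asarray(red, dtype=float)
    denom = nir + red
    return np.divide(nir - red, denom, out=np.zeros_like(denom), where=denom != 0)


def vdvi(red, green, blue):
    """Visible-band Difference Vegetation Index; 0 where 2G + R + B == 0."""
    r, g, b = (np.asarray(a, dtype=float) for a in (red, green, blue))
    denom = 2 * g + r + b
    return np.divide(2 * g - r - b, denom, out=np.zeros_like(denom), where=denom != 0)


def stack_multispectral(r, g, b, re, nir):
    """Sample space (I): R, G, B, RE, NIR and NDVI as the last axis."""
    return np.stack([r, g, b, re, nir, ndvi(nir, r)], axis=-1)


def stack_rgb(r, g, b):
    """Sample space (II): R, G, B and VDVI as the last axis."""
    return np.stack([r, g, b, vdvi(r, g, b)], axis=-1)


# Usage with a hypothetical 2 x 2 reflectance patch
band = np.full((2, 2), 0.2)
print(stack_multispectral(band, band, band, band, band).shape)  # one 6-layer pixel stack
```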

2.5. Training Samples

For the application of the classification algorithms, the training samples consisted of the pixels located within polygons delineated over each classification target. The polygons were delineated manually, based on knowledge of the study area and visual analysis of the images, and stored in a shapefile layer with the same datum as the drone images (WGS 84 coordinate reference system).

The distinction between developed and underdeveloped forage cactus was based on crop biometrics, namely height, canopy size, and number of cladodes, which were measured on 72 samples and are presented in Figure 3.

Figure 3.

Mean biometric data and standard deviation of forage cactus at the irrigated area.

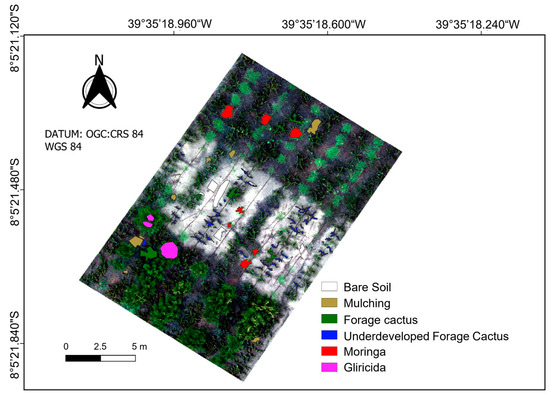

The study area consisted of three cultivation plots, in which six sample classes were defined for the classification of the processed image (Figure 4). The number of samples per class was balanced manually to obtain the most reliable classification possible, resulting in: bare soil (7327 samples), soil with cover (6353 samples), forage cactus (7837 samples), underdeveloped forage cactus (or with impaired development) (6603 samples), moringa (7203 samples), and gliricidia (6143 samples). Sample selection relied on all six classes being present in the study area.

Figure 4.

Samples used for the classification of the plots in the irrigated area.

The samples were split into 70% for training and 30% for testing, and after the algorithms were applied, a confusion matrix was generated and analyzed for each one. From these matrices, metrics such as precision, recall (sensitivity), F1 score, accuracy, and the Kappa index were derived [39]. According to Landis and Koch [39], Kappa values from 0.0 to 0.2 indicate ‘slight agreement’, 0.21 to 0.40 ‘fair agreement’, 0.41 to 0.60 ‘moderate agreement’, 0.61 to 0.80 ‘substantial agreement’, and 0.81 to 1.0 ‘almost perfect’ agreement. These metrics allow the performance of each algorithm to be evaluated for each class and, through their overall averages, for the algorithm as a whole.
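The confusion-matrix, accuracy, and Kappa computations can be sketched in plain Python. The sketch follows the convention used for the result tables (rows are reference classes, columns are predictions) and Cohen's kappa, κ = (p_o − p_e)/(1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance from the row and column totals. The class names and label lists are hypothetical, and the "poor" category for negative Kappa comes from Landis and Koch's scale rather than from the text above.

```python
def confusion_matrix(reference, predicted, classes):
    """Rows = reference classes, columns = predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    m = [[0] * len(classes) for _ in classes]
    for r, p in zip(reference, predicted):
        m[index[r]][index[p]] += 1
    return m


def accuracy_and_kappa(m):
    """Overall accuracy (observed agreement) and Cohen's kappa from a square matrix."""
    n = sum(map(sum, m))
    p_o = sum(m[i][i] for i in range(len(m))) / n            # observed agreement
    rows = [sum(r) for r in m]
    cols = [sum(c) for c in zip(*m)]
    p_e = sum(r * c for r, c in zip(rows, cols)) / n ** 2     # chance agreement
    return p_o, (p_o - p_e) / (1 - p_e)


def landis_koch(kappa):
    """Agreement category following Landis and Koch."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical test-set labels for two classes
reference = ["soil"] * 50 + ["cactus"] * 50
predicted = ["soil"] * 45 + ["cactus"] * 5 + ["soil"] * 10 + ["cactus"] * 40
m = confusion_matrix(reference, predicted, ["soil", "cactus"])
acc, kappa = accuracy_and_kappa(m)
print(m, f"accuracy = {acc:.2f}, kappa = {kappa:.2f} ({landis_koch(kappa)})")
```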

Precision is the proportion of samples classified as positive that are truly positive. Recall, or sensitivity, is the proportion of positive samples that are correctly classified; in other words, it is the model's ability to find all positive samples [40]. The F1 score, the harmonic mean of precision and recall, balances the two: a high F1 score indicates that the model finds most positive instances while keeping false positives low.
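These per-class metrics follow directly from a confusion matrix. In this sketch (rows are reference classes, columns are predictions), precision divides the diagonal count by the column total, recall divides it by the row total, and F1 is their harmonic mean; the matrix and class names are hypothetical, and the zero fallbacks for empty rows or columns are an assumption.

```python
def per_class_metrics(m, classes):
    """Precision, recall and F1 per class from a confusion matrix (rows = reference)."""
    results = {}
    for j, c in enumerate(classes):
        tp = m[j][j]
        predicted_total = sum(row[j] for row in m)  # column total: everything predicted as c
        reference_total = sum(m[j])                 # row total: everything truly c
        precision = tp / predicted_total if predicted_total else 0.0
        recall = tp / reference_total if reference_total else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[c] = (precision, recall, f1)
    return results


def macro_average(results):
    """Unweighted mean of each metric over classes."""
    values = list(results.values())
    return tuple(sum(v[k] for v in values) / len(values) for k in range(3))


res = per_class_metrics([[45, 5], [10, 40]], ["soil", "cactus"])
for name, (p, r, f) in res.items():
    print(f"{name}: precision = {p:.3f}, recall = {r:.3f}, F1 = {f:.3f}")
```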

Finally, both the classification algorithms and the RGB and multispectral sample spaces were compared.

3. Results

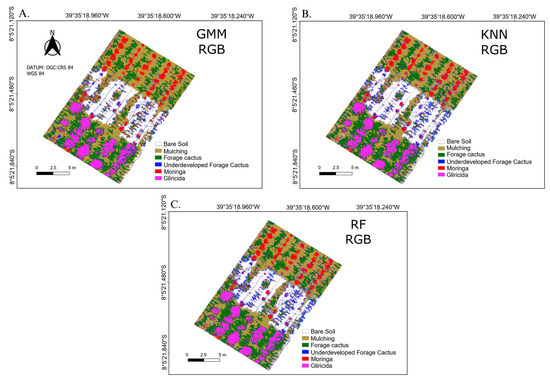

The classification applied using the multispectral band composition with the Gaussian Mixture, K-Nearest Neighbors, and Random Forest algorithms can be observed in Figure 5.

Figure 5.

Classification by the Gaussian Mixture algorithm (A), K-Nearest Neighbors (B), and the Random Forest algorithm (C) with multispectral sample space.

Table 1 displays the confusion matrices of the Gaussian Mixture Model (GMM), K-Nearest Neighbors (KNN), and Random Forest (RF) algorithms. In a confusion matrix, the rows represent the reference classes of the samples, and the columns represent the classes predicted for each reference; the main diagonal therefore gives the number of samples correctly classified by the algorithm. High values can be observed for all diagonal elements, especially for bare soil (BS).

Table 1.

The confusion matrix generated by the Gaussian Mixture Model, K-Nearest Neighbors algorithm, Random Forest—Multispectral.

Based on these results, the three algorithms were compared using precision, recall (sensitivity), F1 score, accuracy, and the Kappa index [39], as presented in Table 2.

Table 2.

Results of the metrics for the Gaussian Mixture Model, K-Nearest Neighbors algorithm, and Random Forest—Multispectral.

Among the models applied to multispectral images, Random Forest (RF) produced the best F1 score for all analyzed classes and hence the highest average. Recall varied among classes: RF reached the highest average recall, with slightly better results for crops that exhibit a more pronounced spectral response (moringa, gliricidia, and developed forage cactus), but performed slightly worse than K-Nearest Neighbors (KNN) in classifying underdeveloped forage cactus, exposed soil, and mulch. The precision of RF surpassed that of KNN and the Gaussian Mixture Model (GMM), although KNN obtained slightly superior results for moringa and gliricidia.

Regarding individual model accuracy, the recorded values were 84.59% for GMM, 87.98% for KNN, and 88.50% for RF, and the Kappa index values were 0.81, 0.86, and 0.86, respectively. All three models exhibited Kappa index values above 0.80, which is classified as ‘almost perfect’ [39]. Random Forest and K-Nearest Neighbors produced remarkably similar metrics, with Random Forest standing out in the multispectral sample space.

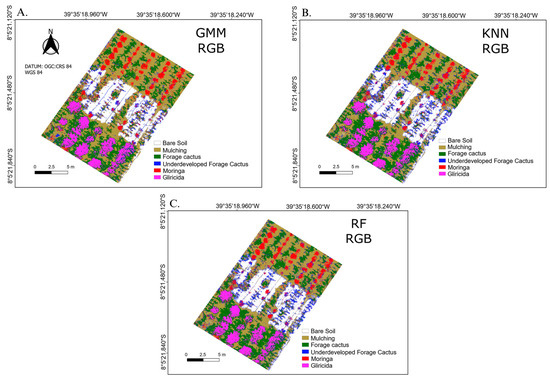

Classifications of the drone images based on the RGB sample space were highly consistent with those based on the multispectral bands. This congruence is evident primarily through visual inspection, as depicted in Figure 6.

Figure 6.

Classification by the Gaussian Mixture Model (A), K-Nearest Neighbors (B), and Random Forest (C) algorithms with the RGB sample space.

As for the multispectral classifications, the confusion matrices derived from the RGB images (Table 3) were analyzed in detail in terms of precision, recall, F1 score, accuracy, and Kappa index (Table 4).

Table 3.

Confusion matrix generated by the Gaussian Mixture Model, K-Nearest Neighbors algorithm, Random Forest—RGB.

Table 4.

Results of the metrics for the Gaussian Mixture Model, K-Nearest Neighbors algorithm, Random Forest—RGB.

In contrast to the multispectral results, K-Nearest Neighbors (KNN) performed best in the RGB sample space, achieving the best F1 score in all classes except gliricidia, for which Random Forest (RF) produced a better result; KNN nevertheless maintained a satisfactory overall average compared with the other methods. Recall followed a similar pattern: KNN outperformed the other methods in all classes except gliricidia, where RF was again superior.

In terms of precision, K-Nearest Neighbors (KNN) achieved the best results for all studied classes. Random Forest (RF) outperformed the Gaussian Mixture Model (GMM), although GMM showed better precision than RF for exposed soil.

Concerning the individual accuracy of the models, the recorded values were 81.78% for GMM, 85.40% for KNN, and 85.08% for RF. The Kappa index results were 0.78, 0.82, and 0.82, respectively. Both the K-Nearest Neighbors and Random Forest algorithms presented Kappa indices above 0.80, classified as ‘almost perfect’, while the Gaussian Mixture Model was classified as ‘substantial’.

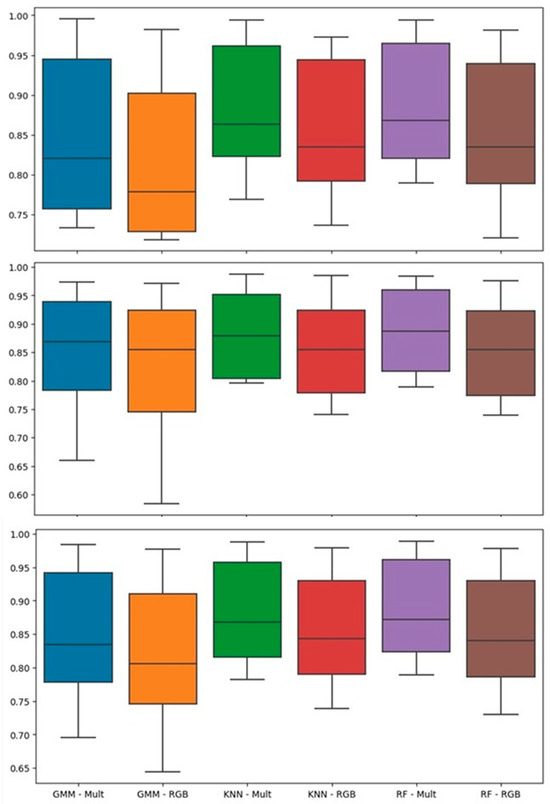

The Random Forest and K-Nearest Neighbors algorithms stand out with very similar values, with KNN performing slightly better in the RGB sample space. Figure 7 summarizes, as box plots, the individual precision, recall, and F1 score values of each algorithm in the RGB and multispectral sample spaces.

Figure 7.

Box plot regarding the individual precision (Top), recall (Middle), and F1 Score (Bottom) results of each algorithm in RGB and Multispectral sample spaces.

4. Discussion

Crop monitoring can assist farmers in making reliable decisions for agricultural management, where remote sensing acts as a strategic tool. Multispectral indices can be used in crop identification, aiding precision agriculture [41]. In a study by Wang et al. [42], machine learning techniques were applied in conjunction with multispectral index data to monitor Land Use and Land Cover (LULC), and the results obtained were highly relevant.

In our study, the adopted algorithms generally performed well in classifying intercropped forage cactus, bare soil (BS), and mulch cover (MC) from both the RGB and MS images acquired by the UAV. Nearly the entire experimental area was accurately classified, with slight discrepancies between methods. However, the algorithms, especially the Gaussian Mixture Model, showed some inconsistencies, confusing underdeveloped forage cactus with moringa in both the MS space (Table 2) and the RGB space (Table 4). This behavior suggests that the samples of these two classes may have similar spectral responses. A similar study using hyperspectral images to classify crops in Bengaluru, India, also reported confusion between two crops (tomato and eggplant) due to their similar spectral responses [43].

Inconsistencies in the identification of forage cactus may be associated with intrinsic characteristics of the crop, which closes its stomata during the day. Furthermore, the edge of the forage cactus cladode has a protective layer [44], which may alter the crop's spectral response.

The Gaussian Mixture Model algorithm showed the lowest performance compared with K-Nearest Neighbors and Random Forest, possibly because it was not originally developed as a classification algorithm; this contrasts with the results obtained by Iqbal et al. [22]. Classification algorithms are continuously updated and improved, and their accuracy keeps evolving; studies on enhancing GMM [45] are one example.

Although the Random Forest model showed the best results, its values were very close to those of K-Nearest Neighbors. This suggests that K-Nearest Neighbors may perform well in areas with a greater presence of larger crops, such as moringa and gliricidia, than of forage cactus. Guo et al. [46] obtained similar results for tree classification in urban areas using the same UAV model adopted in our study, with Random Forest performing best (Kappa index of 0.91). Rodriguez–Garlito and Paz–Gallardo [47] applied machine learning methods for the semi-automatic classification of multispectral UAV images with a pixel size of 3 cm; Support Vector Machine (SVM) and Random Forest (RF) stood out, efficiently distinguishing ground cover classes owing to the high spatial resolution of the imagery.

In the analysis of RGB images (Table 4), precision, F1 score, recall, Kappa index, and accuracy were lower than for multispectral images (Table 2). This is likely due mainly to the distinct responses of the crops in the near-infrared band, which is directly correlated with the amount of chlorophyll in plants. This correlation was more pronounced in the crop classes than in the exposed soil class, increasing the performance of the multispectral analysis. These results are in line with other applications of machine learning in agriculture in which Random Forest (RF) stands out, such as the study of Fathololoumi et al. [48], which applied the algorithm to soil moisture determination. Silva Júnior et al. [49] used the algorithm to interpolate evapotranspiration data and obtained better results than conventional techniques such as kriging.

Our results differ markedly from those of previous studies, which is expected given the spatial resolution of approximately 2 cm in our study and its semiarid setting, both of which can substantially affect the performance of K-Nearest Neighbors for region classification. Iqbal et al. [22] applied the Dzetsaka tool to classify satellite images and obtained better results with the PlanetScope constellation (3 m spatial resolution) than with Sentinel-2 (10 m). The difference in resolution is even larger in our study, whose pixel size of 2.1 cm significantly affected the ranking and performance of the algorithms.

For RGB images, although KNN achieved slightly better performance, Random Forest is likely the more robust algorithm because it is less affected by noise. This robustness is attributed to its ensemble technique: combining the predictions of many individual trees yields a prediction that is less sensitive to noise. In addition, random sampling reduces model variance and ensures diversity among the trees [50]. K-Nearest Neighbors, in contrast, is not an ensemble technique, so its predictions may be more susceptible to data quality issues and therefore more sensitive to noise. Moreover, its performance in remote sensing applications has been reported to be inferior to that of Random Forest, and its strong performance in this study may be attributed to the quality of the pixel information [22,51].

Based on the Kappa index, our results indicate that both Random Forest and KNN were more efficient than GMM. These algorithms are recommended for the supervised classification of forage cactus and of intercropping in semiarid regions. However, further training of the models is advisable to improve efficiency and the spatial accuracy of plant species classification. Corroborating our findings, Pantoja et al. [52] compared Random Forest with GMM and found that Random Forest performed better, with Kappa index values of 0.94 for Random Forest and 0.85 for the Gaussian Mixture Model.

As a limitation of our study, a single image acquisition in the fifteenth month of forage cactus cultivation limits the analysis of different scenarios and the assessment of algorithm accuracy over time. Nevertheless, considering the complexity and mathematical properties of Random Forest and KNN, we recommend Random Forest because of its inherent reduction in model variance, achieved by combining multiple individual decision trees through a voting scheme. A model with low variance is less prone to learning noise and produces smoother decision boundaries, favoring the learning of the true class distribution in the data. This variance reduction comes at the cost of longer computation time than a single decision tree. However, because individual decision trees train relatively fast and the training phase of our approach is performed offline only once, this overhead is negligible. Moreover, Random Forests produce relatively easily interpretable models, helping practitioners gain valuable insights and supporting management decisions. KNN, on the other hand, is a single model without an explicit training phase that relies solely on voting among the nearest training samples in feature space; these neighbors may contain noise that leads to classification errors.

5. Conclusions

In this research, we compared the performance of three algorithms (Gaussian Mixture Model, K-Nearest Neighbors, and Random Forest) for classifying crops in UAV images of an irrigated area with intercropped forage cactus, and investigated the influence of two sample spaces: multispectral and RGB. Random Forest and K-Nearest Neighbors showed similar levels of classification quality. Although Random Forest performed better with samples from the multispectral space and K-Nearest Neighbors performed better in the RGB space, the differences between them were negligible, as supported by their Kappa index values: 0.86 and 0.82 for Random Forest and 0.86 and 0.82 for K-Nearest Neighbors in the multispectral and RGB spaces, respectively. Both algorithms were classified as ‘almost perfect’ in both sample spaces, with accuracy rates close to 88% for multispectral samples and above 85% for RGB samples. The Gaussian Mixture Model showed the weakest performance of the three algorithms, with Kappa index values of 0.82 and 0.78 for the multispectral and RGB spaces, respectively, indicating potential limitations for its use in crop classification tasks. This inferior performance is likely attributable to the algorithm's inherent characteristics, as it was not specifically designed for classification.

The algorithms applied were those available in the Dzetsaka tool, all of which are widely used in the literature for image classification. However, the tool does not allow users to change algorithm parameters, which prevented further tuning. Notwithstanding this restriction, the Dzetsaka plugin proved efficient for crop classification in intercropped forage cactus fields. Another limitation of the study was the use of imagery acquired on a single date, which precluded the analysis of temporal variability. Our findings confirm that machine learning applied to RGB images successfully classified the crops in the intercropped forage cactus area, as well as zones of exposed soil and mulch cover. This offers a low-cost remote sensing alternative for the indirect monitoring of similar areas. Beyond crop classification, classifying the development level of forage cactus is an important application, because it can identify crops requiring specific management. This supports food security and contributes to inclusive and sustainable economic growth in the semiarid region. To further explore these algorithms in crop classification, we recommend conducting similar analyses at different crop stages. Such analyses would investigate performance throughout plant growth and thus assess the impact of temporal variability on the effectiveness of these algorithms. We also recommend applying the algorithms at different spatial resolutions, as well as outside the Dzetsaka tool. This would enable more detailed analyses and comparisons with more complex algorithms and other crop fields.
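For the recommended tests at different spatial resolutions, one simple option is to average non-overlapping blocks of pixels in an existing UAV band. This is an assumption, not a procedure from this study. An integer factor f then turns a ground sampling distance g into f times g. The sketch below uses plain Python lists and a hypothetical function name, `block_average`. In practice, GIS tools such as GDAL's `gdalwarp` with `-r average` perform equivalent resampling on georeferenced rasters. Categorical (classified) maps would instead need a majority rule rather than a mean.

```python
# Sketch: emulate a coarser spatial resolution by averaging non-overlapping
# factor x factor pixel blocks of one band. Hypothetical helper; rows or
# columns that do not fill a complete block are discarded.


def block_average(band, factor):
    """Return a coarser grid whose cells are the means of factor x factor blocks."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    n_rows = len(band) // factor
    n_cols = len(band[0]) // factor
    coarse = []
    for r in range(n_rows):
        row = []
        for c in range(n_cols):
            block = [band[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        coarse.append(row)
    return coarse


if __name__ == "__main__":
    # Hypothetical 4 x 4 band; with factor 2 a ground sampling distance g becomes 2g.
    band = [[1, 1, 2, 2],
            [1, 1, 2, 2],
            [3, 3, 4, 4],
            [3, 3, 4, 4]]
    print(block_average(band, 2))  # [[1.0, 2.0], [3.0, 4.0]]
```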

Author Contributions

Conceptualization, A.A.d.A.M., O.B.d.A., T.G.F.d.S. and L.d.B.d.S.; methodology, A.A.d.A.M., B.P.V., O.B.d.A., V.W.C.d.M., R.G.F.S., T.G.F.d.S. and L.d.B.d.S.; software, A.A.d.C., M.V.d.S., M.A.d.S.N., T.A.B.A., L.d.B.d.S., V.W.C.d.M. and R.G.F.S.; investigation, O.B.d.A. and A.A.d.A.M.; resources, A.A.d.C., B.P.V. and M.A.d.S.N.; data curation, M.A.d.S.N.; writing—original draft preparation, O.B.d.A., A.A.d.A.M., M.A.d.S.N., L.d.B.d.S. and J.L.M.P.d.L.; writing—review & editing, T.A.B.A.; visualization, A.A.d.A.M. and J.L.M.P.d.L.; supervision, A.A.d.A.M.; project administration, A.A.d.A.M. and J.L.M.P.d.L.; funding acquisition, A.A.d.A.M., B.P.V. and J.L.M.P.d.L. All authors have read and agreed to the published version of the manuscript.

Funding

This project is funded by CNPq (Universal Project process 420.488/2018-9, and MAIDAI Project—Master’s and Doctorate in Innovation, process 403.488/2020-4, with financial contributions from TPF Engenharia and Companhia Pernambucana de Saneamento-COMPESA for wastewater reuse in agriculture), by FACEPE (Foundation for Support to Science and Technology of Pernambuco) (process APQ 0414-5.03/20 for development of livestock economic chain in Pernambuco State), and by the Ministry of Integration and Regional Development (MIDR), coordinated by the National Institute of the Semiarid (INSA). Scholarships were also supported by CAPES-PrInt/UFRPE. In addition, the study received support from Portuguese funds through the Foundation for Science and Technology, I.P. (FCT), under grants UIDB/04292/2020, awarded to MARE, and LA/P/0069/2020, awarded to the Associate Laboratory ARNET.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors thank the Postgraduate Program in Agricultural Engineering (PGEA) of the Federal Rural University of Pernambuco (UFRPE) for supporting the development of this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pohl, S.C.; Lenz, D.M. Utilização de efluente tratado em complexo industrial automotivo. Eng. Sanit. Ambient. 2017, 22, 551–562.

- Carvalho, A.A.; Montenegro, A.A.A.; de Lima, J.L.M.P.; Silva, T.G.F.D.; Pedrosa, E.M.R.; Almeida, T.A.B. Coupling Water Resources and Agricultural Practices for Sorghum in a Semiarid Environment. Water 2021, 13, 2288.

- Mainardis, M.; Cecconet, D.; Moretti, A.; Callegari, A.; Goi, D.; Freguia, S.; Capodaglio, A.G. Wastewater Fertigation in Agriculture: Issues and Opportunities for Improved Water Management and Circular Economy. Environ. Pollut. 2022, 296, 118755.

- Lira, E.C.; Felix, E.S.; Oliveira Filho, T.J.; Alves, R.C.; Lima, R.P.; Souza, J.T.A.; Oliveira, J.A.; Araújo, F.G.; Cavalcanti, M.T.; Araujo, J.S. Intercropping forage cactus genotypes with wood species in a semiarid environment. Agron. J. 2022, 114, 3173–3182.

- Pimentel, C. Agronomic Practices to Improve Water Use Efficiency. Environ. Sci. Ecol. Curr. Res. 2022, 3, 1081.

- Alves, C.P.; Jardim, A.M.D.R.F.; Araújo Júnior, G.D.N.; Souza, L.S.B.D.; Araújo, G.G.L.D.; Souza, C.A.A.D.; Salvador, K.R.D.S.; Leite, R.M.C.; Pinheiro, A.G.; Silva, T.G.F.D. How to Enhance the Agronomic Performance of Cactus-Sorghum Intercropped System: Planting Configurations, Density and Orientation. Ind. Crops Prod. 2022, 184, 115059.

- Bhuyan, B.P.; Tomar, R.; Singh, T.P.; Cherif, A.R. Crop Type Prediction: A Statistical and Machine Learning Approach. Sustainability 2022, 15, 481.

- Asadi, B.; Shamsoddini, A. Crop Mapping through a Hybrid Machine Learning and Deep Learning Method. Remote Sens. Appl. Soc. Environ. 2024, 33, 101090.

- Shanmugapriya, P.; Rathika, S.; Ramesh, T.; Janaki, P. Applications of Remote Sensing in Agriculture—A Review. Int. J. Curr. Microbiol. App. Sci. 2019, 8, 2270–2283.

- Chakraborty, S.K.; Chandel, N.S.; Jat, D.; Tiwari, M.K.; Rajwade, Y.A.; Subeesh, A. Deep Learning Approaches and Interventions for Futuristic Engineering in Agriculture. Neural Comput. Appl. 2022, 34, 20539–20573.

- Badagliacca, G.; Messina, G.; Praticò, S.; Lo Presti, E.; Preiti, G.; Monti, M.; Modica, G. Multispectral Vegetation Indices and Machine Learning Approaches for Durum Wheat (Triticum Durum Desf.) Yield Prediction across Different Varieties. AgriEngineering 2023, 5, 2032–2048.

- Lourenço, V.R.; Montenegro, A.A.A.; Carvalho, A.A.D.; Sousa, L.D.B.D.; Almeida, T.A.B.; Almeida, T.F.S.D.; Vilar, B.P. Spatial Variability of Biophysical Multispectral Indexes under Heterogeneity and Anisotropy for Precision Monitoring. Rev. Bras. Eng. Agríc. Ambient. 2023, 27, 848–857.

- Dericquebourg, E.; Hafiane, A.; Canals, R. Generative-Model-Based Data Labeling for Deep Network Regression: Application to Seed Maturity Estimation from UAV Multispectral Images. Remote Sens. 2022, 14, 5238.

- Cao, X.; Liu, Y.; Yu, R.; Han, D.; Su, B. A Comparison of UAV RGB and Multispectral Imaging in Phenotyping for Stay Green of Wheat Population. Remote Sens. 2021, 13, 5173.

- Peng, Z.-R.; Wang, D.; Wang, Z.; Gao, Y.; Lu, S. A Study of Vertical Distribution Patterns of PM2.5 Concentrations Based on Ambient Monitoring with Unmanned Aerial Vehicles: A Case in Hangzhou, China. Atmos. Environ. 2015, 123, 357–369.

- Stark, D.J.; Vaughan, I.P.; Evans, L.J.; Kler, H.; Goossens, B. Combining Drones and Satellite Tracking as an Effective Tool for Informing Policy Change in Riparian Habitats: A Proboscis Monkey Case Study. Remote Sens. Ecol. Conserv. 2018, 4, 44–52.

- Santana, D.C.; Theodoro, G.D.F.; Gava, R.; De Oliveira, J.L.G.; Teodoro, L.P.R.; De Oliveira, I.C.; Baio, F.H.R.; Da Silva Junior, C.A.; De Oliveira, J.T.; Teodoro, P.E. A New Approach to Identifying Sorghum Hybrids Using UAV Imagery Using Multispectral Signature and Machine Learning. Algorithms 2024, 17, 23.

- Pallathadka, H.; Mustafa, M.; Sanchez, D.T.; Sekhar Sajja, G.; Gour, S.; Naved, M. Impact of Machine Learning on Management, Healthcare, and Agriculture. Mater. Today Proc. 2023, 80, 2803–2806.

- Abioye, E.A.; Hensel, O.; Esau, T.J.; Elijah, O.; Abidin, M.S.Z.; Ayobami, A.S.; Yerima, O.; Nasirahmadi, A. Precision Irrigation Management Using Machine Learning and Digital Farming Solutions. AgriEngineering 2022, 4, 70–103.

- Fleacă, E.; Fleacă, B.; Maiduc, S. Aligning Strategy with Sustainable Development Goals (SDGs): Process Scoping Diagram for Entrepreneurial Higher Education Institutions (HEIs). Sustainability 2018, 10, 1032.

- Yadav, J.; Chauhan, U.; Sharma, D. Importance of Drone Technology in Indian Agriculture, Farming. In Advances in Electronic Government, Digital Divide, and Regional Development; Khan, M.A., Gupta, B., Verma, A.R., Praveen, P., Peoples, C.J., Eds.; IGI Global: Hershey, PA, USA, 2023; pp. 35–46. ISBN 978-1-66846-418-2.

- Iqbal, I.M.; Balzter, H.; Firdaus-e-Bareen; Shabbir, A. Mapping Lantana camara and Leucaena leucocephala in Protected Areas of Pakistan: A Geo-Spatial Approach. Remote Sens. 2023, 15, 1020.

- Sivakumar, M.; TYJ, N.M. A Literature Survey of Unmanned Aerial Vehicle Usage for Civil Applications. J. Aerosp. Technol. Manag. 2021, 13, e4021.

- Zhang, J.; He, Y.; Yuan, L.; Liu, P.; Zhou, X.; Huang, Y. Machine Learning-Based Spectral Library for Crop Classification and Status Monitoring. Agronomy 2019, 9, 496.

- Souza, R.; Souza, E.S.; Netto, A.M.; Almeida, A.Q.D.; Barros Júnior, G.; Silva, J.R.I.; Lima, J.R.D.S.; Antonino, A.C.D. Assessment of the Physical Quality of a Fluvisol in the Brazilian Semiarid Region. Geoderma Reg. 2017, 10, 175–182.

- Alvares, C.A.; Stape, J.L.; Sentelhas, P.C. Köppen’s Climate Classification Map for Brazil. Meteorol. Z. 2013, 22, 711–728.

- Silva, L.A.P.; de Souza, C.M.P.; Silva, C.R.; Filgueiras, R.; Sena-Souza, J.P.; Fernandes Filho, E.I.; Leite, M.E. Mapping the effects of climate change on reference evapotranspiration in future scenarios in the Brazilian Semiarid Region—South America. Rev. Bras. De Geogr. Física 2023, 16, 1001–1012.

- Allen, R.G.; Pereira, L.S.; Raes, D.; Smith, M. Crop Evapotranspiration: Guidelines for Computing Crop Water Requirements; FAO—Food and Agriculture Organization of the United Nations: Rome, Italy, 1998.

- Karasiak, N. Dzetsaka: Classification Plugin for QGIS. Available online: https://github.com/nkarasiak/dzetsaka (accessed on 20 December 2023).

- Fitz, P.R. Classificação de imagens de satélite e índices espectrais de vegetação: Uma análise comparativa. Geosul 2020, 35, 171–188.

- Balieiro, B.T.D.S.; Veloso, G.A. Análise multitemporal da cobertura do solo da Terra Indígena Ituna-Itatá através da classificação supervisionada de imagens de satélites. Cerrados 2022, 20, 261–282.

- Reynolds, D.A. Gaussian Mixture Models. Encycl. Biom. 2009, 741, 659–663.

- Mucherino, A.; Papajorgji, P.J.; Pardalos, P.M. K-Nearest Neighbor Classification. In Data Mining in Agriculture; Springer Optimization and Its Applications; Springer New York: New York, NY, USA, 2009; Volume 34, pp. 83–106. ISBN 978-0-387-88614-5.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random Forests for Land Cover Classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- Pal, M. Random Forest Classifier for Remote Sensing Classification. Int. J. Remote Sens. 2005, 26, 217–222.

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W.; Harlan, J.C. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation; NASA: Washington, DC, USA, 1974.

- Lu, T.; Wan, L.; Wang, L. Fine Crop Classification in High Resolution Remote Sensing Based on Deep Learning. Front. Environ. Sci. 2022, 10, 991173.

- Qubaa, A.R.; Aljawwadi, T.A.; Hamdoon, A.N.; Mohammed, R.M. Using UAVs/Drones and Vegetation Indices in the Visible Spectrum to Monitor Agricultural Lands. Iraqi J. Agric. Sci. 2021, 52, 601–610.

- Landis, J.R.; Koch, G.G. The Measurement of Observer Agreement for Categorical Data. Biometrics 1977, 33, 159–174.

- Miao, J.; Zhu, W. Precision–Recall Curve (PRC) Classification Trees. Evol. Intel. 2022, 15, 1545–1569.

- Li, M.; Shamshiri, R.R.; Weltzien, C.; Schirrmann, M. Crop monitoring using Sentinel-2 and UAV multispectral imagery: A comparison case study in Northeastern Germany. Remote Sens. 2022, 14, 4426.

- Wang, L.; Wang, J.; Liu, Z.; Zhu, J.; Qin, F. Evaluation of a deep-learning model for multispectral remote sensing of land use and crop classification. Crop J. 2022, 10, 1435–1451.

- Sarma, A.S.; Nidamanuri, R.R. Transfer Learning for Plant-Level Crop Classification Using Drone-Based Hyperspectral Imagery. In Proceedings of the 2023 International Conference on Machine Intelligence for GeoAnalytics and Remote Sensing (MIGARS), Hyderabad, India, 27–29 January 2023; IEEE: Hyderabad, India, 2023; pp. 1–4.

- Souza, J.T.A.; Ribeiro, J.E.D.S.; Araújo, J.S.; Ramos, J.P.D.F.; Nascimento, J.P.D.; Medeiros, L.T.V.D. Gas Exchanges and Water-Use Efficiency of Nopalea Cochenillifera Intercropped under Edaphic Practices. Com. Sci. 2020, 11, e3035.

- Wan, H.; Wang, H.; Scotney, B.; Liu, J. A Novel Gaussian Mixture Model for Classification. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; IEEE: Bari, Italy, 2019; pp. 3298–3303.

- Guo, Q.; Zhang, J.; Guo, S.; Ye, Z.; Deng, H.; Hou, X.; Zhang, H. Urban Tree Classification Based on Object-Oriented Approach and Random Forest Algorithm Using Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2022, 14, 3885.

- Rodriguez-Garlito, E.C.; Paz-Gallardo, A. Efficiently Mapping Large Areas of Olive Trees Using Drones in Extremadura, Spain. IEEE J. Miniat. Air Space Syst. 2021, 2, 148–156.

- Fathololoumi, S.; Vaezi, A.R.; Alavipanah, S.K.; Ghorbani, A.; Biswas, A. Comparison of Spectral and Spatial-Based Approaches for Mapping the Local Variation of Soil Moisture in a Semi-Arid Mountainous Area. Sci. Total Environ. 2020, 724, 138319.

- Silva Júnior, J.C.; Medeiros, V.; Garrozi, C.; Montenegro, A.A.A.; Gonçalves, G.E. Random Forest Techniques for Spatial Interpolation of Evapotranspiration Data from Brazilian’s Northeast. Comput. Electron. Agric. 2019, 166, 105017.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Thakur, R.; Panse, P. Classification Performance of Land Use from Multispectral Remote Sensing Images using Decision Tree, K-Nearest Neighbor, Random Forest and Support Vector Machine Using EuroSAT Data. Int. J. Intell. Syst. Appl. Eng. 2022, 10, 67–77.

- Pantoja, D.A.; Spenassato, D.; Emmendorfer, L.R. Comparison Between Classification Algorithms: Gaussian Mixture Model—GMM and Random Forest—RF, for Landsat 8 Images. RGSA 2023, 16, e03234.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).