1. Introduction

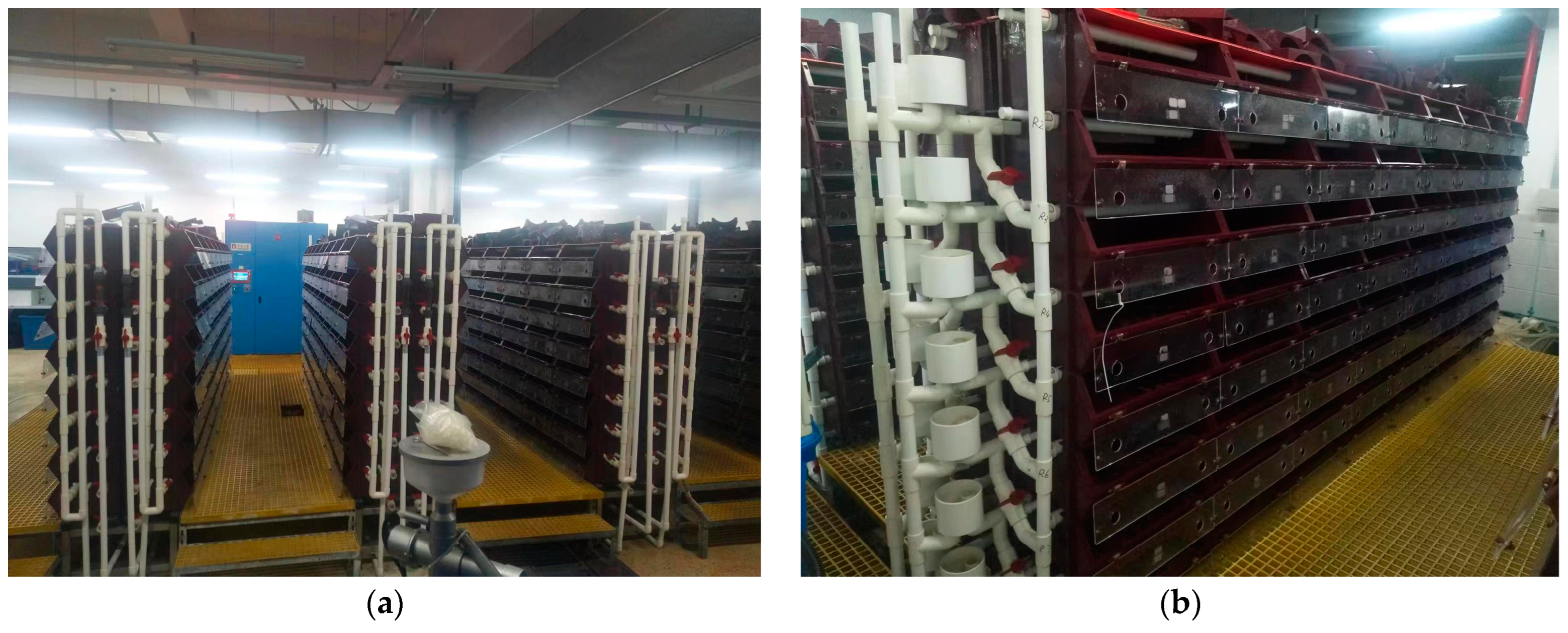

A new agricultural farming model known as single-frame three-dimensional aquaculture was created on the principle of circulating water and can be used to reuse aquaculture water, reduce agricultural energy use and environmental pollution, and realize the sustainable development of new agricultural technology. In light of the development of recirculating water culture technology [

1], the

Portunus three-dimensional apartment culture technology has become widely popular. According to

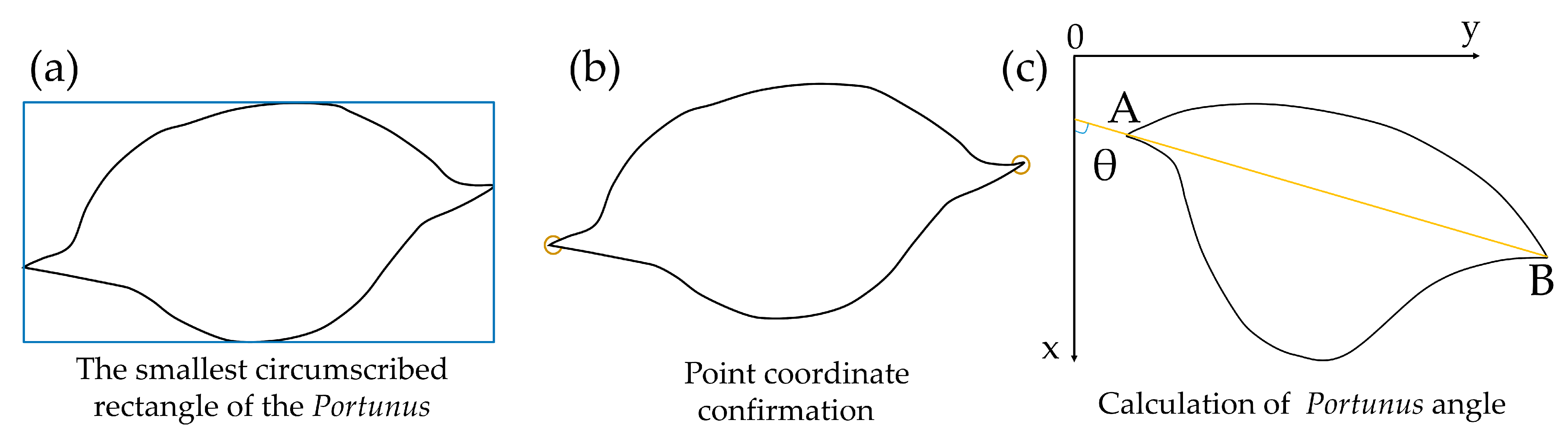

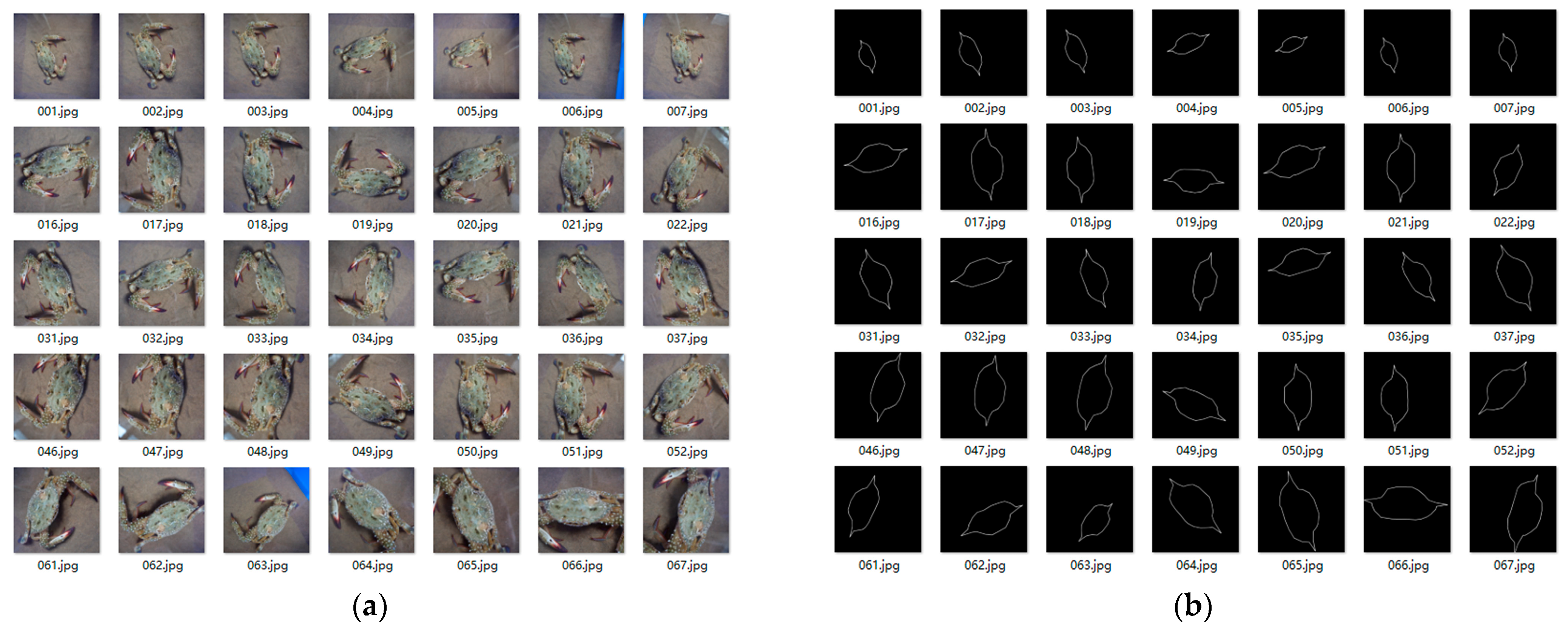

Figure 1, each aquaculture frame corresponds to one Portunus; the frame is covered with sand, and the water depth of the frame is approximately 10–20 cm.

Portunus are fed regularly and quantitatively with pellet feed or with small fish and shrimp as bait. Currently, the many daily inspections required in factory-based single-frame three-dimensional culture are carried out manually. To improve farming efficiency, a new technological method is urgently needed to manage the daily inspections of large-scale three-dimensional aquaculture. Machine vision, an efficient method for automatic detection [2,3], has been widely used for behavioral detection, quantitative feeding, and feeding tracking in smart aquaculture [4,5,6]. For example, fish behavior has been detected by fusing images with mathematical models to increase the reliability of the acquired information, by using 3D coordinate models, and by tracking with infrared reflection (IREF) detection devices [7]. Based on machine learning, differences between one or more camera frames [8] have been used to determine differences in fish feeding and quantitative indicators of feeding. To increase the reliability of information acquisition and reduce interference, optical flow has been used to extract behavioral features (speed and rotation angle) for feeding tracking [9]. Building on this work, this paper addresses the inefficiency of manual inspection in large-scale single-frame three-dimensional culture by studying how to judge the survival rate of Portunus from visual detection. Machine vision is used to identify three visual-feature parameters in the frame: Portunus centroid movement, residual bait, and Portunus rotation angle. The three parameters are then fused to determine the survival rate of the Portunus.
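To make the first two parameters concrete, the following is a minimal sketch of how a centroid displacement and a residual bait ratio could be computed from detector output. It assumes axis-aligned boxes given as `(x1, y1, x2, y2)` in pixels and defines the residual bait ratio as pellets remaining divided by pellets fed; the function names and that definition are illustrative assumptions, not taken from this paper.

```python
import math


def box_centroid(box):
    """Return the center (cx, cy) of an axis-aligned box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def centroid_displacement(box_prev, box_curr):
    """Euclidean distance in pixels between the centroids of two boxes
    detected for the same frame at two inspection times."""
    cx0, cy0 = box_centroid(box_prev)
    cx1, cy1 = box_centroid(box_curr)
    return math.hypot(cx1 - cx0, cy1 - cy0)


def residual_bait_ratio(pellets_fed, pellets_remaining):
    """Fraction of bait left uneaten (assumed definition, for illustration)."""
    if pellets_fed <= 0:
        raise ValueError("pellets_fed must be positive")
    return pellets_remaining / pellets_fed
```

A crab that has not moved and has left most of its bait would show a displacement near zero and a ratio near one, which is the kind of evidence the fusion step then weighs.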

The recognition of residual bait and the Portunus centroid is a target detection problem in visual inspection. In deep-learning target detection, deeper networks, richer feature annotation and extraction, and higher computing power are the key factors in improving detection accuracy and real-time efficiency. Deeper networks can improve detection accuracy: Rauf [10] proposed deepening the CNN with reference to the VGG-16 framework [11], extending it from 16 to 32 layers and improving target recognition accuracy on the Fish-Pak dataset by 15.42% compared with the VGG-16 network. Richer feature annotation and network frameworks can improve learning efficiency. For action recognition of farmed fish, Måløy [12] proposed a CNN framework that combines spatial information and motion features with time-series information to provide richer features of fish behavior, reaching an accuracy of 80% in predicting feeding and non-feeding behavior in fish farms. Balancing network depth, feature extraction capability, and real-time requirements, Faster R-CNN [13] and YOLO [14] have become the two leading frameworks for target detection. They are widely used in crab target identification, fish target identification, and water quality prediction [15,16,17]. Faster R-CNN achieves high accuracy through its region proposal network (RPN). Li [18] initialized Faster R-CNN with pretrained Zeiler and Fergus (ZF) models and optimized the convolutional feature map window of the ZF network, improving detection speed and increasing mAP by 15.1%. YOLO is an end-to-end framework that is both fast and accurate. To identify fish species in deep water, Xu [19] used the YOLOV3 model [20] to detect fish in highly turbid, high-velocity, murky water and evaluated detection accuracy on three datasets from marine and hydrokinetic (MHK) environments. The results showed that the mAP score reached 0.5392 and that YOLOV3 improved the target detection accuracy. To cope with unstable data transmission in fish farms, Cai [21] replaced the Darknet-53 network in YOLOV3 with MobileNet, which reduced the model size and the computation by a factor of 8–9. The optimized algorithm ran faster, and its mAP on the fish dataset increased by 1.73%. Both Faster R-CNN and YOLO can extract deep features from images, but YOLO, as an end-to-end framework, is faster. In large-scale three-dimensional factory farming, a faster recognition algorithm enables real-time detection of aquaculture targets. Therefore, an improved YOLO network was chosen to recognize the residual bait and Portunus centroid targets.
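The factor of 8–9 reported for the MobileNet replacement is consistent with the standard cost analysis of depthwise separable convolutions, in which a 3×3 layer costs roughly 1/N + 1/9 of a standard convolution with N output channels. The sketch below only illustrates that arithmetic; the layer sizes are hypothetical, and whether this accounts for the figure in [21] is an assumption.

```python
def standard_conv_mults(kernel, c_in, c_out, feat):
    """Multiplications for a standard convolution on a feat x feat feature map."""
    return kernel * kernel * c_in * c_out * feat * feat


def separable_conv_mults(kernel, c_in, c_out, feat):
    """Multiplications for a depthwise convolution followed by a 1x1 pointwise one."""
    depthwise = kernel * kernel * c_in * feat * feat
    pointwise = c_in * c_out * feat * feat
    return depthwise + pointwise


def reduction_factor(kernel, c_in, c_out, feat):
    """How many times cheaper the separable layer is than the standard one."""
    return standard_conv_mults(kernel, c_in, c_out, feat) / separable_conv_mults(
        kernel, c_in, c_out, feat
    )


# Hypothetical layer: 3x3 kernel, 256 -> 256 channels, 13x13 feature map.
example_factor = reduction_factor(3, 256, 256, 13)
```

For wide layers the 1/N term becomes negligible, so the saving approaches the kernel area, which is 9 for a 3×3 kernel.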

Recognition of the Portunus edge contour (rotation angle) is a target segmentation problem in visual inspection. Aside from traditional algorithms such as Canny and Pb, most target segmentation problems are now solved with deep-learning architectures. Typically, different backbone architectures and information fusion methods are built on convolutional neural network frameworks such as GoogLeNet and VGG to extract multi-scale features and achieve more accurate edge detection and segmentation. Typical backbone architectures include multi-stream learning, skip-layer network learning, a single model on multiple inputs, training independent networks, and holistically-nested networks. In 2015, Xie [22] proposed the Holistically-Nested Edge Detection (HED) algorithm, which uses VGG-16 as the backbone, initializes the network weights by transfer learning, and achieves simple contour segmentation of the target through multi-scale and multi-level feature learning. HED achieves an ODS (optimal dataset scale) of 0.782 on the BSDS500 dataset, giving better segmentation results and realizing target contour recognition. Subsequently, the Convolutional Oriented Boundaries (COB) algorithm [23] improved on HED by generating multi-scale, contour-oriented, regional high-level features for sparse boundary-level segmentation, reaching an ODS of 0.79 on BSDS500 and improving contour feature extraction on the training set. Further, to reduce the number of network parameters and preserve spatial information in segmentation, Badrinarayanan [24] proposed SegNet, an encoder–decoder segmentation architecture. SegNet passes the max-pooling indices to the decoder, which improves segmentation resolution and accuracy. The CEDN (Fully Convolutional Encoder–Decoder Network) algorithm [25] can detect higher-level object contours and further optimizes the encoder–decoder framework for contour detection; it improves the average recall on the PASCAL VOC dataset from 0.62 to 0.67 and reaches an ODS of 0.79 on BSDS500. In 2015, researchers found that the ResNet framework [26] can extend network depth and extract complex features efficiently. In 2019, Kelm [27] proposed the RCN architecture based on ResNet, which performs contour detection through multi-path refinement, fusing mid-level and low-level features in a specific order with a concise and efficient algorithm, and has become a leading framework for edge detection. For example, Abdennour [28] proposed a driver profile recognition system based on RCN that achieves 99.3% accuracy in facial profile recognition.
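The role of the max-pooling indices in SegNet can be illustrated with a small sketch: the encoder records where each maximum came from, and the decoder writes values back to exactly those positions instead of learning an upsampling. The code below is a plain-Python toy on a single 2D map with 2×2 windows, written for illustration under those assumptions; it is not the implementation from [24].

```python
def max_pool_with_indices(x):
    """2x2, stride-2 max pooling over a list of lists with even height and width.
    Returns the pooled map and, for each output cell, the (row, col) of its maximum."""
    pooled, indices = [], []
    for i in range(0, len(x), 2):
        pooled_row, index_row = [], []
        for j in range(0, len(x[0]), 2):
            window = [(x[i + a][j + b], (i + a, j + b)) for a in (0, 1) for b in (0, 1)]
            value, position = max(window, key=lambda item: item[0])
            pooled_row.append(value)
            index_row.append(position)
        pooled.append(pooled_row)
        indices.append(index_row)
    return pooled, indices


def max_unpool(pooled, indices, height, width):
    """Place each pooled value back at its recorded position; all other cells are 0."""
    out = [[0] * width for _ in range(height)]
    for pooled_row, index_row in zip(pooled, indices):
        for value, (row, col) in zip(pooled_row, index_row):
            out[row][col] = value
    return out
```

Because only positions are stored, the decoder recovers boundary locations without keeping the full encoder feature maps, which is the parameter and memory saving described above.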

In summary, the problem is the visual detection of the survival rate for

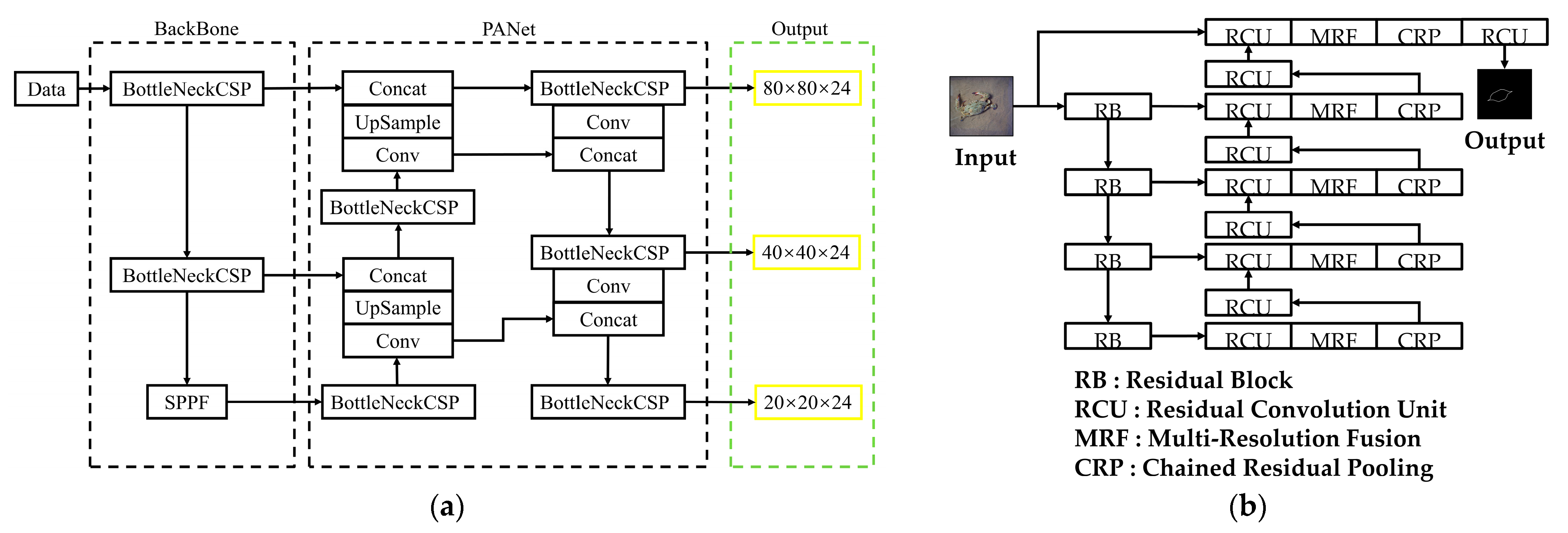

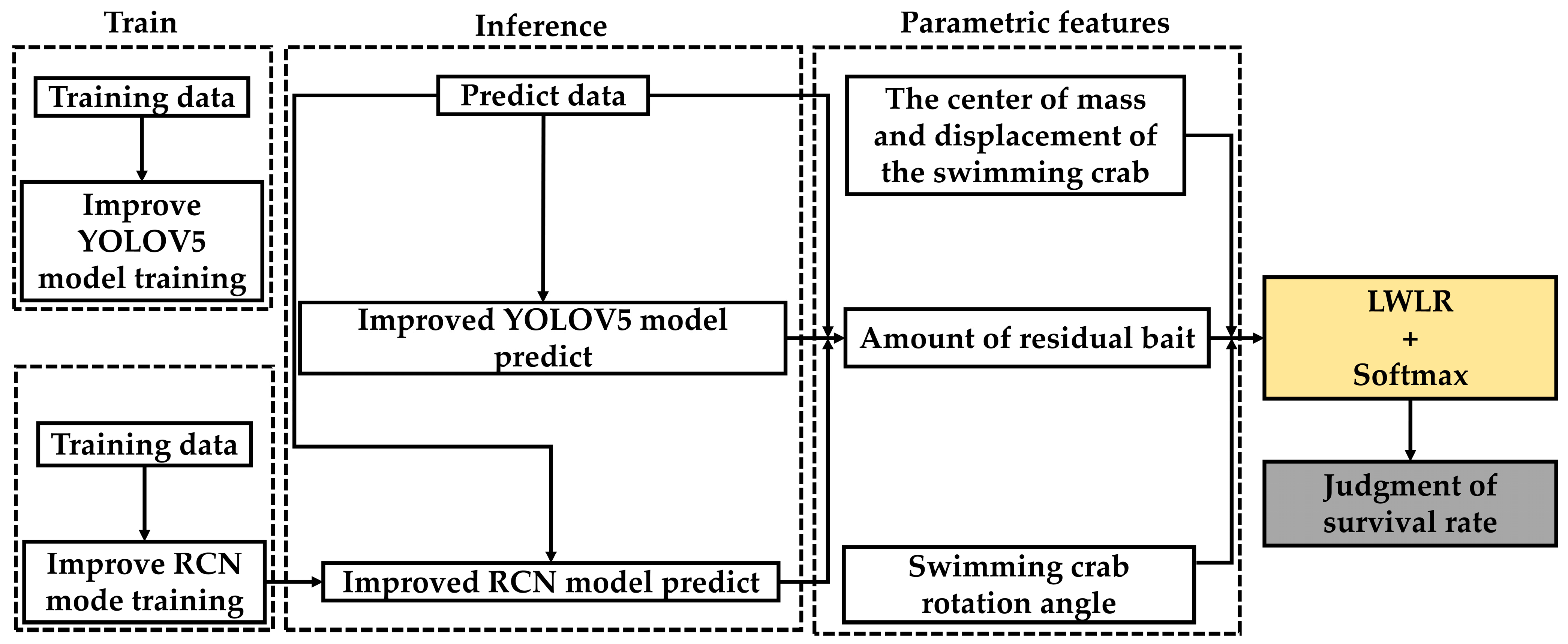

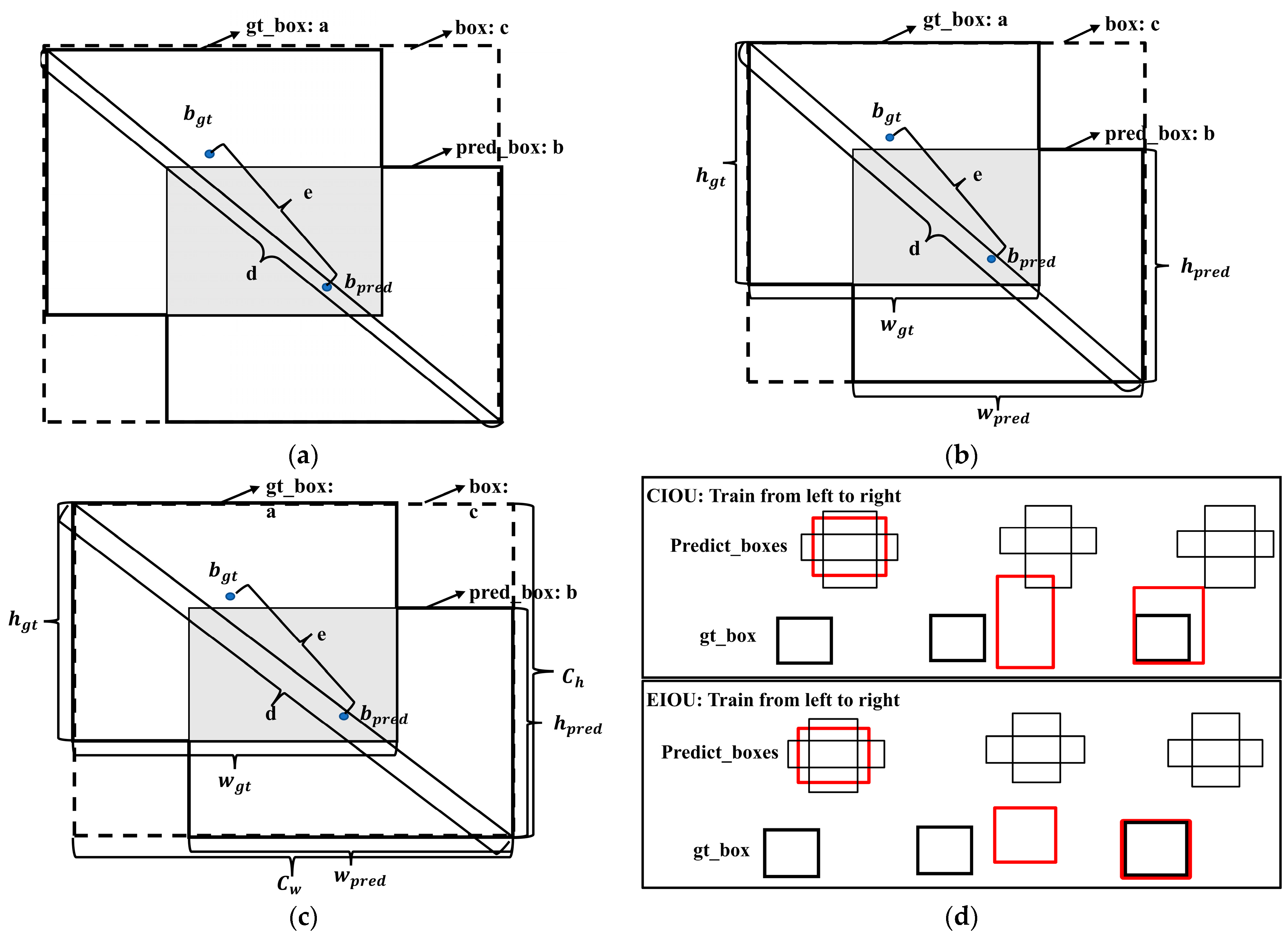

Portunus’ three-dimensional apartment culture. The main work of this paper is as follows: (i) Based on YOLOV5, the residual bait and

Portunus centroid were detected, and the EIOU loss [

29] bounding box loss function was proposed on the basis of CIOU (Complete IOU) loss to optimize the accuracy of YOLOV5 in predicting the centroid moving distance of

Portunus and residual bait. (ii) For the

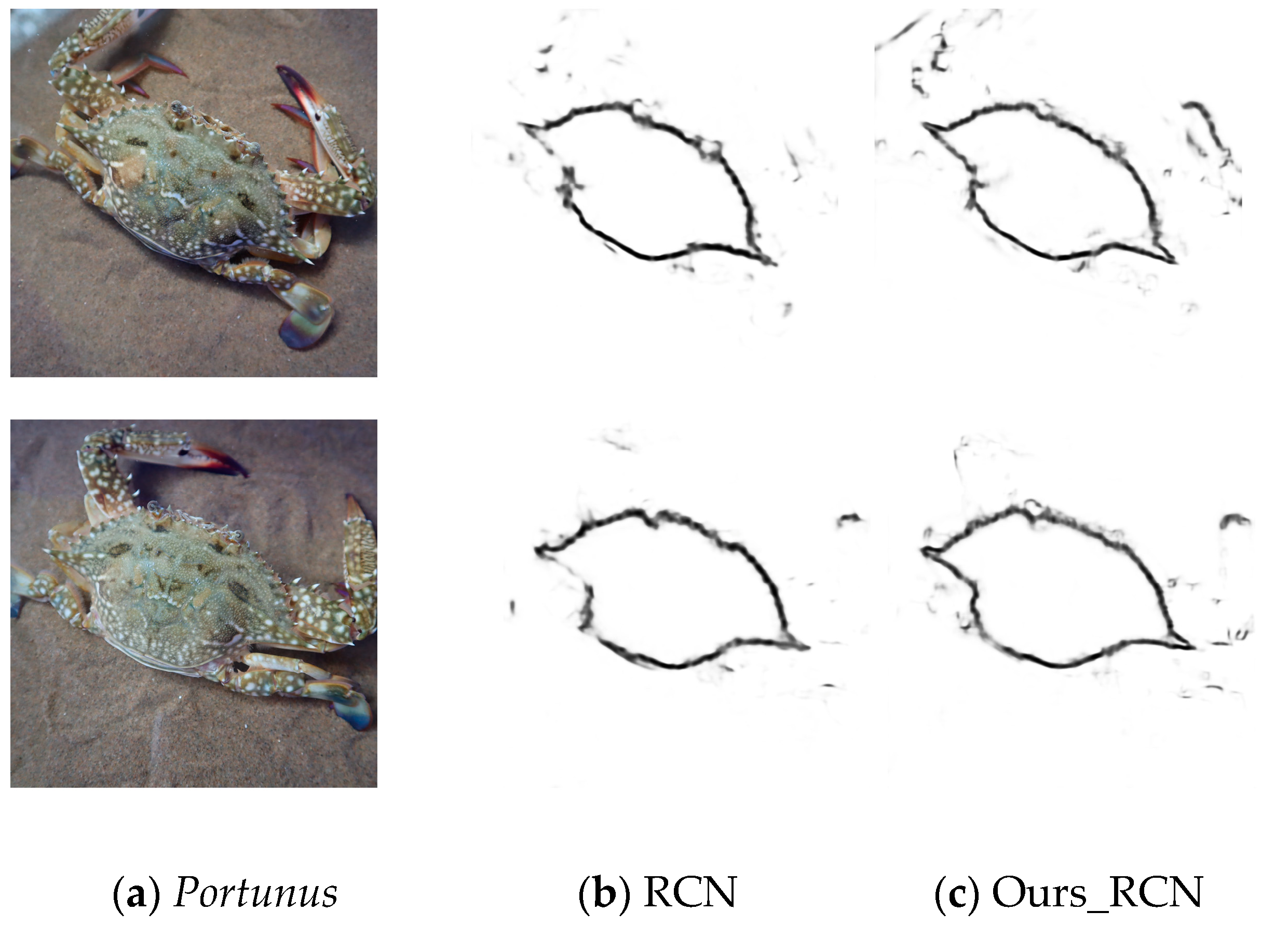

Portunus contour target segmentation problem, the RCN-based binary cross-entropy loss to double-threshold binary cross-entropy loss function is optimized to improve the

Portunus contour detection accuracy and calculate the contour endpoint coordinates (i.e., rotation angle) to improve the

Portunus contour curve accuracy. (iii) Based on YOLOV5’s RCN algorithm,

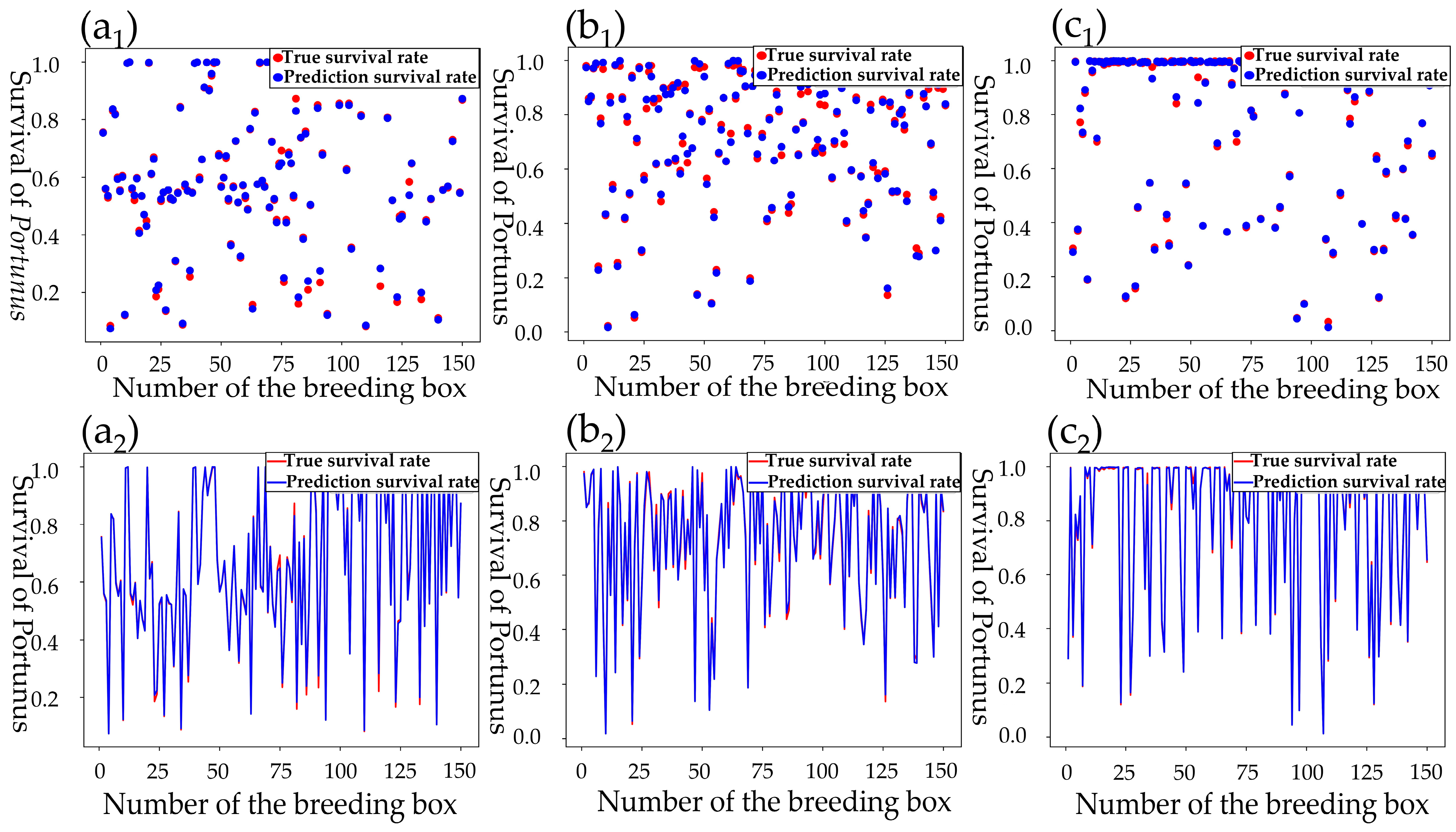

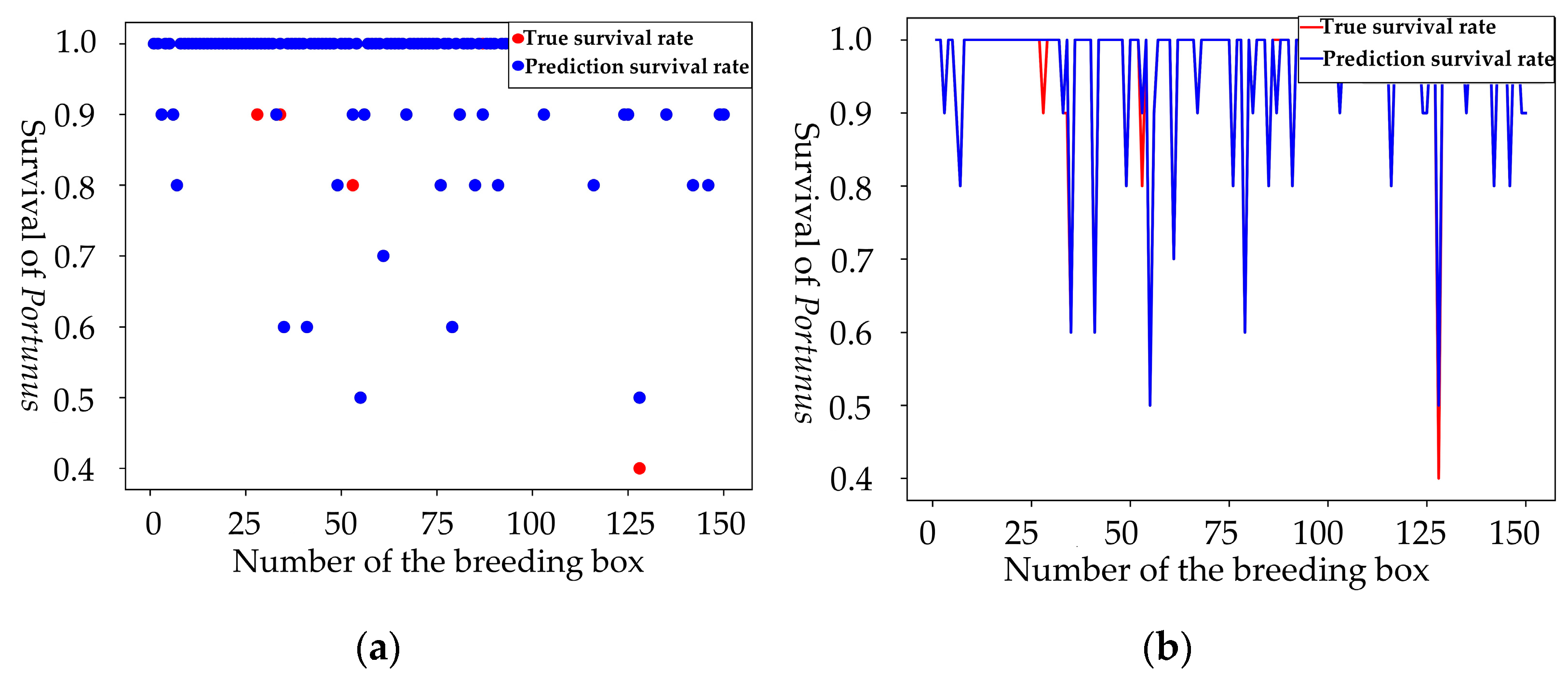

Portunus centroid movement, residual bait, and rotation angle were three parameters identified, using locally weighted linear regression (LWLR) and the softmax algorithm for information fusion to give a comprehensive determination model of the

Portunus survival rate.
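For reference, the EIOU loss as commonly defined adds three penalties to 1 − IoU: the squared center distance normalized by the squared diagonal of the smallest enclosing box, and the squared width and height differences normalized by the squared enclosing width and height. The following is a minimal single-box sketch under that definition, assuming boxes given as `(x1, y1, x2, y2)`; it is not the YOLOV5 training code used in this work.

```python
def eiou_loss(pred, target, eps=1e-9):
    """EIoU loss for one predicted box and one ground-truth box, both (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared distance between the two box centers.
    center_dist2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + (
        (py1 + py2) / 2 - (ty1 + ty2) / 2
    ) ** 2

    return (
        1.0
        - iou
        + center_dist2 / (cw ** 2 + ch ** 2 + eps)
        + (pw - tw) ** 2 / (cw ** 2 + eps)
        + (ph - th) ** 2 / (ch ** 2 + eps)
    )
```

Unlike the aspect-ratio term in CIOU, the width and height terms penalize each side length directly, which is the property usually credited for faster convergence on small targets such as pellets.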

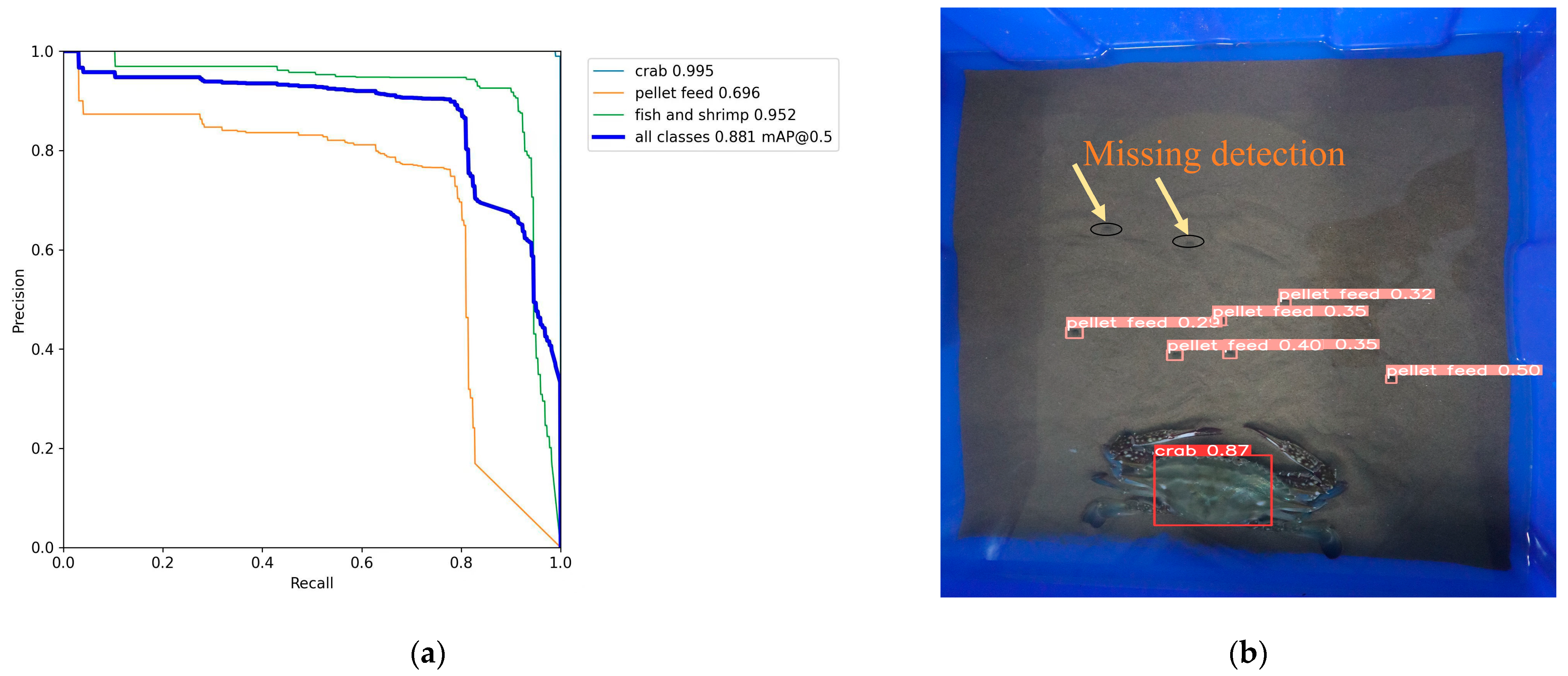

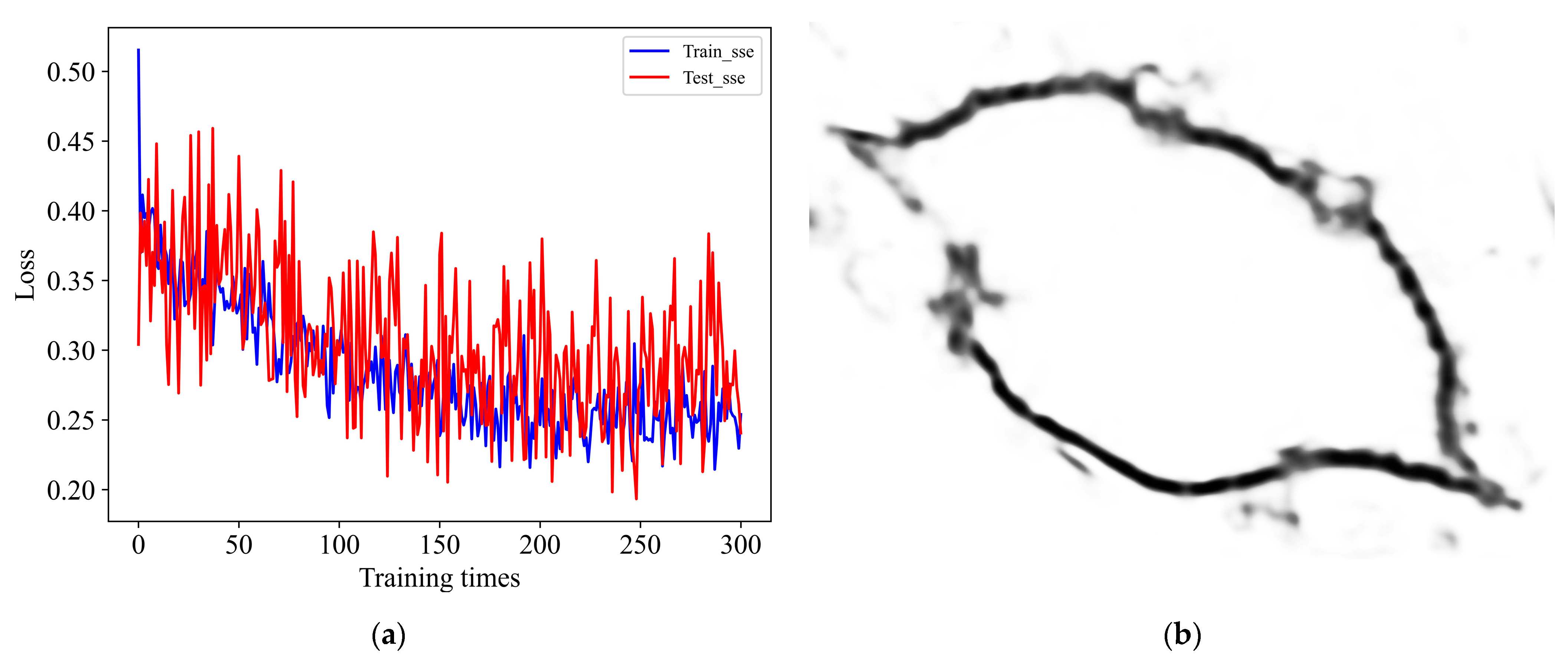

The main innovation of this paper is the visual detection, using machine vision, of three parameters that indirectly reflect Portunus survival, together with the establishment of single-parameter survival determination models and a three-parameter fusion determination model. Together these give a vision-based survival detection method for Portunus. Currently, most vision inspection techniques for Portunus focus on target detection, and a multi-parameter fusion visual-detection method has not been reported for three-dimensional culture. This paper uses the YOLOV5 algorithm to detect the centroid movement distance and residual bait of Portunus and the RCN algorithm to detect its rotation angle. In residual bait recognition, pellet bait is a small target; this paper uses the EIOU loss function to raise the training mAP and improve the recognition accuracy of pellet bait and Portunus. For RCN-based recognition of the Portunus contour rotation angle, this paper proposes a double-threshold loss function to reduce the training loss and improve the training accuracy. Finally, a combined three-parameter LWLR fusion determination algorithm is given, and the fused survival recognition accuracy is 5.5% higher than the average single-parameter recognition accuracy.
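The two building blocks of the fusion step can be sketched in a few lines. The example below shows locally weighted linear regression for a single feature with a Gaussian kernel, plus a numerically stable softmax; the bandwidth `tau`, the one-feature simplification, and the function names are assumptions for illustration, and how the paper combines the two is not specified in this section.

```python
import math


def lwlr_predict(x_query, xs, ys, tau=1.0):
    """Locally weighted linear regression for one feature.
    Fits a weighted least-squares line around x_query and evaluates it there."""
    # Gaussian kernel: training points near the query get larger weights.
    weights = [math.exp(-((x - x_query) ** 2) / (2.0 * tau ** 2)) for x in xs]
    sw = sum(weights)
    sx = sum(w * x for w, x in zip(weights, xs))
    sy = sum(w * y for w, y in zip(weights, ys))
    sxx = sum(w * x * x for w, x in zip(weights, xs))
    sxy = sum(w * x * y for w, x, y in zip(weights, xs, ys))
    denom = sw * sxx - sx * sx
    if abs(denom) < 1e-12:
        # Degenerate case (e.g. all xs equal): fall back to the weighted mean.
        return sy / sw
    slope = (sw * sxy - sx * sy) / denom
    intercept = (sy - slope * sx) / sw
    return intercept + slope * x_query


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]
```

In a fusion setting, one such regression per parameter could produce a score, and softmax could turn the combined scores into alive and dead probabilities; that pipeline is a plausible reading rather than a description of the authors' model.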

5. Conclusions

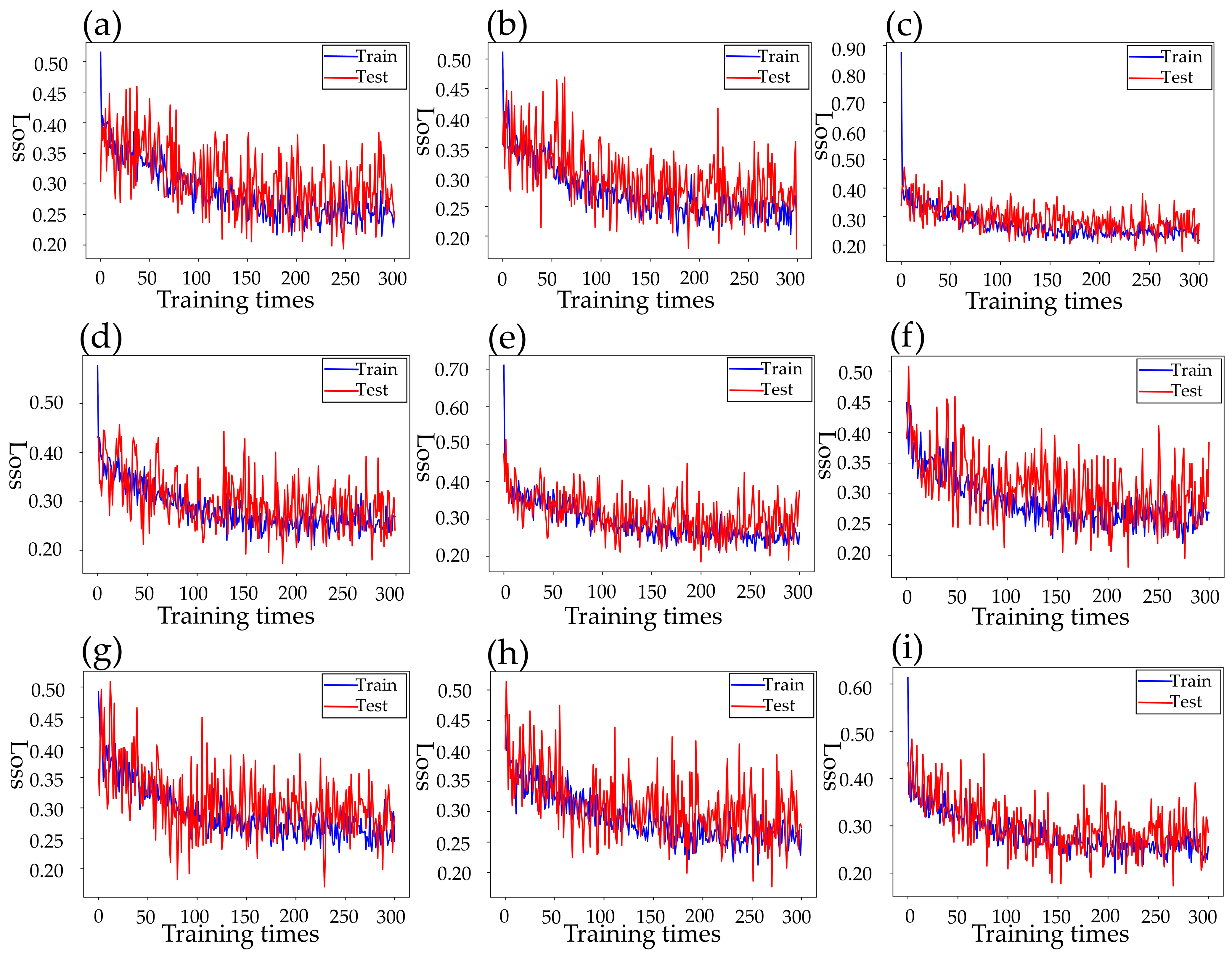

To detect Portunus survival in real time, the original YOLOV5 and RCN networks had to be optimized and their output parameters fused, because the original algorithms missed detections of both pellet feed and contours. Firstly, the k-means clustering algorithm was used to cluster the anchor sizes in YOLOV5, which improved the accuracy of the model. The EIOU loss accelerated convergence and improved regression accuracy. Compared with the original model, the mAP(0.5) values for pellet feed and for fish and shrimp increased by 1.7% and 1%, respectively; the EIOU loss increased precision by 2% and mAP (IoU thresholds from 0.5 to 0.95 in steps of 0.05) by 1.8%; and the prediction accuracy of the centroid moving distance and residual bait ratio of Portunus was improved. Secondly, the RCN network was optimized with the double-threshold binary cross-entropy loss function, and its final loss fell to 0.2 after 300 rounds of training. Before optimization, the final loss reached 0.23 after 500 rounds of training; the RCN average loss was reduced by 4%. After the RCN improvement, the ODS of contour recognition increased by 2%, the probability of blurred or missing contour predictions was reduced, and the rotation angle of Portunus was obtained. Finally, multi-parameter fusion was carried out based on the LWLR and softmax algorithms. The recognition accuracy for Portunus survival was 0.920 using the residual bait ratio, 0.840 using the centroid moving distance, 0.955 using the rotation angle, and 0.960 using multi-parameter fusion, which is 5.5% higher than the average single-parameter accuracy. Therefore, multi-parameter fusion improves the accuracy of judging whether the Portunus is alive.
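As a check on the 5.5% figure, assuming that "average accuracy" means the arithmetic mean of the three single-parameter accuracies reported above, the gain works out as follows (the symbols are introduced here for illustration only):

$$
\bar{A}_{\text{single}} = \frac{0.920 + 0.840 + 0.955}{3} = 0.905, \qquad A_{\text{fusion}} - \bar{A}_{\text{single}} = 0.960 - 0.905 = 0.055
$$

Under that reading, the improvement is 5.5 percentage points in absolute terms.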