Cloud-Enabled Hybrid, Accurate and Robust Short-Term Electric Load Forecasting Framework for Smart Residential Buildings: Evaluation of Aggregate vs. Appliance-Level Forecasting

Highlights

- A cloud-enabled hybrid framework combining Seasonal ARIMAX, Random Forest, and LSTM achieves high accuracy for short-term residential load forecasting.

- Appliance-level (AL) and aggregate forecasts (AF) are compared, showing that AF outperforms AL in reliability and accuracy.

- Multi-cloud parallel computation with a two-out-of-three voting scheme enhances forecasting robustness by mitigating single-cloud failures.

- The proposed scalable solution supports smart-city energy management, demand response, and grid reliability.

Abstract

1. Introduction

1.1. Load Forecasting Categories and Challenges

- Are statistical methods (SM) more effective than machine learning (ML) techniques for load prediction in residential buildings?

- Does a hybrid approach that combines SM and ML provide superior results?

- Is it more accurate to predict the overall consumption of a residential building (RB), or to forecast the consumption of individual appliances and then aggregate the results?

1.2. Feature Selection

1.3. Statistical Methods (SM)

1.4. Machine Learning (ML)

1.5. The Contributions of the Presented Work Can Be Summarized as Follows

- Integration across paradigms: A unified forecasting architecture that synergistically combines Seasonal ARIMAX, Random Forest, and LSTM models through a residual-correction layer, effectively merging statistical trend modeling with nonlinear and sequential learning.

- Feature–model customization: Each sub-model employs an optimized feature selection strategy (Correlation Matrix, Chi-Square, or MRMR), enabling the framework to capture diverse predictive relationships with minimal redundancy.

- Multi-cloud redundancy and voting: A two-out-of-three cloud voting scheme ensures continuous operation under individual cloud or model failures, an aspect rarely addressed in prior forecasting frameworks.

- Fine-grained temporal resolution: The framework is validated on 5 min interval residential load data, demonstrating that aggregate forecasts outperform the sum of per-appliance predictions in both stability and accuracy.

- Demonstrated accuracy and robustness: The system achieves a 35% reduction in RMSLE relative to the best single-model benchmark and maintains reliable performance under simulated cloud outages.

2. Methodology

2.1. Long Short-Term Memory (LSTM) Networks

2.2. Random Forest (RF)

2.3. Seasonal Autoregressive Integrated Moving Average with Exogenous Variables (SARIMAX)

1. Non-seasonal ARIMA component:

- $\phi(B)$: the non-seasonal AR polynomial
- $\theta(B)$: the non-seasonal MA polynomial
- $B$: the backshift operator
- $y_t$: the series at time $t$
- $\varepsilon_t$: a white-noise error term

2. Seasonal component (period $S$):

- $\Phi(B^S)$: seasonal autoregressive polynomial of order $P$
- $\Theta(B^S)$: seasonal moving average polynomial of order $Q$
- $S$: seasonality period
- $D$: number of seasonal differences

3. Exogenous regressors ($X_t$):

- $X_t$: external (exogenous) regressors at time $t$
- $\beta$: coefficient vector for the exogenous variables
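For reference, the three components listed above are commonly combined into a single SARIMAX$(p,d,q)(P,D,Q)_S$ model. The block below is a sketch of the standard textbook form in the regression-with-SARIMA-errors convention, and it is not necessarily the exact parameterization used in this work. Here $\varepsilon_t$ is the white-noise error, $X_t$ the exogenous regressors, and $\beta$ their coefficient vector; $d$ (the non-seasonal differencing order) and $u_t$ (the regression error) are symbols introduced here for illustration only.

```latex
\begin{aligned}
y_t &= \beta^{\top} X_t + u_t, \\
\phi(B)\,\Phi(B^{S})\,(1-B)^{d}\,(1-B^{S})^{D}\,u_t &= \theta(B)\,\Theta(B^{S})\,\varepsilon_t .
\end{aligned}
```

Setting $\beta = 0$ recovers a plain seasonal ARIMA model, which is one way to see what the exogenous weather features contribute.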

2.4. SSRLR

Algorithm 1. Pseudocode of the proposed algorithm

Input: Historical load data, weather features, forecast horizon = 12

- Step 1: Data Preprocessing
  - Normalize data using MinMaxScaler to the range [0, 1]
- Step 2: Feature Selection
  - Apply CM and select relevant features → CM_Output (used for LSTM)
  - Apply Chi-Square and select relevant features → Chi-Square_Output (used for SARIMAX)
  - Apply MRMR and select relevant features → MRMR_Output (used for RF)
- Step 3: Model Training and Primary Prediction (PP)
  - Train the SARIMAX model with seasonal and non-seasonal components; predict the next 12 points → SARIMAX_Output
  - Train the Random Forest model using weather and historical data; predict the next 12 points → RF_Output
  - Train the LSTM model for time-series forecasting; predict the next 12 points → LSTM_Output
- Step 4: Remainder Prediction (RP)
  - Perform additional adjustments if needed:
  - Cloud 1: use SARIMAX to forecast the LSTM remainders
  - Cloud 2: use LSTM to forecast the RF remainders
  - Cloud 3: use RF to forecast the SARIMAX remainders
- Step 5: Per-Cloud Final Output
  - LSTM final output (PP + RP) → cloud 1_Output
  - RF final output (PP + RP) → cloud 2_Output
  - SARIMAX final output (PP + RP) → cloud 3_Output
- Step 6: Ensemble Averaging
  - Ensemble_Output = (cloud 1_Output + cloud 2_Output + cloud 3_Output)/3
- Step 7: Output Final Prediction
  - Return Ensemble_Output
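The preprocessing and averaging steps of Algorithm 1 (Step 1 and Step 6) can be sketched in a few lines. The code below is a minimal pure-Python illustration, not the authors' implementation: `min_max_scale`, `inverse_min_max`, and `ensemble_average` are hypothetical helper names, and the scaler is a simplified stand-in for a library MinMaxScaler.

```python
from typing import List, Sequence, Tuple


def min_max_scale(values: Sequence[float]) -> Tuple[List[float], float, float]:
    """Scale values to [0, 1]; also return (min, max) so the scaling can be undone."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A constant series has no range; map it to zeros by convention.
        return [0.0 for _ in values], lo, hi
    return [(v - lo) / span for v in values], lo, hi


def inverse_min_max(scaled: Sequence[float], lo: float, hi: float) -> List[float]:
    """Map scaled values back to the original units."""
    return [s * (hi - lo) + lo for s in scaled]


def ensemble_average(cloud_outputs: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of equally long forecasts, one forecast per cloud."""
    horizon = len(cloud_outputs[0])
    if any(len(out) != horizon for out in cloud_outputs):
        raise ValueError("all cloud outputs must share the same horizon")
    return [sum(step) / len(cloud_outputs) for step in zip(*cloud_outputs)]
```

In practice the minimum and maximum would be taken from the training window only, so that the forecast horizon does not leak into the scaling.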

- The process begins with feature selection using the CM method, followed by primary forecasting with LSTM. A trend is then identified for the remainders, and SARIMAX is applied to forecast the subsequent remainders. The final output for this stage is obtained by summing the LSTM predictions with the forecasted remainders.

- Feature selection is performed using the MRMR method, followed by primary forecasting with Random Forest (RF). A trend is then identified for the remainders, and LSTM is applied to forecast the subsequent remainders. The final output for this stage is obtained by summing the RF predictions with the LSTM-forecasted remainders.

- Feature selection is performed using the Chi-Square method, followed by primary forecasting with SARIMAX. A trend is then identified for the remainders, and Random Forest (RF) is applied to forecast the subsequent remainders. The final output for this stage is obtained by summing the SARIMAX predictions with the RF-forecasted remainders.
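The primary-prediction plus remainder-prediction pattern shared by the three pipelines above can be illustrated with deliberately simple stand-in models. In this sketch, which is an assumption-laden toy rather than the paper's LSTM/RF/SARIMAX setup, the primary model is a persistence forecast and the remainder model repeats the mean past remainder; all function names are hypothetical.

```python
from typing import List, Sequence


def persistence_fitted(history: Sequence[float]) -> List[float]:
    """Toy primary model in-sample: the prediction for step t is the value at t-1."""
    return list(history[:-1])


def persistence_forecast(history: Sequence[float], horizon: int) -> List[float]:
    """Toy primary prediction (PP): repeat the last observed value."""
    return [history[-1]] * horizon


def mean_remainder_forecast(remainders: Sequence[float], horizon: int) -> List[float]:
    """Toy remainder prediction (RP): repeat the average past remainder."""
    avg = sum(remainders) / len(remainders)
    return [avg] * horizon


def residual_corrected_forecast(history: Sequence[float], horizon: int = 12) -> List[float]:
    """Return PP + RP for the next `horizon` points."""
    if len(history) < 2:
        raise ValueError("need at least two observations to compute remainders")
    fitted = persistence_fitted(history)
    # Remainders: the part of the series the primary model did not explain.
    remainders = [actual - pred for actual, pred in zip(history[1:], fitted)]
    pp = persistence_forecast(history, horizon)
    rp = mean_remainder_forecast(remainders, horizon)
    return [p + r for p, r in zip(pp, rp)]
```

Swapping the two toy models for trained forecasters (for example, LSTM as primary and SARIMAX on the remainders) keeps the same structure: fit the primary model, compute its in-sample remainders, forecast those remainders with the second model, and add the two forecasts.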

2.5. Two-Out-of-Three Multi-Cloud Voting Architecture

- Cloud 1: Implements the CM → LSTM → SARIMAX pipeline.

- Cloud 2: Implements the MRMR → RF → LSTM pipeline.

- Cloud 3: Implements the Chi-Square → SARIMAX → RF pipeline.

- High Availability and Robustness: The redundancy of multiple clouds ensures continuous operation even if one instance experiences downtime or degraded performance.

- Elastic Scalability: Cloud resources can be dynamically allocated to handle varying computational demands, enabling real-time or near real-time processing for large-scale datasets.

- Parallel Computation: Hosting clouds in different regions allows forecasts to be computed in parallel, improving delivery speed and responsiveness.

- Security and Data Integrity: Cloud platforms provide encryption, controlled access, and automated backup systems, ensuring the safety and reliability of both input data and forecasting models.

- Cost Efficiency: Pay-as-you-go billing models allow for optimal use of resources without the overhead of maintaining extensive on-premises infrastructure.

- Seamless Integration and Maintenance: Cloud ecosystems support easy deployment of updates, integration of analytics tools, and automated monitoring of model performance, at lower cost than on-premises systems.

- Collaboration and Accessibility: Authorized users can securely access the forecasting system from anywhere, enabling collaborative work across geographically distributed teams.

- $R^3$ denotes the case where all three systems perform without error.

- $3R^2(1 - R)$ corresponds to the case where two systems deliver correct outputs and one produces an error, which is automatically overruled by the majority decision.
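Summing the two terms above gives the probability that at least two of the three clouds are correct, assuming each cloud has the same reliability R and fails independently. The sketch below computes that value and also shows one possible voting rule for continuous forecasts. The voting rule is an assumption made for illustration (ignore clouds that returned nothing, then average the two forecasts that agree most closely); the function names are hypothetical.

```python
from itertools import combinations
from typing import List, Optional, Sequence


def two_out_of_three_vote(outputs: Sequence[Optional[Sequence[float]]]) -> List[float]:
    """Combine three cloud forecasts, tolerating one failed or deviating cloud.

    Assumed rule: drop clouds that returned None, then average the pair of
    forecasts with the smallest maximum point-wise disagreement.
    """
    if len(outputs) != 3:
        raise ValueError("expected exactly three cloud outputs")
    available = [list(o) for o in outputs if o is not None]
    if len(available) < 2:
        raise RuntimeError("fewer than two clouds responded; no majority")

    def disagreement(pair):
        a, b = pair
        return max(abs(x - y) for x, y in zip(a, b))

    a, b = min(combinations(available, 2), key=disagreement)
    return [(x + y) / 2 for x, y in zip(a, b)]


def two_out_of_three_reliability(r: float) -> float:
    """System reliability R^3 + 3 R^2 (1 - R) for per-cloud reliability r."""
    if not 0.0 <= r <= 1.0:
        raise ValueError("r must be a probability in [0, 1]")
    return r ** 3 + 3 * r ** 2 * (1 - r)
```

For example, a per-cloud reliability of 0.9 gives 0.729 + 0.243 = 0.972 for the voted system, which is higher than any single cloud under these assumptions.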

3. Data Description and Preparation

- Forced-air heating unit on the lower level

- Air conditioner on the lower level

- Refrigerator

- Air conditioner on the upper level of the house

- General (miscellaneous) household load

- Laundry machines (washer and dryer)

- Dishwasher and garbage disposal

- Microwave oven

- Forced-air heating unit on the upper level of the house

- Kitchen circuit (GFI) including the toaster

Estimating the Holiday/Weekend Adjustment Factor and Modeling the Seasonal Effect

- Applying this procedure to our 2022 dataset produces:

- A total of 372 “normalized” days (12 months × 31 days)

- 372 days × 24 h = 8928 h

- At 5 min resolution: 8928 h × 12 readings per hour = 107,136 data points
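The data-point count above follows directly from the normalized calendar. A short check of the arithmetic (variable names are illustrative):

```python
MONTHS = 12
DAYS_PER_NORMALIZED_MONTH = 31
HOURS_PER_DAY = 24
READINGS_PER_HOUR = 60 // 5  # one reading every 5 minutes

normalized_days = MONTHS * DAYS_PER_NORMALIZED_MONTH
total_hours = normalized_days * HOURS_PER_DAY
total_points = total_hours * READINGS_PER_HOUR

print(normalized_days, total_hours, total_points)  # 372 8928 107136
```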

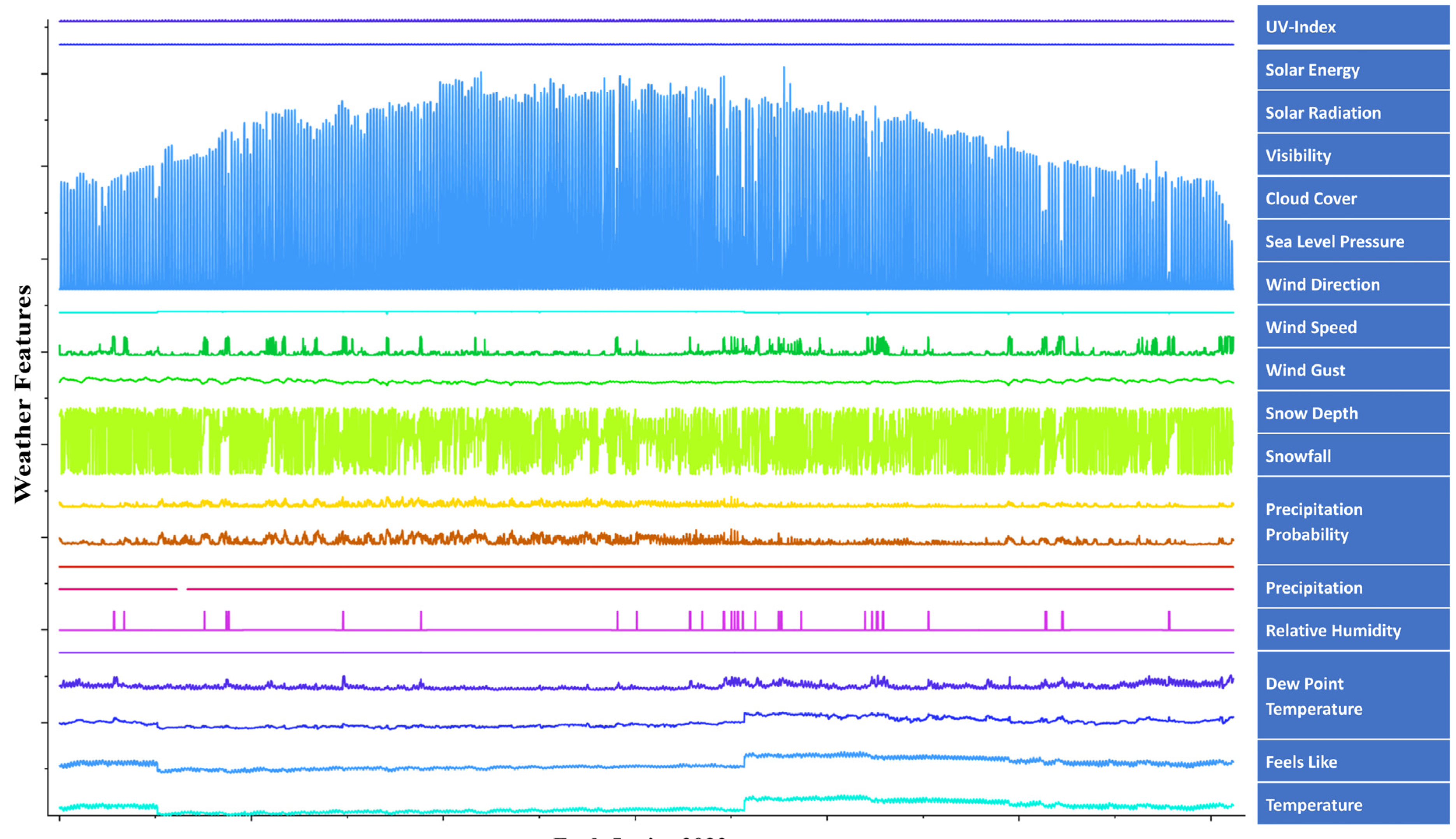

4. Applying Feature Selection

4.1. Chi-Square Test

- Bin the features: Split weather variables (temperature, UV index, etc.) into a set of categories.

- Bin the target: Organize historical load values into energy consumption intervals.

- Compute frequencies: Tally how often each weather-category/load-category pair occurs.

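- Calculate statistic: For each feature, sum over all bins the term $(O_{ij} - E_{ij})^2 / E_{ij}$, where $O_{ij}$ is the observed frequency of a weather-category/load-category pair and $E_{ij}$ is the frequency expected if the two were independent.

- Rank features: Order variables by their Chi-Square scores; higher scores indicate greater predictive value.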
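The steps above can be sketched for a single feature as follows. This is a minimal pure-Python illustration of the standard Pearson chi-square statistic on a contingency table of binned feature values against binned load values; `contingency_table` and `chi_square_statistic` are hypothetical helper names, and the binning itself is assumed to have been done already.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def contingency_table(x_bins: Sequence[Hashable], y_bins: Sequence[Hashable]) -> List[List[int]]:
    """Count how often each (feature category, load category) pair occurs."""
    xs = sorted(set(x_bins))
    ys = sorted(set(y_bins))
    counts = Counter(zip(x_bins, y_bins))
    return [[counts[(x, y)] for y in ys] for x in xs]


def chi_square_statistic(observed: Sequence[Sequence[float]]) -> float:
    """Sum (O - E)^2 / E over all cells, with E from the row and column totals."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            if expected > 0:  # skip empty rows/columns to avoid division by zero
                stat += (o - expected) ** 2 / expected
    return stat
```

Computing this score once per weather feature and sorting in descending order gives the ranking described in the last step.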

4.2. Minimum Redundancy Maximum Relevance (MRMR)

- Standardize each numeric feature: $z = (x - \mu)/\sigma$, where $\mu$ and $\sigma$ are the feature's mean and standard deviation.

- Compute mutual information (MI) between each feature and the energy-consumption target.

- Rank features by high relevance (large MI) and low redundancy (little overlap with already-selected features).

- Temperature and feels-like temperature emerge as the strongest predictors.

- Dew point, sea level pressure, and humidity have moderate importance.

- Solar energy and visibility register relatively low relevance scores.

| Feature | Temperature | Feels-Like | UV Index | Sea Level Pressure | Solar Energy | Solar Radiation | Visibility |
|---|---|---|---|---|---|---|---|
| Score | 0.386 | 0.381 | 0.052 | 0.203 | 0.073 | 0.153 | 0.040 |

| Feature | Temperature | Feels-Like | UV Index | Sea Level Pressure | Solar Energy | Solar Radiation |
|---|---|---|---|---|---|---|
| Score | 0.242 | 0.159 | 0.139 | 0.106 | 0.114 | 0.085 |
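The relevance/redundancy trade-off used in the MRMR ranking of Section 4.2 can be sketched with a greedy selection loop. The code below is an illustrative simplification, not the authors' implementation: it assumes the features and target have already been discretized into integer bins, estimates mutual information from counts, and uses the difference form of the criterion (relevance minus mean redundancy). The function names are hypothetical.

```python
from collections import Counter
from math import log
from typing import Dict, List, Sequence


def mutual_information(xs: Sequence[int], ys: Sequence[int]) -> float:
    """Plug-in estimate of I(X; Y) in nats for two discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        mi += p_xy * log(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


def mrmr_rank(features: Dict[str, Sequence[int]], target: Sequence[int]) -> List[str]:
    """Greedy MRMR order: maximize relevance minus mean redundancy."""
    relevance = {name: mutual_information(col, target) for name, col in features.items()}
    selected: List[str] = []
    remaining = list(features)

    def score(name: str) -> float:
        if not selected:
            return relevance[name]
        redundancy = sum(
            mutual_information(features[name], features[s]) for s in selected
        ) / len(selected)
        return relevance[name] - redundancy

    while remaining:
        best = max(remaining, key=score)  # ties resolve to the earliest feature
        selected.append(best)
        remaining.remove(best)
    return selected
```

With a target built from two independent binary features `a` and `c` plus a duplicate `b` of `a`, the loop picks `a` first, then `c`, and ranks the redundant copy `b` last, which is the behavior the redundancy penalty is meant to produce.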

4.3. Correlation Matrix

- Sea level pressure has the strongest negative correlation (−0.34), implying that higher pressures generally coincide with lower energy use (likely due to milder weather).

- Temperature, feels-like temperature, and dew point have strong positive correlations (approximately +0.32, +0.31, and +0.22), reflecting increased cooling demand under warmer or more humid conditions.

- Solar variables (UV index, solar radiation, solar energy) exhibit modest positive correlations (around +0.17).

- Cloud cover and visibility show near-zero correlations, indicating negligible direct effects on consumption.
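Coefficients such as those listed above are Pearson correlations between each weather feature and the load. A minimal pure-Python sketch of that computation and of ranking features by absolute correlation follows; the function names are hypothetical, and Pearson correlation is an assumption about which coefficient was used.

```python
from math import sqrt
from typing import Dict, List, Sequence, Tuple


def pearson_correlation(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def rank_by_abs_correlation(
    features: Dict[str, Sequence[float]], target: Sequence[float]
) -> List[Tuple[str, float]]:
    """Return (name, r) pairs sorted by |r|, strongest first."""
    scored = [(name, pearson_correlation(col, target)) for name, col in features.items()]
    return sorted(scored, key=lambda item: abs(item[1]), reverse=True)
```

Sorting by absolute value keeps strongly negative predictors, such as sea level pressure in the list above, near the top of the ranking.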

5. Discussion and Comparison of the Results: Overall Consumption Prediction vs. Aggregated Appliance Forecasts

- FAU Downstairs: RMSLE 0.01783, RMSE 0.02536

- AC Downstairs: RMSLE 0.04838, RMSE 0.04962

- Refrigerator: RMSLE 0.04195, RMSE 0.04103

- AC Upstairs: RMSLE 0.04732, RMSE 0.04878

- Laundry/Dryer: RMSLE 0.04137, RMSE 0.04238

- Dishwasher/Garbage: RMSLE 0.04063, RMSE 0.04165

- Microwave: RMSLE 0.04116, RMSE 0.04207

- FAU Upstairs: RMSLE 0.04192, RMSE 0.07004

- Kitchen GFI/Toaster: RMSLE 0.03949, RMSE 0.04051

- General/Misc: SSRLR is best but errors are higher (RMSLE 0.06854, RMSE 0.10892) because this circuit mixes many small, irregular uses.

- Spiky/episodic loads (Microwave, Dishwasher/Garbage, Laundry) are handled well by SSRLR, which efficiently manages short bursts.

- HVAC loads (AC and FAU) also favor SSRLR, showing that it captures both seasonality and start/stop behavior.

- General/Misc is the hardest circuit to forecast because it blends many small, random loads; SSRLR still shows the best accuracy.

- SSRLR is best overall (aggregate and per appliance averages), strong on both relative (RMSLE) and absolute (RMSE/MAE) errors.

- RF/XGBoost are reliable runners-up; they capture nonlinear effects and are stable across devices.

- SARIMAX is a good seasonal baseline, but weaker on sharp spikes.

- LSTM and GRU do not dominate here, likely due to intermittency, short forecast horizons, and limited effective data per appliance.

- LR/DT/DBN/SVM perform poorly for short-term load forecasting in this setting.

- Direct aggregate forecasting (SSRLR) is much more accurate than the sum of appliance forecasts on this dataset (~39.5% lower RMSLE, best vs. best).

- SSRLR is the most consistent model at both levels; RF/XGBoost are strong baselines.
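The error metrics used in this comparison, and the two ways of forming a whole-building forecast, can be written down compactly. The sketch below uses the usual definitions of RMSE and RMSLE (with `log1p`, which is an assumption about the exact variant used) and hypothetical helper names; it does not reproduce the reported numbers.

```python
from math import log1p, sqrt
from typing import Dict, List, Sequence


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error."""
    n = len(actual)
    return sqrt(sum((p - a) ** 2 for a, p in zip(actual, predicted)) / n)


def rmsle(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared logarithmic error, using log(1 + x)."""
    if any(v <= -1 for v in list(actual) + list(predicted)):
        raise ValueError("RMSLE requires all values to be greater than -1")
    n = len(actual)
    return sqrt(sum((log1p(p) - log1p(a)) ** 2 for a, p in zip(actual, predicted)) / n)


def sum_of_appliance_forecasts(per_appliance: Dict[str, Sequence[float]]) -> List[float]:
    """Bottom-up building forecast: add the appliance forecasts point by point."""
    return [sum(step) for step in zip(*per_appliance.values())]


def relative_reduction(baseline_error: float, improved_error: float) -> float:
    """Fractional error reduction of the improved model relative to the baseline."""
    return (baseline_error - improved_error) / baseline_error
```

Scoring both the direct aggregate forecast and the output of `sum_of_appliance_forecasts` against the same measured building load, then passing the two errors to `relative_reduction`, is how a statement of the form "X% lower RMSLE" can be checked.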

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| ML | Machine Learning |

| MSE | Mean Squared Error |

| LF | Load Forecasting |

| MAPE | Mean Absolute Percentage Error |

| MAE | Mean Absolute Error |

| STLF | Short-Term Load Forecasting |

| RMSLE | Root Mean Squared Logarithmic Error |

| MTLF | Mid-Term Load Forecasting |

| RMSE | Root Mean Squared Error |

| LTLF | Long-Term Load Forecasting |

| LR | Linear Regression |

| RF | Random Forest |

| DT | Decision Tree |

| GRU | Gated Recurrent Unit |

| SVM | Support Vector Machine |

| DBN | Deep Belief Network |

| ARIMA | Autoregressive Integrated Moving Average |

| SSRLR | Sparse SARIMAX, Random Forest, LSTM, and Remainder Prediction |

| SARIMAX | Seasonal Autoregressive Integrated Moving Average with Exogenous Variables |

| XGBoost | Extreme Gradient Boosting |

| LSTM | Long Short-Term Memory |

| CM | Correlation Matrix |

| MRMR | Minimum Redundancy Maximum Relevance |

| RP | Remainder Prediction |

| PP | Primary Prediction |

| AL | Appliance-level |

| AF | Aggregate forecasts |

Appendix A

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 1.569 | 0.911 | 0.592 | 1.634 | 0.853 | 0.951 | 0.621 | 0.938 | 0.871 | 0.782 | 0.650 |

| 2 | 2.104 | 0.887 | 0.545 | 1.610 | 0.703 | 0.279 | 0.602 | 0.923 | 0.699 | 0.775 | 0.646 |

| 3 | 2.104 | 1.040 | 0.516 | 1.576 | 0.643 | 0.513 | 0.569 | 0.901 | 0.637 | 0.768 | 0.752 |

| 4 | 1.569 | 0.941 | 1.294 | 1.941 | 1.963 | 3.180 | 0.665 | 1.112 | 1.968 | 1.730 | 1.840 |

| 5 | 2.104 | 0.884 | 0.812 | 1.658 | 0.892 | 0.946 | 0.637 | 0.953 | 0.89 | 0.883 | 0.846 |

| 6 | 1.569 | 0.888 | 0.580 | 1.573 | 0.643 | 0.513 | 0.565 | 0.899 | 0.643 | 0.612 | 0.590 |

| 7 | 1.569 | 0.920 | 0.561 | 1.577 | 0.631 | 0.513 | 0.570 | 0.902 | 0.632 | 0.612 | 0.590 |

| 8 | 1.569 | 0.927 | 0.535 | 1.624 | 0.854 | 0.788 | 0.613 | 0.932 | 0.833 | 0.708 | 0.625 |

| 9 | 2.639 | 1.050 | 0.535 | 1.634 | 0.853 | 0.951 | 0.621 | 0.938 | 0.865 | 0.795 | 0.664 |

| 10 | 2.104 | 0.958 | 1.247 | 2.004 | 1.708 | 1.031 | 0.642 | 1.143 | 1.71 | 1.714 | 1.732 |

| 11 | 2.639 | 0.902 | 0.789 | 1.689 | 0.988 | 1.019 | 0.655 | 0.972 | 0.988 | 0.989 | 0.989 |

| 12 | 1.569 | 0.911 | 0.575 | 1.596 | 0.738 | 0.794 | 0.589 | 0.914 | 0.737 | 0.701 | 0.699 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.017513 | 0.008902 | 0.007134 | 0.012425 | 0.006982 | 0.009112 | 0.007698 | 0.008235 | 0.007091 | 0.006785 | 0.00601 |

| 2 | 0.018425 | 0.009503 | 0.00721 | 0.012833 | 0.006991 | 0.009621 | 0.007895 | 0.008501 | 0.007254 | 0.006945 | 0.006043 |

| 3 | 0.017901 | 0.007625 | 0.007625 | 0.012332 | 0.006951 | 0.009432 | 0.00921 | 0.007193 | 0.008395 | 0.006851 | 0.005963 |

| 4 | 1.202331 | 0.731299 | 0.557128 | 0.983572 | 0.645221 | 0.71294 | 0.58932 | 0.638521 | 0.552118 | 0.501237 | 0.477047 |

| 5 | 0.391254 | 0.251332 | 0.173555 | 0.288413 | 0.210892 | 0.239144 | 0.199813 | 0.220335 | 0.176624 | 0.161225 | 0.145373 |

| 6 | 0.018311 | 0.009108 | 0.007324 | 0.012641 | 0.006914 | 0.009405 | 0.007809 | 0.00829 | 0.007114 | 0.006831 | 0.006037 |

| 7 | 0.018812 | 0.00961 | 0.007501 | 0.013001 | 0.007225 | 0.009832 | 0.008014 | 0.008502 | 0.007334 | 0.007009 | 0.006147 |

| 8 | 0.018501 | 0.009215 | 0.007254 | 0.012721 | 0.007001 | 0.009611 | 0.007921 | 0.008411 | 0.007209 | 0.006982 | 0.00611 |

| 9 | 0.0189 | 0.0097 | 0.007605 | 0.01322 | 0.007332 | 0.009955 | 0.008225 | 0.00872 | 0.007445 | 0.00712 | 0.00617 |

| 10 | 1.210422 | 0.740291 | 0.56321 | 0.990512 | 0.653222 | 0.721008 | 0.59512 | 0.642892 | 0.557231 | 0.503188 | 0.477707 |

| 11 | 0.39012 | 0.250992 | 0.172801 | 0.287134 | 0.209921 | 0.238551 | 0.198923 | 0.219832 | 0.175832 | 0.160534 | 0.145263 |

| 12 | 0.01825 | 0.00902 | 0.007111 | 0.01252 | 0.006952 | 0.009255 | 0.007715 | 0.008175 | 0.007003 | 0.006822 | 0.006033 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.002301 | 0.001205 | 0.000924 | 0.00161 | 0.000851 | 0.001132 | 0.000967 | 0.001031 | 0.000912 | 0.000843 | 0.00078 |

| 2 | 0.002298 | 0.001211 | 0.000919 | 0.001599 | 0.000845 | 0.001127 | 0.000963 | 0.001028 | 0.000908 | 0.000839 | 0.00078 |

| 3 | 0.002115 | 0.001099 | 0.000842 | 0.001503 | 0.000799 | 0.001046 | 0.000892 | 0.00095 | 0.000838 | 0.000772 | 0.0007 |

| 4 | 0.002069 | 0.001067 | 0.000826 | 0.001469 | 0.00078 | 0.001021 | 0.00087 | 0.000928 | 0.00082 | 0.000755 | 0.00069 |

| 5 | 0.001986 | 0.001025 | 0.000793 | 0.001409 | 0.000748 | 0.000979 | 0.000834 | 0.00089 | 0.000787 | 0.000724 | 0.00066 |

| 6 | 0.002083 | 0.001074 | 0.00083 | 0.001453 | 0.000772 | 0.001009 | 0.00086 | 0.000919 | 0.000815 | 0.000747 | 0.000693 |

| 7 | 0.002352 | 0.001215 | 0.000938 | 0.001644 | 0.000869 | 0.001154 | 0.000985 | 0.001051 | 0.000933 | 0.000865 | 0.00081 |

| 8 | 0.001858 | 0.000961 | 0.000742 | 0.001298 | 0.000688 | 0.000906 | 0.000774 | 0.000827 | 0.000737 | 0.000676 | 0.000643 |

| 9 | 0.002067 | 0.001072 | 0.00083 | 0.00145 | 0.00077 | 0.001006 | 0.000858 | 0.000917 | 0.000813 | 0.000746 | 0.00071 |

| 10 | 0.002157 | 0.00112 | 0.000865 | 0.001515 | 0.000805 | 0.001051 | 0.000897 | 0.000958 | 0.000851 | 0.000781 | 0.00074 |

| 11 | 0.002276 | 0.001183 | 0.000914 | 0.001619 | 0.000861 | 0.001124 | 0.00096 | 0.001028 | 0.000913 | 0.000845 | 0.000773 |

| 12 | 0.002296 | 0.001196 | 0.000922 | 0.001635 | 0.000871 | 0.001137 | 0.00097 | 0.001039 | 0.000921 | 0.000852 | 0.000783 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | −0.00108 | −0.00052 | −0.00041 | −0.0008 | −0.00033 | −0.00049 | −0.00045 | −0.00047 | −0.00034 | −0.0003 | −0.00036 |

| 2 | −0.00106 | −0.00051 | −0.0004 | −0.00078 | −0.00033 | −0.00049 | −0.00044 | −0.00047 | −0.00033 | −0.00029 | −0.00035 |

| 3 | −0.00113 | −0.00054 | −0.00043 | −0.00082 | −0.00034 | −0.00051 | −0.00046 | −0.00049 | −0.00035 | −0.00031 | −0.00038 |

| 4 | −0.00137 | −0.00066 | −0.00052 | −0.00099 | −0.00042 | −0.00062 | −0.00056 | −0.00059 | −0.00042 | −0.00037 | −0.00046 |

| 5 | −0.00125 | −0.0006 | −0.00047 | −0.0009 | −0.00038 | −0.00056 | −0.00051 | −0.00054 | −0.00038 | −0.00034 | −0.00042 |

| 6 | −0.00123 | −0.00059 | −0.00046 | −0.00089 | −0.00037 | −0.00055 | −0.0005 | −0.00053 | −0.00038 | −0.00033 | −0.00041 |

| 7 | −0.00097 | −0.00046 | −0.00036 | −0.0007 | −0.00029 | −0.00044 | −0.00039 | −0.00042 | −0.0003 | −0.00026 | −0.00032 |

| 8 | −0.00142 | −0.00068 | −0.00054 | −0.00104 | −0.00043 | −0.00064 | −0.00058 | −0.00062 | −0.00044 | −0.00038 | −0.00048 |

| 9 | −0.00161 | −0.00077 | −0.00061 | −0.00117 | −0.00049 | −0.00072 | −0.00065 | −0.00069 | −0.00049 | −0.00043 | −0.00054 |

| 10 | −0.00164 | −0.00079 | −0.00062 | −0.00119 | −0.0005 | −0.00074 | −0.00067 | −0.00071 | −0.0005 | −0.00044 | −0.00055 |

| 11 | −0.00134 | −0.00064 | −0.00051 | −0.00098 | −0.00041 | −0.00061 | −0.00055 | −0.00059 | −0.00042 | −0.00036 | −0.00045 |

| 12 | −0.00155 | −0.00074 | −0.00059 | −0.00113 | −0.00047 | −0.0007 | −0.00063 | −0.00067 | −0.00048 | −0.00042 | −0.00052 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.018945 | 0.009118 | 0.007204 | 0.013375 | 0.007001 | 0.009402 | 0.008015 | 0.008465 | 0.007293 | 0.006981 | 0.006727 |

| 2 | 0.018832 | 0.009065 | 0.007166 | 0.013288 | 0.006942 | 0.009351 | 0.007984 | 0.008432 | 0.007259 | 0.006951 | 0.006703 |

| 3 | 0.01884 | 0.009071 | 0.00717 | 0.013296 | 0.006946 | 0.009356 | 0.007988 | 0.008437 | 0.007263 | 0.006955 | 0.006703 |

| 4 | 0.017858 | 0.008604 | 0.00681 | 0.01264 | 0.006601 | 0.008892 | 0.0076 | 0.008042 | 0.006897 | 0.006581 | 0.006343 |

| 5 | 0.018201 | 0.008771 | 0.00694 | 0.012894 | 0.00674 | 0.009086 | 0.007769 | 0.00823 | 0.00706 | 0.006732 | 0.00648 |

| 6 | 0.01826 | 0.0088 | 0.006962 | 0.012933 | 0.006761 | 0.009115 | 0.007794 | 0.008255 | 0.007081 | 0.006751 | 0.0065 |

| 7 | 0.018273 | 0.008807 | 0.006968 | 0.012944 | 0.006768 | 0.009122 | 0.0078 | 0.008261 | 0.007087 | 0.006756 | 0.0065 |

| 8 | 0.018284 | 0.008812 | 0.006971 | 0.01295 | 0.006771 | 0.009126 | 0.007804 | 0.008265 | 0.00709 | 0.006759 | 0.006503 |

| 9 | 0.018323 | 0.008832 | 0.006986 | 0.012978 | 0.006788 | 0.009145 | 0.00782 | 0.00828 | 0.007105 | 0.006772 | 0.006517 |

| 10 | 0.017671 | 0.008507 | 0.006718 | 0.012506 | 0.00655 | 0.008794 | 0.007527 | 0.007974 | 0.006833 | 0.006528 | 0.006193 |

| 11 | 0.018766 | 0.00902 | 0.007139 | 0.013233 | 0.006915 | 0.009312 | 0.007955 | 0.008407 | 0.007238 | 0.006931 | 0.006593 |

| 12 | 0.0189 | 0.009091 | 0.007201 | 0.01335 | 0.006986 | 0.009381 | 0.00801 | 0.00846 | 0.00729 | 0.006981 | 0.00664 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.010825 | 0.005273 | 0.004242 | 0.007917 | 0.004008 | 0.005536 | 0.004821 | 0.005129 | 0.004181 | 0.003956 | 0.003603 |

| 2 | 0.01091 | 0.005316 | 0.004277 | 0.007988 | 0.004045 | 0.005588 | 0.004866 | 0.005175 | 0.004217 | 0.00399 | 0.003637 |

| 3 | 0.010789 | 0.005256 | 0.004227 | 0.007878 | 0.003993 | 0.005522 | 0.004804 | 0.005113 | 0.00416 | 0.003935 | 0.00358 |

| 4 | 0.010833 | 0.005278 | 0.004244 | 0.007913 | 0.004012 | 0.005539 | 0.004822 | 0.005133 | 0.004176 | 0.003951 | 0.003607 |

| 5 | 0.010537 | 0.005131 | 0.004127 | 0.00769 | 0.003898 | 0.005382 | 0.004686 | 0.004983 | 0.004058 | 0.003836 | 0.00351 |

| 6 | 0.010678 | 0.005202 | 0.004182 | 0.007824 | 0.003964 | 0.005464 | 0.004762 | 0.005063 | 0.004118 | 0.003893 | 0.003563 |

| 7 | 0.010579 | 0.005152 | 0.004142 | 0.007738 | 0.003921 | 0.005414 | 0.004718 | 0.005018 | 0.004079 | 0.003856 | 0.003527 |

| 8 | 0.010584 | 0.005154 | 0.004145 | 0.007744 | 0.003924 | 0.005418 | 0.004721 | 0.005021 | 0.004082 | 0.003858 | 0.003527 |

| 9 | 0.010606 | 0.005166 | 0.004154 | 0.00776 | 0.003934 | 0.00543 | 0.004731 | 0.005032 | 0.004091 | 0.003867 | 0.00352 |

| 10 | 0.010582 | 0.005154 | 0.004144 | 0.007742 | 0.003923 | 0.005417 | 0.00472 | 0.00502 | 0.004081 | 0.003857 | 0.003527 |

| 11 | 0.010919 | 0.00532 | 0.004281 | 0.008003 | 0.00405 | 0.005593 | 0.004872 | 0.005183 | 0.004224 | 0.003996 | 0.003637 |

| 12 | 0.010732 | 0.005227 | 0.004207 | 0.007853 | 0.003972 | 0.00549 | 0.004787 | 0.00509 | 0.004142 | 0.003919 | 0.003567 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.002256 | 0.001121 | 0.000874 | 0.001661 | 0.000752 | 0.001051 | 0.000942 | 0.001003 | 0.000789 | 0.000705 | 0.00075 |

| 2 | 0.002452 | 0.001222 | 0.000953 | 0.001799 | 0.000821 | 0.001152 | 0.00103 | 0.001098 | 0.000863 | 0.000773 | 0.000817 |

| 3 | 0.002224 | 0.001103 | 0.000859 | 0.001636 | 0.00074 | 0.001033 | 0.000926 | 0.000985 | 0.000775 | 0.000693 | 0.00074 |

| 4 | 0.004831 | 0.002374 | 0.001857 | 0.003555 | 0.001598 | 0.002243 | 0.002026 | 0.002155 | 0.001692 | 0.001515 | 0.001643 |

| 5 | 0.003074 | 0.001514 | 0.001186 | 0.002263 | 0.001022 | 0.001435 | 0.001296 | 0.001379 | 0.001082 | 0.000968 | 0.001047 |

| 6 | 0.002365 | 0.001174 | 0.000913 | 0.001719 | 0.000777 | 0.001093 | 0.00098 | 0.001049 | 0.000823 | 0.000739 | 0.000787 |

| 7 | 0.002599 | 0.001283 | 0.000997 | 0.001892 | 0.000857 | 0.0012 | 0.001077 | 0.001151 | 0.000902 | 0.000809 | 0.000863 |

| 8 | 0.002129 | 0.00105 | 0.000814 | 0.001552 | 0.000704 | 0.000985 | 0.000882 | 0.000944 | 0.00074 | 0.000664 | 0.000707 |

| 9 | 0.002097 | 0.001034 | 0.000802 | 0.001528 | 0.000693 | 0.00097 | 0.000869 | 0.00093 | 0.000729 | 0.000654 | 0.000697 |

| 10 | 0.004711 | 0.002321 | 0.001815 | 0.003466 | 0.001556 | 0.002184 | 0.001974 | 0.0021 | 0.00165 | 0.001477 | 0.00161 |

| 11 | 0.002857 | 0.001414 | 0.001106 | 0.002102 | 0.000945 | 0.001326 | 0.00119 | 0.001272 | 0.001 | 0.000897 | 0.000977 |

| 12 | 0.002264 | 0.00112 | 0.000874 | 0.001662 | 0.000752 | 0.00105 | 0.000942 | 0.001004 | 0.00079 | 0.000705 | 0.00075 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.003218 | 0.001858 | 0.001458 | 0.002322 | 0.001188 | 0.001566 | 0.001501 | 0.001598 | 0.001382 | 0.001318 | 0.00108 |

| 2 | 0.003312 | 0.001927 | 0.00151 | 0.002387 | 0.001239 | 0.001645 | 0.001555 | 0.001678 | 0.001432 | 0.001362 | 0.0011266 |

| 3 | 0.003099 | 0.001774 | 0.001387 | 0.002224 | 0.001138 | 0.001512 | 0.001429 | 0.001535 | 0.001316 | 0.001252 | 0.0010433 |

| 4 | 0.003178 | 0.001847 | 0.001439 | 0.002299 | 0.001191 | 0.001568 | 0.001481 | 0.001589 | 0.001364 | 0.00131 | 0.0010733 |

| 5 | 0.003275 | 0.001898 | 0.001487 | 0.002364 | 0.001221 | 0.00161 | 0.001532 | 0.001654 | 0.00141 | 0.001343 | 0.00111 |

| 6 | 0.003214 | 0.001864 | 0.001459 | 0.002327 | 0.001206 | 0.00159 | 0.001503 | 0.001624 | 0.001395 | 0.001339 | 0.0010966 |

| 7 | 0.003329 | 0.00192 | 0.001507 | 0.002402 | 0.001228 | 0.001631 | 0.001552 | 0.001665 | 0.001428 | 0.001363 | 0.0011166 |

| 8 | 0.003112 | 0.001814 | 0.001417 | 0.002265 | 0.001169 | 0.001546 | 0.00146 | 0.001577 | 0.001342 | 0.001298 | 0.0010733 |

| 9 | 0.0032 | 0.001843 | 0.001432 | 0.002295 | 0.001184 | 0.001561 | 0.001474 | 0.001594 | 0.001369 | 0.001313 | 0.0010766 |

| 10 | 0.003252 | 0.001892 | 0.001481 | 0.002362 | 0.001225 | 0.001616 | 0.001536 | 0.001637 | 0.001403 | 0.001347 | 0.0011133 |

| 11 | 0.003284 | 0.00191 | 0.001497 | 0.002389 | 0.001228 | 0.001631 | 0.00154 | 0.001653 | 0.00142 | 0.001363 | 0.0011166 |

| 12 | 0.003286 | 0.001909 | 0.001499 | 0.002387 | 0.001232 | 0.001634 | 0.001543 | 0.001654 | 0.001421 | 0.001354 | 0.00111 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.023895 | 0.01113 | 0.009172 | 0.017882 | 0.008612 | 0.012544 | 0.011276 | 0.011869 | 0.009442 | 0.008772 | 0.00794 |

| 2 | 0.02366 | 0.011016 | 0.00908 | 0.017737 | 0.008532 | 0.012452 | 0.011191 | 0.01178 | 0.009363 | 0.008698 | 0.007887 |

| 3 | 0.02375 | 0.011062 | 0.009115 | 0.017801 | 0.008568 | 0.012493 | 0.011227 | 0.011821 | 0.009392 | 0.008728 | 0.007917 |

| 4 | 1.84365 | 0.869225 | 0.694118 | 1.385427 | 0.657311 | 0.951882 | 0.864113 | 0.910225 | 0.702447 | 0.635822 | 0.62757 |

| 5 | 0.320535 | 0.155773 | 0.124193 | 0.244153 | 0.115867 | 0.168152 | 0.152559 | 0.161037 | 0.12491 | 0.112967 | 0.109373 |

| 6 | 0.02404 | 0.011191 | 0.009218 | 0.017975 | 0.008665 | 0.012605 | 0.011332 | 0.011929 | 0.009491 | 0.008819 | 0.008013 |

| 7 | 0.02371 | 0.011043 | 0.009107 | 0.017768 | 0.008554 | 0.012476 | 0.011212 | 0.011802 | 0.009376 | 0.008713 | 0.007903 |

| 8 | 0.02399 | 0.011177 | 0.009207 | 0.01795 | 0.008655 | 0.012595 | 0.011323 | 0.01192 | 0.009481 | 0.00881 | 0.008007 |

| 9 | 0.02387 | 0.011118 | 0.00916 | 0.017868 | 0.008608 | 0.012544 | 0.011276 | 0.011871 | 0.009437 | 0.008771 | 0.007967 |

| 10 | 1.84782 | 0.871025 | 0.695452 | 1.388362 | 0.659014 | 0.954193 | 0.866174 | 0.912356 | 0.704123 | 0.63731 | 0.629273 |

| 11 | 0.317834 | 0.154462 | 0.123164 | 0.242273 | 0.114952 | 0.166883 | 0.151423 | 0.159822 | 0.123991 | 0.112142 | 0.10793 |

| 12 | 0.023565 | 0.010981 | 0.00905 | 0.017696 | 0.008513 | 0.012426 | 0.01117 | 0.011758 | 0.009338 | 0.008672 | 0.00785 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.000033 | 0.000018 | 0.000014 | 0.000027 | 0.000012 | 0.000019 | 0.000017 | 0.000018 | 0.000014 | 0.000013 | 0.0000133 |

| 2 | 0.00006 | 0.00003 | 0.000024 | 0.000048 | 0.000021 | 0.000033 | 0.000029 | 0.000031 | 0.000024 | 0.000022 | 0.00002 |

| 3 | 0.000108 | 0.000052 | 0.000041 | 0.000086 | 0.000038 | 0.000058 | 0.000051 | 0.000055 | 0.000043 | 0.000039 | 0.0000367 |

| 4 | 0.000176 | 0.000084 | 0.000067 | 0.00014 | 0.000061 | 0.000095 | 0.000084 | 0.00009 | 0.000071 | 0.000065 | 0.00006 |

| 5 | 0.000168 | 0.00008 | 0.000064 | 0.000134 | 0.000059 | 0.00009 | 0.000081 | 0.000087 | 0.000069 | 0.000063 | 0.0000567 |

| 6 | 0.00017 | 0.000081 | 0.000065 | 0.000136 | 0.000059 | 0.000091 | 0.000082 | 0.000088 | 0.00007 | 0.000064 | 0.0000567 |

| 7 | 0.00013 | 0.000062 | 0.00005 | 0.000104 | 0.000045 | 0.000069 | 0.000063 | 0.000067 | 0.000053 | 0.000048 | 0.0000433 |

| 8 | 0.00033 | 0.000155 | 0.000124 | 0.000264 | 0.000118 | 0.000176 | 0.000159 | 0.000168 | 0.000133 | 0.00012 | 0.00011 |

| 9 | 0.000249 | 0.00012 | 0.000095 | 0.0002 | 0.000089 | 0.000133 | 0.00012 | 0.000127 | 0.000101 | 0.000092 | 0.0000833 |

| 10 | 0.000289 | 0.000139 | 0.000111 | 0.000232 | 0.000103 | 0.000154 | 0.000139 | 0.000147 | 0.000116 | 0.000105 | 0.0000967 |

| 11 | 0.000219 | 0.000106 | 0.000084 | 0.000176 | 0.000079 | 0.000118 | 0.000106 | 0.000112 | 0.000089 | 0.000081 | 0.0000733 |

| 12 | 0.00009 | 0.000044 | 0.000035 | 0.000072 | 0.000032 | 0.00005 | 0.000045 | 0.000047 | 0.000037 | 0.000034 | 0.00003 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 1.822104 | 0.91924 | 0.676118 | 1.392781 | 0.210125 | 0.534925 | 0.61302 | 0.57248 | 0.225194 | 0.196833 | 0.624233 |

| 2 | 1.807326 | 0.910481 | 0.669839 | 1.38035 | 0.208012 | 0.529689 | 0.607623 | 0.56747 | 0.223217 | 0.195084 | 0.619827 |

| 3 | 2.1154 | 1.06139 | 0.780626 | 1.61483 | 0.243584 | 0.620552 | 0.71228 | 0.665982 | 0.261883 | 0.229029 | 0.72587 |

| 4 | 2.10937 | 1.05824 | 0.778301 | 1.610066 | 0.242905 | 0.618736 | 0.710184 | 0.664031 | 0.260815 | 0.228099 | 0.723123 |

| 5 | 1.69062 | 0.848426 | 0.624025 | 1.29016 | 0.194717 | 0.495586 | 0.568477 | 0.530534 | 0.208309 | 0.182078 | 0.579567 |

| 6 | 1.64558 | 0.825861 | 0.60728 | 1.255844 | 0.189549 | 0.482486 | 0.553916 | 0.51662 | 0.203005 | 0.177666 | 0.56406 |

| 7 | 1.64375 | 0.82494 | 0.606606 | 1.254294 | 0.189313 | 0.481886 | 0.553226 | 0.515967 | 0.202685 | 0.177383 | 0.563447 |

| 8 | 1.74768 | 0.877021 | 0.644677 | 1.33329 | 0.201133 | 0.511807 | 0.587489 | 0.547783 | 0.21495 | 0.18815 | 0.59914 |

| 9 | 1.86149 | 0.933968 | 0.685921 | 1.42944 | 0.215455 | 0.547872 | 0.628909 | 0.586144 | 0.231409 | 0.202521 | 0.63872 |

| 10 | 1.78459 | 0.894606 | 0.657066 | 1.3691 | 0.206324 | 0.524664 | 0.602642 | 0.561501 | 0.220727 | 0.193142 | 0.612323 |

| 11 | 2.11404 | 1.06072 | 0.780099 | 1.61376 | 0.243448 | 0.620224 | 0.711905 | 0.665644 | 0.261753 | 0.228913 | 0.723473 |

| 12 | 1.96819 | 0.988808 | 0.726622 | 1.50408 | 0.226812 | 0.578106 | 0.663648 | 0.619833 | 0.243294 | 0.213253 | 0.673247 |

| Method Point | DBN | LSTM | SARIMAX | LR | RF | DT | SVM | GRU | XGBOOST | SSRLR | Actual Data |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 4.402809 | 0.996231 | 1.087194 | 4.957531 | 0.753281 | 0.924844 | 1.001538 | 0.829909 | 1.033635 | 0.740985 | 0.650 |

| 2 | 4.527383 | 1.022295 | 1.101331 | 5.090235 | 0.760627 | 0.940943 | 1.015361 | 0.844171 | 1.040605 | 0.747044 | 0.646 |

| 3 | 4.838178 | 1.008571 | 1.225377 | 5.306084 | 0.844195 | 1.01274 | 1.083204 | 0.918764 | 1.162196 | 0.831497 | 0.752 |

| 4 | 9.84792 | 1.645598 | 1.992939 | 9.40809 | 2.022073 | 2.111469 | 2.054901 | 1.659253 | 2.045687 | 2.183267 | 1.840 |

| 5 | 5.580026 | 1.113722 | 1.2569 | 5.837724 | 0.982709 | 1.214348 | 1.236753 | 1.003334 | 1.209144 | 1.024185 | 0.846 |

| 6 | 4.546716 | 0.976813 | 1.05935 | 5.12311 | 0.727287 | 0.904705 | 0.968167 | 0.813176 | 0.986795 | 0.709672 | 0.590 |

| 7 | 4.611737 | 1.04493 | 1.069944 | 5.27021 | 0.740032 | 0.926468 | 1.005123 | 0.829526 | 0.997419 | 0.719465 | 0.590 |

| 8 | 4.975247 | 0.991344 | 1.151328 | 5.511958 | 0.778107 | 0.95712 | 1.009119 | 0.865246 | 1.056523 | 0.755695 | 0.625 |

| 9 | 4.854031 | 1.004369 | 1.159956 | 5.392624 | 0.789248 | 0.961816 | 1.023475 | 0.870084 | 1.078246 | 0.773757 | 0.664 |

| 10 | 9.811275 | 1.671429 | 1.906255 | 9.498042 | 1.959133 | 2.057394 | 1.998976 | 1.598688 | 1.939943 | 2.111271 | 1.732 |

| 11 | 6.211354 | 1.246643 | 1.465467 | 6.482264 | 1.119608 | 1.35283 | 1.388854 | 1.136581 | 1.403961 | 1.159455 | 0.989 |

| 12 | 4.606389 | 1.00431 | 1.149319 | 5.153972 | 0.791093 | 0.961008 | 1.034905 | 0.868796 | 1.091916 | 0.783885 | 0.699 |
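As a hedged illustration of how the tabulated forecasts can be compared against the Actual Data column, the sketch below computes the mean absolute percentage error (MAPE) and root mean square error (RMSE) for four of the methods in the table above. The choice of metrics and the helper names `mape` and `rmse` are assumptions made for illustration; the values are copied from the table, and this is not necessarily the evaluation procedure used by the authors.

```python
import math

# Values copied from the table above (points 1-12).
actual = [0.650, 0.646, 0.752, 1.840, 0.846, 0.590,
          0.590, 0.625, 0.664, 1.732, 0.989, 0.699]

forecasts = {
    "DBN":   [4.402809, 4.527383, 4.838178, 9.84792, 5.580026, 4.546716,
              4.611737, 4.975247, 4.854031, 9.811275, 6.211354, 4.606389],
    "LSTM":  [0.996231, 1.022295, 1.008571, 1.645598, 1.113722, 0.976813,
              1.04493, 0.991344, 1.004369, 1.671429, 1.246643, 1.00431],
    "RF":    [0.753281, 0.760627, 0.844195, 2.022073, 0.982709, 0.727287,
              0.740032, 0.778107, 0.789248, 1.959133, 1.119608, 0.791093],
    "SSRLR": [0.740985, 0.747044, 0.831497, 2.183267, 1.024185, 0.709672,
              0.719465, 0.755695, 0.773757, 2.111271, 1.159455, 0.783885],
}


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (hypothetical helper)."""
    ratios = [abs(p - t) / abs(t) for t, p in zip(y_true, y_pred)]
    return 100.0 * sum(ratios) / len(ratios)


def rmse(y_true, y_pred):
    """Root mean square error, in the units of the data (hypothetical helper)."""
    squared = [(p - t) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


# Map each method name to its (MAPE, RMSE) pair.
scores = {name: (mape(actual, pred), rmse(actual, pred))
          for name, pred in forecasts.items()}

if __name__ == "__main__":
    # Print the methods from lowest to highest MAPE.
    for name, (m, r) in sorted(scores.items(), key=lambda kv: kv[1][0]):
        print(f"{name:6s} MAPE = {m:7.2f} %   RMSE = {r:.4f}")
```

The remaining columns of the table can be added to `forecasts` in the same way to rank all ten methods under the same two metrics.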

| Parametric Statistical Methods Gap |

|---|

| Handling high variability and peaks |

| Non-stationarity of load data |

| Difficulty handling missing data |

| Inaccuracy at different time horizons |

| Difficulty incorporating external variables |

| Inefficiency in real-time forecasting |

| Computational constraints with large datasets |

| Autocorrelation and multicollinearity |

| Risk of overfitting in complex models |

| Capturing seasonality and periodicity |

| Machine Learning Techniques Gap |

|---|

| Generalization challenges across different regions or time periods |

| Need for large, high-quality datasets |

| Hyperparameter tuning complexity |

| Overfitting with complex models |

| Limited effectiveness in incorporating domain knowledge |

| Difficulty interpreting model decisions (black-box nature) |

| Model retraining requirements for real-time data |

| Difficulty handling rare events or outliers |

| High computational requirements |

| Sensitivity to feature selection and engineering |

| Feature | Temperature | Feels-Like | UV Index | Sea Level Pressure | Solar Energy | Solar Radiation | Visibility |

|---|---|---|---|---|---|---|---|

| Score | 7162.332 | 5907.64 | 4932.46 | 4775.704 | 4176.774 | 4151.809 | 55.890 |

| Feature | Temperature | Feels-Like | UV Index | Sea Level Pressure | Solar Energy | Solar Radiation |

|---|---|---|---|---|---|---|

| Score | 3776.295 | 2270.17 | 867.17 | 676.616 | 600.80606 | 284.650 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hassanpouri Baesmat, K.; Regentova, E.E.; Baghzouz, Y. Cloud-Enabled Hybrid, Accurate and Robust Short-Term Electric Load Forecasting Framework for Smart Residential Buildings: Evaluation of Aggregate vs. Appliance-Level Forecasting. Smart Cities 2025, 8, 199. https://doi.org/10.3390/smartcities8060199
