Striking a Pose: DIY Computer Vision Sensor Kit to Measure Public Life Using Pose Estimation Enhanced Action Recognition Model

Highlights

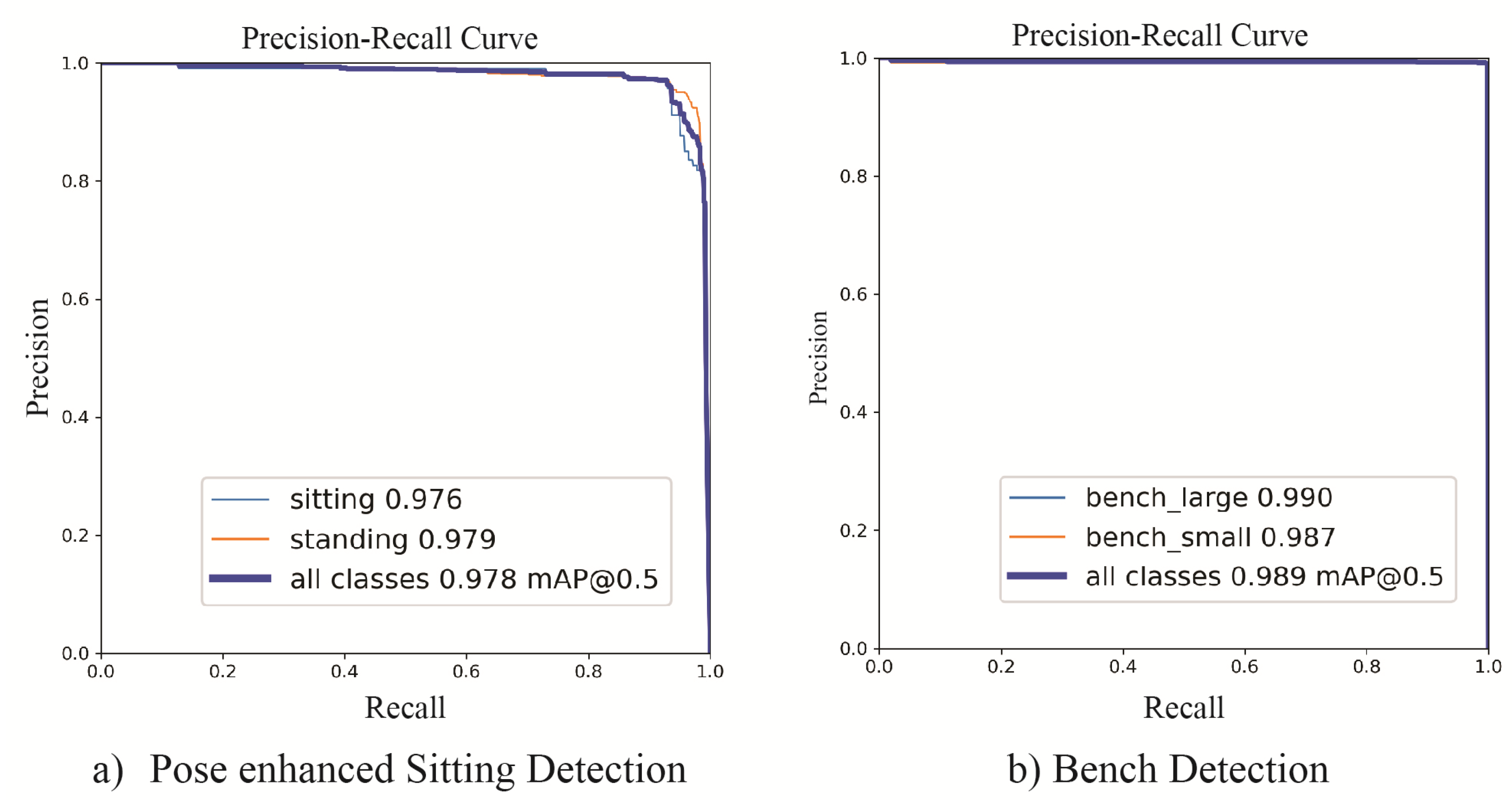

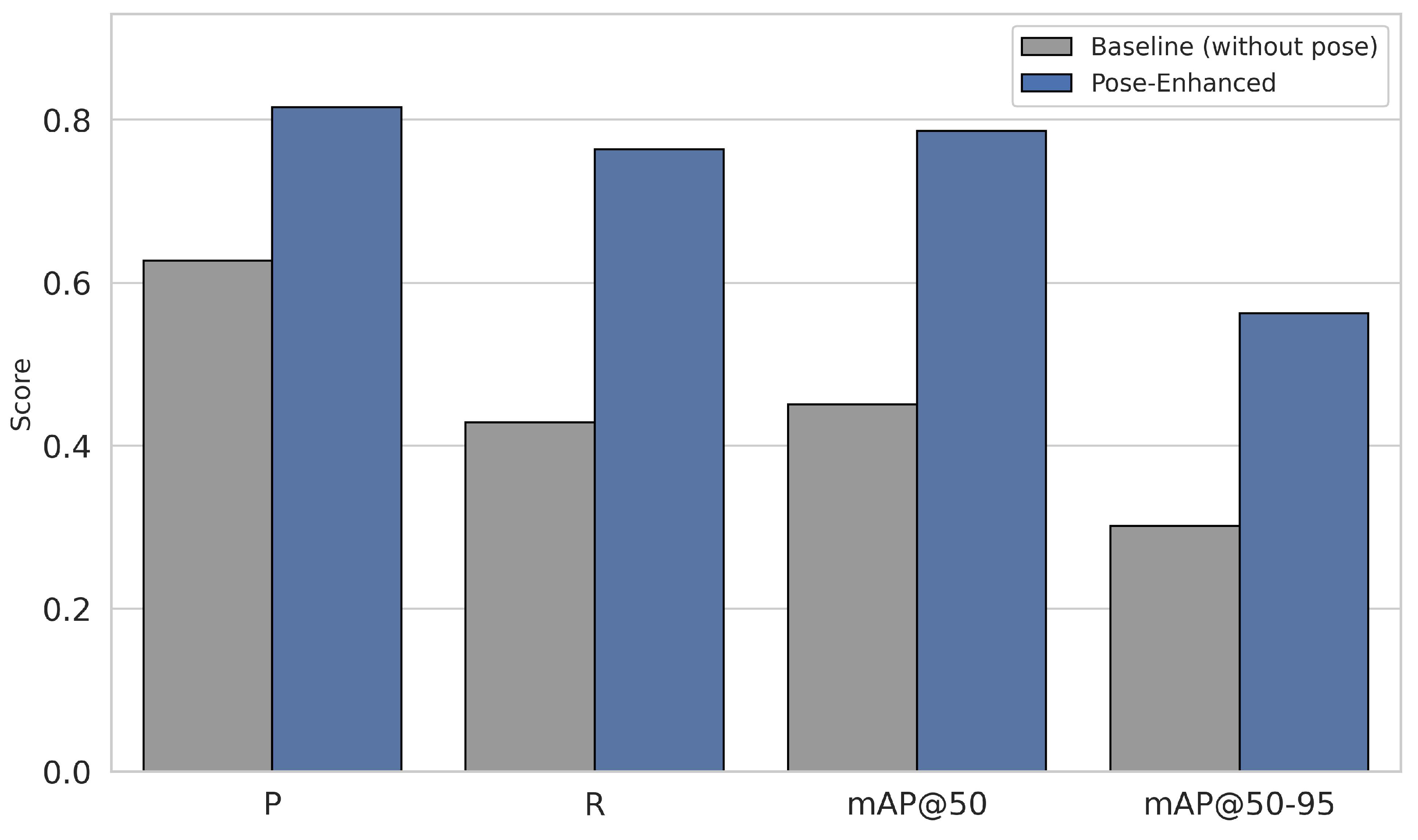

- This study found that integrating a pose estimation model with a traditional computer vision model improves the accuracy and reliability of detecting public-space behaviors such as sitting and socializing. The pose-enhanced sitting recognition model achieved a mean Average Precision (mAP@0.5) of 97.8%, with substantial gains in precision, recall, and generalizability compared to both a baseline model and commercially available vision recognition systems.

- Measurements from the field deployment of movable benches at the University of New South Wales demonstrated a clear behavioral impact. The number of people staying increased by 360%, sitting rose by 1400%, and socializing grew from none to an average of nine individuals per day.

- Combining pose estimation models with standard vision recognition models can improve the recognition of public-space elements and, in turn, support more accurate measurement of public space.

- The Public Life Sensor Kit (PLSK) developed through this research provides a scalable, low-cost, and privacy-conscious framework for real-time behavioral observation. As an open-source and edge-based alternative to commercial sensors, it enables researchers to continuously measure human activity without storing identifiable video. This approach democratizes behavioral observation, enabling local governments and communities to collect high-resolution data ethically.

Abstract

1. Introduction

2. Literature Review

2.1. Historical Context of Research on Public Life

2.2. Computer Vision in Urban Studies and Public Life

2.2.1. Urban Studies Using Data Collected by External Providers

2.2.2. Urban Studies Using Data Collected by the Researchers

2.2.3. Contributions to Public-Life Sensing and Urban Computer Vision

3. Methodology

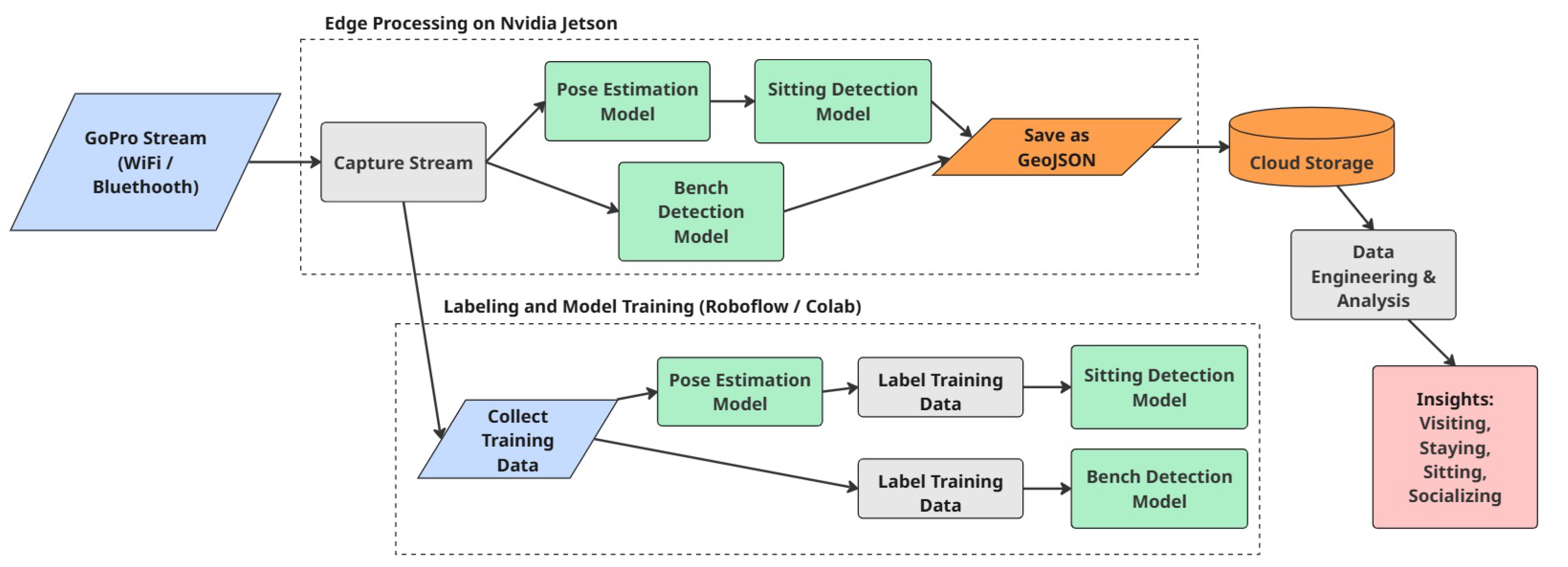

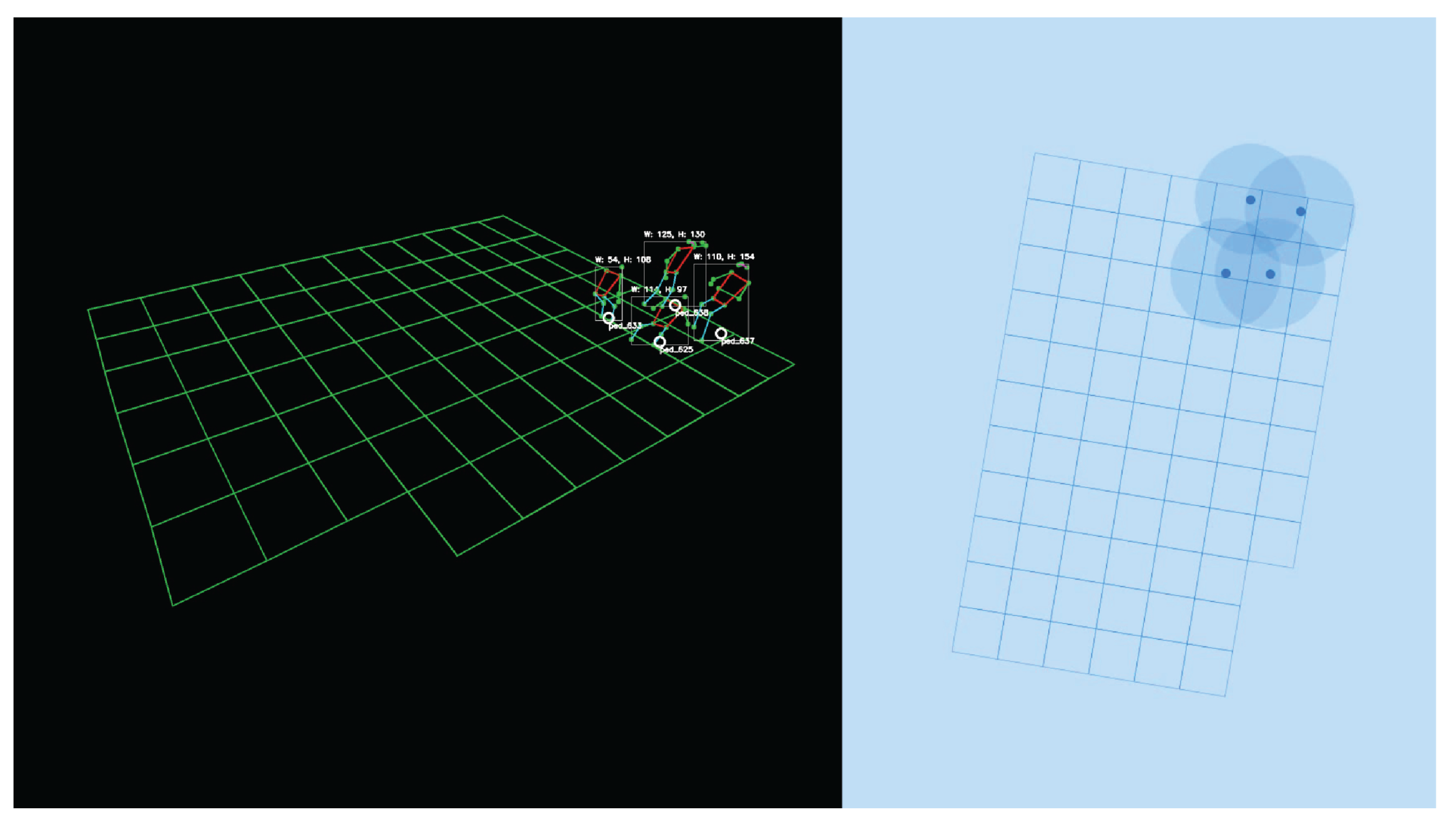

3.1. Public Life Sensor Kit Development

3.1.1. Hardware: Edge Device and Camera System

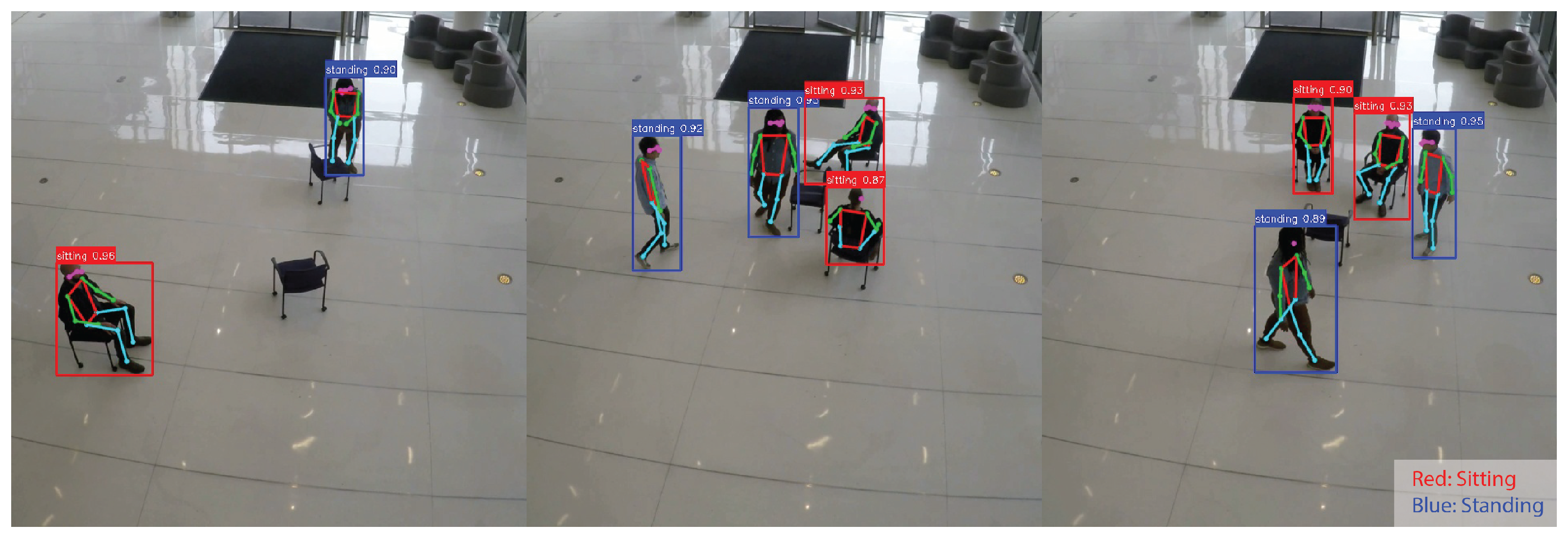

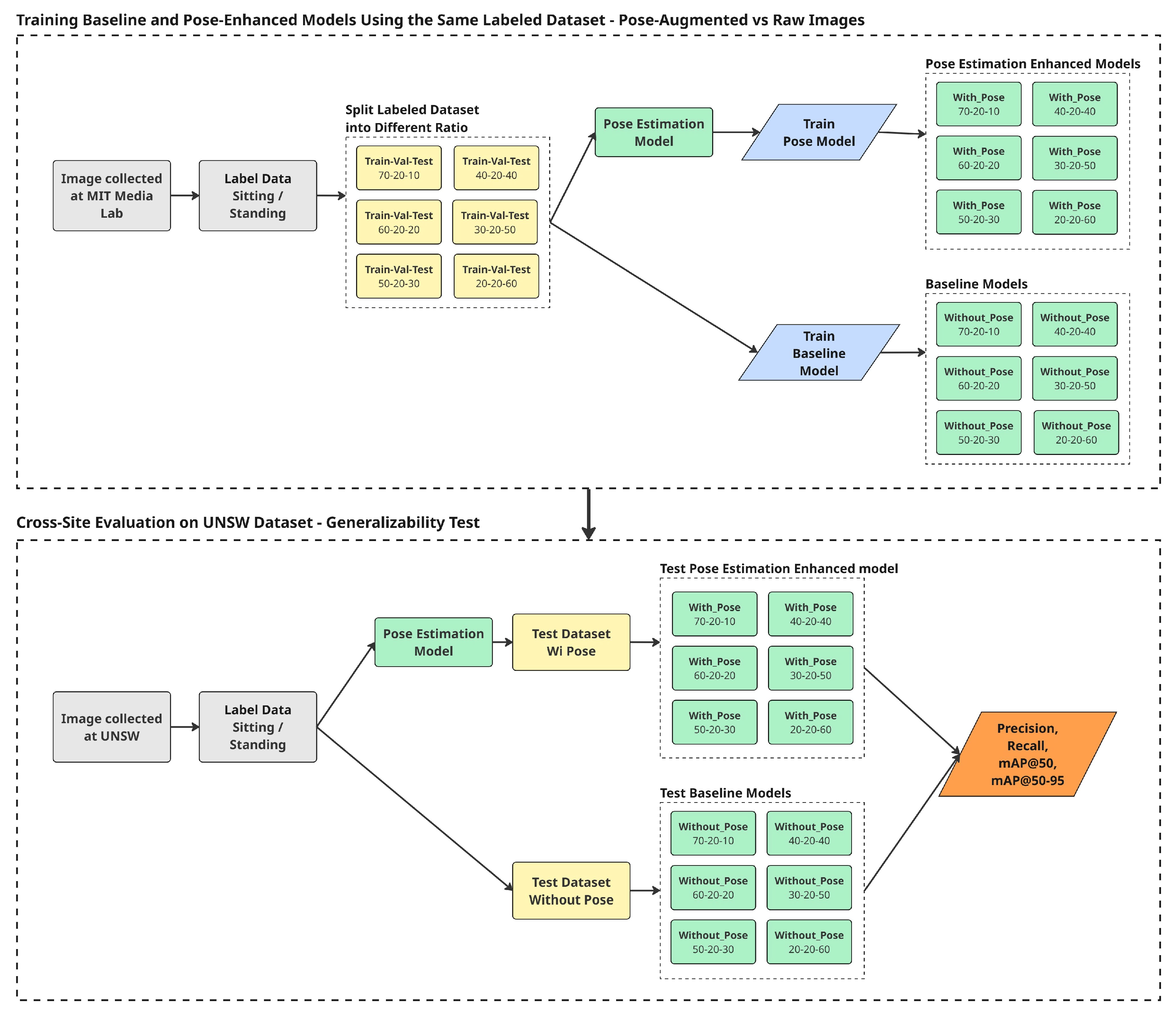

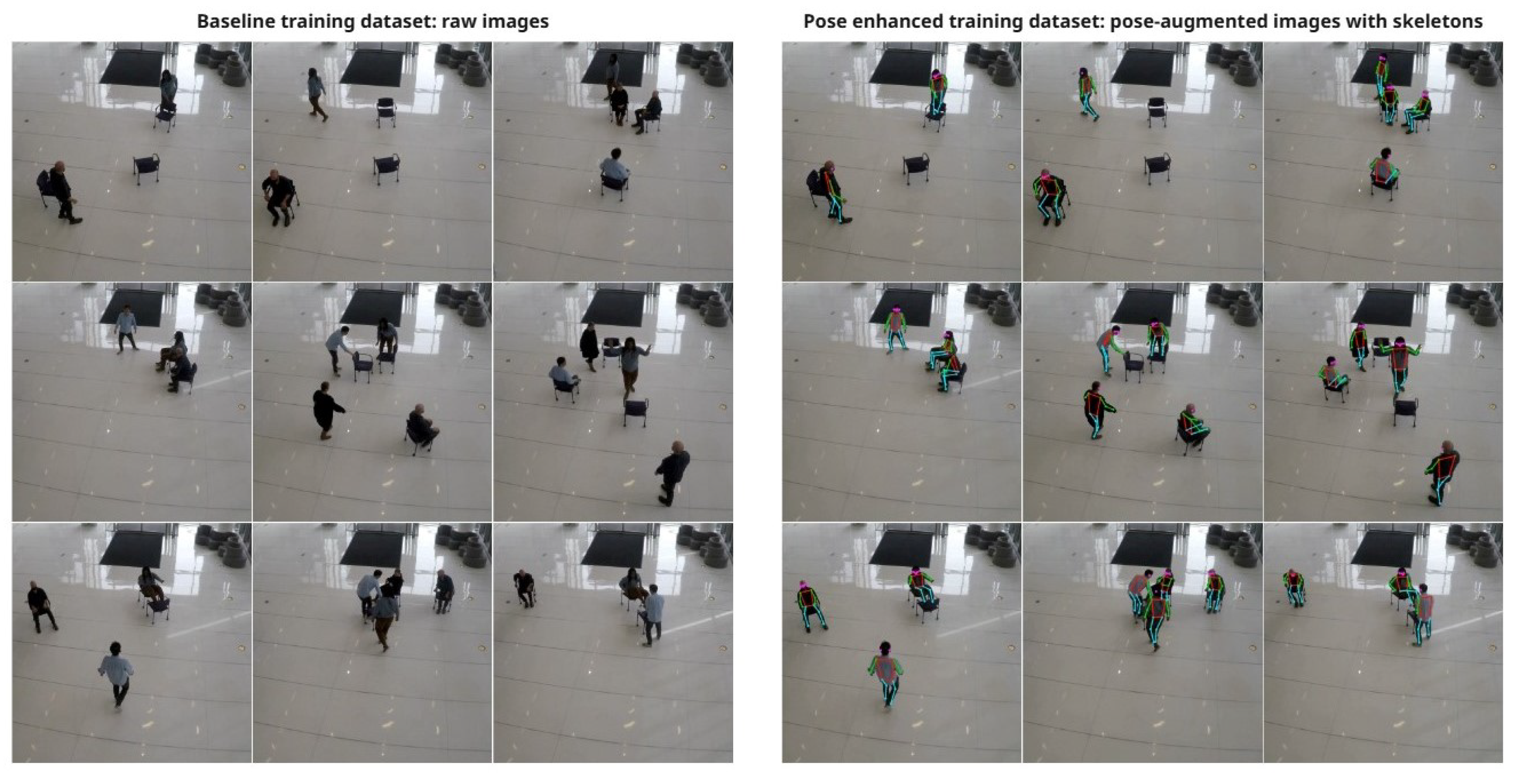

3.1.2. Software: Computer Vision Model for Public Life Studies

3.2. Public Life Analysis

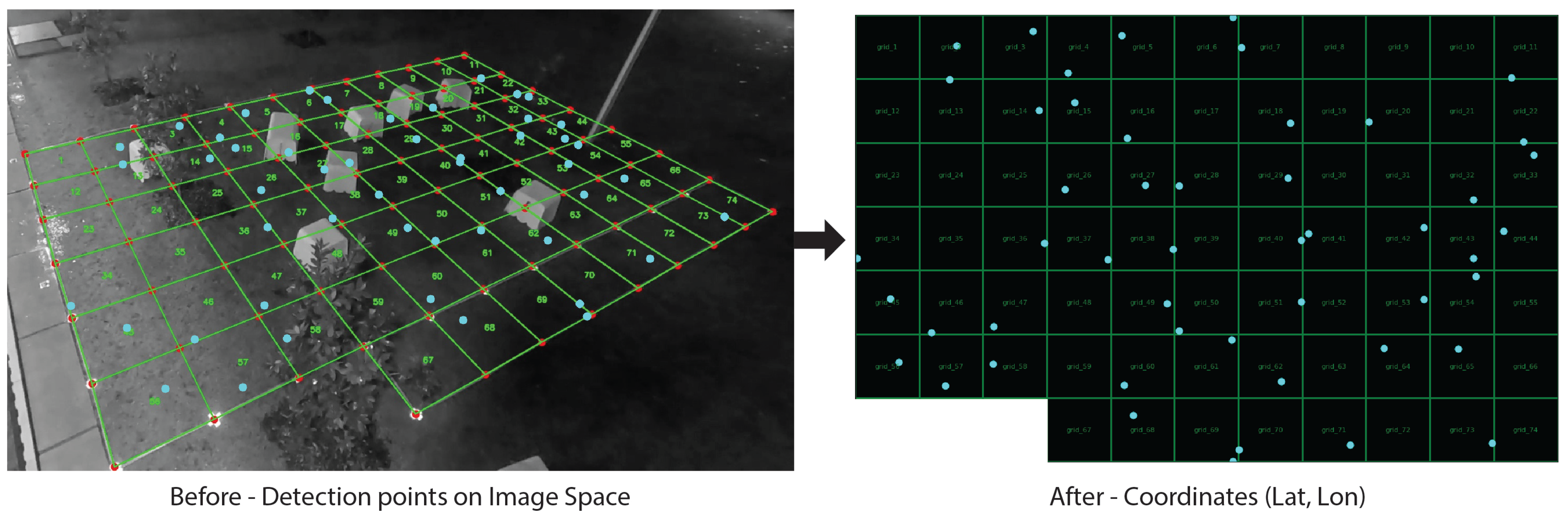

3.2.1. GPS Data Acquisition

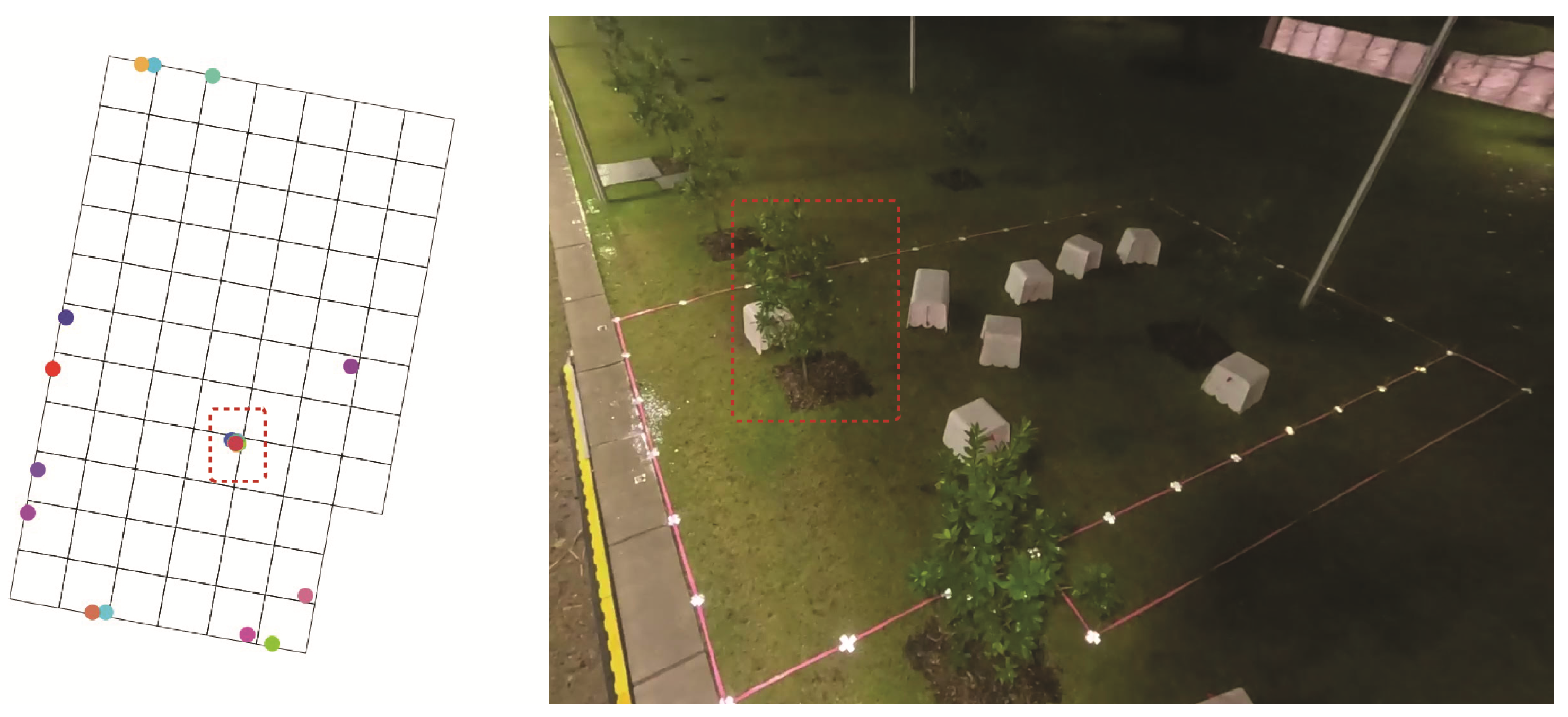

3.2.2. Socializing Behavior Categorization

4. Results

4.1. PLSK Test Deployment

4.1.1. PLSK Model Performance

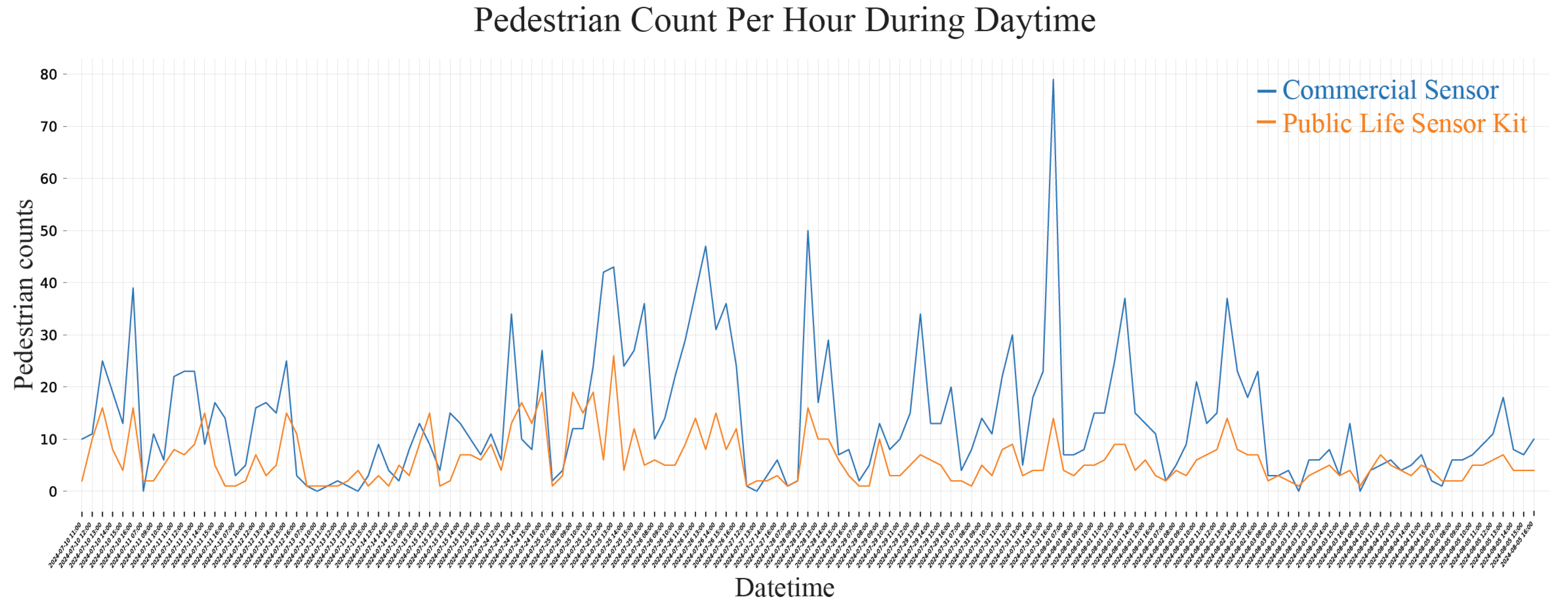

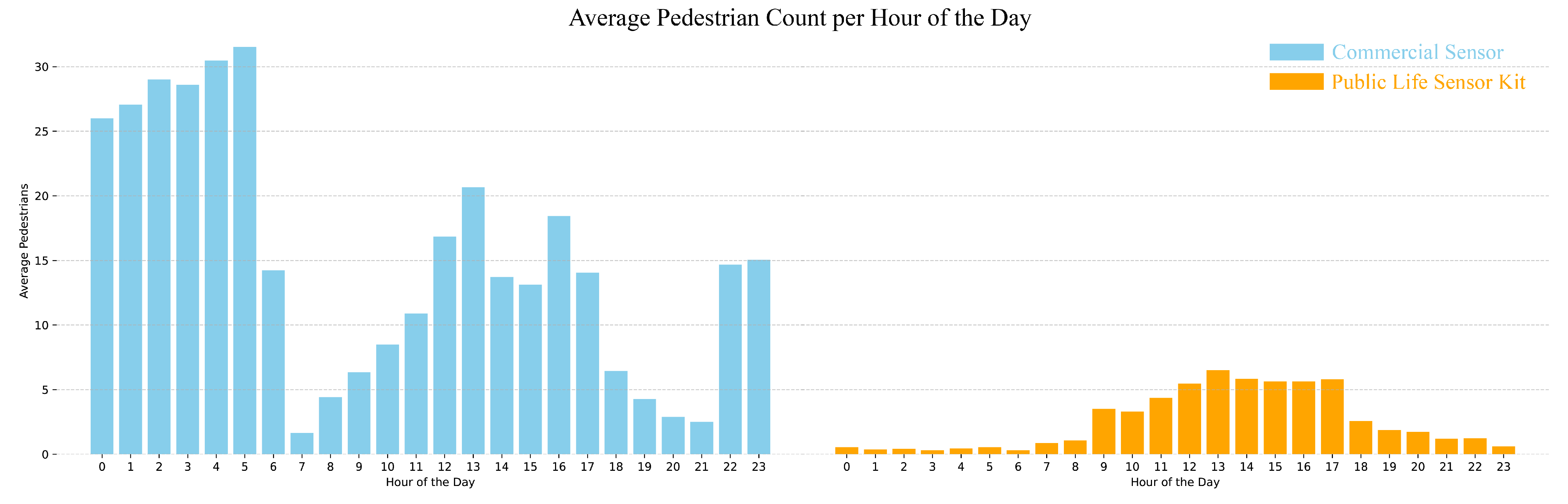

4.1.2. PLSK Comparison with Vivacity Sensor

4.2. Public Life Analysis Result

Results of the Intervention: Changes in Individual Behavior

5. Discussion

5.1. Public Life Measurement and Broader Implications

5.2. Lessons from Comparing PLSK and Commercial Sensor

5.3. Future Research Considerations

5.3.1. Integrating Vision-Language Models with PLSK

5.3.2. Exploring Alternative Approaches to Spatial Mapping

5.3.3. Integrating PLSK with Other Sensor Networks

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CV | Computer Vision |
| SVI | Street View Image |
| PLSK | Public Life Sensor Kit |
| UNSW | University of New South Wales |
| AI | Artificial Intelligence |
| GVI | Green View Index |
| SVF | Sky View Factor |
| MLLM | Multimodal Large Language Model |
| VLM | Vision-Language Model |
| OVD | Open-Vocabulary Object Detection |
| UAV | Unmanned Aerial Vehicle |
| GSV | Google Street View |
| CCTV | Closed-Circuit Television |
| AP | Average Precision |
| P2P | Peer-to-Peer |
| PR | Precision-Recall |
| mAP | Mean Average Precision |
| ST-DBSCAN | Spatio-Temporal Density-Based Spatial Clustering of Applications with Noise |
| SLAM | Simultaneous Localization and Mapping |

References

- Lynch, K. The Image of the City; MIT Press: Cambridge, MA, USA, 1964.

- Whyte, W. The Social Life of Small Urban Spaces; Project for Public Spaces: New York, NY, USA, 1980.

- Gehl, J. Life Between Buildings; Danish Architectural Press: Copenhagen, Denmark, 2011.

- Rosso-Alvarez, J.; Jiménez-Caldera, J.; Campo-Daza, G.; Hernández-Sabié, R.; Caballero-Calvo, A. Integrating Objective and Subjective Thermal Comfort Assessments in Urban Park Design: A Case Study of Monteria, Colombia. Urban Sci. 2025, 9, 139.

- Luo, Z.; Marchi, L.; Gaspari, J. A Systematic Review of Factors Affecting User Behavior in Public Open Spaces Under a Changing Climate. Sustainability 2025, 17, 2724.

- Lopes, H.S.; Remoaldo, P.C.; Vidal, D.G.; Ribeiro, V.; Silva, L.T.; Martín-Vide, J. Sustainable Placemaking and Thermal Comfort Conditions in Urban Spaces: The Case Study of Avenida dos Aliados and Praça da Liberdade (Porto, Portugal). Urban Sci. 2025, 9, 38.

- He, K.; Li, H.; Zhang, H.; Hu, Q.; Yu, Y.; Qiu, W. Revisiting Gehl’s urban design principles with computer vision and webcam data: Associations between public space and public life. Environ. Plan. B Urban Anal. City Sci. 2025, 23998083251328771.

- Salazar-Miranda, A.; Fan, Z.; Baick, M.; Hampton, K.N.; Duarte, F.; Loo, B.P.Y.; Glaeser, E.; Ratti, C. Exploring the social life of urban spaces through AI. Proc. Natl. Acad. Sci. USA 2025, 122, e2424662122.

- Loo, B.P.; Fan, Z. Social interaction in public space: Spatial edges, moveable furniture, and visual landmarks. Environ. Plan. B Urban Anal. City Sci. 2023, 50, 2510–2526.

- Niu, T.; Qing, L.; Han, L.; Long, Y.; Hou, J.; Li, L.; Tang, W.; Teng, Q. Small public space vitality analysis and evaluation based on human trajectory modeling using video data. Build. Environ. 2022, 225, 109563.

- Williams, S.; Ahn, C.; Gunc, H.; Ozgirin, E.; Pearce, M.; Xiong, Z. Evaluating sensors for the measurement of public life: A future in image processing. Environ. Plan. B Urban Anal. City Sci. 2019, 46, 1534–1548.

- Huang, J.; Teng, F.; Yuhao, K.; Jun, L.; Ziyu, L.; Wu, G. Estimating urban noise along road network from street view imagery. Int. J. Geogr. Inf. Sci. 2024, 38, 128–155.

- Zhao, T.; Liang, X.; Tu, W.; Huang, Z.; Biljecki, F. Sensing urban soundscapes from street view imagery. Comput. Environ. Urban Syst. 2023, 99, 101915.

- Lian, T.; Loo, B.P.Y.; Fan, Z. Advances in estimating pedestrian measures through artificial intelligence: From data sources, computer vision, video analytics to the prediction of crash frequency. Comput. Environ. Urban Syst. 2024, 107, 102057.

- Salazar-Miranda, A.; Zhang, F.; Sun, M.; Leoni, P.; Duarte, F.; Ratti, C. Smart curbs: Measuring street activities in real-time using computer vision. Landsc. Urban Plan. 2023, 234, 104715.

- Liu, D.; Wang, R.; Grekousis, G.; Liu, Y.; Lu, Y. Detecting older pedestrians and aging-friendly walkability using computer vision technology and street view imagery. Comput. Environ. Urban Syst. 2023, 105, 102027.

- Koo, B.W.; Hwang, U.; Guhathakurta, S. Streetscapes as part of servicescapes: Can walkable streetscapes make local businesses more attractive? Comput. Environ. Urban Syst. 2023, 106, 102030.

- Qin, K.; Xu, Y.; Kang, C.; Kwan, M.P. A graph convolutional network model for evaluating potential congestion spots based on local urban built environments. Trans. GIS 2020, 24, 1382–1401.

- Tanprasert, T.; Siripanpornchana, C.; Surasvadi, N.; Thajchayapong, S. Recognizing Traffic Black Spots From Street View Images Using Environment-Aware Image Processing and Neural Network. IEEE Access 2020, 8, 121469–121478.

- den Braver, N.R.; Kok, J.G.; Mackenbach, J.D.; Rutter, H.; Oppert, J.M.; Compernolle, S.; Twisk, J.W.R.; Brug, J.; Beulens, J.W.J.; Lakerveld, J. Neighbourhood drivability: Environmental and individual characteristics associated with car use across Europe. Int. J. Behav. Nutr. Phys. Act. 2020, 17, 8.

- Mooney, S.J.; Wheeler-Martin, K.; Fiedler, L.M.; LaBelle, C.M.; Lampe, T.; Ratanatharathorn, A.; Shah, N.N.; Rundle, A.G.; DiMaggio, C.J. Development and Validation of a Google Street View Pedestrian Safety Audit Tool. Epidemiology 2020, 31, 301.

- Li, Y.; Long, Y. Inferring storefront vacancy using mobile sensing images and computer vision approaches. Comput. Environ. Urban Syst. 2024, 108, 102071.

- Qiu, W.; Zhang, Z.; Liu, X.; Li, W.; Li, X.; Xu, X.; Huang, X. Subjective or objective measures of street environment, which are more effective in explaining housing prices? Landsc. Urban Plan. 2022, 221, 104358.

- Yao, Y.; Zhang, J.; Qian, C.; Wang, Y.; Ren, S.; Yuan, Z.; Guan, Q. Delineating urban job-housing patterns at a parcel scale with street view imagery. Int. J. Geogr. Inf. Sci. 2021, 35, 1927–1950.

- Orsetti, E.; Tollin, N.; Lehmann, M.; Valderrama, V.A.; Morató, J. Building Resilient Cities: Climate Change and Health Interlinkages in the Planning of Public Spaces. Int. J. Environ. Res. Public Health 2022, 19, 1355.

- Hoover, F.A.; Lim, T.C. Examining privilege and power in US urban parks and open space during the double crises of antiblack racism and COVID-19. Socio-Ecol. Pract. Res. 2021, 3, 55–70.

- Huang, Y.; Napawan, N.C. “Separate but Equal?” Understanding Gender Differences in Urban Park Usage and Its Implications for Gender-Inclusive Design. Landsc. J. 2021, 40, 1–16.

- Kaźmierczak, A. The contribution of local parks to neighbourhood social ties. Landsc. Urban Plan. 2013, 109, 31–44.

- Credit, K.; Mack, E. Place-making and performance: The impact of walkable built environments on business performance in Phoenix and Boston. Environ. Plan. B Urban Anal. City Sci. 2019, 46, 264–285.

- Gehl, J.; Svarre, B. How to Study Public Life; Island Press/Center for Resource Economics: Washington, DC, USA, 2013.

- Gehl Institute; San Francisco Planning Department; Copenhagen Municipality City Data Department; Seattle Department of Transportation. Public Life Data Protocol (Version: Beta); Technical Report; Gehl Institute: New York, NY, USA, 2017.

- Zhang, F.; Salazar-Miranda, A.; Duarte, F.; Vale, L.; Hack, G.; Chen, M.; Liu, Y.; Batty, M.; Ratti, C. Urban Visual Intelligence: Studying Cities with Artificial Intelligence and Street-Level Imagery. Ann. Am. Assoc. Geogr. 2024, 114, 876–897.

- Lu, Y. Using Google Street View to investigate the association between street greenery and physical activity. Landsc. Urban Plan. 2019, 191, 103435.

- He, H.; Lin, X.; Yang, Y.; Lu, Y. Association of street greenery and physical activity in older adults: A novel study using pedestrian-centered photographs. Urban For. Urban Green. 2020, 55, 126789.

- Liu, L.; Sevtsuk, A. Clarity or confusion: A review of computer vision street attributes in urban studies and planning. Cities 2024, 150, 105022.

- Sun, Q.C.; Macleod, T.; Both, A.; Hurley, J.; Butt, A.; Amati, M. A human-centred assessment framework to prioritise heat mitigation efforts for active travel at city scale. Sci. Total Environ. 2021, 763, 143033.

- Yang, L.; Driscol, J.; Sarigai, S.; Wu, Q.; Lippitt, C.D.; Morgan, M. Towards Synoptic Water Monitoring Systems: A Review of AI Methods for Automating Water Body Detection and Water Quality Monitoring Using Remote Sensing. Sensors 2022, 22, 2416.

- Cai, J.; Tao, L.; Li, Y. CM-UNet++: A Multi-Level Information Optimized Network for Urban Water Body Extraction from High-Resolution Remote Sensing Imagery. Remote Sens. 2025, 17, 980.

- Bazzett, D.; Marxen, L.; Wang, R. Advancing regional flood mapping in a changing climate: A HAND-based approach for New Jersey with innovations in catchment analysis. J. Flood Risk Manag. 2025, 18, e13033.

- Fuentes, S.; Tongson, E.; Gonzalez Viejo, C. Urban Green Infrastructure Monitoring Using Remote Sensing from Integrated Visible and Thermal Infrared Cameras Mounted on a Moving Vehicle. Sensors 2021, 21, 295.

- Hosseini, M.; Cipriano, M.; Eslami, S.; Hodczak, D.; Liu, L.; Sevtsuk, A.; Melo, G.d. ELSA: Evaluating Localization of Social Activities in Urban Streets using Open-Vocabulary Detection. arXiv 2024, arXiv:2406.01551.

- Li, J.; Xie, C.; Wu, X.; Wang, B.; Leng, D. What Makes Good Open-Vocabulary Detector: A Disassembling Perspective. arXiv 2023, arXiv:2309.00227.

- Zhang, J.; Li, Y.; Fukuda, T.; Wang, B. Urban safety perception assessments via integrating multimodal large language models with street view images. Cities 2025, 165, 106122.

- Zhou, Q.; Zhang, J.; Zhu, Z. Evaluating Urban Visual Attractiveness Perception Using Multimodal Large Language Model and Street View Images. Buildings 2025, 15, 2970.

- Guo, G.; Qiu, X.; Pan, Z.; Yang, Y.; Xu, L.; Cui, J.; Zhang, D. YOLOv10-DSNet: A Lightweight and Efficient UAV-Based Detection Framework for Real-Time Small Target Monitoring in Smart Cities. Smart Cities 2025, 8, 158.

- Balivada, S.; Gao, J.; Sha, Y.; Lagisetty, M.; Vichare, D. UAV-Based Transport Management for Smart Cities Using Machine Learning. Smart Cities 2025, 8, 154.

- Marasinghe, R.; Yigitcanlar, T.; Mayere, S.; Washington, T.; Limb, M. Computer vision applications for urban planning: A systematic review of opportunities and constraints. Sustain. Cities Soc. 2024, 100, 105047.

- Garrido-Valenzuela, F.; Cats, O.; van Cranenburgh, S. Where are the people? Counting people in millions of street-level images to explore associations between people’s urban density and urban characteristics. Comput. Environ. Urban Syst. 2023, 102, 101971.

- Ito, K.; Biljecki, F. Assessing bikeability with street view imagery and computer vision. Transp. Res. Part C Emerg. Technol. 2021, 132, 103371.

- Mohanaprakash, T.A.; Somu, C.S.; Nirmalrani, V.; Vyshnavi, K.; Sasikumar, A.N.; Shanthi, P. Detection of Abnormal Human Behavior using YOLO and CNN for Enhanced Surveillance. In Proceedings of the 2024 5th International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 18–20 September 2024; pp. 1823–1828.

- Mahajan, R.C.; Pathare, N.K.; Vyas, V. Video-based Anomalous Activity Detection Using 3D-CNN and Transfer Learning. In Proceedings of the 2022 IEEE 7th International Conference for Convergence in Technology (I2CT), Mumbai, India, 7–9 April 2022; pp. 1–6.

- He, Y.; Qin, Y.; Chen, L.; Zhang, P.; Ben, X. Efficient abnormal behavior detection with adaptive weight distribution. Neurocomputing 2024, 600, 128187.

- Yang, Y.; Angelini, F.; Naqvi, S.M. Pose-driven human activity anomaly detection in a CCTV-like environment. IET Image Process. 2023, 17, 674–686.

- Thyagarajmurthy, A.; Ninad, M.G.; Rakesh, B.G.; Niranjan, S.; Manvi, B. Anomaly Detection in Surveillance Video Using Pose Estimation. In Proceedings of the Emerging Research in Electronics, Computer Science and Technology: Proceedings of International Conference, ICERECT 2018, Mandya, India, 23–24 August 2018; pp. 753–766.

- Gravitz-Sela, S.; Shach-Pinsly, D.; Bryt, O.; Plaut, P. Leveraging City Cameras for Human Behavior Analysis in Urban Parks: A Smart City Perspective. Sustainability 2025, 17, 865.

- Kim, S.K.; Chan, I.C. Novel Machine Learning-Based Smart City Pedestrian Road Crossing Alerts. Smart Cities 2025, 8, 114.

- Dinh, Q.M.; Ho, M.K.; Dang, A.Q.; Tran, H.P. TrafficVLM: A Controllable Visual Language Model for Traffic Video Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024.

- Chen, C.; Wang, W. Jetson Nano-Based Subway Station Area Crossing Detection. In Proceedings of the International Conference on Artificial Intelligence in China, Tianjin, China, 29 November 2024; Wang, W., Mu, J., Liu, X., Na, Z.N., Eds.; Springer: Singapore, 2024; pp. 627–635.

- Sarvajcz, K.; Ari, L.; Menyhart, J. AI on the Road: NVIDIA Jetson Nano-Powered Computer Vision-Based System for Real-Time Pedestrian and Priority Sign Detection. Appl. Sci. 2024, 14, 1440.

- Wang, Y.; Zou, R.; Chen, Y.; Gao, Z. Research on Pedestrian Detection Based on Jetson Xavier NX Platform and YOLOv4. In Proceedings of the 2023 4th International Symposium on Computer Engineering and Intelligent Communications (ISCEIC), Nanjing, China, 18–20 August 2023; pp. 373–377.

- Kono, V. Viva’s Peter Mildon to Present at NVIDIA GTC: Overcoming Commuting Conditions with Computer Vision; Vivacity Labs: London, UK, 2024.

- NVIDIA Technical Blog. Metropolis Spotlight: Sighthound Enhances Traffic Safety with NVIDIA GPU-Accelerated AI Technologies; NVIDIA: Santa Clara, CA, USA, 2021.

- Plastiras, G.; Terzi, M.; Kyrkou, C.; Theocharides, T. Edge Intelligence: Challenges and Opportunities of Near-Sensor Machine Learning Applications. In Proceedings of the 2018 IEEE 29th International Conference on Application-specific Systems, Architectures and Processors (ASAP), Milan, Italy, 10–12 July 2018; pp. 1–7, ISSN 2160-052X.

- Wang, F.; Zhang, M.; Wang, X.; Ma, X.; Liu, J. Deep Learning for Edge Computing Applications: A State-of-the-Art Survey. IEEE Access 2020, 8, 58322–58336.

- NVIDIA. Robotics & Edge AI|NVIDIA Jetson. Available online: https://marketplace.nvidia.com/en-us/enterprise/robotics-edge/?limit=15 (accessed on 19 October 2025).

- Google Cloud. GPU Pricing. Available online: https://cloud.google.com/products/compute/gpus-pricing?hl=en (accessed on 19 October 2025).

- Iqbal, U.; Davies, T.; Perez, P. A Review of Recent Hardware and Software Advances in GPU-Accelerated Edge-Computing Single-Board Computers (SBCs) for Computer Vision. Sensors 2024, 24, 4830.

- Byzkrovnyi, O.; Smelyakov, K.; Chupryna, A.; Lanovyy, O. Comparison of Object Detection Algorithms for the Task of Person Detection on Jetson TX2 NX Platform. In Proceedings of the 2024 IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream), Vilnius, Lithuania, 25 April 2024; pp. 1–6, ISSN 2690-8506.

- Nguyen, H.H.; Tran, D.N.N.; Jeon, J.W. Towards Real-Time Vehicle Detection on Edge Devices with Nvidia Jetson TX2. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics—Asia (ICCE-Asia), Seoul, Republic of Korea, 26–28 April 2020; pp. 1–4.

- Afifi, M.; Ali, Y.; Amer, K.; Shaker, M.; ElHelw, M. Robust Real-time Pedestrian Detection in Aerial Imagery on Jetson TX2. arXiv 2019, arXiv:1905.06653.

- Civic Data Design Lab. @SQ9: An MIT DUSP 11.458 Project, 2019. Available online: https://sq9.mit.edu/index.html (accessed on 19 October 2025).

- Zhou, J.; Wang, Z.; Meng, J.; Liu, S.; Zhang, J.; Chen, S. Human Interaction Recognition with Skeletal Attention and Shift Graph Convolution. In Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022; pp. 1–8, ISSN 2161-4407.

- Ingwersen, C.K.; Mikkelstrup, C.M.; Jensen, J.N.; Hannemose, M.R.; Dahl, A.B. SportsPose—A Dynamic 3D Sports Pose Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5219–5228.

- Fani, M.; Neher, H.; Clausi, D.; Wong, A.; Zelek, J. Hockey Action Recognition via Integrated Stacked Hourglass Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017.

- Dai, Y.; Liu, L.; Wang, K.; Li, M.; Yan, X. Using computer vision and street view images to assess bus stop amenities. Comput. Environ. Urban Syst. 2025, 117, 102254.

- Roboflow. Roboflow and Ultralytics Partner to Streamline YOLOv5 MLOps, 2021. Available online: https://blog.roboflow.com/ultralytics-partnership/ (accessed on 19 October 2025).

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niterói, Brazil, 1–3 July 2020; pp. 237–242, ISSN 2157-8702.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Google Research. Open Images 2019—Object Detection, 2019. Available online: https://www.kaggle.com/competitions/open-images-2019-object-detection (accessed on 19 October 2025).

- Viloria, I.R. Creating Better Data: How To Map Homography, 2024. Available online: https://blogarchive.statsbomb.com/articles/football/creating-better-data-how-to-map-homography/ (accessed on 19 October 2025).

- Prakash, H.; Shang, J.C.; Nsiempba, K.M.; Chen, Y.; Clausi, D.A.; Zelek, J.S. Multi Player Tracking in Ice Hockey with Homographic Projections. arXiv 2024, arXiv:2405.13397.

- Pandya, Y.; Nandy, K.; Agarwal, S. Homography Based Player Identification in Live Sports. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5209–5218.

- OpenCV. OpenCV: Geometric Image Transformations, 2024. Available online: https://docs.opencv.org/4.x/da/d54/group__imgproc__transform.html (accessed on 19 October 2025).

- Barrett, L.F. How Emotions Are Made: The Secret Life of the Brain; HarperCollins: New York, NY, USA, 2017.

- Schubert, E.; Sander, J.; Ester, M.; Kriegel, H.P.; Xu, X. DBSCAN Revisited, Revisited: Why and How You Should (Still) Use DBSCAN. ACM Trans. Database Syst. 2017, 42, 1–19.

- Hall, E.T. A System for the Notation of Proxemic Behavior. Am. Anthropol. 1963, 65, 1003–1026.

- Patterson, M. Spatial Factors in Social Interactions. Hum. Relations 1968, 21, 351–361.

- Birant, D.; Kut, A. ST-DBSCAN: An algorithm for clustering spatial–temporal data. Data Knowl. Eng. 2007, 60, 208–221.

- Cakmak, E.; Plank, M.; Calovi, D.S.; Jordan, A.; Keim, D. Spatio-temporal clustering benchmark for collective animal behavior. In Proceedings of the 1st ACM SIGSPATIAL International Workshop on Animal Movement Ecology and Human Mobility, New York, NY, USA, 2 November 2021; HANIMOB ’21. pp. 5–8.

- Ou, Z.; Wang, B.; Meng, B.; Shi, C.; Zhan, D. Research on Resident Behavioral Activities Based on Social Media Data: A Case Study of Four Typical Communities in Beijing. Information 2024, 15, 392.

- NVIDIA. NanoVLM—NVIDIA Jetson AI Lab, 2024. Available online: https://www.jetson-ai-lab.com/tutorial_nano-vlm.html (accessed on 19 October 2025).

- Zhang, Q.; Gou, S.; Li, W. Visual Perception System for Autonomous Driving. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024.

- Wang, K.; Yao, X.; Ma, N.; Ran, G.; Liu, M. DMOT-SLAM: Visual SLAM in dynamic environments with moving object tracking. Meas. Sci. Technol. 2024, 35, 096302.

- Singh, B.; Kumar, P.; Kaur, N. Advancements and Challenges in Visual-Only Simultaneous Localization and Mapping (V-SLAM): A Systematic Review. In Proceedings of the 2024 2nd International Conference on Sustainable Computing and Smart Systems (ICSCSS), Virtually, 10–12 July 2024; pp. 116–123.

| Field | Example | Description |
|---|---|---|
| type | Feature | Defines the GeoJSON object as an individual spatial feature. |
| geometry.type | Point | Geometry type representing a single detected person as a point feature. |
| geometry.coordinates | [1059, 685] | Point coordinates (x, y) in image space corresponding to the estimated foot-ground contact position, derived from lower-body keypoints obtained by pose estimation. |
| properties.category | standing | Classified behavioral category (e.g., sitting or standing). |
| properties.confidence | 0.6397 | Model confidence score associated with the detection. |
| properties.timestamp | 2024-06-19T13:51:12.279838 | Timestamp indicating when the detection occurred. |
| properties.objectID | 6 | Unique identifier assigned to the tracked individual across frames. |
| properties.gridId | 7 | Spatial grid identifier indicating the analysis zone in which the point falls. |
| properties.keypoints | [[x, y, c], …] | Array of 17 human body keypoints in COCO format, each containing image coordinates (x, y) and a confidence value (c). |
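A detection record with these fields can be sketched in Python. This is a minimal illustration under stated assumptions, not the PLSK implementation: the foot-ground point is assumed to be the mean of the two COCO ankle keypoints (indices 15 and 16) that pass a hypothetical confidence threshold `min_conf`, and `gridId` is computed on a hypothetical uniform grid (`cell_w`, `cell_h`, `n_cols`), so it will not reproduce the zone numbering used in the study. Only coordinates, labels, and keypoints are written out; no image pixels are stored.

```python
import json
from datetime import datetime

# COCO 17-keypoint layout: indices 15 and 16 are the left and right ankles.
LEFT_ANKLE, RIGHT_ANKLE = 15, 16


def foot_point(keypoints, min_conf=0.3):
    """Estimate the foot-ground contact point as the mean of confident ankle keypoints.

    keypoints: 17 [x, y, c] triples in COCO order (image-space pixels).
    Returns an (x, y) tuple of ints, or None if neither ankle passes min_conf.
    min_conf is a hypothetical threshold chosen for illustration.
    """
    ankles = [keypoints[i] for i in (LEFT_ANKLE, RIGHT_ANKLE) if keypoints[i][2] >= min_conf]
    if not ankles:
        return None
    x = sum(k[0] for k in ankles) / len(ankles)
    y = sum(k[1] for k in ankles) / len(ankles)
    return round(x), round(y)


def grid_id(x, y, cell_w, cell_h, n_cols):
    """Hypothetical uniform grid over the image, cells numbered row-major from 0."""
    return int(y // cell_h) * n_cols + int(x // cell_w)


def to_feature(keypoints, category, confidence, object_id, timestamp,
               cell_w=320, cell_h=240, n_cols=6):
    """Build one GeoJSON Feature for a tracked person; returns None if no foot point."""
    point = foot_point(keypoints)
    if point is None:
        return None
    x, y = point
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [x, y]},
        "properties": {
            "category": category,
            "confidence": round(confidence, 4),
            "timestamp": timestamp.isoformat(),
            "objectID": object_id,
            "gridId": grid_id(x, y, cell_w, cell_h, n_cols),
            "keypoints": keypoints,
        },
    }


if __name__ == "__main__":
    # Synthetic keypoints: only the two ankles are visible.
    kps = [[0.0, 0.0, 0.0] for _ in range(17)]
    kps[LEFT_ANKLE] = [1050.0, 680.0, 0.9]
    kps[RIGHT_ANKLE] = [1068.0, 690.0, 0.8]
    feature = to_feature(kps, "standing", 0.6397, 6,
                         datetime(2024, 6, 19, 13, 51, 12, 279838))
    print(json.dumps(feature))
```

Averaging both ankles when available keeps the point stable when one foot is raised mid-stride; falling back to a single ankle, or dropping the detection when neither is confident, is one possible design choice among several.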

| Metric | Sitting Action Recognition | Bench Detection |
|---|---|---|
| mAP * (%) | 97.8 | 98.9 |

| Class/Metric | Sitting Action Recognition (This Study) | Street Activity Detection Model [15] |
|---|---|---|
| Sitting/Stayer | 0.976 | 0.48 |
| Standing/Passerby | 0.979 | 0.87 |
| Car | – | 0.82 |
| Micro-mobility | – | 0.56 |
| Overall mAP@0.50 | 0.978 | 0.68 |
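Assuming the overall mAP@0.50 is the unweighted mean of the per-class AP values (the usual PASCAL VOC-style convention), the overall row can be checked against the per-class rows with a short sketch. The `iou` and `is_match` helpers are illustrative only; they show what the "@0.50" threshold means for deciding whether a predicted box counts as a true positive, and are not taken from the study's code.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def is_match(pred_box, gt_box, threshold=0.5):
    """At mAP@0.50 a prediction is a true positive only if IoU >= 0.5."""
    return iou(pred_box, gt_box) >= threshold


def mean_ap(per_class_ap):
    """mAP as the unweighted mean of per-class AP values at one IoU threshold."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Per-class AP values copied from the comparison table.
this_study = {"Sitting/Stayer": 0.976, "Standing/Passerby": 0.979}
street_model = {"Sitting/Stayer": 0.48, "Standing/Passerby": 0.87,
                "Car": 0.82, "Micro-mobility": 0.56}

if __name__ == "__main__":
    print(f"This study: {mean_ap(this_study):.4f}")
    print(f"Street activity model: {mean_ap(street_model):.4f}")
```

The means come out at 0.9775 and 0.6825, which round to the reported 0.978 and 0.68. The two overall values average over different class sets (two classes versus four), so the Sitting/Stayer and Standing/Passerby rows give the more direct per-class comparison.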

| Metric | Pose-Enhanced Mean | Baseline Mean | Improvement (%) | t Value | p Value |
|---|---|---|---|---|---|
| Precision | 0.815 | 0.627 | +29.9 | 5.131 | <0.001 |
| Recall | 0.764 | 0.429 | +78.2 | 4.911 | <0.001 |
| mAP@0.50 | 0.786 | 0.451 | +74.5 | 5.685 | <0.001 |
| mAP@0.50:0.95 | 0.562 | 0.302 | +86.5 | 5.284 | <0.001 |

| Behavior | Before Intervention | After Intervention | Change (%) |
|---|---|---|---|
| Visiting | 55 | 63 | +14 |
| Staying ¹ | 5 | 23 | +360 |
| Sitting | 1 | 15 | +1400 |
| Socializing ² | – | 9 | – |
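The Change (%) column, and the 360% and 1400% figures in the Highlights, follow from the standard relative-change formula, 100 × (after − before) / before. A minimal sketch using the counts from the before/after table, with `None` standing in for the "–" cell where no baseline was recorded:

```python
def percent_change(before, after):
    """Relative change in percent; None when there is no non-zero baseline."""
    if not before:  # covers None (not recorded) and 0 (no baseline to divide by)
        return None
    # Multiply before dividing so whole-number results stay exact in floating point.
    return (after - before) * 100 / before


# Counts from the before/after table; None marks the "–" cell.
counts = {
    "Visiting": (55, 63),
    "Staying": (5, 23),
    "Sitting": (1, 15),
    "Socializing": (None, 9),
}

if __name__ == "__main__":
    for behavior, (before, after) in counts.items():
        change = percent_change(before, after)
        label = "n/a" if change is None else f"{change:+.1f}%"
        print(f"{behavior}: {before} -> {after} ({label})")
```

Staying and Sitting reproduce the reported +360% and +1400% exactly. Visiting works out to about +14.5%, which the table lists as +14. Socializing has no baseline count, so its relative change is undefined rather than infinite.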

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Williams, S.; Kang, M. Striking a Pose: DIY Computer Vision Sensor Kit to Measure Public Life Using Pose Estimation Enhanced Action Recognition Model. Smart Cities 2025, 8, 183. https://doi.org/10.3390/smartcities8060183

Williams S, Kang M. Striking a Pose: DIY Computer Vision Sensor Kit to Measure Public Life Using Pose Estimation Enhanced Action Recognition Model. Smart Cities. 2025; 8(6):183. https://doi.org/10.3390/smartcities8060183

Chicago/Turabian Style: Williams, Sarah, and Minwook Kang. 2025. "Striking a Pose: DIY Computer Vision Sensor Kit to Measure Public Life Using Pose Estimation Enhanced Action Recognition Model" Smart Cities 8, no. 6: 183. https://doi.org/10.3390/smartcities8060183

APA Style: Williams, S., & Kang, M. (2025). Striking a Pose: DIY Computer Vision Sensor Kit to Measure Public Life Using Pose Estimation Enhanced Action Recognition Model. Smart Cities, 8(6), 183. https://doi.org/10.3390/smartcities8060183