1.2. Literature Review

Studies that are relevant to the topic of abnormal load variation forecasting can be summarized into the following three threads:

The

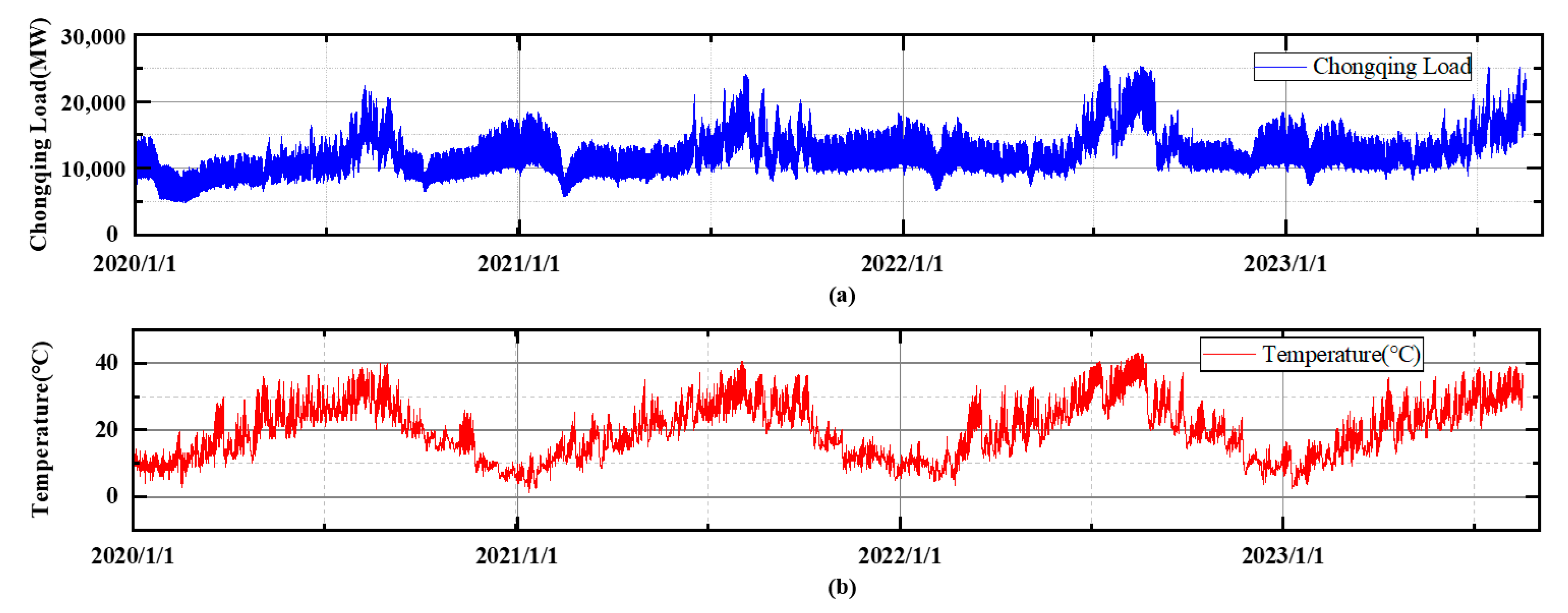

first thread focuses on the feature engineering process of the forecasting problem. In particular, weather features such as temperature and humidity have been extensively studied because weather conditions are highly correlated with the power load. Fay et al. [

3] analyze the influence of weather forecasting errors on the load forecasting performances. In [

4], the Heat Index is introduced by Chu et al. as a key input parameter for the load forecasting model. The Heat Index combines temperature and humidity to determine the apparent temperature, which is considered highly correlated with air conditioning loads and therefore can benefit the load forecasting model. Yu et al. [

5] establish a two-level Intelligent Feature Engineering (IFE) framework to assist the short-term load forecasting model. Weather features are processed in the first-level IFE to reflect the load-weather dependency. Wang et al. [

6] focus on the challenging forecasting problem of individual residential load. Meteorological variables are analyzed and introduced to the Long Short-Term Memory model to achieve forecasting. Instead of focusing on the common load–temperature dependence, Xie et al. in [

7] study the impact of humidity on the power load and find that relative humidity is another important driving factor for power load, especially in warm months. A systematic approach is proposed to identify a group of humidity-based variables, based on which the load forecasting accuracy can be improved on different forecasting horizons. In [

8], Hong et al. study the weather station selection problem to assign different number of weather stations to each forecasting zone automatically. The proposed framework can simultaneously determine the optimal number and the best choices by ranking and combining the candidate weather stations. Mansouri et al. in [

9] propose a load forecasting model based on dynamic mode decomposition with control. Perceptible temperature is considered the most important external variable, reflecting a hybrid effect of temperature and humidity on humans. In [

10], three temperature scenario generation methods are compared by Xie et al. in probabilistic load forecasting based on the quantile score. General guidelines for selecting scenario generation methods are proposed accordingly. However, despite the influence of weather factors on the load forecasting problem having been widely studied, abnormal load variations still lack a generalized and quantitative definition, which is considered another important aspect during the feature engineering process. Providing such a definition method can help identify abnormal load variation events and benefit the downstream forecasting model.

The second thread focuses on improving the load forecasting models to better capture the load patterns and load–weather dependency. In [11], Dehalwar et al. compare the accuracy of an Artificial Neural Network (ANN) and a Bagged Regression Tree in urban power load forecasting. Weather forecasts are incorporated as model inputs and are identified as important features for the forecasting accuracy. In [12], Lu et al. decompose the load profiles into a base component and a weather-sensitive component and forecast them separately. Meteorological data are used to train a Support Vector Regression model to forecast the weather-sensitive component. Following a similar decomposition–aggregation methodology, Xu et al. [13] propose a probabilistic forecasting model for building load forecasting. Weather features such as dry-bulb temperature and relative humidity are included in the normal load forecasting model. Kim et al. [14] compare several models for peak load forecasting of institutional buildings. Weather features are shown to be important external features for improving the forecasting performance. Considering the different load patterns between weekdays and weekends, Li et al. [15] propose a semi-parametric load forecasting model focusing on weekend load forecasting. The coupling relation between meteorological factors and the load is considered, including the temperature accumulation effect. In [16], Pinheiro et al. propose a systematic load forecasting approach covering the range from system level to low-voltage level. Numerical weather predictions, especially temperature, are used as explanatory variables. Simulation results based on 100,000 secondary substations in Portugal demonstrate that the proposed method can improve the forecasting accuracy while maintaining good applicability, interpretability, and reproducibility. Guo et al. [17] extend power system load forecasting to an integrated energy system including cooling, heating, and electrical loads. An attention layer is introduced to extract correlations among the diverse loads, and an attentive quantile regression temporal convolutional network is proposed to achieve probabilistic forecasting. Weather variables such as temperature, humidity, and wind speed are incorporated as important model inputs. Chen et al. [18] propose a theory-guided deep learning load forecasting model that combines domain knowledge and machine learning. The machine learning model takes the weather forecasts as part of its inputs and is made robust to weather forecasting errors by adding synthetic disturbances during training. In [19], Li et al. propose a two-stage electrical load forecasting method based on the fast-developing Internet of Things (IoT) system. Sensitivity analysis is conducted to identify the importance of each factor to the power load, among which temperature is recognized as a key factor. Note that although numerous efforts have been made to improve the forecasting model, the sample scarcity issue of the abnormal load variation forecasting problem has not been fully addressed. Because abnormal load variations occur infrequently, far fewer samples are available than for normal load, making it difficult to train complex forecasting models. Sample augmentation methods are therefore needed to expand the limited abnormal load variation samples, so that these forecasting models can be well trained.

The

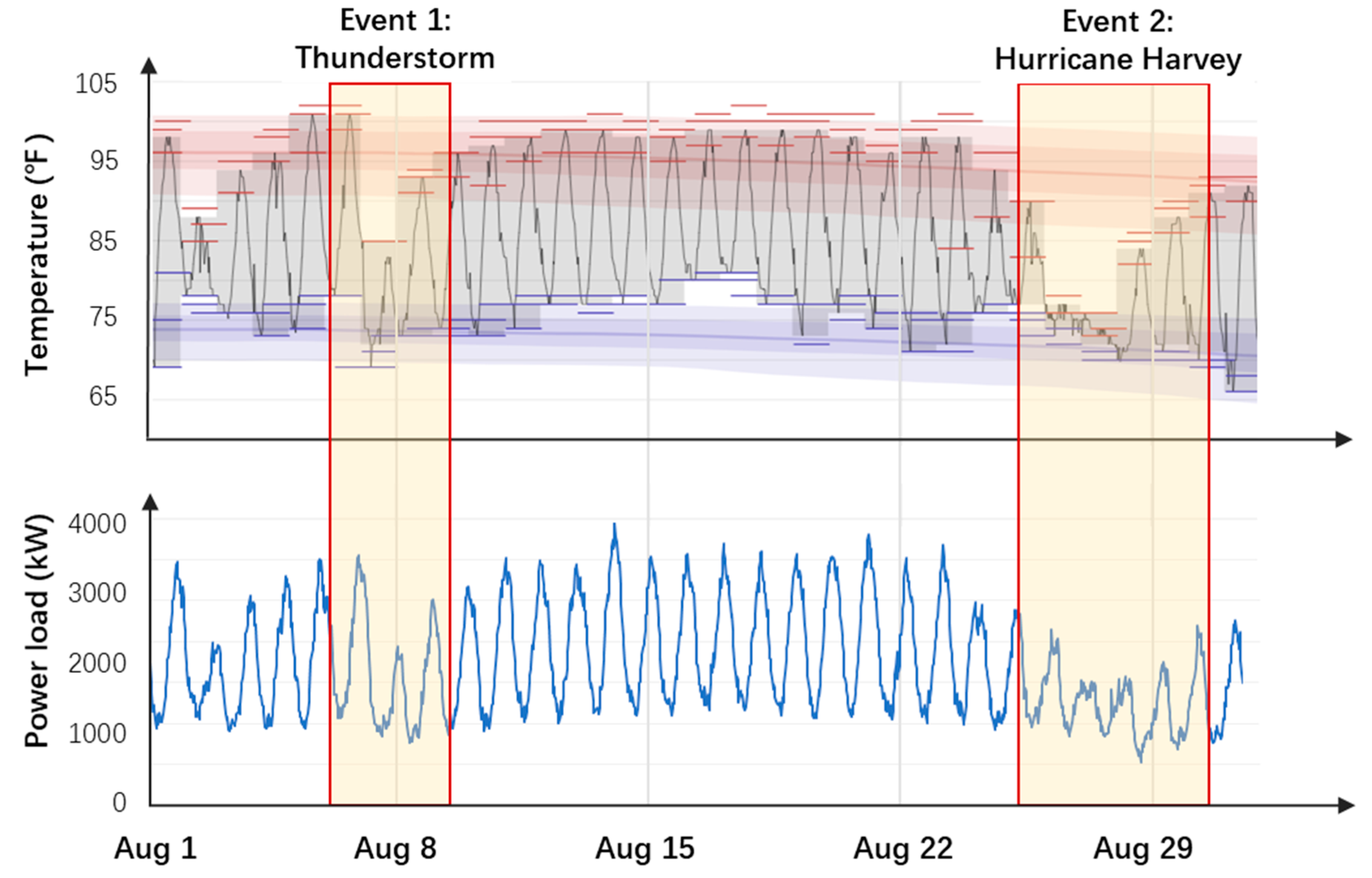

third thread focuses on studying and characterizing extreme weather events, which usually occur in rare circumstances but lead to drastic load variations. Deng et al. [

20] first establish an extreme weather identification model considering load, weather, and time factors to determine the occurrence range of upcoming peak load. Then, the Extreme Gradient Boosting (XGBoost) algorithm with Bagging strategy is proposed to forecast the short-term power load. Focusing on wildfire as the extreme event, Yang et al. [

21] develop a wildfire resilient load forecasting model to enhance the load forecasting accuracy during wildfire season. The Fire Weather Index is used as the major exogenous factor to assist the deep learning-based forecasting model. In [

22], Zhang et al. focus on the impact of hurricanes on the cascading failures of the EV charging networks. A probabilistic graphical model based on a Bayesian Network is proposed to model the correlations among different nodes, and an AC-based Cascading Failure model is implemented to simulate the power system cascading failure process. Shield et al. examine the disruptions of various types of extreme weather events, such as thunderstorms, winter storms, and tropical storms, to the electrical power grid [

23]. Multiple indexes are established to quantify the impact, which provides valuable information for government and utility company decision-making. Considering the increasing penetration of solar and wind power in power systems, Watson et al. [

24] study the power grid resilience with high renewable energy penetration under hurricane attack. Simulation results demonstrate that the generation capacity loss and the system restoration cost increase significantly for a power grid with high renewable penetration. Taking Hurricane Maria as an example, Kwasinski et al. [

25] study the systematic impact of hurricane on the Puerto Rico power grid, including generation, transmission, and distribution. Results demonstrate that the resilience of the Puerto Rico power grid is weaker than the U.S. power grid under hurricane attack, requiring further investment and technological development. In [

26], Fatima et al. provide an extensive review of power system outage prediction methods during hurricanes. Conclusions include that using a single machine learning model may lead to significant forecasting bias, which can be improved by implementing an ensemble learning strategy. It is also revealed that factors such as data quality, algorithm complexity, and model interpretability would be the focus in future research. However, as these studies mainly focus on the impact of extreme weather events on power system resiliency and economic loss analysis, their influence on the load forecasting problem has not been sufficiently discussed.

1.4. Contribution

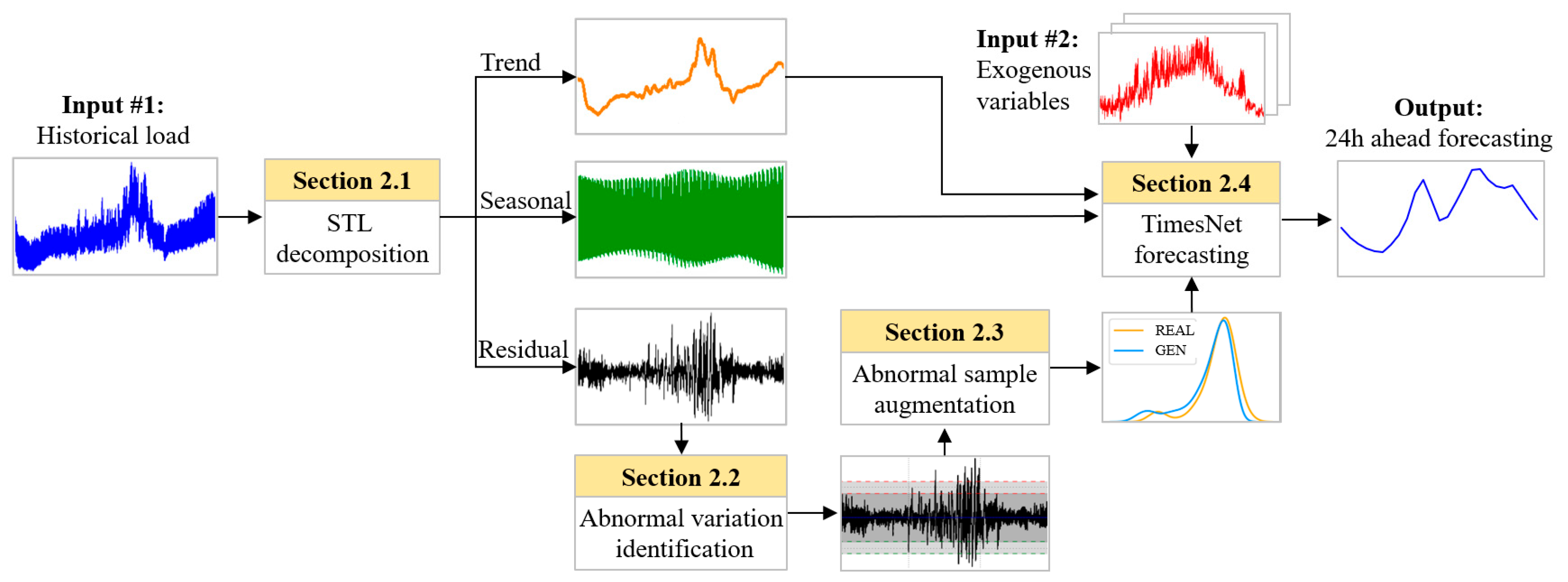

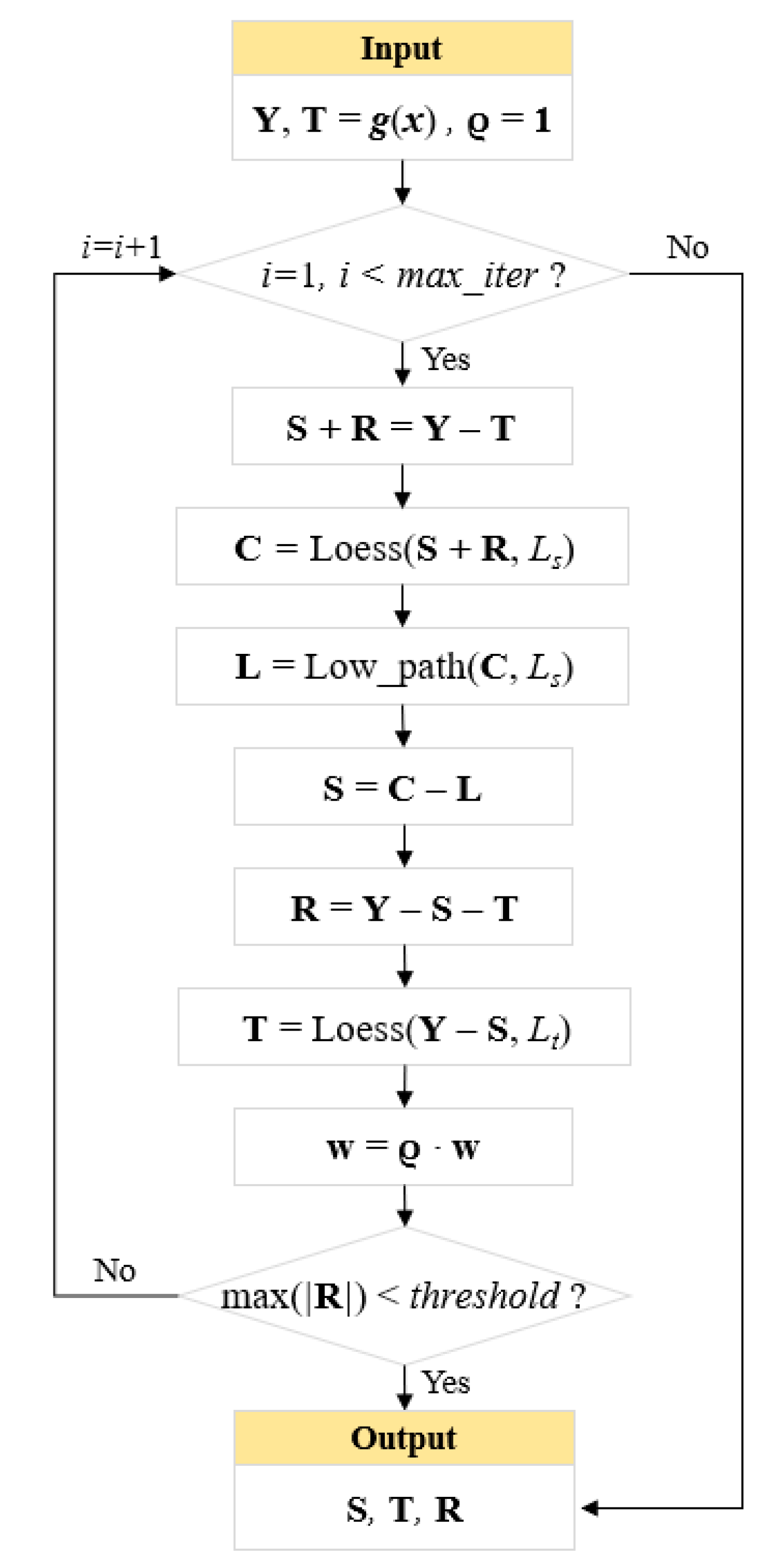

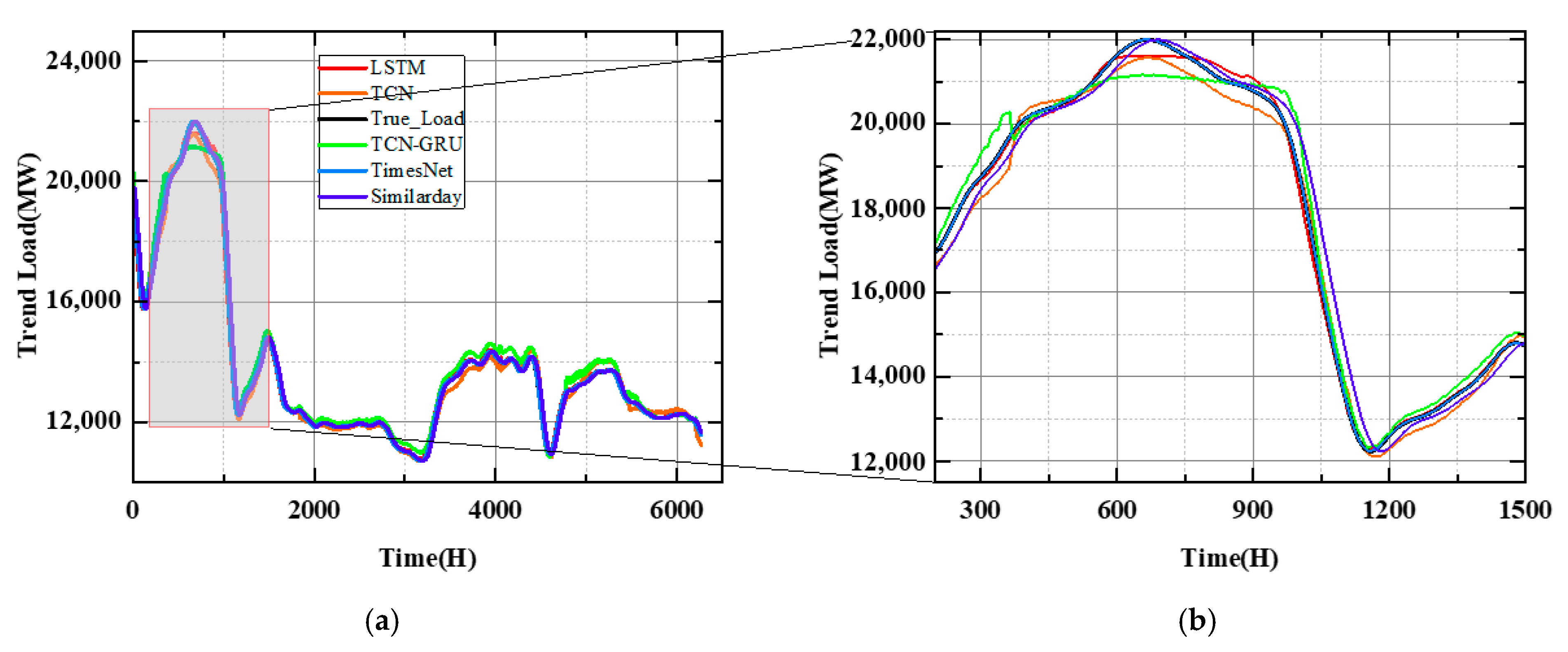

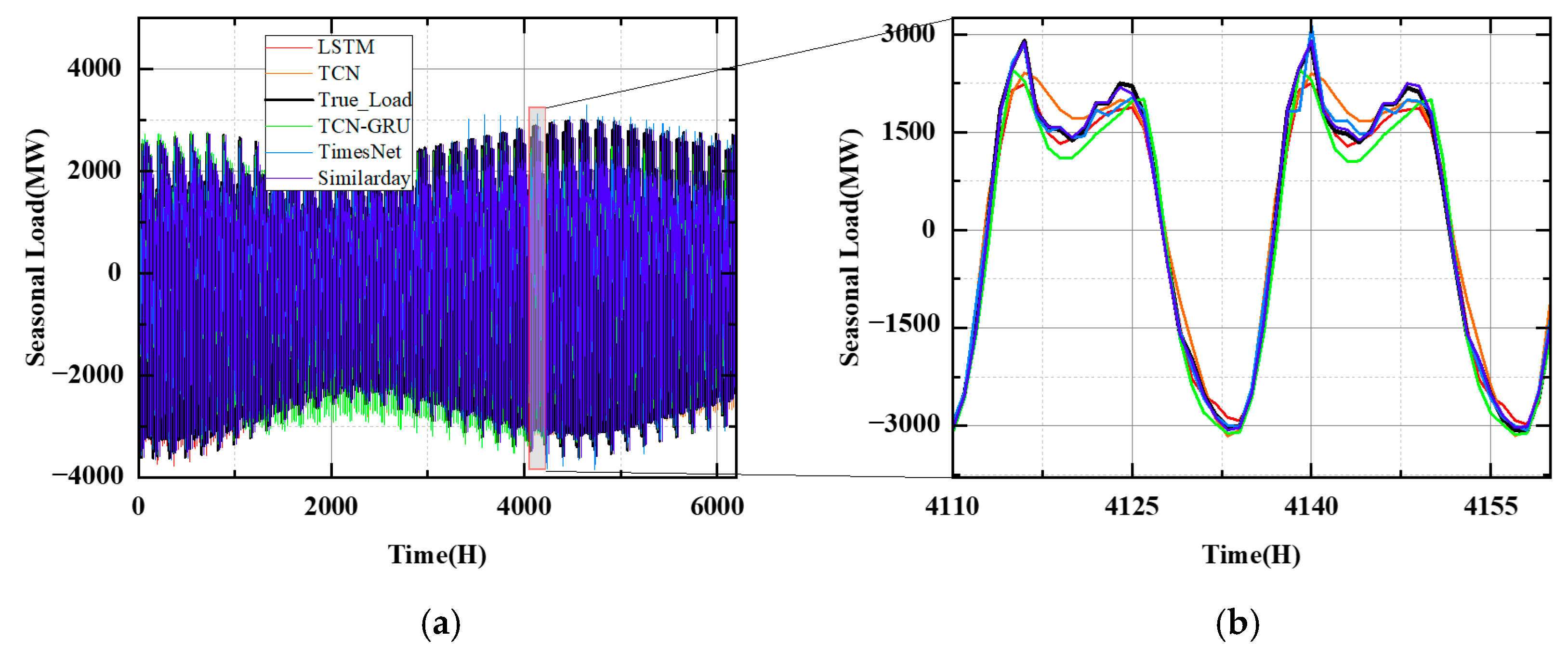

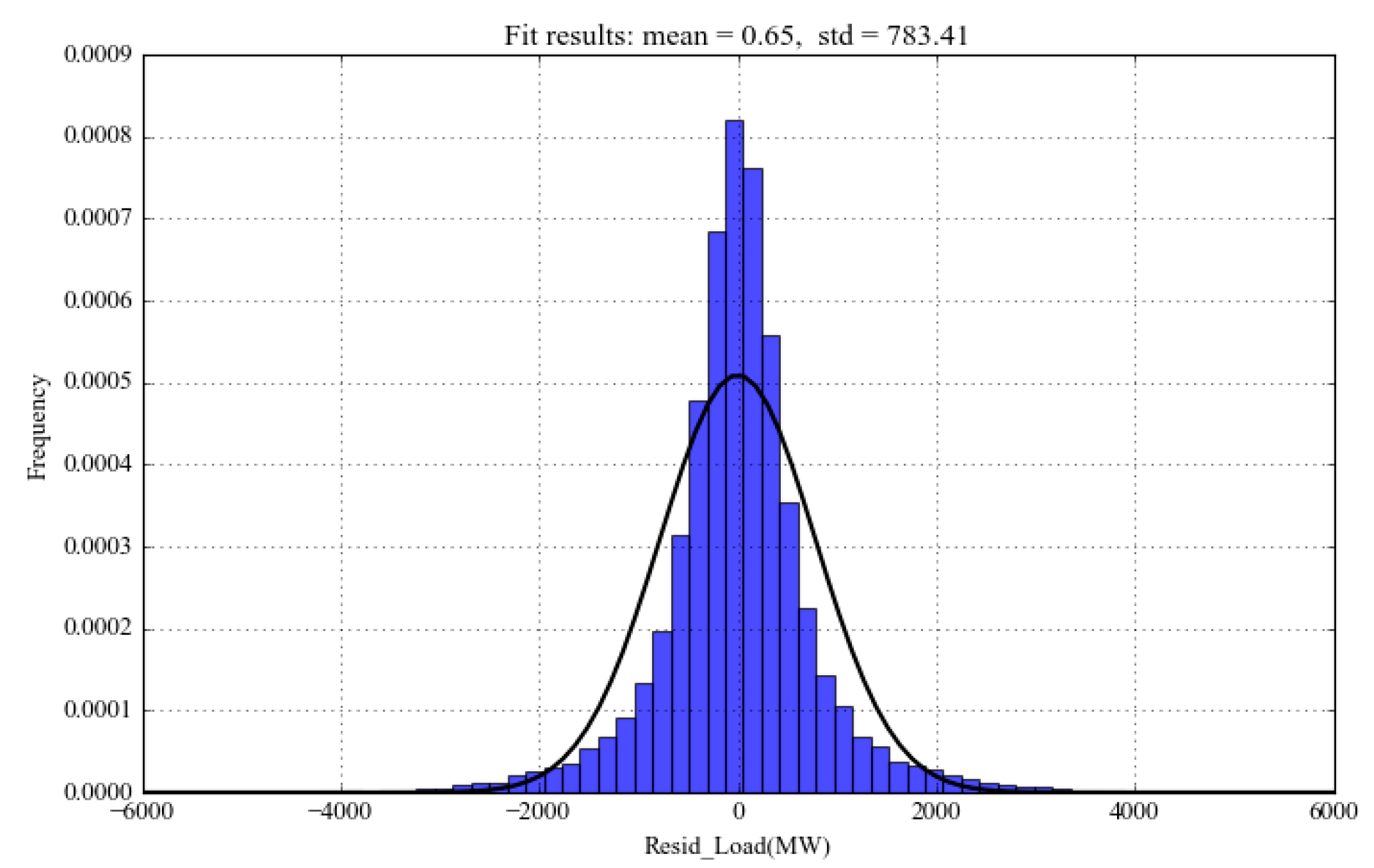

In this paper, a hybrid forecasting framework is proposed that focuses on abnormal load variations in urban areas. First, seasonal and trend decomposition using Loess (STL) [27] is implemented to process the original load series. STL is a classical time series analysis method that decomposes the target series into trend, seasonal, and residual components, which makes it particularly suitable for power load analysis because power load series naturally exhibit multi-cyclical characteristics. After STL decomposition, the abnormal load variation events can be defined based on the residual component, which represents irregular load changes.
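As a brief illustration of the decomposition described above, STL is an additive decomposition. The symbols below ($y_t$ for the load at time $t$, and $T_t$, $S_t$, $R_t$ for the trend, seasonal, and residual components) are notation assumed here for illustration and are not taken from the paper:

$$
y_t = T_t + S_t + R_t, \qquad R_t = y_t - T_t - S_t .
$$

Under this notation, abnormal load variation events correspond to time steps at which the residual $R_t$ is unusually large in magnitude.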

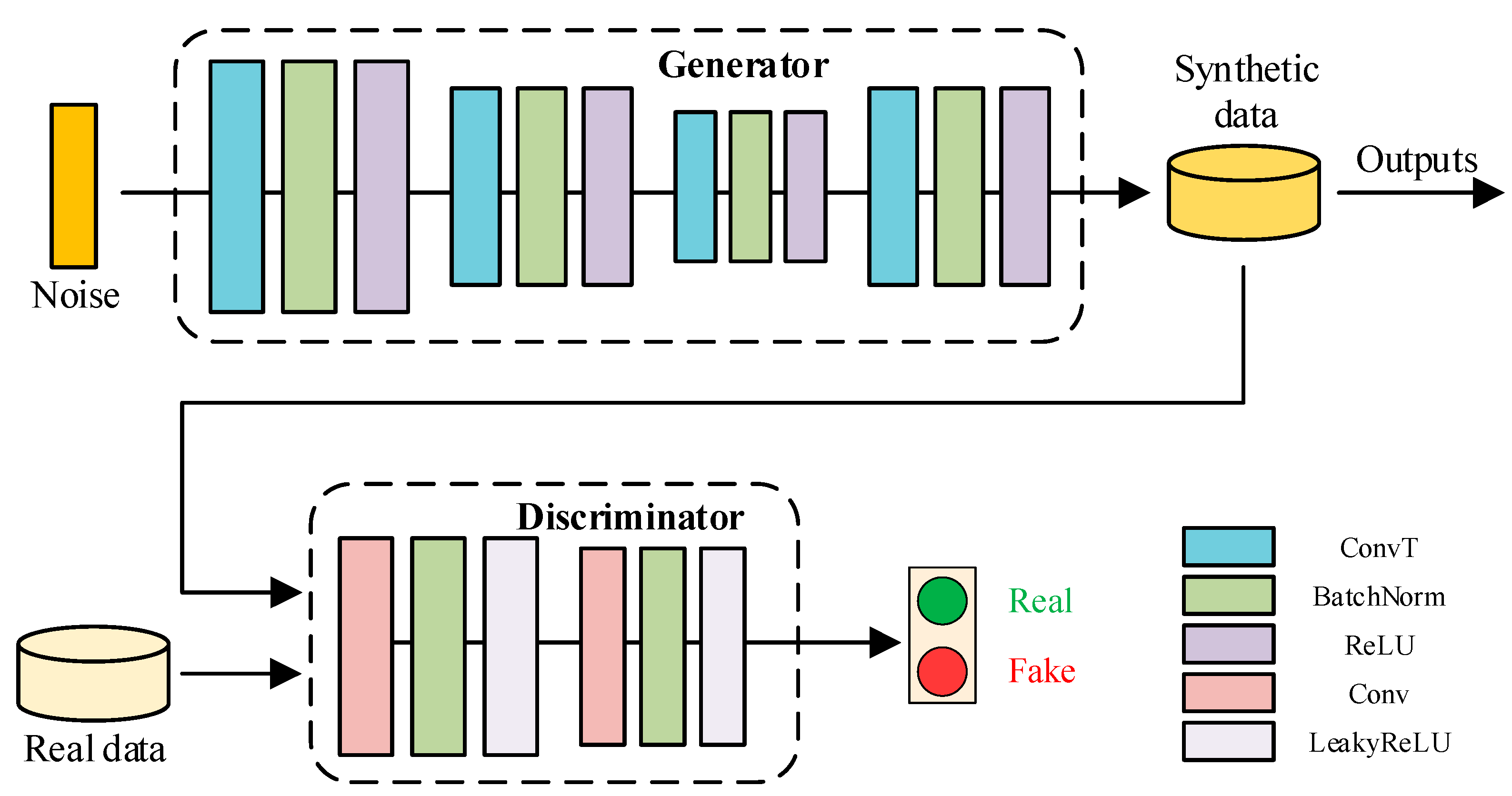

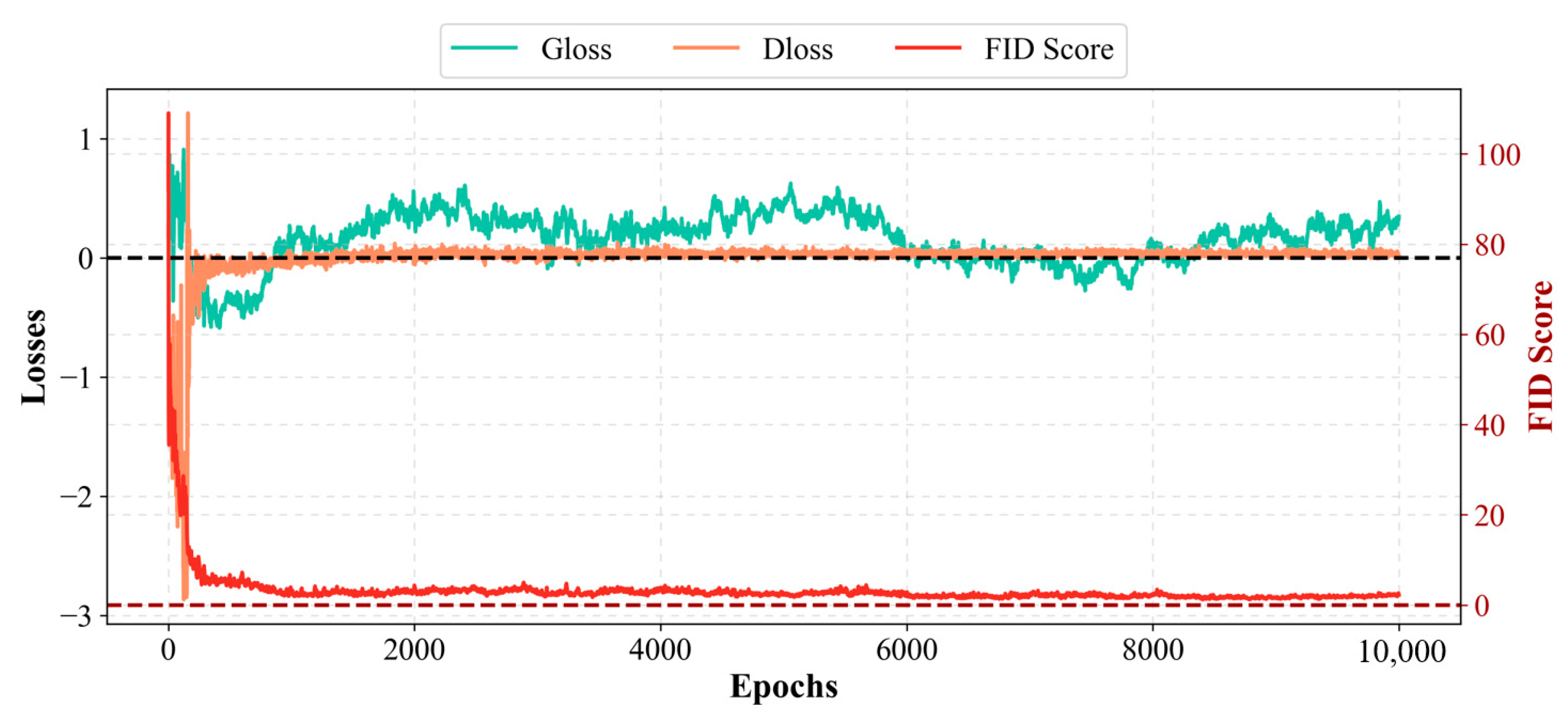

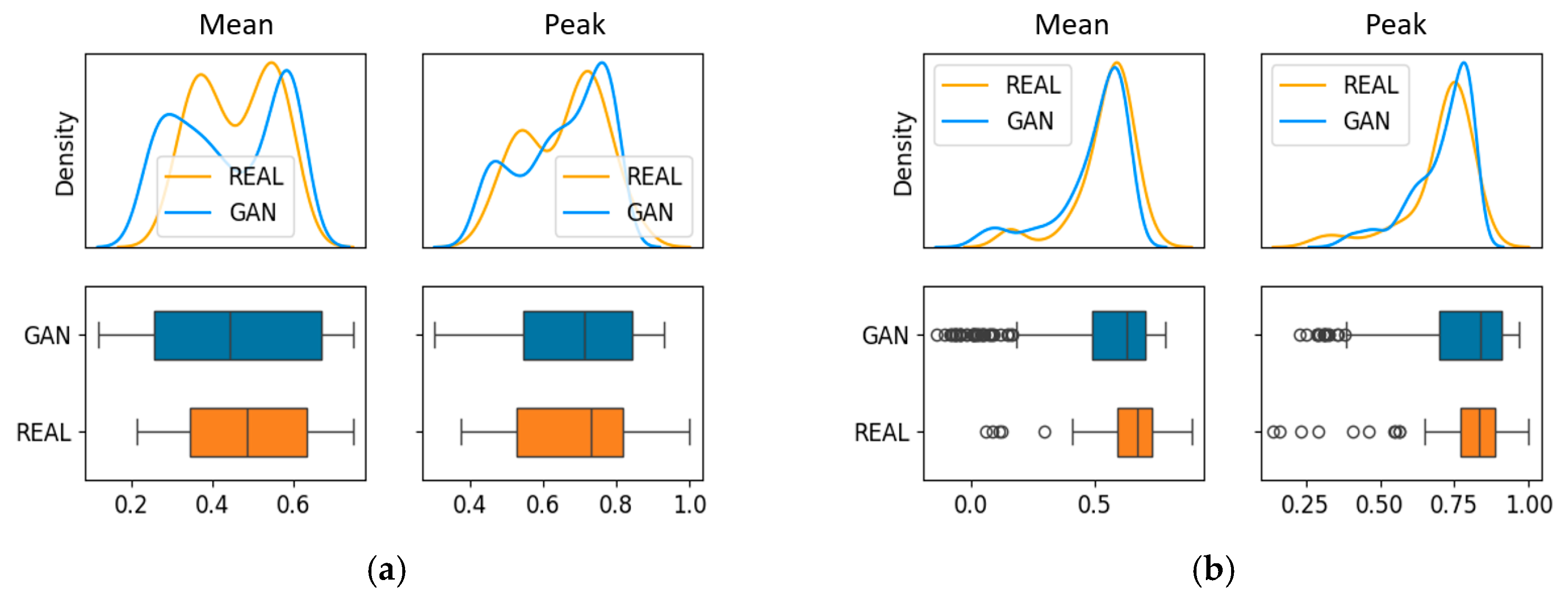

Second, a Wasserstein Generative Adversarial Network with Gradient Penalty (WGAN-GP) [28] is introduced to augment the limited abnormal load variation samples and thereby address the sample scarcity issue. Compared with the vanilla GAN model, WGAN-GP can learn the implicit distribution from the limited abnormal load variation samples and generate an arbitrary number of synthetic samples, while maintaining a more stable training process and mitigating mode collapse.
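For reference, the critic objective in the original WGAN-GP formulation can be written as below. The notation is assumed here for illustration: $D$ is the critic, $\mathbb{P}_r$ and $\mathbb{P}_g$ are the real and generated sample distributions, $\hat{x}$ is sampled uniformly along straight lines between pairs of real and generated samples, and $\lambda$ is the penalty weight. How these are configured for load samples is not specified in this section.

$$
L_D = \mathbb{E}_{\tilde{x} \sim \mathbb{P}_g}\left[D(\tilde{x})\right] - \mathbb{E}_{x \sim \mathbb{P}_r}\left[D(x)\right] + \lambda \, \mathbb{E}_{\hat{x} \sim \mathbb{P}_{\hat{x}}}\left[\left(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\right)^2\right]
$$

The last term penalizes critic gradients whose norm deviates from 1. It replaces the weight clipping used in the original WGAN and is the main reason for the more stable training mentioned above.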

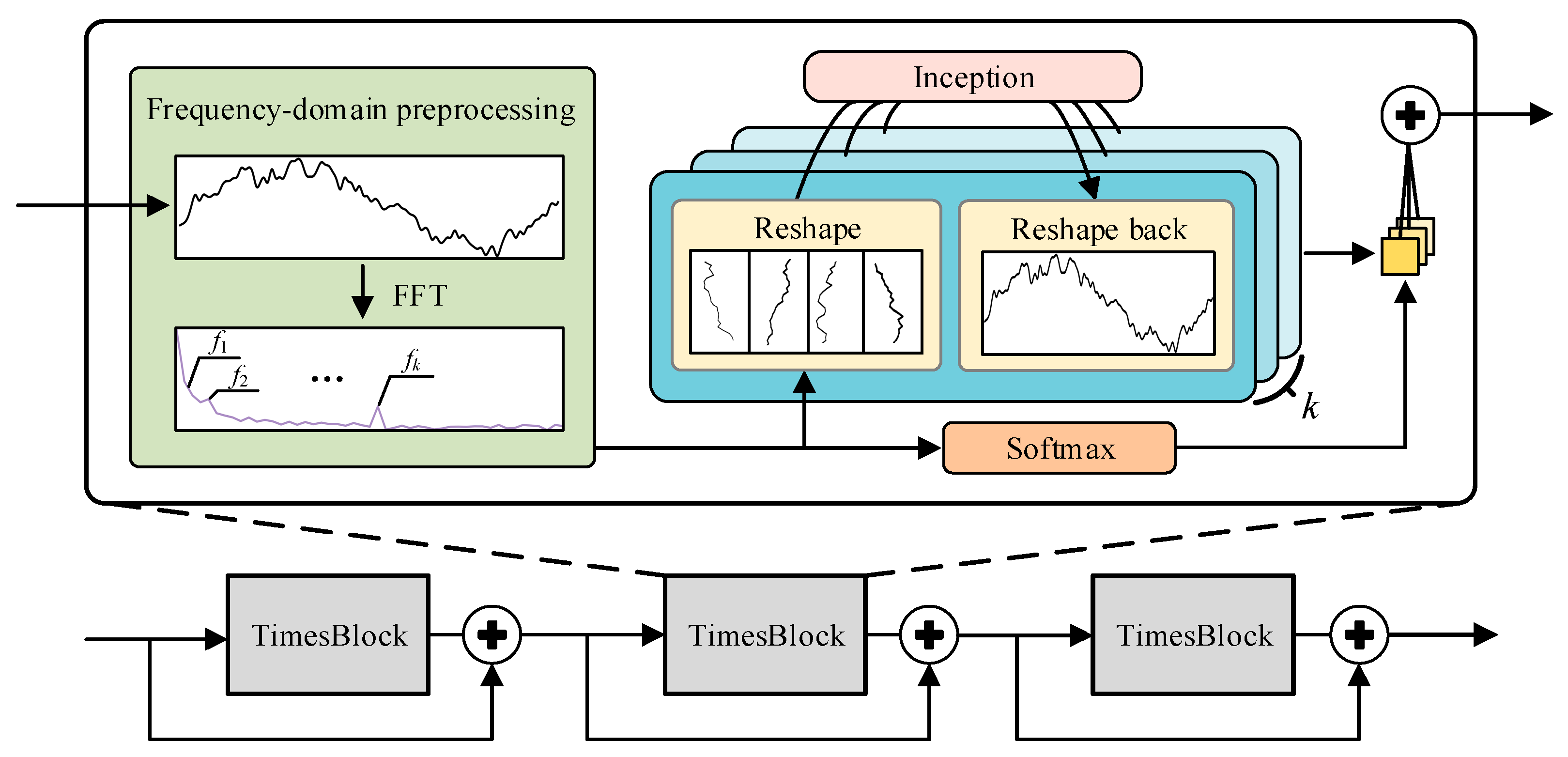

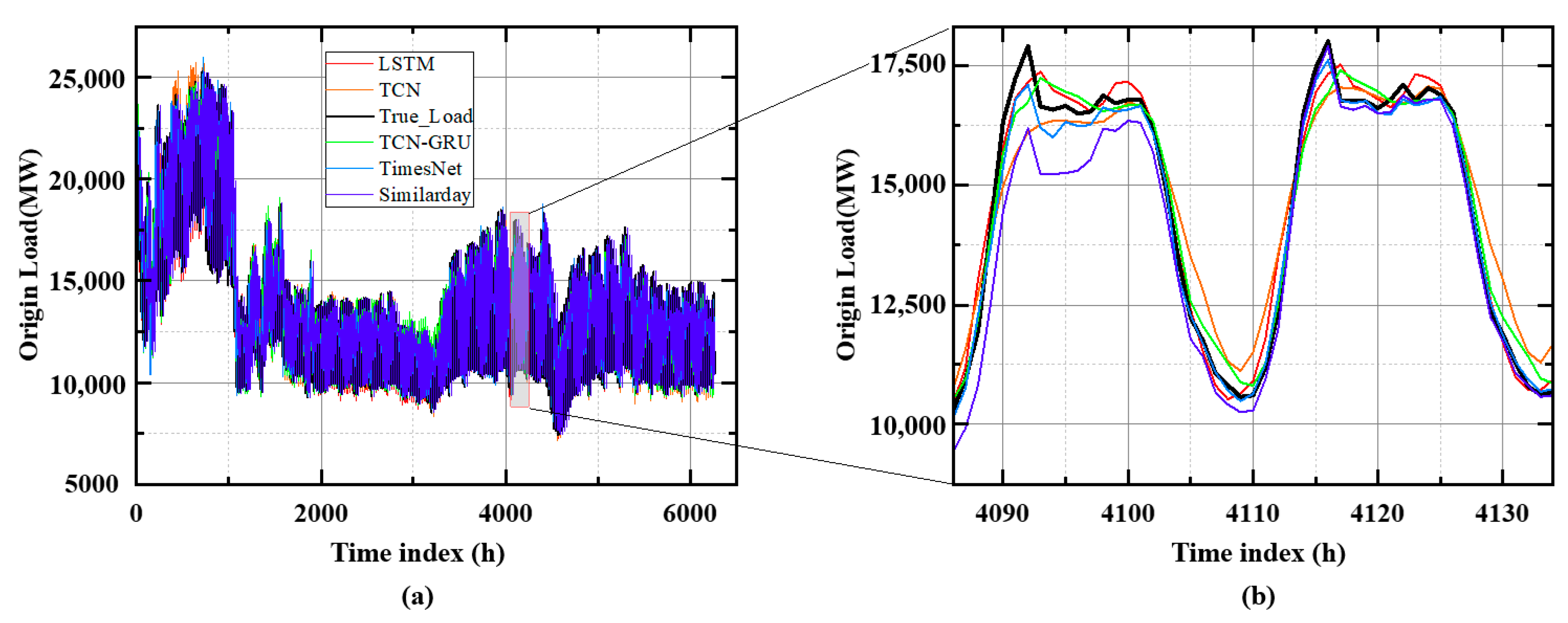

Last, to better capture the complex and nonlinear load patterns during abnormal load variation periods, an advanced neural network structure, TimesNet [29], is introduced as the major forecasting model. TimesNet analyzes the load series in the frequency domain to identify the main frequency components and then reshapes the series accordingly to learn both intra-cyclical and cross-cyclical load patterns. Such a learning process is consistent with the multi-cyclical characteristics of the power load, enabling TimesNet to learn complex load patterns during abnormal variation periods.
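The frequency-domain step described above can be illustrated with a minimal sketch. It is not the TimesNet implementation: it uses a naive discrete Fourier transform, keeps only the single strongest frequency (TimesNet works with several), and the function names `dominant_period` and `fold_by_period` as well as the synthetic load series are hypothetical, introduced only for this example.

```python
import cmath
import math


def dominant_period(series):
    """Return the period (in samples) of the strongest non-constant frequency."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]  # remove the constant (DC) component
    best_freq, best_amp = 1, -1.0
    # Naive discrete Fourier transform over frequencies 1 .. n // 2.
    for k in range(1, n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered)
        )
        if abs(coeff) > best_amp:
            best_freq, best_amp = k, abs(coeff)
    return n // best_freq


def fold_by_period(series, period):
    """Reshape a 1D series into rows of one cycle each, dropping any incomplete tail."""
    n_cycles = len(series) // period
    return [series[i * period:(i + 1) * period] for i in range(n_cycles)]


# Synthetic hourly "load" with a 24-hour cycle over 4 days (illustration only).
load = [100 + 20 * math.sin(2 * math.pi * t / 24) for t in range(96)]
period = dominant_period(load)
grid = fold_by_period(load, period)
```

In the folded layout, moving along a row follows the variation within one cycle (intra-cyclical), while moving down a column compares the same position across successive cycles (cross-cyclical). This is the kind of structure that a 2D model can then learn from.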

The major contributions and originalities of this paper are as follows:

- (1)

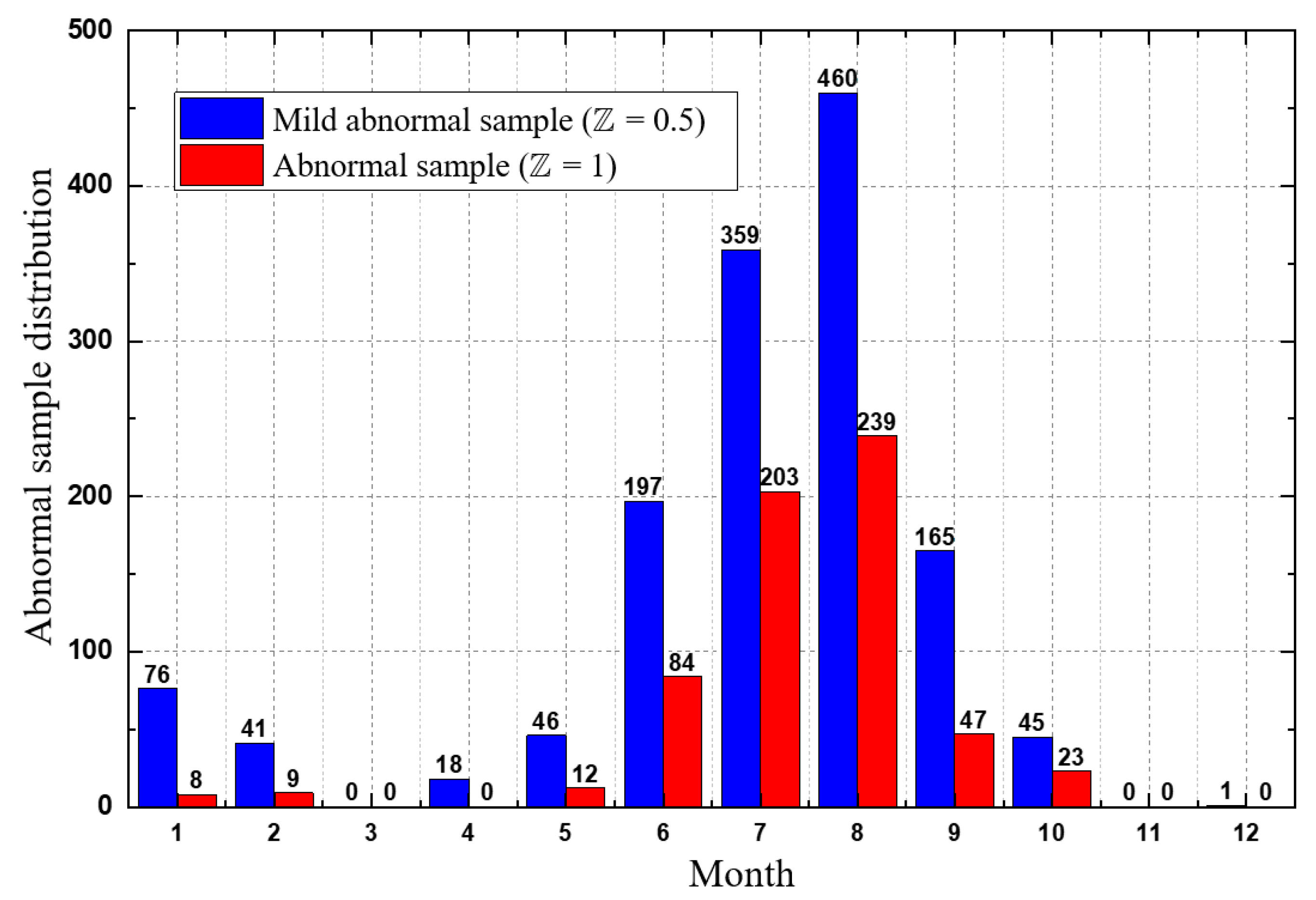

A quantitative method of defining and characterizing abnormal load variation events is proposed. Based on the residual component after STL decomposition, the abnormal events can be identified as the samples locating at the tail part of the residual probability distribution, which indicates low occurrence probability. Then, a quantitative feature can be derived to reflect the abnormity of the load samples according to their occurrence probability, which can be further used to assist the forecasting model training. Such a methodology of defining and characterizing abnormal load variation events is considered original in this paper.

- (2) A customized forecasting framework targeting abnormal load variation forecasting is proposed. Compared with normal load forecasting methods, the proposed framework combines a definition and characterization process for abnormal load variation events, a sample augmentation process to address the sample scarcity issue, and a state-of-the-art neural network structure as the forecaster, making it tailored to the abnormal load variation forecasting problem. To the best of the authors’ knowledge, such a customized and hybrid forecasting framework targeting abnormal load forecasting has rarely been reported in the existing literature.
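The tail-based definition in contribution (1) can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's method: the empirical distribution of the residuals stands in for the residual probability distribution, the score `1 - two-sided tail probability` is one possible abnormality feature, and the 5% `tail_fraction`, the function names, and the synthetic residuals are all hypothetical.

```python
import bisect
import random


def abnormality_scores(residuals):
    """Score each residual by how far into either tail it falls (0 = central, near 1 = extreme)."""
    ordered = sorted(residuals)
    n = len(ordered)
    scores = []
    for r in residuals:
        # Empirical probabilities of observing a value <= r and a value >= r.
        p_le = bisect.bisect_right(ordered, r) / n
        p_ge = (n - bisect.bisect_left(ordered, r)) / n
        # One-sided tail probability on the side where r is more extreme,
        # capped at 0.5 so that heavy ties cannot produce a negative score.
        tail_prob = min(p_le, p_ge, 0.5)
        scores.append(1.0 - 2.0 * tail_prob)
    return scores


def flag_abnormal(residuals, tail_fraction=0.05):
    """Flag samples whose two-sided empirical tail probability is at most tail_fraction."""
    threshold = 1.0 - tail_fraction
    return [s >= threshold for s in abnormality_scores(residuals)]


# Synthetic residuals (assumed data): Gaussian noise with one injected spike at index 10.
rng = random.Random(0)
residuals = [rng.gauss(0.0, 1.0) for _ in range(200)]
residuals[10] = 50.0

scores = abnormality_scores(residuals)
flags = flag_abnormal(residuals)
```

Here `flags` marks the candidate abnormal events, and `scores` could serve as an additional input feature for the forecasting model, in the spirit of the quantitative feature described in contribution (1).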

The rest of this paper is organized as follows: Section 2 introduces the methodology, Section 3 presents the case study results, and Section 4 concludes the paper.