Smoke Detection on the Edge: A Comparative Study of YOLO Algorithm Variants

Abstract

1. Introduction

1.1. Object Recognition

1.2. Smoke Recognition

1.3. Related Work

1.4. Motivation and Contribution

- Compare the performance of the YOLO variants in terms of mAP50, mAP50_95, F1-score, precision, recall, training time, and inference time.

- Assess which YOLO variants are suitable for real-time smoke detection on edge computing systems.

2. Theoretical Background

2.1. Real-Time Object Recognition

- Low latency and high speed, making it appropriate for real-time applications.

- End-to-end training, thus reducing complexity.

- Global reasoning of the image at a glance.

2.2. Edge Computing Using YOLO Algorithm

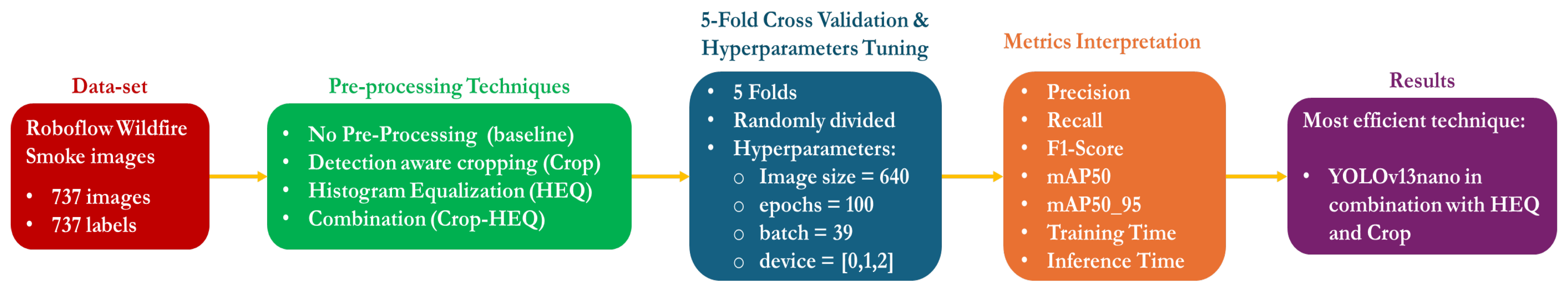

3. Methodology

3.1. Investigating the Use of YOLO Versions for Detecting Smoke in Images

3.2. Investigating the Potentials of Cropping Pre-Processing Techniques

- Train the Models
  - For each candidate YOLO architecture (YOLOv9, YOLOv10, …), run train.py on all folds of the dataset.
  - Save the trained weights (best.pt) per fold.
- Determine Optimal Crop Percentage
  - For each trained model checkpoint:
    - Run find optimal Crop on validation images.
    - Loop over Crop scales (e.g., 0.25 → 1.0).
    - For each detection, Crop around it, re-run inference, and log detection confidences.
    - Compute the average confidence per scale for all images.
  - Select the Crop scale with the highest mean confidence.
  - Store the chosen Crop percentage for each model architecture.
- Evaluate Cropped Dataset
  - Use the optimal Crop percentage per model as input to the evaluation of the Cropped dataset routine.
    - Crop each test image around detections.
    - Rescale ground truth labels.
    - Evaluate detection metrics (precision, recall, mAP).
  - Save results with metrics and Crop percentage for comparison.
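
The two geometric steps in the procedure above (cropping around a detection and rescaling the ground-truth labels) can be illustrated with a minimal, dependency-free sketch. It assumes that the Crop scale is the fraction of the original width and height kept in the window, that the window is centred on the detection and shifted to stay inside the image, and that labels are re-expressed in normalised YOLO format; none of these details are specified in the text, and the names `crop_window` and `rescale_label` are hypothetical.

```python
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def crop_window(box: Box, img_w: int, img_h: int, scale: float) -> Box:
    """Window of size (scale*img_w, scale*img_h) centred on the box.

    Assumption of this sketch: the window is shifted, not shrunk,
    when the detection sits close to an image border.
    """
    if not 0.0 < scale <= 1.0:
        raise ValueError("scale must be in (0, 1]")
    win_w, win_h = scale * img_w, scale * img_h
    cx, cy = (box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0
    x0 = min(max(cx - win_w / 2.0, 0.0), img_w - win_w)
    y0 = min(max(cy - win_h / 2.0, 0.0), img_h - win_h)
    return (x0, y0, x0 + win_w, y0 + win_h)


def rescale_label(gt: Box, window: Box) -> Optional[Box]:
    """Map a ground-truth box into the crop as normalised (cx, cy, w, h).

    The box is clipped to the window; None is returned when it lies
    entirely outside the crop.
    """
    wx0, wy0, wx1, wy1 = window
    x0, y0 = max(gt[0], wx0), max(gt[1], wy0)
    x1, y1 = min(gt[2], wx1), min(gt[3], wy1)
    if x1 <= x0 or y1 <= y0:
        return None
    win_w, win_h = wx1 - wx0, wy1 - wy0
    return (
        ((x0 + x1) / 2.0 - wx0) / win_w,
        ((y0 + y1) / 2.0 - wy0) / win_h,
        (x1 - x0) / win_w,
        (y1 - y0) / win_h,
    )


if __name__ == "__main__":
    window = crop_window((300, 200, 340, 280), img_w=640, img_h=480, scale=0.5)
    print(window, rescale_label((300, 200, 340, 280), window))
```

Clipping the label to the window is one possible design choice; discarding partially visible boxes would be an equally plausible alternative.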

Algorithm 1: Optimal Crop Selection for Smoke Detection Models

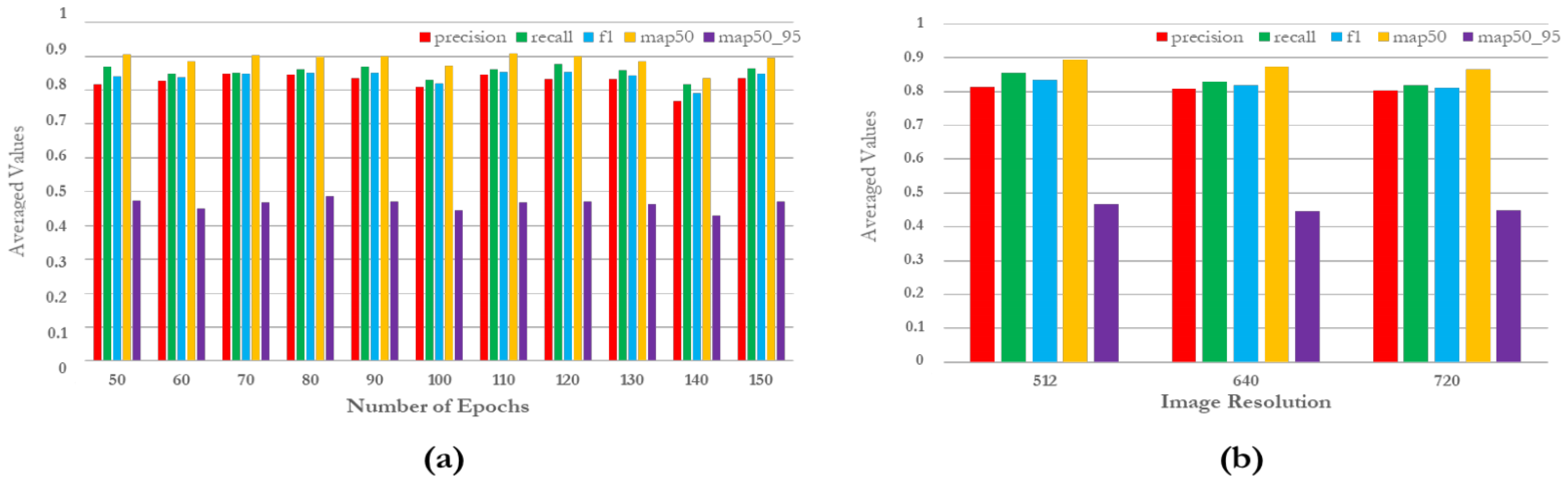
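
As a rough illustration of the Crop selection loop, the following sketch picks the scale with the highest mean detection confidence. It is not the authors' implementation: the callback `confidences_at_scale` is a hypothetical stand-in for "Crop around each detection and re-run inference", and pooling all confidences of a scale before averaging is an assumption.

```python
from statistics import mean
from typing import Callable, Dict, Iterable, List, Sequence, Tuple


def find_optimal_crop(
    images: Sequence[object],
    scales: Iterable[float],
    confidences_at_scale: Callable[[object, float], List[float]],
) -> Tuple[float, Dict[float, float]]:
    """Return (best_scale, mean_confidence_per_scale).

    confidences_at_scale(image, scale) is a hypothetical callback that
    returns the detection confidences obtained on the cropped image.
    """
    per_scale: Dict[float, float] = {}
    for scale in scales:
        confs: List[float] = []
        for image in images:
            confs.extend(confidences_at_scale(image, scale))
        # A scale that yields no detections at all scores 0.
        per_scale[scale] = mean(confs) if confs else 0.0
    if not per_scale:
        raise ValueError("at least one scale is required")
    best = max(per_scale, key=per_scale.get)  # first maximum wins on ties
    return best, per_scale


if __name__ == "__main__":
    # Toy stand-in for a detector whose confidence peaks at scale 0.5.
    fake = lambda image, scale: [1.0 - abs(scale - 0.5)]
    print(find_optimal_crop(["a.jpg", "b.jpg"], [0.25, 0.5, 0.75, 1.0], fake))
```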

3.3. Investigating the Capabilities of YOLO Variants over Different Techniques

- The choice of 100 epochs follows prior practice in YOLO-based studies on small or medium-sized datasets, which report that this range is sufficient for stable convergence without overfitting. Larger epoch counts led to only marginal gains in pilot tests while significantly increasing training time.

- The image resolution of 640 pixels was adopted as it represents the default setting in YOLO literature, providing a balance between capturing fine detail and keeping inference cost manageable.

- The batch size of 39 was selected based on the available GPU memory: it allowed efficient utilization of the three GPUs while avoiding memory overflow, and preliminary trials with higher values showed no performance benefit.
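
The hyperparameters above can be collected into one training configuration per fold. The sketch below is illustrative only: the keyword names mirror the Ultralytics training arguments (`data`, `epochs`, `imgsz`, `batch`, `device`), while the fold file names and the helper `train_kwargs` are hypothetical. The divisibility check is an assumption of this example, reflecting that 39 splits evenly into 13 images per GPU on three GPUs.

```python
from typing import Dict, List


def train_kwargs(
    fold_yaml: str,
    devices: List[int],
    batch: int = 39,
    epochs: int = 100,
    imgsz: int = 640,
) -> Dict[str, object]:
    """Build training arguments for one cross-validation fold."""
    if not devices:
        raise ValueError("at least one device is required")
    if batch % len(devices) != 0:
        # Assumption: the global batch should split evenly across GPUs.
        raise ValueError(f"batch={batch} is not divisible by {len(devices)} GPUs")
    return {
        "data": fold_yaml,
        "epochs": epochs,
        "imgsz": imgsz,
        "batch": batch,
        "device": devices,
    }


if __name__ == "__main__":
    for fold in range(5):
        kwargs = train_kwargs(f"fold_{fold}.yaml", devices=[0, 1, 2])  # hypothetical paths
        print(kwargs, "per-GPU batch:", kwargs["batch"] // 3)
        # With Ultralytics installed, a call of the following form could be used:
        # from ultralytics import YOLO
        # YOLO("yolov10n.pt").train(**kwargs)
```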

- Precision measures the proportion of correctly predicted positive instances out of all predicted positive samples:

  $$\text{Precision} = \frac{TP}{TP + FP}$$

  where $TP$ represents true positives and $FP$ represents false positives.

- Recall (or sensitivity) measures the proportion of correctly predicted positive instances out of all actual positive samples:

  $$\text{Recall} = \frac{TP}{TP + FN}$$

  where $FN$ represents false negative samples.

- F1-Score is the harmonic mean of precision and recall. It balances the two metrics, especially when there is an uneven class distribution:

  $$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

- Mean Average Precision (mAP) is used mainly in object detection. It calculates the average precision (AP) for each class and then computes the mean across all classes. It gives a comprehensive measure of both precision and recall across multiple thresholds:

  $$\text{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

  where $N$ is the number of classes and $AP_i$ is the average precision for class $i$. The metric is also reported at specific intersection-over-union (IoU) thresholds: mAP50 uses an IoU threshold of 0.50, whereas mAP50_95 averages over IoU thresholds from 0.50 to 0.95.
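
A minimal sketch of these metrics in plain Python follows. The function names are chosen for this example, boxes are assumed to be given as corner coordinates, and the convention of returning 0 when a denominator is zero is an assumption rather than something stated in the text.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2.0 * p * r / (p + r) if (p + r) else 0.0


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """mAP as the mean of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class) if ap_per_class else 0.0


if __name__ == "__main__":
    p, r = precision(tp=8, fp=2), recall(tp=8, fn=8)
    print(p, r, f1_score(p, r), iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

A prediction counts as a true positive for mAP50 when its IoU with a ground-truth box is at least 0.50; the per-class AP values themselves come from the precision-recall curve, which this sketch does not compute.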

3.4. Edge-Device Deployment and Lightweight Architecture Synthesis

- Model Pruning: Reduces the number of neurons, channels, or layers by removing redundant parameters, minimizing memory requirements without a significant loss of detection accuracy.

- Quantization: Converts model weights from 32-bit floating point to lower-precision representations (e.g., 16-bit or 8-bit integers), which decreases model size and accelerates inference on CPUs and GPUs typical of edge devices.

- Knowledge Distillation: Transfers knowledge from a larger “teacher” model to a smaller “student” model, allowing the compact model to maintain high accuracy while improving efficiency.

- Edge-Aware Pre-processing: Applies techniques such as Histogram Equalization and detection-aware Cropping before inference, reducing input complexity and improving the extraction of relevant features in noisy or low-contrast wildfire imagery.

- Memory Footprint: Amount of RAM required to load and run the model.

- Inference Speed: Frames per second (FPS) achievable under edge-device hardware constraints.

- Detection Accuracy: Ensuring that optimizations such as pruning and quantization do not degrade performance on smoke detection metrics (precision, recall, F1-score, and mAP).
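
To make the link between quantization and memory footprint concrete, the sketch below applies symmetric per-tensor 8-bit quantization to a list of weights and compares storage sizes. This is a generic textbook scheme, assumed here for illustration; it is not necessarily the scheme used by any particular deployment toolchain, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


def size_bytes(n_params: int, bits: int) -> int:
    """Storage needed for n_params values at the given bit width."""
    return n_params * bits // 8


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 1.0]
    q, s = quantize_int8(w)
    print(q, dequantize(q, s))
    # One million parameters: 32-bit floats versus 8-bit integers.
    print(size_bytes(1_000_000, 32), size_bytes(1_000_000, 8))
```

The round-trip error per weight is bounded by half the quantization step, which is why accuracy should be re-checked on the detection metrics after quantization.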

4. Evaluation

4.1. Experimental Design

4.2. Experimental Results

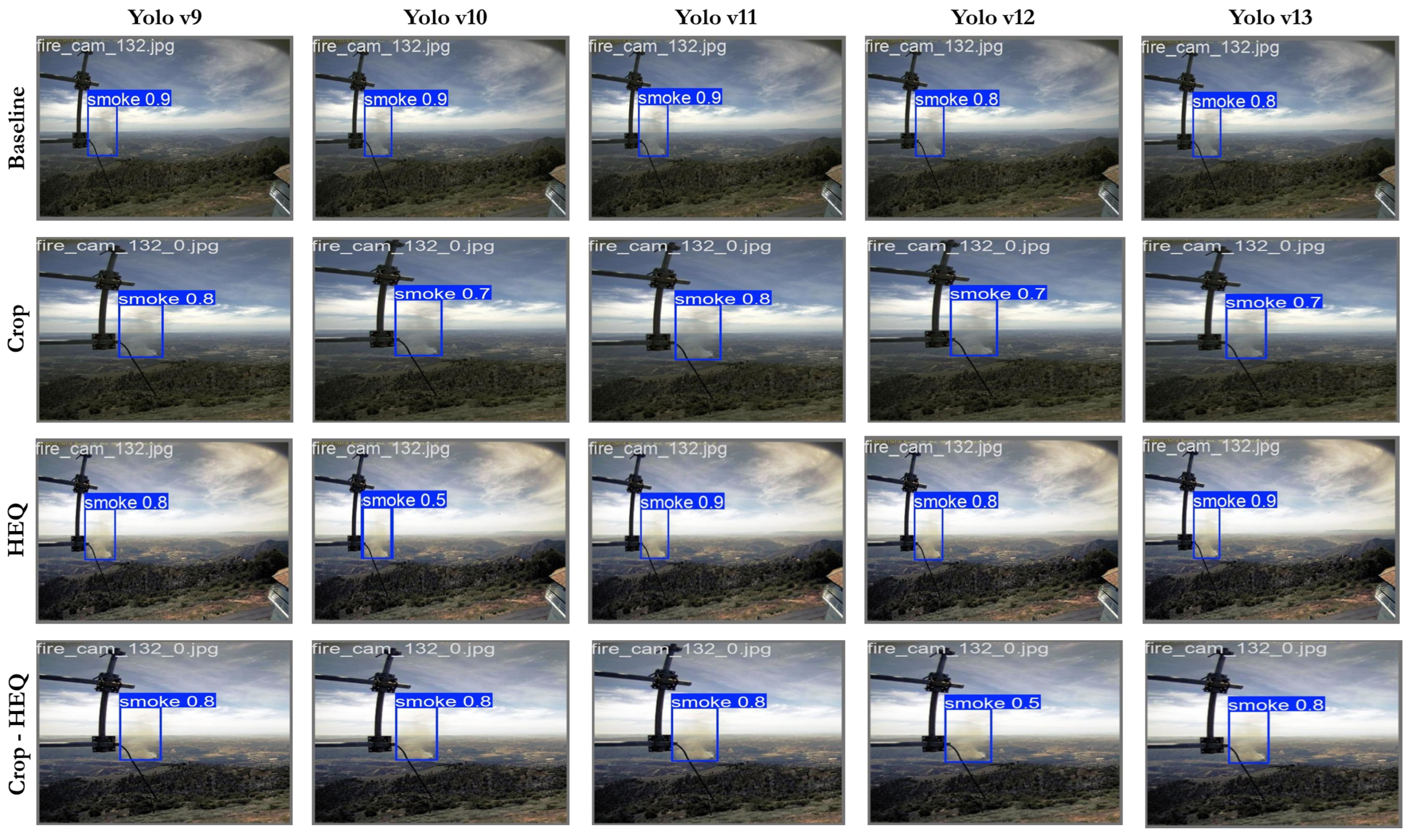

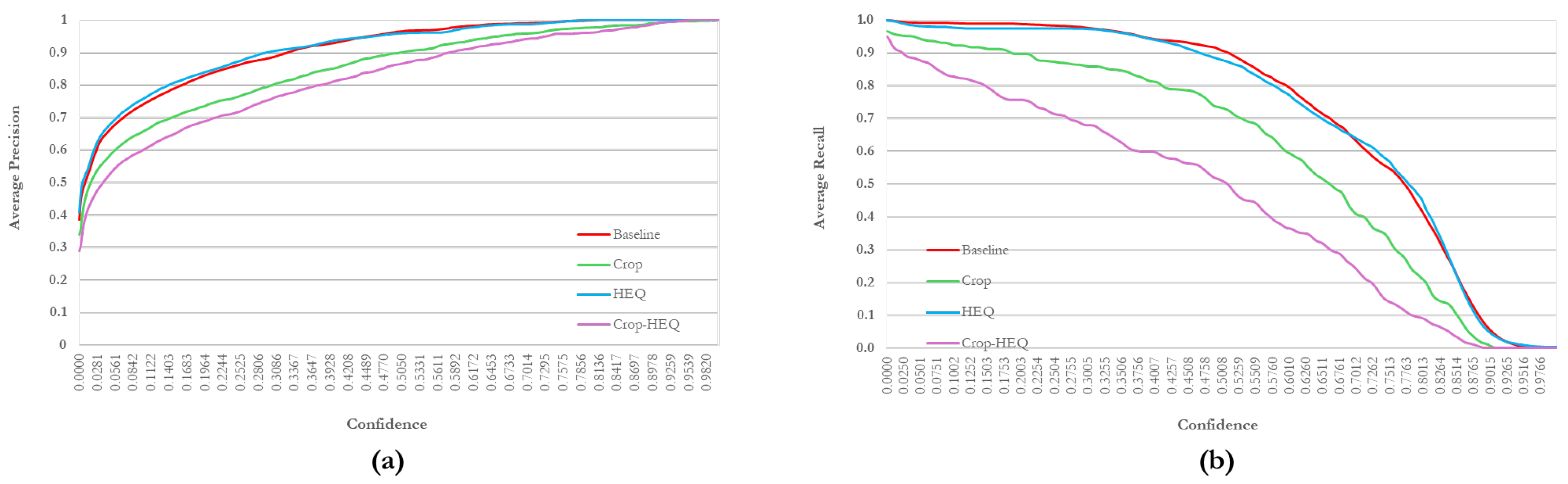

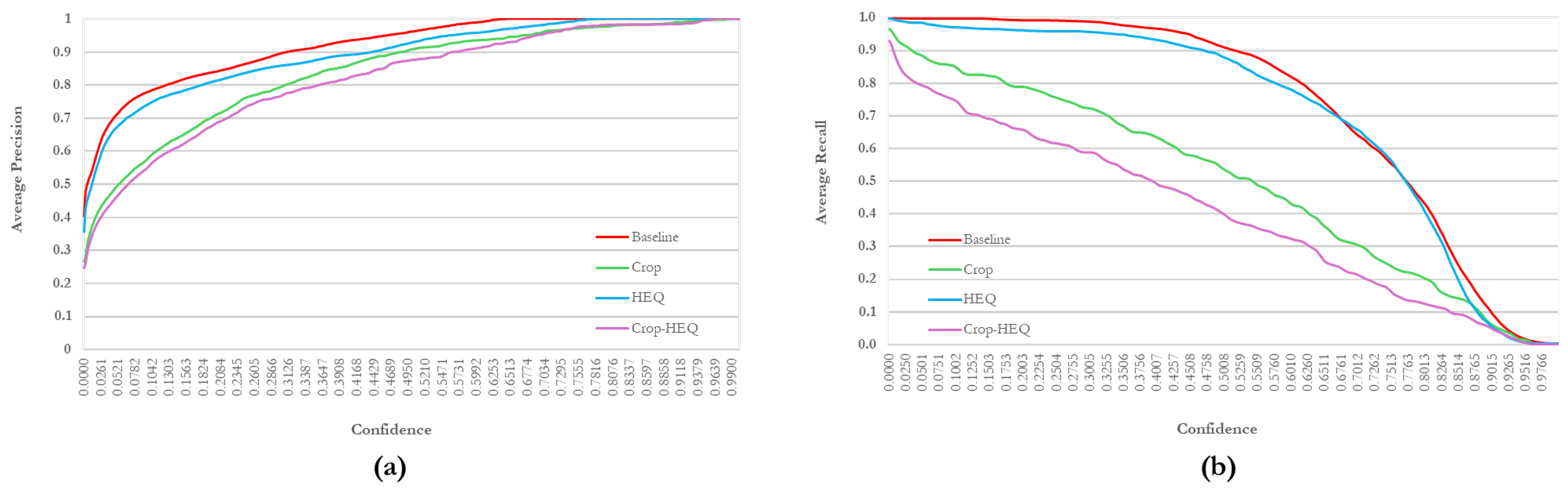

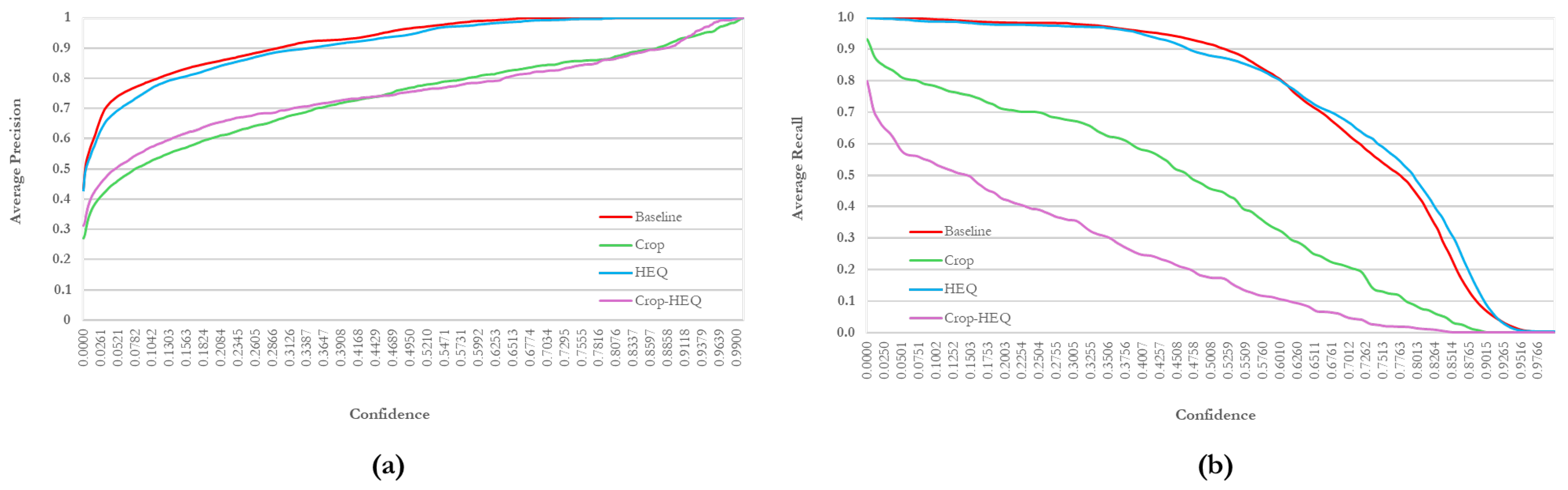

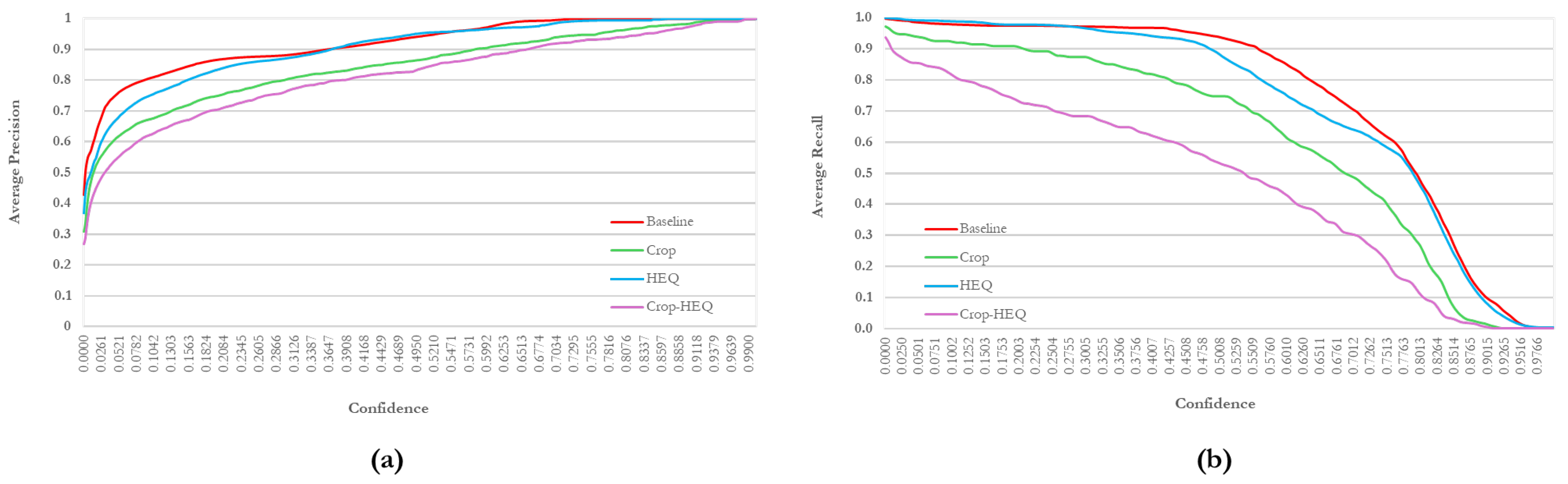

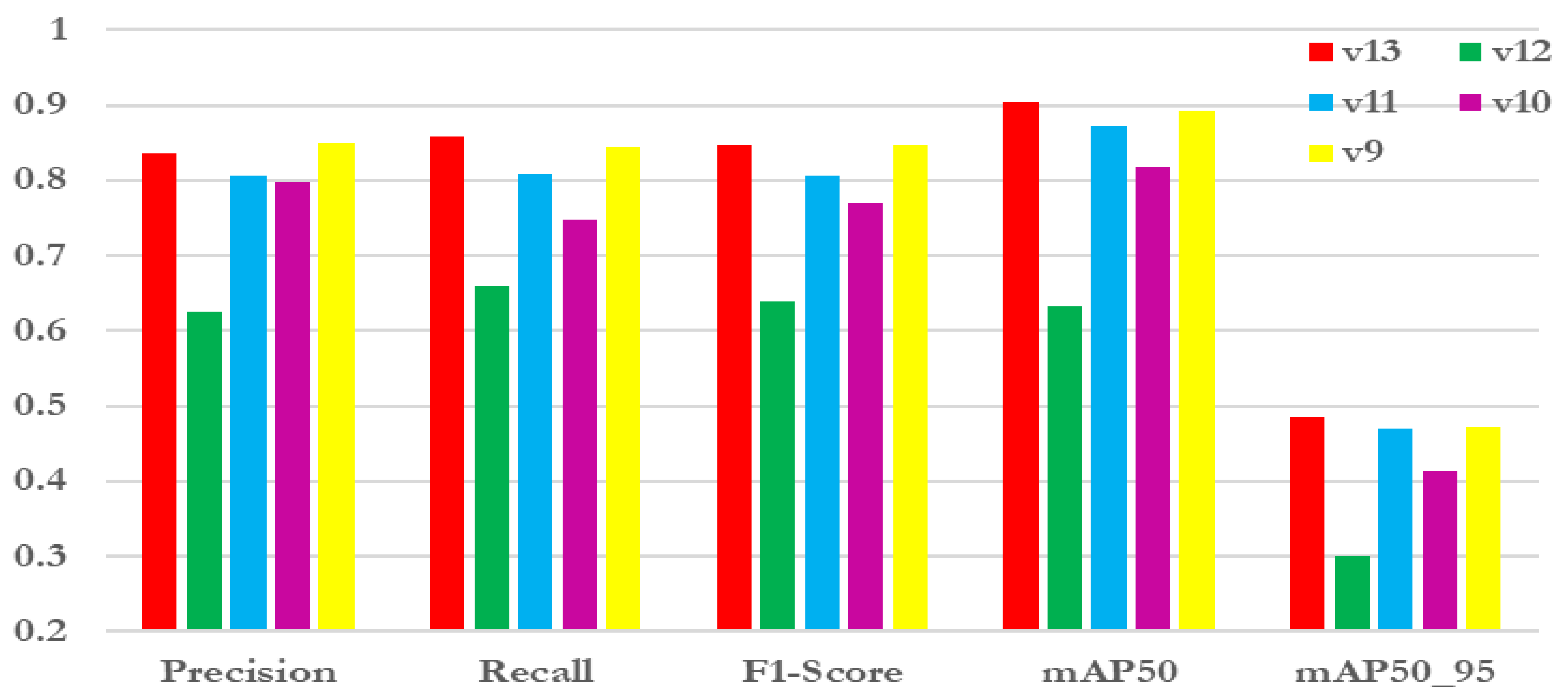

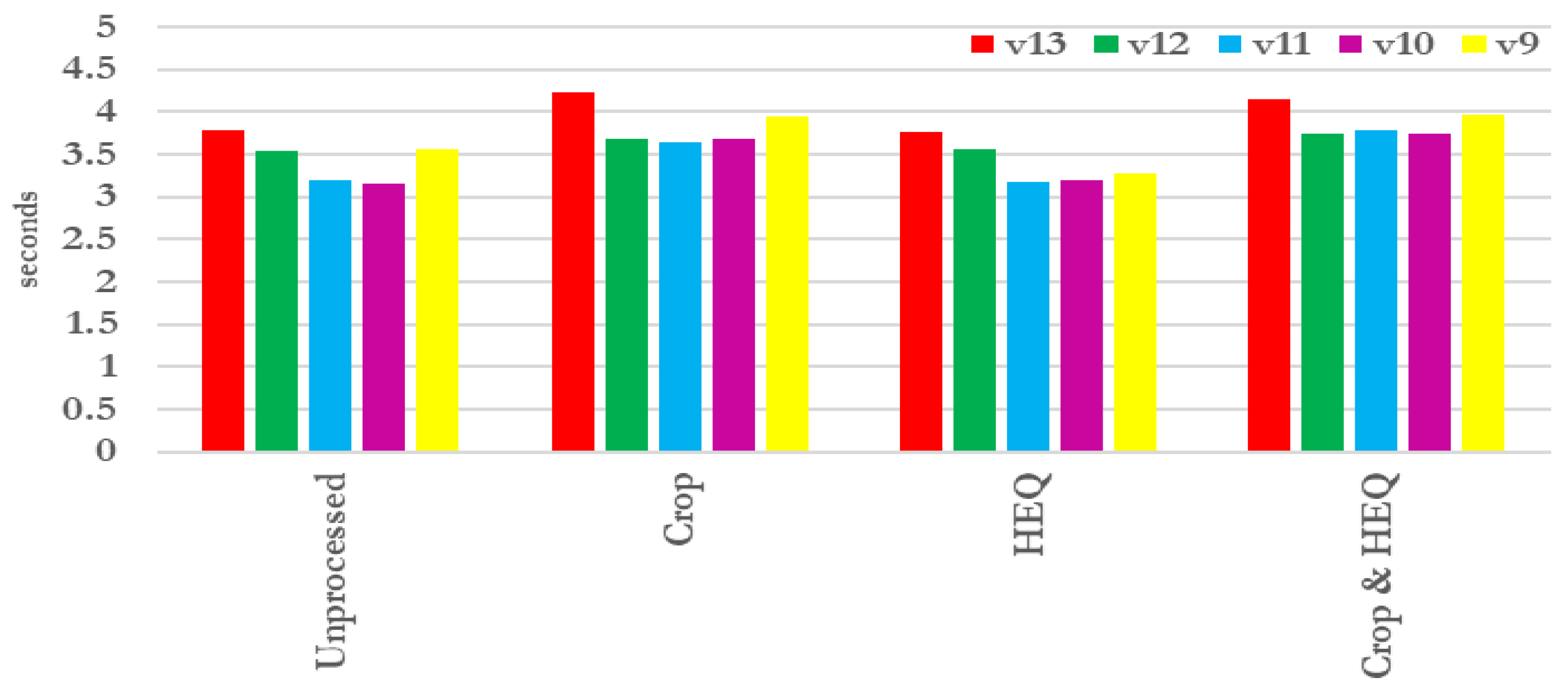

4.3. Observations on the Exhibited Results

4.4. Sensitivity Analysis

4.5. Discussion

4.5.1. Practical Deployment Considerations on Edge Devices

- Inference speed: Based on reported FLOPs and prior YOLO benchmarks on Jetson-class hardware, lightweight models such as YOLOv9-tiny and YOLOv10-nano achieve real-time throughput (15–20 FPS), whereas more recent variants like YOLOv11-nano and YOLOv13-nano achieve higher accuracy but at reduced speeds (8–12 FPS). This indicates a trade-off between responsiveness and detection precision.

- Accuracy vs. efficiency: YOLOv11-nano and YOLOv13-nano consistently deliver higher mAP scores in our experiments, but the computational overhead may limit their use in latency-critical scenarios. Conversely, YOLOv9-tiny and YOLOv10-nano provide faster inference, making them suitable for early-warning systems where speed is prioritized over marginal gains in accuracy.

- Memory footprint: Model size is another limiting factor. YOLOv9-tiny and YOLOv10-nano require less than 10 MB of storage and under 1 GB RAM for inference, while YOLOv11/YOLOv13 can exceed these requirements. On devices with only 2–4 GB RAM (e.g., Jetson Nano), careful model selection or compression techniques (quantization, pruning) may be required to ensure smooth operation.

- Deployment feasibility: For practical wildfire monitoring, the choice of model should therefore depend on deployment context: YOLOv9-tiny and YOLOv10-nano are best suited for low-power real-time detection on embedded devices, while YOLOv11-nano and YOLOv13-nano may be deployed where higher accuracy is critical and slightly higher latency can be tolerated.
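
The throughput figures above are derived from reported FLOPs and prior benchmarks; on an actual device, throughput could be measured directly. The following sketch times an arbitrary inference callable. The helper names are hypothetical, and the 15 FPS default threshold is simply the lower end of the real-time range quoted above.

```python
import time
from typing import Callable


def measure_fps(infer: Callable[[], object], warmup: int = 5, runs: int = 50) -> float:
    """Average frames per second of `infer` over `runs` timed calls."""
    for _ in range(warmup):
        infer()  # warm-up calls are excluded from the timing
    start = time.perf_counter()
    for _ in range(runs):
        infer()
    elapsed = time.perf_counter() - start
    return runs / elapsed if elapsed > 0 else float("inf")


def meets_realtime(fps: float, target_fps: float = 15.0) -> bool:
    """Assumed real-time criterion: at least `target_fps` frames per second."""
    return fps >= target_fps


if __name__ == "__main__":
    # Stand-in for a model call; replace with the detector's inference function.
    fps = measure_fps(lambda: time.sleep(0.01), warmup=2, runs=20)
    print(f"{fps:.1f} FPS, real-time: {meets_realtime(fps)}")
```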

4.5.2. Potentials and Limitations

- Dataset size: The dataset contained 737 annotated smoke images, which is suitable for benchmarking but may limit generalization to more diverse fire scenarios.

- Visual ambiguity of smoke: Due to its amorphous, semi-transparent nature, smoke can lead to false negatives, particularly in low-contrast scenes.

- Modality constraints: Our work focuses solely on RGB visual data. Multimodal approaches (thermal or infrared) may provide more reliable detection under certain conditions.

4.5.3. Lessons Learned

- Task-specific pre-processing is essential: Domain-specific pre-processing, such as Histogram Equalization and detection-aware Cropping, proved valuable when applied to our wildfire dataset.

- Histogram Equalization consistently improves model accuracy: Histogram Equalization increased the mAP and F1-scores across a wide range of YOLO variants. This suggests that sharpening edges by enhancing image contrast helps to detect faint or small objects in low-visibility scenes.

- Detection-aware Cropping enhances confidence and precision: Detection-aware Cropping increased the average detection confidence, particularly for the YOLOv9 to YOLOv11 versions.

- Pre-processing impact varies by model architecture: Newer architectures such as YOLOv13-nano benefited most from pre-processing, whereas some older ones such as YOLOv10-nano showed more variable effects, which suggests that architecture design interacts strongly with pre-processing.

- YOLOv13-nano with HEQ and Crop pre-processing is the most robust configuration: This configuration was the most effective across all major indicators (Precision, Recall, F1, mAP50, mAP50_95) and can therefore be regarded as a suitable candidate for high-performance wildfire detection pipelines.
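
For readers unfamiliar with Histogram Equalization (HEQ), the following dependency-free sketch shows the standard transform on an 8-bit grayscale image stored as rows of integers. How HEQ was applied to the colour images in this study is not described here, so the grayscale setting and the function name are assumptions of this example; in practice, a library routine such as OpenCV's `cv2.equalizeHist` would normally be used.

```python
from typing import List


def equalize_histogram(gray: List[List[int]]) -> List[List[int]]:
    """Histogram-equalize an 8-bit grayscale image (values in [0, 255])."""
    hist = [0] * 256
    for row in gray:
        for value in row:
            hist[value] += 1
    total = sum(hist)

    # Cumulative distribution of the intensities.
    cdf, running = [0] * 256, 0
    for level, count in enumerate(hist):
        running += count
        cdf[level] = running

    cdf_min = next((c for c in cdf if c > 0), 0)
    if total == 0 or total == cdf_min:
        return [row[:] for row in gray]  # empty or constant image: nothing to stretch

    # Map each level so that the occupied range spreads over [0, 255].
    lut = [max(0, round((c - cdf_min) / (total - cdf_min) * 255)) for c in cdf]
    return [[lut[value] for value in row] for row in gray]


if __name__ == "__main__":
    low_contrast = [[100, 101], [102, 103]]
    print(equalize_histogram(low_contrast))
```

The toy input occupies only four adjacent gray levels; after equalization they are spread across the full intensity range, which is the contrast gain the lesson above refers to.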

5. Conclusions

5.1. Concluding Remarks

5.2. Future Research

- Evaluation under adverse environmental conditions: The proposed pipeline should be tested under varying weather, illumination, and occlusion conditions to evaluate its generalizability in deployed environments.

- Expansion to multispectral and thermal data: Infrared or multispectral imagery can be incorporated to improve detection in low-visibility situations, such as at night or in dense smoke.

- Real-time deployment on edge devices: Hardware-level optimization and testing (e.g., on Jetson Nano or Coral TPU) remain to be carried out in order to demonstrate real-time inference with limited resources.

- Exploration of attention mechanisms and transformer-based variants: Newly proposed lightweight vision transformers and attention-augmented YOLO variants promise improved context awareness at a small overhead and merit evaluation.

- Adaptive pre-processing pipelines: Dynamic, data-driven, or learned pre-processing modules that adapt to the characteristics of the input imagery, as opposed to fixed strategies, could be investigated.

- Crowdsourced and drone-based imagery evaluation: UAV imagery and crowdsourced data could be included to diversify the testbed and to validate the models on heterogeneous datasets.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jocher, G. YOLO by Ultralytics. Available online: https://github.com/ultralytics/yolov5 (accessed on 17 March 2025).

- Ko, B.C.; Cheong, K.H.; Nam, J.Y. Fire detection based on vision sensor and support vector machines. Fire Saf. J. 2009, 44, 322–329.

- Bugarić, M.; Jakovčević, T.; Stipaničev, D. Computer vision based measurement of wildfire smoke dynamics. Adv. Electr. Comput. Eng. 2015, 15, 55–62.

- Muhammad, K.; Ahmad, J.; Baik, S.W. Early fire detection using convolutional neural networks during surveillance for effective disaster management. Neurocomputing 2018, 288, 30–42.

- Alkhammash, E.H. A Comparative Analysis of YOLOv9, YOLOv10, YOLOv11 for Smoke and Fire Detection. Fire 2025, 8, 26.

- Li, Y.; Nie, L.; Zhou, F.; Liu, Y.; Fu, H.; Chen, N.; Dai, Q.; Wang, L. Improving fire and smoke detection with You Only Look Once 11 and multi-scale convolutional attention. Fire 2025, 8, 165.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Wang, C.; Xu, C.; Akram, A.; Shan, Z.; Zhang, Q. Wildfire smoke detection with cross contrast patch embedding. arXiv 2023, arXiv:2311.10116.

- Pan, W.; Wang, X.; Huan, W. EFA-YOLO: An efficient feature attention model for fire and flame detection. arXiv 2024, arXiv:2409.12635.

- Dinavahi, G.A.; Addanki, U.K. Assessing the effectiveness of object detection models for early forest wildfire detection. In Proceedings of the 2024 5th International Conference on Data Intelligence and Cognitive Informatics (ICDICI), Tirunelveli, India, 18–20 November 2024; pp. 1451–1458.

- An, Y.; Tang, J.; Li, Y. A mobilenet ssdlite model with improved fpn for forest fire detection. In Proceedings of the Chinese Conference on Image and Graphics Technologies, Beijing, China, 23–24 April 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 267–276.

- Liau, H.; Yamini, N.; Wong, Y. Fire SSD: Wide fire modules based single shot detector on edge device. arXiv 2018, arXiv:1806.05363.

- Ahmed, H.; Jie, Z.; Usman, M. Lightweight Fire Detection System Using Hybrid Edge-Cloud Computing. In Proceedings of the 2021 IEEE 4th International Conference on Computer and Communication Engineering Technology (CCET), Beijing, China, 13–15 August 2021; pp. 153–157.

- Wang, J.; Yao, Y.; Huo, Y.; Guan, J. FM-Net: A New Method for Detecting Smoke and Flames. Sensors 2025, 25, 5597.

- Huang, J.; Yang, H.; Liu, Y.; Liu, H. A Forest Fire Smoke Monitoring System Based on a Lightweight Neural Network for Edge Devices. Forests 2024, 15, 1092.

- Vazquez, G.; Zhai, S.; Yang, M. Detecting wildfire flame and smoke through edge computing using transfer learning enhanced deep learning models. arXiv 2025, arXiv:2501.08639.

- Wang, Y.; Piao, Y.; Wang, H.; Zhang, H.; Li, B. An improved forest smoke detection model based on YOLOv8. Forests 2024, 15, 409.

- Li, C.; Zhu, B.; Chen, G.; Li, Q.; Xu, Z. Intelligent Monitoring of Tunnel Fire Smoke Based on Improved YOLOX and Edge Computing. Appl. Sci. 2025, 15, 2127.

- Wong, K.Y. YOLOv9-Tiny. 2024. GitHub Repository. Available online: https://github.com/WongKinYiu/yolov9 (accessed on 17 March 2025).

- Ultralytics Team. YOLOv10-Nano. 2024. Ultralytics Docs. Available online: https://docs.ultralytics.com/models/yolov10/#performance (accessed on 17 March 2025).

- Ultralytics Team. YOLOv11-Nano. 2024. Ultralytics Docs. Available online: https://docs.ultralytics.com/tasks/detect/#models (accessed on 17 March 2025).

- Tian, Y. YOLOv12: Attention-Centric Real-Time Object Detectors. 2025. GitHub Repository. Available online: https://github.com/sunsmarterjie/yolov12 (accessed on 17 March 2025).

- Lei, M.; Li, S.; Wu, Y.; Hu, H.; Zhou, Y.; Zheng, X.; Ding, G.; Du, S.; Wu, Z.; Gao, Y. YOLOv13: Real-Time Object Detection with Hypergraph-Enhanced Adaptive Visual Perception. arXiv 2025, arXiv:2506.17733.

- iMoonLab. yolov13n.pt [Model Weight File] (Release “YOLOv13 Model Weights”) [Data Set]. 2025. Available online: https://github.com/iMoonLab/yolov13/releases (accessed on 15 July 2025).

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696.

- Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. arXiv 2016, arXiv:1510.00149.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Refaeilzadeh, P.; Tang, L.; Liu, H. Cross-Validation. In Encyclopedia of Database Systems; Springer: Boston, MA, USA, 2009.

- Dwyer, B. Wildfire Smoke [Dataset]. 2020. Available online: https://universe.roboflow.com/brad-dwyer/wildfire-smoke (accessed on 15 July 2025).

- Smoke Detection YOLO Versions Comparison. Available online: https://github.com/ChristoSarantidis/Smoke_detection_YOLO_versions_comparison/ (accessed on 1 August 2025).

| Approach | Contribution | Strengths | Limitations |
|---|---|---|---|
| YOLO-based (YOLOv4–v13) [4,5,6,10,11,17,18,19] | Lightweight adaptations, attention modules, backbone refinements, benchmarking on wildfire datasets. | High accuracy, real-time detection, efficient on edge devices, scalable improvements across versions. | Limited evaluation of multi-step pre-processing, some sensitivity to small or occluded smoke regions. |
| Single-Shot MultiBox Detector (SSD) [7,8,13,14] | Early single-stage detector with backbone variants (SqueezeNet, MobileNet) and custom SSD derivatives. | Simple architecture, efficient multi-scale detection, adaptable to real-time edge applications. | Lower accuracy compared to modern YOLO variants; less effective on thin or distant smoke. |
| Transformer-based (CCPE, Swin) [9] | Cross-contrast patch embedding with transformer backbones. | Strong feature representation, robustness in complex backgrounds. | Computationally heavier, reduced feasibility for real-time deployment on edge devices. |
| Hybrid/Pyramid networks [12,15,16] | Feature pyramid refinements and lightweight smoke-specific designs. | Enhanced small-object detection, parameter reduction with competitive accuracy. | Gains often task-specific, limited generalization across benchmarks. |

| Hyperparameter | Justification |
|---|---|
| Epochs = 100 | Sufficient for stable convergence on small datasets; longer runs showed diminishing returns while increasing training time. |
| Image size = 640 | Default in YOLO literature; balances fine detail preservation with manageable computational cost. |
| Batch size = 39 | Optimized for available GPU memory (3 GPUs); maximizes throughput without memory overflow. |
| Device = [0, 1, 2] | Parallelized training across 3 GPUs for faster convergence and efficient resource use. |
| Fold_yaml | Enables five-fold cross-validation, reducing variance and improving generalization. |
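
The five-fold cross-validation referenced in the hyperparameter table can be sketched as follows. This is an illustrative split only: the shuffling seed, the round-robin assignment, the helper name `k_fold_splits`, and the file names are assumptions, and the 737-image count is the dataset size reported in the discussion of limitations.

```python
import random
from typing import List, Sequence, Tuple


def k_fold_splits(
    items: Sequence[str], k: int = 5, seed: int = 0
) -> List[Tuple[List[str], List[str]]]:
    """Return k (train, validation) splits; each item is validated exactly once."""
    if k < 2 or k > len(items):
        raise ValueError("k must be between 2 and the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed for reproducible folds
    folds = [shuffled[i::k] for i in range(k)]  # round-robin assignment
    splits = []
    for i in range(k):
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        splits.append((train, folds[i]))
    return splits


if __name__ == "__main__":
    images = [f"img_{i:04d}.jpg" for i in range(737)]  # hypothetical file names
    for fold, (train, val) in enumerate(k_fold_splits(images)):
        print(f"fold {fold}: {len(train)} train / {len(val)} val")
```

With 737 images and five folds, two folds hold 148 validation images and three hold 147.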

| Pre-Processing | Model | Precision | Recall | F1-score | mAP50 | mAP50_95 |
|---|---|---|---|---|---|---|
| Baseline | YOLOv9 | 0.0287 | 0.0805 | 0.0463 | 0.0615 | 0.0354 |
| Baseline | YOLOv10 | 0.0457 | 0.0579 | 0.0472 | 0.0628 | 0.0419 |
| Baseline | YOLOv11 | 0.0406 | 0.0693 | 0.0537 | 0.0671 | 0.0339 |
| Baseline | YOLOv12 | 0.0612 | 0.0713 | 0.0643 | 0.0558 | 0.0495 |
| Baseline | YOLOv13 | 0.0315 | 0.0413 | 0.0209 | 0.0292 | 0.0136 |
| HEQ | YOLOv9 | 0.0408 | 0.0502 | 0.0450 | 0.0400 | 0.0558 |
| HEQ | YOLOv10 | 0.0558 | 0.0595 | 0.0531 | 0.0482 | 0.0455 |
| HEQ | YOLOv11 | 0.0572 | 0.0601 | 0.0553 | 0.0452 | 0.0595 |
| HEQ | YOLOv12 | 0.1428 | 0.1019 | 0.1166 | 0.1430 | 0.1057 |
| HEQ | YOLOv13 | 0.0668 | 0.0489 | 0.0512 | 0.0489 | 0.0593 |
| Crop | YOLOv9 | 0.0254 | 0.0412 | 0.0302 | 0.0330 | 0.0266 |
| Crop | YOLOv10 | 0.0468 | 0.0570 | 0.0488 | 0.0510 | 0.0221 |
| Crop | YOLOv11 | 0.0373 | 0.0594 | 0.0429 | 0.0370 | 0.0363 |
| Crop | YOLOv12 | 0.1012 | 0.0728 | 0.0750 | 0.0783 | 0.0345 |
| Crop | YOLOv13 | 0.0487 | 0.0429 | 0.0313 | 0.0420 | 0.0388 |
| Crop + HEQ | YOLOv9 | 0.0097 | 0.0202 | 0.0132 | 0.0205 | 0.0206 |
| Crop + HEQ | YOLOv10 | 0.0520 | 0.0643 | 0.0552 | 0.0428 | 0.0212 |
| Crop + HEQ | YOLOv11 | 0.0451 | 0.0455 | 0.0362 | 0.0281 | 0.0355 |
| Crop + HEQ | YOLOv12 | 0.0817 | 0.0475 | 0.0316 | 0.0446 | 0.0297 |
| Crop + HEQ | YOLOv13 | 0.0660 | 0.0308 | 0.0356 | 0.0470 | 0.0454 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Polenakis, I.; Sarantidis, C.; Karydis, I.; Avlonitis, M. Smoke Detection on the Edge: A Comparative Study of YOLO Algorithm Variants. Signals 2025, 6, 60. https://doi.org/10.3390/signals6040060
