Why Partitioning Matters: Revealing Overestimated Performance in WiFi-CSI-Based Human Action Recognition

Abstract

1. Introduction

1.1. Contributions

- Critical analysis of an existing WiFi-CSI-based HAR method that applies image-based processing and deep learning. We show that the original data partitioning strategy used in that work introduces significant data leakage.

- Reimplementation of the original approach with a correct, subject-independent partitioning strategy and rigorous evaluation, demonstrating the actual generalization performance.

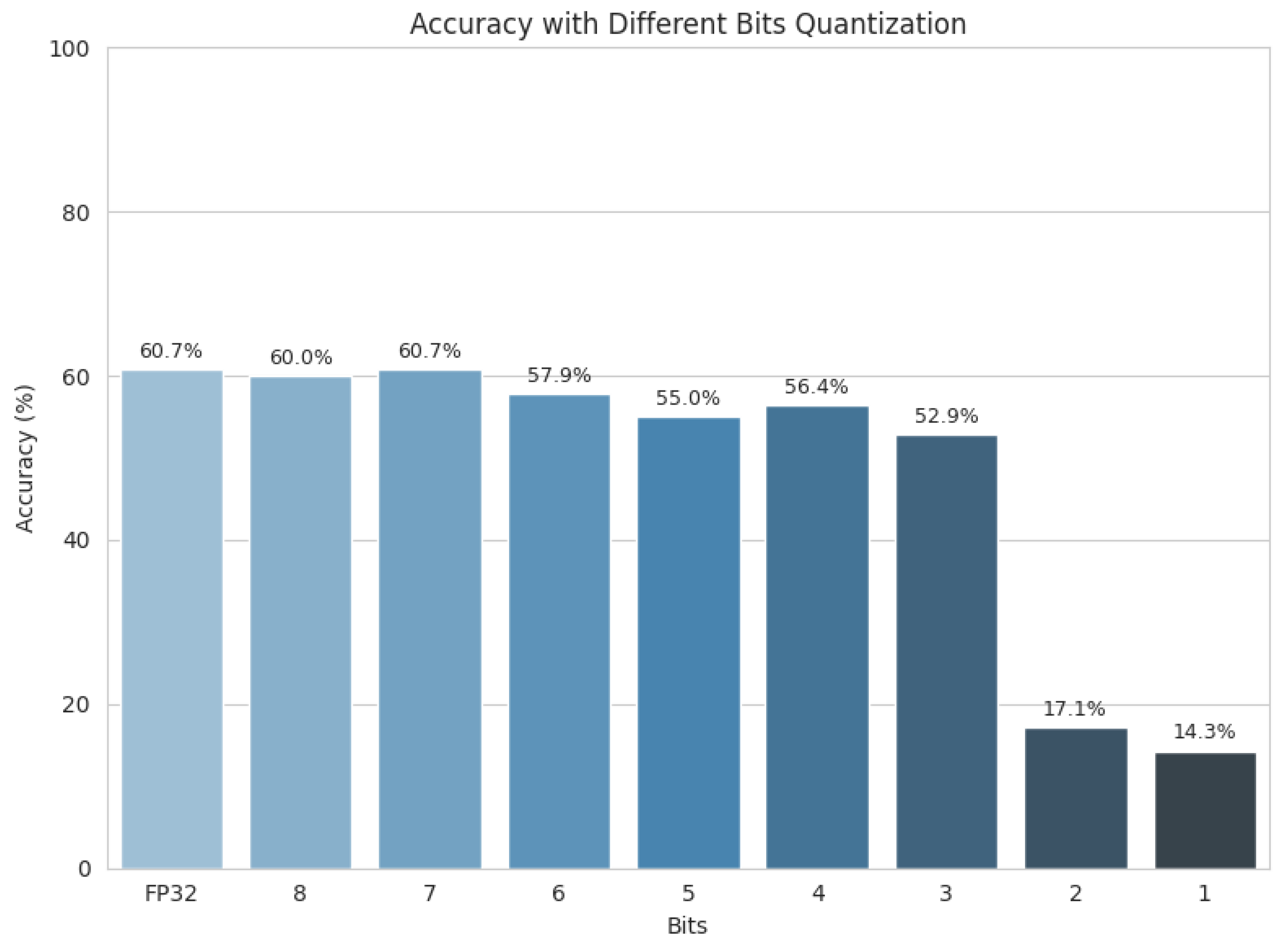

- Quantitative analysis of post-training quantization under both correct and incorrect data partitioning, showing that methodological flaws can mask substantial performance degradation. In our case, the F1-score was overestimated by about 32% when data from the same subjects appeared in both the training and test sets.

- Comprehensive discussion and guidelines for designing robust evaluation protocols in WiFi-CSI-based HAR, helping future studies avoid similar methodological pitfalls.
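The difference between a sample-level split and the subject-independent partitioning named in the contributions can be illustrated with a minimal sketch. The toy records, the field names (`subject`, `action`, `rep`), and both helper functions are hypothetical and are not taken from the evaluated implementation; the sketch only shows why shuffling individual samples lets the same person appear on both sides of the split.

```python
import random
from collections import defaultdict


def random_split(samples, test_fraction=0.2, seed=0):
    """Shuffle individual samples; one subject can land in both sets (leaky)."""
    rng = random.Random(seed)
    shuffled = samples[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def subject_independent_split(samples, test_fraction=0.2, seed=0):
    """Assign whole subjects to either the training or the test set, never both."""
    by_subject = defaultdict(list)
    for sample in samples:
        by_subject[sample["subject"]].append(sample)
    subjects = sorted(by_subject)
    random.Random(seed).shuffle(subjects)
    n_test = max(1, round(len(subjects) * test_fraction))
    test_subjects, train_subjects = subjects[:n_test], subjects[n_test:]
    train = [s for subj in train_subjects for s in by_subject[subj]]
    test = [s for subj in test_subjects for s in by_subject[subj]]
    return train, test


def shared_subjects(train, test):
    """Return the subjects that occur in both sets; non-empty means leakage."""
    return {s["subject"] for s in train} & {s["subject"] for s in test}


# Toy data (assumption): 5 subjects, 3 actions, 4 repetitions each.
samples = [
    {"subject": subj, "action": act, "rep": rep}
    for subj in range(5)
    for act in ("walk", "sit", "fall")
    for rep in range(4)
]

leaky_train, leaky_test = random_split(samples)
clean_train, clean_test = subject_independent_split(samples)
print("shared subjects, sample-level split:", shared_subjects(leaky_train, leaky_test))
print("shared subjects, subject-level split:", shared_subjects(clean_train, clean_test))
```

Checking `shared_subjects` before training is a cheap guard that can be added to any evaluation pipeline.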

1.2. Structure of the Paper

2. Related Work

2.1. WiFi-CSI-Based HAR

2.2. Data Leakage in Machine Learning Research

3. Preliminaries

3.1. WiFi Channel State Information

3.2. Canny Edge Detection

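- Noise reduction. The input image $I$ is smoothed using a Gaussian filter to suppress noise: $I_s = G_\sigma * I$, where $*$ denotes the convolution and $G_\sigma(x, y) = \frac{1}{2\pi\sigma^2}\exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right)$, with $\sigma$ being the standard deviation of the Gaussian kernel.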

- Gradient calculation. The gradients in the x and y directions, $G_x$ and $G_y$, are computed, typically using Sobel operators. The gradient magnitude $M$ and direction $\theta$ at each pixel are then calculated as $M = \sqrt{G_x^2 + G_y^2}$ and $\theta = \arctan\left(G_y / G_x\right)$.

- Non-maximum suppression. The algorithm suppresses all gradient magnitudes that are not local maxima along the gradient direction, resulting in thin edges.

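- Double thresholding. Each remaining pixel is classified by its gradient magnitude $M$: it is a strong edge if $M \geq T_H$, a weak edge if $T_L \leq M < T_H$, and suppressed otherwise, where $T_H$ and $T_L$ are the high and low thresholds, respectively.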

- Edge tracking by hysteresis. Weak edges are retained only if they are connected to strong edges, ensuring edge continuity and discarding isolated responses.
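The last two steps of the list above, double thresholding and edge tracking by hysteresis, can be sketched in plain Python. This is a minimal illustration that assumes the gradient magnitudes have already been computed and thinned. The function names, the 8-neighbour connectivity, the toy magnitude map, and the threshold values are assumptions chosen for the example, not settings from the evaluated method.

```python
from collections import deque


def double_threshold(magnitude, t_low, t_high):
    """Label each pixel: 2 = strong edge, 1 = weak edge, 0 = suppressed."""
    return [
        [2 if m >= t_high else 1 if m >= t_low else 0 for m in row]
        for row in magnitude
    ]


def hysteresis(labels):
    """Keep weak pixels only if they are 8-connected to a strong pixel."""
    rows, cols = len(labels), len(labels[0])
    edges = [[labels[r][c] == 2 for c in range(cols)] for r in range(rows)]
    # Breadth-first search that starts from every strong pixel.
    queue = deque((r, c) for r in range(rows) for c in range(cols) if edges[r][c])
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < rows and 0 <= nc < cols
                if inside and not edges[nr][nc] and labels[nr][nc] == 1:
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges


# Toy gradient-magnitude map (assumption): one strong pixel with a weak
# neighbour, plus one isolated weak pixel in the bottom-left corner.
magnitude = [
    [0.1, 0.5, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1],
    [0.5, 0.1, 0.1, 0.1],
]
labels = double_threshold(magnitude, t_low=0.4, t_high=0.8)
edges = hysteresis(labels)
for row in edges:
    print(["#" if e else "." for e in row])
```

In this toy map the weak pixel next to the strong one survives, while the isolated weak pixel is discarded.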

3.3. Post-Training Quantization

- Weights only (static quantization): All model parameters are quantized, while activations remain in floating point.

- Weights and activations (full quantization): Both parameters and intermediate values are quantized, which further reduces latency and memory requirements.
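As a rough illustration of what quantizing a tensor involves, the sketch below maps floating-point values to 8-bit integers with a per-tensor affine scheme and maps them back. The affine scheme, the 8-bit width, and all function names are assumptions made for this example; the source does not state which quantization scheme or toolkit was used.

```python
def quantization_params(values, num_bits=8):
    """Return (scale, zero_point, qmin, qmax) for per-tensor affine quantization."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # The covered range must contain 0.0 so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero tensor
    zero_point = round(qmin - lo / scale)
    return scale, zero_point, qmin, qmax


def quantize(values, scale, zero_point, qmin, qmax):
    """Map floats to integers in [qmin, qmax], clipping out-of-range values."""
    return [min(qmax, max(qmin, round(v / scale) + zero_point)) for v in values]


def dequantize(quantized, scale, zero_point):
    """Map integers back to approximate floating-point values."""
    return [scale * (q - zero_point) for q in quantized]


# Toy weight tensor (assumption); for activations the range would instead be
# estimated from calibration inputs.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
scale, zero_point, qmin, qmax = quantization_params(weights)
q_weights = quantize(weights, scale, zero_point, qmin, qmax)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("scale:", scale, "zero point:", zero_point)
print("quantized:", q_weights)
print("max reconstruction error:", max_error)
```

The reconstruction error is bounded by roughly one quantization step, which is the source of the accuracy loss that post-training quantization can introduce.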

4. Materials and Methods

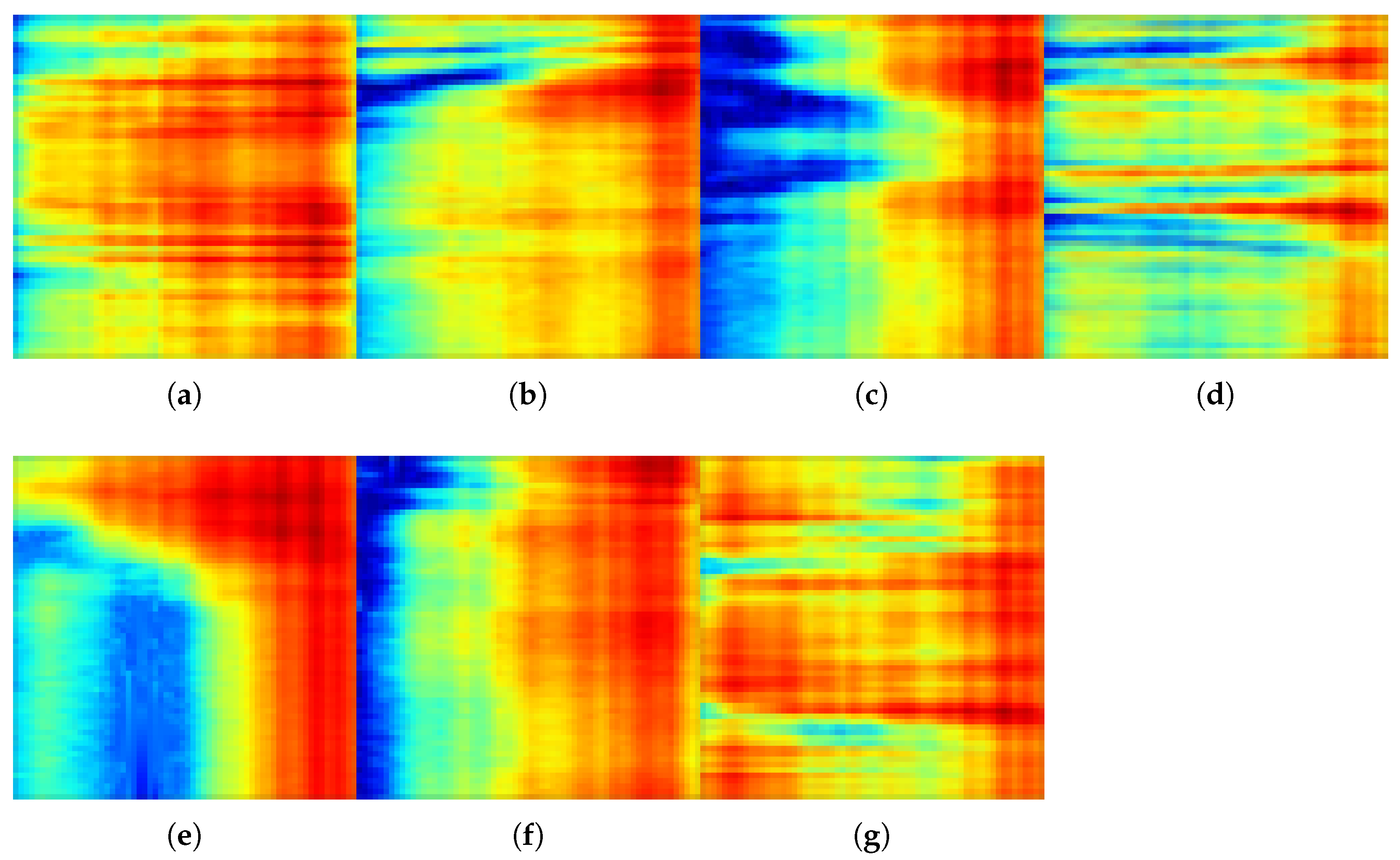

4.1. Applied Database

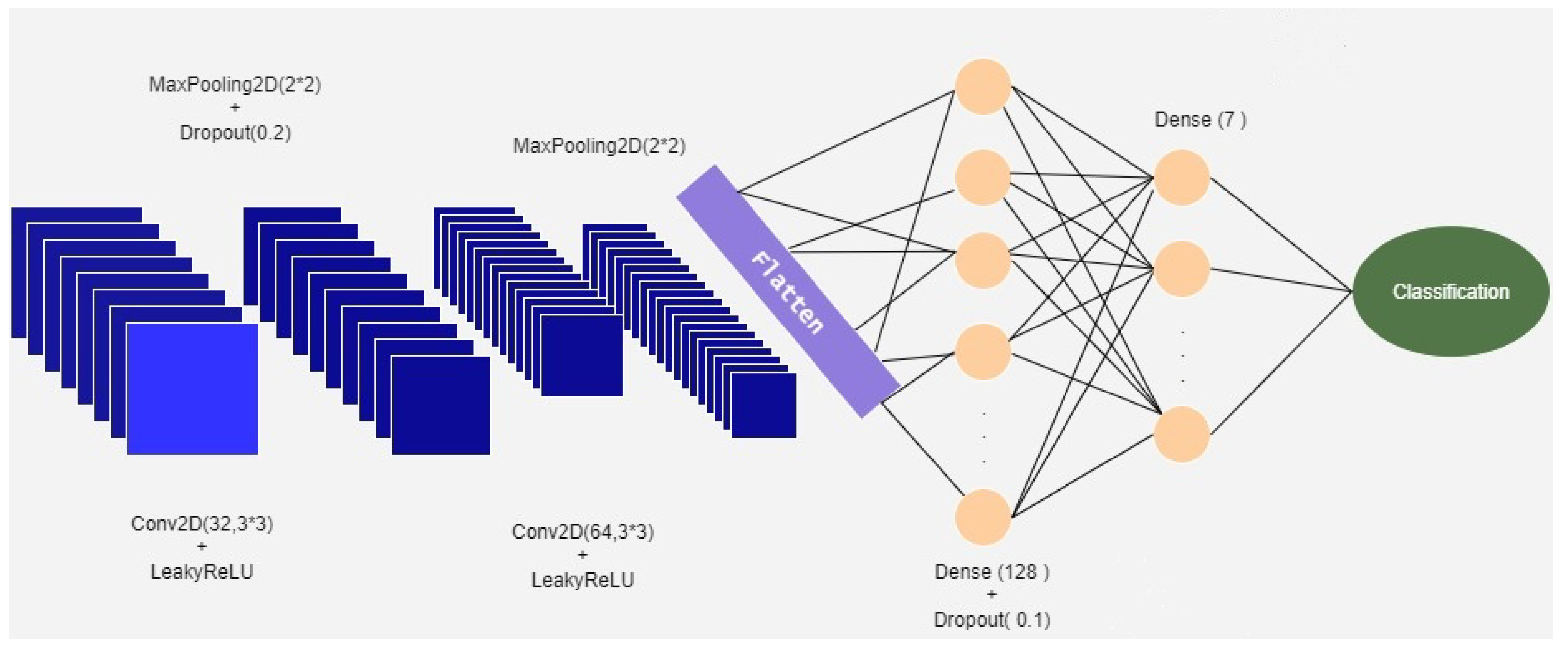

4.2. Proposed Method

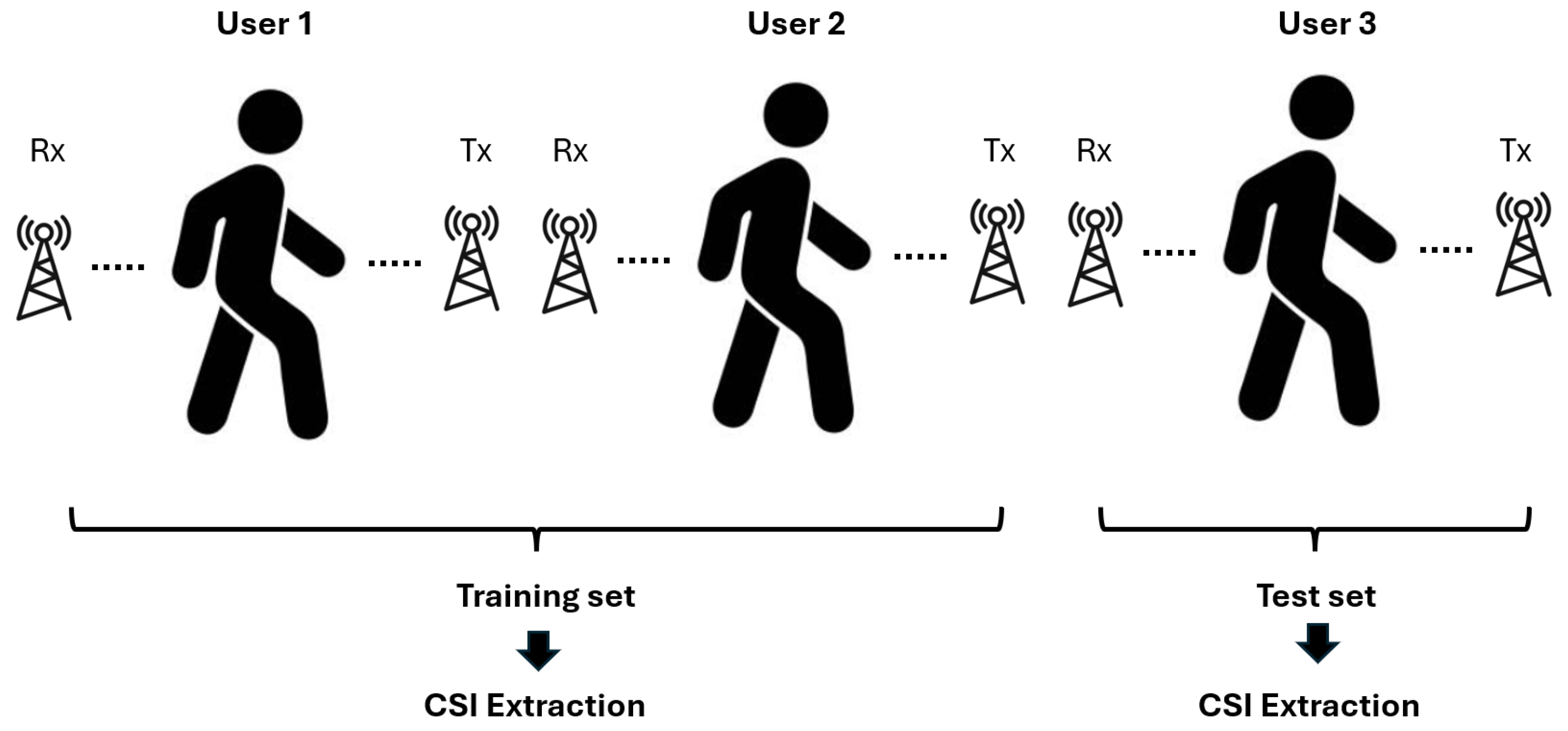

4.3. Detected Data Leakage

5. Experimental Results and Analysis

5.1. Evaluation Metrics

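- Accuracy is the ratio of correctly classified instances to the total number of instances: $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$

- Precision is the proportion of correctly predicted positive samples among all samples predicted as positive: $\mathrm{Precision} = \frac{TP}{TP + FP}$

- Recall is the proportion of correctly predicted positive samples among all actual positive samples: $\mathrm{Recall} = \frac{TP}{TP + FN}$

- F1-score is the harmonic mean of precision and recall: $F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$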

Since the dataset used in this study is class-balanced, macro-averaged values of the above metrics provide a fair and reliable measure of classification performance. Nevertheless, it is still important to report precision, recall, and F1-score in addition to accuracy, as these metrics capture complementary aspects of performance and highlight the trade-offs between different types of classification errors.
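The macro-averaged metrics can be computed from a multi-class confusion matrix, as in the following minimal sketch. The toy labels and predictions are invented for illustration. Macro F1 is taken here as the mean of the per-class F1-scores, which is one common convention and an assumption about how the averaging is done.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def macro_metrics(matrix):
    """Return accuracy and macro-averaged precision, recall, and F1-score."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    accuracy = sum(matrix[k][k] for k in range(n)) / total
    precisions, recalls, f1_scores = [], [], []
    for k in range(n):
        tp = matrix[k][k]
        fp = sum(matrix[i][k] for i in range(n)) - tp  # predicted k, truly other
        fn = sum(matrix[k]) - tp                       # truly k, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }


# Toy example (assumption): three action classes, two samples each.
labels = ["walk", "sit", "fall"]
y_true = ["walk", "walk", "sit", "sit", "fall", "fall"]
y_pred = ["walk", "sit", "sit", "sit", "fall", "walk"]
metrics = macro_metrics(confusion_matrix(y_true, y_pred, labels))
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In the toy example accuracy and macro recall coincide because the classes are balanced, while macro precision and macro F1 differ from them.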

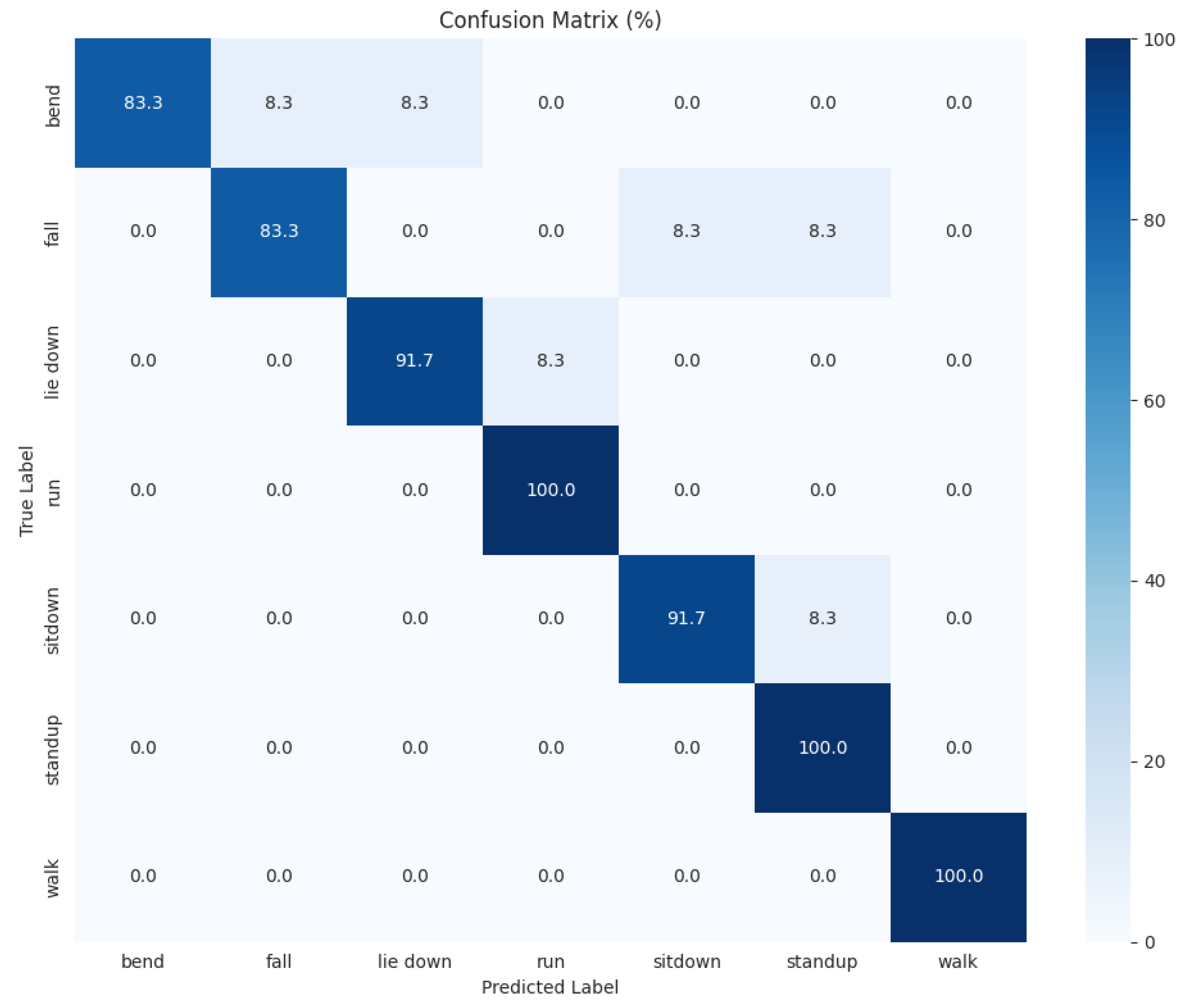

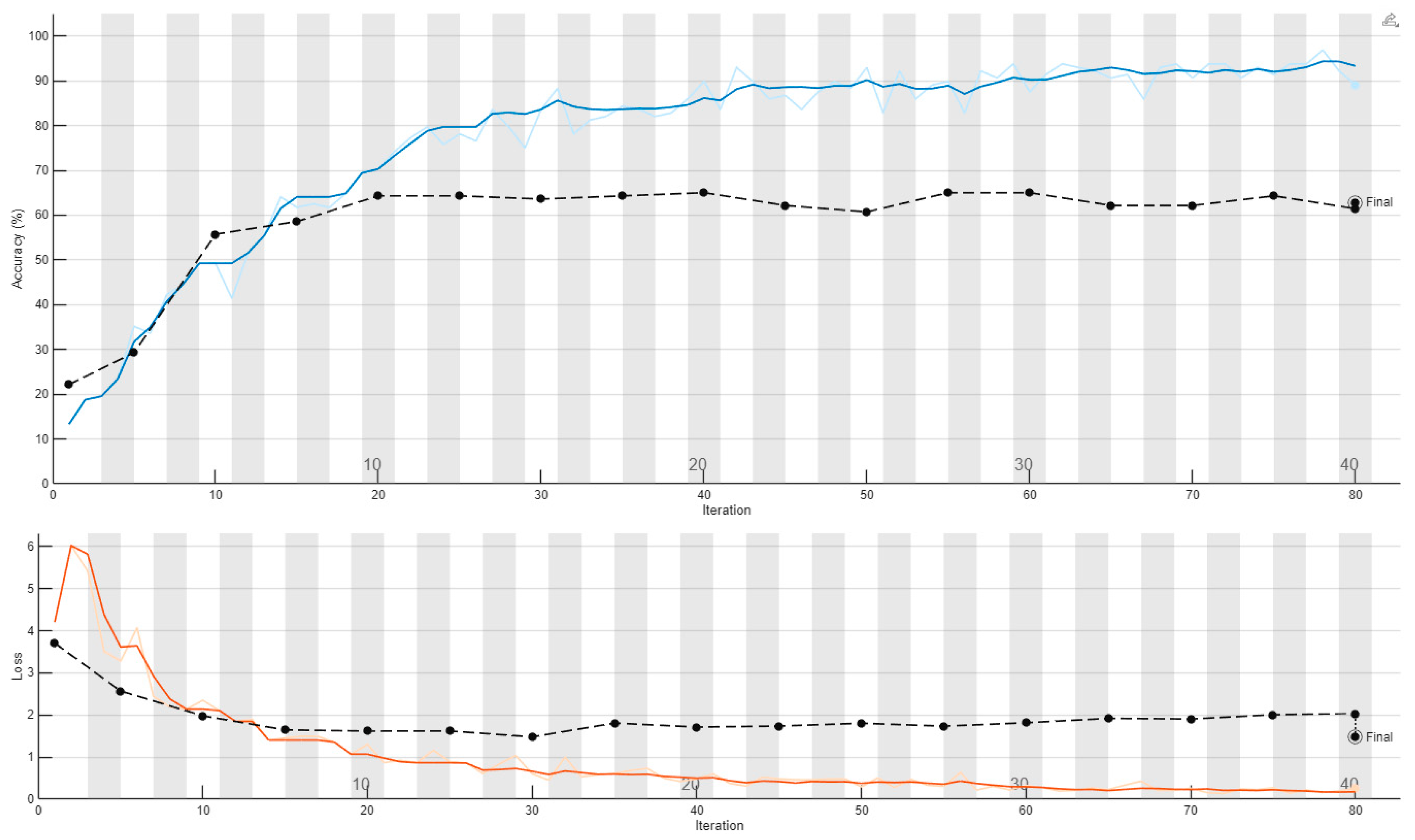

5.2. Numerical Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CPU | central processing unit |
| CSI | channel state information |
| FN | false negative |
| FP | false positive |
| GPU | graphics processing unit |
| HAR | human action recognition |
| LSTM | long short-term memory |
| MIMO | multiple-input multiple-output |
| OFDM | orthogonal frequency division multiplexing |
| PCA | principal component analysis |
| PTQ | post-training quantization |
| ReLU | rectified linear unit |
| SVM | support vector machine |
| TN | true negative |
| TP | true positive |

References

- Lei, Z.; Rong, B.; Jiahao, C.; Yonghong, Z. Smart City Healthcare: Non-Contact Human Respiratory Monitoring with WiFi-CSI. IEEE Trans. Consum. Electron. 2024, 70, 5960–5968.

- Jiang, H.; Cai, C.; Ma, X.; Yang, Y.; Liu, J. Smart home based on WiFi sensing: A survey. IEEE Access 2018, 6, 13317–13325.

- Zhang, R.; Jiang, C.; Wu, S.; Zhou, Q.; Jing, X.; Mu, J. Wi-Fi sensing for joint gesture recognition and human identification from few samples in human-computer interaction. IEEE J. Sel. Areas Commun. 2022, 40, 2193–2205.

- Shahverdi, H.; Moshiri, P.F.; Nabati, M.; Asvadi, R.; Ghorashi, S.A. A csi-based human activity recognition using canny edge detector. In Human Activity and Behavior Analysis; CRC Press: Boca Raton, FL, USA, 2024; pp. 67–82.

- Miao, F.; Huang, Y.; Lu, Z.; Ohtsuki, T.; Gui, G.; Sari, H. Wi-Fi sensing techniques for human activity recognition: Brief survey, potential challenges, and research directions. ACM Comput. Surv. 2025, 57, 1–30.

- Wang, Y.; Wu, K.; Ni, L.M. Wifall: Device-free fall detection by wireless networks. IEEE Trans. Mob. Comput. 2016, 16, 581–594.

- Kecman, V. Support vector machines–an introduction. In Support Vector Machines: Theory and Applications; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1–47.

- Al-qaness, M.A. Device-free human micro-activity recognition method using WiFi signals. Geo. Spat. Inf. Sci. 2019, 22, 128–137.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Wang, Y.; Liu, J.; Chen, Y.; Gruteser, M.; Yang, J.; Liu, H. E-eyes: Device-free location-oriented activity identification using fine-grained wifi signatures. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, Maui, HI, USA, 7–11 September 2014; pp. 617–628.

- Chen, C.; Shu, Y.; Shu, K.I.; Zhang, H. WiTT: Modeling and the evaluation of table tennis actions based on WIFI signals. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 3100–3107.

- Yousefi, S.; Narui, H.; Dayal, S.; Ermon, S.; Valaee, S. A survey on behavior recognition using WiFi channel state information. IEEE Commun. Mag. 2017, 55, 98–104.

- Schmidhuber, J.; Hochreiter, S. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chen, Z.; Zhang, L.; Jiang, C.; Cao, Z.; Cui, W. WiFi CSI based passive human activity recognition using attention based BLSTM. IEEE Trans. Mob. Comput. 2018, 18, 2714–2724.

- Schäfer, J.; Barrsiwal, B.R.; Kokhkharova, M.; Adil, H.; Liebehenschel, J. Human activity recognition using CSI information with nexmon. Appl. Sci. 2021, 11, 8860.

- Shen, X.; Ni, Z.; Liu, L.; Yang, J.; Ahmed, K. WiPass: 1D-CNN-based smartphone keystroke recognition using WiFi signals. Pervasive Mob. Comput. 2021, 73, 101393.

- Zhang, C.; Jiao, W. Imgfi: A high accuracy and lightweight human activity recognition framework using csi image. IEEE Sens. J. 2023, 23, 21966–21977.

- Xu, Z.; Lin, H. Quantum-Enhanced Forecasting: Leveraging Quantum Gramian Angular Field and CNNs for Stock Return Predictions. arXiv 2023, arXiv:2310.07427.

- Moshiri, P.F.; Shahbazian, R.; Nabati, M.; Ghorashi, S.A. A CSI-based human activity recognition using deep learning. Sensors 2021, 21, 7225.

- Jawad, S.K.; Alaziz, M. Human Activity and Gesture Recognition Based on WiFi Using Deep Convolutional Neural Networks. Iraqi J. Electr. Electron. Eng. 2022, 18, 110–116.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Sun, Z.; Ke, Q.; Rahmani, H.; Bennamoun, M.; Wang, G.; Liu, J. Human action recognition from various data modalities: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3200–3225.

- Kong, Y.; Fu, Y. Human action recognition and prediction: A survey. Int. J. Comput. Vis. 2022, 130, 1366–1401.

- Chen, K.; Zhang, D.; Yao, L.; Guo, B.; Yu, Z.; Liu, Y. Deep learning for sensor-based human activity recognition: Overview, challenges, and opportunities. ACM Comput. Surv. (CSUR) 2021, 54, 1–40.

- Vrigkas, M.; Nikou, C.; Kakadiaris, I.A. A review of human activity recognition methods. Front. Robot. AI 2015, 2, 28.

- Wang, Z.; Jiang, K.; Hou, Y.; Dou, W.; Zhang, C.; Huang, Z.; Guo, Y. A survey on human behavior recognition using channel state information. IEEE Access 2019, 7, 155986–156024.

- Liu, J.; Liu, H.; Chen, Y.; Wang, Y.; Wang, C. Wireless sensing for human activity: A survey. IEEE Commun. Surv. Tutor. 2019, 22, 1629–1645.

- Apicella, A.; Isgrò, F.; Prevete, R. Don’t push the button! exploring data leakage risks in machine learning and transfer learning. arXiv 2024, arXiv:2401.13796.

- Domnik, J.; Holland, A. On Data Leakage Prevention and Machine Learning. In Proceedings of the 35th Bled eConference Digital Restructuring and Human (Re) Action, Bled, Slovenia, 26–29 June 2022; p. 695.

- Kaufman, S.; Rosset, S.; Perlich, C.; Stitelman, O. Leakage in data mining: Formulation, detection, and avoidance. ACM Trans. Knowl. Discov. Data (TKDD) 2012, 6, 1–21.

- Kapoor, S.; Narayanan, A. Leakage and the reproducibility crisis in machine-learning-based science. Patterns 2023, 4, 100804.

- Ma, Y.; Zhou, G.; Wang, S. WiFi sensing with channel state information: A survey. ACM Comput. Surv. (CSUR) 2019, 52, 1–36.

- Caire, G.; Shamai, S. On the capacity of some channels with channel state information. IEEE Trans. Inf. Theory 2002, 45, 2007–2019.

- Guo, J.; Ho, I.W.H. CSI-based efficient self-quarantine monitoring system using branchy convolution neural network. In Proceedings of the 2022 IEEE 8th World Forum on Internet of Things (WF-IoT), Yokohama, Japan, 26 October–11 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Sun, R.; Lei, T.; Chen, Q.; Wang, Z.; Du, X.; Zhao, W.; Nandi, A.K. Survey of image edge detection. Front. Signal Process. 2022, 2, 826967.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

- Nagel, M.; Fournarakis, M.; Amjad, R.A.; Bondarenko, Y.; Van Baalen, M.; Blankevoort, T. A white paper on neural network quantization. arXiv 2021, arXiv:2106.08295.

- Gringoli, F.; Schulz, M.; Link, J.; Hollick, M. Free your CSI: A channel state information extraction platform for modern Wi-Fi chipsets. In Proceedings of the 13th International Workshop on Wireless Network Testbeds, Experimental Evaluation & Characterization, Los Cabos, Mexico, 25 October 2019; pp. 21–28.

- Majumdar, N.; Banerjee, S. MATLAB Graphics and Data Visualization Cookbook; PACKT Publishing: Birmingham, UK, 2012.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. arXiv 2019, arXiv:1912.01703.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Çalik, R.C.; Demirci, M.F. Cifar-10 image classification with convolutional neural networks for embedded systems. In Proceedings of the 2018 IEEE/ACS 15th International Conference on Computer Systems and Applications (AICCSA), Aqaba, Jordan, 28 October–1 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–2.

- Aslam, S.; Nassif, A.B. Deep learning based CIFAR-10 classification. In Proceedings of the 2023 Advances in Science and Engineering Technology International Conferences (ASET), Dubai, United Arab Emirates, 20–23 February 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–4.

- Saupe, D.; Hahn, F.; Hosu, V.; Zingman, I.; Rana, M.; Li, S. Crowd workers proven useful: A comparative study of subjective video quality assessment. In Proceedings of the QoMEX 2016: 8th International Conference on Quality of Multimedia Experience, Lisbon, Portugal, 6–8 June 2016.

| Parameter | Value |
|---|---|
| Loss function | Cross-entropy |
| Optimizer | Adam [43] (, , ) |
| Learning rate | 0.001 |
| Weight decay | 0.0 |
| Batch size | 128 |
| Number of epochs | 40 |

| Parameter | Value |
|---|---|
| Computer model | STRIX Z270H Gaming |
| Operating system | Windows 10 |
| CPU | Intel(R) Core(TM) i7-7700K CPU 4.20 GHz (4 cores, 8 threads) |
| Memory | 15 GB |
| GPU | NVIDIA GeForce GTX 1080 |

| Model | Accuracy | F1-Score |
|---|---|---|
| Reported in [4] | 0.92 | 0.92 |
| Retrained, split without regard to subjects (w/o.r.t. humans) | 0.929 | 0.928 |
| Retrained, split with respect to subjects (w.r.t. humans) | 0.607 | 0.604 |
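The size of the overestimation can be read directly from the two retrained rows of the table above, taking "w/o.r.t. humans" to mean a split made without regard to subjects and "w.r.t. humans" to mean a subject-independent split (this reading is an assumption based on the contributions). The arithmetic below uses only the tabulated values; the symbols $\Delta F_1$ and $\Delta \mathrm{Acc}$ are introduced here for illustration.

```latex
\begin{align*}
\Delta F_1 &= 0.928 - 0.604 = 0.324
  && \text{(about 32 percentage points)} \\
\Delta \mathrm{Acc} &= 0.929 - 0.607 = 0.322 \\
\frac{\Delta F_1}{0.604} &\approx 0.536
  && \text{(relative to the subject-independent F1-score)}
\end{align*}
```

Expressed relative to the subject-independent result, the leaky split inflates the F1-score by roughly half, so the two ways of stating the gap should not be confused.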

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Varga, D.; Cao, A.Q. Why Partitioning Matters: Revealing Overestimated Performance in WiFi-CSI-Based Human Action Recognition. Signals 2025, 6, 59. https://doi.org/10.3390/signals6040059
