Design and Implementation of a Musical System for the Development of Creative Activities Through Electroacoustics in Educational Contexts

Abstract

1. Introduction

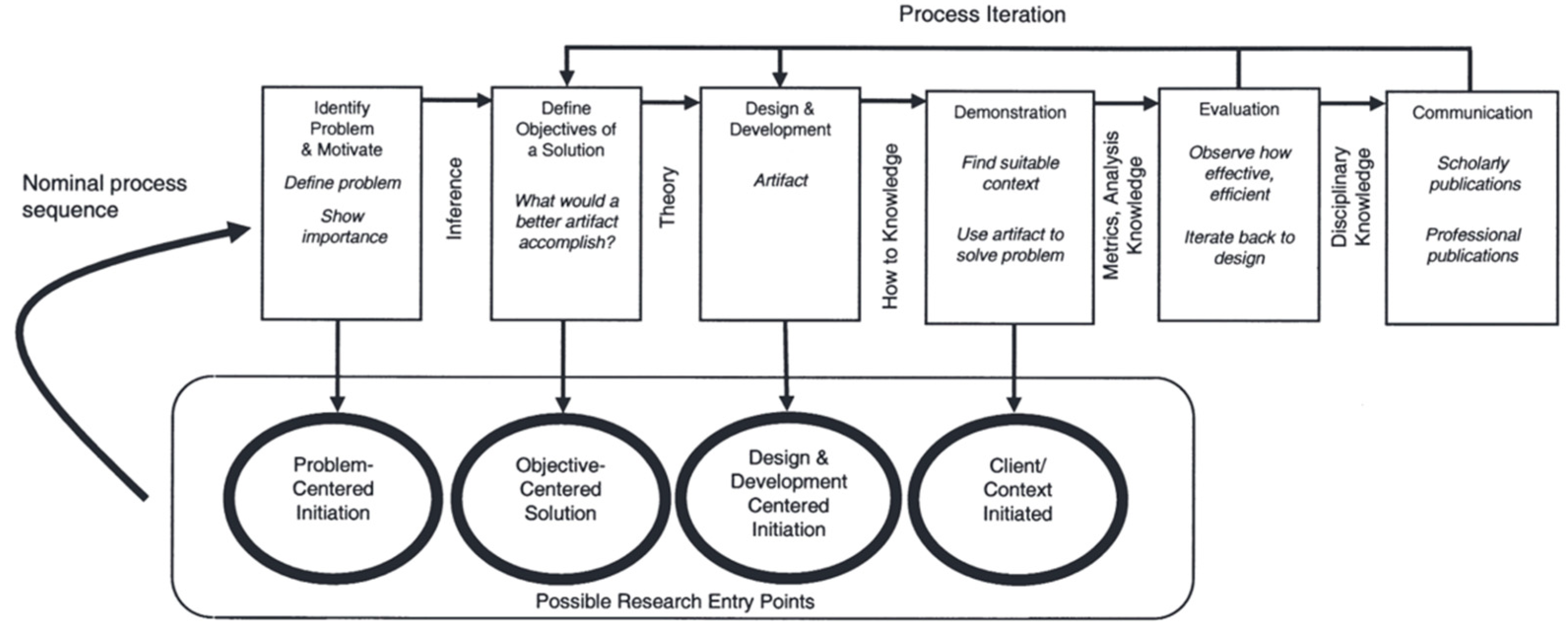

2. Method

2.1. Background

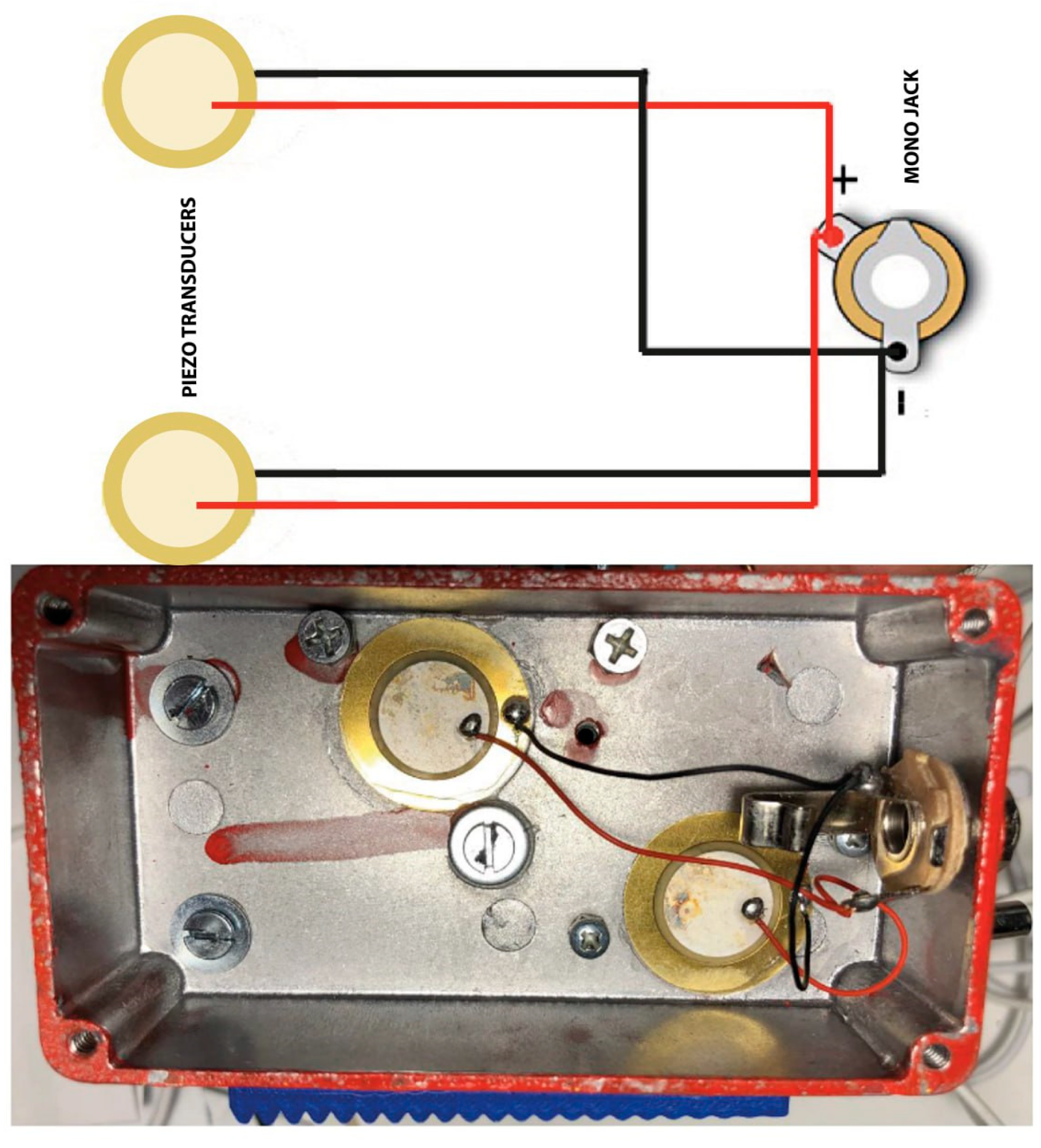

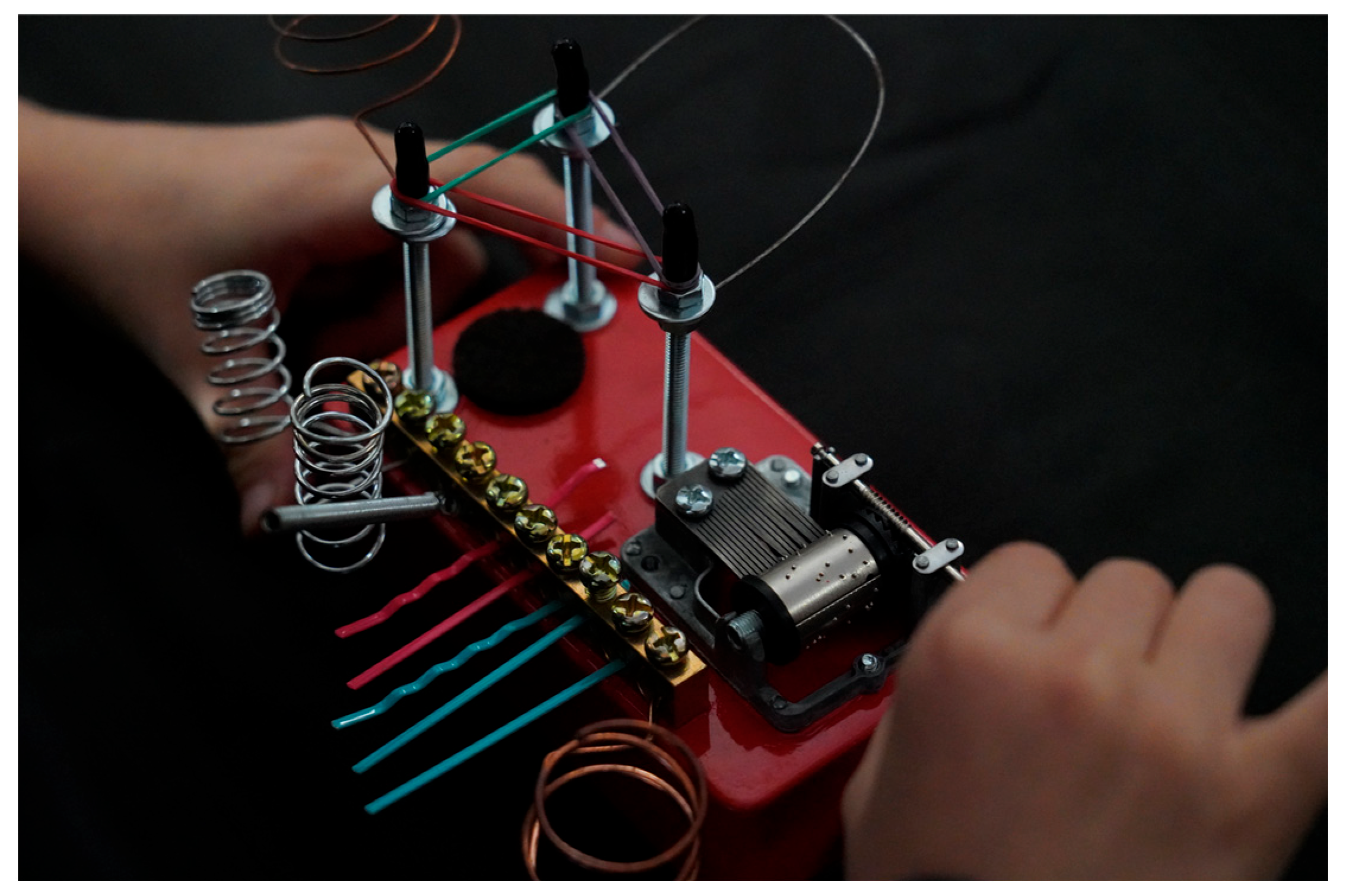

2.1.1. Hardware Description: Play Box

2.1.2. Play Box Design Elements (Hardware)

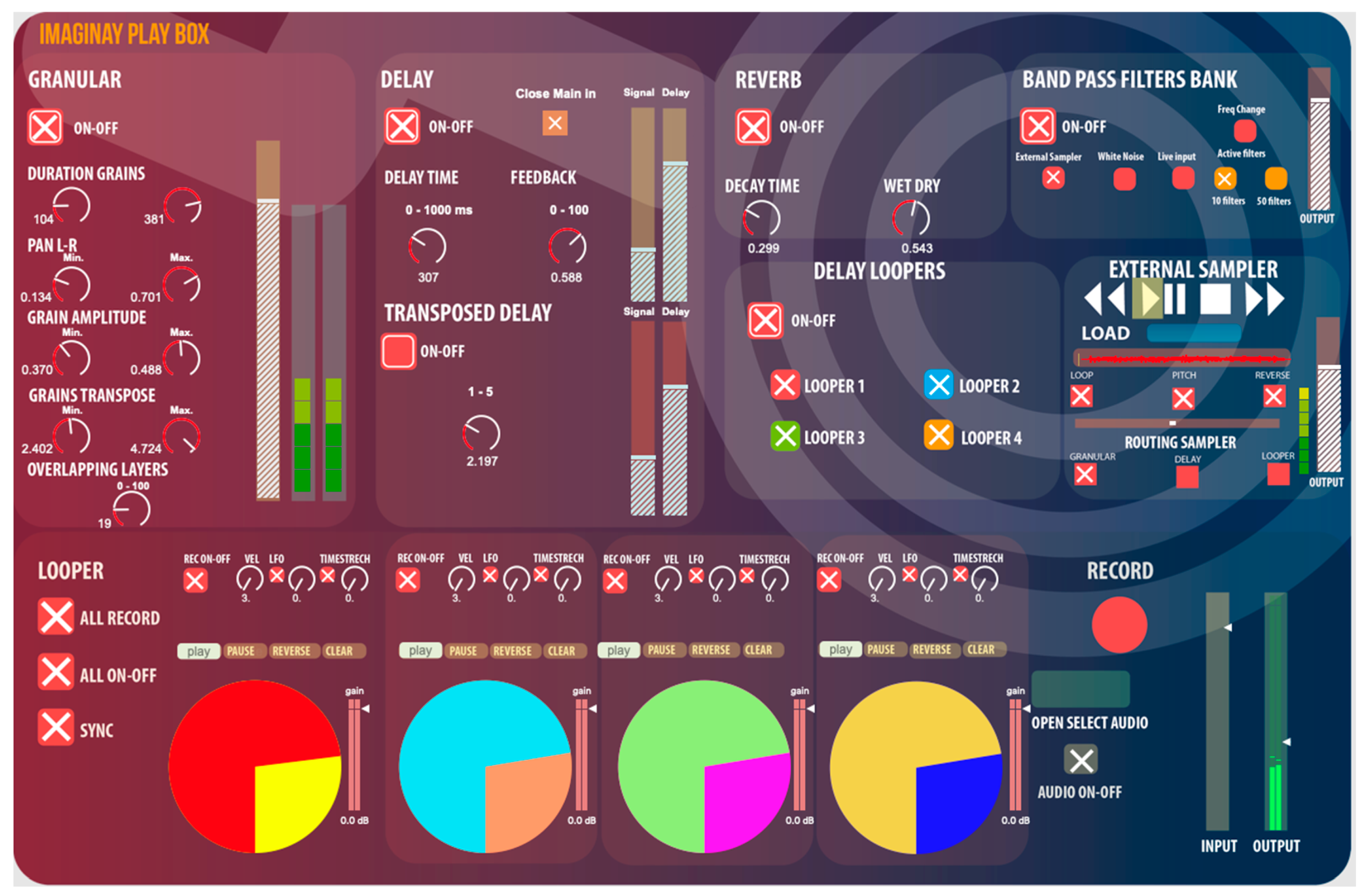

2.2. Initial Programming of the Imaginary Play Box Software v.1.1

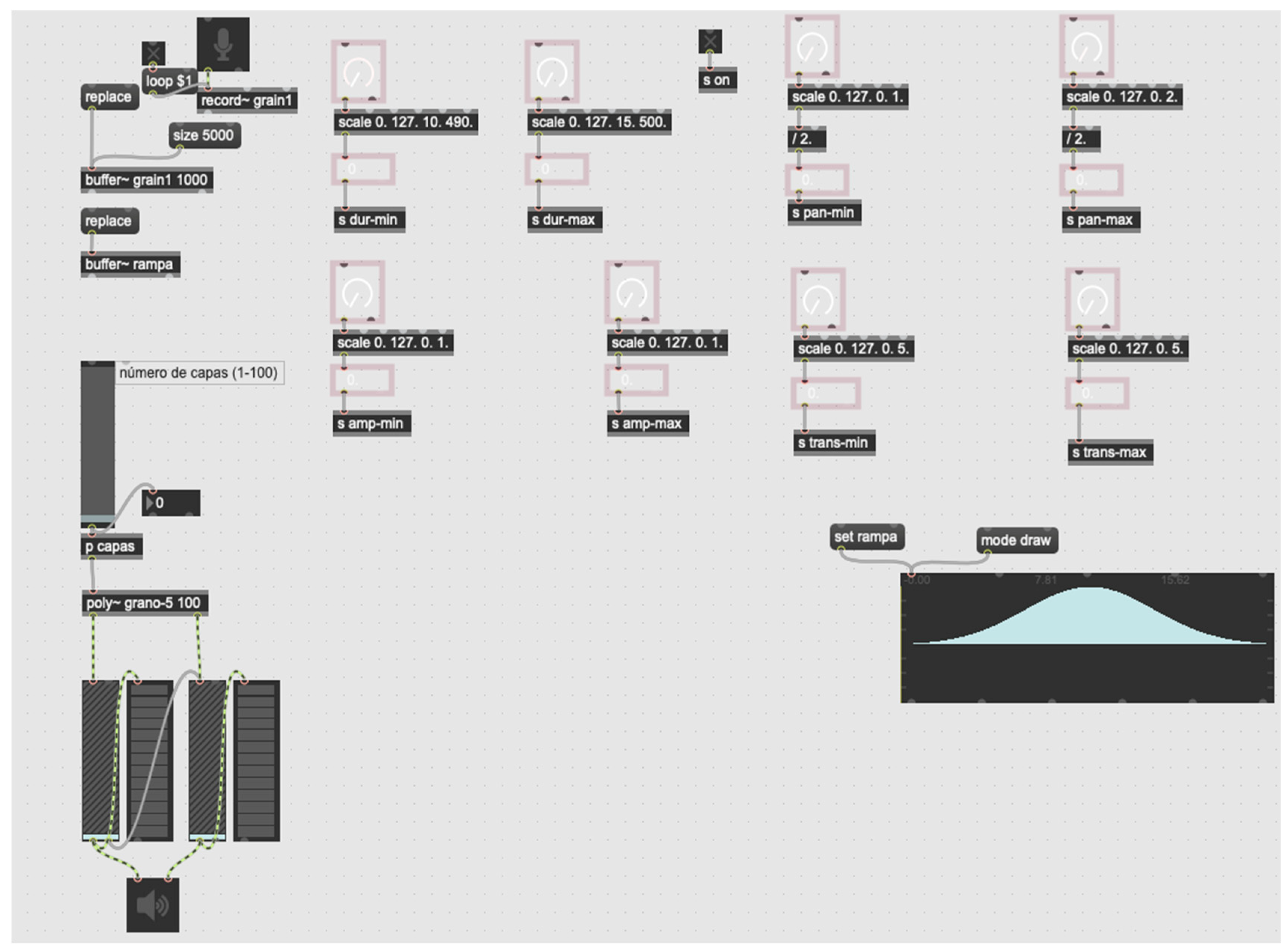

2.2.1. Granular

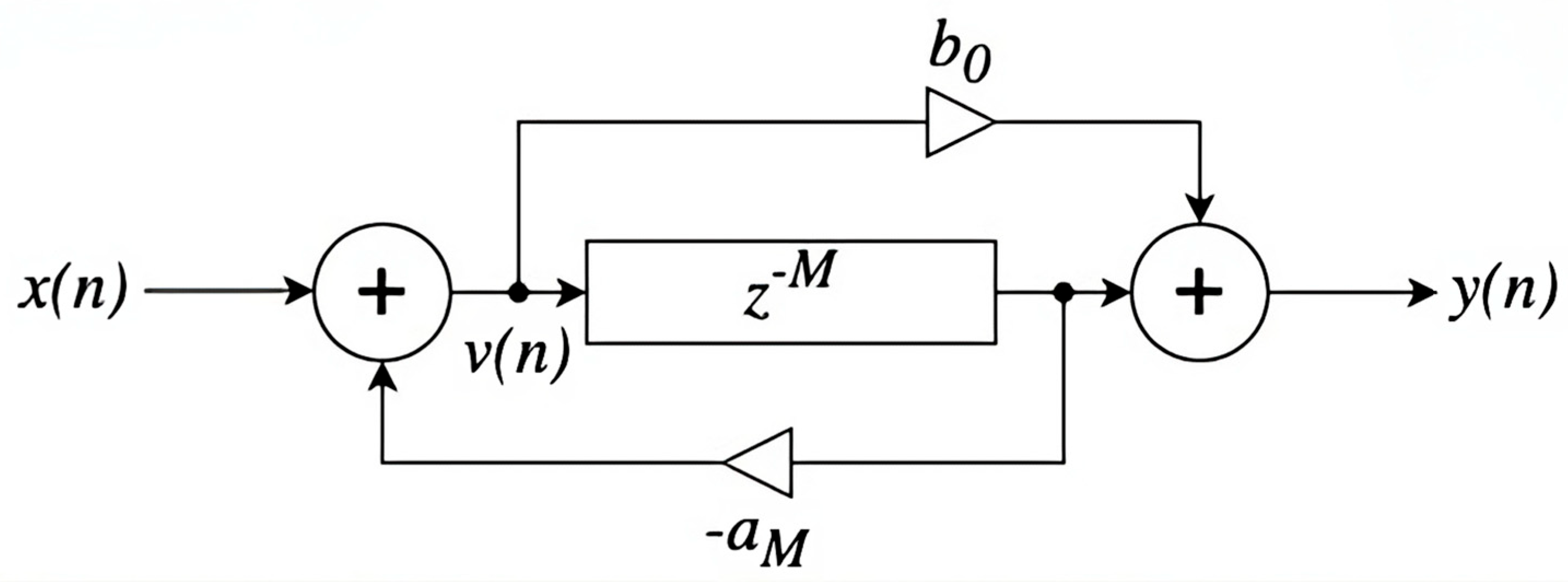

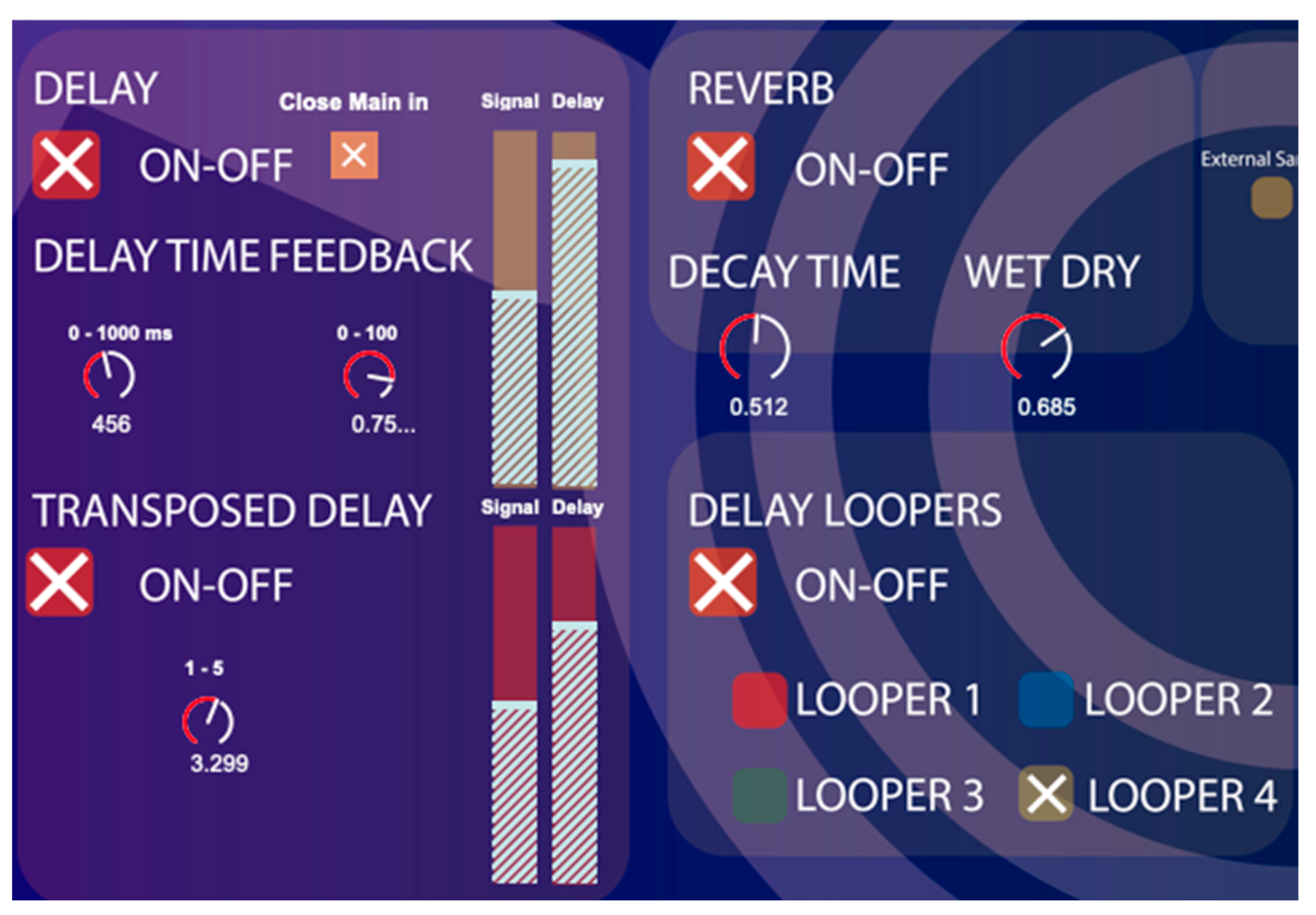

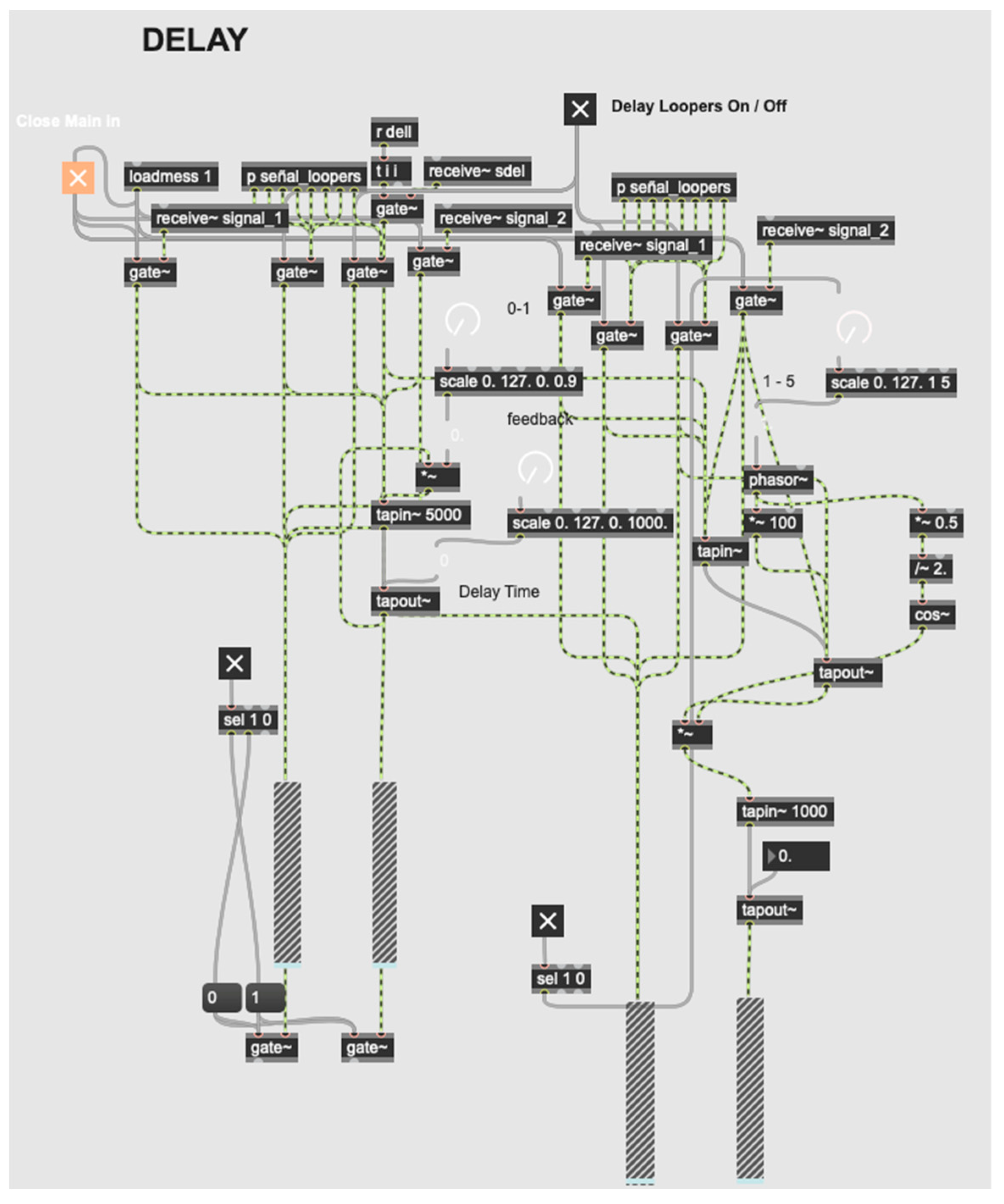

2.2.2. Delay and Reverb

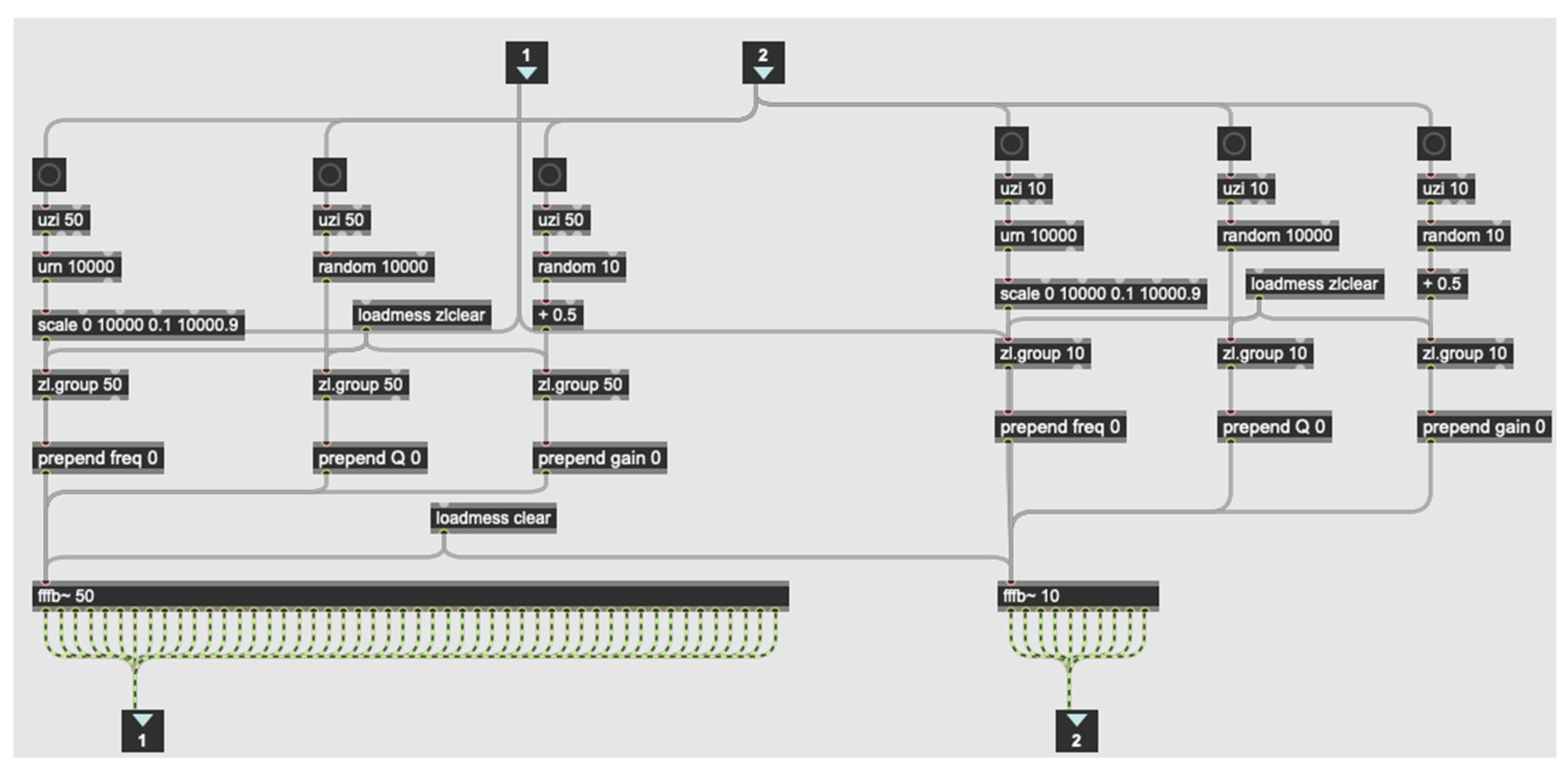

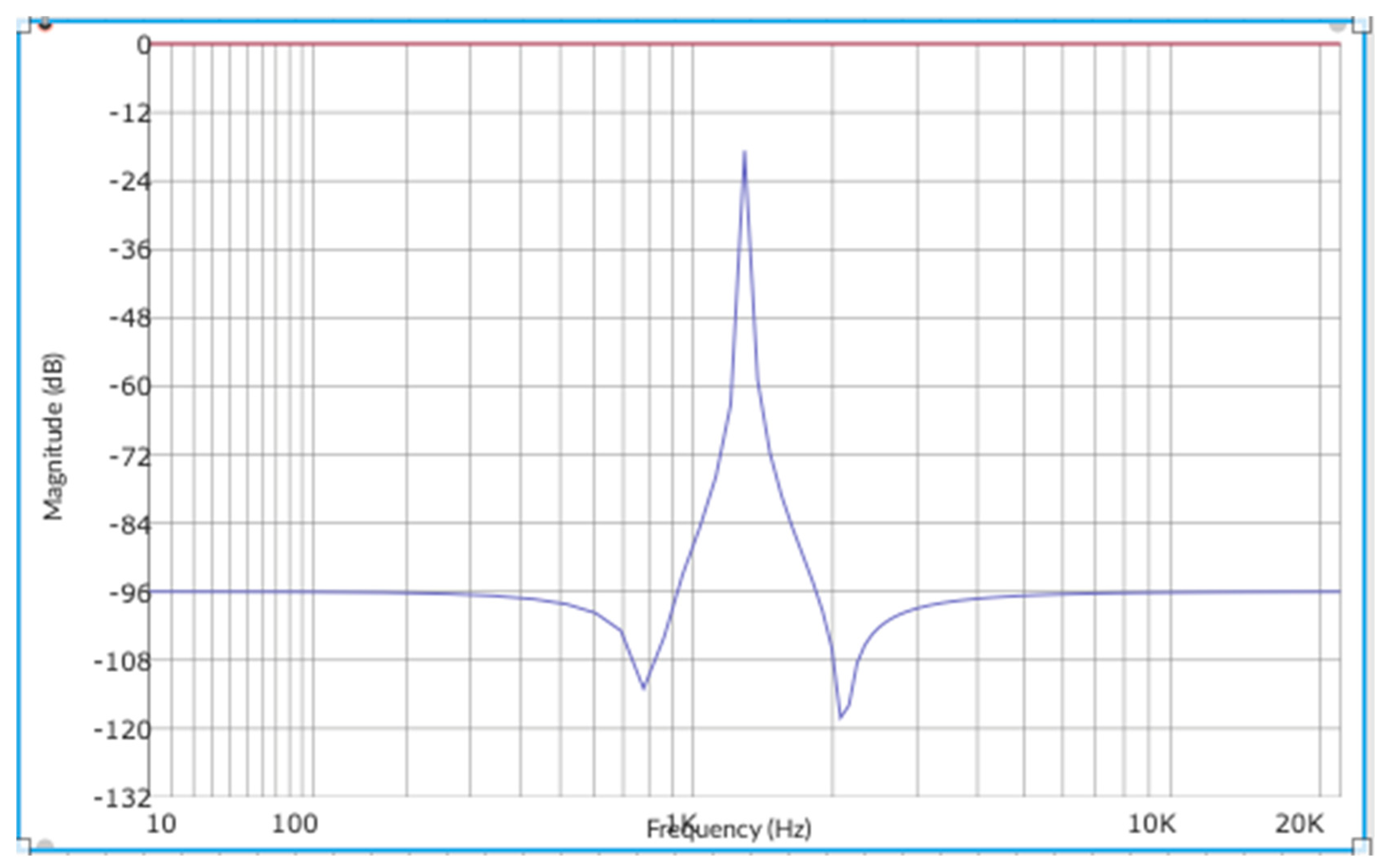

2.2.3. Bandpass Filter Bank

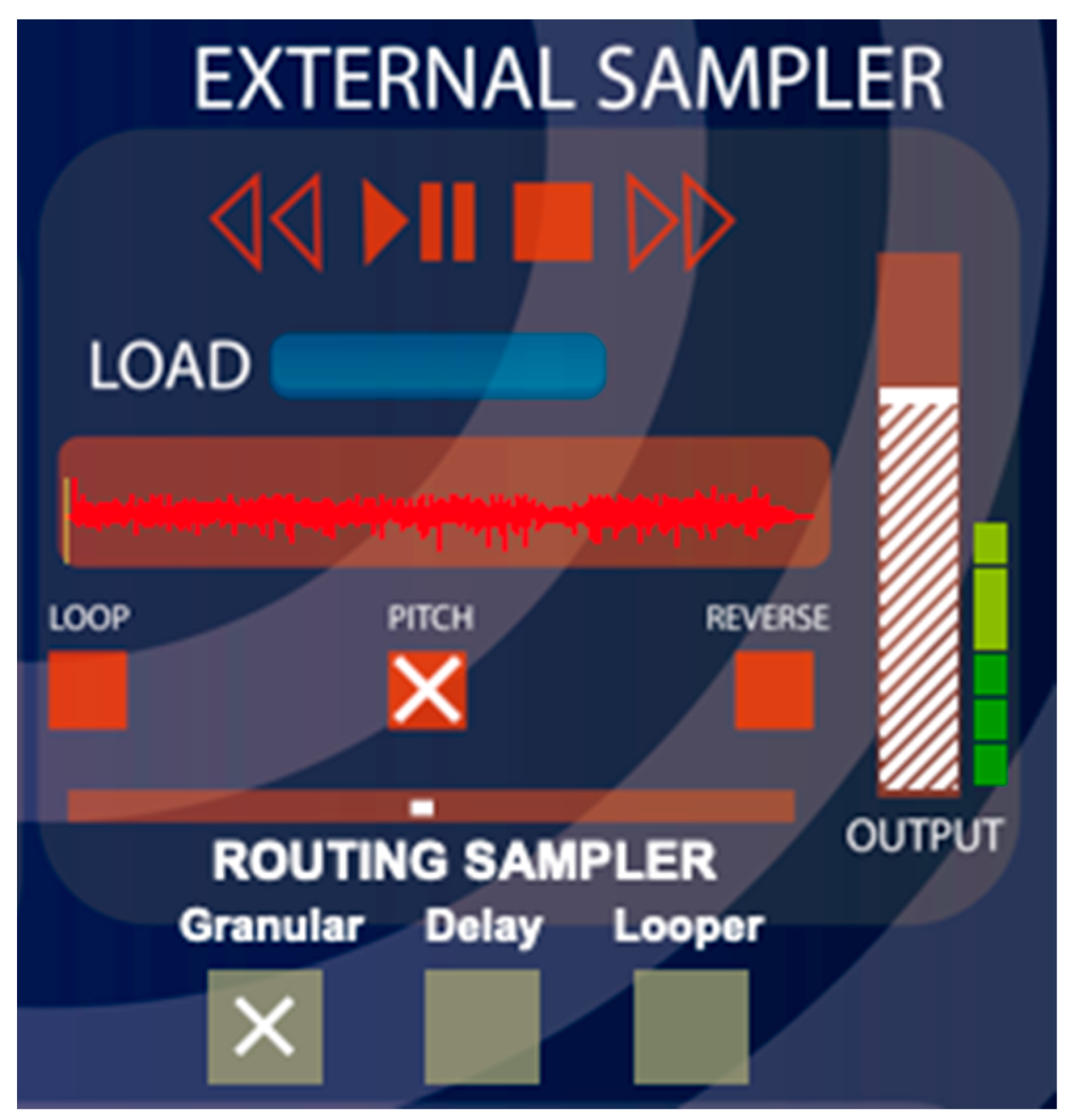

2.2.4. External Sampler

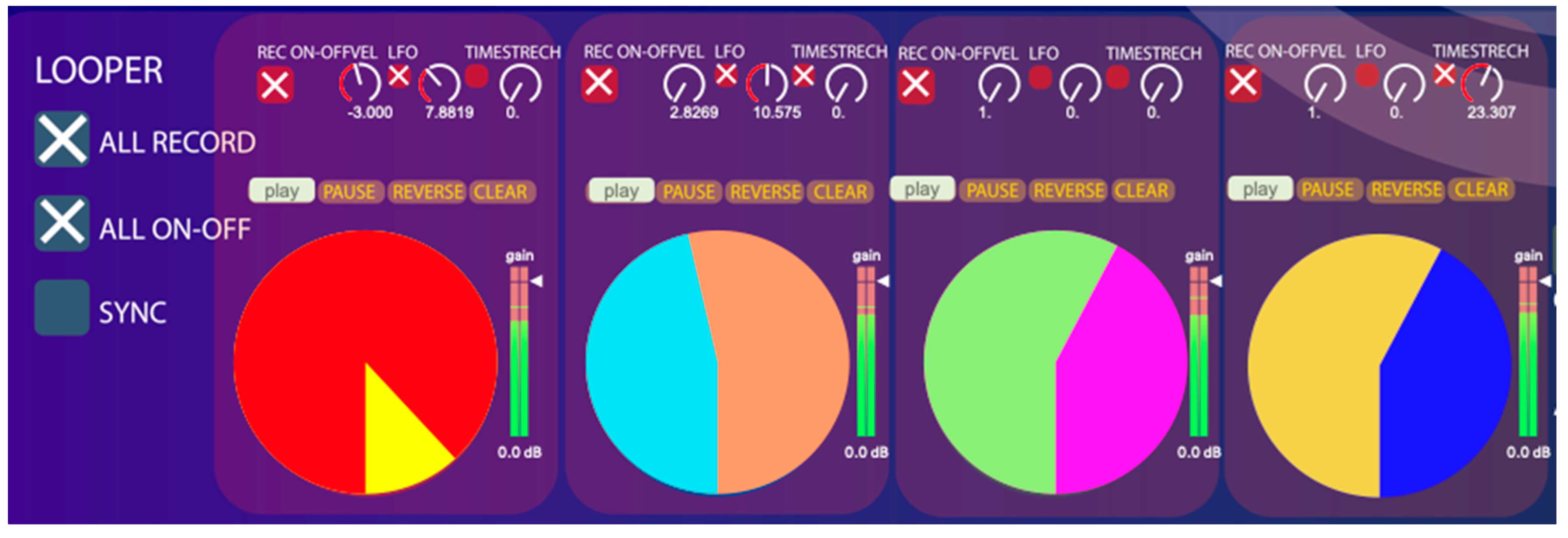

2.2.5. Looper

2.3. Results of Software Evaluation by Experts

2.3.1. Max and Music Technology Expert Ratings

2.3.2. Experts in Music Education

3. Implementation of Innovations from the Expert Panels

3.1. Changes in the Graphical Interface of the Software Imaginary Play Box v.1.2

3.2. Transformations Applied to Max Programming

3.3. Phase 4 Beta Design and Introduction of the Proposed Innovation in the Educational Context

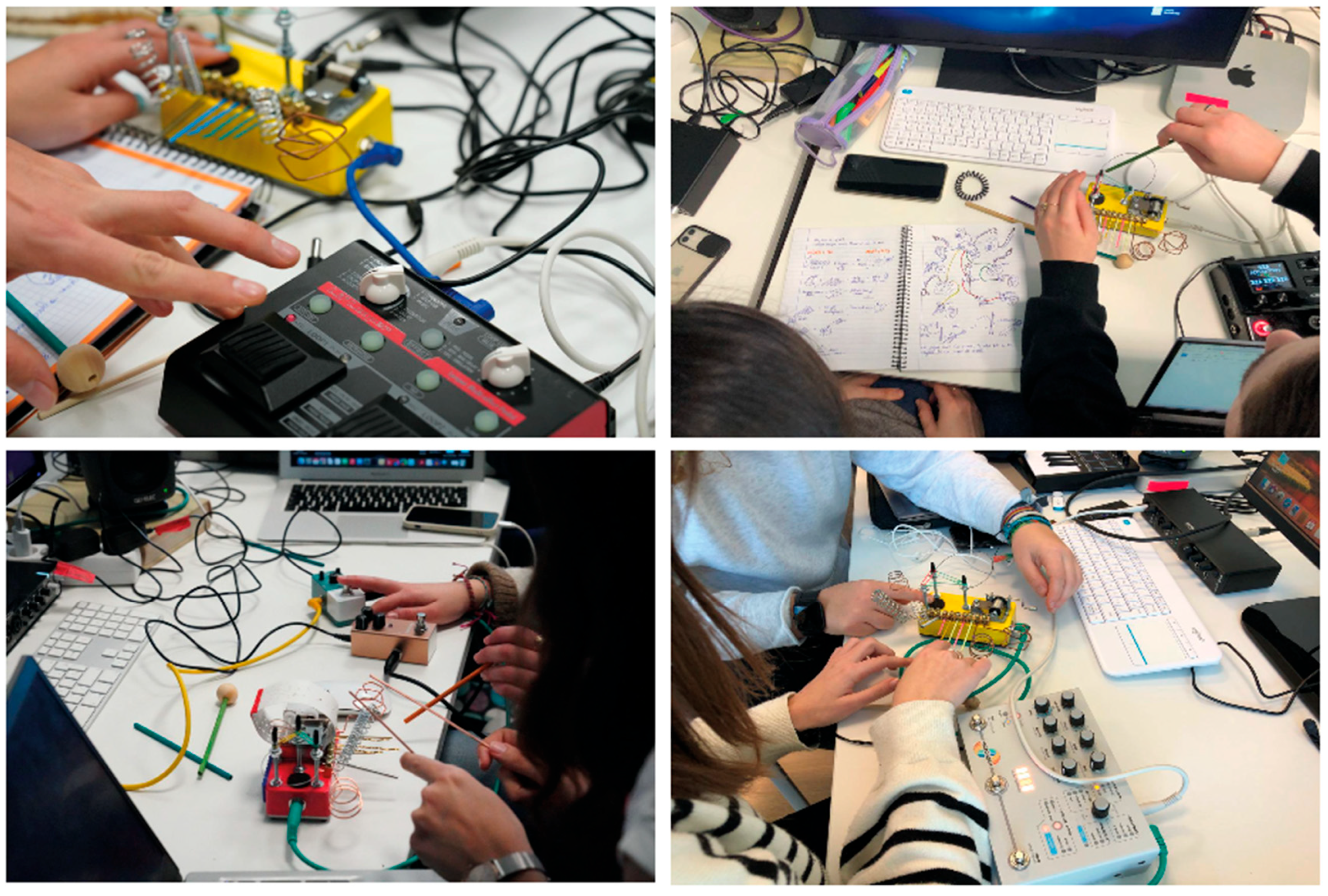

4. Some Educational Applications of the System

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Module | Granular (Max 100) | Delay (Max 125) | Reverb (Max 100) | BPFB (Max 125) | Sampler (Max 125) | Looper (Max 200) |
|---|---|---|---|---|---|---|
| Score | 69 | 92 | 66 | 76 | 95 | 157 |
| % | 69 | 74 | 66 | 60 | 76 | 78 |
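The percentage row in the table above appears to express each module's score as a share of that module's maximum. The following is a minimal sketch under that assumption; the helper name `percent_of_max` is hypothetical and is not taken from the article. The computed values can differ from the printed row by about one point, since the rounding convention used by the authors is not stated.

```python
# Hypothetical helper (not from the article): score as a percentage of the maximum.
def percent_of_max(score: float, max_score: float) -> float:
    if max_score <= 0:
        raise ValueError("max_score must be positive")
    return 100.0 * score / max_score


# (score, maximum) pairs copied from the table above.
modules = {
    "Granular": (69, 100),
    "Delay": (92, 125),
    "Reverb": (66, 100),
    "BPFB": (76, 125),
    "Sampler": (95, 125),
    "Looper": (157, 200),
}

# Assumption: percentage = score / maximum, left unrounded here.
percentages = {name: percent_of_max(s, m) for name, (s, m) in modules.items()}

for name, pct in percentages.items():
    print(f"{name}: {pct:.1f}%")
```

Keeping the values unrounded makes it easy to compare them against the published row under whichever rounding rule is assumed.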

| Module | Interface (Max 120) | Granular (Max 120) | Delay (Max 120) | Reverb (Max 120) | BPFB (Max 120) | Sampler (Max 120) | Looper (Max 120) |
|---|---|---|---|---|---|---|---|
| Score | 92 | 85 | 101 | 102 | 93 | 102 | 79 |
| % | 76 | 71 | 84 | 85 | 77 | 85 | 66 |

| | Global by Module | Global Overall |
|---|---|---|
| Score | 137 | 26 |
| % | 91 | 86 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Peris, E.; Murillo, A.; Tejada, J. Design and Implementation of a Musical System for the Development of Creative Activities Through Electroacoustics in Educational Contexts. Signals 2025, 6, 16. https://doi.org/10.3390/signals6020016
